DeepMap+: Recognizing High-Level Indoor Semantics Using Virtual Features and Samples Based on a Multi-Length Window Framework

Abstract

1. Introduction

- (1) Because some important indoor public areas, such as supermarkets, contain a large number of indoor semantics, users inevitably perform similar interaction activities with different indoor facilities, and these similar activities are hard to tell apart when inferring indoor semantics. In particular, most interaction activities in such public areas involve much finer-grained and more complex hand gestures than activities of daily living (ADLs). For example, the hand gestures of taking a sandwich with bread tongs are very similar to those of ladling out rice with a measuring cup, yet the two activities are used to infer the bread counter and the rice storage shelf, respectively. Therefore, the classifier needs stronger discriminating power for fine hand movements.

- (2) One effective way to improve the classification accuracy of high-level indoor semantics is to increase the number of sensors, collected samples, or extracted features, but each of these options burdens the power-constrained mobile device and affects user comfort.

- (3) TransitLabel [10] automatically infers high-level indoor semantics using a tree structure built on prior knowledge (such as vertical speed and altitude thresholds). This tree structure makes every inference of indoor semantics depend on the decisions made earlier in the tree. However, indoor semantics are continually updated, and indoor mobile sensing (e.g., air pressure and audio) is highly susceptible to large fluctuations in accuracy across diverse indoor environments. In our view, such prior knowledge is not fully reliable, and the tree structure hampers dynamic updates of the inference system, so the inference system needs to be more intelligent.

- (1)

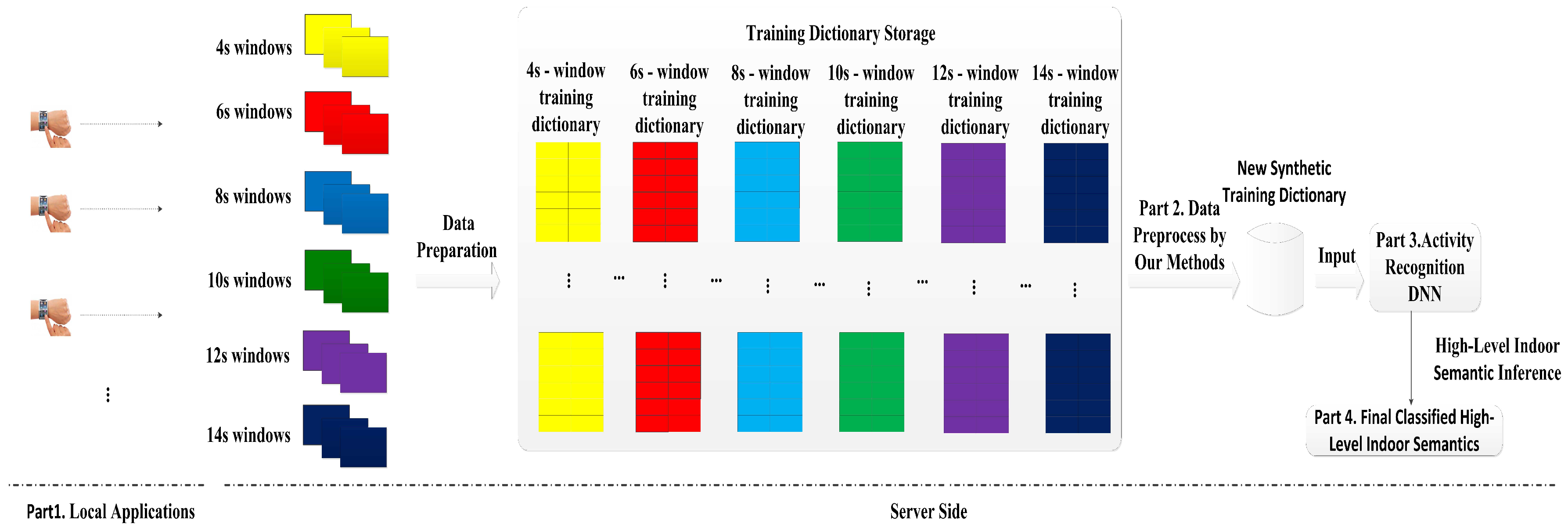

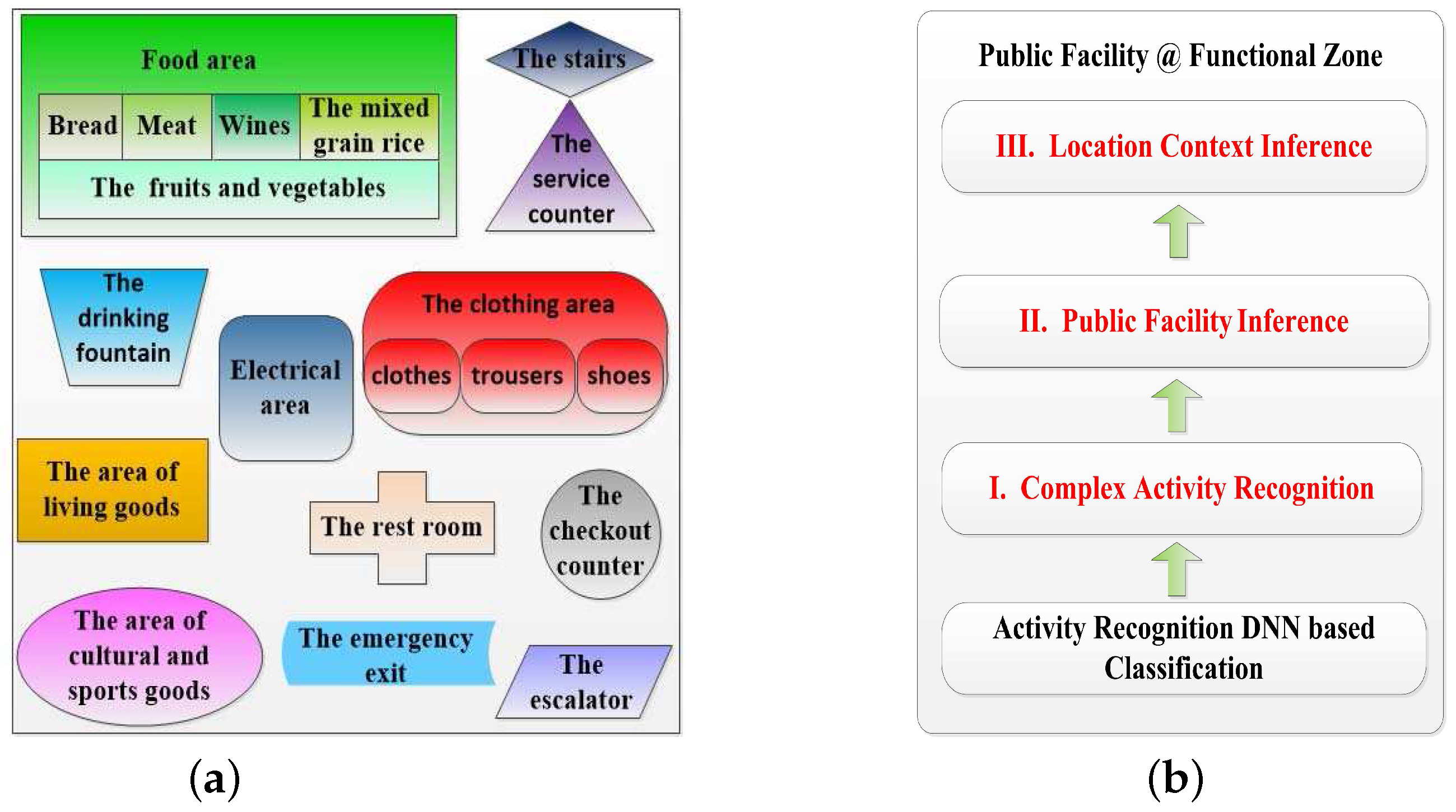

- We present DeepMap+, which is the first deep computation model based on the multi-length window framework for recognizing high-level indoor semantics using complex human activities. Instead of the conventional single-length window framework, the multi-length window framework can greatly enrich our data storage. In addition, we design a high-level indoor semantic inference to infer users’ location contexts and high-level indoor semantics (consisting of public facilities and functional zones) at a Wal-Mart supermarket.

- (2) We identify the characteristics of, and the correlations between, windows of different lengths, and find that these properties benefit human activity classification. Building on this finding, we propose several methods that add virtual features and virtual samples for finer-grained activity recognition; these methods help generate a valuable synthetic training dictionary. By integrating deep learning (DL), DeepMap+ learns robust representations from the synthetic training dictionary.

- (3) We implement an Android application for the mobile client and a Python program for the server side. The Android application handles wrist-worn sensing, and a deep neural network (DNN)-based classifier is trained, with its parameters tuned, through supervised learning.

- (4)

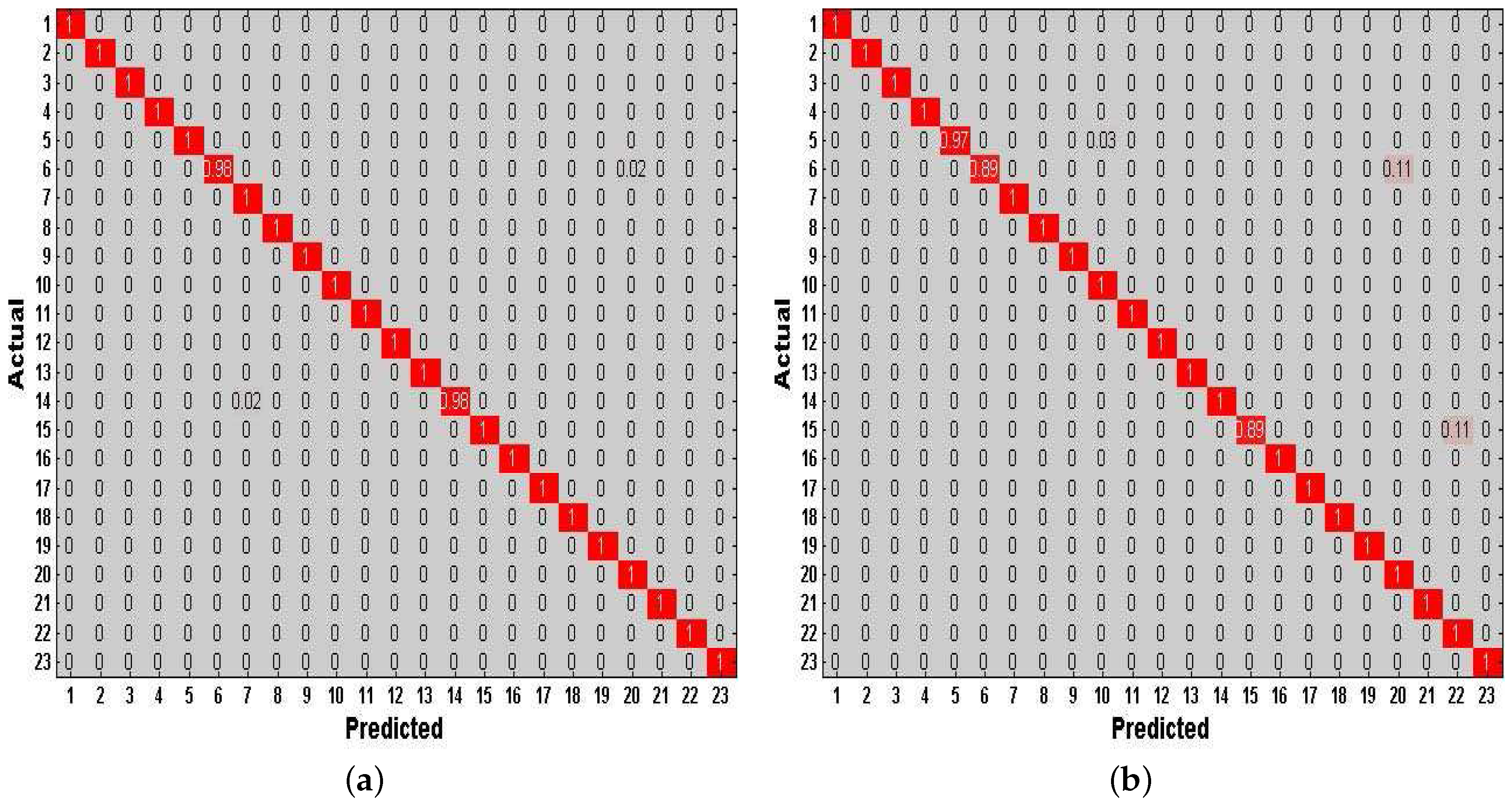

- We conduct performance validation with an exhaustive experimental study consisting of wrist-worn data collection of 23 high-level indoor semantics by two users at a Wal-Mart supermarket.
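The multi-length window idea in contributions (1) and (2) can be illustrated with a minimal Python sketch. It is not the construction of Section 4, whose details are not reproduced here: the window lengths, the step, the four summary statistics, and the rule that pairs each long window with the short window sharing its start index are all hypothetical choices made for illustration. The sketch shows how segmenting one signal at several window lengths yields more feature vectors than a single-length framework, and how features from two window lengths can be concatenated into one longer vector.

```python
from statistics import mean, pstdev


def sliding_windows(signal, length, step):
    """Yield (start_index, window) pairs of `length` samples, advancing by `step`."""
    for start in range(0, len(signal) - length + 1, step):
        yield start, signal[start:start + length]


def window_features(window):
    """Hypothetical per-window features: mean, population std, min, max."""
    return [mean(window), pstdev(window), min(window), max(window)]


def multi_length_features(signal, lengths, step):
    """Map each window length to the feature vectors of all its windows."""
    return {
        length: [window_features(w) for _, w in sliding_windows(signal, length, step)]
        for length in lengths
    }


def double_length_features(signal, short_len, long_len, step):
    """For each long window, concatenate the features of its first `short_len`
    samples (the short window) with the features of the whole long window.
    This pairing rule is a hypothetical choice, not the paper's definition."""
    if short_len > long_len:
        raise ValueError("short_len must not exceed long_len")
    combined = []
    for _, long_window in sliding_windows(signal, long_len, step):
        short_window = long_window[:short_len]
        combined.append(window_features(short_window) + window_features(long_window))
    return combined
```

For an 8-sample signal with window lengths 2 and 4 and a step of 2, length 2 alone gives 4 feature vectors, while the two lengths together give 7; each double-length vector has 8 entries instead of 4.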

2. Related Work

3. The DeepMap+ System

3.1. Overview

3.2. Feature Extraction

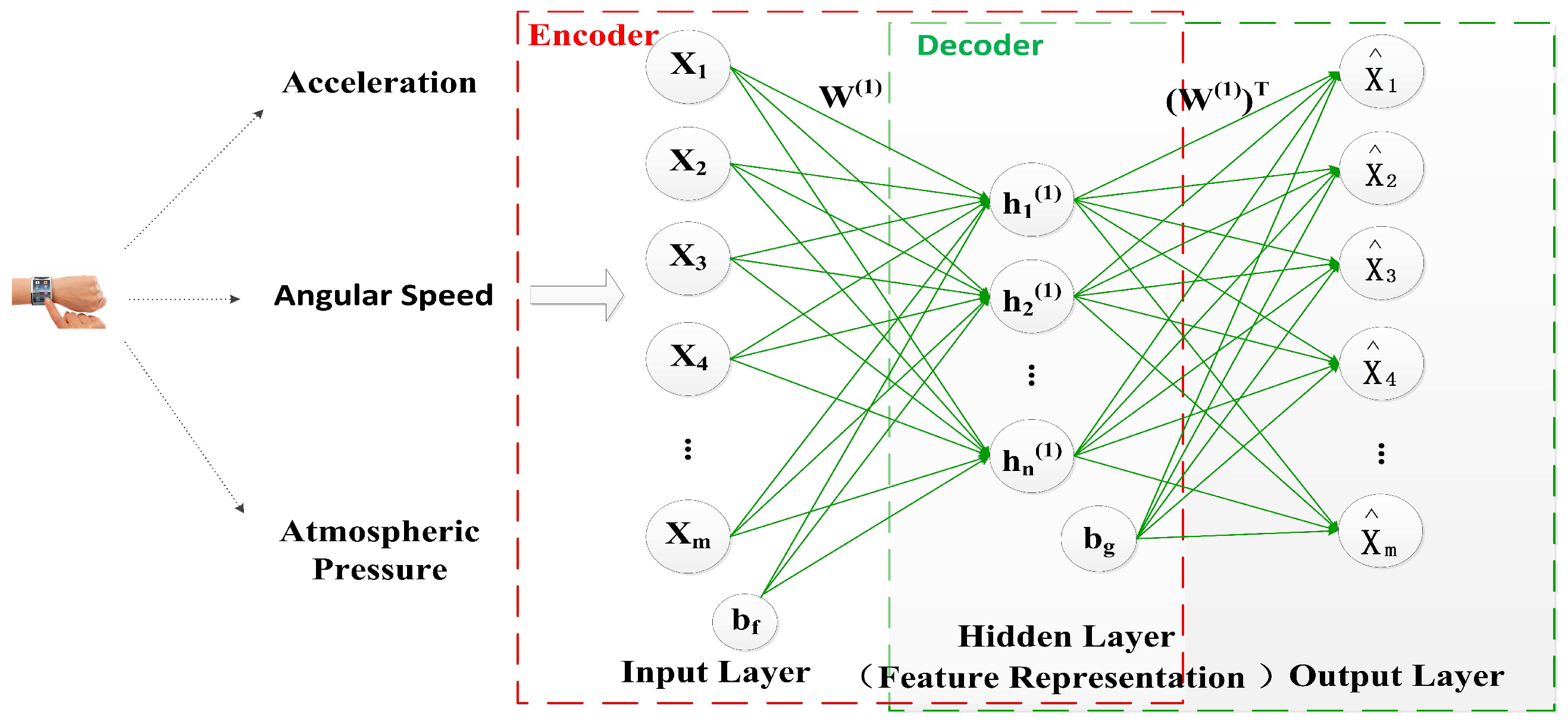

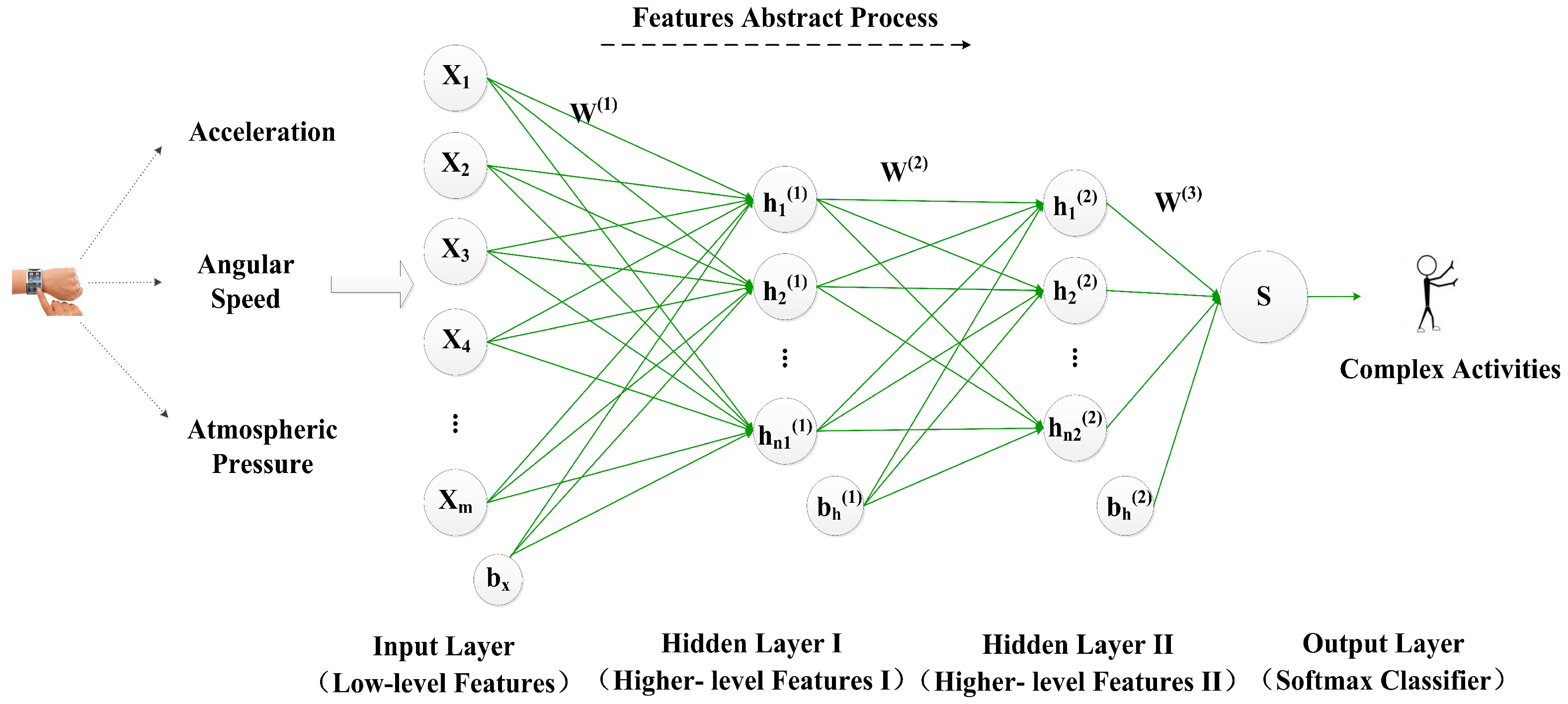

3.3. Deep Learning-Based Activity Recognition

3.4. High-Level Indoor Semantic Inference

4. The Description of Our Proposed Methods

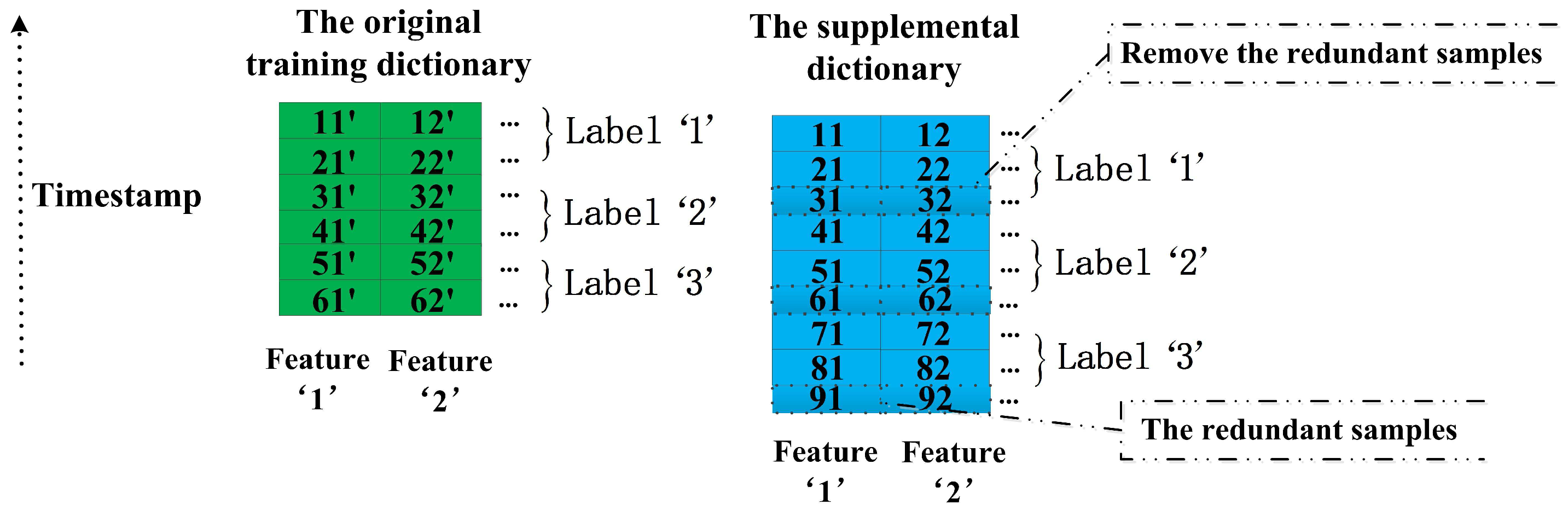

4.1. Preprocessing of the Supplemental Dictionary

4.2. The Methods of Increasing Features

4.2.1. The Method of Double-Length Window Features

4.2.2. Increasing Virtual Features Based on Double-Length Windows

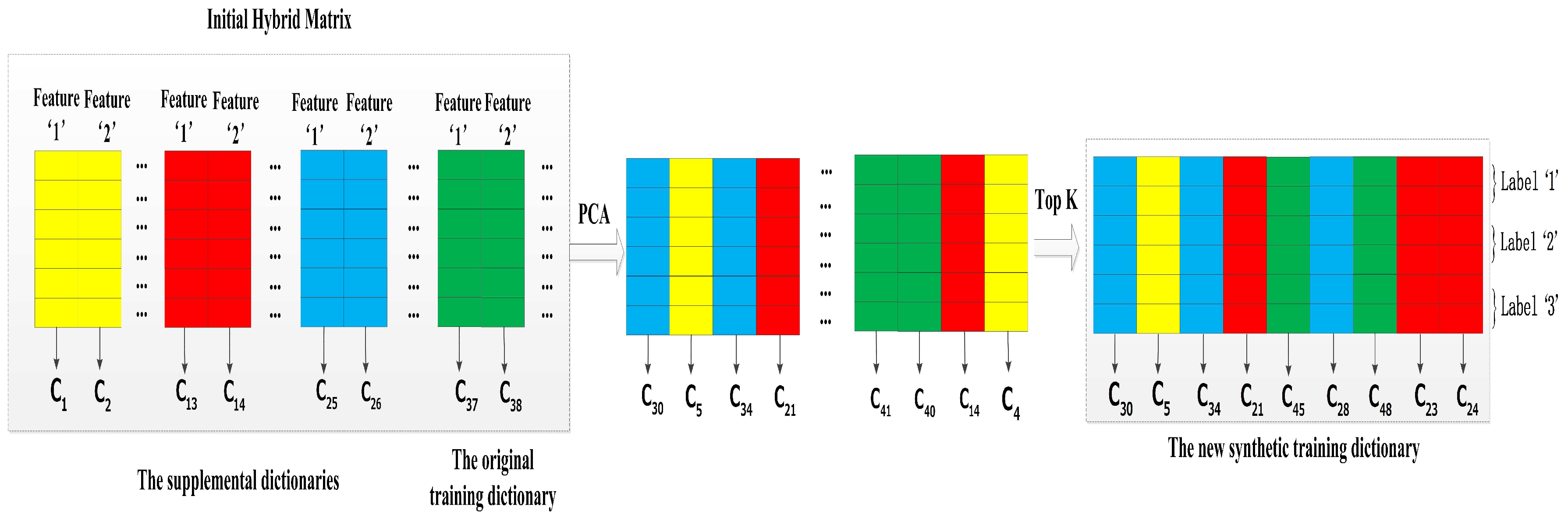

4.2.3. Increasing Virtual Features Based on Multi-Length Windows

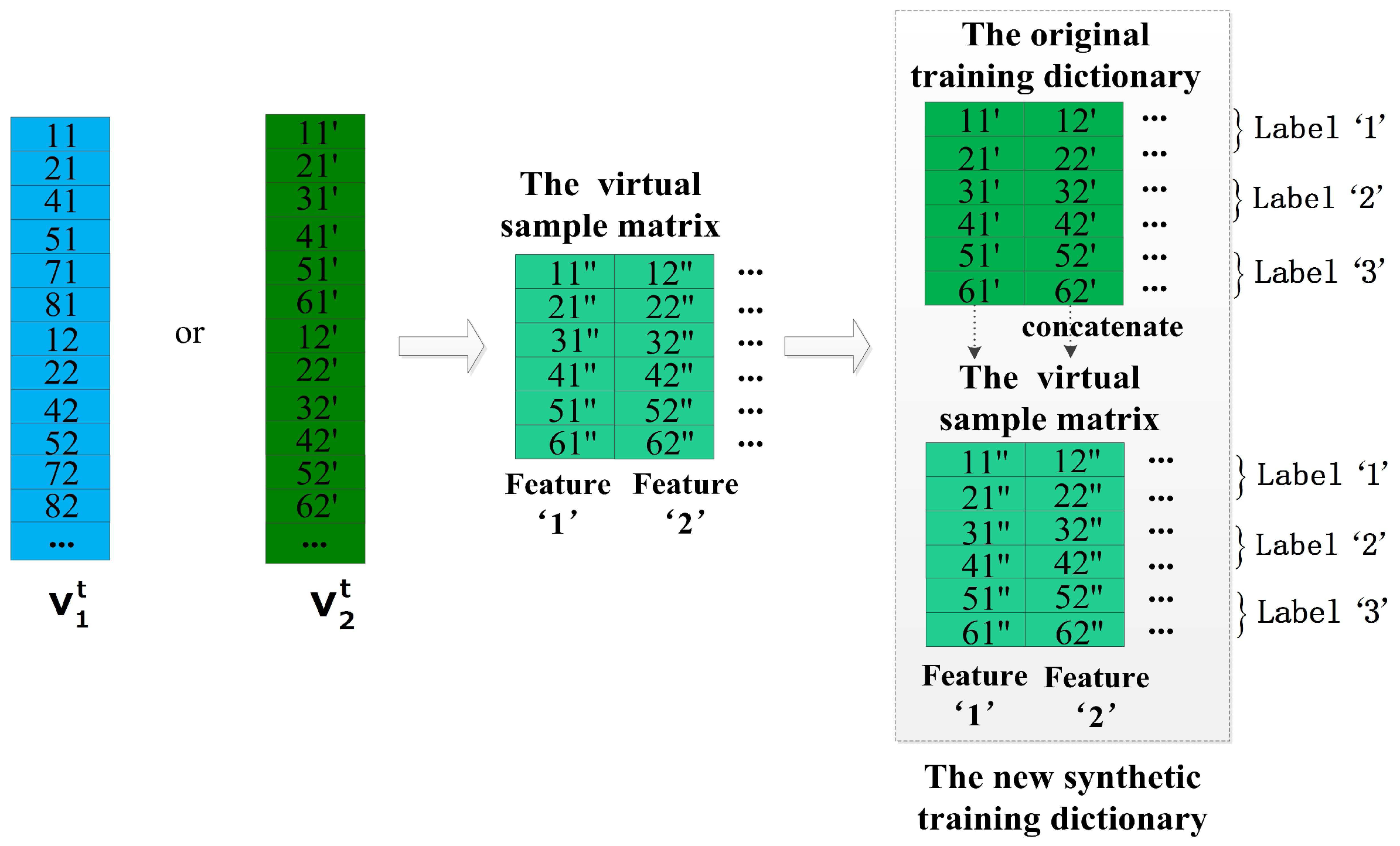

4.3. The Method of Increasing Virtual Samples

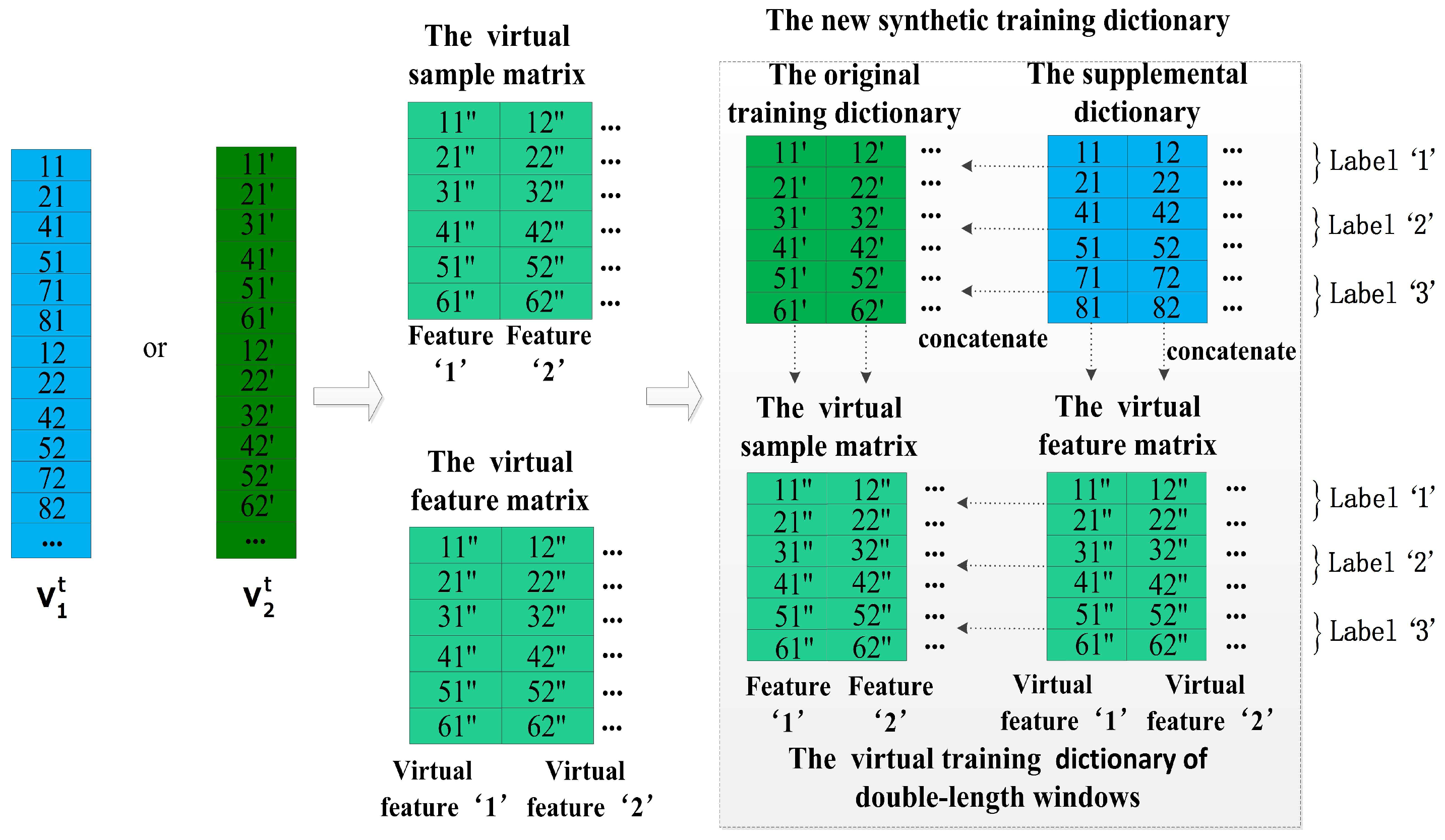

4.4. The Method of Increasing Features and Virtual Samples

4.5. Analysis and Advantages of the Proposed Methods

5. Model Robustness and Comparisons

5.1. Data Collection

5.2. Experimental Setup

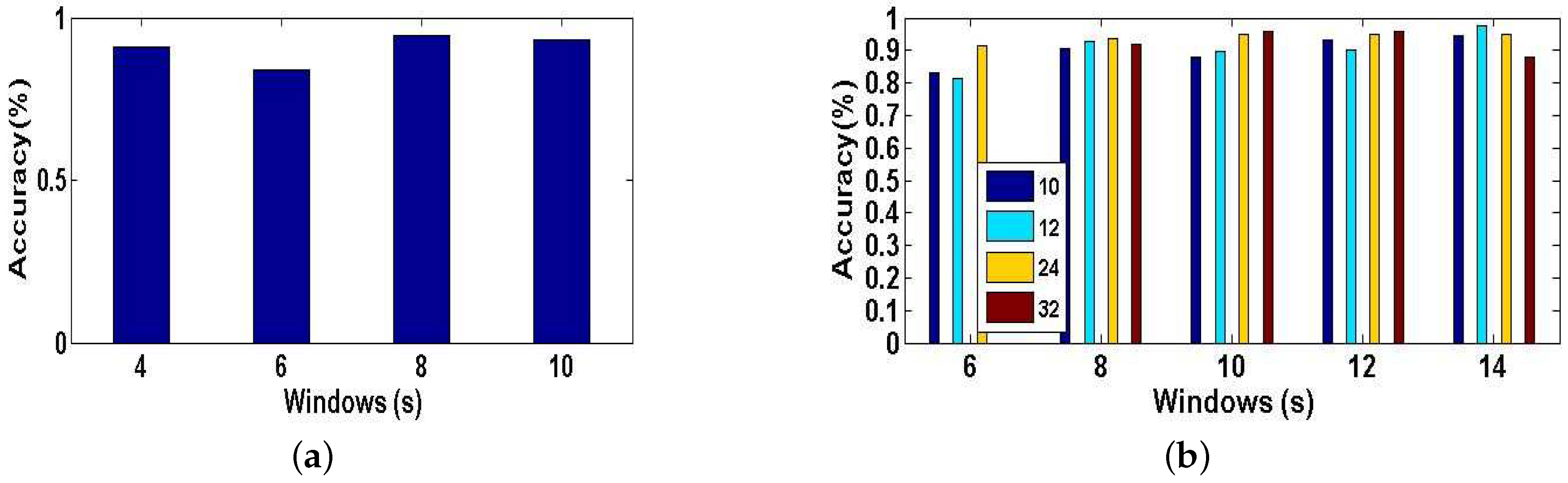

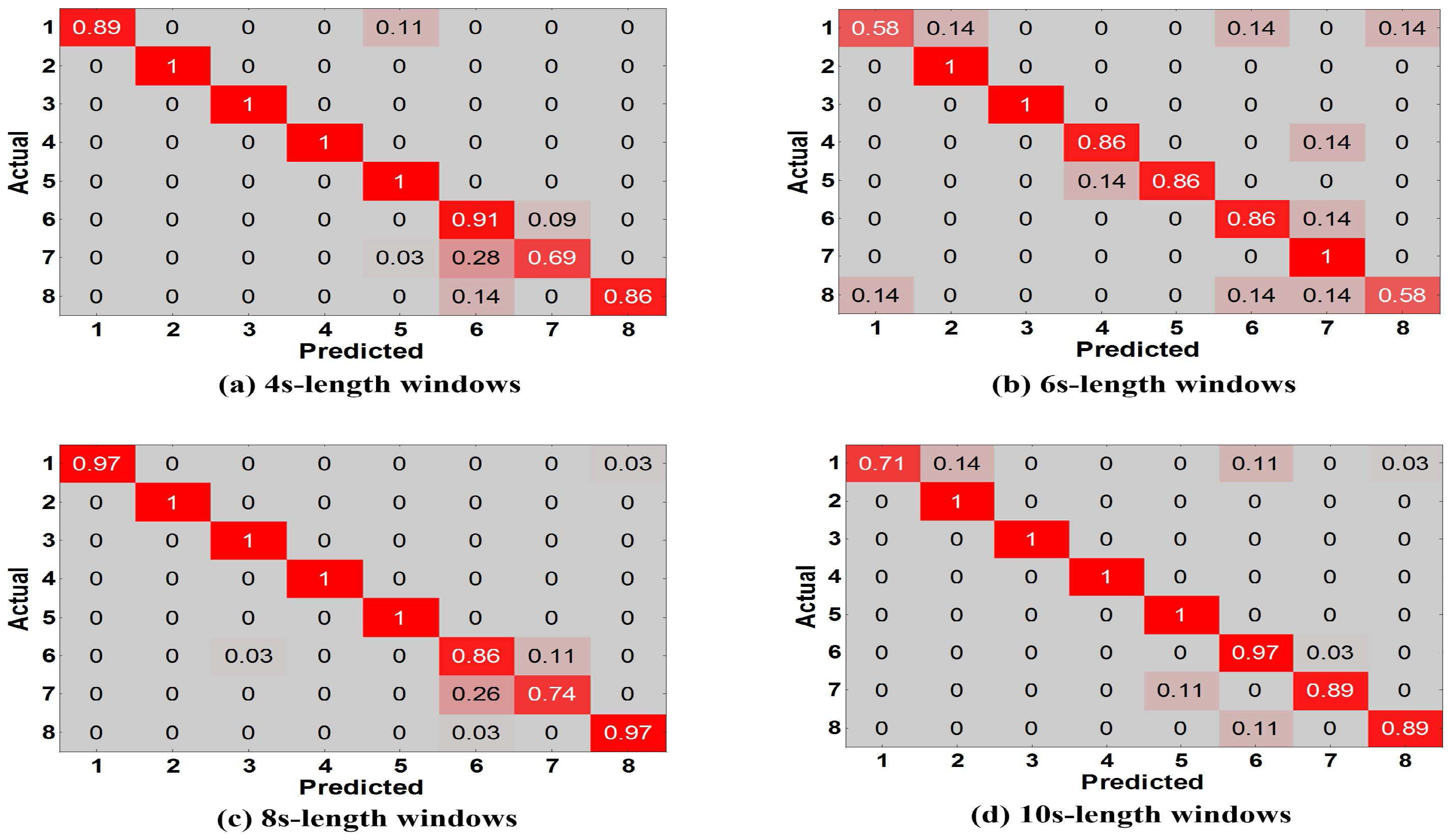

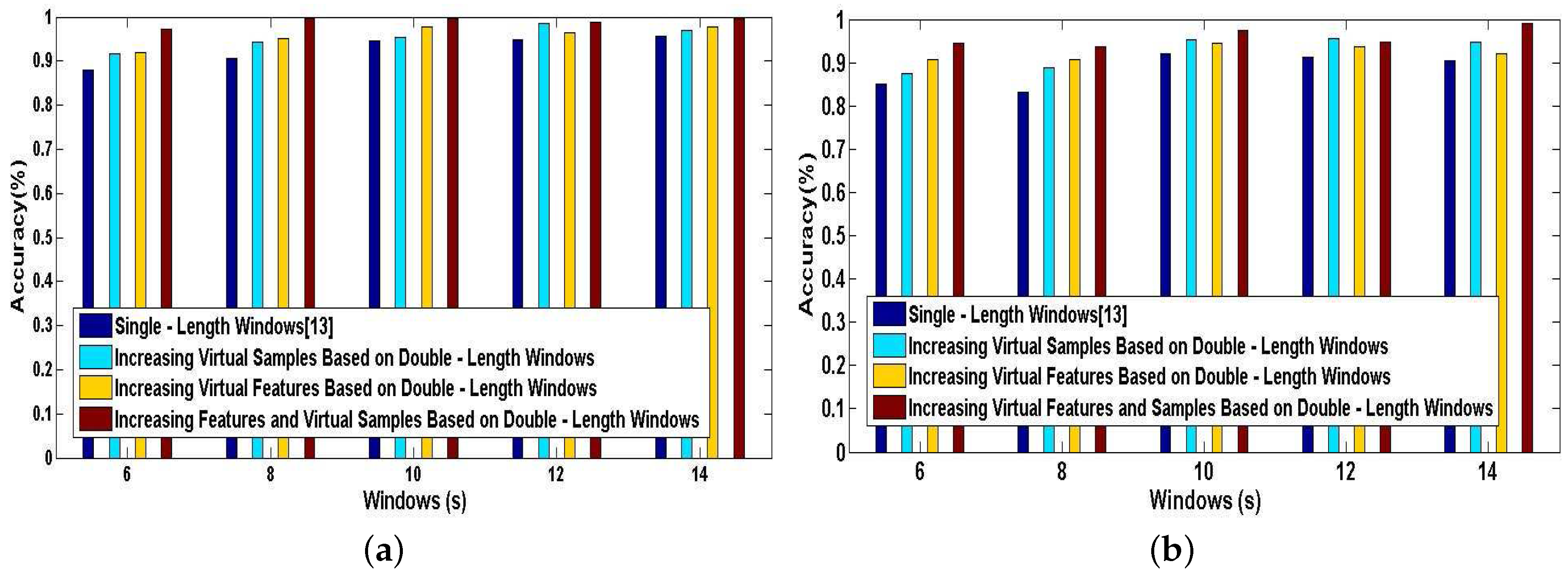

5.3. High-Level Indoor Semantic Classification

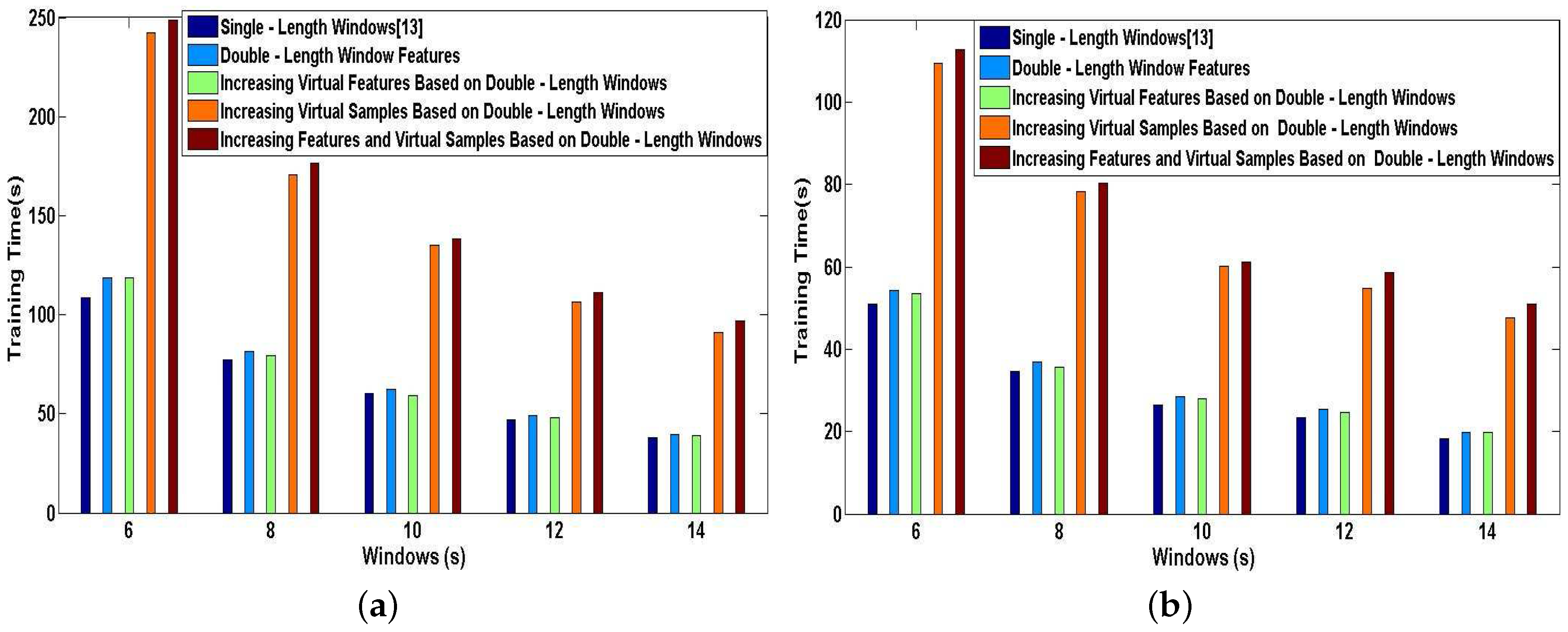

5.4. System Efficiency

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Office of Research and Development, U.S. Environmental Protection Agency. EPA’s Report on the Environment; U.S. Environmental Protection Agency: Chicago, IL, USA, 2015.

2. Xie, B.; Tan, G.; He, T. SpinLight: A High Accuracy and Robust Light Positioning System for Indoor Applications. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Korea, 1–4 November 2015; pp. 211–223.

3. Ramer, C.; Sessner, J.; Scholz, M.; Zhang, X. Fusing low-cost sensor data for localization and mapping of automated guided vehicle fleets in indoor applications. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, San Diego, CA, USA, 14–16 September 2015; pp. 65–70.

4. Shen, G.; Chen, Z.; Zhang, P.; Moscibroda, T.; Zhang, Y. Walkie-Markie: Indoor pathway mapping made easy. In Proceedings of the USENIX Conference on Networked Systems Design and Implementation, Lombard, IL, USA, 2–5 April 2013; pp. 85–98.

5. Zhang, C.; Li, F.; Luo, J.; He, Y. iLocScan: Harnessing multipath for simultaneous indoor source localization and space scanning. In Proceedings of the ACM Conference on Embedded Networked Sensor Systems, Memphis, TN, USA, 3–6 November 2014; pp. 91–104.

6. Alzantot, M.; Youssef, M. CrowdInside: Automatic construction of indoor floorplans. In Proceedings of the 20th International Conference on Advances in Geographic Information Systems, Redondo Beach, CA, USA, 6–9 November 2012; pp. 99–108.

7. Jiang, Y.; Xiang, Y.; Pan, X.; Li, K.; Lv, Q.; Dick, R.P.; Shang, L.; Hannigan, M. Hallway based automatic indoor floorplan construction using room fingerprints. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; pp. 315–324.

8. Gao, R.; Zhao, M.; Ye, T.; Ye, F.; Wang, Y.; Bian, K.; Wang, T.; Li, X. Jigsaw: Indoor floor plan reconstruction via mobile crowdsensing. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; pp. 249–260.

9. Cheng, L. Automatic Inference of Indoor Semantics Exploiting Opportunistic Smartphone Sensing. In Proceedings of the IEEE International Conference on Sensing, Communication, and Networking, Seattle, WA, USA, 22–25 June 2015.

10. Elhamshary, M.; Youssef, M.; Uchiyama, A.; Yamaguchi, H.; Higashino, T. TransitLabel: A Crowd-Sensing System for Automatic Labeling of Transit Stations Semantics. In Proceedings of the ACM International Conference on Mobile Systems, Applications, and Services, Singapore, 26–30 June 2016; pp. 193–206.

11. Sheikh, S.J.; Basalamah, A.; Aly, H.; Youssef, M. Demonstrating Map++: A crowd-sensing system for automatic map semantics identification. In Proceedings of the 2014 Eleventh IEEE International Conference on Sensing, Communication, and Networking, Singapore, 30 June–3 July 2014; pp. 152–154.

12. De, D.; Bharti, P.; Das, S.K.; Chellappan, S. Multimodal Wearable Sensing for Fine-Grained Activity Recognition in Healthcare. IEEE Internet Comput. 2015, 19, 1.

13. Vepakomma, P.; De, D.; Das, S.K.; Bhansali, S. A-Wristocracy: Deep learning on wrist-worn sensing for recognition of user complex activities. In Proceedings of the IEEE International Conference on Wearable and Implantable Body Sensor Networks, Cambridge, MA, USA, 9–12 June 2015; pp. 1–6.

14. Margarito, J.; Helaoui, R.; Bianchi, A.M.; Sartor, F.; Bonomi, A.G. User-Independent Recognition of Sports Activities From a Single Wrist-Worn Accelerometer: A Template-Matching-Based Approach. IEEE Trans. Bio-Med. Eng. 2015, 63, 788–796.

15. Bonomi, A.G.; Westerterp, K.R. Advances in physical activity monitoring and lifestyle interventions in obesity: A review. Int. J. Obes. 2011, 36, 167–177.

16. Yan, Z.; Chakraborty, D.; Misra, A.; Jeung, H.; Aberer, K. SAMMPLE: Detecting Semantic Indoor Activities in Practical Settings Using Locomotive Signatures. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; pp. 37–40.

17. Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and Modeling of WiFi Signal Based Human Activity Recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 65–76.

18. Roy, N.; Misra, A.; Cook, D. Infrastructure-assisted smartphone-based ADL recognition in multi-inhabitant smart environments. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications, San Diego, CA, USA, 18–22 March 2013; pp. 38–46.

19. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426.

20. Noda, K.; Yamaguchi, Y.; Nakadai, K.; Okuno, H.G.; Ogata, T. Audio-visual speech recognition using deep learning. Appl. Intell. 2015, 42, 722–737.

21. Cichy, R.M.; Khosla, A.; Pantazis, D.; Torralba, A.; Oliva, A. Deep Neural Networks Predict Hierarchical Spatio-temporal Cortical Dynamics of Human Visual Object Recognition. arXiv 2016.

22. Lenz, I.; Lee, H.; Saxena, A. Deep Learning for Detecting Robotic Grasps. Int. J. Robot. Res. 2013, 34, 705–724.

23. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

24. Lane, N.D.; Georgiev, P.; Qendro, L. DeepEar: Robust smartphone audio sensing in unconstrained acoustic environments using deep learning. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; pp. 283–294.

25. Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

26. Yao, S.; Hu, S.; Zhao, Y.; Zhang, A.; Abdelzaher, T. DeepSense: A Unified Deep Learning Framework for Time-Series Mobile Sensing Data Processing. arXiv 2016.

27. Plchot, O.; Burget, L.; Aronowitz, H.; Matejka, P. Audio enhancing with DNN autoencoder for speaker recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 5090–5094.

28. Kang, H.L.; Kang, S.J.; Kang, W.H.; Kim, N.S. Two-stage noise aware training using asymmetric deep denoising autoencoder. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 5765–5769.

29. Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

30. Zeng, R.; Wu, J.; Shao, Z.; Senhadji, L. Quaternion softmax classifier. Electron. Lett. 2014, 50, 1929–1931.

31. Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy Layer-Wise Training of Deep Networks. Adv. Neural Inf. Process. Syst. 2007, 19, 153–160.

32. Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8609–8613.

| ID | Layer-1: Complex Activity | Layer-2: Public Facility | Layer-3: Functional Zone | Detailed Description of the Activity |
|---|---|---|---|---|
| 1 | Opening a one-door soda fountain | A one-door soda fountain | The food area | Subject opens the door of the soda fountain with one hand, then removes the goods and closes the door. |
| 2 | Grabbing cling wrap | A cling wrap supply shelf | The food area | Subject grabs the cling wrap, then pulls it out and tears it off from the shelf. |
| 3 | Bagging bulk food | A bulk food display shelf | The food area | Subject picks up the bulk food with one hand and puts it into a freshness bag held in the other hand. |
| 4 | Opening a two-door upright freezer | A two-door upright freezer | The bread section | Subject opens the doors of the upright freezer with both hands, then picks up the goods and closes the doors. |
| 5 | Taking bread or a sandwich with a food clip | A cake counter | The bread section | Subject pulls out the drawer of the bread counter, picks up the bread with a clip, and finally pushes the drawer back. |
| 6 | Opening a horizontal freezer | A horizontal freezer | The meat section | Subject pushes the freezer door open with the palm facing downward, then picks up the meat and pulls the door back until it is closed. |
| 7 | Selecting a bottle of wine | A wine cabinet | The wine section | Subject grasps the bottleneck with one hand and holds the bottom of the bottle with the other hand, then rotates the bottle. |
| 8 | Filling a storage bag with rice using a measuring cup | A rice display shelf | The mixed grain rice section | Subject picks up a measuring cup and ladles a cup of rice into a storage bag. |
| 9 | Picking over an apple | A fruit and vegetable storage shelf | The section of fruits and vegetables | Subject picks up the fruit, and the wrist rotates so that the palm turns from facing downward to facing upward. |
| 10 | Trying on trousers | A fitting room | The trousers section | Subject takes off his trousers and then puts on another pair. |
| 11 | Trying on a shoe | A shoe display shelf | The shoes section | Subject bends down to untie the shoelace, takes off the shoe, then puts on another shoe and ties the shoelace. |
| 12 | Trying on a jacket | A jacket display shelf | The clothes section | Subject takes off his jacket and then puts on another jacket. |
| 13 | Getting a cup of water from a drinking fountain | A drinking fountain | The drinking fountain | Subject takes a cup from the front of the machine, presses the button and waits 2–3 s, and finally takes the cup away. |
| 14 | Touching cotton goods such as a mattress | A bedding articles display shelf | The area of living goods | Subject lightly touches and pats the cotton goods with one hand to feel their softness. |
| 15 | Browsing a book or notebook | A book display shelf | The area of cultural and sports goods | Subject holds a book or notebook with both hands and flips through its pages. |
| 16 | Writing | A pen display shelf | The area of cultural and sports goods | Subject picks up a pen and writes several characters. |
| 17 | Examining a drum washing machine | A drum washing machine | The electrical area | Subject bends over, opens the door of the drum washing machine from the upper right, examines the internal structure, and closes the door. |
| 18 | Putting goods on the checkout counter | A checkout counter | The checkout counter | Subject picks up the goods from the shopping basket and puts them on the checkout counter. |
| 19 | Opening an emergency exit door | An emergency exit | The emergency exit | Subject pushes the bar of the emergency exit forward and opens the door. |
| 20 | Heating food with a microwave oven | A microwave oven available for use | The service counter | Subject presses the door-release button, puts the food in and closes the door, then turns the knob to start heating. |
| 21 | Washing hands | A tap | The restroom | Subject turns on the tap and scrubs his hands repeatedly. |
| 22 | Standing on an escalator | An escalator | The escalator | Subject holds the handrail of the escalator and stands motionless. |
| 23 | Walking on the stairs | Stairs | The stairs | Subject walks on the stairs. |
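The three-layer table maps each recognized complex activity (layer 1) to a public facility (layer 2) and a functional zone (layer 3). A minimal Python sketch of that lookup follows, using an excerpt of five rows copied from the table. The majority vote over window-level activity predictions is a hypothetical smoothing step chosen for illustration; it is not necessarily the inference rule of Section 3.4.

```python
from collections import Counter

# Excerpt of the activity table: activity ID -> (public facility, functional zone).
SEMANTICS = {
    1: ("A one-door soda fountain", "The food area"),
    5: ("A cake counter", "The bread section"),
    8: ("A rice display shelf", "The mixed grain rice section"),
    18: ("A checkout counter", "The checkout counter"),
    22: ("An escalator", "The escalator"),
}


def infer_semantics(window_predictions):
    """Return (activity_id, facility, zone) for the most frequent window-level
    activity prediction (hypothetical majority-vote smoothing).

    Raises ValueError for an empty input and KeyError for an activity ID
    that is not in the excerpt above."""
    if not window_predictions:
        raise ValueError("need at least one window-level prediction")
    activity_id, _count = Counter(window_predictions).most_common(1)[0]
    facility, zone = SEMANTICS[activity_id]
    return activity_id, facility, zone
```

For example, window-level predictions `[5, 5, 8, 5, 1]` resolve to activity 5, the cake counter, in the bread section.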

| Total Layers | Hidden Layers | Units in the First Hidden Layer | Units in the Second Hidden Layer |
|---|---|---|---|
| 4 | 2 | 100 | 300 |
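The configuration in the table above (four layers in total, two of them hidden, with 100 and 300 units) can be read as input, 100 units, 300 units, output. A minimal pure-Python forward pass with those sizes follows. The input size of 48 features, the ReLU hidden units, the softmax output over 23 classes (assuming one class per complex activity in the activity table), and the random initialization are all assumptions; the sketch omits training entirely and is not the authors' implementation.

```python
import math
import random

# Hidden sizes come from the table; N_FEATURES is a hypothetical input size and
# N_CLASSES assumes one output unit per complex activity (23 in the activity table).
N_FEATURES, HIDDEN_1, HIDDEN_2, N_CLASSES = 48, 100, 300, 23


def init_layer(n_in, n_out, rng):
    """Uniform random weights in [-1/sqrt(n_in), 1/sqrt(n_in)] and zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def build_dnn(seed=0):
    """Three weight layers connect the four layers: input -> 100 -> 300 -> output."""
    rng = random.Random(seed)
    sizes = [N_FEATURES, HIDDEN_1, HIDDEN_2, N_CLASSES]
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]


def forward(x, layers):
    """ReLU hidden layers followed by a softmax output layer."""
    if len(x) != N_FEATURES:
        raise ValueError(f"expected {N_FEATURES} features, got {len(x)}")
    for layer in layers[:-1]:
        x = relu(dense(x, layer))
    return softmax(dense(x, layers[-1]))


def predict(x, layers):
    """Index of the most probable class."""
    probs = forward(x, layers)
    return max(range(len(probs)), key=probs.__getitem__)
```

Calling `predict` on a 48-value feature vector returns an index between 0 and 22; with untrained random weights, that index carries no meaning until the network is trained on labeled windows.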

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Zhou, S. DeepMap+: Recognizing High-Level Indoor Semantics Using Virtual Features and Samples Based on a Multi-Length Window Framework. Sensors 2017, 17, 1214. https://doi.org/10.3390/s17061214
