An Iterative Distortion Compensation Algorithm for Camera Calibration Based on Phase Target

Abstract

1. Introduction

2. Principle and Methods

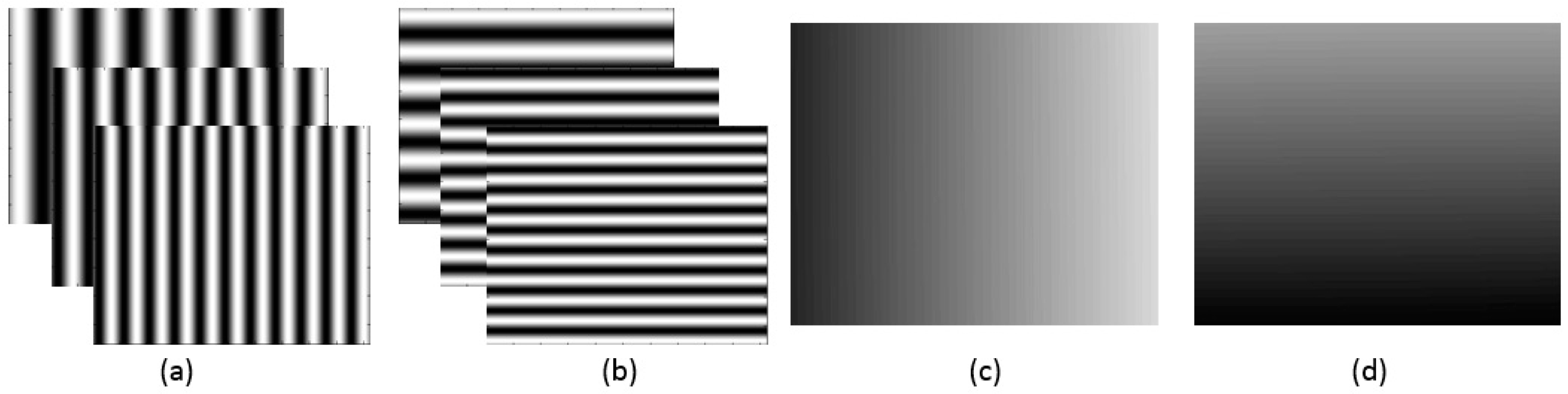

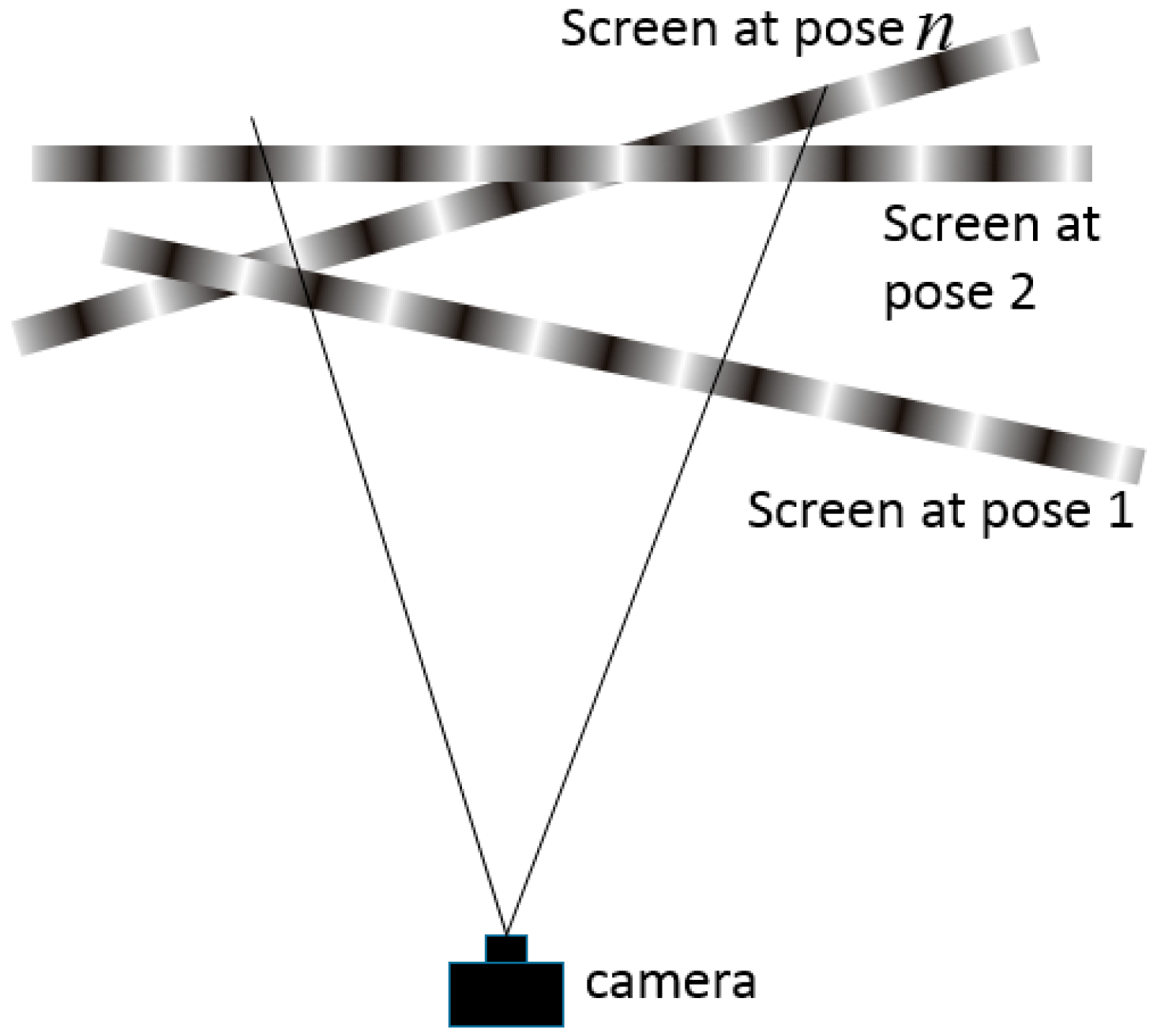

2.1. Phase Target

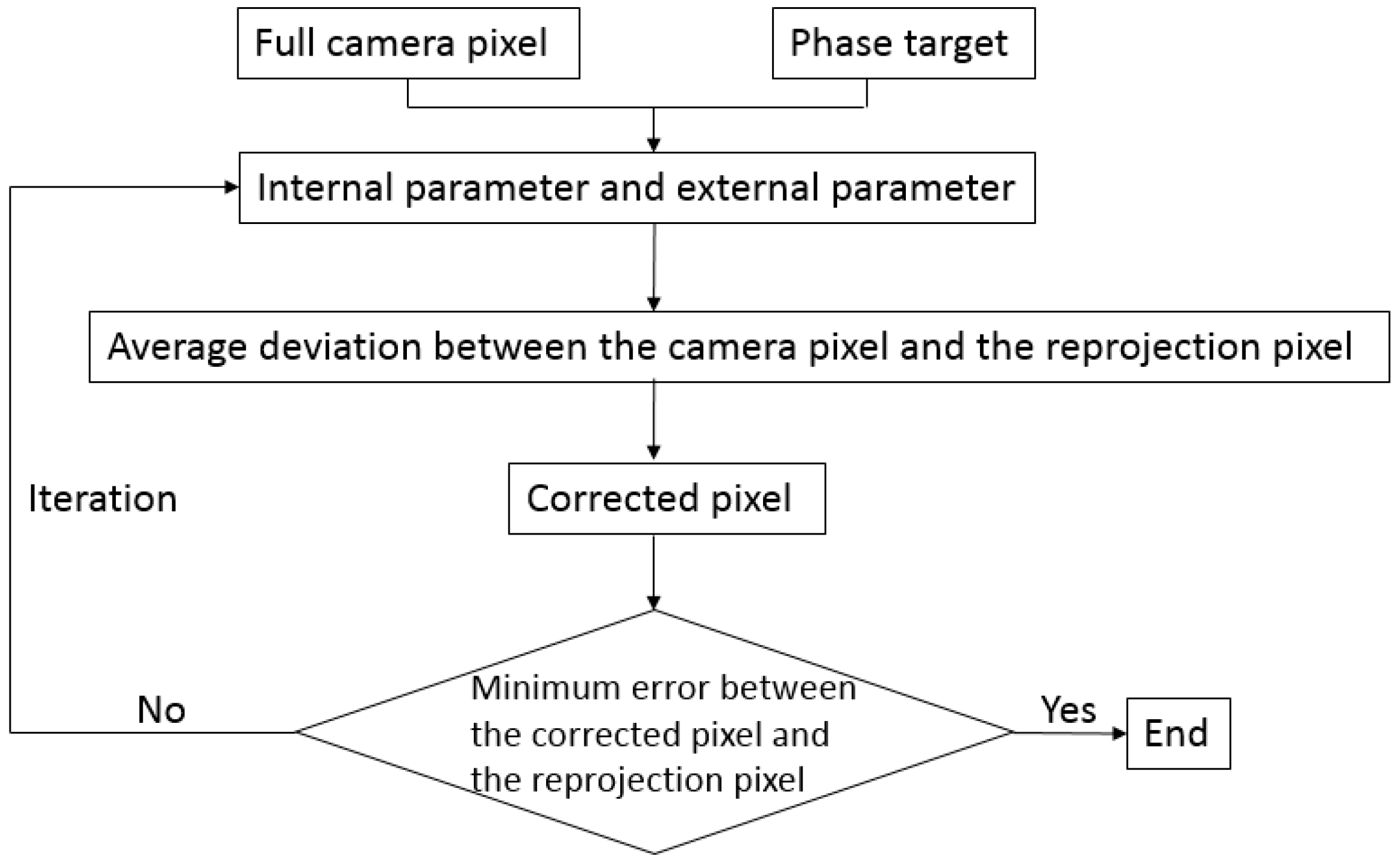

2.2. Calibration with Iterative Distortion Compensation Algorithm

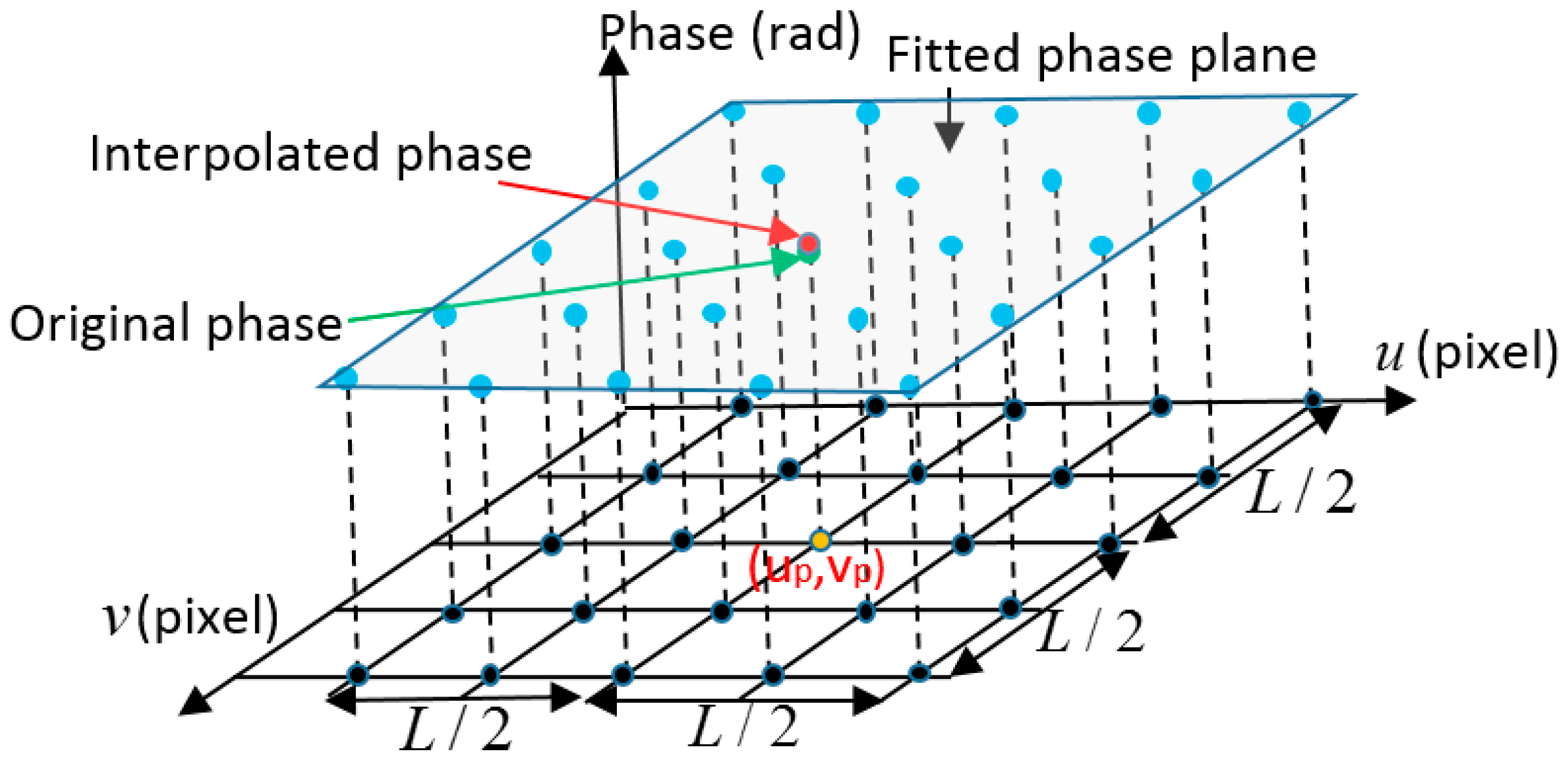

2.3. Compensation Algorithm for Phase Target Error

3. Results and Discussion

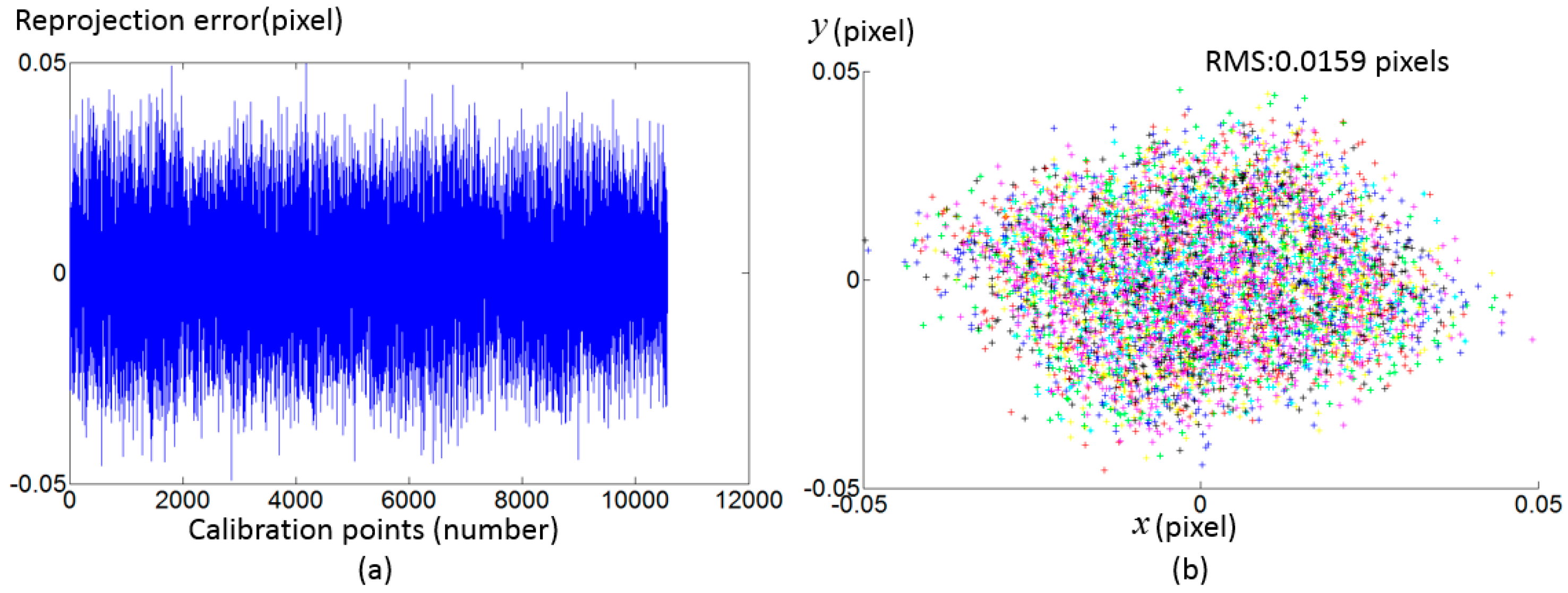

3.1. Simulation Study

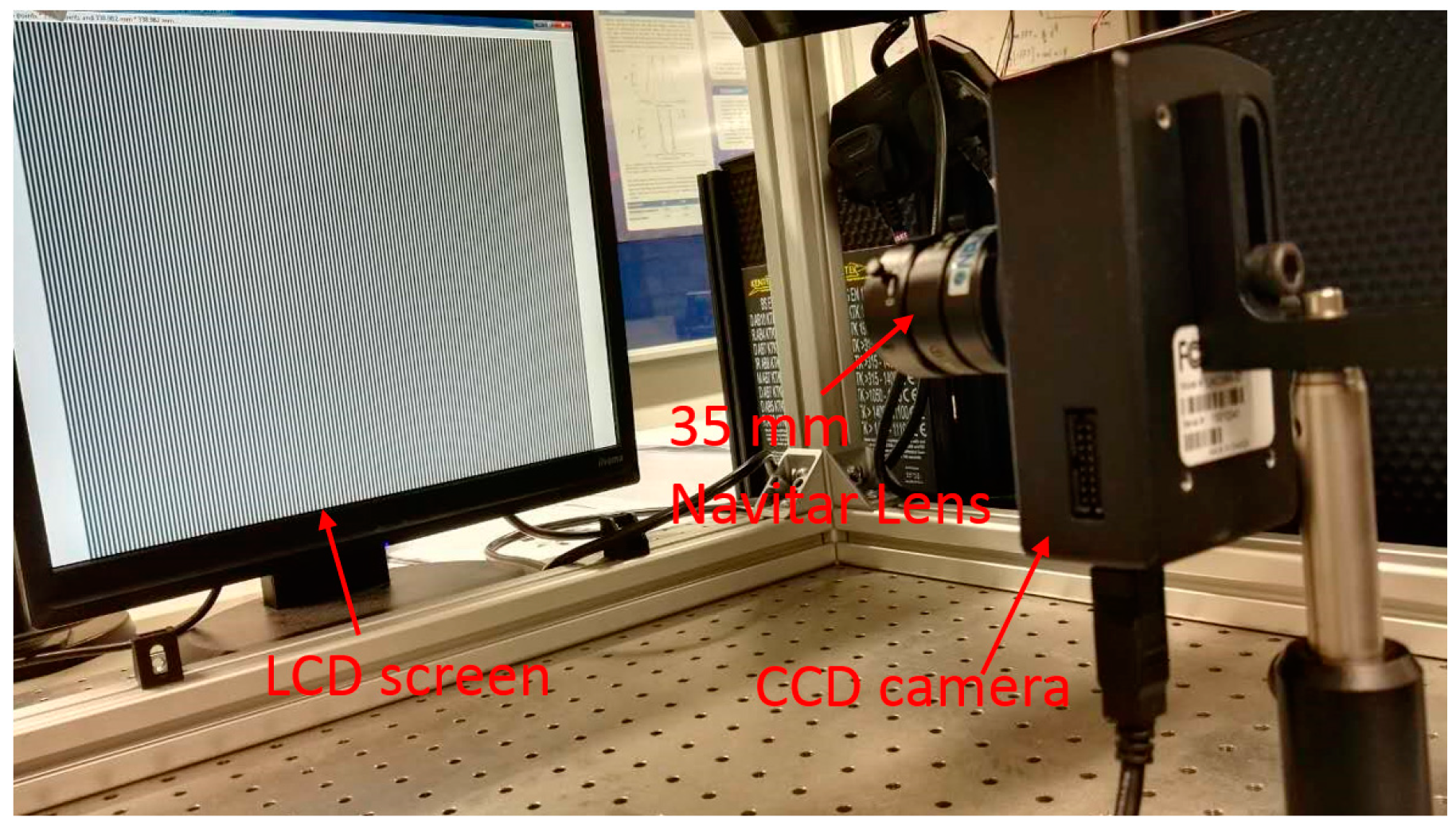

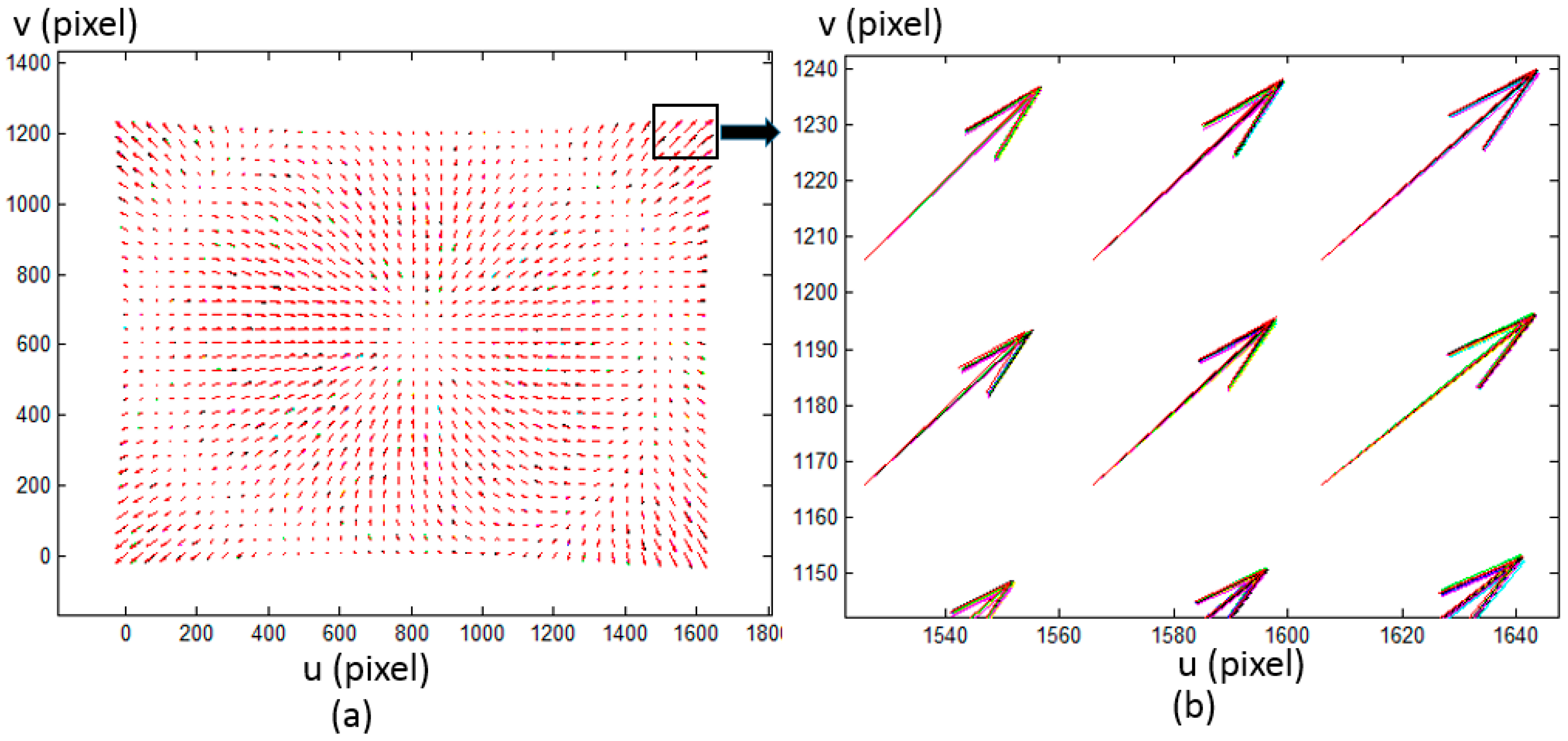

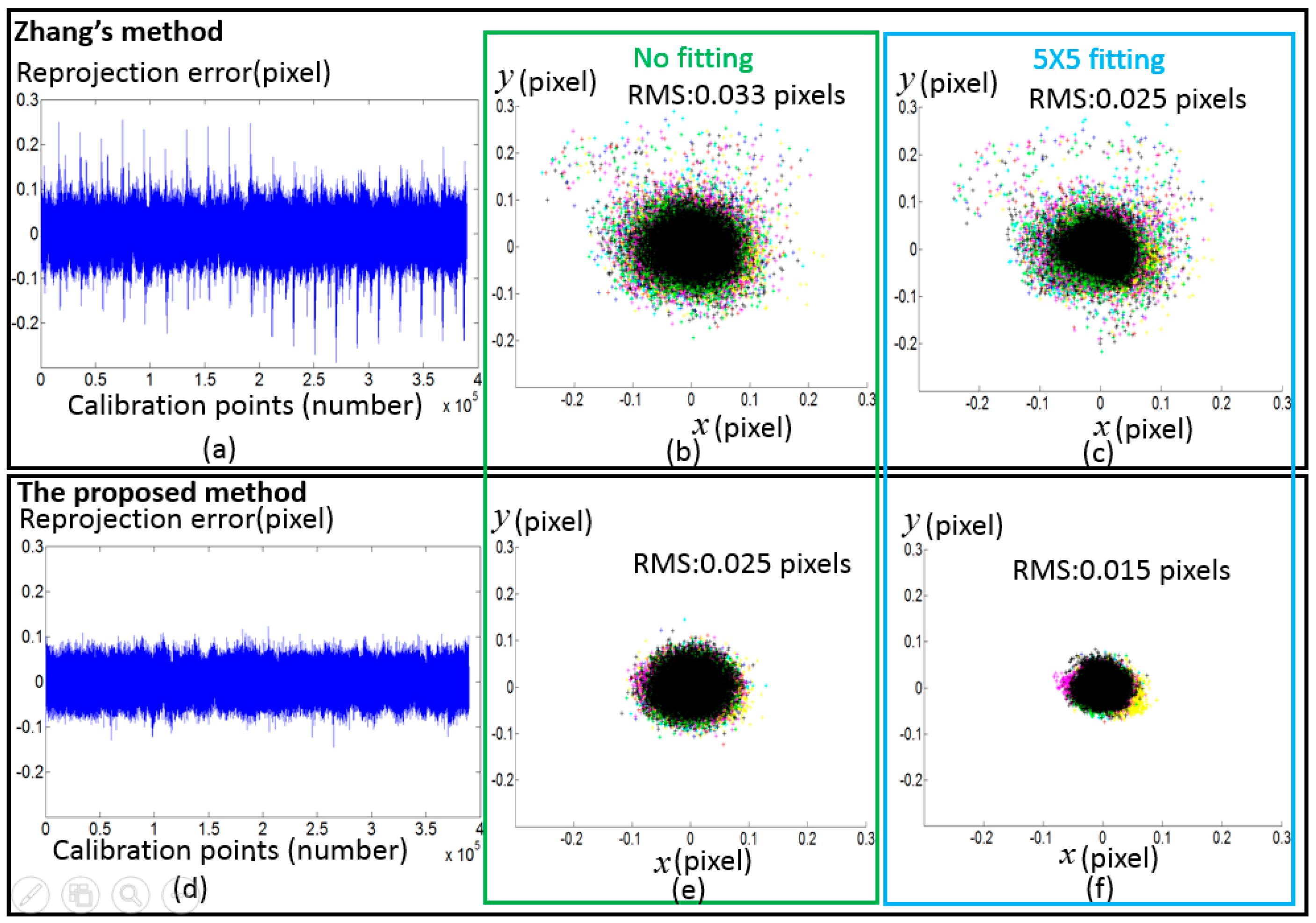

3.2. Experiment Study

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, Y.; Gao, F.; Ren, H.; Zhang, Z.; Jiang, X. An Iterative Distortion Compensation Algorithm for Camera Calibration Based on Phase Target. Sensors 2017, 17, 1188. https://doi.org/10.3390/s17061188