Multimodal Bio-Inspired Tactile Sensing Module for Surface Characterization

Abstract

1. Introduction

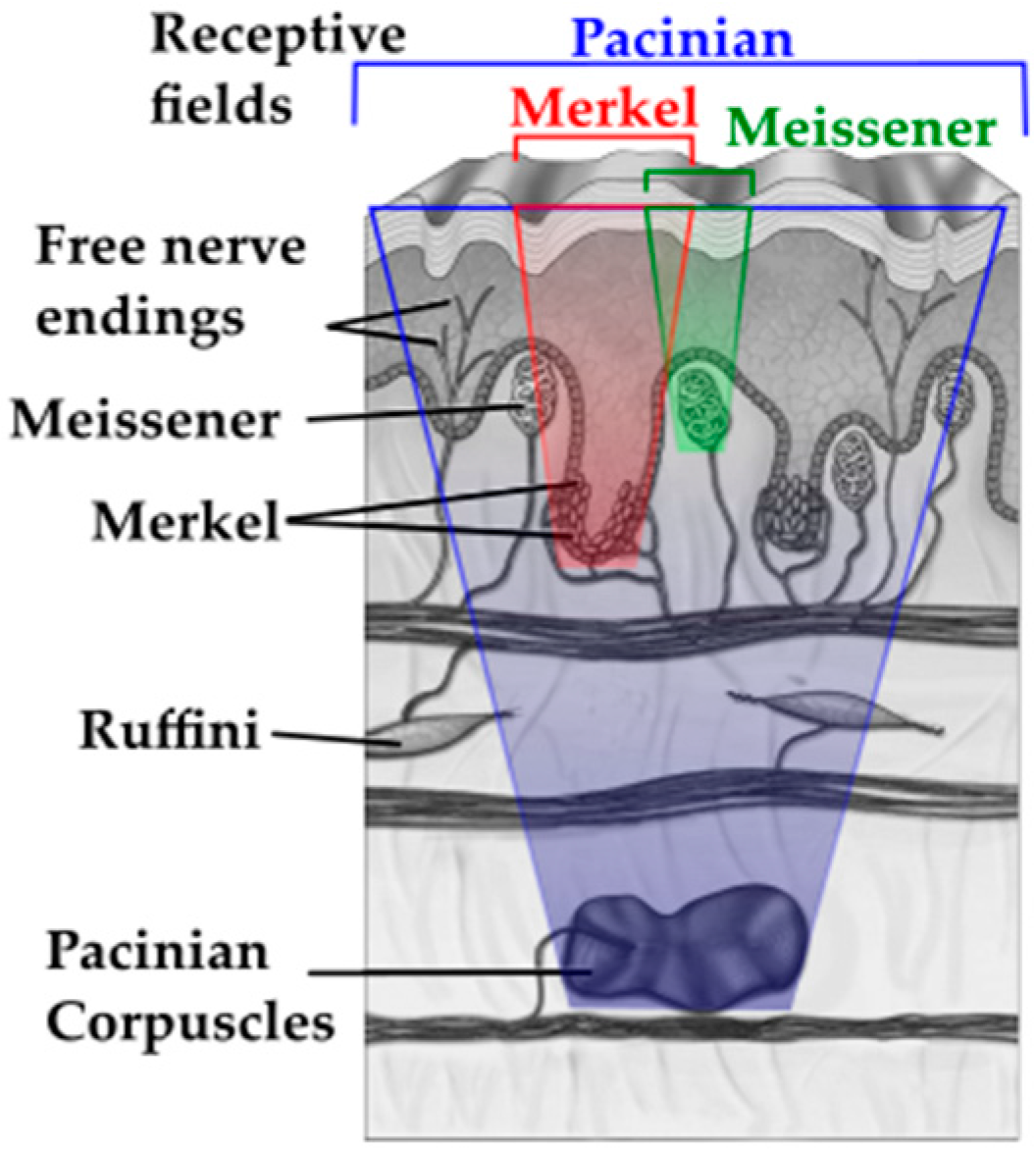

2. Literature Review

3. Bio-Inspired Tactile Sensor Module and Surface Characterization Experiments

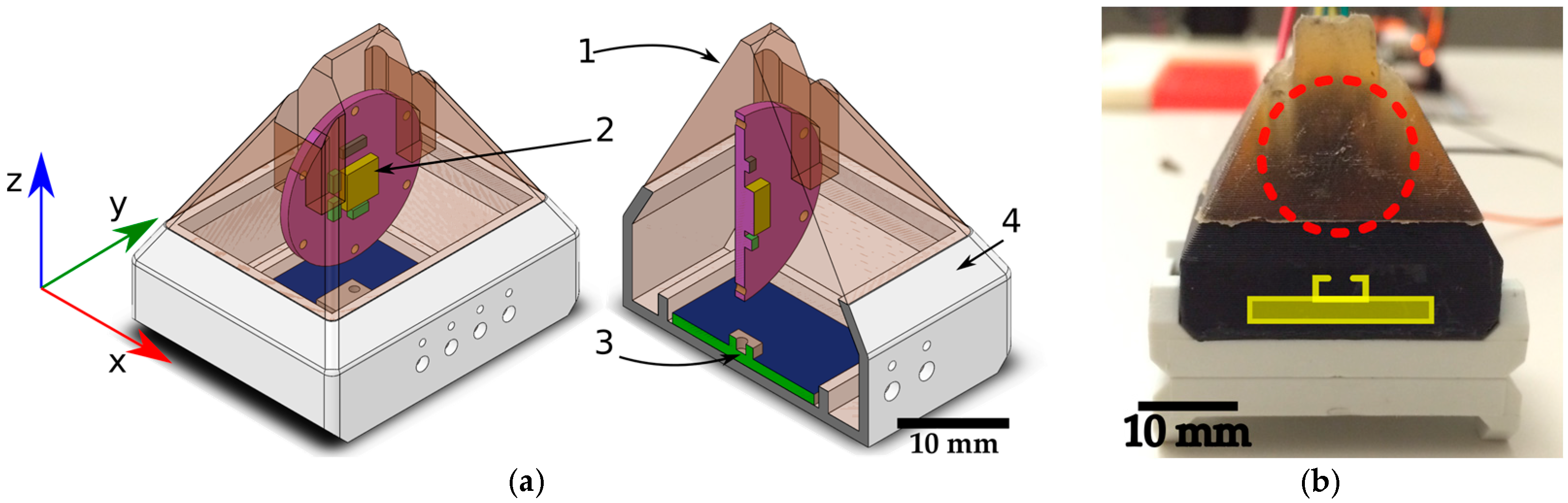

3.1. Bio-Inspired MEMS-Based Tactile Module

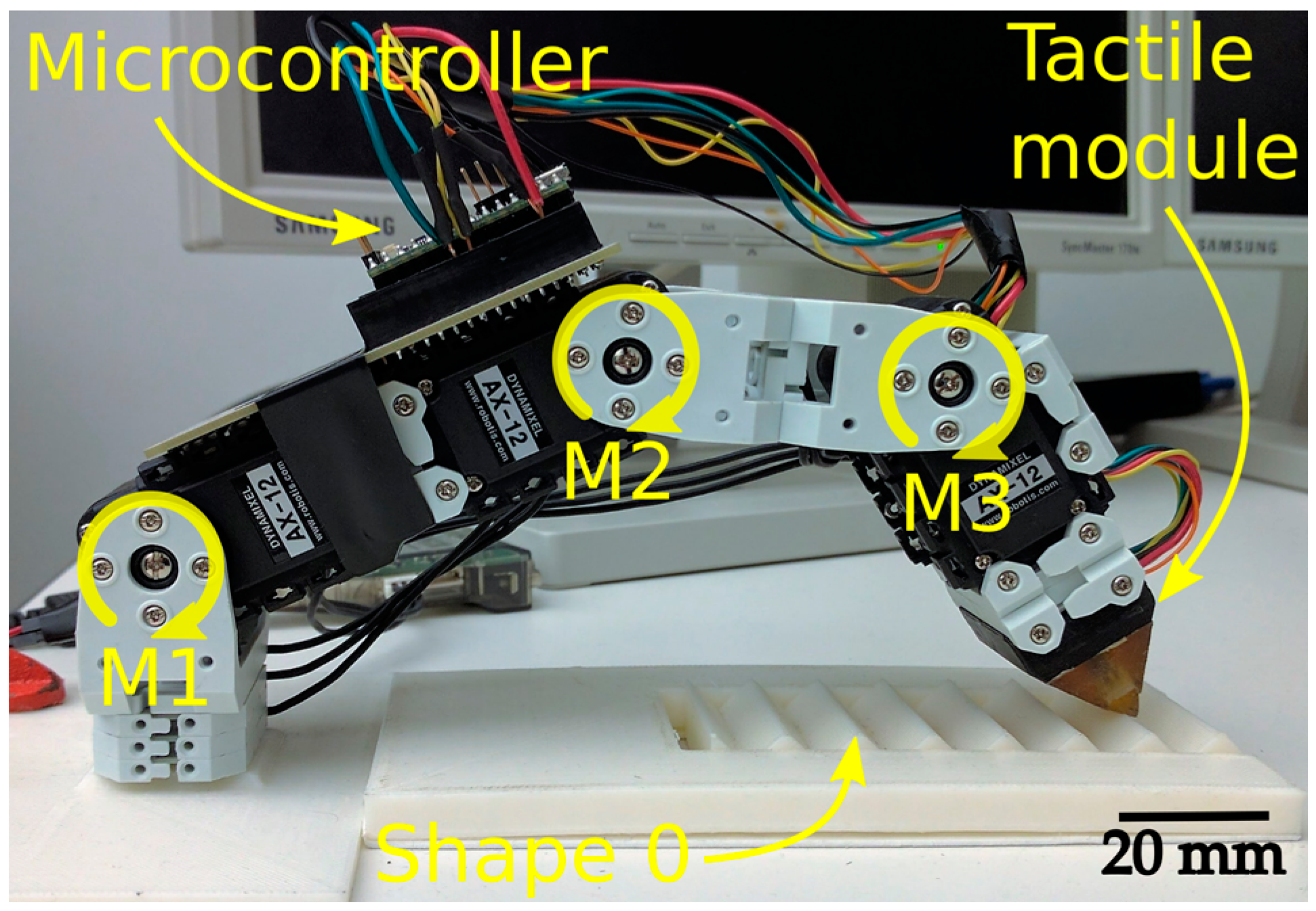

3.2. Experimental Setup

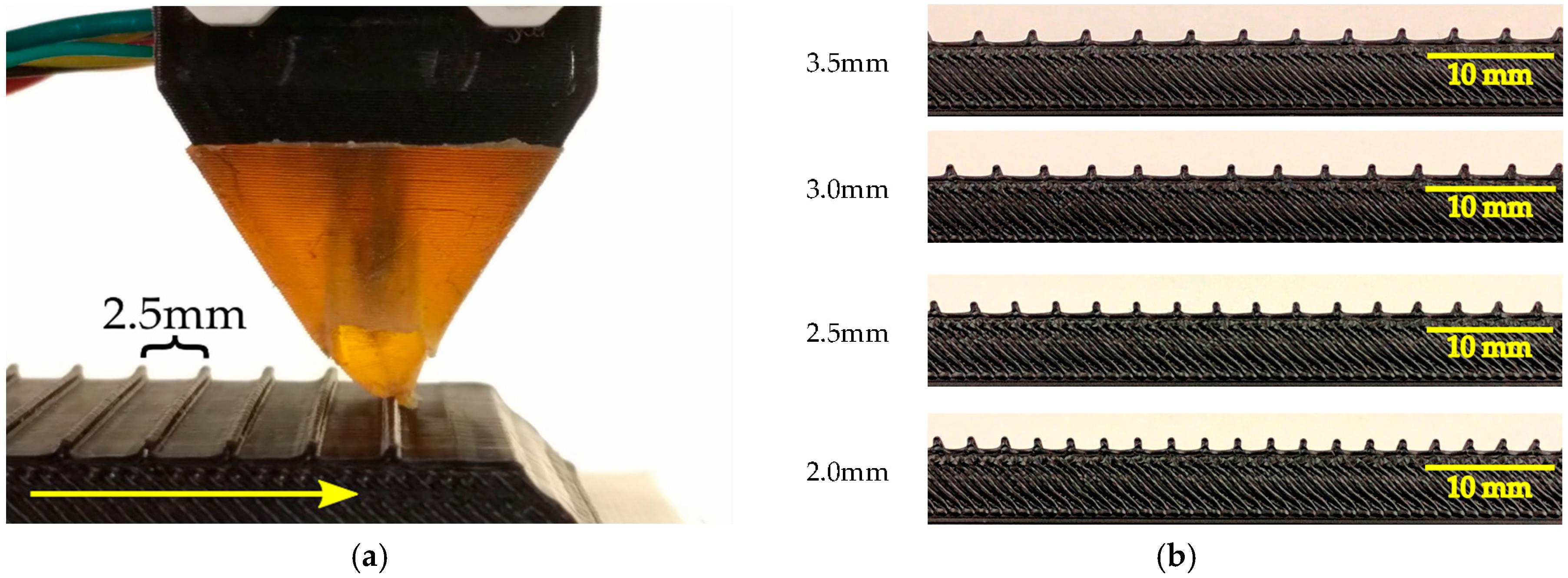

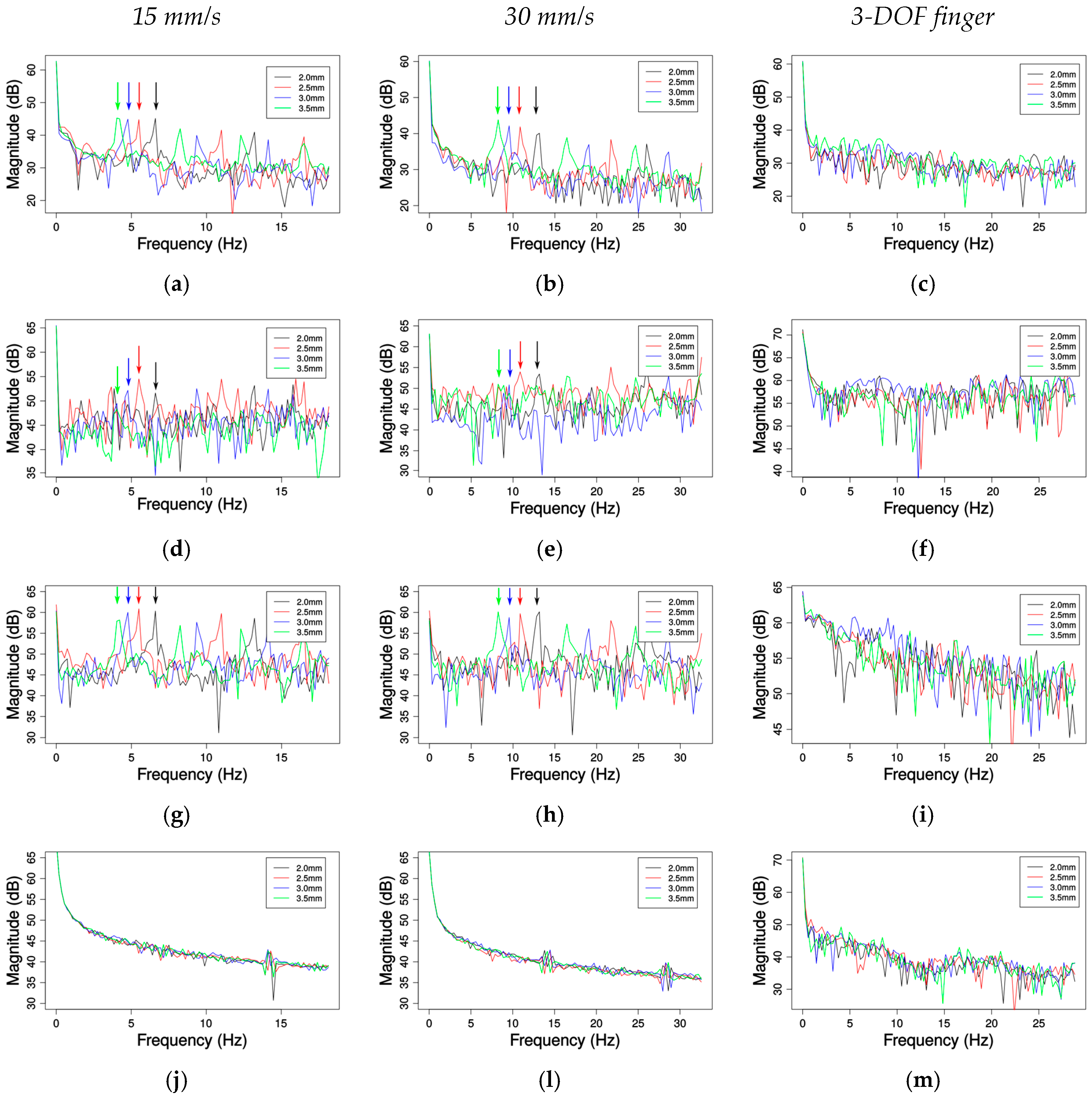

3.2.1. Experiment 1: Sensor Response to Ridged Surfaces (Grating Patterns)

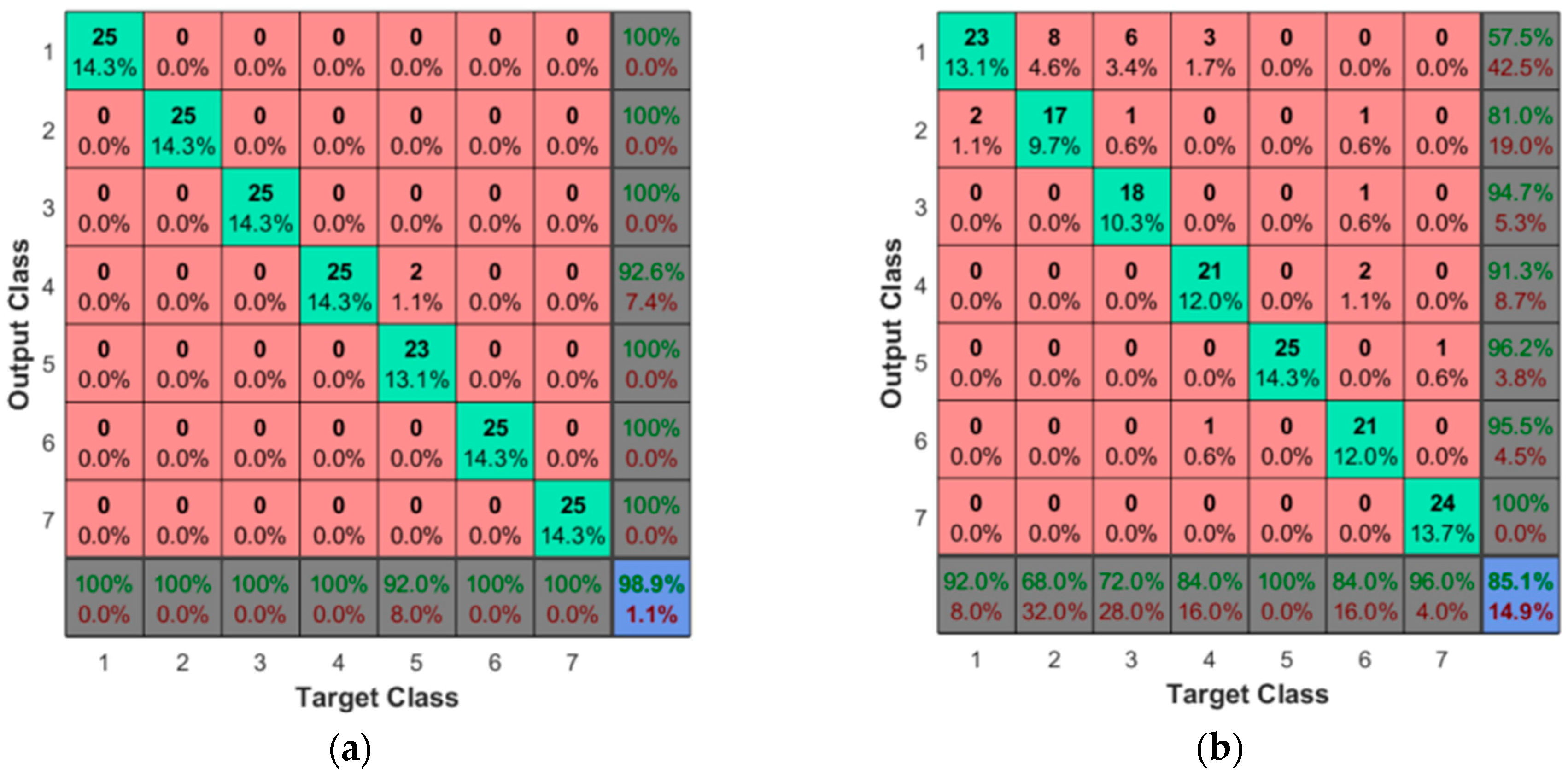

3.2.2. Experiment 2: Surface Profile Classification

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Receptor | Properties and Functions |
|---|---|
| Merkel disks | |
| Ruffini corpuscles | |
| Meissner’s corpuscles | |
| Pacinian corpuscles | |

| Parameter | Magnetic sensor (magnetometer) | Gravity sensor (accelerometer) | Angular velocity (gyroscope) | Pressure sensor (barometer) |
|---|---|---|---|---|
| Measurement range | ±2 to ±12 gauss | ±2 to ±16 g | ±245 to ±2000 dps | 50–115 kPa |
| Selected measurement range | ±2 gauss | ±2 g | ±245 dps | Not applicable |
| Frequency range | 3.125–100 Hz | 3.125–1600 Hz | 3.125–1600 Hz | 333.3 Hz (max) |
| Selected frequency | 100 Hz | 800 Hz | 800 Hz | 333.3 Hz |
| Resolution | 0.08 milligauss | 0.061 mg | 8.75 mdps | 0.15 kPa |
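A quick consistency check on the selected settings is whether every channel samples fast enough to represent the ridge frequencies reported in the frequency tables, the largest of which is 11.54 Hz. The sketch below assumes, which the table does not state outright, that each selected frequency is the rate at which that channel was sampled, and compares its Nyquist limit (half the sampling rate) with that largest frequency. The dictionary keys are illustrative names only.

```python
# Selected per-channel frequencies (Hz) from the sensor table above.
# ASSUMPTION: each value is the rate at which that channel was sampled.
SELECTED_RATE_HZ = {
    "magnetometer": 100.0,
    "accelerometer": 800.0,
    "gyroscope": 800.0,
    "barometer": 333.3,
}

# Largest expected frequency listed in the frequency tables (Hz).
HIGHEST_EXPECTED_HZ = 11.54


def nyquist_limit(rate_hz: float) -> float:
    """Highest frequency a uniformly sampled channel can represent without aliasing."""
    return rate_hz / 2.0


def headroom(rate_hz: float, signal_hz: float) -> float:
    """Ratio of the Nyquist limit to a signal frequency (> 1 means no aliasing)."""
    return nyquist_limit(rate_hz) / signal_hz


if __name__ == "__main__":
    for name, rate in SELECTED_RATE_HZ.items():
        print(f"{name:<13} Nyquist {nyquist_limit(rate):7.2f} Hz, "
              f"headroom {headroom(rate, HIGHEST_EXPECTED_HZ):5.1f}x")
```

Under that assumption even the 100 Hz magnetometer keeps a margin of a little over 4× above the highest tabulated frequency; whether higher harmonics of the ridge signal are also captured is a separate question.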

| 3.66 Hz | 4.17 Hz | 4.84 Hz | 5.77 Hz |
|---|---|---|---|
| 7.32 Hz | 8.33 Hz | 9.68 Hz | 11.54 Hz |
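The second row of expected frequencies is, within rounding, exactly twice the first. The source does not say what distinguishes the two rows. For a probe sliding at constant speed v over a grating of spatial period Λ, the temporal frequency of the ridge signal is f = v/Λ, so doubling the speed or halving the period would both double f. The sketch below checks the factor of two on the tabulated values and illustrates the relation; the speed and period numbers in it are hypothetical.

```python
# Expected frequencies (Hz) copied from the two rows of the table above.
ROW_1_HZ = [3.66, 4.17, 4.84, 5.77]
ROW_2_HZ = [7.32, 8.33, 9.68, 11.54]


def grating_frequency(speed_mm_s: float, period_mm: float) -> float:
    """Temporal frequency (Hz) seen when sliding at a constant speed over a
    periodic grating with the given spatial period: f = v / period."""
    return speed_mm_s / period_mm


def row_ratios(row_a, row_b):
    """Element-wise ratio row_b / row_a."""
    return [b / a for a, b in zip(row_a, row_b)]


if __name__ == "__main__":
    print([round(r, 3) for r in row_ratios(ROW_1_HZ, ROW_2_HZ)])
    # HYPOTHETICAL speed (mm/s) and period (mm): doubling v doubles f.
    print(grating_frequency(20.0, 2.0) / grating_frequency(10.0, 2.0))
```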

| Expected | 3.66 Hz | 4.17 Hz | 4.84 Hz | 5.77 Hz |
|---|---|---|---|---|
| Mean detected | 3.66 Hz | 4.10 Hz | 4.83 Hz | 5.86 Hz |
| Error, expected − mean detected (Hz) | 0 | 0.07 | 0.01 | −0.09 |
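The tables report a mean detected frequency but not how each frequency was detected. One common approach, assumed here rather than taken from the source, is to remove the mean from a sensor channel and take the largest bin of its magnitude spectrum. The sketch uses a plain recursive radix-2 Cooley–Tukey FFT so that it runs with the Python standard library alone. The 800 Hz rate matches the accelerometer and gyroscope setting in the sensor table; the 8192-sample record length and the clean synthetic sine are arbitrary illustrative choices.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]  # twiddle factor times odd term
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def dominant_frequency(samples, rate_hz):
    """Frequency (Hz) of the strongest non-DC spectral bin of a real signal."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("sample count must be a nonzero power of two")
    mean = sum(samples) / n
    spectrum = fft([s - mean for s in samples])  # mean removal suppresses the DC bin
    # For real input, bins 1..n/2 cover every non-DC frequency up to Nyquist.
    k_peak = max(range(1, n // 2 + 1), key=lambda k: abs(spectrum[k]))
    return k_peak * rate_hz / n


if __name__ == "__main__":
    rate = 800.0   # accelerometer/gyroscope setting from the sensor table
    n = 8192       # arbitrary power-of-two record: 10.24 s, bin spacing ~0.098 Hz
    f_true = 4.17  # one of the tabulated expected frequencies
    signal = [math.sin(2 * math.pi * f_true * i / rate) for i in range(n)]
    print(dominant_frequency(signal, rate))
```

The spectral resolution of this estimate is rate/n, about 0.098 Hz here. For a clean sinusoid the peak lands on the nearest bin, so the estimate can be off by up to roughly half a bin; longer records or interpolation between neighbouring bins would narrow that gap.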

| Expected | 7.32 Hz | 8.33 Hz | 9.68 Hz | 11.54 Hz |
|---|---|---|---|---|
| Mean detected | 7.32 Hz | 8.35 Hz | 9.67 Hz | 11.44 Hz |
| Error, expected − mean detected (Hz) | 0 | −0.02 | 0.01 | 0.10 |
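The error rows of the two tables above contain negative entries, which a root-mean-square value cannot have. The entries do match, value for value, the signed difference between the expected and mean detected frequencies. The sketch below recomputes those per-column differences from the tabulated values and, separately, a single non-negative RMS figure per table; the RMS summary is an illustration, not a number the source reports.

```python
import math

# Values copied from the two detection tables above (Hz).
EXPECTED_1 = [3.66, 4.17, 4.84, 5.77]
DETECTED_1 = [3.66, 4.10, 4.83, 5.86]
EXPECTED_2 = [7.32, 8.33, 9.68, 11.54]
DETECTED_2 = [7.32, 8.35, 9.67, 11.44]


def signed_errors(expected, detected):
    """Per-column error, expected minus mean detected (may be negative)."""
    return [e - d for e, d in zip(expected, detected)]


def rms(values):
    """Root mean square of a list of errors; always >= 0."""
    return math.sqrt(sum(v * v for v in values) / len(values))


if __name__ == "__main__":
    for exp, det in ((EXPECTED_1, DETECTED_1), (EXPECTED_2, DETECTED_2)):
        errs = signed_errors(exp, det)
        print([round(e, 2) for e in errs], round(rms(errs), 3))
```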

| Sensor | Accuracy (%) |
|---|---|
| Accelerometer X | 92 |
| Accelerometer Y | 92.6 |
| Accelerometer Z | 85.1 |
| Gyroscope X | 98.3 |
| Gyroscope Y | 93.3 |
| Gyroscope Z | 98.9 |
| Magnetometer X | 88 |
| Magnetometer Y | 86.9 |
| Magnetometer Z | 91.4 |
| Barometer | 98.9 |
| Accelerometer Y + Gyroscope Z + Magnetometer Z + Barometer | 100 |
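The last row of the accuracy table reports 100% for a combination of four channels, higher than any single channel. The table does not say how the channels were combined or classified. As an illustration only, the sketch below concatenates per-channel feature vectors (feature-level fusion) and scores a nearest-centroid classifier, a deliberately simple stand-in and not the classifier used in the study. The channel names, classes, and feature values are all hypothetical, and the classifier is scored on its own training samples, which a real evaluation would avoid. The toy data are built so that each channel alone is ambiguous while the fused vector separates the classes.

```python
import math
from collections import defaultdict

# HYPOTHETICAL toy data: one scalar feature per channel, two made-up classes.
TOY_DATA = [
    ({"gyro_z": [0.0], "barometer": [0.6]}, "A"),
    ({"gyro_z": [0.6], "barometer": [0.0]}, "A"),
    ({"gyro_z": [0.4], "barometer": [1.0]}, "B"),
    ({"gyro_z": [1.0], "barometer": [0.4]}, "B"),
]


def fuse(channel_features):
    """Feature-level fusion: concatenate per-channel features in sorted channel order."""
    fused = []
    for name in sorted(channel_features):
        fused.extend(channel_features[name])
    return fused


def fit_centroids(samples, labels):
    """Mean feature vector for each class label."""
    sums, counts = {}, defaultdict(int)
    for x, y in zip(samples, labels):
        total = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            total[i] += v
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}


def predict(centroids, x):
    """Label of the nearest centroid (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))


def accuracy_percent(centroids, samples, labels):
    """Share of samples whose predicted label matches the true one, in percent."""
    hits = sum(predict(centroids, x) == y for x, y in zip(samples, labels))
    return 100.0 * hits / len(labels)


def channel_accuracy(channels, data=TOY_DATA):
    """Fuse the chosen channels, fit centroids, and score on the same samples."""
    xs = [fuse({c: feats[c] for c in channels}) for feats, _ in data]
    ys = [label for _, label in data]
    return accuracy_percent(fit_centroids(xs, ys), xs, ys)


if __name__ == "__main__":
    for chans in (["gyro_z"], ["barometer"], ["gyro_z", "barometer"]):
        print(chans, channel_accuracy(chans))
```

On these toy values each single channel scores 50% and the fused pair scores 100%, which mirrors the qualitative pattern in the table without reproducing its numbers.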

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alves de Oliveira, T.E.; Cretu, A.-M.; Petriu, E.M. Multimodal Bio-Inspired Tactile Sensing Module for Surface Characterization. Sensors 2017, 17, 1187. https://doi.org/10.3390/s17061187
