Optimal Fusion Estimation with Multi-Step Random Delays and Losses in Transmission

Abstract

1. Introduction

2. Observation Model and Preliminaries

2.1. Signal Process

- (A1) The signal process has zero mean, and its autocovariance function is expressed in a separable form in terms of known matrices.
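As a hedged illustration of (A1), the sketch below checks numerically that the autocovariance of a stationary scalar AR(1) process factorizes. It assumes that "separable form" means K(k, s) = A_k B_s for s ≤ k. The AR(1) example, the parameters `phi` and `sigma2`, and the helper names are hypothetical choices made for illustration and are not taken from the paper.

```python
import numpy as np

def ar1_autocov(k, s, phi, sigma2):
    """E[x_k x_s] for a stationary zero-mean AR(1) process with
    coefficient phi and stationary variance sigma2 (assumed example)."""
    return sigma2 * phi ** abs(k - s)

def factor_A(k, phi, sigma2):
    # Factor that depends only on the later time index k.
    return sigma2 * phi ** k

def factor_B(s, phi):
    # Factor that depends only on the earlier time index s.
    return phi ** (-s)

def check_separable(n, phi=0.95, sigma2=2.0):
    """Return True if K(k, s) == A_k * B_s for all 1 <= s <= k <= n."""
    return all(
        bool(np.isclose(factor_A(k, phi, sigma2) * factor_B(s, phi),
                        ar1_autocov(k, s, phi, sigma2)))
        for k in range(1, n + 1)
        for s in range(1, k + 1)
    )
```

Calling `check_separable(10)` compares both sides of the factorization for every pair of time indices up to 10. For a vector signal the factors would be matrices rather than scalars.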

2.2. Multisensor Observation Model

- (A2) The random parameter matrices of the sensors are independent sequences of independent random matrices, whose entries have known means and second-order moments.

- (A3) The noises of the multisensor observation model are white sequences with zero mean and known second-order moments.

2.3. Measurement Model with Transmission Random Delays and Packet Losses

- (A4) The random variables modelling the transmission delays and losses are independent sequences of independent Bernoulli random variables.

- (A5) The noises of the transmission model are white sequences with zero mean and known second-order moments.

- (A6) The processes involved in the observation and transmission models are mutually independent.
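A minimal sketch of how such a transmission model could be simulated for one scalar sensor is given below. It rests on assumptions that the text above does not confirm: at each sampling time at most one output arrives (the current one or one delayed by up to D steps), nothing arrives with the remaining probability, and a lost packet is recorded as zero before the transmission noise is added. The function name `transmit` and its parameters are hypothetical.

```python
import numpy as np

def transmit(z, delay_probs, noise_std=0.0, seed=0):
    """Simulate a channel with random delays of 0..D steps and losses.

    z           : 1-D array of sensor outputs
    delay_probs : delay_probs[d] is the assumed probability that the
                  output sent d steps ago is received now (sum <= 1)
    Returns (y, gamma): received values and 0/1 delay indicators.
    """
    rng = np.random.default_rng(seed)
    z = np.asarray(z, dtype=float)
    n, D = len(z), len(delay_probs) - 1
    p_loss = max(0.0, 1.0 - sum(delay_probs))
    gamma = np.zeros((n, D + 1), dtype=int)
    y = np.zeros(n)
    for k in range(n):
        # Outcome d <= D means "delay of d steps"; D + 1 means "lost".
        d = rng.choice(D + 2, p=[*delay_probs, p_loss])
        if d <= D and k - d >= 0:
            gamma[k, d] = 1
            y[k] = z[k - d]
        # Additive transmission noise (zero when noise_std == 0).
        y[k] += noise_std * rng.standard_normal()
    return y, gamma
```

Each column of `gamma` is a sequence of Bernoulli variables, and each row contains at most a single 1, which is the indicator of the delay that actually occurred at that time.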

3. Problem Statement

3.1. Stacked Observation Model

- (P1) The random parameter matrices form a sequence of independent random matrices with known means; the entries of the associated second-order moment matrices are computed from the moments of the individual matrix entries.

- (P2) The two noise processes are zero-mean sequences with known second-order moments.

- (P3) The random matrices associated with the Bernoulli variables of (A4) are sequences of independent random matrices with known means and known covariance matrices. Moreover, the Hadamard product properties are used to evaluate expectations that involve these random matrices and any deterministic matrix S.

- (P4) The signal and the other processes of the stacked model are mutually independent.
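The role of the Hadamard product mentioned in (P3) can be illustrated with a standard identity: for a random diagonal matrix Diag(g) and a deterministic matrix S, E[Diag(g) S Diag(g)] = E[g gᵀ] ∘ S, where ∘ is the entrywise product. The sketch below verifies this by exact enumeration, assuming independent Bernoulli entries g_i with P[g_i = 1] = p_i. Whether the paper's random matrices have exactly this structure is an assumption, and the function names are hypothetical.

```python
import itertools
import numpy as np

def expected_sandwich(p, S):
    """Exact E[Diag(g) S Diag(g)] for independent Bernoulli g_i with
    P[g_i = 1] = p_i, computed by enumerating all 0/1 outcomes."""
    p = np.asarray(p, dtype=float)
    S = np.asarray(S, dtype=float)
    total = np.zeros_like(S)
    for outcome in itertools.product([0, 1], repeat=len(p)):
        g = np.array(outcome)
        prob = np.prod(np.where(g == 1, p, 1.0 - p))
        total += prob * (np.diag(g) @ S @ np.diag(g))
    return total

def hadamard_formula(p, S):
    """Same expectation written as E[g g^T] (Hadamard product) S."""
    p = np.asarray(p, dtype=float)
    K = np.outer(p, p)        # E[g_i g_j] = p_i p_j for i != j
    np.fill_diagonal(K, p)    # E[g_i^2] = p_i for 0/1 variables
    return K * np.asarray(S, dtype=float)
```

The enumeration grows as 2^n and is only meant as a check. The Hadamard form is what makes such expectations cheap to evaluate inside a recursive algorithm.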

3.2. Innovation Approach to the LS Linear Estimation Problem
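As a rough illustration of the innovation approach named in this heading, the sketch below builds the innovations of a finite set of scalar observations by Gram-Schmidt orthogonalization and combines them into the LS linear estimate of a scalar signal. It is a generic batch version that works from known covariances, not the recursive algorithm of the paper. The function name and the inputs `Sxy` and `Syy` are hypothetical.

```python
import numpy as np

def innovation_estimate(Sxy, Syy, y):
    """LS linear estimate of a scalar x from scalar observations y_1..y_n.

    Sxy : (n,) cross-covariances E[x y_h]
    Syy : (n, n) covariance matrix of the observations
    y   : (n,) observed values
    """
    Sxy, Syy, y = (np.asarray(a, dtype=float) for a in (Sxy, Syy, y))
    n = len(y)
    # Row h of T holds the coefficients of the innovation mu_h in terms of y.
    T = np.eye(n)
    for h in range(n):
        for j in range(h):
            cross = Syy[h] @ T[j]          # E[y_h mu_j]
            pi_j = T[j] @ Syy @ T[j]       # variance of mu_j
            T[h] -= (cross / pi_j) * T[j]  # remove the part predicted by mu_j
    mu = T @ y                                 # innovations
    Pi = np.einsum("hi,ij,hj->h", T, Syy, T)   # innovation variances
    gains = (T @ Sxy) / Pi                     # E[x mu_h] / Pi_h
    return gains @ mu
```

Because the innovations are uncorrelated, the estimate is a plain sum of one term per innovation, and it coincides with the batch expression `Sxy @ np.linalg.solve(Syy, y)`.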

4. Least-Squares Linear Signal Estimators

4.1. Signal Predictor and Filter

- For , using Equation (3), it holds that , for , and, by denoting , , we obtain that

- For , since , for , we can see that

4.2. Estimators of the Observations

4.3. Signal Fixed-Point Smoother

4.4. Recursive Algorithms: Computational Procedure

- (1)

- (2) LS linear prediction and filtering recursive algorithm. At the sampling time k, once the previous iteration is finished and its results are known, the proposed prediction and filtering algorithm operates as follows:

- (2a)

- (2b)

- (2c)

- (3) LS linear fixed-point smoothing recursive algorithm. Once the filter and the filtering error covariance matrix are available, the proposed smoothing estimators and the corresponding error covariance matrix are obtained as follows:

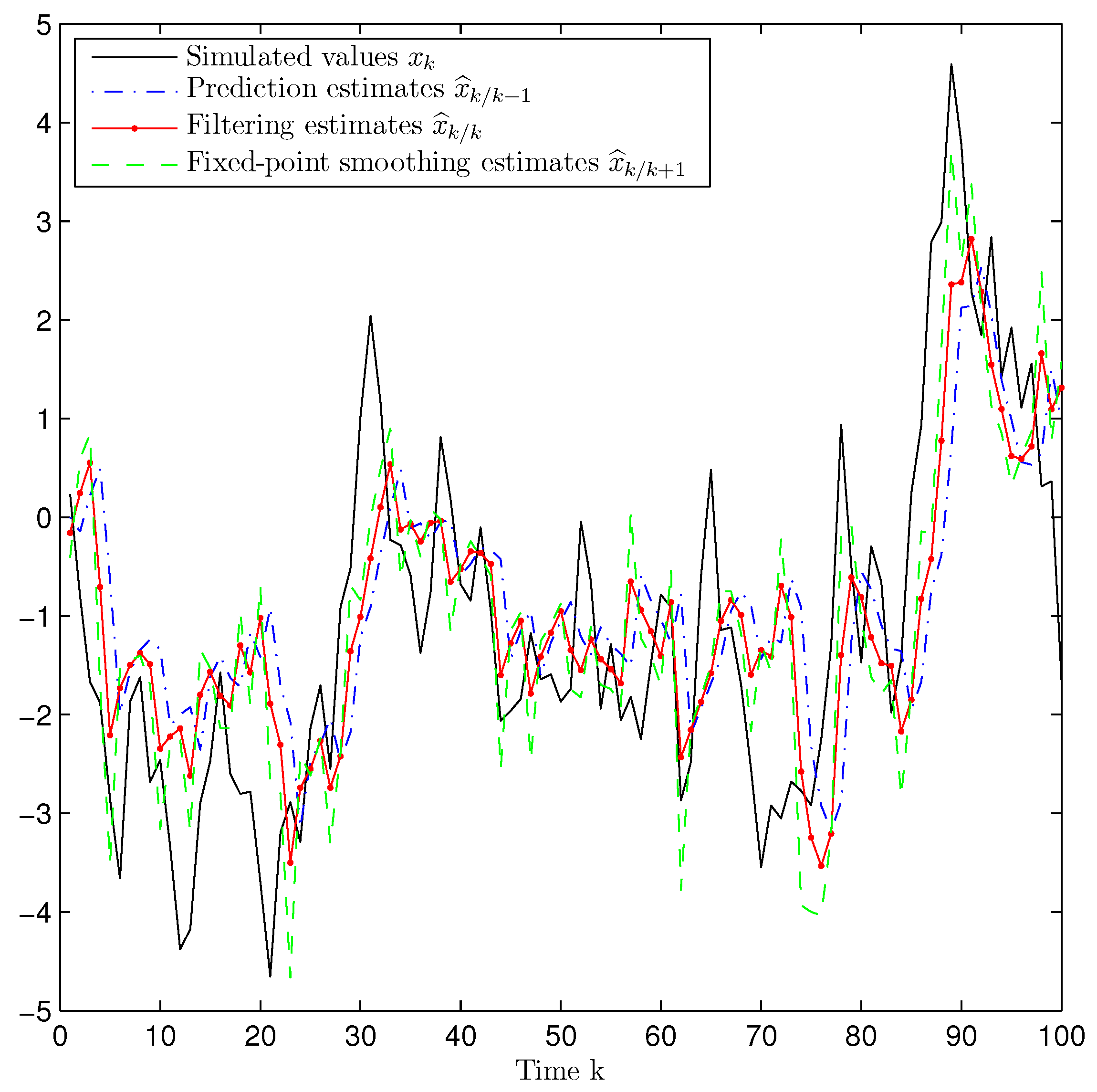

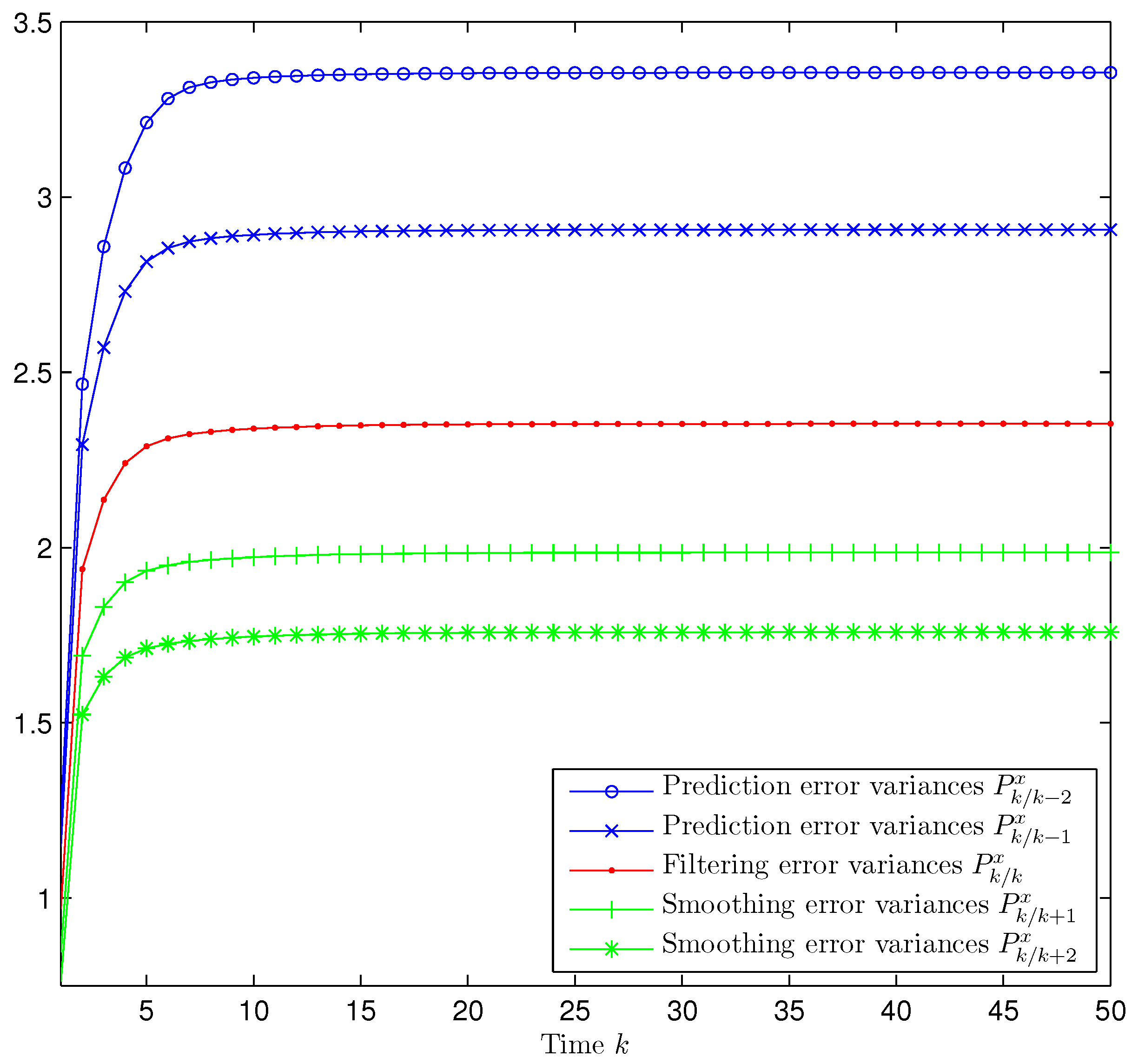

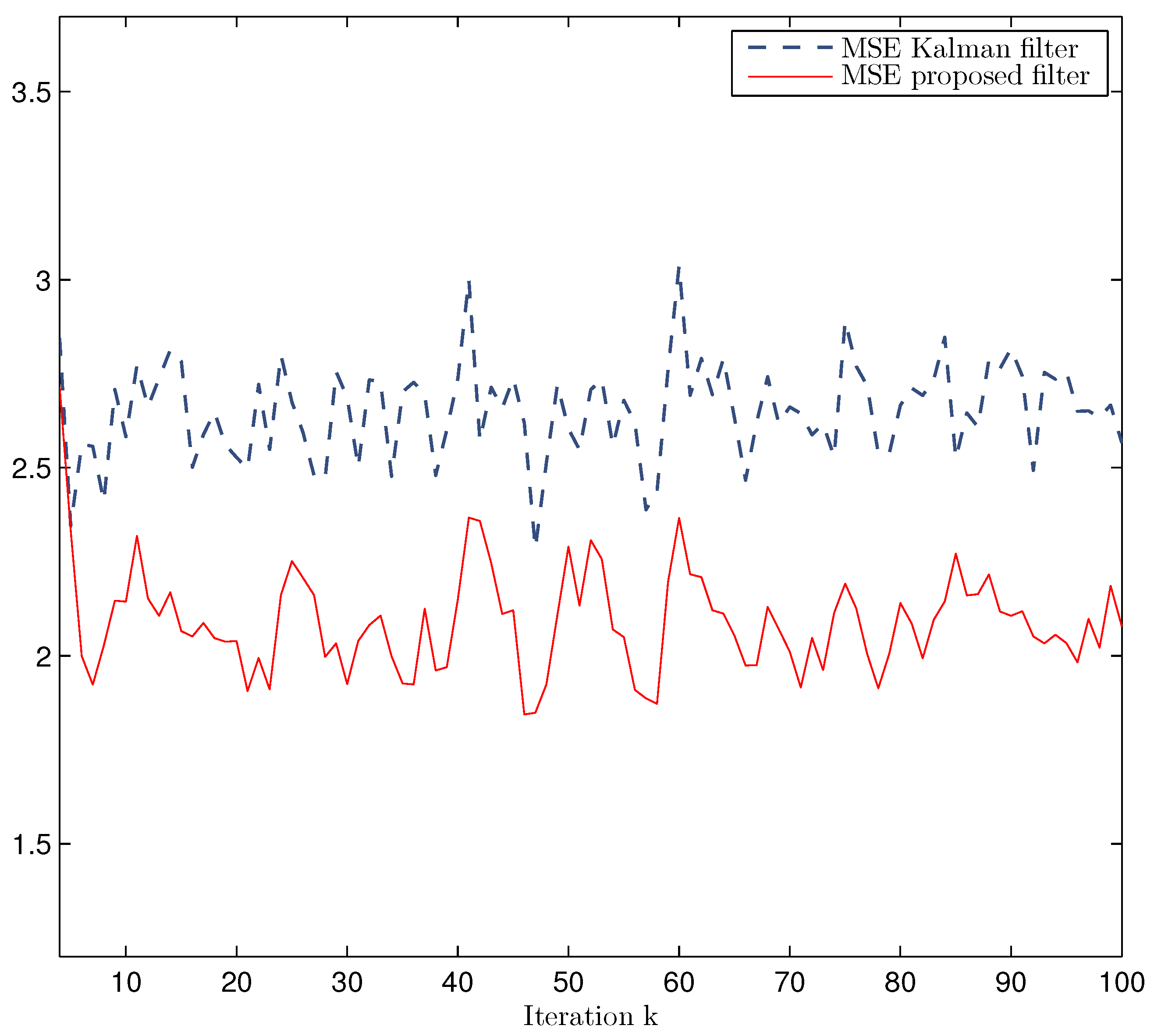

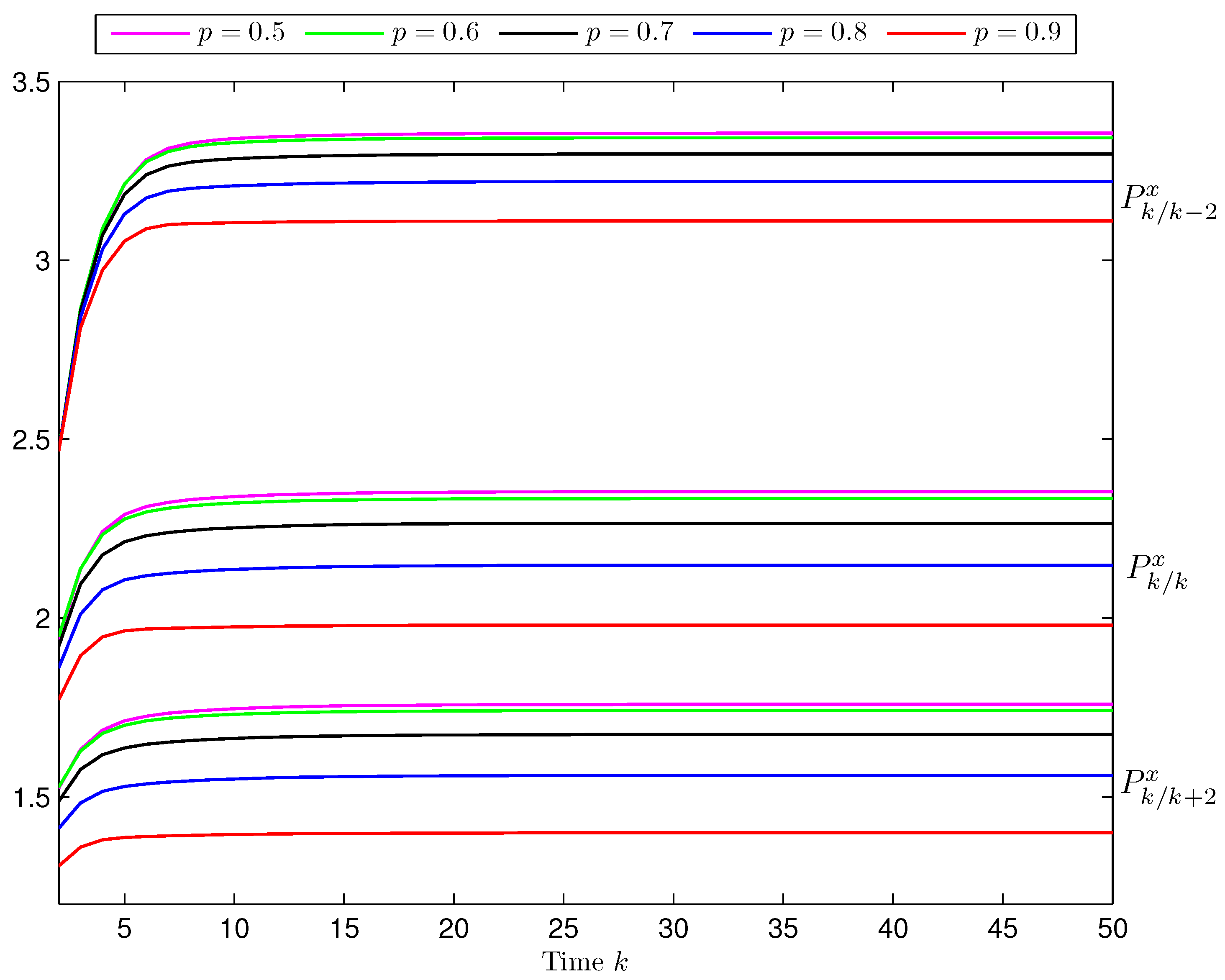

5. Computer Simulation Results

- For , and , where , , , and is a zero-mean Gaussian white process with unit variance. The sequences and are mutually independent, and , are white processes with the following time-invariant probability distributions:

- is uniformly distributed over the interval ;

- The Bernoulli random variables have the same time-invariant probabilities in both sensors.

- The additive noises are defined by , where , , , and is a zero-mean Gaussian white process with unit variance. Clearly, the additive noises , , are correlated at any time, with
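The correlation between the additive noises can be reproduced with a short sketch. It assumes that each sensor noise is a constant multiple c_i of one common zero-mean, unit-variance Gaussian white process, so that the cross-moment between sensors i and j at any time is c_i c_j. The constants passed as `c` and the function name are hypothetical and are not the values used in the paper.

```python
import numpy as np

def correlated_noises(c, n, seed=0):
    """Generate noises v[i, k] = c[i] * eta[k] driven by one common
    standard Gaussian white sequence eta (assumed construction)."""
    rng = np.random.default_rng(seed)
    eta = rng.standard_normal(n)
    v = np.outer(np.asarray(c, dtype=float), eta)  # row i holds sensor i
    return v, eta

def sample_second_moments(v):
    """Sample estimate of E[v_k v_k^T] averaged over time."""
    return (v @ v.T) / v.shape[1]
```

With this construction the sample second-moment matrix equals the outer product of `c` with itself times the sample mean of `eta**2`, which tends to 1 as the number of samples grows.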

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Optimal Fusion Estimation with Multi-Step Random Delays and Losses in Transmission. Sensors 2017, 17, 1151. https://doi.org/10.3390/s17051151