Stroboscope Based Synchronization of Full Frame CCD Sensors

Abstract

1. Introduction

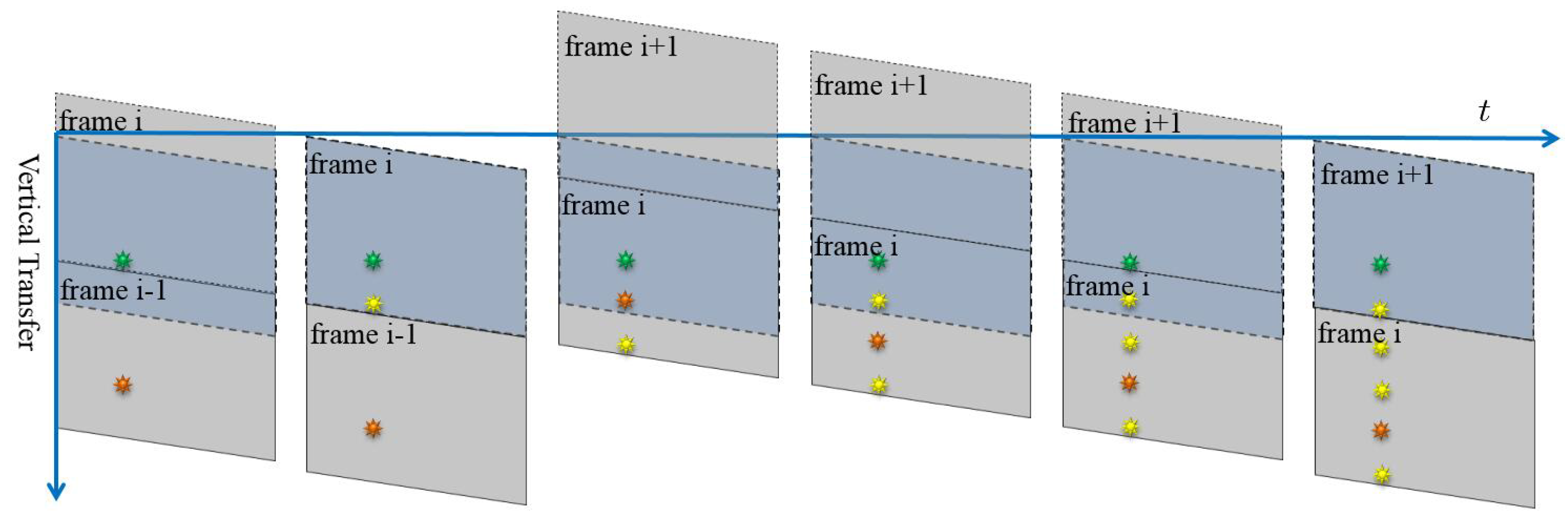

- Aligning the frames from different video sequences. The smear dots of the stroboscope serve as time stamps, and the relative position between the stroboscope and its smear dots in the images is adjusted to align the frames from different sequences.

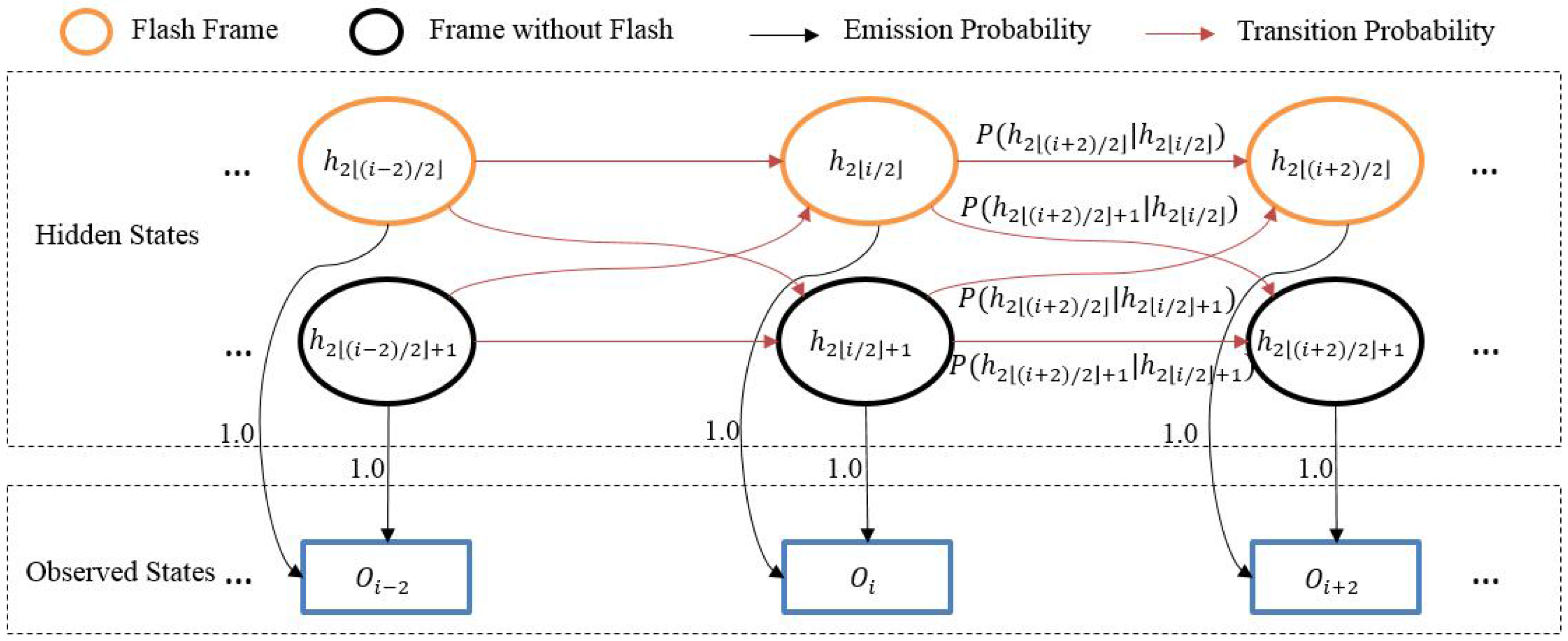

- Matching the sequences. The stroboscope generates periodic flashes, which mark the overlapping content and allow the time offset between cameras to be determined. The sequences are matched by aligning these flashes with a hidden Markov model.

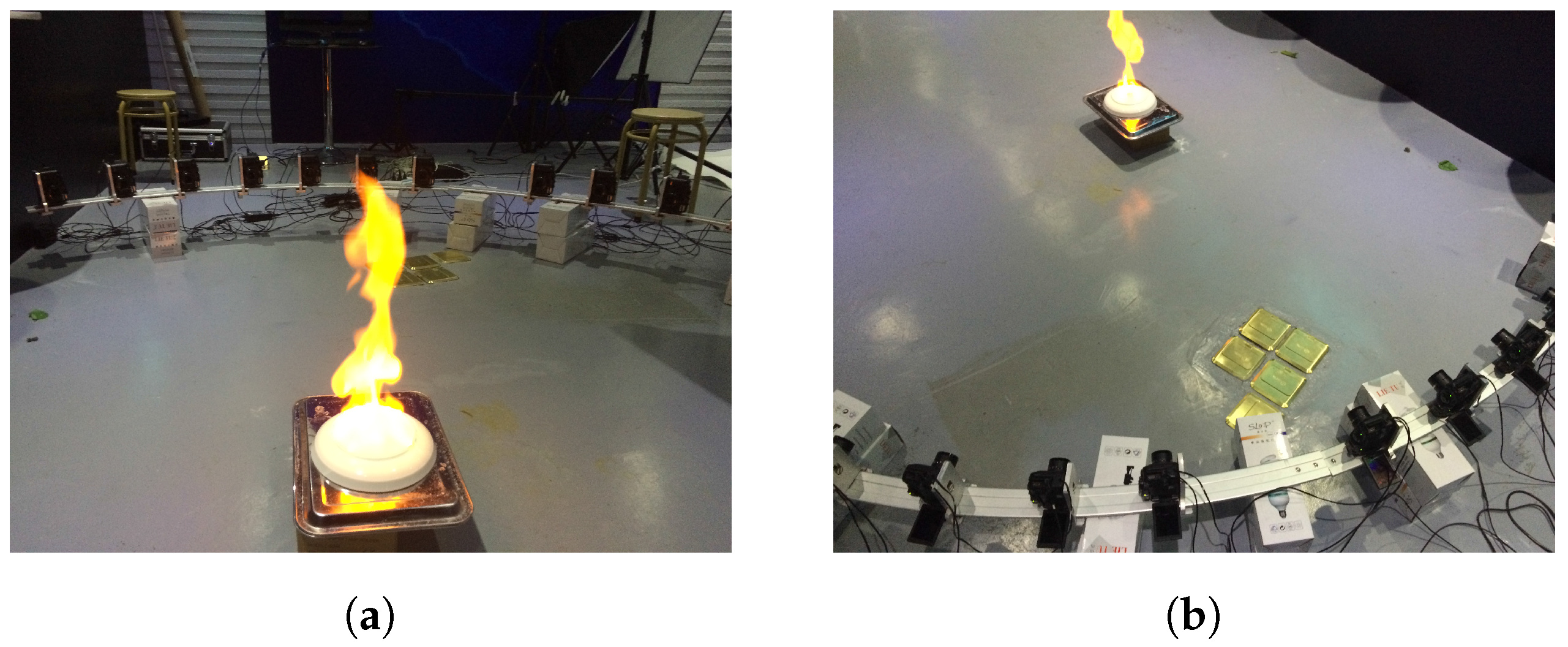

2. Materials and Methods

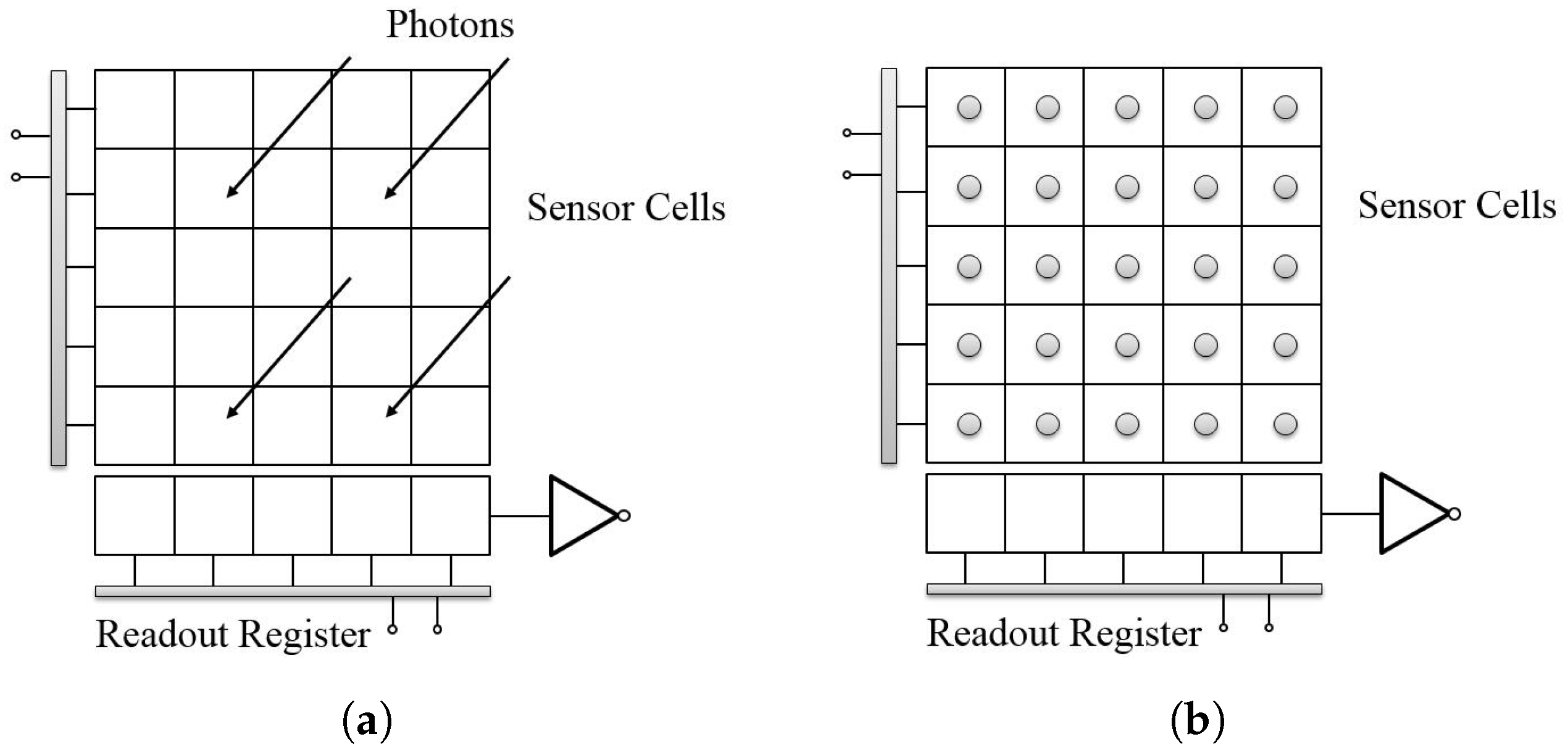

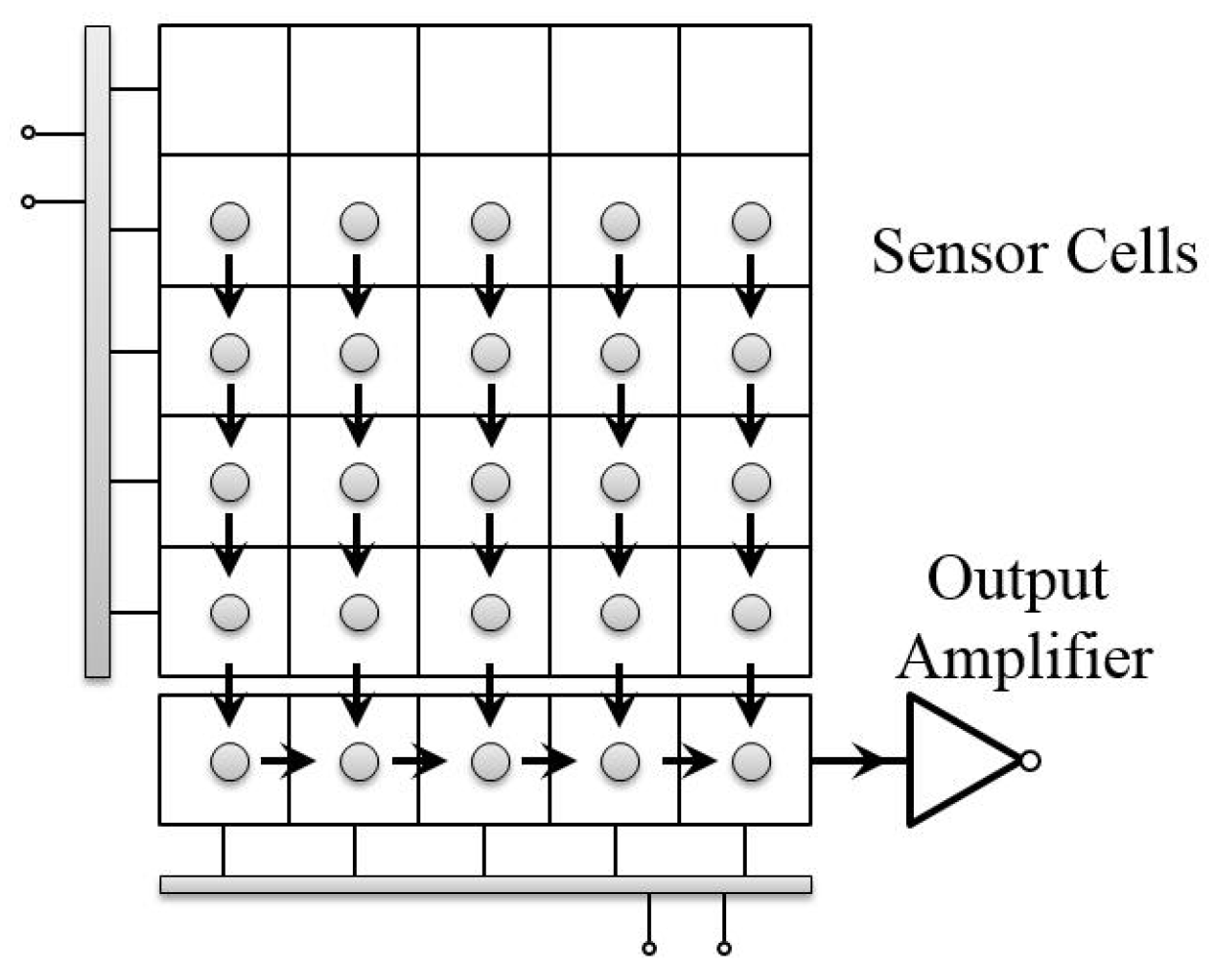

2.1. Full Frame CCD Architecture

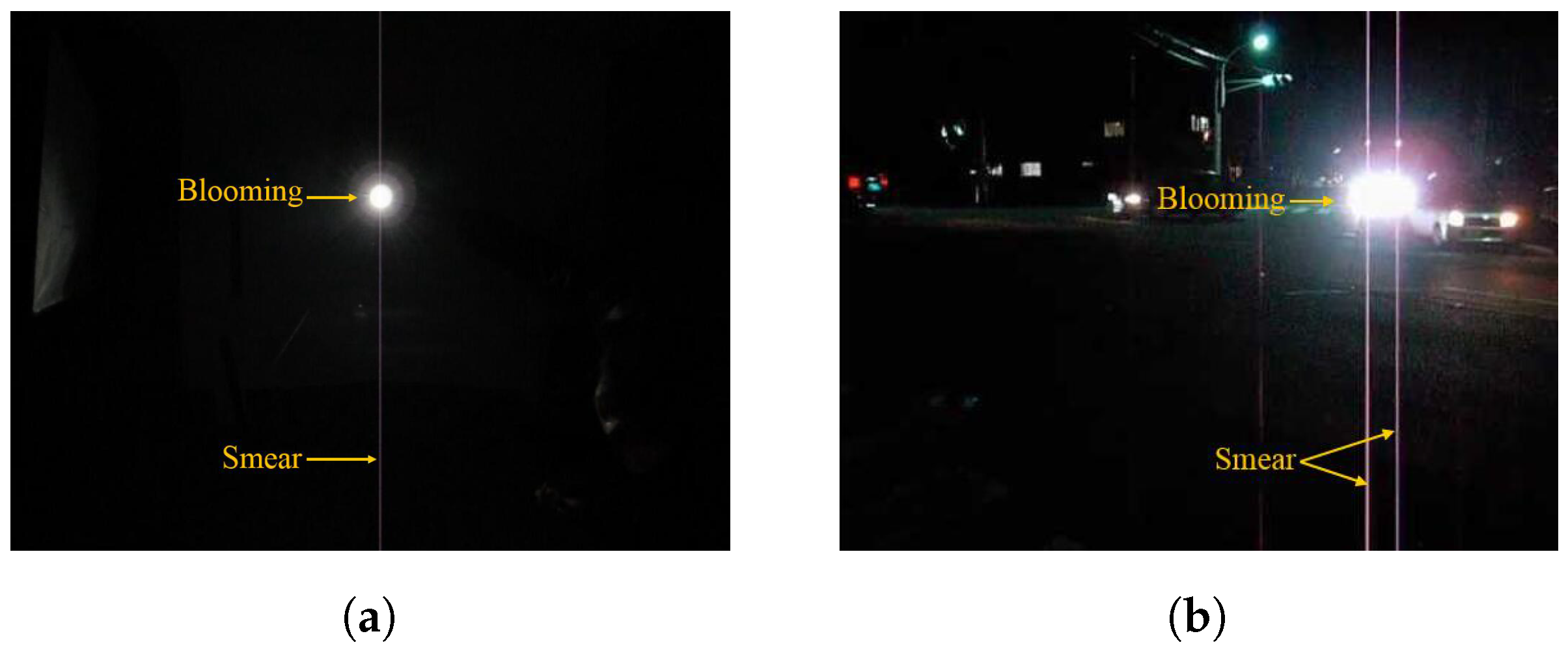

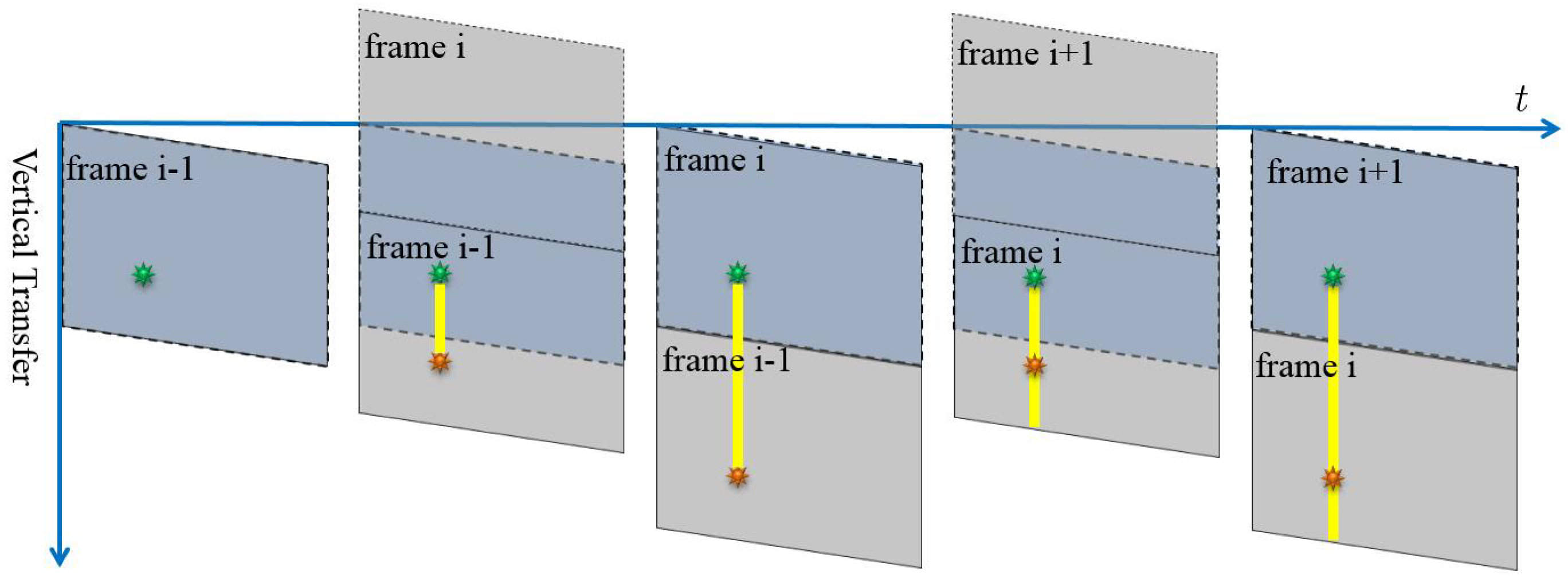

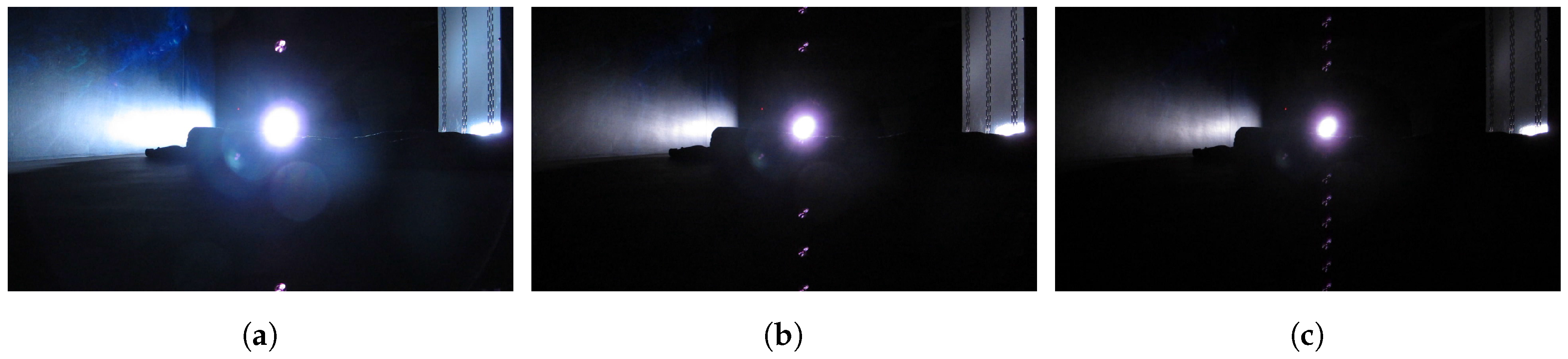

2.2. CCD Smear

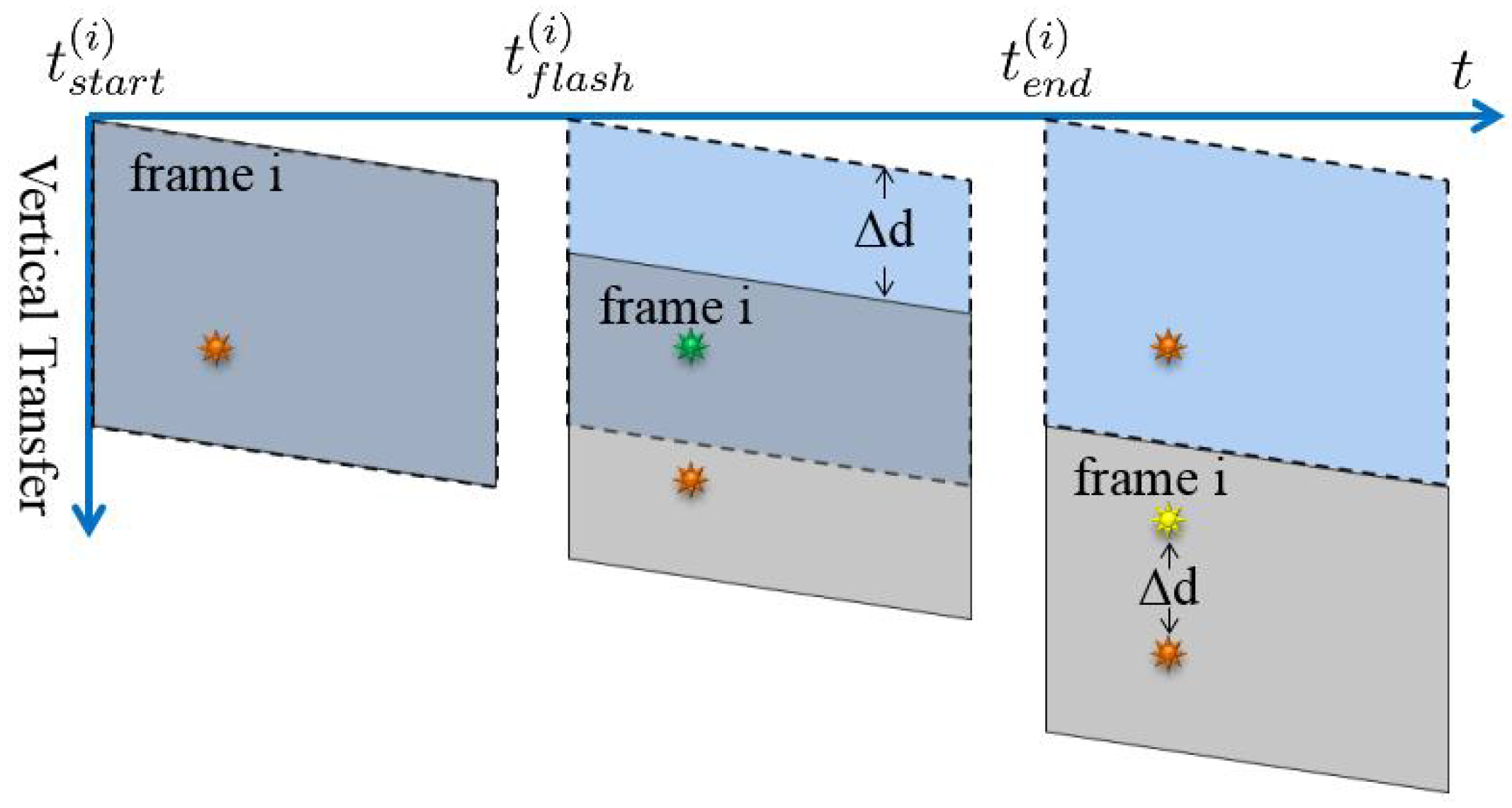

2.3. Smear of a Stroboscope

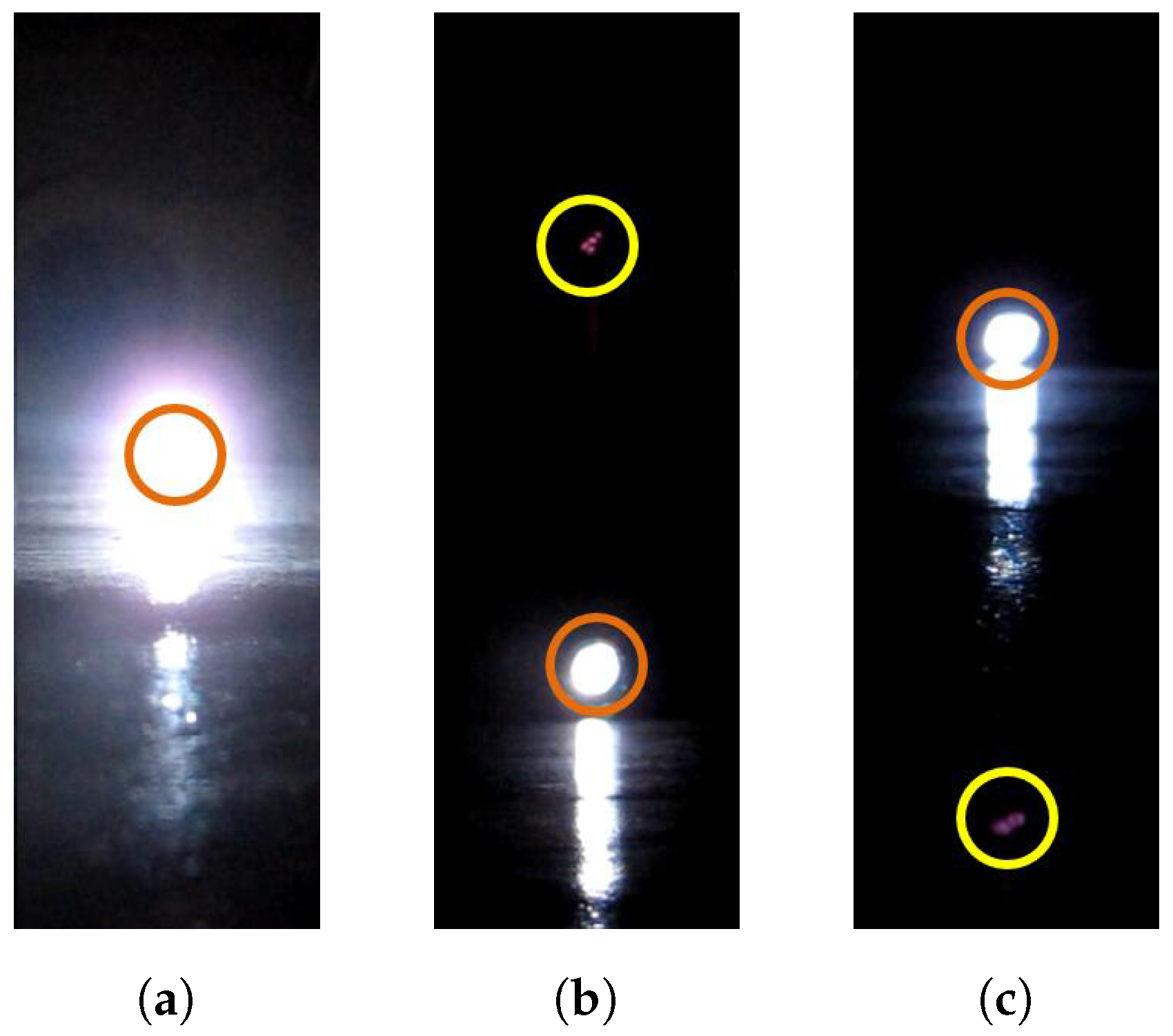

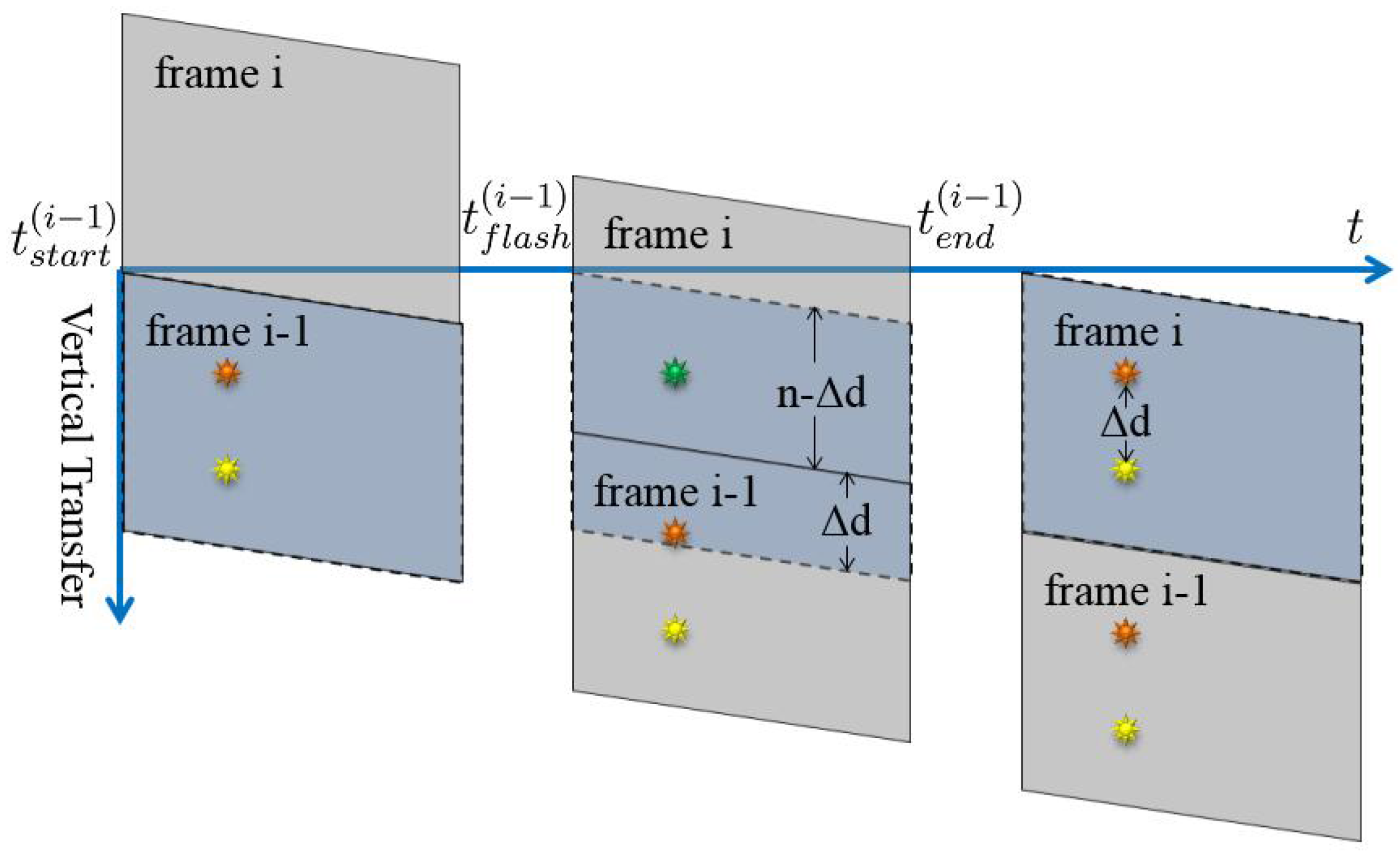

2.4. Frame Alignment

- Set the flash rate of the strobe to the same value as the frame rate of cameras;

- Keep the single smear dot on the same side of the light source in all camera images;

- Adjust the smear dot positions to make them equidistant from the light source.
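The two image-side conditions in the list above can be checked numerically. The following is a minimal sketch, not the authors' procedure: it assumes the row coordinates of the light source and of its smear dot have already been measured in each camera image, and the names `SmearObservation`, `alignment_report` and `tolerance_rows` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SmearObservation:
    """One camera's view of the strobe (all field names are hypothetical)."""
    camera: str
    source_row: float  # image row of the strobe light source
    dot_row: float     # image row of the single smear dot


def alignment_report(observations, tolerance_rows=1.0):
    """Check the 'same side' and 'equidistant' conditions across cameras.

    Returns (same_side, aligned, corrections). `corrections` maps each camera
    to the row shift that would move its smear dot to the mean signed offset.
    `tolerance_rows` is an assumed tolerance, not a value from the paper.
    """
    if not observations:
        raise ValueError("at least one observation is required")

    # Signed distance from the light source to its smear dot, per camera.
    offsets = {o.camera: o.dot_row - o.source_row for o in observations}
    values = list(offsets.values())

    # Condition 1: every smear dot lies on the same side of the light source.
    same_side = all(v > 0 for v in values) or all(v < 0 for v in values)

    # Condition 2: the dots are equidistant from the source, within tolerance.
    mean_offset = sum(values) / len(values)
    corrections = {cam: mean_offset - v for cam, v in offsets.items()}
    aligned = same_side and all(
        abs(c) <= tolerance_rows for c in corrections.values()
    )
    return same_side, aligned, corrections


if __name__ == "__main__":
    obs = [
        SmearObservation("cam0", source_row=240, dot_row=300),
        SmearObservation("cam1", source_row=250, dot_row=318),
        SmearObservation("cam2", source_row=238, dot_row=295),
    ]
    print(alignment_report(obs))
```

The sketch only reports how far each camera is from the common offset; how a row shift maps to a trigger-time adjustment depends on the sensor's readout timing, which is not assumed here.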

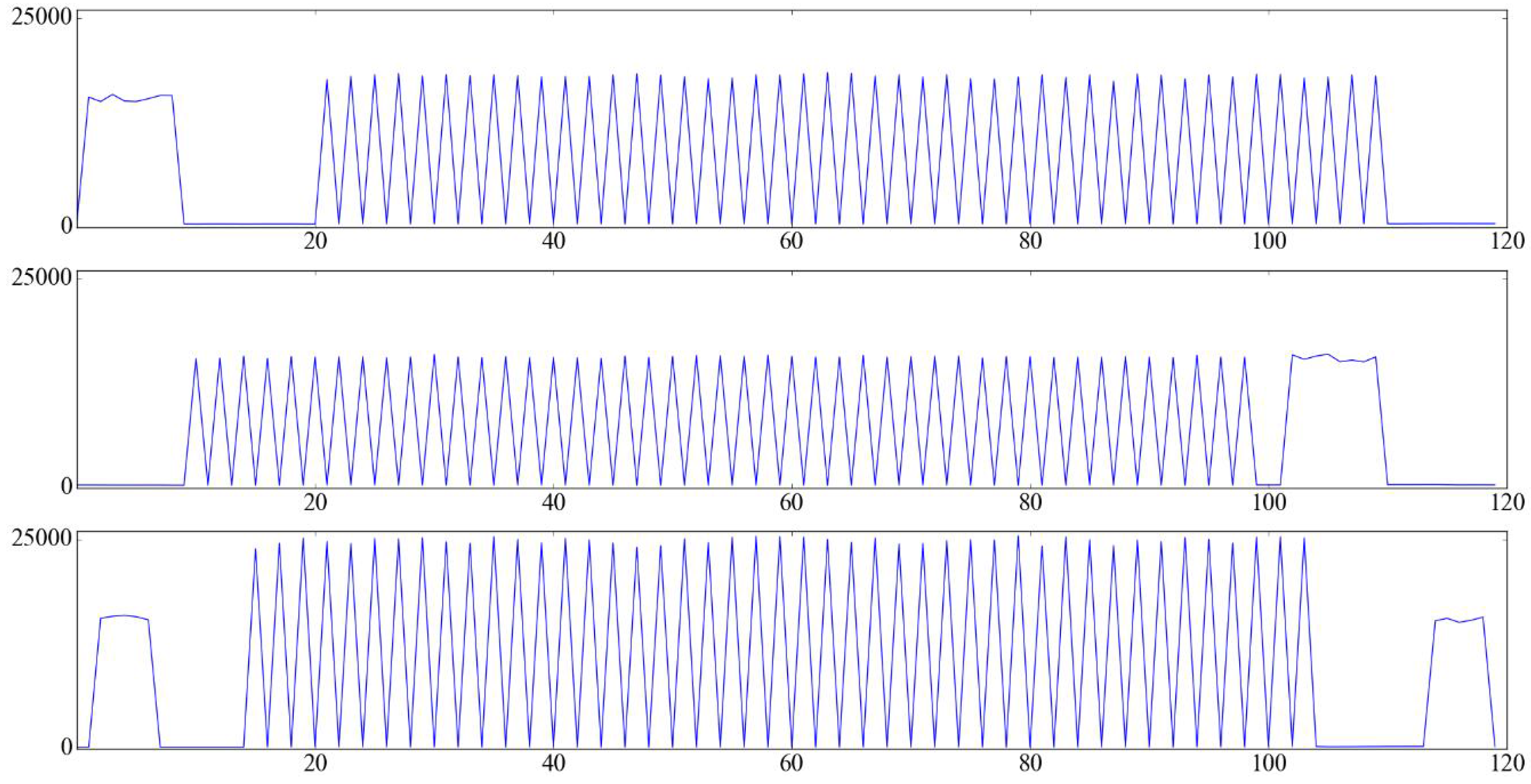

2.5. Sequence Match

- Compute the adaptive threshold T based on the video contents,

- Calculate the values of O for each frame,

- Get the flash subsequences by choosing the odd-index or even-index subsequence with a larger mean value of O,

- Apply the hidden Markov model to the flash subsequences to find the first flash frame, which is then used to determine the offset for each sequence.
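The last two steps above can be illustrated with a small sketch. It is written under explicit assumptions and is not the authors' model: the per-frame values of O and the threshold T are taken as given inputs, the hidden Markov model is a hypothetical two-state chain ("before flashes" and "flashing"), and the probabilities `p_stay` and `p_hit` are illustrative defaults.

```python
import math


def _mean(xs):
    return sum(xs) / len(xs) if xs else float("-inf")


def flash_subsequence(scores):
    """Pick the even- or odd-index subsequence with the larger mean score.

    Returns (parity, subsequence); parity is 0 for even, 1 for odd indices.
    """
    even, odd = scores[0::2], scores[1::2]
    return (0, even) if _mean(even) >= _mean(odd) else (1, odd)


def first_flash_index(sub, threshold, p_stay=0.9, p_hit=0.9):
    """Viterbi decoding of an assumed two-state HMM.

    State 0 = before the flashes start, state 1 = flashing (absorbing).
    Each observation is 1 if the score exceeds `threshold`, else 0.
    Returns the index in `sub` of the first frame decoded as flashing,
    or None if no frame is decoded as flashing.
    """
    obs = [1 if s > threshold else 0 for s in sub]
    if not obs:
        return None
    neg_inf = float("-inf")
    start = [math.log(0.5), math.log(0.5)]
    trans = [[math.log(p_stay), math.log(1 - p_stay)], [neg_inf, 0.0]]
    emit = [[math.log(p_hit), math.log(1 - p_hit)],   # state 0 favors obs 0
            [math.log(1 - p_hit), math.log(p_hit)]]   # state 1 favors obs 1

    score = [start[s] + emit[s][obs[0]] for s in (0, 1)]
    back = []
    for o in obs[1:]:
        new_score, pointers = [], []
        for s in (0, 1):
            cands = [score[prev] + trans[prev][s] for prev in (0, 1)]
            best_prev = 0 if cands[0] >= cands[1] else 1
            new_score.append(cands[best_prev] + emit[s][o])
            pointers.append(best_prev)
        score = new_score
        back.append(pointers)

    # Backtrack from the most likely final state.
    state = 0 if score[0] >= score[1] else 1
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path.index(1) if 1 in path else None


def first_flash_frame(scores, threshold):
    """Map the first decoded flash back to an index in the full sequence."""
    parity, sub = flash_subsequence(scores)
    idx = first_flash_index(sub, threshold)
    return None if idx is None else parity + 2 * idx


if __name__ == "__main__":
    o_values = [0.1, 0.2, 0.1, 0.1, 0.2, 0.9, 0.1, 0.8, 0.2, 0.95, 0.1, 0.9]
    print(first_flash_frame(o_values, threshold=0.5))
```

Given the first flash frame of each sequence, the offset between two sequences would be the difference of those frame indices; decoding with an HMM rather than taking the first score above T makes the result less sensitive to an isolated false or missed detection.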

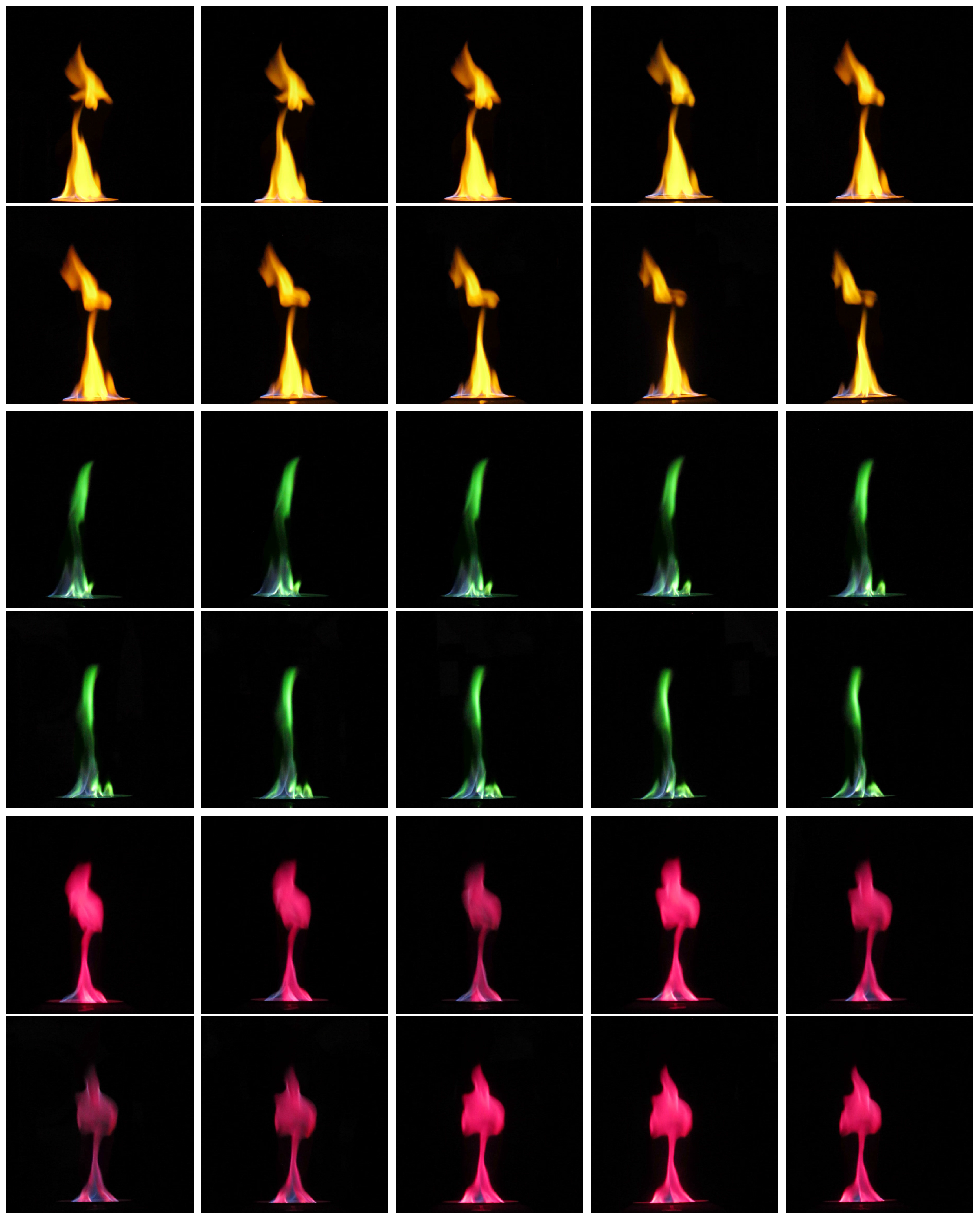

3. Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Yücer, K.; Sorkine-Hornung, A.; Wang, O.; Sorkine-Hornung, O. Efficient 3D object segmentation from densely sampled light fields with applications to 3D reconstruction. ACM Trans. Graph. 2016, 35, 22–35.

- Bradley, D.; Nowrouzezahrai, D.; Beardsley, P. Image-based reconstruction and synthesis of dense foliage. ACM Trans. Graph. 2013, 32, 74.

- Okabe, M.; Dobashi, Y.; Anjyo, K.; Onai, R. Fluid volume modeling from sparse multi-view images by appearance transfer. ACM Trans. Graph. 2015, 34, 93–102.

- Wu, Z.; Zhou, Z.; Tian, D.; Wu, W. Reconstruction of three-dimensional flame with color temperature. Vis. Comput. 2015, 31, 613–625.

- Hasinoff, S.W.; Kutulakos, K.N. Photo-consistent reconstruction of semitransparent scenes by density-sheet decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 870–885.

- Ihrke, I.; Magnor, M. Image-based tomographic reconstruction of flames. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Grenoble, France, 27–29 August 2004; pp. 365–373.

- Atcheson, B.; Ihrke, I.; Heidrich, W.; Tevs, A.; Bradley, D.; Magnor, M.; Seidel, H.P. Time-resolved 3D capture of non-stationary gas flows. ACM Trans. Graph. 2008, 27, 132–140.

- Li, C.; Pickup, D.; Saunders, T.; Cosker, D.; Marshall, D.; Hall, P.; Willis, P. Water surface modeling from a single viewpoint video. IEEE Trans. Vis. Comp. Graph. 2013, 19, 1242–1251.

- Wang, C.; Wang, C.; Qin, H.; Zhang, T.Y. Video-based fluid reconstruction and its coupling with SPH simulation. Vis. Comput. 2016.

- Gregson, J.; Krimerman, M.; Hullin, M.B.; Heidrich, W. Stochastic tomography and its applications in 3D imaging of mixing fluids. ACM Trans. Graph. 2012, 31, 52–61.

- Zhu, H.; Liu, Y.; Fan, J.; Dai, Q.; Cao, X. Video-Based Outdoor Human Reconstruction. IEEE Trans. Circ. Syst. Vid. Tech. 2016, 27, 760–770.

- Gregson, J.; Ihrke, I.; Thuerey, N.; Heidrich, W. From capture to simulation: Connecting forward and inverse problems in fluids. ACM Trans. Graph. 2014, 33, 139–149.

- Tang, T.; Tian, J.; Zhong, D.; Fu, C. Combining Charge Couple Devices and Rate Sensors for the Feedforward Control System of a Charge Coupled Device Tracking Loop. Sensors 2016, 16, 968.

- Idroas, M.; Rahim, R.A.; Green, R.G.; Ibrahim, M.N.; Rahiman, M.H.F. Image reconstruction of a charge coupled device based optical tomographic instrumentation system for particle sizing. Sensors 2010, 10, 9512–9528.

- Tompsett, M.F.; Amelio, G.F.; Bertram, W.J.; Buckley, R.R.; McNamara, W.J.; Mikkelsen, J.C.; Sealer, D.A. Charge-coupled imaging devices: Experimental results. IEEE Trans. Elect. Devic. 1971, 18, 992–996.

- CMOS Wikipedia. Available online: https://en.wikipedia.org/wiki/CMOS (accessed on 23 December 2016).

- Sensor Comparison II: Interline Scan, Frame Transfer & Full Frame. Available online: http://www.adept.net.au/news/newsletter/200810-oct/sensors.shtml (accessed on 23 December 2016).

- Han, Y.S.; Choi, E.; Kang, M.G. Smear removal algorithm using the optical black region for CCD imaging sensors. IEEE Trans. Consum. Electron. 2009, 55, 2287–2293.

- Yao, R.; Zhang, Y.-N.; Sun, J.-Q.; Zhang, Y.-P. Smear Removal Algorithm of CCD Imaging Sensors Based on Wavelet Transform in Star-sky Image. Acta Photonica Sin. 2011, 40, 413–418.

- Dorrington, A.A.; Cree, M.J.; Carnegie, D.A. The importance of CCD readout smear in heterodyne imaging phase detection applications. In Proceedings of the Image and Vision Computing, Dunedin, New Zealand, 28–29 November 2005; pp. 73–78.

- Carceroni, R.L.; Pádua, F.L.; Santos, G.A.; Kutulakos, K.N. Linear sequence-to-sequence alignment. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

- Dai, C.; Zheng, Y.; Li, X. Subframe video synchronization via 3D phase correlation. In Proceedings of the IEEE International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006; pp. 501–504.

- Lei, C.; Yang, Y.H. Tri-focal tensor-based multiple video synchronization with subframe optimization. IEEE Trans. Image Proc. 2006, 15, 2473–2480.

- Shrestha, P.; Weda, H.; Barbieri, M.; Sekulovski, D. Synchronization of multiple video recordings based on still camera flashes. In Proceedings of the 14th ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006; pp. 137–140.

- Bradley, D.; Atcheson, B.; Ihrke, I.; Heidrich, W. Synchronization and rolling shutter compensation for consumer video camera arrays. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Miami, FL, USA, 20–25 June 2009; pp. 1–8.

- Casio’s Latest Exilim High-Speed Camera Can Sync with up to Seven Others. Available online: http://www.cio.com/article/2861593/consumer-technology/casios-latest-exilim-highspeed-camera-can-sync-with-up-to-seven-others.html (accessed on 12 February 2017).

- Concepts in Digital Imaging Technology: CCD Saturation and Blooming. Available online: http://hamamatsu.magnet.fsu.edu/articles/ccdsatandblooming.html (accessed on 5 April 2017).

- Rabiner, L.R. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE 1989, 77, 257–286.

| Result | Still Camera Flash Based Method [24] | Our Method |
|---|---|---|
| Manually annotated | 238 | 260 |
| Correctly detected | 210 (88.2%) | 260 (100%) |
| Falsely detected | 0 (0%) | 0 (0%) |
| Missed detected | 28 (11.8%) | 0 (0%) |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shen, L.; Feng, X.; Zhang, Y.; Shi, M.; Zhu, D.; Wang, Z. Stroboscope Based Synchronization of Full Frame CCD Sensors. Sensors 2017, 17, 799. https://doi.org/10.3390/s17040799
