1. Introduction

Accurate acquisition of 3D facial appearance characteristics is important for planning facial contouring surgery, and good surgical results depend on exact 3D face modeling [1,2]. Experts want to devise a non-contact, rapid, and precise approach to acquiring a 3D digital face profile, which can then be used to simulate and design an optimal plan for face surgery with modern technologies, such as computer-aided design [3,4,5].

Three types of 3D face modeling methods are currently employed to extract human face profiles: computed tomography (CT) technology [4,5,6,7,8,9,10], the passive optical 3D sensing technique [11,12,13,14,15,16,17,18,19,20,21,22,23], and the active optical 3D sensing technique [24,25,26,27,28,29,30,31,32,33]. The 3D reconstruction method based on CT technology is sensitive to the skeleton and can be conveniently utilized for craniofacial plastic surgery, as well as for the oral and maxillofacial correction of abnormalities [4,5,6,7,8,9,10]. However, CT technology cannot easily rebuild 3D soft-tissue profiles or capture the surface features of a human face.

Passive optical 3D sensing techniques, such as stereo vision, use two or more cameras to capture a scene in ambient light from different viewpoints. They determine the height by matching image features that correspond to the same surface features. Many factors must be controlled in this method, such as ambient light, background, viewing angle, face pose, expression, and shading, because they directly influence the measurement accuracy. Furthermore, a large number of data operations, such as correlation analysis and matching, are also necessary. With the development of computing techniques, computation speed is no longer a key limiting factor, and the passive optical 3D sensing technique is more often applied to 3D object recognition and understanding. Recently, promising passive long-wavelength infrared polarimetric imaging techniques have emerged for 3D face reconstruction; for example, Alex J. Yuffa and colleagues proposed an approach whereby a 3D image of a human face is obtained from the Stokes parameters and the degree of linear polarization of the long-wavelength infrared radiation emitted by the face [23].

The active optical 3D sensing technique employs structured light to illuminate the specimen. The structured light is modulated in time or space by the surface height, and the 3D information can then be extracted from the observed light by a suitable unwrapping algorithm [24,25]. Given its non-contact, high-resolution, and highly automated features, the active optical 3D sensing technique is employed in most 3D sensing systems for 3D surface-shape measurement [24,25,26,27,28,29,30,31,32,33].

Phase measuring profilometry (PMP) is an important active optical 3D sensing method [26,27]. Sinusoidal fringes and phase-shifting techniques are employed in PMP to obtain the desired height information. A drawback of PMP is that it must capture at least three phase-shifted fringe images of a static profile. Real-time dynamic measurement is therefore difficult, and slight movements or changes in the facial expression of the subject during capture can introduce errors into the demodulated results. A series of phase-shifting fringes can be projected and captured within a short time span by utilizing a fast digital grating projection approach. However, the images captured by a charge-coupled device (CCD) camera are then prone to artifacts, such as trailing and distortion, because the phase-shifting fringes change rapidly, and the measurement inaccuracy increases. Thus, one-shot techniques are becoming the trend [31,32,33].

This paper reports on a novel one-shot approach for 3D human face profile measurement. A composite pattern (CP) is adopted in place of the series of phase-shifting fringes in PMP, and only a single CP frame is required for projection and capture. The CP combines several phase-shifting fringes with the same number of carrier gratings so that the phase-shifting technique can still be utilized in this approach. The method for generating this type of composite pattern is presented in Section 2, together with the measuring principle and demodulation approaches. Section 3 presents experimental tests that demonstrate the validity and feasibility of the proposed method. Difficulties and outlooks are then discussed in Section 4.

2. Measuring System Based on a Composite Pattern

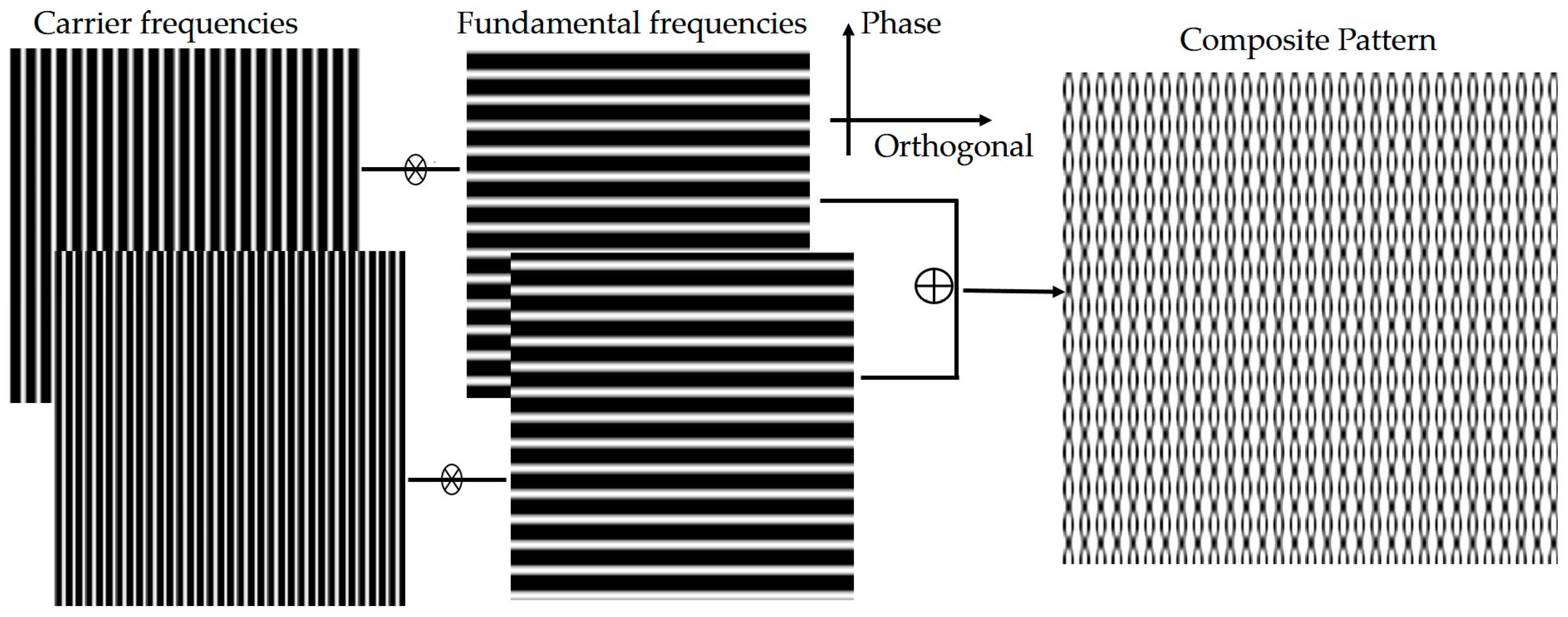

2.1. Composite Pattern of the Phase-Shifting Fringes and Carrier Gratings

A CP is composed of two phase-shifting sinusoidal fringes with the same frequency (called the fundamental frequency) and two carrier gratings with distinct frequencies (called the carrier frequencies). Each of the two phase-shifting fringes, which vary along the depth distortion direction (i.e., the phase direction), is modulated by a carrier grating along the axis orthogonal to the phase direction (i.e., the orthogonal direction). They are then combined to obtain the CP, as shown in Figure 1.

The phase-shifting sinusoidal fringe in CP is given as follows:

$$I_n(x, y) = c + \cos\left(2\pi f_\phi y + n\pi\right), \quad (1)$$

where the constant $c$ offsets $I_n$ to non-negative values, $f_\phi$ is the fundamental frequency, $y$ is the coordinate along the phase direction, and $n$ is the phase-shifting index from 0 to 1. The two fringes are combined into the CP as follows:

$$I^p(x, y) = A + B\left[I_0(x, y)\cos(2\pi f_1 x) + I_1(x, y)\cos(2\pi f_2 x)\right], \quad (2)$$

where $f_1$ and $f_2$ are the carrier frequencies of the carrier gratings along the orthogonal direction $x$, and $A$ and $B$ are the projection constants.

2.2. Projection and Modulation

Proper values of the projection constants are selected to ensure that the projection intensity of the CP falls within the intensity range of the projector. The CP is projected by a digital light processor (DLP) to cover the target human face so that the whole surface can be recorded in a single full-field capture. Given that the CP phases are modulated by height, the intensity of the reflected light field becomes

$$I(x, y) = r(x, y)\left\{A + B\sum_{n=0}^{1}\left[c + \cos\left(2\pi f_\phi y + \phi(x, y) + n\pi\right)\right]\cos(2\pi f_{n+1} x)\right\}, \quad (3)$$

where $r(x, y)$ is the reflectivity, and $\phi(x, y)$ is the wrapped phase related to the face depth. This modulated distortion pattern is captured by a CCD camera.
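As an illustration of how such a pattern could be generated, the following is a minimal NumPy sketch rather than the authors' implementation. It assumes a phase step of π between the two fringes, carriers along the image columns, and an 8-bit projector range of 0 to 255; the function name `composite_pattern` and all of its parameters are hypothetical. The projection constants are chosen here by simply rescaling the summed pattern to the target range.

```python
import numpy as np

def composite_pattern(shape, f_phi, carriers, c=1.0, i_min=0.0, i_max=255.0):
    """Build a two-channel composite pattern scaled to [i_min, i_max].

    Assumed form (not confirmed by the text): fringe n is
    c + cos(2*pi*f_phi*y + n*pi), multiplied by cos(2*pi*carriers[n]*x).
    """
    rows, cols = shape
    y = np.arange(rows, dtype=float)[:, None]   # phase direction (rows)
    x = np.arange(cols, dtype=float)[None, :]   # orthogonal direction (columns)
    total = np.zeros(shape)
    for n, f_carrier in enumerate(carriers):
        # Phase-shifting fringe n, offset by c so that it stays non-negative.
        fringe = c + np.cos(2 * np.pi * f_phi * y + n * np.pi)
        # Modulate the fringe onto its own carrier grating.
        total += fringe * np.cos(2 * np.pi * f_carrier * x)
    # Pick the two projection constants so the result spans the projector range.
    b = (i_max - i_min) / (total.max() - total.min())
    a = i_min - b * total.min()
    return a + b * total
```

For example, `composite_pattern((768, 1024), 1 / 20, (1 / 10, 1 / 5))` would produce a pattern at the projector resolution and frequencies reported in Section 3.1.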

2.3. Phase Acquisition and Unwrapping

After a 2D Fourier transform (FT) and simplification, Equation (3) can be rewritten as Equation (4), which expresses the 2D Fourier spectrum of the captured pattern as the sum of a zero-frequency spectrum and, for each of the two carrier gratings, a carrier-frequency spectrum and its complex conjugate. The two frequency axes correspond to the orthogonal and phase directions in the Fourier domain. The zero-frequency spectrum is located at the center of the frequency domain.

Equation (4) indicates that the two carrier-frequency spectra and their conjugates are distributed symmetrically on both sides of the zero-frequency spectrum. A suitable 2D band-pass filter (e.g., a Hanning window) was employed to separate each channel, and an inverse FT (IFT) was then performed on each channel. Taking the absolute values yields the demodulated phase-shifting fringes. Grayscale adjustment was then applied to the fringes (Equation (5)), with the reflectivity represented by a constant matrix. The two phase-shifting fringes were then subtracted from each other (Equation (6)), which cancels the offset term that they share.

After a 1D FT is conducted along the phase direction, Equation (6) can be rewritten in terms of 1D Fourier frequency spectra (Equation (7)). Figure 2 shows the distribution of these frequency spectra.
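The channel separation and fringe subtraction described above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the carriers are assumed to run along the image columns, the band-pass filter is simplified to a Hanning taper along the carrier axis that passes all frequencies along the phase direction, and the names `demodulate_channel`, `fringe_difference`, and `half_width` are hypothetical.

```python
import numpy as np

def demodulate_channel(image, f_carrier, half_width):
    """Isolate one carrier channel and return the demodulated fringe.

    Only the positive-frequency lobe around f_carrier (cycles/pixel along
    the columns) is kept, so the magnitude of the inverse FFT is half the
    fringe amplitude; the factor 2 restores it.
    """
    cols = image.shape[1]
    spectrum = np.fft.fft2(image)
    xi = np.fft.fftfreq(cols)                   # frequencies along x
    offset = np.abs(xi - f_carrier)
    # Hanning taper centred on the carrier, zero outside +/- half_width.
    window = np.where(offset < half_width,
                      0.5 * (1.0 + np.cos(np.pi * offset / half_width)),
                      0.0)
    filtered = spectrum * window[None, :]
    return 2.0 * np.abs(np.fft.ifft2(filtered))

def fringe_difference(image, carriers, half_width):
    """Subtract the two demodulated fringes to cancel their common offset."""
    first, second = (demodulate_channel(image, f, half_width) for f in carriers)
    return first - second
```

In this sketch `half_width` has to be smaller than half the spacing between neighbouring carriers so that the channels do not overlap; with carriers at 1/10 and 1/5 line/pixel, a value such as 0.04 would satisfy that.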

A proper band-pass filter is selected to extract the fundamental component, which is shown shaded in Figure 2. The wrapped phase related to the face depth can then be obtained from the fundamental component by performing an IFT. A reference plane is required behind the target face to determine the true depth. By utilizing the aforementioned method, the wrapped phase related to the reference plane can be obtained beforehand. Both wrapped phases require an unwrapping process to remove the discontinuities introduced by the arctangent or imaginary-part phase calculation. The height values of the human face can finally be calculated from the unwrapped phases and the geometric parameters of the system, which describe the angle between the CCD camera and the DLP (Equation (8)) [24,25,26,27].
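The phase retrieval steps above can be illustrated with a short NumPy sketch. Several choices here are assumptions rather than the authors' method: the band-pass filter is a plain rectangular mask, the face and reference phases are combined through a conjugate product before a single unwrapping pass along the phase direction, and the height conversion uses the well-known Fourier transform profilometry relation of Takeda and Mutoh [26] as a stand-in, since the exact form of Equation (8) is not available here. All function and parameter names are hypothetical.

```python
import numpy as np

def analytic_fundamental(fringe, f_phi, half_width):
    """Band-pass the positive fundamental lobe along axis 0 (phase direction)."""
    rows = fringe.shape[0]
    spectrum = np.fft.fft(fringe, axis=0)
    eta = np.fft.fftfreq(rows)                   # cycles/pixel along y
    mask = (np.abs(eta - f_phi) < half_width).astype(float)
    return np.fft.ifft(spectrum * mask[:, None], axis=0)

def phase_difference(face_fringe, ref_fringe, f_phi, half_width):
    """Unwrapped phase of the face relative to the reference plane."""
    face = analytic_fundamental(face_fringe, f_phi, half_width)
    ref = analytic_fundamental(ref_fringe, f_phi, half_width)
    # The conjugate product removes the carrier; the angle lies in (-pi, pi].
    wrapped = np.angle(face * np.conj(ref))
    return np.unwrap(wrapped, axis=0)

def height_takeda(delta_phi, f0, l0, d):
    """Takeda-Mutoh relation, used here as an assumed stand-in.

    f0 is the fringe frequency on the reference plane in cycles per length
    unit, l0 the camera-to-reference distance, d the camera-projector
    distance; l0 and d share the length unit of the returned height.
    """
    return l0 * delta_phi / (delta_phi - 2 * np.pi * f0 * d)
```

The sign of the recovered height depends on the geometry and on which phase is subtracted from which, so a real system would fix that convention during calibration.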

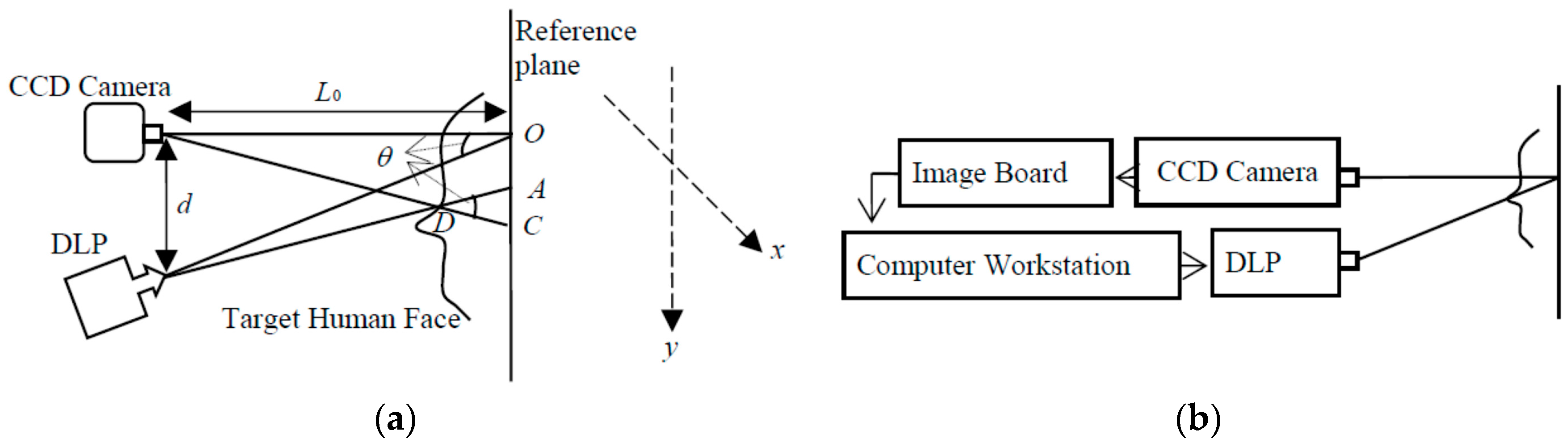

2.4. Working Process and Optical Geometric Parameters

Given the aforementioned measuring principle, the working process can be described as follows: (1) several phase-shifting fringes and carrier gratings are combined to generate a CP, which is then projected by the DLP to cover the target human face; (2) the CP phases (viz. carrier signals) are modulated by the face depth (viz. modulation signals), and the modulated distortion pattern is then captured by a CCD camera (imaging system); (3) the captured picture is sent to a computer for processing; (4) a series of calculations and an unwrapping algorithm are applied to obtain the unwrapped phases; and (5) the target 3D human face profile is obtained according to the relationship between phase and height. Figure 3 shows the process details.

The optical geometric parameters are shown in Figure 4a, which marks the distance between the CCD and the DLP and the distance from the CCD to the reference plane. Figure 4b shows the diagram of the experimental setup.

3. Experiment and Results

3.1. Model Test

A human face model was utilized as a preliminary test to demonstrate the feasibility of this one-shot technique. The projector used was a Panasonic (PT-P2500) digital projector with a 1024 × 768 resolution. The image sensor utilized was a low-aberration color CCD camera (Prosilica, EC1350C, Vancouver, BC, Canada) with a 1360 × 1024 resolution, pixel size of 4.65 μm × 1.65 μm, and maximum frame rate of 18 fps. The focal length of the camera lens (KOWA, LM12JCM, Nagoya, Japan) was 12 mm. The image board was a 1394 card (KEC, 1582T, Taiwan). The reference plane was a piece of white board. Figure 5 shows the experimental setup, where the two geometric parameters were measured as 73 mm and 18 mm.

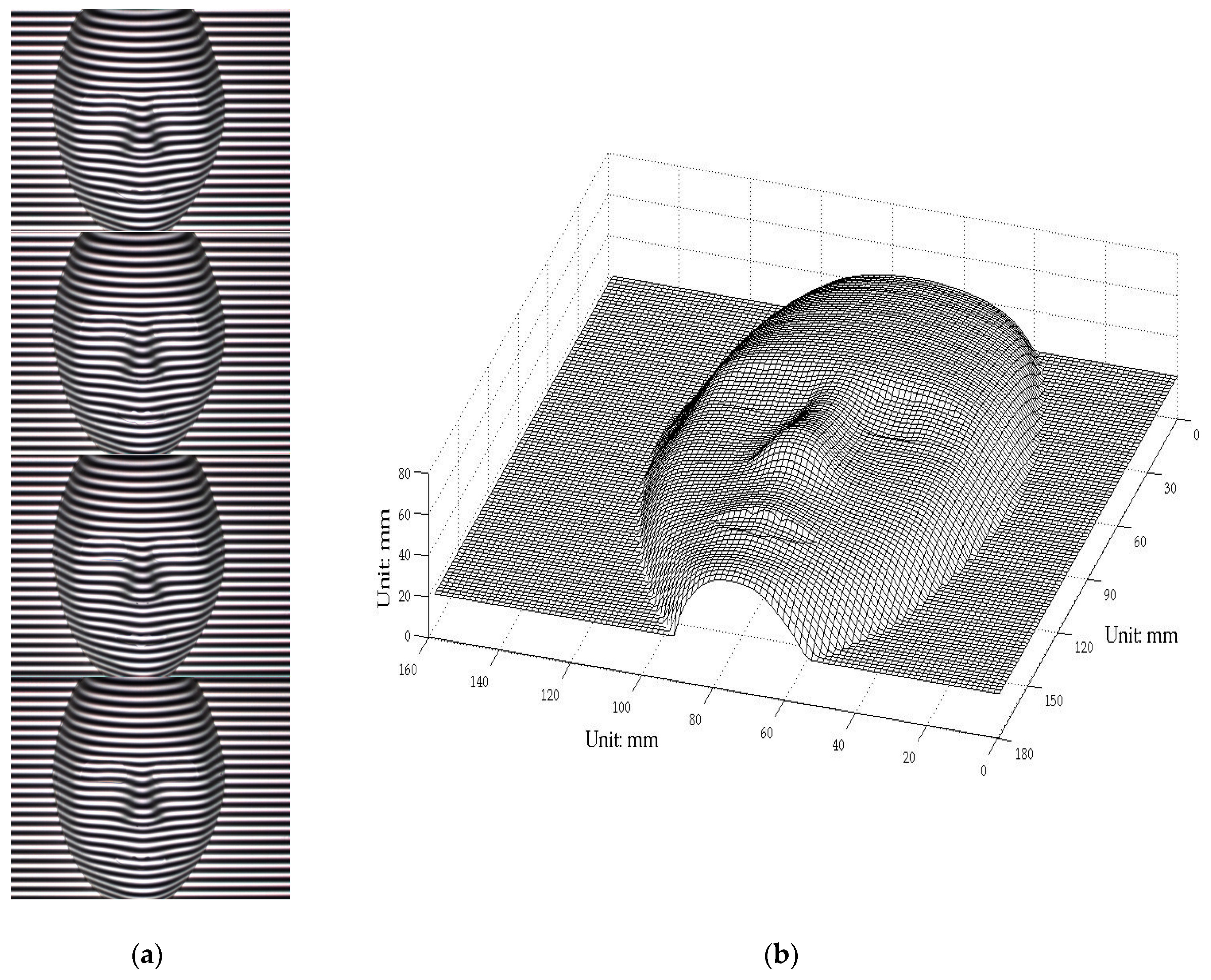

The traditional PMP method (i.e., the four-step phase-shifting technique) was first adopted to rebuild the 3D face model. The fundamental frequency in PMP was 1/25 line/pixel. Figure 6a shows the four captured phase-shifting fringes, which were modulated by the surface depth of the face model. Figure 6b shows the result rebuilt using PMP. A suitable 3D shape could be rebuilt for a static face model.

The composite pattern was then introduced as a one-shot technique to reconstruct the face model and compare the results. The two carrier frequencies were 1/10 line/pixel and 1/5 line/pixel, whereas the fundamental frequency was 1/20 line/pixel. The composite pattern coding, projection, and data acquisition, storage, and processing were controlled by a computer workstation. Figure 7a shows the captured distortion composite pattern modulated by height. Figure 7b shows the obtained 3D digital profile of the face model. The result shows no noise points, and the reconstructed surface clearly shows the entire face profile with a suitable resolution. Notably, this approach is faster and more convenient because it requires only one capture.

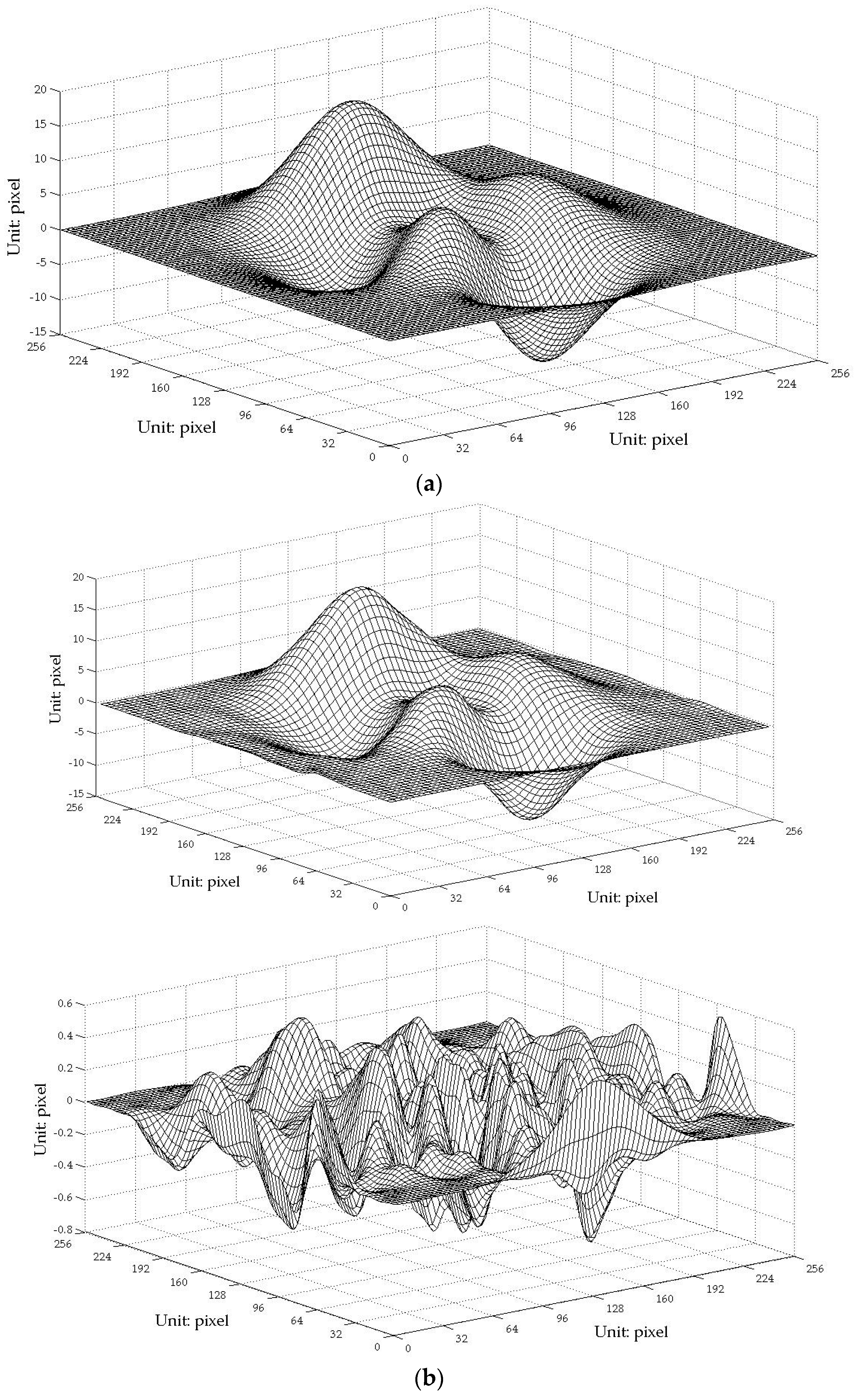

Figure 8 shows the errors of the proposed one-shot 3D reconstruction against the traditional PMP method. The small range of the errors, from −0.6428 mm to 0.7823 mm, shows that the two methods were in reasonable agreement. The error is larger around the edge of the face, which indicates that the algorithm is limited in areas where sharp edges cause discontinuities in the surface slope.

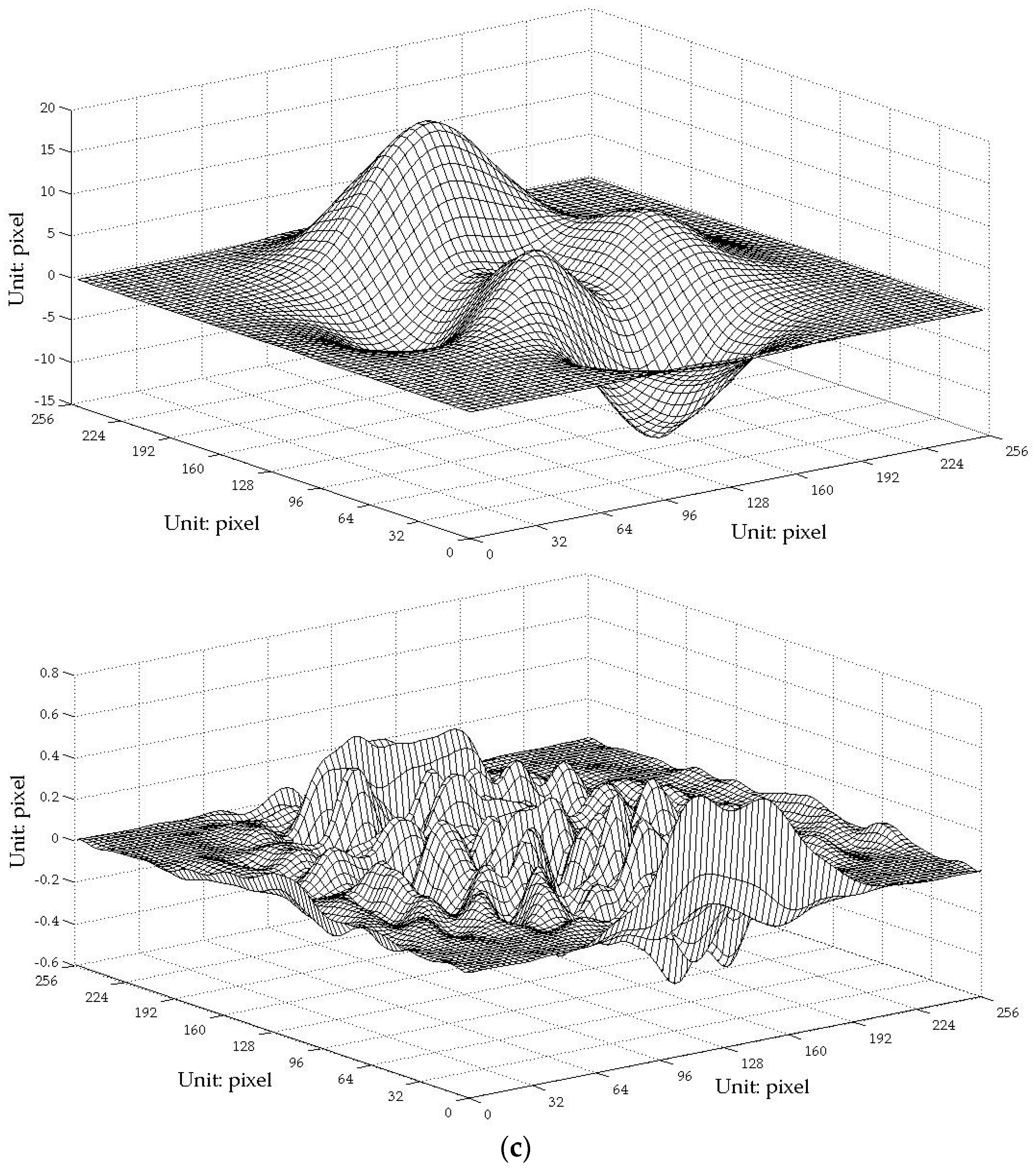

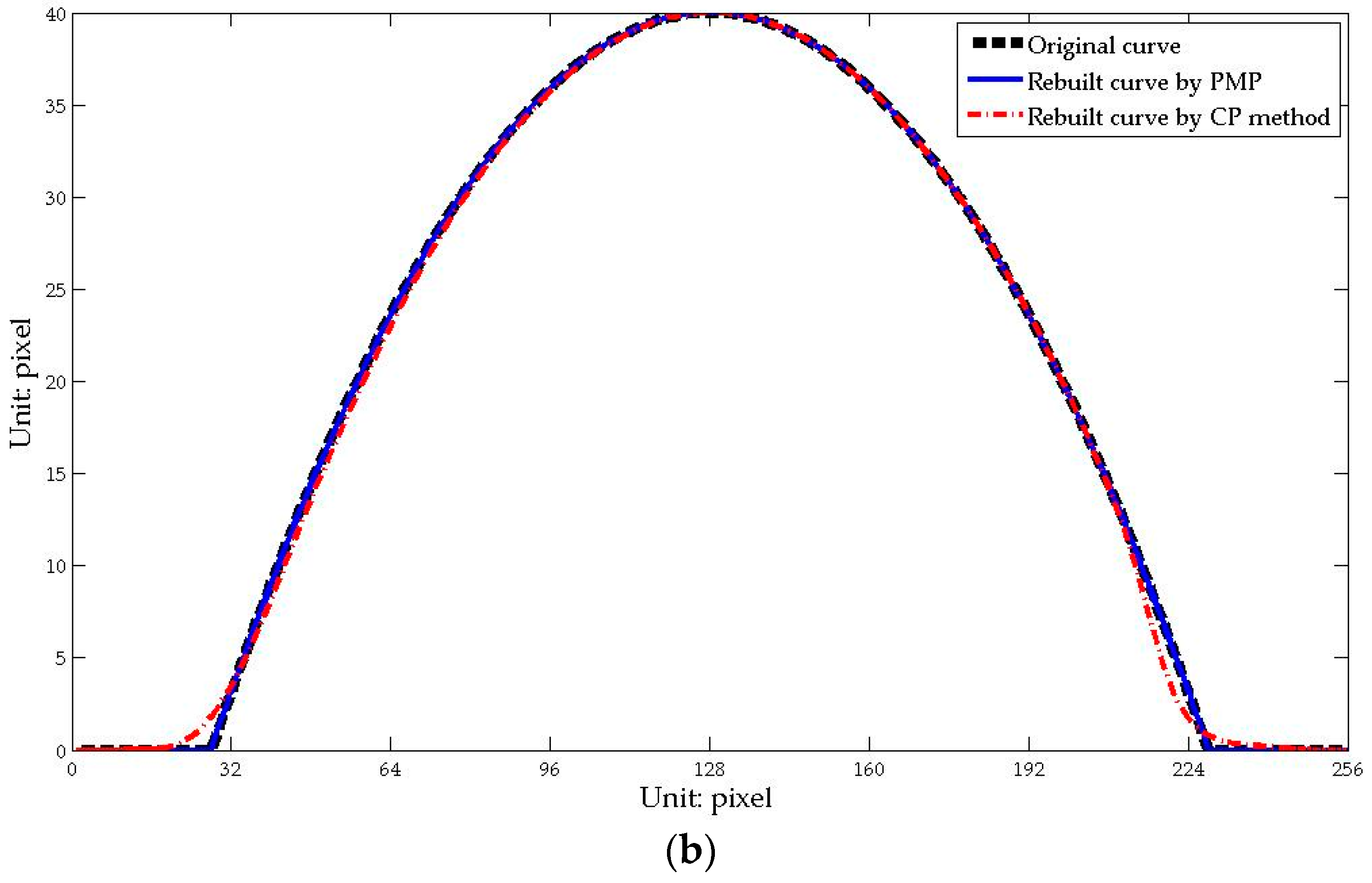

Furthermore, to find the errors between the 3D reconstruction and the original and to make a quantitative comparison between the traditional PMP method and the one-shot CP technique proposed in this paper, simulations were conducted because the true digital geometry of the face model is hard to obtain. The Peaks function included in the commercial MATLAB software, shown in Figure 9a, and an artificial upside-down bowl, shown in Figure 10a, were used for the studies. The results show that the rebuilt profiles have small errors against the original and that the precision of the proposed one-shot CP method shows no distinct decrease compared with the traditional phase-shifting method. However, the deviation is slightly greater around the edge of the bowl.
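For readers who wish to set up comparable test surfaces outside MATLAB, the sketch below transcribes the surface that MATLAB's `peaks` function evaluates and adds a simple error summary. The bowl is modeled here as a spherical cap, which is only an assumption, since the exact shape and dimensions used in the simulation are not stated; `bowl`, `error_range`, and the default `radius` are hypothetical.

```python
import numpy as np

def peaks(x, y):
    """Python transcription of the surface evaluated by MATLAB's peaks."""
    return (3 * (1 - x) ** 2 * np.exp(-x ** 2 - (y + 1) ** 2)
            - 10 * (x / 5 - x ** 3 - y ** 5) * np.exp(-x ** 2 - y ** 2)
            - np.exp(-(x + 1) ** 2 - y ** 2) / 3)

def bowl(x, y, radius=2.5):
    """Upside-down bowl modeled as a spherical cap (assumed shape)."""
    return np.sqrt(np.clip(radius ** 2 - x ** 2 - y ** 2, 0.0, None))

def error_range(rebuilt, truth):
    """Smallest and largest signed deviation of a rebuilt surface."""
    diff = np.asarray(rebuilt) - np.asarray(truth)
    return float(diff.min()), float(diff.max())

# Sample both surfaces on the 49 x 49 grid over [-3, 3] that peaks uses by default.
grid = np.linspace(-3.0, 3.0, 49)
X, Y = np.meshgrid(grid, grid)
Z_peaks = peaks(X, Y)
Z_bowl = bowl(X, Y)
```

A reconstruction of either surface could then be passed to `error_range` together with the ground truth to obtain an error interval of the kind reported for Figure 8.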

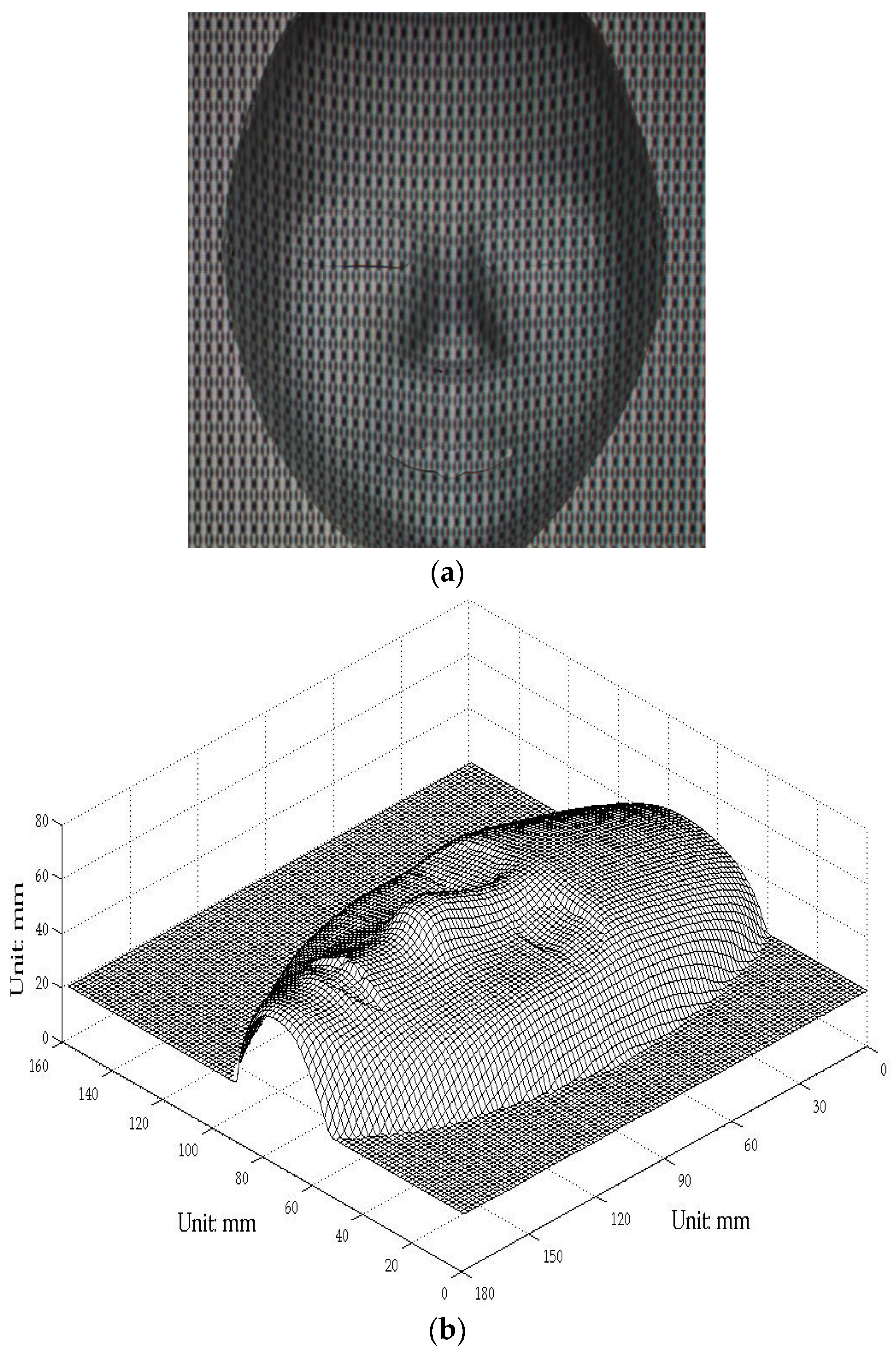

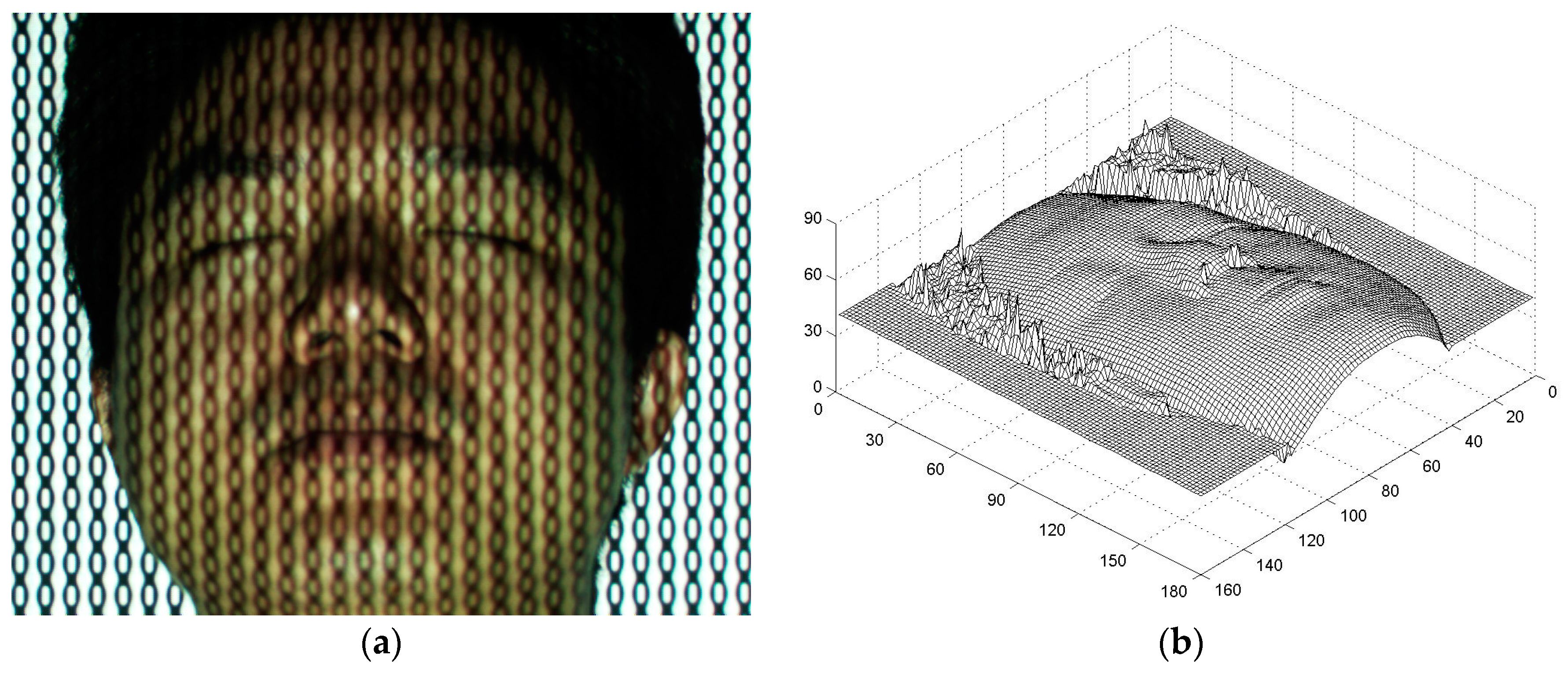

3.2. Survey of a Real Human Face

The aforementioned experimental setup was employed to survey a real 3D human face profile. The fundamental frequency was 1/25 line/pixel, whereas the other parameters remained the same. Figure 11a shows the distortion composite pattern modulated by the volunteer’s face depth, and Figure 11b shows the 3D digital face profile rebuilt in the survey. The 3D digital data of a real human face were successfully obtained, although some noise was observed around the edge, which is acceptable. The texture mapping technique was applied to extract the color map of the real face and fuse it onto the rebuilt face to reconstruct a natural-looking 3D digital face [33,34,35,36]. Figure 12 shows the 3D curved surface rebuilt by using the texture mapping technique. The proposed technique enables a surgeon to extract 3D features and design an operation more easily and conveniently from the true face model.

4. Discussion

This study employs a composite pattern to obtain a 3D human face digital profile. Because only one projection and one corresponding capture are required, this one-shot technique avoids difficulties such as trailing and distortion, which can occur in PMP. A 3D digital model of a real human face can be obtained more conveniently and precisely with the proposed approach. The rebuilt 3D profile of a real human face obtained in this study shows a suitable shape, except for some noise around the edge, which is acceptable. The texture mapping technique was also employed to reconstruct a natural-looking 3D digital face. A true 3D face profile makes it more convenient for surgeons to extract 3D face features and design a proper surgical operation.

Active optical 3D sensing techniques, such as the traditional PMP method and the one-shot CP method proposed in this paper, obtain 3D information by unwrapping the phase from the observed light. The propagation of a wave can be regarded as the travel of its phase, so the distance that the wave travels (i.e., the wave path) determines the phase value. When a sinusoidal fringe or another pattern with a regularly distributed phase is projected onto an uneven surface, the phase distribution in the reflected light is determined by the wave path, which is related to the height of the surface. However, two difficulties should be noted. (1) A steep surface with discontinuities in surface slope due to sharp edges gives rise to significant changes in the phase. Unwrapping the phase is challenging in general, and additional errors are introduced into the results if the phase variation between two adjacent sampling points exceeds one period, i.e., 2π. This is why the algorithm has difficulty around the edge of the specimen. (2) The phase information contained in the observed light is disturbed if the surface has very high or very low reflectivity, because the phase is encoded in the alternating bright and dark fringes. Washed-out or low-contrast observed light caused by high reflectivity makes the phase unwrapping more difficult, so the rebuilt image is of low quality.
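The first difficulty can be reproduced with a few lines of NumPy. The sketch below is only an illustration with a hypothetical helper, `unwrap_roundtrip_error`: it wraps a known phase profile, unwraps it again with `np.unwrap`, and reports the largest deviation. Note that `np.unwrap` assumes adjacent samples differ by less than π, which is a stricter condition than a full period, so a jump of 4 rad is already enough to shift the remainder of the profile by 2π.

```python
import numpy as np

def unwrap_roundtrip_error(true_phase):
    """Wrap a phase profile into (-pi, pi], unwrap it, and return the worst error."""
    wrapped = np.angle(np.exp(1j * true_phase))
    return float(np.max(np.abs(np.unwrap(wrapped) - true_phase)))

# Smooth profile: neighbouring samples differ by about 0.245 rad.
smooth = np.linspace(0.0, 12.0, 50)

# Same profile with a 4 rad step in the middle, mimicking a sharp edge.
stepped = smooth.copy()
stepped[25:] += 4.0

err_smooth = unwrap_roundtrip_error(smooth)    # essentially zero
err_stepped = unwrap_roundtrip_error(stepped)  # off by one full period
```

The smooth profile is recovered to within rounding error, whereas every sample after the step in the second profile is misplaced by one full period.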

In addition, the visible light projected from the DLP generally makes a volunteer feel uncomfortable, especially in the eyes. Although the one-shot approach shortens the operation time, the eyes can still be affected if they remain open during the survey. Thus, we asked the volunteer to close his eyes. However, a rebuilt shape with closed eyes can cause difficulties for the surgeon.

In future work, our research group will employ near-infrared (NIR) light generated by an NIR-DLP to project the composite pattern, and an NIR-CCD will be employed to capture the distortion pattern. People might be more comfortable in the NIR environment. Based on this technique, a device called a Digital Face Beautifier can be developed to help surgeons produce tentative designs and inspect the effects before a surgical operation. This approach is more convenient for both the surgeon and the patient.

5. Conclusions

This paper reports on a novel active optical technique for 3D human face profile acquisition that utilizes a projection pattern composed of several frames of phase-shifting patterns and the same number of carrier patterns. Several techniques from the traditional method, such as phase shifting and image transforms, can also be utilized in this one-shot approach to perform the phase demodulation automatically. The texture mapping technique can be applied to obtain a true 3D face profile. The face model test and the measurement of a real human face show that this method is simple, fast, and accurate. Therefore, a surgeon can utilize this approach to extract 3D appearance characteristics of a human face and to design operations more easily.

Acknowledgments

This work was supported by the Collaborative Innovation Center of High-end Manufacturing, Zhuhai (Grant No. ZX-2015-063).

Author Contributions

Yong You and Yang Shen conceived and designed the experiments. Yong You performed the experiments. Yong You, Shen Yang, Guocai Zhang, and Xiuwen Xing analyzed the data. Yong You wrote this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Murrell, G.L.; McIntyre, N.K.; Trotter, B. Facial contouring. Facial Plast. Surg. Clin. N. Am. 2003, 11, 391–397.

2. Choi, J.-Y. Orthognathic Surgery and Facial Contouring Surgery. J. Jpn. Soc. Aesthet. Surg. 2005, 42, 188–197.

3. Xia, J.; Samman, N.; Yeung, R.W.K.; Wang, D.; Shen, S.G.F.; Ip, H.H.S.; Tideman, H. Computer-assisted three-dimensional surgical planning and simulation: 3D soft tissue planning and prediction. Int. J. Oral Maxillofac. Surg. 2000, 29, 250–258.

4. Altobelli, D.E.; Kikinis, R.; Mulliken, J.B.; Cline, H.; Lorensen, W.; Jolesz, F. Computer-assisted three-dimensional planning in craniofacial surgery. Plast. Reconstr. Surg. 1993, 92, 576–585.

5. Schmelzeisen, R.; Schramm, A. Computer-assisted reconstruction of the facial skeleton. Arch. Facial Plast. Surg. 2003, 5, 437–440.

6. Troulis, M.J.; Everett, P.; Seldin, E.B.; Kikinis, R.; Kaban, L.B. Development of a three-dimensional treatment planning system based on computed tomographic data. Int. J. Oral Maxillofac. Surg. 2002, 31, 349–357.

7. Alfred, K.L.; Peter, M. Contour Reconstruction in 3D X-ray CT. IEEE Trans. Med. Imaging 1993, 12, 764–769.

8. Prakoonwit, S.; Benjamin, R. Optimal 3D surface reconstruction from a small number of conventional 2D X-ray images. J. X-ray Sci. Technol. 2007, 15, 197–222.

9. Mi, D.; Wei, B.; Feng, P.; Yao, F. Oral Implant Orientation of 3D Imaging Based on X-ray Computed Tomography (CT). Asian J. Inform. Technol. 2007, 6, 1143–1147.

10. Mahfouz, M.R.; Badawi, A.; Fatah, E.E.A.; Merkl, B. Reconstruction of 3D Patient-Specific Bone Models from Biplanar Xray Images Utilizing Morphometric Measurements. In Proceedings of the 2006 International Conference on Image Processing, Computer Vision, and Pattern Recognition (IPCV’06), Las Vegas, NV, USA, 26–29 June 2006; pp. 345–349.

11. Jiang, D.; Hu, Y.; Yan, S.; Zhang, L.; Zhang, H.; Gao, W. Efficient 3D reconstruction for face recognition. Pattern Recognit. 2005, 38, 787–798.

12. Uchida, N.; Shibahara, T.; Aoki, T.; Nakajima, H.; Kobayashi, K. 3D face recognition using passive stereo vision. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2005), Genova, Italy, 11–14 September 2005; Volume 2, p. II-950-3.

13. Erdem, A.T. A New method for Generating 3DFace Models for Personalized User Interaction. In Proceedings of the 13th European Signal Processing Conference, Antalya, Turkey, 4–8 September 2005.

14. Chen, Q.; Medioni, G. Building 3D Human Face Models from Two Photographs. J. Signal Process. Syst. 2001, 27, 127–140.

15. Zhang, C.; Cohen, F.S.; Rvsi, H. 3D face structure extraction and recognition from images using 3D morphing and distance mapping. IEEE Trans. Image Process. 2002, 11, 1249–1259.

16. Hirschmuller, H.; Innocent, P.R.; Garibaldi, J. Real-time correlation-based stereo vision with reduced border errors. Int. J. Comput. Vis. 2002, 47, 229–246.

17. Huq, S.; Abidi, B.; Goshtasby, A.; Abidi, M.A. Stereo matching with energy-minimizing snake grid for 3D face modeling. Proc. SPIE 2004, 5404, 339–350.

18. Ansari, A.N.; Abdel-Mottaleb, M. Automatic facial feature extraction and 3D face modeling using two orthogonal views with application to 3D face recognition. Pattern Recogn. 2005, 38, 2549–2563.

19. Suna, T.-H.; Chena, M.; Lob, S.; Tien, F.-C. Face recognition using 2D and disparity eigen face. Expert Syst. Appl. 2007, 33, 265–273.

20. Mahoor, M.H.; Abdel-Mottaleb, M. A Multimodal Approach for Face Modeling and Recognition. IEEE Trans. Inf. Forensics Secur. 2008, 3, 431–440.

21. Amin, S.H.; Gillies, D. Analysis of 3D Face Reconstruction. In Proceedings of the 14th International Conference on Image Analysis and Processing (ICIAP 2007), Modena, Italy, 10–14 September 2007; pp. 413–418.

22. Lengagne, R.; Fua, P.; Monga, O. 3D stereo reconstruction of human faces driven by differential constraints. Image Vis. Comput. 2000, 18, 337–343.

23. Yuffa, A.J.; Gurton, K.P.; Videen, G. Three-dimensional facial recognition using passive long-wavelength infrared polarimetric imaging. Appl. Opt. 2014, 53, 8514–8521.

24. Su, X.Y.; Li, J.T. Information Optics; Science Press: Beijing, China, 2000; pp. 131–133.

25. Salas, L.; Luna, E.; Salinas, J.; García, V. Profilometry by fringe projection. Opt. Eng. 2003, 42, 3307–3314.

26. Takeda, M.; Mutoh, K. Fourier transform profilometry for the automatic measurement 3D object shapes. Appl. Opt. 1983, 22, 3977–3982.

27. Su, X.Y.; Chen, W.J. Fourier transform profilometry: A review. Opt. Lasers Eng. 2001, 35, 263–284.

28. Schreiber, W.; Notni, G. Theory and arrangements of self-calibrating whole-body three-dimensional measurement systems using fringe projection technique. Opt. Eng. 2000, 39, 159–169.

29. Scharstein, D.; Szeliski, R. High-accuracy stereo depth maps using structured light. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; Volume 1, pp. I-195–I-202.

30. Tsalakanidou, F.; Forster, F.; Malassiotis, S.; Strintzis, M.G. Real-time acquisition of depth and color images using structured light and its application to 3D face recognition. Real Time Imaging 2005, 11, 358–369.

31. Wong, A.K.C.; Niu, P.Y.; He, X. Fast acquisition of dense depth data by a new structured light scheme. Comput. Vis. Image Underst. 2005, 98, 398–422.

32. Yue, H.M.; Su, X.Y.; Li, Z.R. Improved Fast Fourier Transform Profilometry Based on Composite Grating. Acta Opt. Sin. 2005, 25, 767–770.

33. Guan, C.; Hassebrook, L.G.; Lau, D.L. Composite structured light pattern for three-dimensional video. Opt. Express 2003, 11, 406–417.

34. Turk, G. Texture synthesis on surfaces. In Proceedings of the Computer Graphics Proceedings, Annual Conference Series, ACM SIGGRAPH, Los Angeles, CA, USA, 12–17 August 2001; pp. 347–354.

35. Matsuyama, T.; Wu, X.J.; Takai, T.; Wada, T. Real-time dynamic 3D object shape reconstruction and high-fidelity texture mapping for 3D video. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 357–369.

36. Zhang, E.; Mischaikow, K.; Turk, G. Feature-based surface parameterization and texture mapping. ACM Trans. Graph. 2005, 24, 1–27.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).