1. Introduction

In a rapidly aging society, various social problems have arisen related to medical care, welfare, pensions, and ensuring the mobility of elderly people [1, 2]. The last of these is a particularly important issue to resolve, and studies on ensuring the mobility of elderly people are being actively conducted [3, 4]. From the perspective of ensuring the vitality of an aging society, as shown in Figure 1, it is important to ensure the autonomous (self-supportable) mobility of elderly people [5].

A handle-type electric wheelchair (hereinafter, electric wheelchair) is useful as a means of transportation for elderly people [6, 7]. About 15,000 electric wheelchairs are sold each year in Japan [8]. Under the Road Traffic Law in Japan, the user of an electric wheelchair (maximum speed 6 km/h) is treated as a pedestrian. Elderly people use electric wheelchairs for shopping, hospital visits, participation in communities, walks, and so on. Indeed, Reference [9] indicated that elderly people can achieve self-supportable mobility using electric wheelchairs, which underpins improvement in their quality of life (QoL). Nevertheless, falling accidents involving electric wheelchairs occur frequently on stairs, curbs, irrigation canals, and so on [10, 11]. Many accidents occur during the daytime, when elderly people travel the most, but many also occur at night, when accidents can easily result in severe injury or death [11]. When elderly people go out during the day and come home late, travelling at night is dangerous because visibility for elderly people worsens at night. For these reasons, ensuring the safety of electric wheelchairs used by elderly people is an important task.

To prevent falling accidents of electric wheelchairs, studies of hazardous object detection have been actively conducted [12, 13, 14, 15, 16, 17, 18, 19, 20, 21]. Hazardous object detecting sensors in electric wheelchairs are classifiable into active, passive, and combined types. Active type sensors include laser range finders, ultrasonic sensors, and range image sensors (Time-Of-Flight type and Structured-Light type). Passive type sensors include stereovision and monocular cameras. Combined type sensors include a laser range finder with stereovision, monocular cameras with ultrasonic sensors, and so on. Considering that electric wheelchair users are elderly people, safe driving support from evening to night (awareness enhancement) is an important subject. However, few reports describe hazardous object detection using commercially available and inexpensive equipment in electric wheelchairs.

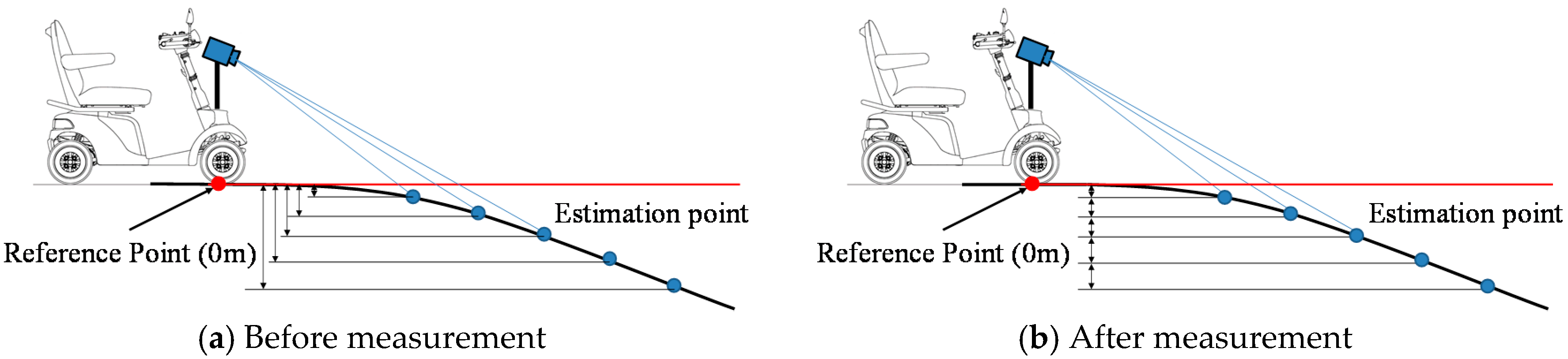

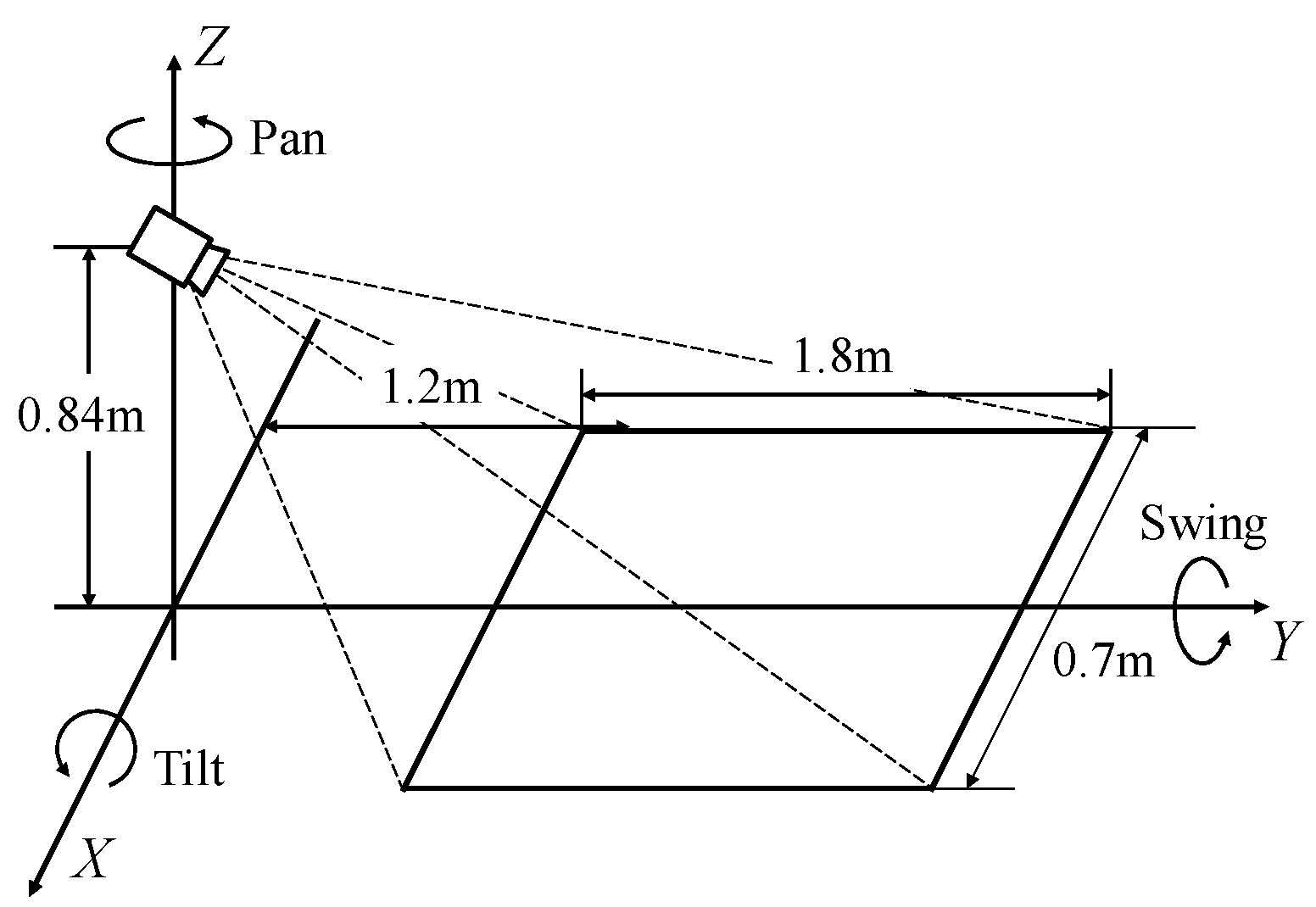

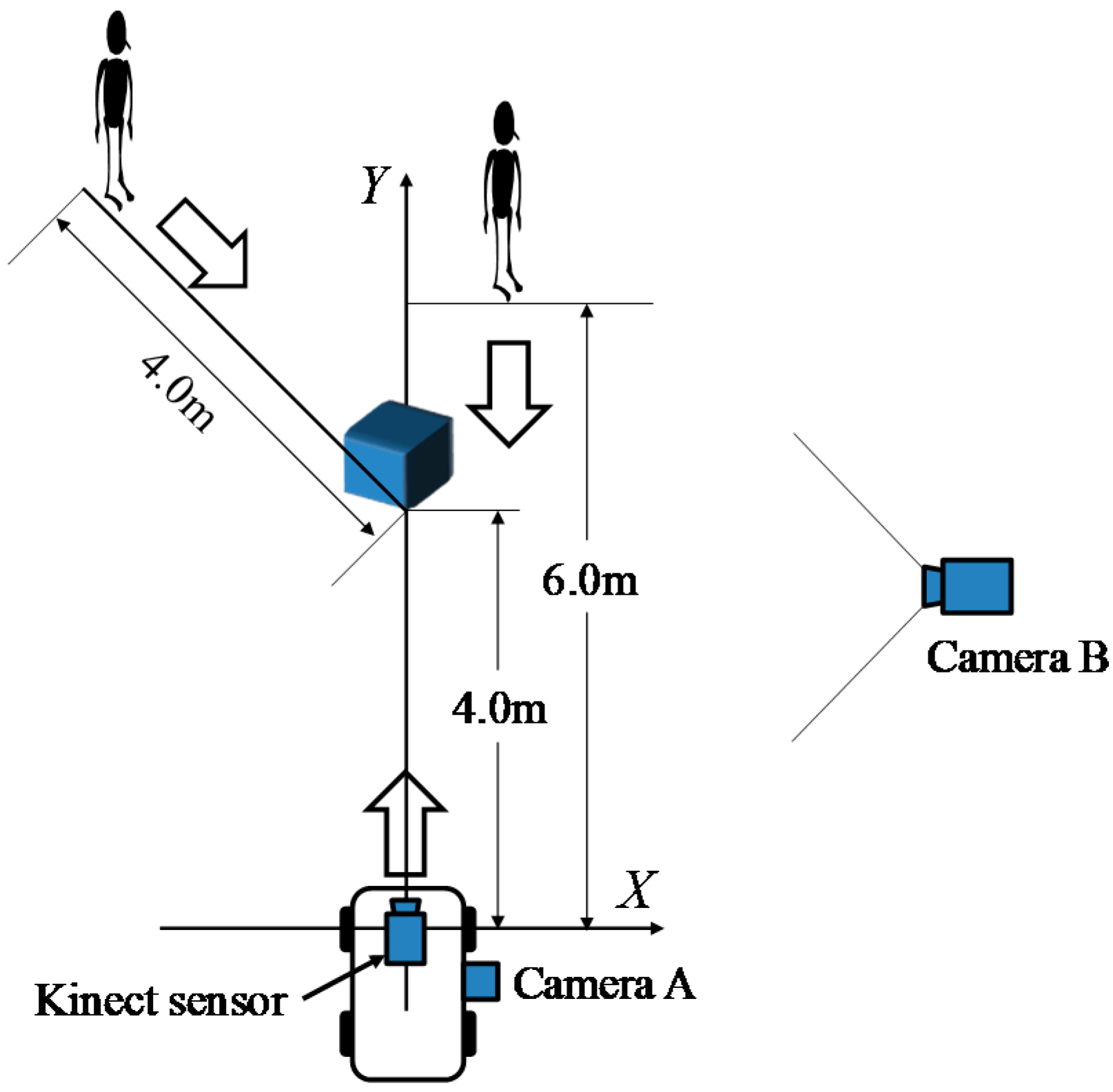

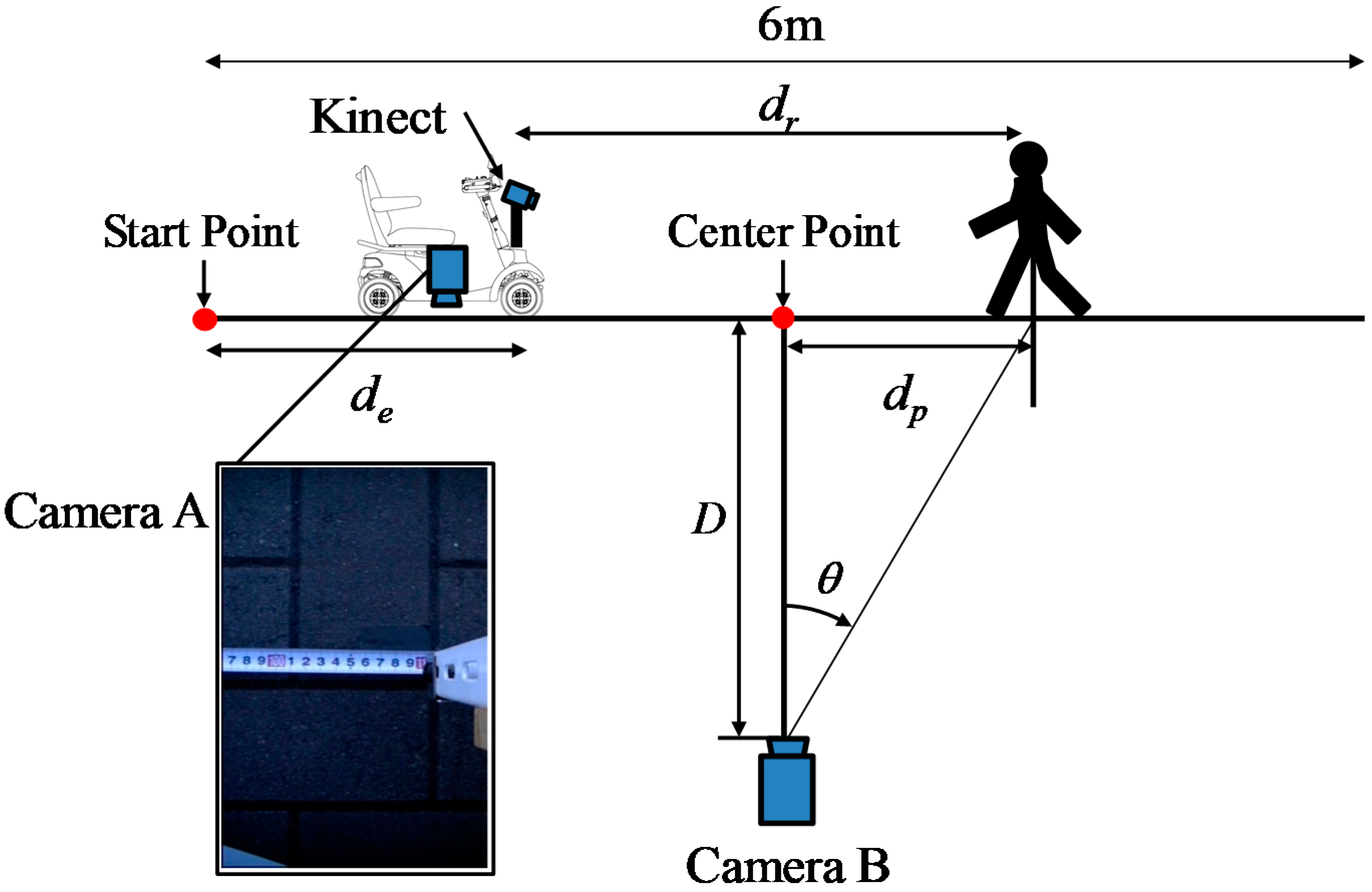

To prevent falling accidents involving handle-type electric wheelchairs, we studied the applicability of commercially available and inexpensive Kinect sensors. Specifically, we constructed a hazardous object detection system using Kinect sensors, detected hazardous objects outdoors, and demonstrated the system's effectiveness. The remainder of this paper is organized as follows. Section 2 describes related research on hazardous object detection in electric wheelchairs. Section 3 proposes a hazardous object detection system using Kinect. Section 4 evaluates the performance of static and dynamic hazardous object detection during nighttime, and Section 5 presents the performance of dynamic hazardous object detection in the daytime. Finally, Section 6 presents conclusions.

2. Related Works

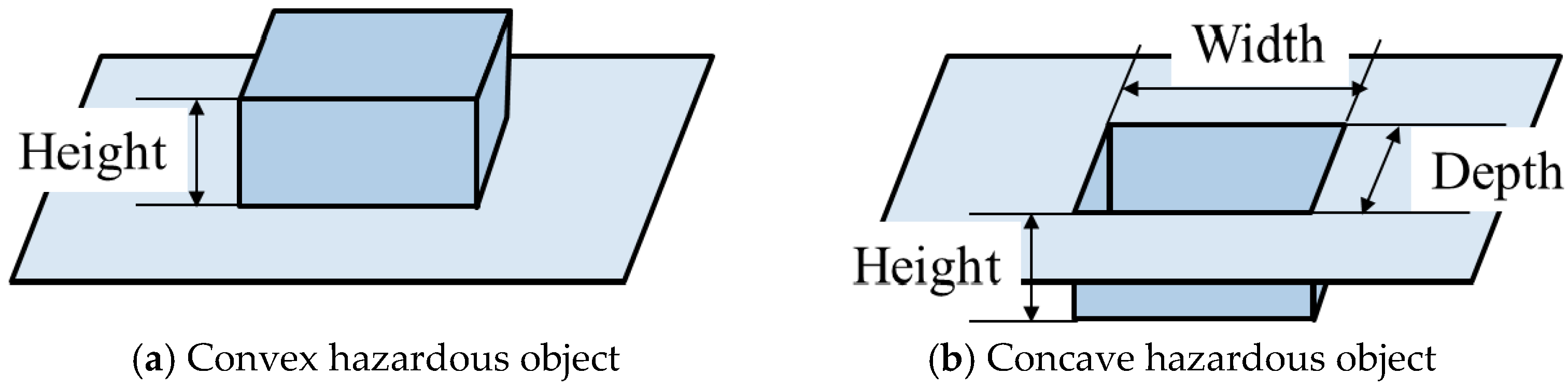

We describe related studies of hazardous object detection. In this paper, ‘resolution’ means the ability to detect hazardous objects (height of ±0.05 m or more) within the hazardous object detection area described in Section 3. As explained earlier, hazardous object detecting sensors are classifiable into active, passive, and combined types.
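The ±0.05 m criterion in the definition of ‘resolution’ above can be illustrated with a minimal sketch. It assumes that a height relative to the ground plane has already been estimated for some point, with positive values for convex portions and negative values for concave portions. The function name and the labels are hypothetical and are not taken from the system described in Section 3.

```python
# Threshold from the definition of 'resolution': height of +/-0.05 m or more.
HAZARD_HEIGHT_M = 0.05


def classify_height(height_m: float, threshold_m: float = HAZARD_HEIGHT_M) -> str:
    """Label a height relative to the ground plane (hypothetical helper).

    Positive heights are treated as convex portions (for example, a curb),
    negative heights as concave portions (for example, a ditch).
    """
    if height_m >= threshold_m:
        return "convex hazard"
    if height_m <= -threshold_m:
        return "concave hazard"
    return "not hazardous"


if __name__ == "__main__":
    for h in (0.12, 0.01, -0.30):
        print(f"{h:+.2f} m -> {classify_height(h)}")
```

Under this reading, a sensor has sufficient resolution only if its height estimates are accurate enough to separate values near ±0.05 m from the ground plane.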

First, the active type will be described. The active type includes laser range finders, ultrasonic sensors, and range image sensors (Time-Of-Flight type and Structured-Light type). A laser range finder acquires two-dimensional information by scanning with a laser, and can detect a hazardous object on the scanning plane with high accuracy during day or night. In an earlier report [12], the authors used a laser range finder to detect curbstones and to navigate. However, it is difficult to detect obstacles above and below the scanning plane. Moreover, these sensors are expensive. Time-Of-Flight (TOF) type and structured-light type sensors are range image sensors. The TOF type range image sensor irradiates an object with light from a light source, measures for every pixel the time until the reflected light returns, and acquires three-dimensional information from the measured time. Another report [13] describes obstacle detection by an indoor rescue robot. The TOF type range image sensor has sufficient resolution to detect hazardous objects, but it is expensive because it measures the time for each pixel. Additionally, it is difficult to use outdoors in the daytime, when there is much infrared noise. The structured-light type sensor projects a specifically patterned light from a projector onto the object. The pattern is then photographed by a pair of cameras, and the projected light pattern and the photographed light pattern are correlated [14]. Three-dimensional information about the object is then obtained by triangulation, as in stereovision. Nevertheless, as with the TOF type, such sensors are difficult to use outdoors during daytime, when there is much infrared noise. Ultrasonic sensors estimate distance from the time until the ultrasonic wave emitted from the sensor is reflected by the object and returns. In one report [15], navigation such as passing through a door and running along a wall was performed using an ultrasonic sensor. Because ultrasonic sensors are inexpensive and compact, they are used frequently, and they are useful day and night. However, because their resolution is low, it is difficult to detect small steps, which are hazardous objects for electric wheelchairs.
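Both the TOF type range image sensor and the ultrasonic sensor described above estimate distance from the round-trip time of an emitted wave. The following is a minimal sketch of that relation, assuming ideal propagation at a constant speed. The speed of sound value (343 m/s, air at about 20 °C) and the function names are assumptions for illustration and do not come from the cited reports.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition of the metre
SPEED_OF_SOUND_M_S = 343.0          # assumed: air at about 20 degrees C


def round_trip_distance(round_trip_time_s: float, wave_speed_m_s: float) -> float:
    """Distance to the reflecting object from the round-trip time of a wave."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The wave travels to the object and back, so halve the path length.
    return wave_speed_m_s * round_trip_time_s / 2.0


def round_trip_time(distance_m: float, wave_speed_m_s: float) -> float:
    """Round-trip time for a wave to reach an object and return."""
    return 2.0 * distance_m / wave_speed_m_s


if __name__ == "__main__":
    # Ultrasonic echo received 10 ms after emission.
    print(round_trip_distance(0.010, SPEED_OF_SOUND_M_S), "m")
    # Timing difference that corresponds to a 0.05 m change in range.
    print(round_trip_time(0.05, SPEED_OF_LIGHT_M_S), "s (light)")
    print(round_trip_time(0.05, SPEED_OF_SOUND_M_S), "s (sound)")
```

Under these assumptions, a 0.05 m change in range corresponds to roughly 0.33 ns of round-trip time for light but roughly 0.29 ms for sound. This is a rough indication of why per-pixel timing makes a TOF sensor demanding to build.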

Next, the passive type is described. Passive type sensors include stereovision and monocular cameras. Stereovision acquires three-dimensional information by photographing the same object from two viewpoints. In an earlier report [16], obstacles and steps were detected using stereovision. Stereovision is inexpensive; moreover, the obtained image has high resolution. However, it is difficult to detect objects at night, when the illumination is poor. Ulrich et al. used a monocular camera to detect obstacles indoors. Their system converts color images to HSI (Hue, Saturation, and Intensity), creates histograms of a reference region, compares the surroundings with the reference region, and detects obstacles [17]. With a monocular camera, calibration is easy because only one camera is used, and mounting is easy because the camera is small. However, it is difficult to use at night, when the lighting is poor.
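The way stereovision recovers depth from two viewpoints can be sketched with the standard triangulation relation for a rectified camera pair, Z = f·B/d. This is a minimal illustration that assumes ideal pinhole cameras with parallel optical axes. The function name and the numerical values (focal length, baseline, disparity) are hypothetical and do not come from the cited reports.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair (Z = f * B / d).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres in metres
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera pair: f = 700 px, baseline = 0.12 m.
    for d in (84.0, 42.0, 21.0):
        print(f"disparity {d:5.1f} px -> depth {stereo_depth(700.0, 0.12, d):.2f} m")
```

Because depth is inversely proportional to disparity, the matching step must locate the same point in both images. This is consistent with the difficulty at night noted above, when poor illumination leaves little image texture to match.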

Finally, we describe the combined type, which uses both active and passive sensors to detect hazardous objects. Murarka et al. used a laser range finder and stereovision to detect obstacles [18]. The laser range finder detects obstacles on the 2D plane while stereovision detects obstacles in 3D space, and a safety map is built. Combining the laser range finder with stereovision resolved a shortcoming of the laser range finder, namely the difficulty of detecting obstacles above and below the plane of the laser beam. However, the laser range finder is expensive. In another report [19], 12 ultrasonic sensors and 2 monocular cameras were installed in an electric wheelchair to detect hazardous objects. Although these sensors are inexpensive, monocular cameras are difficult to use when the illumination is poor. Moreover, it is difficult to detect hazardous objects for electric wheelchairs because the ultrasonic sensor resolution is low. Furthermore, using many sensors requires much time for installation and calibration.

Next, hazardous object detection using Kinect game sensors, which have multiple sensors in one device, is described. In one study [20], the Kinect sensor was installed on a white cane for visually impaired people, and stairs and chairs were detected using range image data obtained from the range image sensor. In another report [21], navigation with obstacle avoidance was performed indoors using a laser scanner, ultrasonic sensors, and Kinect. However, it takes time to install and calibrate the sensors because multiple sensors are used. Reference [22] examined obstacle detection (convex portions only) using Kinect v2 during the daytime and showed that obstacle detection by Kinect v2 is possible. However, no detailed explanation is given of the detectable range or the detection accuracy of obstacles by Kinect v2 during the daytime. Additionally, it is difficult to detect concave hazardous objects because the installation position (height) of Kinect v2 is low. When the installation position of Kinect is low, the height of such an object might be estimated as higher than its actual height, so the system might not judge it to be a hazardous object. Consequently, detection is difficult without approaching the hazardous object.

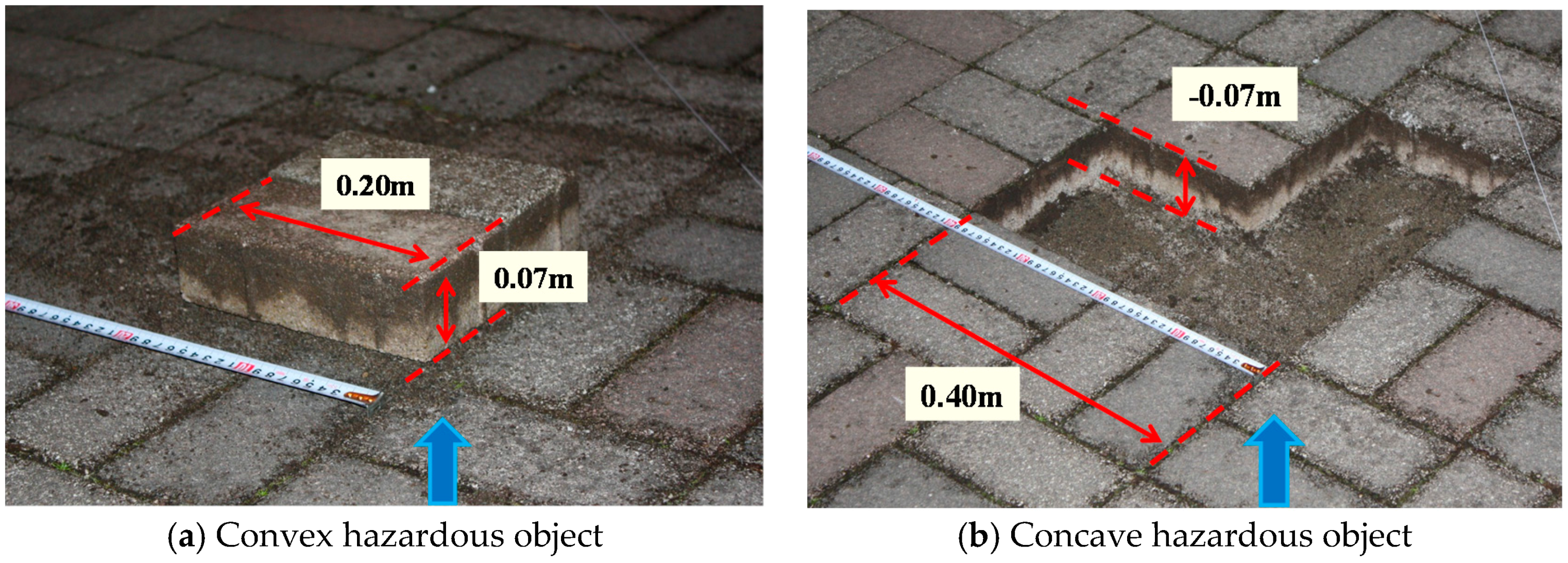

To ensure the safety of electric wheelchairs outdoors, it is necessary to detect static and dynamic hazardous objects using a commercially available and inexpensive sensor. However, few reports describe performance evaluation of static (convex and concave portion) and dynamic (pedestrian) hazardous object detection using Kinect. In this paper, we assess the applicability of the commercially available and inexpensive Kinect sensor as a sensor for detecting hazardous objects to prevent falling accidents of electric wheelchairs.

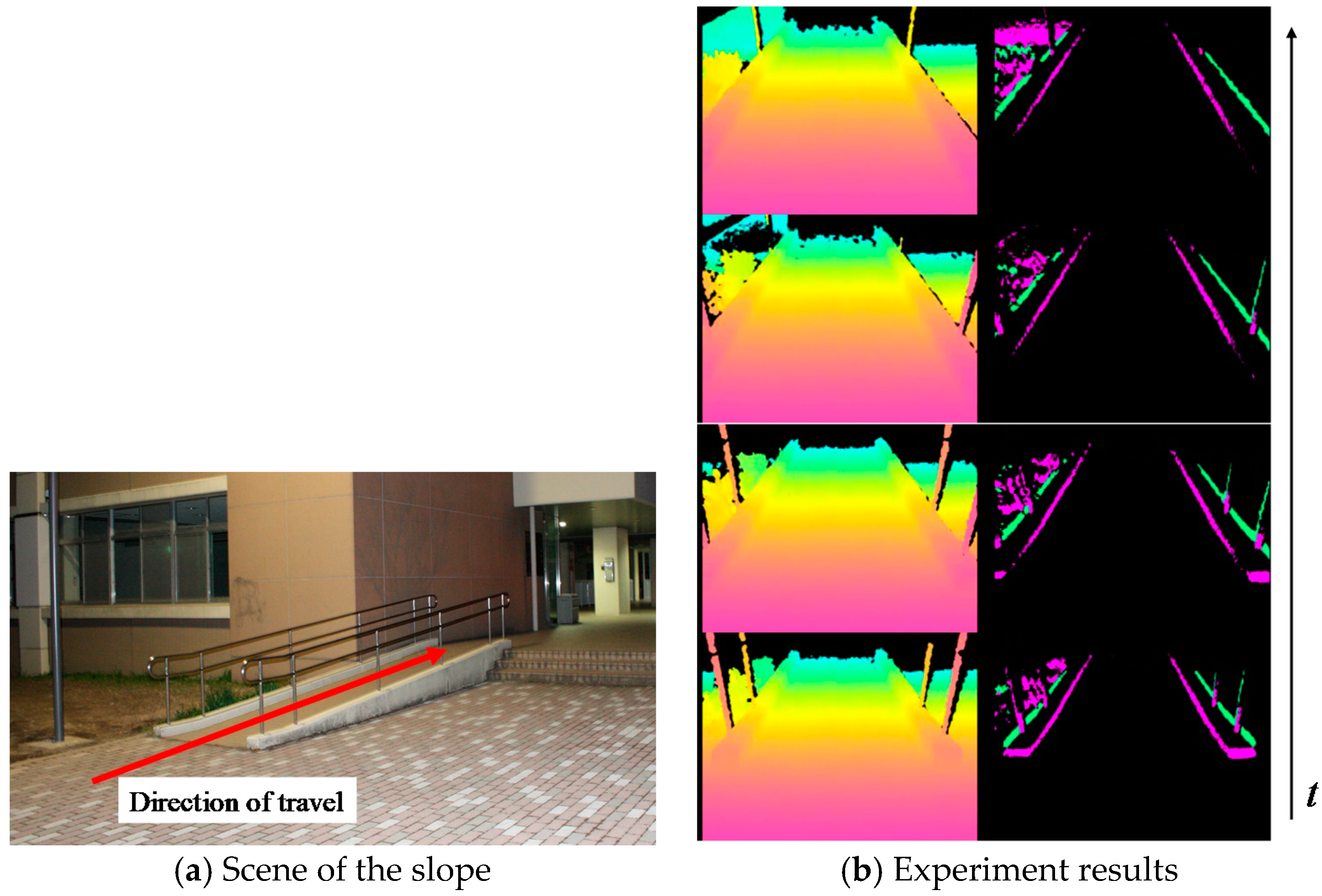

6. Conclusions

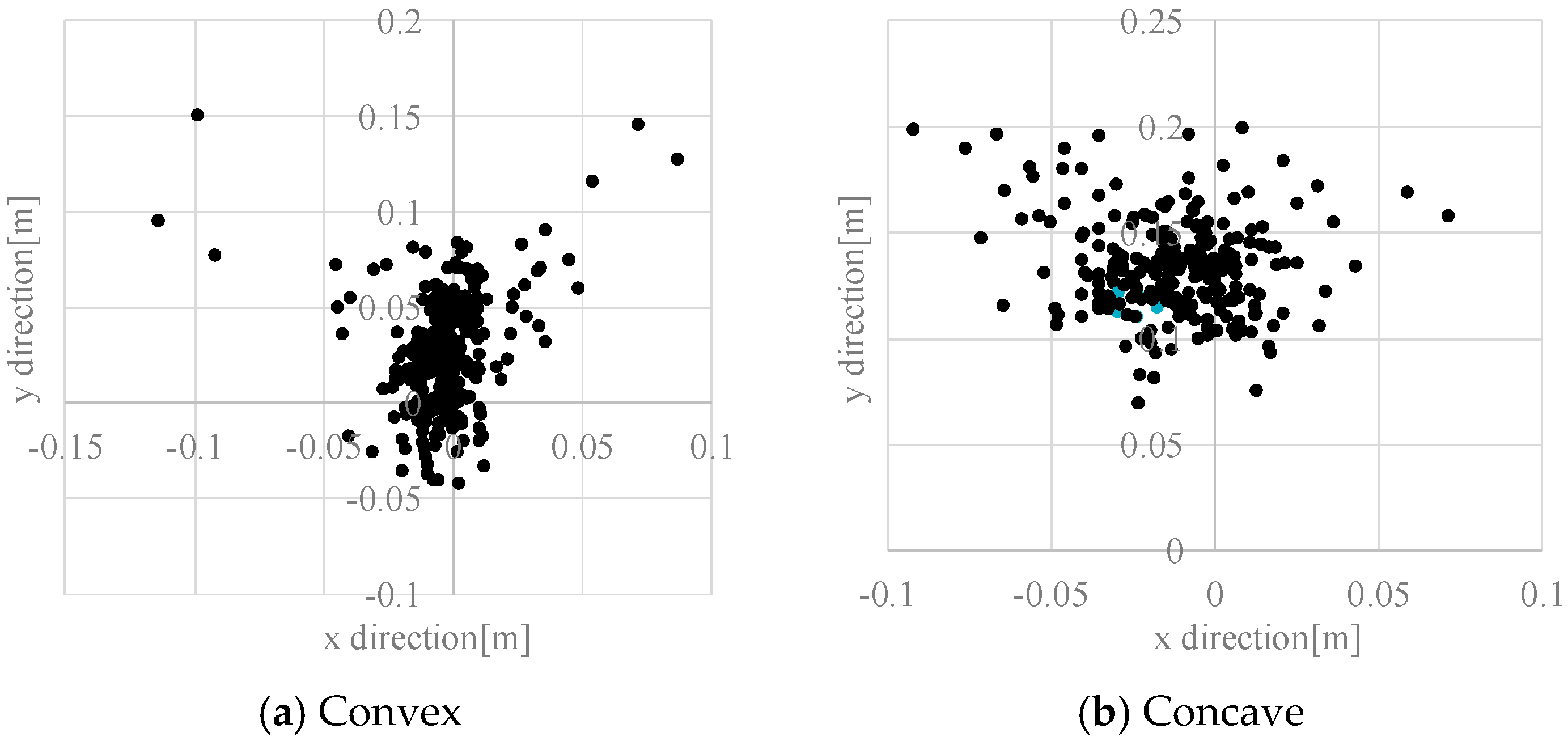

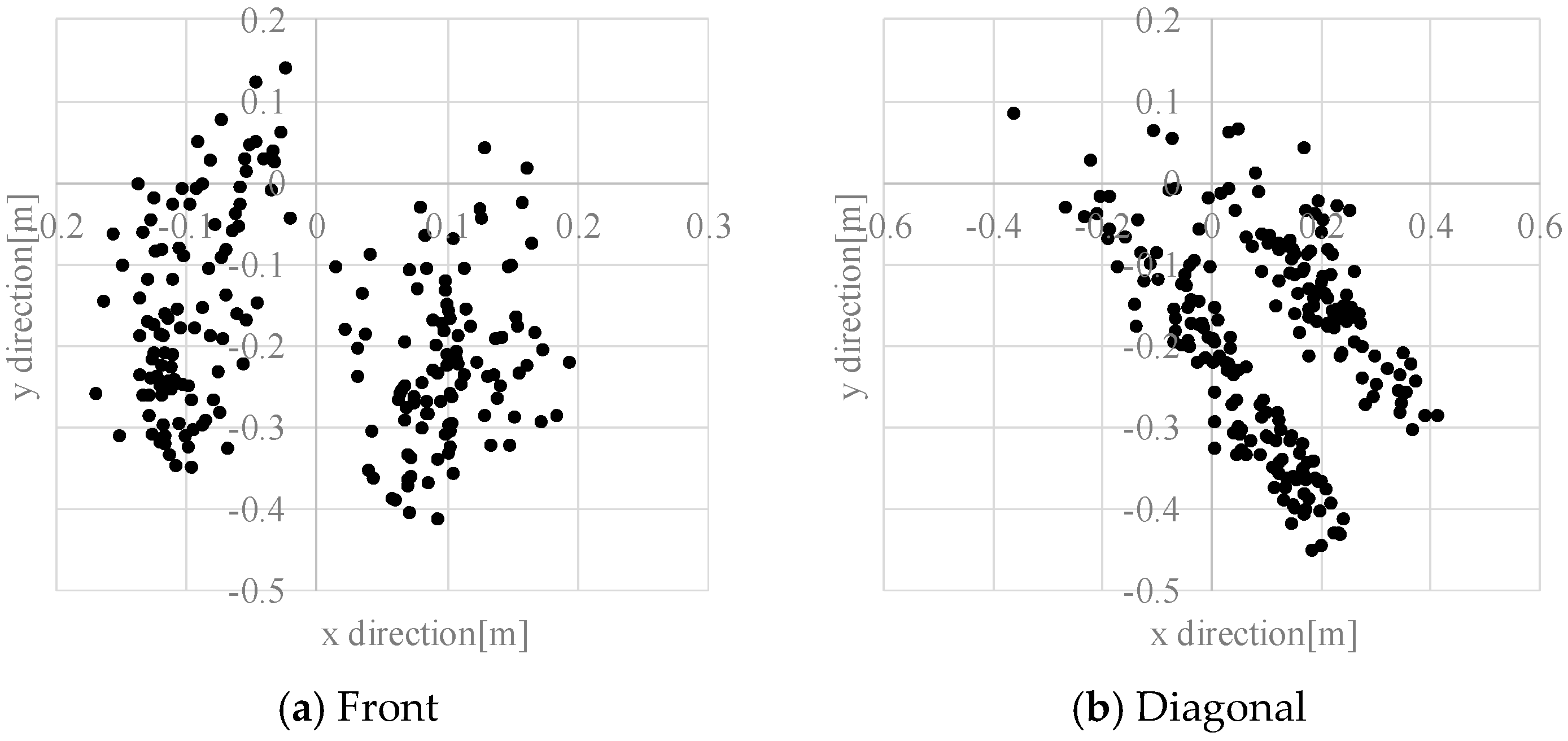

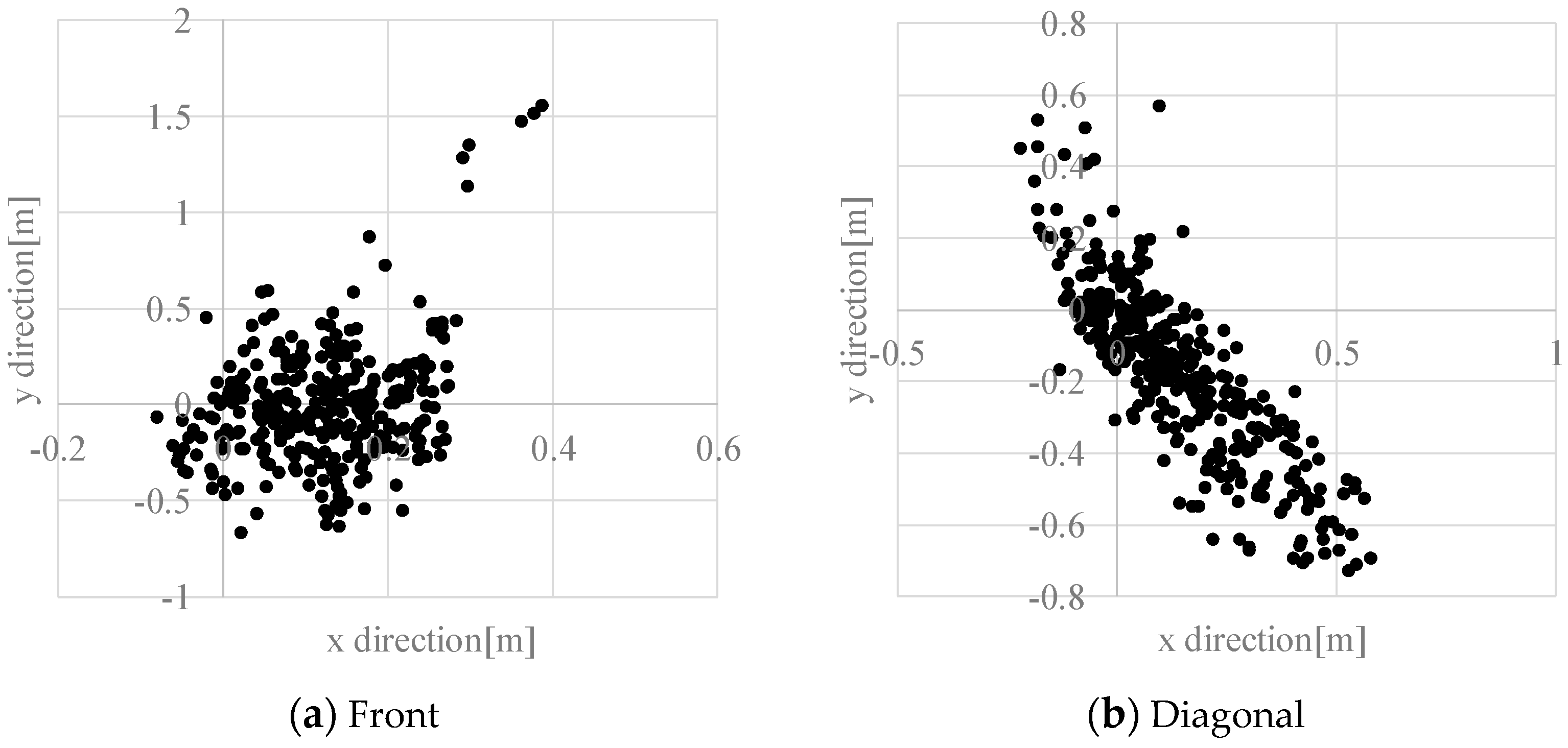

This report has described the applicability of a commercially available Kinect sensor to ensuring the safety of a handle-type electric wheelchair. Considering that the actual users of electric wheelchairs are elderly people, it is important to ensure the safety of electric wheelchairs at night. To ensure the safety of the electric wheelchair during nighttime, we examined warning timing and the hazardous object detection area while considering the recognition, judgment, and operation time of elderly people, and constructed a hazardous object detection system using Kinect sensors. The results of detecting static and dynamic hazardous objects outdoors demonstrated that this system is able to detect them with high accuracy. We also conducted experiments on dynamic hazardous object detection during the daytime. Together with the results presented above, these experiments showed that this system is applicable to ensuring the safety of the handle-type electric wheelchair.

The results described above demonstrated that the system is useful for hazardous object detection with an electric wheelchair using a commercially available and inexpensive Kinect sensor. Furthermore, the system can reduce the risk associated with the use of electric wheelchairs and contribute to securing the autonomous mobility of elderly people.

As future work, further performance evaluations on the detection of various hazardous objects under various environments should be undertaken, because only typical examples of convex and concave portions were evaluated. Additionally, the detection of static hazardous objects during daytime and the improvement of dynamic hazardous object detection by tracking should be studied. Furthermore, a comparison with other methods of static hazardous object detection should be carried out.