A Middleware with Comprehensive Quality of Context Support for the Internet of Things Applications

Abstract

1. Introduction

2. State of the Art of Middleware with QoC Support

2.1. AWARENESS

2.2. COSMOS

2.3. COPAL

2.4. INCOME

2.5. SALES

2.6. Analysis and Open Issues

- QoC provisioning: the middleware’s ability to provide a significant variety of QoI and QoS parameters, in order to satisfy IoT applications that have multiple QoC requirements;

- QoC monitoring: the middleware’s ability to provide mechanisms that detect variations in context quality at runtime, allowing context-aware applications to adapt dynamically to such oscillations. In this case, the middleware should allow the application itself to define the monitoring events it needs to be notified of in real time;

- Heterogeneous physical sensors management: the middleware’s ability to discover device services and to interact with heterogeneous sensors and technologies in a dynamic and opportunistic way. In addition, the middleware must abstract the hardware heterogeneity so that the application does not know the details of the sensor driver implementation;

- Reliable data delivery in mobile environments: the middleware’s ability to ensure the delivery of context data even when applications run in mobile environments with weak or intermittent connectivity, or when IP addresses change.

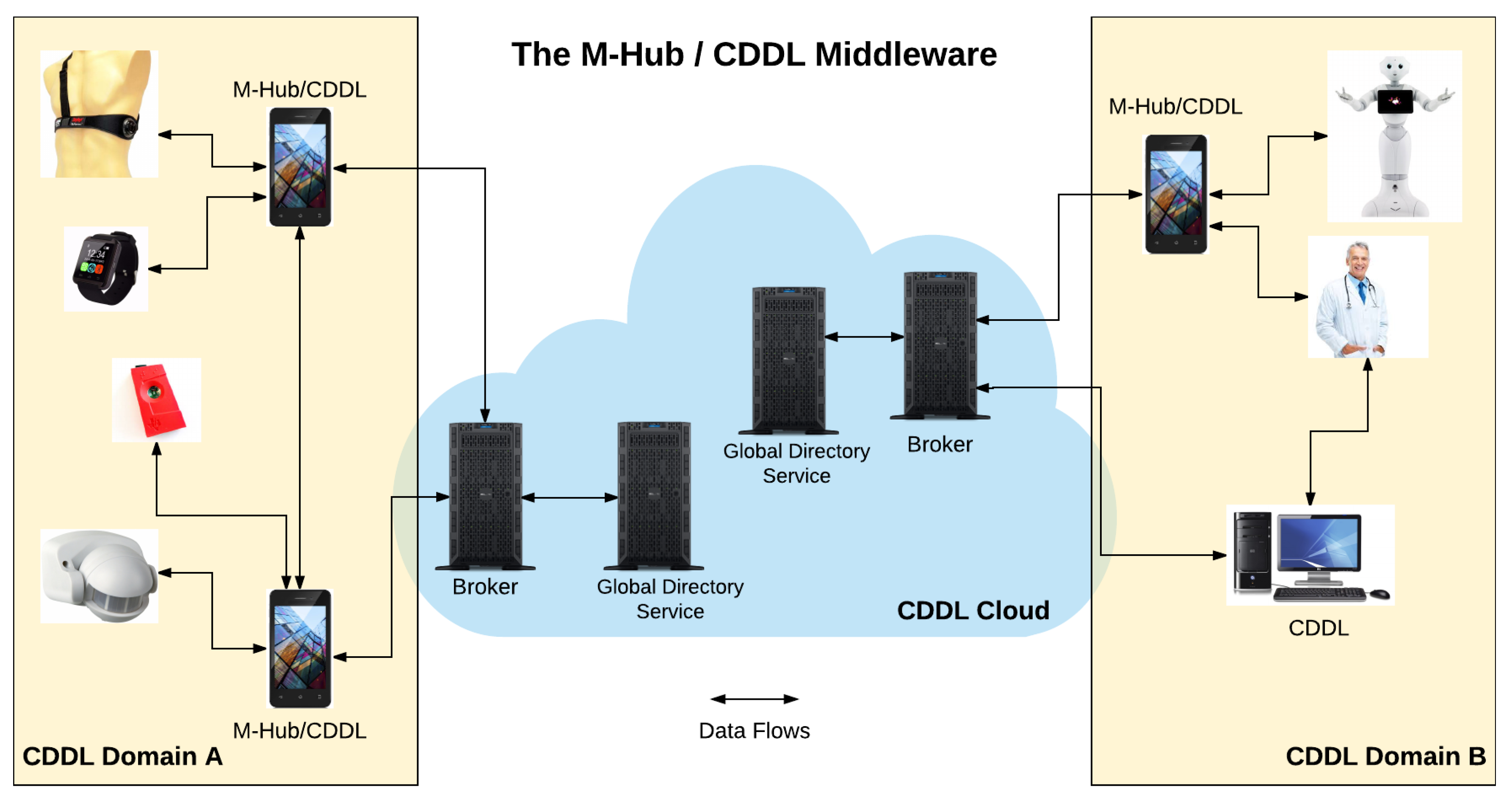

3. Proposed Solution: M-Hub/CDDL

3.1. Middleware Overview

3.2. Case Study

- Interaction with Heterogeneous Physical Sensors: the system must be capable of interacting with different types of sensors (e.g., portable, wearable or embedded in intelligent environments) in order to obtain different types of context data about the patient (e.g., physiological, movement and location) and the environment in which it is located (e.g., light, temperature, air quality);

- Activity recognition: the system must be able to determine the activity performed by the patient in real time, based on the grouping, sorting and processing of the data collected in real time;

- Intensity measurement: The system should be able to measure the intensity of the performed activities, as well as to determine if the intensity is low, high or moderate;

- Situational inference: the system must be able to infer the patient’s state (e.g., patient with atrial fibrillation running with moderate intensity at 1800 m altitude at 3:00 p.m.) by specifying rules whose execution infers different situations based on an analysis that combines data from the sensors, activity and intensity, taking into account the specific treatment of the patient;

- Decision making and execution of actions: the system should be able to perform actions predefined by health professionals for each type of detected situation (e.g., send an emergency notification to health professionals);

- Mobility support: the system must support user mobility, allowing patients and health professionals to use it for activity recognition flexibly and in different situations;

- Local and remote data distribution: the system must be capable of distributing data and context both to the application running on the patient’s mobile device and to the applications running on the healthcare providers’ devices.

- Accuracy: the accuracy of activity recognition, intensity measurement, and status inference depends on how faithfully the collected context data reflect the patient’s actual situation;

- Available attributes and completeness: the efficiency and success rate of activity recognition mechanisms and situation inference are greater when all the attributes of the collected information are available;

- Sensor location: in emergencies, it may be necessary to inform a response team, doctors, caregivers or family members of the patient’s location;

- Delivery reliability: the data processed by the mobile device (activity, intensity, situation) must be delivered to the remote server running in a cloud infrastructure. Since mobility requires the use of wireless networks, which are more prone to failures in the transmission, it is important that the system be able to retransmit the data if delivery is not confirmed;

- Refresh Rate: the system must be able to adjust the frequency used for detecting activities and situations, allowing patient monitoring in real-time or only occasionally as needed;

- History: the system must be able to store detected activities, inferred situations and other patient data so that they can be available to the health professionals when necessary.

- Lifespan: the system should provide the means for health professionals to determine the context information expiration time. Therefore, out-of-date data must be removed from the application history.

3.3. M-Hub/CDDL Requirements

- Interaction with Heterogeneous Physical Sensors: the middleware must provide mechanisms for interacting with a broad range of heterogeneous physical sensors. It must also allow the opportunistic discovery and ad-hoc interaction with sensors using short-range wireless communication technologies such as Classic Bluetooth and BLE. The support for wireless technologies must be extensible, allowing easy integration of new technologies as needed.

- Local and Remote Data Distribution: it must provide mechanisms of data distribution, in order to allow information sharing between context producers and consumers in a flexible way. The middleware must support both local distribution (producers and consumers running on the same device) as well as remote distribution (producers and consumers running on different devices).

- Discovery and Registration of Distributed Services: the middleware must provide mechanisms to describe the characteristics of the available context services provided by smart objects (at least the publisher ID, service name, last read value, and averages of the QoC attributes) so that they can be registered in a local and/or a global directory service. The latter should run in a cloud/cluster infrastructure and maintain a register of all discovered services provided by a group of CDDL publishers. Context-aware applications (subscribers) should discover the available services by querying those directories. The query should use an expressive language that allows the developer to describe not only the application’s demands for context information services but also the QoC those services must provide. CDDL clients (publishers and subscribers) connected to the same broker automatically belong to the same domain (the CDDL domain).

- Uniform and Location-Independent Programming Model: indicates that the programming model (interfaces, components) used for development of applications that consume local data is the same one adopted for applications that consume remote data. That is, the developer does not have to change the way of programming if the data origin changes.

- Context Information Quality, Monitoring, and Provisioning: the middleware must provide mechanisms that allow the context information to be enriched with attributes that describe its quality (e.g., accuracy, source location, measurement time, expiration time, available attributes and completeness) and provide methods to calculate them dynamically. The mechanisms for registering and discovering services should take QoI into account. The local and/or global directory service must be aware of the provided QoI. The context query language should allow the specification of the consumer QoI requirements for selecting appropriate context services that match the specified criteria. In addition, the middleware must provide monitoring mechanisms that allow consumer applications to be notified when the QoC changes significantly, allowing the application to react to those events.

- Distribution Quality Monitoring and Provisioning: this requirement means that producers and consumers can independently define quality of data distribution service policies. These policies express a set of configurations that determine how CDDL handles a broad set of non-functional properties (e.g., delivery reliability, deadline, refresh rate, latency budget, history, lifespan). The service registration and discovery mechanisms should also take into account the need to store and query information about the quality of the distribution service. For some QoS policies, monitoring mechanisms should be provided to ensure that publishers and subscribers are notified when the required quality is no longer met.

- Reliable Data Delivery in Mobility Scenarios: In order to also handle the distribution of context information in unrestricted mobility scenarios, the middleware must provide mechanisms that minimize data loss, in the presence of weak or intermittent connectivity of local gateways and/or consumer applications with the Internet.

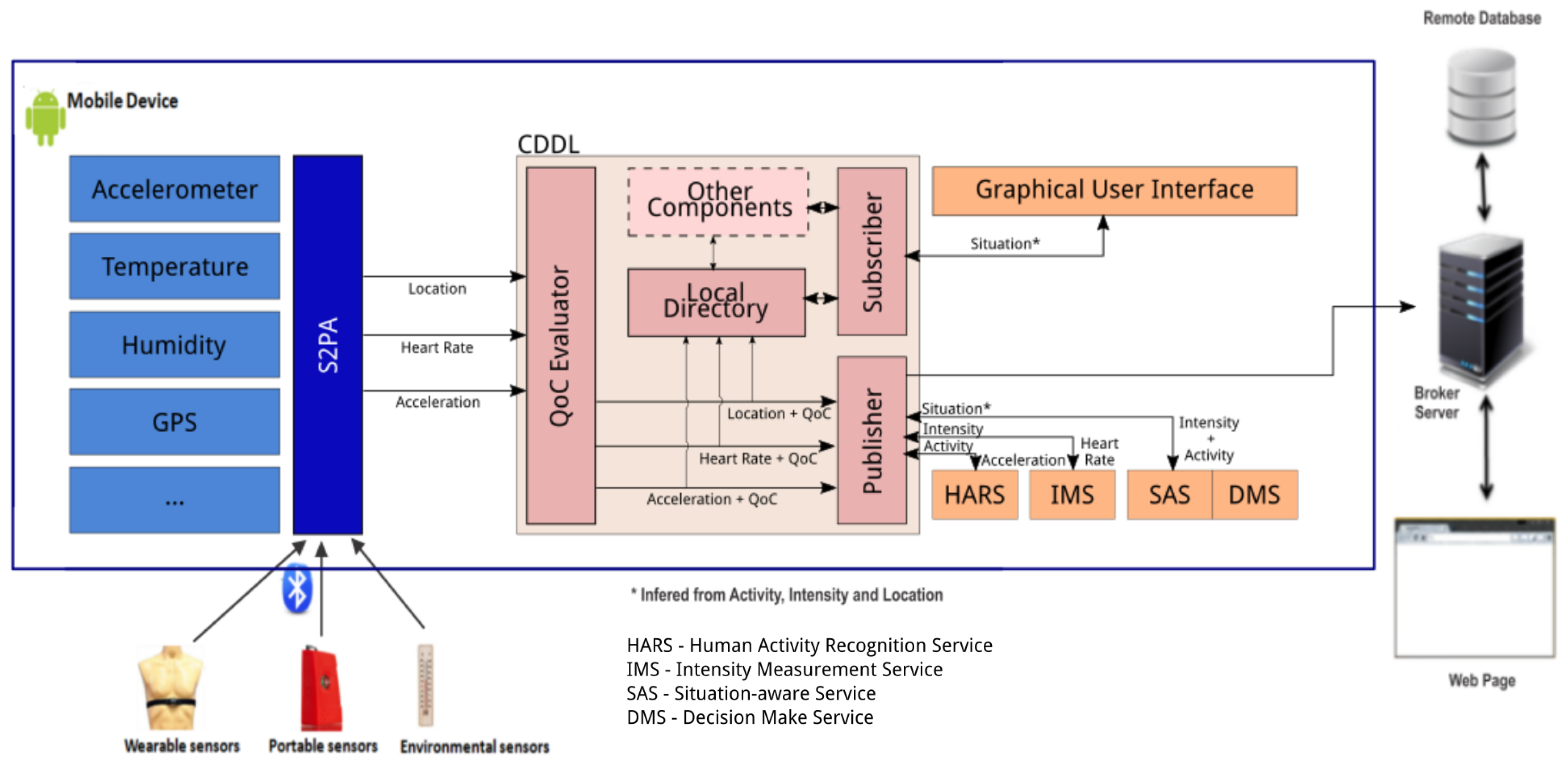

3.4. M-Hub/CDDL Architecture

3.4.1. M-Hub Components

- S2PA: component responsible for managing the discovery of, connection to, and interaction with smart objects. M-Hub standardizes the implementation of sensor adapters/wrappers through three main interfaces provided by S2PA: Technology, Technology Device and Technology Listener. The S2PA APIs were designed to provide generic methods for short-range communication between the M-Hub and smart objects that can be mapped directly onto the specific capabilities of the underlying short-range wireless communication technologies (WPANs). Hence, for each supported WPAN, a component implementing the methods defined by the S2PA APIs was introduced into the M-Hub. The Technology interface holds a unique ID, defined at programming time, that identifies each technology (e.g., Bluetooth Classic, BLE, ANT+, etc.). That interface has the methods required to handle the different short-range protocols used to interact with sensors. Some of the main methods defined by the Technology interface are the following: boolean exists(), which verifies whether the device supports the technology; and readSensorValue() and writeSensorValue(), which request a read or a write of a sensor, respectively. An attribute called serviceName (a String) represents the sensor service (e.g., “Temperature”, “Humidity”, “Accelerometer”). From the M-Hub point of view, each device adapter/wrapper (e.g., Zephyr Bioharness) is a class that implements the Technology Device interface. All the context information and QoC attributes that a sensor adapter/wrapper can provide are sent to the Technology Listener, which forwards the data retrieved from the different devices to the CDDL layer, responsible for publishing the context data. S2PA also manages device reachability, reporting disconnections that can occur for several reasons, such as the movement of smart objects or of the gateway, given the unrestricted mobility of the IoMT.
Once started, the S2PA service periodically searches for available nearby devices supporting the enabled communication technologies. If there are adapters/wrappers compatible with the discovered devices, S2PA connects to them and starts receiving their data (in the case of sensors) or waits for remote commands to be sent to the device (in the case of actuators). Whenever a new smart object is discovered, S2PA generates an event for each service provided by the smart object, notifying its availability. The Local Directory Service (see Section 3.4.2) receives those notifications and registers the discovered services. In addition, S2PA monitors the connection established with the smart object in order to notify the Local Directory Service whenever a disconnection occurs (spontaneously or abruptly) and the connection is not restored within a configurable time window. This time window is intended to prevent unavailability notifications from being sent when the smart object frequently connects to and disconnects from the gateway.

- BT Technology and BLE Technology: components that implement the functionality required for the interaction with devices supporting Bluetooth Classic (e.g., Zephyr Bioharness (https://www.zephyranywhere.com/)) and Bluetooth Low Energy (e.g., Sensor Tag (http://processors.wiki.ti.com/index.php/SensorTag_User_Guide)) respectively, based on the operations defined by the Technology interface.

- Internal Technology: component that implements the functionality required for the interaction with built-in sensors available on the Android device running the M-Hub.
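The S2PA programming contract can be illustrated with the Java sketch below. The interface names (Technology, Technology Device, Technology Listener) and the methods exists(), readSensorValue() and writeSensorValue() come from the text; every parameter list, the SensorReading record and the DisconnectionWindow helper are hypothetical. DisconnectionWindow models the configurable time window that keeps S2PA from reporting a smart object as unavailable when it reconnects quickly; timestamps are passed in explicitly rather than read from a timer, so the logic is easy to check.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

/** Hypothetical reading forwarded by a wrapper; null QoI fields mean "not provided by the sensor". */
record SensorReading(String deviceId, String serviceName, double[] values,
                     Double accuracy, Long measurementTime) {}

/** Receives data and reachability events from wrappers (hypothetical signatures). */
interface TechnologyListener {
    void onSensorData(SensorReading reading);
    void onDeviceUnavailable(String deviceId);
}

/** One short-range technology such as Bluetooth Classic or BLE (hypothetical signatures). */
interface Technology {
    int getId();                      // unique ID fixed at programming time
    boolean exists();                 // does this Android device support the technology?
    void readSensorValue(String deviceId, String serviceName);
    void writeSensorValue(String deviceId, String serviceName, Object value);
    void setListener(TechnologyListener listener);
}

/** One device adapter/wrapper, e.g. for a specific wearable (hypothetical signatures). */
interface TechnologyDevice {
    String getName();
    List<String> getServiceNames();   // e.g. "Temperature", "Accelerometer"
}

/** Hypothetical helper: report a disconnection only if it outlasts the configured window. */
final class DisconnectionWindow {
    private final long windowMs;
    private final Map<String, Long> disconnectedSince = new HashMap<>();

    DisconnectionWindow(long windowMs) { this.windowMs = windowMs; }

    /** Records the first moment the device was seen as disconnected. */
    void onDisconnected(String deviceId, long nowMs) { disconnectedSince.putIfAbsent(deviceId, nowMs); }

    /** A reconnection inside the window cancels the pending notification. */
    void onReconnected(String deviceId) { disconnectedSince.remove(deviceId); }

    /** Returns (and forgets) devices whose window elapsed; these become unavailability events. */
    List<String> dueForNotification(long nowMs) {
        List<String> due = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = disconnectedSince.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> e = it.next();
            if (nowMs - e.getValue() >= windowMs) {
                due.add(e.getKey());
                it.remove();
            }
        }
        return due;
    }
}
```

In this sketch, a wrapper would call onDisconnected() and onReconnected() from its connection callbacks, and a periodic task would turn the IDs returned by dueForNotification() into service-unavailability events for the Local Directory Service.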

3.4.2. CDDL Components

- QoC Evaluator: receives context data enriched with some QoI metadata from S2PA. One of its responsibilities is to dynamically compute the values of QoI parameters that are not provided during data acquisition because they are not intrinsically related to the sensor hardware. The computed QoI is added to the context data metadata structure, which is then forwarded to the Publisher. The QoC Evaluator also periodically calculates the average quality of context provided by each sensor. This average QoC is relevant to client applications that use it as a criterion for selecting context services, and it is stored by the directory services.

- Publisher: component responsible for publishing data, which may originate from S2PA or be provided by the application layer. From the point of view of client applications, each instantiated Publisher is a context service provider. In addition to publishing context data enriched with QoI metadata, this component can also publish the context queries and responses used when searching for smart object services. Data delivery to the broker is performed according to a set of QoS policies implemented by this component.

- Local Directory Service: responsible for managing information related to the context service providers running on the device. This information includes the available context data types, the average quality of the information published, and data related to the quality of the distribution service offered by each provider. Local Directory Service is also responsible for publishing the service unavailability notification on the same topic used to publish and receive the context data in order to notify the applications that a service is no longer available.

- Subscriber: component responsible for context data acquisition following a topic-based approach, i.e., applications subscribe to a given context topic. In addition to receiving context data enriched with QoI metadata, a Subscriber can also receive context queries and responses. Data delivery to the application layer is performed according to a set of QoS policies implemented by this component.

- Micro Broker: modified version of a Broker Server targeting mobile devices running the Android platform. Its function is to mediate the communication between publishers and subscribers based on topics. A topic is a hierarchically structured string used for message filtering and routing. By default, this component is configured to accept only local connections, so message exchange is only possible between applications running on the same device. However, the Micro Broker can also be configured to accept remote connections, enabling communication between applications running on different devices.

- Connection: component responsible for managing the connections and sessions established by CDDL clients with brokers, whether local or remote. An application can establish multiple connections, each of which can be either local or remote. For each established connection, an instance of the Connection component is created to manage it.

- Filter: component that filters information based on the content of its attributes, including the QoI metadata. MQTT allows clients to publish and subscribe context information based only on the definition/signature of topics that are managed by the broker. However, it does not provide content-based routing/filtering. Therefore, applications use the topic approach to send and receive data to/from the MQTT broker and locally apply the content-based filtering mechanism, if necessary.

- Monitor: component responsible for analyzing context data streams in order to detect the occurrence of certain events that are of interest to the application. The monitoring mechanism is rule-based, an approach that allows the application to define monitoring events of its interest and implement the actions that must be performed when such events are detected. The monitoring mechanism uses a CEP engine which allows event processing to occur in near real-time. For each monitoring rule, a Listener is provided through which the application is notified in case of an event occurrence. This component can also be used for QoI and QoS monitoring. However, some QoS policies are self-monitored, i.e. the policy itself has a standard monitoring mechanism that notifies the application if the required quality of service is violated.
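Because MQTT routes only by topic, the content-based part of filtering has to run in the client. The following Java sketch, which is not the CDDL API, shows the two steps on a hypothetical ContextMessage: MQTT-style topic-filter matching ('+' matches exactly one level, '#' matches all remaining levels) followed by a local predicate over the value and QoI fields. The broker already performs the topic match; it is repeated here only so the whole path runs without a broker, and the topic names are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical context message: accuracy is an error bound, so smaller is better here. */
record ContextMessage(String topic, double value, double accuracy, long measurementTime) {}

final class LocalFilterSketch {

    /**
     * Simplified MQTT topic-filter matching: '+' matches one level, a final '#' matches the
     * remaining levels (including none). Ignores the rule that wildcards skip "$" topics.
     */
    static boolean topicMatches(String filter, String topic) {
        String[] f = filter.split("/", -1);
        String[] t = topic.split("/", -1);
        for (int i = 0; i < f.length; i++) {
            if (f[i].equals("#")) return true;            // multi-level wildcard
            if (i >= t.length) return false;              // topic has fewer levels than the filter
            if (!f[i].equals("+") && !f[i].equals(t[i])) return false;
        }
        return f.length == t.length;                      // no unmatched trailing topic levels
    }

    private final String topicFilter;
    private final Predicate<ContextMessage> contentFilter;
    private final List<ContextMessage> delivered = new ArrayList<>();

    LocalFilterSketch(String topicFilter, Predicate<ContextMessage> contentFilter) {
        this.topicFilter = topicFilter;
        this.contentFilter = contentFilter;
    }

    /** Called for each message received from the broker; content filtering happens locally. */
    void onMessage(ContextMessage m) {
        if (topicMatches(topicFilter, m.topic()) && contentFilter.test(m)) {
            delivered.add(m);
        }
    }

    List<ContextMessage> delivered() { return List.copyOf(delivered); }
}
```

The predicate can mix application data and QoI metadata, e.g. "heart rate above 120 and accuracy within 2 units", which is exactly the kind of condition a topic alone cannot express.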

3.4.3. Cloud/Cluster Components

- Broker Server: mediates the communication between publishers and subscribers connected to the cloud. This component has a greater data distribution capacity than the Micro Broker, since it runs on hardware with more powerful computing resources.

- Global Directory Service: component responsible for receiving and storing the records of all service providers available in the same CDDL domain. By default, each Local Directory Service publishes events that characterize the discovery or disappearance of context services on topics managed by the Broker Server (if the CDDL client has a network connection). The Global Directory Service subscribes to these topics and updates its records based on the events it receives. However, each Local Directory Service can disable the sending of information to the Global Directory Service.
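At its core, a directory service of this kind maintains a map keyed by publisher and service, updated by found/lost events and queried with QoC constraints. The Java sketch below illustrates that idea under stated assumptions: the ServiceRecord fields follow the minimum description listed in Section 3.3, while the class name, the method names and the convention that a smaller avgAccuracy is better are hypothetical. A real deployment would receive these events as broker messages rather than as direct method calls.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Hypothetical directory entry; avgAccuracy is an error bound (smaller is better). */
record ServiceRecord(String publisherId, String serviceName, double lastValue,
                     double avgAccuracy, double avgAgeMs) {}

final class DirectorySketch {
    /** One entry per (publisher, service) pair. */
    private record Key(String publisherId, String serviceName) {}

    private final Map<Key, ServiceRecord> records = new HashMap<>();

    /** "Service found" event from a Local Directory Service: insert or refresh the record. */
    void onServiceFound(ServiceRecord r) {
        records.put(new Key(r.publisherId(), r.serviceName()), r);
    }

    /** "Service lost" event: drop the record. */
    void onServiceLost(String publisherId, String serviceName) {
        records.remove(new Key(publisherId, serviceName));
    }

    /** Query by service name plus a QoC constraint, ordered by publisher ID for stable output. */
    List<ServiceRecord> query(String serviceName, Predicate<ServiceRecord> qoc) {
        return records.values().stream()
                .filter(r -> r.serviceName().equals(serviceName))
                .filter(qoc)
                .sorted(Comparator.comparing(ServiceRecord::publisherId))
                .toList();
    }
}
```

Using a Java predicate in place of the expressive query language mentioned in Section 3.3 keeps the sketch small; it is only a stand-in for that language.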

3.5. Quality of Information Parameters

- Accuracy: represents how close the measured value is to the actual value [8]. Generally, IoT applications work with the accuracy estimated by the sensor. In some cases, sensor defects and/or failures can cause the estimated accuracy to differ significantly from the real one. For mobile devices, S2PA obtains the accuracy of internal sensors through the Android sensors API. For external sensors (BT and BLE sensors), however, the sensor driver programmer must use the sensor’s own APIs to obtain the accuracy and report it to S2PA, which in turn passes it to CDDL.

- Source Location: is the location where the context information was measured [8]. In M-Hub/CDDL, it indicates the approximate position of the context source at the time of measurement. If the smart object has built-in location hardware, the location it provides is assigned to the gathered data. In most cases, however, smart objects do not have their own location hardware, and the QoC Evaluator assigns to the data the position of the device on which it runs. Since short-range wireless networks are used to interact with smart objects, the provided location is only an approximation of the context source location.

- Measurement Time: is the moment at which the context information was measured [8]. In M-Hub/CDDL, it indicates the approximate time at which the information was measured at the smart object. If the smart object does not provide this information, the QoC Evaluator fills the corresponding metadata with the timestamp of data arrival. Note that in this case the time elapsed between the measurement and the arrival of the data through the WPAN is neglected; it is usually on the order of milliseconds. A similar approach is adopted for internal sensors, except that there is no short-range network communication, which leads to even better precision.

- Arrival Time: the timestamp at which the context information arrives at the consumer. The arrival time is set in the context information by the Subscriber component.

- Expiration Time: is the time interval during which the context information can be considered valid by applications, counted from the measurement time [8]. The expiration time is specified by the producer of the information and may or may not be taken into account by consumer applications. Upon receiving the information, the consumer can accept the specified validity time or extend it according to its own interests.

- Age: is the elapsed time between the measurement time of the information and the current instant [8], calculated as $\mathit{Age} = t_{c} - t_{m}$, where $t_{c}$ is the current time and $t_{m}$ is the measurement time. Age is therefore a highly dynamic parameter, which must be recalculated whenever it is needed for decision making. In M-Hub/CDDL, the parameter is calculated dynamically as the difference between the current time and the measurement time. When the producer and the consumer run on different devices, a time synchronization mechanism is required to calculate the age of the context information at data receipt as accurately as possible (e.g., ClockSync (https://play.google.com/store/apps/details?id=ru.org.amip.ClockSync&hl) synchronizes the device system clock with atomic time from the Internet via the Network Time Protocol, NTP).

- Measurement Interval: is the minimum separation interval between two sensor measurements [8]. This parameter is calculated dynamically by the QoC Evaluator based on the difference between the measurement times of two consecutive samples of the same context information. Although the sensors on the Android platform may have their frequency set, the actual sampling rate is not always the same as that specified. Therefore, M-Hub/CDDL adopts the strategy of calculating this parameter at runtime.

- Available Attributes: are the attributes that characterize a given information type [8]. For example, location information provided by GPS has three attributes: latitude, longitude, and altitude. Under normal conditions, all context information attributes are available. However, sensor failures can cause incomplete readings.

- Completeness: indicates how complete the information received by the consumer is. In other words, it is the amount of information provided by a context object, and it can be calculated as the ratio between the sum of the weights of the available attributes and the sum of the weights of all attributes required by the consumer: $\mathit{Completeness} = \frac{\sum_{i=1}^{m} w_{i}}{\sum_{j=1}^{n} w_{j}}$, where m is the number of available attributes and n is the total number of attributes of the desired information. The weights w are necessary because each attribute may have a different importance for each consumer. For example, if the location sensor provides only the latitude and longitude attributes, but not the altitude, then with equal weights the reading completeness is 2/3, approximately 66.7%. M-Hub/CDDL assumes, by default, the same weight for all attributes. In M-Hub/CDDL, completeness is calculated dynamically by the QoC Evaluator. The lower the completeness value, the more incomplete the information received.

- Numeric Resolution: indicates the degree of detail of the context information [6]; [8] calls it granularity. In M-Hub/CDDL, for numerical data, the QoC Evaluator calculates the numeric resolution from the number of decimal places of the measured value. Note that a sensor that reports temperature with two decimal places (e.g., 20.01 °C) is not necessarily more accurate than one that reports only one (e.g., 19.0 °C).
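The QoI definitions above reduce to a few small computations. The Java helpers below are a hypothetical sketch, not the QoC Evaluator’s code, of age, expiration, measurement interval, weighted completeness and decimal-place resolution, with times in milliseconds. The reading is passed as a string for the resolution check because parsing it into a double would turn 19.0 into 19 and lose the reported decimal place.

```java
import java.math.BigDecimal;
import java.util.Map;
import java.util.Set;

/** Hypothetical helpers mirroring the QoI definitions; times are in milliseconds. */
final class QoiSketch {

    /** Age = t_c - t_m. */
    static long age(long currentTimeMs, long measurementTimeMs) {
        return currentTimeMs - measurementTimeMs;
    }

    /** Information is valid while its age does not exceed the expiration interval. */
    static boolean isExpired(long currentTimeMs, long measurementTimeMs, long expirationMs) {
        return age(currentTimeMs, measurementTimeMs) > expirationMs;
    }

    /** Observed interval between two consecutive samples of the same context information. */
    static long measurementInterval(long previousMeasurementMs, long currentMeasurementMs) {
        return currentMeasurementMs - previousMeasurementMs;
    }

    /** Completeness = (sum of weights of available attributes) / (sum of weights of all attributes). */
    static double completeness(Map<String, Double> weights, Set<String> available) {
        double total = 0;
        double present = 0;
        for (Map.Entry<String, Double> e : weights.entrySet()) {
            total += e.getValue();
            if (available.contains(e.getKey())) present += e.getValue();
        }
        return total == 0 ? 0.0 : present / total;
    }

    /** Numeric resolution as the number of decimal places of the value as reported. */
    static int decimalPlaces(String reportedValue) {
        return Math.max(0, new BigDecimal(reportedValue).scale());
    }
}
```

With equal weights for latitude, longitude and altitude and only the first two available, completeness() returns 2/3, consistent with the GPS example in the Completeness item.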

3.6. Quality of the Distribution Service Policies

- Deadline: Publishers and subscribers use this policy to specify the maximum time they are willing to wait to send and receive, respectively, at least one message. To time the deadline, this policy uses a local Java TimerTask. This component notifies the application through a Listener when the specified maximum wait time expires. The maximum wait time is set in milliseconds, with the default value of this parameter being “disabled” (deadline equal to zero). In this case, the application will not receive any notification. Publishers and subscribers define the maximum waiting time independently of each other, meaning there is no negotiation between them.

- Refresh Rate: Publishers and subscribers use this policy to specify the minimum separation interval between successive message submissions and receipts, respectively. This policy uses a time-based filter that causes only the last (most recent) message to actually be sent or received. To control the send and receive intervals, this policy uses a local Java TimerTask. The minimum separation interval is defined in milliseconds, and the default value of this parameter is “disabled” (minimum separation interval equal to zero). Publishers and subscribers do not negotiate the minimum separation interval. Thus, even if the publisher sends data at a very high frequency, the subscriber can establish a lower reception rate that meets its requirements.

- Latency Budget: This policy is used by publishers and subscribers to set an additional delay in sending and receiving messages, respectively. An additional delay greater than zero causes the middleware to accumulate the messages produced in the specified interval and send or receive them in a single burst by means of a packing message (a message into which all accumulated messages are inserted). Therefore, if the packing message is lost, all messages inserted into it are also lost. To delay sending and receiving messages, this policy uses a local Java TimerTask. The additional delay is set in milliseconds, and the default value of this parameter is “disabled” (additional delay equal to zero), in which case the middleware does not delay sending/receiving messages. Publishers and subscribers define the additional delay independently of each other. Therefore, even if the publisher does not set an additional delay, the subscriber can do so in order to receive the messages grouped at regular intervals.

- History: This policy is used by publishers and subscribers to set the number of messages that can be stored in their respective histories. It can be configured in two ways: keep all and keep last. In keep all mode, all messages are stored in the history (up to resource limits). In keep last mode, only the last n messages are stored in the history (n must be defined by the application). The default setting for this policy is keep last with a history size of 1. Publishers and subscribers define the type and size of the history independently of each other, that is, there is no negotiation between them.

- Destination Order: This policy determines how messages are stored in the history of publishers and subscribers. In the case of the publisher, the messages are always sorted by the measurement time (the arrival time of the data at the gateway, if the measurement time is not informed by the sensor). In the case of the subscriber, the messages can be sorted by the measurement time, publication time or message reception time at the subscriber. The default ordering of the subscriber is the message reception time. If the subscriber sets the order by the measurement time or the publication time, it is necessary to install clock synchronization software, since the middleware does not provide this type of functionality.

- Lifespan: This policy is used by publishers and subscribers to control the lifespan of messages. It uses a local Java TimerTask that removes from the history the messages whose expiration time has passed. The default value of lifespan is “disabled” (lifespan equal to zero), indicating that messages do not have a given lifespan and therefore will not be removed from the history (unless replaced by more recent messages when the history is set to “keep last”). This policy is flexible with respect to which agent defines the lifespan. By default, the lifespan agent is the publisher (in an analogy with the real world, it is always the product manufacturer that specifies the expiration date). In this case, the message is removed from both the publisher’s history and the subscriber’s history. Note that if a message reaches the subscriber with its lifespan already expired, it is not entered in the history. However, there is an alternative setting in which the subscriber ignores the lifespan specified by the publisher and sets a different one, which may be longer or shorter than the publisher’s (in an analogy with the real world, consumers sometimes discard products before the expiration date and sometimes use them even after it). This setting ensures that the subscriber is not forced to accept the lifespan imposed by the publisher. There is no negotiation process between publisher and subscriber regarding lifespan.

- Retention: the publisher uses this policy to signal that the broker should retain the last (and only the last) message published on the topic. For the publisher, the default value is “disabled”, indicating that the broker should not retain messages. Retention allows late subscribers, i.e., subscribers that were offline when the message was published, to receive the retained message as soon as they (re)connect to the broker. Thus, the subscriber does not have to wait until the next publication to receive an update. However, the client application will only receive retained messages if the subscriber has the retention policy enabled. The default value of this policy for the subscriber is also “disabled”, indicating that the subscriber will not pass any retained messages on to the client application. There is no negotiation between publishers and subscribers regarding message retention.

- Vivacity: this policy allows clients to be notified of connection failures of other clients in the same CDDL domain. For client B to receive client A’s failure notifications, client A must register a Last Will and Testament (LWT) message with the broker at connection time. If client A’s connection with the broker fails, the broker publishes the LWT message on the topic “Vivacity”. Therefore, client B only receives the notification if it subscribes to this topic. Receipt of notifications can be filtered by client ID, so client B may indicate that it wants to be notified only of client A’s failures instead of receiving failure notifications from all other clients. The default policy value for the client is “disabled”, indicating that it does not register an LWT message with the broker at connection time. There is no negotiation between publishers and subscribers regarding this policy.

- Reliability: sets the reliability level used when delivering messages. There are three possible values: 0 (at most once), 1 (at least once), and 2 (exactly once). Level 0 uses a best-effort policy: message delivery is not confirmed by the receiver, and the message is not stored by the sender, so it can be lost in the event of a delivery failure. This is the fastest transfer mode. At level 1, the receiver must send an acknowledgment to the sender. If the sender does not receive the acknowledgment, the message is sent again with the DUP flag set until delivery is confirmed. As a result, the receiver may receive and process the same message several times. The message must be stored locally in the sender’s buffer until the acknowledgment has been received. If the receiver is a broker, it sends the message to the subscribers. If the receiver is a client, the message is delivered to the subscriber application. At level 2, at least two pairs of transmissions are used between the sender and the receiver before the message is deleted from the sender. In the first pair of transmissions, the sender transmits the message and gets an acknowledgment (PUBREC) from the receiver indicating that it has stored the message. If the sender does not receive the acknowledgment, the message is sent again with the DUP flag set, periodically, until an acknowledgment is received. In the second pair of transmissions, the sender tells the receiver that it has received the acknowledgment by sending it a “PUBREL” message. If the sender does not receive an acknowledgment (PUBCOMP) of the “PUBREL” message, the “PUBREL” message is sent again until an acknowledgment is received. The sender deletes the message from its buffer when it receives the acknowledgment of the “PUBREL” message. The receiver can process the message in either the first or the second phase, provided that it does not process it again. If the receiver is a broker, it publishes the message to the subscribers.
If the receiver is a client, it delivers the message to the subscriber application. This is the slowest and most expensive transmission mode. The default reliability value is level 0. There is no negotiation process between publishers and subscribers regarding this policy. However, the subscriber’s reliability requirements will only be met if the publisher uses a reliability level greater than or equal to that required by the subscriber. For example, if the subscriber requires reliability level 1, then the publisher needs to use reliability level 1 or 2.

- Session: defines whether the session between the client and the broker is persistent. In a persistent session, the broker stores the client's identifier and the topics it has subscribed to, so this information does not have to be sent again after a reconnection. During a temporary disconnection, the broker buffers the messages published on topics of interest to the subscriber, but only those that require delivery confirmation (reliability level 1 or 2). When the client reconnects, the broker attempts to send (or resend) these persisted messages in the order in which they were received. The default value of this policy is “not persistent”: the broker does not save the session between the subscriber and itself and therefore does not resend any messages when the connection is restored.
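The interplay between the Reliability and Session policies can be illustrated with a small, self-contained model. This is a sketch under stated assumptions, not the CDDL or MQTT broker implementation: the names `ReliabilityLevel`, `SessionAwareBroker`, `publish`, `disconnect`, and `reconnect` are hypothetical. It encodes only two rules from the text: a subscriber's requirement is met when the publisher's level is greater than or equal to it, and a broker holding a persistent session buffers, in order of receipt, only the messages that require delivery confirmation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical model of the three reliability levels described above.
enum ReliabilityLevel {
    AT_MOST_ONCE(0), AT_LEAST_ONCE(1), EXACTLY_ONCE(2);

    final int level;

    ReliabilityLevel(int level) {
        this.level = level;
    }

    // A subscriber's requirement is met only if the publisher's level is equal or higher.
    boolean satisfies(ReliabilityLevel required) {
        return level >= required.level;
    }

    // Levels 1 and 2 require delivery confirmation.
    boolean requiresConfirmation() {
        return level >= 1;
    }
}

// Hypothetical broker-side view of a single subscriber's session.
class SessionAwareBroker {
    private final boolean persistentSession;
    private boolean connected = true;
    // Messages held while the subscriber is offline, in order of receipt.
    private final Deque<String> offlineBuffer = new ArrayDeque<>();
    // Messages handed to the subscriber, in delivery order.
    final List<String> delivered = new ArrayList<>();

    SessionAwareBroker(boolean persistentSession) {
        this.persistentSession = persistentSession;
    }

    void disconnect() {
        connected = false;
    }

    void publish(String payload, ReliabilityLevel level) {
        if (connected) {
            delivered.add(payload);
        } else if (persistentSession && level.requiresConfirmation()) {
            // Only messages that require confirmation survive a temporary disconnection.
            offlineBuffer.addLast(payload);
        }
        // Otherwise (level 0, or a non-persistent session) the message is not kept.
    }

    void reconnect() {
        connected = true;
        // Resend the buffered messages, obeying the order of receipt.
        while (!offlineBuffer.isEmpty()) {
            delivered.add(offlineBuffer.pollFirst());
        }
    }
}
```

The sketch deliberately omits acknowledgments, DUP retransmissions, and the PUBREL exchange; it only shows which messages a persistent session retains and in what order they are replayed.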

3.7. Implementation Aspects

3.7.1. Complex Events Processing

3.7.2. QoC Evaluation

```
insert into ServiceInformationMessage (
    publisherID, serviceName, accuracy, measurementTime, availableAttributes,
    sourceLocationLatitude, sourceLocationLongitude, sourceLocationAltitude,
    measurementInterval, numericalResolution, age
) select publisherID, serviceName, avg(accuracy), avg(measurementTime),
    avg(availableAttributes), avg(sourceLocationLatitude), avg(sourceLocationLongitude),
    avg(sourceLocationAltitude), avg(measurementInterval), avg(numericalResolution), avg(age)
from SensorDataMessage.win:time(TIME_WINDOW)
group by publisherID, serviceName
```
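The statement above groups sensor readings by `publisherID` and `serviceName` over a sliding time window and averages their QoC attributes. A rough plain-Java equivalent for a single attribute (`accuracy`) is sketched below. It is not how Esper evaluates the statement; the class `QocWindowAverager`, its methods, and the explicit millisecond timestamps are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sliding time window that averages accuracy per (publisherID, serviceName).
class QocWindowAverager {
    private static final class Sample {
        final long timestampMs;
        final double accuracy;

        Sample(long timestampMs, double accuracy) {
            this.timestampMs = timestampMs;
            this.accuracy = accuracy;
        }
    }

    private final long windowMs;
    private final Map<String, Deque<Sample>> windows = new HashMap<>();

    QocWindowAverager(long windowMs) {
        this.windowMs = windowMs;
    }

    private static String key(String publisherID, String serviceName) {
        return publisherID + "|" + serviceName;
    }

    // Adds a reading, analogous to an event entering SensorDataMessage.win:time(TIME_WINDOW).
    void add(String publisherID, String serviceName, long timestampMs, double accuracy) {
        windows.computeIfAbsent(key(publisherID, serviceName), k -> new ArrayDeque<>())
               .addLast(new Sample(timestampMs, accuracy));
    }

    // Returns avg(accuracy) for the group over (nowMs - windowMs, nowMs], or NaN if empty.
    double averageAccuracy(String publisherID, String serviceName, long nowMs) {
        Deque<Sample> window = windows.get(key(publisherID, serviceName));
        if (window == null) {
            return Double.NaN;
        }
        // Evict samples that have fallen out of the time window.
        while (!window.isEmpty() && window.peekFirst().timestampMs <= nowMs - windowMs) {
            window.pollFirst();
        }
        if (window.isEmpty()) {
            return Double.NaN;
        }
        double sum = 0;
        for (Sample s : window) {
            sum += s.accuracy;
        }
        return sum / window.size();
    }
}
```

Extending the sketch to the other attributes in the `select` list would mean keeping one running value per attribute in each `Sample`; a CEP engine handles this, and incremental aggregation, on its own.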

```java
// creates the message
SensorDataMessage msg = new SensorDataMessage();
// fills the message properties
msg.setServiceName("TEMPERATURE");
msg.setServiceValue(38); // the value can be any Java type (String, Integer, Object, etc.)
msg.setAccuracy(0.025); // or any other QoC parameter
// obtains a reference to the default publisher and publishes the message
Publisher publisher = MHubCDDL.getInstance().getDefaultPublisher();
publisher.publish(msg);
```

3.7.3. QoC-Based Service Discovery

```java
// obtains references to the default publisher and subscriber
MHubCDDL mhubcddl = MHubCDDL.getInstance();
Publisher publisher = mhubcddl.getDefaultPublisher();
Subscriber subscriber = mhubcddl.getDefaultSubscriber();
// queries the location of publisher [email protected] with accuracy less than 5
String query = "serviceName = 'LOCATION' and publisherID = '[email protected]' and accuracy < 5";
// returnCode identifies the query and can be used to cancel the continuous query
int returnCode = publisher.query(QueryDestiny.LOCAL, QueryType.CONTINUOUS, query);
// creates the listener that receives query responses and subscribed messages
subscriber.setSubscriberListener(new ISubscriberListener() {
    @Override
    public void onMessageArrived(Message message) {
        // handles query responses
        if (message instanceof QueryResponseMessage) {
            QueryResponseMessage response = (QueryResponseMessage) message;
            // is this the response to the location query above?
            if (response.returnCode == returnCode) {
                // obtains the list of matching services from the response
                List<ServiceInformationMessage> services = response.getServiceInformationMessageList();
                // subscribes to every service in the response
                for (ServiceInformationMessage info : services) {
                    subscriber.subscribeServiceTopic(info.getTopic());
                }
            }
            return;
        }
        // handles subscribed messages
        if (message instanceof SensorDataMessage) {
            SensorDataMessage sensorDataMessage = (SensorDataMessage) message;
            // simulates showing the message on the UI
            showMessageOnUI(sensorDataMessage);
            // the message attributes can be read with, e.g.:
            // sensorDataMessage.getPublisherID();
            // sensorDataMessage.getServiceName();
            // sensorDataMessage.getServiceValue();
            // sensorDataMessage.getAccuracy();
            // sensorDataMessage.getSourceLocation();
        }
    }
});
// if, at any moment, the application wants to cancel the query above:
// publisher.cancelQuery(returnCode);
```
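Conceptually, the continuous query in this example selects the service descriptions that match a service name, a publisher, and a QoC bound. The plain-Java sketch below applies the same predicate to an in-memory list. `ServiceInfo`, `DiscoverySketch`, `locationQuery`, and `select` are hypothetical stand-ins for `ServiceInformationMessage` and the middleware's query engine, and the publisher IDs used with it are placeholders.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical, minimal stand-in for ServiceInformationMessage.
class ServiceInfo {
    final String publisherID;
    final String serviceName;
    final double accuracy;

    ServiceInfo(String publisherID, String serviceName, double accuracy) {
        this.publisherID = publisherID;
        this.serviceName = serviceName;
        this.accuracy = accuracy;
    }
}

class DiscoverySketch {
    // Same condition as: serviceName = 'LOCATION' and publisherID = <id> and accuracy < <bound>
    static Predicate<ServiceInfo> locationQuery(String publisherID, double accuracyBound) {
        return s -> s.serviceName.equals("LOCATION")
                && s.publisherID.equals(publisherID)
                && s.accuracy < accuracyBound;
    }

    // One-shot evaluation of the predicate over the currently known services.
    static List<ServiceInfo> select(List<ServiceInfo> services, Predicate<ServiceInfo> query) {
        List<ServiceInfo> result = new ArrayList<>();
        for (ServiceInfo s : services) {
            if (query.test(s)) {
                result.add(s);
            }
        }
        return result;
    }
}
```

A continuous query differs from this one-shot `select` in that the middleware keeps re-evaluating the condition as new service descriptions arrive and pushes matches to the subscriber listener.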

3.7.4. QoC-Based Event Monitoring

```java
// first, defines a monitor listener
IMonitorListener monitorListener = new IMonitorListener() {
    @Override
    public void onEvent(final Message message) {
        // the monitoring event just happened; react to it
        doSomething(message);
    }
};
// monitoring rule: two consecutive TEMPERATURE readings from the same publisher
// whose accuracy values differ
String cepRule = "select * from pattern [every A=SensorDataMessage"
        + "(serviceName = 'TEMPERATURE', publisherID = '[email protected]')"
        + " -> B=SensorDataMessage(serviceName = A.serviceName, publisherID = A.publisherID)]"
        + " where A.accuracy <> B.accuracy";
// ruleId can be used to remove the rule (see the comment below)
int ruleId = subscriber.getMonitor().addRule(cepRule, monitorListener);
// cancels the monitoring with the statement below
// subscriber.getMonitor().removeRule(ruleId);
```
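The monitoring rule fires when two consecutive temperature readings from the same publisher report different accuracy values. The plain-Java sketch below approximates that detection logic for one service name and tracks every publisher separately; the rule above additionally restricts it to one publisher, which a caller could do before invoking the monitor. `AccuracyChangeListener`, `AccuracyChangeMonitor`, and `onReading` are hypothetical names, and the middleware itself evaluates the rule with its CEP engine.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical callback invoked when a publisher's accuracy changes.
interface AccuracyChangeListener {
    void onChange(String publisherID, double oldAccuracy, double newAccuracy);
}

// Hypothetical detector approximating "A followed by B from the same publisher,
// where A.accuracy <> B.accuracy" for a single service name.
class AccuracyChangeMonitor {
    private final String serviceName;
    private final AccuracyChangeListener listener;
    // Last accuracy seen for each publisher of the monitored service.
    private final Map<String, Double> lastAccuracy = new HashMap<>();

    AccuracyChangeMonitor(String serviceName, AccuracyChangeListener listener) {
        this.serviceName = serviceName;
        this.listener = listener;
    }

    void onReading(String publisherID, String service, double accuracy) {
        if (!serviceName.equals(service)) {
            return; // readings of other services are ignored
        }
        Double previous = lastAccuracy.put(publisherID, accuracy);
        // Fire only when a previous reading exists and its accuracy differs.
        if (previous != null && previous.doubleValue() != accuracy) {
            listener.onChange(publisherID, previous, accuracy);
        }
    }
}
```

Keeping only the last value per publisher mirrors the `every A -> B` pairing of consecutive events without storing the full event history.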

3.7.5. QoC-Based Event Filtering

```java
// subscribes to the temperature of any publisher
subscriber.subscribeSensorDataTopicByServiceName("TEMPERATURE");
// the application will only receive context information with accuracy greater than 0.9
subscriber.setFilter("select * from SensorDataMessage where accuracy > 0.9");
// disables filtering and receives all information again
subscriber.clearFilter();
```

3.7.6. QoS Configuration

```java
// sets the client ID that will be used by all connections
MHubCDDL.getInstance().setClientId("[email protected]");
// obtains the connection factory instance
ConnectionFactory connectionFactory = ConnectionFactory.getInstance();
// creates a connection instance
Connection connection = connectionFactory.createConnection();
// enables the intermediate buffer
connection.setEnableIntermediateBuffer(true);
// instantiates the reliability policy
ReliabilityQoS reliabilityQoS = new ReliabilityQoS();
// configures reliability at level 0 (at most once)
reliabilityQoS.setKind(ReliabilityQoS.AT_MOST_ONCE);
// gets the default publisher instance
Publisher publisher = DefaultPublisher.getInstance();
// changes the publisher's reliability policy
publisher.setReliabilityQoS(reliabilityQoS);
// adds the connection to the publisher
publisher.addConnection(connection);
// connects
connection.connect();
```

4. Implementing an Application with QoC Requirements

4.1. System Architecture and Key Features

4.2. Discussion on the Case Study

5. Evaluation and Results

5.1. Evaluation of the Context Information Delivery Time to the Consumer

- Communication Time: the sum of the delay imposed by network communication (or by the local bus) and the message processing time in the local or remote MQTT broker. Communication time between publisher and subscriber is directly affected by the delivery reliability level used by publishers and subscribers, as well as by external factors over which the middleware has no control, such as bandwidth, latency, and network packet loss rate.

- Middleware Processing Time: the sum of the times that context information spends in transit through, or being processed by, the middleware components on the producer side (from the arrival of the data at the publisher until the message is sent to the MQTT broker) and on the consumer side (from the arrival of the message at the subscriber until the data is delivered to the application). It includes marshaling and unmarshaling, QoI evaluation, and the application of QoS policies according to the parameters set by publishers and subscribers.

- Delivery Total Time: the sum of the communication time between publisher and subscriber and the middleware processing time, that is, the time elapsed between the arrival of the data at the gateway and its effective delivery to the consumer application.
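The three metrics can be expressed in terms of four per-message timestamps. The sketch below assumes that all timestamps come from the same clock (for instance, publisher and subscriber running on the same device); the class `DeliveryTimeline` and its field names are hypothetical, and the numbers in the usage note are made up for illustration.

```java
// Hypothetical per-message timestamps in milliseconds, all taken from the same clock.
class DeliveryTimeline {
    final long dataAtPublisher;        // data arrives at the publisher
    final long sentToBroker;           // publisher sends the message to the MQTT broker
    final long messageAtSubscriber;    // message arrives at the subscriber
    final long deliveredToApplication; // subscriber delivers the data to the application

    DeliveryTimeline(long dataAtPublisher, long sentToBroker,
                     long messageAtSubscriber, long deliveredToApplication) {
        this.dataAtPublisher = dataAtPublisher;
        this.sentToBroker = sentToBroker;
        this.messageAtSubscriber = messageAtSubscriber;
        this.deliveredToApplication = deliveredToApplication;
    }

    // Producer-side plus consumer-side time spent inside the middleware.
    long middlewareProcessingTime() {
        return (sentToBroker - dataAtPublisher) + (deliveredToApplication - messageAtSubscriber);
    }

    // Network (or local bus) delay plus broker processing, between sending and arrival.
    long communicationTime() {
        return messageAtSubscriber - sentToBroker;
    }

    // Equals deliveredToApplication - dataAtPublisher.
    long deliveryTotalTime() {
        return communicationTime() + middlewareProcessingTime();
    }
}
```

For example, with timestamps 1000, 1020, 1085, and 1100 ms, the middleware processing time is 20 + 15 = 35 ms, the communication time is 65 ms, and the delivery total time is 100 ms, which matches the end-to-end interval of 1100 − 1000 ms.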

- Wearable Sensor: used to generate the context data. The Zephyr Bioharness 3 device, which has several sensors, was used. However, the S2PA was set up to collect only heart rate data. The data generation frequency of this sensor is approximately 1 Hz.

- Smartphone: used to run the test application. The smartphone is an LG-K430F with 2 GB of RAM, a quad-core 1.2 Gigahertz (GHz) processor, the Android 6.0 operating system, Bluetooth, and 802.11 Wifi.

- Notebook: used to run the test application monitoring tool (Android Device Monitor) and to run a server broker in the experiments using the LAN. A DELL Inspiron 15-5557 with 16 GB of RAM, an Intel Core i7 2.5 GHz processor, and the Ubuntu 16.04 LTS operating system was used.

- LSDi Server: computer used to run a server broker in the experiments using the Internet. It has 32 GB of RAM and a 1.7 GHz Intel Xeon processor. This broker can be accessed at www.lsdi.ufma.br:1883.

- Experiments with reliability set to level 0: in this experiment, the at most once policy was used.

- Experiments with reliability set to level 1: in this experiment, the at least once policy was used.

- Experiments with reliability set to level 2: in this experiment, the exactly once policy was used.

- Experiments without network usage: in this experiment, no network connection was used. Publisher and subscriber were connected to a micro broker running on the same device as the test application (the smartphone).

- Experiments using local network: in this experiment, a Wireless Local Area Network (WLAN) was used. Publisher and subscriber were connected to an external server broker running on the notebook, which also acted as the network access point. The WLAN consisted only of the smartphone and the notebook, with no connection to the Internet.

- Experiments using fixed Internet: in this experiment, a local Wifi network was used, with Internet access provided by a wireless Asymmetric Digital Subscriber Line (ADSL) router/modem. Both publisher and subscriber were connected to a server broker running on the LSDi server.

- Experiments using mobile Internet: in this experiment, a 4G connection was used. Both publisher and subscriber were connected to a server broker running on the LSDi server.

5.2. Evaluation of the Message Delivery Reliability

- Low-intermittency scenario: connectivity to the broker was programmatically interrupted at intervals drawn at random from a uniform distribution between 120 and 180 s.

- High-intermittency scenario: connectivity to the broker was programmatically interrupted at intervals drawn at random from a uniform distribution between 12 and 18 s, so interruptions were 10 times more frequent than in the previous scenario.
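The two scenarios differ only in the range from which the time between connectivity interruptions is drawn. A minimal sketch of such a schedule follows, assuming a uniform draw in seconds between the given bounds; `InterruptionSchedule` and its factory methods are hypothetical, since the actual test harness is not described here.

```java
import java.util.Random;

// Hypothetical generator of intervals between programmatic connectivity interruptions.
class InterruptionSchedule {
    private final double minSeconds;
    private final double maxSeconds;
    private final Random random;

    InterruptionSchedule(double minSeconds, double maxSeconds, long seed) {
        this.minSeconds = minSeconds;
        this.maxSeconds = maxSeconds;
        this.random = new Random(seed);
    }

    // Uniformly distributed interval between minSeconds and maxSeconds.
    double nextIntervalSeconds() {
        return minSeconds + (maxSeconds - minSeconds) * random.nextDouble();
    }

    static InterruptionSchedule lowIntermittency(long seed) {
        return new InterruptionSchedule(120, 180, seed);
    }

    static InterruptionSchedule highIntermittency(long seed) {
        return new InterruptionSchedule(12, 18, seed);
    }
}
```

Since the mean of a uniform distribution on [a, b] is (a + b)/2, the expected interval is 150 s in the low-intermittency scenario and 15 s in the high-intermittency one, which is where the factor of 10 comes from.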

5.3. Evaluation of Monitoring Time

5.4. Evaluation of Memory Consumption

5.5. Evaluation of Battery Consumption

6. Comparative Analysis and Discussions

7. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Middleware | Quality of Context Provisioning | Quality of Context Monitoring | Heterogeneous Sensors Management | Reliable Data Delivery in Mobility Scenarios |
|---|---|---|---|---|
| AWARENESS | Focus on QoI, but with some support for QoS. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| COSMOS | Focus on QoI only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| COPAL | Greater focus on QoI, but with some QoS support. | Not addressed. | Provides an API for implementing specific adapters/wrappers for the sensor drivers; provides a sensor management service whose service description is based on UPnP. | Not addressed. |
| INCOME | Focus on QoI only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| SALES | Focus on QoS only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |

| Configuration | Reliability | Average (ms) | Maximum (ms) | Minimum (ms) | Standard Deviation (ms) |
|---|---|---|---|---|---|
| Without Network (Micro Broker) | 0 | 62.25 | 66.61 | 59.60 | 3.20 |
| Without Network (Micro Broker) | 1 | 186.17 | 194.99 | 177.92 | 6.45 |
| Without Network (Micro Broker) | 2 | 236.68 | 241.77 | 232.87 | 3.35 |
| Average of Experiments without Network Usage | | 161.70 | 167.79 | 156.80 | 4.33 |
| Isolated Local Network | 0 | 74.37 | 80.79 | 71.22 | 3.94 |
| Isolated Local Network | 1 | 92.51 | 99.92 | 88.59 | 4.60 |
| Isolated Local Network | 2 | 126.23 | 131.18 | 121.82 | 4.48 |
| Average of Experiments using Isolated Local Network | | 97.70 | 103.97 | 93.88 | 4.34 |
| Fixed Internet (Wifi + ADSL) | 0 | 233.35 | 241.40 | 228.61 | 5.56 |
| Fixed Internet (Wifi + ADSL) | 1 | 417.04 | 490.48 | 375.22 | 46.57 |
| Fixed Internet (Wifi + ADSL) | 2 | 681.42 | 711.17 | 666.79 | 17.64 |
| Average of Experiments using Fixed Internet | | 443.94 | 481.01 | 423.54 | 23.26 |
| Mobile Internet (4G) | 0 | 262.55 | 273.69 | 254.81 | 7.98 |
| Mobile Internet (4G) | 1 | 472.12 | 483.05 | 456.64 | 9.98 |
| Mobile Internet (4G) | 2 | 807.14 | 845.54 | 782.28 | 25.36 |
| Average of Experiments using the 4G Internet | | 513.94 | 534.09 | 497.91 | 14.44 |
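The "Average of Experiments" rows appear to be unweighted column-wise means over the three reliability levels. This includes the standard-deviation column, which is therefore a mean of per-level deviations rather than a pooled standard deviation. The sketch below recomputes these rows from the per-level values of the table above. Its results agree with the published summary rows to within 0.01 ms, and the small residue is presumably due to rounding. The names `LATENCIES` and `summary_row` are illustrative and not part of the middleware.

```python
from statistics import mean

# Per-level rows from the latency table above, for reliability levels 0, 1 and 2.
# Columns: average, maximum, minimum, standard deviation (all in ms).
LATENCIES = {
    "Without Network (Micro Broker)": [
        (62.25, 66.61, 59.60, 3.20),
        (186.17, 194.99, 177.92, 6.45),
        (236.68, 241.77, 232.87, 3.35),
    ],
    "Isolated Local Network": [
        (74.37, 80.79, 71.22, 3.94),
        (92.51, 99.92, 88.59, 4.60),
        (126.23, 131.18, 121.82, 4.48),
    ],
    "Fixed Internet (Wifi + ADSL)": [
        (233.35, 241.40, 228.61, 5.56),
        (417.04, 490.48, 375.22, 46.57),
        (681.42, 711.17, 666.79, 17.64),
    ],
    "Mobile Internet (4G)": [
        (262.55, 273.69, 254.81, 7.98),
        (472.12, 483.05, 456.64, 9.98),
        (807.14, 845.54, 782.28, 25.36),
    ],
}


def summary_row(rows):
    """Column-wise unweighted mean over the reliability levels, rounded to 2 decimals."""
    return tuple(round(mean(column), 2) for column in zip(*rows))


if __name__ == "__main__":
    for configuration, rows in LATENCIES.items():
        print(f"{configuration}: {summary_row(rows)}")
```

The same helper applies unchanged to the other two latency tables, since they share this layout.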

| Configuration | Reliability | Average (ms) | Maximum (ms) | Minimum (ms) | Standard Deviation (ms) |
|---|---|---|---|---|---|
| Without Network (Micro Broker) | 0 | 37.21 | 42.05 | 34.01 | 4.21 |
| Without Network (Micro Broker) | 1 | 31.34 | 36.91 | 26.15 | 3.88 |
| Without Network (Micro Broker) | 2 | 34.95 | 37.66 | 32.84 | 2.26 |
| Average of Experiments without Network Usage | | 34.50 | 38.87 | 31.00 | 3.45 |
| Isolated Local Network | 0 | 34.66 | 37.20 | 32.28 | 2.13 |
| Isolated Local Network | 1 | 37.14 | 39.44 | 34.36 | 1.83 |
| Isolated Local Network | 2 | 36.46 | 42.37 | 34.24 | 3.39 |
| Average of Experiments using Isolated Local Network | | 36.09 | 39.67 | 33.63 | 2.45 |
| Fixed Internet (Wifi + ADSL) | 0 | 33.79 | 37.34 | 31.75 | 2.31 |
| Fixed Internet (Wifi + ADSL) | 1 | 34.60 | 36.20 | 33.68 | 0.97 |
| Fixed Internet (Wifi + ADSL) | 2 | 35.97 | 40.06 | 33.28 | 3.11 |
| Average of Experiments using Fixed Internet | | 34.79 | 37.87 | 32.90 | 2.13 |
| Mobile Internet (4G) | 0 | 36.42 | 42.83 | 34.27 | 3.64 |
| Mobile Internet (4G) | 1 | 34.60 | 36.20 | 33.68 | 0.97 |
| Mobile Internet (4G) | 2 | 34.37 | 36.92 | 32.14 | 2.35 |
| Average of Experiments using the 4G Internet | | 35.13 | 38.65 | 33.36 | 2.32 |

| Configuration | Reliability | Average (ms) | Maximum (ms) | Minimum (ms) | Standard Deviation (ms) |
|---|---|---|---|---|---|
| Without Network (Micro Broker) | 0 | 99.46 | 108.19 | 93.60 | 7.35 |
| Without Network (Micro Broker) | 1 | 217.51 | 225.84 | 204.07 | 8.89 |
| Without Network (Micro Broker) | 2 | 271.64 | 278.91 | 266.51 | 5.31 |
| Average of Experiments without Network Usage | | 196.20 | 204.31 | 188.06 | 7.18 |
| Isolated Local Network | 0 | 112.46 | 120.45 | 105.30 | 6.52 |
| Isolated Local Network | 1 | 128.60 | 134.85 | 123.47 | 4.24 |
| Isolated Local Network | 2 | 162.69 | 167.02 | 156.37 | 4.49 |
| Average of Experiments using Isolated Local Network | | 134.58 | 140.77 | 128.38 | 5.08 |
| Fixed Internet (Wifi + ADSL) | 0 | 267.14 | 278.74 | 260.41 | 7.73 |
| Fixed Internet (Wifi + ADSL) | 1 | 440.07 | 472.06 | 412.94 | 24.68 |
| Fixed Internet (Wifi + ADSL) | 2 | 715.80 | 743.30 | 703.24 | 16.59 |
| Average of Experiments using Fixed Internet | | 474.33 | 498.03 | 458.86 | 16.33 |
| Mobile Internet (4G) | 0 | 298.97 | 309.12 | 289.59 | 9.24 |
| Mobile Internet (4G) | 1 | 506.72 | 519.25 | 490.32 | 10.77 |
| Mobile Internet (4G) | 2 | 844.15 | 882.05 | 822.06 | 23.86 |
| Average of Experiments using the 4G Internet | | 549.95 | 570.14 | 533.99 | 14.62 |

| Network Configuration | Reliability | Interval between Disconnections (s) | Average Loss Rate | Maximum Loss Rate | Minimum Loss Rate | Standard Deviation of Loss Rate |
|---|---|---|---|---|---|---|
| Isolated Local Network | 0 | 120–180 | 3.8% | 4.2% | 3.6% | 0.3% |
| Isolated Local Network | 0 | 12–18 | 23.8% | 28.6% | 21.1% | 2.9% |
| Isolated Local Network | 1 | 120–180 | 0.0% | 0.0% | 0.0% | 0.0% |
| Isolated Local Network | 1 | 12–18 | 0.0% | 0.0% | 0.0% | 0.0% |
| Isolated Local Network | 2 | 120–180 | 0.0% | 0.0% | 0.0% | 0.0% |
| Isolated Local Network | 2 | 12–18 | 0.0% | 0.0% | 0.0% | 0.0% |
| Internet (Wifi + ADSL) | 0 | 120–180 | 3.8% | 3.9% | 3.5% | 0.2% |
| Internet (Wifi + ADSL) | 0 | 12–18 | 35.5% | 36.3% | 33.9% | 1.0% |
| Internet (Wifi + ADSL) | 1 | 120–180 | 0.0% | 0.0% | 0.0% | 0.0% |
| Internet (Wifi + ADSL) | 1 | 12–18 | 0.0% | 0.0% | 0.0% | 0.0% |
| Internet (Wifi + ADSL) | 2 | 120–180 | 0.0% | 0.0% | 0.0% | 0.0% |
| Internet (Wifi + ADSL) | 2 | 12–18 | 0.0% | 0.0% | 0.0% | 0.0% |
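The loss-rate table shows messages lost at reliability level 0 but none at levels 1 and 2, and more frequent disconnections increase the loss at level 0. The toy model below illustrates one plausible mechanism behind that pattern. It assumes that level 0 behaves as fire-and-forget and that the higher levels hold messages in an intermediate buffer across disconnections. That mapping is an assumption: the table alone does not say how the levels are implemented. The function `loss_rate` and its parameters are hypothetical. The model does not attempt to reproduce the measured percentages.

```python
def loss_rate(duration_ms, period_ms, up_ms, down_ms, buffered):
    """Toy model of delivery loss under periodic disconnections (hypothetical, not CDDL code).

    A publisher emits one message every `period_ms` while the link alternates between
    `up_ms` connected and `down_ms` disconnected. With buffered=False a message produced
    during an outage is dropped. With buffered=True it waits in a local buffer and is
    flushed at the next reconnection. Messages still buffered when the run ends count as lost.
    """
    cycle_ms = up_ms + down_ms
    backlog = 0  # stands in for an intermediate store-and-forward buffer (unbounded here)
    sent = delivered = 0
    for t in range(0, duration_ms, period_ms):
        sent += 1
        if t % cycle_ms < up_ms:
            # Link is up: flush the backlog, then deliver the new message.
            delivered += backlog + 1
            backlog = 0
        elif buffered:
            backlog += 1
        # Otherwise the link is down and nothing is buffered, so the message is lost.
    return (sent - delivered) / sent


if __name__ == "__main__":
    for buffered in (False, True):
        print(f"buffered={buffered}: {loss_rate(60_000, 100, 15_000, 1_000, buffered):.1%}")
```

Consider a one-minute run with a 1 s outage every 16 s. The unbuffered variant loses 30 of 600 messages (5%), while the buffered variant loses none. A real buffer is finite, so very long outages could still overflow it.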

| | 5 min | 10 min | 15 min | 20 min | 25 min | Average | Standard Deviation |
|---|---|---|---|---|---|---|---|
| QoC Evaluator | 263 | 262 | 268 | 269 | 270 | 266 | 2.77 |
| Monitor | 213 | 213 | 216 | 219 | 280 | 214 | 3.14 |

| | Minimum | Maximum | Average | Standard Deviation |
|---|---|---|---|---|
| Battery Consumption | 15% | 16% | 15.6% | 0.55 |

| Middleware | Quality of Context Provisioning | Quality of Context Monitoring | Heterogeneous Sensors Management | Reliable Data Delivery in Mobility Scenarios |
|---|---|---|---|---|
| M-Hub/CDDL | Provides multiple QoI parameters and QoS policies. | Monitors various QoI parameters and QoS policies. | Provides an API for implementing specific adapters/wrappers for sensor drivers; provides a generic and extensible sensor management service with native support for Bluetooth Classic and BLE technologies; supports dynamic loading of sensor drivers from web software repositories. | Supports multiple reliability levels; provides an intermediate buffer that increases delivery reliability; also supports disconnected operation and IP address changes. |
| AWARENESS | Focus on QoI, but with some support for QoS. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| COSMOS | Focus on QoI only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| COPAL | Greater focus on QoI, but with some QoS support. | Not addressed. | Provides an API for implementing specific adapters/wrappers for the sensor drivers; provides a sensor management service whose service description is based on UPnP. | Not addressed. |
| INCOME | Focus on QoI only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
| SALES | Focus on QoS only. | Not addressed. | Provides only an API for implementing adapters/wrappers for the sensor drivers. | Not addressed. |
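QoC monitoring means that the application itself defines the monitoring events it wants to be notified about when context quality changes at runtime. The sketch below illustrates this idea under loudly stated assumptions. `QoCMonitor`, `QoCRule`, `ContextSample`, and the two QoI attributes (`age_ms` for freshness, `precision`) are hypothetical names chosen for illustration. They are not the M-Hub/CDDL API. The heart-rate readings echo the patient-monitoring case study.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ContextSample:
    """One context reading plus two illustrative QoI attributes (hypothetical names)."""
    sensor: str
    value: float
    age_ms: int        # freshness: time elapsed since the reading was produced
    precision: float   # illustrative QoI score in [0, 1]


@dataclass
class QoCRule:
    """An application-defined monitoring event: fires when `violated` returns True."""
    name: str
    violated: Callable[[ContextSample], bool]


Listener = Callable[[str, ContextSample], None]


@dataclass
class QoCMonitor:
    rules: List[QoCRule] = field(default_factory=list)
    listeners: List[Listener] = field(default_factory=list)

    def add_rule(self, rule: QoCRule) -> None:
        self.rules.append(rule)

    def subscribe(self, listener: Listener) -> None:
        self.listeners.append(listener)

    def on_sample(self, sample: ContextSample) -> None:
        # Evaluate every rule against the incoming sample and notify listeners of violations.
        for rule in self.rules:
            if rule.violated(sample):
                for listener in self.listeners:
                    listener(rule.name, sample)


if __name__ == "__main__":
    monitor = QoCMonitor()
    monitor.add_rule(QoCRule("stale-data", lambda s: s.age_ms > 500))
    monitor.add_rule(QoCRule("low-precision", lambda s: s.precision < 0.8))
    monitor.subscribe(lambda name, s: print(f"QoC event {name} from {s.sensor}"))
    monitor.on_sample(ContextSample("heart-rate", 72.0, age_ms=120, precision=0.95))
    monitor.on_sample(ContextSample("heart-rate", 75.0, age_ms=900, precision=0.70))
```

Keeping rules as plain predicates lets each application decide which quality variations matter to it, rather than the middleware fixing the thresholds.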

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gomes, B.D.T.P.; Muniz, L.C.M.; Da Silva e Silva, F.J.; Dos Santos, D.V.; Lopes, R.F.; Coutinho, L.R.; Carvalho, F.O.; Endler, M. A Middleware with Comprehensive Quality of Context Support for the Internet of Things Applications. Sensors 2017, 17, 2853. https://doi.org/10.3390/s17122853


Gomes, Berto De Tácio Pereira, Luiz Carlos Melo Muniz, Francisco José Da Silva e Silva, Davi Viana Dos Santos, Rafael Fernandes Lopes, Luciano Reis Coutinho, Felipe Oliveira Carvalho, and Markus Endler. 2017. "A Middleware with Comprehensive Quality of Context Support for the Internet of Things Applications" Sensors 17, no. 12: 2853. https://doi.org/10.3390/s17122853