1. Introduction

Motor imagery (MI) brain–computer interfaces (BCIs) [1] are significant for stroke patients: they can help in the recovery of damaged nerves and play an important role in assisting rehabilitation training. The applications of BCIs also extend to navigation, healthcare, military services, robotics, virtual gaming, communication, and control [2,3]. The classification accuracy of motor imagery largely determines the performance of a BCI system. In EEG signal processing, the high dimensionality of the features is a technical challenge. Redundant features must be eliminated: they not only add overhead in space complexity but may also include outliers, thereby reducing classification accuracy [4].

Numerous feature selection and dimensionality reduction approaches have been proposed, including principal component analysis [5], independent component analysis [6], sequential forward search [7], and kernel principal component analysis [8]. These methods can significantly reduce the number of features, but difficulties remain: even when the retained components explain enough variance, the classification accuracy is often still unsatisfactory, probably because these methods cannot remove redundant features.
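To make this limitation concrete, here is a minimal NumPy sketch of variance-threshold PCA. The 95% threshold, the function name `pca_reduce`, and the synthetic data are illustrative assumptions, not taken from the cited works. The number of components is chosen by cumulative explained variance, yet each retained component is a linear combination of all original features, so no individual feature is actually discarded.

```python
import numpy as np

def pca_reduce(X, var_threshold=0.95):
    """Project X (n_samples x n_features) onto the fewest principal components
    whose cumulative explained variance reaches var_threshold."""
    Xc = X - X.mean(axis=0)                        # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)            # variance ratio per component
    k = int(np.searchsorted(np.cumsum(explained), var_threshold)) + 1
    k = min(k, len(S))
    components = Vt[:k]                            # k x n_features loading matrix
    return Xc @ components.T, components, explained[:k]

# Synthetic example: 10 features that are noisy mixtures of 3 latent sources.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
X = np.hstack([latent, latent @ rng.normal(size=(3, 7))
               + 0.01 * rng.normal(size=(200, 7))])
Z, comps, ev = pca_reduce(X)
# Number of original features with a non-negligible loading in each component
print(Z.shape, np.count_nonzero(np.abs(comps) > 1e-6, axis=1))
```

The reduced matrix `Z` is small, but computing it still requires every original feature, which is why variance-based reduction does not by itself remove redundant measurements.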

Because of the high dimensionality of the feature vector, the computational complexity can increase dramatically, and redundant features lead to lower classification accuracy. To address this problem, a genetic algorithm was applied in a motor imagery-based BCI system to shrink the feature vector and eliminate redundant features [9]. In another study, adaptive regression coefficients, asymmetric power spectrum, coherence, and phase locking values were extracted from MI EEG, and a genetic algorithm was used to select feature subsets from these features, with good results [10]. Other evolutionary algorithms used for feature selection include differential evolution [11], artificial bee colony [12], the firefly algorithm [13], and adaptive weight particle swarm optimization [14].
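The GA-based wrapper selection described above can be sketched as follows, assuming each chromosome is a binary mask over features and fitness is held-out accuracy minus a small per-feature penalty. The nearest-centroid classifier (a stand-in for a real classifier such as SRDA), the penalty weight, the operator choices, and all function names are hypothetical; this is not the exact procedure of [9] or [10].

```python
import numpy as np

def nearest_centroid_accuracy(Xtr, ytr, Xte, yte):
    """Held-out accuracy of a nearest-centroid classifier (illustrative stand-in)."""
    classes = np.unique(ytr)
    centroids = np.stack([Xtr[ytr == c].mean(axis=0) for c in classes])
    d = ((Xte[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return np.mean(classes[d.argmin(axis=1)] == yte)

def fitness(mask, data, penalty=0.01):
    """Wrapper fitness: accuracy minus a small cost for the fraction of features kept."""
    if not mask.any():
        return 0.0
    Xtr, ytr, Xte, yte = data
    acc = nearest_centroid_accuracy(Xtr[:, mask], ytr, Xte[:, mask], yte)
    return acc - penalty * mask.sum() / mask.size

def ga_select(data, pop_size=30, generations=40, p_mut=0.05, seed=0):
    rng = np.random.default_rng(seed)
    n_features = data[0].shape[1]
    pop = rng.random((pop_size, n_features)) < 0.5     # random binary masks
    for _ in range(generations):
        fit = np.array([fitness(ind, data) for ind in pop])
        elite = pop[fit.argmax()].copy()               # remember the best mask
        # Binary tournament selection
        a, b = rng.integers(pop_size, size=(2, pop_size))
        parents = np.where((fit[a] >= fit[b])[:, None], pop[a], pop[b])
        # Uniform crossover with a neighbouring parent
        partners = np.roll(parents, 1, axis=0)
        children = np.where(rng.random(parents.shape) < 0.5, partners, parents)
        children ^= rng.random(children.shape) < p_mut  # bit-flip mutation
        children[0] = elite                            # elitism
        pop = children
    fit = np.array([fitness(ind, data) for ind in pop])
    return pop[fit.argmax()], float(fit.max())

# Synthetic two-class data: only the first 3 of 20 features are informative.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, 200)
X = rng.normal(size=(200, 20))
X[:, :3] += 2.0 * y[:, None]
data = (X[:100], y[:100], X[100:], y[100:])
mask, best = ga_select(data)
print(np.flatnonzero(mask), round(best, 3))
```

Elitism keeps the best fitness from decreasing between generations; the penalty term is what pushes the search toward smaller subsets rather than merely accurate ones.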

The essential feature of a genetic algorithm (GA) lies in the global search of data for the group search strategy and evolutionary operator settings. Because of its generalizability, robustness, and easy operation, GAs have been widely used for feature selection [

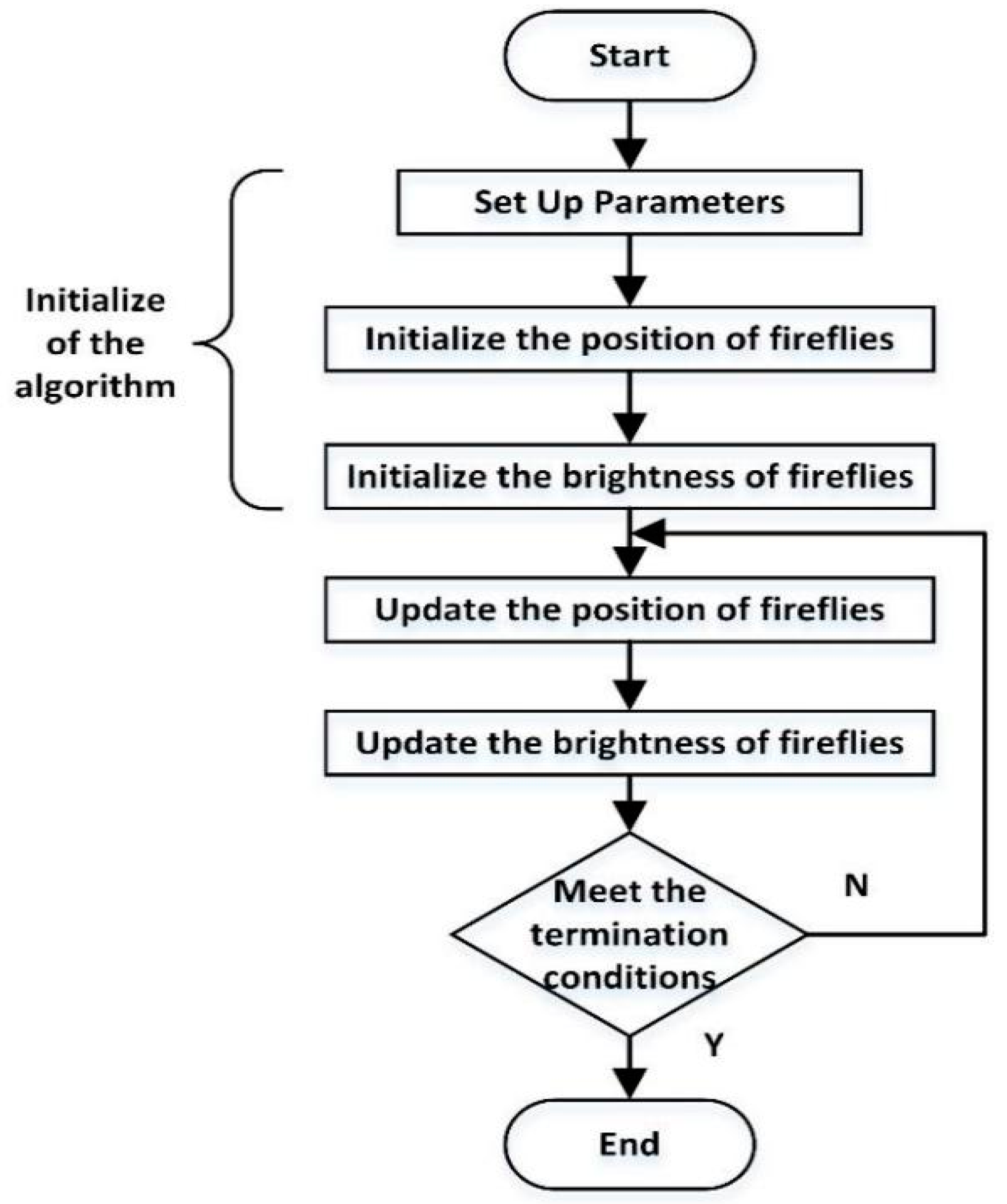

15]. However, they cannot take full advantage of local information, and it takes a long time to converge to the optimal solution. Because biological evolution is essentially a violent search algorithm, differential evolution is easy to fall into the local optimal solution as well as other intelligent algorithms. Adaptive weight particle swarm optimization is an improved algorithm for particle swarm optimization. It can adaptively update weights and ensure that the particles achieve good global search ability and fast convergence speed. The artificial bee colony algorithm has been successfully applied to many problems by simulating the behavior of bee colony intelligence in their search for food to optimize the actual optimization problem. The artificial bee colony algorithm has better global search ability, but its local search ability is weak. The firefly algorithm (FA) was proposed by Xin-She Yang in 2008, which simulates the principle of mutual attraction between individual fireflies to optimize the solution space [

16]. The parameters that need to be controlled are relatively few, and the precision optimization and convergence speed are high.
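The attraction mechanism of the basic FA can be sketched for continuous minimization: a firefly moves toward every brighter (lower-cost) firefly with attractiveness β0·exp(−γr²) plus a shrinking random walk. The parameter values (β0, γ, α and its decay, with γ set near 1/L² for a box of width L = 10) are illustrative assumptions rather than settings from this paper, and mapping positions to binary feature masks for selection is not shown.

```python
import numpy as np

def firefly_minimize(f, dim, n=20, iters=100, beta0=1.0, gamma=0.01,
                     alpha=0.2, alpha_decay=0.97, bounds=(-5.0, 5.0), seed=0):
    """Minimize f over a box with the basic firefly algorithm.

    Returns the best position, its cost, and the best cost after each iteration.
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    X = rng.uniform(lo, hi, size=(n, dim))          # firefly positions
    cost = np.array([f(x) for x in X])              # lower cost = brighter firefly
    history = [cost.min()]
    for _ in range(iters):
        for i in range(n):
            for j in range(n):
                if cost[j] < cost[i]:               # j is brighter, so i moves toward j
                    r2 = np.sum((X[i] - X[j]) ** 2)
                    beta = beta0 * np.exp(-gamma * r2)       # attraction decays with distance
                    step = alpha * (rng.random(dim) - 0.5)   # random walk term
                    X[i] = np.clip(X[i] + beta * (X[j] - X[i]) + step, lo, hi)
                    cost[i] = f(X[i])
        alpha *= alpha_decay                        # shrink the random walk over time
        history.append(cost.min())
    best = int(cost.argmin())
    return X[best], cost[best], history

# Usage: minimize the 2-D sphere function.
x_best, c_best, hist = firefly_minimize(lambda x: float(np.sum(x ** 2)), dim=2)
print(x_best, c_best)
```

Because the brightest firefly has no brighter neighbour, it never moves, so the best cost in `history` can only stay the same or improve.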

In summary, applying an evolutionary algorithm alone to feature selection has limited effect, because it is easily trapped in local optima. One study combined an evolutionary algorithm with Q-learning, proposing a feature selection method based on temporal difference learning and the firefly algorithm [13].
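The temporal difference update at the core of Q-learning can be sketched as a tabular rule, Q(s, a) ← Q(s, a) + η[r + λ·max Q(s′, ·) − Q(s, a)], with ε-greedy action selection. How [13] maps firefly search states and actions onto a Q-table is not described here, so the state and action indices, learning rate, and discount below are placeholders.

```python
import numpy as np

def q_update(Q, s, a, r, s_next, lr=0.1, discount=0.9):
    """One tabular Q-learning (temporal difference) step, applied in place."""
    td_target = r + discount * Q[s_next].max()   # bootstrapped estimate of return
    td_error = td_target - Q[s, a]
    Q[s, a] += lr * td_error                     # move the estimate toward the target
    return td_error

def epsilon_greedy(Q, s, eps, rng):
    """Explore with probability eps, otherwise pick the highest-valued action."""
    if rng.random() < eps:
        return int(rng.integers(Q.shape[1]))
    return int(np.argmax(Q[s]))

# Usage: 3 placeholder states, 2 placeholder actions.
Q = np.zeros((3, 2))
q_update(Q, s=0, a=1, r=1.0, s_next=2)   # Q[0, 1] becomes 0.1
```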

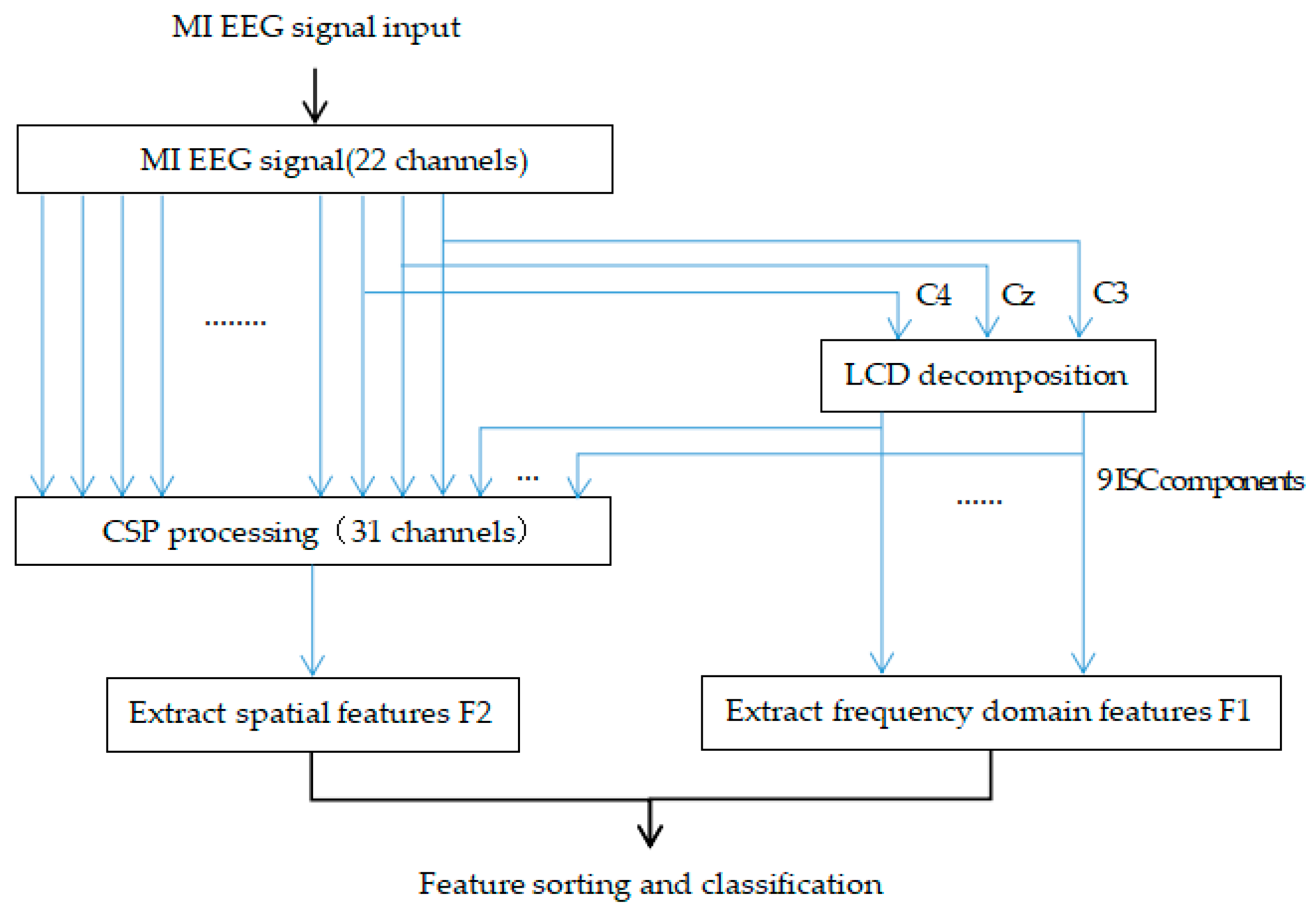

As a tool for parameter optimization, hypothesis testing, and game theory, learning automata have been applied in mathematical statistics, automatic control, communication networks, and economic systems. In this paper, we propose combining learning automata (LA) with the FA to optimize the parameters of the firefly algorithm and to avoid being trapped in local optima. Common spatial pattern (CSP) and local characteristic-scale decomposition (LCD) were used for MI EEG feature extraction. The combined FA-LA method was employed to select feature subsets, and spectral regression discriminant analysis (SRDA) was used for classification.
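The section does not specify which automaton update FA-LA uses, so the following is only a sketch of one standard scheme, the linear reward-inaction (L_R-I) automaton, choosing among a few candidate values of a firefly parameter. The candidate step sizes, the reward signal (in practice perhaps "did the best fitness improve?"), and the class name are hypothetical.

```python
import numpy as np

class LinearRewardInaction:
    """Learning automaton with the linear reward-inaction (L_R-I) update."""

    def __init__(self, n_actions, lr=0.1, seed=0):
        self.p = np.full(n_actions, 1.0 / n_actions)   # action probabilities
        self.lr = lr
        self.rng = np.random.default_rng(seed)

    def choose(self):
        """Sample an action index according to the current probabilities."""
        return int(self.rng.choice(len(self.p), p=self.p))

    def update(self, action, rewarded):
        if rewarded:
            # p_i <- p_i + lr * (1 - p_i); p_j <- (1 - lr) * p_j for j != i
            self.p *= 1.0 - self.lr
            self.p[action] += self.lr
            self.p /= self.p.sum()                      # guard against rounding drift
        # On a penalty, L_R-I leaves the probabilities unchanged ("inaction").

# Usage with hypothetical candidate FA step sizes and a placeholder reward.
candidate_alphas = [0.05, 0.1, 0.2, 0.4]
la = LinearRewardInaction(len(candidate_alphas))
for _ in range(500):
    k = la.choose()
    improved = (k == 2)        # placeholder: pretend only alpha = 0.2 helps
    la.update(k, improved)
print(candidate_alphas[int(la.p.argmax())], la.p.round(3))
```

Rewarded actions gain probability mass while penalized ones are simply left alone, which keeps the scheme simple at the cost of slower learning than reward-penalty variants.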

4. Conclusions

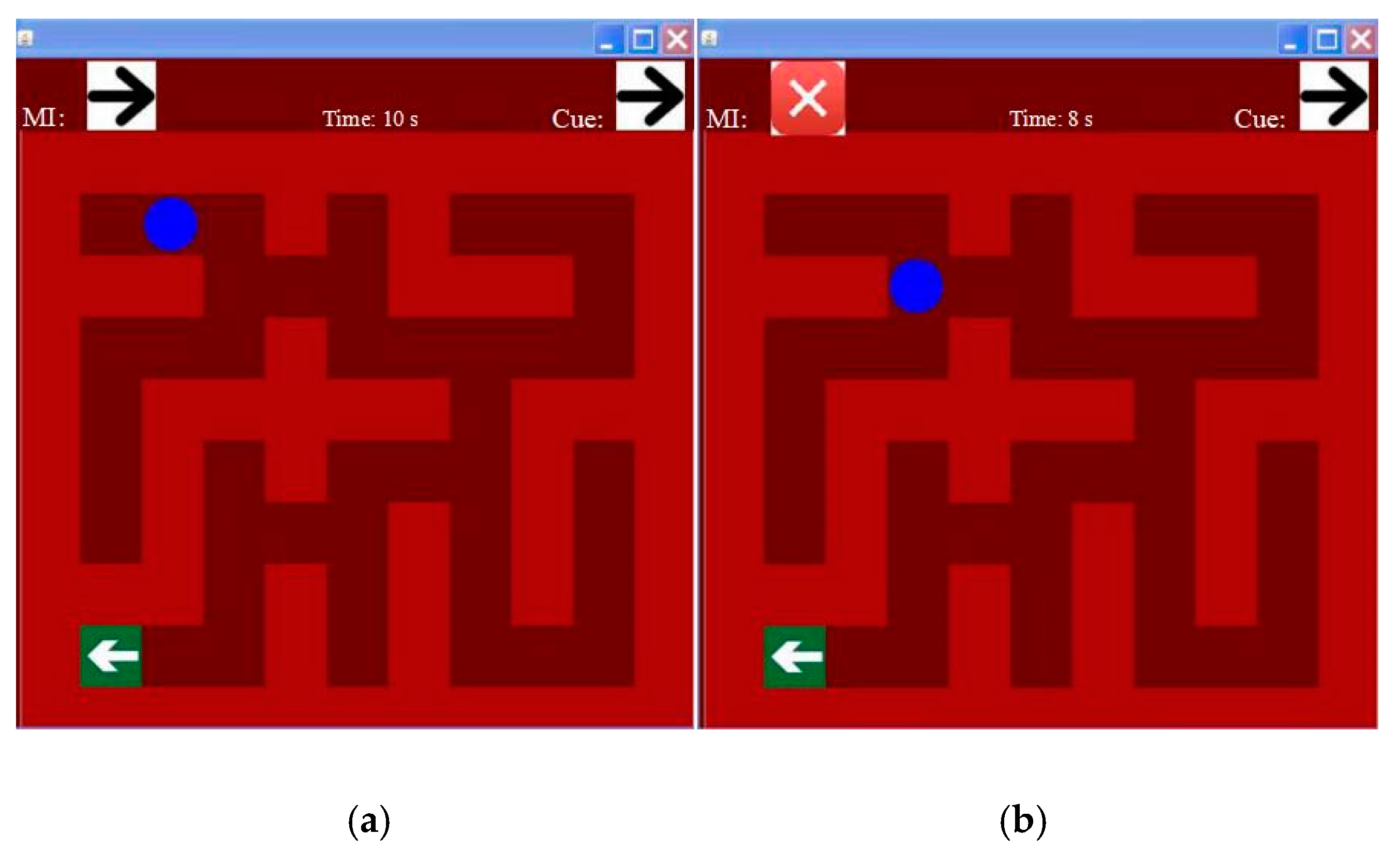

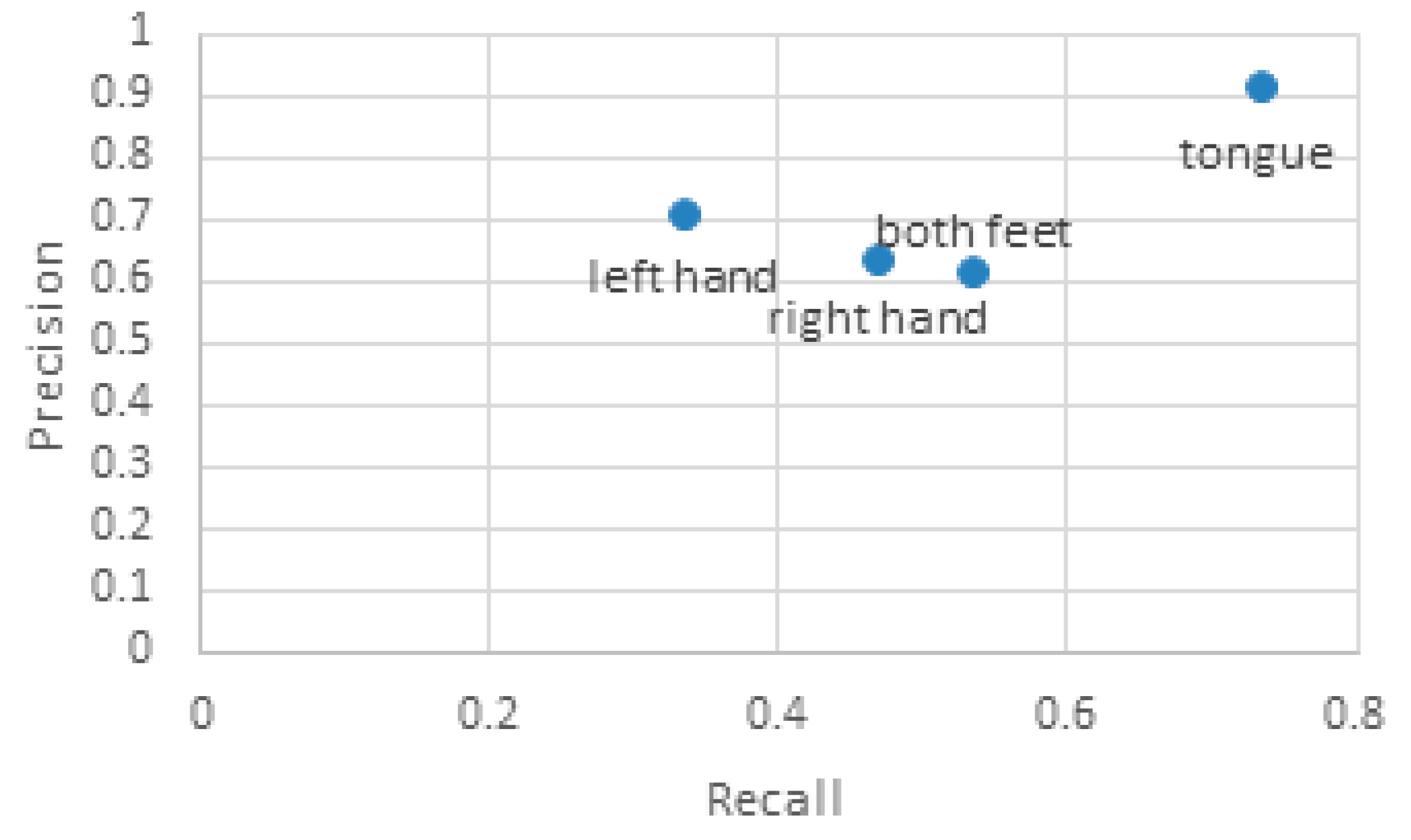

In this paper, we proposed a novel feature selection method based on the firefly algorithm and learning automata for four-class motor imagery EEG signal processing, designed to avoid entrapment in local optima. After features are extracted from the EEG data by combining CSP and LCD, the proposed FA-LA feature selection method is used to obtain the best feature subset, and SRDA is used for classification. Both the BCI Competition IV data and real-time data acquired in our own experiments were used to validate the proposed method. Experimental results show that FA-LA further improves the recognition accuracy of motor imagery EEG, reduces the dimensionality of the feature set, and is capable of operating in a real-time BCI system.
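For reference, the CSP step of the pipeline summarized above can be sketched for the two-class case with NumPy: spatial filters come from jointly diagonalizing the class covariance matrices, and features are normalized log-variances of the filtered signals. A four-class setting needs an extension such as one-versus-rest CSP, LCD features are not shown, and the function names, trial shapes, and synthetic data are illustrative assumptions.

```python
import numpy as np

def avg_cov(trials):
    """Mean trace-normalized spatial covariance of (n_trials, n_channels, n_samples)
    trials; assumes band-pass filtered, zero-mean signals."""
    covs = [x @ x.T / np.trace(x @ x.T) for x in trials]
    return np.mean(covs, axis=0)

def csp_filters(trials_a, trials_b, n_pairs=2):
    Ca, Cb = avg_cov(trials_a), avg_cov(trials_b)
    evals, evecs = np.linalg.eigh(Ca + Cb)
    P = np.diag(evals ** -0.5) @ evecs.T        # whitening: P (Ca + Cb) P^T = I
    d, B = np.linalg.eigh(P @ Ca @ P.T)         # eigenvalues in [0, 1], ascending
    W = B.T @ P                                 # rows are spatial filters
    # First rows favour class B variance, last rows favour class A variance
    idx = np.r_[np.arange(n_pairs), np.arange(len(d) - n_pairs, len(d))]
    return W[idx]

def csp_features(trials, W):
    """Normalized log-variance of the spatially filtered signals."""
    feats = []
    for x in trials:
        var = (W @ x).var(axis=1)
        feats.append(np.log(var / var.sum()))
    return np.array(feats)

# Synthetic trials: class A is strong on channel 0, class B on channel 3.
rng = np.random.default_rng(0)
A = rng.normal(size=(30, 4, 256)) * np.array([3.0, 1, 1, 1])[None, :, None]
B = rng.normal(size=(30, 4, 256)) * np.array([1.0, 1, 1, 3])[None, :, None]
W = csp_filters(A, B, n_pairs=1)
FA, FB = csp_features(A, W), csp_features(B, W)
```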

In future work, we will further improve the signal preprocessing method to remove ocular (eye) artifacts from the EEG signal. Detecting the presence of eye artifacts and removing them adaptively are critical research issues. Our longer-term goal is to apply this method to the real-time control of devices such as robotic arms, which would help in the rehabilitation of patients with functional paralysis.