Single-Shot Dense Depth Sensing with Color Sequence Coded Fringe Pattern

Abstract

1. Introduction

2. Mathematical Model for Sequence Encoding

3. Overview of the System

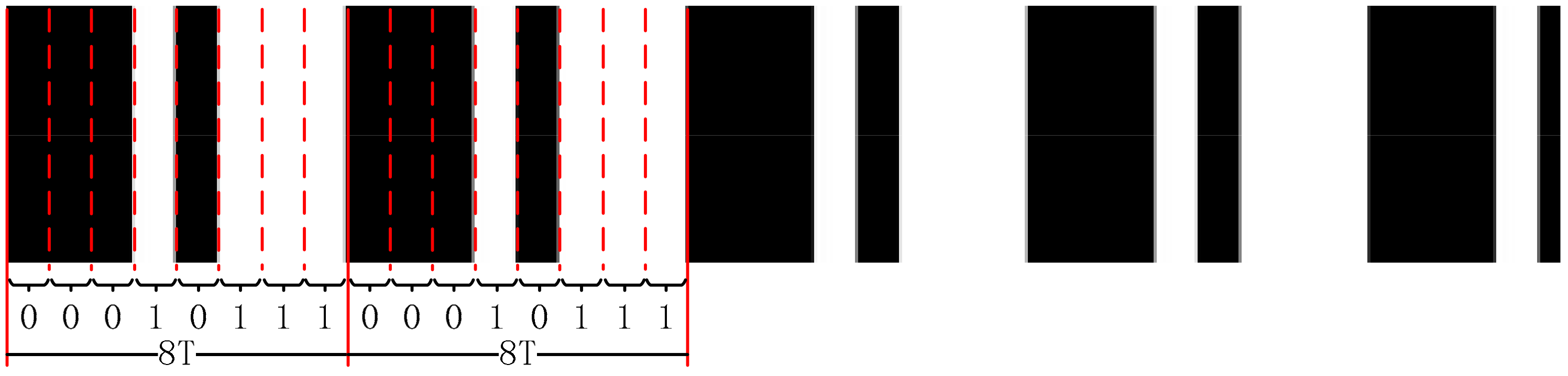

4. Color Sequence Coded Fringe Pattern

4.1. Phase-Coding Based on the Intensity Information

4.2. De Bruijn Coding Based on the Color Information

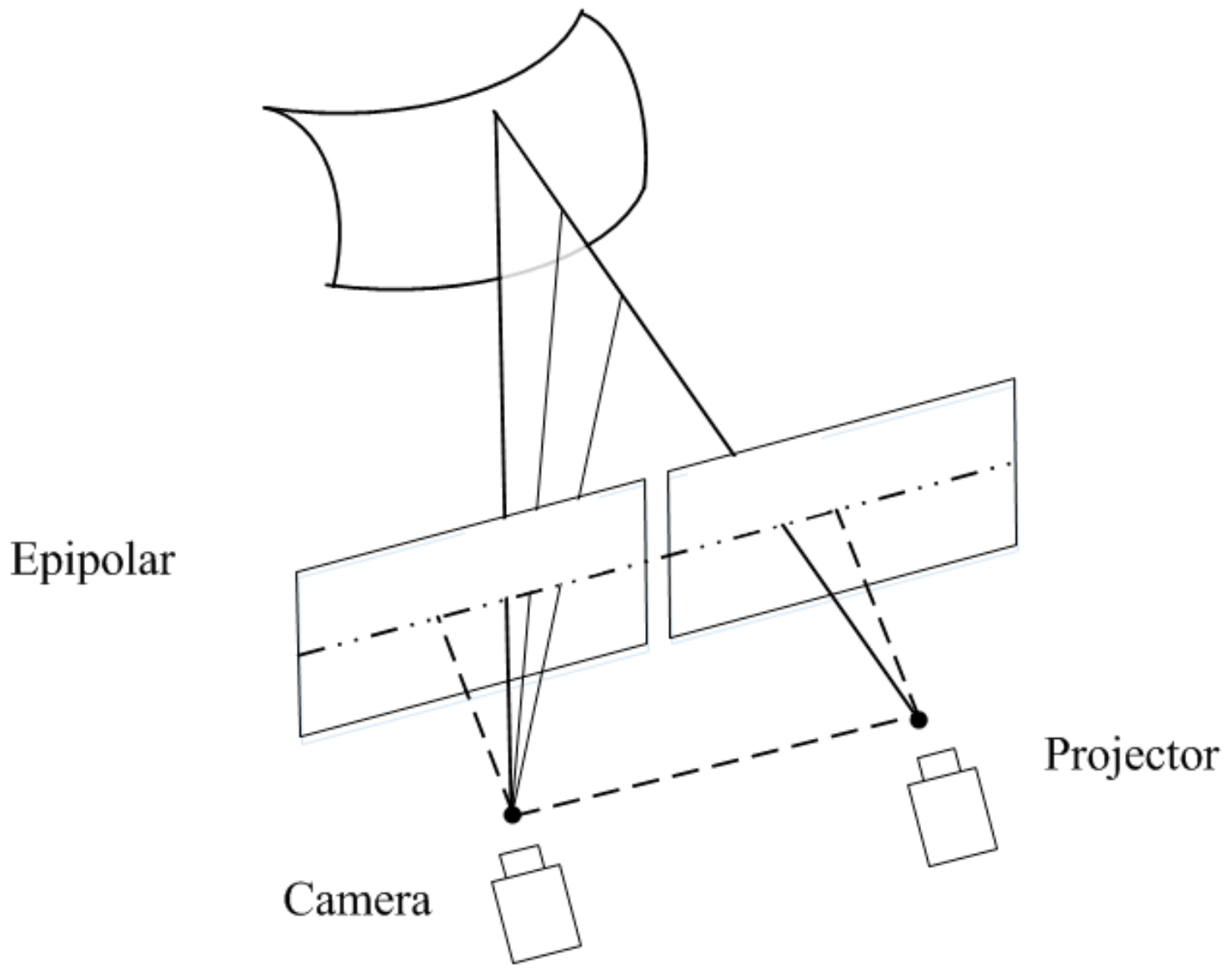

5. Projector–Camera Stereo Matching

5.1. Phase Estimation

5.2. Color Decoding

5.3. Phase Unwrapping Based on De Bruijn Sequence

5.4. Phase Based Stereo Matching

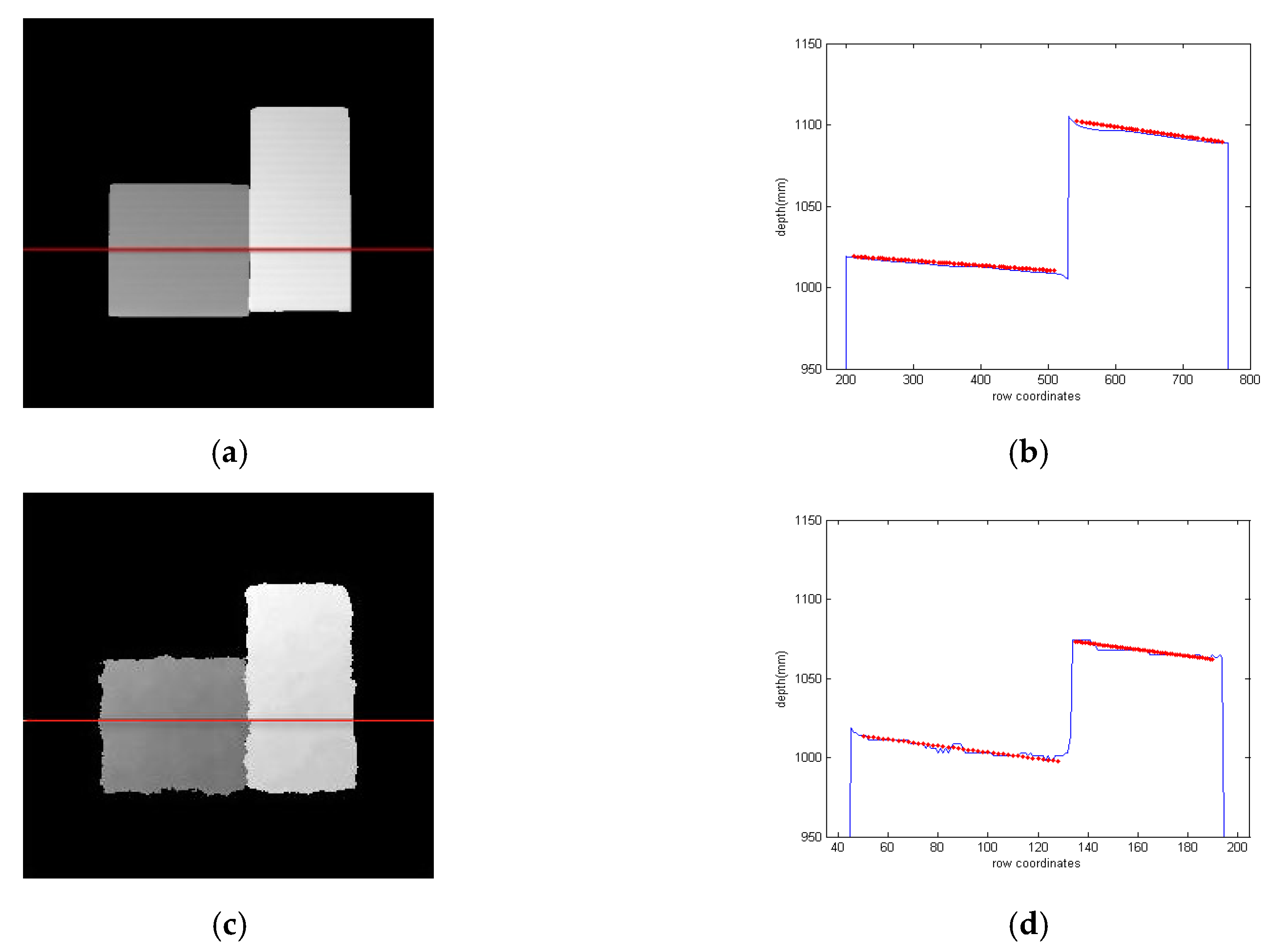

5.5. Simulation Experiments of the Proposed Method

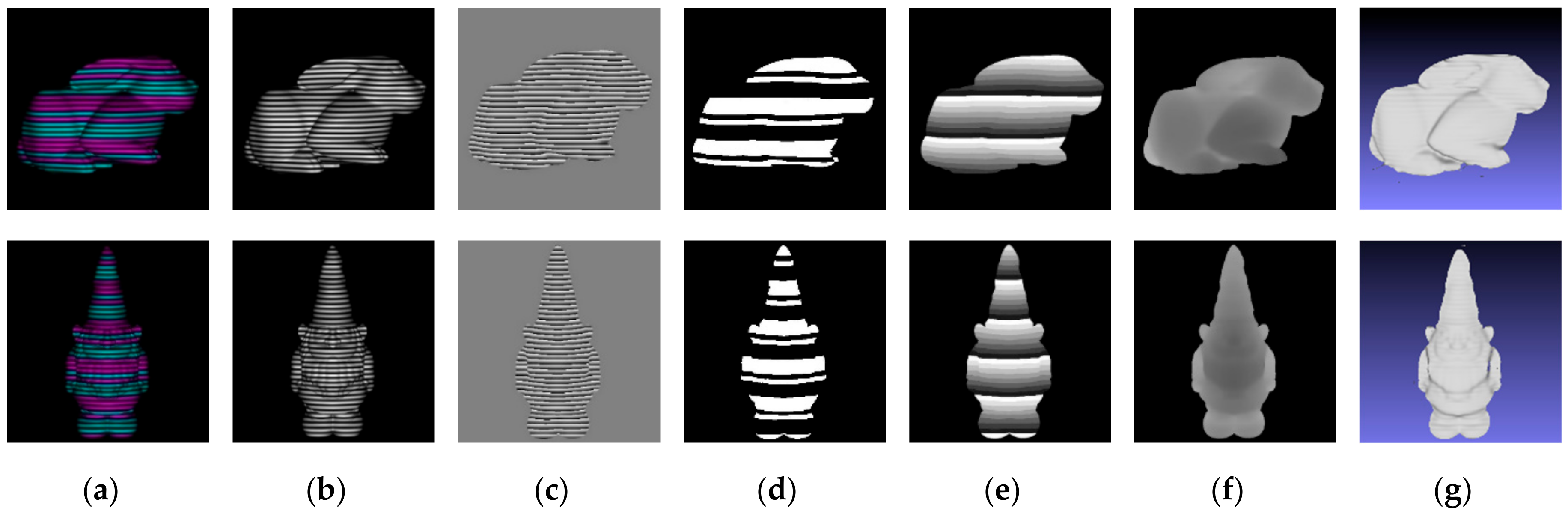

6. Experiment Results in Practice

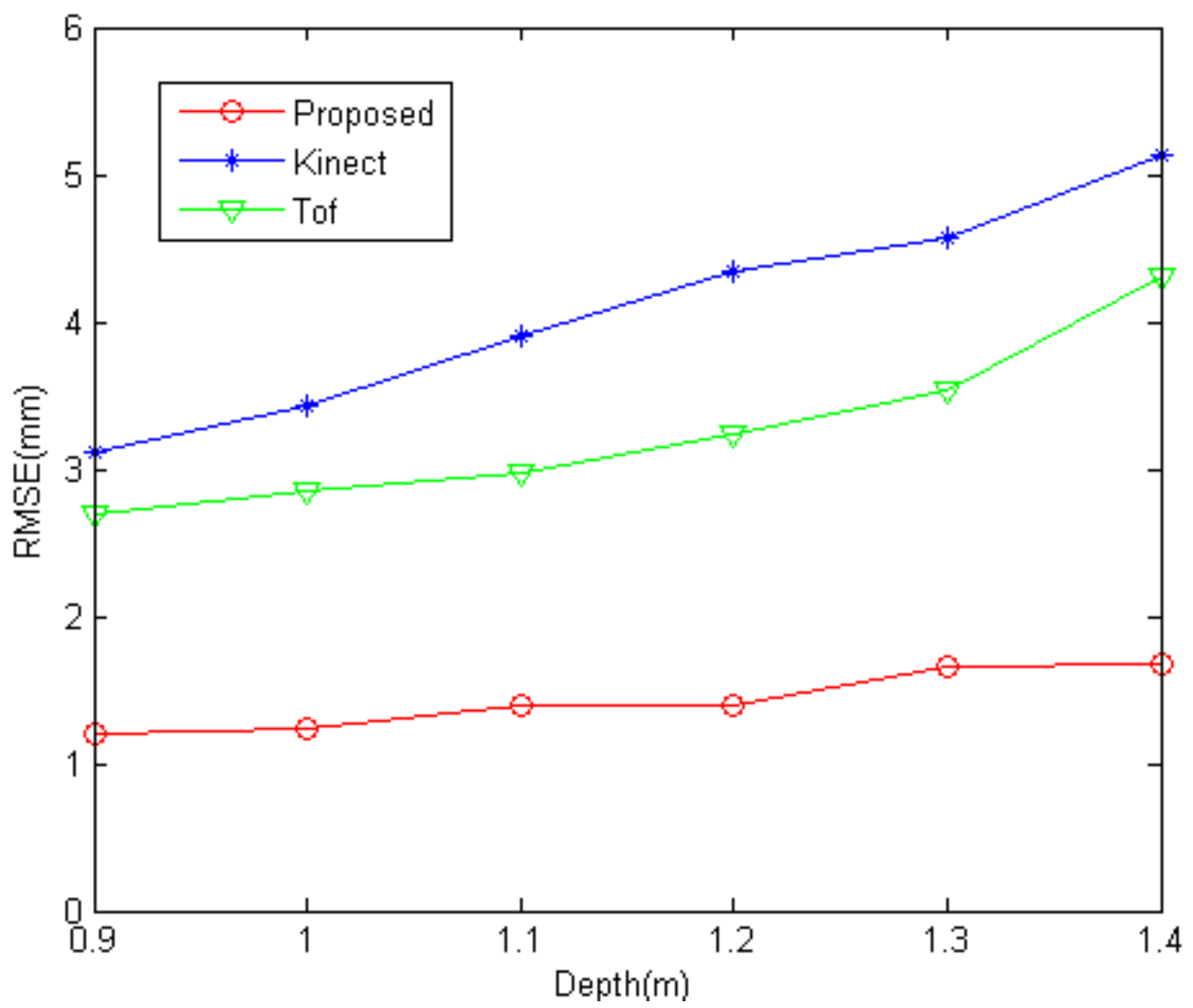

6.1. Quantitative Analysis

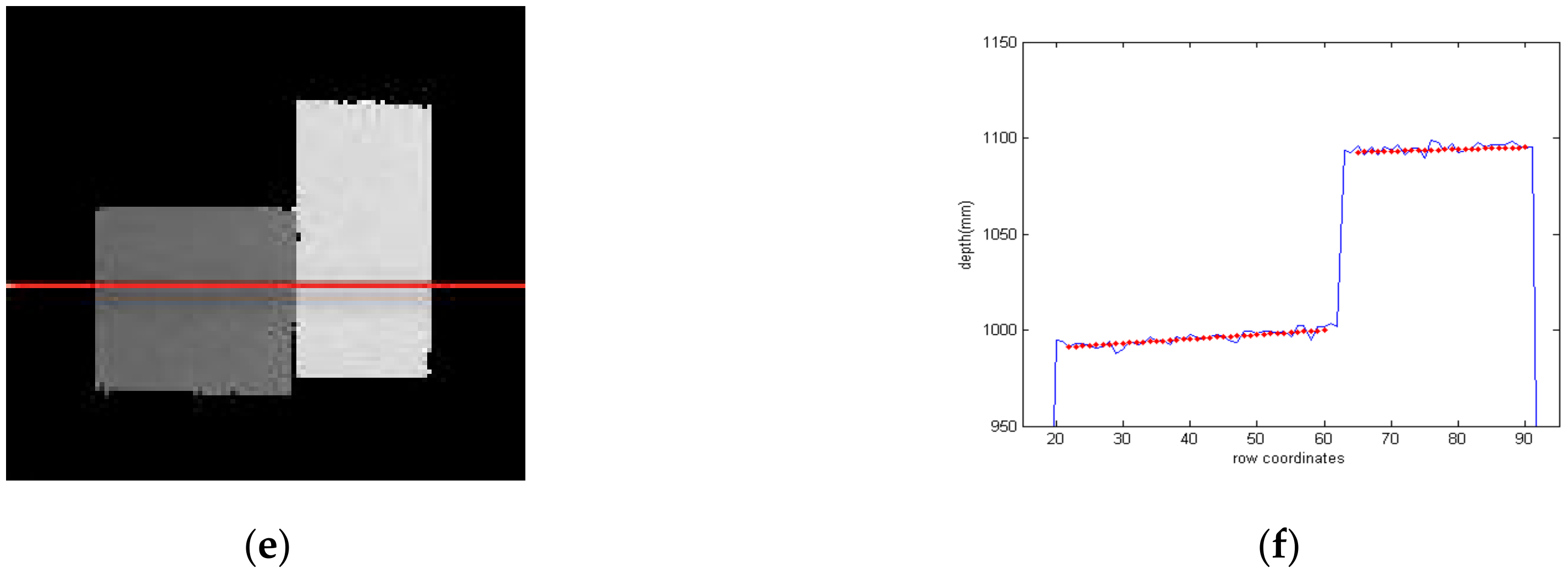

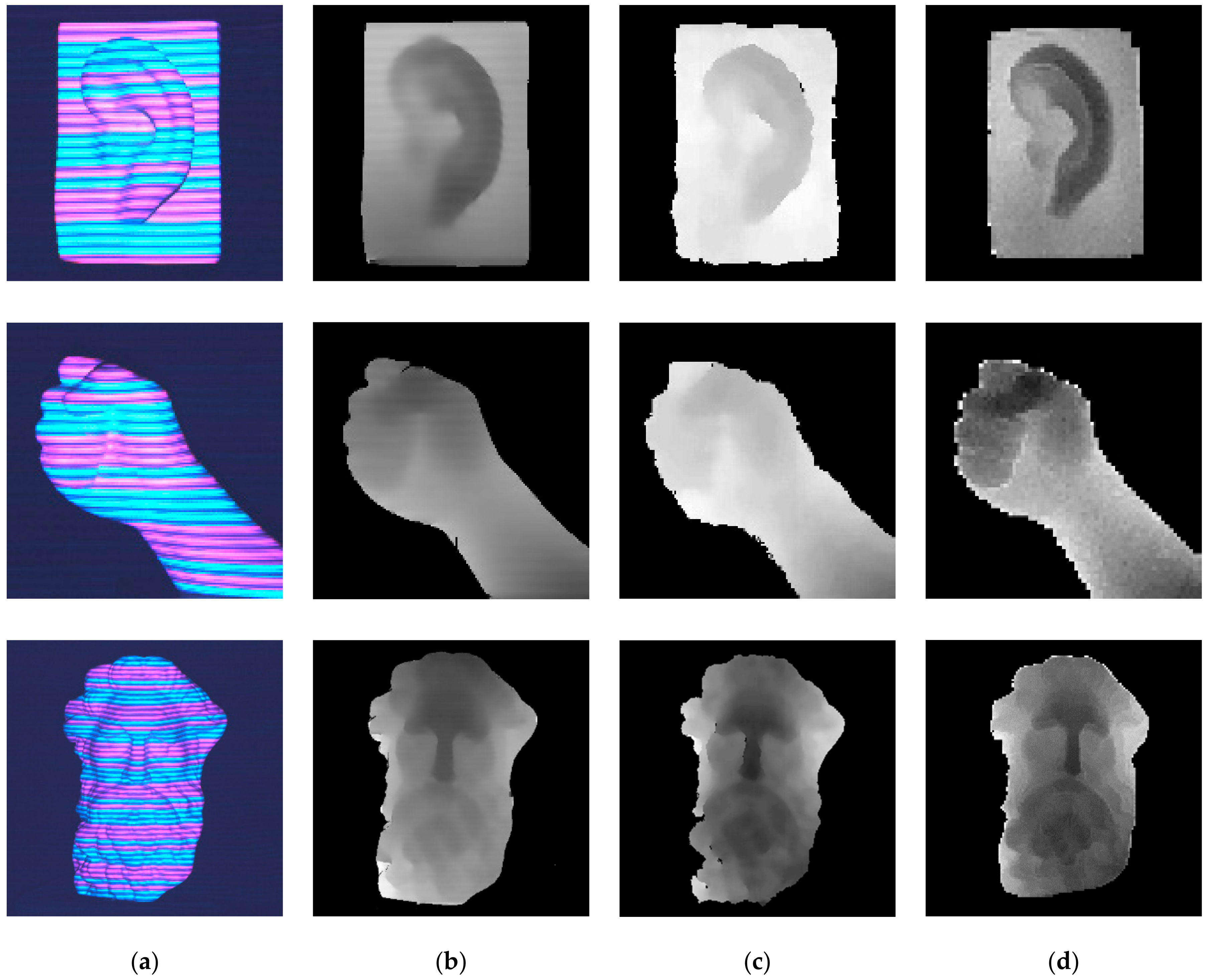

6.2. Qualitative Results

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| | White | Red | Green | Blue | Yellow | Pink | Cyan |
|---|---|---|---|---|---|---|---|
| RGB | 255,255,255 | 255,0,0 | 0,255,0 | 0,0,255 | 255,255,0 | 255,0,255 | 0,255,255 |
| Errors (unit: mm) | 0.42 | 0.83 | 0.84 | 0.41 | 0.46 | 0.55 | 0.56 |

| | Camera | Projector |
|---|---|---|
| focal length | 2312.5320 | 2227.9948 |
| principal points | | |
| tangential distortions | | |
| radial distortions | | |

| | The Proposed Method | Kinect | ToF Camera |
|---|---|---|---|
| The cube | 0.8366 | 1.4665 | 1.8699 |
| The cuboid | 1.3345 | 1.4599 | 1.9811 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, F.; Zhang, B.; Shi, G.; Niu, Y.; Li, R.; Yang, L.; Xie, X. Single-Shot Dense Depth Sensing with Color Sequence Coded Fringe Pattern. Sensors 2017, 17, 2558. https://doi.org/10.3390/s17112558
