Comparing the Performance of Indoor Localization Systems through the EvAAL Framework

Abstract

1. Introduction

2. Diversity Problem Review

2.1. Diversity in Research Systems and Metrics

- Out of Sight [59] is a toolkit for tracking occluded human joint positions based on Kinect cameras. Some in-room tests were run to evaluate different contexts (stationary, stepping, walking, presence of obstacles and occlusion). The mean error on the three axes (x, y, z) and the mean positioning error were provided.

- A Kalman filtering-based localization and tracking approach for the IoT paradigm was proposed in [60], where a simulation in a 1000 m by 1000 m sensor field was performed. The results and comparisons were based on the trajectory plots and on the location and velocity errors in the x and y axes over time (track).

- A smartphone-based tracking system using Hidden Markov Model pattern recognition was developed in [22]. They carried out their experiments in the New Library of Wuhan University using three different device models with over 50 subjects walking over an aggregate distance of over 40 km. The CDF and mean accuracy were used to report the results.

2.2. Diversity in the Results Reported in Surveys

2.3. Diversity in Datasets

- A database related to a Wi-Fi-based positioning system on the second floor of an office building on the campus of the University of Mannheim, released in [64]. The operation area was about 57 m × 32 m but only 221 square meters were covered. The test environment had twelve APs. Seven of them were administered by the university technicians, whereas the others were installed in the nearby buildings and offices. Thirteen additional APs were added for localization purposes.

- Mobility traces at five different sites (NCSU university campus, KAIST university campus, New York City, Disney World (Orlando) and North Carolina state fair) [65].

- Two trace files (one for Wi-Fi and one for Bluetooth) collected by the University of Illinois Movement (UIM) framework using Google Android phones [66].

- One data set to aid the development and evaluation of indoor location in complex indoor environments using round-trip time-of-flight (RToF) and magnetometer measurements [67]. It contains RToF and magnetometer measures taken in the 26 m × 24 m New Wing Yuan supermarket in Sunnyvale, CA, USA. The data was collected during working hours over a period of 15 days.

- The database donated in [68] contains the RSS (Radio Signal Strength) data collected with a mobile robot in two environments: indoor (KTH) and outdoor (Dortmund). The RSS metric was used to collect the RSS data in terms of dBm. The mobile robot location was recorded using odometry (dead reckoning).

- The UJIIndoorLoc database is a Wi-Fi fingerprinting database collected at three buildings of the Jaume I university (UJI) campus for indoor navigation purposes. It was collected by means of more than 20 devices and 20 people [70] and was used for the off-site track of the 2015 EvAAL-ETRI Competition [25,71].

- The Indoor User Movement Prediction from RSS Data Set represents a real-life benchmark in the area of Active and Assisted Living applications. The database introduces a binary classification task, which consists in predicting the pattern of user movements in real-world office environments from time-series generated by a Wireless Sensor Network (WSN) [74].

- The Geo-Magnetic field and WLAN dataset for indoor localisation from wristband and smartphone data set contains Wi-Fi and magnetic field fingerprints, together with inertial sensor data collected during two campaigns performed in the same environment [75].

- The Signal processing for the wireless positioning group (Tampere University of Technology, Finland) provides an open repository of source software and measurement data on their website (http://www.cs.tut.fi/tlt/pos/Software.htm). Indoor WLAN measurement data in two four-floor buildings for indoor positioning studies are provided. The data contain the collected RSS values, the Access Point IDs (mapped to integer indices) and the coordinates; both the training data and several tracks for the estimation part are provided as indicated in [76,77]. Other datasets with GSM, UMTS and GNSS data are also provided.

- PerfLoc [78] is also running a competition on developing the best possible indoor localization and tracking applications for Android smartphones. The competition participants are required to use a huge database collected by the National Institute of Standards and Technology (NIST) by means of four different Android devices.

2.4. Diversity in Competitions

2.4.1. The Microsoft Competition

2.4.2. The IPIN Competition

- IPIN competitions take measurements while the actor moves naturally along a predefined trajectory unknown to competitors, instead of evaluating the competitors while standing still at evaluation points;

- IPIN competitions are generally held in large, challenging multi-floor environments, possibly spanning multiple buildings, with significant path lengths and durations, instead of evaluating competitors in small single-floor environments;

- IPIN competitions generally require the competitors to interface with an independent real-time measurement application and test on an independent actor;

- The final score metric is the third quartile of the positioning error in IPIN, which makes the accuracy results less prone to the influence of outliers and more in line with the accuracy demanded of commercial systems.

2.4.3. The Scenarios in the IPIN 2016 Competition

- positioning of people in real time;

- positioning of people off-line;

- robotic positioning in real time.

- Track 1: Smartphone-based (on-site);

- Track 2: Pedestrian dead reckoning positioning (on-site);

- Track 3: Smartphone-based (off-site);

- Track 4: Indoor mobile robot positioning (on-site).

2.4.4. Other Competitions

3. EvAAL Framework Applied to IPIN Competition

3.1. Benchmarking Metrics

3.1.1. The EVARILOS Benchmarking Framework

3.1.2. The EvAAL Benchmarking Framework

- Natural movement of an actor: the agent testing a localization system is an actor walking with a regular pace along the path. The actor can rest in a few points and walk again until the end of the path.

- Realistic environment: the path the actor walks is in a realistic setting: the first EvAAL competitions were done in living labs.

- Realistic measurement resolution: the minimum time and space errors considered are relative to people’s movement. The space resolution for a person is defined by the diameter of the body projection on the ground, which we set to 50 cm. The time resolution is defined by the time a person takes to walk a distance equal to the space resolution. In an indoor environment, considering a maximum speed of 1 m/s, the time resolution is 0.5 s. These numbers are used to define the accuracy of measurements. When the actor walks, measurements are taken when he/she steps over a set of predefined points. The actor puts his/her feet on marks made on the floor when a bell chimes, once per second. As long as he/she does not make time and space errors greater than the measurement resolutions, which is easy for a trained agent, the test is considered adequate.

- Third quartile of point Euclidean error: the accuracy score is based on the third quartile of the error, which is defined as the 2-D Euclidean distance between the measurement points and the estimated points. More discussion on this is in the next section. Using the third quartile of point errors, the system under measure provides an error below the declared one in three cases out of four, which is in line with the perceived usefulness of the experimental IPS. Reference [86] argues that using linear metrics such as the mean may lead to strange and unwanted behaviour if they are not properly checked because of, for instance, the presence of outliers. A clear example of this behaviour can be seen in [25], where a competitor in the off-site track produced severe errors in very few cases, and its average error and root mean squared error were strongly affected; however, the competition metric, the third quartile, showed that the proposed solution was not as bad as the average error suggested. A more detailed discussion is found in [21].

- Secret path: the final path is disclosed immediately before the test starts, and only to the competitor whose system is under test. This prevents competitors from designing systems exploiting specific features of the path.

- Independent actor: the actor is an agent not trained to use the localization system.

- Independent logging system: the competitor system estimates the position at a rate of twice per second, and sends the estimates on a radio network provided by the EvAAL committee. This prevents any malicious actions from the competitors. The source code of the logging system is publicly available.

- Identical path and timing: the actor walks along the same path with the same timing for all competitors, with time and space errors within the resolutions defined above.
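As an illustration of the measurement-resolution and third-quartile criteria above, the following minimal Python sketch derives the time resolution from the space resolution and maximum speed given in the text, and compares the third quartile of the point errors with the mean and the RMSE on a made-up run containing one severe outlier. The coordinates, the function names and the linear-interpolation quantile estimator are assumptions chosen for illustration; the framework description above does not specify which quantile estimator is used.

```python
import math

# Values stated in the text: 50 cm body projection, 1 m/s maximum indoor speed.
SPACE_RESOLUTION_M = 0.5
MAX_SPEED_M_S = 1.0


def time_resolution(space_resolution_m, max_speed_m_s):
    """Time needed to walk a distance equal to the space resolution."""
    return space_resolution_m / max_speed_m_s


def point_errors(truth, estimates):
    """2-D Euclidean distance between each key point and its estimate."""
    return [math.hypot(ex - tx, ey - ty)
            for (tx, ty), (ex, ey) in zip(truth, estimates)]


def quantile(values, q):
    """Quantile with linear interpolation between order statistics
    (an assumed estimator, used here only for illustration)."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


# Hypothetical key points and estimates (metres); the last estimate is an outlier.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
estimates = [(0.0, 1.0), (1.0, 1.0), (2.0, 2.0), (3.0, 2.0), (4.0, 50.0)]

errors = point_errors(truth, estimates)
score = quantile(errors, 0.75)                      # third-quartile score
mean_error = sum(errors) / len(errors)              # sensitive to the outlier
rmse = math.sqrt(sum(e * e for e in errors) / len(errors))

print(f"time resolution: {time_resolution(SPACE_RESOLUTION_M, MAX_SPEED_M_S)} s")
print(f"third quartile: {score:.2f} m, mean: {mean_error:.2f} m, RMSE: {rmse:.2f} m")
```

With these invented values, the single 50 m outlier dominates the mean and the RMSE, while the third quartile stays at 2 m.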

3.2. Applying EvAAL Criteria to IPIN 2016 Competition

- The first two core EvAAL criteria are followed closely: in Tracks 1–3 the actor moves naturally in a realistic and complex environment spanning several floors of one (for Tracks 1 and 2) or a few (Track 3) big buildings; in Track 4, the robot moves to the best of its capabilities in a complex single-floor track.

- The same holds for the third core criterion: the space-time error resolutions for Tracks 1–3, where the agent is a person, are 0.5 m and 0.5 s, while the space-time resolutions for Track 4, where the agent is a robot, are ≈1 mm and 0.1 s. In Track 4, only the adherence to the trajectory is considered, given the overwhelming importance of space accuracy with respect to time accuracy as far as robots are concerned.

- The last core criterion of the EvAAL framework is followed as well, as the third quartile of the point error is used as the final score. The reason for using a point error, as opposed to comparing trajectories using, for example, the Fréchet distance [38,88], is that the latter is less suited to navigation purposes, for which the real-time identification of the position is more important than the path followed.

- In Tracks 1 and 2, the path is kept secret only until one hour before the competition begins, because it would be impractical to keep it hidden from the competitors after the first one in a public environment. However, competitors could not add this knowledge to their systems. In Track 3, the competitors work with blind datasets (logfiles) in the evaluation so the path can be kept secret. In Track 4, a black cover is used to avoid any visual reference of the path and other visual markers.

- The agent is independent for all tracks apart from Track 2, where the technical difficulties of the track suggested allowing the actor to be one of the members of the competing team.

- The logging system is only independent in Track 1. An exception was added in Track 2 for the logging system, which was done by the competitors themselves rather than by an independent application. In Track 3, competitors submitted the results via email before a deadline. In Track 4, the competitors had to submit the results via email within a 2-min window after finishing the evaluation track.

- The path and timing were identical for all competitors in Tracks 3 and 4. The paths are slightly different in Tracks 1 and 2, which involved positioning people in real time, because the path was so long that it would have been impossible to force the actors to follow exactly the same path with the same timing many times.
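The preference for point errors over trajectory distances can be illustrated with a small Python sketch. It uses the discrete Fréchet distance (a standard dynamic-programming variant, which is not necessarily the formulation used in [38,88]) and a made-up estimate that follows the true path exactly but reports each position two samples late. All coordinates and function names are assumptions for illustration.

```python
import math


def discrete_frechet(p, q):
    """Discrete Fréchet distance between two polylines (dynamic programming)."""
    n, m = len(p), len(q)
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                best_previous = min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1])
                ca[i][j] = max(best_previous, d)
    return ca[n - 1][m - 1]


def third_quartile(values):
    """Third quartile with linear interpolation (assumed estimator)."""
    ordered = sorted(values)
    pos = 0.75 * (len(ordered) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


# Hypothetical ground truth sampled once per second (metres); the actor walks
# along a corridor and then rests at the end.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0),
         (4.0, 0.0), (4.0, 0.0), (4.0, 0.0)]
# Hypothetical estimate: same path, but every position arrives two samples late.
lagging = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0), (1.0, 0.0),
           (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]

# Point error compares positions at the same timestamp.
point_error = [math.dist(t, e) for t, e in zip(truth, lagging)]

print("discrete Fréchet distance:", discrete_frechet(truth, lagging))
print("third quartile of point error:", third_quartile(point_error))
```

In this invented example, the trajectory-based distance is zero because the estimated path has the right shape, whereas the third quartile of the point error is 2 m because the positions are reported late, which is what matters for real-time navigation.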

4. The IPIN 2016 Competition Tracks

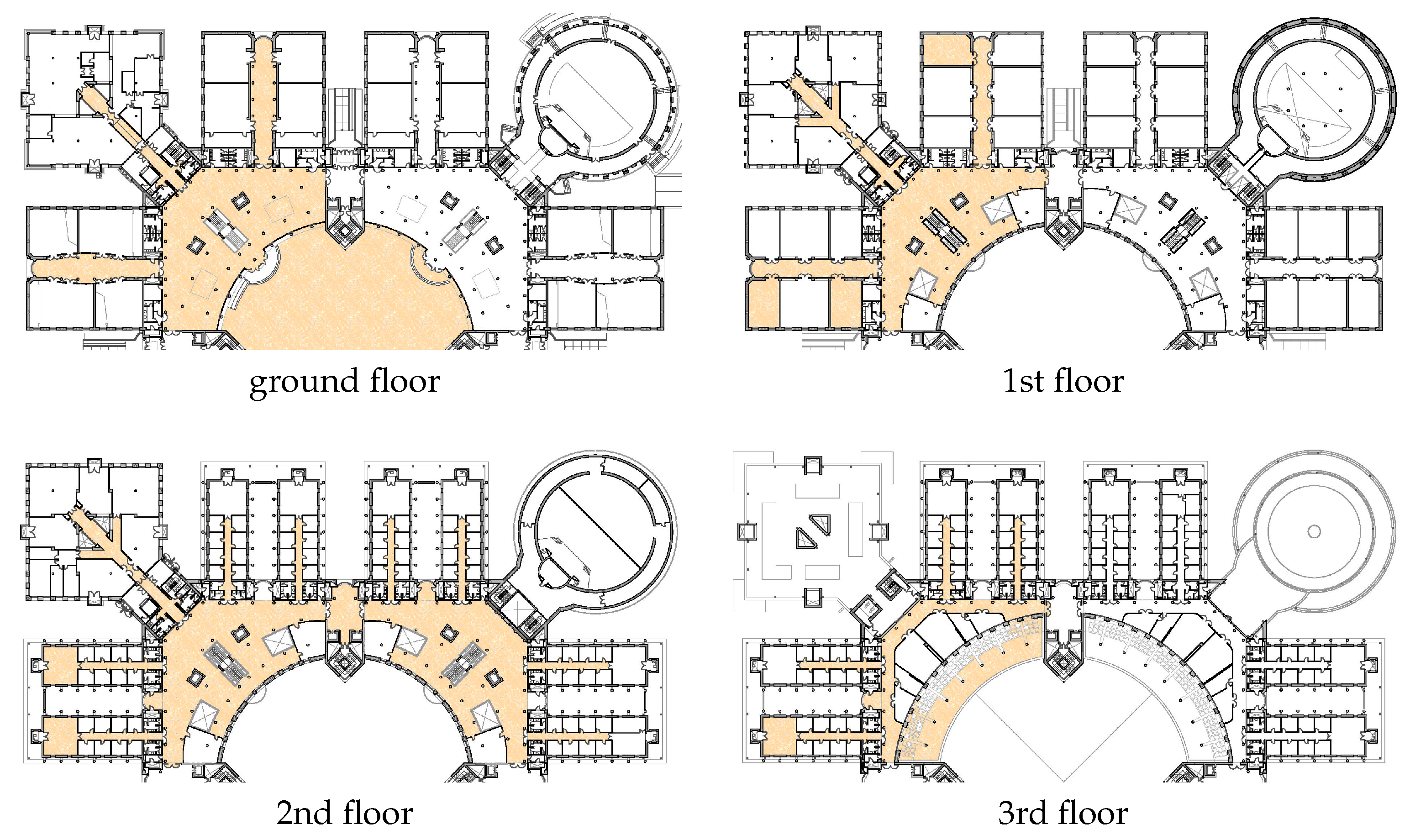

4.1. Tracks 1 and 2: Positioning of People in Real Time

4.1.1. Surveying the Area

4.1.2. Evaluation Path

- stairs (for both Tracks 1 and 2) and a lift (Track 1 only) are used to move between floors;

- the path traverses four floors and includes the patio, for a total of 56 key points marked on the floor, 6000 m² indoor and 1000 m² outdoor;

- actors stay still for a few seconds in six locations and for about 1 min in three locations; this cadence is intended to reproduce the natural behaviour of humans while moving in an indoor environment;

- actors move at a natural pace, typically at a speed of around 1 m/s;

- total length and duration are 600 m and 15 min ± 2 min, which allows the competing apps to be stressed in realistic conditions.

- key points were placed in easily accessible places that people usually walk through;

- distance between key points ranged from about 3 to 35 m, with a median of 8 m;

- each key point was visible from the previous one, to ease the movement of the actor and reduce random paths between two consecutive key points.

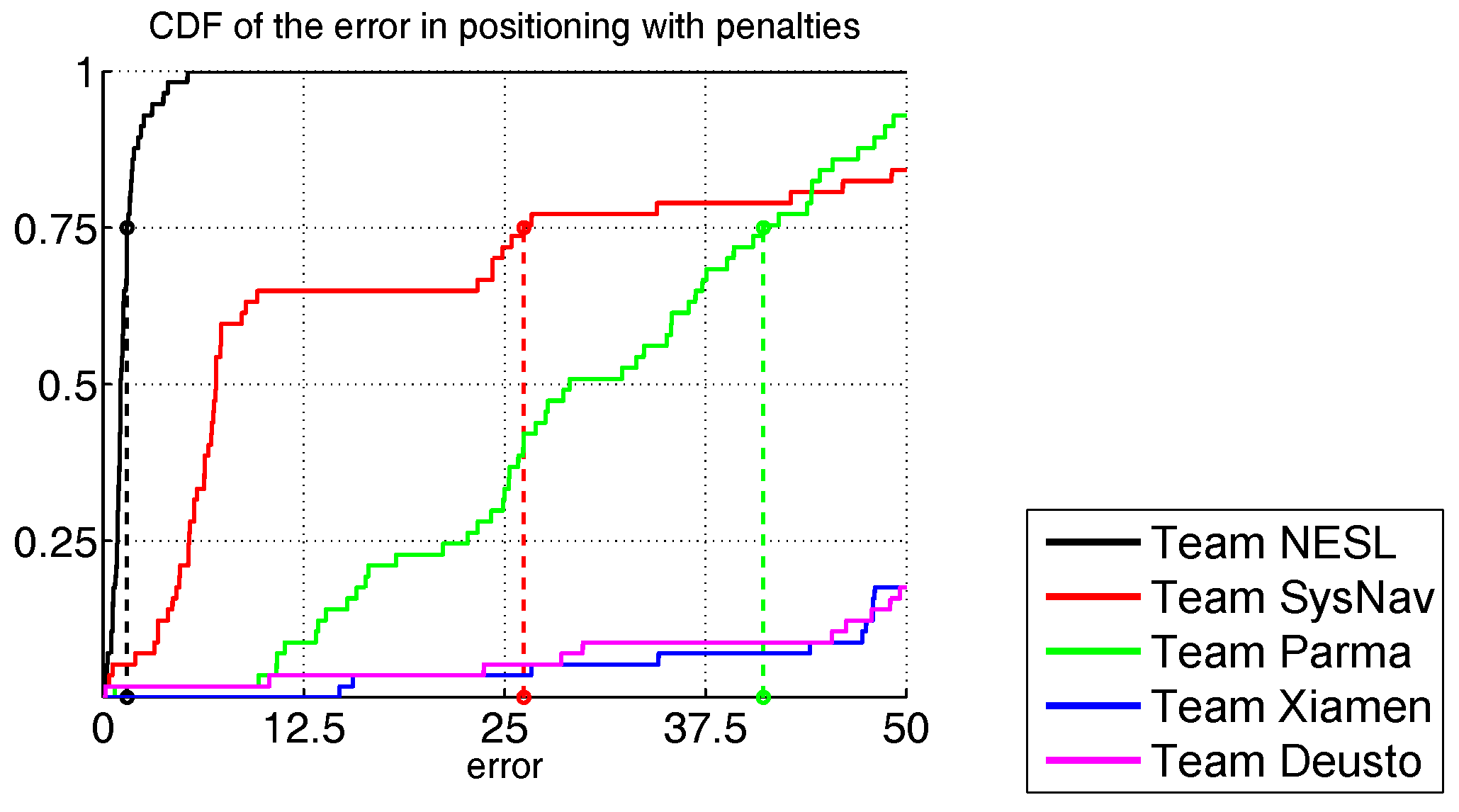

4.1.3. Track 1 Results

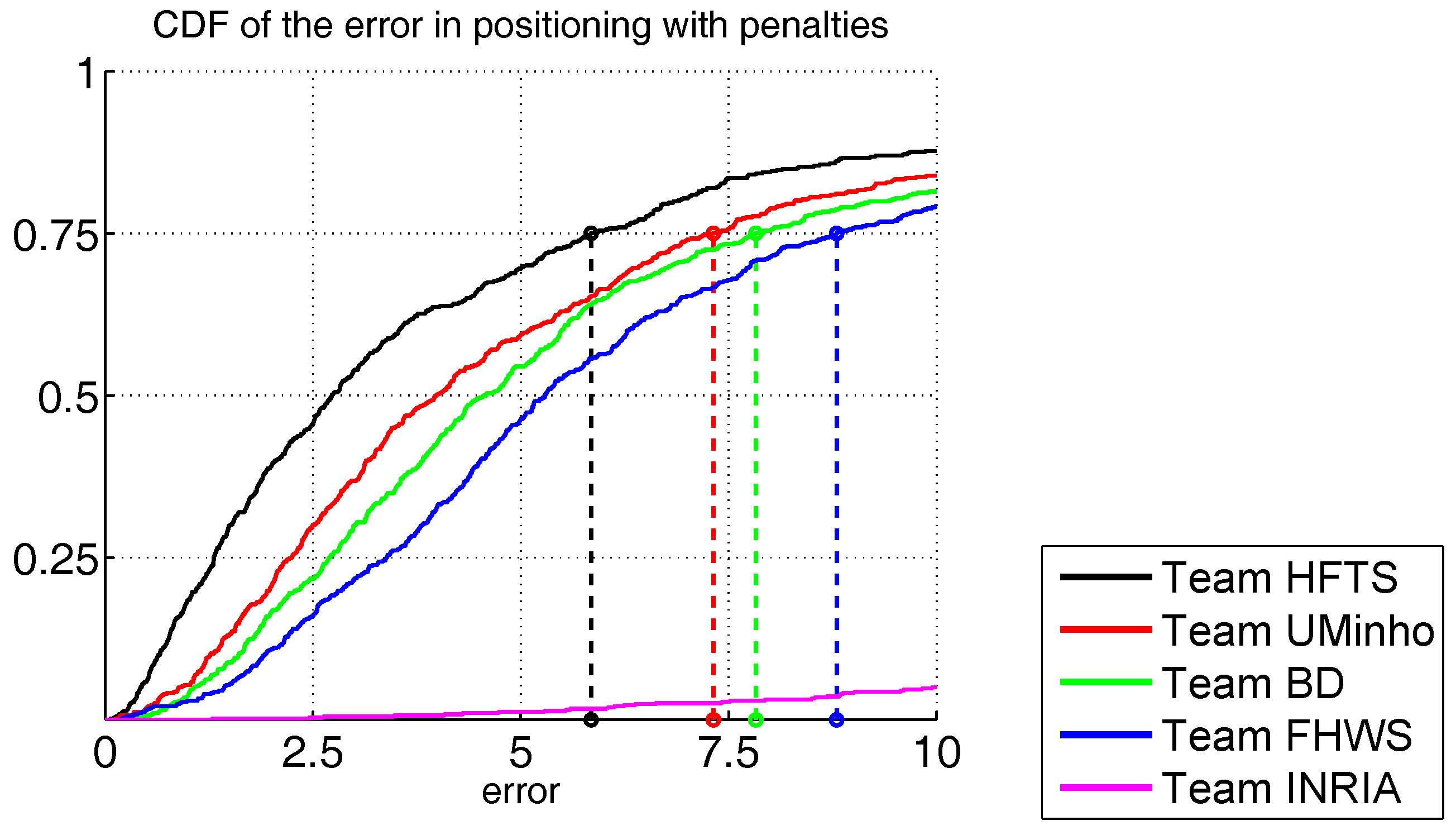

4.1.4. Track 2 Results

4.2. Track 3: Smartphone-Based (Off-Line)

4.2.1. Surveying the Area

Data Format

4.2.2. The Evaluation Path

- the stairs and the lifts could be used to move between floors;

- the paths traverse four floors in the UAH building, one floor in the CAR building, six floors in the UJIUB building and four floors in the UJITI building;

- paths in the CAR building also include an external patio;

- the nine paths cover a total of 578 key points;

- total duration is 2 h and 24 min, which allows the competing applications to be stressed in realistic conditions;

- actors may stay still for a few seconds in a few locations; this rhythm is intended to reproduce the natural behaviour of humans while moving in an indoor environment;

- actors move at a natural pace, typically at a speed of around 1 m/s;

- phoning and lateral movements were allowed occasionally to reproduce a real situation;

- all competitors have the same data for calibrating and competing.

4.2.3. Results

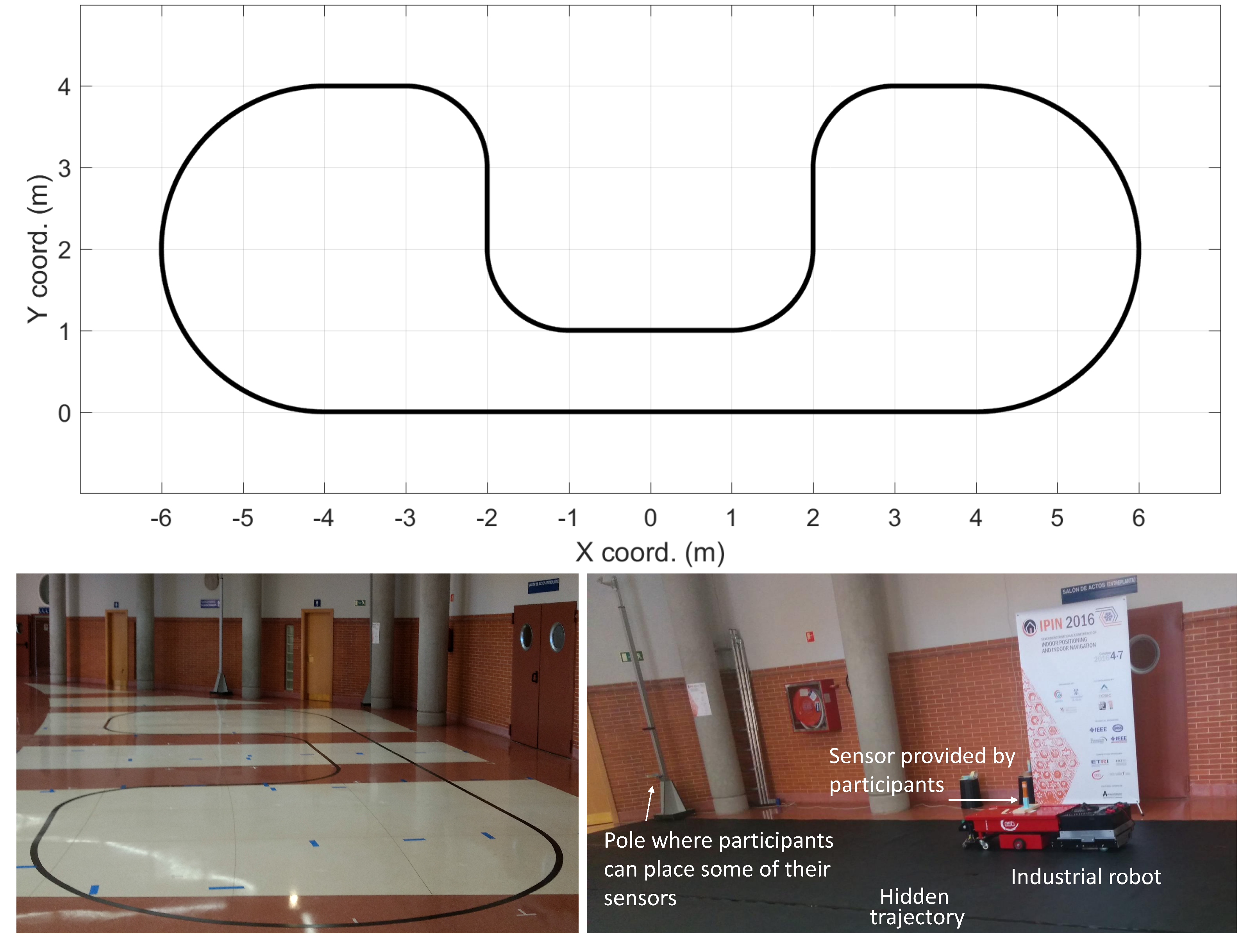

4.3. Track 4: Robotic Positioning

- The tracked element is an industrial robot;

- The task requires not only the estimation of discrete and usually well-separated key positions, but also a detailed tracking of the actual, precise and unknown robot trajectory;

- Competitors could put sensors on board as well as locate them on given poles around the navigation area.

4.3.1. Surveying the Area

Robot

4.3.2. Evaluation Path

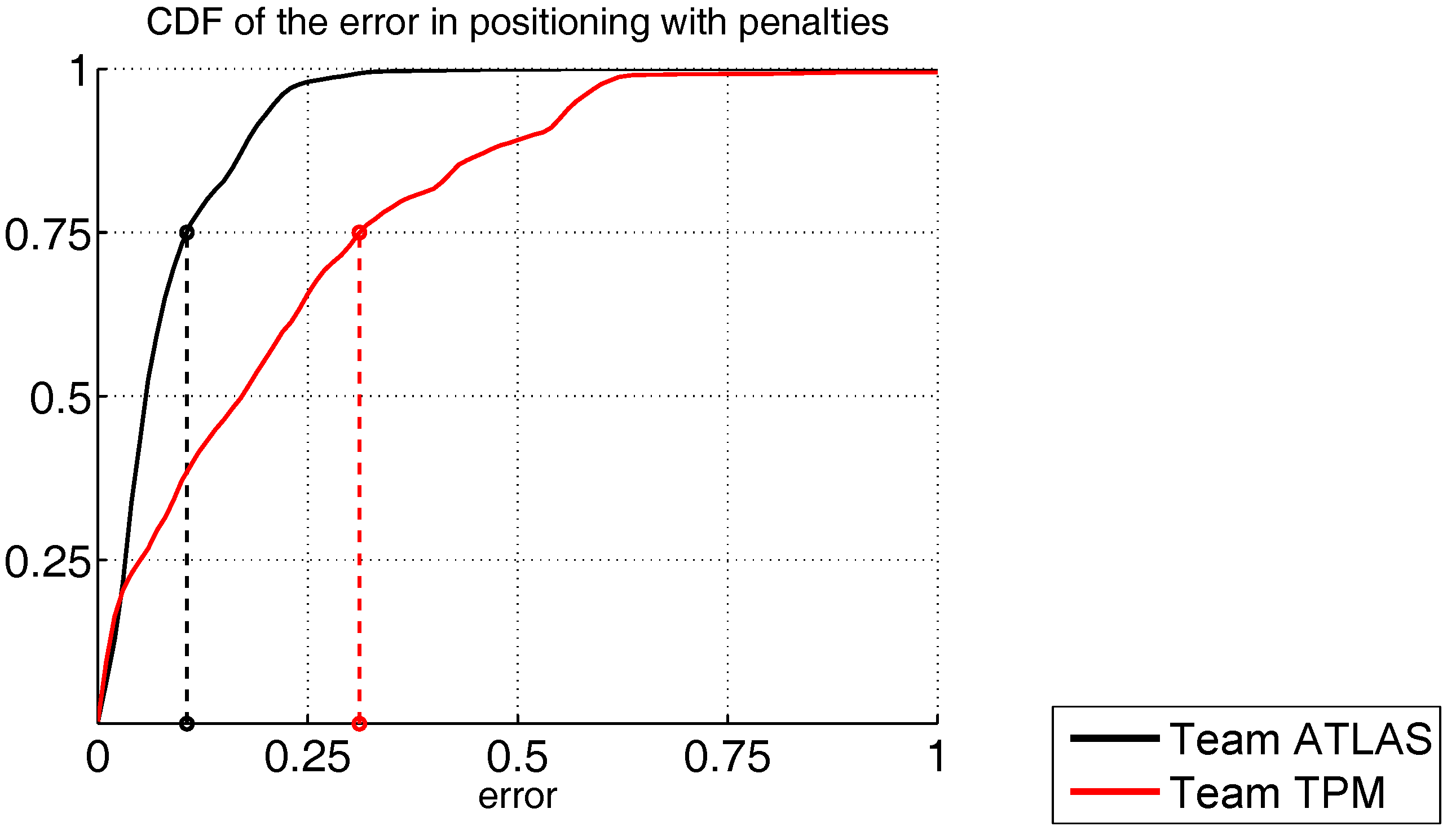

4.3.3. Results

4.4. Lessons Learned from IPIN Competition Tracks

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AAL | Active and Assisted Living |
| AP | Access Point |
| BT | Bluetooth |
| BLE | Bluetooth Low Energy |
| CDF | Cumulative Distribution Function |
| EvAAL | Evaluating Active and Assisted Living |
| GNSS | Global Navigation Satellite System |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| IPIN | Indoor Positioning and Indoor Navigation |
| IPS | Indoor Positioning System |
| PDF | Probability Density Function |
| PDR | Pedestrian Dead Reckoning |
| RFID | Radio Frequency Identification |
| RMSE | Root Mean Square Error |
| UWB | Ultra Wide Band |

References

- Park, K.; Shin, H.; Cha, H. Smartphone-based pedestrian tracking in indoor corridor environments. Pers. Ubiquit. Comput. 2013, 17, 359–370.

- Hardegger, M.; Roggen, D.; Tröster, G. 3D ActionSLAM: Wearable person tracking in multi-floor environments. Pers. Ubiquit. Comput. 2015, 19, 123–141.

- Zampella, F.; Jiménez, A.; Seco, F. Indoor positioning using efficient map matching, RSS measurements, and an improved motion model. IEEE Trans. Veh. Technol. 2015, 64, 1304–1317.

- Jiménez, A.R.; Seco, F.; Prieto, J.C.; Guevara, J. Indoor pedestrian navigation using an INS/EKF framework for Yaw drift reduction and a foot-mounted IMU. In Proceedings of the 2010 7th Workshop on Positioning Navigation and Communication (WPNC), Dresden, Germany, 11–12 March 2010.

- Jiménez, A.R.; Seco, F.; Zampella, F.; Prieto, J.C.; Guevara, J. Improved Heuristic Drift Elimination (iHDE) for pedestrian navigation in complex buildings. In Proceedings of the 2011 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Guimaraes, Portugal, 21–23 September 2011.

- Vera, R.; Ochoa, S.F.; Aldunate, R.G. EDIPS: An Easy to Deploy Indoor Positioning System to support loosely coupled mobile work. Pers. Ubiquit. Comput. 2011, 15, 365–376.

- Kim, S.C.; Jeong, Y.S.; Park, S.O. RFID-based indoor location tracking to ensure the safety of the elderly in smart home environments. Pers. Ubiquit. Comput. 2013, 17, 1699–1707.

- Piontek, H.; Seyffer, M.; Kaiser, J. Improving the accuracy of ultrasound-based localisation systems. Pers. Ubiquit. Comput. 2007, 11, 439–449.

- Prieto, J.C.; Jiménez, A.R.; Guevara, J.; Ealo, J.L.; Seco, F.; Roa, J.O.; Ramos, F. Performance evaluation of 3D-LOCUS advanced acoustic LPS. IEEE Trans. Instrum. Meas. 2009, 58, 2385–2395.

- Seco, F.; Prieto, J.C.; Jimenez, A.R.; Guevara, J. Compensation of multiple access interference effects in CDMA-based acoustic positioning systems. IEEE Trans. Instrum. Meas. 2014, 63, 2368–2378.

- Bruns, E.; Bimber, O. Adaptive training of video sets for image recognition on mobile phones. Pers. Ubiquit. Comput. 2009, 13, 165–178.

- Randall, J.; Amft, O.; Bohn, J.; Burri, M. LuxTrace: Indoor positioning using building illumination. Pers. Ubiquit. Comput. 2007, 11, 417–428.

- Yang, S.H.; Jeong, E.M.; Kim, D.R.; Kim, H.S.; Son, Y.H.; Han, S.K. Indoor three-dimensional location estimation based on LED visible light communication. Electron. Lett. 2013, 49, 54–56.

- Kuo, Y.S.; Pannuto, P.; Hsiao, K.J.; Dutta, P. Luxapose: Indoor positioning with mobile phones and visible light. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014.

- Jimenez, A.R.; Zampella, F.; Seco, F. Light-matching: A new signal of opportunity for pedestrian indoor navigation. In Proceedings of the 4th International Conference on Indoor Positioning and Indoor Navigation (IPIN), Montbeliard-Belfort, France, 28–31 October 2013.

- Markets and Markets. Indoor Location Market by Component (Technology, Software Tools, and Services), Application, End User (Transportation, Hospitality, Entertainment, Shopping, and Public Buildings), and Region - Global Forecast to 2021. 2016. Available online: http://www.marketsandmarkets.com/Market-Reports/indoor-positioning-navigation-ipin-market-989.html (accessed on 12 October 2017).

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of wireless indoor positioning techniques and systems. IEEE Trans. Syst. Man Cybern. C (Appl. Rev.) 2007, 37, 1067–1080.

- Gu, Y.; Lo, A.; Niemegeers, I. A survey of indoor positioning systems for wireless personal networks. IEEE Commun. Surv. Tutor. 2009, 11, 13–32.

- Ficco, M.; Palmieri, F.; Castiglione, A. Hybrid indoor and outdoor location services for new generation mobile terminals. Pers. Ubiquit. Comput. 2014, 18, 271–285.

- Bahl, V.; Padmanabhan, V. RADAR: An In-Building RF-based User Location and Tracking System; Institute of Electrical and Electronics Engineers, Inc.: Piscataway, NJ, USA, 2000.

- Barsocchi, P.; Chessa, S.; Furfari, F.; Potortì, F. Evaluating AAL solutions through competitive benchmarking: The localization competition. IEEE Perv. Comput. Mag. 2013, 12, 72–79.

- Niu, X.; Li, M.; Cui, X.; Liu, J.; Liu, S.; Chowdhury, K.R. WTrack: HMM-based walk pattern recognition and indoor pedestrian tracking using phone inertial sensors. Pers. Ubiquit. Comput. 2014, 18, 1901–1915.

- Salvi, D.; Barsocchi, P.; Arredondo, M.T.; Ramos, J.P.L. EvAAL, Evaluating AAL Systems through Competitive Benchmarking, the Experience of the 1st Competition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 14–25.

- Chessa, S.; Furfari, F.; Potortì, F.; Lázaro, J.P.; Salvi, D. EvAAL: Evaluating AAL systems through competitive benchmarking. ERCIM News 2011, 84, 46.

- Potortì, F.; Barsocchi, P.; Girolami, M.; Torres-Sospedra, J.; Montoliu, R. Evaluating indoor localization solutions in large environments through competitive benchmarking: The EvAAL-ETRI competition. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Calgary, AB, Canada, 13–16 October 2015.

- Youssef, M.; Agrawala, A.K. The Horus WLAN location determination system. In Proceedings of the 3rd International Conference on Mobile Systems, Applications, and Services, Seattle, WA, USA, 6–8 June 2005.

- Youssef, M.; Agrawala, A.K. On the Optimality of WLAN Location Determination Systems; Technical Report UMIACS-TR 2003-29 and UMIACS-TR 4459; University of Maryland: College Park, MD, USA, 2003.

- Youssef, M.; Agrawala, A.K. Handling samples correlation in the horus system. In Proceedings of the 23rd Annual Joint Conference of the IEEE Computer and Communications Societies, Hong Kong, China, 7–11 March 2004.

- Gu, Y.; Song, Q.; Ma, M.; Li, Y.; Zhou, Z. Using iBeacons for trajectory initialization and calibration in foot-mounted inertial pedestrian positioning systems. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Fetzer, T.; Ebner, F.; Deinzer, F.; Köping, L.; Grzegorzek, M. On Monte Carlo smoothing in multi sensor indoor localisation. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Ahmed, D.B.; Diaz, E.M.; Kaiser, S. Performance comparison of foot- and pocket-mounted inertial navigation systems. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Song, Q.; Gu, Y.; Ma, M.; Li, Y.; Zhou, Z. An anchor based framework for trajectory calibration in inertial pedestrian positioning systems. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Caruso, D.; Sanfourche, M.; Besnerais, G.L.; Vissière, D. Infrastructureless indoor navigation with an hybrid magneto-inertial and depth sensor system. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Ehrlich, C.R.; Blankenbach, J.; Sieprath, A. Towards a robust smartphone-based 2,5D pedestrian localization. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Galčík, F.; Opiela, M. Grid-based indoor localization using smartphones. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Zhang, W.; Hua, X.; Yu, K.; Qiu, W.; Zhang, S. Domain clustering based WiFi indoor positioning algorithm. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Li, W.; Wei, D.; Yuan, H.; Ouyang, G. A novel method of WiFi fingerprint positioning using spatial multi-points matching. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Mathisen, A.; Sørensen, S.K.; Stisen, A.; Blunck, H.; Grønbæk, K. A comparative analysis of Indoor WiFi Positioning at a large building complex. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Berkvens, R.; Weyn, M.; Peremans, H. Position error and entropy of probabilistic Wi-Fi fingerprinting in the UJIIndoorLoc dataset. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Liu, W.; Fu, X.; Deng, Z.; Xu, L.; Jiao, J. Smallest enclosing circle-based fingerprint clustering and modified-WKNN matching algorithm for indoor positioning. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Mizmizi, M.; Reggiani, L. Design of RSSI based fingerprinting with reduced quantization measures. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Guimarães, V.; Castro, L.; Carneiro, S.; Monteiro, M.; Rocha, T.; Barandas, M.; Machado, J.; Vasconcelos, M.; Gamboa, H.; Elias, D. A motion tracking solution for indoor localization using smartphones. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Ma, M.; Song, Q.; Li, Y.H.; Gu, Y.; Zhou, Z.M. A heading error estimation approach based on improved Quasi-static magnetic Field detection. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Skog, I.; Nilsson, J.O.; Händel, P. Pedestrian tracking using an IMU array. In Proceedings of the 2014 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 6–7 January 2014.

- Du, Y.; Arslan, T.; Juri, A. Camera-aided region-based magnetic field indoor positioning. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Laurijssen, D.; Truijen, S.; Saeys, W.; Daems, W.; Steckel, J. A flexible embedded hardware platform supporting low-cost human pose estimation. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Stancovici, A.; Micea, M.V.; Cretu, V. Cooperative positioning system for indoor surveillance applications. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Cioarga, R.D.; Micea, M.V.; Ciubotaru, B.; Chiuciudean, D.; Stanescu, D. CORE-TX: Collective Robotic environment—The timisoara experiment. In Proceedings of the Third Romanian-Hungarian Joint Symposium on Applied Computational Intelligence, SACI 2006, Timisoara, Romania, 25–26 May 2006.

- Ogiso, S.; Kawagishi, T.; Mizutani, K.; Wakatsuki, N.; Zempo, K. Effect of parameters of phase-modulated M-sequence signal on direction-of-arrival and localization error. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Pelka, M.; Hellbrück, H. Introduction, discussion and evaluation of recursive Bayesian filters for linear and nonlinear filtering problems in indoor localization. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Jiménez, A.R.; Seco, F. Comparing Decawave and Bespoon UWB location systems: Indoor/outdoor performance analysis. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Karciarz, J.; Swiatek, J.; Wilk, P. Using path matching filter for lightweight indoor location determination. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Dombois, M.; Döweling, S. A pipeline architecture for indoor location tracking. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Ettlinger, A.; Retscher, G. Positioning using ambient magnetic fields in combination with Wi-Fi and RFID. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Okuda, K.; Yeh, S.Y.; Wu, C.I.; Chang, K.H.; Chu, H.H. The GETA Sandals: A Footprint Location Tracking System; Strang, T., Linnhoff-Popien, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 120–131.

- Yeh, S.Y.; Chang, K.H.; Wu, C.I.; Chu, H.H.; Hsu, J.Y.J. GETA sandals: A footstep location tracking system. Pers. Ubiquit. Comput. 2007, 11, 451–463.

- Lorincz, K.; Welsh, M. MoteTrack: A Robust, Decentralized Approach to RF-Based Location Tracking; Strang, T., Linnhoff-Popien, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 63–82.

- Lorincz, K.; Welsh, M. MoteTrack: A robust, decentralized approach to RF-based location tracking. Pers. Ubiquit. Comput. 2007, 11, 489–503.

- Wu, C.J.; Quigley, A.; Harris-Birtill, D. Out of sight: A toolkit for tracking occluded human joint positions. Pers. Ubiquit. Comput. 2017, 21, 125–135.

- Guo, J.; Zhang, H.; Sun, Y.; Bie, R. Square-root unscented Kalman filtering-based localization and tracking in the Internet of Things. Pers. Ubiquit. Comput. 2014, 18, 987–996.

- Xiao, J.; Zhou, Z.; Yi, Y.; Ni, L.M. A survey on wireless indoor localization from the device perspective. ACM Comput. Surv. 2016, 49, 1–31.

- He, S.; Chan, S.H.G. Wi-Fi fingerprint-based indoor positioning: Recent advances and comparisons. IEEE Commun. Surv. Tutor. 2016, 18, 466–490.

- Hassan, N.U.; Naeem, A.; Pasha, M.A.; Jadoon, T.; Yuen, C. Indoor positioning using visible LED lights: A survey. ACM Comput. Surv. 2015, 48, 1–32.

- King, T.; Kopf, S.; Haenselmann, T.; Lubberger, C.; Effelsberg, W. CRAWDAD Dataset Mannheim/compass (v. 2008-04-11). Traceset: Fingerprint. 2008. Available online: http://crawdad.org/mannheim/compass/20080411/fingerprint (accessed on 12 October 2017).

- Rhee, I.; Shin, M.; Hong, S.; Lee, K.; Kim, S.; Chong, S. CRAWDAD Dataset Ncsu/Mobilitymodels (v. 2009-07-23). 2009. Available online: http://crawdad.org/ncsu/mobilitymodels/20090723 (accessed on 12 October 2017).

- Nahrstedt, K.; Vu, L. CRAWDAD Dataset Uiuc/Uim (v. 2012-01-24). 2012. Available online: http://crawdad.org/uiuc/uim/20120124 (accessed on 12 October 2017).

- Purohit, A.; Pan, S.; Chen, K.; Sun, Z.; Zhang, P. CRAWDAD Dataset Cmu/Supermarket (v. 2014-05-27). 2014. Available online: http://crawdad.org/cmu/supermarket/20140527 (accessed on 12 October 2017).

- Parasuraman, R.; Caccamo, S.; Baberg, F.; Ogren, P. CRAWDAD Dataset kth/rss (v. 2016-01-05). 2016. Available online: http://crawdad.org/kth/rss/20160105 (accessed on 12 October 2017).

- Lichman, M. UCI Machine Learning Repository. Irvine, CA: University of California, School of Information and Computer Science. Available online: http://archive.ics.uci.edu/ml (accessed on 12 October 2017).

- Torres-Sospedra, J.; Montoliu, R.; Usó, A.M.; Avariento, J.P.; Arnau, T.J.; Benedito-Bordonau, M.; Huerta, J. UJIIndoorLoc: A new multi-building and multi-floor database for WLAN fingerprint-based indoor localization problems. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation, IPIN 2014, Busan, Korea, 27–30 October 2014.

- Torres-Sospedra, J.; Moreira, A.J.C.; Knauth, S.; Berkvens, R.; Montoliu, R.; Belmonte, O.; Trilles, S.; Nicolau, M.J.; Meneses, F.; Costa, A.; et al. A realistic evaluation of indoor positioning systems based on Wi-Fi fingerprinting: The 2015 EvAAL-ETRI competition. JAISE 2017, 9, 263–279.

- Torres-Sospedra, J.; Rambla, D.; Montoliu, R.; Belmonte, O.; Huerta, J. UJIIndoorLoc-Mag: A new database for magnetic field-based localization problems. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015.

- Torres-Sospedra, J.; Montoliu, R.; Mendoza-Silva, G.M.; Belmonte, O.; Rambla, D.; Huerta, J. Providing databases for different indoor positioning technologies: Pros and cons of magnetic field and Wi-Fi based positioning. Mob. Inform. Syst. 2016, 2016, 1–22.

- Bacciu, D.; Barsocchi, P.; Chessa, S.; Gallicchio, C.; Micheli, A. An experimental characterization of reservoir computing in ambient assisted living applications. Neural Comput. Appl. 2014, 24, 1451–1464.

- Barsocchi, P.; Crivello, A.; Rosa, D.L.; Palumbo, F. A multisource and multivariate dataset for indoor localization methods based on WLAN and geo-magnetic field fingerprinting. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016.

- Shrestha, S.; Talvitie, J.; Lohan, E.S. Deconvolution-based indoor localization with WLAN signals and unknown access point locations. In Proceedings of the 2013 International Conference on Localization and GNSS (ICL-GNSS), Turin, Italy, 25–27 June 2013.

- Talvitie, J.; Lohan, E.S.; Renfors, M. The effect of coverage gaps and measurement inaccuracies in fingerprinting based indoor localization. In Proceedings of the International Conference on Localization and GNSS 2014 (ICL-GNSS 2014), Helsinki, Finland, 24–26 June 2014.

- Moayeri, N.; Ergin, M.O.; Lemic, F.; Handziski, V.; Wolisz, A. PerfLoc (Part 1): An extensive data repository for development of smartphone indoor localization apps. In Proceedings of the 27th IEEE Annual International Symposium on Personal, Indoor, and Mobile Radio Communications, PIMRC 2016, Valencia, Spain, 4–8 September 2016. [Google Scholar]

- Lymberopoulos, D.; Liu, J.; Yang, X.; Choudhury, R.R.; Handziski, V.; Sen, S. A realistic evaluation and comparison of indoor location technologies: Experiences and lessons learned. In Proceedings of the 14th International Conference on Information Processing in Sensor Networks, Seattle, WA, USA, 14–16 April 2015. [Google Scholar]

- 2016 Microsoft Indoor Localization Competition. Available online: https://www.microsoft.com/en-us/research/event/microsoft-indoor-localization-competition-ipsn-2016/ (accessed on 6 July 2017).

- Steinbauer, G.; Ferrein, A. 20 years of RoboCup. KI Künstliche Intell. 2016, 30, 221–224. [Google Scholar] [CrossRef]

- Osuka, K.; Murphy, R.; Schultz, A.C. USAR competitions for physically situated robots. IEEE Robot. Autom. Mag. 2002, 9, 26–33. [Google Scholar] [CrossRef]

- Davids, A. Urban search and rescue robots: From tragedy to technology. IEEE Intell. Syst. 2002, 17, 81–83. [Google Scholar]

- AUVSI SUAS Competition. Available online: http://www.auvsi-suas.org/competitions/2016/ (accessed on 4 November 2016).

- Van Haute, T.; De Poorter, E.; Lemic, F.; Handziski, V.; Wirström, N.; Voigt, T.; Wolisz, A.; Moerman, I. Platform for benchmarking of RF-based indoor localization solutions. IEEE Commun. Mag. 2015, 53, 126–133. [Google Scholar] [CrossRef]

- Pulkkinen, T.; Verwijnen, J. Evaluating indoor positioning errors. In Proceedings of the 2015 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Korea, 28–30 October 2015. [Google Scholar]

- Campos, R.S.; Lovisolo, L.; de Campos, M.L.R. Wi-Fi multi-floor indoor positioning considering architectural aspects and controlled computational complexity. Expert Syst. Appl. 2014, 41, 6211–6223. [Google Scholar] [CrossRef]

- Schauer, L.; Marcus, P.; Linnhoff-Popien, C. Towards feasible Wi-Fi based indoor tracking systems using probabilistic methods. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Barsocchi, P.; Girolami, M.; Potortì, F. Track 1: Smartphone Based (on-Site). 2016. Available online: http://www3.uah.es/ipin2016/docs/Track1_IPIN2016Competition.pdf (accessed on 12 October 2017).

- Lee, S.; Lim, J. Track 2: Pedestrian Dead Reckoning Positioning (on-Site). Available online: http://www3.uah.es/ipin2016/docs/Track2_IPIN2016Competition.pdf (accessed on 12 October 2017).

- Fetzer, T.; Ebner, F.; Deinzer, F.; Grzegorzek, M. Multi sensor indoor localisation (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Bai, Y.B.; Gu, T.; Hu, A. Integrating Wi-Fi and magnetic field for fingerprinting based indoor positioning system. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Herrera, J.C.A.; Ramos, A. Navix: Smartphone Based hybrid indoor positioning (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Guo, X.; Shao, W.; Zhao, F.; Wang, Q.; Li, D.; Luo, H. WiMag: Multimode fusion localization system based on Magnetic/WiFi/PDR. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Carrera, J.L.; Zhao, Z.; Braun, T.; Li, Z. A real-time indoor tracking system by fusing inertial sensor, radio signal and floor plan. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Zheng, L.; Wang, Y.; Peng, A.; Wu, Z.; Wen, S.; Lu, H.; Shi, H.; Zheng, H. A hand-held indoor positioning system based on smartphone (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Chesneau, C.I.; Hillion, M.; Prieur, C. Motion estimation of a rigid body with an EKF using magneto-inertial measurements. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Ju, H.; Park, S.Y.; Park, C.G. Foot mounted inertial navigation system using estimated velocity during the contact phase (work in progress). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Peng, A.; Zheng, L.; Zhou, W.; Yan, C.; Wang, Y.; Ruan, X.; Tang, B.; Shi, H.; Lu, H.; Zheng, H. Foot-mounted indoor pedestrian positioning system based on low-cost inertial sensors (work in progress). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Díez, L.E.; Bahillo, A. Pedestrian positioning from wrist-worn wearable devices (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Strozzi, N.; Parisi, F.; Ferrari, G. A multifloor hybrid inertial/barometric navigation system. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Xiao, Z.; Yang, Y.; Mu, R.; Liang, J. Accurate dual-foot micro-inertial navigation system with inter-agent ranging (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Liu, Y.; Guo, K.; Li, S. D-ZUPT based pedestrian dead-reckoning system using low cost IMU (work in progress). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Yang, J.; Lee, H.; Moessner, K. 3D pedestrian dead reckoning based on kinematic models in human activity by using multiple low-cost IMUs (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Torres-Sospedra, J.; Jiménez, A.R. Track 3: Smartphone Based (off-Site). 2016. Available online: http://www3.uah.es/ipin2016/docs/Track3_IPIN2016Competition.pdf (accessed on 12 October 2017).

- Torres-Sospedra, J.; Jiménez, A.R. Track 3: Detailed Description of Logfiles and Supplementary Materials. 2016. Available online: http://indoorloc.uji.es/ipin2016track3/files/Track3_LogfileDescription_and_SupplementaryMaterial.pdf (accessed on 12 October 2017).

- Beer, Y. WiFi fingerprinting using bayesian and hierarchical supervised machine learning assisted by GPS (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Ta, V.C.; Vaufreydaz, D.; Dao, T.K.; Castelli, E. Smartphone-based user location tracking in indoor environment. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Moreira, A.; Nicolau, M.J.; Costa, A.; Meneses, F. Indoor tracking from multidimensional sensor data. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Knauth, S.; Koukofikis, A. Smartphone positioning in large environments by sensor data fusion, particle filter and FCWC. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Rubio, M.C.P.; Losada-Gutiérrez, C.; Espinosa, F.; Macias-Guarasa, J. Track 4: Indoor Mobile Robot Positioning Competition Rules. 2016. Available online: http://www3.uah.es/ipin2016/docs/Track4_IPIN2016Competition.pdf (accessed on 12 October 2017).

- ASTi Automated Conveyance Web. 2016. Available online: http://asti.es/en/soluciones/transporte-automatico (accessed on 4 November 2016).

- Tiemann, J.; Eckermann, F.; Wietfeld, C. ATLAS—An open-source TDOA-based Ultra-wideband localization system. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Wang, Z.; Zhang, L.; Lin, F.; Huang, D. Aidloc: A high acoustic smartphone indoor localization system with NLOS identification and mitigation (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Ho, S.W. Indoor localization using visible light (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Katkov, M.; Huba, S. TOPOrtls TPM UWB wireless system technical description (only abstract). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

- Ureña, J.; Villadangos, J.M.; Gualda, D.; Pérez, M.C.; Hernández, Á.; Arango, J.F.; García, J.J.; García, J.C.; Jiménez, A.; Díaz, E. Technical description of Locate-US: An ultrasonic local positioning system based on encoded beacons. (Out of Competition) (work in progress). In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016. [Google Scholar]

| Ref. | Session | Base Tech | Evaluation Scenario | Evaluation Metrics |
|---|---|---|---|---|
| [29] | Hybrid IMU | Foot-mounted IMU; iBeacons; smartphone | 1 walking track of 5.4 km | Final error; trajectory |
| [30] | Hybrid IMU | Smartphone sensor fusion | 4-storey building (77 × 55 m); 4 walking tracks | Average error |
| [31] | Hybrid IMU | Xsens MTw inertial sensor | Multi-storey office building | Average error; root mean square error |
| [32] | Hybrid IMU | Data acquisition platform with IMU, UWB and BT | 2 rooms and 2 corridors; 1 walk | Position error; heading error; trajectory |
| [33] | Hybrid IMU | Visual-magneto-inertial system | Multiple experiments: 1 m² area; staircase; and motion capture room | Final drift; trajectory |
| [34] | Hybrid IMU | Google Nexus 5 sensor fusion | 1 walking track | Trajectory |
| [35] | Hybrid IMU | Low-end smartphone; low-end tablet | Different tests | Final Disc. Ratio; average error; hints |
| [36] | RSS | No device info | 2 rooms | Average error; root mean square error; max. error; CDF |
| [37] | RSS | Huawei Mate | Garage | Average error |
| [38] | RSS | 6 different Android devices | Large university hospital, >160,000 m² (3 floors) | Average error; error variants; Fréchet distance |
| [39] | RSS | Competition database; Wi-Fi fingerprinting | 3 multi-storey buildings | Average error; median error; 95th percentile; building & floor rate |
| [40] | RSS | Device not defined | China National Grand Theatre, 210 × 140 m | PDF; CDF |
| [41] | RSS | Simulation (RSS) | 8 × 8 m | Complex Scatter plot |
| [42] | RSS | 7 smartphone models (50 subjects) | Set of routes ≈50 km + ≈7 km | Average error; histogram |
| [43] | Magnetic | MIMU Platform [44] | 2 walks: office and mall | Average error; trajectory and ROC curve |
| [45] | Magnetic | Magnetic and camera: Project Tango and Google Nexus 5X | Noreen and Kenneth Murray Library; 2 different floors with strong and weak disturbances | Average error; visual results; matching rate |
| [46] | Ultrasounds | Senscomp 7000r and proposed HW platform | Not described | Average error by axis and angle |
| [47] | Ultrasounds | CORE-TX [48] | Indoor surveillance; small office with 6 rooms | Abs. error |
| [49] | Ultrasounds | Acoustic beacons | Small area 3 × 3 | Average error; trajectory |
| [50] | UWB | IMU; UWB; and combination | 20 × 20 m | Average error; trajectory |
| [51] | UWB | BeSpoon and Decawave EVK1000 | 12.4 × 9.6 m | Average error; median error; 90th percentile; CDF; trajectory; histograms |
| [52] | S.C.Sensor | Samsung smartphones and proprietary pedometer | 3 buildings | Average error |
| [53] | Hybrid Syst. | Sony Xperia Z3 Compact; Samsung Galaxy S5 | Office space following an open space concept (approx. 2600 m²) | Average error; Percentile m |
| [54] | RFID | Low-cost IMU (Xsens MTi); Samsung Galaxy S2 | 1 hall (10 × 7.5 m); 1 corridor (50 m) | Average error; avg. lateral deviation |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Potortì, F.; Park, S.; Jiménez Ruiz, A.R.; Barsocchi, P.; Girolami, M.; Crivello, A.; Lee, S.Y.; Lim, J.H.; Torres-Sospedra, J.; Seco, F.; et al. Comparing the Performance of Indoor Localization Systems through the EvAAL Framework. Sensors 2017, 17, 2327. https://doi.org/10.3390/s17102327