Computer Vision-Based Structural Displacement Measurement Robust to Light-Induced Image Degradation for In-Service Bridges

Abstract

1. Introduction

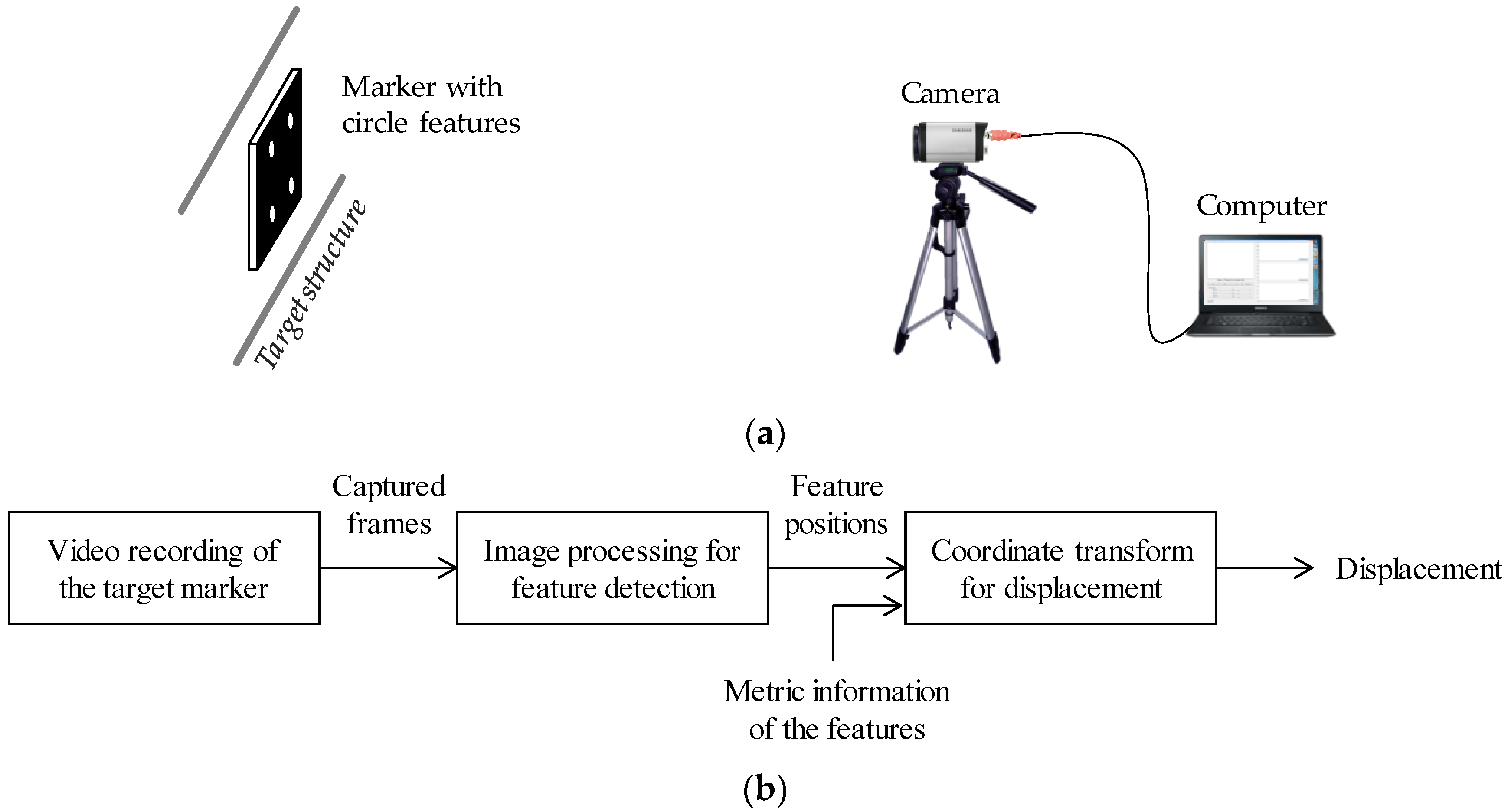

2. Computer Vision-Based Displacement Measurement

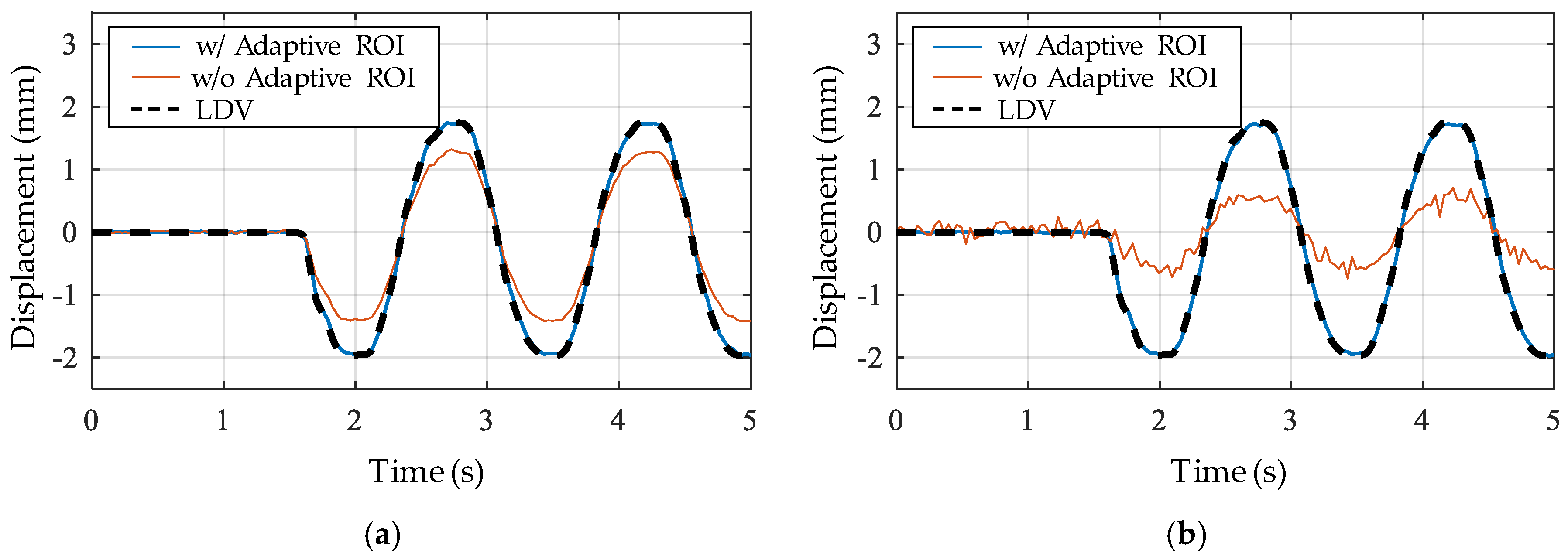

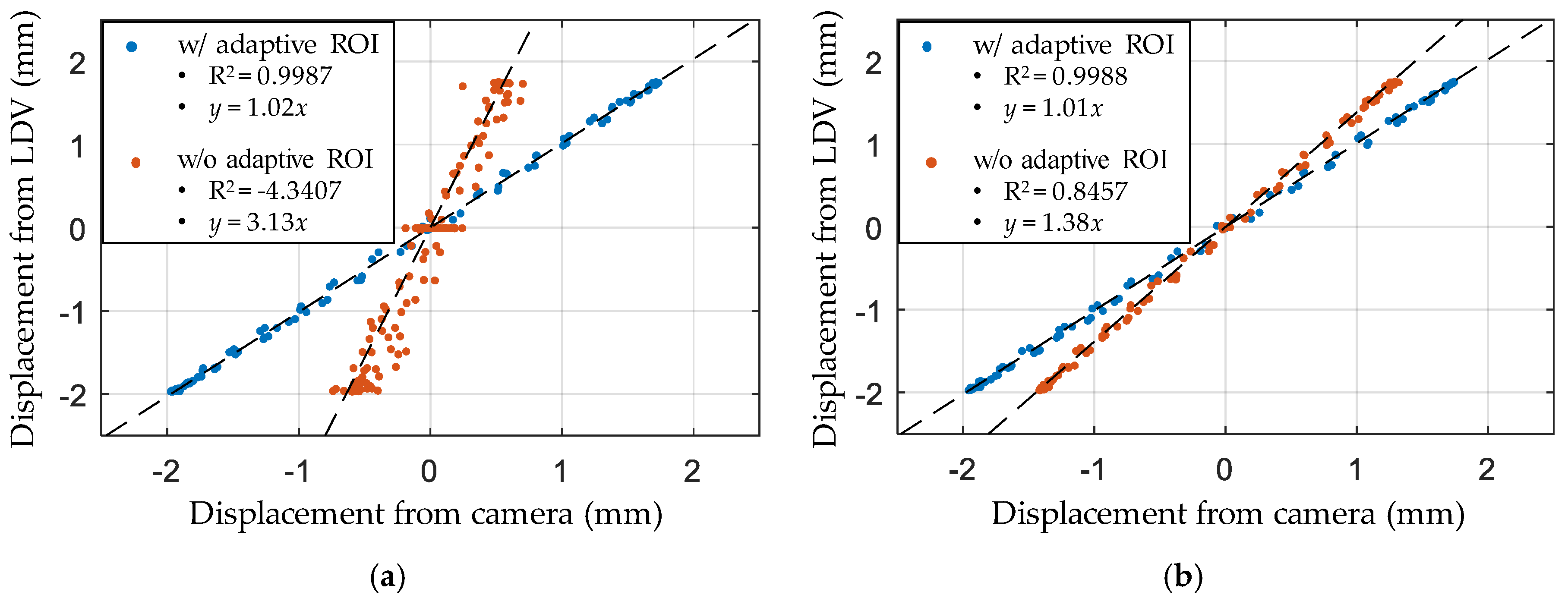

2.1. Overview

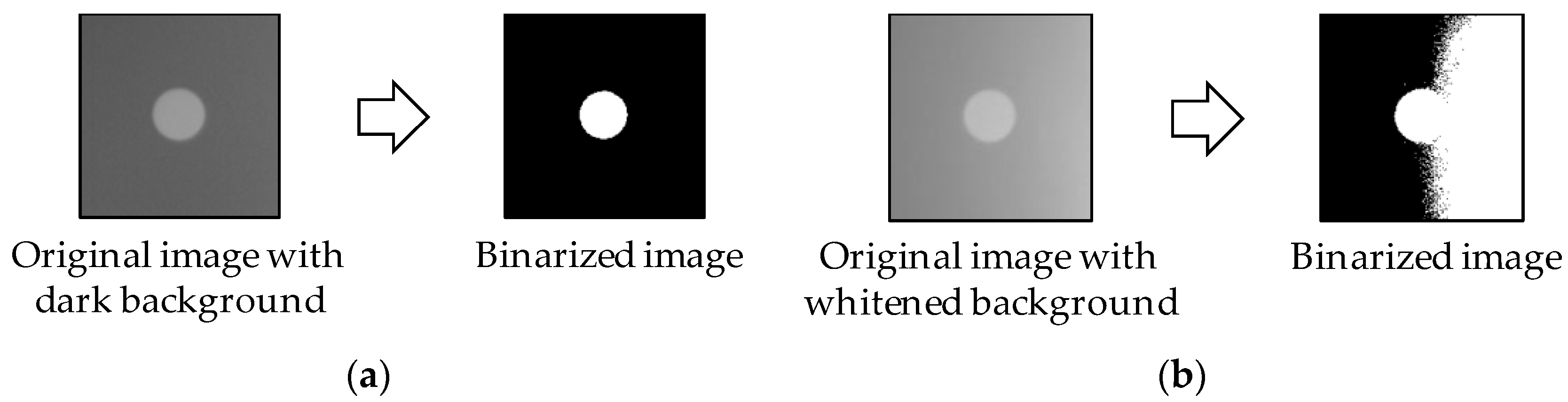

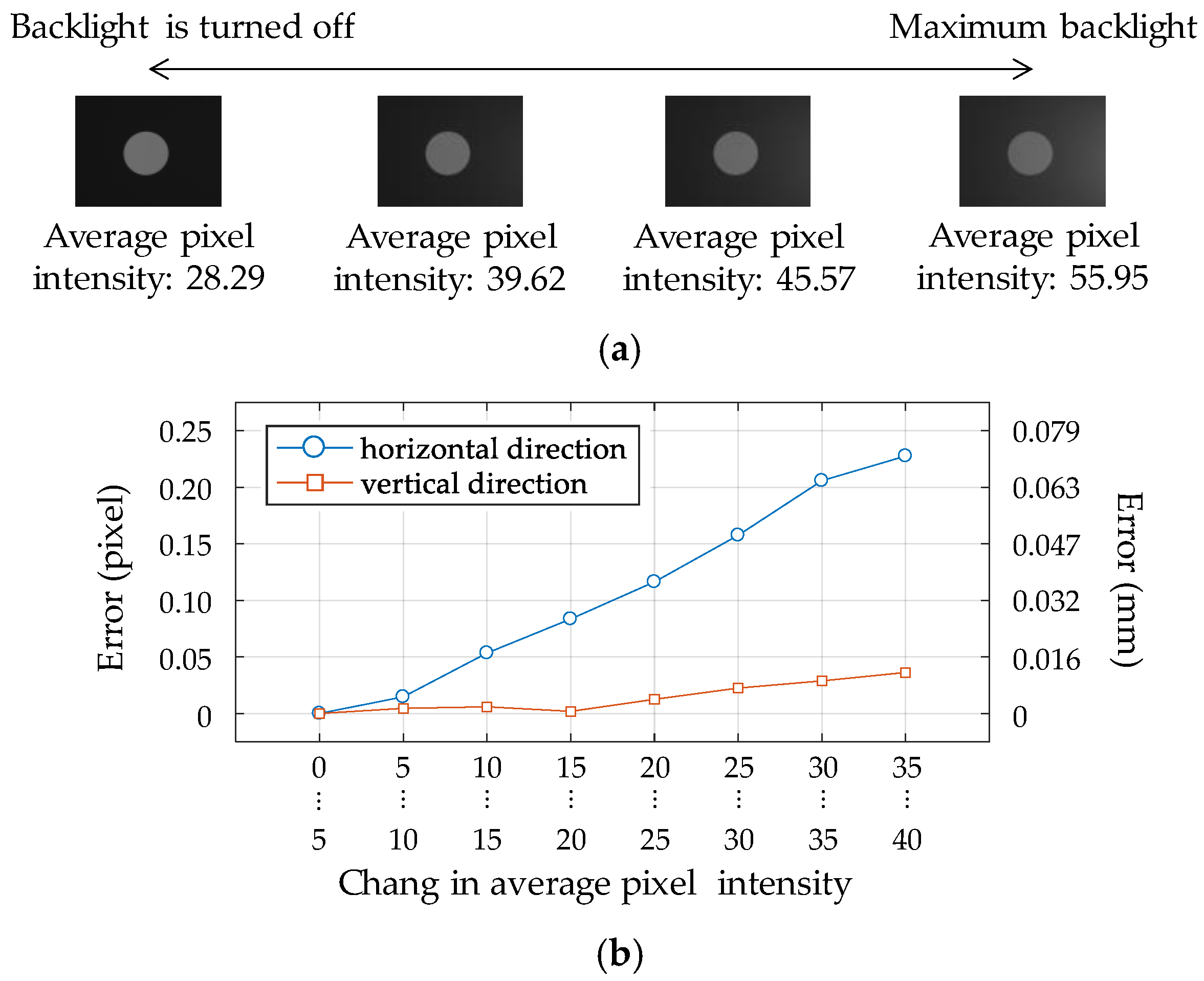

2.2. Light-Induced Image Degradation

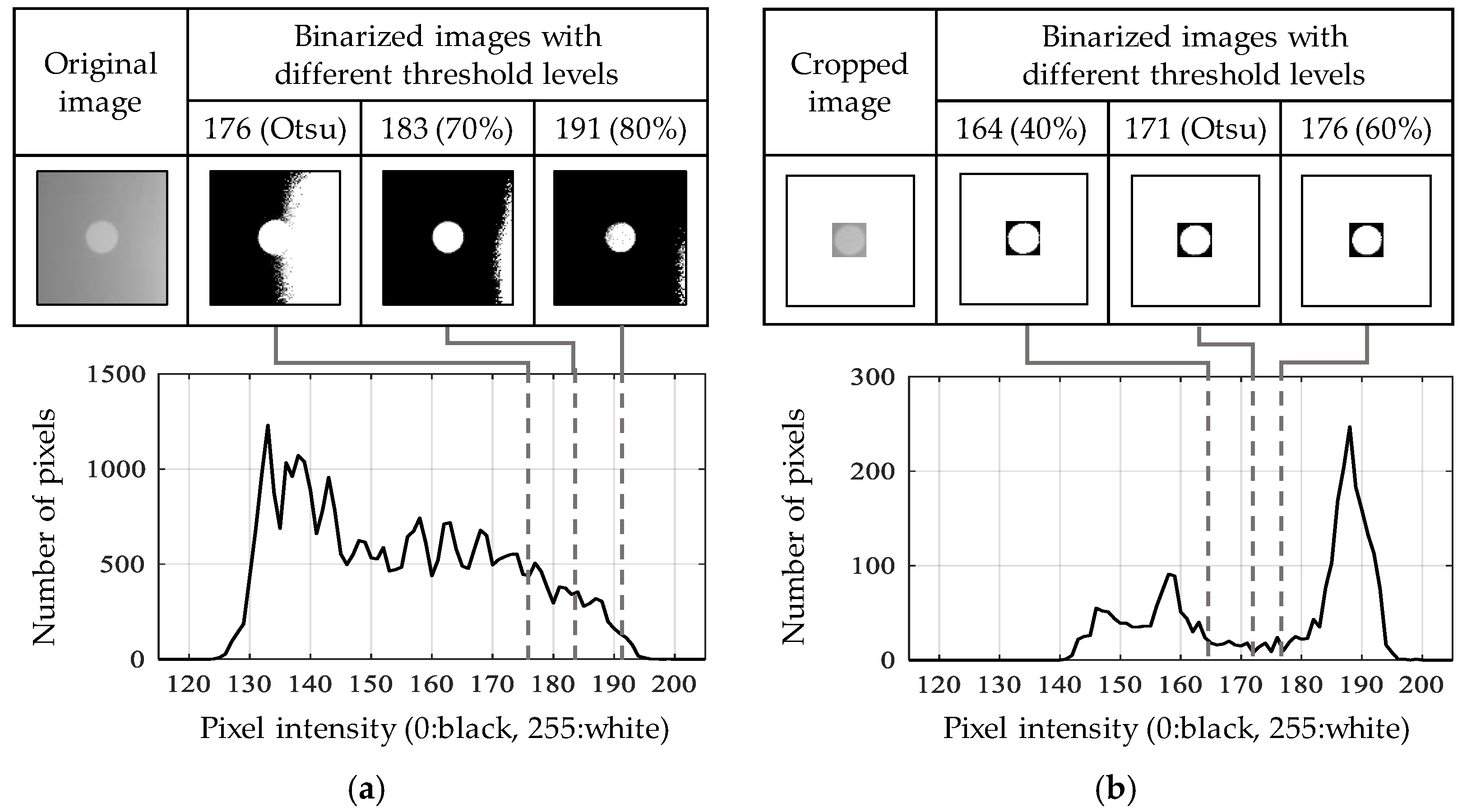

3. Displacement Measurement Using an Adaptive ROI

3.1. Adaptive ROI Algorithm

3.2. Uncertainty Analysis

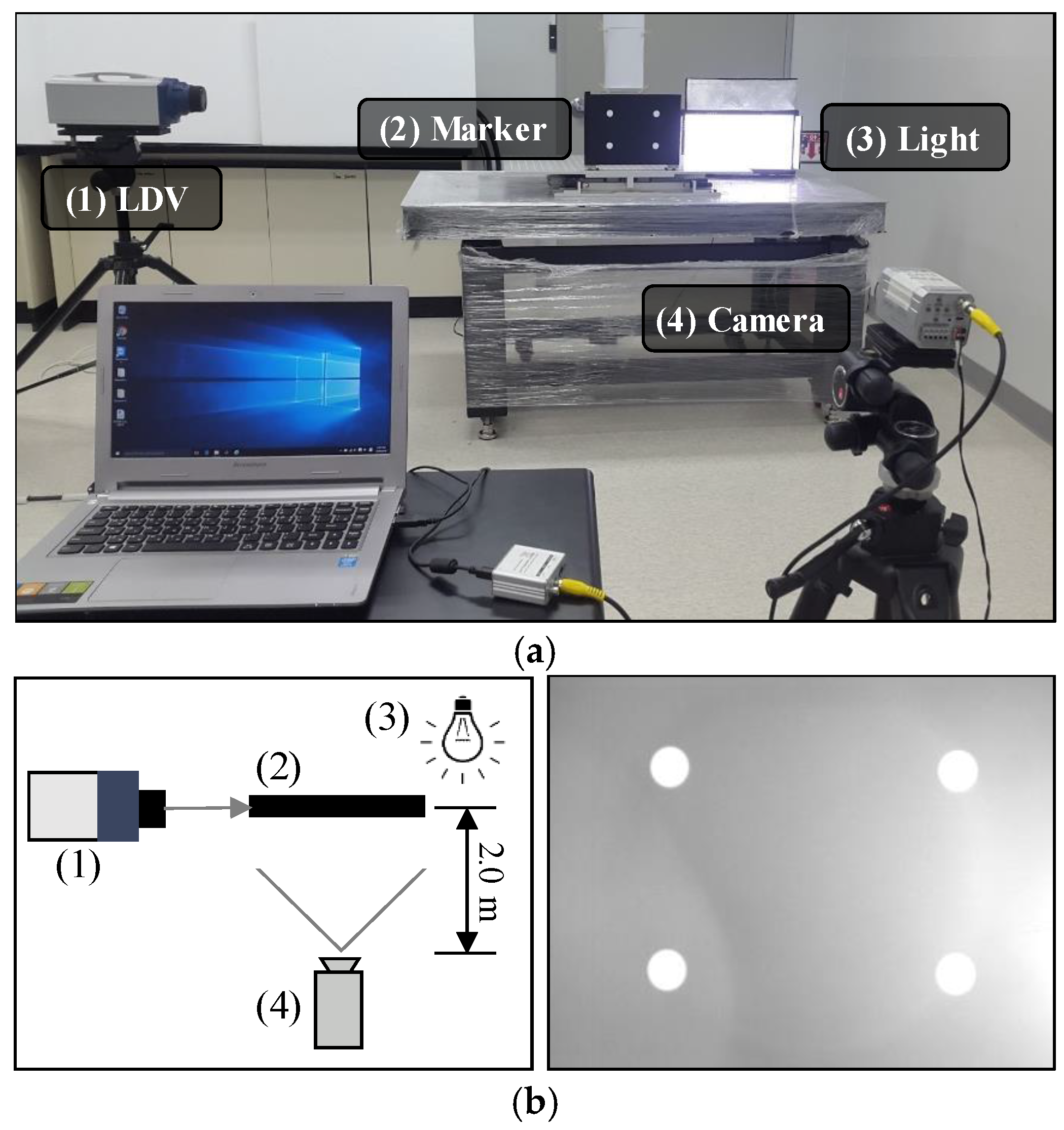

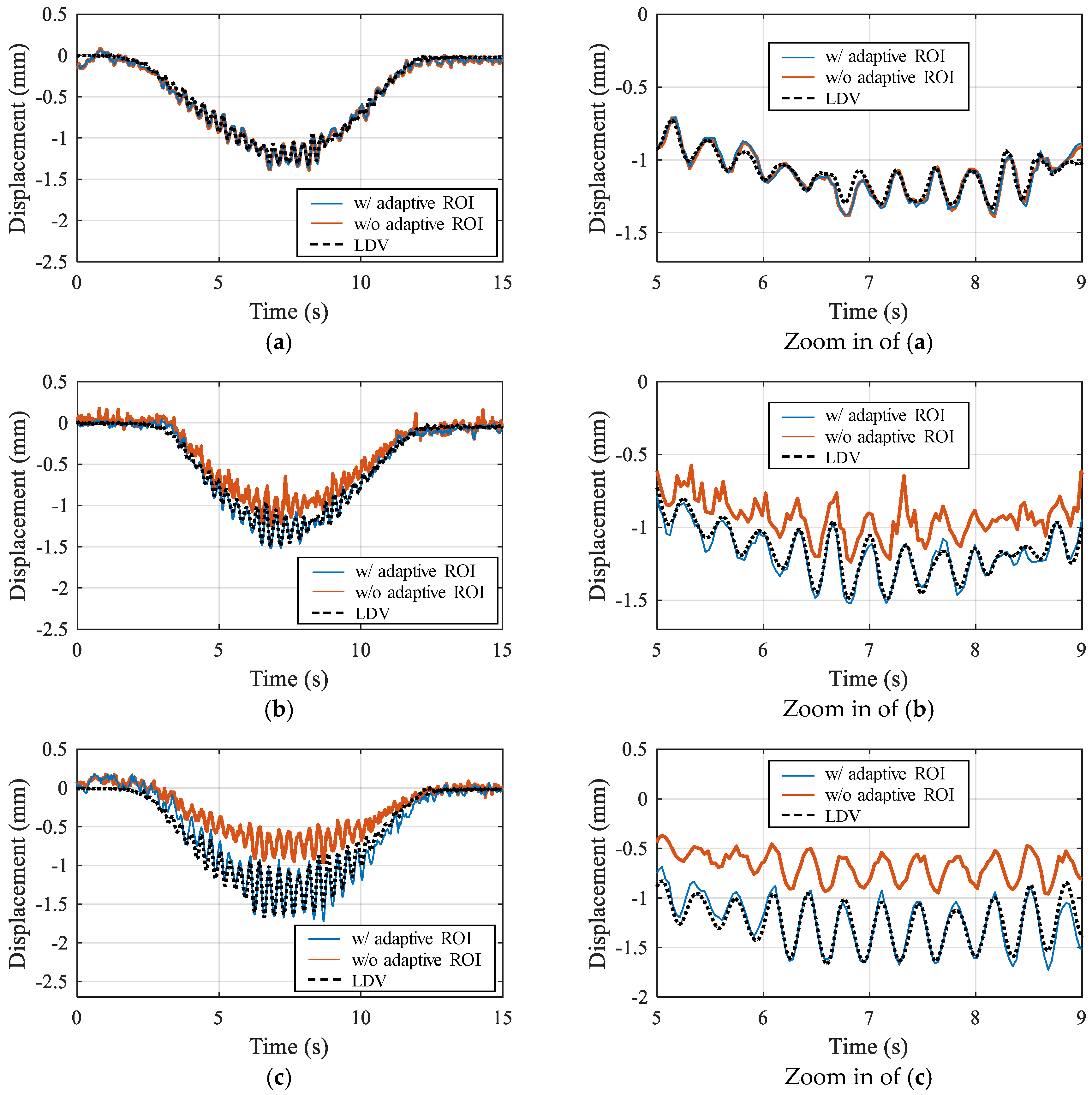

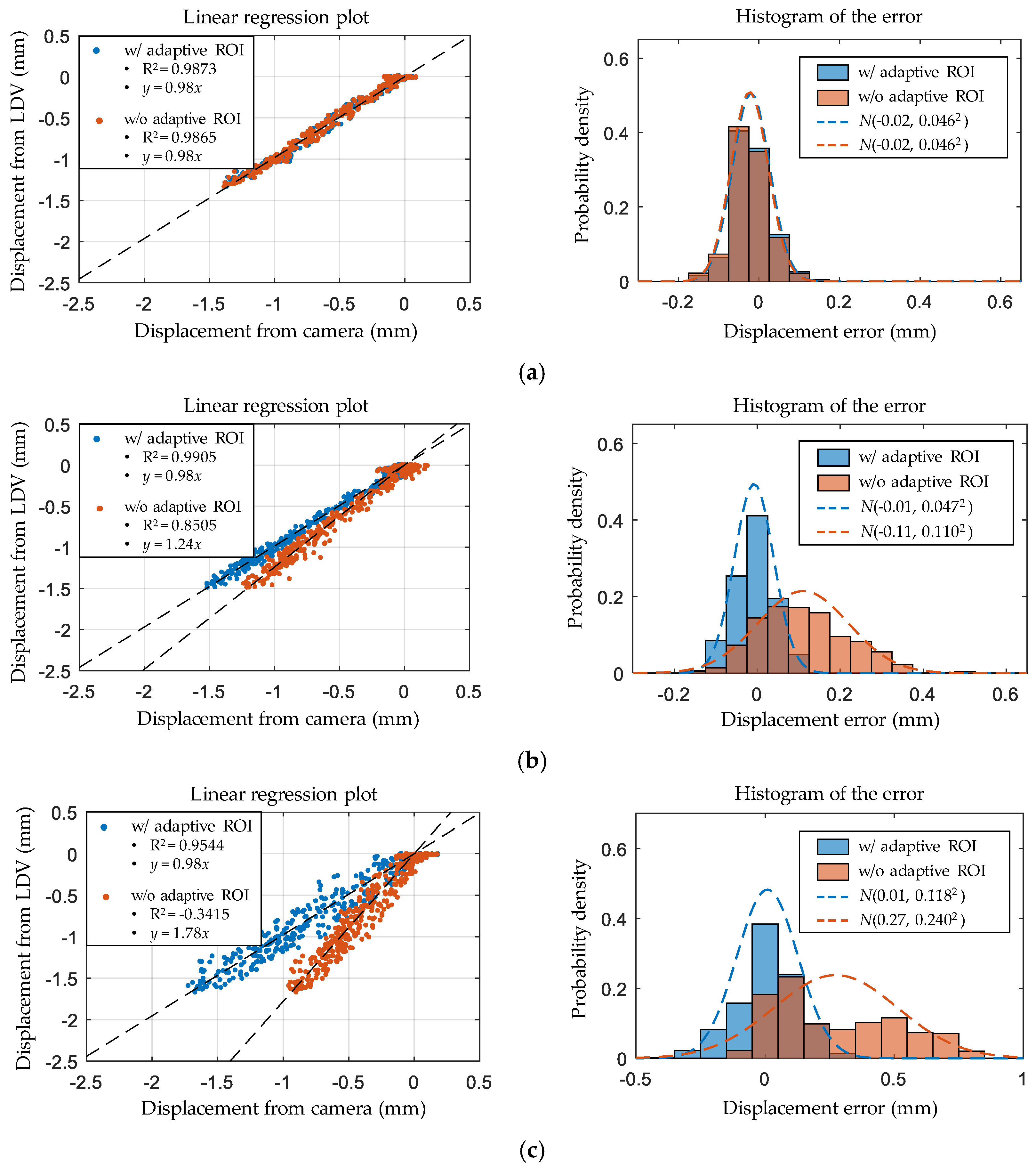

4. Experimental Validation: Laboratory-Scale

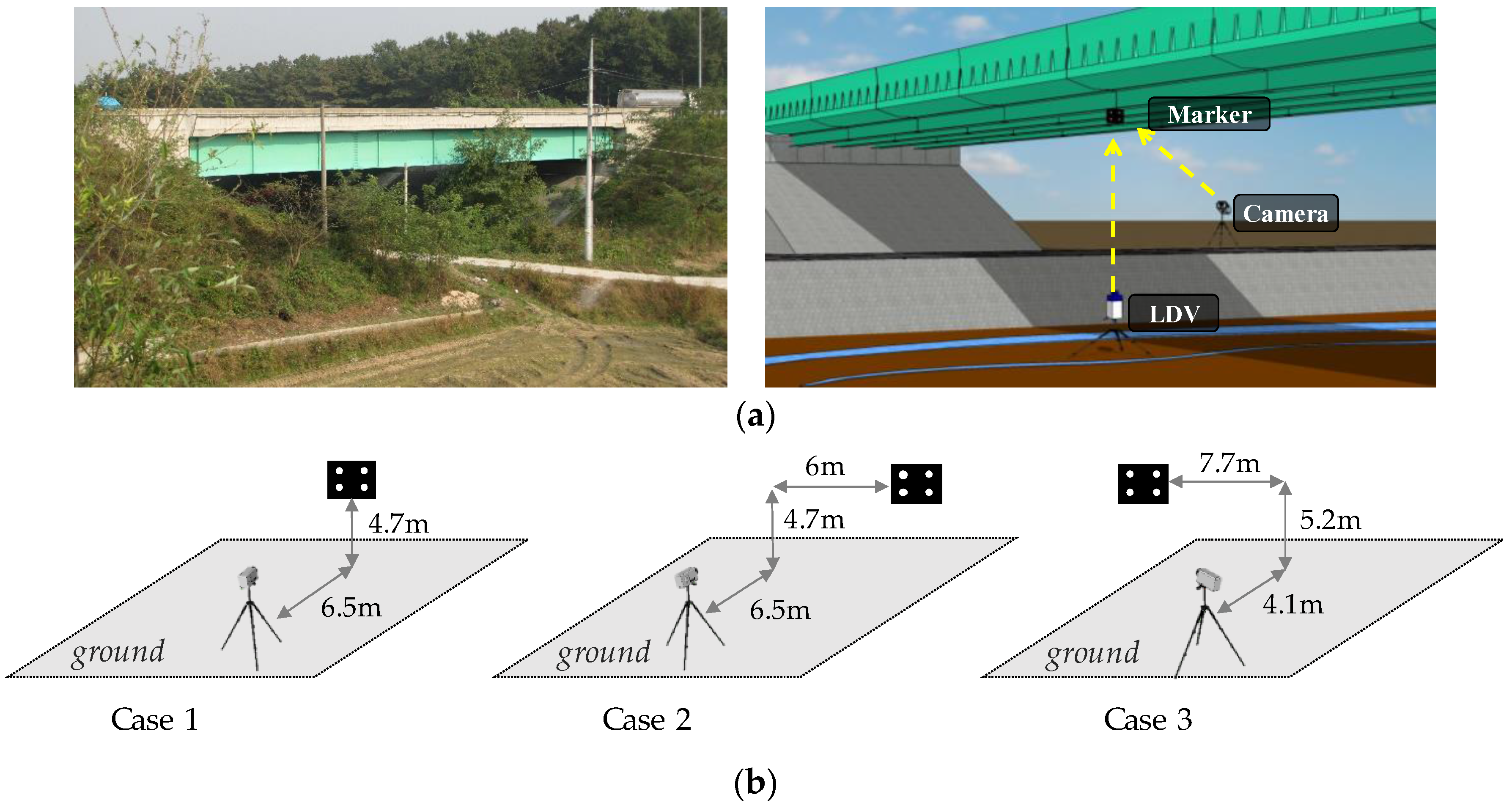

5. Field Validation

6. Conclusions

Conflicts of Interest

Acknowledgments

Author Contributions


| Parts | Model | Features |
|---|---|---|
| Marker | User-defined | Four white circles on a black background; horizontal interval: 150 mm; vertical interval: 100 mm; circle radius: 10 mm |
| Camera | CNB-A1263NL | NTSC output interface; ×22 optical zoom |
| Computer | LG-A510 | 1.73 GHz Intel Core i7 CPU; 4 GB DDR3 RAM |
| LDV | RSV-150 | Displacement resolution: 0.3 μm |
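Because the marker geometry is known (150 mm horizontal and 100 mm vertical intervals between the four circles), pixel distances between circle centroids can be converted to a millimetre-per-pixel scale. The sketch below illustrates that conversion only. It assumes the circle centroids have already been detected, that they are supplied in the order top-left, top-right, bottom-left, bottom-right, and that the image plane is parallel to the marker plane. The function names `scale_factors` and `displacement_mm` are hypothetical and do not describe the authors' implementation.

```python
import math
from statistics import mean

# Marker geometry taken from the hardware table (mm).
H_INTERVAL_MM = 150.0  # horizontal interval between circle centres
V_INTERVAL_MM = 100.0  # vertical interval between circle centres


def scale_factors(centroids):
    """Return (sx, sy), the mm-per-pixel scale in each image direction.

    `centroids` holds four (u, v) pixel coordinates in the assumed order
    top-left, top-right, bottom-left, bottom-right. The image plane is
    assumed parallel to the marker plane (no perspective correction).
    """
    tl, tr, bl, br = centroids
    # Average the two horizontal and the two vertical pixel distances.
    du = mean([math.dist(tl, tr), math.dist(bl, br)])
    dv = mean([math.dist(tl, bl), math.dist(tr, br)])
    return H_INTERVAL_MM / du, V_INTERVAL_MM / dv


def displacement_mm(ref_centroids, cur_centroids, sx, sy):
    """Shift of the marker centre between a reference and a current frame.

    Returned in mm; v grows downward in image coordinates, so a positive
    vertical value means the marker moved down in the image.
    """
    u0 = mean(c[0] for c in ref_centroids)
    v0 = mean(c[1] for c in ref_centroids)
    u1 = mean(c[0] for c in cur_centroids)
    v1 = mean(c[1] for c in cur_centroids)
    return (u1 - u0) * sx, (v1 - v0) * sy


if __name__ == "__main__":
    # Illustrative pixel coordinates, not measured data.
    ref = [(100.0, 200.0), (400.0, 200.0), (100.0, 400.0), (400.0, 400.0)]
    cur = [(u, v + 3.0) for u, v in ref]
    sx, sy = scale_factors(ref)
    print(sx, sy)                             # 0.5 0.5
    print(displacement_mm(ref, cur, sx, sy))  # (0.0, 1.5)
```

Averaging the two parallel spacings in each direction is a simple way to damp centroid noise; a camera that views the marker obliquely would instead need a full homography rather than two independent scale factors.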

| Case | Feature detection | Emax (mm) | Esd |
|---|---|---|---|
| Case 1 | Without adaptive ROI | 0.0549 | 0.0185 |
| Case 1 | With adaptive ROI | 0.0433 | 0.0126 |
| Case 2 | Without adaptive ROI | 0.2518 | 0.1736 |
| Case 2 | With adaptive ROI | 0.0314 | 0.0127 |
| Case 3 | Without adaptive ROI | 0.7110 | 0.4081 |
| Case 3 | With adaptive ROI | 0.0565 | 0.0439 |
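The table compares vision-based displacements against a reference through two error statistics. The exact definitions of Emax and Esd are not stated in this excerpt, so the sketch below assumes Emax is the maximum absolute difference between the vision and reference (e.g., LDV) series and Esd is the population standard deviation of that difference. It also computes the relative Emax reduction from the table values. The helpers `error_metrics` and `reduction_pct` are hypothetical names chosen for illustration.

```python
import math


def error_metrics(vision_mm, reference_mm):
    """Return (Emax, Esd) of the error between two displacement series.

    Assumed definitions: Emax is the largest absolute difference and Esd
    is the population standard deviation of the difference series.
    """
    if not vision_mm or len(vision_mm) != len(reference_mm):
        raise ValueError("series must be non-empty and of equal length")
    err = [v - r for v, r in zip(vision_mm, reference_mm)]
    e_max = max(abs(e) for e in err)
    mu = sum(err) / len(err)
    e_sd = math.sqrt(sum((e - mu) ** 2 for e in err) / len(err))
    return e_max, e_sd


def reduction_pct(without_roi, with_roi):
    """Relative reduction (%) of an error metric when the adaptive ROI is used."""
    return 100.0 * (without_roi - with_roi) / without_roi


if __name__ == "__main__":
    # Emax values (mm) copied from the results table.
    for case, before, after in [("Case 1", 0.0549, 0.0433),
                                ("Case 2", 0.2518, 0.0314),
                                ("Case 3", 0.7110, 0.0565)]:
        print(case, f"{reduction_pct(before, after):.1f}%")
```

Run on the tabulated values, this prints Emax reductions of 21.1% (Case 1), 87.5% (Case 2), and 92.1% (Case 3).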

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, J.; Lee, K.-C.; Cho, S.; Sim, S.-H. Computer Vision-Based Structural Displacement Measurement Robust to Light-Induced Image Degradation for In-Service Bridges. Sensors 2017, 17, 2317. https://doi.org/10.3390/s17102317
