1. Introduction

The applications of terrestrial laser scanning (TLS) are continuously growing in areas such as city modeling, heritage documentation, manufacturing, and terrain surveying. The primary purpose of terrestrial laser scanning is to generate a complete surface model of the target object. However, because the coverage of a single scan is limited and occlusions exist, a series of scans from different views is generally necessary. Each of these scans has its own coordinate frame. To obtain a complete model, point clouds from multiple scans must be transformed into a common frame. This process is called registration.

Standard methods for registration include artificial targets and semi-automatic registration. There are many types of artificial targets, such as spheres [1] and planar targets [2], and such targets generally have special shapes or reflective features. Once the targets are identified, transformation parameters can be calculated from corresponding targets in different scans. One drawback of this method is that arranging the targets in the scene takes considerable additional time. In some extreme conditions, it is impossible to place any targets. Additionally, placing artificial targets inevitably causes occlusions and disrupts the completeness of the data. Semi-automatic registration, in which corresponding points are picked manually to calculate the transformation parameters, is also commonly used; it is broadly applicable and has been implemented in many commercial and open-source software packages (e.g., Riscan, Cyclone and CloudCompare). Nevertheless, it can require much time and manpower when there are many scans.

To avoid manual intervention, much research has focused on automatic registration. Generally, automatic registration comprises two stages: coarse registration, which roughly aligns scans and produces good initial pose parameters, and fine registration, which obtains the final registration result with high precision. The most widely used method for fine registration is the Iterative Closest Point (ICP) algorithm introduced by Besl and McKay [3] and Chen and Medioni [4]. Transformation parameters between two scans are estimated iteratively until the sum of the squared Euclidean distances between corresponding points converges to a minimum. Variants and optimizations have since been proposed in various contexts, such as the mathematical framework [5,6], the correspondence metric [7,8], and correspondence selection and weighting [9]. The drawback is that, without a good prior alignment, the distances will most likely converge to a local minimum. Therefore, methods that roughly align the two original point clouds in the coarse registration stage are important to the ICP algorithm.
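As a compact reminder of what ICP minimizes, the point-to-point form of the objective can be written as follows. The notation is an assumption introduced here for illustration and is not taken from the cited works: $p_i$ are the $N$ points of the source scan, $q_i$ is the point of the target scan currently closest to the transformed $p_i$, and $(R, t)$ are the rotation and translation being estimated.

$$
(R^{*}, t^{*}) = \arg\min_{R,\,t} \sum_{i=1}^{N} \left\lVert R\,p_i + t - q_i \right\rVert^{2}
$$

Each iteration re-selects the correspondences $q_i$ under the current $(R, t)$ and then re-solves this least-squares problem. Because the correspondences depend on the current pose, a poor starting pose can lock the iteration into a local minimum, which is why coarse registration matters.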

A general line of thought for coarse registration is based on distinctive spatial elements, such as points, lines or planes. Those spatial elements generally have unique features, which differ from most others and can be extracted for correspondence searching. The Scale Invariant Feature Transform (SIFT) [10] is one of the most widely used point features and can be classified as a 2D key point. Bendels et al. [11] introduced SIFT for the registration of TLS points, combining SIFT features from camera images with surface elements from range images. Barnea and Filin [12] proposed an autonomous model based on SIFT key points of panoramic images. This method addresses the registration of multiple scans. Application of the SIFT feature to reflectance images was introduced by Böhm and Becker [13]. False matches caused by symmetry and self-similarity are filtered by checking the geometric relationship in a RANSAC filtering scheme. The SIFT feature was also used on reflectance images by Wang and Brenner [14] and Kang et al. [15,16]. Weinmann et al. [17] extracted characteristic feature points from reflectance images based on SIFT features and projected them into 3D space to calculate transformation parameters. This algorithm can achieve high accuracy without fine registration by using 3D-to-3D geometric constraints. Besides the SIFT descriptor, other image features have also been used, such as the Moravec operator [18]. The methods mentioned above mainly rely on mature image processing algorithms, which are efficient and convenient, but they generally require large overlap areas to ensure enough correspondences. To relax the overlap requirement and adapt to the case of minimal overlap, Renaudin et al. utilized linear features from photogrammetric and TLS datasets for the registration of multiple scans, with the coregistration of image and point cloud as a byproduct [19]. Similarly, photogrammetric linear and planar features were used for scan registration by Canaz and Habib [20] to avoid the requirement of large overlap.

In situations of strong viewpoint changes or poor intensity resolution, a method using 2D features is prone to failure, whereas 3D features perform more robustly. Thus, many studies have focused on 3D point features for registration. Those methods extract 3D feature point sets and identify corresponding points to recover the transformation between two scans. Gelfand et al. [21] proposed an integral volume descriptor to detect feature points and matched those points using an algorithm called branch-and-bound correspondence search. Theiler et al. [22] extracted Difference-of-Gaussian (DoG) and 3D Harris [23] key points from voxel-filtered datasets as input to the 4-Points Congruent Sets algorithm [24] to achieve coarse registration. Rusu et al. [25] estimated a set of 16D features called Point Feature Histograms (PFH), which are robust to scale and noise and provide good starting points for ICP. Then, Rusu et al. [26] applied some optimizations to PFH and proposed Fast Point Feature Histograms (FPFH), reducing the computation time dramatically. Examples of point features also include the normal vector [27], the distance between the target point and the center of neighboring points [28], 2.5D SIFT [29], curvature [30] and the rotational projection statistics feature [31].

Lines and curves have also served as matching primitives. Stamos and Leordeanu extracted the intersection lines of neighboring planes as primitives and calculated the transformation parameters with at least two line pairs [32]. Yao et al. presented a common framework for the automatic registration of two scans with linear or planar features, in which orientation angles and distances were used to find candidate matches [33]. Yang and Zang used curves as matching primitives to find the initial transformation for point clouds with freeform surfaces, such as statues and artifacts [34]. Planes are also used for coarse registration in many studies. Theiler and Schindler [35] used intersecting planes to generate a set of virtual tie points, which are described by properties of the corresponding parent planes. Tie point matching is then guided by those descriptors. In the work of Dold and Brenner [36], planar patches were described by features including area, boundary length, bounding box and mean intensity value and matched with the help of image information. In this approach, the features of planar patches are sensitive to density and occlusions; thus, Brenner et al. [37] proposed a more robust method with planar patches, in which plane triples were used instead of a single patch in the matching process based on a sensible criterion. Pu et al. [38] combined the semantic features of planar patches and GPS position to derive the mathematical formulation of the transformation parameters. Semantic information was also used in [39], in which Yang et al. detected feature points based on semantic features, and matching was performed by searching for corresponding triangles constructed and filtered from the feature points. Kelbe et al. [40] calculated the transformation parameters for forest TLS data based on the results of tree detection, which can be obtained from previous work [41,42]. Some other geometric elements are also used in registration, such as salient directions [43], cylindrical and polygonal objects [44], fitted objects in industrial environments [7] and other fitted geometric primitives [45]. To identify a good feature combination, Urban and Weinmann [46] presented a framework to evaluate different detector-descriptor combinations, in which five approaches are involved.

Another line of thought depends on external sensors (e.g., GPS/IMU) to record the position and orientation of each scan, so that point clouds from different scans can be registered directly. This method is often used in mobile laser scanning [47,48] but can also be used in terrestrial laser scanning [38,49]. External sensors are helpful for the registration of terrestrial point clouds, although their high cost limits the application of this method.

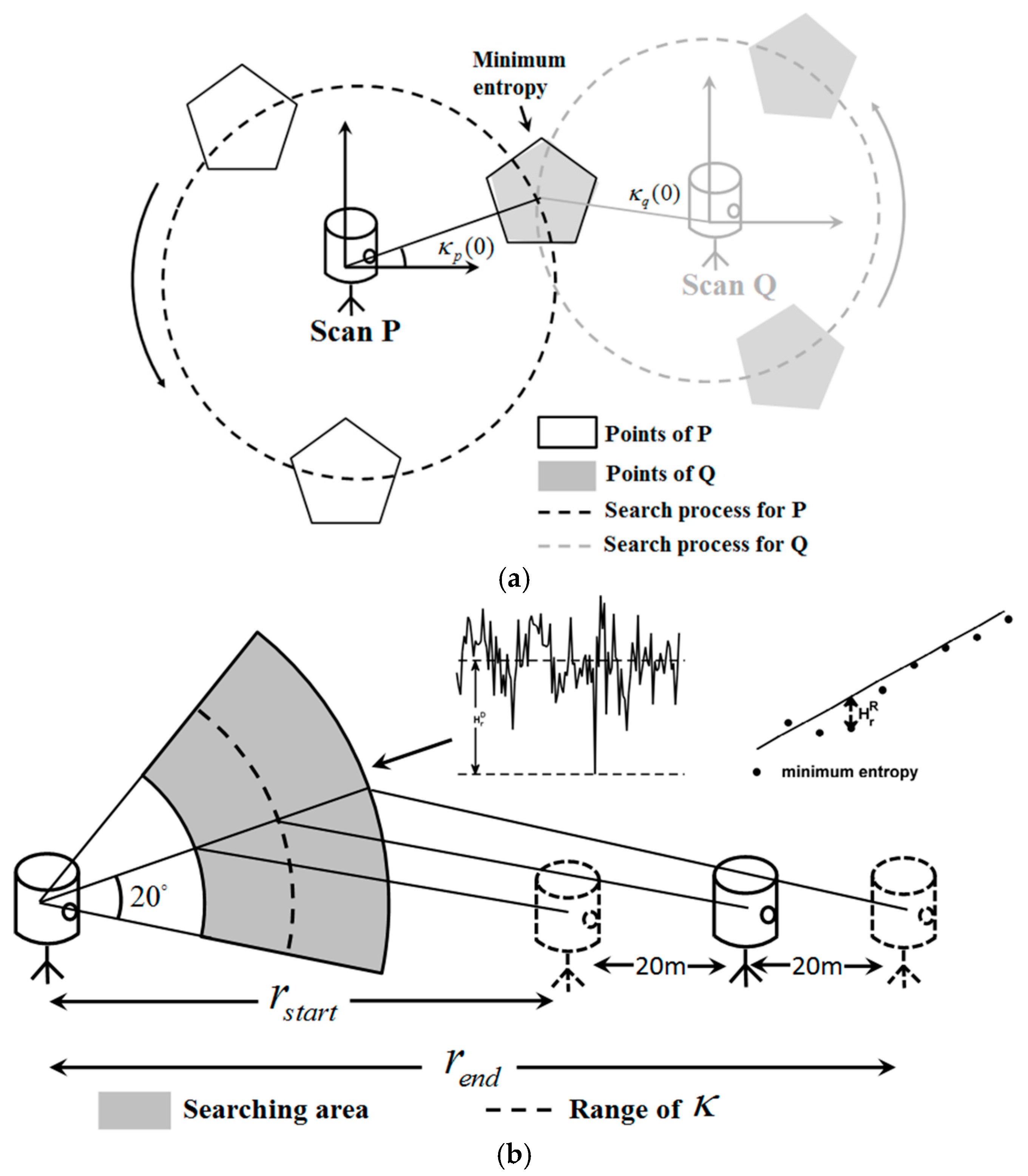

The registration techniques described above suggest that most methods rely on detailed information extracted from point clouds to achieve the registration task. In complex scenes, feature extraction and matching are time-consuming and prone to failure if too much symmetry, self-similarity or occlusion exists. Because external sensors are quite helpful for point cloud registration, this paper presents a novel method for the automatic coarse registration of leveled point clouds that combines a terrestrial laser scanner with a smartphone, which is low-cost compared with professional sensors. This method works without synchronization between the scanner and the smartphone, and it sets detailed features aside to register terrestrial point clouds from a macroscopic perspective. Scanner positions are roughly measured by the smartphone GPS, and the distance between neighboring scanner positions is used as a translation constraint. The distribution coherence of all points from two scans is measured by 2D distribution entropy and used to identify the optimal transformation parameters. The main contributions of this paper are as follows:

combining the terrestrial laser scanner with smartphone for coarse registration;

using 2D projection entropy to measure the distribution coherence between two scans; and

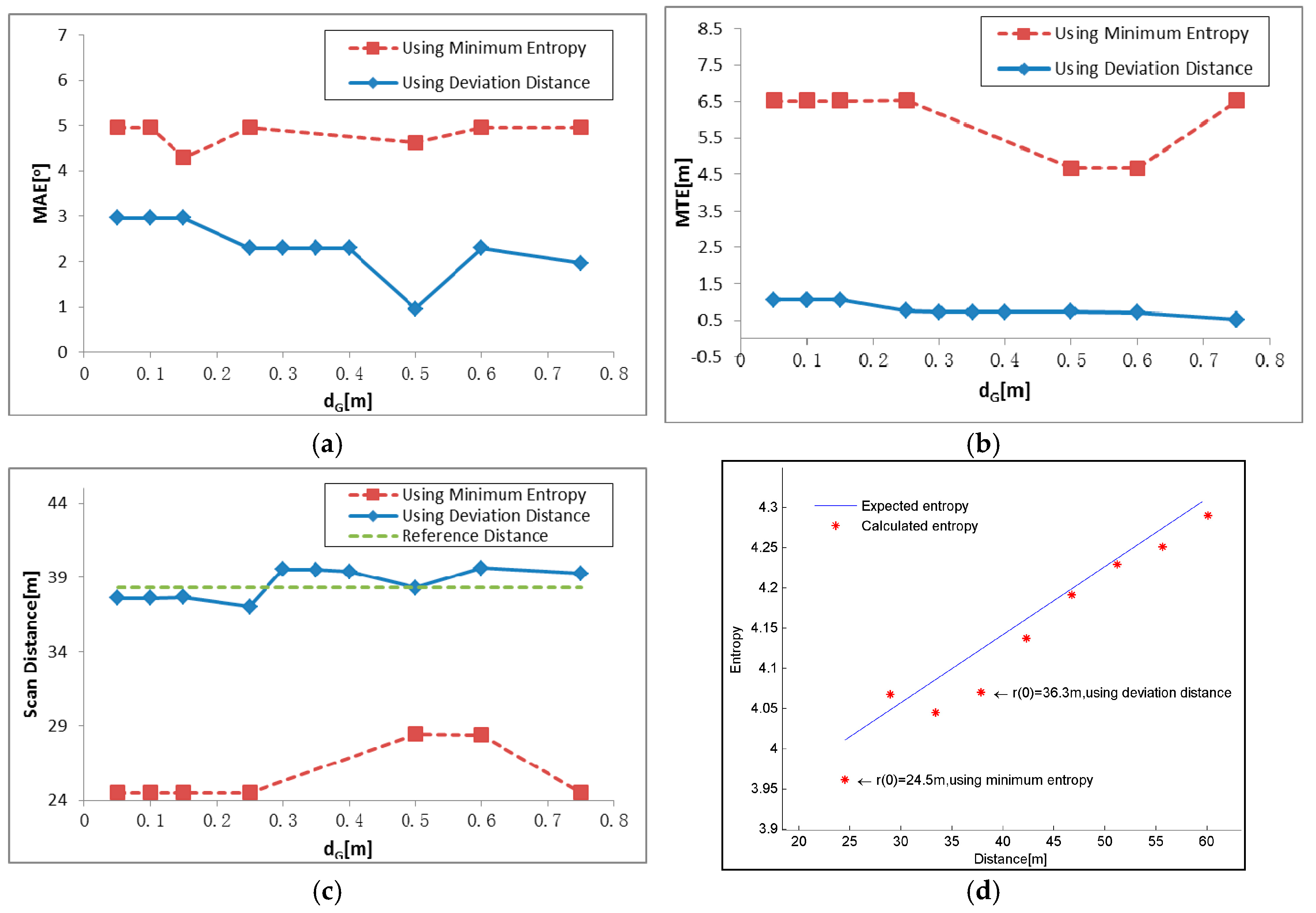

presenting the Iterative Minimum Entropy (IME) algorithm to correct initial transformation parameters and reduce the effect of positioning error from the smartphone GPS.
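To make the idea of a 2D projection entropy concrete, the following is a minimal sketch. It assumes that the entropy is the Shannon entropy of the occupancy histogram obtained by projecting points onto a regular grid in the XY plane; the exact definition used by the method is not reproduced in this section, so the function name `projection_entropy_2d` and the parameter `cell_size` are hypothetical.

```python
import math
from collections import Counter


def projection_entropy_2d(points, cell_size):
    """Shannon entropy (in nats) of the XY occupancy histogram of a point set.

    Assumption: points are (x, y, z) tuples and the grid is axis-aligned
    with square cells of side `cell_size`. This is an illustrative
    definition, not necessarily the one used in the paper.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    # Count how many points fall into each grid cell of the XY projection.
    counts = Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size))
        for x, y, *_ in points
    )
    total = sum(counts.values())
    # H = -sum(p * ln p) over occupied cells.
    return -sum((n / total) * math.log(n / total) for n in counts.values())


# A synthetic "wall" seen by two scans: once well aligned, once offset.
wall = [(0.1 * i, 0.0, 1.0) for i in range(100)]
aligned = wall + wall
shifted = wall + [(x, y + 0.5, z) for x, y, z in wall]

print(projection_entropy_2d(aligned, 0.25))
print(projection_entropy_2d(shifted, 0.25))
```

When two scans of the same surface coincide, their points fall into the same cells and the entropy of the merged projection stays low; a misalignment spreads the points over more cells and raises it. This is the intuition behind using entropy as a coherence measure.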

4. Conclusions

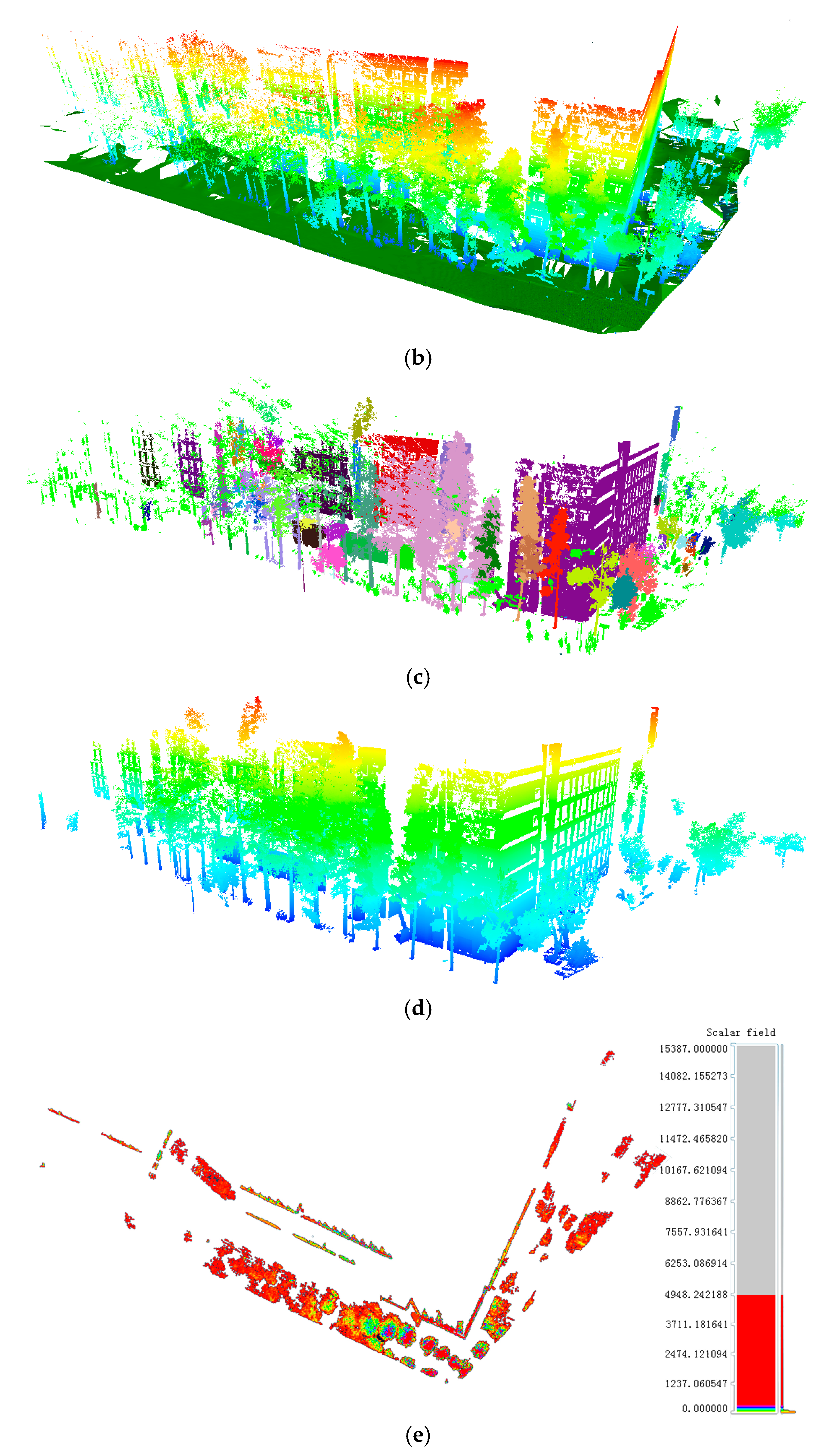

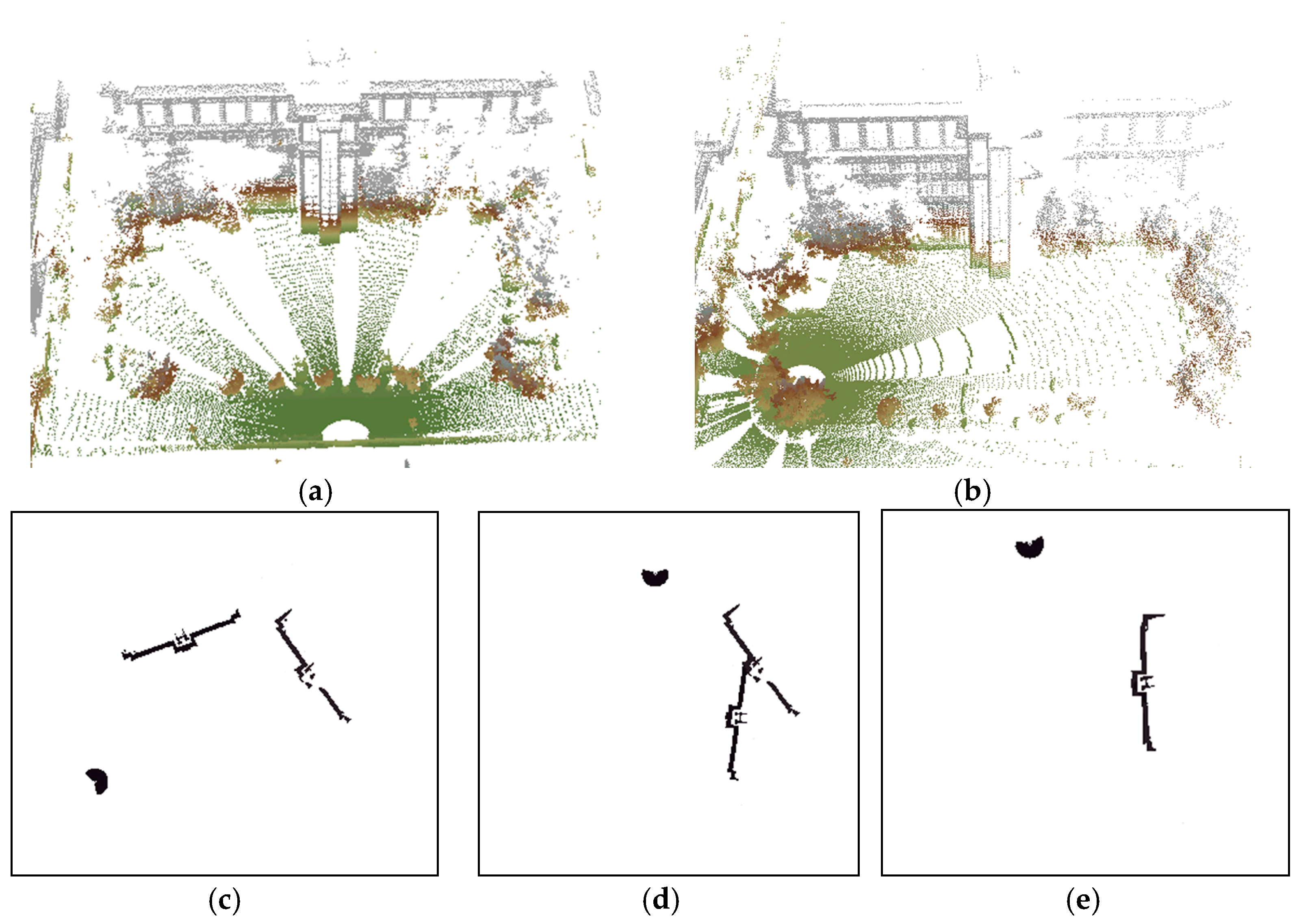

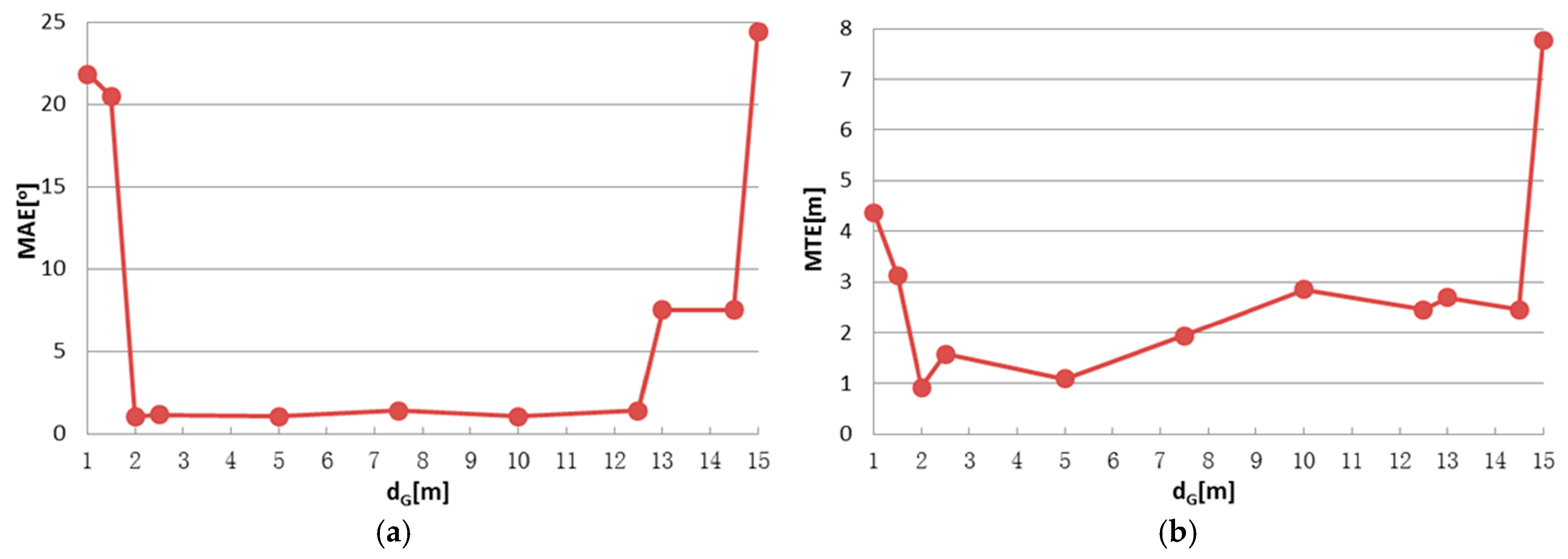

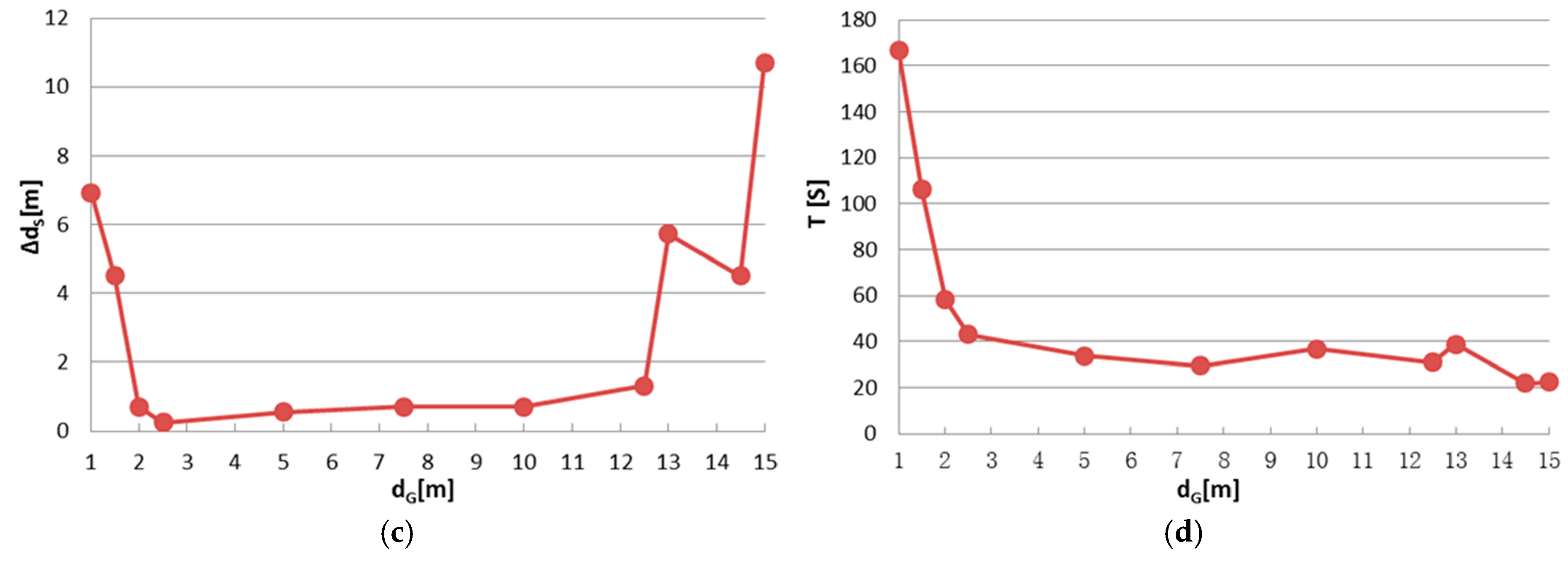

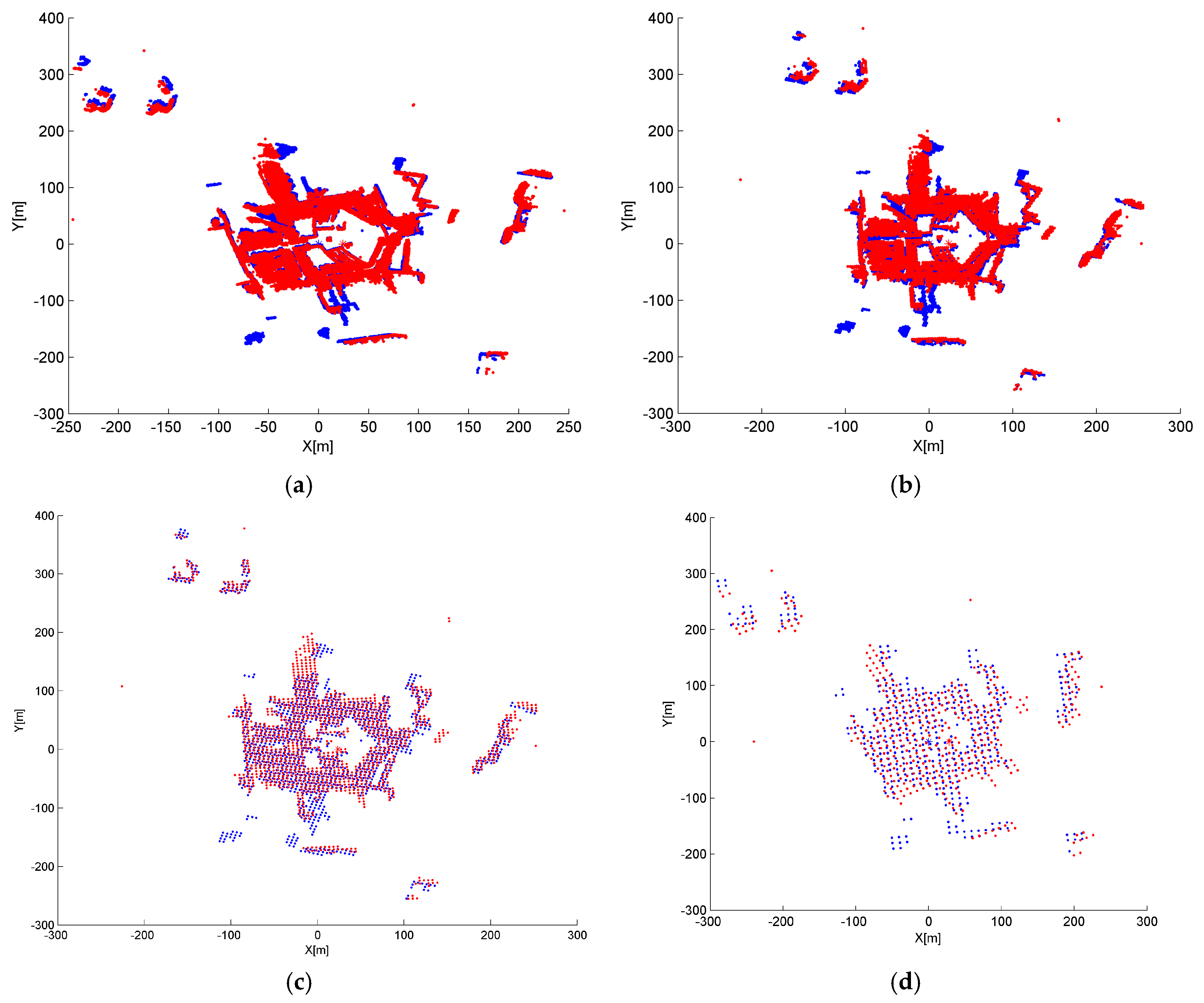

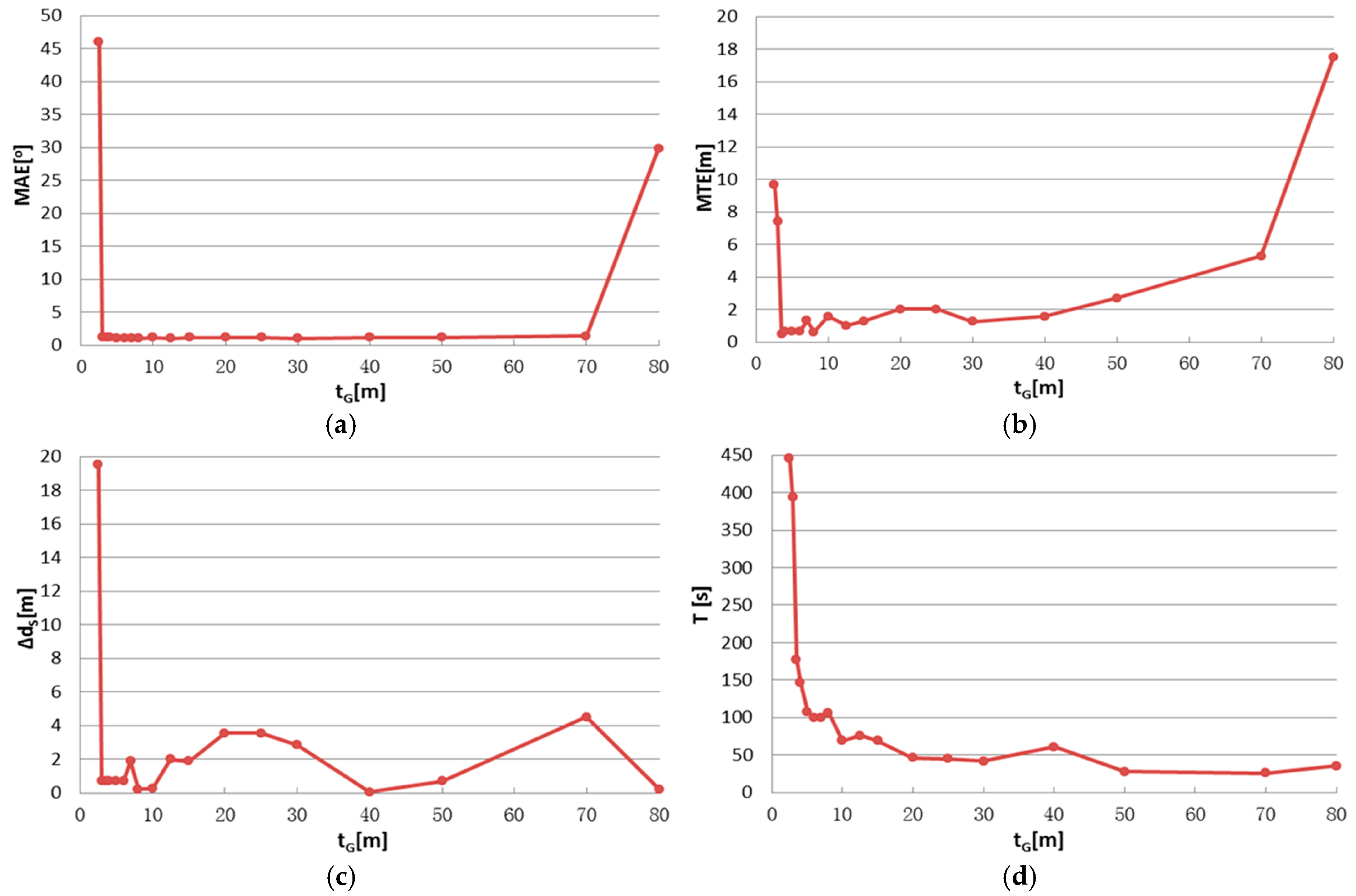

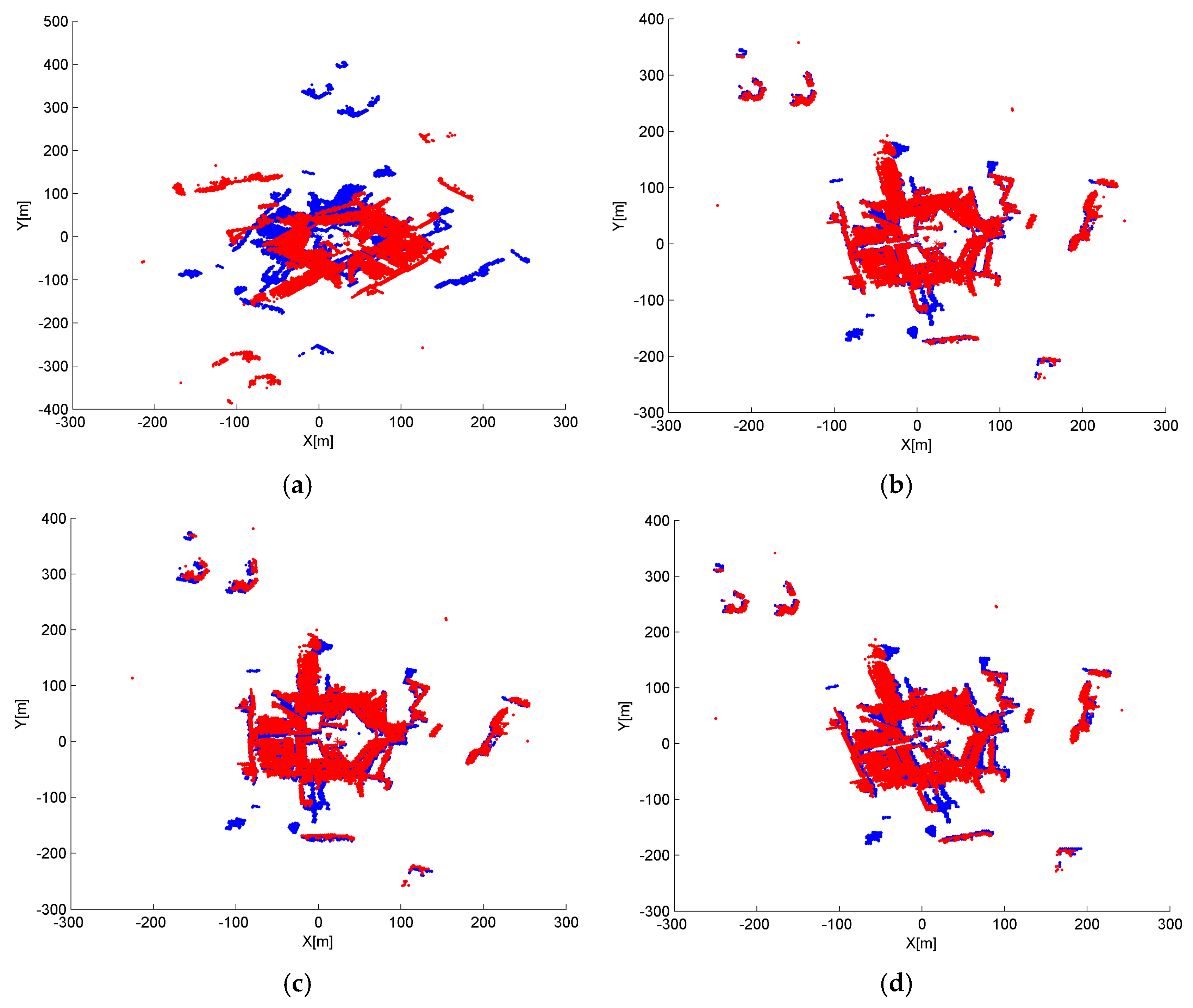

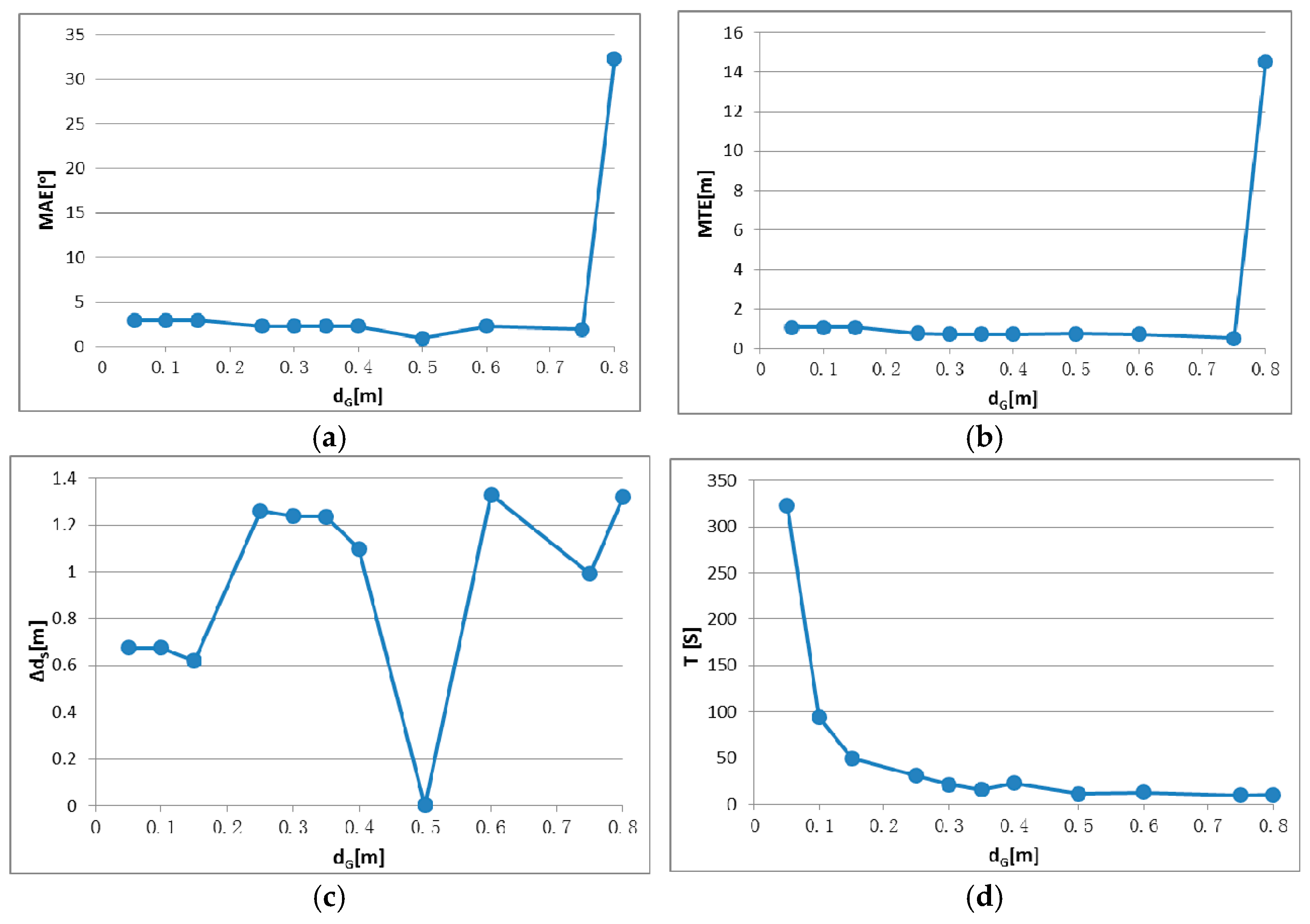

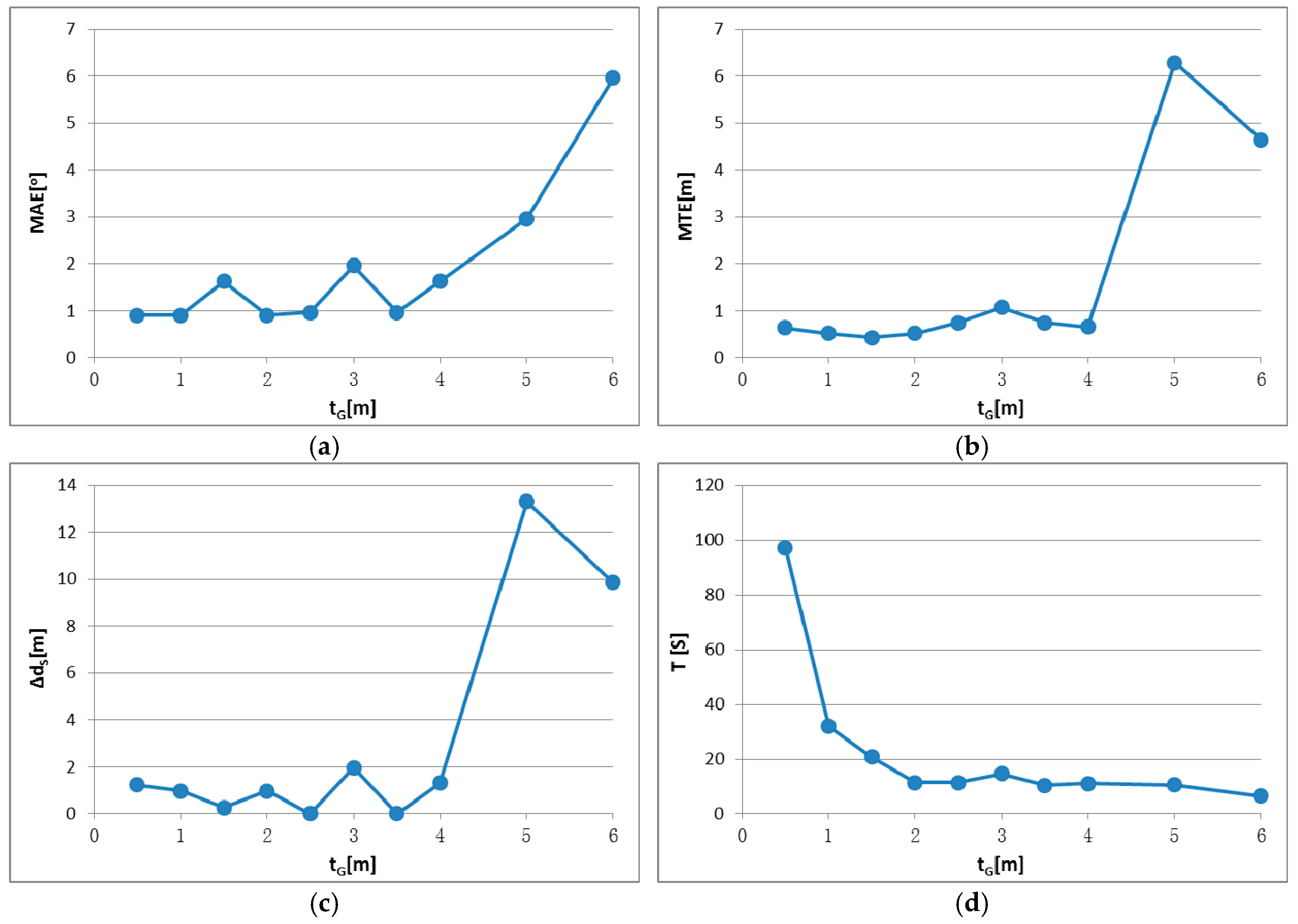

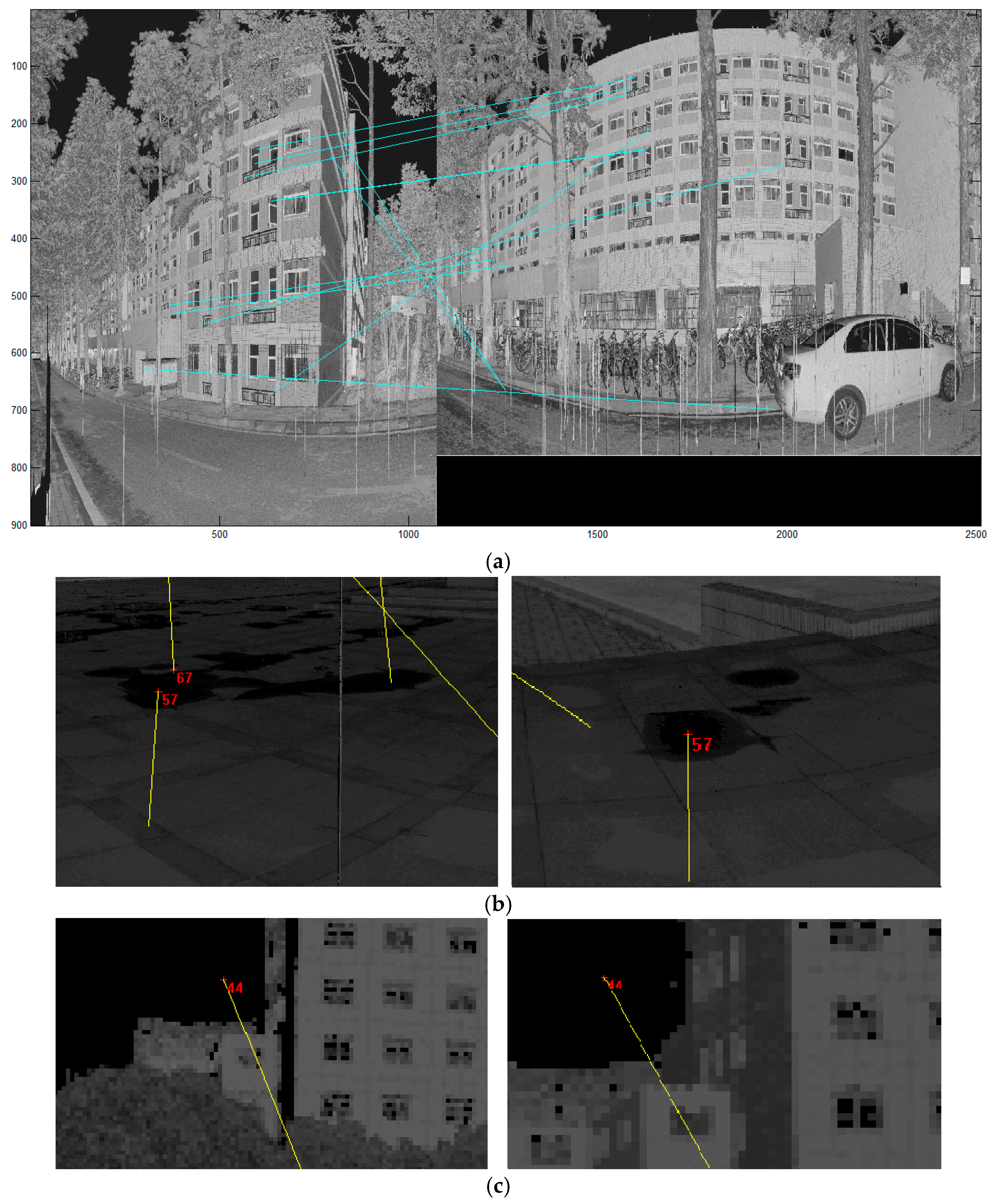

In this paper, a method called Iterative Minimum Entropy (IME) is proposed for the coarse registration of TLS point clouds, with a novel sensor combination of a terrestrial laser scanner and a smartphone. The method is based on 2D distribution entropy and the distance r between neighboring scan positions, and it rotates the two point clouds around their own z axes until the optimal initial transformation is reached. Since there is no synchronization between the laser scanner and the smartphone, only a rough distance between neighboring scanner positions is measured using the smartphone GPS. Instead of directly using the minimum entropy, we proposed two criteria to decide the optimal initial transformation between two scans: the difference between the average and minimum entropy, and the deviation of the minimum entropy from the expected entropy. The method achieved high accuracy and efficiency in the two experiments we conducted, in which panoramic and non-panoramic, vegetation-dominated and building-dominated scenes were tested. According to the experimental results, the proposed method generally achieves a good result when tG is approximately 1% to 10% of the shortest edge of the bounding box and dG is approximately 50 to 100 times the average density, although these ranges are not absolute. We also observed that IME is likely to fall into a local convergence basin when the overlapping rate is too low or when most overlapping areas are narrowly distributed.
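The rotation search summarized above can be illustrated with a brute-force sketch. It is a simplified stand-in, not the IME algorithm itself: it omits the iterative correction of the GPS distance and the two selection criteria, and simply returns the pose with the lowest entropy. It assumes leveled scans with the scanner at the origin of each scan frame, places scanner A at the origin and scanner B at (r, 0) in a common frame, and uses a grid-based Shannon entropy of the XY projection. All function and parameter names are hypothetical.

```python
import math
from collections import Counter


def rotate_z(points, angle_deg):
    """Rotate (x, y, z) points about the z axis by angle_deg degrees."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


def xy_entropy(points, cell_size):
    """Shannon entropy (nats) of the XY grid occupancy of the points."""
    counts = Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size))
        for x, y, _ in points
    )
    total = sum(counts.values())
    return -sum((n / total) * math.log(n / total) for n in counts.values())


def merged_entropy(scan_a, scan_b, r, alpha_deg, beta_deg, cell_size):
    """Entropy after rotating each scan about its own z axis.

    Scan A stays at the origin; scan B is shifted by r along the x axis.
    """
    a = rotate_z(scan_a, alpha_deg)
    b = [(x + r, y, z) for x, y, z in rotate_z(scan_b, beta_deg)]
    return xy_entropy(a + b, cell_size)


def coarse_search(scan_a, scan_b, r, step_deg, cell_size):
    """Exhaustively try all (alpha, beta) pairs on an integer-degree grid.

    Returns (entropy, alpha_deg, beta_deg) for the lowest entropy found.
    """
    best = None
    for alpha in range(0, 360, step_deg):
        for beta in range(0, 360, step_deg):
            h = merged_entropy(scan_a, scan_b, r, alpha, beta, cell_size)
            if best is None or h < best[0]:
                best = (h, alpha, beta)
    return best


# Synthetic example: one small scene observed from two scanner positions.
scene = [(1.3, 2.1, 0.0), (1.8, 2.1, 0.0), (2.3, 2.1, 0.0),
         (2.3, 2.6, 0.0), (4.2, -1.3, 0.0), (4.2, -1.8, 0.0)]
r = 3.0
scan_a = rotate_z(scene, -60)
scan_b = rotate_z([(x - r, y, z) for x, y, z in scene], -210)

print(coarse_search(scan_a, scan_b, r, step_deg=30, cell_size=0.5))
```

The exhaustive search costs (360 / step)² entropy evaluations, so a practical implementation would likely use a coarse-to-fine schedule. The lowest-entropy pose is also not guaranteed to be the correct one, which is consistent with the paper's choice of additional criteria over the raw minimum.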

Future work will address small overlapping rates (about 5%–10%) and homogeneous overlapping areas. In addition, IME can be extended using other ranging methods, e.g., Electronic Distance Measurement (EDM). In some special cases the initial distance can simply be given by the user, such as near subway or train tracks, where each track section has a fixed length and can be treated as a distance marker, making it a method using no prior information. However, some modifications may be needed to ensure robustness for these different extensions.