1. Introduction

This study is related to the development of an LPS (local positioning system) based on IR signals and PSD sensors, used to obtain the position of mobile agents in indoor environments. A variety of technologies is used to implement an LPS, depending on the accuracy, range and cost constraints of each application. Examples of commonly-used technologies include: infrared, ultrasound, video and radio frequency. Our research group is currently developing an LPS based on the emission and reception of near infrared (NIR) signals. As reported in [

1], this technology provides positioning accuracies of between 1 cm and 10 cm, with a range of 1 m to 5 m.

Regardless of the technology used for detecting the position of a mobile agent, LPS systems are based on strategies, such as: time of arrival/time of flight (ToA/ToF), which measure the time taken to arrive from signal emission to reception and, therefore, measure the distance between the sender and each receiver; time differential of arrival (TDoA), which measures the difference between times of arrival at different receivers, and thus, three or more receivers are necessary to obtain the position; angle of arrival (AoA), which measures the angles of arrival at each receiver, and therefore, the position of a mobile agent can be obtained with two receivers; and received signal strength (RSS), which extracts the distance between the mobile agent and the receiver based on the strength of the signal reaching each receiver.

ToA and ToF are used in systems based on interferometry [

2], time of flight cameras [

3,

4] or ultrasound [

5], in which the sender and receiver share hardware and can detect when the signal is emitted and received, and therefore, measure signal flight time or the phase difference of arrival (PoA-PDoA). The sender and the receiver are distant, so they must be synchronized in order to obtain the propagation times. With high speed signal propagation, as in the case of light or any other electromagnetic signal, synchronization is extremely difficult. However, with TDoA/DoTF (PDoA), the sender and receiver do not need to be synchronized, but the different receivers do, as is the case of the IR-based LPS being developed by our research group [

6,

7]. In these cases, receivers are synchronized by using a wired signal clock.

Another position determination system is RSS [

8,

9], a method based on estimating the distance between the sender and the receiver by means of the strength of the signal that reaches the sensors nodes. Position determination can be performed using absolute measurements, for example by estimating the distance between sender and receiver in each node or from differences in the strength of the signal received, performing the same measurements as with TDoA. In the case of infrared, use of ToA/ToF entails the disadvantage that the power received depends on the power transmitted, which is not stable over time and is also sensitive to environmental conditions.

In AoA-based systems, commonly used in digital camera systems [

10,

11], it is possible to obtain the position using stereoscopic geometry. This strategy is applied to PSD sensors, as reported in [

12,

13,

14]. Once the distances and differences between them, the signal strength and the angles of arrival have been measured, the position of the mobile agent can be determined by trilateration (ToA/ToF, TDoA, PDoA) or RSS and triangulation (AoA).

Here, we propose a method for measuring the angle of arrival using a PSD sensor, in order to determine the direction of arrival of the optical beam from mobile agents in the environment. To this end, given that an optical system is attached to the PSD with a non-ideal behavior with respect to the theoretical pin-hole model used, it was necessary to perform a geometric calibration to determine the intrinsic parameters of the system, thus enabling us to accurately determine the angle of arrival of the beam. As explained in [

15], electrical calibration is required prior to performing a geometric calibration, in order to rectify errors due to imbalances in the amplification channels of the electronic circuit employed to amplify the signals delivered by the PSD, which would hinder the determination of the point of impact (prior to determination of the orientation). The LPS proposed by our group (

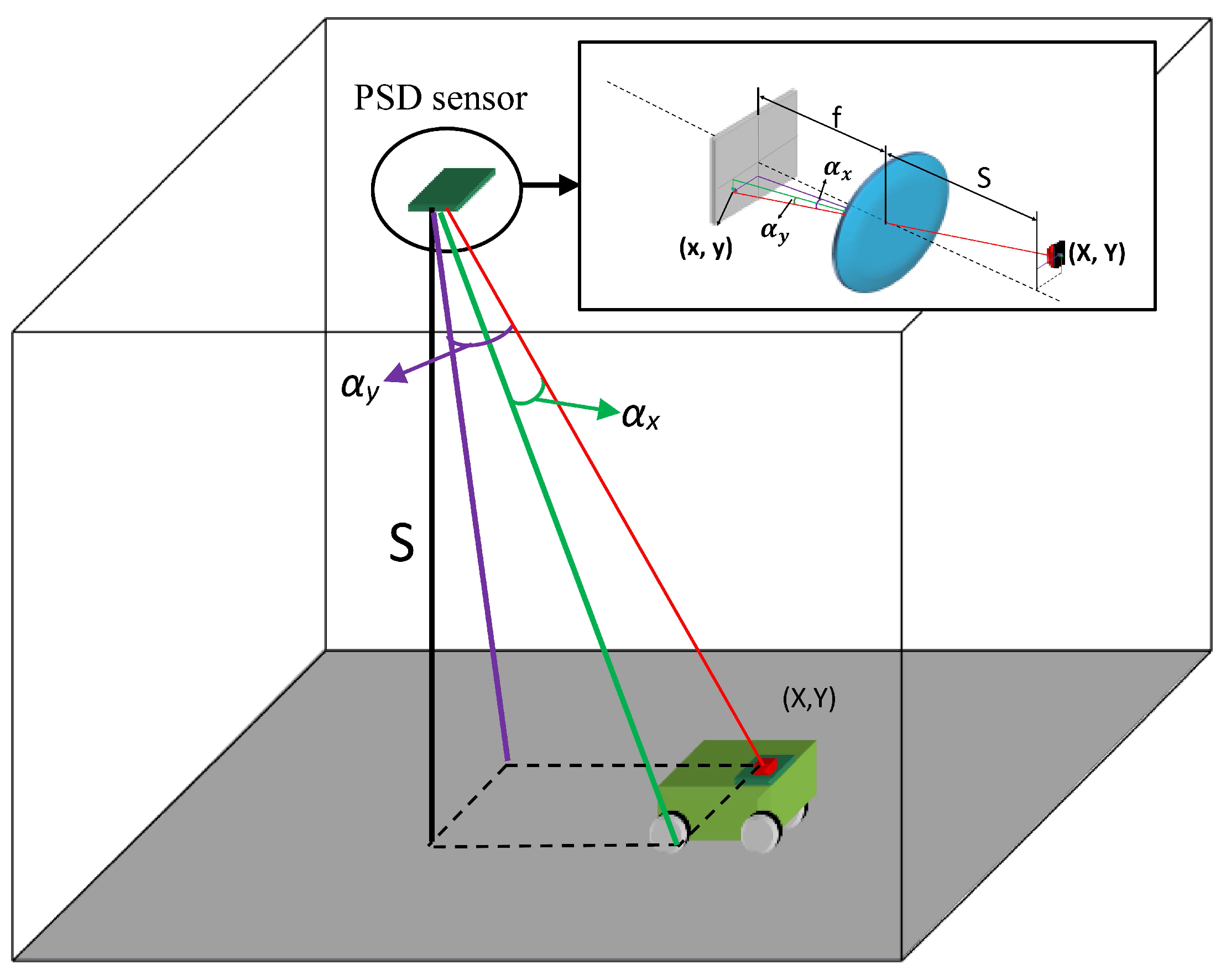

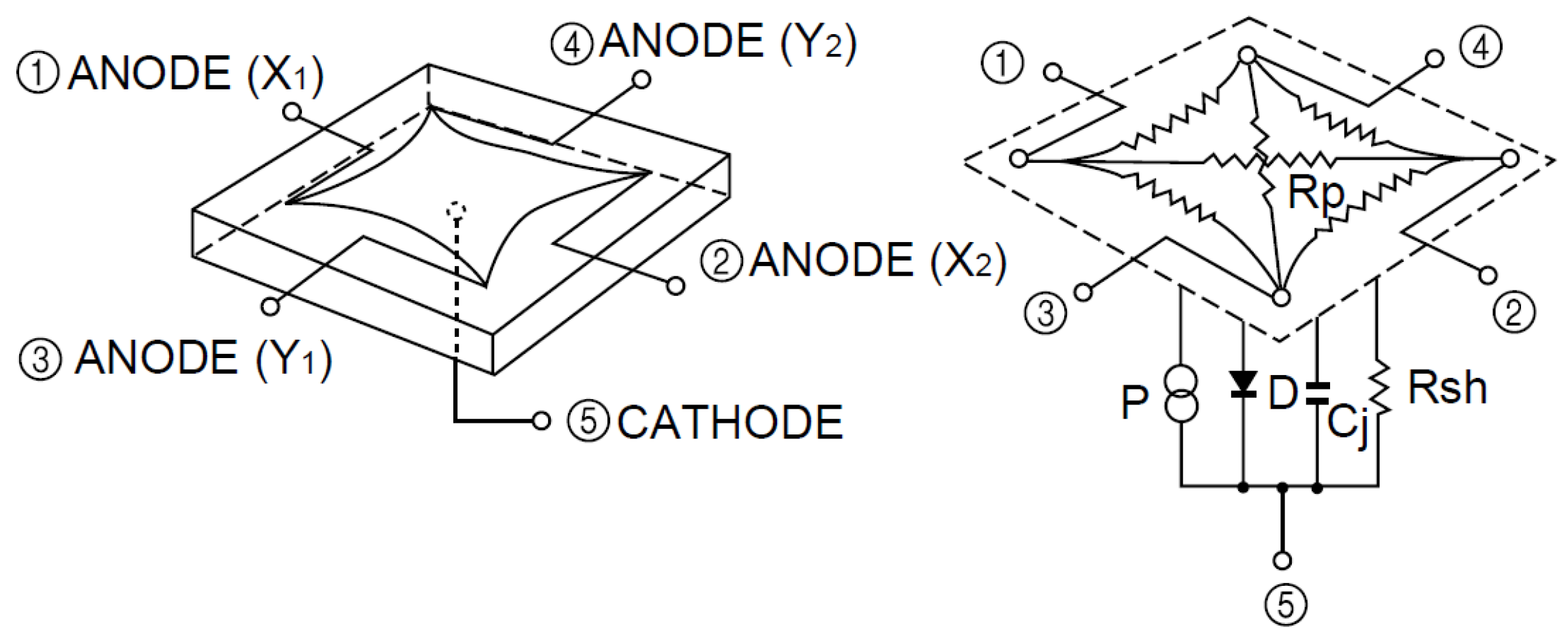

Figure 1) consists of a sensory system (PSD sensor + optical lens) located in the environment and NIR transmitters carried by the mobile agents.

The 3D position of the NIR transmitter is determined with a single PSD sensor based on the measurements of two parameters. The first of these, the subject of this article, is the angle of arrival, and the second, the determination of which is not discussed here, is the distance between the PSD sensor and the NIR transmitter.

The parameters of interest shown in the

Figure 1 are as follows: the point in the environment where the NIR transmitter is located is

; the impact of its image on the PSD sensor surface is

; the angles of arrival are

for the axes

x and

y, respectively;

S is the distance between the PSD sensor and the plane in which the NIR transmitter moves ; and

f is the focal length of the optical system attached.

Several systems that use PSD sensors, such as [

16], use collimated laser beams, and therefore, the PSD sensor is not attached to an optical system; however, they also require calibration, since the behavior of the PSD presents distortion that must be corrected, as explained in [

17]. In [

18], laser scanning was used to correct PSD sensor distortion, yielding micron-level accuracy and making it possible to adjust the measurements obtained with the movements performed by the laser. This procedure enabled accurate modeling of PSD sensor distortion, providing a system with very good resolution that was very stable to vibration, as well as very controlled test conditions. Similarly, sweeping with a laser the surface of the PSD sensor in [

19], they correct this distortion using neural networks. In other studies, such as [

12], stereo measurements have been used to perform calibration. However, this approach is not based on the computation of the intrinsic parameters, but on obtaining the relationship between the environment and the optical system. Since it does not adjust the intrinsic parameter values (optical center, focal length and distortion parameters), the distance measurement will contain an error that can only be corrected by calibrating the real intrinsic parameters.

In our case, due to the few works that there are about calibration methods for PSD sensors with an optical system attached, where the intrinsic parameters are required for the calculation of measures of the angle of incidence, we have chosen calibration methods used for cameras.

The geometric calibration described below is closely related to calibration methods used for cameras, since the mathematical models applied for cameras are generally based on the pin-hole model [

20], the same model that we have used for the PSD sensor system. This is a linear model that relates points in the environment to points in the image, depending on the intrinsic linear parameters of the optical system (without distortion, since the model does not have a lens), and the extrinsic linear parameters (rotation, translation). However, despite the similarity, there are differences, because cameras are array detectors with millions of receiver cells (space is discretized), whereas a PSD is an analog sensor that delivers very small currents (pA), with all of the problems and errors that this entails. The parameters corresponding to the effects produced by the lens attached to the PSD sensor, such as radial and tangential distortion, were added to the pin-hole model, as were the corresponding distortion parameters presented by the PSD sensor itself. Once we obtained the proposed mathematical model of the system, it was necessary to develop a method to obtain the various parameters. The several existing methods can be grouped into: (a) those based on three-dimensional templates; and (b) those based on two-dimensional templates. Both types are based on geometric patterns, such as cubes, rods, checks and circles.

Methods using 3D templates include those presented in [

21,

22,

23], which can obtain the camera model parameters from a single image. The disadvantages and key factors are that the calibration template must be accurate, since only one image is used and any error in template production would lead to erroneous calibration. In addition, the template must be correctly positioned so that all sides are visible. In the present case, in which the templates are constructed from IR, the SNR of the signals arriving at the PSD sensor should be as high as possible, and therefore, the calibration template must point towards the sensor, which would not be possible with a 3D template.

Methods based on 2D templates include those presented in [

24,

25,

26]. The advantage of these methods is that they are flexible, since it is not necessary to know the position in which the calibration template has been placed. Thus, in our case, all of the LEDs can point towards the same orientation. Furthermore, these methods also yield the best results. For this study, we used a 2D calibration template manufactured with IR diodes that emit at the wavelength to which the PSD sensor is most sensitive. To model our proposal, we used an adapted version of Zhang’s method [

26], which is among the most flexible methods, since it allows more images to be added to the calibration process, thus reducing error in the parameters’ value determination. This is an important feature of the system, because the calibration template has a number short reduced points (LEDs), being very sensitive to errors; thus, the system will be rendered more robust by using more images.

This paper is organized as follows: in

Section 2, we explain the mathematical model of the optical system (PSD sensor + lens) based on the pin-hole model; in

Section 3, we describe the proposed calibration method used to obtain the optical system parameters, and we analyze mobile robot positioning error according to errors in the intrinsic parameters of the system (sensitivity to errors); in

Section 4, we report simulations using the proposed models and methods; in

Section 5, we describe experimental PSD sensor calibration tests performed with two different lenses; and in the final section, we present our conclusions.

3. Calibration Process

There is no calibration method that is better than another, but depending on the type of camera, some methods give better results than others, so for example, in cases where the noise in the images is high, it is better to use methods based on 2D templates.

In our case, as mentioned in the Introduction, it is imperative that the calibration methods are based on 2D templates, because the LEDs should be directed to the PSD sensor so that the SNR of the signals in the PSD sensor is as high as possible, and therefore, the error in the calculation of the impact point is small. Therefore, of the methods based on 2D templates, Tsai, Batista and Zhang, the one that best adjusted to our needs is Zhang, since the calibration method of Tsai and Batista needs to know exactly certain parameters (optical center, focal length, etc.) to obtain the other parameters. This is a great handicap in our case, because we fix the optical system and the PSD sensor manually, and error in the measurement of these parameters would be high.

The calibration method selected for our system was an adapted version of Zhang’s method. This flexible method uses a 2D calibration pattern and permits the use of multiple images, being more robust to errors when capturing the template points. It has been shown to be a reliable method that yields very good results.

Zhang’s method requires the correspondences between points in space and their corresponding projections. The procedure consists of two stages. In the first step, a linear calculation is performed to obtain approximate values for linear system parameters, i.e., the mathematical model parameters excluding those that model distortions. In the second, nonlinear parameters are obtained by means of iterative methods, and the parameters calculated in the first stage are refined.

To improve the results obtained using the methods described and, therefore, the calibration models adapted to our needs, we incorporated proposals from other studies, such as [

27,

28], which suggest enhancing the results by performing a preliminary distortion correction and reducing image noise sensitivity, respectively.

As we will demonstrate later, preliminary distortion correction improves the results of the first stage of Zhang’s method, since this stage is very sensitive to image nonlinearities. However, when using lenses with low distortion, this correction process could be omitted.

These improvements will be analyzed in detail in

Section 4, adapted and used in the model proposed in this paper.

Thus, the order of the calibration process proposed for our model was as follows: an initial rough distortion correction, followed by data normalization and then implementation of the proposed calibration method, which is an adapted version of Zhang’s method for PSD sensors. Each one of these processes is explained in the following subsections, in

Section 3.4, to finally analyze error in the determination of the angle of arrival and mobile agent position.

3.1. System Calibration Method

The objective of this stage was to calculate the optical system parameter values, using nonlinear system algorithms (Gauss–Newton, Levenberg–Marquardt, gradient descent, etc.). One problem associated with these techniques is that they may converge on a solution that is mathematically correct, but that does not conform to reality. In order to avoid this situation, it is necessary to establish a good starting point, based on an approximate knowledge of the real parameter values or, in the case of Zhang’s method, by obtaining an approximation analytically.

To estimate the system’s linear parameter values for the starting point, Zhang performs two steps. In the first stage, the projection matrix (

M) for each of the images is obtained using direct linear transformation (DLT), where Equation (

17) must be solved by means of single value decomposition (SVD).

where

are the coordinates of the impact point in the sensor,

M is a homography and

the coordinates of corresponding point in the calibration template. As the calibration template is 2D the coordinate

is zero, Expression (

18) relates the the calibration template points with the image plane points.

Knowing that

, where

are the columns of matrix

is the matrix containing the intrinsic parameters,

are the columns of the rotation matrix and

t is the translation vector, we know that since the rotation matrix is orthonormal, the following relationships can be applied:

and

. Consequently, from these constraints, we obtain Equations (

19) and (

20).

where

results in the matrix

B given in Equation (

21), which is the proposal for our model, where the focal length parameter is considered of equal value for the two coordinate axes.

As the matrix

B is symmetrical, it is only necessary to obtain six elements, so the vector is

. The elements of matrix B are traditionally obtained using Equation (

22), where,

and

are the elements of matrix

M obtained by DLT.

By retrieving the vector

b, the intrinsic parameters are then obtained with the following expressions:

Once the intrinsic parameters have been calculated, the projection matrices for each image are used to calculate the extrinsic parameters as follows:

where

. Due to image noise and nonlinearity, these parameters contain error because we are using linear techniques to solve a nonlinear problem. Hence, in

Section 3.2, we present a prior image distortion correction, which improves the results.

Having obtained approximate linear parameter values, the extrinsic and intrinsic parameters that model the system are obtained/optimized, in our case using the Levenberg–Marquardt algorithm.

The cost function used in [

25] minimizes error in the projection of the points on the image plane. The proposed cost function for our model, and the one with which we obtained the best results, minimizes the errors of the points on the calibration template (distance between points on the template and projection of the image points onto the template) and is expressed as follows:

where

n is the number of images,

m is the number of points per image,

Q are points in the image plane,

A is the intrinsic matrix containing the parameters, such as the focal length and optical center, vector

D contains the parameters that model distortion,

and

are the rotation matrix and the translation vector, respectively,

are the calibration template points and

P are the re-projected image plane points (points obtained from the intrinsic and extrinsic parameters and the image plane points).

3.2. Preliminary Distortion Correction

As mentioned earlier, this step is essential in the case of high distortion lenses. Of the several methods described and proposed in [

27] to reduce image distortion, we selected the “line straightness method”. With regard to PSD sensor distortion, we assumed that it would exert little effect on image distortion since it would be considerably less than the distortion generated by the lens. Nevertheless, it will also be analyzed in the following sections.

In this section, we describe how to estimate the coefficients of the distortion produced by the lenses in order to correct the distortion. In our case, we did this to improve the results obtained in the first stage of Zhang’s method, which is very sensitive to nonlinearity. Estimation of those coefficients is based on analyzing curves, which are actually straight lines that have been distorted by the lens, using iterative methods.

An example is given in [

27], in which one radial distortion coefficient and one tangential distortion coefficient are estimated; however, we estimated two radial distortion coefficients since in the majority of cases, tangential distortion exerted less influence.

The method is based on detecting the lines found in the image, which in our case were easy to identify since we used a calibration template, and then obtaining the slope at several points along the lines. If they were straight, the points along a line would have the same slope. However, due to lens distortion, this is not the case, and therefore, the cost function to minimize is the slope of the tangents at each of the points on the line.

Hence, the cost function to minimize is as follows:

where

L is the number of lines,

N is the number of points on each line,

are the coordinates of each point and

s is the slope at that point.

where

is the slope of the tangent at the point

and the partial derivatives for estimating the radial distortion coefficients are as follows:

where

r is the Euclidean distance between point

and the optical center

. In the simulations section, we will analyze the influence of this preliminary distortion correction when obtaining the linear values in the first stage of Zhang’s method, which will serve as the starting point.

3.3. Data Normalization

The matrix

M that relates scene points to their projection on the image plane is sensitive to image plane position and image noise, as given in [

28]. To resolve this problem and obtain more stable results, we proposed to obtain a better conditioned projection matrix than the one obtained by DLT. Thus, starting from Equation (

36):

where

is the matrix with the impact points for each image,

is the homography and

are the template points. Our proposal is to normalize matrix

Q by a matrix

and the template points by a matrix

, obtaining Equation (

37).

Being

and

created under the following conditions, the calibration template and its projection on the image plane must be centered on the coordinate origin, and the points must be isotropically scaled, so that the mean distance to the origin is equal to

; obviously,

always is the same matrix because the coordinates of the template do not change. Thus, we have:

where

, which relates

to

, is obtained by DLT.

Having obtained

, all that remains is to solve Equation (

38) in order to obtain the real homography.

Next,

Section 3.4 describes an analysis of the sensibility of AoA, due to the impact point and focal length.

3.4. Analysis of AoA Uncertainty Regarding Optical System Parameters

In this section, we describe the sensitivity of the measurement system to errors in the optical system parameters, to demonstrate the robustness or weakness. Based on

Figure 5, the equation to obtain the angle of arrival on the

x axis is given by Expression (

39); a similar expression and conclusions may be extracted for the

y axis.

where

,

represent the angles of arrival,

is the impact point,

is the position of the IR transmitter,

S is the distance between the optical system and the IR transmitter and

f is the focal length.

The partial derivatives to determine the error in the angle of arrival due to each of the intrinsic parameters are as follows:

The final expression of the error in the angle of arrival is as follows:

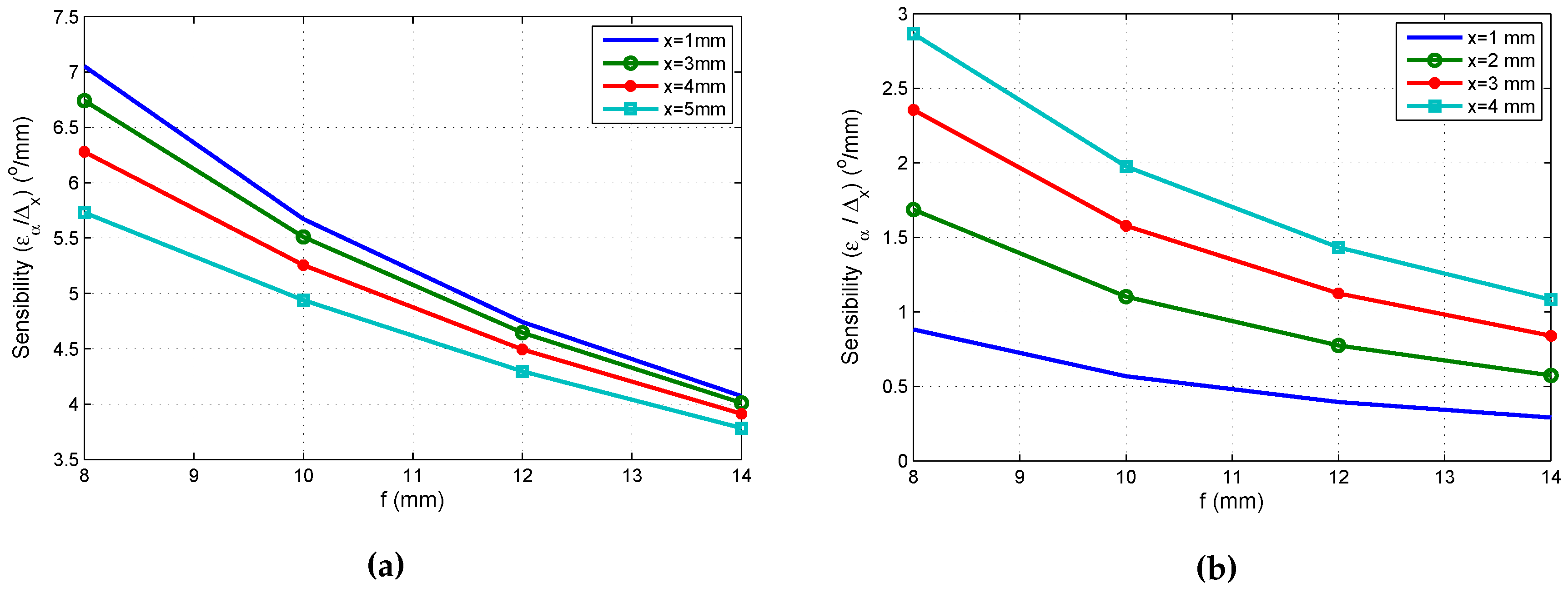

Figure 6a,b show the sensitivity with respect to the impact point and focal length.

Comparing

Figure 6a,b, one can show that the sensibility due to error in the impact point is higher than the error coming from the focal length.

4. Evaluation of the Proposed Model and Calibration Method

In what follows, we evaluate the accuracy and sensitivity to different effects of the proposed calibration method. To this end, we describe four tests. The first of these assesses the improvements yielded by the proposed preliminary distortion correction and data normalization. The second assesses the effect of PSD sensor signal noise, using different levels of SNR, which is added to the output signal of the amplifiers. The third test evaluates the effect of imbalances in amplifier gain factors alone (without noise) and in combination with the effect of noise. The fourth and final test assesses PSD sensor distortion, which is also analyzed alone and in combination with noise and imbalances. To conclude, the results are compared with different sources of error.

4.1. Tests Setup

The different tests have considered two types of lens to verify that the proposed calibration method is robust in either case. Since distortion is an important problem, we evaluated the calibration using one lens with low distortion (long focal length) and another with higher distortion (short focal length):

Lens 1: focal length of 25 mm and one radial distortion parameter ( mm).

Lens 2: focal length of 8 mm and two radial distortion parameters (, mm).

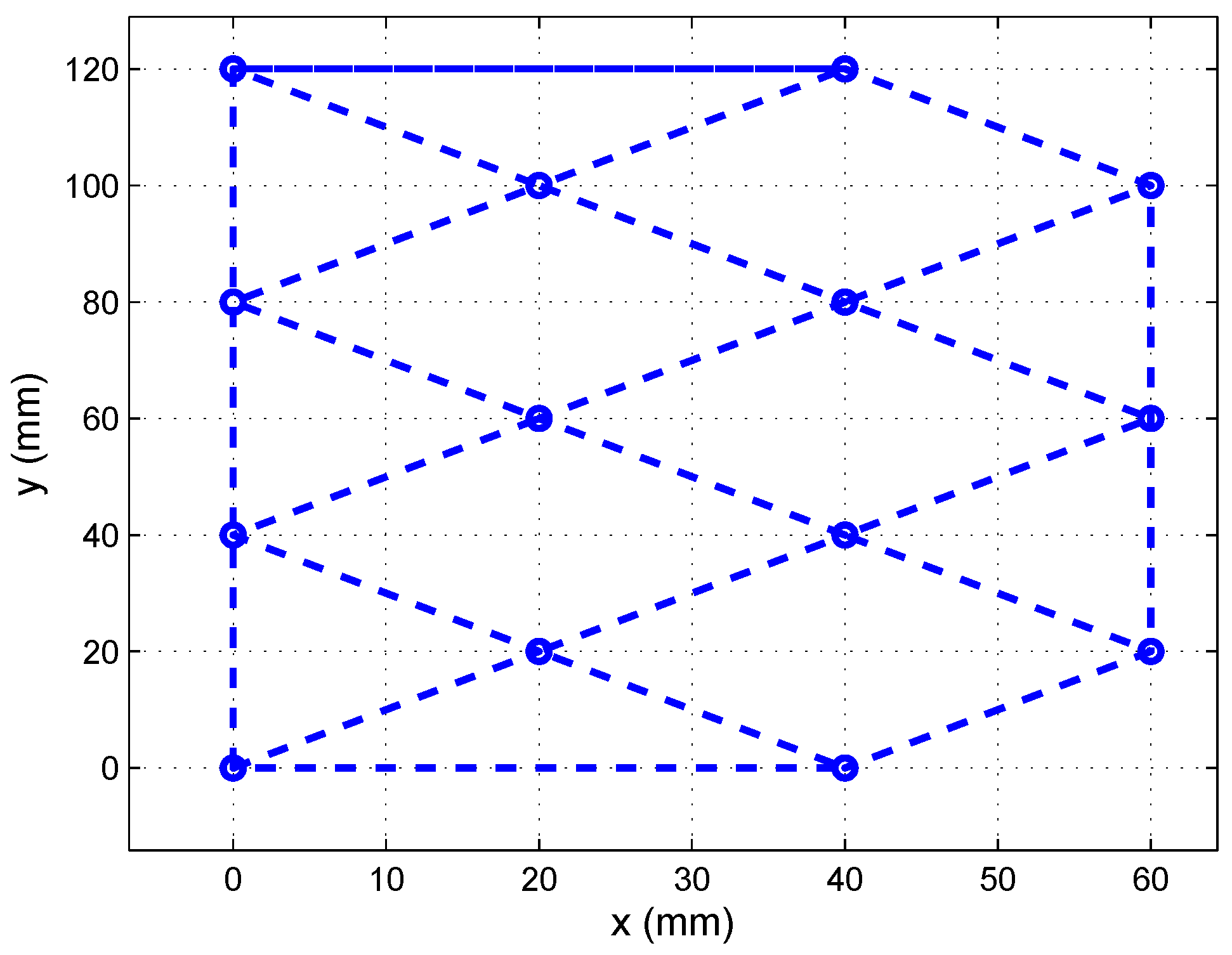

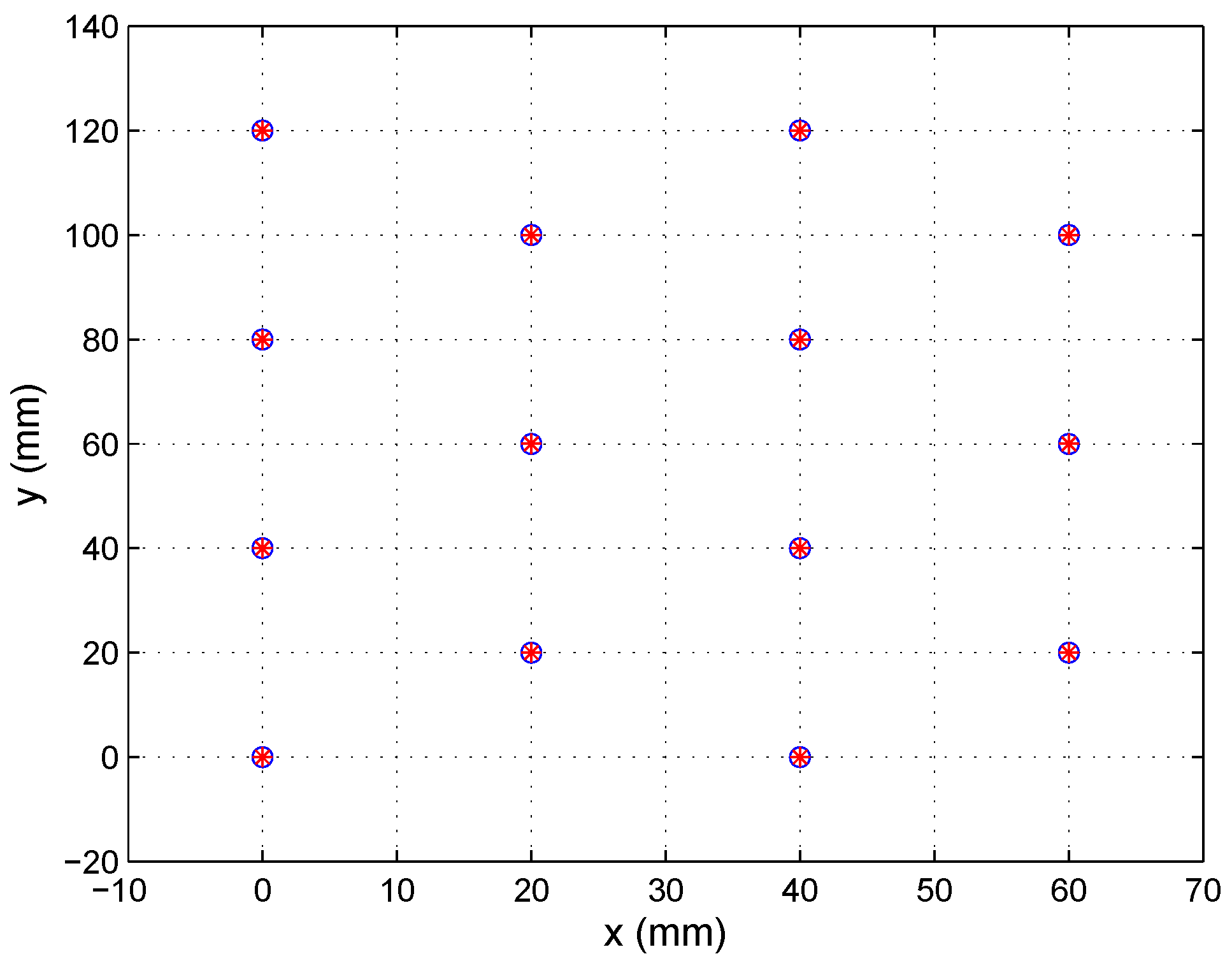

The tests were performed using synthetic images created from the calibration template shown in

Figure 7. The points in the calibration template were unaligned to enhance the calculation of the projection matrix.

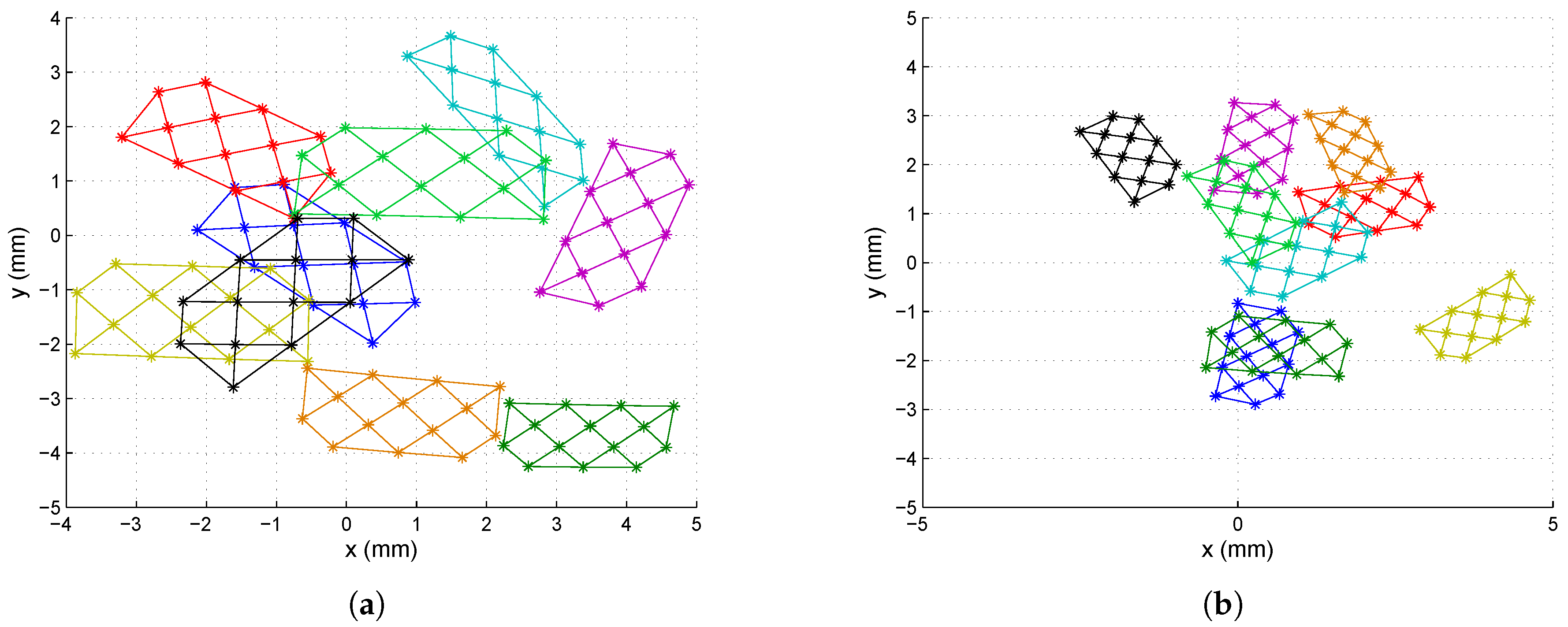

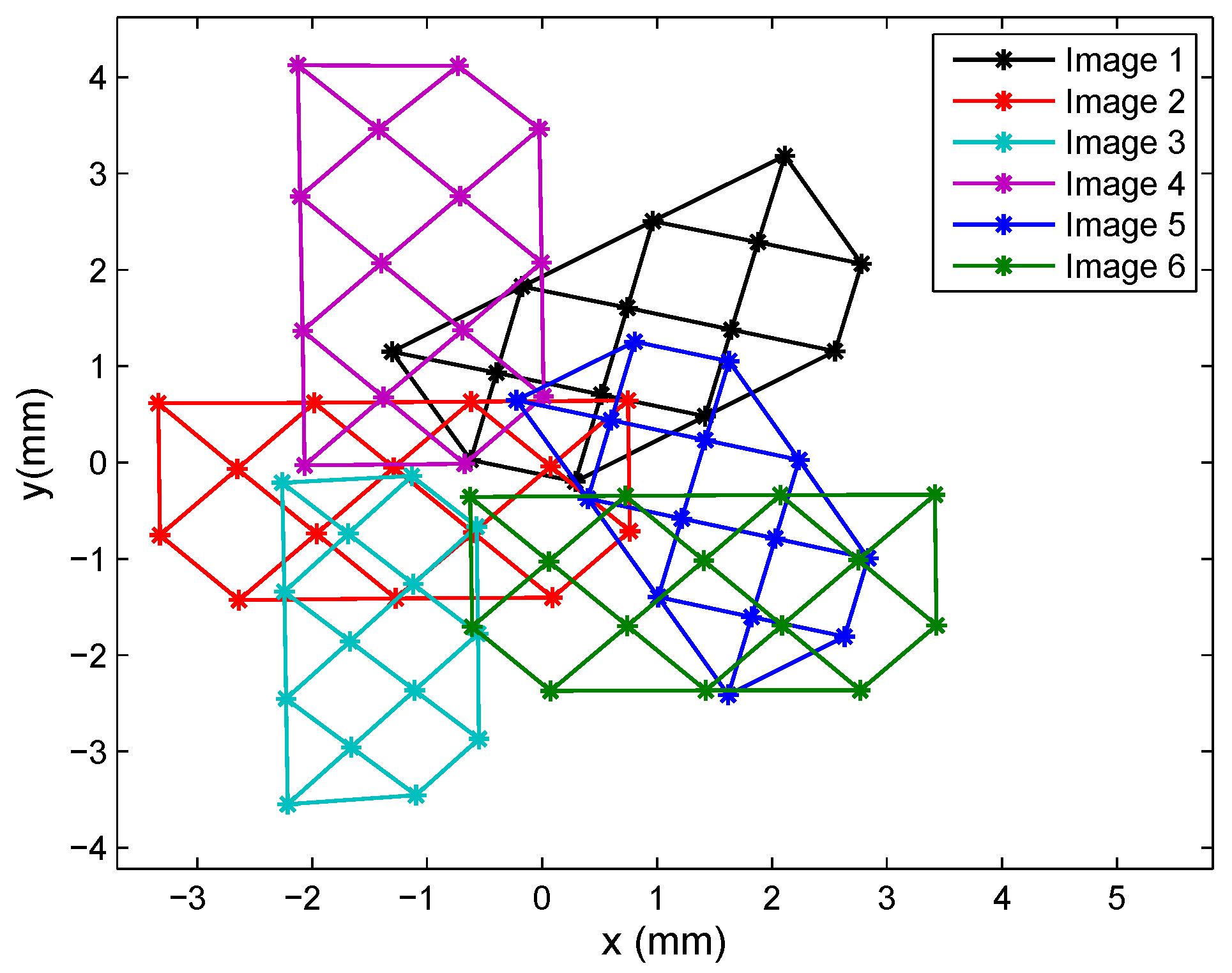

Figure 8a shows Set 1 of the synthetic images (nine images for each lens), which were captured using a 25-mm focal length lens, placing the calibration template in different positions.

Figure 8b shows a set of images captured with an 8-mm focal length lens (note that the template was not placed in the same positions in each case). In both cases, the images were generated with a misalignment from the optical center with a displacement of (0.5,

) mm with respect to the electrical center. The extrinsic parameter values used to perform the test were randomly selected.

As shown in

Figure 8, the images captured should cover the whole PSD surface sensor to produce a good distortion correction.

4.2. Evaluation of the Preliminary Corrections

First, we will evaluate the preliminary distortion correction and data normalization by comparing the intrinsic parameter values obtained in the first calibration stage, which yielded the initial values for the iterative method.

Table 1 and

Table 2 show the results for Lenses 1 and 2, respectively. We used Set 1 and Set 2, each one with nine ideal images, i.e., without noise or imbalances in the signal gain factors and without taking PSD sensor distortion into account (these sources of error will be evaluated in the following sections).

The best results were obtained when using preliminary distortion correction, whereas any improvement due to data normalization was imperceptible, perhaps because of the lack of noise in the images. In the following subsection, we discuss a simulation of the calibration method using the same images, but with noise.

4.3. Evaluation of the Effect of PSD Sensor Signal Noise on the Calibration Process

To evaluate the effect of noise in the PSD sensor output signals on geometric calibration, we conducted tests with SNR values of between 30 and 50 dB, adding white Gaussian noise with a distribution of . Having introduced noise into the signals, these were filtered before calculating the point of impact on the PSD sensor surface. We used a Butterworth bandpass filter with a 600-Hz bandwidth centered at a frequency of 1 kHz.

The calibration results also depend on other variables, such as the number of images, lens parameters and the images captured with each lens (position of the images in the image plane).

We used nine images for the two lenses in the calibration simulations and also included or omitted the preliminary distortion correction and improved projection matrix calculation (indicated in the following tables as “with improvements”), to compare the effect of these improvements. We performed 200 different test instances and extract the mean and std values.

Table 3 shows the results of calibration for Lens 1, giving the ideal and the measured intrinsic parameter values, as well as the mean and standard deviation in parenthesis.

The results obtained indicate that there are few differences in the results for the intrinsic parameters; however, when the improvements were incorporated, the iterative method converged on fewer iterations, and the starting point obtained in these cases was closer to the final solution. One indication that the calibration results were good are the residuals. These indicate the error between the template points and the image points re-projected into the environment, i.e., the points in the image plane translated to the environment using the parameter values obtained in the calibration, where the residuals are the sum of the error in each of the points in each image.

Table 4 shows the results for the simulation with Lens 2, which had a shorter focal length than the previous lens and higher distortion. As in the previous case, 200 iterations were performed for each SNR level, and the simulation was also carried out with and without preliminary distortion correction and data normalization.

The results were similar to those for the previous simulation. The number of iterations was significantly lower when the preliminary corrections were incorporated because the distortion was higher, and therefore, the images were less linear and the projection matrix calculation worse.

These simulations indicated that preliminary distortion correction and data normalization improve the calibration process, and they were therefore incorporated in the following tests.

4.4. Evaluation of the Effect of Imbalance in Amplifier Gain Factors on the Geometric Calibration

Another source of error is imbalance in gain factors, i.e., differences between the electronic components involved in gain factor values. To perform the simulation, we selected tolerances of 1% and 15% for the resistor and capacitor, respectively, with nominal values of 100 kΩ and 100 pF, where the equation for the transimpedance amplifier gain factor is as follows:

This factor also depends on the

R and

C values of the working frequency, where the greatest error is produced at 13.793 kHz, as described in [

15]; the nominal gain factor at this frequency is 53,572, and variation in this factor with the above-mentioned tolerances is 4017.9.

Variation in the gain factor for each signal was simulated assuming that distribution was uniform . As before, we also performed tests with white Gaussian noise .

For each case, 200 iterations were performed, obtaining the results shown in

Table 5 and

Table 6 for Lenses 1 and 2, respectively, giving the mean value and standard deviation in brackets for the intrinsic parameters.

The results obtained indicate that when the electrical components involved in signal gain were not precision components, the intrinsic parameters obtained for the optical system were incorrect, with large errors in the optical center and somewhat smaller errors in the other parameters (focal length and distortions). The results obtained with and without adding noise (SNR of 40–50 dB) to the measurements were almost identical.

The results for Lens 2 were similar to those for Lens 1 in that the images with and without noise were approximately the same, and thus, the predominant error was that due to imbalances. Consequently, as discussed in [

15], it is necessary to calibrate the amplification step, since this could give rise to unacceptable errors in geometric calibration.

4.5. Analysis of Pin-Cushion PSD Sensor Distortion

Here, we will analyze the effect of PSD sensor distortion, an error that is characteristic of the type of PSD sensor employed. For our tests, we used the Hamamatsu PSD S5991-01 sensor, a pin-cushion type sensor in which, as explained in [

17], distortion is approximately 1% of the size of the PSD sensor and where the center of distortion is the center of the PSD sensor

. This pin-cushion type distortion is the opposite of the distortion produced by the lenses that we used in our system, which are biconvex and produce barrel-type distortion, suggesting that if the optical center coincides with or is very close to the electrical center of the PSD sensor, the contribution of this distortion to error should be small. However, if these two centers do not coincide, it is possible that the intrinsic system parameters will be affected. The PSD sensor distortion was modeled as a radial distortion with a single parameter value of

.

As in the previous cases, the simulations were performed with two lenses under three conditions: with the effect of PSD sensor distortion alone, with PSD sensor distortion plus imbalances and in combination with white Gaussian noise at an SNR value of 40 dB.

Table 7 shows the intrinsic parameter results for Lens 1 under all the conditions described above, giving the mean value and standard deviation in brackets.

Note that in this test, the parameter is the contribution of lens distortion and PSD sensor distortion; therefore, the ideal value is 6.9416.

The results obtained indicate that when distortion is 1% of the size of the PSD sensor and images are contaminated with noise, the errors are acceptable. In the case of higher distortion, this could be corrected by disconnecting the lens and scanning the entire PSD sensor surface, obtaining the distortion of the PSD sensor itself and correcting the images prior to the calibration process.

Table 8 shows the results for Lens 2 under the same conditions as those used for Lens 1.

As in the previous case, is the contribution from the lens distortion and PSD sensor distortion, the ideal value being 8.69415.

4.6. Conclusions Drawn from the Simulations

Here, we will compare the results obtained for the different simulations. The results obtained for Lens 1 are compared in

Table 9 and those for Lens 2 in

Table 10.

As expected, the results confirm that the worst case was when all of the effects were added. However, it can be seen that gain imbalances constituted the greatest source of error, and as has been mentioned, these can be corrected [

15]. Therefore, the most realistic case would be that of noise and PSD sensor distortion.

PSD distortion is constant, and thus, if the distribution of captured images is judiciously selected, its effect can to a large extent be corrected implicitly when lens distortion is corrected.

In terms of noise, signal SNR may be greater than 50 dB for the calibration process; however, the results obtained with an SNR of 50 dB and PSD sensor distortion were very close to ideal. In addition, the more images used, the better the results.

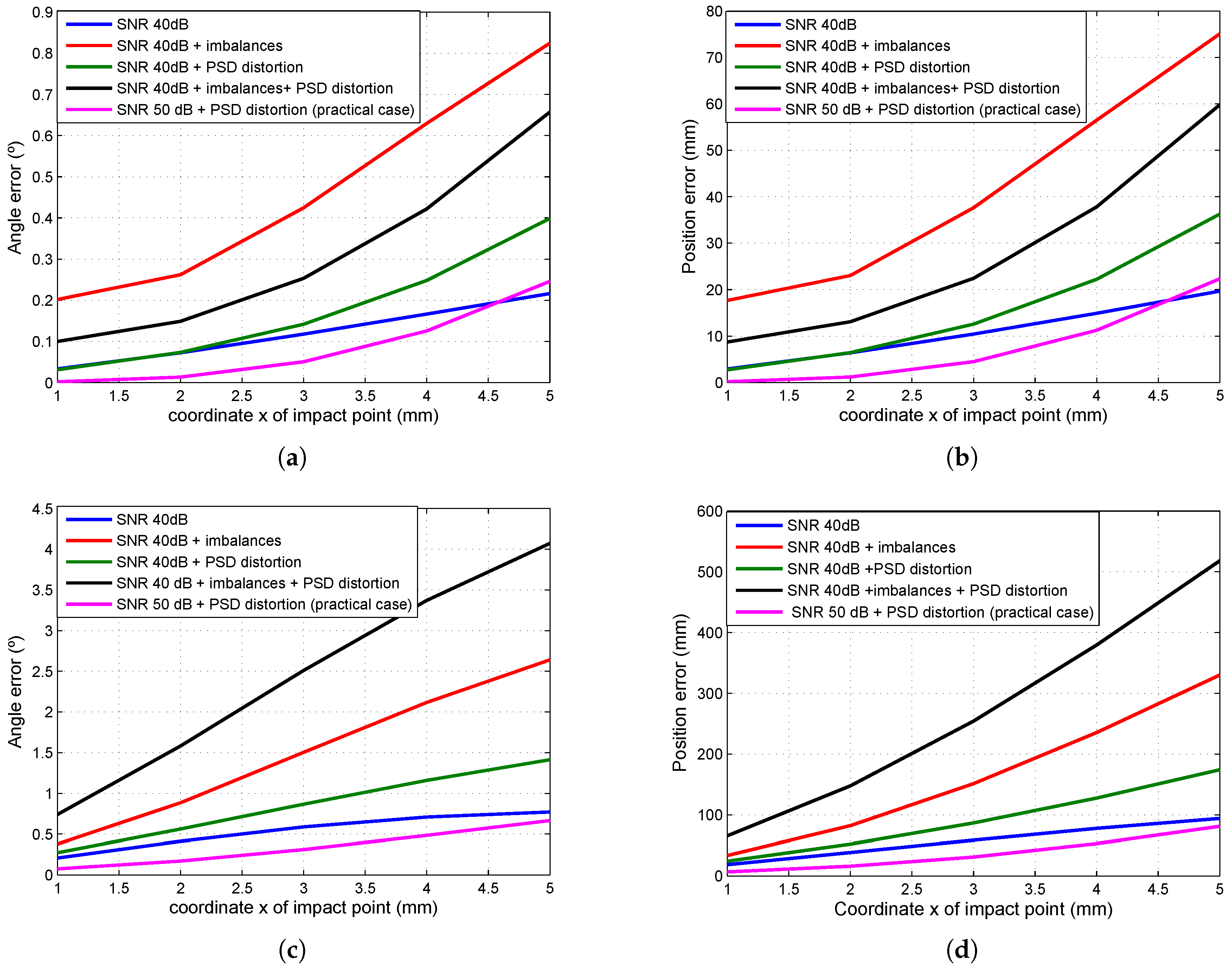

Figure 9 shows the errors in the angles of arrival and in position determination depending on the point of impact of the beam, where the distance between the plane in which the mobile agent is moving and the detector is 5 m. The graphs were generated using the results shown in

Table 9 and

Table 10, while the parameter values employed were the mean values plus twice the value of the standard deviation.

The best result was obtained with an SNR of 50 dB and PSD distortion and was very similar to that obtained when solely taking noise with an SNR value of 40 dB into account. In both cases for Lens 1, the error in mobile agent position determination was around 2 cm, while in the case of Lens 2, it was less than 10 cm (when furthest from the PSD).

As the graphs show, the error in the tests that included the gain imbalances’ factor is approximately four-times higher.

In conclusion, the proposed calibration method (preliminary distortion correction, data normalization and Zhang’s method adapted to small, continuous sensors) yielded good results in cases without gain factor imbalances and when the SNR was >40 dB, regardless of lens and/or PSD sensor distortion. In the worst case for Lens 1, the error in the angle of arrival and mobile agent position determination was around 0.25°, and for Lens 2, it was 0.7°.

These simulations were performed to analyze the most problematic sources of error, but there are other sources of error that were not modeled and which arose in the experimental tests, such as a poor connection between the lens and the PSD sensor, a faulty and/or asymmetrical lens or manufacturing problems with the PSD sensor.

5. Experimental Tests

For these tests, we used a 2D calibration template consisting of 14 OSRAM SFH-4233 LEDs, as shown in

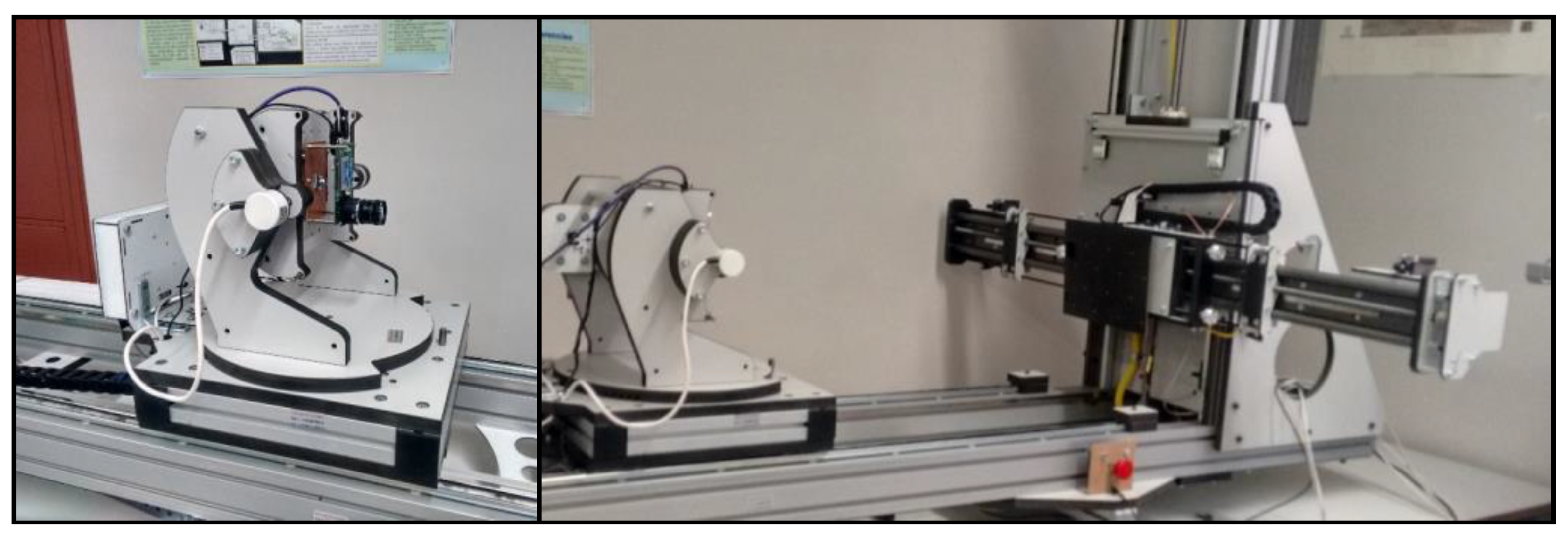

Figure 10. A National Instruments PCI-6289 data acquisition card was used to control data acquisition and to switch the LEDs on and off. The outgoing signal was a 1000-Hz sinusoidal signal generated with an AFG 3022B-Tektronix. The frequency was selected bearing in mind the harmonics of other sources of noise (mains electricity, fluorescent lighting).

To obtain the different images (

Figure 11), an automated system was used with five degrees of freedom, three of translation and two of rotation.

The first calibration was performed with a 40-mm focal length lens measuring 1 in in diameter; given the large focal length, radial distortion and the field of view angle were low. The second calibration was performed with a spacecom JF7.5M-2 lens with a focal length of 7.5 mm and a field of view of 50°, yielding appreciable radial distortion.

In the first test, we used 12 images covering the entire PSD sensor surface, in different positions and angles, six of which are depicted in

Figure 12 (not all of the images have been included, as they overlap and would be difficult to distinguish if the entire set were shown in the figure).

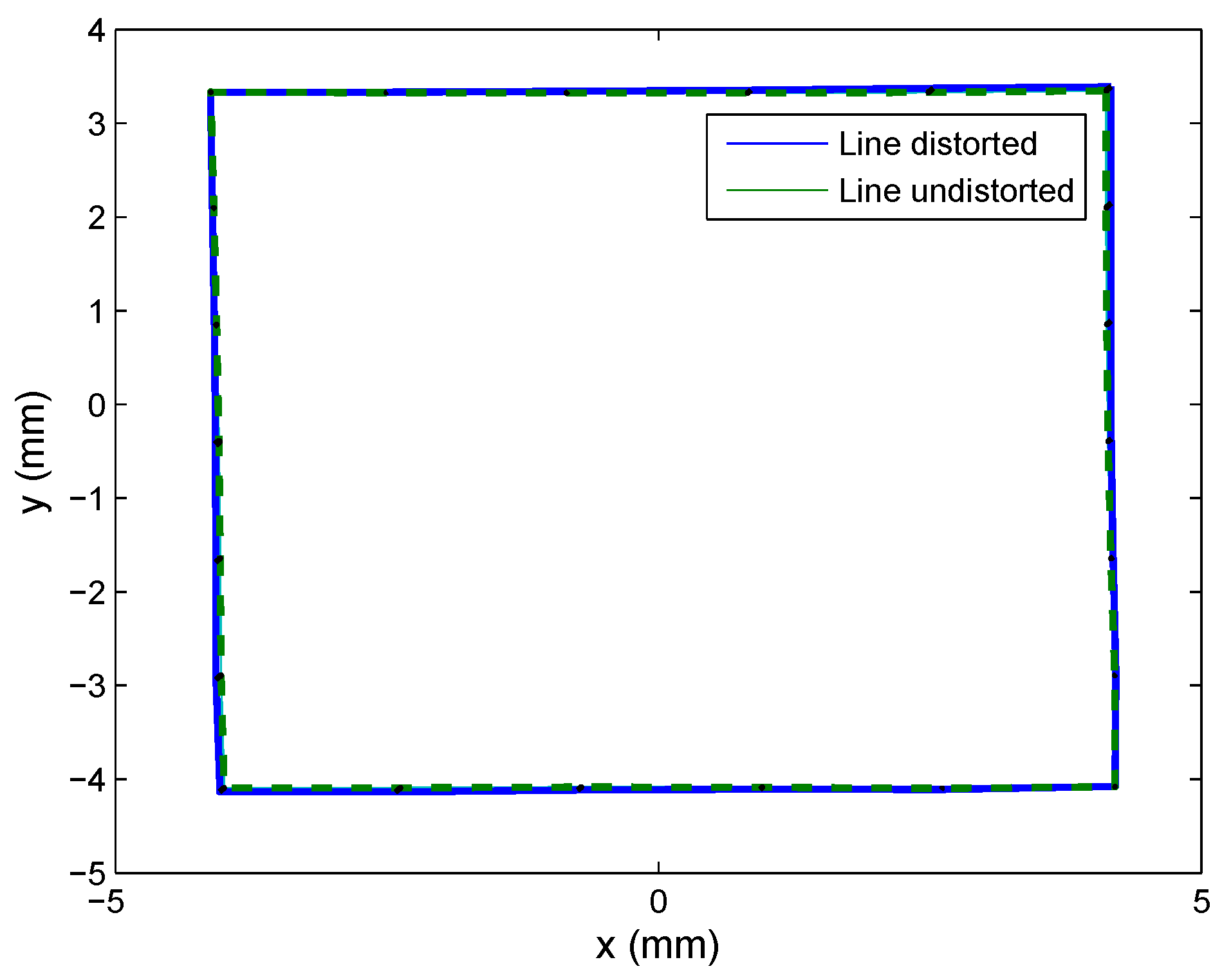

Table 11 shows the results obtained for the intrinsic parameters using a different number of images, in this case, the results of 8–12 images. The first row with the legend “residuals” refers to the sum of errors in all images between points in the calibration template and re-projected points (from the images in the PSD onto the template), using the parameter values obtained during calibration. In

Figure 13, the error using 12 images is depicted.

As can be seen, the results remained stable with small changes from 10 images onward, and therefore, the result will remain unchanged even if more images are used. Note that for each image, the residuals are the sum of errors of: 14 points · number of images.

To determine whether optimization of the calibration process generated intrinsic parameters of similar dimensions to those of the real parameters (abstraction of the method could yield mathematically-correct solutions that would solve the calibration, but with values that did not conform to the real parameters), we examined, by way of example, the focal length value. For this, we took four points from those shown in

Figure 14 on the PSD sensor (the blue line represents measured points and the green line corrected points; note that this is a lens without distortion).

Given the coordinates for the points

and the distance from the PSD sensor to the transmitter, we calculated the focal length using Equation (

44).

where

f is the focal length,

H is the distance between two points in the environment,

h is the distance between the same points, but in the image plane, and

S is the distance between the optical system and the transmitter.

Using the corners of the square in

Figure 14, where

S is equal to 950 mm ± 1 mm (error caused by the automated mounting encoder), we found that:

From the lower right corner to the lower left corner, His equal to 200 mm and h is equal to 8.2058 mm; therefore, the focal length is 38.9776 mm.

From the lower left corner to the upper left corner, H is 180 mm and h is 7.4285; therefore, the focal length is 39.2060 mm.

From the upper left corner to the upper right corner, H is 200 mm and h is 8.2456; therefore, the focal length is 39.1666 mm.

From the upper right corner to the lower right corner, H is 180 mm and h is 7.4297 mm; therefore, the focal length is 39.2123 mm.

As can be seen, these are within range of the solution given by calibration.

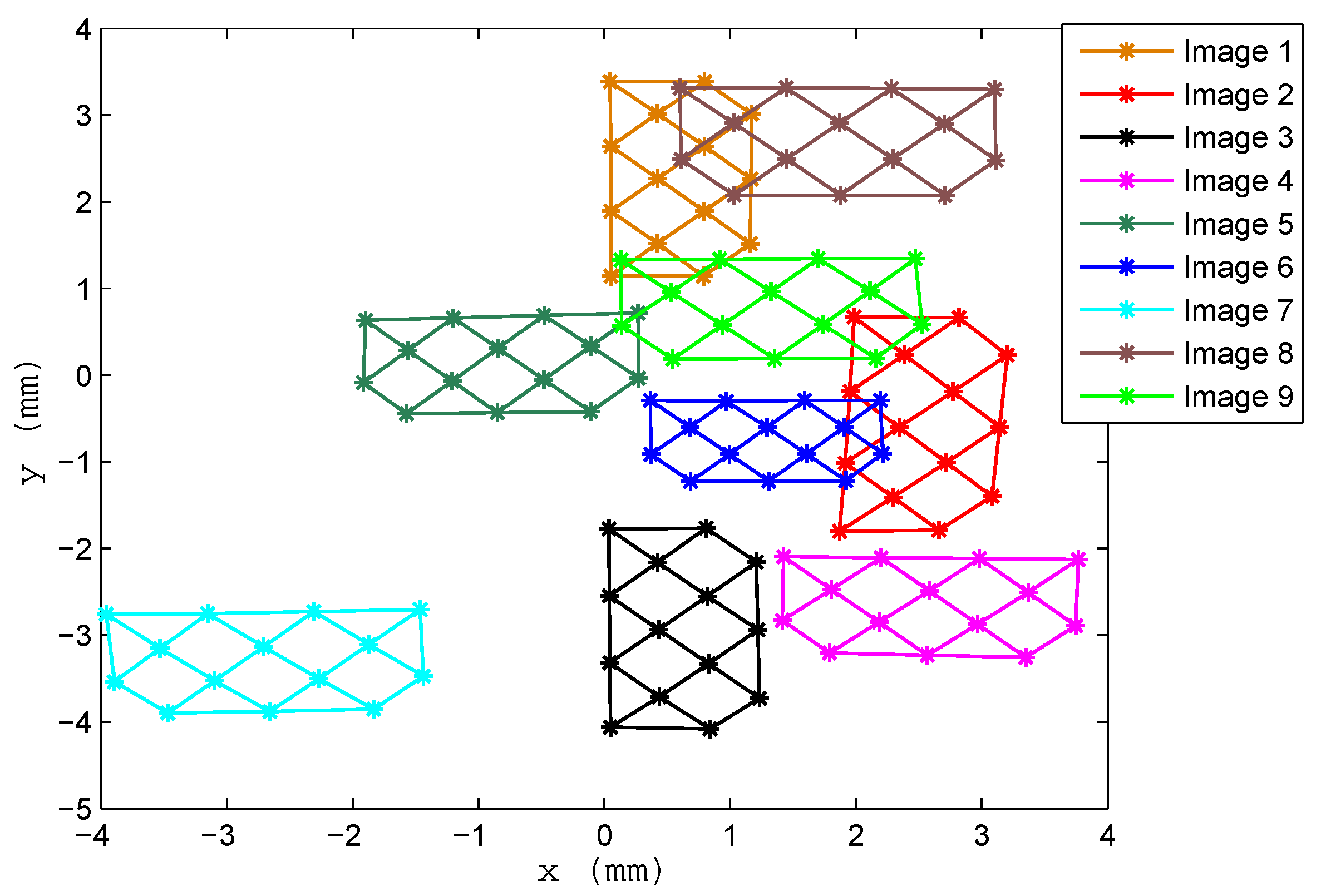

In the case of calibration using the JF7.5M-2 lens, we also used 12 images, six of which are shown in

Figure 15.

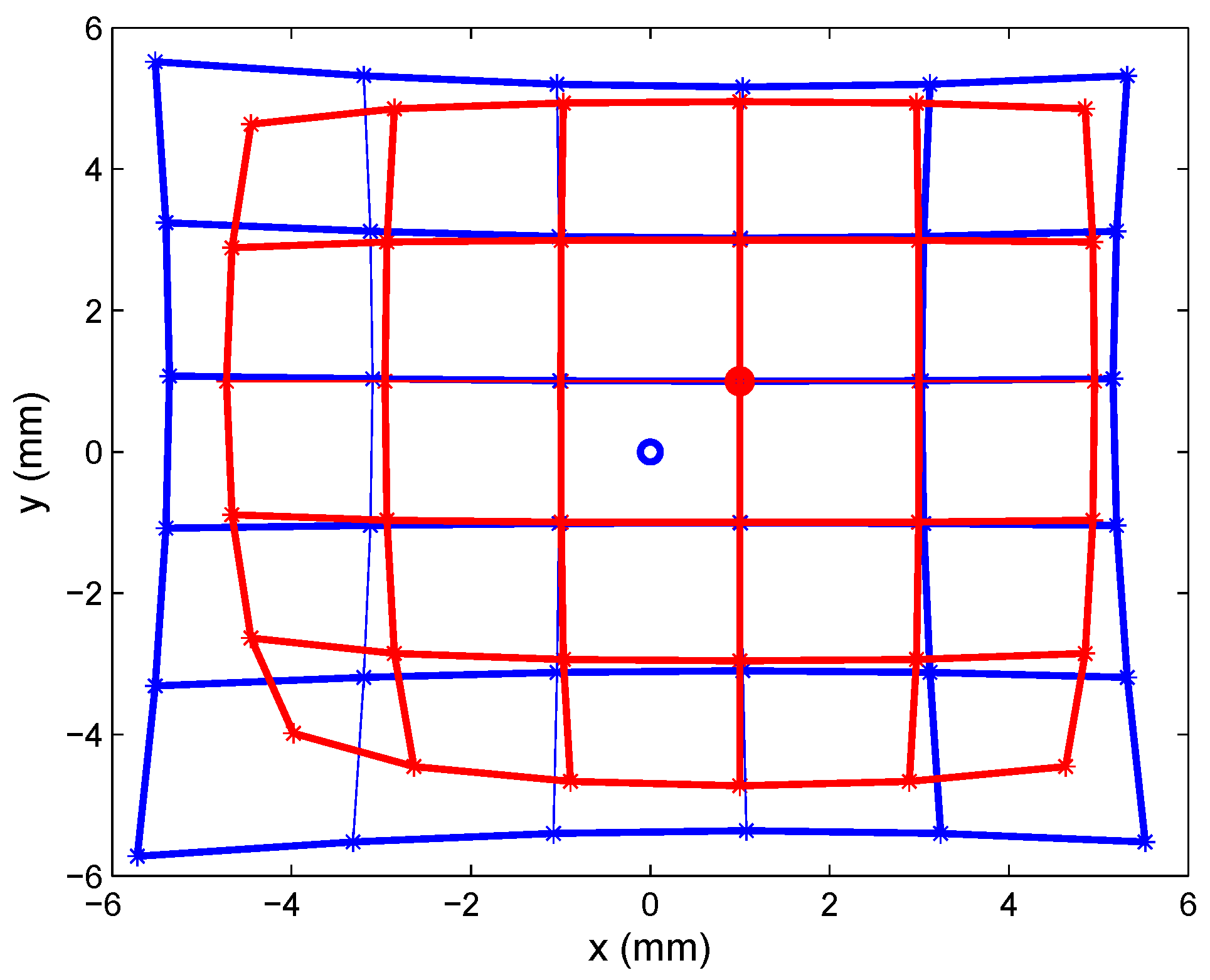

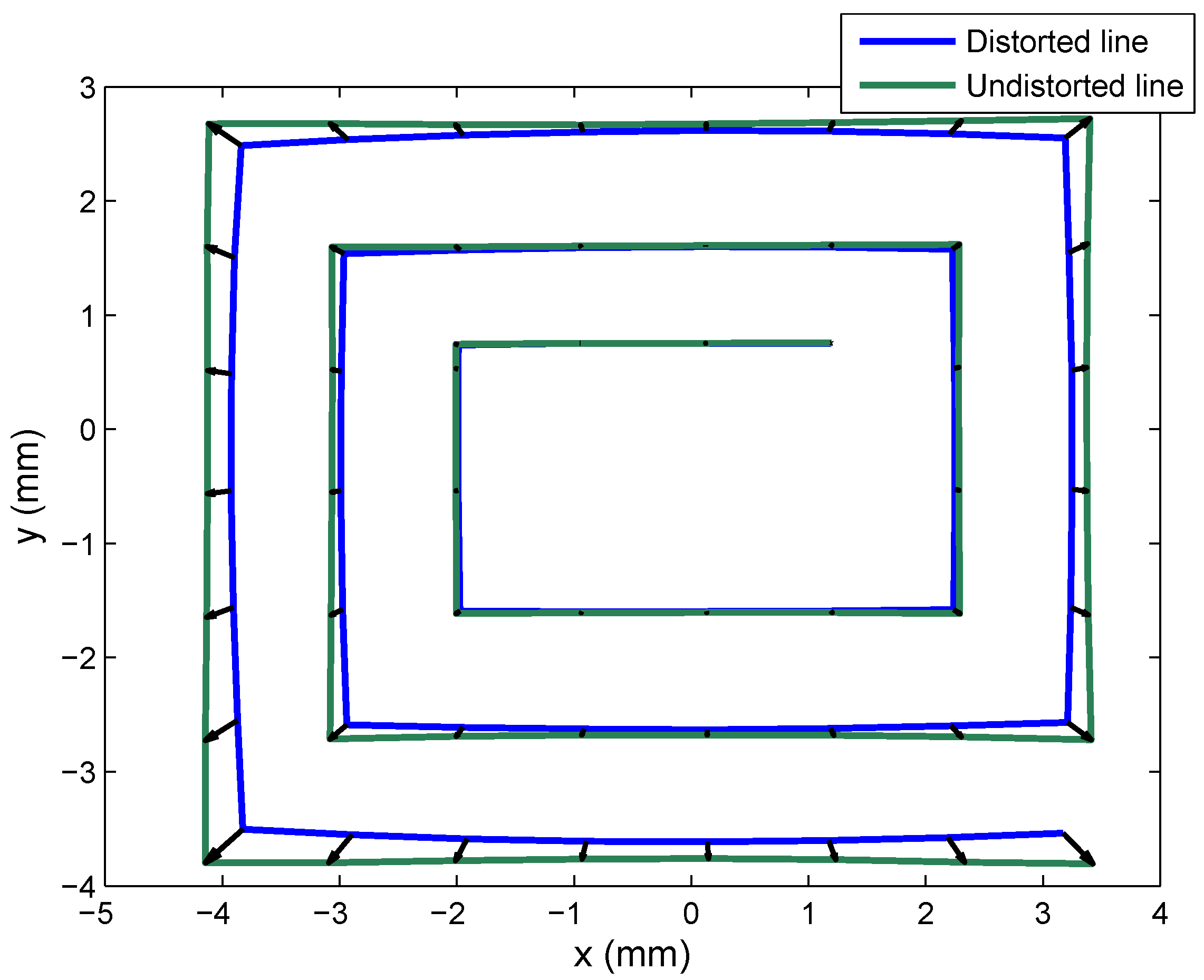

To provide a visual depiction of how distortion was corrected, we took points from the entire surface of the PSD sensor equipped with the JF7.5M-2 lens. The test mounting described earlier was used to perform linear movements forming a “spiral”. These data are shown in

Figure 16, where the blue line shows the points obtained with clearly evident radial distortion, and the green line shows the points corrected with the intrinsic parameter values (distortion and optical center) obtained from calibration.

We also conducted the same test with Lens 2, to calculate the focal length according to Equation (

44). Taking the points at the very outer corners of the “spiral” and given that the distance between the PSD sensor and the IR transmitter was 350 mm, we found that:

From the lower right corner to the lower left corner, H is equal to 350 mm and h is equal to 7.5714 mm; therefore, the focal length is 7.5714 mm.

From the lower left corner to the upper left corner, H is 300 mm and h is 6.4663; therefore, the focal length is 7.5441 mm.

From the upper left corner to the upper right corner, H is 350 mm and h is 7.5130; therefore, the focal length is 7.5130 mm.

From the upper right corner to the lower right corner, H is 300 mm and h is 6.4797 mm; therefore, the focal length is 7.5597 mm.

In

Table 12, which shows the parameter values obtained from calibration, the focal length was 7.4933 mm, while in the results obtained in this test, the greatest difference from this figure was 0.0781 mm, indicating that the calibration results were close to real values.

6. Conclusions

The simulations and empirical tests demonstrated that the best system calibration results were obtained when using the proposed model and calibration procedure, which commences with a preliminary distortion correction, since the calibration method is very sensitive to lens distortion. However, we found that in contrast to reports in other studies, data normalization results were no different to those obtained using DLT. We also found that in order to calibrate the system, signal noise must be low (SNR > 40 dB), although this does not present a problem, since calibration can be performed over short distances and, therefore, with high signal levels.

Another source of error that can be disregarded is PSD sensor distortion in the event that it is a pin-cushion sensor, since this type of PSD presents low distortion that is canceled out by calibrating lens distortion.

The larger error is produced by errors in the gain factors. Our results indicate that these could produce the largest errors since they depend on the quality of the components; thus, it would be necessary to perform an electrical calibration as explained in [

15], to eliminate this source of error.

As regards the experimental tests, the results obtained were close to reality, as shown in the focal length calculation using Equation (

44), which coincides with the focal length obtained in the geometric calibration, for two lenses. This also indicates that distortion was corrected in the case of the second lens, since if it were not duly corrected, the error in parameter (

h) would generate a very different focal length value from that obtained from calibration.

Correction of the distortion produced by the second lens is depicted in

Figure 16.