Ambient Sound-Based Collaborative Localization of Indeterministic Devices

Abstract

1. Introduction

1.1. Challenges

- Setting up an ad hoc network for sound localization

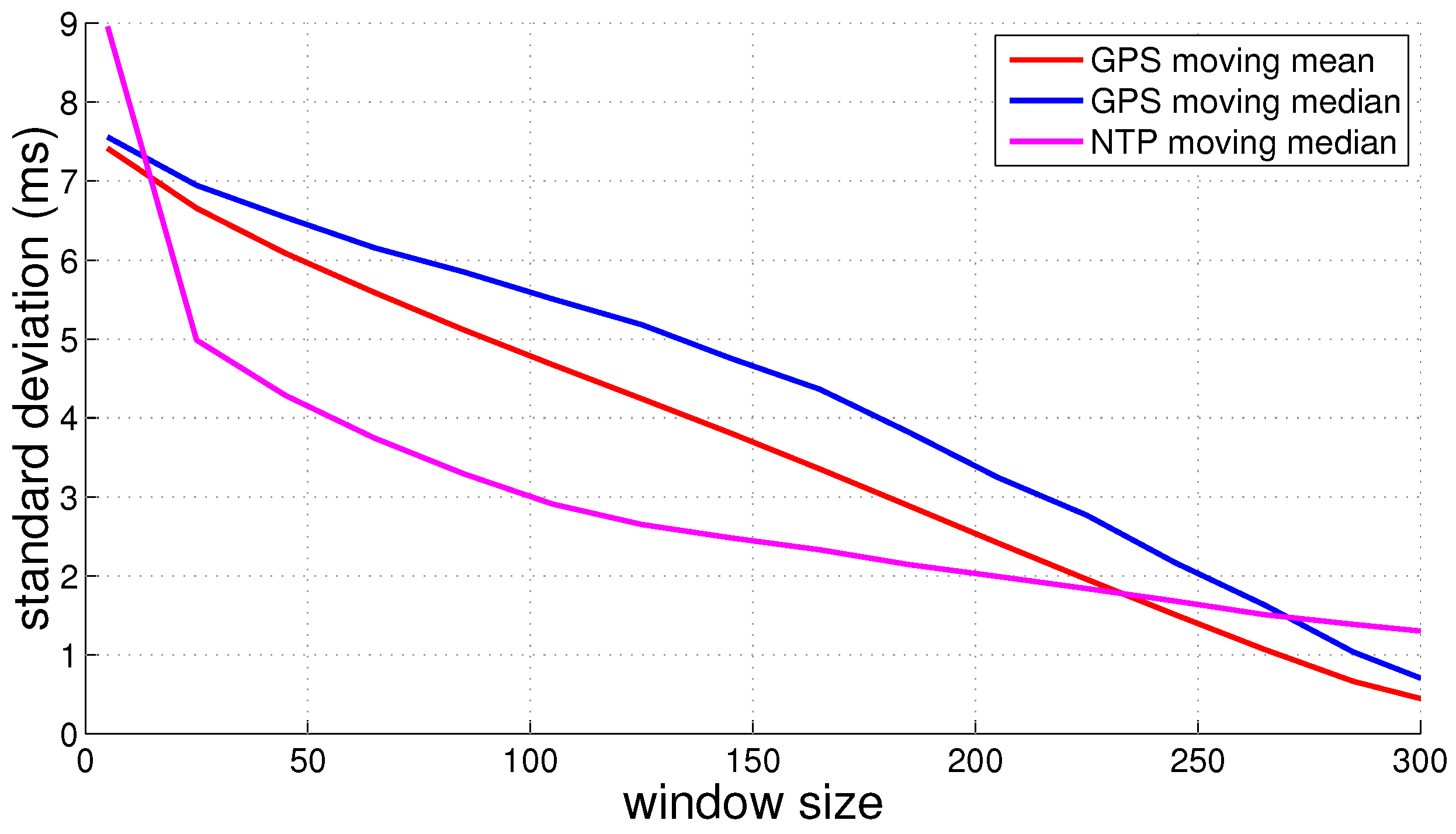

- Time synchronization amongst devices in the network

- Detecting and identifying sound events that can be used for localization

- Audio latency and jitter in the hardware platform and operating system

- Dealing with inaccurate measurements

- Localizing devices and sound event origins

1.2. Paper Contributions and Organization

- To the best of our knowledge, this work is the first to exploit indeterministic devices for collaborative localization based only on ambient sound, without the support of local infrastructure.

- We investigate Android’s indeterministic behavior and its applicability to collaborative localization. This provides insight into the requirements of collaborative localization and the limitations of indeterministic devices.

- We present the CLASS algorithm, which takes indeterministic behavior into account and performs collaborative localization on smartphones.

- Our approach can be applied when errors are introduced by utilizing different types of phones or inexpensive, simpler hardware platforms.

- We assess the performance of the CLASS algorithm on an outdoor testbed of Android devices.

2. Related Work

2.1. Target Localization Utilizing Anchor Devices with Known Positions

2.2. Collaborative Localization Utilizing Only Sound Signals

3. Problem Formulation

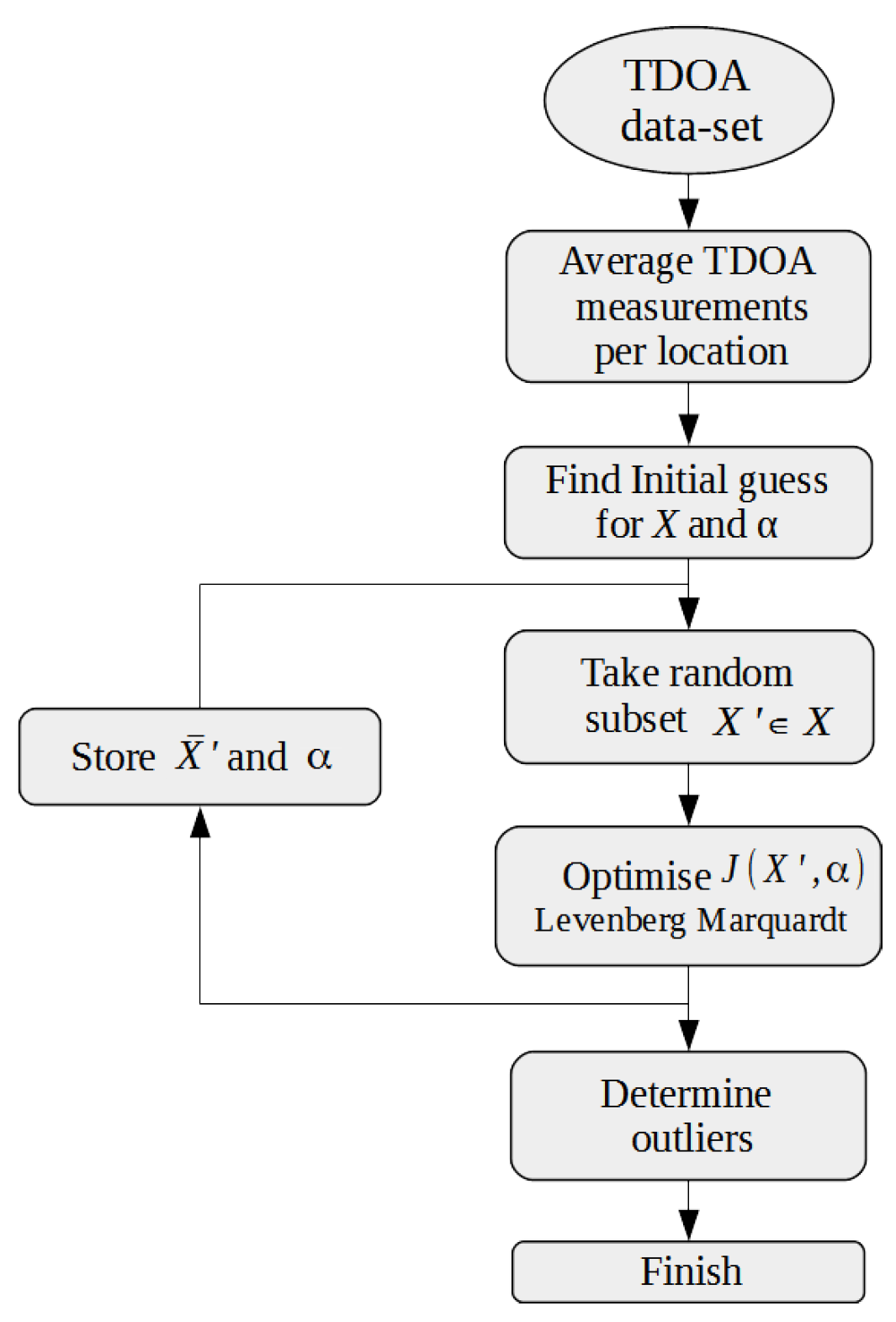

4. A Collaborative Localization Algorithm: CLASS

4.1. Averaging TDOA Values for Events at Identical Locations

Algorithm 1: TDOA filtering and averaging.
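As an illustration of what averaging TDOA values for events at identical locations could look like, the sketch below takes repeated measurements for one device pair, discards values that lie far from the median, and averages the remainder. The function name `average_tdoa`, the median-absolute-deviation rule, and the threshold `k` are assumptions made for this example; they are not taken from Algorithm 1.

```python
from statistics import mean, median


def average_tdoa(tdoas, k=3.0):
    """Average repeated TDOA measurements (seconds) after a median/MAD filter.

    Hypothetical helper: the MAD rule and the default k are illustrative
    assumptions, not the filtering rule used by CLASS.
    """
    if not tdoas:
        raise ValueError("need at least one TDOA value")
    med = median(tdoas)
    # Median absolute deviation: a robust estimate of the spread.
    mad = median(abs(t - med) for t in tdoas)
    if mad == 0.0:
        # At least half of the values equal the median; keep only those.
        kept = [t for t in tdoas if t == med]
    else:
        kept = [t for t in tdoas if abs(t - med) <= k * mad]
    return mean(kept)
```

For example, `average_tdoa([0.0100, 0.0102, 0.0099, 0.0101, 0.0500])` ignores the 50 ms value and averages the four measurements near 10 ms.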

4.2. Histogram-Based Outlier Detection

Algorithm 2: HBOS filter.
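To make the idea of a histogram-based outlier score concrete, the following is a minimal univariate sketch: values are binned into a fixed-width histogram, and each value is scored by the logarithm of the inverse of its normalized bin height, so values in sparse bins score high. The function names `hbos_scores` and `hbos_filter`, the Freedman–Diaconis bin-width choice, and the `max_score` threshold are assumptions for illustration and may differ from Algorithm 2.

```python
import math
from statistics import quantiles


def hbos_scores(values, bins=None):
    """Univariate histogram-based outlier score: log(peak_count / bin_count).

    A score of 0 means the value falls in the fullest bin; larger scores
    mean sparser bins. Illustrative sketch only.
    """
    n = len(values)
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * n
    if bins is None:
        # Assumed bin-width rule (Freedman-Diaconis): 2 * IQR / n^(1/3).
        q1, _, q3 = quantiles(values, n=4)
        fd_width = 2.0 * (q3 - q1) / n ** (1.0 / 3.0)
        if fd_width > 0:
            bins = max(1, math.ceil((hi - lo) / fd_width))
        else:
            bins = max(1, round(math.sqrt(n)))
    width = (hi - lo) / bins
    counts = [0] * bins
    index = []
    for v in values:
        i = min(int((v - lo) / width), bins - 1)  # clamp the maximum value
        counts[i] += 1
        index.append(i)
    peak = max(counts)
    return [math.log(peak / counts[i]) for i in index]


def hbos_filter(values, max_score=1.0):
    """Keep only values whose score does not exceed max_score (hypothetical)."""
    scores = hbos_scores(values)
    return [v for v, s in zip(values, scores) if s <= max_score]
```

With a cluster of values near 1.0 and a single value at 9.0, the isolated value lands in a bin of its own and is removed, while the clustered values are kept.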

4.3. Starting Point Levenberg–Marquardt Solver

4.4. Main Loop

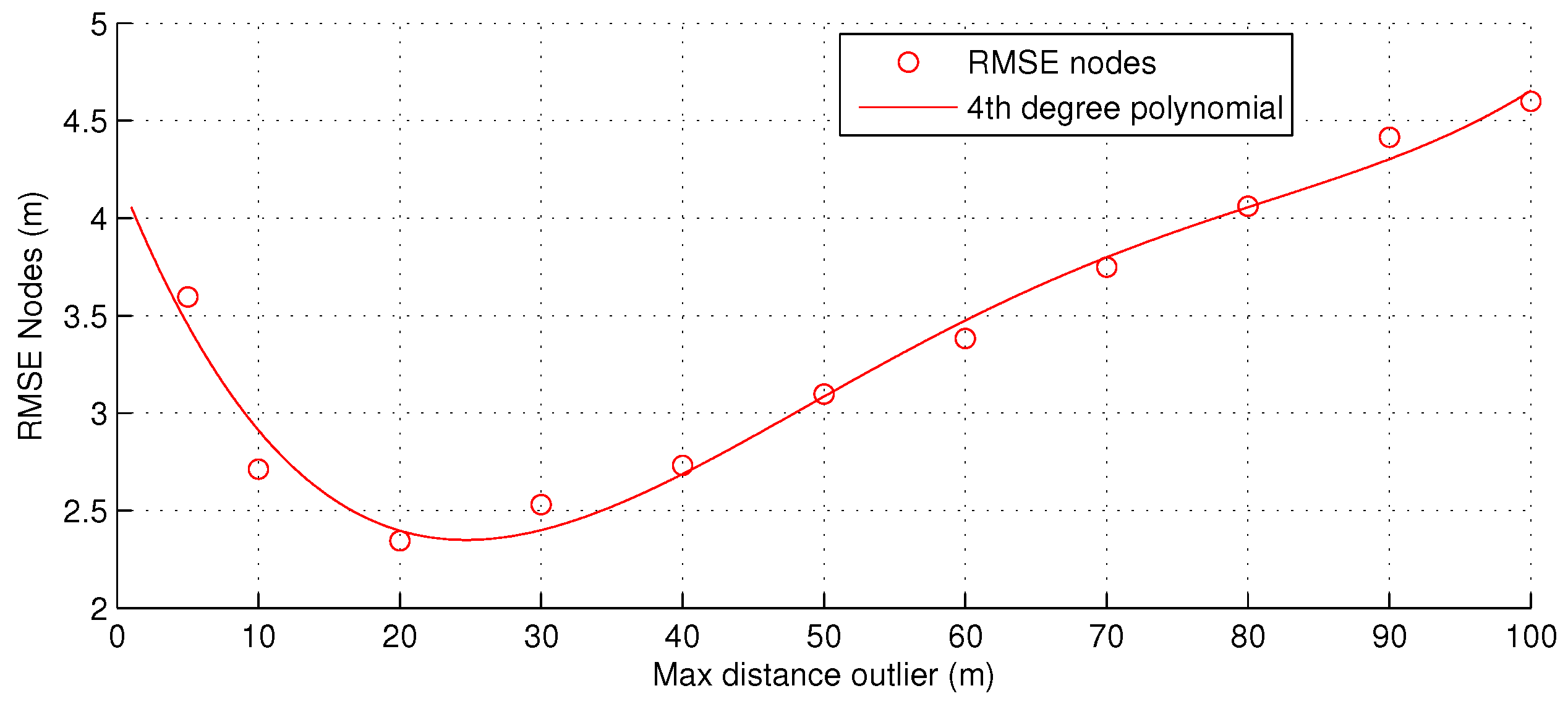

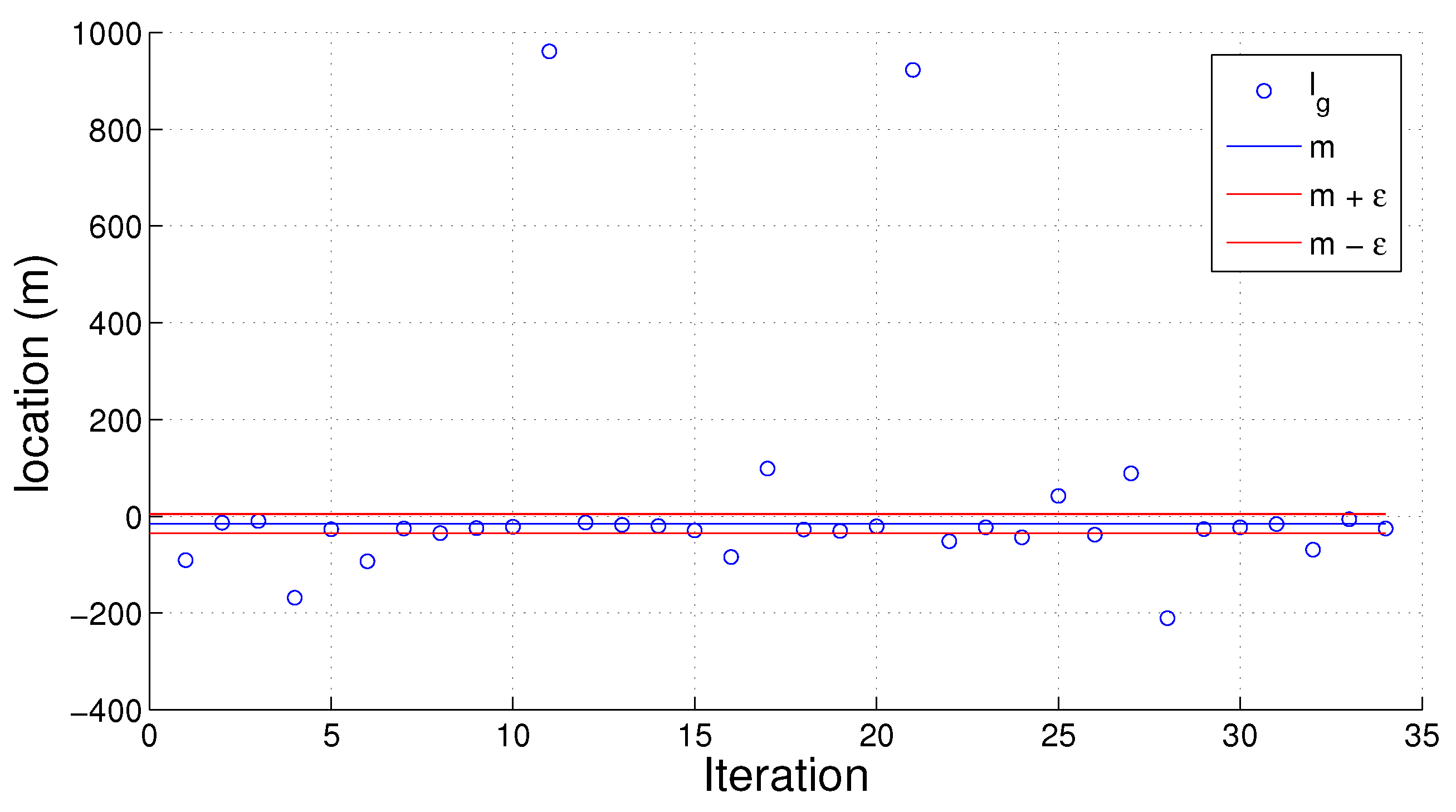

4.5. Outlier Detection in the Results

4.6. Complexity

5. Experimental Validation

5.1. Android Application

- Time synchronization amongst devices in the network

- Detecting sound events and recording their Time Of Arrival (TOA)

- Sharing and aggregation of time stamps

- Executing the localization algorithm with TOA data
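The last two tasks in the list above depend on turning shared TOA time stamps into time differences of arrival. The sketch below shows that step for a single sound event, assuming the device clocks are already synchronized. The function names, the dictionary layout, and the fixed speed of sound are assumptions made for this example.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value: dry air at about 20 °C


def tdoa_from_toa(toa_by_device, reference):
    """Return {device: TOA - TOA_reference} in seconds for one sound event.

    toa_by_device maps a device id to its synchronized TOA time stamp.
    """
    t_ref = toa_by_device[reference]
    return {dev: t - t_ref
            for dev, t in toa_by_device.items() if dev != reference}


def range_differences(tdoas, speed=SPEED_OF_SOUND_M_S):
    """Convert TDOA values (seconds) into distance differences (meters)."""
    return {dev: speed * dt for dev, dt in tdoas.items()}


# Usage with made-up time stamps for three devices.
toa = {"A": 1.000, "B": 1.010, "C": 0.995}
tdoa = tdoa_from_toa(toa, reference="A")
diffs = range_differences(tdoa)
```

A positive range difference for a device means the event was farther from that device than from the reference; a negative value means it was closer.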

5.1.1. Time Synchronization

5.1.2. Sound Event Detection

- Application

- Total number of buffers in the pipeline

- Size of each buffer, in frames

- Additional latency after the app processor, such as from a digital signal processor

- The Linux Completely Fair Scheduler

- High-priority threads with FIFO scheduling

- Priority inversion

- Long scheduling latency

- Long-running interrupt handlers

- Long interrupt disable time

- Power management

- Security kernels
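The buffer count and buffer size items in the list above combine into a simple lower bound on audio latency: each buffer holds a fixed number of frames, and each frame lasts one sample period. The sketch below computes that bound. The function name and the example numbers are illustrative assumptions, not measurements from the experiments.

```python
def buffer_latency_ms(num_buffers, frames_per_buffer, sample_rate_hz, extra_ms=0.0):
    """Estimate buffering latency in milliseconds.

    num_buffers * frames_per_buffer frames must pass through the pipeline;
    extra_ms stands in for any additional latency after the app processor.
    """
    if sample_rate_hz <= 0:
        raise ValueError("sample rate must be positive")
    return 1000.0 * num_buffers * frames_per_buffer / sample_rate_hz + extra_ms


# Example: 2 buffers of 240 frames at 48 kHz.
latency = buffer_latency_ms(2, 240, 48000)
```

This estimate covers only the deterministic part of the delay. Scheduling effects such as those listed above add jitter on top of it, which a fixed formula cannot capture.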

5.1.3. Sharing and Aggregation of Time Stamps

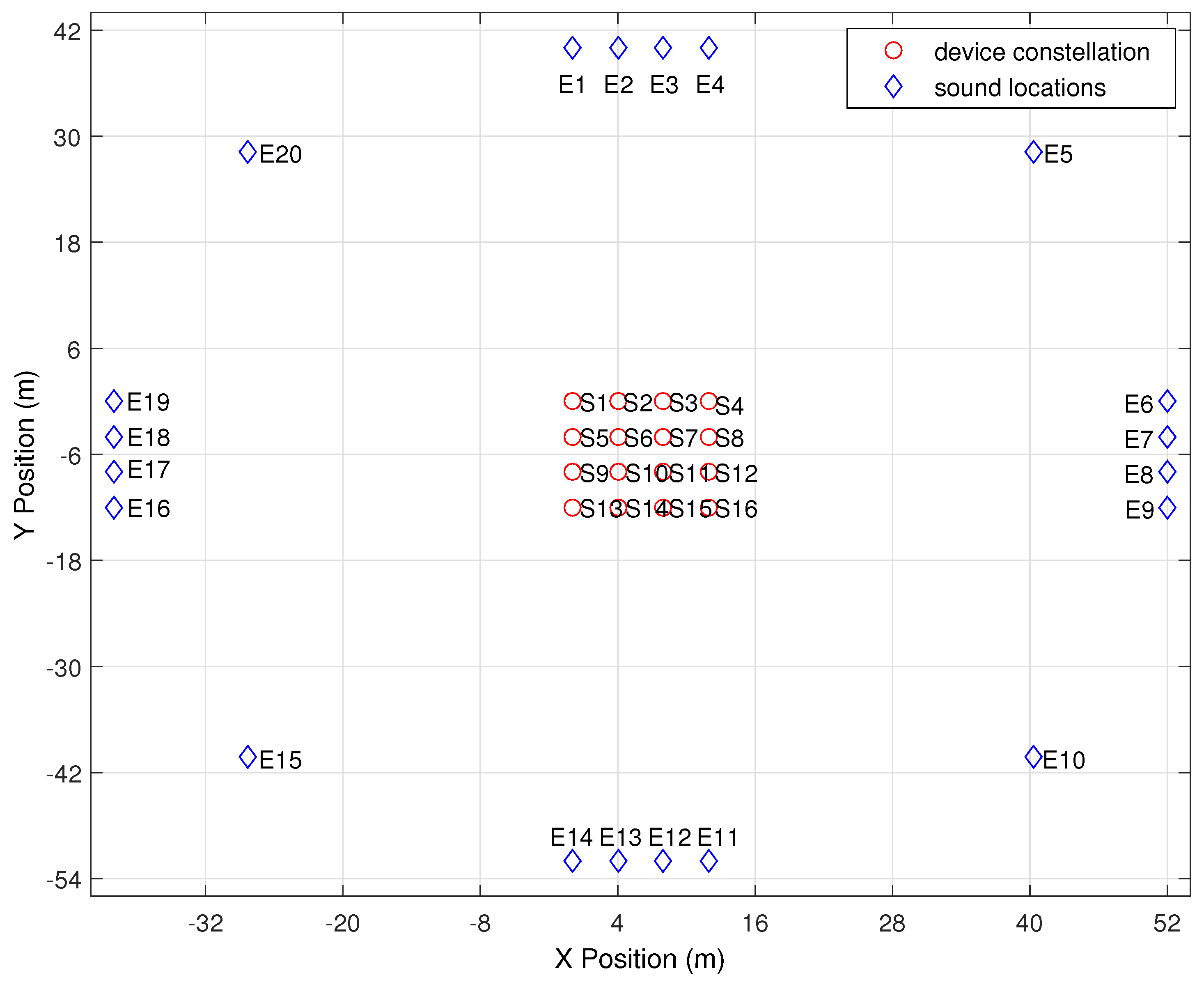

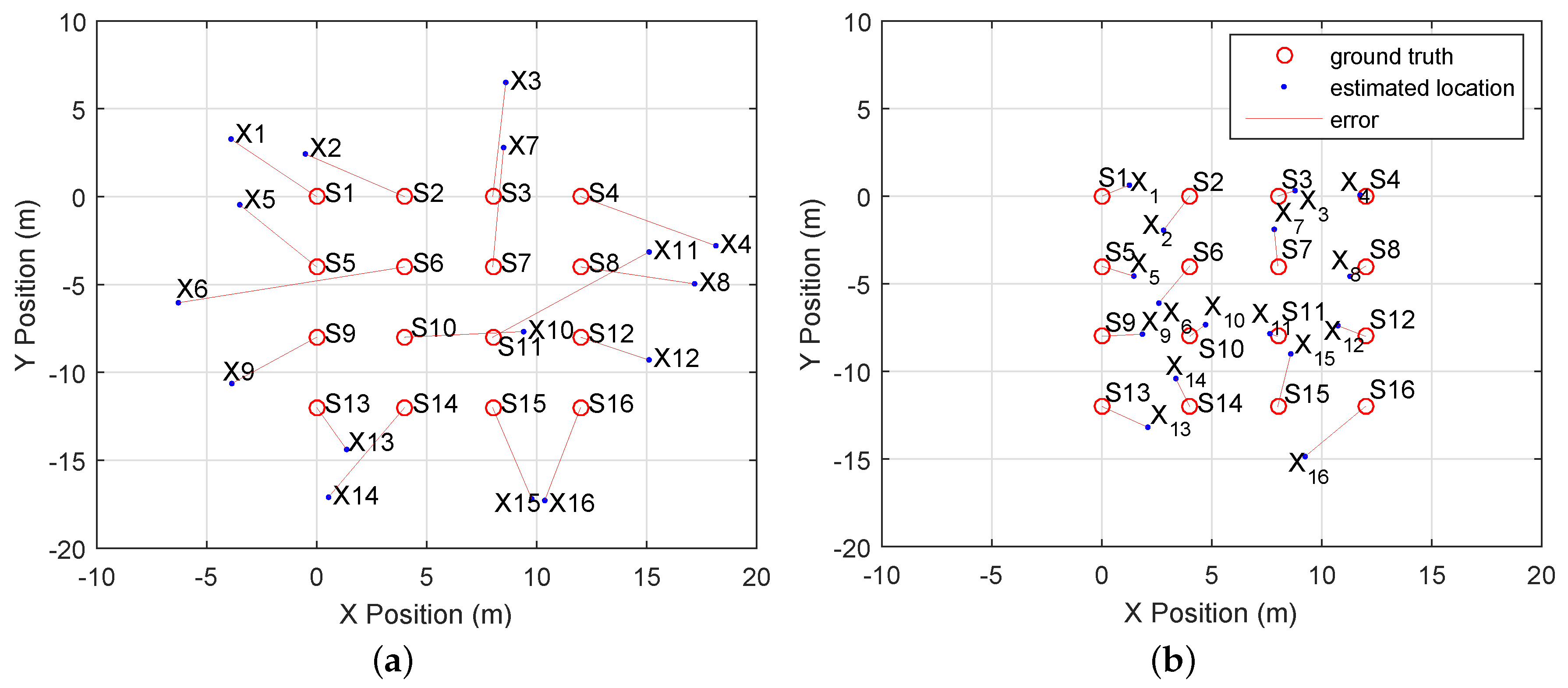

5.2. Outdoor Experiment

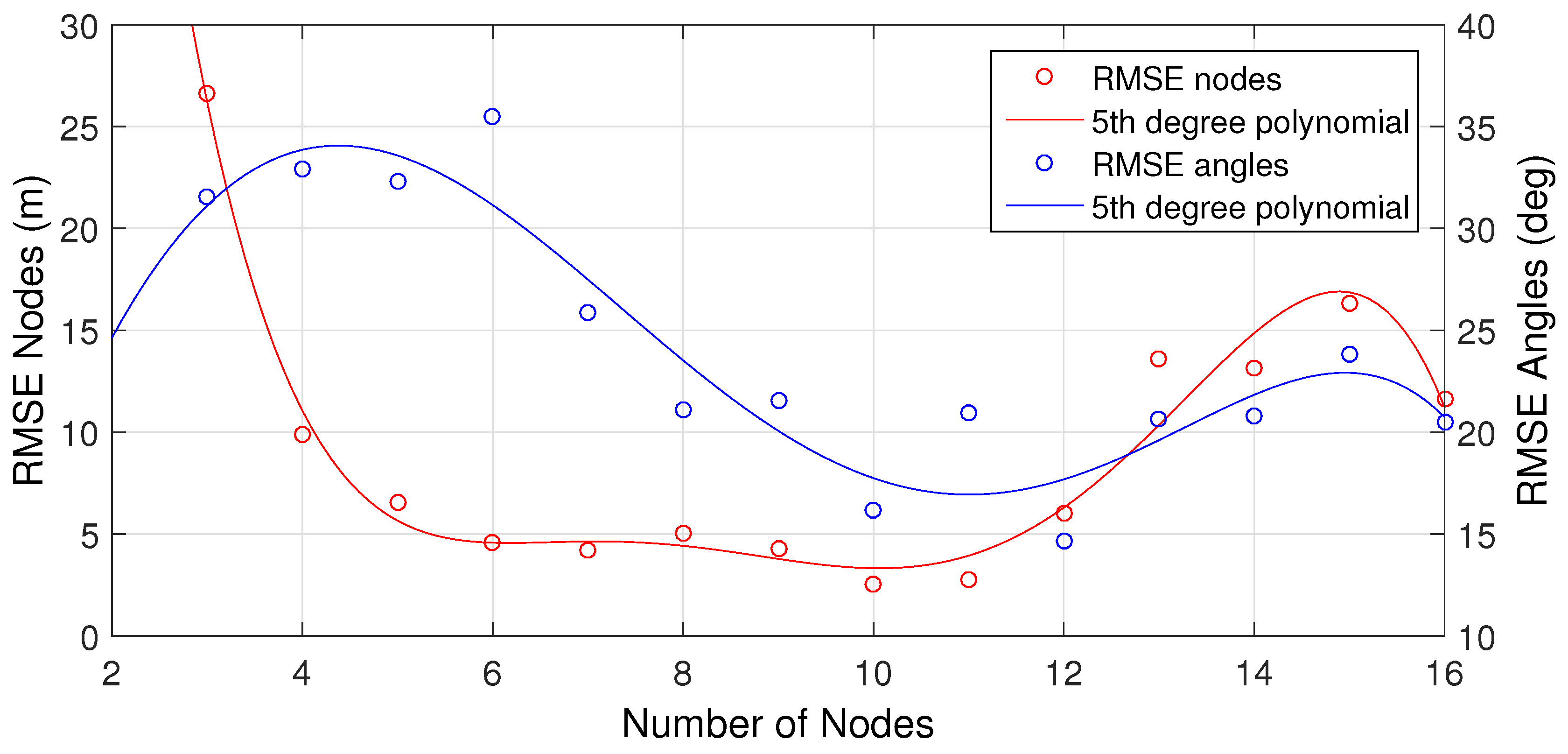

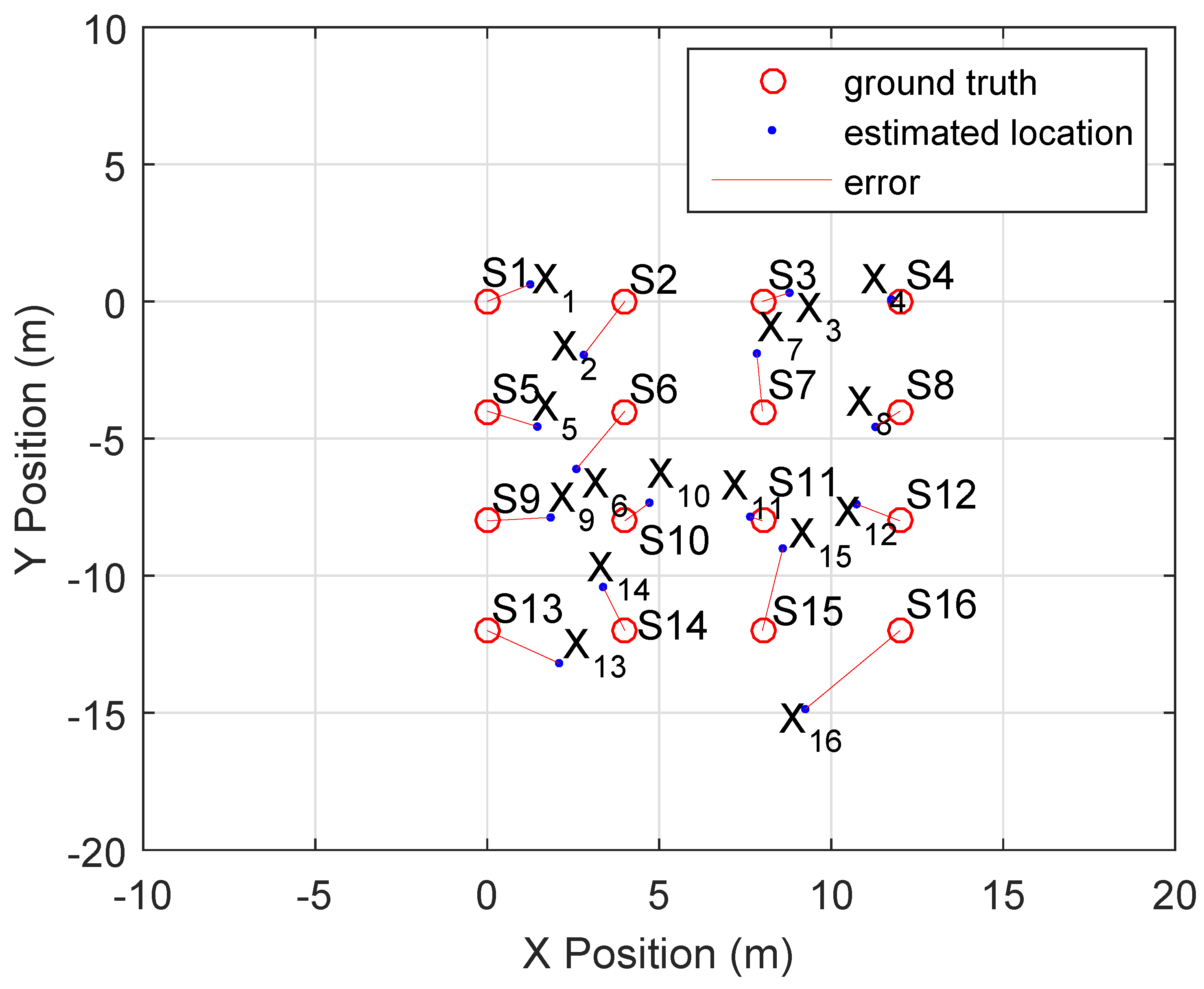

5.3. Results

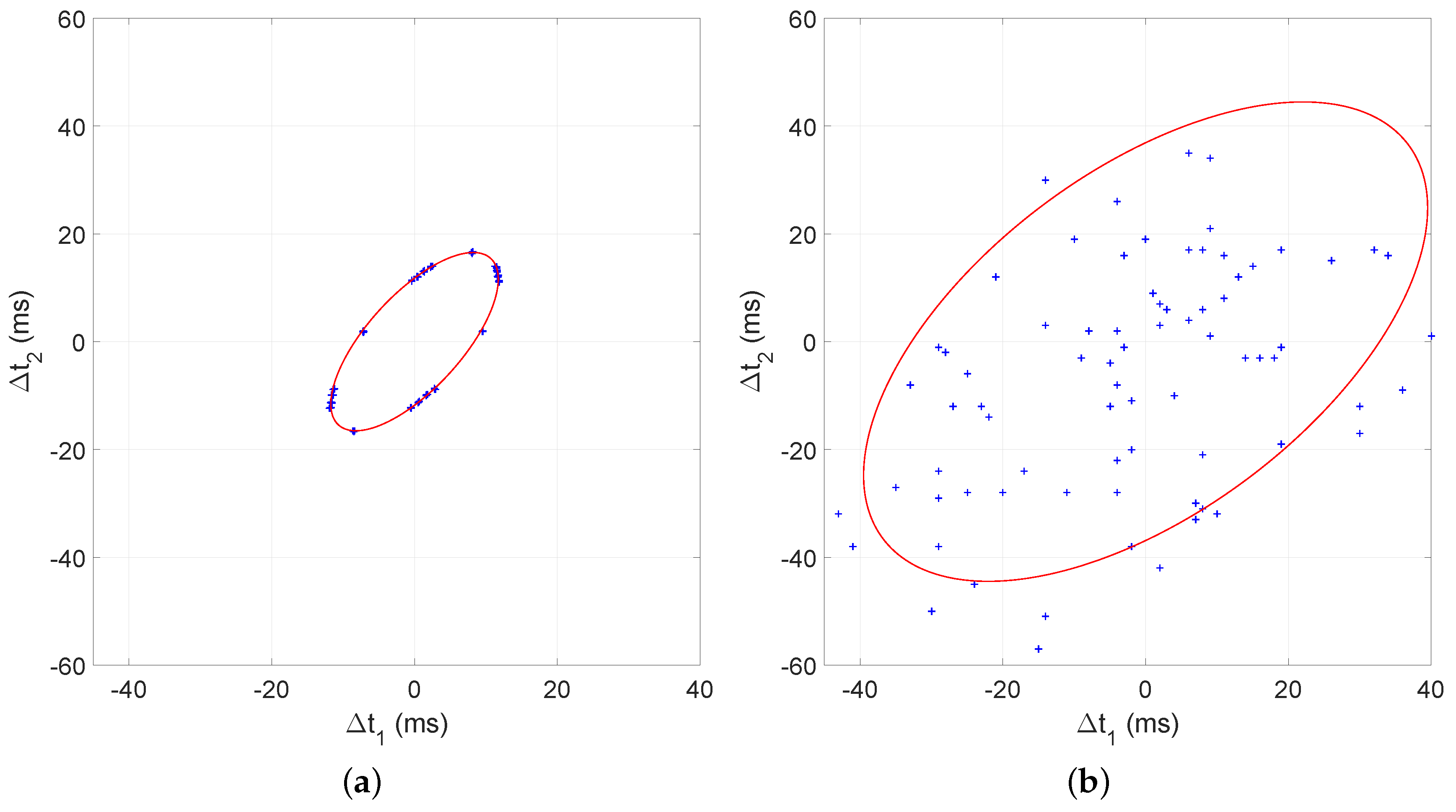

5.4. Comparison with Ellipsoid Method

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Kamminga, J.; Le, D.; Havinga, P. Ambient Sound-Based Collaborative Localization of Indeterministic Devices. Sensors 2016, 16, 1478. https://doi.org/10.3390/s16091478