1. Introduction

A network of large number of sensors can be deployed to collect and process data to monitor a certain event. Advances in sensor technology have allowed the development of “smart” sensors that are not only able to collect but also process data [

1]. In addition to the sensing and processing units, these sensors have communication units that provide additional capability of dissemination of data and information. Modern sensors are inexpensive and can be deployed in large numbers to form a sensor network so that the sensors collaborate in collecting, analyzing and processing information [

1]. Deployment of large sensors in a network has the benefit of increasing the resolutions of the data collected and, as a result, increasing the accuracy of the processed information. Moreover, sensor networks, by virtue of the multiplicity of the data collected from a large number of sensors, provide protections against sensor failures, mitigates the effect of shadowing due to no line-of-sight (LOS) transmission path and enhances reliability against low Signal-power-to-Noise-Ratio (SNR).

The most important feature of sensor networks is their capability to process data collaboratively. Based on their data processing architecture, sensor networks can be classified as centralized, distributed and hierarchical. In a centralized architecture, all of the data processing functions are accomplished at a central location known as a fusion center where the data is processed and information is extracted. Since the raw data from all the sensors is transmitted to the fusion center, the centralized architecture requires a huge communication bandwidth [

2]. Moreover, the centralized architecture is less attractive because it is not scalable, and it is prone to the robustness of the fusion center.

The distributed architecture has no central processing unit, and, thus, the sensors locally process the collected data with limited collaboration from other sensors, typically with neighboring sensors. Since each sensor communicates only with its neighboring sensors, frequency reuse can be implemented to save bandwidth. Furthermore, transmission power is saved because the distance of signal transmission is limited to neighboring sensors. Distributed architecture has additional benefits such as reduced computational load and enhanced robustness to failures. However, distributed architecture requires more sophisticated sensor nodes, which have the capability to process data, as well as algorithms that can extract optimal information with minimal exchange of data among the sensors. This paper focuses on developing a distributed algorithm that reduces sensor communications with the objective of reducing bandwidth and transmission power.

An important application of sensor networks is for target tracking [

3,

4,

5,

6]. From a signal processing perspective, target tracking can be considered as a filtering problem [

7,

8]. The kinematic parameters of the target, which evolve in time, are modeled as the states of a dynamic system. The data collected from the sensors, which are functions of the kinematics parameters, represent the measurements of the dynamic system. The objective of the target tracking problem is then the estimation of the kinematic parameters, which typically include the position, velocity and acceleration of the target, recursively in real-time.

When the target tracking model is formulated as a linear and a Gaussian dynamic system, the tracking problem is analytically tractable and its solution can be obtained using a Kalman filter. However, most target tracking problems are modeled as nonlinear dynamic systems, primarily because the relationship of the measurements obtained from the sensors and the kinematic parameters is usually nonlinear [

7,

9]. For nonlinear dynamic systems, exact analytic solutions are generally not available; however, approximate and suboptimal solutions can be obtained using grid-based filters or extended Kalman filters (EKF) and their variants. A more accurate and popular estimation method for nonlinear and non-Gaussian dynamic systems, however, are Sequential Monte Carlo (SMC) methods or, as widely refereed, particle filtering methods [

10,

11].

Particle filtering methods are Bayesian methods that recursively approximate the posterior distribution of the unknown kinematic parameters using a discrete measure with a random support [

12,

13]. The discrete measure is defined using a set of samples, also refereed particles, and their associated weights. The particles are sequentially drawn from an importance function through Monte Carlo technique, and the weights are recursively computed using the prior and the likelihood function of the kinematic parameters.

Particle filtering methods can be implemented in a distributed manner on sensor networks [

3,

4,

14,

15]. In such implementation, each of the sensors on the network locally runs a particle filtering algorithm and exchanges information with the other sensors to approximate the global posterior distribution of the kinematic parameters. Sensor networks, however, are resource constrained, and have limited power and communication bandwidth. When the number of sensors in the network is large, the required bandwidth for communication between the sensors can be significant [

16]. Moreover, in most cases, sensors consume more power for transmission of data than for data processing. These constraints limit the amount of information that can be exchanged among sensors. A critical issue in distributed particle filtering, therefore, is the development of algorithms that efficiently represent the information that is exchanged among the sensors to build the global posterior density. The objective of this paper is to develop such an algorithm.

State-of-the-Art

There have been many distributed particle filtering (DPF) methods proposed in the literature. These distributed particle filtering algorithms can be broadly categorized into two classes in terms of the type of information they exchange among each other. The first class of DPF methods requires the exchange, among the sensors, some form of the raw measurement collected by each sensor. Recently, an example of such DPF method that requires the availability of all the raw measurements at every sensor node is proposed in [

3]. This approach allows each sensor to compute the global posterior distribution locally. While such an approach provides an optimal approximation of the global posterior density, and hence, achieves excellent performance, it is, however, resource prohibitive due to its exorbitant power and bandwidth demand [

17]. The second class of DPF methods requires the exchange of some statistics of the local estimate, rather than the raw measurement, of each sensor. Since the estimates represent processed information, this approach may demand lower processing load from the sensors than the methods of the first class. One such approach is described in [

18], where each sensor transmits its computed statistics of the local posterior density to a central processing unit where the data is fused and the global posterior density is determined. Although the computational load of the fusion center may be reduced, due to partial data processing by the sensors, this strategy not only is not entirely distributed but also demands high power requirements because of the need for sensors located far away to send their information to the fusion center. An efficient approach to reducing the power and bandwidth requirement of a sensor network is, therefore, to develop a distributed algorithm whose communication strategy requires the sensors to exchange their statistical information to neighbouring sensors only rather than to a far-located fusion center or to all sensors. We note that the communication power requirement of sensors increases proportional at least to the square of the transmission distance. Moreover, when the sensors are restricted to exchanging information with only their neighbours, the communication bandwidth can be reused in other sensor neighbourhoods. Most of the second class of DPF methods use neighbourhood information exchange strategies such as forward-backward propagation [

19] or a consensus filter [

19,

20,

21] to compute the statistics of the global posterior distribution. The performance as well as the efficiency, in terms of power and bandwidth, of the second class of the DPF methods depend on the type and the form of the information the sensors exchange. For example, in [

22] and also in [

20], each sensor approximates the local posterior distribution using a Gaussian function and transmits to its neighbours the mean and covariance of the approximation to cooperatively build the global posterior distribution through a gossip (consensus) algorithm. Similarly, [

23], builds the global posterior distribution from the Gaussian approximation of a local posterior through an iterative protocol based on covariance intersection. At the cost of power and bandwidth efficiency, a better approximation of the global posterior distribution can be obtained by approximating the local posterior distributions by Gaussian mixture models (GMM) as proposed in [

14,

24]. A DPF method where sensors collaborate to compute the global likelihood function, rather than the global posterior density, through a consensus filter has been reported [

25,

26,

27]. The work in [

25] provides a generalized approach of approximating the global likelihood through consensus filter. It approximates the log-likelihood by a polynomial function, and the sensors exchange only the coefficients of the polynomial function to compute the global likelihood.

This paper follows the approach where the sensors approximate their local likelihood functions by a Gaussian function, and, collaboratively with neighboring sensors, build the global likelihood function by exchanging the mean and covariance of their approximations. Our algorithm has similar computational cost and communication demand as the DPF methods that approximate either the likelihood or the posterior distribution by a Gaussian function. In our algorithm, the Gaussian approximated likelihood function is computed using the Monte Carlo technique. Such approximation of the likelihood function, with the availability of the prior distribution, provides better performance, particularly, for the common case where the measurement noise is Gaussian and the resulting likelihood function is uni-modal.

Our algorithm is based on the observation that, in particle filtering algorithms, the contribution of new measurements from the sensors to the recursive updating of the global posterior distribution is entirely contained within the global likelihood function. Assuming the measurement noise across all the sensors is identically and independently distributed (i.i.d.), it can be shown that the global likelihood function is a product of all the local likelihood functions. With an approximated Gaussian local likelihood functions, the global likelihood function is also a Gaussian function whose mean and covariance can be computed either by propagating the parameters of the local likelihood functions across the sensors using a forward-backward propagation strategy or by using an average consensus filter. In this paper, we have developed an algorithm that is flexible to employ both the forward-backward propagation and the average consensus filter information exchange strategies to compute the global likelihood function.

The rest of the paper is structured as follows. We present the system model in

Section 2. A brief overview of the particle filtering method is given in

Section 3. The proposed distributed particle filtering algorithm is laid out in

Section 4.

Section 5 describes the proposed strategy for information exchange among the sensors. Computer simulation results are given in

Section 6, and finally conclusions are provided in

Section 7.

2. System Model

Suppose a sensor network consisting of

K sensors is used for tracking a target. The problem of target tracking involves the sequential estimation of the kinematic parameters of the target from the measurements obtained from the sensors. From a signal processing perspective, target tracking is a filtering problem [

7,

8]. The time-evolution of the kinematic parameters and the relationship of the measurements to the kinematic parameters can be modeled by a dynamic system with a state-space representation in which the kinematic parameters represent the unknown states, and the measurements obtained from the sensors represent the observations of the dynamic system. In our mathematical representation, we denote these unknown states of the dynamic system at time

n by a vector

where

and

is the dimension of

.

Assuming the evolution of the vector

has a Markovian property, we represent the state equation of the dynamic system as follows:

where

denotes the function that describes the time-evolution of the vector

, and

is an

vector denoting the state noise. We assume the state function

is nonlinear. Because of the Markovian property, the state transition probability density function (pdf),

is solely dependent upon the pdf of

. Furthermore, we assume that

is known.

We represent the observation equation of the dynamic system as follows:

where

is a vector of dimension

and denotes the measurement obtained from sensor

k at time instant

n;

denotes the measurement function that maps the kinematic vector parameter

to the measurement vector

; and

is a vector of dimension

denoting the measurement noise. We assume that the measurement function

is nonlinear but known. Furthermore, we assume that the pdf of the measurement noise vector

is known, and it is identically and independently distributed (i.i.d.) across time and sensors. Therefore, the local likelihood function of each sensor

, for

are known.

From the above formulation, the objective of the tracking problem is the sequential real-time estimation of the state vectors from the measurements , where denotes the values of the states from time 0 to time n, and denotes the measurements obtained from all the sensors from time 1 to time n.

3. Particle Filtering Method for Sensor Networks

When a dynamic system represented by Equations (

1) and (

2) is nonlinear and/or non-Gaussian, there is no analytical solution for the sequential estimation of

from the measurement

. In such a case, we resort to numerical methods for approximate solutions, and one such powerful method is particle filtering. Particle filtering is a Monte Carlo technique whose estimation principles are rooted in Bayesian methodology. According to Bayesian methodology, all the information needed to estimate the unknown parameter

is captured by its posterior distribution,

[

12,

13].

Recently, many variants of particle filtering methods have been proposed [

28,

29,

30]. However, in its basic form, particle filtering recursively estimates the posterior pdf

by approximating it using a random discrete measure defined as

, where

is the

i-th random particle trajectory,

is its corresponding weight and

N is the total number of particles [

13]. Mathematically, the approximate posterior pdf is represented by

where

is the Dirac’s delta function.

A key concept in the development of particle filtering is importance sampling [

13]. According to importance sampling, an empirical approximation of the posterior distribution

can be obtained using samples drawn from an importance function

by attaching corresponding weights that are computed from

A critical feature of particle filtering technique is the ability to recursively build the random discrete measure at time n from the discrete measure obtained at time . When new information of is received at time n, the discrete measure of posterior distribution is built from the discrete measure of by simply appending the new particles and updating the weight .

The recursive approximation of the posterior distribution requires an importance function that can be factorized as follows [

10]:

With such choice of an importance function, it can be shown that the weights update equation is given by

It is shown that, as the number of particles goes to infinity, the approximated posterior distribution converges to the true posterior distribution [

11]. After approximating the posterior distribution by its discrete measure, then a Minimum Mean Square Estimator (MMSE) given by

can be applied to compute the estimates of

.

If the particle filtering algorithm is implemented as described above, the particles would degenerate after a few iterations, i.e., most of the particles except for a very few would have insignificant weights [

13]. Because the particles with small weights have little contribution to the estimation of the unknown parameters, carrying them forward is simply a waste of computational resources. Moreover, it has been reported that the variance of the weights of the particles does not decrease as the iteration continues [

13]. A common approach to avoid such particle degeneracy is to apply resampling [

31], which is a procedure that essentially replicates the particles with significant weights while eliminating those particles with very small weights. Resampling is a Monte Carlo operation where the particles that are carried forward to time

n are stochastically chosen from the particles at time

with a probability proportional to their weights [

32].

4. Distributed Particle Filter

To develop a distributed particle filtering algorithm in which multiple sensors collaborate to estimate the unknown states, let us consider the following decomposition of the posterior distribution

which is derived using Baye’s theorem by assuming Markovian relationship of the states and independent noise measurements across sensors and time. Selecting the prior pdf (predictive density) of the state variable as the importance function,

the weight update equation is obtained as follows:

where

represents the local likelihood function of sensor

k, and the product

denotes the global likelihood function.

In the estimation of the global posterior distribution, we note from the weight update Equation (

9) that all the measurement information from the sensors is captured by the global likelihood function. Therefore, if a sensor can somehow determine the global likelihood function at every instant of

n, then it can make a local estimation of the global posterior distribution and, as a result, estimate the unknown state

recursively. Furthermore, we note that the global likelihood function is a product of the local likelihood function of all the sensors. In building the estimates of the posterior distribution, the sensors, therefore, need to exchange information about their local likelihood function to determine the global likelihood function. It is shown that the distributed particle filters uniformly convergence [

15].

Proposed Algorithm

In our proposed algorithm, at each instant of time

n, when a sensor

k obtains a measurement

, it approximates its local likelihood function,

, by a Gaussian function given by

where

denotes the Gaussian function,

is the mean, and

is the covariance of the Gaussian function.

The approximation of the local likelihood function by a Gaussian function is performed using Monte Carlo techniques. The statistics of the approximated Gaussian function are determined from the sample mean, , and sample covariance, , computed from particles obtained from the predictive density. Since the importance function for our particle filtering algorithm is chosen to be the predictive density, better approximation of the local likelihood function can be obtained if particles, , obtained from the predictive density, are used for the Monte Carlo computation of the sample mean and sample covariance of the approximated Gaussian function.

The computation of the sample mean and covariance of the Gaussian function require the determination of a likelihood factor

, which is obtained by evaluating the value of

at

, where

are particles drawn from

, i.e.,

After determining the likelihood factors, the sample mean

is determined from

and the sample covariance

is computed from

where

is the normalized likelihood factor of the

i-th particle of the

k-th sensor.

Since the local likelihood function of each sensor is approximated by a Gaussian function, the global likelihood function, which is the product of all the local likelihood functions, is also a Gaussian function. The approximate global likelihood function is then given by

where

is the global mean and

is the global covariance. It is straightforward to show that the global covariance can be computed from the covariance of the local likelihood functions by

and the global mean can be determined using

From Equations (

15) and (

16), we observe that the computation of the global covariance and the product of mean and covariance entails only a simple summation of local parameters, which makes our algorithm convenient to apply the forward-backward propagation and average consensus filter strategies for information exchange among the sensors.

5. Strategy for Information Exchange

After computing the mean and covariance of their local likelihood functions using Equations (

12) and (

13), respectively, the sensors exchange these values to compute collaboratively the mean and covariance of the global likelihood functions using Equations (

15) and (

16). If each sensor attempts to broadcast its values to all the sensors, the required transmission power and bandwidth could be extremely high. The power and the bandwidth required for the transmission of information among the sensors will be limited if the sensors are restricted to communicate with their neighbours. We note that when a signal is transmitted over a wireless channel from a source, its received power decreases inversely proportional to at least the square of the transmission distance. Thus, the amount of power required to reach the far located sensors is much greater than the power required to transmit information to neighbouring sensors. Furthermore, when transmission of information is limited to neighbouring sensors, bandwidth can be reused in other sensor neighbourhoods, saving the total bandwidth required for the sensor networks.

The simplest strategy for exchanging information among the sensors is the forward-backward propagation strategy in which each sensor passes on information on a path exactly once in the forward and then once in the backward directions. In its simplest form, the forward-backward strategy, therefore, first requires the determination of a path that traverses through all the sensors once by connecting each sensor to one of its neighbors. In the forward direction, the data of each sensor is accumulated and the total sum is obtained at the last sensor, then the total sum is propagated backwards to each sensor through the same pre-determined path.

For our algorithm, the forward propagation involves the following steps:

the sensor at node j receives partial sums and from sensor at node

adds its computed local values, and to the partial sums

produces its partial sums, and , and transmits them to sensor node

After the forward propagation is completed, the values of the global covariance and the product of the mean and covariance are propagated backwards to each sensor through the established direct path. The complete algorithm of the forward-backward information exchange strategy is given in Algorithm 1.

| Algorithm 1 Forward Backward Exchange |

| 1. Forward Propagation |

| For to K (the path is pre-determined) |

|

|

| end |

| 2. Backward propagation |

| For (backward) |

| Pass to sensor at node j |

| Pass to sensor at node j |

| end |

While simplicity and pre-determined latency are the two major advantages of the forward-backward propagation strategy, it also has important drawbacks. First of all, the latency is directly proportional to the number of sensors, and if the sensor network has a large number of sensors, the latency could be huge. The second drawback is that the strategy requires the pre-determination of a direct path that traverses through all the sensors, and whenever a sensor fails, the path should be updated [

19]. Since the information exchange in our algorithm requires a simple accumulation of values, the latency problem can be alleviated by using multiple paths that converge at single or multiple sensors.

An alternative strategy of information exchange that limits communication with neighboring sensors is the use of an average consensus filter. An average consensus algorithm is an iterative procedure for computing an average of the values held by a network of nodes [

4]. While the exchange of values is limited to neighbouring nodes, the algorithms converge asymptotically to a global agreement. See [

33] for more discussion on convergence of consensus filters. In our case, the sensors compute iteratively the values of the average of the inverse of the covariance and the average of the product of the mean and the inverse of the covariance by exchanging their computed values only with their neighbours.

The steps of the average consensus filter for our algorithm are as follows. Suppose that at iteration

, sensor

j receives from all its single-hop neighbors,

, the averages,

and

, where

denotes the total number of single-hop neighbors of sensor

j. Then, sensor

j updates the averages at iteration

m as follows:

where

ϵ is a constant step size. It is known that if

ϵ is small and

, the iteration converges [

34] to

and

, from which the desired

and

can be computed. The algorithm is summarized in Algorithm 2.

| Algorithm 2 Average Consensus Algorithm |

| 1. Initializing for every sensor j- iteration zero |

|

|

| 2. Iterate until convergence |

| while() (Δ is a small convergence test constant) |

| (increment iteration index) |

| 3. Repeat for all sensors j |

|

|

|

|

| end |

The summary of the steps of the proposed distributed particle filtering algorithm is given in Algorithm 3.

| Algorithm 3 Distributed particle filtering Algorithm |

| 1. Each Sensor k performs the following: |

| 2. Initializing |

| All sensor measurements are used to derive priors |

| 3. For to (total time instants) |

| 4. For to N (total number of particles) |

| 5. Drawing a sample |

| |

| 6. Computing the likelihood factors |

| and |

| 7. Computing the local mean and Covariance |

| |

| |

| 8. Approximating the local likelihood function |

| |

| 9. Computing global likelihood function |

| (by exchanging information with other sensors) |

| |

| where and |

| 10. Weight Updating |

| |

| 11. Weight Normalization |

| 12. Resampling if |

| |

6. Simulation Results

A computer simulation was carried out to verify the effectiveness of the proposed algorithm. A target tracking problem on a two-dimensional plane (

x–

y) was considered. A sensor network consisting of 100 sensors laid out on a 200 m × 200 m field, as shown in

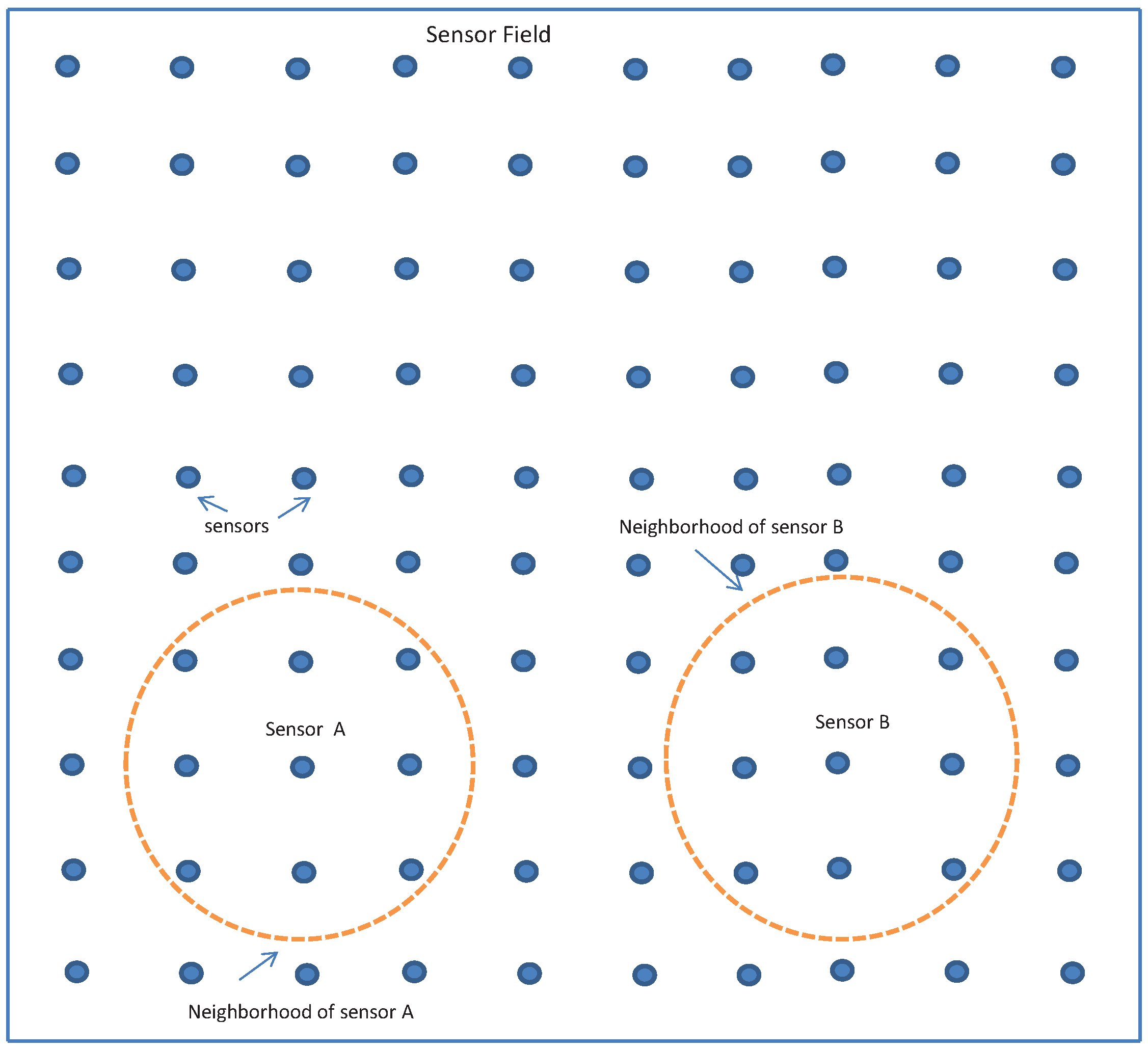

Figure 1, is used to collect and process the target tracking data.

The motion of the target is assumed to be constant velocity and the evolution of its kinematic parameters are modeled by

where the state vector denoted by

consists of the

x–

y positions (

and

) and velocities (

and

) of the target,

is the sampling period (we assume ), and is a two-dimensional Gaussian noise vector with a covariance of .

The measurements obtained by the sensors are expressed as a function of the distance of the sensors from the location of the target is given by the measurement equation

where

denotes the measured signal at time

n by sensor

k,

and

denote the

x- and

y-axis location of sensor

k,

is a constant chosen to calibrate the SNR of the signal received by the furthest sensor to be

when the target is located at the center, and

is a measurement of Gaussian noise of sensor

k with a unity variance,

.

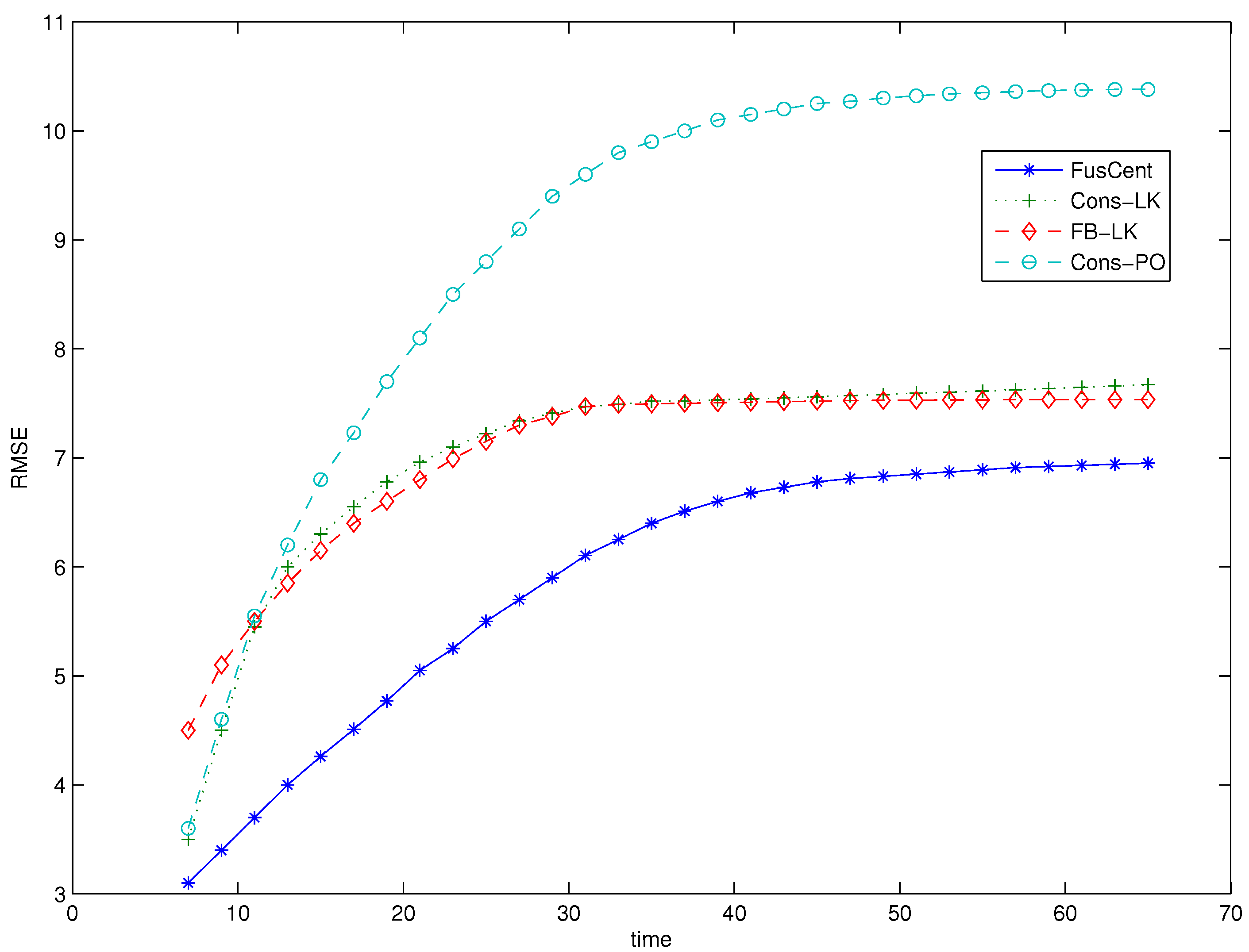

In the first experiment, we run simulations of the proposed distributed particle filtering algorithm using the forward-backward propagation and the average consensus filtering information exchange strategies for the sensors to collaboratively compute the global likelihood function. In this experiment, the consensus filters were run for seven iterations, and we assumed that the neighbors of a sensor include only the sensors that are immediately adjacent in the vertical, horizontal and diagonal directions. For both strategies, the distributed particle filtering algorithm that was run at each local sensor node used a particle size of 500. For comparison, as a lower bound, we have also conducted an experiment where the sensors transmit their raw measurements to a fusion center, which computes the exact global likelihood function to estimate the position of the target.

We note that for distanced-based measurement equations such as Equation (

19), the likelihood is a circularly-symmetric or ring-shaped function, i.e., all particles that are equidistant from any sensor location produce the same local likelihood function. Therefore, for this specific measurement equation, our algorithm requires an informative prior distribution. There are several ways of obtaining a prior distribution; for example, it can be assumed Gaussian distributed whose mean is the estimate obtained through a triangulation method using the first few measurements of sensors selected strategically. In our simulation, however, the prior distribution was obtained with the assumption that initially the first three measurements of each sensor was available to all sensors. Each sensor applies particle filtering method using measurements from all sensors to obtain an estimate of the location of the target at the end of the third measurement. Then, the estimate of each sensor is taken as the mean of its Gaussian prior distribution. The covariance of the Gaussian prior distribution is assumed

.

As a performance measure, we have computed the Root Mean Square Error (RMSE) of the estimated target position

at each time instant

n by averaging the estimates from all sensors. The RMSE is computed by

where

is the number of simulation runs over which the RMSE is averaged.

Figure 2 shows the plots of the RMSE of the estimated target position

as time instant

n evolves from

. In the figure, the plots denoted by

-

,

-

and

correspond to the results of the algorithms that used the information exchange strategies of forward-backward propagation, average consensus filter and fusion center, respectively. For comparison purposes, we have simulated an algorithm with comparable complexity and communication demand as our proposed algorithm. The algorithm, which is similar to [

22], approximates the global posterior distribution using average consensus filter by allowing each sensor to exchange its Gaussian approximated local posterior distributions with its neighbouring sensors. This Gaussian approximated posterior algorithm used the same parameters such as the number of particles, number of consensus iterations and also all-measurement available initialization as our algorithm. The plot of the algorithm is denoted by

-

.

We note from

Figure 2 that the RMSE of proposed methods,

-

and

-

, and that of the

-

, initially increase with time because of the effect of the informative priors, which assume the availability of the data obtained by all the sensors at each sensor node. We observe that the RMSE of all the methods eventually level off with time as the effect of the informative priors wears off. In

Figure 2, we started the plots from

by ignoring the first few estimates that skewed the result favourably due to the the effect of the informative priors.

From

Figure 2, we observe the proposed algorithms, both with the forward-backward propagation and average consensus filter, displayed performance that is close to that of the fusion center based algorithm and better performance than the algorithm with Gaussian approximated posterior. We emphasise, however, in comparison to our algorithms, that the fusion-center based algorithm requires much more power and bandwidth resources because the sensors need to transmit their raw measurement data to the fusion center. The total transmission power requirement of the fusion center based algorithm is proportional to the average of at least the square of the distance of the sensors to the location of the fusion center. Assuming that the fusion center is located at the center of the sensor field shown in

Figure 1, the average of the square of the distance from the sensors to the fusion center is 6600, which is

times bigger than the square of the maximum distance between two sensors in the same neighbourhood. In other words, the power requirement of the fusion center architecture is

times higher than the transmission power required by our algorithm that uses a consensus filter information exchange strategy. Moreover, when using the consensus filter based algorithm, the same bandwidth can be reused by all neighborhoods while adopting a time-division multiple access (TDMA) protocol for sensors in a neighbourhood to share the bandwidth. With this scheme, the total bandwidth-time slot for the consensus algorithm is equal to the number of sensors in one neighbourhood (nine in our case) multiplied by the number of iterations (7 in our case). However, for the fusion-centered algorithm, the corresponding bandwidth-time slot grows linearly with the number of sensors. In fact, it is numerically equal to the total number of sensors in the field.

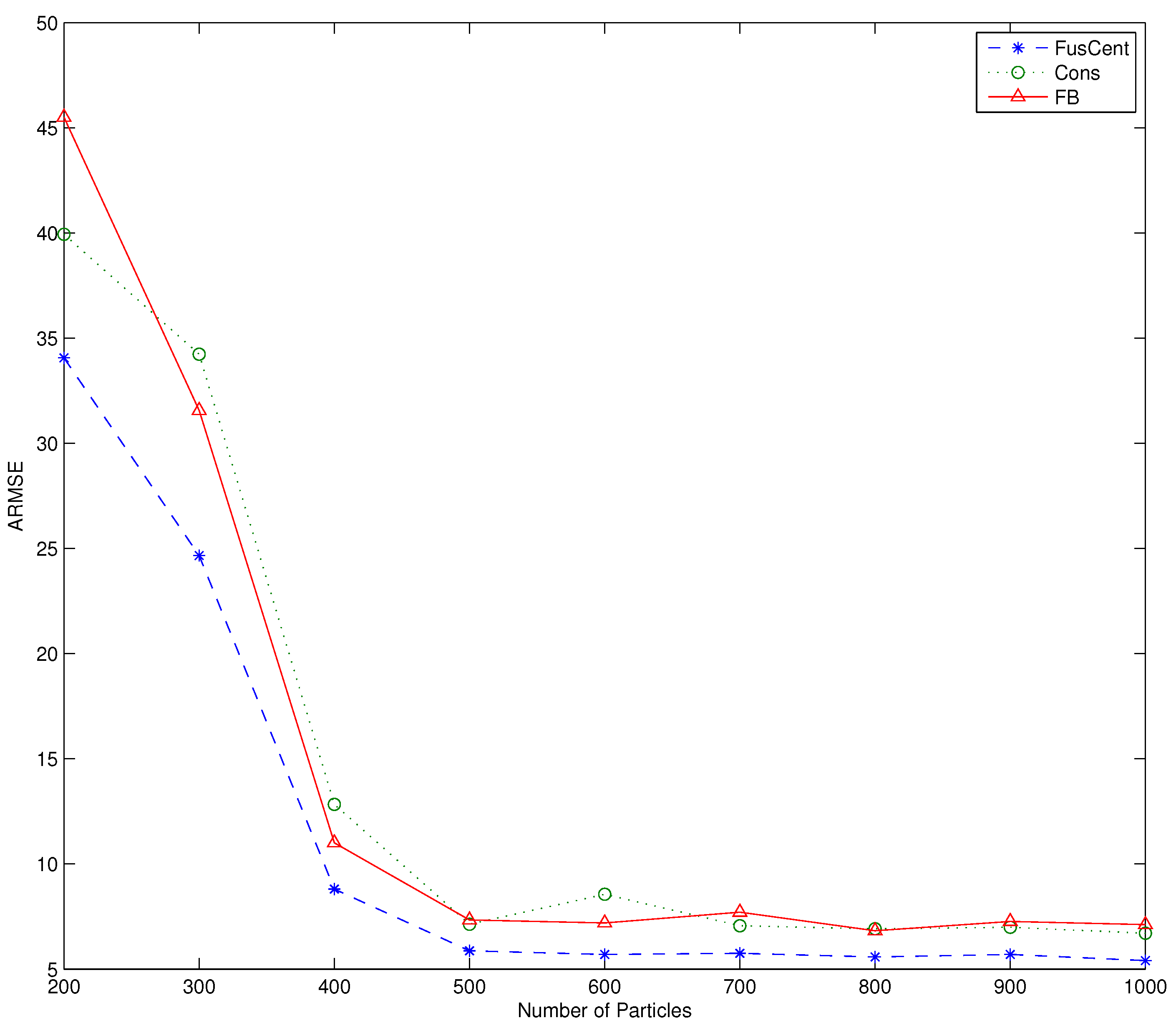

In the second experiment, we explored the effect of the number of particles on the performance of the algorithms by computing the averaged RMSE (ARMSE) of the estimated target position. The ARMSE is computed from

Each value of of the ARMSE is averaged over the time interval of

and

, and

for the number of simulation runs.

Figure 3 shows the plot of ARMSE versus the number of particles for the three algorithms. We observe from

Figure 3 that the algorithms display performance improvement as the particle size is increased to

, but beyond

, the performance gain remains essentially flat. We also note that in all our simulations the performances of the forward-backward propagation and consensus filter based algorithms are practically the same.

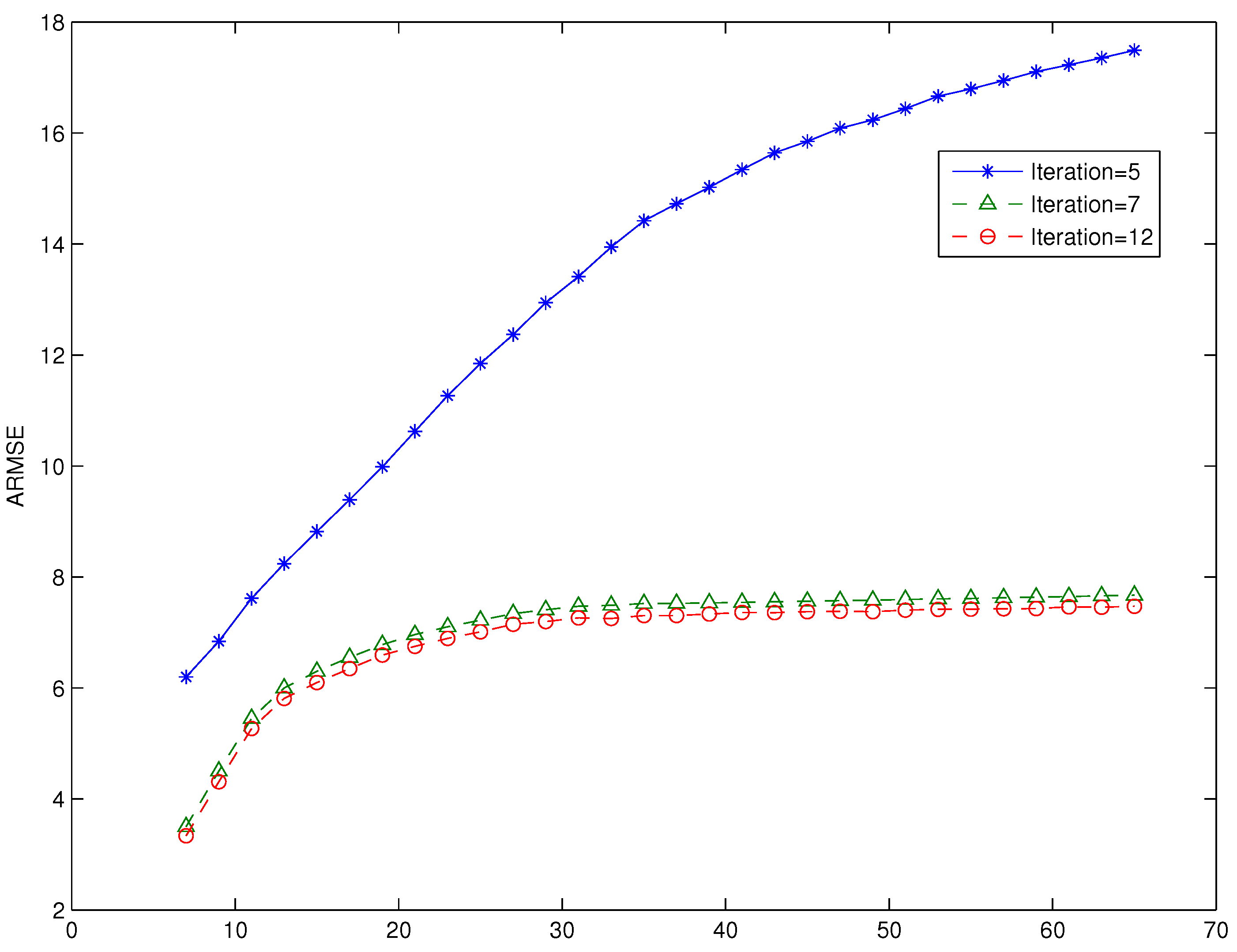

In the third experiment, we explored the effect of varying the number of iterations of the consensus filter based algorithm. We run the consensus filter based algorithm with particle size

, and the number of iterations of 5, 7 and 12. The performance, in terms of RMSE, of the proposed consensus filter based algorithm as the number of iterations of the consensus filter is varied and plotted in

Figure 4. A similar plot of ARMSE versus the number of iterations is shown

Figure 5. In

Figure 5, we have also included the plot of the ARMSE for an iteration of 12. As seen from both figures, the performance improves significantly as the number of iterations is increased from 5 to 7; however, as the number of iterations is increased from seven to 12, there is minimal performance gain. We also note that our algorithm outperforms the algorithm based on a Gaussian approximated posterior for the same number of iterations (iterations = 7). The plots denoted by

represent the results from our proposed algorithm, while the plot denoted by

represented the result from the algorithm based on Gaussian approximated posterior.