1. Introduction

The well-known Kalman filter is optimal given a linear Gaussian model, but its performance will deteriorate in the presence of unknown biases, such as the unknown and time-varying delays in chemical processes [1,2,3,4], faults or failures in fault-tolerant diagnosis and control systems [5,6], registration errors in multi-sensor fusion [7,8,9,10], or inertial drift in navigation [11,12,13,14]. In general, such a bias is represented as an unknown input (UI) to the nominal model. To the best of our knowledge, approaches to UI modeling and the design of corresponding filters typically fall into one of the following categories.

The first type of UI is zero-mean random noise with unknown covariance. In the case of stationary noise processes in linear dynamic systems, the covariance can be identified via Bayesian or maximum likelihood estimation. A corresponding filter has been applied for orbit determination for near-Earth satellites [15,16]. Recently, this type of filter design has been extended to time-varying covariance [17] and jump Markov stochastic systems [18]. Furthermore, an M-robust estimator [19] has been derived for the simultaneous adaptive estimation of unknown states and observation noise statistics. An auto-covariance least-squares method [20] has been presented to achieve lower-variance covariance estimates along with the necessary and sufficient conditions for the uniqueness of the estimated covariances. By treating unknown covariances as missing data, the adaptive estimation problem has been transformed into a problem of the joint optimization of state estimation and parameter identification, allowing it to be solved in an expectation-maximization (EM) iterative processing framework [21].

The second type of UI is unknown but deterministic. Using least-squares estimation and moving-window hypothesis testing, the corresponding filters can cope with cases in which the UI is piecewise constant [22] or a sum of basis functions with piecewise-constant weights [23]. The problem of state estimation with UIs in both the dynamic and measurement models has been addressed using a joint EM optimization scheme for state estimation, parameter identification and iteration termination decision-making [24]. This approach has been extended to a distributed EM algorithm for sensor networks [25] and further to an adaptive divided difference filter for nonlinear systems with multiplicative parameters [26].

The third type of UI is completely arbitrary, without the availability of any prior knowledge about its evolution. An asymptotically stable and UI-decoupled observer has been derived [27] based on the condition that the rank of its distribution matrix must be less than that of the UI. For the case in which the UI appears only in the process equation, a combination of least-squares estimation and the Kalman filter has been proposed for joint state and UI estimation, and the necessary and sufficient condition for the existence of such a joint estimator has also been presented [28]. In addition, this result has been extended to the case in which the UI appears in both the process and measurement models [29].

The fourth type of UI is norm-bounded, and robust offline filters have been designed to minimize the gain of the transfer function from the UI to the estimation error [30]. As an alternative approach, a method of linear minimax regret estimation has been developed to minimize the worst-case regret over all bounded data [31].

The fifth type of UI is characterized by a randomly switching parameter obeying a known Markov chain. The corresponding solutions fall within the scope of multiple model estimators [32,33,34,35,36,37], such as the interacting multiple model (IMM) estimator, which is well known in maneuvering-target tracking. Motivated by the concept of establishing a general framework for joint state estimation and data association in clutter for the tracking of a maneuvering target, the linear minimum-mean-square-error (MMSE) estimator has been derived for discrete-time Markovian jump linear systems with stochastic coefficient matrices [38].

In general, the filters discussed above are UI-specific because of the significant differences between both the different types of UIs and the solutions designed to address them. However, in many practical applications, much more complicated UIs may be encountered. Recently, the minimum upper bound filter (MUBF) was proposed for a linear stochastic system corrupted by a generalized UI representing an arbitrary linear combination of dynamic UIs, random UIs, and deterministic UIs [39]. The result was further extended to a discrete-time nonlinear stochastic system with a UI in its measurements, and an iterative optimization method for joint estimation and parameter identification was derived [40]. In multi-sensor bias estimation [41], the concept of a generalized sensor bias (GSB) has been proposed, which is represented by a dynamic model driven by a structured UI. By deriving an equivalent state-free measurement model and the corresponding UI-free dynamic model for the GSB, the linear MMSE (LMMSE) estimator of the GSB can be obtained via the orthogonality principle.

However, the application of the resultant filter is limited to the case in which all sensors have an identical measurement matrix, and only the GSB can be estimated. This limitation motivates us to formulate and solve the problem of jointly estimating the system state and the GSB under a UI in the case of GSB evolution.

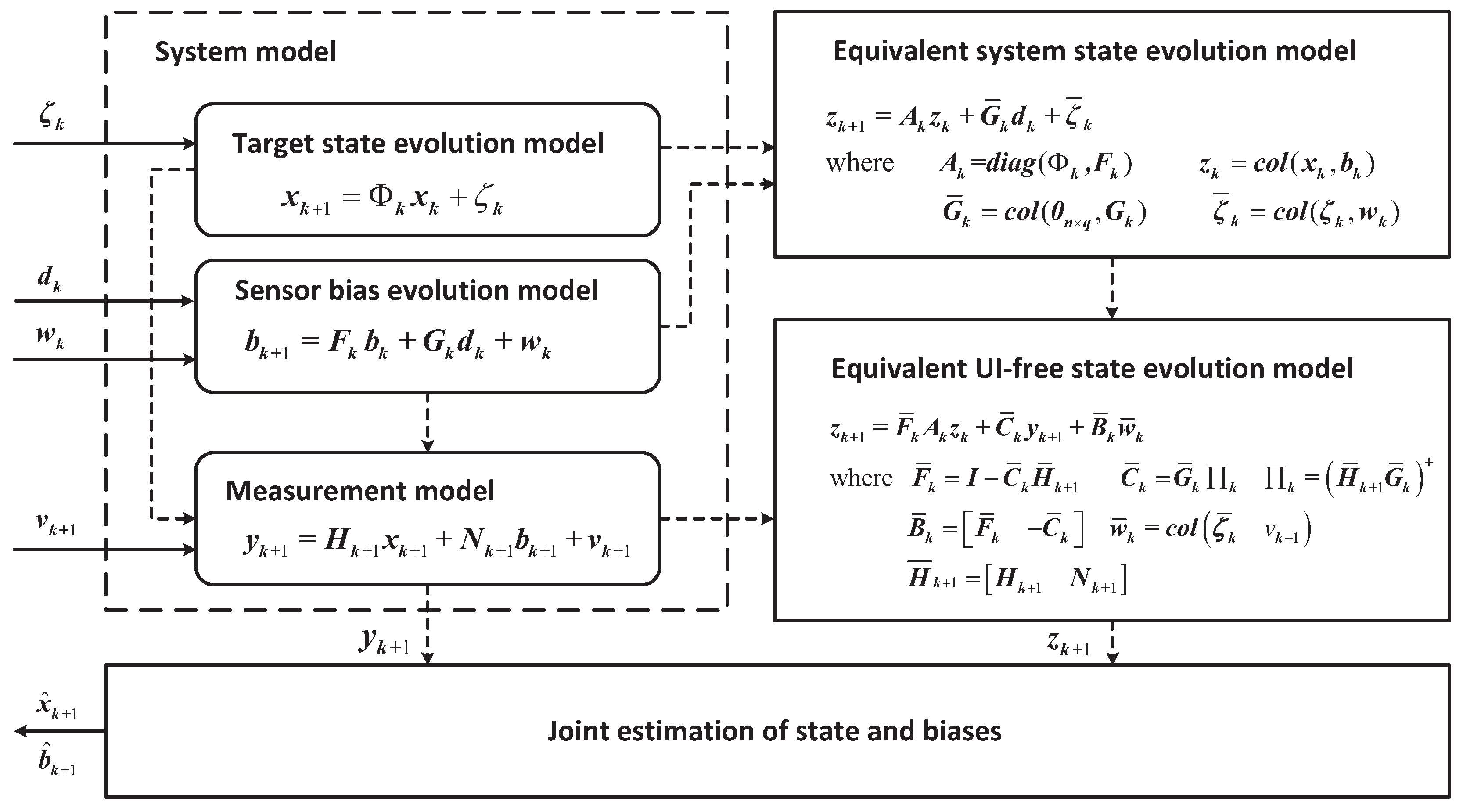

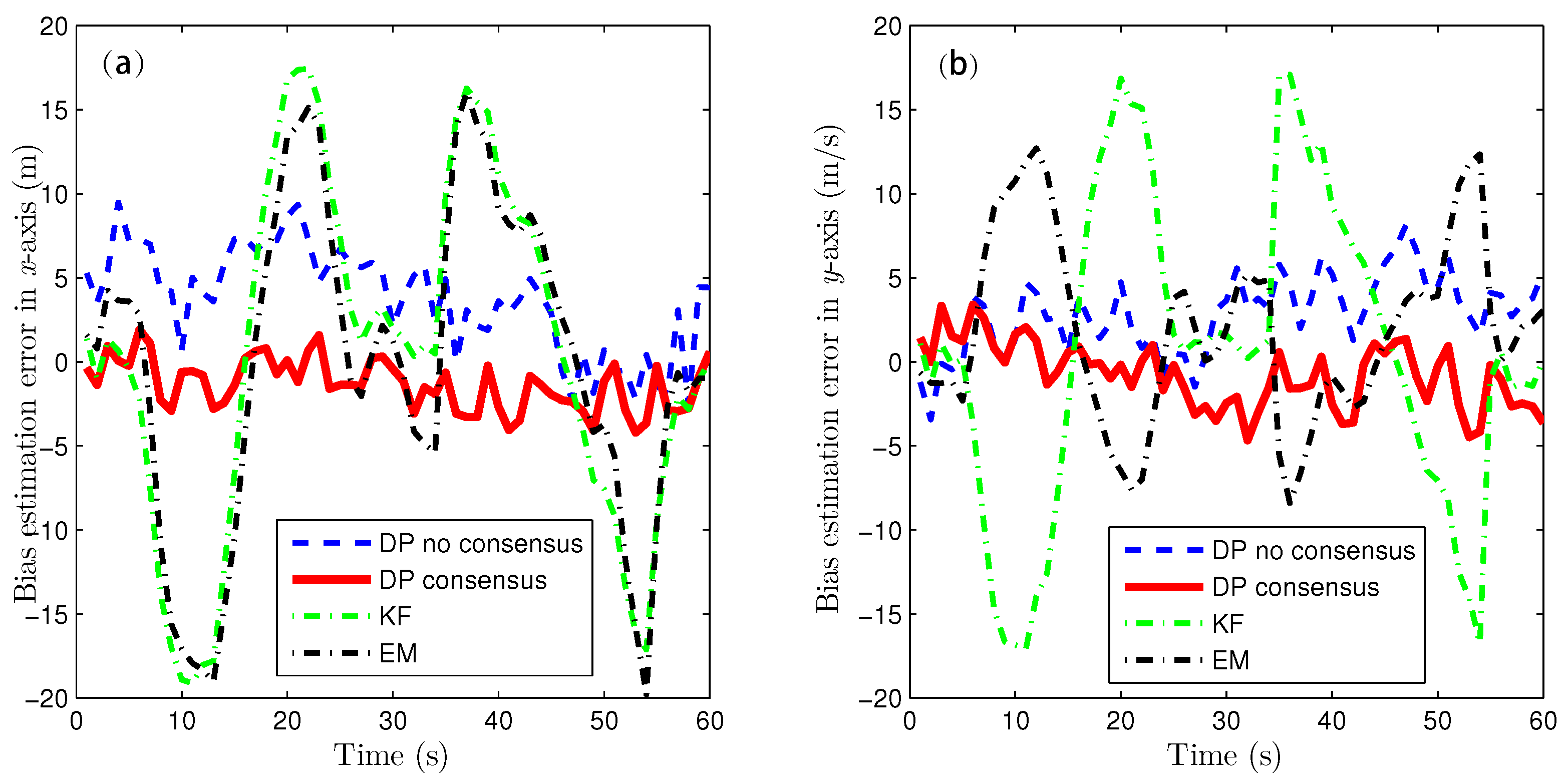

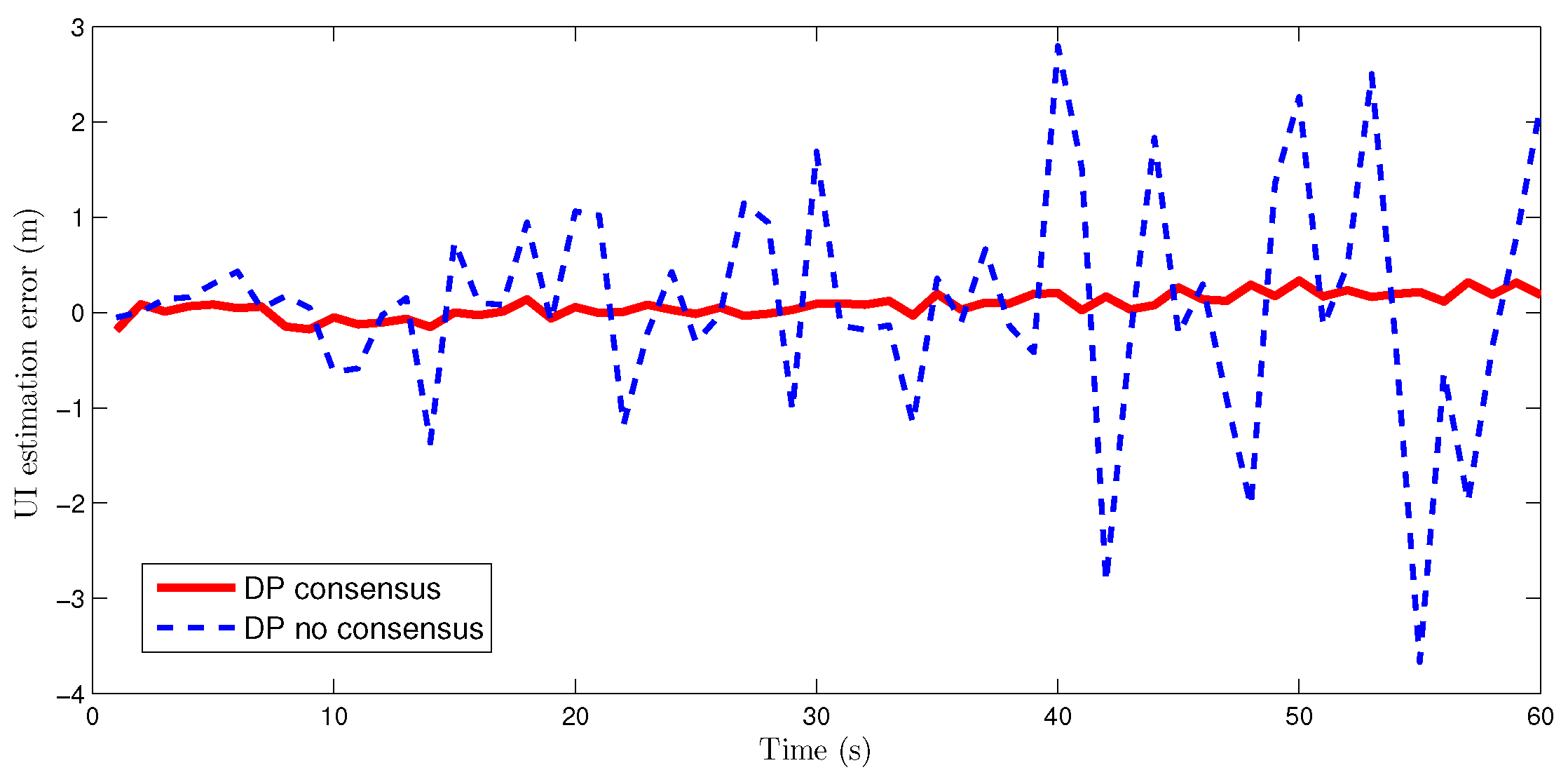

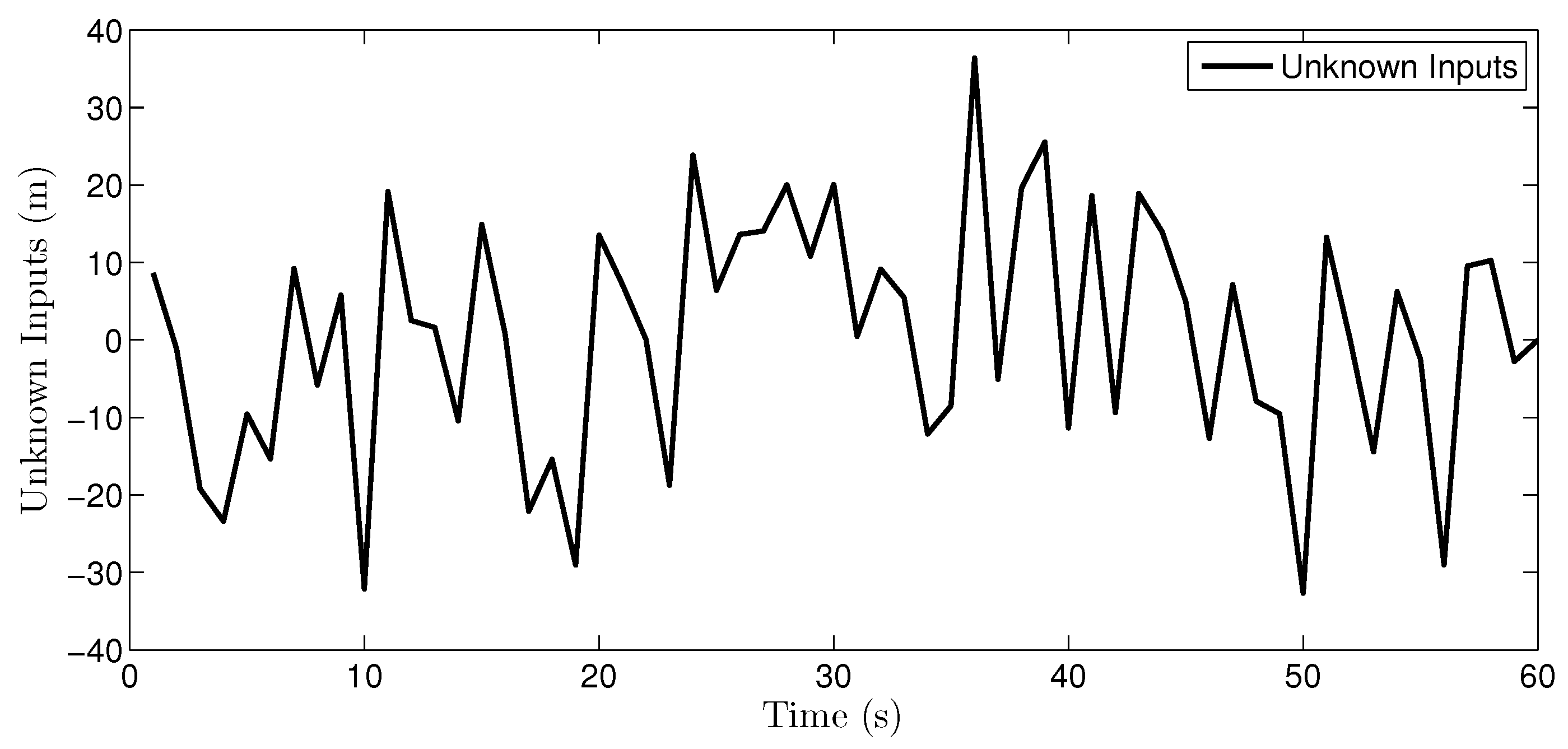

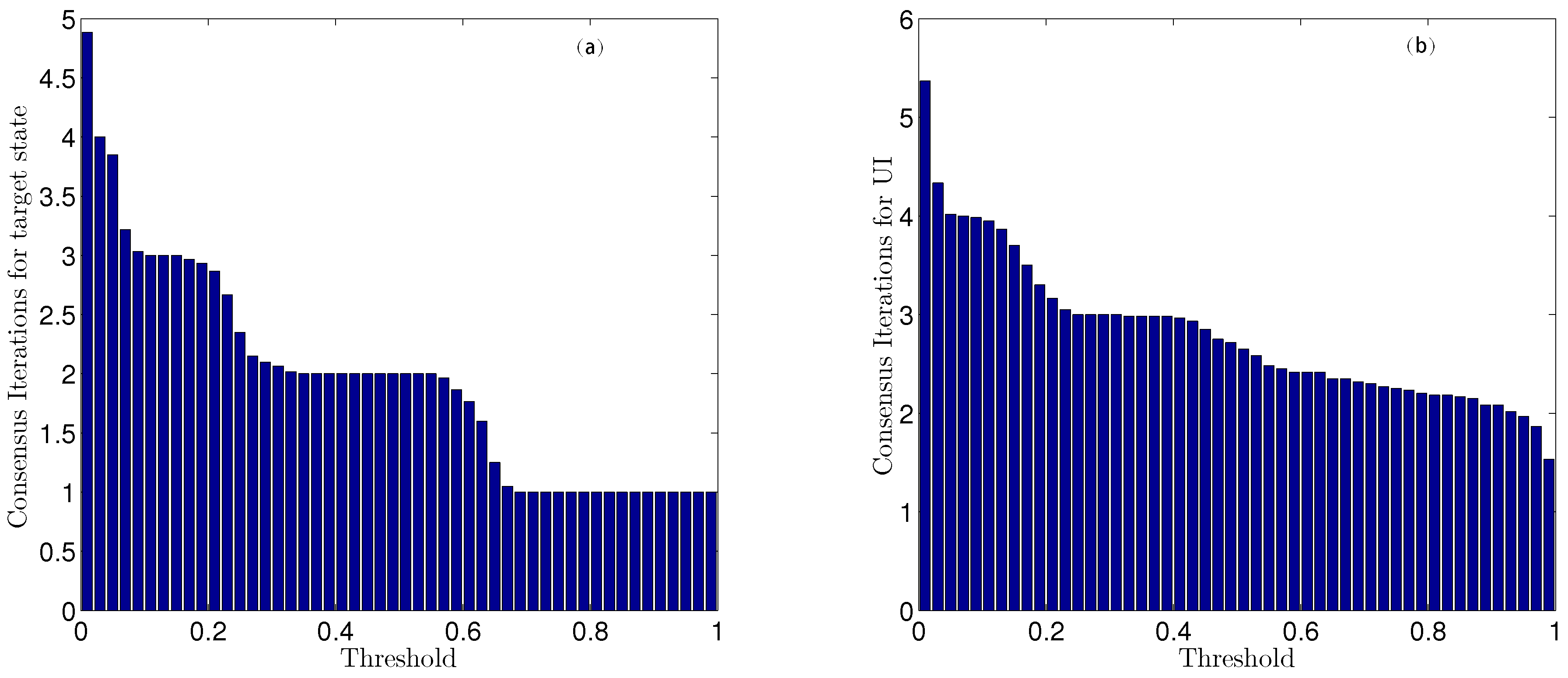

This paper presents the joint estimation of the system state and GSB under a common UI in the case of bias evolution in a heterogeneous sensor network. To the best of the authors' knowledge, this is the first attempt to achieve this kind of joint estimation. In detail, a two-stage cooperative strategy for pursuing consistent estimates in the distributed network is proposed. First, a joint UI-free evolution model is derived, along with its necessary and sufficient conditions for existence. In the derived model, the process noise and measurement noise are correlated, and the optimal local MMSE estimator with correlated noise is obtained accordingly. Second, based on the local estimates, global estimates of the target state and UI are achieved by network consensus. The GSB estimate is then refined using the global UI estimate, so that the optimal estimates of the target state, GSB and UI are finally obtained.

Throughout the paper, the superscripts “−1”, “T” and “+” represent the inverse, transpose, and Moore-Penrose pseudoinverse operations, respectively; “I” and “0” represent the identity matrix and the zero matrix, respectively, of the proper dimensions; “$0_{m \times n}$” represents the zero matrix with dimensions of $m \times n$; diag indicates a diagonal matrix; col denotes column augmentation; E denotes the operator of mathematical expectation; the superscript i indicates a matrix or variable related to the ith sensor; rank denotes the rank of the specified matrix; the subscripts “i” and “j” indicate the ith and jth subblocks of a matrix, respectively; the superscripts “∧” and “∼” indicate the estimate and residual, respectively, of a random vector; the subscript “k|k+1” represents the estimate of a vector at time k using measurements up to time k + 1; and ⨂ denotes the Kronecker product.

2. Problem Formulation

Sensor bias (SB) is widespread in multi-sensor systems and originates from two types of sources. SBs of the first type are local SBs (LSBs), which are independent among sensors, such as calibration errors, navigation biases and sensor faults/drift, whereas SBs of the second type are global SBs (GLSBs, also called UIs in this work), which are common to all sensors, such as electronic countermeasures (ECMs) in target tracking. A GSB model has been proposed based on dynamic evolution driven by both independent LSBs and a common UI [41]. However, the corresponding filter has two shortcomings: first, all sensors must have an identical measurement matrix, and second, the system state and the UI cannot be estimated simultaneously because the SB estimate is obtained by deriving an equivalent GSB model that neglects the system state and the UI. Here, we present the two assumptions adopted in our work.

Assumption 1. All measurements made by sensors in the network are continuously corrupted by the GSB, which includes the LSBs and the GLSB.

Assumption 2. The network topology is fixed, and the target can be monitored by all sensors throughout the entire process. There is no fusion centre or lead sensor in this sensor network. In other words, all sensors are equal in status, and they reach a network consensus by exchanging information with their neighbours.

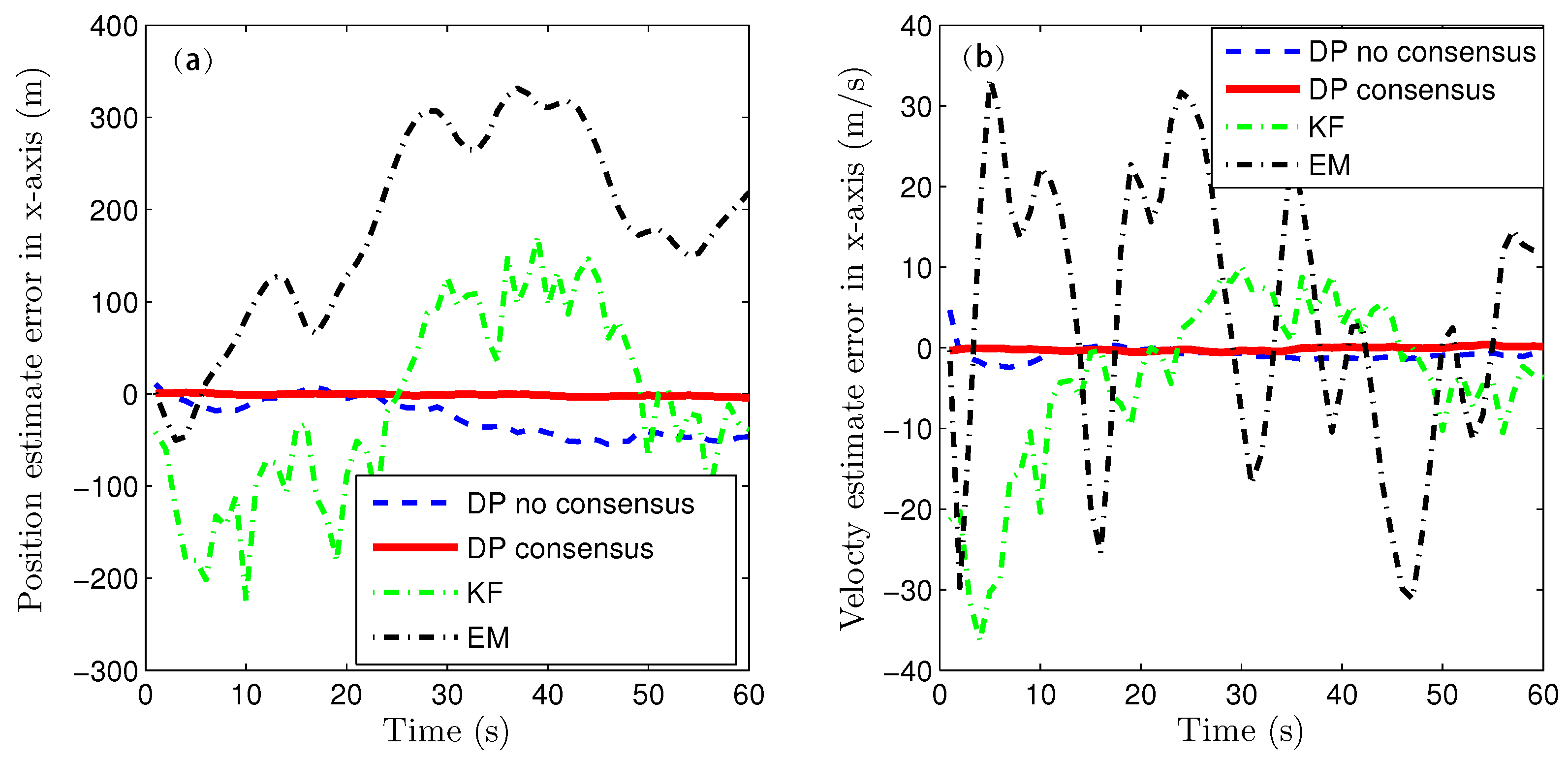

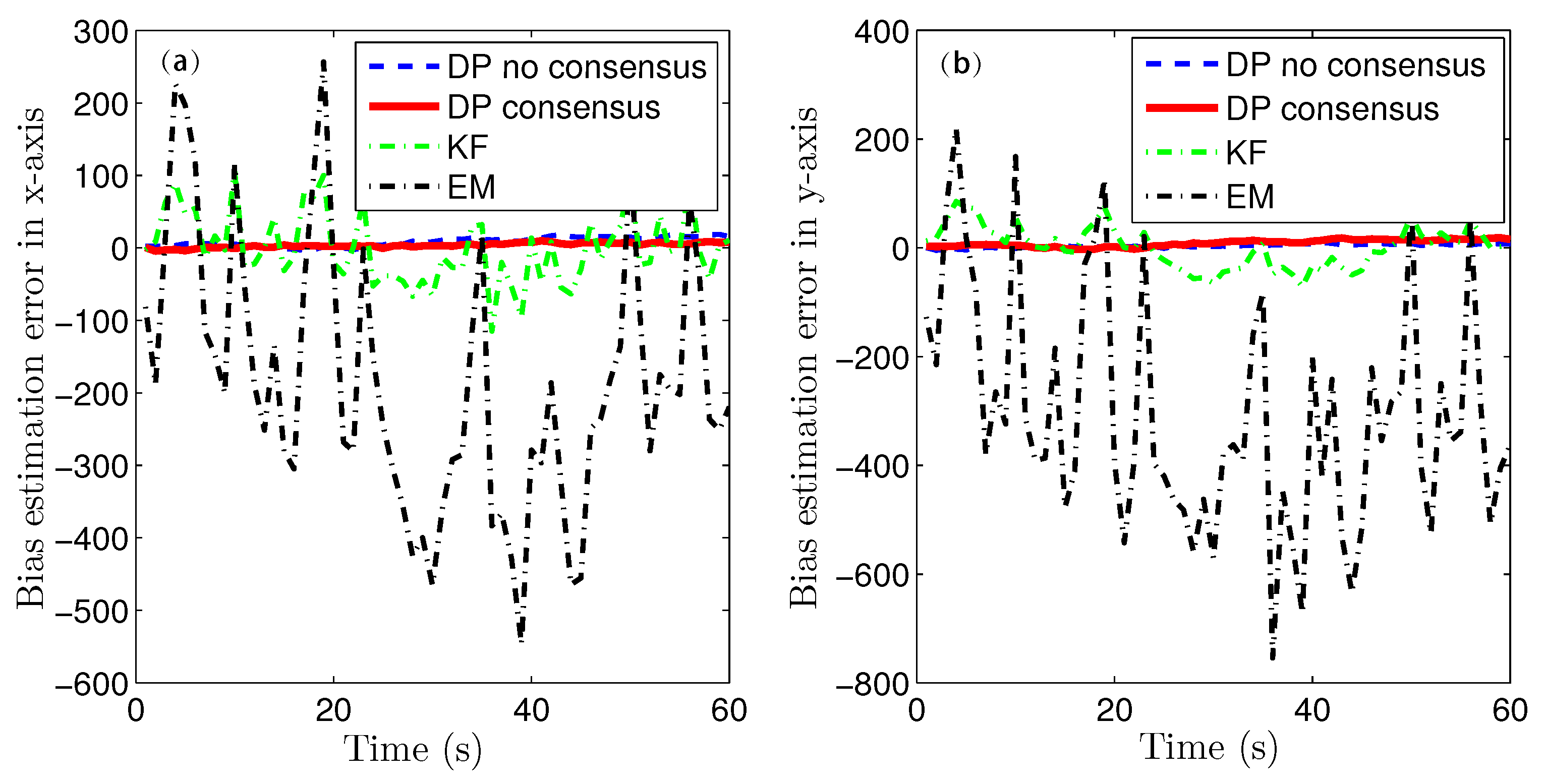

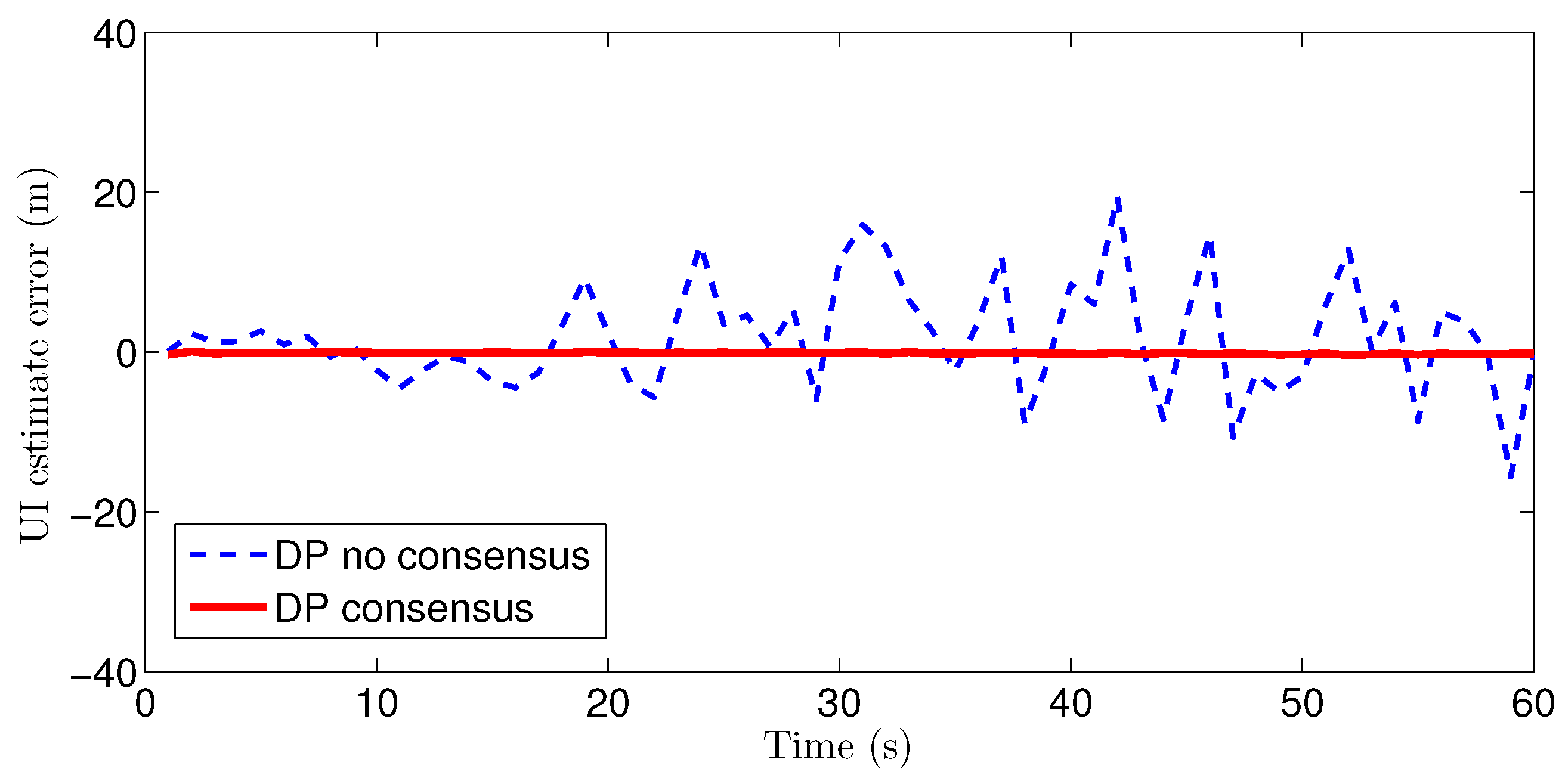

Consider a scenario of collaborative target tracking within a sensor network, as shown in Figure 1. A target is continuously moving within a surveillance zone. Each sensor node obtains raw measurements of the target corrupted by the GSB. At each step, each sensor obtains local estimates of the target state and GSB, exchanges its local system-state estimate with its neighbors, and obtains fused estimates of the target state and UI via network consensus. In other words, this sensor fusion process can be regarded as the joint distributed estimation of the target state, GSB and UI without the limitation that all sensor measurement matrices must be identical.

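Consider a network of $s$ sensor nodes. The network topology is represented by an undirected graph $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathcal{A})$, where $\mathcal{V} = \{1, 2, \ldots, s\}$ denotes the set of nodes; $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$ is the set of permissible communication links; and $\mathcal{A} = [a_{ij}] \in \mathbb{R}^{s \times s}$ is the graph topology matrix, the elements of which are defined as

$$a_{ij} = \begin{cases} 1, & (i, j) \in \mathcal{E}, \\ 0, & \text{otherwise}. \end{cases} \tag{1}$$

Let $\mathcal{N}_i = \{ j \in \mathcal{V} : (i, j) \in \mathcal{E},\ j \neq i \}$ denote the set of neighbors of the ith sensor, and let $\mathcal{J}_i = \mathcal{N}_i \cup \{i\}$ denote the inclusive neighbor set of sensor i. Here, the presented network is assumed to be a connected graph. Otherwise, at least two separate sub-networks would exist, and information could then be fused only within each sub-network rather than throughout the entire network, because no information could be exchanged between the sub-networks.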
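The graph quantities described above (topology matrix, neighbor set, inclusive neighbor set, and the connectivity assumption) can be illustrated with a minimal Python sketch. The function names, the 0-based node indexing, and the two example networks are hypothetical and serve only as an illustration; they are not taken from the original work.

```python
import numpy as np

def adjacency_matrix(num_nodes, links):
    """Build the symmetric 0/1 topology matrix of an undirected graph."""
    A = np.zeros((num_nodes, num_nodes), dtype=int)
    for i, j in links:
        A[i, j] = 1
        A[j, i] = 1  # undirected: every link can be used in both directions
    return A

def neighbors(A, i, inclusive=False):
    """Return the neighbor set of node i; with inclusive=True, add i itself."""
    nbrs = {int(j) for j in np.flatnonzero(A[i])}
    nbrs.discard(i)
    if inclusive:
        nbrs.add(i)
    return nbrs

def is_connected(A):
    """Check connectivity with a depth-first search started at node 0."""
    seen = {0}
    stack = [0]
    while stack:
        u = stack.pop()
        for v in neighbors(A, u):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return len(seen) == A.shape[0]

# Hypothetical 4-node ring network (0-based node indices).
ring = adjacency_matrix(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
# Two disjoint pairs: information could only be fused within each pair.
split = adjacency_matrix(4, [(0, 1), (2, 3)])
```

In this sketch, `is_connected(ring)` holds while `is_connected(split)` does not, which mirrors the reason the connectivity assumption is needed for network-wide fusion.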

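Here, we formulate the following problem of distributed sensor fusion in the presence of time-varying sensor biases driven by a common UI:

$$x_{k+1} = F_k x_k + w_k, \tag{2}$$

$$b_{k+1}^i = A_k^i b_k^i + G_k^i d_k + w_k^{b,i}, \tag{3}$$

$$z_k^i = H_k^i x_k + b_k^i + v_k^i, \tag{4}$$

where $x_k \in \mathbb{R}^{n}$ is the state vector; $z_k^i \in \mathbb{R}^{m_i}$ is the measurement vector of the ith sensor; $b_k^i \in \mathbb{R}^{m_i}$ is the ith GSB, where $i = 1, 2, \ldots, s$; $d_k \in \mathbb{R}^{p}$ is the common UI; and $w_k$, $w_k^{b,i}$ and $v_k^i$ are all independent, zero-mean, Gaussian, white-noise components with covariances of $Q_k$, $Q_k^{b,i}$ and $R_k^i$, respectively. The initial target state $x_0$ and ith GSB state $b_0^i$ are Gaussian distributed with respective known means of $\bar{x}_0$ and $\bar{b}_0^i$ and associated covariances of $P_0$ and $P_0^{b,i}$. $F_k$, $H_k^i$, $A_k^i$ and $G_k^i$ are known and have appropriate dimensions. Under the assumption that $m_i \geq p$, the $G_k^i$ are of full column rank.

Our aim is to design an unbiased minimum-variance filter to jointly estimate the target state $x_k$, the GSBs $b_k^i$ and the UI $d_k$ given the distributed measurements recorded up to time k.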
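To make the problem setting concrete, the following Python sketch simulates a system of the kind formulated above. It assumes an additive form in which each sensor measures a linear projection of the state plus its own GSB plus noise, and in which each GSB evolves linearly while being driven by the common UI through a distribution matrix. The function name, the argument names, time-invariant matrices, and the noise-free example values are all assumptions made for illustration and do not come from the original work.

```python
import numpy as np

def simulate(F, H_list, A_list, G_list, Q, Qb_list, R_list,
             x0, b0_list, d_seq, rng):
    """Simulate the state, the per-sensor GSBs and the biased measurements.

    Assumed (illustrative) model:
        x[k+1]   = F x[k] + w[k]
        b_i[k+1] = A_i b_i[k] + G_i d[k] + wb_i[k]
        z_i[k]   = H_i x[k] + b_i[k] + v_i[k]
    """
    def noise(cov):
        # Zero-mean Gaussian sample with the given covariance.
        return rng.multivariate_normal(np.zeros(cov.shape[0]), cov)

    x = np.asarray(x0, dtype=float)
    b = [np.asarray(b0, dtype=float) for b0 in b0_list]
    states, biases, measurements = [], [], []
    for d in d_seq:
        # Every sensor sees the same state, corrupted by its own GSB and noise.
        z = [H @ x + bi + noise(R) for H, bi, R in zip(H_list, b, R_list)]
        states.append(x)
        biases.append(b)
        measurements.append(z)
        # All GSBs are driven by the same UI sample d (the common UI).
        b = [A @ bi + G @ d + noise(Qb)
             for A, G, bi, Qb in zip(A_list, G_list, b, Qb_list)]
        x = F @ x + noise(Q)
    return states, biases, measurements

# Hypothetical noise-free example: constant-velocity target, two position sensors.
rng = np.random.default_rng(0)
F = np.array([[1.0, 1.0], [0.0, 1.0]])
H_list = [np.array([[1.0, 0.0]])] * 2
A_list = [np.eye(1)] * 2
G_list = [np.array([[1.0]]), np.array([[0.5]])]  # sensors couple to the UI differently
zero1, zero2 = np.zeros((1, 1)), np.zeros((2, 2))
d_seq = [np.array([1.0])] * 3                    # constant UI, for illustration only
states, biases, measurements = simulate(
    F, H_list, A_list, G_list, zero2, [zero1] * 2, [zero1] * 2,
    x0=[0.0, 1.0], b0_list=[[0.0], [0.0]], d_seq=d_seq, rng=rng)
```

With all covariances set to zero, the example makes the effect of the common UI visible directly: both biases grow over time, at different rates, although neither sensor has any local disturbance.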

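Remark 1. The GSB in Equation (3) represents a generalized bias that includes multiple bias types as special cases. In the following, $A_k^i$, $G_k^i$ and $Q_k^{b,i}$ denote the GSB transition matrix, the UI distribution matrix and the GSB process-noise covariance, respectively, and $b_k^i$ denotes the ith GSB:

- (1). If $A_k^i = I$, $G_k^i = 0$ and $Q_k^{b,i} = 0$, then $b_k^i$ represents a constant-value bias.

- (2). If $G_k^i = 0$ and $Q_k^{b,i} = 0$, then $b_k^i$ represents a time-varying bias with a known dynamic model.

- (3). If both $A_k^i = 0$ and $G_k^i = 0$, then $b_k^i$ represents a zero-mean random error.

- (4). If $G_k^i \neq 0$, then $b_k^i$ represents a dynamically evolving bias driven by an arbitrary UI.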

The second type of UI mentioned in the Introduction corresponds to case (1), and the third type corresponds to case (4). The first and fourth types can be attributed to case (4) when these two types of UI can be decoupled from Equation (3). These four types of UI can therefore be accommodated within the single-model system proposed in this work.

The fifth type of UI mentioned in the Introduction, however, is usually regarded as a randomly switching parameter obeying a known Markov chain and thus belongs to the class of multiple-model systems, so it cannot be accommodated directly within the model presented in this work. Nevertheless, a GSB model can be established for each mode of the Markov chain: according to Equation (3), a GSB model of the same form can be written for each of the r states of the Markov chain, and, according to Equation (10), a corresponding decoupling condition can be established for each of these mode-dependent GSB models. These decoupling conditions for a UI obeying a Markov chain are more stringent than those considered in this work, as Equation (5) must hold for every mode. Under these conditions, the fifth type of UI can also be accommodated within the proposed framework.

Remark 2. There are two distinct strategies of networked sensor fusion. The first is the centralized strategy [41,42], i.e., all sensors transmit their measurements to a fusion centre, which is responsible for integrated data processing for state estimation and/or parameter identification. The second is the distributed strategy [25], i.e., each sensor processes its own measurements and then shares its processing results with its neighbors in an iterative manner to achieve consistent fusion. The distributed fusion strategy allows the computational burden to be shared among sensors and consequently is more desirable for large-scale networks. However, distributed fusion is much more complex, involving both local processing and global fusion. Currently, distributed fusion in the presence of time-varying sensor biases driven by a common UI remains an open problem.

Remark 3. Concerning our proposed problem, there exist two possible solution approaches. One is iterative optimization between state estimation and UI identification, as in the well-known expectation-maximization (EM) scheme [25]. As shown later via simulation, the EM scheme is not desirable here because it must treat the UI as a constant or slowly varying parameter in each iterative window. The other approach is UI decoupling plus state estimation, which serves as the basis for our proposed method, as derived later. The main technical difficulty of this approach is to determine how to adaptively decouple the UI and then fuse the local state and UI estimates.
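The distributed strategy described in Remark 2, in which each sensor repeatedly shares its result with its neighbors until the network agrees, can be illustrated with a generic average-consensus iteration. The sketch below is not the fusion rule derived in this paper: it uses plain Metropolis-weighted averaging of local values rather than any covariance-weighted fusion, and the function names, the path-graph topology and the local values are hypothetical.

```python
import numpy as np

def metropolis_weights(A):
    """Metropolis weights: symmetric with unit row sums for an undirected graph."""
    n = A.shape[0]
    deg = A.sum(axis=1)
    W = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j and A[i, j]:
                W[i, j] = 1.0 / (1 + max(deg[i], deg[j]))
        # The self-weight absorbs the remainder so that each row sums to one.
        W[i, i] = 1.0 - W[i].sum()
    return W

def average_consensus(local_values, A, num_iters=100):
    """Each node repeatedly replaces its value by a weighted mean over
    itself and its neighbors, using only neighbor-to-neighbor exchange."""
    W = metropolis_weights(A)
    values = np.asarray(local_values, dtype=float)
    for _ in range(num_iters):
        values = W @ values
    return values

# Hypothetical 4-node path network 0-1-2-3 with scalar local estimates.
path = np.array([[0, 1, 0, 0],
                 [1, 0, 1, 0],
                 [0, 1, 0, 1],
                 [0, 0, 1, 0]])
local_estimates = np.array([1.0, 2.0, 3.0, 6.0])
fused = average_consensus(local_estimates, path)
```

On a connected graph, every entry of `fused` approaches the network-wide mean of the local values, so all nodes end up with the same fused quantity without any fusion centre. Metropolis weights are chosen here because each node can compute them from its own degree and those of its neighbors.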