Onboard Robust Visual Tracking for UAVs Using a Reliable Global-Local Object Model

Abstract

1. Introduction

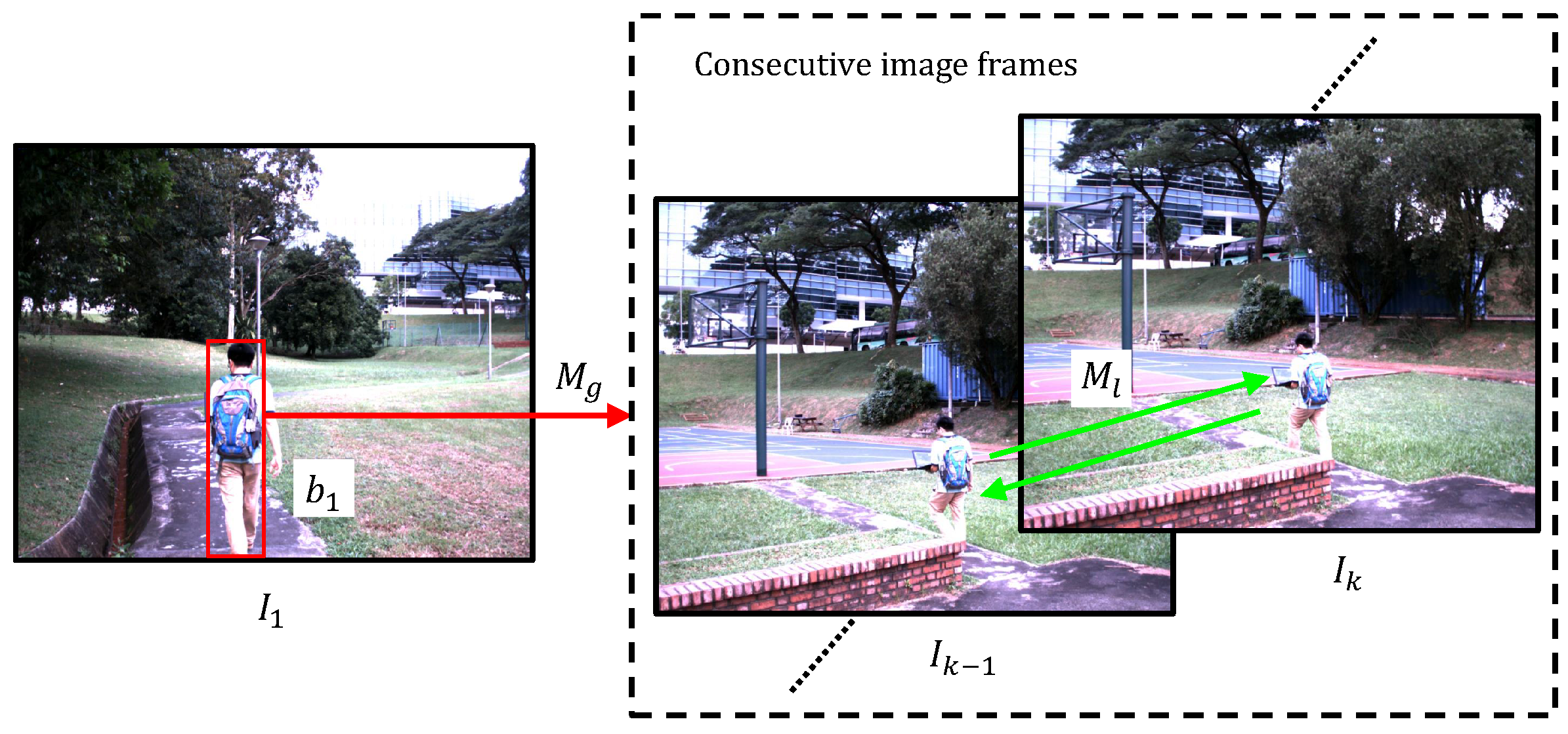

- A global matching and local tracking (GMLT) approach has been developed to first find the FAST [10] feature correspondences: an improved version of the BRIEF descriptor [11] is used for global feature matching, and an iterative Lucas–Kanade optical flow algorithm [12] is employed for local feature tracking between two consecutive image frames captured onboard, with each track validated by a forward-backward consistency evaluation method [13].

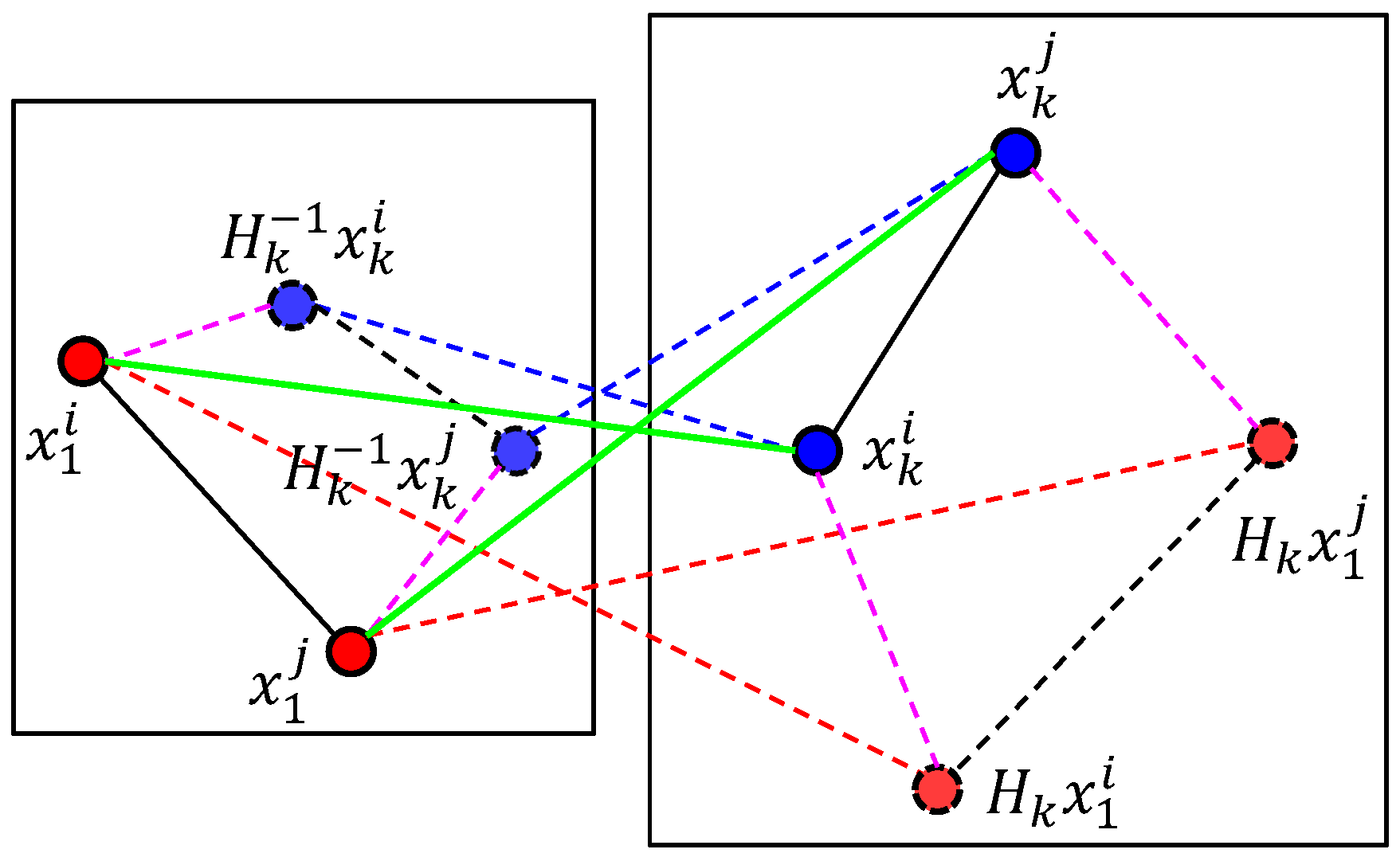

- An efficient local geometric filter (LGF) module has been designed for the proposed visual feature-based tracker to detect outliers among the global and local feature correspondences: a novel forward-backward pairwise dissimilarity measure has been developed and used in a hierarchical agglomerative clustering (HAC) approach [14] with single-link merging to exclude outliers.

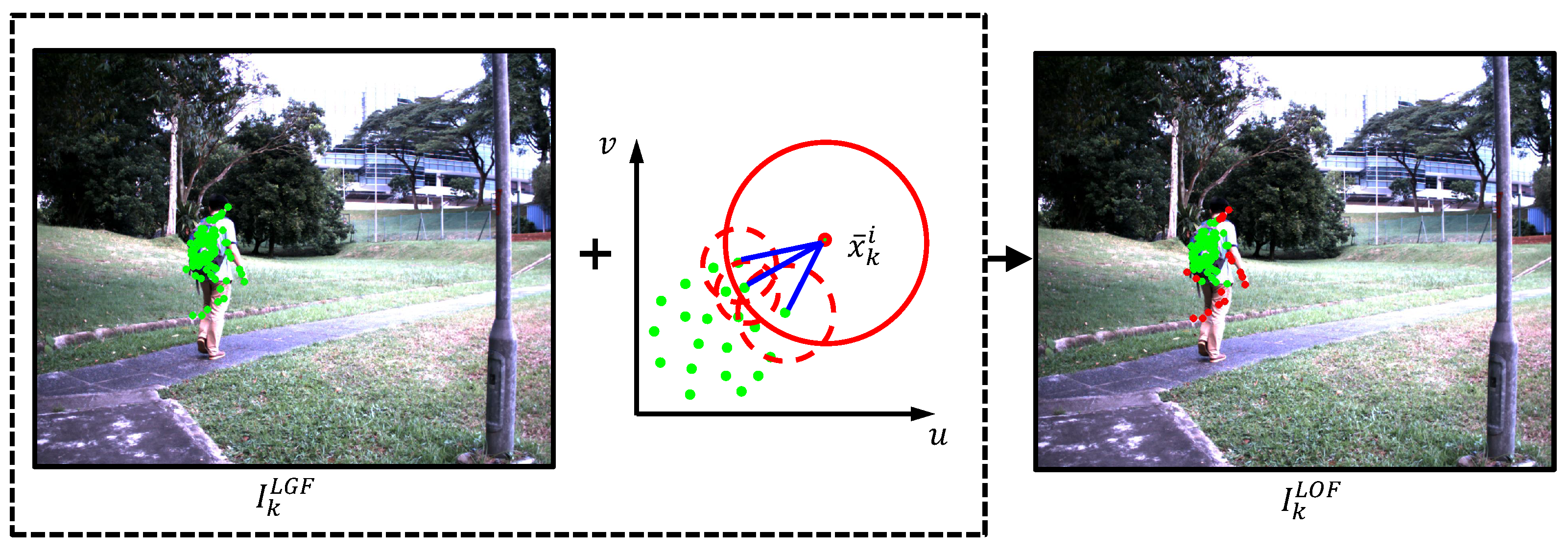

- A heuristic local outlier factor (LOF) [15] module has been implemented for the first time to further remove outliers, so that the target object is represented reliably in vision-based UAV tracking applications. The LOF module efficiently handles two problems: the chaining phenomenon produced by the LGF module, in which a chain of features stretches over long distances regardless of the overall shape of the object, and the matching confusion caused by multiple moving parts of an object.

2. Related Works

2.1. Color Information-Based Method

2.2. Direct or Feature-Based Approach

2.3. Machine Learning-Based Method

2.3.1. Offline Machine Learning-Based Approach

2.3.2. Online Machine Learning-Based Method

3. Proposed Method

3.1. Global Matching and Local Tracking Module
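The two tests that the GMLT module relies on, as outlined in the introduction, can be illustrated with a minimal sketch. It assumes binary descriptors stored as Python integers, a nearest/second-nearest ratio test using the ratio threshold ρ = 0.85 from the parameter table, and a forward-backward check on point positions. The function names and the `max_error` tolerance are hypothetical, and the optical flow step itself is left out.

```python
from math import hypot

RATIO_THRESHOLD = 0.85  # rho, taken from the parameter table


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def ratio_test_match(query, candidates, rho=RATIO_THRESHOLD):
    """Return the index of the best candidate, or None if the match is ambiguous.

    Assumed form of the ratio test: the best distance must be smaller
    than rho times the second-best distance.
    """
    if len(candidates) < 2:
        return None
    ranked = sorted((hamming(query, c), i) for i, c in enumerate(candidates))
    (best, best_index), (second, _) = ranked[0], ranked[1]
    return best_index if best < rho * second else None


def forward_backward_ok(start, back_tracked, max_error=1.0):
    """Accept a track if tracking forward and then backward lands near the start.

    `max_error` (in pixels) is a hypothetical tolerance, not a value from the paper.
    """
    return hypot(start[0] - back_tracked[0], start[1] - back_tracked[1]) <= max_error
```

A feature would be kept only if its global match passes the ratio test or its local track passes the forward-backward check; how the two sets are combined is not specified in this excerpt.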

3.2. Local Geometric Filter Module
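The clustering step of the LGF module can be sketched as follows. Cutting a single-link HAC dendrogram at a cut-off η yields the connected components of the graph that links every pair whose dissimilarity is at most η, so the sketch uses a small union-find instead of building the dendrogram. The dissimilarity used here (the difference between the displacement vectors of two correspondences) is only a stand-in for the forward-backward pairwise measure, which is not reproduced in this excerpt. Keeping the largest cluster as the inlier set is likewise an assumption, and all function names are hypothetical.

```python
from math import hypot

LGF_CUTOFF = 18.0  # eta, taken from the parameter table


def dissimilarity(c1, c2):
    """Stand-in measure: difference between the displacement vectors of two
    correspondences, each given as (previous_point, current_point)."""
    (p1, q1), (p2, q2) = c1, c2
    dx = (q1[0] - p1[0]) - (q2[0] - p2[0])
    dy = (q1[1] - p1[1]) - (q2[1] - p2[1])
    return hypot(dx, dy)


def single_link_clusters(items, cutoff=LGF_CUTOFF):
    """Single-link clusters at the given cut-off, largest cluster first."""
    parent = list(range(len(items)))

    def find(i):
        # Follow parent links to the root, compressing the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if dissimilarity(items[i], items[j]) <= cutoff:
                parent[find(i)] = find(j)

    clusters = {}
    for i in range(len(items)):
        clusters.setdefault(find(i), []).append(i)
    return sorted(clusters.values(), key=len, reverse=True)


def inlier_indices(items, cutoff=LGF_CUTOFF):
    """Indices of the largest cluster (assumed to be the consensus set)."""
    return single_link_clusters(items, cutoff)[0] if items else []
```

Because single-link merging only needs one close pair to join two groups, a sequence of pairwise-similar features can link distant ones together, which is the chaining phenomenon mentioned in the introduction.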

3.3. Local Outlier Factor Module

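- Construction of the nearest neighbors: the nearest neighbors of the FAST feature $p$ are defined as follows:

  $$NN(p) = \{\, q \in P \setminus \{p\} \mid D(p, q) \le R_t(p) \,\}$$

  where $P$ is the set of FAST features, $D(p, q)$ is the Euclidean distance between the FAST features $p$ and $q$, and $R_t(p)$ is the Euclidean distance from $p$ to its $t$-th nearest FAST feature neighbor.

- Estimation of neighborhood density: the neighborhood density $\delta$ of the FAST feature $p$ is defined as:

  $$\delta(p) = \frac{|NN(p)|}{\sum_{q \in NN(p)} D(p, q)}$$

  where $|NN(p)|$ is the number of nearest neighbors of $p$.

- Comparison of neighborhood densities: the comparison of neighborhood densities results in the density dissimilarity measure $LOF(p)$, which is defined below:

  $$LOF(p) = \frac{1}{|NN(p)|} \sum_{q \in NN(p)} \frac{\delta(q)}{\delta(p)}$$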
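The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: it follows the simplified LOF formulation, in which the density of a feature is the inverse of its mean distance to its nearest neighbors and the dissimilarity is the mean ratio of the neighbors' densities to the feature's own density. The function names and the choice `t = 2` in the usage note are hypothetical; the cut-off μ = 1.5 comes from the parameter table.

```python
from math import dist

LOF_CUTOFF = 1.5  # mu, taken from the parameter table


def nearest_neighbors(points, i, t):
    """Indices of all points within the distance to the t-th nearest neighbor of i."""
    others = [j for j in range(len(points)) if j != i]
    radius = sorted(dist(points[i], points[j]) for j in others)[t - 1]
    return [j for j in others if dist(points[i], points[j]) <= radius]


def density(points, i, t):
    """Neighbor count divided by the summed neighbor distances."""
    neighbors = nearest_neighbors(points, i, t)
    total = sum(dist(points[i], points[j]) for j in neighbors)
    return len(neighbors) / max(total, 1e-12)  # guard against duplicate points


def lof(points, i, t):
    """Mean ratio of the neighbors' densities to the density of point i."""
    neighbors = nearest_neighbors(points, i, t)
    own = density(points, i, t)
    return sum(density(points, j, t) for j in neighbors) / (len(neighbors) * own)


def remove_outliers(points, t, cutoff=LOF_CUTOFF):
    """Keep the points whose LOF does not exceed the cut-off."""
    return [p for i, p in enumerate(points) if lof(points, i, t) <= cutoff]
```

For four features at the corners of a unit square plus one isolated feature at (10, 10), `remove_outliers(points, t=2)` keeps the four corners: their LOF is 1, while the isolated feature lies in a much sparser region and scores far above μ.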

4. Real Flight Tests and Comparisons

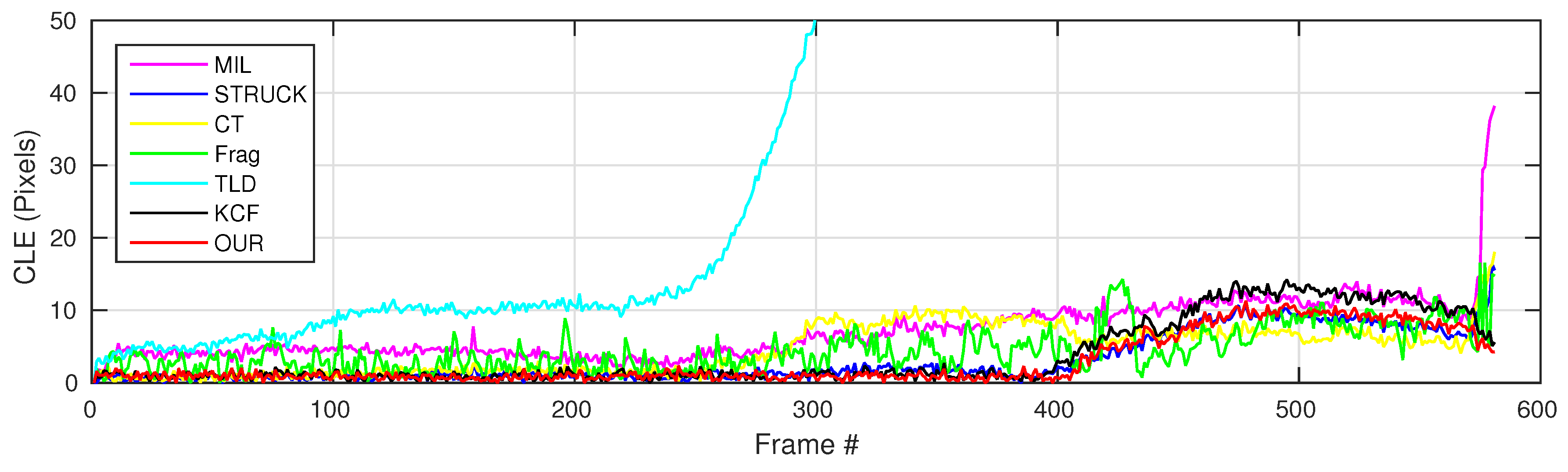

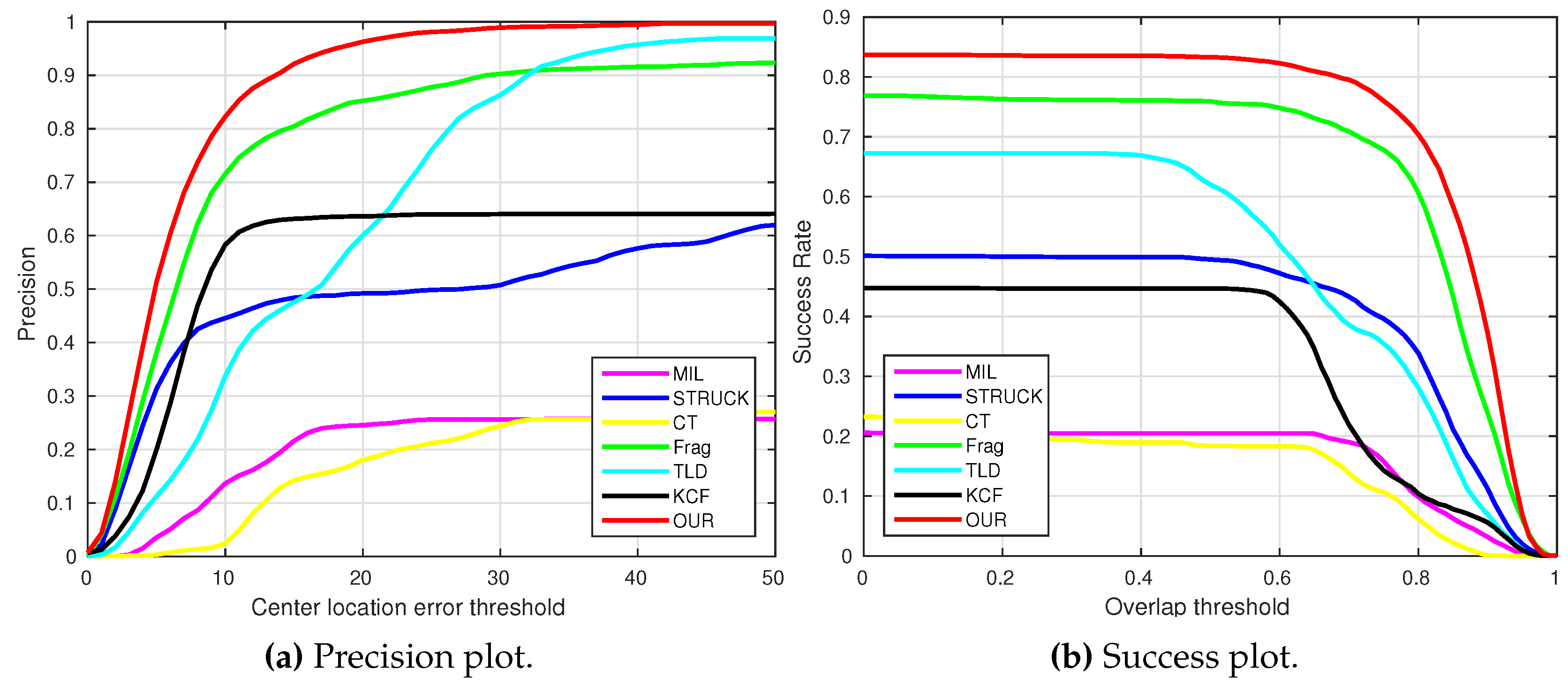

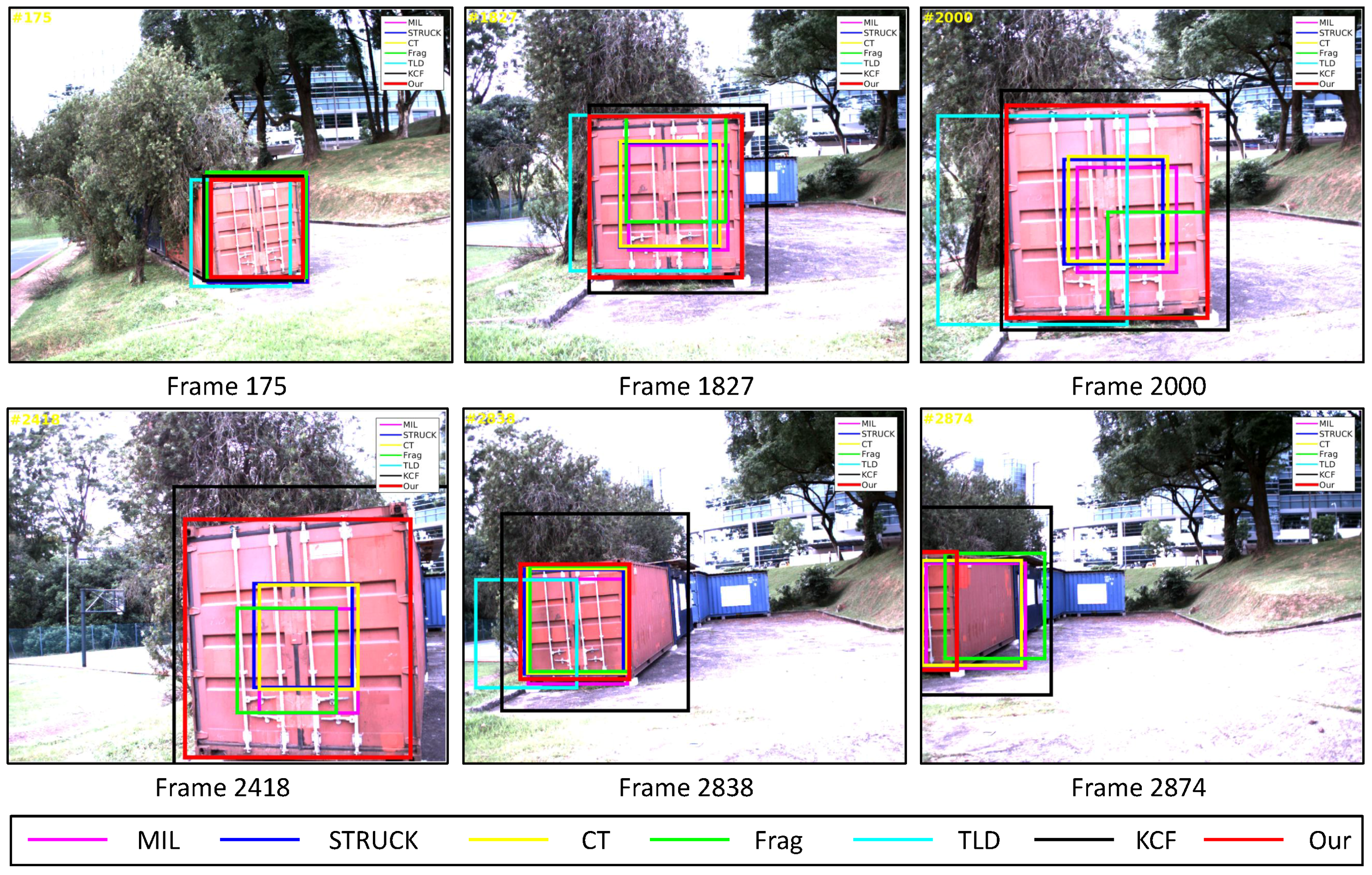

4.1. Test 1: Visual Tracking of the Container

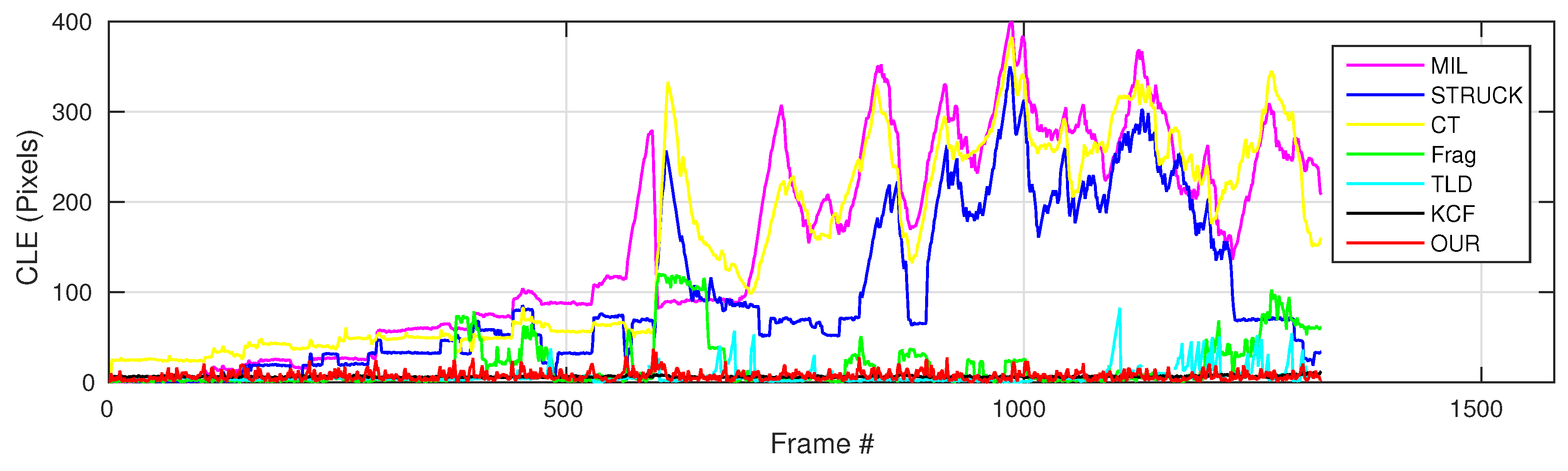

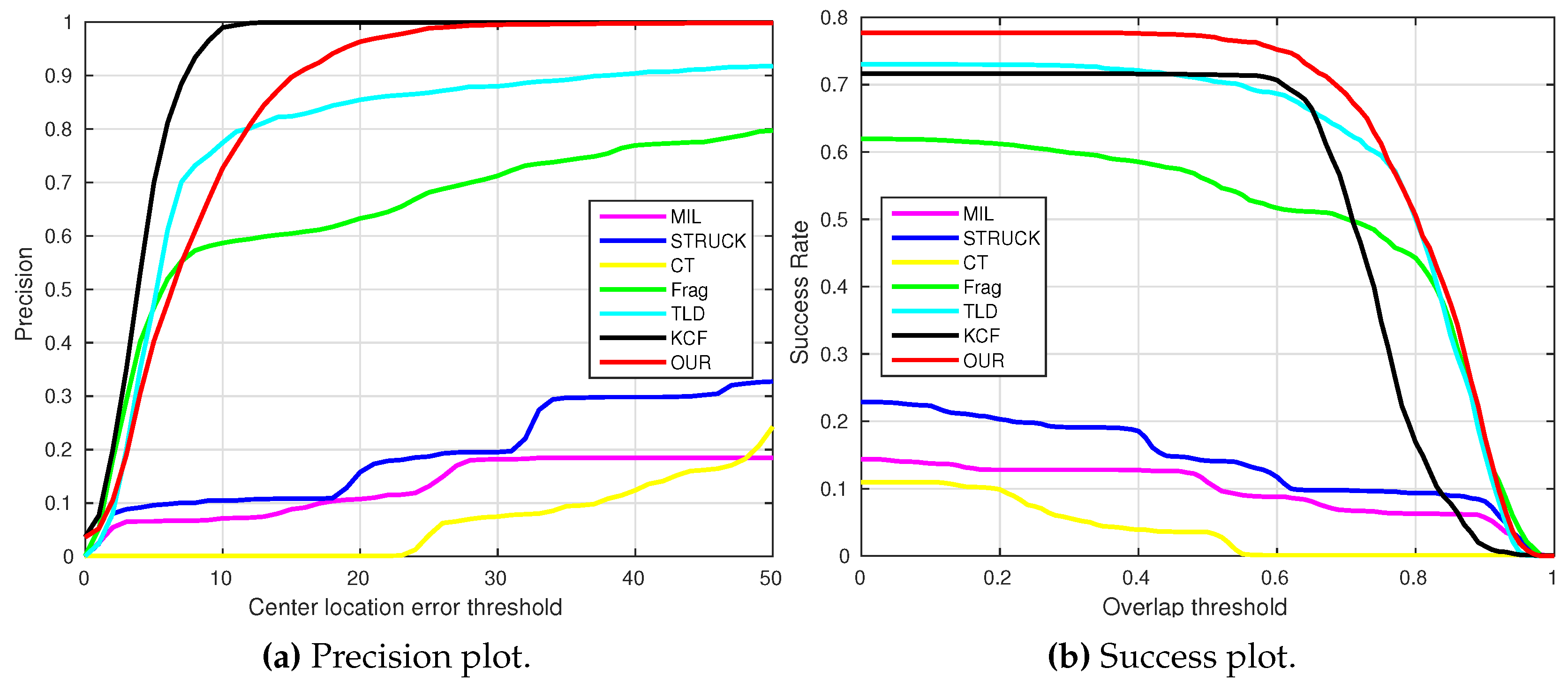

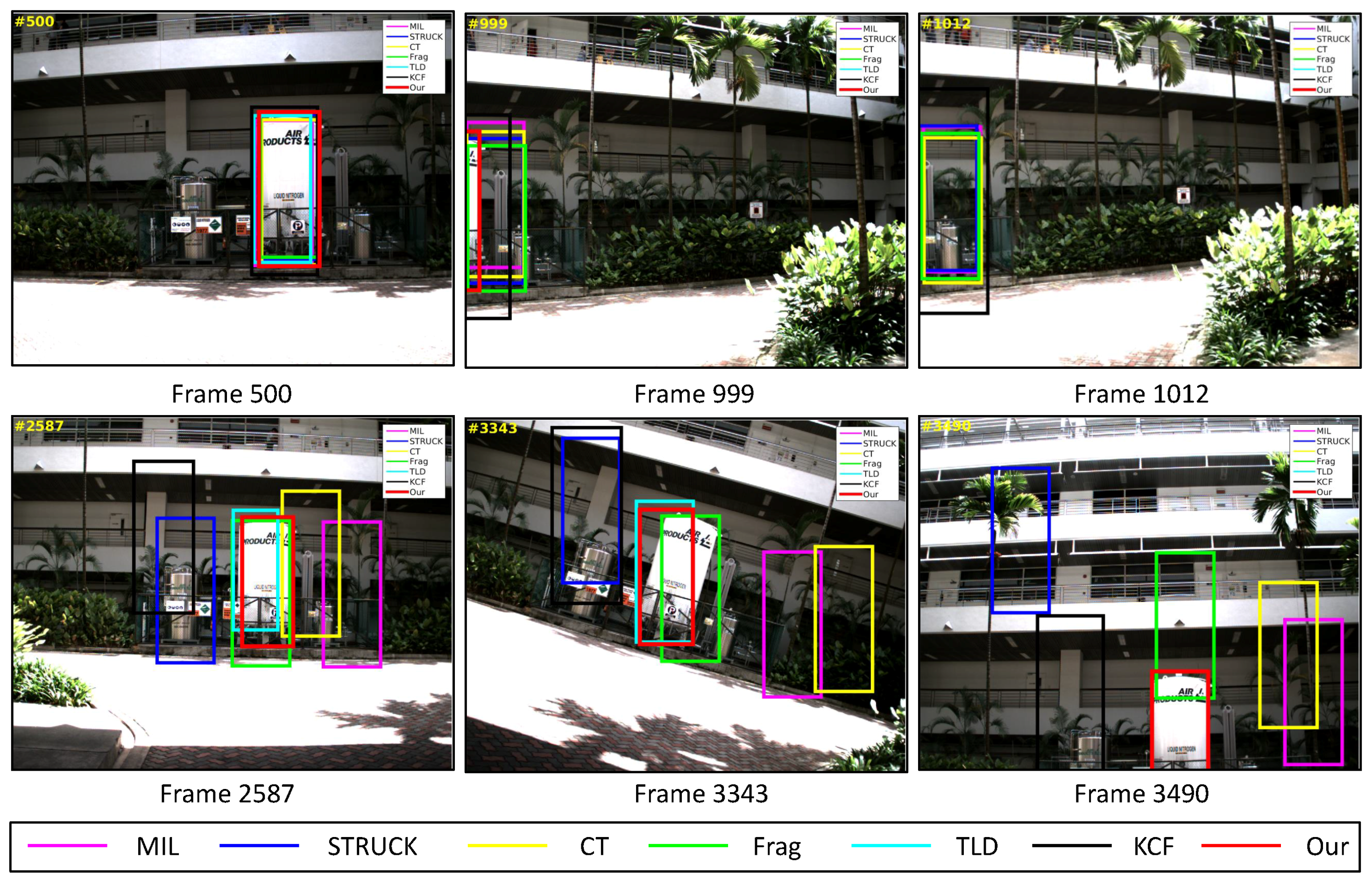

4.2. Test 2: Visual Tracking of the Gas Tank

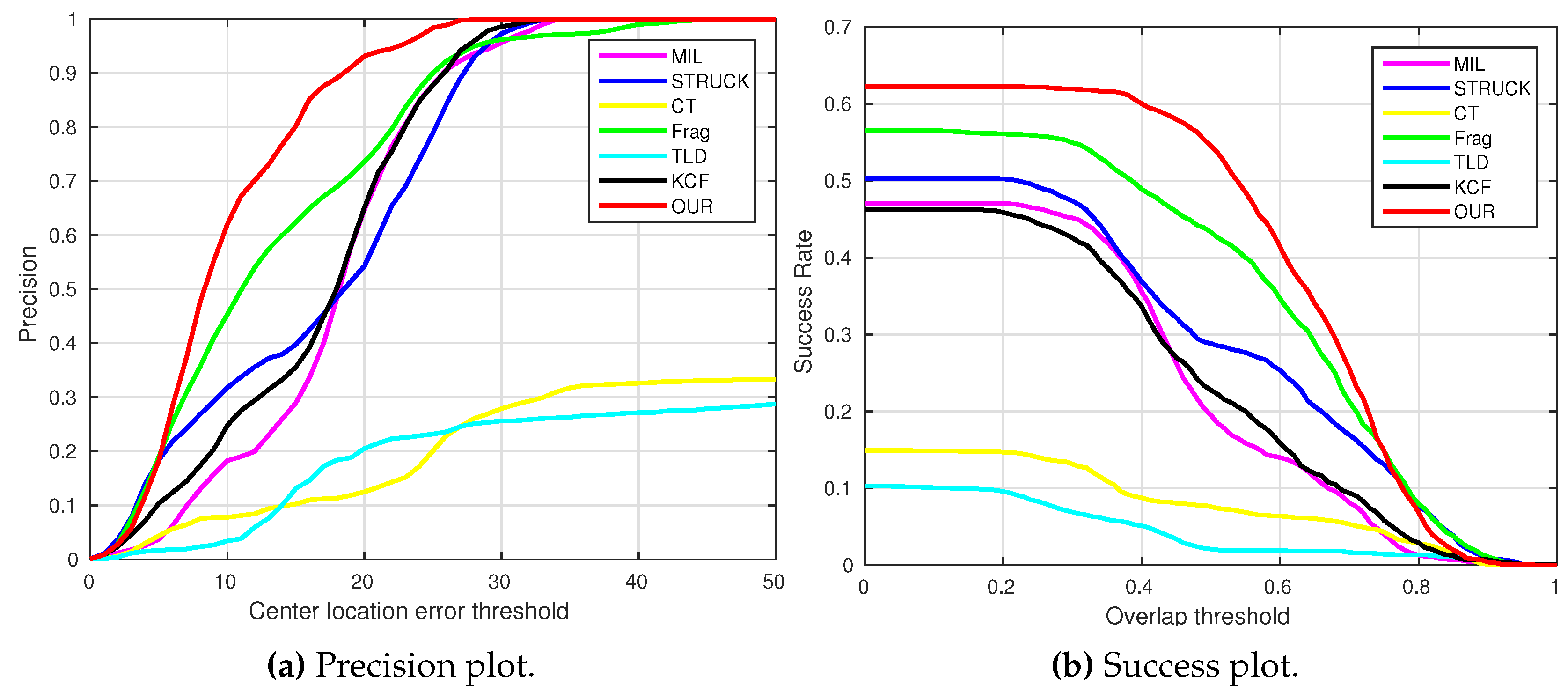

4.3. Test 3: Visual Tracking of the Moving Car

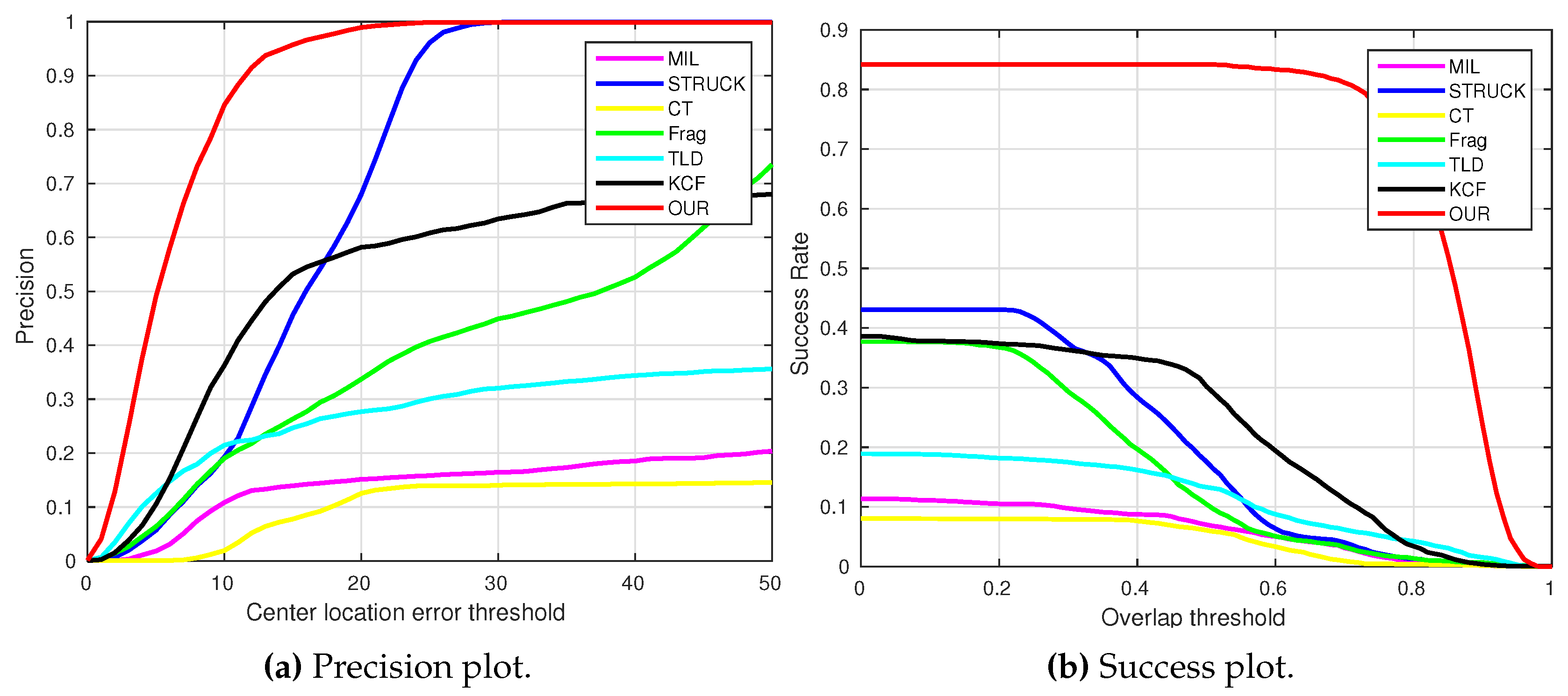

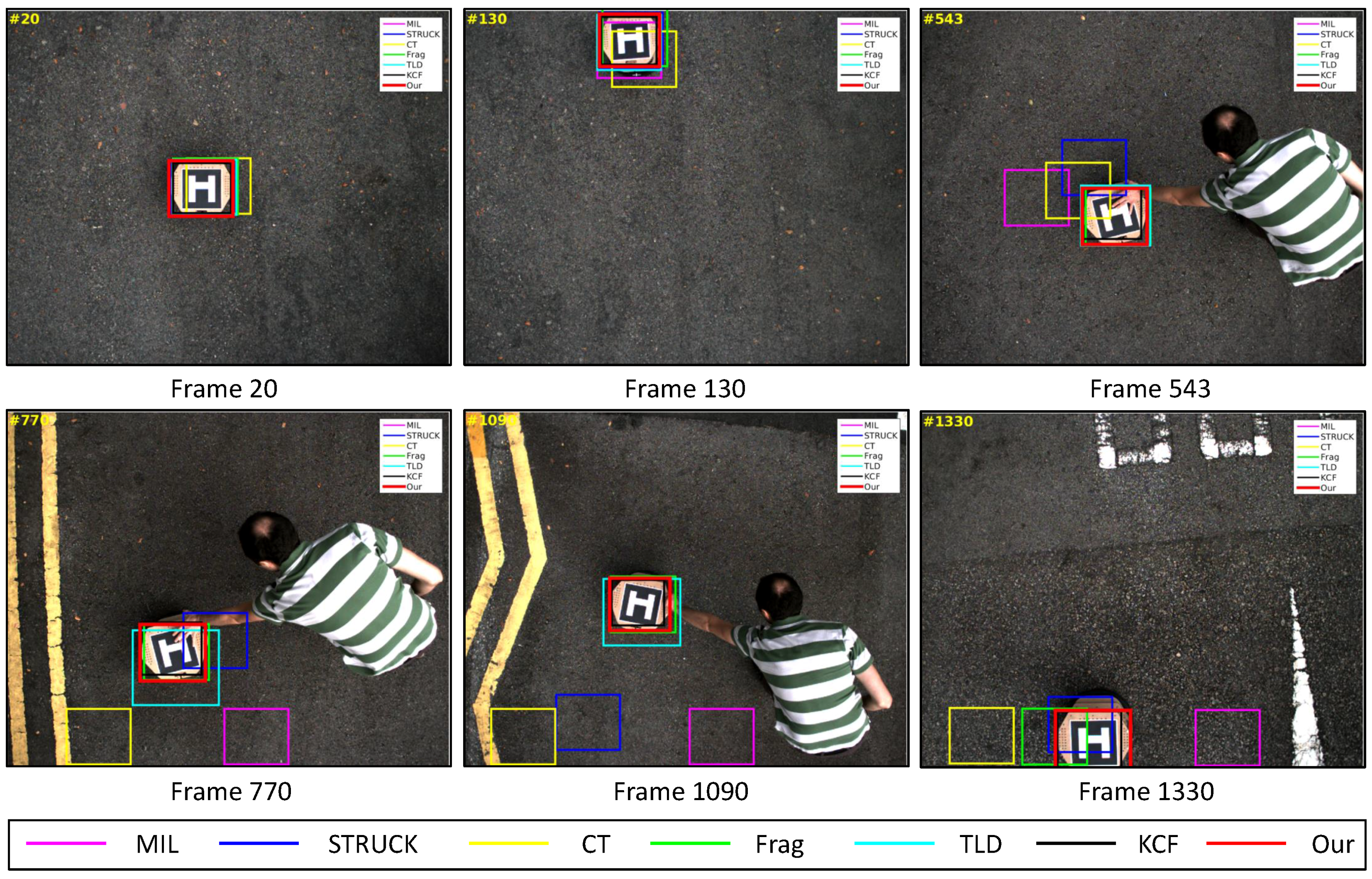

4.4. Test 4: Visual Tracking of the UGV with the Landing Pad (UGVlp)

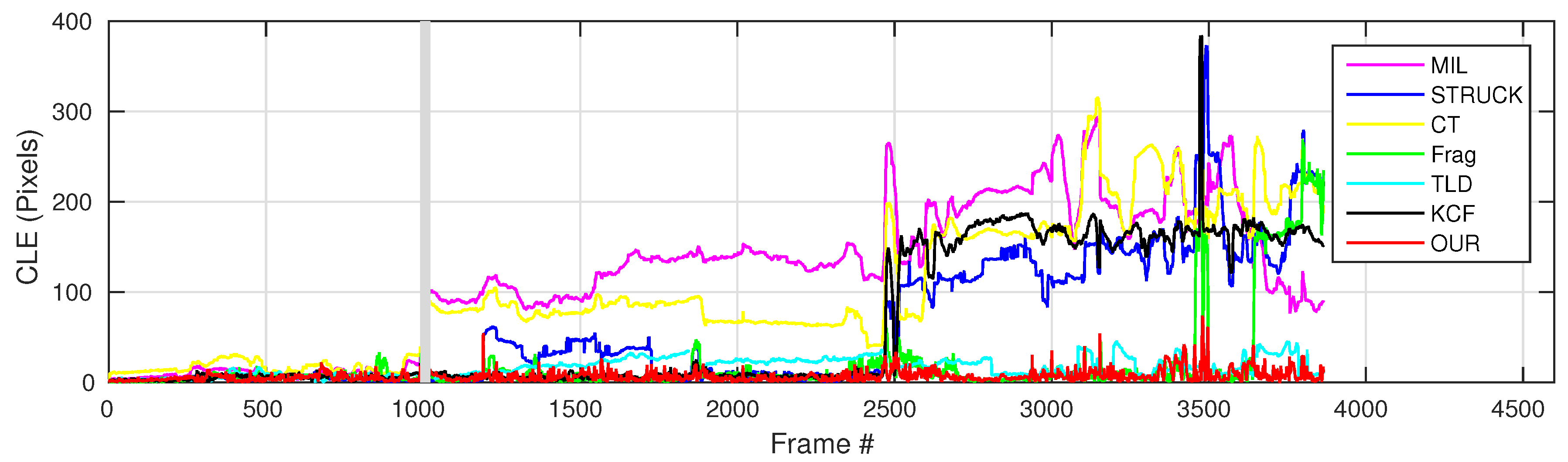

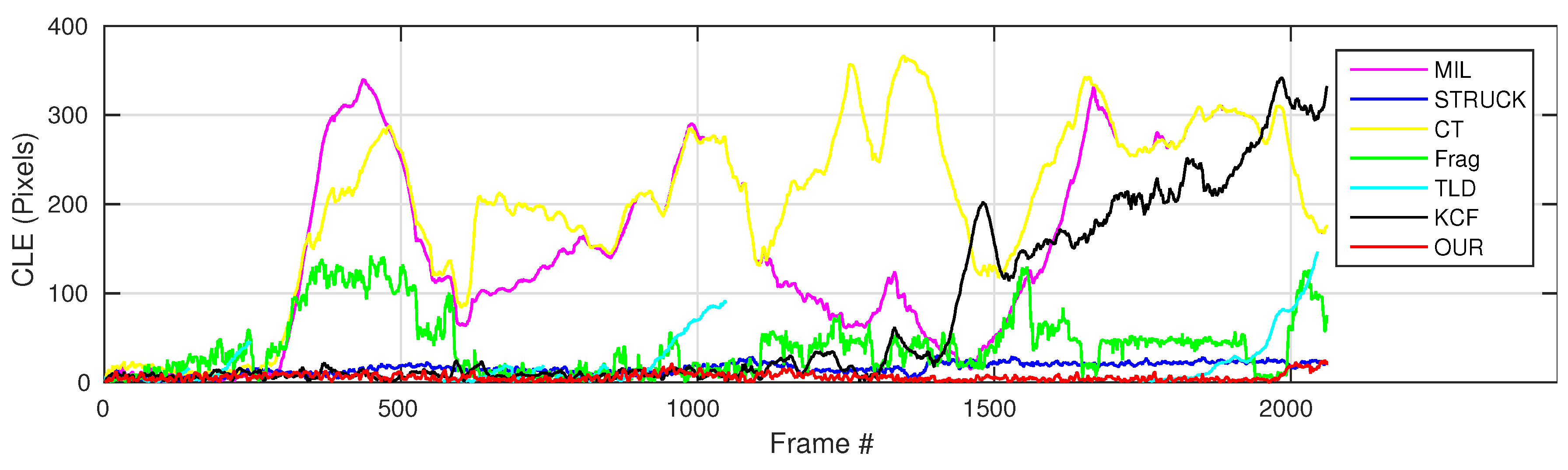

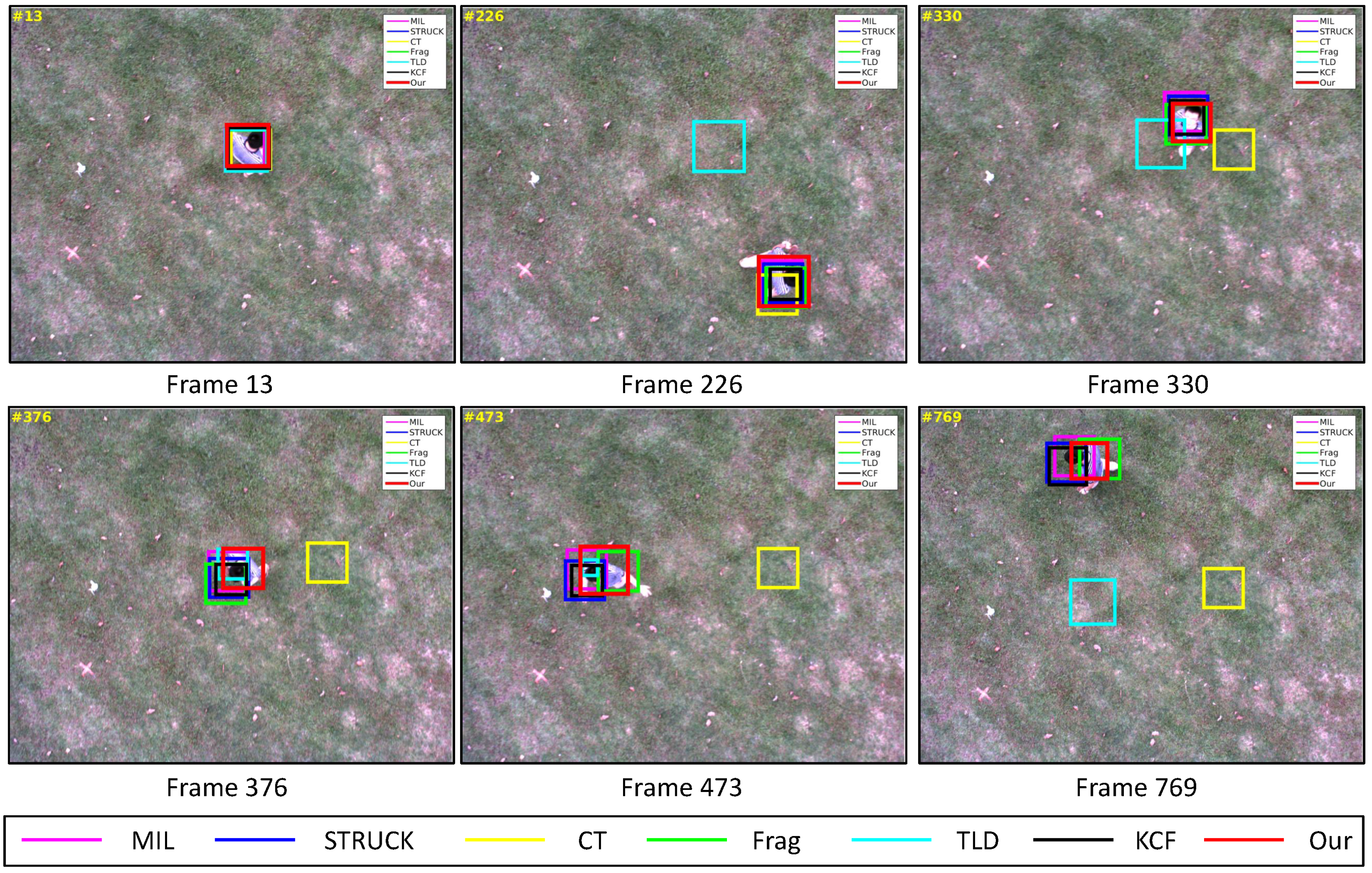

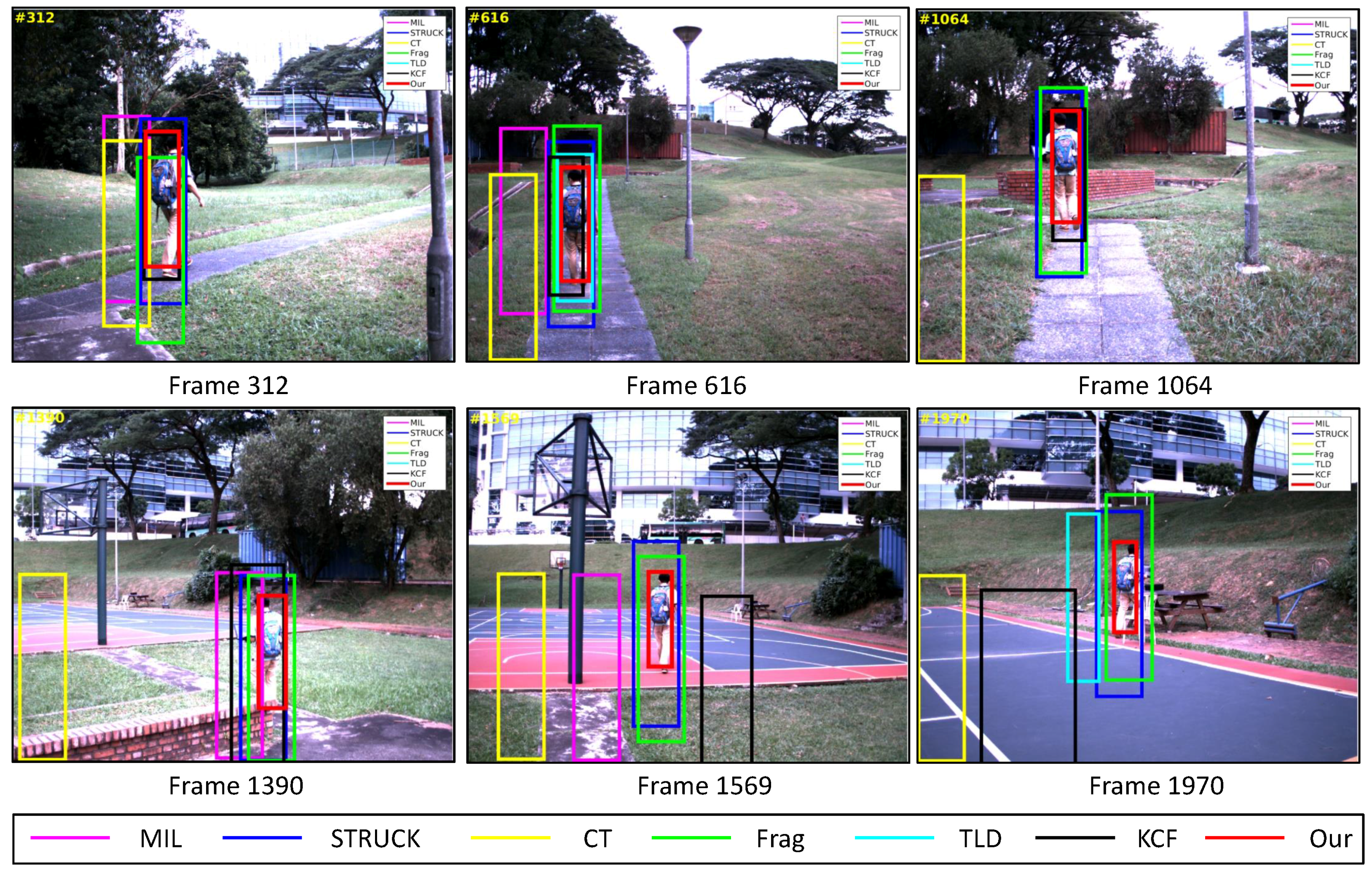

4.5. Test 5: Visual Tracking of Walking People Below (Peoplebw)

4.6. Test 6: Visual Tracking of Walking People in Front (Peoplefw)

4.7. Discussion

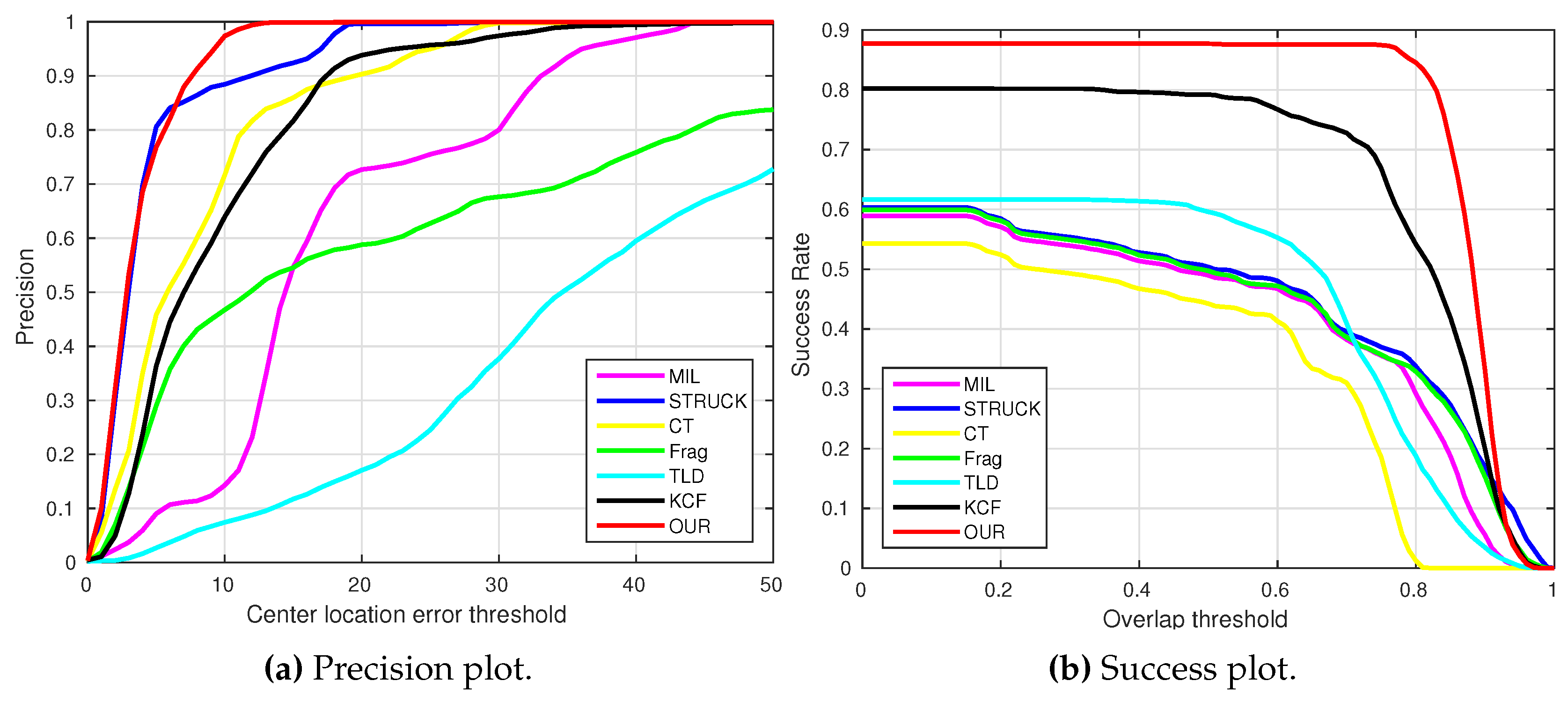

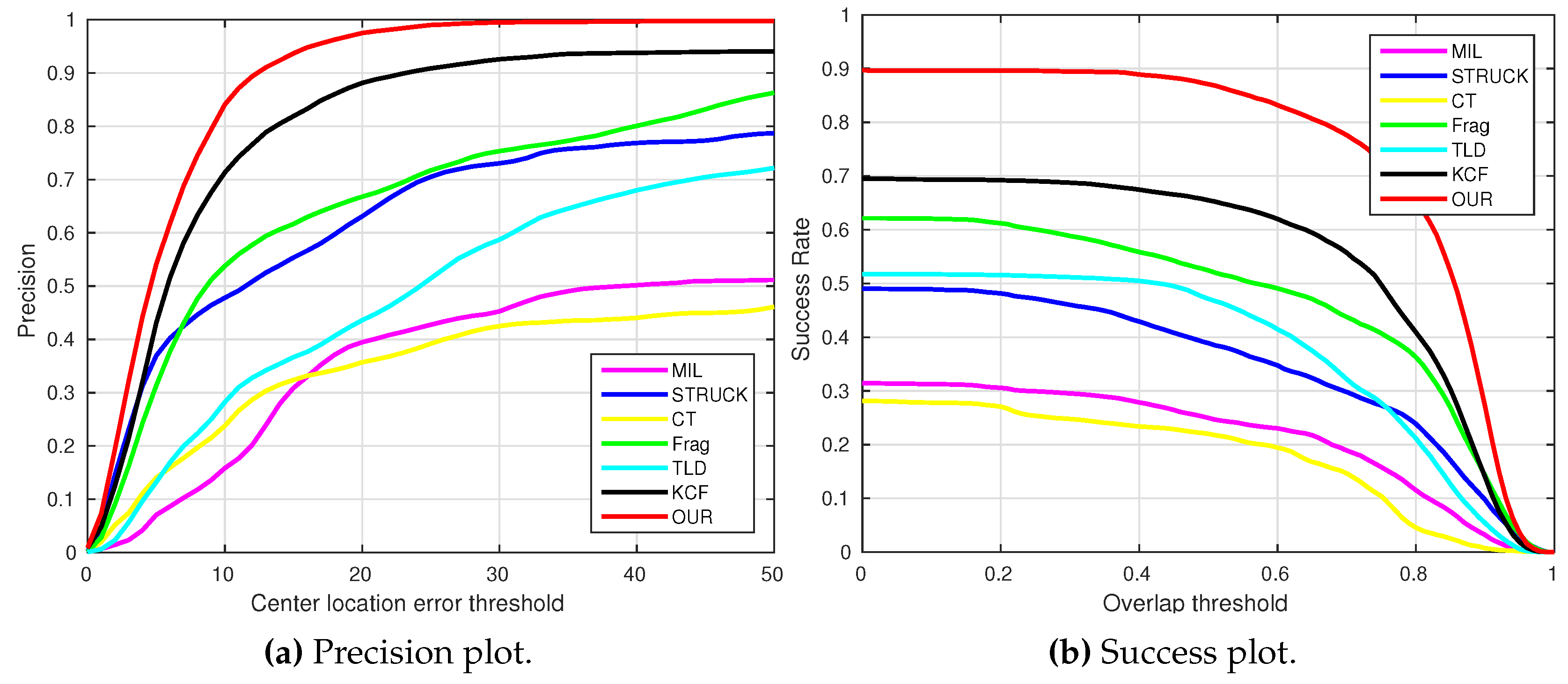

4.7.1. Overall Performances

4.7.2. Failure Case

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Fu, C.; Suarez-Fernandez, R.; Olivares-Mendez, M.; Campoy, P. Real-time adaptive multi-classifier multi-resolution visual tracking framework for unmanned aerial vehicles. In Proceedings of the 2nd Workshop on Research, Development and Education on Unmanned Aerial Systems (RED-UAS), Compiegne, France, 20–22 November 2013; pp. 99–106.

2. Lim, H.; Sinha, S.N. Monocular localization of a moving person onboard a Quadrotor MAV. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2182–2189.

3. Fu, C.; Carrio, A.; Olivares-Mendez, M.; Suarez-Fernandez, R.; Campoy, P. Robust real-time vision-based aircraft tracking from Unmanned Aerial Vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 5441–5446.

4. Babenko, B.; Yang, M.H.; Belongie, S. Visual tracking with online Multiple Instance Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 983–990.

5. Hare, S.; Saffari, A.; Torr, P. Struck: Structured output tracking with kernels. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 263–270.

6. Zhang, K.; Zhang, L.; Yang, M.H. Real-time compressive tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 864–877.

7. Adam, A.; Rivlin, E.; Shimshoni, I. Robust fragments-based tracking using the integral histogram. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; pp. 798–805.

8. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-learning-detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

9. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

10. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the 9th European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 430–443.

11. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Proceedings of the 11th European Conference on Computer Vision (ECCV), Heraklion, Greece, 5–11 September 2010; pp. 778–792.

12. Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679.

13. Kalal, Z.; Mikolajczyk, K.; Matas, J. Forward-backward error: automatic detection of tracking failures. In Proceedings of the 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 2756–2759.

14. Müllner, D. Fastcluster: Fast hierarchical, agglomerative clustering routines for R and Python. J. Stat. Softw. 2013, 53, 1–18.

15. Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. ACM SIGMOD Rec. 2000, 29, 93–104.

16. Teuliere, C.; Eck, L.; Marchand, E. Chasing a moving target from a flying UAV. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 4929–4934.

17. Olivares-Mendez, M.A.; Mondragon, I.; Cervera, P.C.; Mejias, L.; Martinez, C. Aerial object following using visual fuzzy servoing. In Proceedings of the 1st Workshop on Research, Development and Education on Unmanned Aerial Systems (RED-UAS), Sevilla, Spain, 30 November–1 December 2011.

18. Huh, S.; Shim, D. A vision-based automatic landing method for fixed-wing UAVs. J. Intell. Robotic Syst. 2010, 57, 217–231.

19. Martínez, C.; Campoy, P.; Mondragón, I.F.; Sánchez-Lopez, J.L.; Olivares-Méndez, M.A. HMPMR strategy for real-time tracking in aerial images, using direct methods. Mach. Vis. Appl. 2014, 25, 1283–1308.

20. Mejias, L.; Campoy, P.; Saripalli, S.; Sukhatme, G. A visual servoing approach for tracking features in urban areas using an autonomous helicopter. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Orlando, FL, USA, 15–19 May 2006; pp. 2503–2508.

21. Campoy, P.; Correa, J.; Mondragon, I.; Martinez, C.; Olivares, M.; Mejias, L.; Artieda, J. Computer vision onboard UAVs for civilian tasks. J. Intell. Robotic Syst. 2009, 54, 105–135.

22. Mondragón, I.; Campoy, P.; Martinez, C.; Olivares-Mendez, M. 3D pose estimation based on planar object tracking for UAVs control. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 35–41.

23. Yang, S.; Scherer, S.; Schauwecker, K.; Zell, A. Autonomous landing of MAVs on an arbitrarily textured landing site using onboard monocular vision. J. Intell. Robotic Syst. 2014, 74, 27–43.

24. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference (AVC), Manchester, UK, 31 August–2 September 1988; pp. 147–151.

25. Lowe, D. Distinctive image features from Scale-Invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

26. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

27. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

28. Olivares-Mendez, M.A.; Fu, C.; Ludivig, P.; Bissyandé, T.F.; Kannan, S.; Zurad, M.; Annaiyan, A.; Voos, H.; Campoy, P. Towards an autonomous vision-based unmanned aerial system against wildlife poachers. Sensors 2015, 15, 31362–31391.

29. Sanchez-Lopez, J.; Saripalli, S.; Campoy, P.; Pestana, J.; Fu, C. Toward visual autonomous ship board landing of a VTOL UAV. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 28–31 May 2013; pp. 779–788.

30. Sampedro, C.; Martinez, C.; Chauhan, A.; Campoy, P. A supervised approach to electric tower detection and classification for power line inspection. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 1970–1977.

31. Ross, D.; Lim, J.; Lin, R.S.; Yang, M.H. Incremental learning for robust visual tracking. Int. J. Comput. Vis. 2008, 77, 125–141.

32. Fu, C.; Carrio, A.; Olivares-Mendez, M.A.; Campoy, P. Online learning-based robust visual tracking for autonomous landing of Unmanned Aerial Vehicles. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 649–655.

33. Pestana, J.; Sanchez-Lopez, J.L.; Saripalli, S.; Campoy, P. Computer vision based general object following for GPS-denied multirotor unmanned vehicles. In Proceedings of the American Control Conference (ACC), Portland, OR, USA, 4–6 June 2014; pp. 1886–1891.

34. Kitt, B.; Geiger, A.; Lategahn, H. Visual odometry based on stereo image sequences with RANSAC-based outlier rejection scheme. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010; pp. 486–492.

35. Hartley, R.I.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, England, 2004.

| Sequence | Number of frames | MV | AF | IV | OC | SV | DE | IR | OR | OV | CB |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Container | 2874 | √ | √ | √ | √ | √ | | | | | |
| Gas tank | 3869 | √ | √ | √ | √ | √ | √ | √ | √ | | |
| Moving car | 582 | √ | √ | √ | | | | | | | |
| UGVlp | 1325 | √ | √ | √ | √ | | | | | | |
| Peoplebw | 934 | √ | √ | √ | √ | | | | | | |
| Peoplefw | 2062 | √ | √ | √ | √ | √ | √ | √ | | | |

| Parameter Name | Value | Parameter Name | Value |
|---|---|---|---|
| Bucketing configuration | 10 × 8 | FAST threshold | 20 |
| Sampling patch size | 48 | BRIEF descriptor length | 256 |
| Ratio threshold (ρ) | 0.85 | Local search window | 30 |
| LGF cut-off threshold (η) | 18 | LOF cut-off threshold (μ) | 1.5 |
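For an implementation, the parameters in the table above could be collected in one immutable configuration object. The sketch below is only an illustration: the class and field names are hypothetical, the units of the patch size and search window are not stated in this excerpt, and the sanity checks in `validate` are assumptions about reasonable ranges rather than constraints taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackerParams:
    """Parameter values from the table; field names are illustrative."""
    bucket_grid: tuple = (10, 8)   # bucketing configuration, 10 x 8
    fast_threshold: int = 20       # FAST threshold
    patch_size: int = 48           # sampling patch size
    brief_length: int = 256        # BRIEF descriptor length
    ratio_threshold: float = 0.85  # rho
    search_window: int = 30        # local search window
    lgf_cutoff: float = 18.0       # eta
    lof_cutoff: float = 1.5        # mu

    def validate(self) -> bool:
        """Assumed sanity checks; raises ValueError on an implausible value."""
        if not 0.0 < self.ratio_threshold < 1.0:
            raise ValueError("ratio threshold should lie strictly between 0 and 1")
        if self.brief_length % 8 != 0:
            raise ValueError("descriptor length should be a whole number of bytes")
        if min(self.bucket_grid) < 1 or self.lgf_cutoff <= 0 or self.lof_cutoff <= 0:
            raise ValueError("grid sizes and cut-offs must be positive")
        return True
```

Freezing the dataclass keeps one set of parameters fixed across all test sequences; a variant for an experiment can be derived with `dataclasses.replace(params, lof_cutoff=2.0)`.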

| Sequence | MIL | STRUCK | CT | Frag | TLD | KCF | Ours |
|---|---|---|---|---|---|---|---|
| Container | 17.7 | 27.4 | - | 9.3 | | | |
| Gas tank | 118.1 | 62.4 | 103.4 | 63.1 | | | |
| Moving car | 7.1 | 4.5 | 4.5 | 105.1 | | | |
| UGVlp | 152.2 | 97.1 | 150.1 | 20.6 | | | |
| Peoplebw | 17.8 | 16.7 | 157.9 | 130.8 | | | |
| Peoplefw | 153.3 | 197.1 | - | 73.0 | | | |
| CLEAve | 96.4 | 41.6 | 107.1 | - | | | |
| FPSAve | 24.8 | 16.2 | 13.1 | 23.9 | | | |

| Sequence | MIL | STRUCK | CT | Frag | TLD | KCF | Ours |
|---|---|---|---|---|---|---|---|
| Container | 62.9 | 62.7 | 62.7 | 62.5 | | | |
| Gas tank | 25.7 | 61.8 | 24.5 | 62.7 | | | |
| Moving car | 68.0 | 82.5 | 24.7 | 78.4 | | | |
| UGVlp | 15.1 | 18.7 | 6.8 | 70.3 | | | |
| Peoplebw | 30.0 | 10.5 | 2.67 | 34.7 | | | |
| Peoplefw | 10.6 | 9.9 | 16.9 | 19.6 | | | |
| SRAve | 32.9 | 50.1 | 30.8 | 51.8 | | | |
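The two result tables report a center location error (CLE) and a success rate (SR) per sequence. Their exact definitions are not reproduced in this excerpt, so the sketch below assumes the definitions commonly used in tracking benchmarks: CLE as the Euclidean distance between the centers of the tracked and ground-truth boxes, and SR as the percentage of frames whose bounding-box overlap (intersection over union) exceeds a threshold. The 0.5 threshold, the `(x, y, width, height)` box format and the function names are assumptions.

```python
from math import hypot


def center(box):
    """Center of a box given as (x, y, width, height)."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def center_location_error(pred, truth):
    """Euclidean distance between the two box centers, in pixels."""
    (px, py), (tx, ty) = center(pred), center(truth)
    return hypot(px - tx, py - ty)


def overlap(pred, truth):
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = pred
    bx, by, bw, bh = truth
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(preds, truths, threshold=0.5):
    """Percentage of frames whose overlap exceeds the (assumed) threshold."""
    hits = sum(overlap(p, t) > threshold for p, t in zip(preds, truths))
    return 100.0 * hits / len(preds)
```

Averaging `center_location_error` over the frames of a sequence gives one CLE entry, and `success_rate` over the same frames gives the corresponding SR entry under these assumptions.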

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Fu, C.; Duan, R.; Kircali, D.; Kayacan, E. Onboard Robust Visual Tracking for UAVs Using a Reliable Global-Local Object Model. Sensors 2016, 16, 1406. https://doi.org/10.3390/s16091406
