2. Related Works

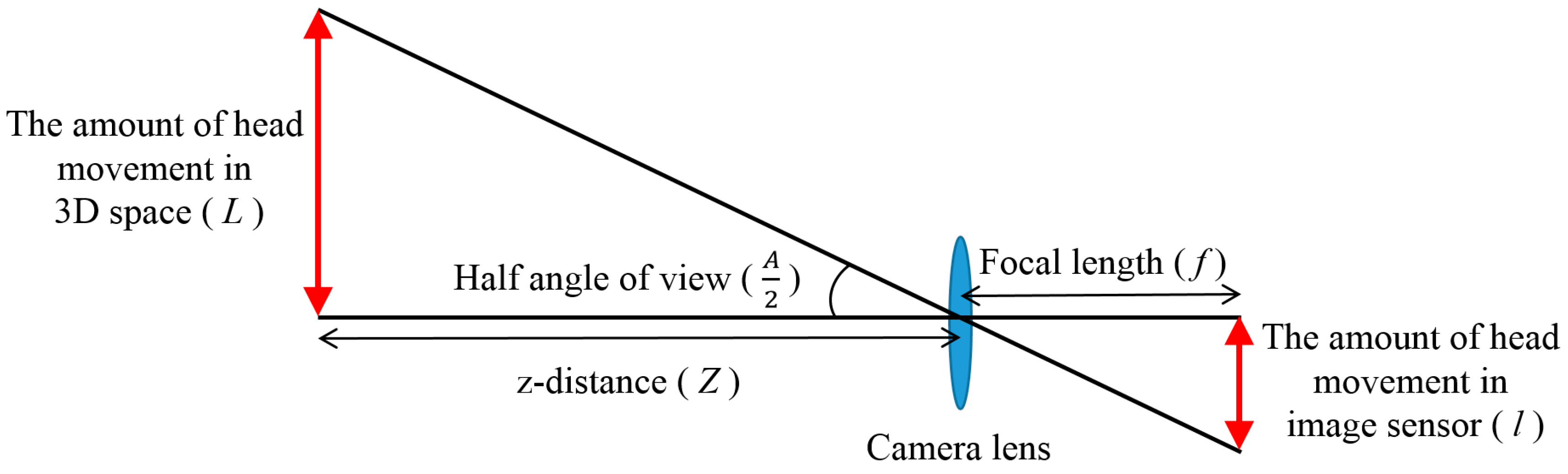

For a gaze tracking system to work properly in a desktop environment, the gaze tracking camera must capture focused images of the user’s eyes. Therefore, it is critical to determine the appropriate viewing angle and depth-of-field (DOF) of the gaze tracking camera. Here, DOF denotes the range of z-distances between the user’s eyes and the camera within which the camera can acquire a focused eye image.

The viewing angle of a gaze tracking camera should be neither too small nor too large, because either extreme degrades the system’s performance. If the gaze tracking system adopts a camera lens with a small viewing angle, the eye appears large in the image, which enables accurate detection of the pupil and the corneal specular reflection (SR). However, the allowable range of the user’s head movement becomes limited, which reduces the convenience of the system. Conversely, a camera lens with a large viewing angle permits large head movements, enhancing convenience. In this case, however, the eye region occupies fewer pixels in the captured image, which may degrade the detection accuracy of the pupil and corneal SR and, consequently, the gaze detection accuracy.
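This trade-off can be made concrete with a pinhole-camera approximation: at distance z, a lens with horizontal viewing angle θ covers a field of width 2z·tan(θ/2), and the eye occupies a proportional share of the image width. The sketch below is illustrative only; the function names, the image width (1600 px), the eye width (3 cm), and the 60 cm distance are hypothetical assumptions, not values from this study.

```python
import math

def field_width_cm(fov_deg: float, z_cm: float) -> float:
    """Horizontal width (cm) covered by the camera at distance z (pinhole model)."""
    return 2.0 * z_cm * math.tan(math.radians(fov_deg) / 2.0)

def eye_width_px(fov_deg: float, z_cm: float, image_width_px: int = 1600,
                 eye_width_cm: float = 3.0) -> float:
    """Approximate eye width in pixels; image and eye widths are hypothetical defaults."""
    return eye_width_cm * image_width_px / field_width_cm(fov_deg, z_cm)

if __name__ == "__main__":
    z = 60.0  # hypothetical eye-to-camera distance (cm)
    for fov in (10.0, 20.0, 40.0, 60.0):
        print(f"FOV {fov:4.0f} deg: field {field_width_cm(fov, z):6.1f} cm, "
              f"eye {eye_width_px(fov, z):6.1f} px")
```

A narrower viewing angle yields more pixels per eye but a smaller field within which the head can move, and a wider one does the opposite.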

In addition, when a lens with a small DOF is used, the eye region in the captured image is easily blurred by movement of the user’s head in the z-direction (approaching or receding from the camera). Blurred eye images reduce the detection accuracy of the pupil and corneal SR and, thus, the overall gaze detection accuracy. To increase the DOF, a lens with a large f-number should be used. The f-number is the ratio of the focal length to the diameter of the lens aperture (iris); for a fixed focal length, it is therefore inversely proportional to the aperture diameter [21]. Consequently, a lens with a large f-number has a small aperture, which decreases the brightness of the captured images, and the eye region can become too dark to be useful for gaze detection. Determining the optimal viewing angle and DOF of the lens is therefore a difficult but necessary step toward realizing high-performance gaze tracking systems.
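The DOF/brightness trade-off can be illustrated with the standard thin-lens depth-of-field approximations, using the hyperfocal distance H = f²/(N·c) + f, near limit s(H − f)/(H + s − 2f), and far limit s(H − f)/(H − s). Image brightness for the same scene and exposure time scales roughly as 1/N². This is a minimal sketch, not the design procedure of this paper; the function names and the 25 mm focal length, 0.01 mm circle of confusion, and 600 mm focus distance are hypothetical.

```python
import math

def aperture_diameter_mm(focal_mm: float, f_number: float) -> float:
    """Aperture (iris) diameter: focal length divided by the f-number."""
    return focal_mm / f_number

def depth_of_field_mm(focal_mm, f_number, coc_mm, focus_mm):
    """Near and far limits of acceptable focus (thin-lens approximation).

    coc_mm is the acceptable circle-of-confusion diameter on the sensor.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm < hyperfocal:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    else:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    return near, far

def relative_exposure(f_number: float, ref_f_number: float) -> float:
    """Image brightness relative to a reference f-number (scales as 1/N^2)."""
    return (ref_f_number / f_number) ** 2

if __name__ == "__main__":
    f, coc, s = 25.0, 0.01, 600.0  # hypothetical lens, CoC, and focus distance (mm)
    for n in (2.0, 4.0, 8.0):
        near, far = depth_of_field_mm(f, n, coc, s)
        print(f"f/{n:g}: aperture {aperture_diameter_mm(f, n):.2f} mm, "
              f"DOF {near:.0f}-{far:.0f} mm, brightness x{relative_exposure(n, 2.0):.3f}")
```

Stopping down from f/2 to f/8 widens the in-focus interval around the focus distance, but the aperture shrinks to a quarter of its diameter and the image receives about 1/16 of the light.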

Nevertheless, most research has focused on designing algorithms that improve the accuracy of gaze tracking systems under limited head movement; consequently, previous studies implemented gaze tracking cameras without ground truth information on the optimal viewing angle and DOF of the camera lens [1–20]. A previous study [22] proposed a long-range gaze tracking system that allows large head movements. However, it requires gaze tracking cameras with complicated panning and tilting mechanisms in addition to two wide-view cameras, and its gaze tracking camera was also designed without ground truth information on the optimal viewing angle and DOF of the camera lens. Commercial gaze trackers also exist [23,24], but their manufacturers have not publicly released ground truth information (i.e., experimental measurements of the amount and velocity of users’ head movements), although such information is necessary for designing an optimal gaze tracking camera. We address this problem through an empirical study that designs an optimal gaze tracking camera based on experimental measurements of the amount and velocity of users’ head movements. Compared to previous works, our research is novel in the following three ways.

First, to the best of our knowledge, most previous studies implemented gaze tracking cameras without ground truth information for determining the optimal viewing angle and DOF of the camera lens [1–20,22]. Eye-tracker manufacturers may use such ground truth information, but they do not make it public [23,24]. We therefore provide empirical research for designing an optimal gaze tracking camera based on experimental measurements of the amount and velocity of users’ head movements.

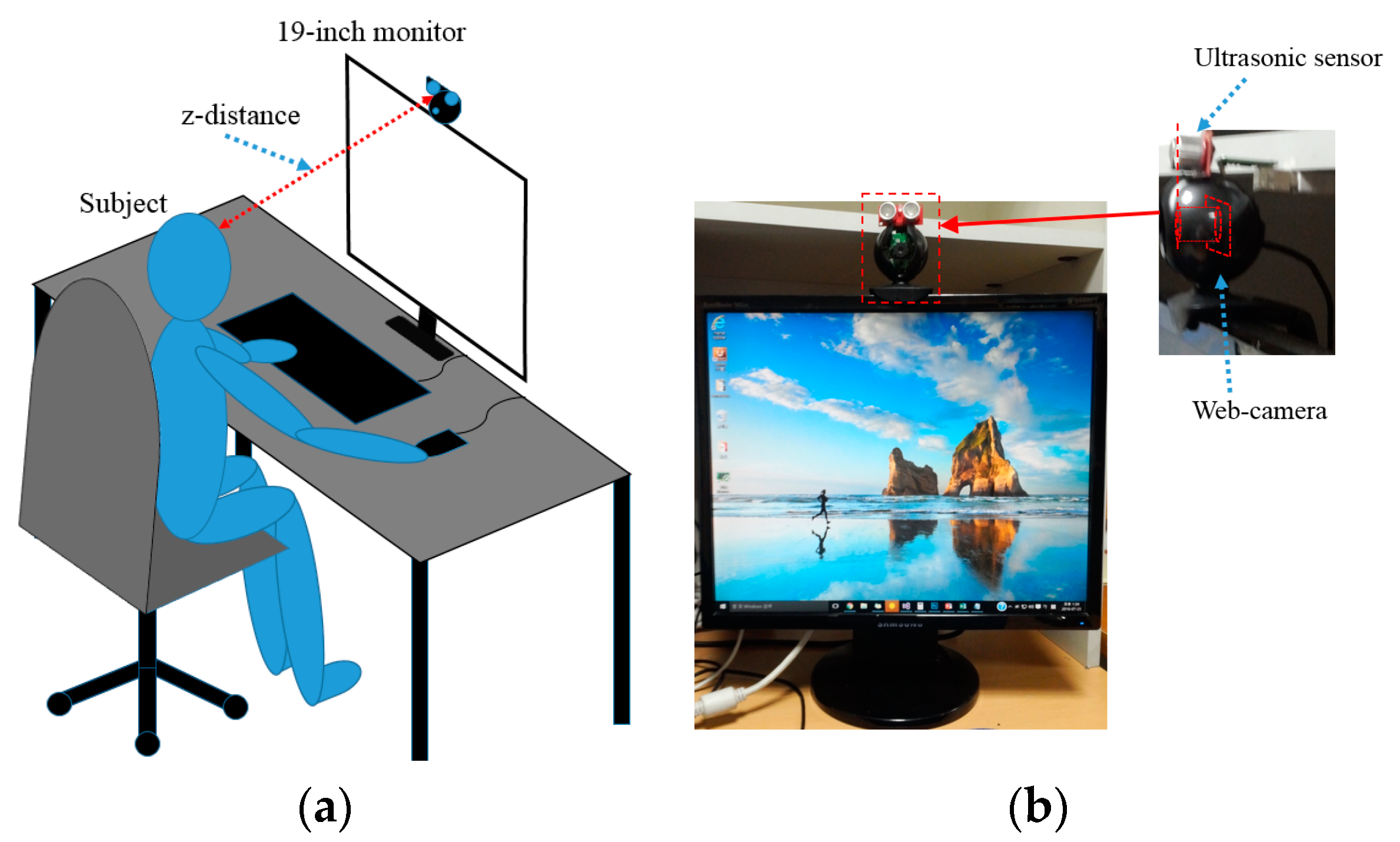

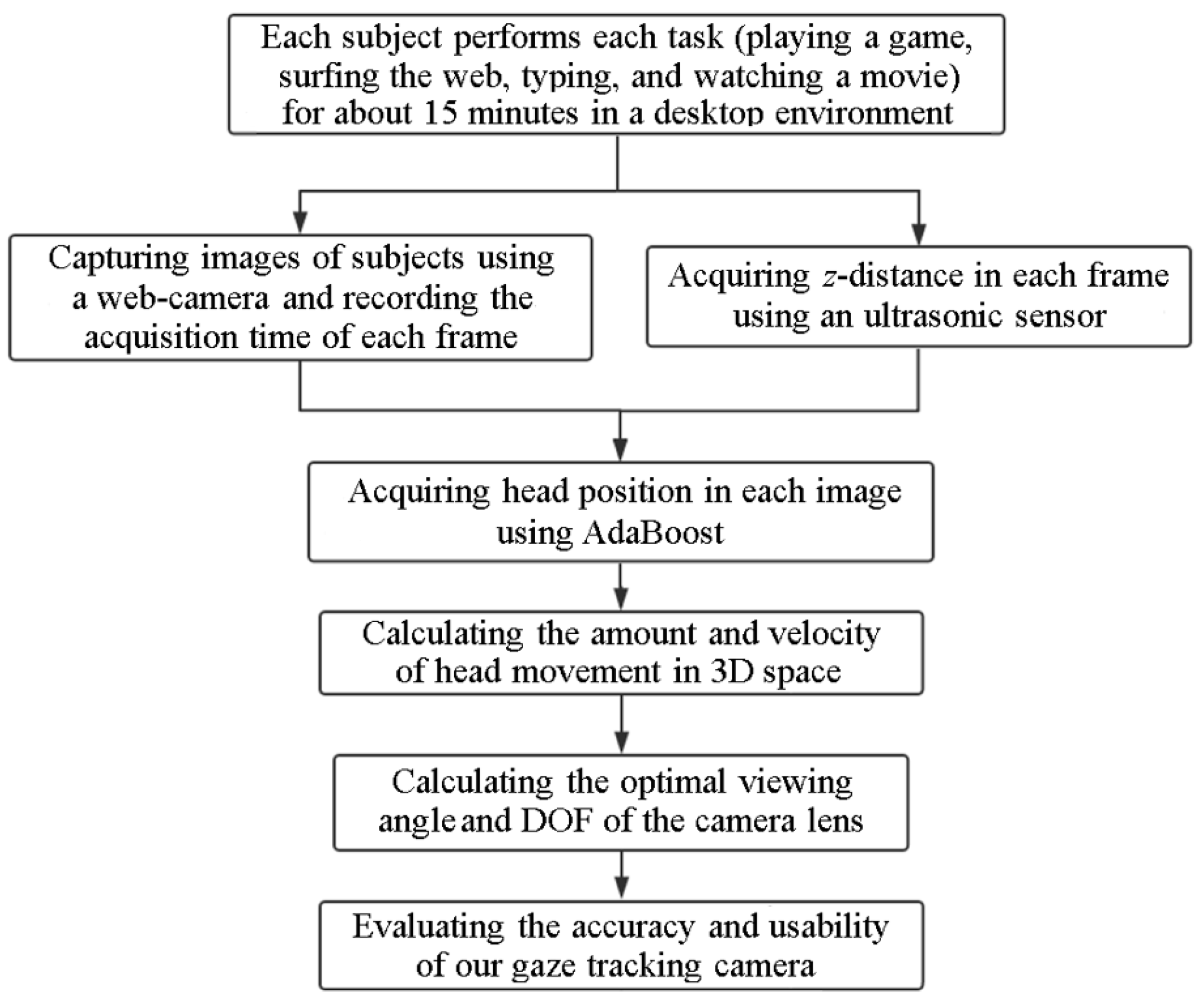

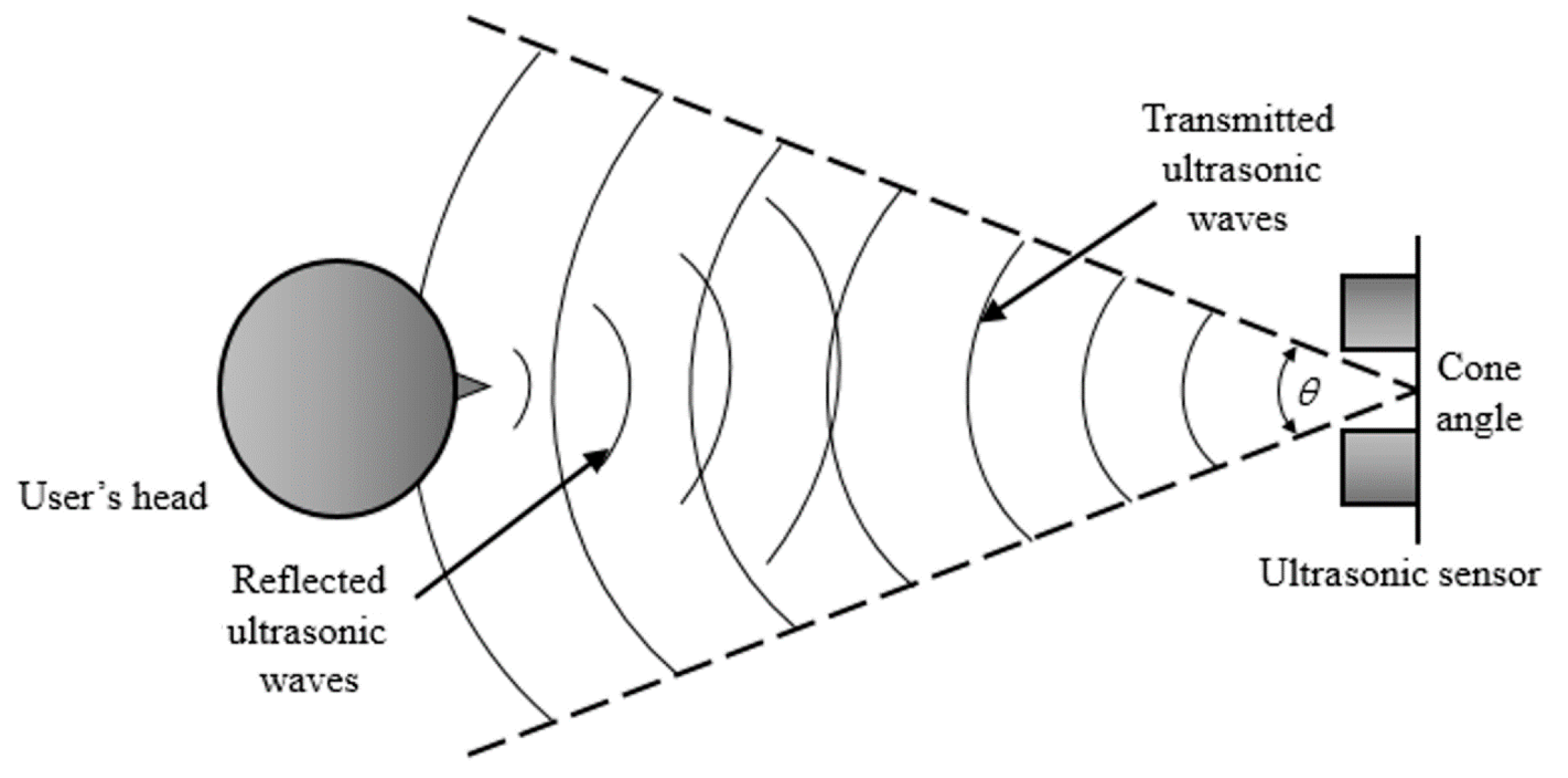

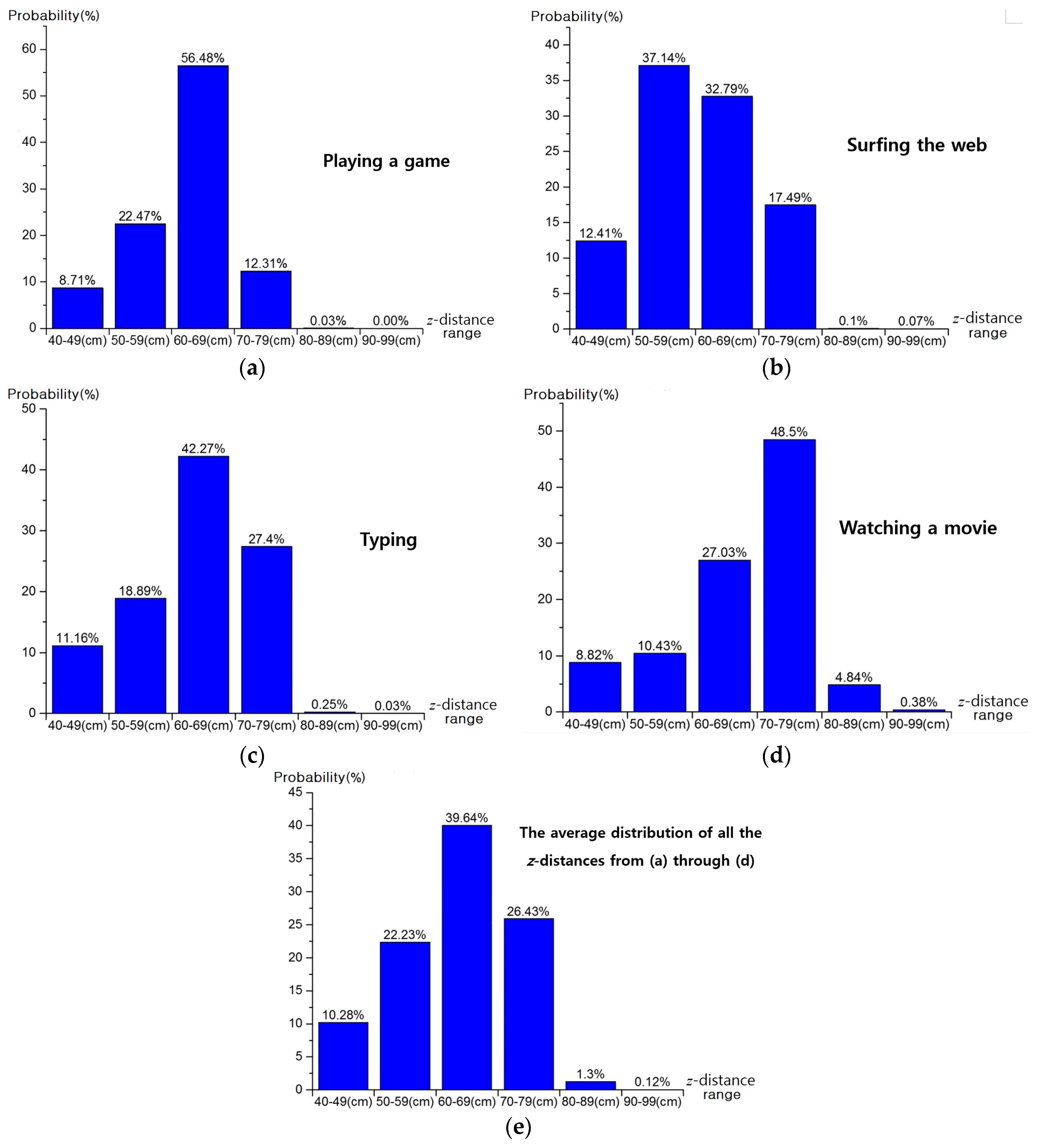

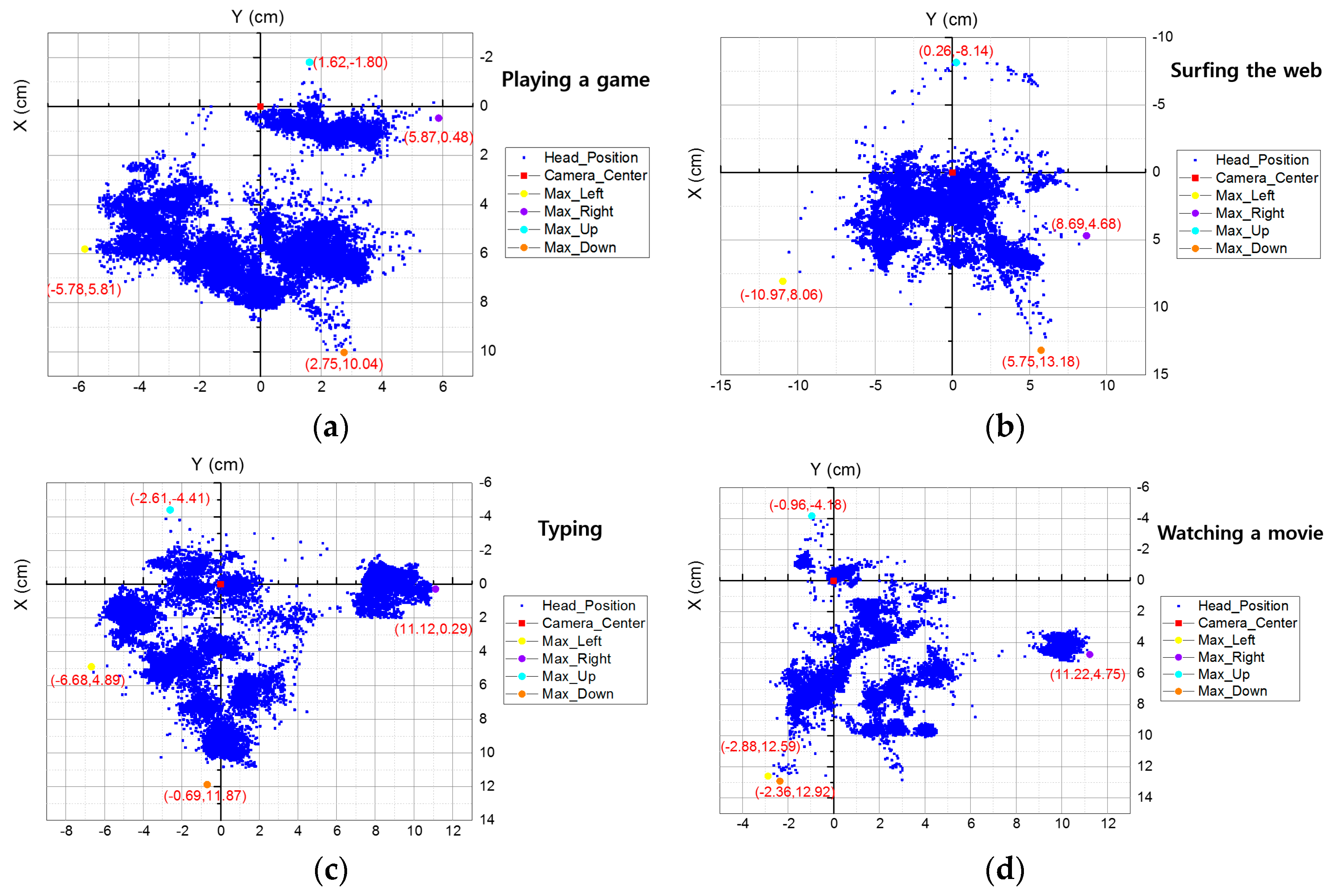

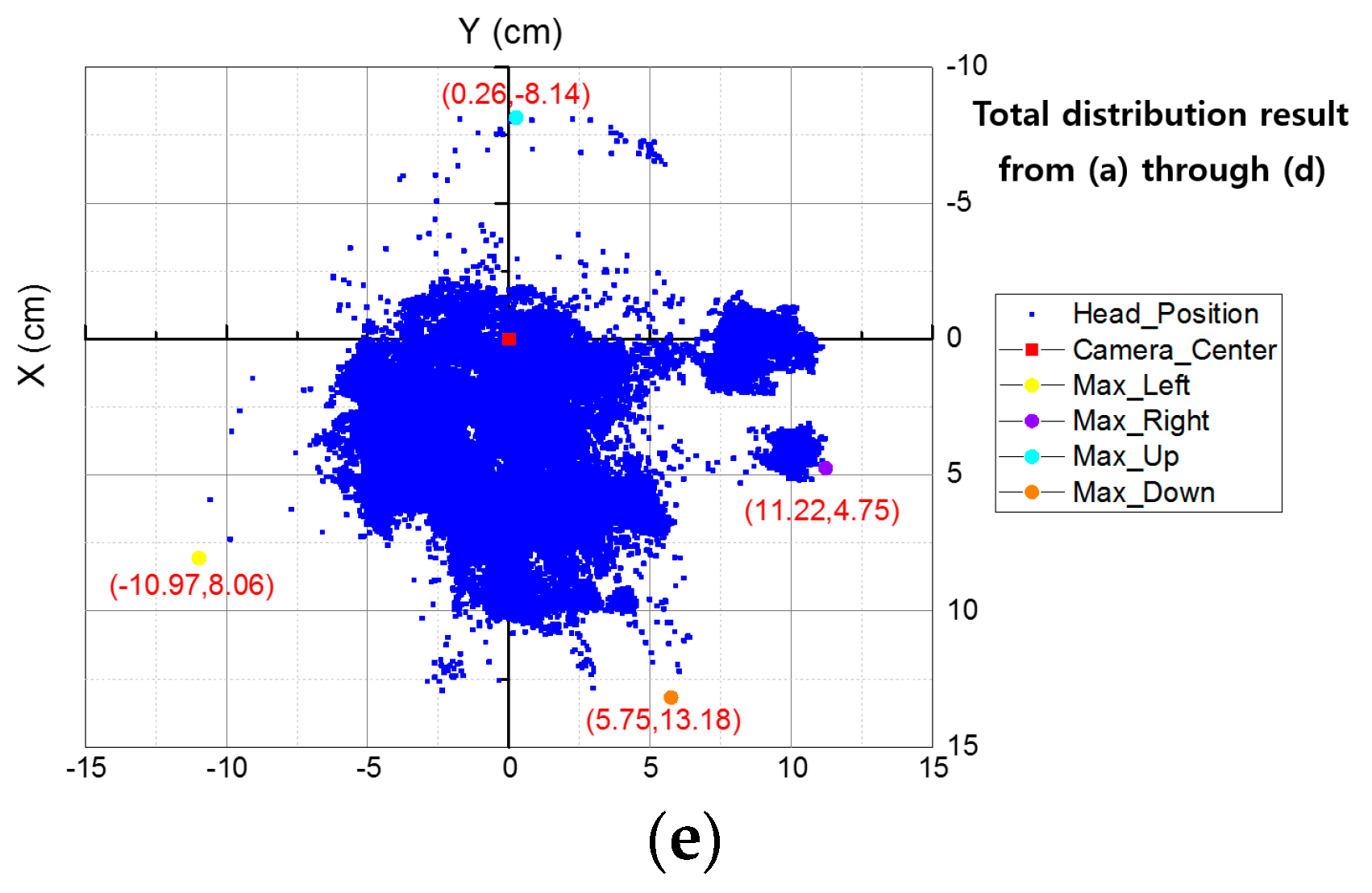

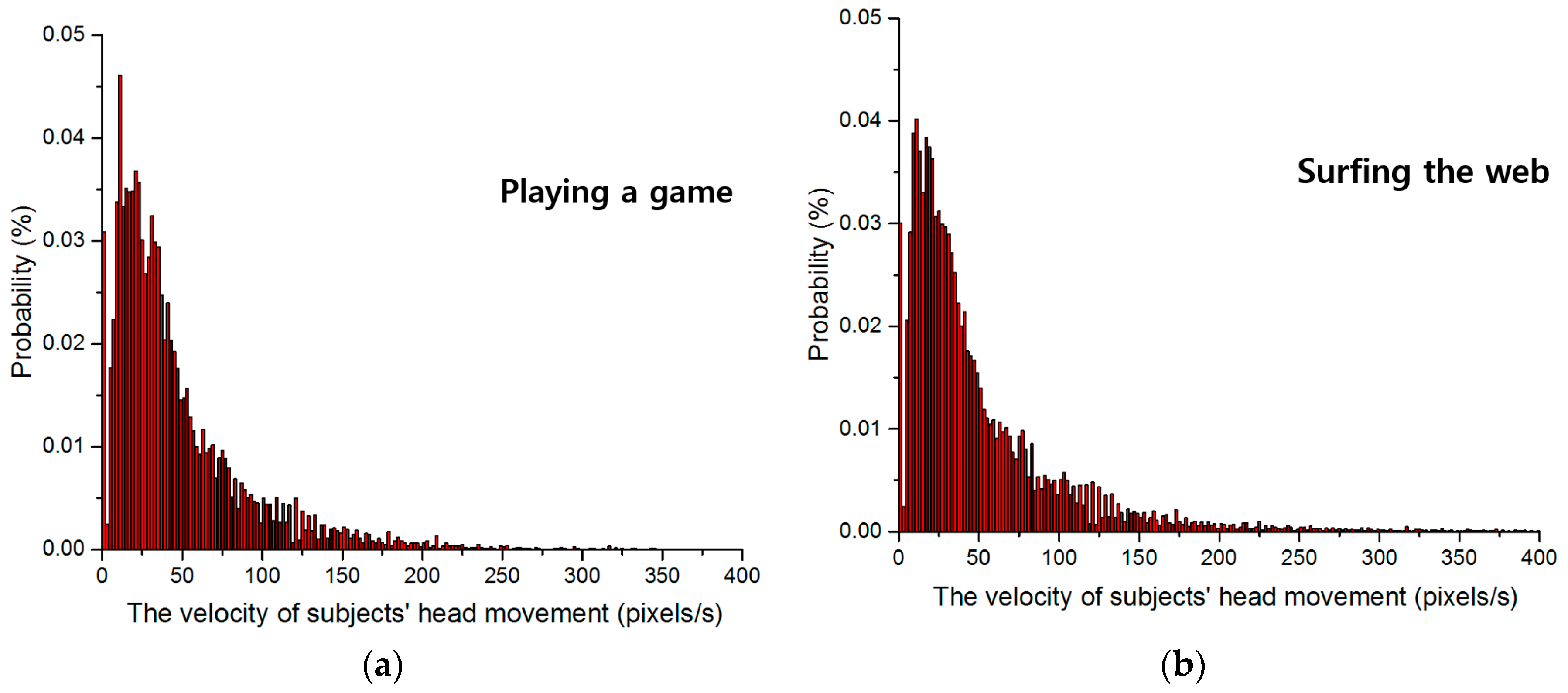

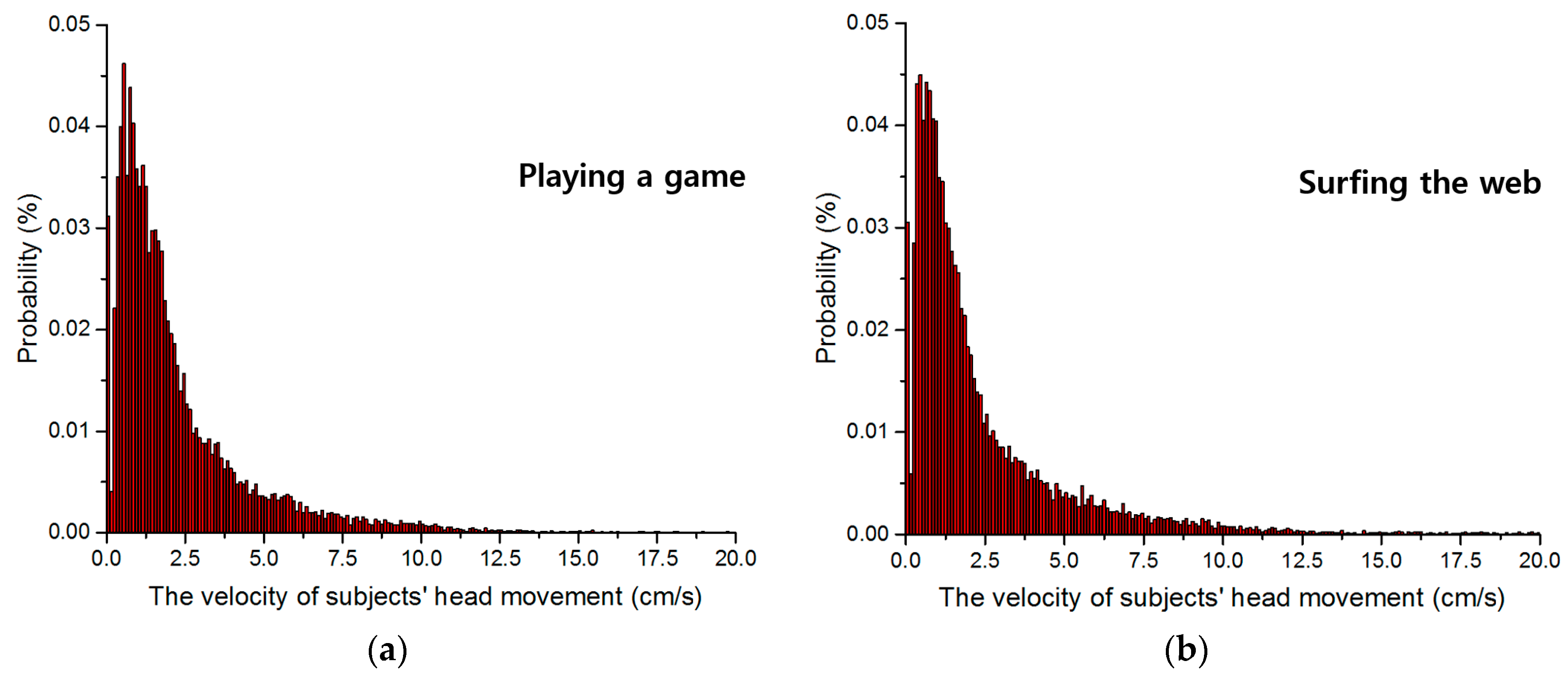

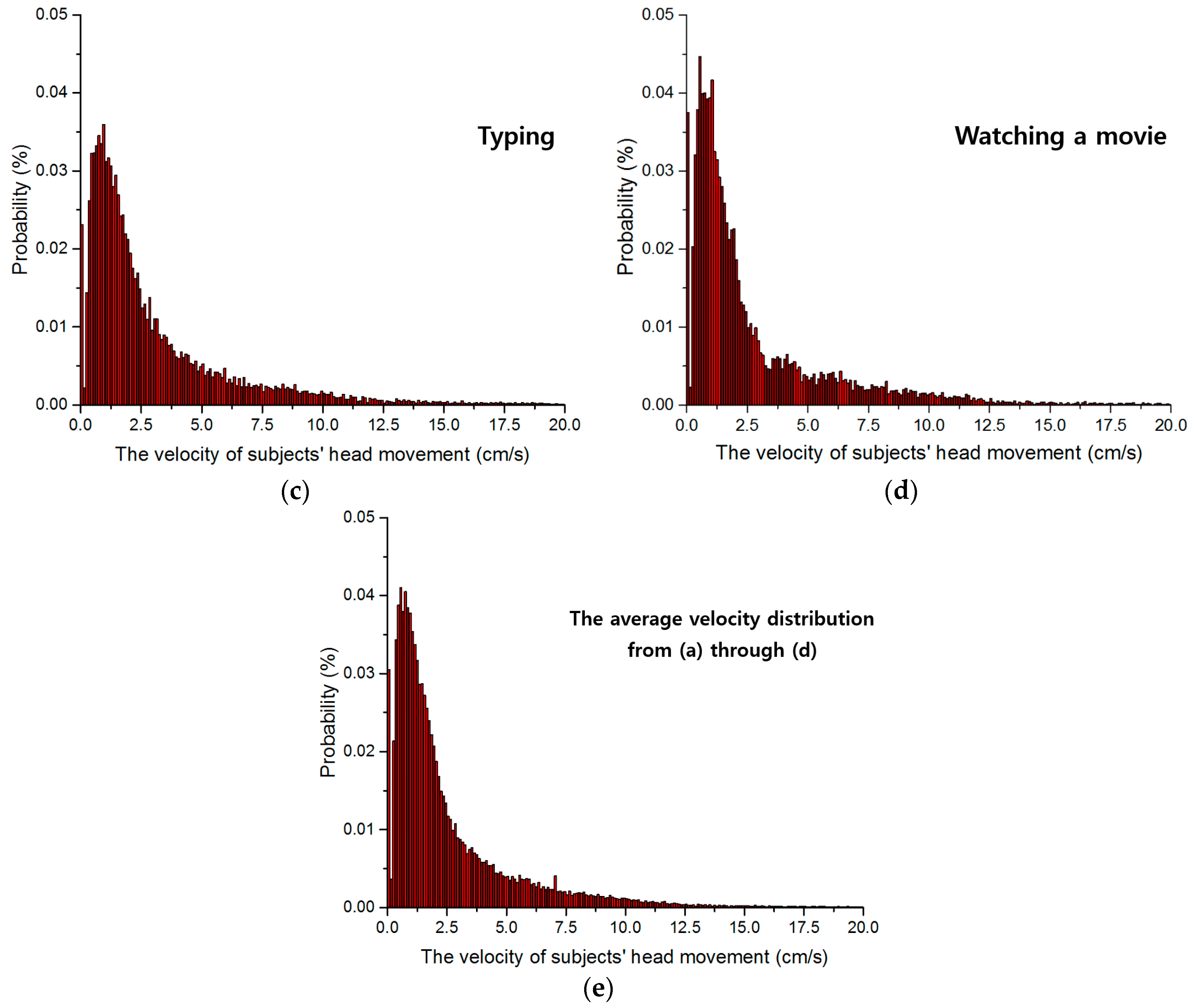

Second, the amount and velocity of users’ head movements are accurately measured using a web-camera and an ultrasonic sensor while each user plays a game, surfs the web, types, and watches a movie in a desktop environment.

Third, based on the measured amount and velocity of head movements, we determine the optimal viewing angle and DOF of the camera lens. We then build a gaze tracking system with such a lens and compare its accuracy and user convenience with those of previous methods.
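One straightforward way to turn measured head-movement ranges into lens requirements is sketched below. It is an illustration under stated assumptions, not necessarily the procedure used in this paper: it uses only the amount of movement (not its velocity), assumes the lateral range is the peak-to-peak eye displacement, and treats the nearest z-distance as the binding constraint because the camera’s field is narrowest there. The function names, the margin parameter, and all numbers in the usage example are hypothetical.

```python
import math

def required_fov_deg(lateral_range_cm: float, z_min_cm: float,
                     margin_cm: float = 0.0) -> float:
    """Viewing angle needed to keep the eyes in view over a lateral head range.

    The field is narrowest at the nearest z-distance, so that distance is the
    binding constraint; margin_cm adds room on each side (hypothetical parameter).
    """
    half_width = lateral_range_cm / 2.0 + margin_cm
    return math.degrees(2.0 * math.atan(half_width / z_min_cm))

def dof_covers_range(near_cm: float, far_cm: float,
                     z_min_cm: float, z_max_cm: float) -> bool:
    """True if the lens's in-focus interval contains the measured z-distance range."""
    return near_cm <= z_min_cm and far_cm >= z_max_cm

if __name__ == "__main__":
    # Hypothetical measurements, not results from this study.
    print(required_fov_deg(lateral_range_cm=30.0, z_min_cm=50.0, margin_cm=3.0))
    print(dof_covers_range(near_cm=45.0, far_cm=80.0, z_min_cm=50.0, z_max_cm=75.0))
```

Choosing the smallest viewing angle that satisfies the first check keeps the eye as large as possible in the image, which reflects the trade-off between convenience and detection accuracy described earlier.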

Recently, various depth cameras have been commercialized, and the Kinect sensors (versions 1 and 2, hereafter “Kinect 1.0” and “Kinect 2.0”) have been widely used owing to their performance. However, the minimum z-distance between an object and Kinect 1.0 that the sensor can measure is about 70 cm [25], whereas the z-distance typically ranges from 40 to 100 cm while people perform various tasks in desktop computer environments. Therefore, z-distances from 40 to 70 cm cannot be measured accurately by Kinect 1.0 in our experiments. In addition, because Kinect 1.0 provides a depth map covering the head, neck, and body, the face region used for z-distance measurement must be separated from these areas through further processing using the visible-light camera image of Kinect 1.0. Furthermore, Kinect 1.0 measures the z-distance by triangulation, using patterned light produced by a laser projector, which travels in straight lines [25]. Therefore, when the depth map of the face is obtained at near z-distances (from 40 to 70 cm), holes appear in the depth map because protruding facial areas such as the nose occlude the projected light. Thus, additional hole-filling processing must be applied to the depth map of the face for z-distance measurement [26–28]. Kinect 2.0, which was recently commercialized to overcome these problems, measures the z-distance using a time-of-flight (TOF) camera. However, Kinect 2.0 shows low accuracy (with large variation) of z-distance measurement in the near range of 50–100 cm, and the authors of [29] also reported that Kinect 2.0 measures less reliable z-distances in this near range. Therefore, it cannot be used for z-distance measurement in our experiments.
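To illustrate what the extra hole-filling step involves, the sketch below fills invalid depth pixels (encoded as 0) with the mean of their valid 4-neighbors, repeating until no hole can be filled. This is a generic neighbor-averaging approach chosen for brevity; it is not the method of [26–28], and the function name and the zero-as-invalid convention are hypothetical assumptions.

```python
def fill_holes(depth, invalid=0.0, max_iters=10):
    """Fill invalid depth pixels with the mean of their valid 4-neighbors.

    Repeats until no holes can be filled (or max_iters is reached), so larger
    holes are filled gradually from their borders inward. Returns a new grid.
    """
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for _ in range(max_iters):
        updates = {}
        for y in range(h):
            for x in range(w):
                if out[y][x] != invalid:
                    continue
                neighbors = [out[ny][nx]
                             for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                             if 0 <= ny < h and 0 <= nx < w and out[ny][nx] != invalid]
                if neighbors:
                    updates[(y, x)] = sum(neighbors) / len(neighbors)
        if not updates:
            break
        # Apply all updates after the scan so each pass uses only previous values.
        for (y, x), value in updates.items():
            out[y][x] = value
    return out

if __name__ == "__main__":
    # Hypothetical 3x3 face patch (cm) with a hole caused by nose occlusion.
    patch = [[62.0, 61.5, 62.0],
             [61.0, 0.0, 61.0],
             [62.0, 61.5, 62.0]]
    print(fill_holes(patch))
```

Simple averaging smooths over fine facial relief, which is acceptable when only an aggregate face z-distance is needed but not when detailed depth geometry matters.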

Kinect devices could instead be placed behind the monitor screen. Considering the z-distance range of 40–100 cm in our experiments, Kinect 1.0 would have to be placed 30 cm behind the monitor screen, and Kinect 2.0 at least 60 cm behind it. In this case, the Kinect device must be placed behind and above, to the left of, or to the right of the monitor so that the monitor does not block its depth camera. Consequently, an additional, complicated calibration among the monitor, our web-camera, and the depth camera of the Kinect device would be required because their coordinate systems differ. One could consider using the RGB camera of the Kinect device instead. However, because the device would be placed behind and above, to the left of, or to the right of the monitor, the large z-distance between the camera and the user would make the measurement resolution of head movement too low, and the captured image of the user’s face could be distorted.
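The placement distances above follow from a simple subtraction: the sensor must sit far enough behind the screen that the nearest user position (40 cm from the screen) still falls inside its reliable range. The sketch assumes a reliable minimum of 70 cm for Kinect 1.0 [25] and treats 100 cm as the lower bound of reliable measurement for Kinect 2.0, consistent with the near-range limitation reported in [29]; it ignores the extra path length caused by placing the device above or beside the monitor. The function and constant names are hypothetical.

```python
NEAREST_USER_CM = 40.0  # near end of the observed 40-100 cm z-distance range

def offset_behind_monitor_cm(sensor_min_cm: float,
                             nearest_user_cm: float = NEAREST_USER_CM) -> float:
    """Distance the sensor must sit behind the screen so the nearest user is in range."""
    return max(0.0, sensor_min_cm - nearest_user_cm)

if __name__ == "__main__":
    print("Kinect 1.0:", offset_behind_monitor_cm(70.0), "cm")   # minimum range of about 70 cm
    print("Kinect 2.0:", offset_behind_monitor_cm(100.0), "cm")  # reliable beyond about 100 cm
```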

In our system, by contrast, the ultrasonic sensor is positioned close to the web-camera, and both lie in the same plane as the monitor screen, as shown in Figure 1. Therefore, no additional complicated calibration is necessary in our experiments. In the case of the SoftKinetic device [30], the depth measurement rate decreases to below 15 frames/s as the z-distance increases, which is too slow to capture the natural head movements of users (our system measures them at 30 frames/s).
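The sketch below shows how z-distance samples could be derived from an ultrasonic sensor and summarized into the amount and velocity of head movement, and why the sampling rate matters. It assumes, hypothetically, that the sensor reports the round-trip echo time and that samples arrive at 30 frames/s; the sensor interface is not described in this section, and the speed of sound (about 343 m/s in air at 20 °C), the function names, and the example values are assumptions. The same statistics would apply to horizontal and vertical head positions from the web-camera once expressed in centimeters.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # approx. speed of sound in air at 20 °C

def echo_to_distance_cm(round_trip_s: float) -> float:
    """Convert a round-trip echo time to a one-way distance (sound goes there and back)."""
    return SPEED_OF_SOUND_CM_PER_S * round_trip_s / 2.0

def movement_stats(samples_cm, fps=30.0):
    """Return (amount, peak speed, mean speed) of a 1-D position series.

    amount is max - min position (cm); speeds are |position change| * fps (cm/s).
    """
    if len(samples_cm) < 2:
        raise ValueError("need at least two samples")
    amount = max(samples_cm) - min(samples_cm)
    speeds = [abs(b - a) * fps for a, b in zip(samples_cm, samples_cm[1:])]
    return amount, max(speeds), sum(speeds) / len(speeds)

def displacement_per_frame_cm(speed_cm_s: float, fps: float) -> float:
    """How far the head moves between consecutive samples at a given speed."""
    return speed_cm_s / fps

if __name__ == "__main__":
    echoes = [0.0035, 0.0036, 0.0037, 0.0036]  # hypothetical round-trip times (s)
    z = [echo_to_distance_cm(t) for t in echoes]
    print(movement_stats(z, fps=30.0))
    # At half the frame rate, the head travels twice as far between samples.
    print(displacement_per_frame_cm(30.0, 30.0), displacement_per_frame_cm(30.0, 15.0))
```

Halving the frame rate doubles the distance the head travels between consecutive samples, so short, rapid movements are captured more coarsely.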

In addition, these systems are expensive: more than $300 for the Intel RealSense F200 [31], more than $150 for the SoftKinetic device, more than $270 for Kinect 2.0, and about $150–$200 for Kinect 1.0. In contrast, our system (including the web-camera and ultrasonic sensor) costs less than $50, so researchers can easily use it to obtain ground truth information for implementing an optimal gaze tracking camera.

A Polhemus position tracking sensor (the Patriot sensor [32]) could also be considered for measuring the z-distance of the user’s face. However, the sensor must be attached to the user’s face throughout the experiments (about 15 min for each of the four tasks: playing a game, surfing the web, typing, and watching a movie), which causes considerable inconvenience to the participants and prevents natural head movement.

Table 1 compares the previous and proposed methods.

The remainder of this paper is organized as follows. In Section 3, the proposed system and method for measuring the amount and velocity of a user’s head movement are described. In Section 4, experimental results are presented and the performance of our gaze tracking method is evaluated. Finally, the conclusions of this paper are presented in Section 5.