4.1. Simulation

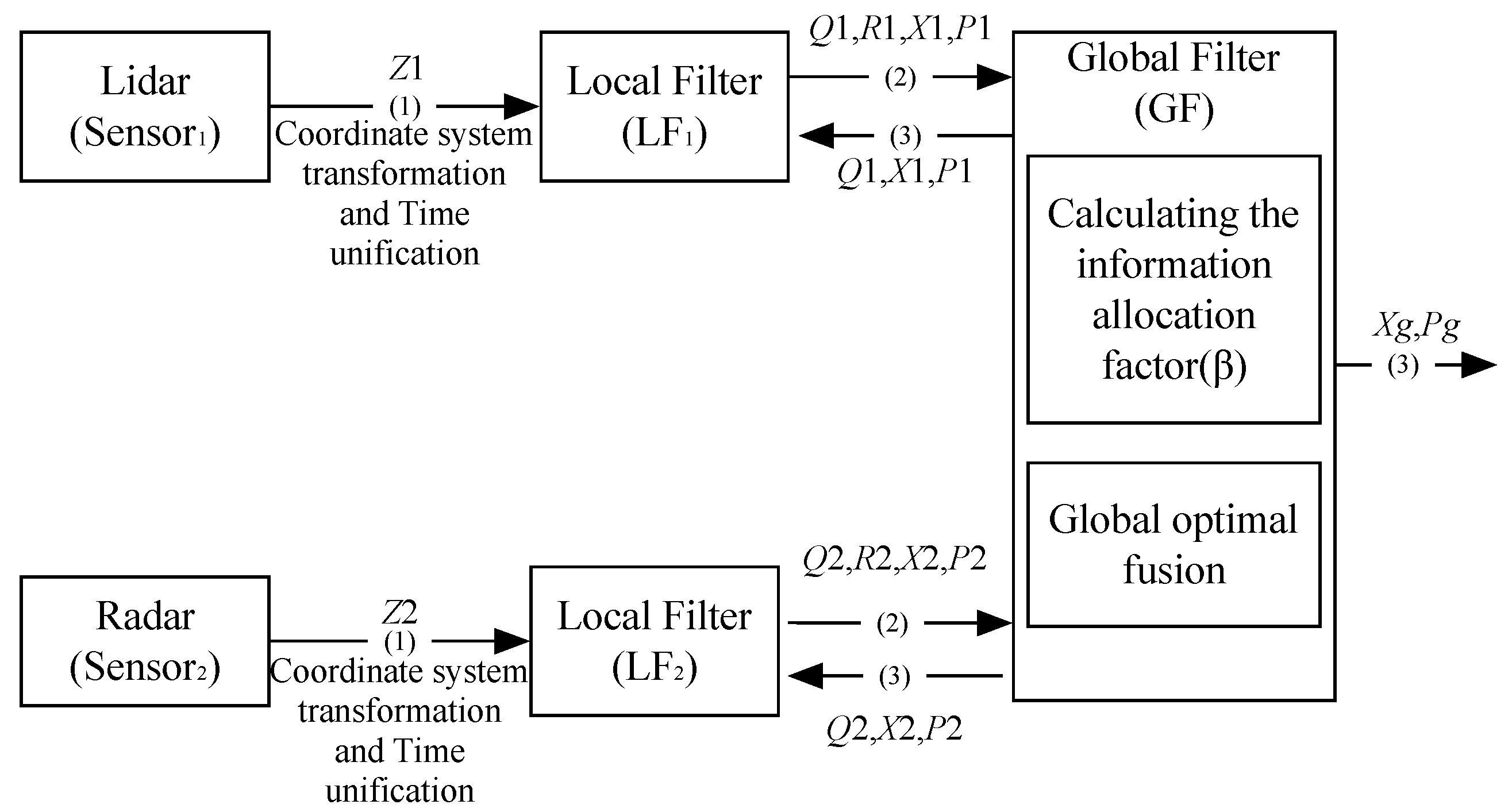

We conducted the simulation in MATLAB R2014a on a computer with an Intel Core i7-4790 CPU (3.60 GHz) running 64-bit Windows. Two factors strongly affect the accuracy of the filtered results. On the one hand, the noise intensity is the prevailing concern; it can be quantified by the variance $R$. In the simulation, the Lidar noise variance is increased by 0.1 per time span from 0.03 to 5.93, while the Radar noise variance stays at its initial value of 0.1. The initial velocity, acceleration, and displacement of the forward vehicle are 2 m/s, 0.18 m/s², and 0 m, respectively.
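The simulation set-up can be sketched as follows. This is a minimal illustration, assuming a one-dimensional constant-acceleration (CA) state [displacement, velocity, acceleration], a hypothetical sampling interval of 0.01 s with 100 steps per time span, and, as a simplification, both sensors observing displacement only; the function names are hypothetical and are not taken from the paper.

```python
import math
import random


def ca_step(state, dt):
    """Propagate [displacement, velocity, acceleration] one step under a CA model."""
    s, v, a = state
    return (s + v * dt + 0.5 * a * dt * dt, v + a * dt, a)


def lidar_variance_schedule(start=0.03, stop=5.93, step=0.1):
    """Lidar noise variance levels swept in the simulation: 0.03, 0.13, ..., 5.93."""
    n = round((stop - start) / step) + 1
    return [round(start + k * step, 2) for k in range(n)]


def simulate_span(state, lidar_var, radar_var=0.1, steps=100, dt=0.01, rng=random):
    """Simulate one time span; return final state, truth, and noisy Lidar/Radar readings.

    Assumption: both sensors measure displacement with zero-mean Gaussian noise.
    """
    truth, lidar, radar = [], [], []
    for _ in range(steps):
        state = ca_step(state, dt)
        truth.append(state)
        lidar.append(state[0] + rng.gauss(0.0, math.sqrt(lidar_var)))
        radar.append(state[0] + rng.gauss(0.0, math.sqrt(radar_var)))
    return state, truth, lidar, radar


if __name__ == "__main__":
    rng = random.Random(0)
    state = (0.0, 2.0, 0.18)  # displacement (m), velocity (m/s), acceleration (m/s²)
    levels = lidar_variance_schedule()
    for var in levels:
        state, truth, lidar, radar = simulate_span(state, var, rng=rng)
    print(len(levels), levels[0], levels[-1])
```

The sweep contains 60 variance levels; the second sweep over $a_F$ (0.18 m/s² to 6.08 m/s² in steps of 0.1 m/s²) has the same length and could reuse `simulate_span` with a varying third state component.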

Figure 3 shows the root mean squared error (RMSE), where JAKF produces the maximum RMSE of the displacement 0.2323 m and the average 0.0818 m, the maximum RMSE of the velocity 0.0837 m/s and the average 0.0689 m/s, and the maximum RMSE of the acceleration 0.0237 m/s

2 and the average 0.0171 m/s

2. When the Lidar noise variance is increased to 0.93, the RMSEs of the displacement, velocity, and acceleration produced by CKF (Lidar) increase to 10-fold, so the results of CKF (Lidar) have diverged. Although IAKF (Lidar) can adapt to the increased noise intensity, the RMSE of JAKF is significantly smaller than that of IAKF (Lidar). At the other hand, the acceleration

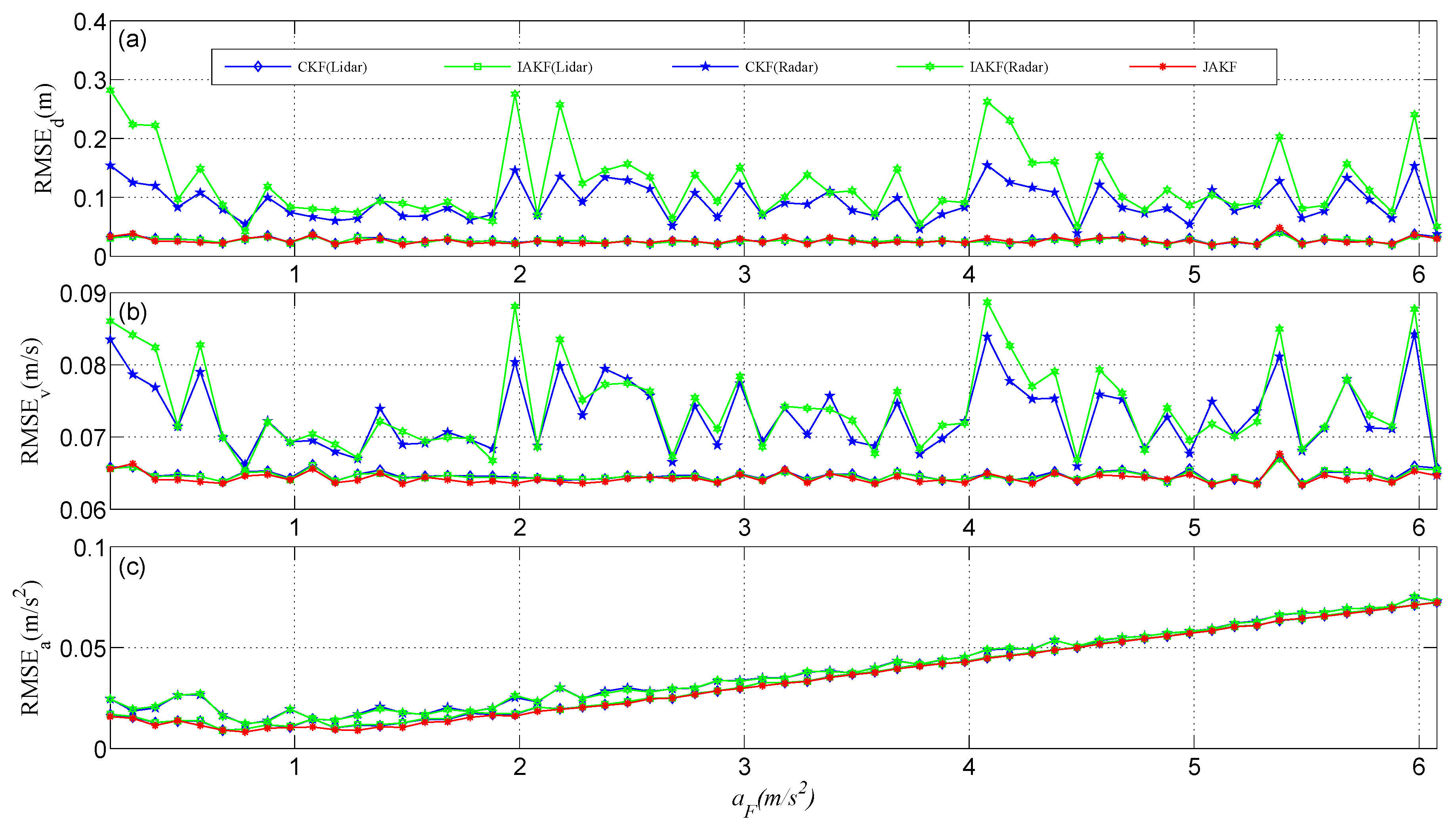

aF of the forward vehicle in the CA model is another matter. In the simulation,

aF is increased by 0.1 m/s

2 per time from 0.18 m/s

2 to 6.08 m/s

2 and the noise variances of Lidar and Radar are fixed at 0.03 and 0.1, respectively.

Figure 4 compares the motion state estimates for different acceleration values $a_F$. JAKF yields a maximum displacement RMSE of 0.0403 m (average 0.0261 m), a maximum velocity RMSE of 0.0664 m/s (average 0.0643 m/s), and a maximum acceleration RMSE of 0.0737 m/s² (average 0.0346 m/s²). As the acceleration increases, the RMSEs of CKF (Radar) and IAKF (Radar) are larger than those of CKF (Lidar), IAKF (Lidar), and JAKF.

From the comparative results in Figure 3 and Figure 4, we conclude that: (i) JAKF bounds the RMSE of the motion state estimation within an acceptable level, so JAKF is robust; (ii) JAKF produces the smallest RMSE of the motion state estimation, so JAKF is more accurate than CKF and IAKF; and (iii) JAKF uses multi-sensor data fusion to achieve global adaptation, so it can be more adaptive than a single-sensor adaptive filter through performance compensation between the sensors.

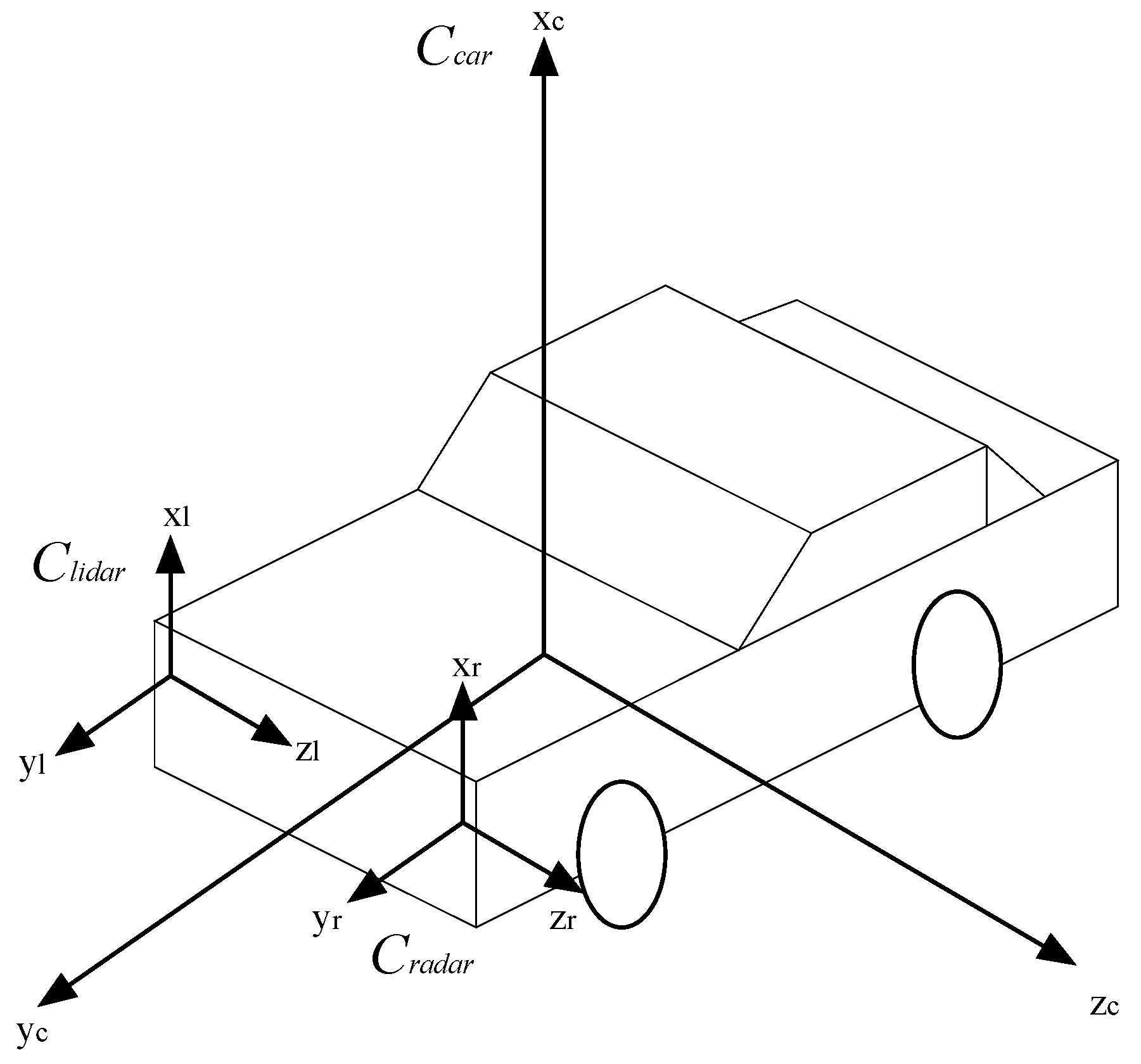

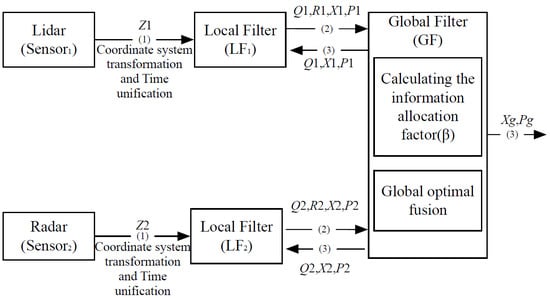

4.2. Experiment

To obtain accurate data, the test car is equipped with a high-precision URG-04LX Lidar developed by Hokuyo (Osaka, Japan) and an ESR millimeter-wave Radar developed by Delphi (Warren, OH, USA). The forward car records its displacement, velocity, and acceleration from its Controller Area Network (CAN) bus; these values serve as the benchmark against which the filtered results are compared to evaluate JAKF. CAN bus is a vehicle bus standard designed to allow microcontrollers and devices to communicate with each other within a vehicle. The motion states of a vehicle can easily be obtained from its on-board sensors via the CAN bus, which can even be used to correct the current velocity and direction.

Table 2 lists the parameters of URG-04LX and ESR.

Figure 5 shows the URG-04LX and the ESR used in the experiments, respectively.

Figure 6 shows the test car and the forward car, respectively.

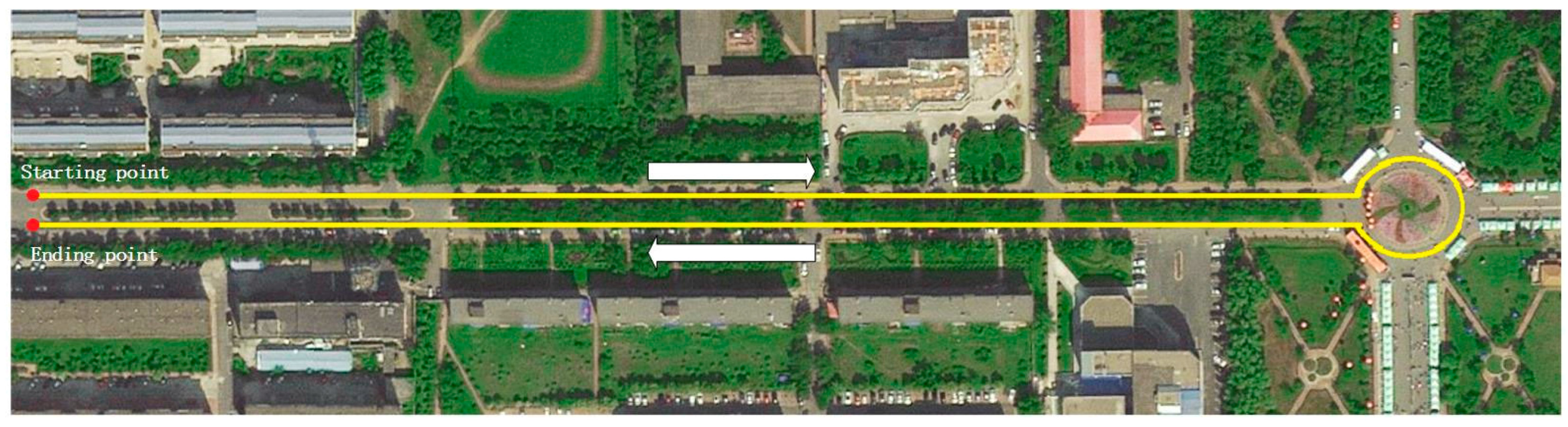

Figure 7 shows the experimental scenario and route.

In the experiments, $R_1$, $R_2$, $Q_1$, and $Q_2$ denote the noise covariances of the local filters LF1 and LF2; their initial values are 0.03, 0.1, 0.000001, and 0.000001, respectively. $R_1$ and $R_2$ are the measurement noise covariances of the Kalman filter model and are recalculated by IAE at each time step. $Q_1$ and $Q_2$ are constant and represent the system noise covariances of the Kalman filter model. The sliding window of the innovation sequence has a size of 30, and the significance level of the chi-square test is $\alpha = 0.005$, so the threshold is $\chi^2_{\alpha}(1) = 7.879$. We conducted three experiments to evaluate JAKF. In the first two, the forward car moves along a straight line at a constant and a varying acceleration, respectively. In the third, the forward car is permitted to change lanes and thus produces an obvious lateral displacement.
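The IAE update and the chi-square test described above can be sketched for a scalar (one-degree-of-freedom) measurement as follows. This is a minimal sketch, assuming the common innovation-based form $\hat{R}_k = \frac{1}{N}\sum_{j=k-N+1}^{k} v_j^2 - H P_k^- H^\top$ with window $N = 30$, and a normalized-innovation test against $\chi^2_{\alpha}(1)$; the class name `IaeR`, the positivity floor `r_min`, and keeping flagged innovations in the window are illustrative assumptions, not the paper's exact procedure. Because a chi-square variable with one degree of freedom is a squared standard normal, the threshold can be checked with the standard library alone.

```python
from collections import deque
from statistics import NormalDist


def chi2_threshold_df1(alpha):
    """Upper-tail chi-square critical value with 1 degree of freedom.

    chi2(1) = Z**2, so P(Z**2 > c) = alpha gives c = z_{1 - alpha/2} ** 2.
    """
    return NormalDist().inv_cdf(1.0 - alpha / 2.0) ** 2


class IaeR:
    """Hypothetical sliding-window IAE estimator of a scalar measurement noise variance R."""

    def __init__(self, r0, window=30, alpha=0.005, r_min=1e-6):
        self.r = r0                          # current estimate of R
        self.window = deque(maxlen=window)   # last N squared innovations
        self.threshold = chi2_threshold_df1(alpha)
        self.r_min = r_min                   # floor that keeps R positive

    def update(self, innovation, hph):
        """Feed innovation v_k and the predicted term H P^- H^T; return (R_hat, flagged)."""
        # Chi-square test on the normalized innovation squared, using the current R.
        nis = innovation ** 2 / (hph + self.r)
        flagged = nis > self.threshold
        # Innovation covariance over the window, minus the predicted-state contribution.
        self.window.append(innovation ** 2)
        c_v = sum(self.window) / len(self.window)
        self.r = max(c_v - hph, self.r_min)
        return self.r, flagged


if __name__ == "__main__":
    print(round(chi2_threshold_df1(0.005), 3))
```

With $\alpha = 0.005$ the helper returns approximately 7.879, the threshold quoted above. Subtracting $H P^- H^\top$ is what lets $\hat{R}$ rise when the sensor is disturbed and fall back when it recovers, while the floor avoids a non-positive variance when the window happens to contain small innovations.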

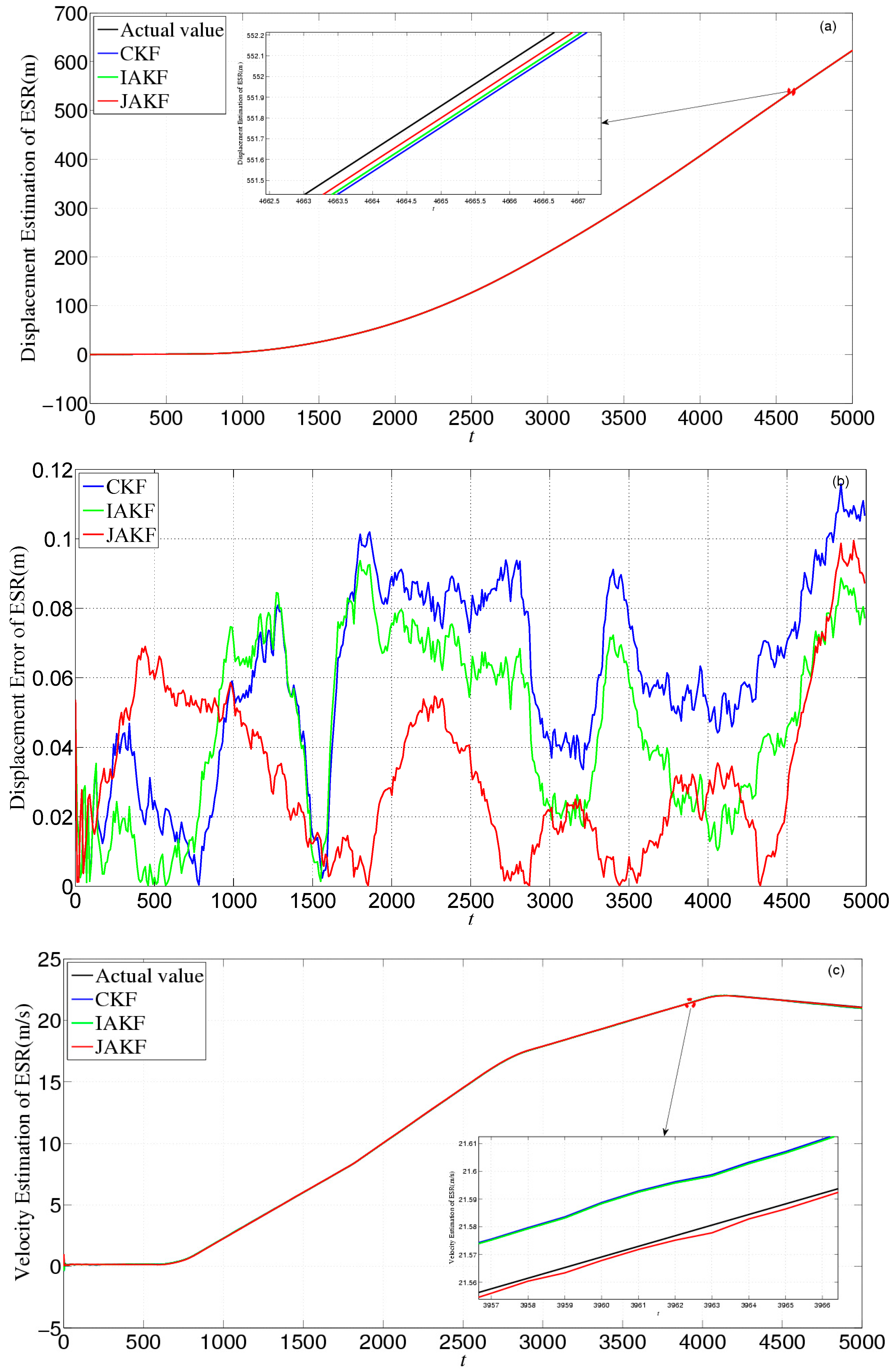

In the first experiment, the forward car shifts visibly only in the vertical direction, so we focus only on its longitudinal motion information. The initial longitudinal velocity, acceleration, and displacement of the forward vehicle are 5.43 m/s, 0.18 m/s², and 0 m, respectively. The test car follows the forward car to ensure that the sensors can detect it. We collected 5000 samples at 100 Hz.

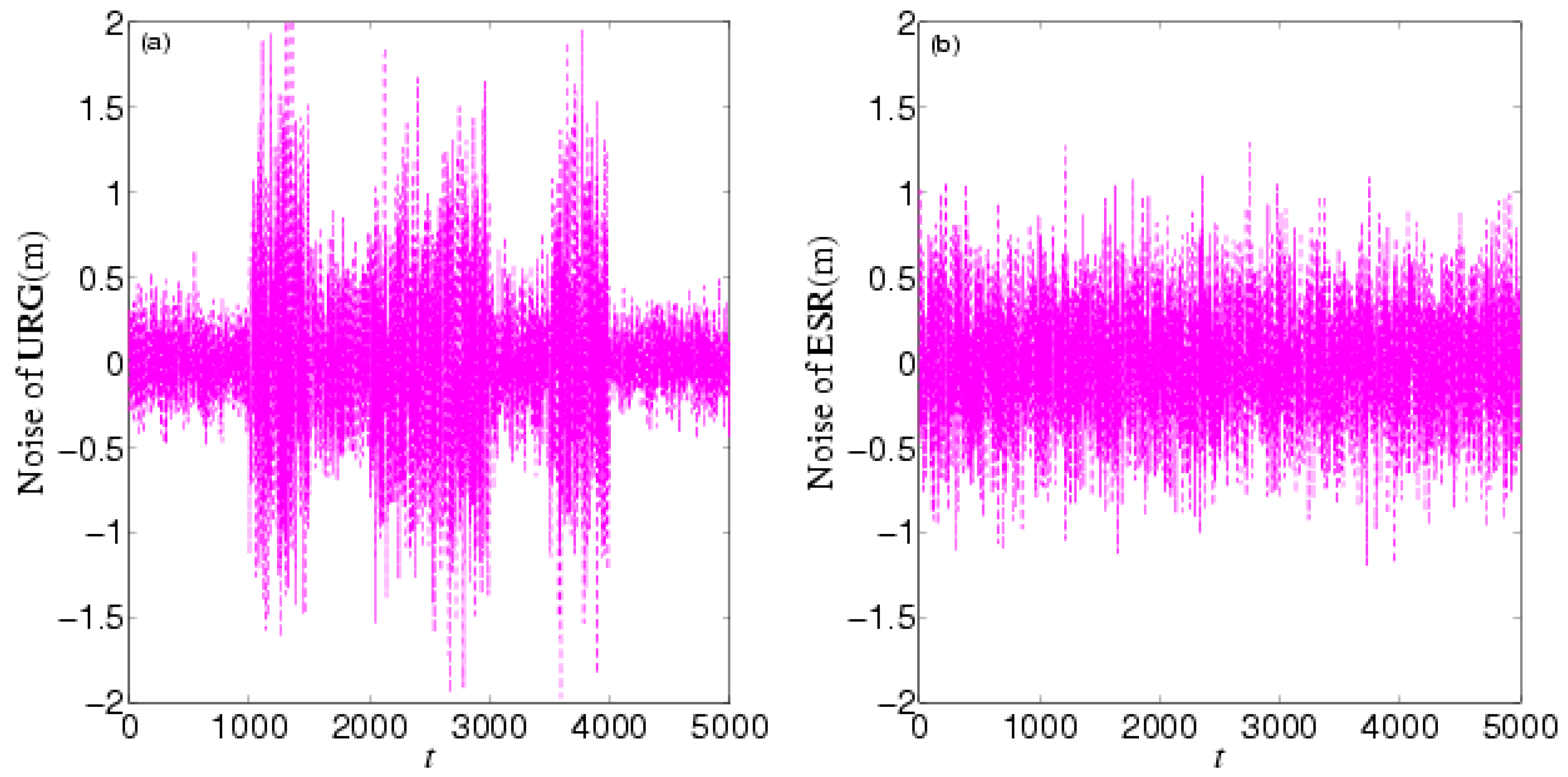

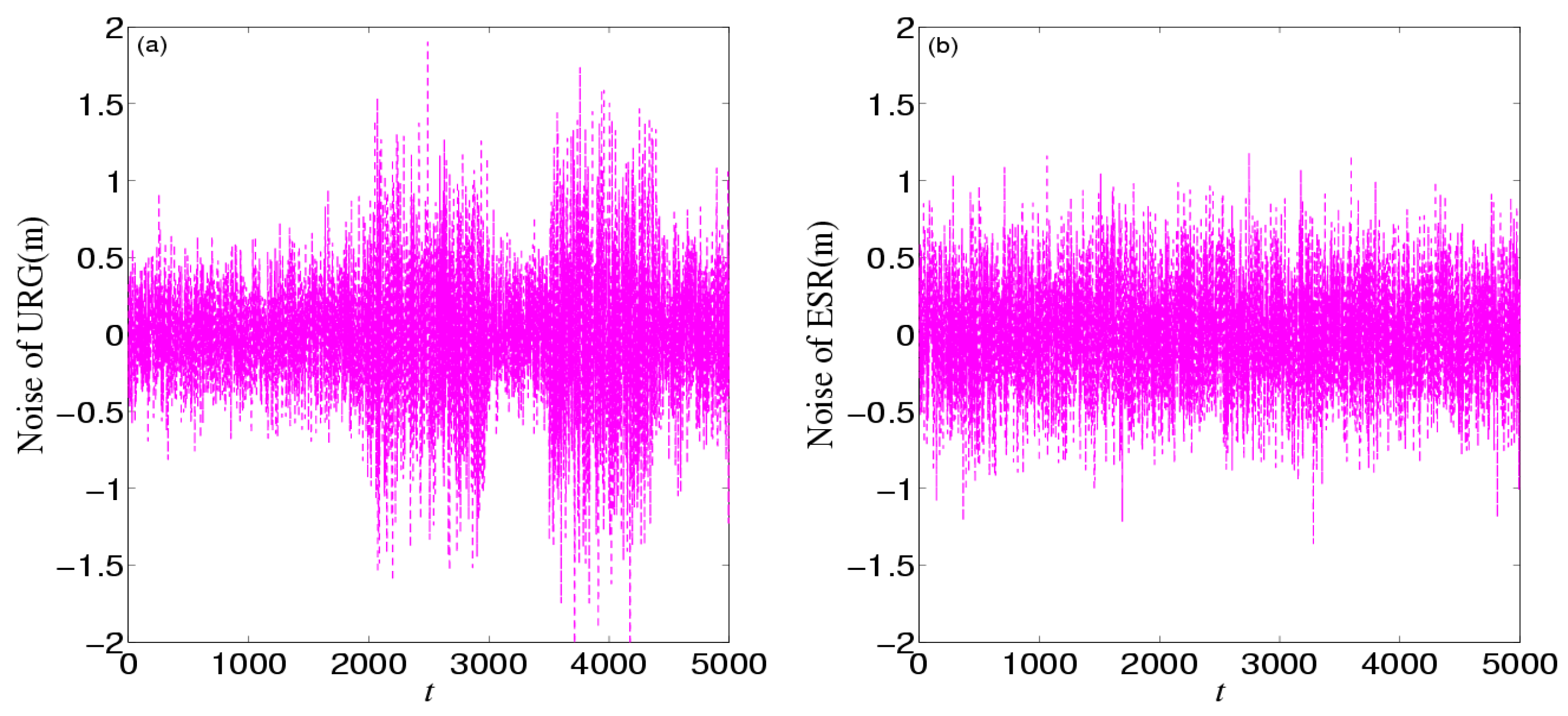

Figure 8 shows the noises of URG and ESR, respectively. URG suffers from continuous high noise within the 1000th~1500th, 2000th~3000th, and 3500th~4000th time slots, while the noise of ESR remains steady throughout. However, when URG works well, during the 1500th~2000th, 3000th~3500th, and 4000th~5000th time slots, it has a smaller error than ESR.

Figure 9 shows the measurement noise V-C matrix $R$ of the local-filter outputs of URG and ESR, which indicates that $R$ in JAKF adapts to the variation of the noise.

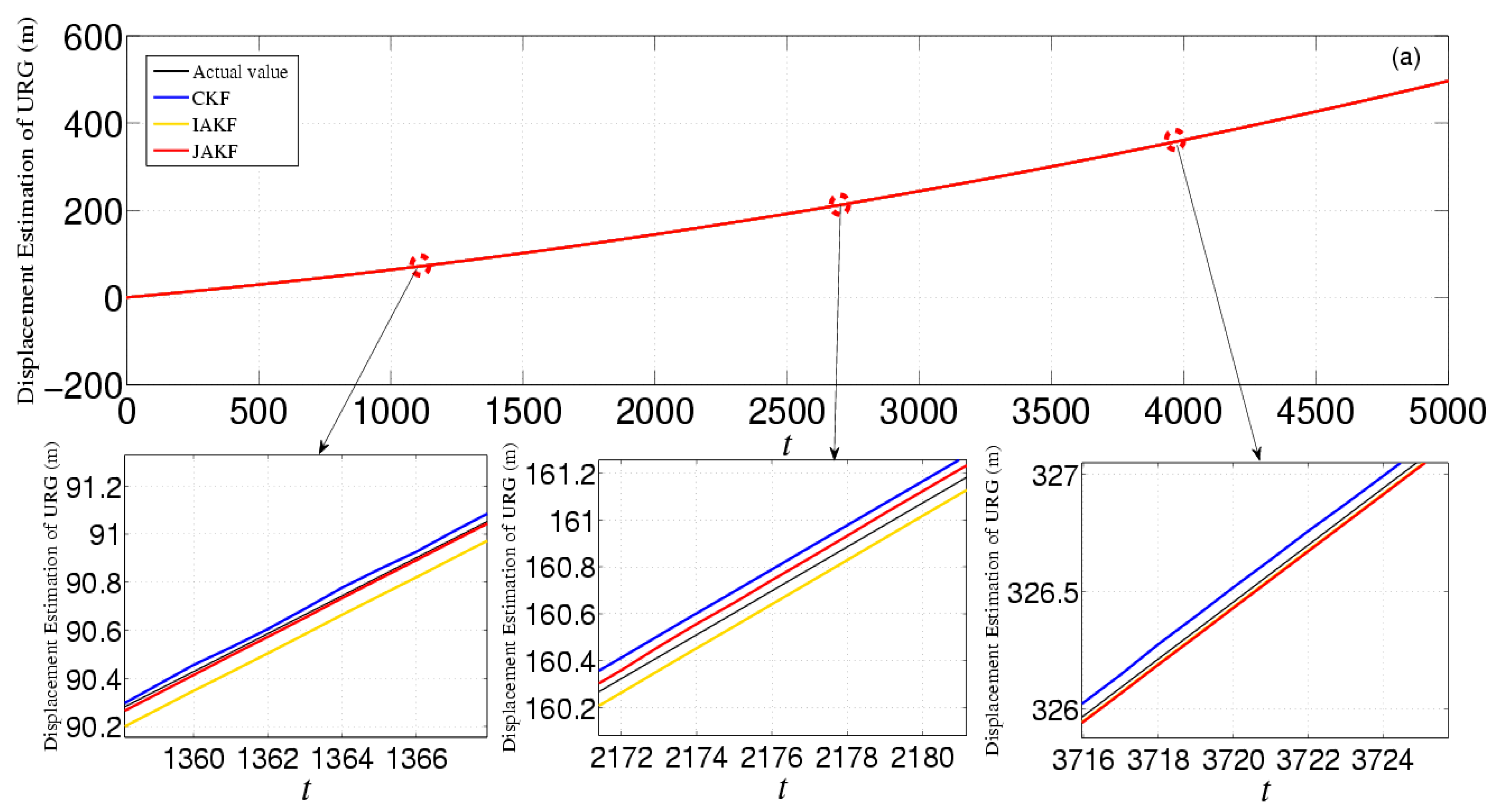

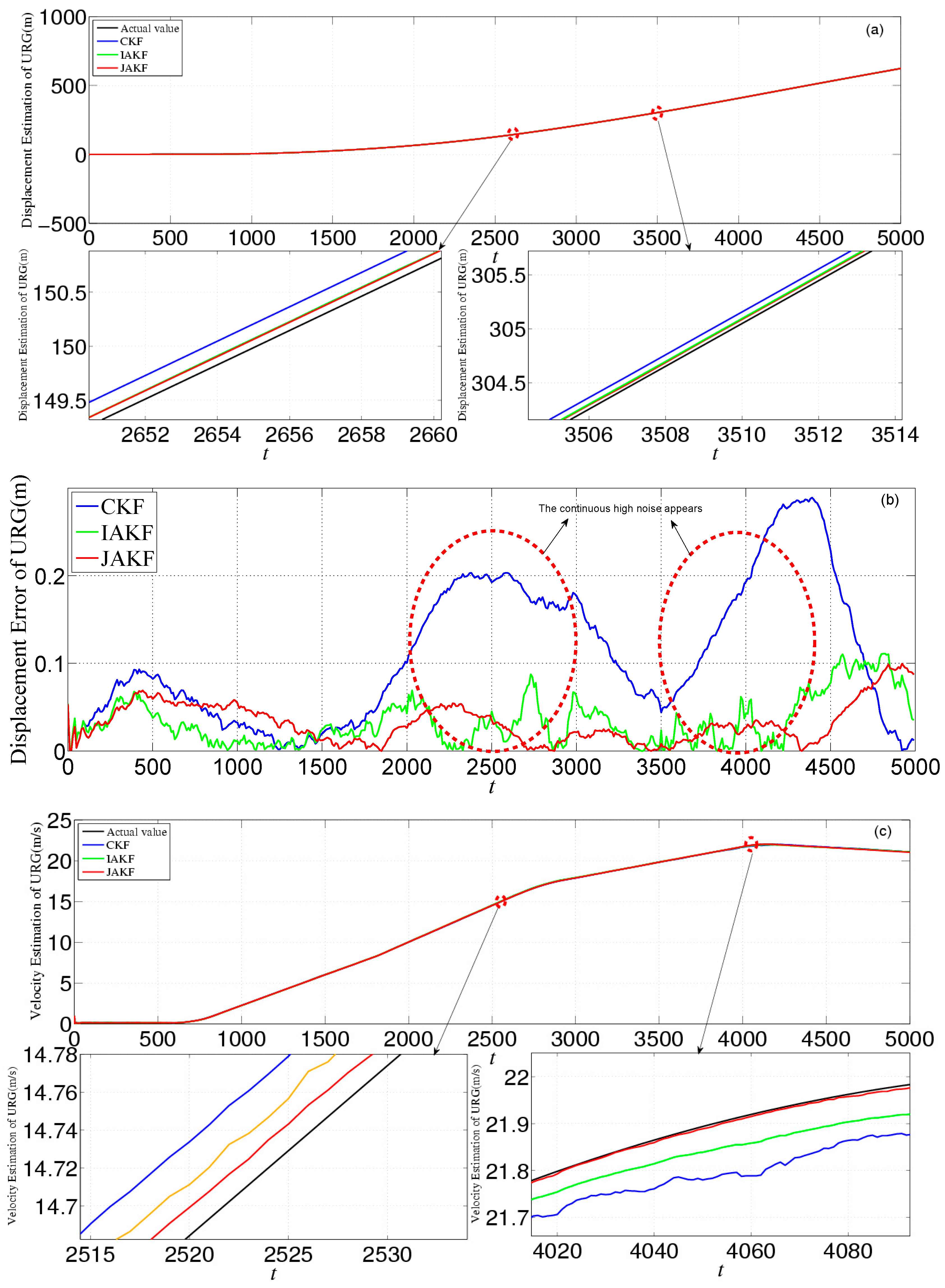

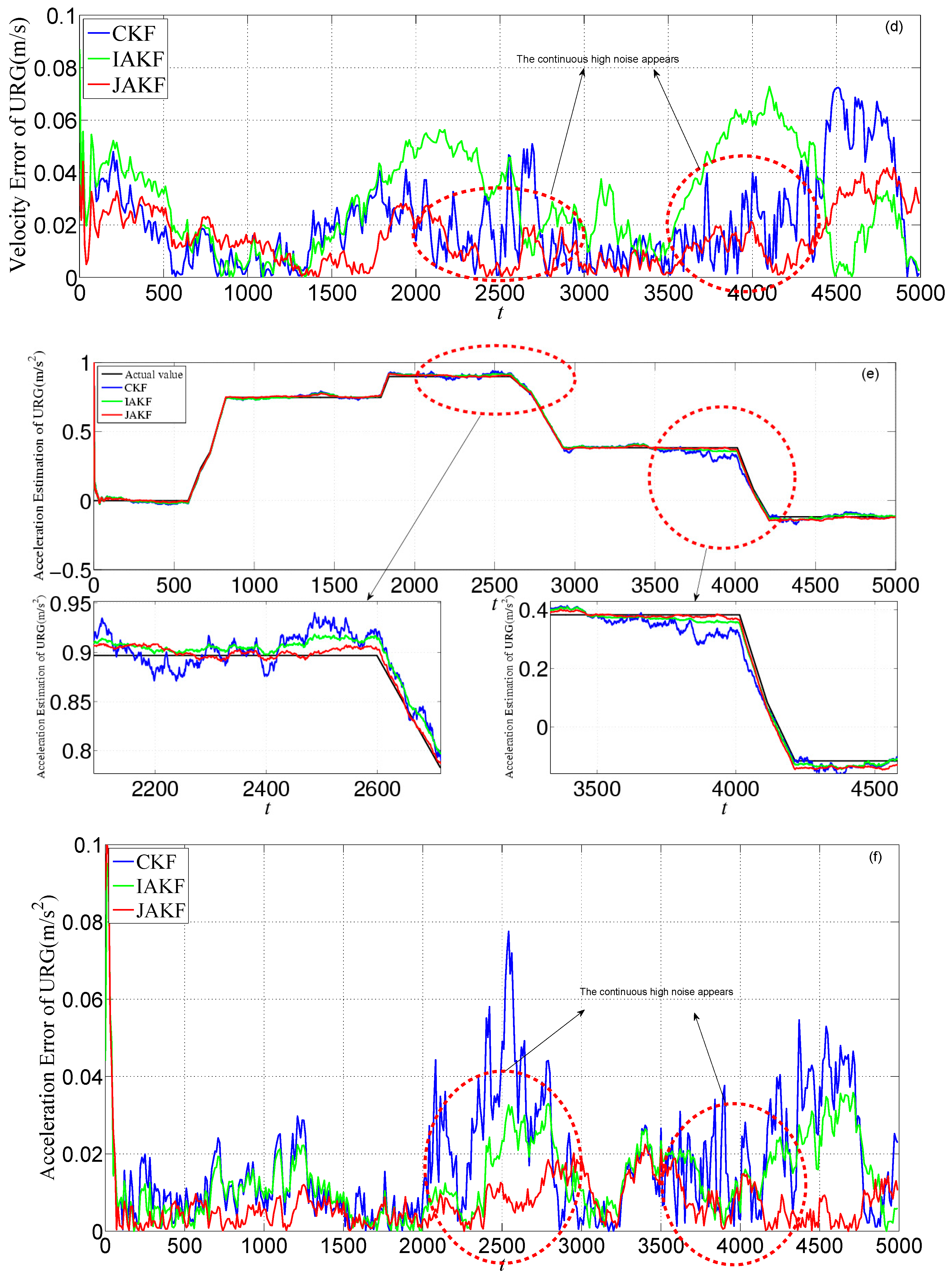

Figure 10 compares the estimates and errors for URG. The estimation error is the absolute value of the difference between the actual and estimated values in the same time slot. After the 500th time slot, all three filters converge to a stable state. When the measurement noise on URG is normal, all three filtering algorithms produce normal results. However, when the continuous high noise appears during the 1000th~1500th, 2500th~3000th, and 3500th~4000th time slots, the noise variance $R$ of URG increases. In this case, CKF cannot cope with the continuous severe disturbance, while JAKF still achieves higher accuracy than CKF and IAKF. At the 3051st time slot, the absolute error of the longitudinal displacement is about 0.2665 m in CKF, 0.0826 m in IAKF, and 0.0392 m in JAKF. At the 3023rd time slot, the error of the longitudinal velocity is about 0.0826 m/s in CKF, 0.0725 m/s in IAKF, and 0.0222 m/s in JAKF. At the 3001st time slot, the error of the longitudinal acceleration is about 0.0560 m/s² in CKF, 0.0298 m/s² in IAKF, and 0.0148 m/s² in JAKF.

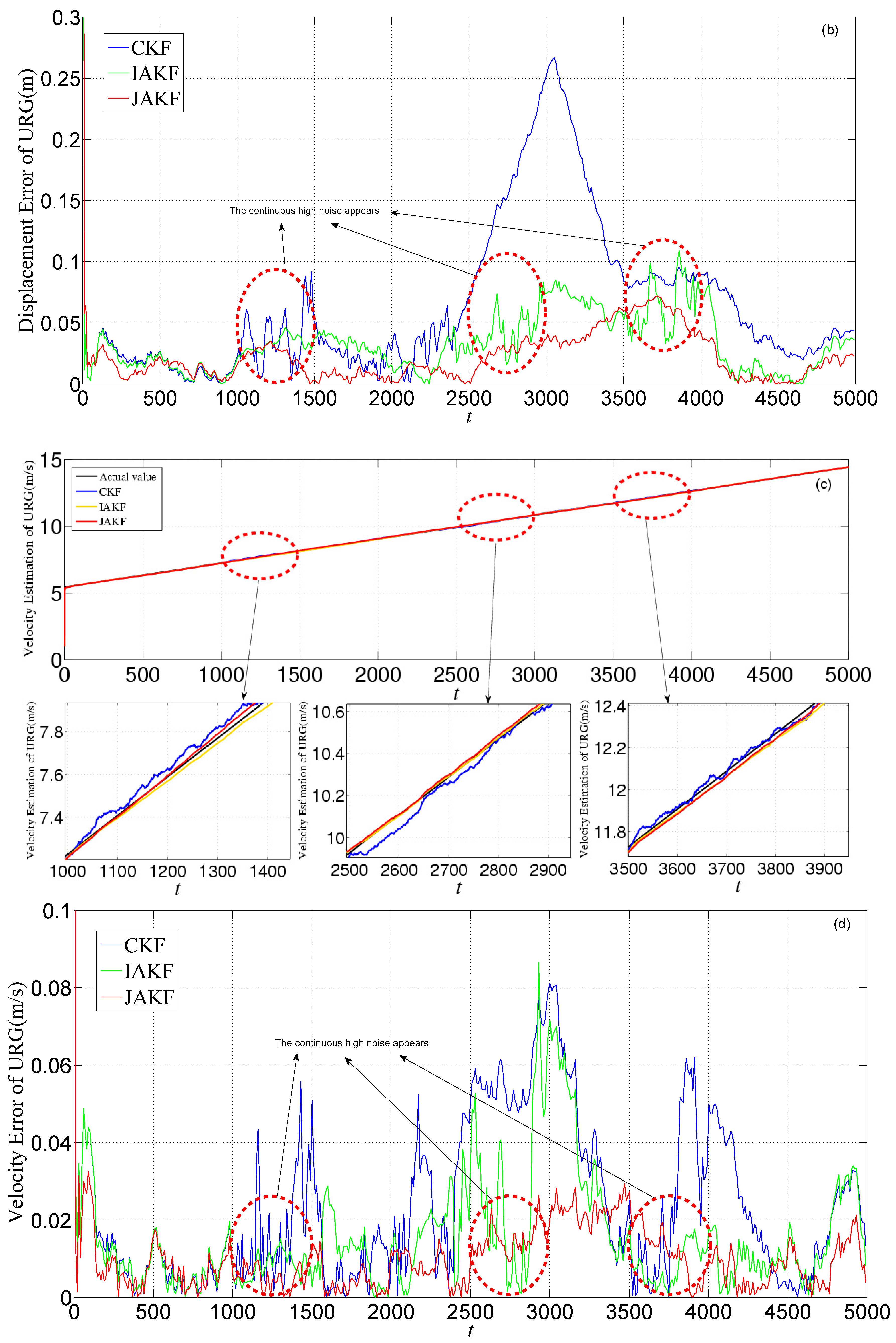

Figure 11 compares the estimates and errors for ESR. After the filters converge, the measured noises of all three filters fluctuate within the theoretical range. The errors of CKF and IAKF are very similar, while JAKF still performs best in terms of accuracy. At the 3571st time slot, the error of the longitudinal displacement is about 0.0649 m in JAKF, compared with 0.1147 m in CKF and 0.1034 m in IAKF. At the 3466th time slot, the error of the longitudinal velocity is about 0.0626 m/s in CKF, 0.0605 m/s in IAKF, and 0.0289 m/s in JAKF. At the 4776th time slot, the error of the longitudinal acceleration is about 0.0046 m/s² in JAKF, compared with 0.0297 m/s² in CKF and 0.0257 m/s² in IAKF.

Table 3 compares CKF, IAKF, and JAKF in terms of the root-mean-square (RMS) error, maximum error, and variance of the filtered results. In Table 3, the RMS error and variance of JAKF are smaller than those of CKF and IAKF, so JAKF has better stability and fault tolerance against continuously varying noise, and its accuracy is higher than that of the single-sensor filters when the acceleration of the forward car is constant.
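The three metrics reported in Tables 3 to 5 can be computed per state component as sketched below. This sketch rests on two assumptions: the error is the estimate minus the CAN-bus benchmark, and "variance" is read as the population variance of the estimation error (the paper may instead compute it over the filtered values themselves). The maximum error is the largest of the per-slot absolute errors of the kind quoted above; `error_metrics` is a hypothetical helper name.

```python
import math


def error_metrics(estimates, truth):
    """Return (RMS error, maximum absolute error, error variance) for one state component.

    Assumption: 'variance' is the population variance of the estimation error.
    """
    if len(estimates) != len(truth) or not estimates:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [e - t for e, t in zip(estimates, truth)]
    n = len(errors)
    rmse = math.sqrt(sum(x * x for x in errors) / n)
    max_abs = max(abs(x) for x in errors)          # largest per-slot absolute error
    mean = sum(errors) / n
    var = sum((x - mean) ** 2 for x in errors) / n
    return rmse, max_abs, var


if __name__ == "__main__":
    # Toy sequences for illustration only, not data from the experiments.
    print(error_metrics([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))
```

Reporting the variance alongside the RMS error separates a constant bias (which inflates RMS error but not variance) from fluctuation, which is why a filter can be judged on both accuracy and stability.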

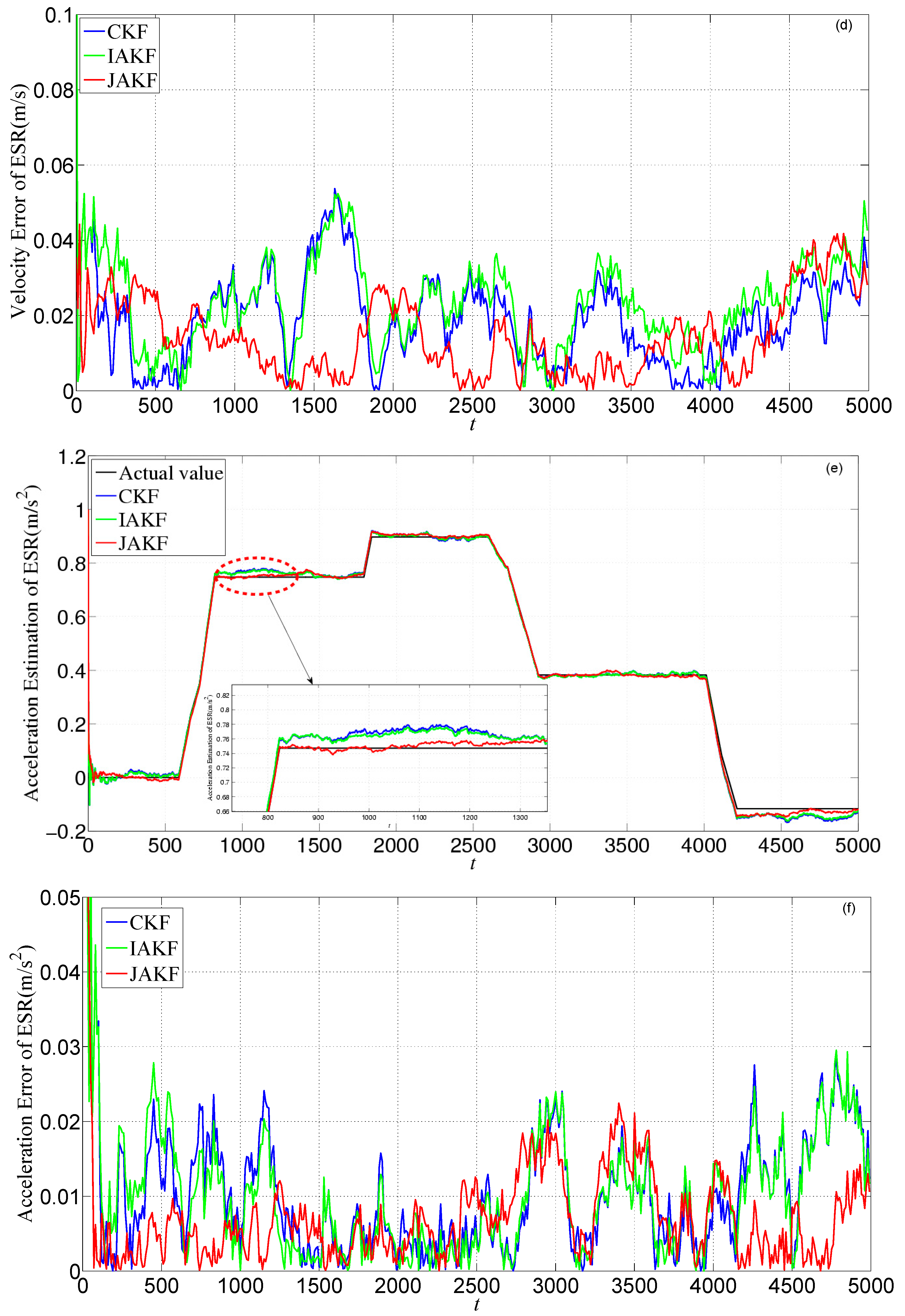

In the second experiment, the forward car moves along a straight line at a varying acceleration. As in the first experiment, we focus only on the longitudinal motion. The initial longitudinal velocity, acceleration, and displacement of the forward vehicle are 0.15 m/s, 0 m/s², and 0 m, respectively. The test car follows the forward car to ensure that the sensors can detect it. We collected 5000 samples at 100 Hz.

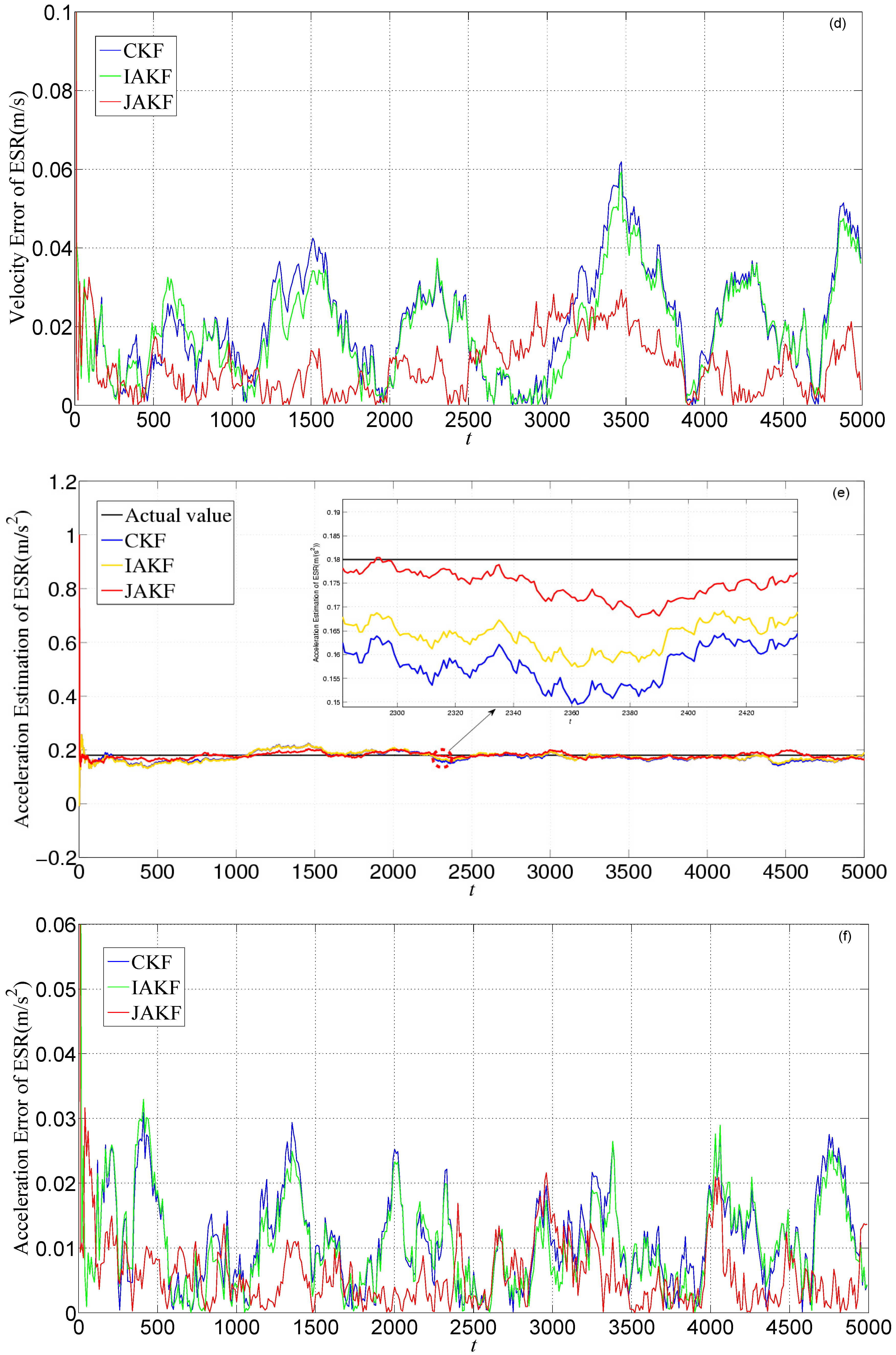

Figure 12 shows the noises of URG and ESR. URG suffers from continuous high noise within the 2000th~3000th and 3500th~4400th time slots, while the noise of ESR remains steady throughout.

Figure 13 shows the measurement noise V-C matrix $R$ of the local-filter outputs of URG and ESR.

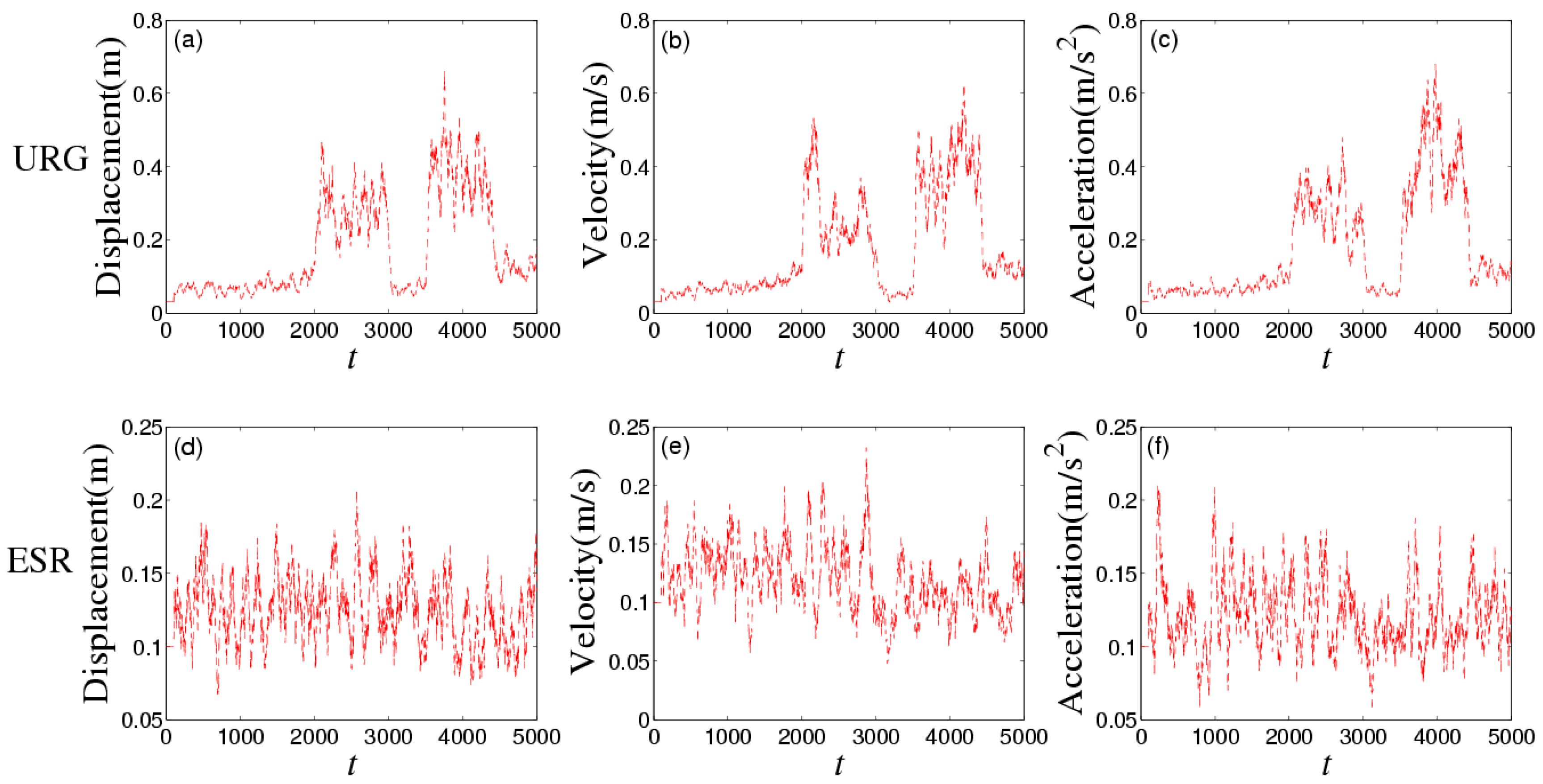

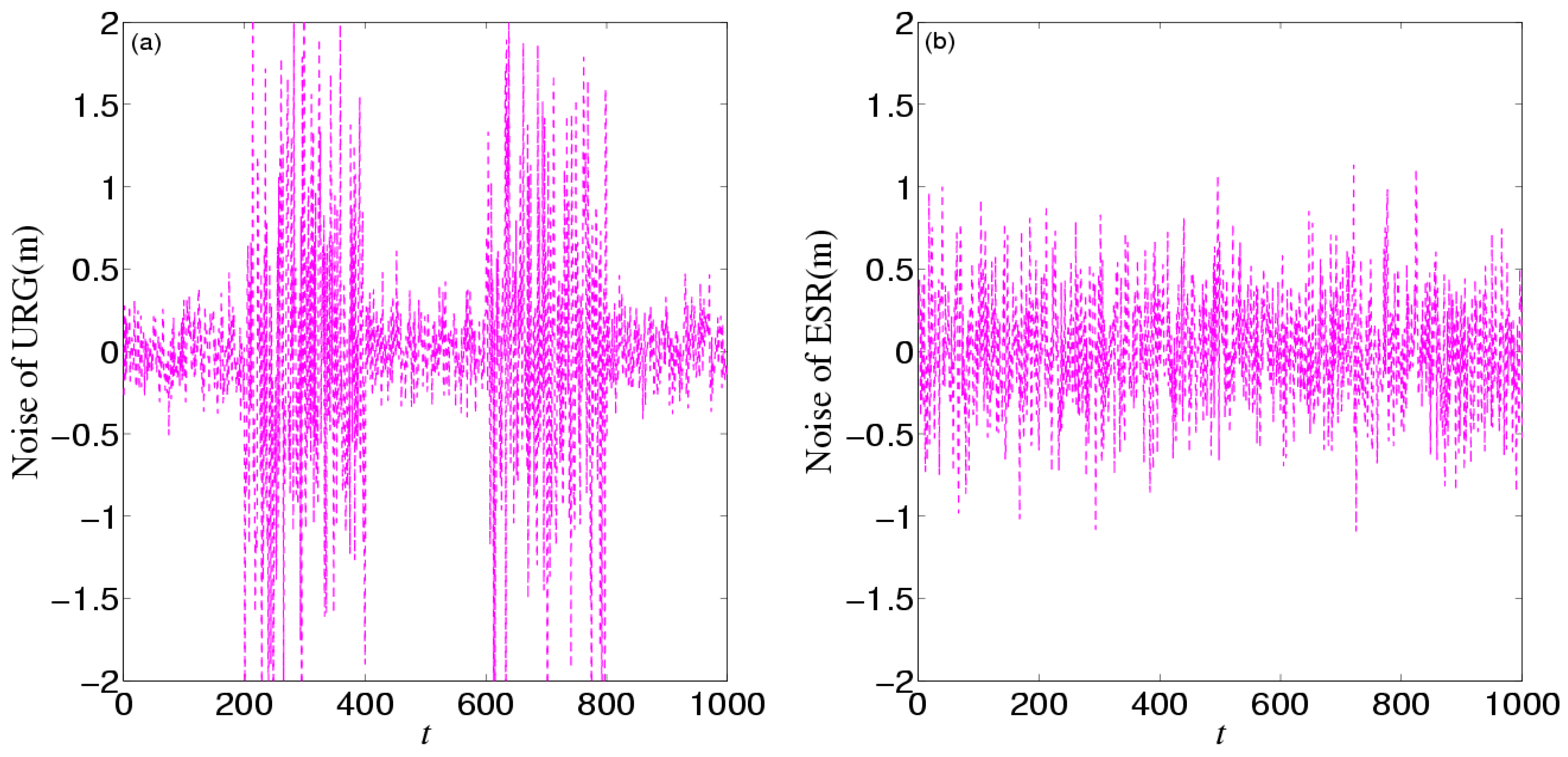

Figure 14 compares the estimates and errors for URG. Continuous high noise appears during the 2000th~3000th and 3500th~4400th time slots, during which CKF diverges badly while JAKF remains convergent. For example, at the 2712th time slot, the absolute error of the longitudinal displacement is about 0.1486 m in CKF, 0.0998 m in IAKF, and 0.0152 m in JAKF; the error of the longitudinal velocity is about 0.0513 m/s in CKF, 0.0226 m/s in IAKF, and 0.0185 m/s in JAKF; and the error of the longitudinal acceleration is about 0.0486 m/s² in CKF, 0.0375 m/s² in IAKF, and 0.0151 m/s² in JAKF.

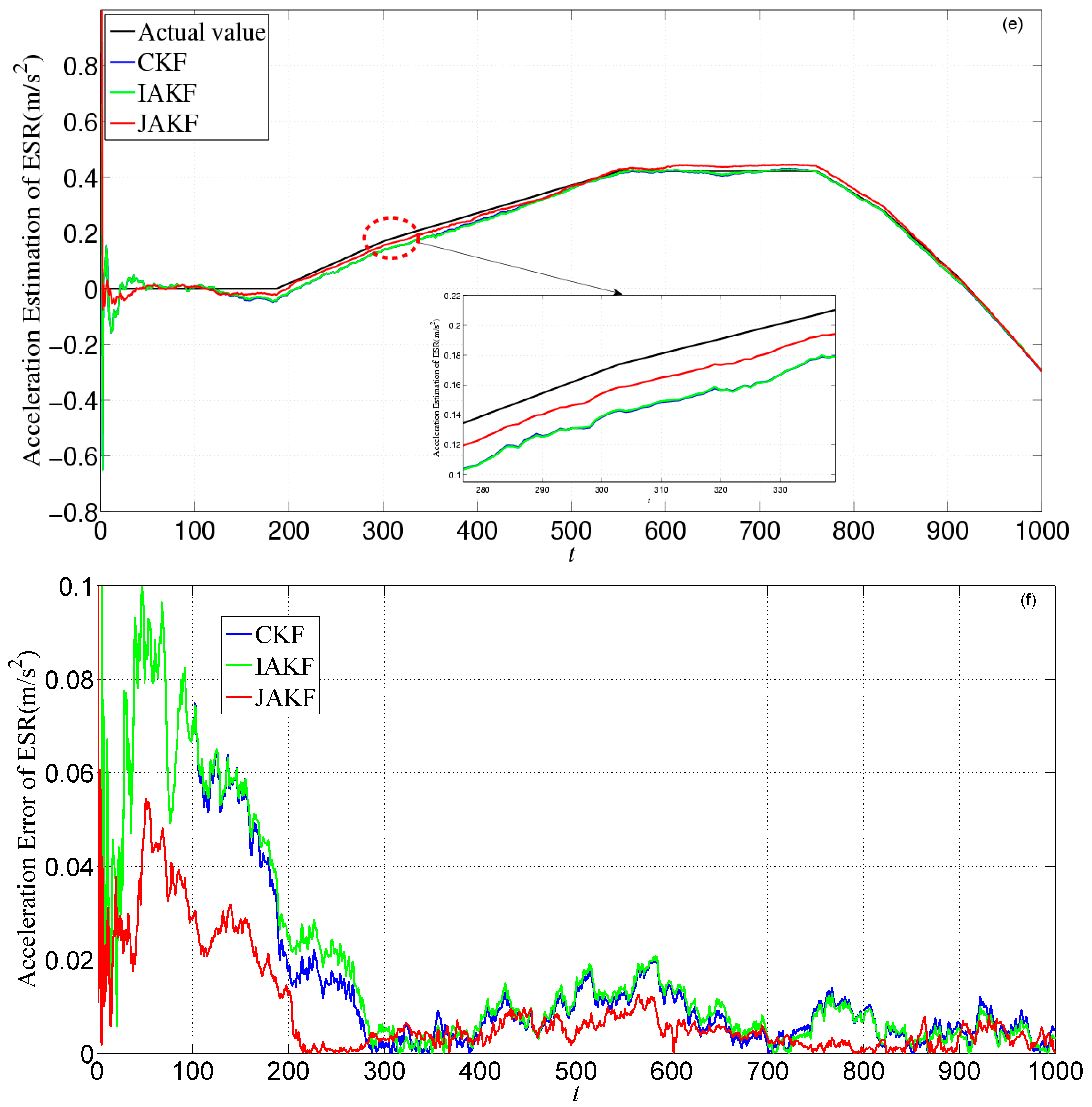

Figure 15 compares the estimates and errors for ESR. After the filters converge, the measured noises of all three filters fluctuate within the theoretical range. The errors of CKF and IAKF are again very similar, while JAKF performs best in terms of accuracy during most time slots. At the 2256th time slot, the error of the displacement is about 0.0482 m in JAKF, compared with 0.0883 m in CKF and 0.0752 m in IAKF; the error of the velocity is about 0.0326 m/s in CKF, 0.0305 m/s in IAKF, and 0.0189 m/s in JAKF; and the error of the acceleration is about 0.0046 m/s² in JAKF, compared with 0.062 m/s² in CKF and 0.057 m/s² in IAKF.

Table 4 compares CKF, IAKF, and JAKF in terms of the RMS error, maximum error, and variance of the filtered results. As Table 4 shows, JAKF retains high accuracy and stability even though the motion model of the forward car is dynamic. In short, a varying acceleration has little influence on JAKF.

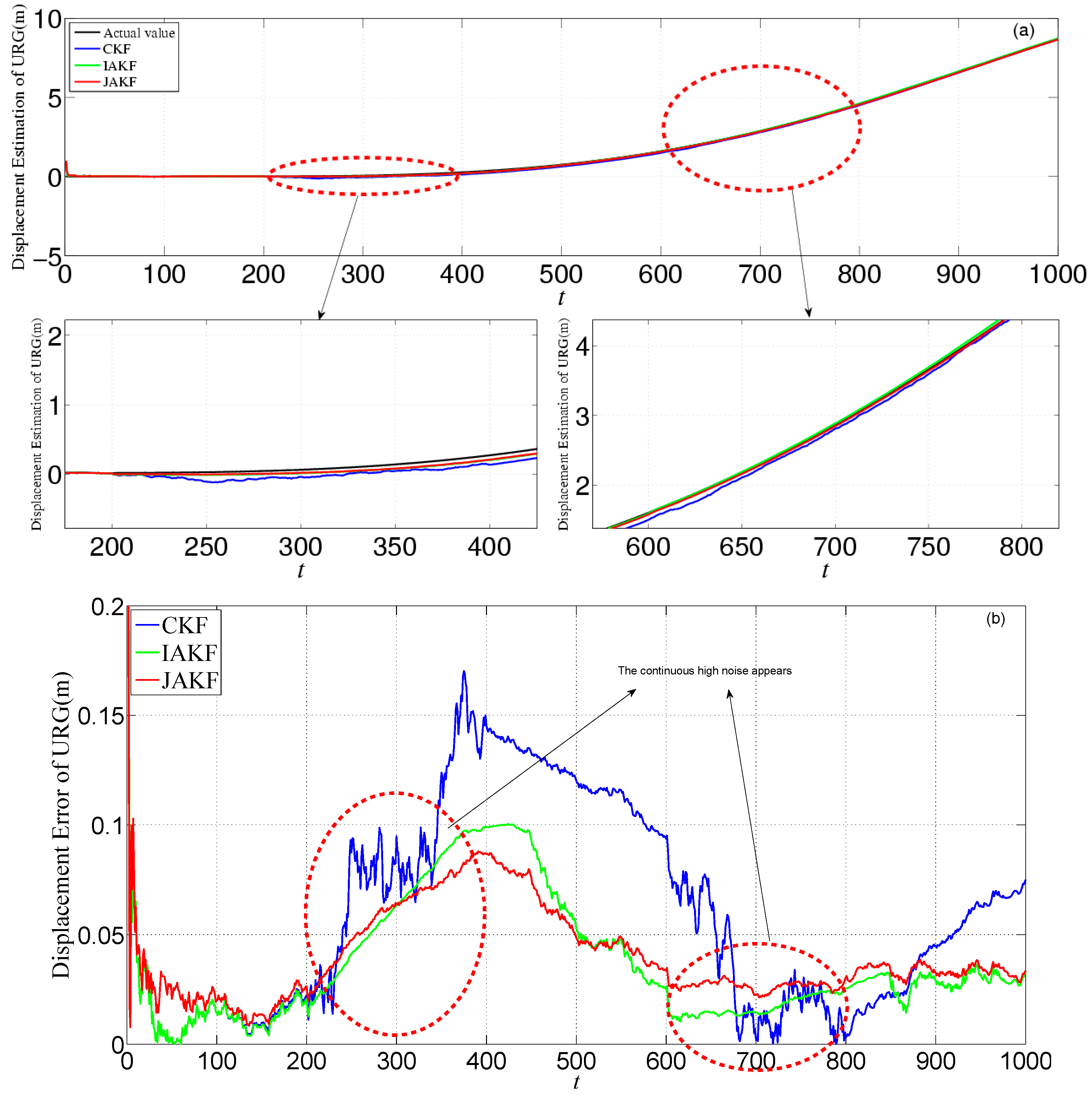

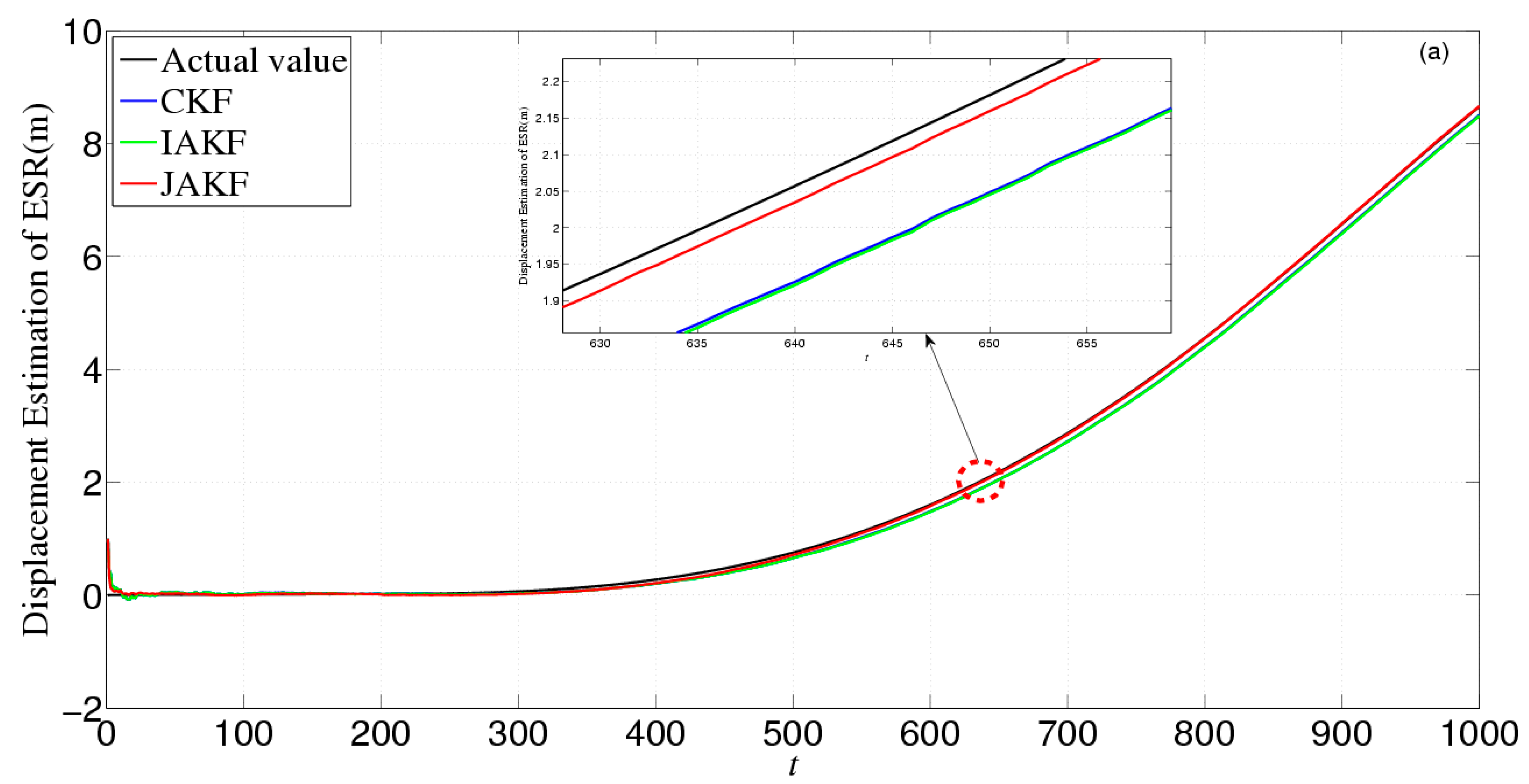

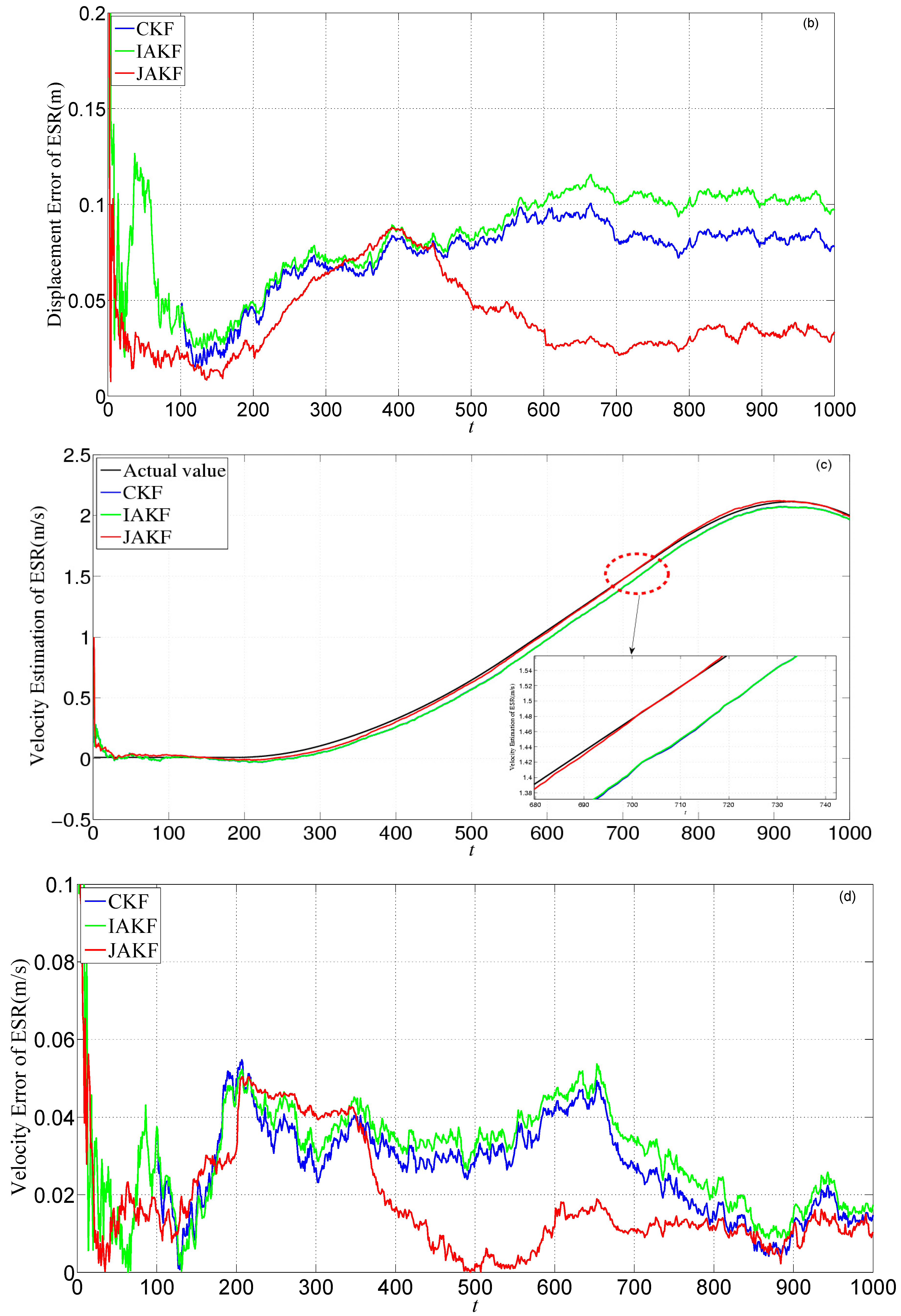

In the third experiment, the forward car is permitted to change lanes and thus produces an obvious lateral displacement, so we mainly focus on its transverse motion. The initial transverse velocity, acceleration, and displacement of the forward vehicle are 0 m/s, 0 m/s², and 0 m, respectively. Throughout the experiment, the test car moves along a straight line and keeps a gap of about 3 m~8 m to the forward car to ensure that the sensors can detect it. We collected 1000 samples at 100 Hz. We fed the transverse motion information to CKF, IAKF, and JAKF and compared the transverse results with the longitudinal results of the first two experiments.

Figure 16 shows the noises of URG and ESR. URG suffers from continuous high noise within the 200th~400th and 600th~800th time slots, while the noise of ESR remains steady.

Figure 17 shows the measurement noise V-C matrix $R$ of the local-filter outputs of URG and ESR.

Figure 18 compares the estimates and errors for URG. Continuous high noise appears during the 200th~400th and 600th~800th time slots, during which CKF fails to cope with it, while JAKF remains convergent. For example, at the 372nd time slot, the error of the longitudinal displacement is about 0.178 m in CKF, 0.0995 m in IAKF, and 0.0852 m in JAKF; the error of the longitudinal velocity is about 0.1062 m/s in CKF, 0.0481 m/s in IAKF, and 0.0423 m/s in JAKF; and the error of the longitudinal acceleration is about 0.0341 m/s² in CKF, 0.0105 m/s² in IAKF, and 0.01 m/s² in JAKF.

Figure 19 compares the estimates and errors for ESR. After the filters converge, the measured noises of all three filters fluctuate within the theoretical range. The errors of CKF and IAKF are very similar, while JAKF still performs best in terms of accuracy. For example, at the 600th time slot, the error of the displacement is about 0.0412 m in JAKF, compared with 0.0954 m in CKF and 0.0986 m in IAKF; the error of the velocity is about 0.0428 m/s in CKF, 0.0402 m/s in IAKF, and 0.0125 m/s in JAKF; and the error of the acceleration is about 0.0081 m/s² in JAKF, compared with 0.0092 m/s² in CKF and 0.0095 m/s² in IAKF. Therefore, the accuracy of JAKF remains stable when the forward car is permitted to change lanes.

Table 5 compares CKF, IAKF, and JAKF in terms of the RMS error, maximum error, and variance of the filtered results. Compared with the first two experiments, the advantages of JAKF in accuracy and stability shown in Table 5 decline slightly, but JAKF still outperforms CKF and IAKF.

Table 6 summarizes the accuracy improvement ratios of JAKF in the three experiments.
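Table 6 is not reproduced here, and this section does not state how its improvement ratio is defined. Assuming the common relative-reduction form $(e_{\text{ref}} - e_{\text{JAKF}})/e_{\text{ref}}$, the sketch below applies it to the point errors reported for the longitudinal displacement at the 3051st time slot of the first experiment (URG). These are single-slot values used for illustration, not the aggregate figures in the table; `improvement_ratio` is a hypothetical helper.

```python
def improvement_ratio(baseline_error, jakf_error):
    """Relative error reduction of JAKF against a baseline filter, as a fraction.

    Assumed definition: (baseline - JAKF) / baseline; Table 6 may define it differently.
    """
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline_error - jakf_error) / baseline_error


if __name__ == "__main__":
    # Point errors (m) at the 3051st time slot, first experiment, URG.
    for name, err in [("CKF", 0.2665), ("IAKF", 0.0826)]:
        print(f"JAKF vs {name}: {improvement_ratio(err, 0.0392):.1%}")
```

With these inputs the script reports a reduction of about 85.3% against CKF and 52.5% against IAKF at that single time slot.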

In summary, JAKF extends the single-sensor adaptive filter by integrating the respective advantages of Lidar and Radar. It uses the noise variance $R$ and the information allocation factor $\beta$ to adjust the filtered results of the local and global filters, respectively, and thus improves fault tolerance and accuracy.
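The role of the information allocation factor can be illustrated with the standard federated Kalman filter fusion and feedback step. This form is an assumption, since this section does not restate the JAKF equations: the global estimate combines the local estimates weighted by their information, $P_g^{-1} = \sum_i P_i^{-1}$ and $\hat{x}_g = P_g \sum_i P_i^{-1}\hat{x}_i$, and each local filter is then reset to $\hat{x}_g$ with covariance $P_g/\beta_i$, where $\sum_i \beta_i = 1$. The sketch handles one scalar state component and ignores cross-covariances; the function names and example numbers are hypothetical.

```python
def fuse(local_estimates):
    """Information-weighted fusion of scalar local estimates [(x_i, P_i), ...] -> (x_g, P_g)."""
    info = sum(1.0 / p for _, p in local_estimates)
    p_g = 1.0 / info
    x_g = p_g * sum(x / p for x, p in local_estimates)
    return x_g, p_g


def reset_locals(x_g, p_g, betas):
    """Feed the global result back: each local filter gets x_i = x_g and P_i = P_g / beta_i."""
    if any(b <= 0 for b in betas) or abs(sum(betas) - 1.0) > 1e-9:
        raise ValueError("allocation factors must be positive and sum to 1")
    return [(x_g, p_g / b) for b in betas]


if __name__ == "__main__":
    # Hypothetical Lidar and Radar displacement estimates (m) with variances (m²).
    x_g, p_g = fuse([(10.02, 0.01), (10.10, 0.04)])
    print(round(x_g, 3), round(p_g, 3))
    print(reset_locals(x_g, p_g, [0.5, 0.5]))
```

Because $\sum_i \beta_i / P_g = 1/P_g$, fusing the reset local filters reproduces the same global covariance, so $\beta$ redistributes the global information among the local filters without creating or losing any.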