Robust Behavior Recognition in Intelligent Surveillance Environments

Abstract

1. Introduction

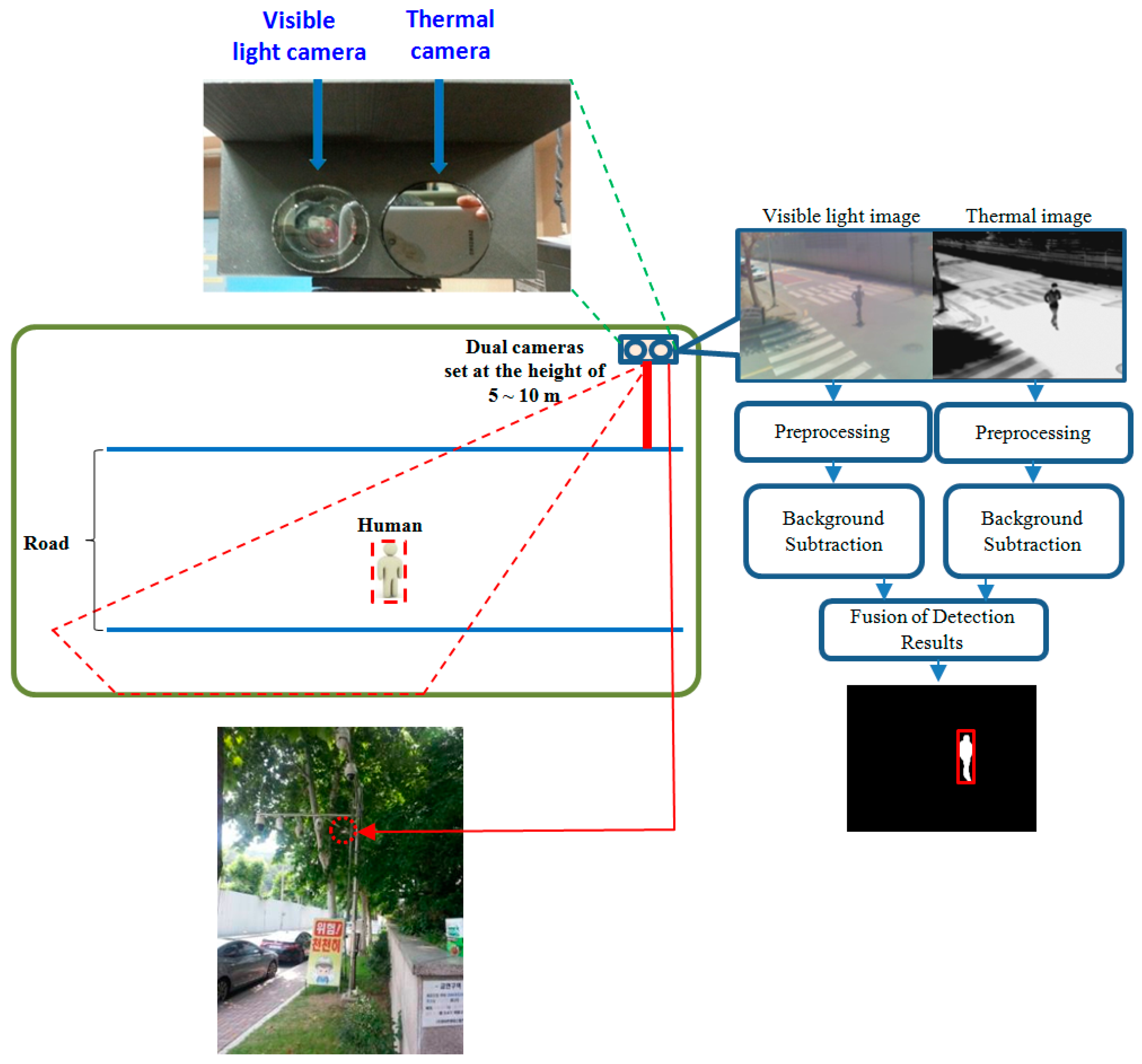

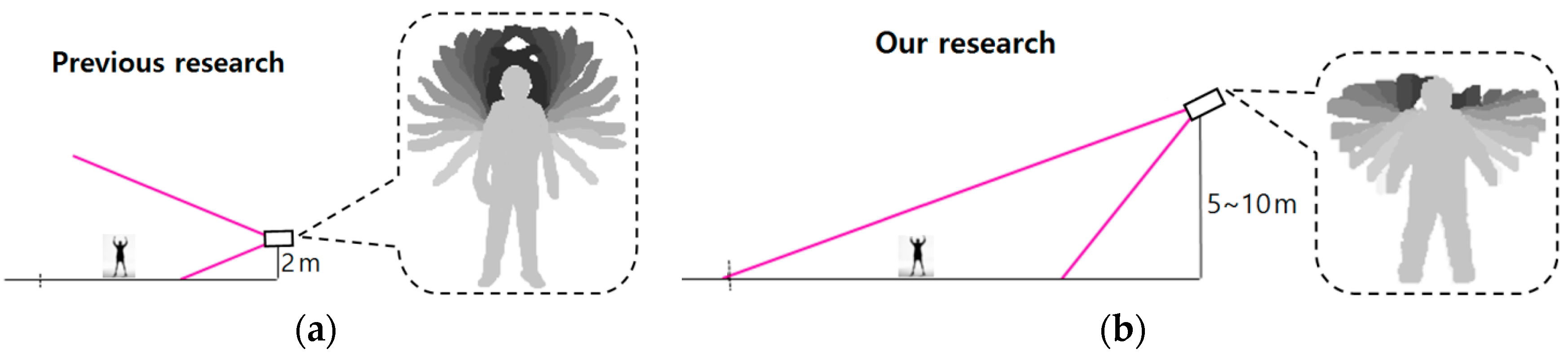

- Unlike previous research, in which the camera is installed at human height, we study behavior recognition in typical surveillance settings, where the camera is installed much higher than a human. Behavior recognition is usually more difficult in this case because the camera looks down on the subjects.

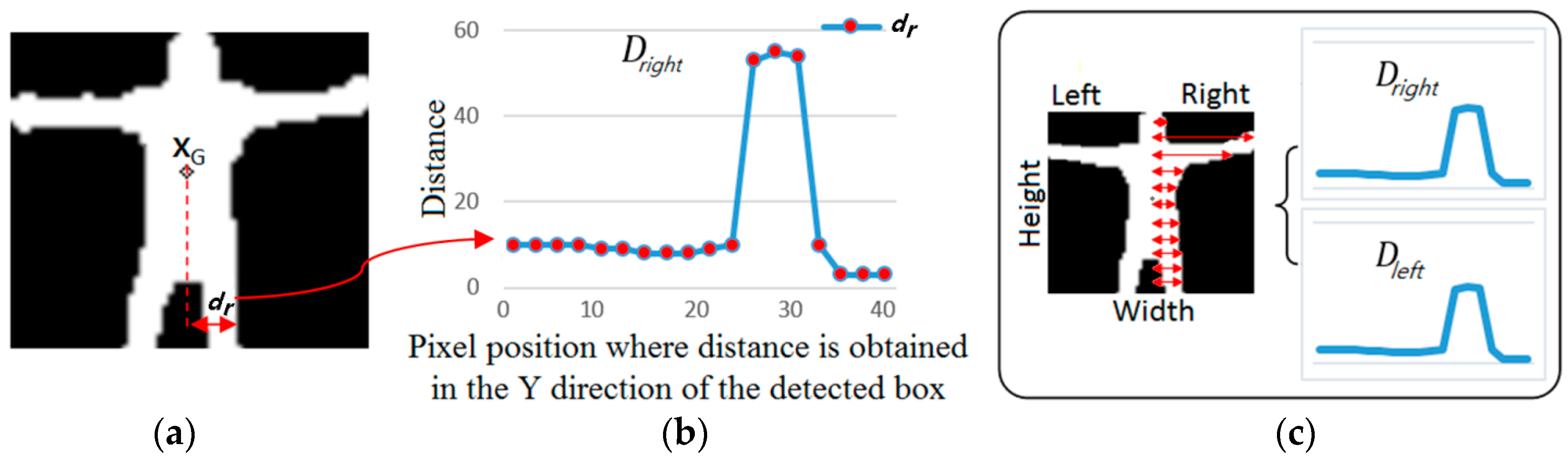

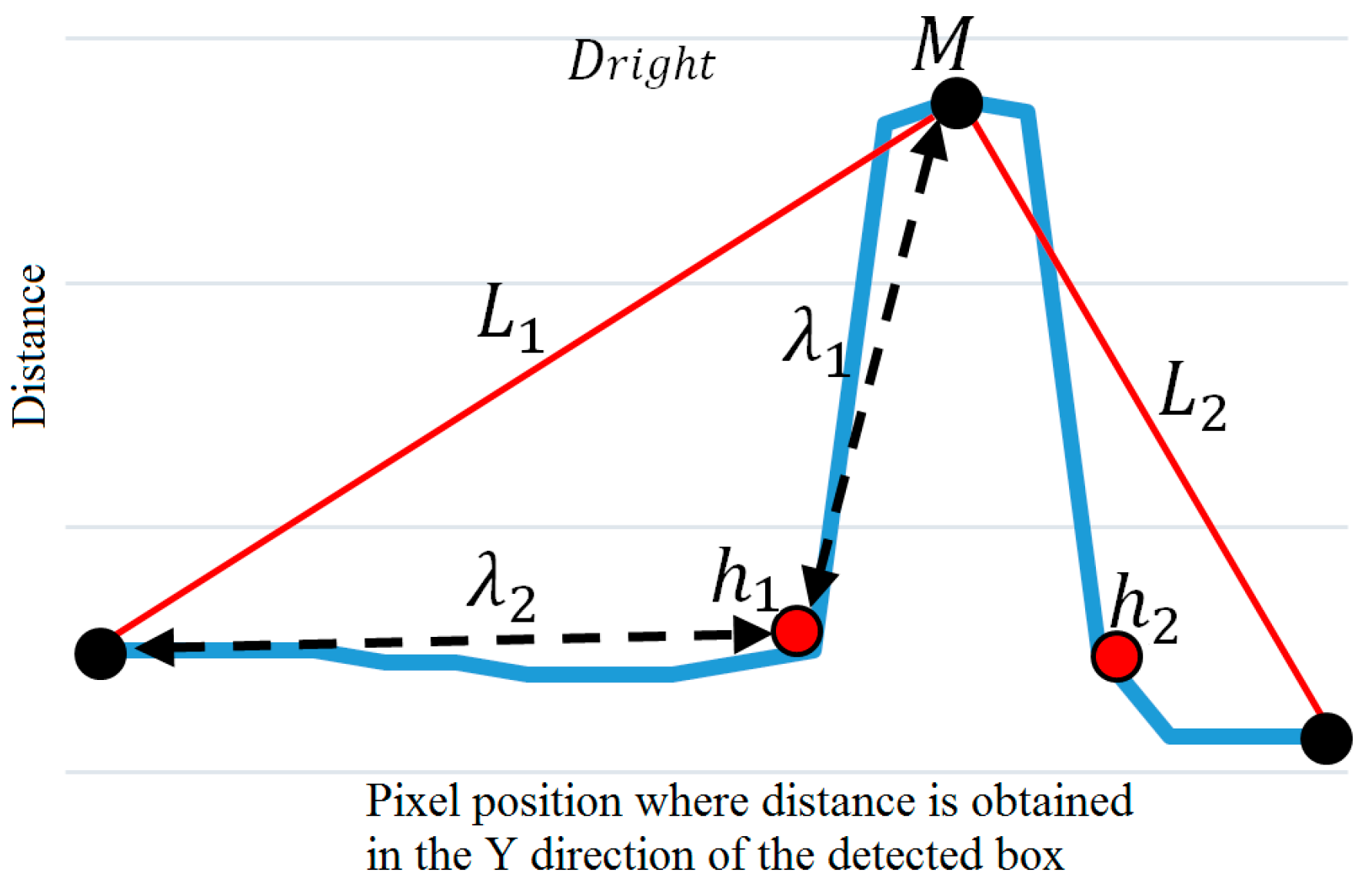

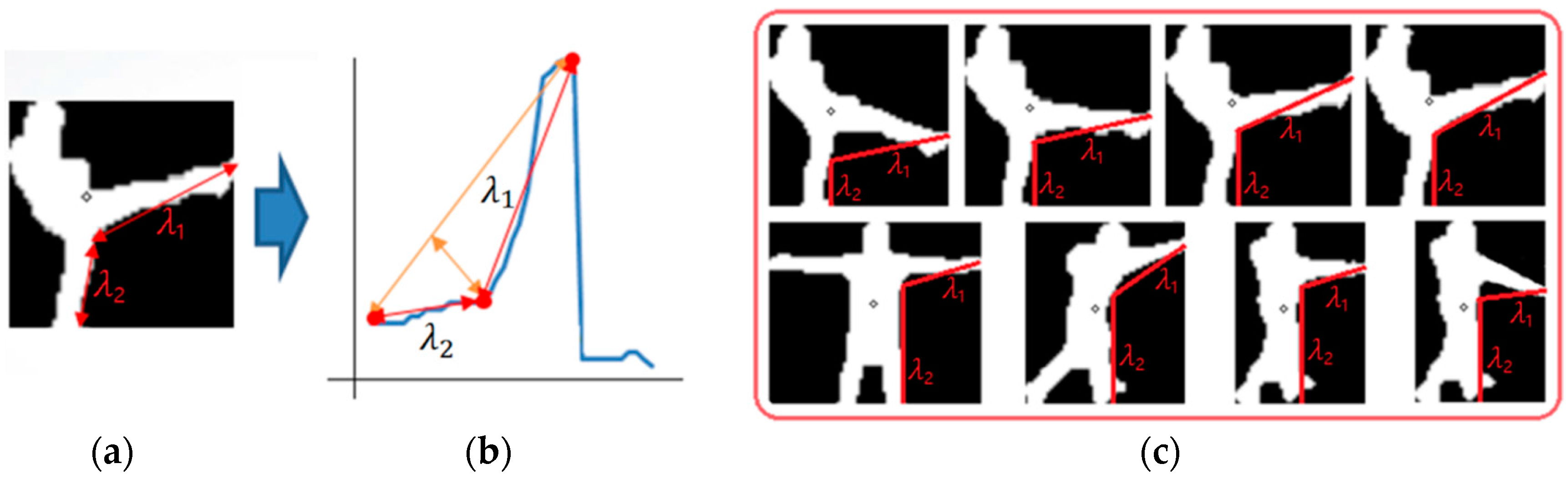

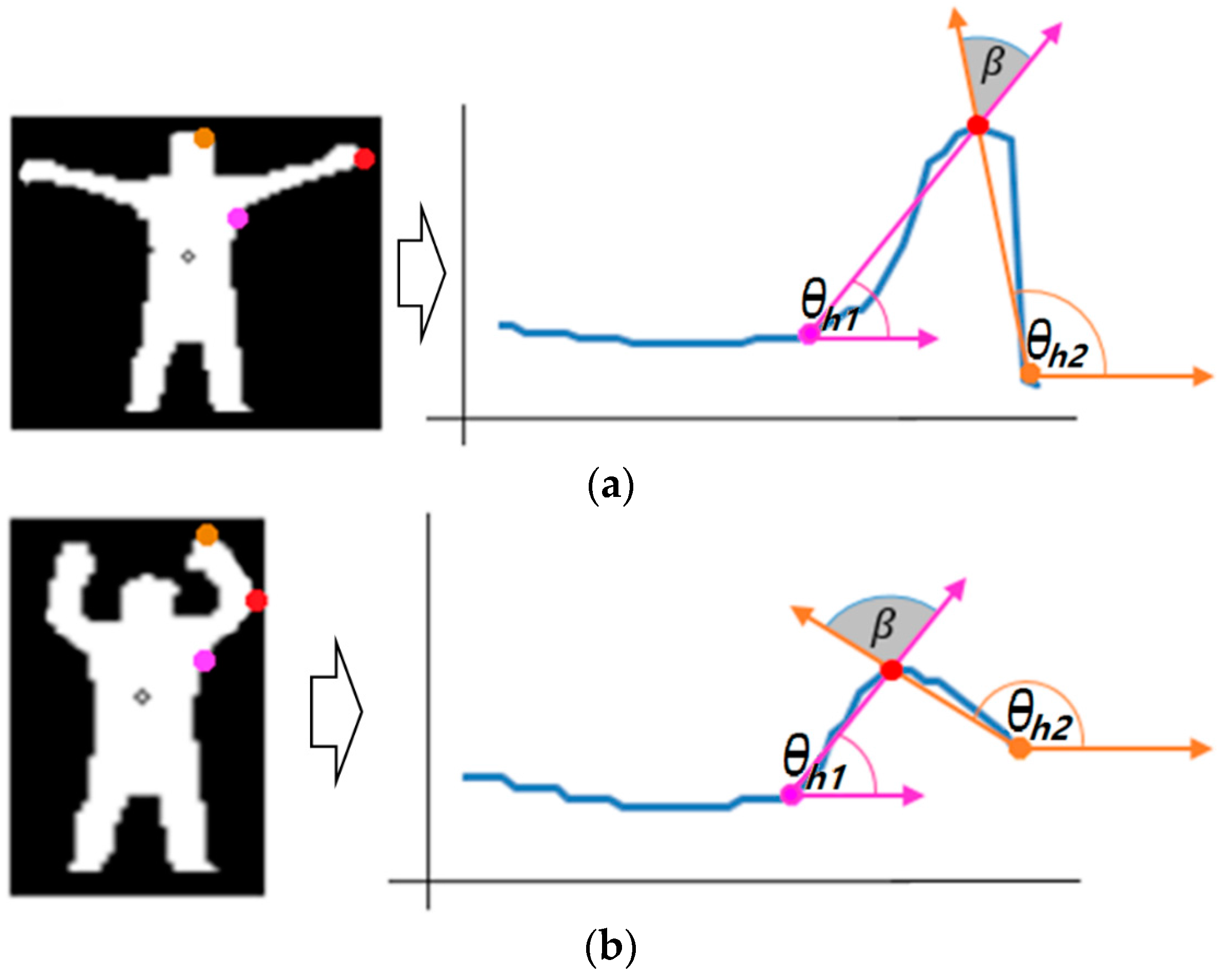

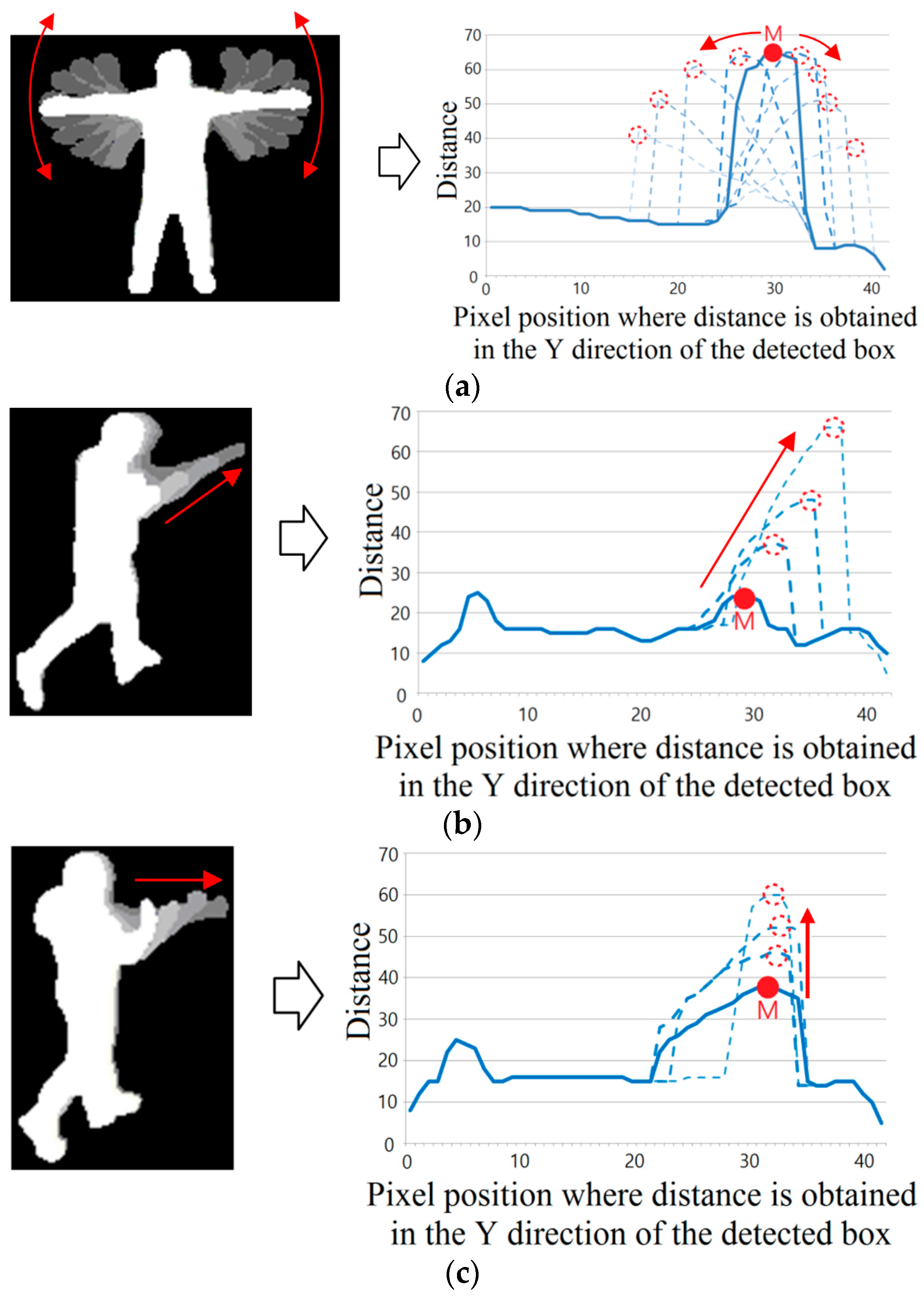

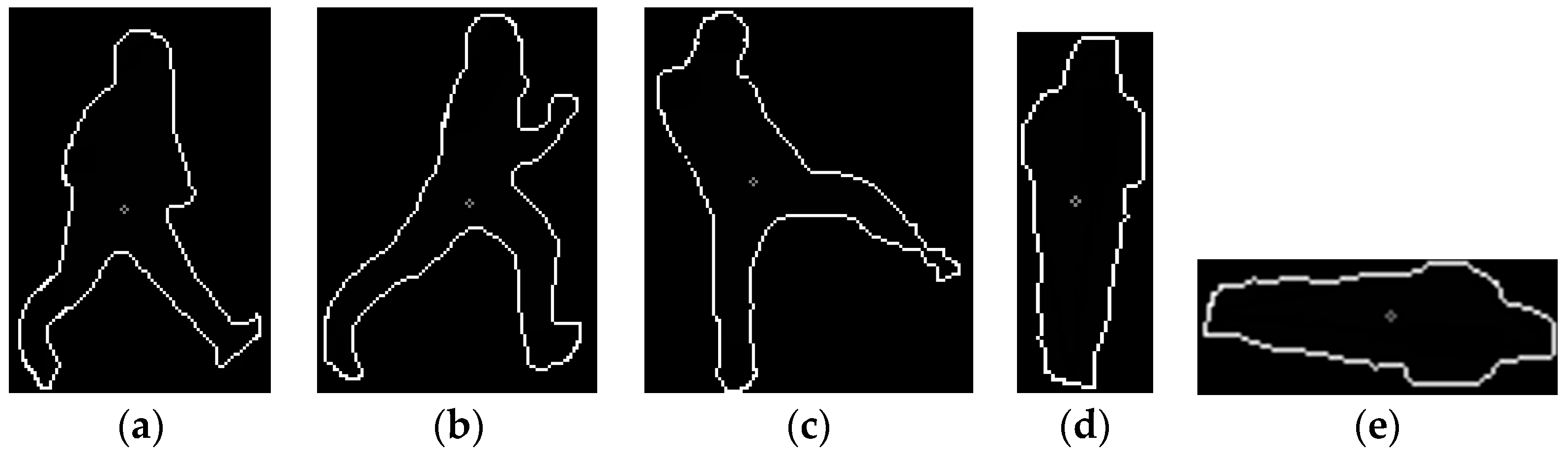

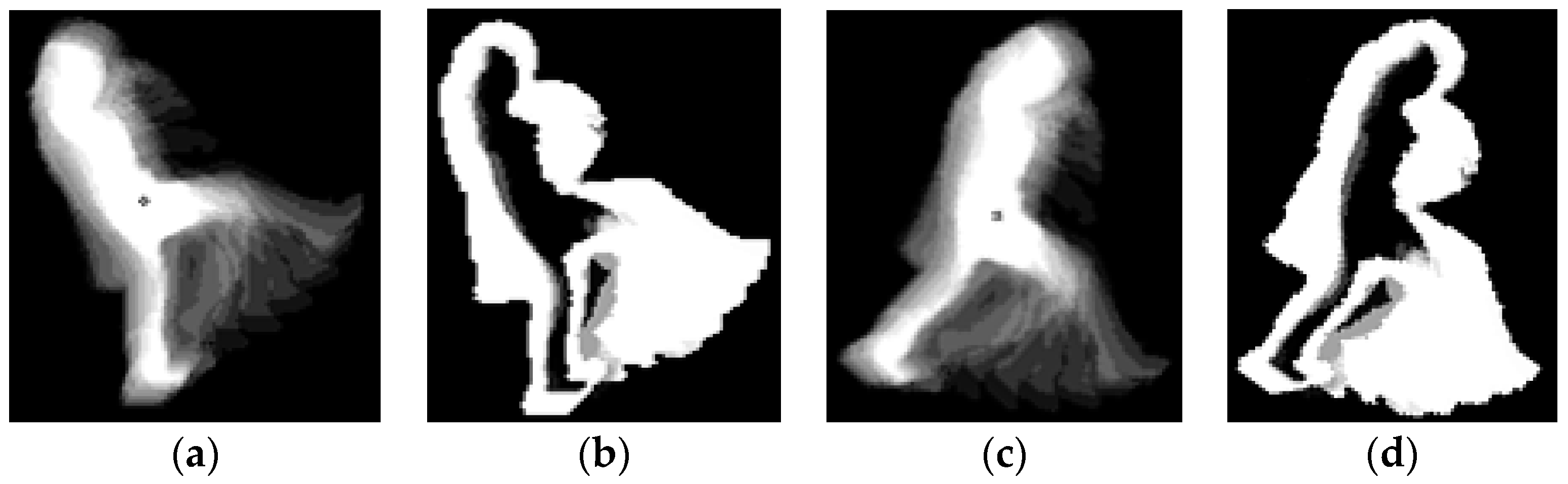

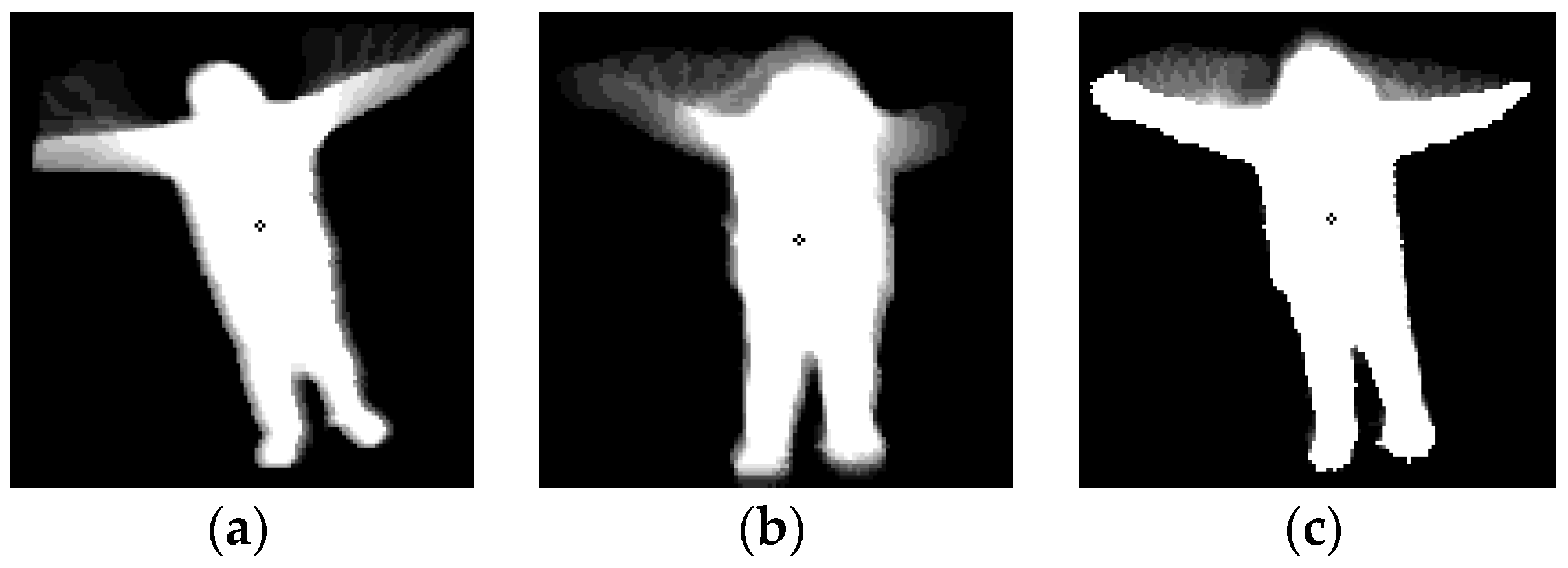

- We overcome the disadvantages of previous behavior recognition methods based on the gait energy image (GEI) and on the region-based method by proposing the projection-based distance (PbD) method, which operates on a binarized human blob.

- Based on the PbD method, we detect the tip positions of the hand and leg of the human blob. Then, various types of behavior are recognized based on the tracking information of these tip positions and our decision rules.

2. Proposed System and Method for Behavior Recognition

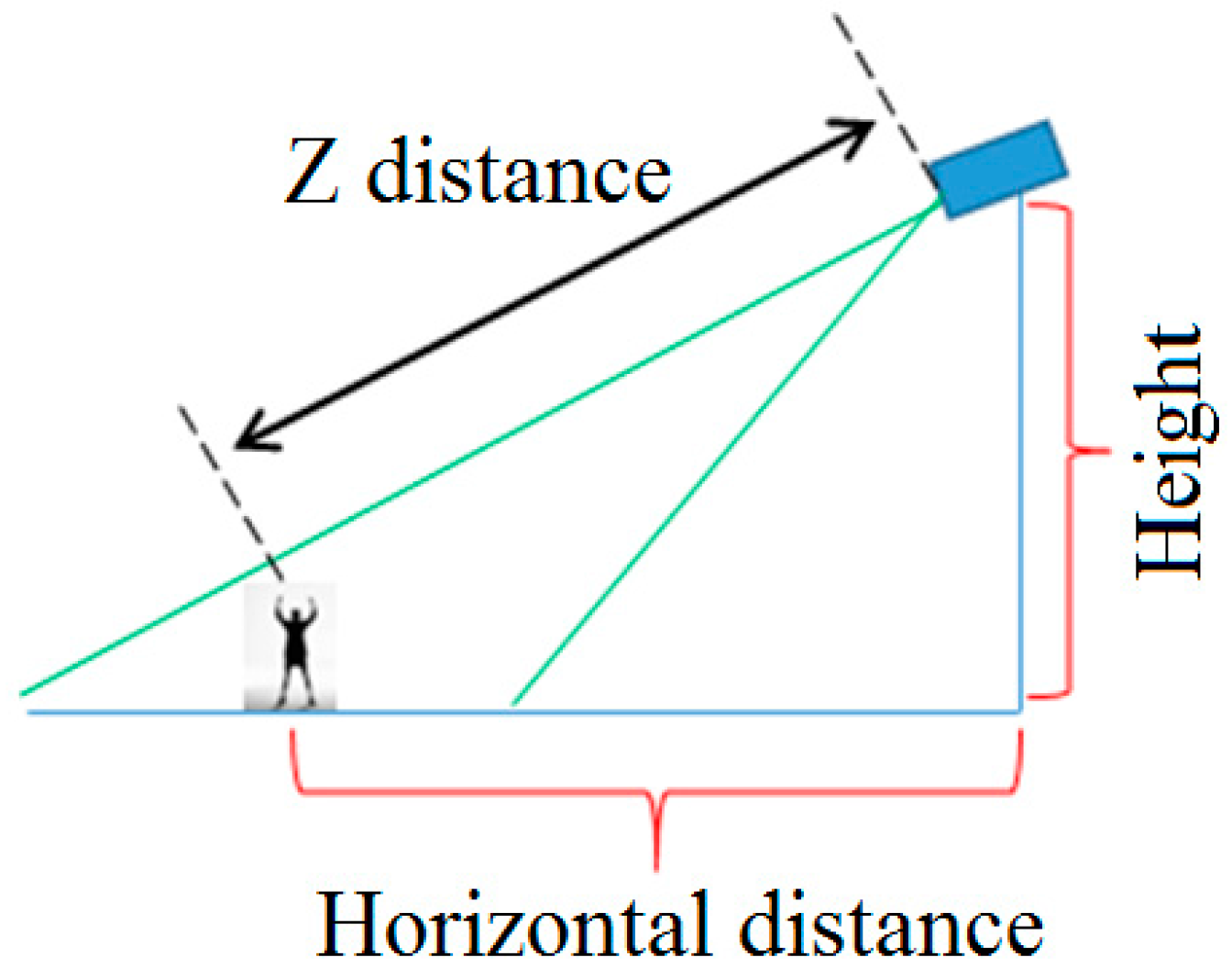

2.1. System Setup and Human Detection

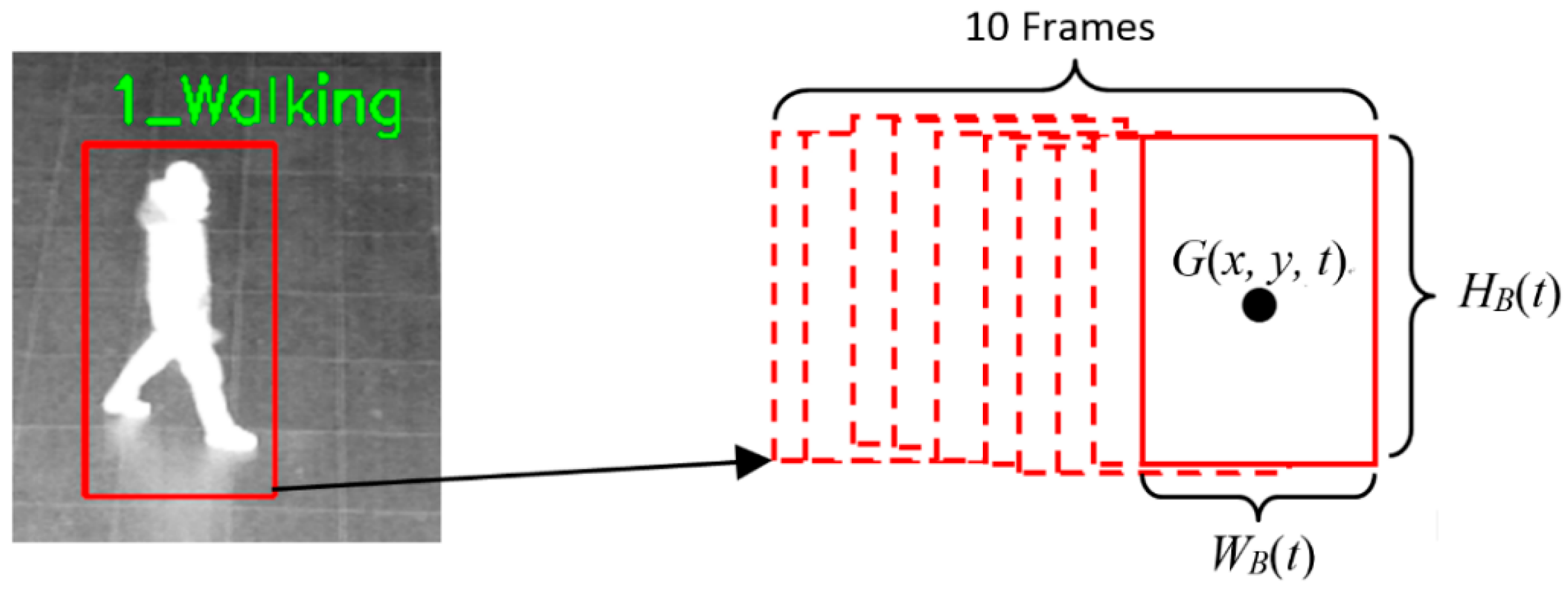

2.2. Proposed Method of Behavior Recognition

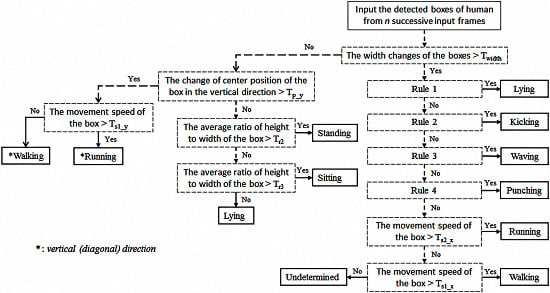

2.2.1. Overall Procedure of Behavior Recognition

- Waving with two hands

- Waving with one hand

- Punching

- Kicking

- Lying down

- Walking (horizontally, vertically, or diagonally)

- Running (horizontally, vertically, or diagonally)

- Standing

- Sitting

- Leaving

- Approaching

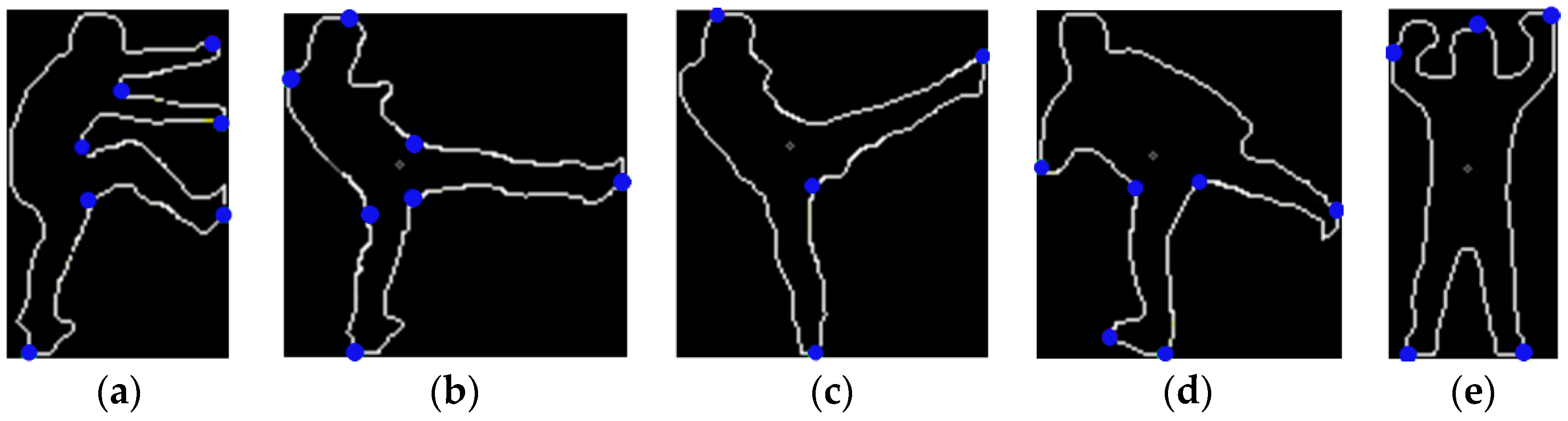

- Full waving, where the arm starts from about 270° and moves up to 90° in a circle, as shown in Figure 4a,c.

- Half waving, where the arm starts from about 0° and moves up to 90° in a circle, as shown in Figure 4b,d.

- Low punching, where the hand moves directly toward the lower part of the target’s chest, as shown in Figure 4e.

- Middle punching, where the hand moves directly toward the target’s chest, as shown in Figure 4f.

- High punching, where the hand moves directly toward the upper part of the target’s chest, as shown in Figure 4g.

- Similarly, in low, middle, and high kicking, the leg moves to different heights, as shown in Figure 4h–j, respectively.
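
The difference between full and half waving can be expressed as the angle swept by the arm. The following is a minimal sketch of that arithmetic only, not the recognition method itself. It assumes a convention in which 0° is horizontal, 90° points straight up, and 270° points straight down; the function name `sweep_degrees` is hypothetical.

```python
def sweep_degrees(start_deg: float, end_deg: float) -> float:
    """Counterclockwise angle swept from start_deg to end_deg, in [0, 360)."""
    return (end_deg - start_deg) % 360.0


# Start and end angles as listed above (angle convention is an assumption).
full_waving = sweep_degrees(270.0, 90.0)  # arm starts pointing down
half_waving = sweep_degrees(0.0, 90.0)    # arm starts horizontal

print(f"full waving sweeps {full_waving:.0f} degrees")
print(f"half waving sweeps {half_waving:.0f} degrees")
```

Under these assumptions, full waving covers a half circle and half waving a quarter circle, which is one way the two motions could be told apart from tracked hand-tip positions.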

2.2.2. Recognition of Behavior in Classes 1 and 3

2.2.3. Recognition of Behavior in Class 2

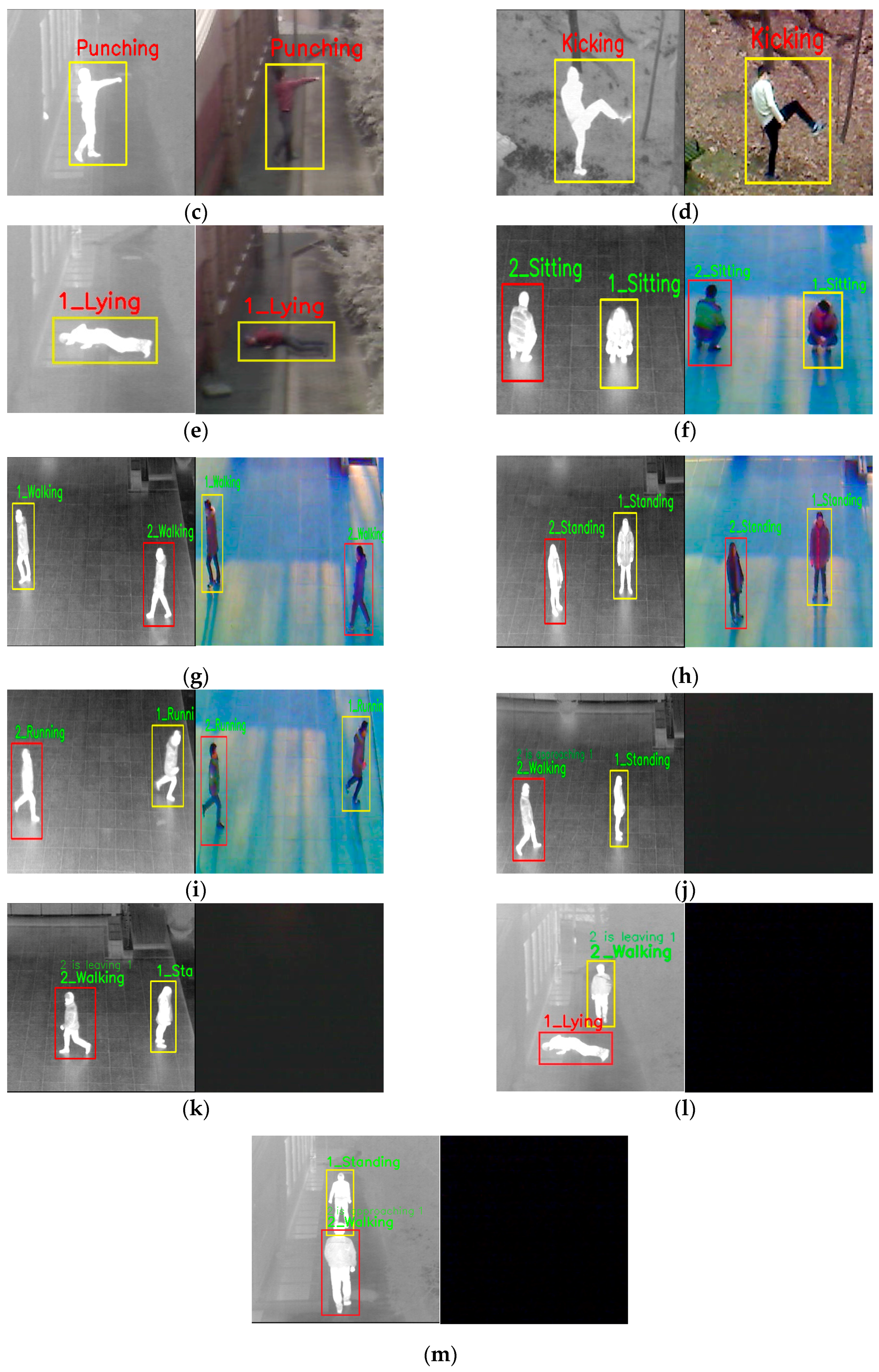

3. Experimental Results

3.1. Description of Database

3.2. Accuracies of Behavior Recognition

3.3. Comparison between the Accuracies Obtained by Our Method and Those Obtained by Previous Methods

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Decision rules | |
|---|---|
| Rule 1 (Lying down) | If (“Condition 1 * is TRUE”): Lying down. Else: go to Rule 2 |
| Rule 2 (Kicking) | If (“M is leg tip” and “Condition 2 * is TRUE”): Kicking. Else: go to Rule 3 |
| Rule 3 (Hand waving) | If (“M is hand tip” and “Condition 3 * is TRUE”): Hand waving. Else: go to Rule 4 |
| Rule 4 (Punching) | If (“M is hand tip” and (“Condition 4 * or Condition 5 * is TRUE”)): Punching. Else: go to the rule for checking the running, walking, or undetermined case, as shown in the lower right part of Figure 3 |
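
The rules in the table form a cascade that is evaluated in order. The sketch below shows only that control flow. Conditions 1 to 5 are passed in as booleans because their definitions are not reproduced here, `moving_tip` stands in for the quantity M in the table, and the names `Tip` and `classify` are hypothetical.

```python
from enum import Enum
from typing import Optional


class Tip(Enum):
    HAND = "hand"
    LEG = "leg"


def classify(moving_tip: Tip, cond1: bool, cond2: bool, cond3: bool,
             cond4: bool, cond5: bool) -> Optional[str]:
    """Apply Rules 1-4 in order; return None when no rule fires."""
    if cond1:                                            # Rule 1
        return "Lying down"
    if moving_tip is Tip.LEG and cond2:                  # Rule 2
        return "Kicking"
    if moving_tip is Tip.HAND and cond3:                 # Rule 3
        return "Hand waving"
    if moving_tip is Tip.HAND and (cond4 or cond5):      # Rule 4
        return "Punching"
    # Fall through to the running / walking / undetermined check.
    return None


print(classify(Tip.HAND, False, False, False, True, False))
```

Because Rule 1 is checked first, a TRUE Condition 1 yields "Lying down" regardless of which tip is involved, and a result of `None` means the later running, walking, or undetermined check takes over.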

| Dataset | Condition | Detail Description |
|---|---|---|
| I (see Figure 11a) | 1.2 °C, morning, humidity 73.0%, wind 1.6 m/s | |
| II (see Figure 11b) | −1.0 °C, evening, humidity 73.0%, wind 1.5 m/s | |
| III (see Figure 11c) | 1.0 °C, afternoon, cloudy, humidity 50.6%, wind 1.7 m/s | |
| IV (see Figure 11d) | −2.0 °C, dark night, humidity 50.6%, wind 1.8 m/s | |
| V (see Figure 11e) | 14.0 °C, afternoon, sunny, humidity 43.4%, wind 3.1 m/s | |
| VI (see Figure 11f) | 5.0 °C, dark night, humidity 43.4%, wind 3.1 m/s | |
| VII (see Figure 11g) | −6.0 °C, afternoon, cloudy, humidity 39.6%, wind 1.9 m/s | |
| VIII (see Figure 11h) | −10.0 °C, dark night, humidity 39.6%, wind 1.7 m/s | |
| IX (see Figure 11i) | 21.9 °C, afternoon, cloudy, humidity 62.6%, wind 1.3 m/s | |
| X (see Figure 11j) | −10.9 °C, dark night, humidity 48.3%, wind 2.0 m/s | |
| XI (see Figure 11k) | 27.0 °C, afternoon, sunny, humidity 60.0%, wind 1.0 m/s | |
| XII (see Figure 11l) | 20.2 °C, dark night, humidity 58.6%, wind 1.2 m/s | |

| Datasets | Height | Horizontal Distance | Z Distance |
|---|---|---|---|
| Datasets I and II | 8 | 10 | 12.8 |
| Datasets III and IV | 7.7 | 11 | 13.4 |
| Datasets V and VI | 5 | 15 | 15.8 |
| Datasets VII and VIII | 10 | 15 | 18 |
| Datasets IX and X | 10 | 15 | 18 |
| Datasets XI and XII | 6 | 11 | 12.5 |
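
The Z distance values in the table are consistent with the straight-line distance from the camera to the subject, that is, the hypotenuse of the camera height and the horizontal distance. This relationship is inferred from the numbers and is not stated in the table itself, so the sketch below is a consistency check under that assumption. The dictionary `SETUPS` and the function `z_distance` are hypothetical names, and no unit is assumed.

```python
import math

# (height, horizontal distance, reported Z distance), copied from the table.
SETUPS = {
    "I and II": (8.0, 10.0, 12.8),
    "III and IV": (7.7, 11.0, 13.4),
    "V and VI": (5.0, 15.0, 15.8),
    "VII and VIII": (10.0, 15.0, 18.0),
    "IX and X": (10.0, 15.0, 18.0),
    "XI and XII": (6.0, 11.0, 12.5),
}


def z_distance(height: float, horizontal: float) -> float:
    """Straight-line distance for a camera at `height` and a subject
    standing `horizontal` away on the ground."""
    return math.hypot(height, horizontal)


for name, (height, horizontal, reported) in SETUPS.items():
    computed = z_distance(height, horizontal)
    print(f"Datasets {name}: computed {computed:.2f}, reported {reported}")
```

Each computed value agrees with the reported one after rounding to one decimal place.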

| | #Frame | | #Behavior | |
|---|---|---|---|---|
| Behavior | Day | Night | Day | Night |
| Walking | 1504 | 2378 | 763 | 1245 |
| Running | 608 | 2196 | 269 | 355 |
| Standing | 604 | 812 | 584 | 792 |
| Sitting | 418 | 488 | 378 | 468 |
| Approaching | 1072 | 1032 | 356 | 354 |
| Leaving | 508 | 558 | 163 | 188 |
| Waving with two hands | 29588 | 14090 | 1752 | 870 |
| Waving with one hand | 24426 | 15428 | 1209 | 885 |
| Punching | 21704 | 13438 | 1739 | 1078 |
| Lying down | 7728 | 5488 | 2621 | 2022 |
| Kicking | 27652 | 22374 | 2942 | 3018 |
| Total | 194094 | | 24051 | |

| | Day | | | | Night | | | |
|---|---|---|---|---|---|---|---|---|
| Behavior | TPR | PPV | ACC | F_Score | TPR | PPV | ACC | F_Score |
| Walking | 92.6 | 100 | 92.7 | 96.2 | 98.5 | 100 | 98.5 | 99.2 |
| Running | 96.6 | 100 | 96.7 | 98.3 | 94.6 | 100 | 94.7 | 97.2 |
| Standing | 100 | 100 | 100 | 100 | 97.3 | 100 | 97.3 | 98.6 |
| Sitting | 92.5 | 100 | 92.5 | 96.1 | 96.5 | 100 | 96.5 | 98.2 |
| Approaching | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Leaving | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Waving with two hands | 95.9 | 98.8 | 99.4 | 97.3 | 97.0 | 99.5 | 99.6 | 98.2 |
| Waving with one hand | 93.6 | 99.4 | 99.3 | 96.4 | 90.0 | 100 | 99.0 | 94.7 |
| Punching | 87.8 | 99.5 | 98.0 | 93.3 | 77.4 | 99.6 | 96.4 | 87.1 |
| Lying down | 99.4 | 99.0 | 98.9 | 99.2 | 97.3 | 98.0 | 96.3 | 97.6 |
| Kicking | 90.6 | 95.8 | 97.2 | 93.1 | 88.5 | 90.3 | 94.6 | 89.4 |
| Average | 95.4 | 99.3 | 97.7 | 97.3 | 94.3 | 98.9 | 97.5 | 96.4 |
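
The four measures in the table can be computed from true/false positive and negative counts. The sketch below uses the standard definitions of true positive rate (TPR), positive predictive value (PPV), accuracy (ACC), and F-score, on the assumption that the table follows them. The counts passed to `recognition_metrics` are hypothetical and are not taken from the experiments; both function names are illustrative.

```python
def recognition_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return TPR, PPV, ACC and F-score as percentages."""
    tpr = tp / (tp + fn)                   # true positive rate (recall)
    ppv = tp / (tp + fp)                   # positive predictive value (precision)
    acc = (tp + tn) / (tp + fp + tn + fn)  # accuracy
    f_score = 2 * ppv * tpr / (ppv + tpr)  # harmonic mean of PPV and TPR
    return {"TPR": 100 * tpr, "PPV": 100 * ppv,
            "ACC": 100 * acc, "F_Score": 100 * f_score}


def f_score_from_rates(tpr: float, ppv: float) -> float:
    """F-score from TPR and PPV given on the same scale (e.g., percent)."""
    return 2 * ppv * tpr / (ppv + tpr)


# Hypothetical counts, for illustration only.
print(recognition_metrics(tp=90, fp=10, tn=880, fn=20))

# Consistency check against the daytime "Walking" row (TPR 92.6, PPV 100).
print(round(f_score_from_rates(92.6, 100.0), 1))
```

Applying `f_score_from_rates` to the TPR and PPV of a row reproduces the F_Score listed for that row, for example 96.2 for daytime walking and 87.1 for nighttime punching.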

| | Processing Time (ms/frame) | |
|---|---|---|
| Behavior | Day | Night |
| Walking | 2.3 | 2.6 |
| Running | 1.5 | 1.5 |
| Standing | 3.2 | 3.1 |
| Sitting | 1.9 | 2.0 |
| Approaching | 3.3 | 2.9 |
| Leaving | 2.9 | 2.9 |
| Waving with two hands | 3.2 | 3.1 |
| Waving with one hand | 2.6 | 2.1 |
| Punching | 2.7 | 2.3 |
| Lying down | 1.2 | 1.0 |
| Kicking | 1.9 | 2.0 |
| Average | 2.4 | |
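
As a rough check, the reported average can be recomputed from the per-behavior values, and the result converted to a frame rate. The sketch assumes the times are in milliseconds per frame, the unit given in the method comparison table, and that the average is an unweighted mean over all day and night entries; the variable names are hypothetical.

```python
# Per-behavior processing times copied from the table, in row order.
DAY = [2.3, 1.5, 3.2, 1.9, 3.3, 2.9, 3.2, 2.6, 2.7, 1.2, 1.9]
NIGHT = [2.6, 1.5, 3.1, 2.0, 2.9, 2.9, 3.1, 2.1, 2.3, 1.0, 2.0]

all_times = DAY + NIGHT
mean_ms = sum(all_times) / len(all_times)  # unweighted mean, ms per frame
frames_per_second = 1000.0 / mean_ms       # equivalent throughput

print(f"mean processing time: {mean_ms:.1f} ms/frame")
print(f"equivalent throughput: {frames_per_second:.0f} frames/s")
```

The unweighted mean rounds to the 2.4 listed in the Average row, which corresponds to a throughput of several hundred frames per second for the recognition step alone.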

| | Fourier Descriptor-Based | | | | GEI-Based | | | | Convexity Defect-Based | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Behavior | TPR | PPV | ACC | F_Score | TPR | PPV | ACC | F_Score | TPR | PPV | ACC | F_Score |
| Walking | 0 | 0 | 1.6 | - | 83.9 | 97.7 | 82.4 | 90.3 | 17.4 | 98.4 | 18.4 | 29.6 |
| Running | 13.0 | 97.2 | 16.0 | 22.9 | 0 | 0 | 3.5 | - | 23.4 | 98.4 | 27.6 | 37.8 |
| Standing | 85.9 | 100 | 85.9 | 92.4 | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a |
| Sitting | 59.7 | 100 | 59.7 | 74.8 | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a |
| Waving with two hands | 81.4 | 13.7 | 47.8 | 23.5 | 96.5 | 19.1 | 59.6 | 31.9 | 89.0 | 68.6 | 94.9 | 77.4 |
| Waving with one hand | 78.2 | 13.8 | 50.3 | 23.5 | 21.2 | 10.1 | 73.8 | 13.7 | 34.9 | 73.4 | 92.4 | 47.3 |
| Punching | 27.1 | 20.3 | 74.6 | 23.2 | 62.2 | 16.5 | 50.1 | 26.1 | 39.7 | 55.7 | 86.9 | 46.4 |
| Lying down | 12.9 | 72.0 | 38.6 | 21.9 | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a |
| Kicking | 61.1 | 42.0 | 78.7 | 49.8 | 24.7 | 80.7 | 86.0 | 37.8 | 29.3 | 47.6 | 82.2 | 36.3 |
| Average | 46.6 | 51.0 | 50.4 | 48.7 | 55.5 | 46.3 | 65.1 | 50.5 | 47.7 | 77.4 | 71.8 | 59.0 |

| Actual | Nonzero entries in predicted-class columns before the diagonal (%) | Diagonal: predicted as the actual class (%) | Nonzero entries in predicted-class columns after the diagonal (%) |
|---|---|---|---|
| Walking | | 95.5 | 0.3, 0.4, 0.5 |
| Running | 0.4 | 95.6 | 0.3, 3.3, 0.4 |
| Standing | | 98.5 | |
| Sitting | 0.5 | 94.6 | |
| Waving with two hands | | 96.4 | 0.3 |
| Waving with one hand | 0.2, 0.1 | 91.8 | 0.1 |
| Lying down | 0.3, 0.6 | 98.3 | |
| Kicking | 0.2, 3.0, 0.3 | 89.5 | |
| Punching | 0.3, 1.6, 0.3 | 82.5 | |
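
A confusion matrix of this kind can be built by counting (actual, predicted) label pairs and expressing each row as a percentage of the samples of the actual class. The sketch below illustrates that construction on a small hypothetical label set. Whether the matrix above was normalized in exactly this way is an assumption, and the function name `confusion_percent` is illustrative.

```python
from collections import Counter
from typing import Dict, List


def confusion_percent(actual: List[str], predicted: List[str],
                      labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Row-normalized confusion matrix: matrix[a][p] is the percentage of
    samples with actual class `a` that were predicted as class `p`."""
    counts = Counter(zip(actual, predicted))
    matrix = {}
    for a in labels:
        row_total = sum(counts[(a, p)] for p in labels)
        matrix[a] = {
            p: (100.0 * counts[(a, p)] / row_total) if row_total else 0.0
            for p in labels
        }
    return matrix


# Hypothetical toy labels, not taken from the experiments.
labels = ["Kicking", "Punching"]
actual = ["Kicking"] * 4 + ["Punching"] * 4
predicted = ["Kicking", "Kicking", "Kicking", "Punching",
             "Punching", "Punching", "Punching", "Kicking"]

for name, row in confusion_percent(actual, predicted, labels).items():
    print(name, row)
```

With this normalization, the diagonal entry of each row is the per-class true positive rate.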

| Method | TPR (%) | PPV (%) | ACC (%) | F_score (%) | Processing Time (ms/frame) |
|---|---|---|---|---|---|
| Fourier descriptor-based | 46.6 | 51.0 | 50.4 | 48.7 | 16.1 |
| GEI-based | 55.5 | 46.3 | 65.1 | 50.5 | 4.9 |
| Convexity defect-based | 47.7 | 77.4 | 71.8 | 59.0 | 5.2 |
| Our method | 94.8 | 99.1 | 97.6 | 96.8 | 2.4 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Batchuluun, G.; Kim, Y.G.; Kim, J.H.; Hong, H.G.; Park, K.R. Robust Behavior Recognition in Intelligent Surveillance Environments. Sensors 2016, 16, 1010. https://doi.org/10.3390/s16071010