In this section we propose three experiments that test this inverse formula for radial distortion in order to evaluate the relevance of such an approach.

4.1. Inverse Distortion Loop

In this experiment, since the formula gives the inverse of a radial distortion, we apply it twice and compare the result with the original distortion. In a second step, we iterate this process 10,000 times and again compare the final result with the original distortion.
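The loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the distortion is assumed to have the form r' = r(1 + k1 r^2 + k2 r^4 + k3 r^6), only the first three inverse coefficients are computed (obtained here by standard power-series reversion, not copied from the formula given earlier in the paper), and the coefficient values are hypothetical, not those of Table 1.

```python
def invert_radial(k):
    """First three coefficients of the series inverse of
    r' = r * (1 + k1*r**2 + k2*r**4 + k3*r**6) (assumed model)."""
    k1, k2, k3 = k
    b1 = -k1
    b2 = 3.0 * k1**2 - k2
    b3 = -12.0 * k1**3 + 8.0 * k1 * k2 - k3
    return (b1, b2, b3)


def round_trips(k, count):
    """Apply the inversion twice in a row, `count` times."""
    for _ in range(2 * count):
        k = invert_radial(k)
    return k


# Hypothetical coefficients (mm^-2, mm^-4, mm^-6), NOT the values of Table 1.
k_original = (1.0e-4, -2.0e-7, 3.0e-10)

k_loop_1 = round_trips(k_original, 1)
k_loop_10000 = round_trips(k_original, 10_000)

# Differences with the original coefficients, analogous to the
# 'Delta Loop 1' and 'Delta Loop 10000' columns.
delta_loop_1 = [a - b for a, b in zip(k_loop_1, k_original)]
delta_loop_10000 = [a - b for a, b in zip(k_loop_10000, k_original)]
print(delta_loop_1)
print(delta_loop_10000)
```

With this truncation each inverse coefficient depends only on the original coefficients of the same or lower order, so a double inversion returns the starting values up to floating-point rounding.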

Table 1 shows the original radial distortion and the computed inverse parameters. The original distortion was obtained by using PhotoModeler to calibrate a Nikon D700 camera with a 14 mm lens from Sigma.

The results for this step are presented in Table 2. In the columns ‘Delta Loop 1’ and ‘Delta Loop 10000’ we can see that

and

did not change and the delta on

and

are small with respect to the corresponding coefficients: the error is close to

smaller than the corresponding coefficient. Note that

was not present in the original distortion and, as the inverse formula is a function of only

, the loop is computed without the coefficients

which influences the results, visible from

.

This first experiment demonstrates the inverse property of the formula, though of course not the relevance of an inverse distortion model. It also shows the high stability of the inversion process. However, even if a small number of coefficients is sufficient to compensate the distortion, using the additional coefficients is important for the stability of the inversion.

The next two experiments show the relevance of this formula for the inverse radial distortion model.

4.2. Inverse Distortion Computation onto a Frame

This second experiment uses a Nikon D700 equipped with a 14 mm lens from Sigma. This is a full-frame camera, i.e., with a 24 mm × 36 mm frame size. The camera was calibrated using PhotoModeler, and the inverse distortion coefficients are presented in Table 1, where Column 1 gives the calibration result for the radial distortion and Column 2 the computed inverse radial distortion.

Note that the distortion model provided by the PhotoModeler calibration yields a compensation of the radial distortion, expressed in millimeters and limited to the frame.

To use these coefficients, a 2D image point is first expressed in the camera reference system, in millimeters, with the origin at the CoD (Center of Distortion), which is close to the center of the image. The polynomial model is then applied to this point.

The inverse of this distortion is the application of such a radial distortion to a point theoretically projected onto the frame.
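As a minimal sketch of these two steps, the following Python code converts a pixel position to millimeters relative to the CoD and then applies an even-order radial polynomial. The function names, the CoD position, the pixel size, the Y-axis orientation and the multiplicative form p(1 + k1 r^2 + k2 r^4 + ...) are all assumptions made for illustration; they are not taken from PhotoModeler or from Table 1.

```python
def pixel_to_camera_mm(pixel, cod_px, pixel_size_mm):
    """Express a pixel position in millimeters with the origin at the CoD.
    The Y axis is flipped so that it points upwards (assumed convention)."""
    u, v = pixel
    u0, v0 = cod_px
    return ((u - u0) * pixel_size_mm, (v0 - v) * pixel_size_mm)


def apply_radial_polynomial(point_mm, coeffs):
    """Scale a point by 1 + k1*r^2 + k2*r^4 + ... (assumed model form)."""
    x, y = point_mm
    r2 = x * x + y * y
    factor = 1.0
    power = r2
    for k in coeffs:
        factor += k * power
        power *= r2
    return (x * factor, y * factor)


# Hypothetical values for illustration only.
COD_PX = (2128.0, 1416.0)          # assumed CoD position in pixels
PIXEL_SIZE_MM = 36.0 / 4256.0      # assumed: 36 mm frame width over 4256 pixels
COEFFS = (1.0e-4, -5.0e-8)         # assumed k1, k2 (not from Table 1)

point_mm = pixel_to_camera_mm((3000.0, 800.0), COD_PX, PIXEL_SIZE_MM)
compensated_mm = apply_radial_polynomial(point_mm, COEFFS)
print(point_mm, compensated_mm)
```

Applying the same function with the inverse coefficients instead of the calibrated ones gives the inverse operation described above.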

In all following experiments, the residuals are computed as follows:

A 2D point is chosen inside the frame; its coordinates are first computed in millimeters in the camera reference system, with the origin at the CoD. The point is then compensated by the inverse of the distortion. Finally, the resulting point is compensated by the original distortion.

The residual is the distance between the initial point and the final point.
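The residual computation can be sketched as follows. The code is an illustration under assumptions, not the procedure used to produce the figures: the model form p(1 + k1 r^2 + k2 r^4 + k3 r^6), the three-term series inverse (derived by power-series reversion), the coefficient values, and the sampled segment (from the CoD to 18 mm along the X axis) are all hypothetical choices.

```python
import math


def apply_radial_polynomial(point, coeffs):
    """Scale a point by 1 + c1*r^2 + c2*r^4 + ... (assumed model form)."""
    x, y = point
    r2 = x * x + y * y
    factor, power = 1.0, r2
    for c in coeffs:
        factor += c * power
        power *= r2
    return (x * factor, y * factor)


def invert_radial(k):
    """First three coefficients of the series inverse (truncated sketch)."""
    k1, k2, k3 = k
    return (-k1,
            3.0 * k1**2 - k2,
            -12.0 * k1**3 + 8.0 * k1 * k2 - k3)


def residual(point, k):
    """Distance between a point and its image after applying the inverse
    distortion followed by the original distortion."""
    after_inverse = apply_radial_polynomial(point, invert_radial(k))
    after_original = apply_radial_polynomial(after_inverse, k)
    return math.hypot(after_original[0] - point[0],
                      after_original[1] - point[1])


# Hypothetical coefficients, NOT the values of Table 1.
k = (1.0e-4, -5.0e-8, 1.0e-11)

# Sample an assumed segment from the CoD to 18 mm along the X axis.
radii = [i * 18.0 / 10 for i in range(11)]
curve = [(r, residual((r, 0.0), k)) for r in radii]
for r, res in curve:
    print(f"r = {r:5.1f} mm   residual = {res:.3e} mm")
```

Because the inverse is truncated, the residual in this sketch grows with the distance from the CoD, which is the qualitative behaviour discussed in this section; dividing by the pixel size would express it in pixels.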

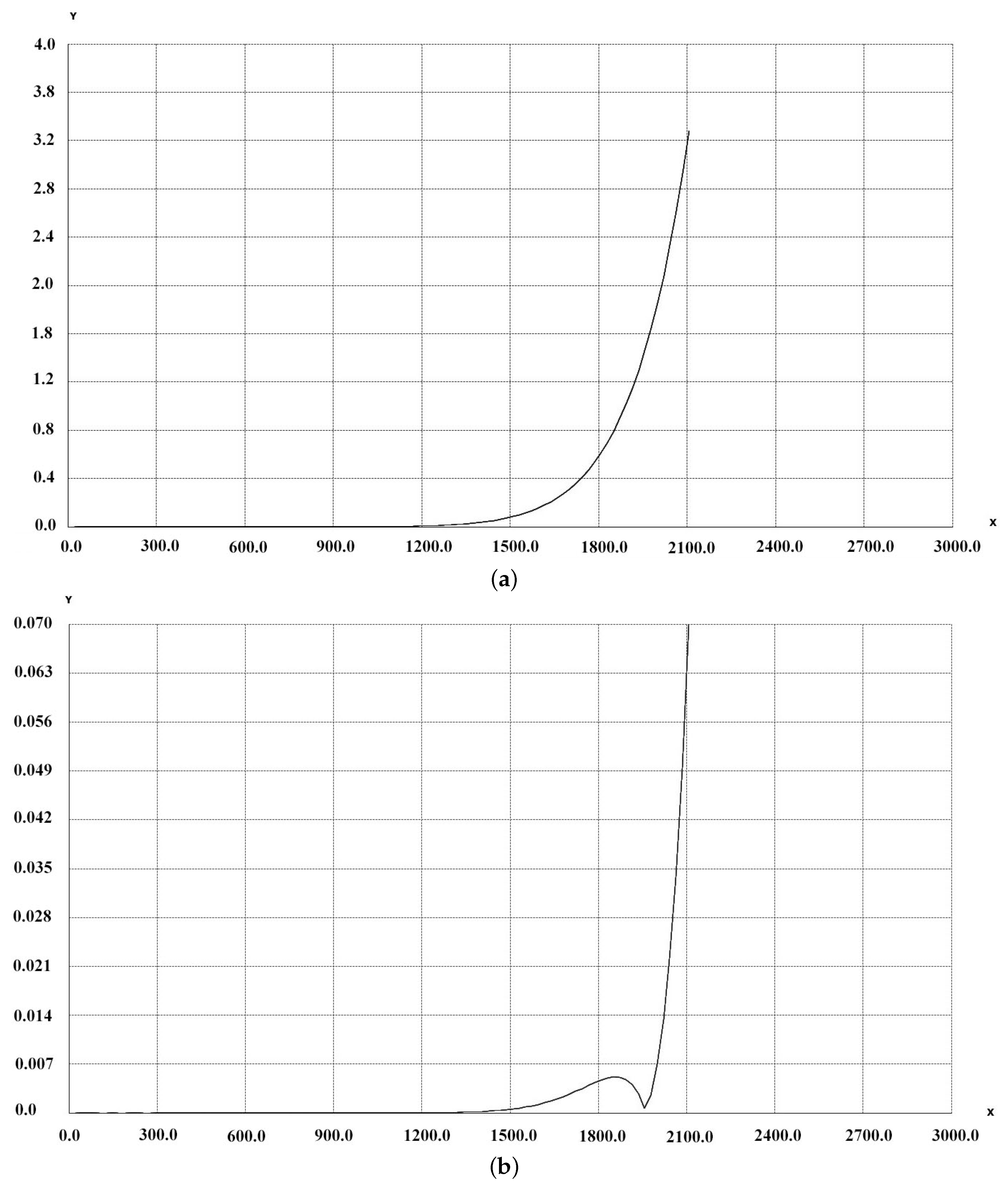

The following results show the 2D distortion residual curve. For a set of points on the segment

the residuals are computed and plotted on the Y-axis of Figure 4. The X-axis represents the distance from the CoD. The distortion data come from the calibration process and are presented in Table 1.

In Figure 4a, below, we present the residuals using only coefficients

of the inverse distortion. The maximum residual is close to 4 pixels, but residuals remain below one pixel until close to the frame border. This truncated model is useful with non-configurable software in which it is not possible to use more than 4 coefficients for radial distortion modeling.

In Figure 4, below, we present the residual computed from 0 to

frame using coefficients

for inverse distortion.

The results are very good: the residual is less than 0.07 pixel at the frame border along the 0X axis, and the performance is nearly the same as for compensating the original distortion.

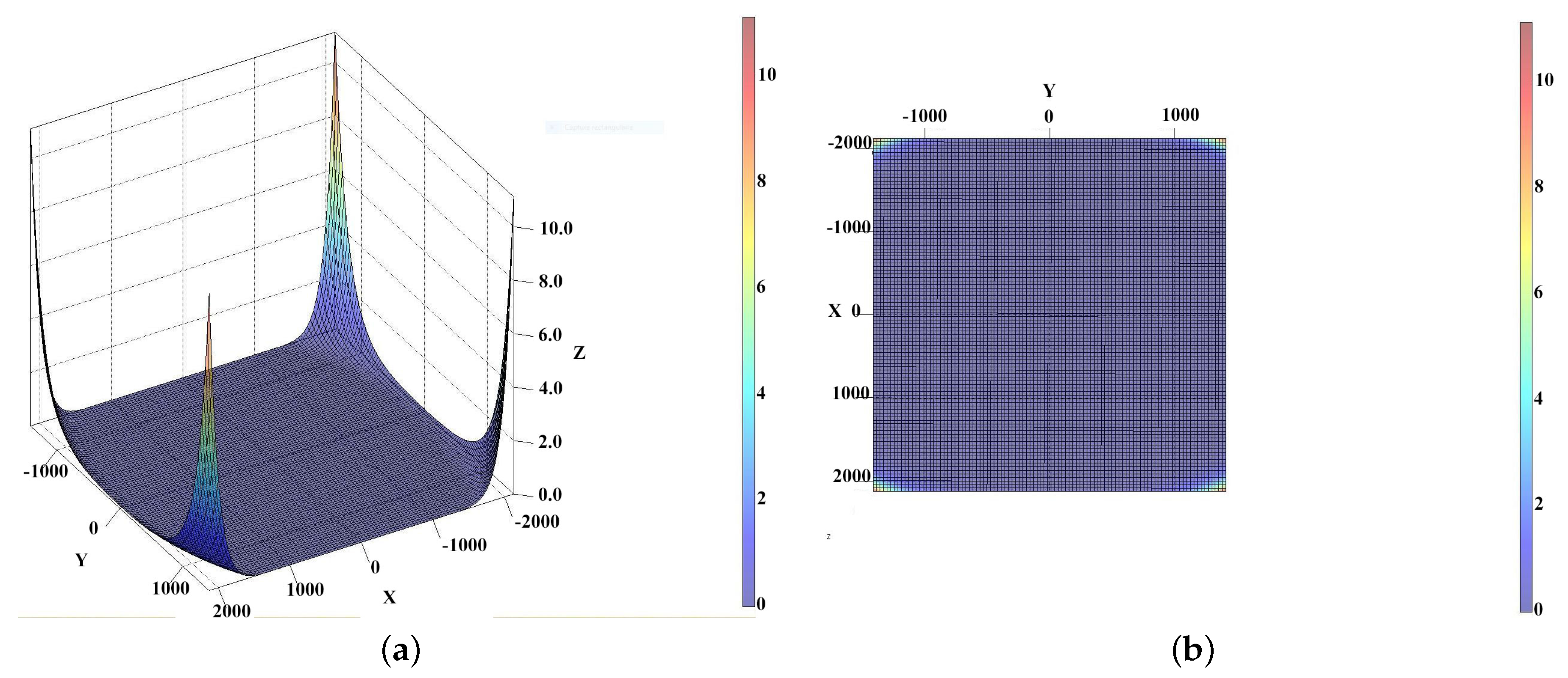

We can see that in almost all the images the residuals are close to the ones presented in Figure 5. Nevertheless, in Figure 4 we can observe higher residuals in the corners, where the distance to the CoD is greatest.

Here follows a brief analysis of the residuals:

These two experiments show that the results are entirely acceptable, even if the residuals are higher in the zones furthest from the CoD, i.e., along the diagonal of the frame. As shown in Table 3, only 2.7% of the frames have residuals > 1 pixel.

4.3. Inverse Distortion Computation on an Image Done with a Metric Camera

This short experiment used an image taken with a Wild P32 metric camera in order to work on an image without distortion. The Wild P32 terrestrial camera is a photogrammetric camera designed for close-range photogrammetry, topography, architectural and other special photography and survey applications.

This camera is film-based: the film is pressed onto a glass plate, fixed to the camera body, on which 5 fiducial marks are incised. The glass plate prevents any film deformation.

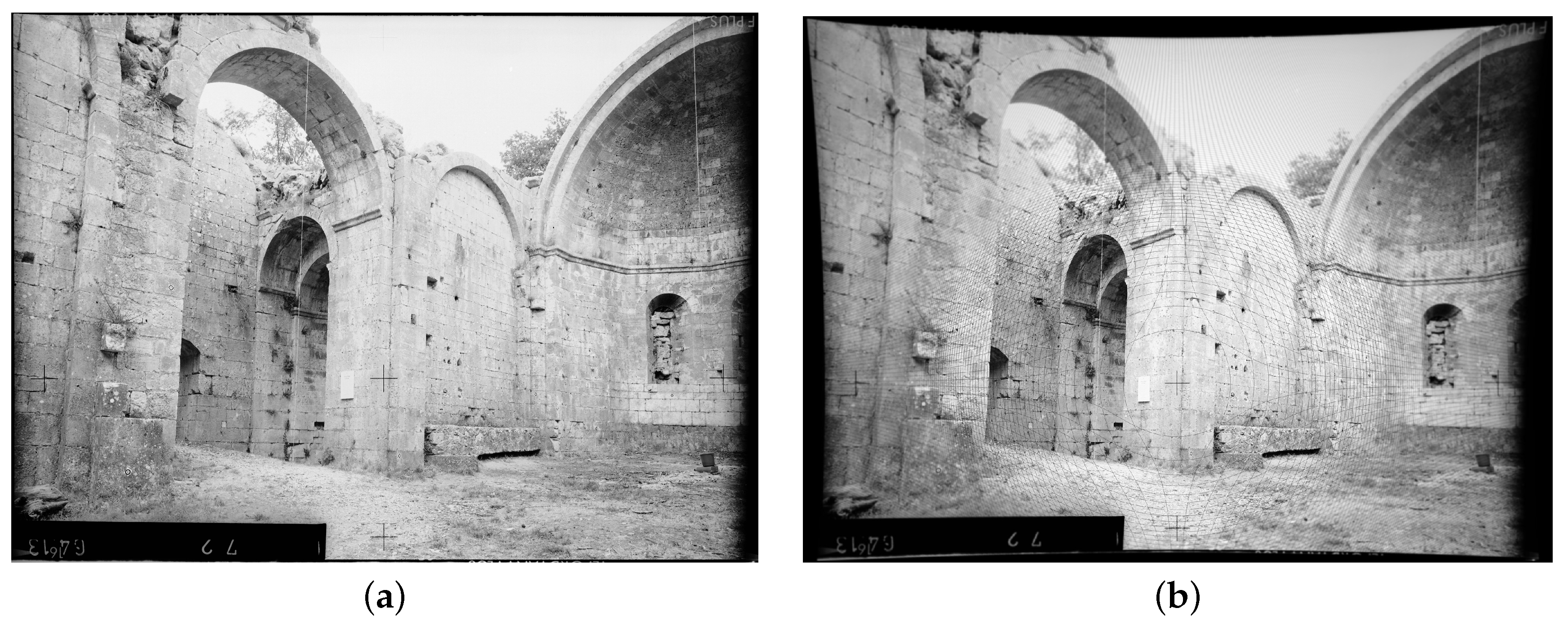

The film format is 65 mm × 80 mm and the fixed focal length is 64 mm. Designed for architectural survey, the camera has a high eccentricity, and the 5 fiducial marks were used in this paper to compute the CoD. In Figure 6a, four fiducial marks are visible (the fifth is overexposed in the sky). The fiducial marks are organized as follows: one at the principal point (PP), three at 37.5 mm from the PP (left, right, top) and one at 17.5 mm (bottom).

This image was taken in 2000 in the remains of the Romanesque Aleyrac Priory, in northern Provence (France) [42]. Its semi-ruinous state gives a clear insight into the constructional details of its fine ashlar masonry, as witnessed by this image taken with a Wild P32 during a photogrammetric survey.

As this image did not have any distortion, we used a polynomial distortion coming from another calibration and adapted it to the P32 film format (see Table 4). The initial values of the coefficients have been kept, and the distortion polynomial, expressed in millimeters, gives the compensation due at any point of the film format. The large eccentricity of the CoD is used in the image rectification: the CoD is positioned on the central fiducial mark visible on the images in Figure 6a,b.

After scanning the image (the film was scanned by Kodak and the result file is a 4860 × 3575 pixel image), we first measure the five fiducial marks in pixels on the scanned image and then compute an affine transformation to pass from the scanned image in pixels to the camera reference system in millimeters where the central cross is located at (0.0, 0.0). This is done according to a camera calibration provided by the vendor, which gives the coordinates of each fiducial mark in millimeters in the camera reference system.
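The pixel-to-millimeter step above amounts to fitting an affine transformation to the five fiducial marks. The following pure-Python least-squares sketch illustrates the idea. The millimeter coordinates are nominal values built from the layout described in the text (one mark at the PP, three at 37.5 mm, one at 17.5 mm), not the calibrated values of Table 5, and the pixel measurements are synthetic, generated from a hypothetical scale and center.

```python
def solve_linear(a, b):
    """Solve a small dense system by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_affine(pixels, millimetres):
    """Least-squares affine map: x = a*u + b*v + c, y = d*u + e*v + f.
    Pixel coordinates are centered first to keep the system well conditioned."""
    n = len(pixels)
    mu = sum(p[0] for p in pixels) / n
    mv = sum(p[1] for p in pixels) / n
    rows = [(u - mu, v - mv, 1.0) for u, v in pixels]
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
              for i in range(3)]
    params = []
    for axis in range(2):
        rhs = [sum(r[i] * mm[axis] for r, mm in zip(rows, millimetres))
               for i in range(3)]
        a, b, c = solve_linear(normal, rhs)
        params.append((a, b, c - a * mu - b * mv))
    return params


def apply_affine(params, pixel):
    """Map a pixel position to millimeters with the fitted parameters."""
    u, v = pixel
    return tuple(a * u + b * v + c for a, b, c in params)


# Nominal layout from the text: PP, left, right, top, bottom (in mm).
mm_marks = [(0.0, 0.0), (-37.5, 0.0), (37.5, 0.0), (0.0, 37.5), (0.0, -17.5)]

# Synthetic pixel measurements from a hypothetical scale and center.
SCALE_PX_PER_MM = 55.0
CENTER_PX = (2430.0, 2300.0)
pixel_marks = [(CENTER_PX[0] + SCALE_PX_PER_MM * x,
                CENTER_PX[1] - SCALE_PX_PER_MM * y) for x, y in mm_marks]

params = fit_affine(pixel_marks, mm_marks)
print(apply_affine(params, CENTER_PX))  # central cross, expected near (0, 0)
```

With real measurements the five marks over-determine the six affine parameters, so the least-squares residuals also give a check on the quality of the fiducial measurements.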

Passing from scanned pixels to the camera reference system in this way is called internal orientation in photogrammetry, and it is essential when using scanned images from film-based cameras. The results of these measurements are shown in Table 5, which gives the coordinates of the fiducial marks and highlights the high eccentricity of this camera built for architectural survey.

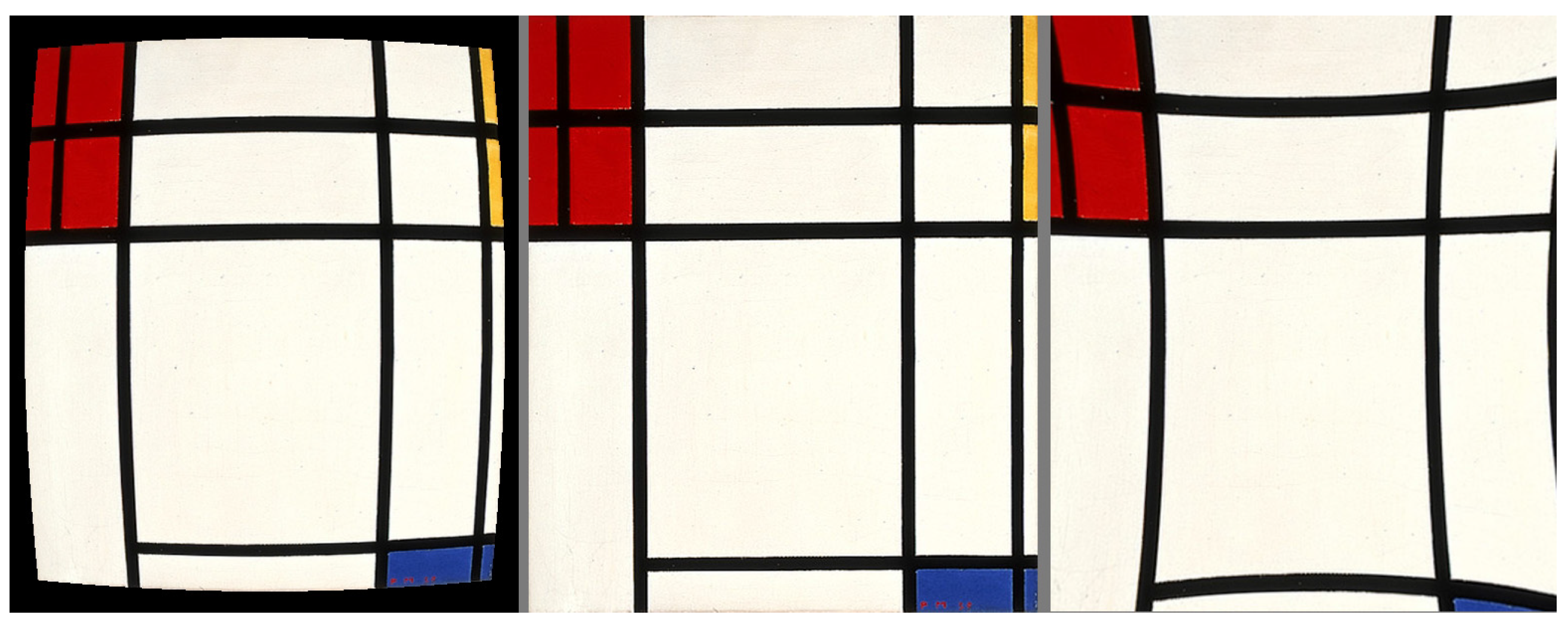

Figure 6a shows the original image taken in Aleyrac, while Figure 6b shows the result of the radial distortion inversion.

Figure 7 shows the original image in grey and the image computed after a double inversion of the radial distortion model in green.

No visible difference can be observed in the image. This is consistent with the results of the second experiment (see Figure 4).