Tools to Perform Local Dense 3D Reconstruction of Shallow Water Seabed

Abstract

1. Introduction

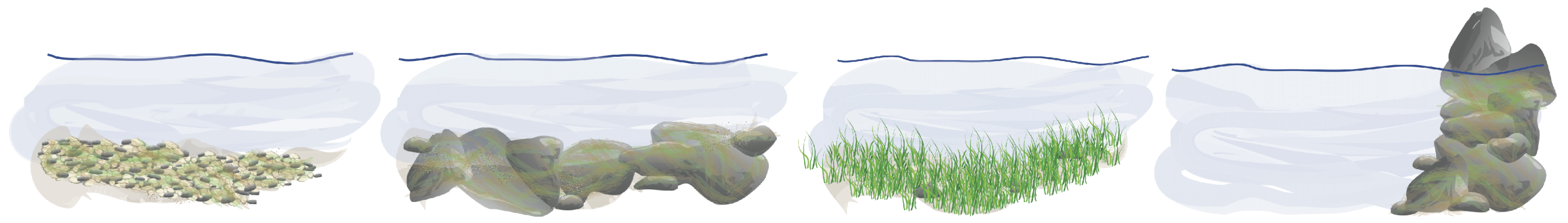

2. Mapping the Underwater Environment in Three Dimensions

2.1. Acquisition Systems for Optical Imagery: Mobile Sensors

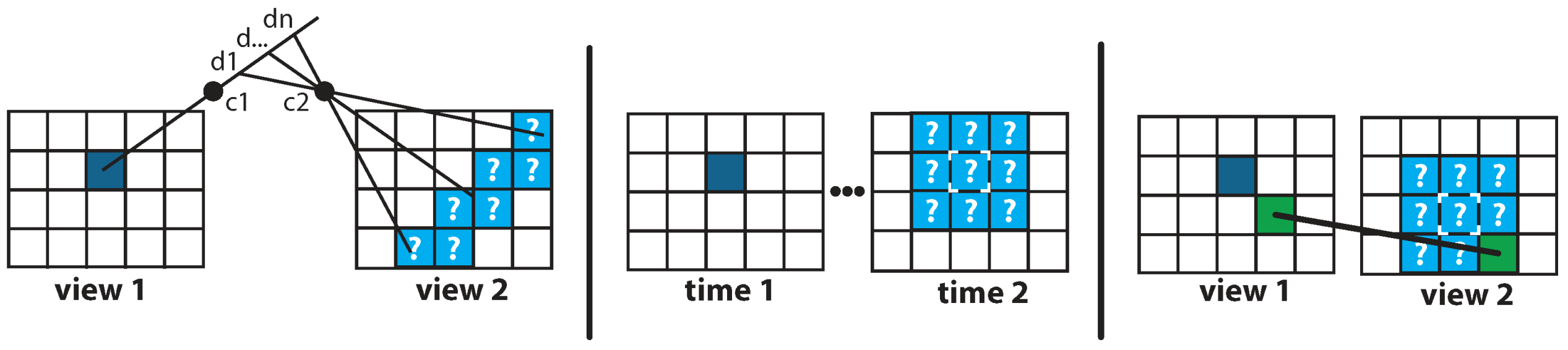

2.2. Processing Systems for Optical Imagery: Three-Dimensional Reconstruction Algorithms

- The spatial neighborhood criterion uses the proximity constraint: if two pixels are close to each other in one view, their corresponding pixels in the other view should also be close to each other [51].
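A minimal Python sketch of how the proximity constraint can be tested during match propagation is given below. It assumes that (p, p′) is an already accepted seed match and (q, q′) a candidate match. The function name `satisfies_proximity` and the parameters `radius` and `max_shift` are hypothetical, and their default values are illustrative rather than taken from this article. The constraint is expressed here as a bound on how much the displacement vector may change between neighboring pixels, a common formulation in propagation-based dense matching in the spirit of [51], not necessarily the exact test used in this pipeline.

```python
from typing import Tuple

Pixel = Tuple[int, int]  # (row, col) image coordinates


def satisfies_proximity(p: Pixel, p_match: Pixel,
                        q: Pixel, q_match: Pixel,
                        radius: int = 2, max_shift: int = 1) -> bool:
    """Hypothetical check of the proximity (spatial neighborhood) constraint.

    (p, p_match) is an accepted seed match and (q, q_match) a candidate match.
    q must lie in the (2*radius+1) x (2*radius+1) window around p, and the
    displacement q_match - q may differ from p_match - p by at most max_shift
    pixels along each axis (Chebyshev distance).
    """
    # q must be a spatial neighbor of p in the first view
    if max(abs(q[0] - p[0]), abs(q[1] - p[1])) > radius:
        return False
    # Displacement (2D disparity vector) of the seed and of the candidate
    d_seed = (p_match[0] - p[0], p_match[1] - p[1])
    d_cand = (q_match[0] - q[0], q_match[1] - q[1])
    # Neighboring pixels must keep nearly the same displacement, so their
    # correspondents also stay close to each other in the other view
    return max(abs(d_cand[0] - d_seed[0]),
               abs(d_cand[1] - d_seed[1])) <= max_shift
```

With the seed (100, 200) → (100, 180), the candidate (101, 201) → (101, 181) is accepted, while (101, 201) → (101, 150) is rejected because its correspondent lands far from the seed's correspondent.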

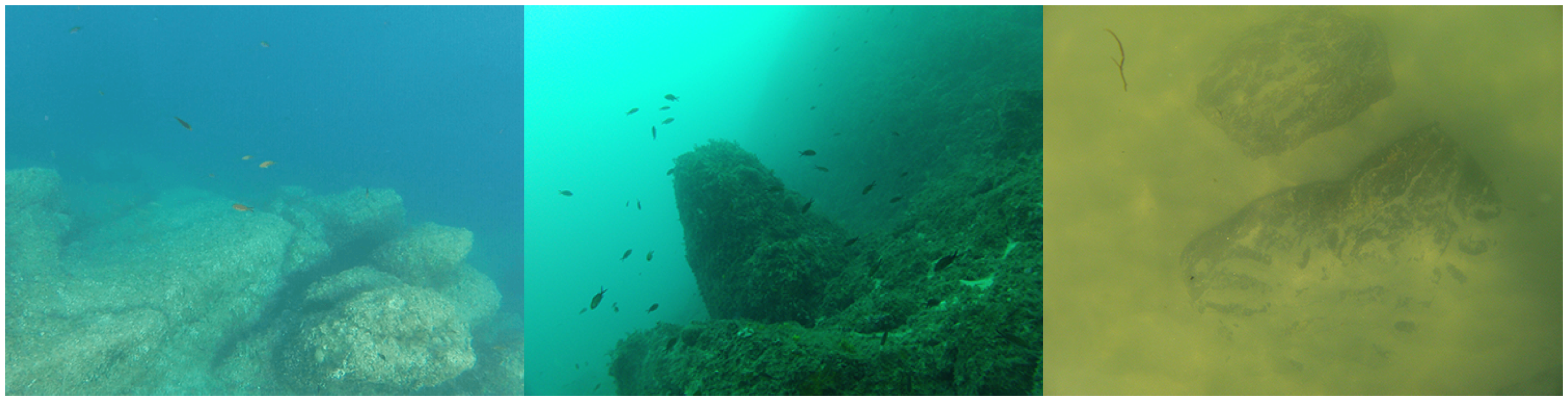

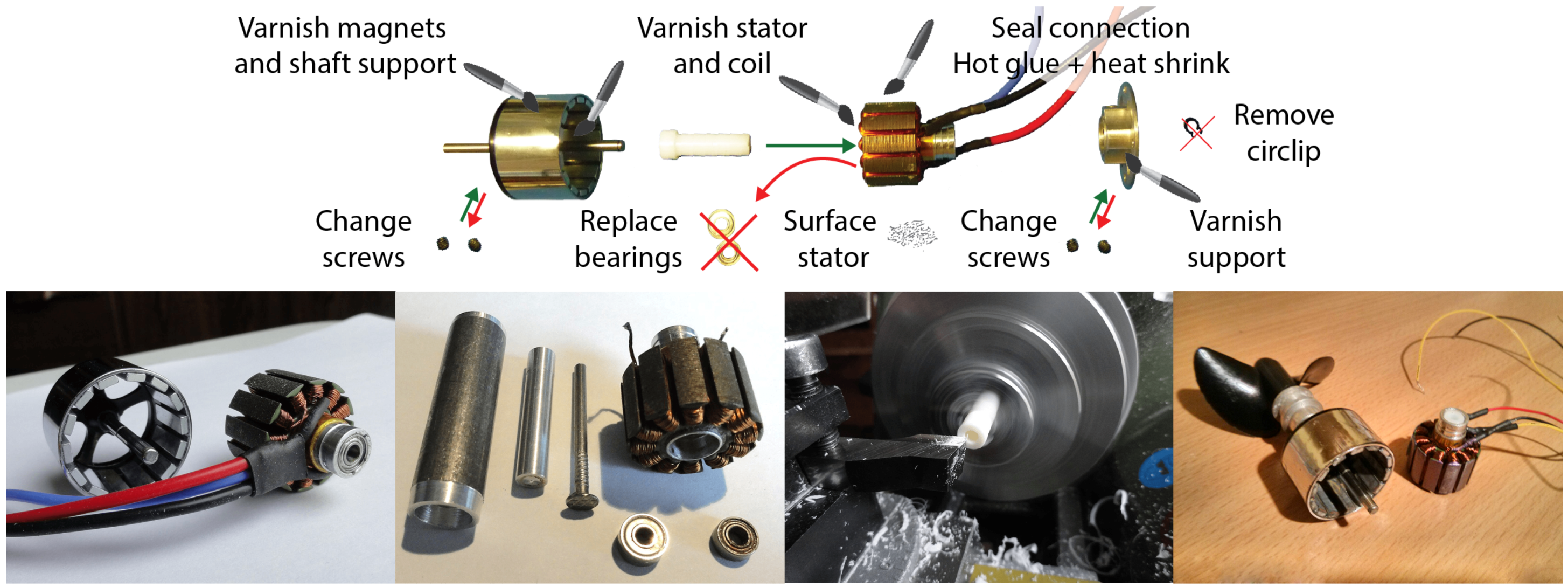

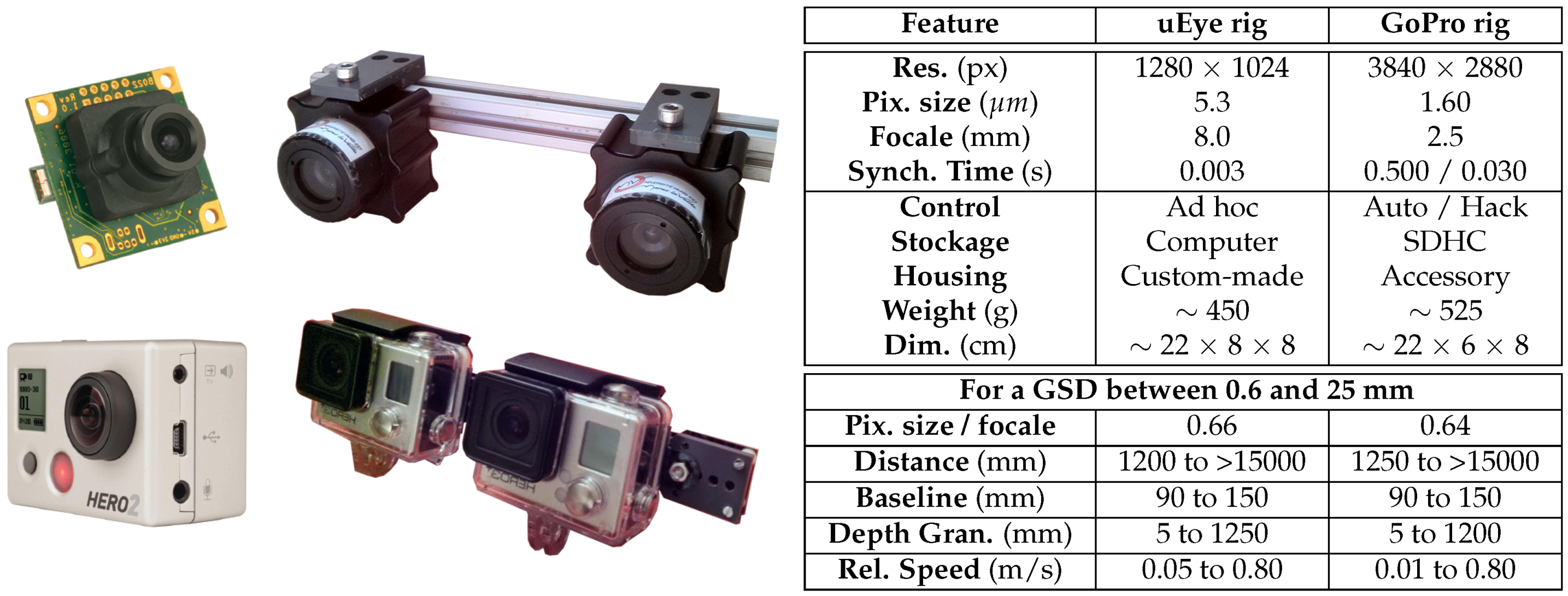

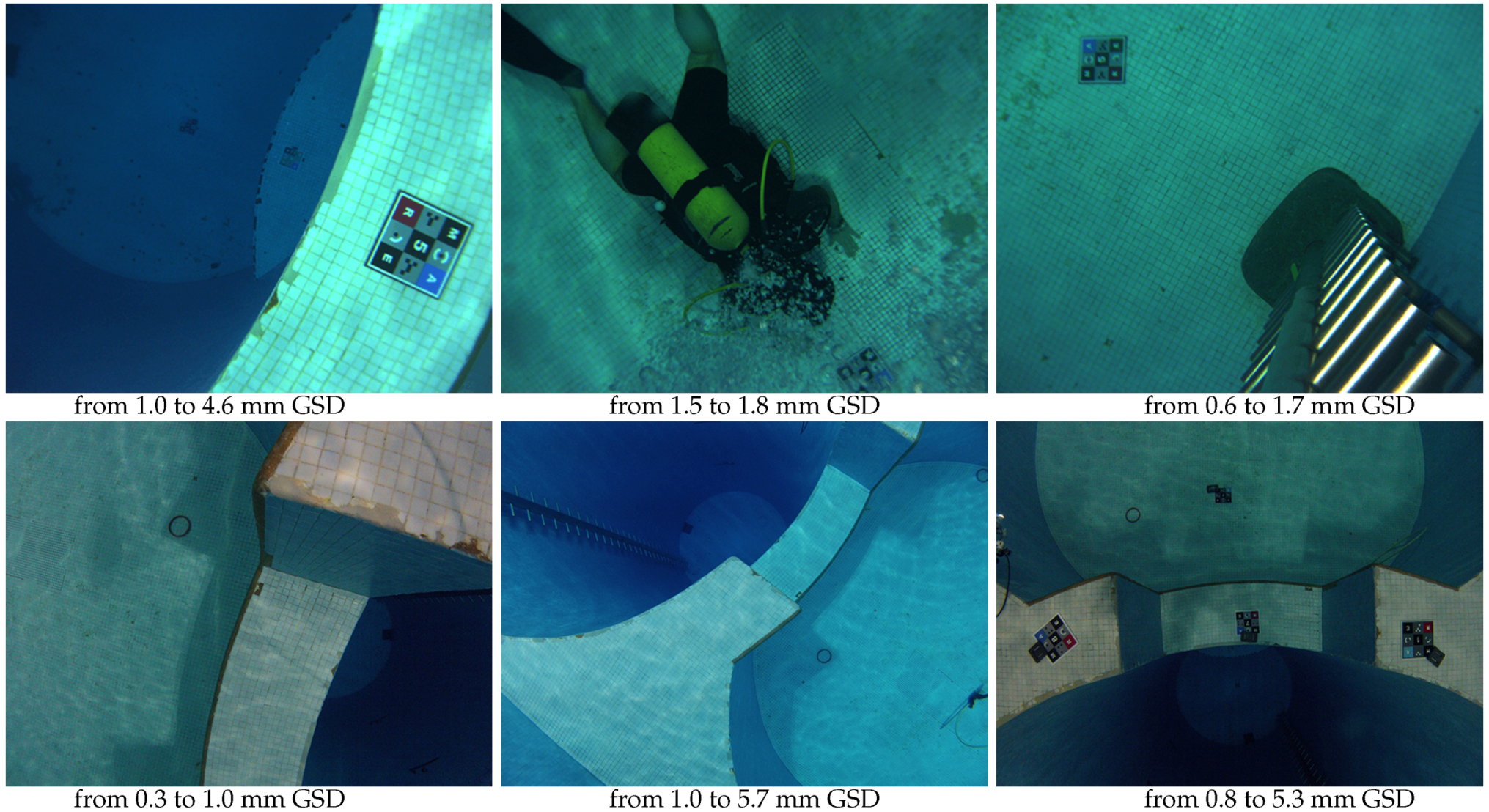

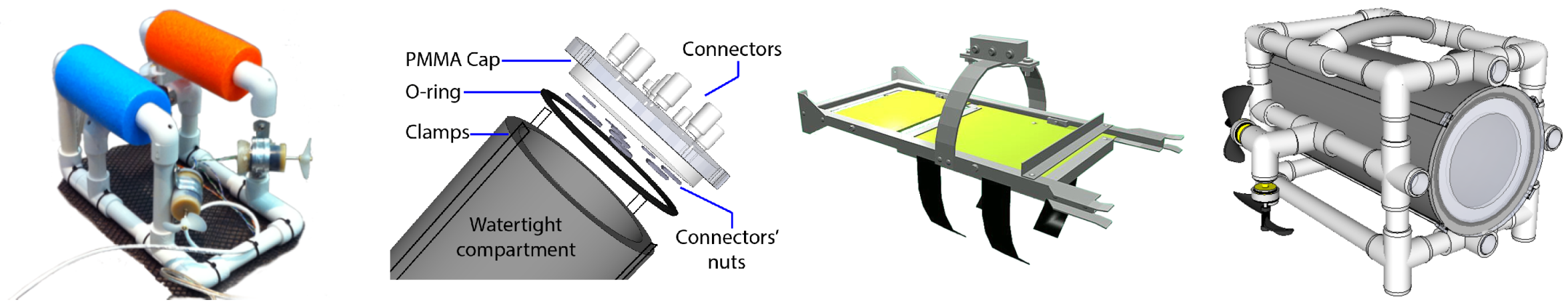

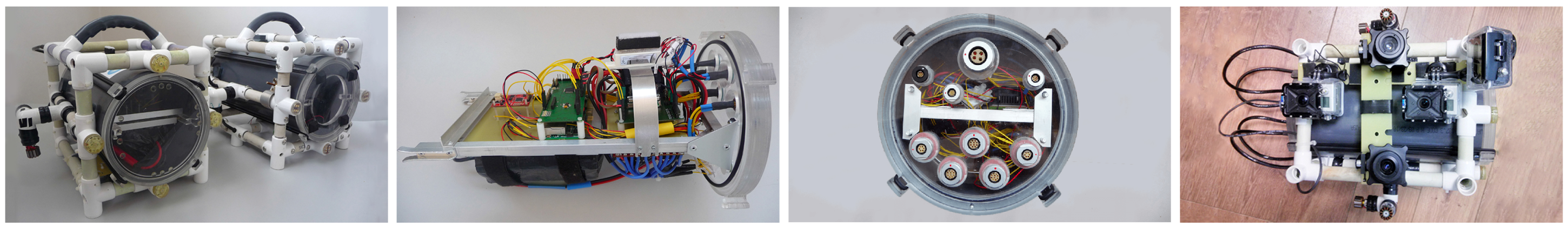

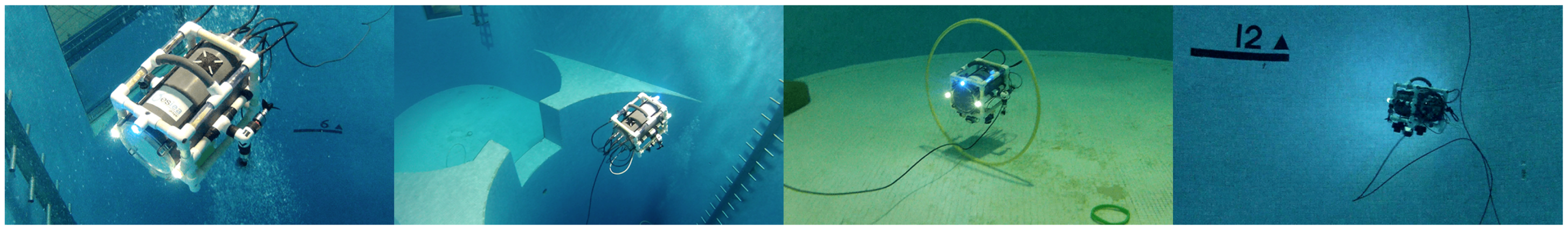

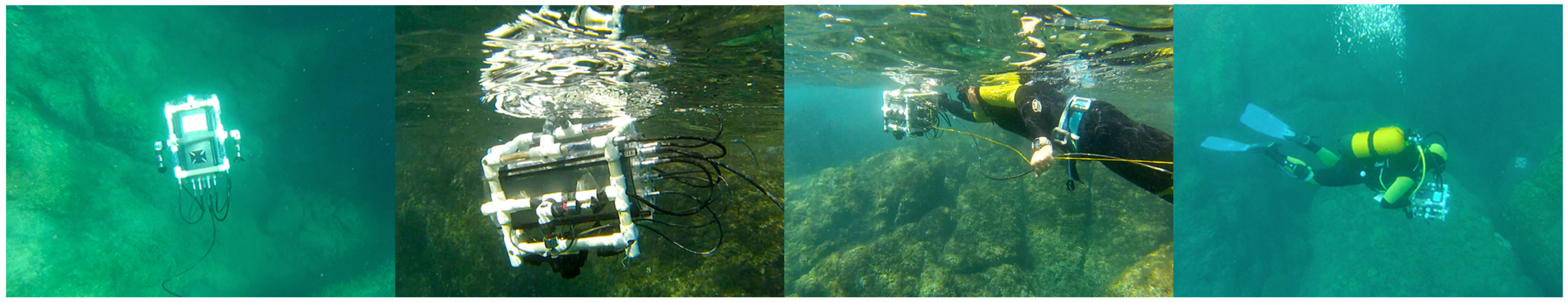

3. Sensor: Our Agile Micro-System Dedicated to Optical Acquisitions in Shallow Water

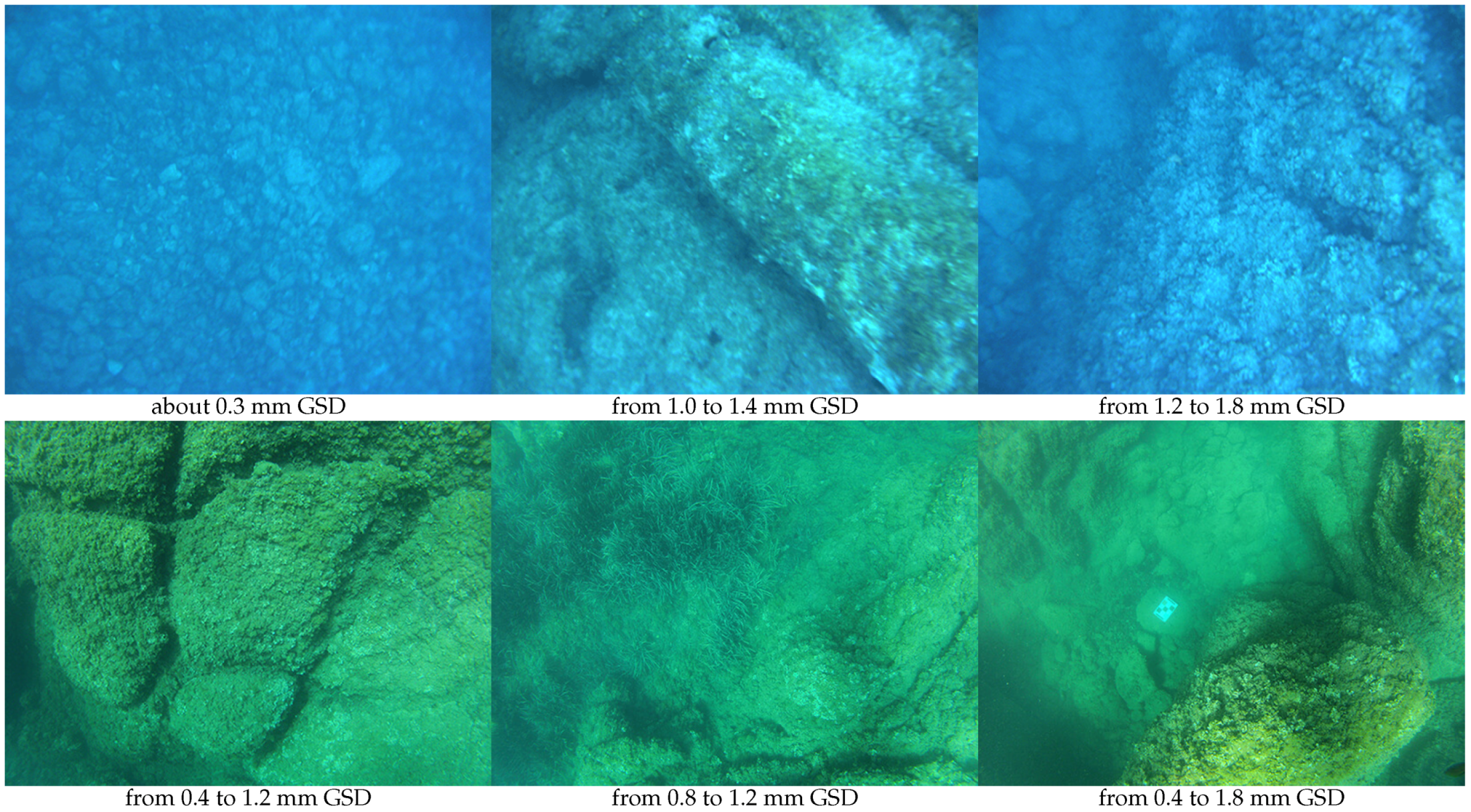

3.1. Designing the Key Parameters of the System to Map at the Scale of Individual Objects of Interest

3.2. A Micro-System to Perform on Demand Acquisition in Shallow Water Using Simple Logistics

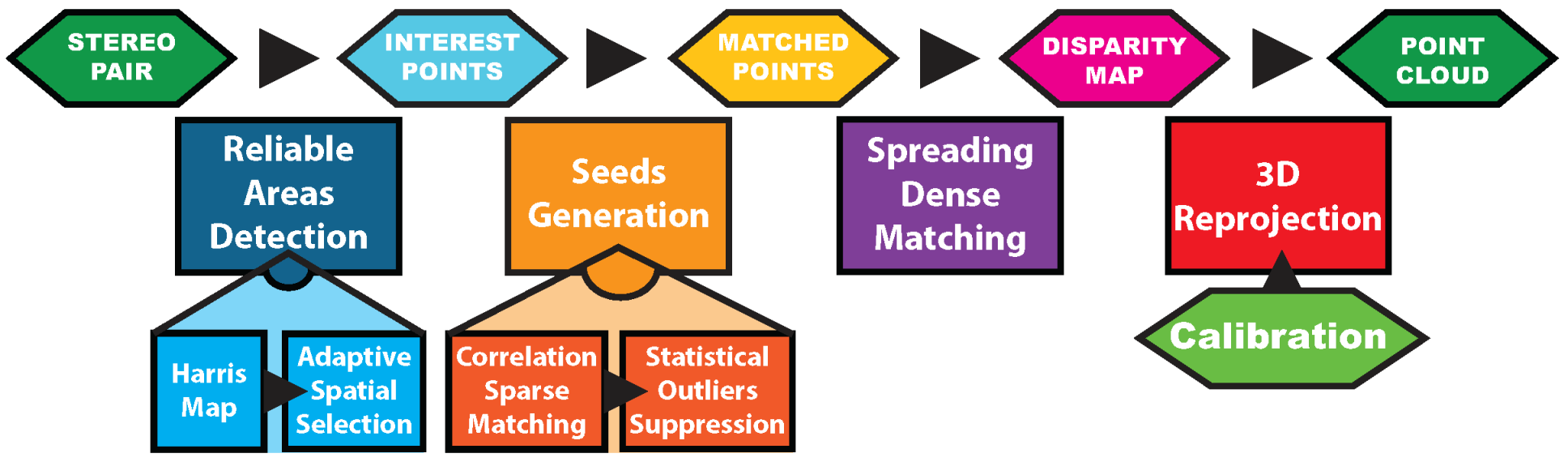

4. Post-Processing Pipeline: Retrieving the Three-Dimensional Information from Underwater Stereopairs

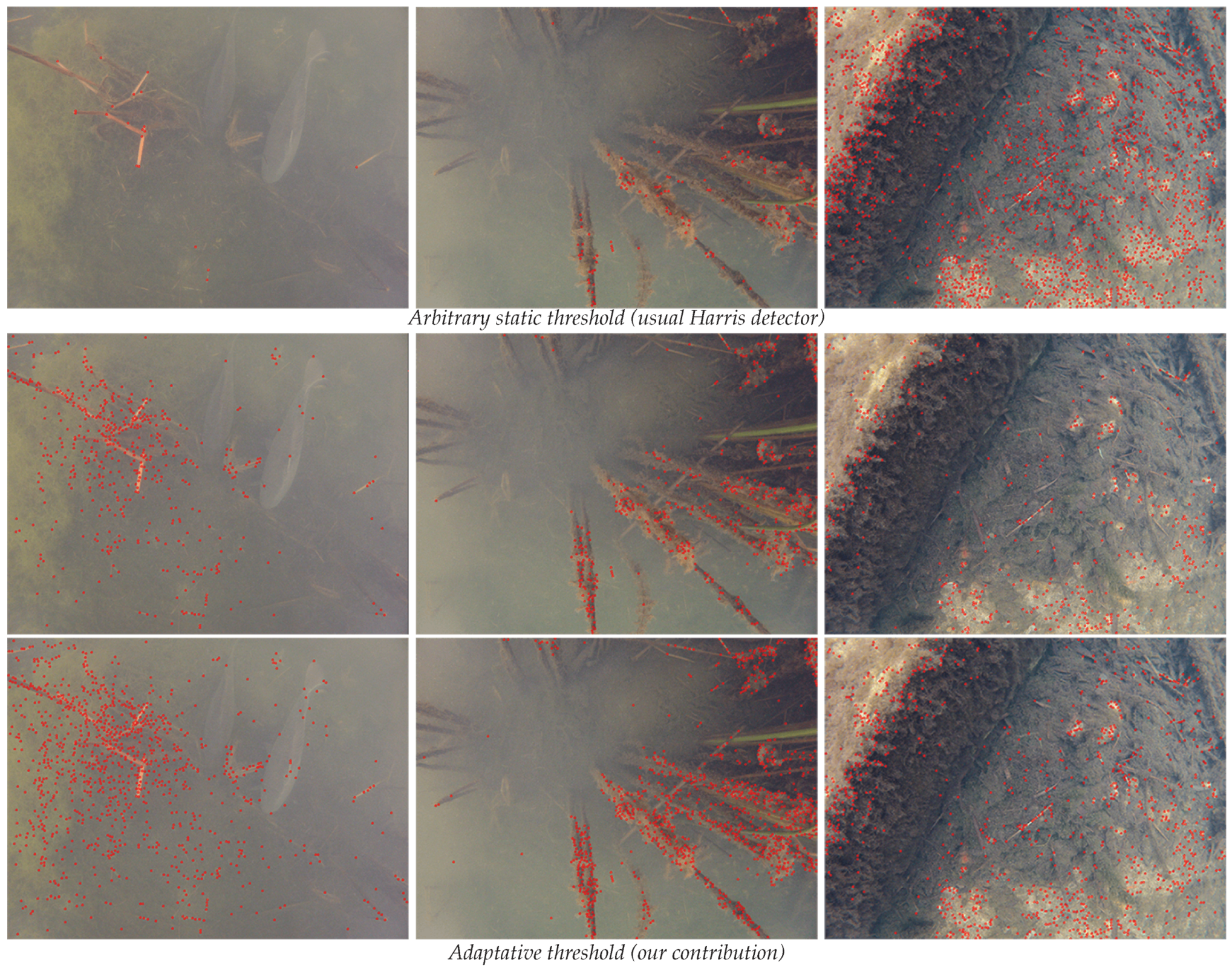

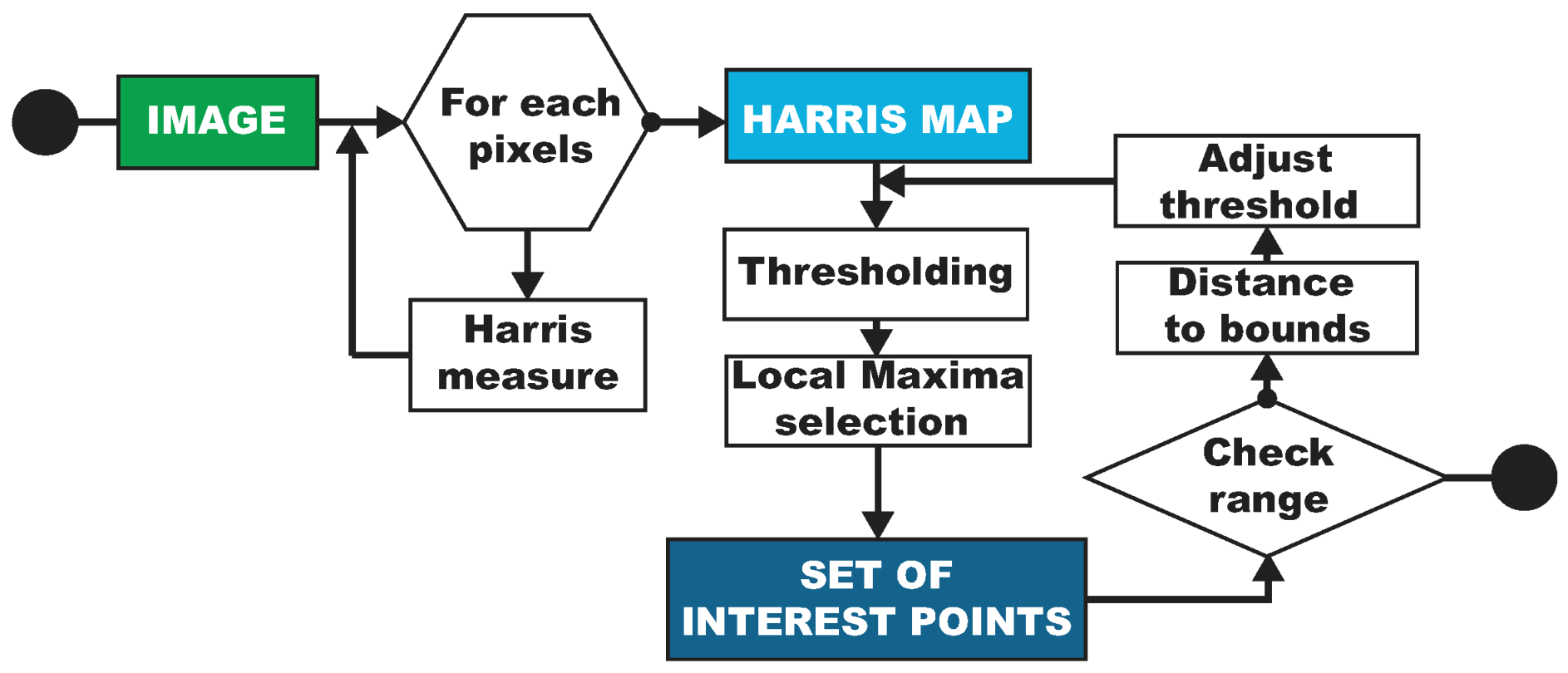

4.1. Robust Identification of the Most Textured Areas in Underwater Images

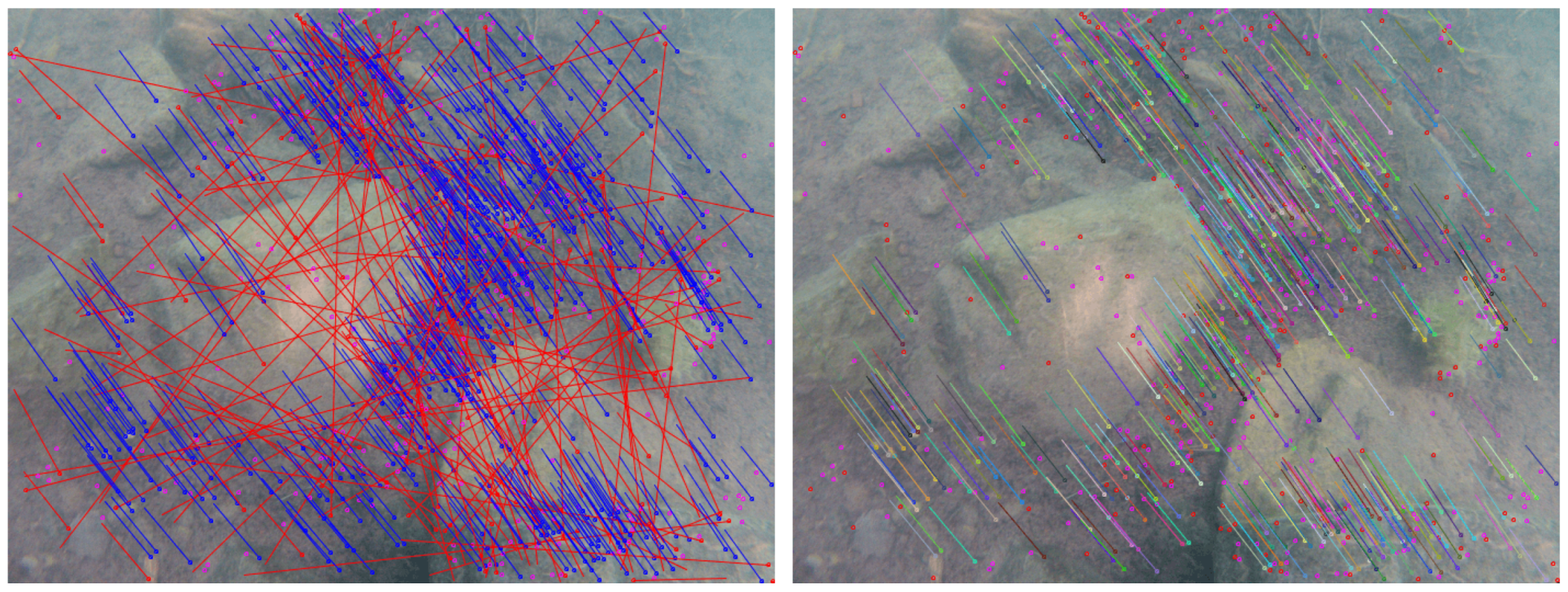

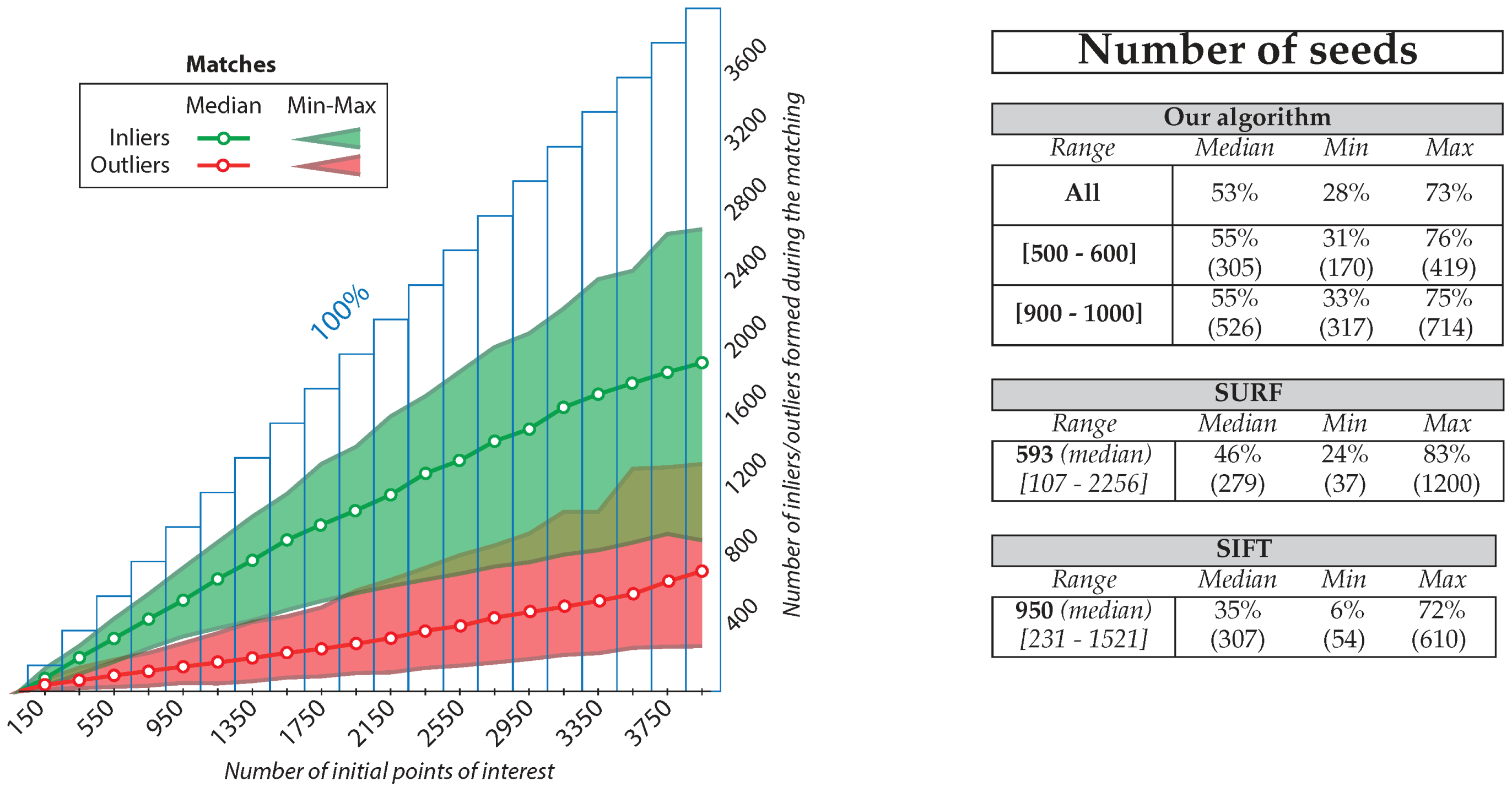

4.2. Creation of a Reliable Set of Seeds

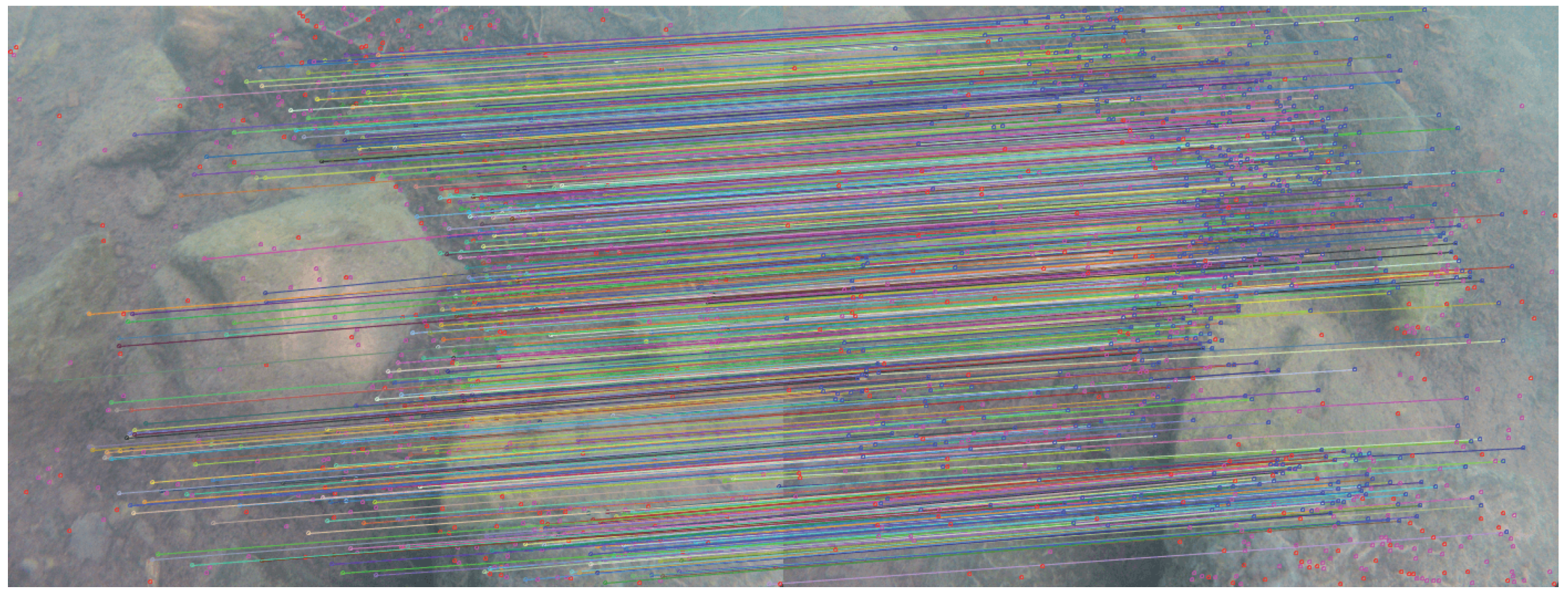

4.3. Spreading of the Matching and Automatic Exclusion of Areas without Information

4.4. Reprojection and Three-Dimensional Point Cloud

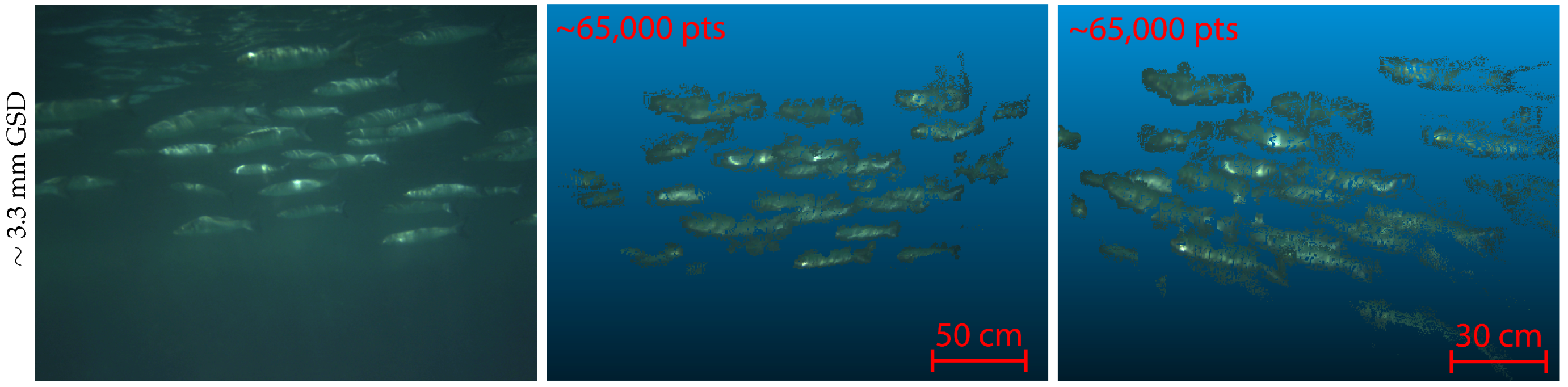

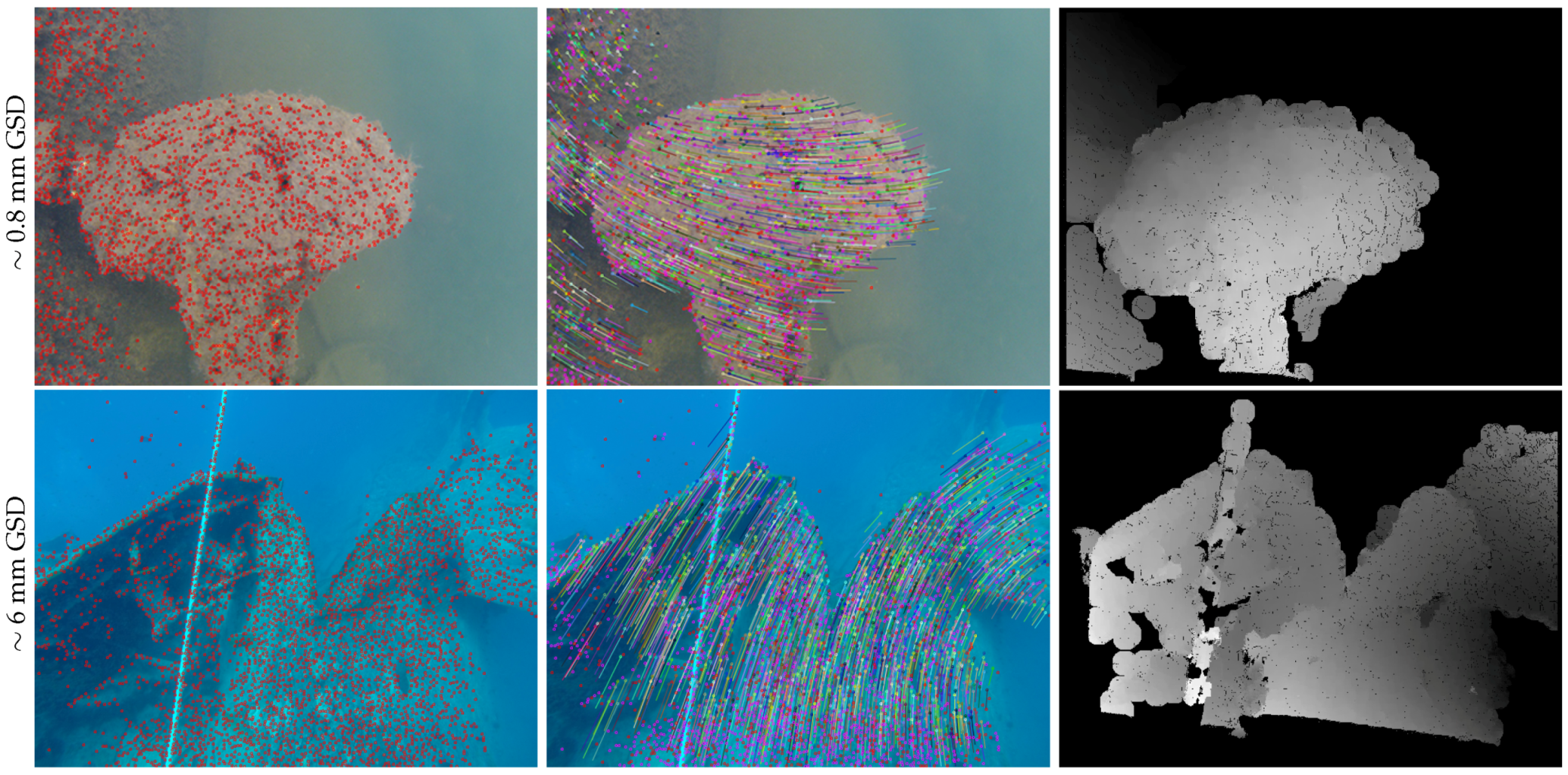

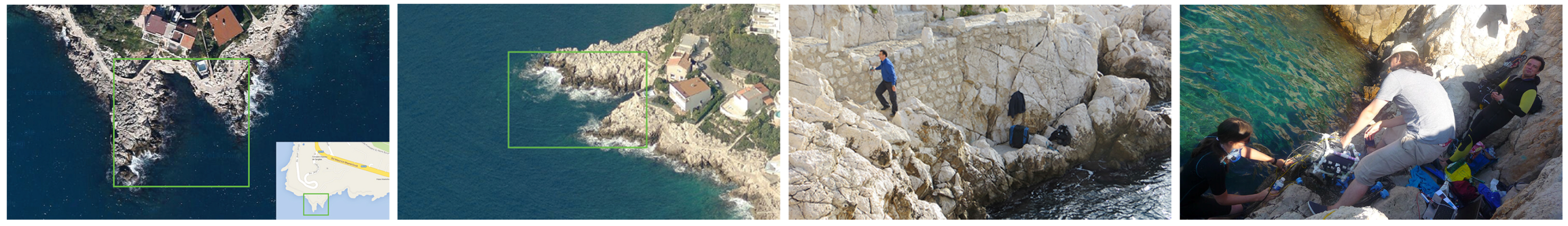

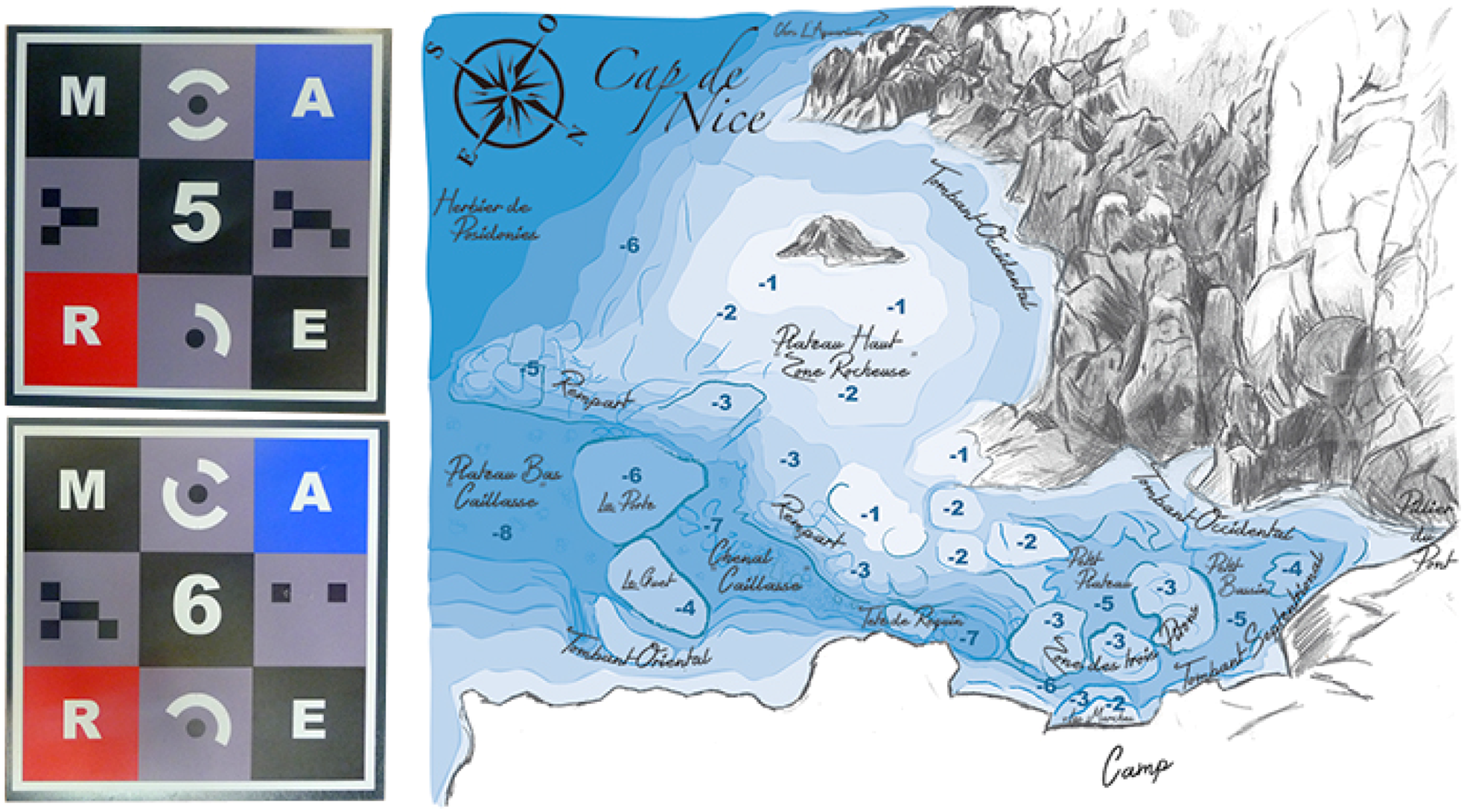

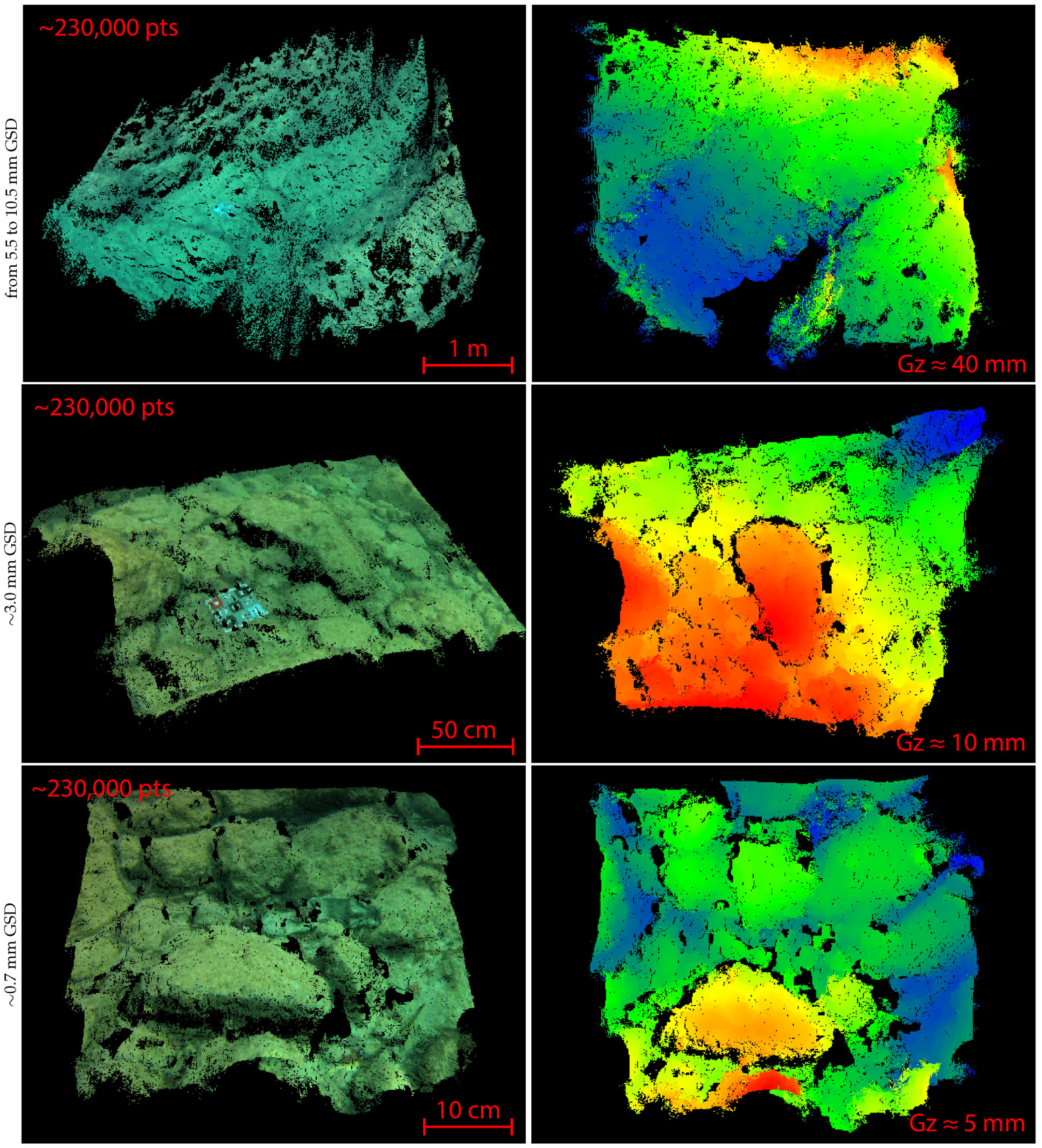

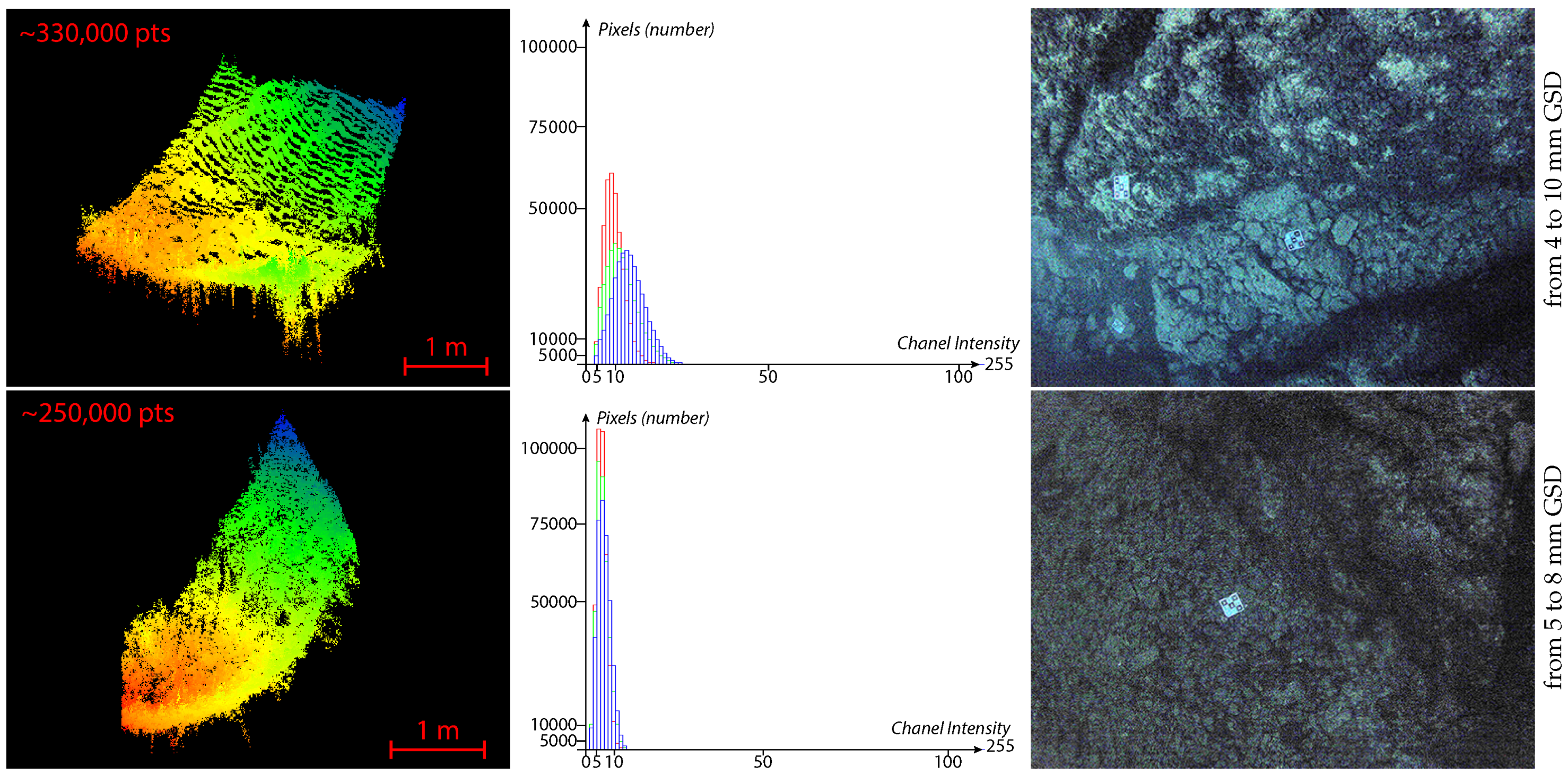

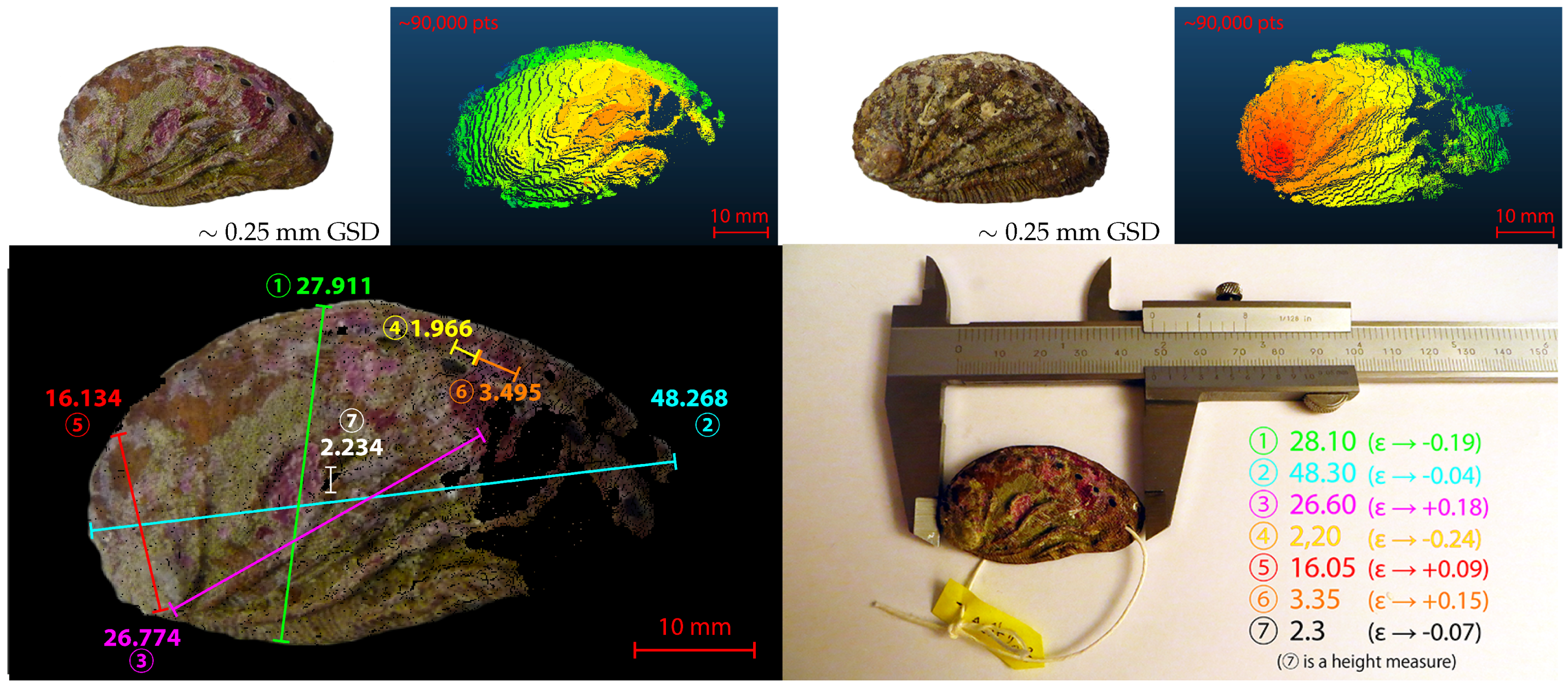

5. Results of Field Missions in the Mediterranean Sea and Analysis

5.1. Equipment of the Field with Landmark Patterns

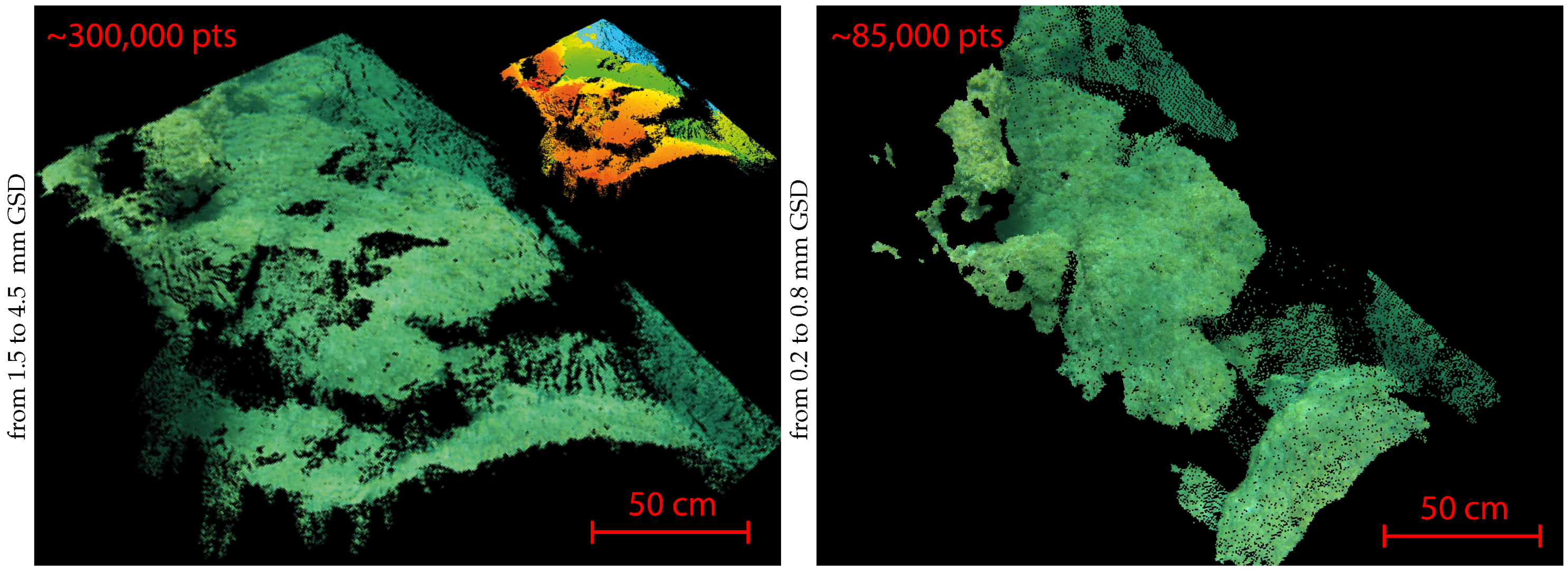

5.2. Analysis of the Obtained Results

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Corfield, S.; Hillenbrand, C. Defence Applications for Unmanned Underwater Vehicles. In Ocean Science and Technology: Technology and Applications of Autonomous Underwater Vehicles; Taylor & Francis: London, UK, 2003; Volume 2, pp. 161–178.

- Danson, E.F.S. AUV Tasks in the Offshore Industry. In Ocean Science and Technology: Technology and Applications of Autonomous Underwater Vehicles; Taylor & Francis: London, UK, 2003; Volume 2, pp. 127–138.

- Bianchi, C.N.; Ardizzone, G.D.; Belluscio, A.; Colantoni, P.; Diviacco, G.; Morri, C.; Tunesi, L. Benthic Cartography. Biol. Mar. Mediterr. 2004, 11, 347–370.

- Warren, D.J.; Church, R.A.; Eslinger, K.L.; Technologies, C. Deepwater Archaeology with Autonomous Underwater Vehicle Technology. In Proceedings of the Offshore Technology Conference (OTC), Houston, TX, USA, 30 April–3 May 2007; pp. 1329–1339.

- Payne, C.M. Principles of Naval Weapon Systems, Chapter 9—Principles of Underwater Sound, Section 3—Speed of Sound in the Sea; Naval Institute Press: Annapolis, MD, USA, 2006; p. 159.

- Chen, Y.Q.; Lee, Y.C. Geographical Data Acquisition, Chapter 8—Technique for Underwater Data Acquisition, Section 3—Soundings; Springer-Verlag: Vienna, Austria, 2012.

- Rives, C.; Pétron, C. La Prise de vue sous-marine; Filipacchi: Paris, France, 1978. (In French)

- Mullen, L.J.; Contarino, V.M.; Laux, A.; Concannon, B.M.; Davis, J.P.; Strand, M.P.; Coles, B.W. Modulated Laser Line Scanner for Enhanced Underwater Imaging. In Proceedings of the SPIE Airborne and In-Water Underwater Imaging, Denver, CO, USA, 18 July 1999; pp. 2–9.

- Moore, K.D.; Jaffe, J.S.; Ochoa, B.L. Development of a New Underwater Bathymetric Laser Imaging System: L-Bath. J. Atmos. Ocean. Technol. 2000, 17, 1106–1117.

- Dalgleish, F.R.; Caimi, F.M.; Britton, W.B.; Andren, C.F. An AUV-Deployable Pulsed Laser Line Scan (PLLS) Imaging Sensor. In Proceedings of the IEEE OCEANS Conference, Aberdeen, Scotland, 18–21 June 2007; pp. 1–5.

- Jaffe, J.S. Multi Autonomous Underwater Vehicle Optical Imaging for Extended Performance. In Proceedings of the IEEE OCEANS Conference, Aberdeen, Scotland, 18–21 June 2007; pp. 1–4.

- Roman, C.; Inglis, G.; Rutter, J. Application of Structured Light Imaging for High Resolution Mapping of Underwater Archaeological Sites. In Proceedings of the IEEE OCEANS Conference, Sydney, Australia, 24–27 May 2010; pp. 1–9.

- Gillham, J. Understanding the Impact of Water Clarity When Scanning with ULS-100, White Paper; 2G Robotics Inc.: Waterloo, ON, Canada, 2011.

- McLeod, D.; Jacobson, J.; Hardy, M.; Embry, C. Autonomous Inspection Using an Underwater 3D LiDAR. In Proceedings of the IEEE OCEANS, San Diego, CA, USA, 23–27 September 2013; pp. 1–8.

- Ballard, R.D.; McCann, A.M.; Yoerger, D.R.; Whitcomb, L.L.; Mindell, D.A.; Oleson, J.; Singh, H.; Foley, B.; Adams, J.; Piechota, D.; et al. The Discovery of Ancient History in the Deep Sea Using Advanced Deep Submergence Technology. Deep Sea Res. Part I Oceanogr. Res. Pap. 2000, 47, 1591–1620.

- Rende, S.F.; Irving, A.D.; Lagudi, A.; Bruno, F.; Scalise, S.; Cappa, P.; Montefalcone, M.; Bacci, T.; Penna, M.; Trabucco, B.; et al. Pilot Application of 3D Underwater Imaging Techniques for Mapping Posidonia Oceanica (L.) Delile Meadows. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 1, 177–181.

- Pizarro, O.; Eustice, R.; Singh, H. Large Area 3D Reconstructions from Underwater Surveys. In Proceedings of the IEEE OCEANS Conference, Kobe, Japan, 9–12 November 2004; Volume 2, pp. 678–687.

- Williams, S.B.; Mahon, I. Simultaneous Localisation and Mapping on the Great Barrier Reef. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), New Orleans, LA, USA, 26 April–1 May 2004; Volume 2, pp. 1771–1776.

- Dunbabin, M.; Roberts, J.; Usher, K.; Winstanley, G.; Corke, P. A Hybrid AUV Design for Shallow Water Reef Navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Barcelona, Spain, 18–22 April 2005; pp. 2105–2110.

- Ballard, R.D. Archaeological Oceanography. Oceanography 2007, 20, 62–67.

- Brandou, V.; Allais, A.G.; Perrier, M.; Malis, E.; Rives, P.; Sarrazin, J.; Sarradin, P.M. 3D Reconstruction of Natural Underwater Scenes Using the Stereovision System IRIS. In Proceedings of the IEEE OCEANS Conference, Aberdeen, Scotland, 18–21 June 2007; pp. 674–679.

- Lirman, D.; Gracias, N.R.; Gintert, B.E.; Gleason, A.C.R.; Reid, R.P.; Negahdaripour, S.; Kramer, P. Development and Application of a Video-Mosaic Survey Technology to Document the Status of Coral Reef Communities. Environ. Monit. Assess. 2007, 125, 59–73.

- Ludvigsen, M.; Sortland, B.; Johnsen, G.; Singh, H. Applications of geo-referenced underwater photo mosaics in marine biology and archaeology. Oceanography 2007, 20, 140–149.

- Botelho, S.S.D.C.; Drews, P.; Oliveira, G.L.; Figueiredo, M.D.S. Visual Odometry and Mapping for Underwater Autonomous Vehicles. In Proceedings of the IEEE Regional Latin American Robotics Symposium (LARS), Valparaiso, Chile, 29–30 October 2009; pp. 1–6.

- Foley, B.P.; Dellaporta, K.; Sakellariou, D.; Bingham, B.S.; Camilli, R.; Eustice, R.M.; Evagelistis, D.; Ferrini, V.L.; Katsaros, K.; Kourkoumelis, D.; et al. The 2005 Chios Ancient Shipwreck Survey: New Methods for Underwater Archaeology. Hesperia 2009, 78, 269–305.

- Sedlazeck, A.; Köser, K.; Koch, R. 3D Reconstruction Based on Underwater Video from ROV Kiel 6000 Considering Underwater Imaging Conditions. In Proceedings of the IEEE OCEANS Conference, Bremen, Germany, 11–14 May 2009; pp. 1–10.

- Johnson-Roberson, M.; Pizarro, O.; Williams, S.B.; Mahon, I. Generation and Visualization of Large-Scale Three-Dimensional Reconstructions from Underwater Robotic Surveys. J. Field Robot. 2010, 27, 21–51.

- Aulinas, J.; Carreras, M.; Llado, X.; Salvi, J.; Garcia, R.; Prados, R.; Petillot, Y.R. Feature Extraction for Underwater Visual SLAM. In Proceedings of the IEEE OCEANS Conference, Santander, Spain, 6–9 June 2011; pp. 1–7.

- Shkurti, F.; Rekleitis, I.; Dudek, G. Feature Tracking Evaluation for Pose Estimation in Underwater Environments. In Proceedings of the Canadian Conference on Computer and Robot Vision (CRV), St. John's, NL, Canada, 25–27 May 2011; pp. 160–167.

- Fillinger, L.; Funke, T. A New 3-D Modelling Method to Extract Subtransect Dimensions from Underwater Videos. Ocean Sci. 2013, 9, 461–476.

- Gracias, N.; Ridao, P.; Garcia, R.; Escartín, J.; L’Hour, M.; Cibecchini, F.; Campos, R.; Carreras, M.; Ribas, D.; Palomeras, N.; et al. Mapping the Moon: Using a Lightweight AUV to Survey the Site of the 17th Century Ship “La Lune”. In Proceedings of the IEEE OCEANS Conference, Bergen, Norway, 10–14 June 2013; pp. 1–8.

- Nelson, E.A.; Dunn, I.T.; Forrester, J.; Gambin, T.; Clark, C.M.; Wood, Z.J. Surface Reconstruction of Ancient Water Storage Systems—An Approach for Sparse 3D Sonar Scans and Fused Stereo Images. In Proceedings of the International Conference on Computer Graphics Theory and Applications (GRAPP), Lisbon, Portugal, 5–8 January 2014; pp. 161–168.

- Christ, R.D.; Wernli, R.L. The ROV Manual: A User Guide For Observation Class Remotely Operated Vehicles; Elsevier Science: Burlington, MA, USA, 2008.

- Nicosevici, T.; Garcia, R. Online Robust 3D Mapping Using Structure from Motion Cues. In Proceedings of the IEEE OCEANS Conference, Kobe, Japan, 8–11 April 2008; pp. 1–7.

- Méline, A.; Triboulet, J.; Jouvencel, B. A Camcorder for 3D Underwater Reconstruction of Archeological Objects. In Proceedings of the IEEE OCEANS Conference, Seattle, WA, USA, 20–23 September 2010; pp. 1–9.

- Mahon, I.; Pizarro, O.; Johnson-Roberson, M.; Friedman, A.; Williams, S.B.; Henderson, J.C. Reconstructing Pavlopetri: Mapping the World’s Oldest Submerged Town Using Stereo-Vision. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 2315–2321.

- Drap, P. Underwater Photogrammetry for Archaeology. In Special Applications of Photogrammetry; InTech: Rijeka, Croatia, 2012; pp. 111–136.

- Skarlatos, D.; Demestiha, S.; Kiparissi, S. An “open” method for 3D modelling and mapping in underwater archaeological sites. Int. J. Herit. Digit. Era 2012, 1, 1–24.

- Balletti, C.; Beltrame, C.; Costa, E.; Guerra, F.; Vernier, P. Underwater Photogrammetry and 3D Reconstruction of Marble Cargos Shipwreck. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W5, 7–13.

- Diamanti, E.; Vlachaki, F. 3D Recording of Underwater Antiquities in the South Euboean Gulf. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 1, 93–98.

- Van Damme, T. Computer Vision Photogrammetry for Underwater Archaeological Site Recording in a Low-Visibility Environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 1, 231–238.

- Jasiobedzki, P.; Se, S.; Bondy, M.; Jakola, R. Underwater 3D Mapping and Pose Estimation for ROV Operations. In Proceedings of the IEEE OCEANS Conference, Quebec, QC, Canada, 15–18 September 2008; pp. 1–6.

- Petillot, Y.; Salvi, J.; Batlle, E. 3D Large-Scale Seabed Reconstruction for UUV Simultaneous Localization and Mapping. In Proceedings of the IFAC Workshop on Navigation, Guidance and Control of Underwater Vehicles (NGCUV), Killaloe, Ireland, 8–10 April 2008.

- Williams, S.B.; Pizarro, O.R.; Johnson-Roberson, M.; Mahon, I.; Webster, J.; Beaman, R.; Bridge, T. AUV-Assisted Surveying of Relic Reef Sites. In Proceedings of the IEEE OCEANS Conference, Quebec City, QC, Canada, 15–18 September 2008; pp. 1–7.

- Beall, C.; Lawrence, B.J.; Ila, V.; Dellaert, F. 3D Reconstruction of Underwater Structures. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 4418–4423.

- Méline, A.; Triboulet, J.; Jouvencel, B. Comparative Study of Two 3D Reconstruction Methods for Underwater Archaeology. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012; pp. 740–745.

- Faugeras, O. Three-Dimensional Computer Vision: A Geometric Viewpoint; MIT Press: Cambridge, MA, USA, 1993.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Forsyth, D.A.; Ponce, J. Computer Vision: A Modern Approach; Prentice Hall: Upper Saddle River, NJ, USA, 2003.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer-Verlag: London, UK, 2010.

- Lhuillier, M.; Quan, L. Robust Dense Matching Using Local and Global Geometric Constraints. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Barcelona, Spain, 3–7 September 2000; Volume 1, pp. 968–972.

- Jenkin, M.; Verzijlenberg, B.; Hogue, A. Progress Towards Underwater 3D Scene Recovery. In Proceedings of the ACM C* Conference on Computer Science and Software Engineering (C3S2E), Montréal, QC, Canada, 19–21 May 2010; pp. 123–128.

- Kunz, C.; Singh, H. Stereo Self-Calibration for Seafloor Mapping Using AUVs. In Proceedings of the IEEE Autonomous Underwater Vehicles (AUV), Monterey, CA, USA, 1–3 September 2010; pp. 1–7.

- Prabhakar, C.J.; Kumar, P.U.P. 3D Surface Reconstruction of Underwater Objects. In Proceedings of the National Conference on Advanced Computing and Communications (NCACC), Karnataka, India, 27–28 April 2012; pp. 31–37.

- Schmidt, V.E.; Rzhanov, Y. Measurement of Micro-Bathymetry with a GOPRO Underwater Stereo Camera Pair. In Proceedings of the IEEE OCEANS Conference, Hampton Roads, VA, USA, 14–19 October 2012; pp. 1–6.

- Servos, J.; Smart, M.; Waslander, S.L. Underwater Stereo SLAM with Refraction Correction. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 3350–3355.

- Pierrot-Deseilligny, M. MicMac, Apero, Pastis and Other Beverages in a Nutshell. Manual, Institut national de l’information géographique et forestière (IGN), France, 2015. Available online: http://logiciels.ign.fr/IMG/pdf/docmicmac-2.pdf (accessed on 16 May 2016).

- Tang, L.; Wu, C.; Chen, Z. Image Dense Matching Based on Region Growth with Adaptive Window. Pattern Recognit. Lett. 2002, 23, 1169–1178.

- Kannala, J.; Brandt, S.S. Quasi-Dense Wide Baseline Matching Using Match Propagation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Strecha, C.; Tuytelaars, T.; van Gool, L. Dense Matching of Multiple Wide-Baseline Views. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Nice, France, 13–16 October 2003; pp. 1194–1201.

- Bianco, G.; Gallo, A.; Bruno, F.; Muzzupappa, M. A Comparison Between Active and Passive Techniques for Underwater 3D Applications. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, XXXVIII-5/W16, 357–363.

- O’Byrne, M.; Pakrashi, V.; Schoefs, F.; Ghosh, B. A Comparison of Image Based 3D Recovery Methods for Underwater Inspections. In Proceedings of the European Workshop on Structural Health Monitoring (EWSHM), Nantes, France, 8–11 July 2014.

- Furukawa, Y.; Ponce, J. Accurate, Dense, and Robust Multi-View Stereopsis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Hu, H.; Rzhanov, Y.; Boyer, T. A Robust Quasi-Dense Matching Approach for Underwater Images. In Proceedings of the American Society for Photogrammetry and Remote Sensing (ASPRS) Annual Conference, Louisville, KY, USA, 23–28 March 2014.

- Drap, P.; Merad, D.; Hijazi, B.; Gaoua, L.; Nawaf, M.M.; Saccone, M.; Chemisky, B.; Seinturier, J.; Sourisseau, J.C.; Gambin, T.; et al. Underwater Photogrammetry and Object Modeling: A Case Study of Xlendi Wreck in Malta. Sensors 2015, 15, 30351–30384.

- Kramm, S. Production de cartes éparses de profondeur avec un système de stéréovision embarqué non-aligné. PhD Thesis, Université de Rouen, Rouen, France, 2008.

- Hogue, A.; German, A.; Zacher, J.; Jenkin, M. Underwater 3D Mapping: Experiences and Lessons Learned. In Proceedings of the IEEE Canadian Conference on Computer and Robot Vision (CRV), Quebec City, QC, Canada, 7–9 June 2006; p. 24.

- Singh, H.; Roman, C.; Pizarro, O.; Eustice, R.; Can, A. Towards High-Resolution Imaging from Underwater Vehicles. Int. J. Robot. Res. 2007, 26, 55–74.

- Gademer, A.; Beaudoin, L.; Avanthey, L.; Rudant, J.P. Application of the Extended Ground Truth Concept for Risk Anticipation Concerning Ecosystems. Radio Sci. Bull. 2013, 345, 35–50.

- Johnson, J. Analysis of Image Forming Systems. In Proceedings of the Image Intensifier Symposium, Fort Belvoir, VA, USA, 6–7 October 1958; p. 249.

- Comer, R.; Kinn, G.; Light, D.; Mondello, C. Talking Digital. Photogramm. Eng. Remote Sens. 1998, 64, 1139–1142.

- Cai, Y. How Many Pixels do we Need to See Things? In Proceedings of the International Conference on Computational Science (ICCS), Melbourne, Australia, 2–4 June 2003; Volume 3, pp. 1064–1073.

- Torralba, A. How Many Pixels Make an Image? Vis. Neurosci. 2009, 26, 123–131.

- Krig, S. Computer Vision Metrics: Survey, Taxonomy, and Analysis; Apress: New York, NY, USA, 2014.

- McGlone, C.J.; Mikhail, E.M.; Bethel, J.S.; Mullen, R. Manual of Photogrammetry, 5th ed.; American Society for Photogrammetry and Remote Sensing (ASPRS): Bethesda, MD, USA, 2004.

- Linder, W. Digital Photogrammetry: A Practical Course; Springer-Verlag: Berlin, Germany, 2009.

- Harvey, E.S.; Cappo, M.; Shortis, M.; Robson, S.; Buchanan, J.; Speare, P. The Accuracy and Precision of Underwater Measurements of Length and Maximum Body Depth of Southern Bluefin Tuna (Thunnus Maccoyii) with a Stereo-Video Camera System. Fish. Res. 2003, 63, 315–326.

- Birt, M.J.; Harvey, E.S.; Langlois, T.J. Within and Between Day Variability in Temperate Reef Fish Assemblages: Learned Response to Baited Video. J. Exp. Mar. Biol. Ecol. 2012, 416, 92–100.

- Seiler, J.; Williams, A.; Barrett, N. Assessing Size, Abundance and Habitat Preferences of the Ocean Perch Helicolenus Percoides Using a AUV-Borne Stereo Camera System. Fish. Res. 2012, 129, 64–72.

- Santana-Garcon, J.; Newman, S.J.; Harvey, E.S. Development and validation of a mid-water baited stereo-video technique for investigating pelagic fish assemblages. J. Exp. Mar. Biol. Ecol. 2014, 452, 82–90.

- Shortis, M. Calibration Techniques for Accurate Measurements by Underwater Camera Systems. Sensors 2015, 15, 30810–30826.

- Gademer, A.; Petitpas, B.; Mobaied, S.; Beaudoin, L.; Riera, B.; Roux, M.; Rudant, J.P. Developing a Lowcost Vertical Take Off and Landing Unmanned Aerial System for Centimetric Monitoring of Biodiversity the Fontainebleau Forest Case. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Honolulu, HI, USA, 25–30 July 2010; pp. 600–603.

- Gademer, A.; Vittori, V.; Beaudoin, L. From Light to Ultralight UAV. In Proceedings of the International Conference on Unmanned Aircraft System (ICUAS)—Eurosatory, Paris, France, 14–18 June 2010.

- Avanthey, L.; Vittori, V.; Germain, V.; Barbier, A.; Terisse, R. Ryujin AUV. In International Student Autonomous Underwater Vehicle Challenge—Europe (SAUC-E), La Spezia, Italy, 5–14 July 2011.

- Sanfourche, M.; Vittori, V.; le Besnerais, G. eVO: A Realtime Embedded Stereo Odometry for MAV Applications. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 2107–2114.

- Walters, P.; Sauder, N.; Thompson, M.; Voight, F.; Gray, A.; Schwarz, E.M. SubjuGator 2013. In Proceedings of the International RoboSub Competition AUVSI/ONR, San Diego, CA, USA, 22–28 July 2013.

- Gintert, B.; Gleason, A.C.R.; Cantwell, K.; Gracias, N.; Gonzalez, M.; Reid, R.P. Third-Generation Underwater Landscape Mosaics for Coral Reef Mapping and Monitoring. In Proceedings of the 12th International Coral Reef Symposium (ICRS), Cairns, Australia, 9–13 July 2012.

- Bohm, H.; Jensen, V. Build Your Own Underwater Robot and Other Wet Projects; Westcoast Words: Vancouver, BC, Canada, 1997.

- ONR, A. Seaperch Construction Manual. K-12 Educational Outreach Program, AUVSI Foundation and ONR (Office of Naval Research). Available online: http://www.seaperch.org/build (accessed on 16 May 2016).

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Corfu, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Bay, H.; Tuytelaars, T.; van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 404–417.

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 147–152.

- Minorsky, N. Directional Stability of Automatically Steered Bodies. J. Am. Soc. Nav. Eng. 1922, 42, 280–309.

- Rey Otero, I.; Delbracio, M. Anatomy of the SIFT Method. Image Process. Line 2014, 4, 370–396.

- Gracias, N.; Santos-Victor, J. Underwater Mosaicing and Trajectory Reconstruction Using Global Alignment. In Proceedings of the MTS/IEEE OCEANS Conference and Exhibition, Honolulu, HI, USA, 5–8 November 2001; Volume 4, pp. 2557–2563.

- Drap, P.; Seinturier, J.; Scaradozzi, D.; Gambogi, P.; Long, L.; Gauch, F. Photogrammetry for Virtual Exploration of Underwater Archeological Sites. In Proceedings of the CIPA International Symposium, Athens, Greece, 1–6 October 2007.

- Corke, P. Robotics, Vision and Control: Fundamental Algorithms in MATLAB; Springer-Verlag: Berlin, Germany, 2011; Volume 73.

- Bowens, A. (Ed.) Underwater Archaeology: The NAS Guide to Principles and Practice; John Wiley & Sons: Oxford, UK, 2011.

- Matthews, N.A. Aerial and Close-Range Photogrammetric Technology: Providing Resource Documentation, Interpretation, and Preservation; Technical Report 428; U.S. Department of the Interior, Bureau of Land Management, National Operations Center: Denver, CO, USA, 2008.

- Skarlatos, D.; Rova, M. Photogrammetric Approaches for the Archaeological Mapping of the Mazotos Shipwreck. In Proceedings of the International Conference on Science and Technology in Archaeology and Conservation, Petra, Jordan, 7–12 December 2010.

- Holt, P. An Assessment of Quality in Underwater Archaeological Surveys Using Tape Measurements. Int. J. Naut. Archaeol. 2003, 32, 246–251.

| Ground Sample Distance | 0.6 mm (Fine Analyses) | 25 mm (Coarse Analyses) |
|---|---|---|
| Maximum distance of observation | 500 to 4000 mm | 10,000 to >15,000 mm |
| Baseline (for an 80% overlap) | 50 to 300 mm | 1000 to >2000 mm |
| Depth granularity | 1 to 50 mm | 125 to 250 mm |
| Maximum relative speed | 100 mm/s | 2500 mm/s |
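Under the usual pinhole model for a rectified stereo pair, the depth step corresponding to a one-pixel change of disparity is ΔZ ≈ Z²/(f·B), with Z the observation distance, B the baseline and f the focal length expressed in pixels. Since the ground sample distance at distance Z is GSD = Z/f, this becomes ΔZ ≈ Z·GSD/B. The short sketch below evaluates this textbook approximation at the ends of the ranges given in the table above. It is our illustration of the standard relation, not the authors' stated design procedure, and the function name `depth_granularity` is hypothetical.

```python
def depth_granularity(distance_mm: float, gsd_mm: float, baseline_mm: float) -> float:
    """Depth step (mm) produced by a one-pixel disparity change.

    Rectified-stereo approximation dZ = Z**2 / (f * B), with the focal length f
    in pixels. Because GSD = Z / f at distance Z, this equals Z * GSD / B.
    """
    if baseline_mm <= 0:
        raise ValueError("baseline must be positive")
    return distance_mm * gsd_mm / baseline_mm


# Ends of the ranges in the table (fine: GSD 0.6 mm, coarse: GSD 25 mm)
for label, z, gsd, b in [
    ("fine, close, wide baseline", 500, 0.6, 300),
    ("fine, far, narrow baseline", 4000, 0.6, 50),
    ("coarse, wide baseline", 10000, 25, 2000),
    ("coarse, narrow baseline", 10000, 25, 1000),
]:
    print(f"{label}: {depth_granularity(z, gsd, b):.1f} mm")
```

This prints 1.0, 48.0, 125.0 and 250.0 mm, which is of the same order as the depth granularity row (1 to 50 mm and 125 to 250 mm). The quadratic growth of ΔZ with Z is why a short observation distance matters for fine analyses.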

| Ryujin Underwater Vehicle | |
|---|---|
| Size | 20 × 20 × 30 cm |
| Weight | 9 kg |
| Autonomy | ∼2 h |
| Cost | ∼2000 € |
| Depth rating | 100 m (tested up to 30 m) |
| Depth control accuracy | ∼5 cm |
| Absolute speed control accuracy | ∼5% |

| GSD (mm) | Ground Truth (mm) | Cloud Measurement (mm) | Error (mm) | GSD (mm) | Ground Truth (mm) | Cloud Measurement (mm) | Error (mm) |
|---|---|---|---|---|---|---|---|
| 10 | 875 | 862.7 | −12.3 | 0.8 | 433 | 434.1 | 1.1 |
| 10 | 298 | 307.2 | 9.2 | 0.8 | 427 | 427.8 | 0.8 |
| 10 | 49 | 42.3 | −6.7 | 0.8 | 350 | 349.1 | −0.9 |
| 8 | 1273 | 1248.6 | −24.4 | 0.6 | 220 | 219.5 | −0.5 |
| 3 | 732 | 734.2 | 2.2 | 0.6 | 170 | 170.8 | 0.8 |
| 3 | 218 | 216.7 | −1.3 | 0.6 | 113 | 112.9 | −0.1 |
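In the table above, each Error value equals the length measured in the point cloud minus the ground-truth length. The sketch below recomputes it from the tabulated values and adds two derived quantities that the table does not give: the relative error and the error expressed in multiples of the GSD, which make the coarse and sub-millimetre GSD columns easier to compare. The data are copied from the table; the names `MEASUREMENTS` and `summarize` are ours.

```python
# (GSD, ground truth, cloud measurement) in mm, copied from the table above
MEASUREMENTS = [
    (10, 875, 862.7), (10, 298, 307.2), (10, 49, 42.3),
    (8, 1273, 1248.6), (3, 732, 734.2), (3, 218, 216.7),
    (0.8, 433, 434.1), (0.8, 427, 427.8), (0.8, 350, 349.1),
    (0.6, 220, 219.5), (0.6, 170, 170.8), (0.6, 113, 112.9),
]


def summarize(gsd: float, truth: float, measured: float):
    """Return (error in mm, relative error in %, error in GSD units)."""
    error = measured - truth  # same sign convention as the Error column
    return error, 100.0 * error / truth, error / gsd


for gsd, truth, measured in MEASUREMENTS:
    err, rel, in_gsd = summarize(gsd, truth, measured)
    print(f"GSD {gsd:>4} mm  truth {truth:>5} mm  error {err:+6.1f} mm  "
          f"({rel:+6.2f} %, {in_gsd:+5.2f} GSD)")
```

For example, the −24.4 mm error at 8 mm GSD corresponds to about −1.9% of the measured length and −3.05 GSD.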

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Avanthey, L.; Beaudoin, L.; Gademer, A.; Roux, M. Tools to Perform Local Dense 3D Reconstruction of Shallow Water Seabed. Sensors 2016, 16, 712. https://doi.org/10.3390/s16050712


Chicago/Turabian style: Avanthey, Loïca, Laurent Beaudoin, Antoine Gademer, and Michel Roux. 2016. "Tools to Perform Local Dense 3D Reconstruction of Shallow Water Seabed" Sensors 16, no. 5: 712. https://doi.org/10.3390/s16050712