Carrying Position Independent User Heading Estimation for Indoor Pedestrian Navigation with Smartphones

Abstract

1. Introduction

- We propose a novel system for user heading estimation independent of carrying position.

- We develop a computationally lightweight carrying position recognition technique that performs accurately using acceleration signals.

- We propose a novel carrying position transition detection algorithm, which may be used to distinguish between user turns and position transitions, and reduces the computational cost of position recognition.

- We further examine the application of PCA-based approaches for the other carrying positions and develop an extended Kalman filter-based attitude estimation model for heading estimation.

- We report the evaluation of the proposed position recognition technique based on extensive samples collected from four participants and compare the performance of our heading estimation approach to existing approaches.

2. Related Works

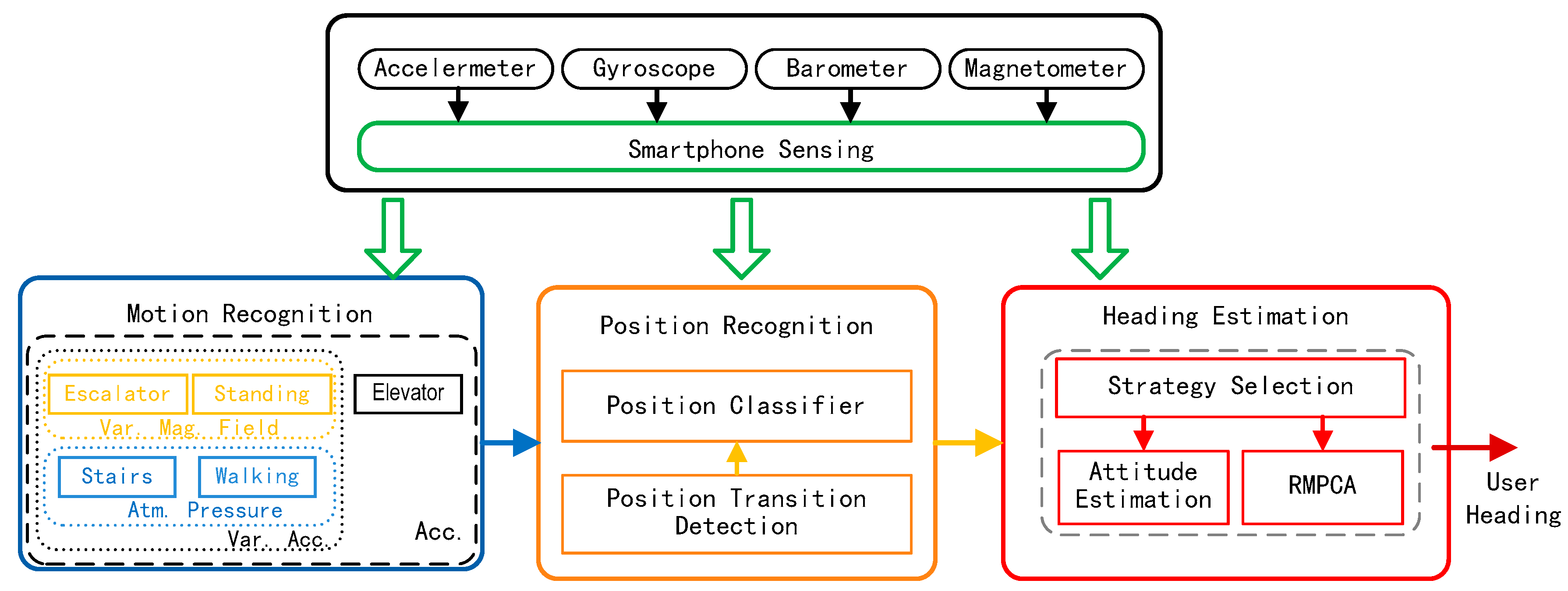

3. System Overview

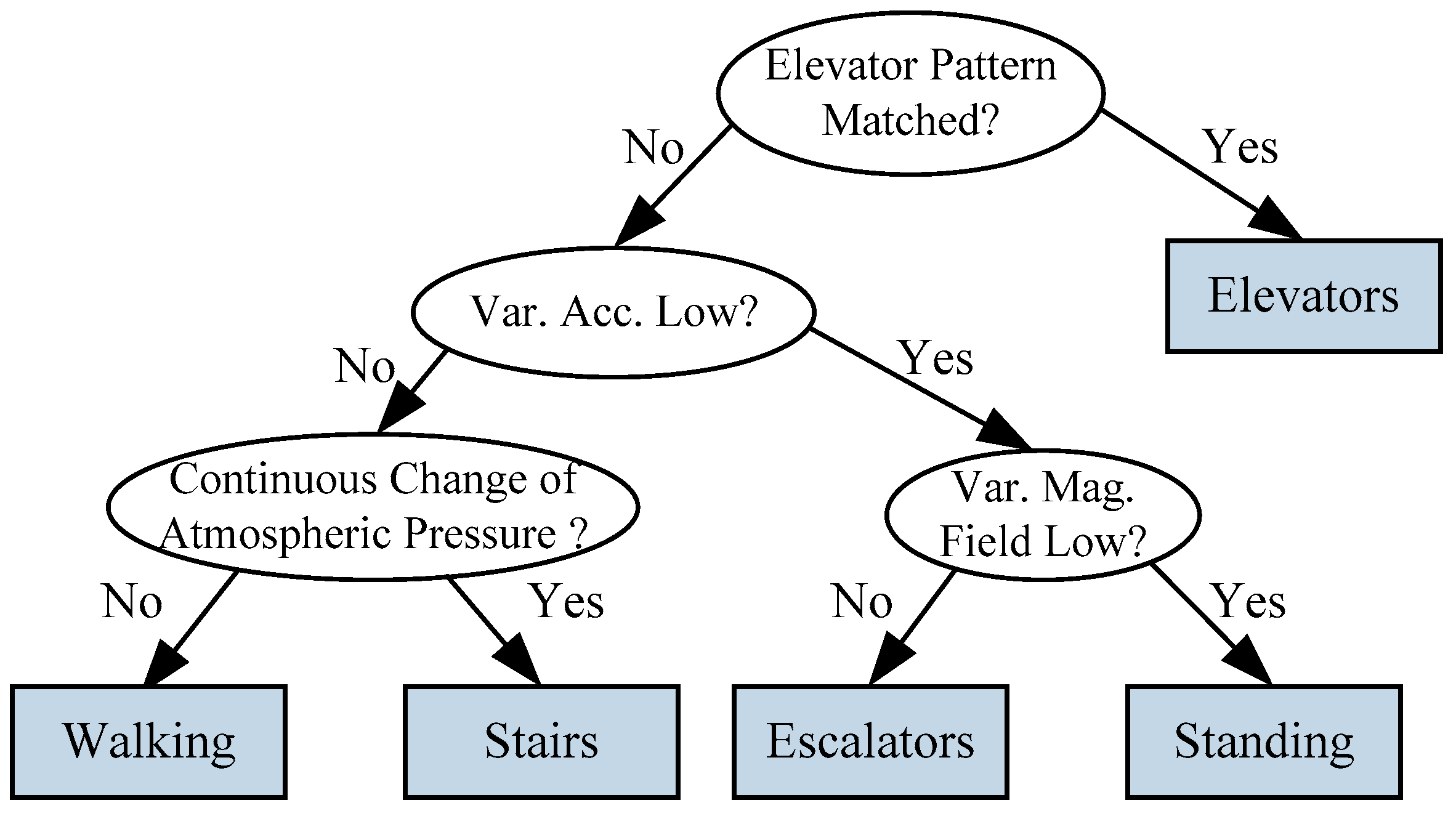

4. Motion State Recognition

5. Position Recognition

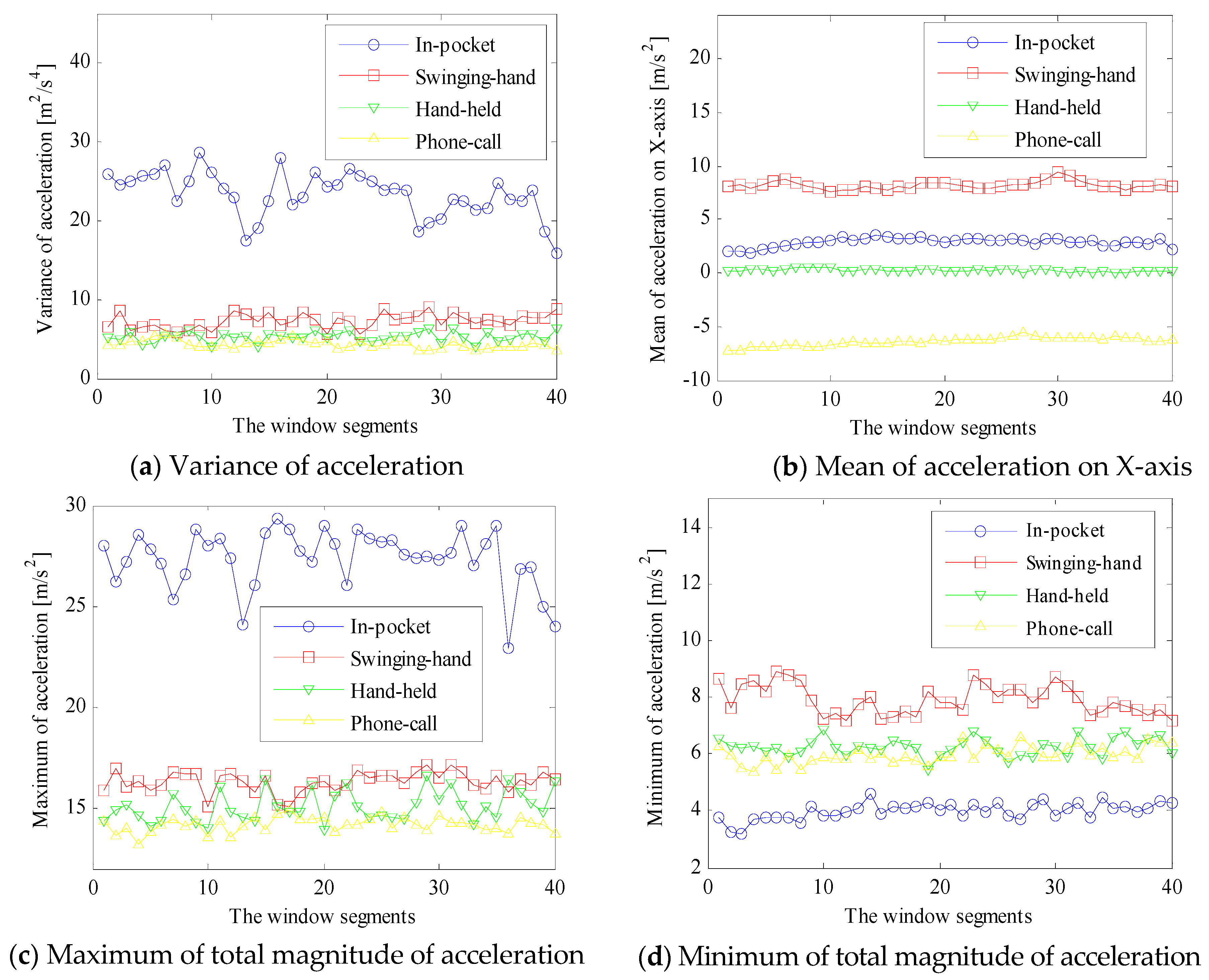

5.1. Carrying Position Classifier

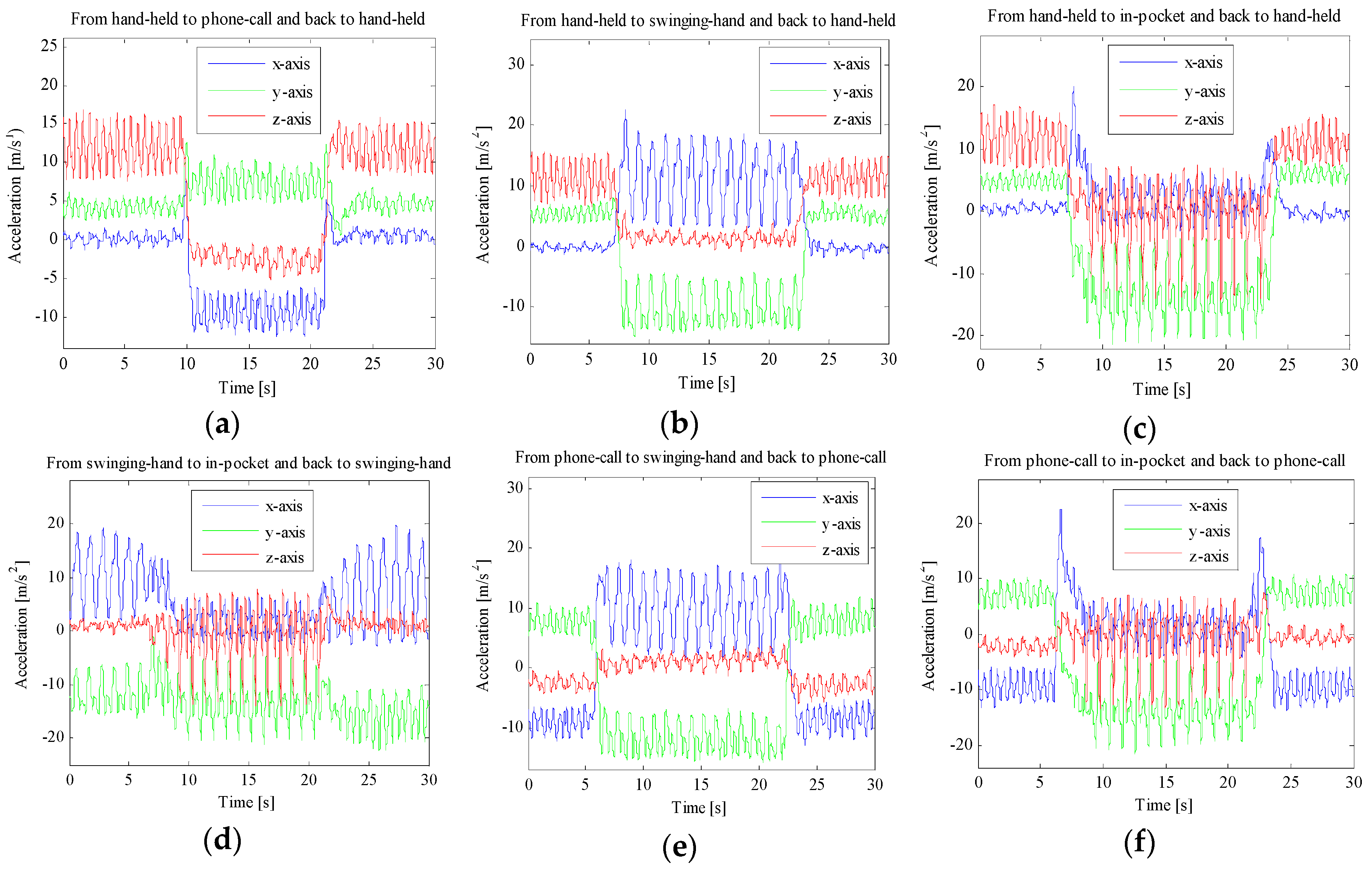

5.2. Carrying Position Transition Detection Algorithm

- The cross-window acceleration changes of swinging-hand and in-pocket positions are always larger than those of hand-held and phone-call positions, due to a larger variance of acceleration signals. Thus, we design different thresholds of cross-window acceleration change for different carrying positions.

- For consecutive sliding windows, a position transition is detected if the cross-window acceleration change $Acc_{cw}$ exceeds the corresponding threshold $Acc_{th}$.

- If the cross-window acceleration change values of more than two adjacent sliding windows exceed the corresponding threshold, we assume that only one position transition event has occurred.

- Among the sliding windows associated with the first flag that exceeds the threshold, we select the window whose within-window acceleration change is maximal; this window is taken as the position transition duration.

- Immediately after a position transition and related duration are detected, the position classifier is used to recognize and update the device carrying position.
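
The steps above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the authors' implementation: the section does not define the two change measures, so the sketch assumes the cross-window change is the absolute difference between the mean acceleration magnitudes of adjacent non-overlapping windows, and the within-window change is the max-minus-min range inside a window. The threshold values in `ACC_TH` and the function name `detect_transitions` are hypothetical.

```python
import numpy as np

# Hypothetical per-position thresholds Acc_th (m/s^2); the source gives no values.
# Swinging-hand and in-pocket get larger thresholds, as the first bullet suggests.
ACC_TH = {"hand-held": 1.0, "phone-call": 1.0,
          "swinging-hand": 3.0, "in-pocket": 3.0}

def detect_transitions(acc_mag, win_len, position):
    """Return the index of one window per detected position transition event."""
    n_win = len(acc_mag) // win_len
    windows = np.asarray(acc_mag[: n_win * win_len], dtype=float)
    windows = windows.reshape(n_win, win_len)

    # Assumed definition: Acc_cw[k] = |mean(window k+1) - mean(window k)|.
    acc_cw = np.abs(np.diff(windows.mean(axis=1)))
    # Assumed definition: within-window change = max - min inside each window.
    within = windows.max(axis=1) - windows.min(axis=1)

    flagged = acc_cw > ACC_TH[position]
    events = []
    k = 0
    while k < len(flagged):
        if flagged[k]:
            first = k
            # Adjacent flagged windows are merged into a single event.
            while k < len(flagged) and flagged[k]:
                k += 1
            # The first flag relates windows `first` and `first + 1`;
            # keep the one with the larger within-window change.
            pair = [first, first + 1]
            events.append(pair[int(np.argmax(within[pair]))])
        else:
            k += 1
    return events
```

Each returned index marks the window in which the position classifier would be re-run, so the classifier is invoked only after a detected transition rather than on every window.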

5.3. Discrimination between Position Transitions and User Turns

6. User Heading Estimation

6.1. EKF Based Attitude Estimation Model

6.2. Heading Estimation for Hand-Held and Phone-Call Positions

6.3. Heading Estimation for In-Pocket and Swinging-Hand Positions

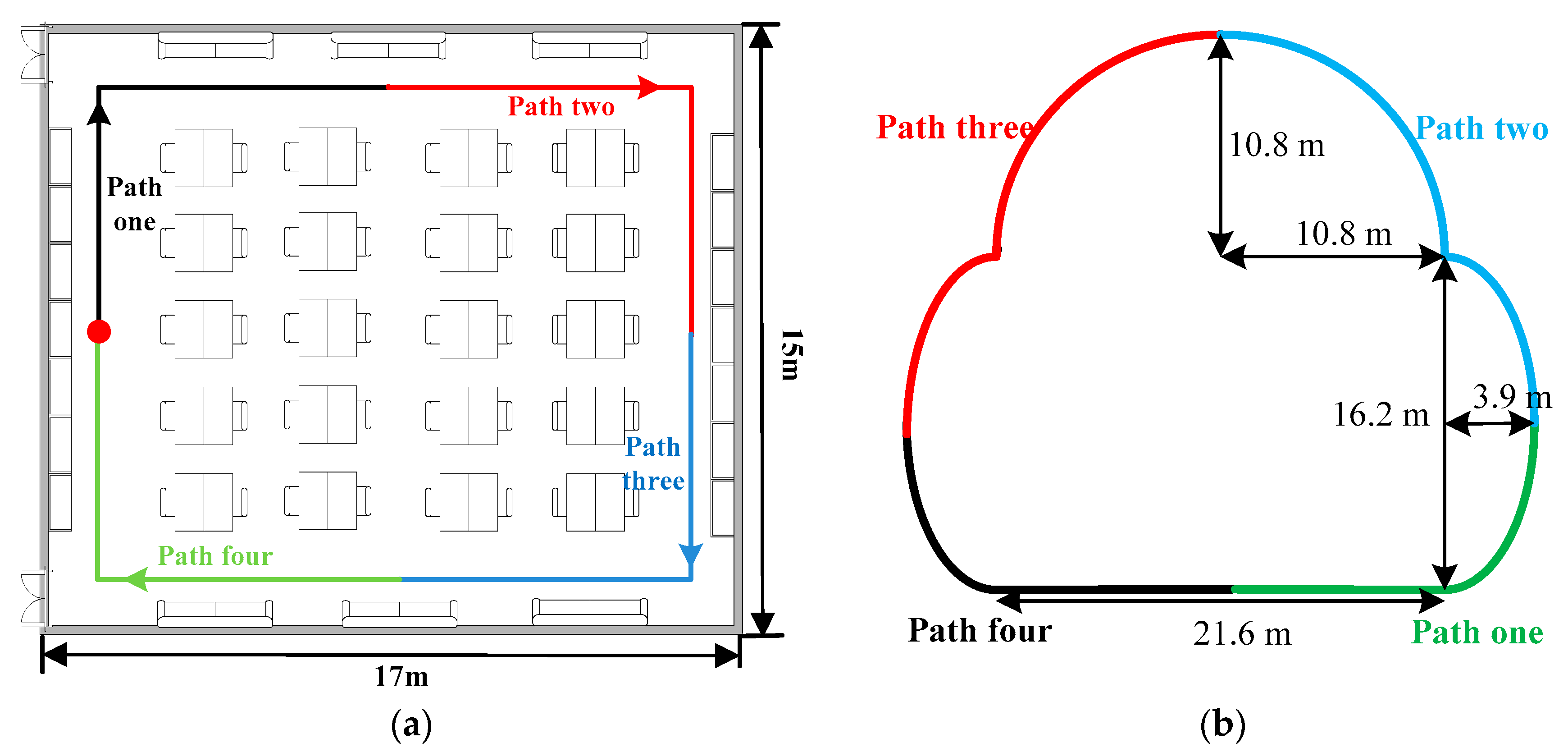

7. Evaluation

7.1. Position Recognition Results

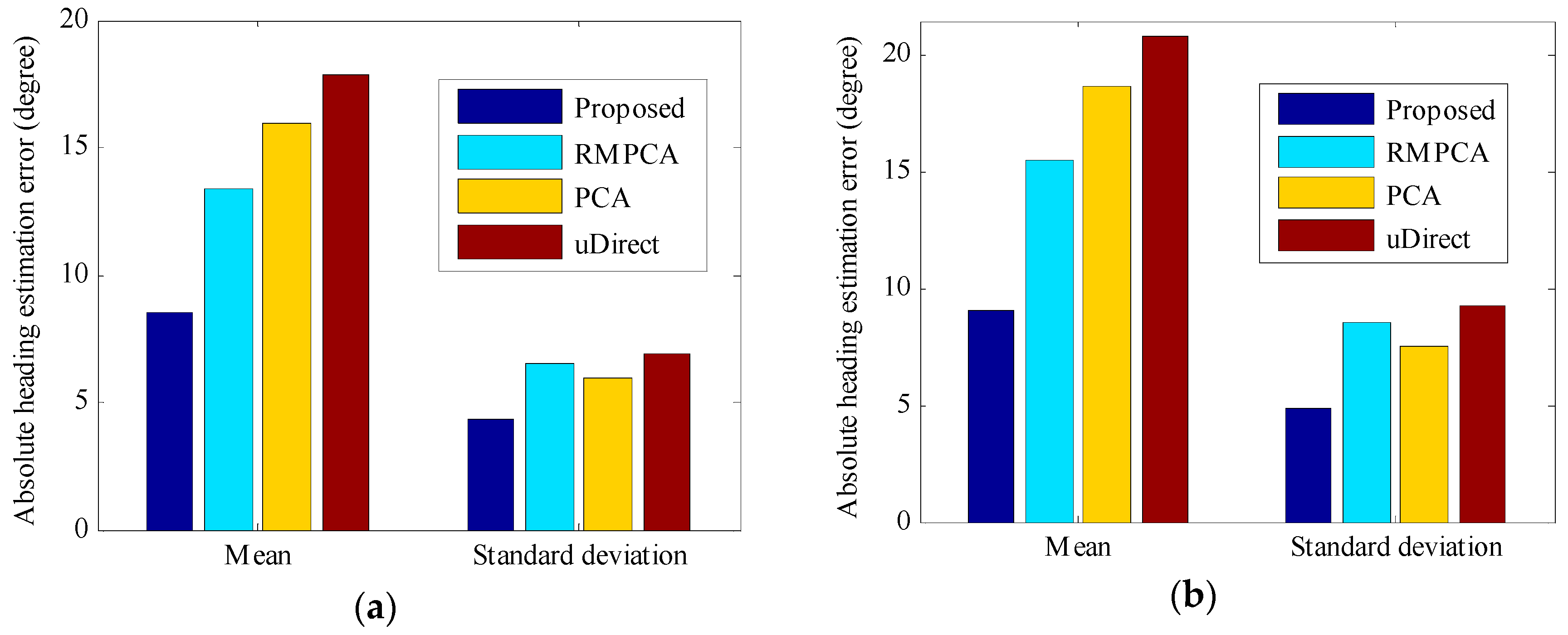

7.2. Heading Estimation Results

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| SVM | Hand-Held | Phone-Call | Swinging-Hand | In-Pocket |
|---|---|---|---|---|
| Hand-Held | 0.972 | 0.021 | 0.007 | 0 |
| Phone-Call | 0.029 | 0.958 | 0 | 0.013 |
| Swinging-Hand | 0 | 0 | 0.981 | 0.019 |
| In-Pocket | 0 | 0.008 | 0.040 | 0.952 |

| Bayesian Network | Hand-Held | Phone-Call | Swinging-Hand | In-Pocket |
|---|---|---|---|---|
| Hand-Held | 0.945 | 0.031 | 0.024 | 0 |
| Phone-Call | 0.020 | 0.972 | 0.008 | 0 |
| Swinging-Hand | 0 | 0.020 | 0.936 | 0.044 |
| In-Pocket | 0 | 0.014 | 0.064 | 0.922 |

| Random Forest | Hand-Held | Phone-Call | Swinging-Hand | In-Pocket |
|---|---|---|---|---|
| Hand-Held | 0.990 | 0.007 | 0 | 0.003 |
| Phone-Call | 0.018 | 0.982 | 0 | 0 |
| Swinging-Hand | 0 | 0.011 | 0.969 | 0.020 |
| In-Pocket | 0 | 0.004 | 0.025 | 0.971 |
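
To compare the three classifiers at a glance, the confusion matrices above can be reduced to a single number each. The sketch below averages the diagonal of each matrix, which equals the mean per-class recall if the rows are the true carrying positions; that reading is an assumption, supported only by the fact that every row sums to 1. The names `CONFUSION` and `mean_diagonal` are hypothetical, and the numbers are copied from the tables.

```python
import numpy as np

# Row/column order: Hand-Held, Phone-Call, Swinging-Hand, In-Pocket.
CONFUSION = {
    "SVM": [[0.972, 0.021, 0.007, 0.0],
            [0.029, 0.958, 0.0, 0.013],
            [0.0, 0.0, 0.981, 0.019],
            [0.0, 0.008, 0.040, 0.952]],
    "Bayesian Network": [[0.945, 0.031, 0.024, 0.0],
                         [0.020, 0.972, 0.008, 0.0],
                         [0.0, 0.020, 0.936, 0.044],
                         [0.0, 0.014, 0.064, 0.922]],
    "Random Forest": [[0.990, 0.007, 0.0, 0.003],
                      [0.018, 0.982, 0.0, 0.0],
                      [0.0, 0.011, 0.969, 0.020],
                      [0.0, 0.004, 0.025, 0.971]],
}

def mean_diagonal(matrix):
    """Average of the diagonal entries of a row-normalized confusion matrix."""
    m = np.asarray(matrix, dtype=float)
    return float(np.trace(m) / m.shape[0])

# One summary value per classifier, rounded for display.
summary = {name: round(mean_diagonal(m), 3) for name, m in CONFUSION.items()}
best = max(summary, key=summary.get)
```

Under this measure the random forest scores highest of the three, followed by the SVM and then the Bayesian network.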

| | Position Transition | User Turn |
|---|---|---|
| Position Transition | 1 | 0 |
| User Turn | 0.02 | 0.98 |

| Mean (E1/E2) | Hand-Held | Phone-Call | Swinging-Hand | In-Pocket |
|---|---|---|---|---|
| RMPCA | 14.41/17.43 | 18.47/22.9 | 10.91/11.56 | 8.66/9.05 |
| Attitude Estimation | 5.85/6.17 | 7.83/8.36 | N/A | N/A |
| PCA | 14.41/17.98 | 18.61/23.43 | 16.48/18.21 | 12.85/13.77 |
| uDirect | 18.07/21.97 | 20.93/26.35 | 17.10/18.85 | 13.23/15.22 |

| Standard Deviation (E1/E2) | Hand-Held | Phone-Call | Swinging-Hand | In-Pocket |
|---|---|---|---|---|
| RMPCA | 5.89/7.95 | 6.28/8.67 | 4.86/5.31 | 4.11/4.42 |
| Attitude Estimation | 2.60/2.78 | 3.71/4.09 | N/A | N/A |
| PCA | 5.89/8.01 | 6.36/8.82 | 5.22/6.16 | 4.62/4.99 |
| uDirect | 6.63/9.14 | 6.82/9.37 | 5.91/6.93 | 5.76/6.97 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Deng, Z.-A.; Wang, G.; Hu, Y.; Cui, Y. Carrying Position Independent User Heading Estimation for Indoor Pedestrian Navigation with Smartphones. Sensors 2016, 16, 677. https://doi.org/10.3390/s16050677
