An Efficient Seam Elimination Method for UAV Images Based on Wallis Dodging and Gaussian Distance Weight Enhancement

Abstract

1. Introduction

2. Study Site and Data

3. Methodology

3.1. Wallis Dodging

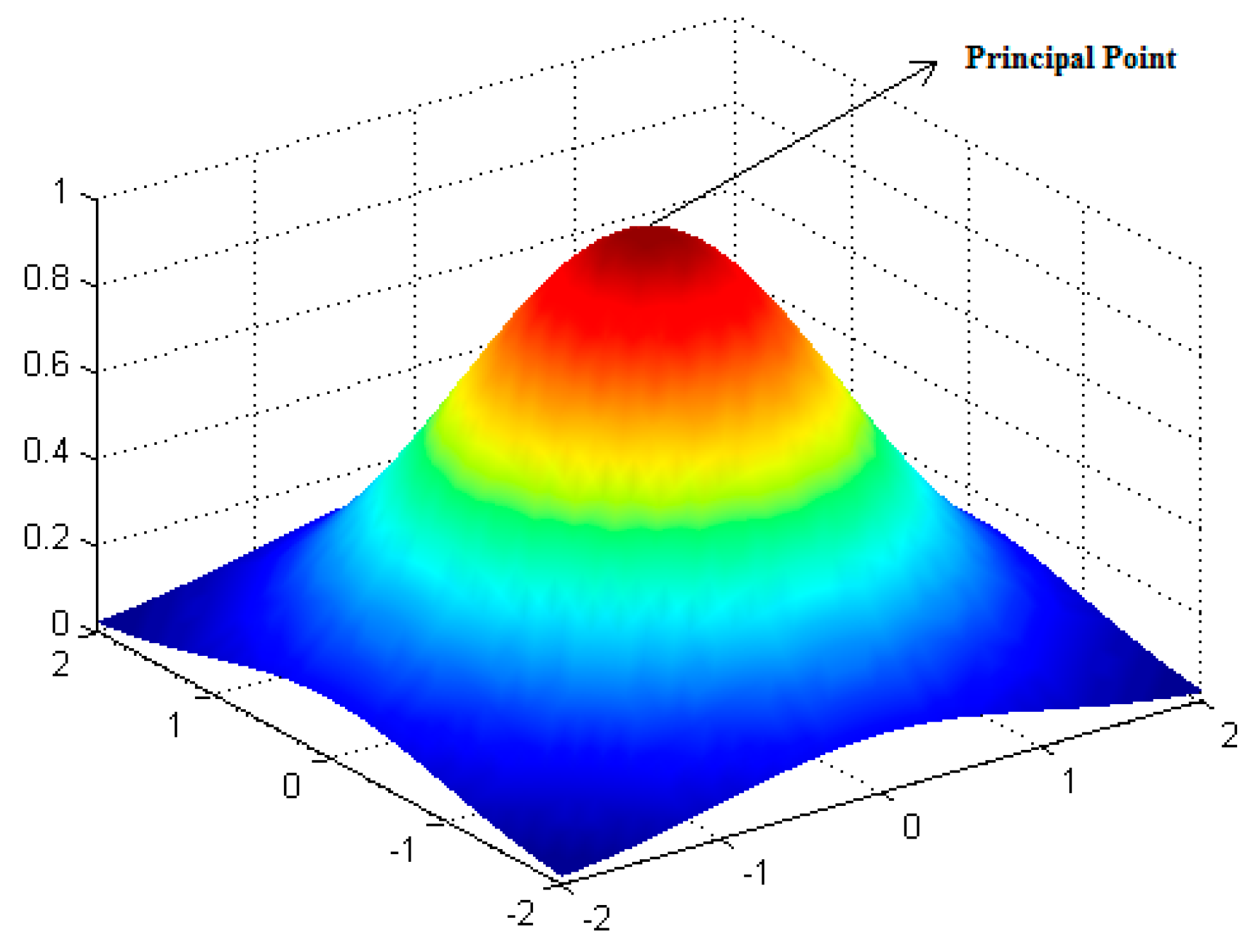
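Wallis dodging is usually written as a linear transform that maps an image's mean and standard deviation onto target values, typically taken from a reference image. The sketch below assumes the widely used form f = (g - m_g) * r1 + b*m_f + (1 - b)*m_g, where r1 = c*s_f / (c*s_g + (1 - c)*s_f). Here g and f are the input and output gray values, m_g and s_g are the input mean and standard deviation, m_f and s_f are the targets, b is a brightness constant and c is a contrast constant. The sketch computes statistics globally over a single band. Practical Wallis dodging often works on local windows and interpolates the coefficients instead. The function name `wallis_dodge` and its parameter names are illustrative assumptions, not taken from this article.

```python
import numpy as np


def wallis_dodge(img, target_mean, target_std, b=1.0, c=1.0):
    """Global Wallis transform of one band toward a target mean/std.

    b (brightness constant) and c (contrast constant) lie in [0, 1]; the names
    are illustrative. With b = c = 1 the output has exactly the target mean and
    standard deviation.
    """
    g = np.asarray(img, dtype=np.float64)
    m_g, s_g = g.mean(), g.std()
    denom = c * s_g + (1.0 - c) * target_std
    if denom <= 0.0:
        raise ValueError("image has zero variance; contrast gain is undefined")
    # Multiplicative gain: stretches the image's contrast toward the target.
    r1 = c * target_std / denom
    # Additive offset: sets the output mean to b*target_mean + (1 - b)*m_g.
    r0 = b * target_mean + (1.0 - b - r1) * m_g
    return g * r1 + r0


# Example: dodge a darker, flatter image toward a reference image's statistics.
rng = np.random.default_rng(0)
reference = rng.normal(120.0, 30.0, (64, 64))
source = rng.normal(80.0, 15.0, (64, 64))
dodged = wallis_dodge(source, reference.mean(), reference.std())
# For 8-bit output, round and clip: np.clip(np.rint(dodged), 0, 255).astype(np.uint8)
```

With b = c = 1 the transform reduces to matching the reference mean and standard deviation exactly. Smaller values of b or c retain part of the original image's brightness or contrast.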

3.2. GDWE Method

3.2.1. Theoretical Basis

3.2.2. Seam Elimination

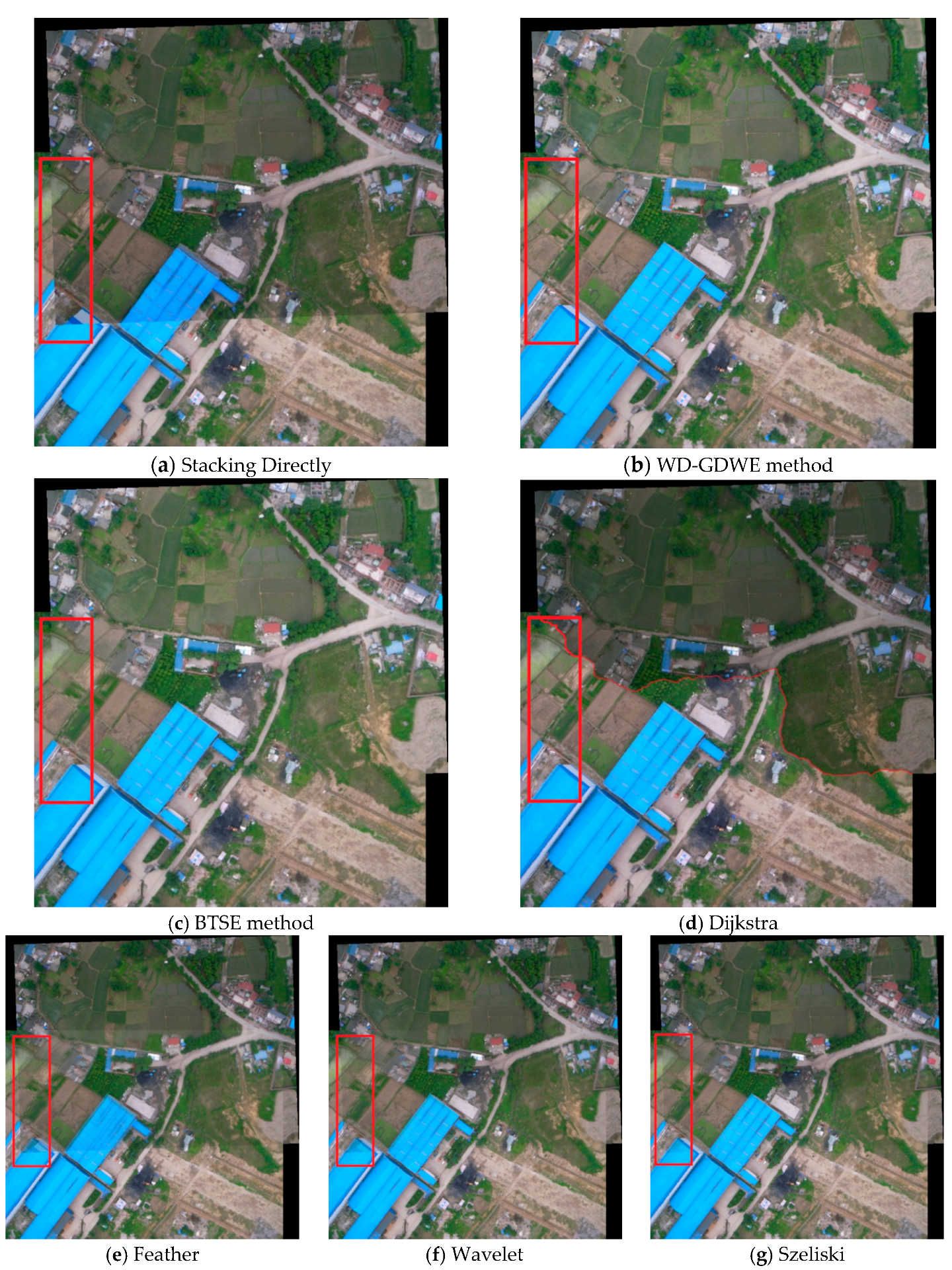

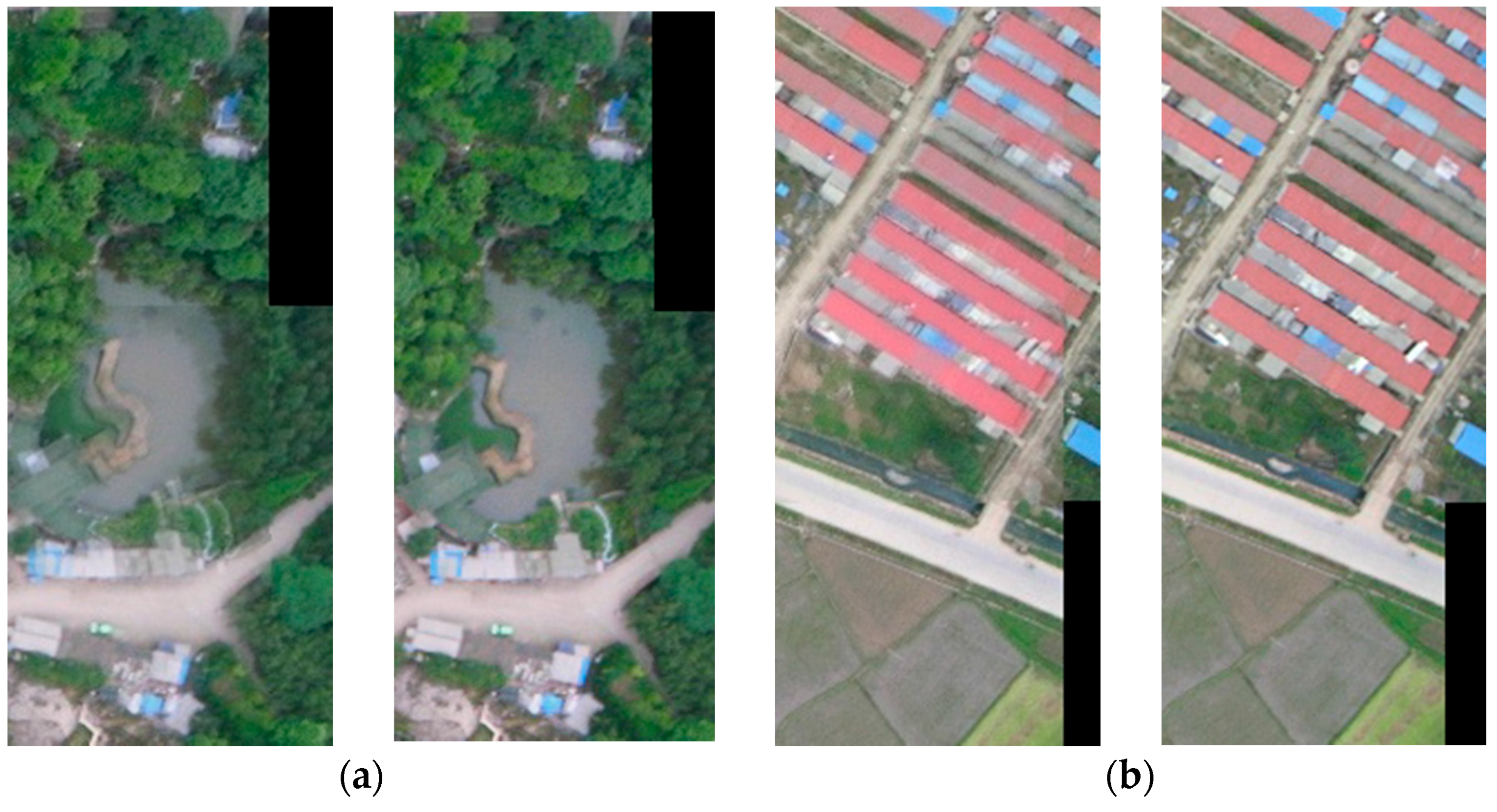
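The following is a generic illustration of Gaussian distance weighting across a seam. It is a sketch, not the GDWE formulation of this article. It assumes two co-registered overlap images that have already been radiometrically balanced, and a straight vertical seam at column `seam_col`. The right-hand image receives a weight equal to the Gaussian cumulative distribution of the signed distance to the seam. This is equivalent to blurring a hard 0/1 seam mask with a Gaussian of standard deviation `sigma`. All function and parameter names are hypothetical.

```python
import math

import numpy as np


def gaussian_seam_weights(width, seam_col, sigma):
    """Weight of the right-hand image for each of `width` columns.

    A hard 0/1 step at `seam_col` convolved with a Gaussian of standard
    deviation `sigma` (pixels) equals the Gaussian CDF of the signed distance.
    """
    d = np.arange(width, dtype=np.float64) - seam_col  # signed distance to the seam
    scale = sigma * math.sqrt(2.0)
    return np.array([0.5 * (1.0 + math.erf(x / scale)) for x in d])


def blend_across_seam(left, right, seam_col, sigma=8.0):
    """Blend two co-registered overlap images of identical shape (H, W[, bands])."""
    if left.shape != right.shape:
        raise ValueError("overlap images must have the same shape")
    w = gaussian_seam_weights(left.shape[1], seam_col, sigma)
    # Reshape to (1, W, 1, ...) so the column weights broadcast over rows and bands.
    w = w.reshape((1, -1) + (1,) * (left.ndim - 2))
    return (1.0 - w) * left.astype(np.float64) + w * right.astype(np.float64)


# Example: two flat patches with a 100-level brightness step at column 50.
left_patch = np.full((4, 100), 100.0)
right_patch = np.full((4, 100), 200.0)
blended = blend_across_seam(left_patch, right_patch, seam_col=50, sigma=8.0)
```

At the seam column the two images contribute equally, so `blended[:, 50]` is 150. The weights approach 0 and 1 within roughly three `sigma` on either side, and a larger `sigma` spreads the transition over a wider band.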

4. Results and Discussion

4.1. Wallis Dodging

4.2. WD-GDWE Method

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Items | Parameters |
|---|---|
| Image sensor | Ricoh Digital |
| Pixel number | 3648 × 2736 |
| Focal length | 28 mm |
| CCD size | 1/1.75 inch |
| Navigation sensor | GPS |
| Image format | JPEG |

| Land use | Method | RMSE (M) | RMSE (SD) |
|---|---|---|---|
| Building | Stacking Directly | 24.5 | 6.5 |
| | Wallis Dodging | 0.0 | 0.2 |
| Woodland | Stacking Directly | 23.6 | 6.2 |
| | Wallis Dodging | 0.0 | 0.1 |
| Farmland | Stacking Directly | 19.8 | 5.7 |
| | Wallis Dodging | 0.0 | 0.1 |
| Road | Stacking Directly | 17.5 | 3.6 |
| | Wallis Dodging | 0.0 | 0.1 |
| Water | Stacking Directly | 36.2 | 9.5 |
| | Wallis Dodging | 0.0 | 0.3 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Tian, J.; Li, X.; Duan, F.; Wang, J.; Ou, Y. An Efficient Seam Elimination Method for UAV Images Based on Wallis Dodging and Gaussian Distance Weight Enhancement. Sensors 2016, 16, 662. https://doi.org/10.3390/s16050662
