Classification of E-Nose Aroma Data of Four Fruit Types by ABC-Based Neural Network

Abstract

1. Introduction

2. Methodology

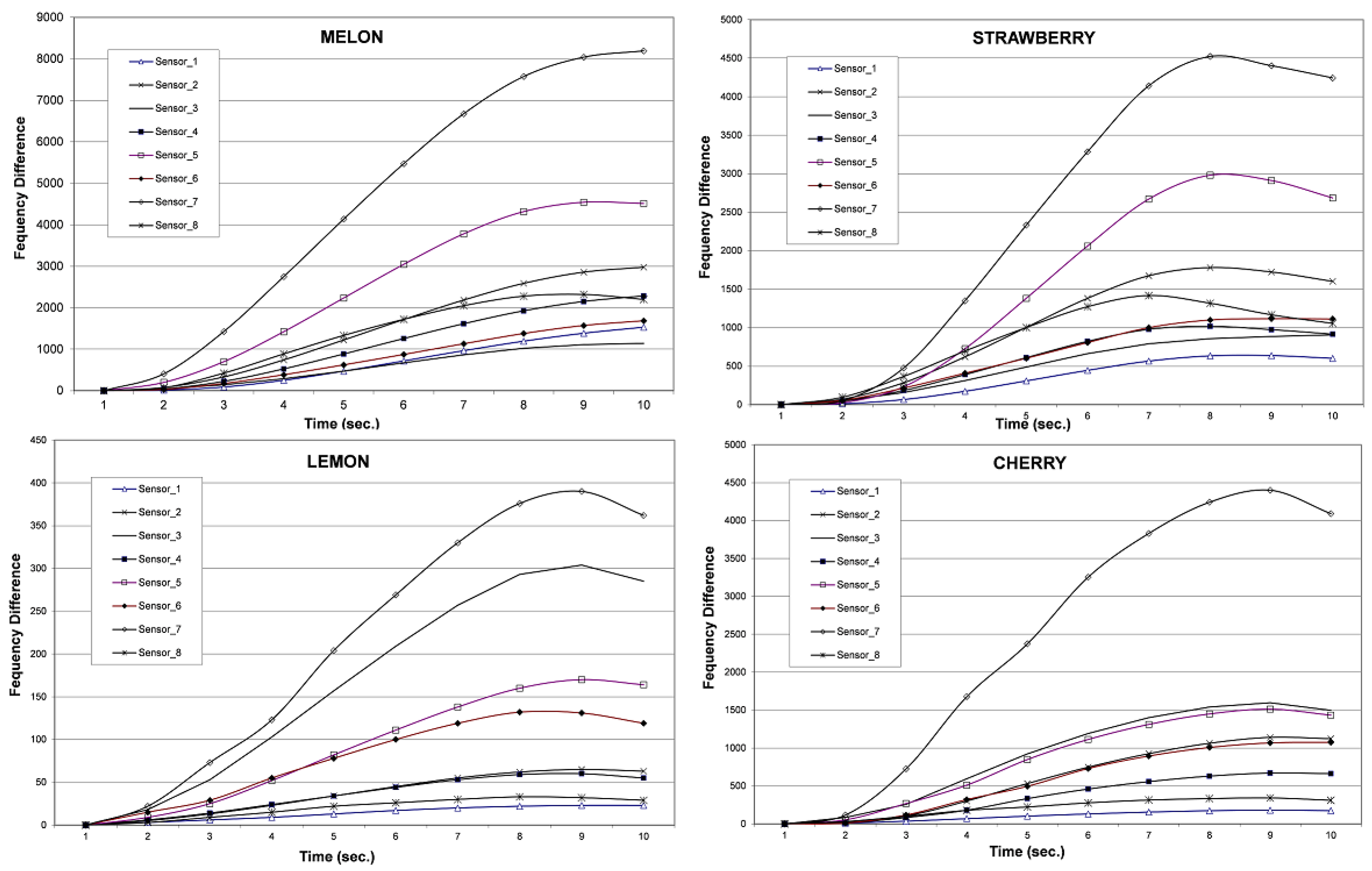

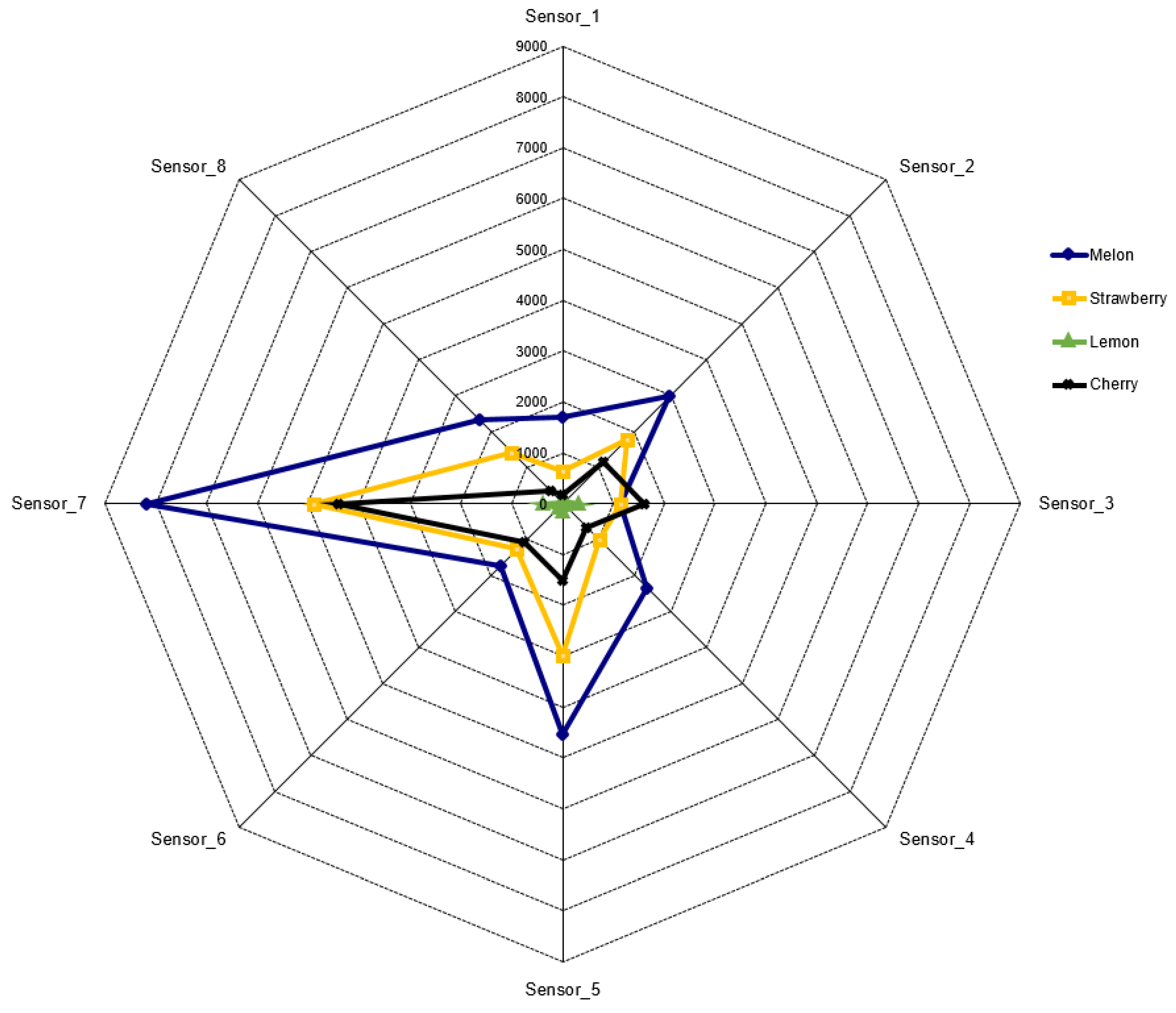

2.1. Preparation of Data

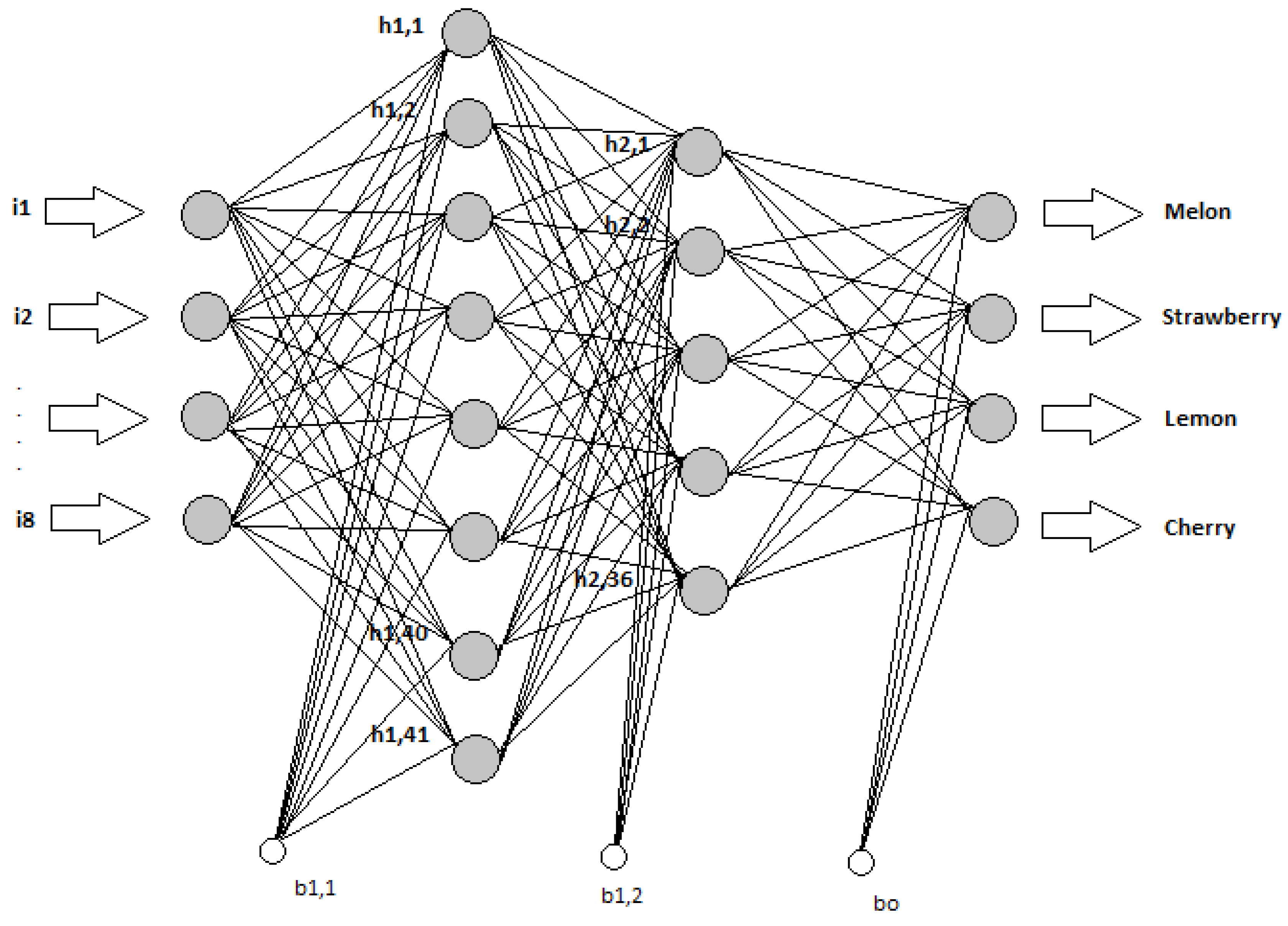

2.2. Artificial Neural Network (ANN)

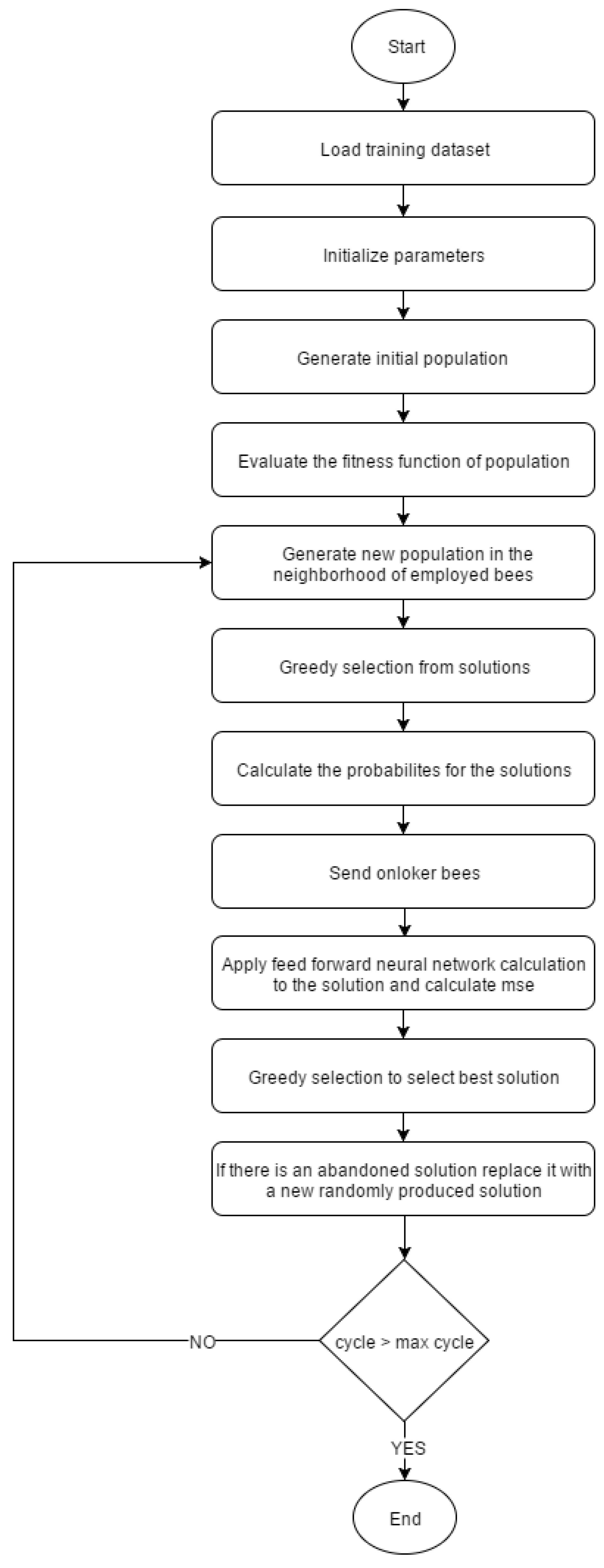

2.3. Artificial Bee Colony Algorithm (ABC)

2.4. Training ANN with ABC Algorithm

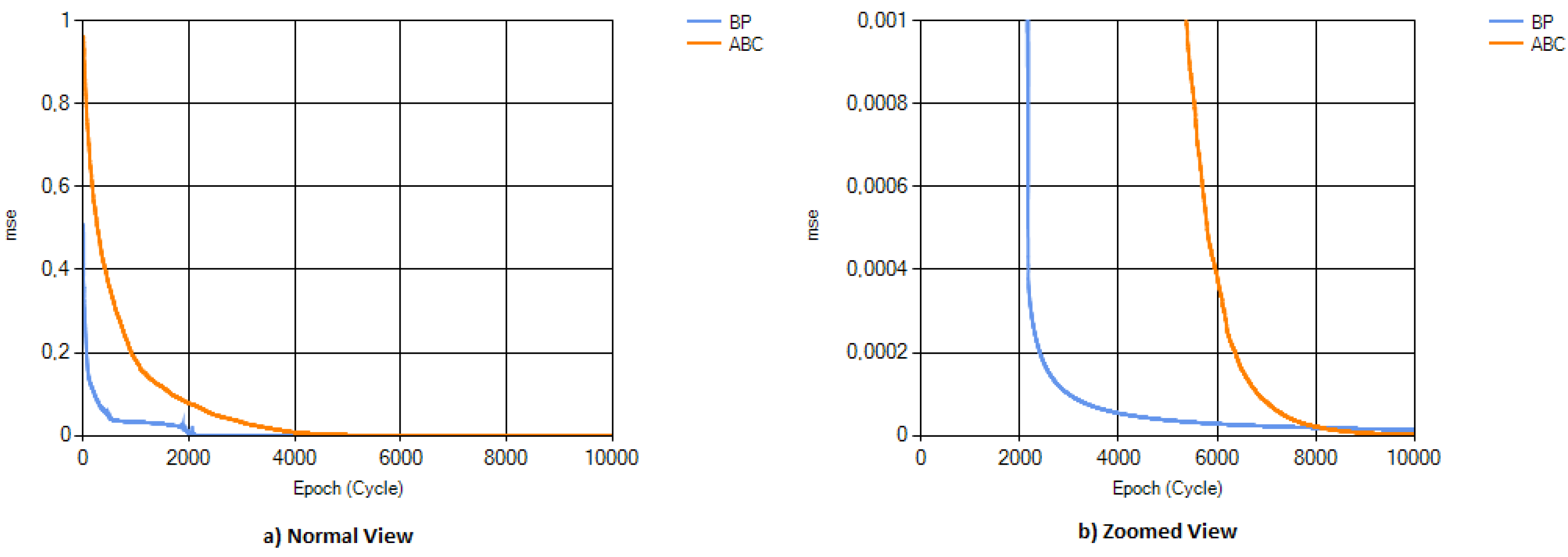

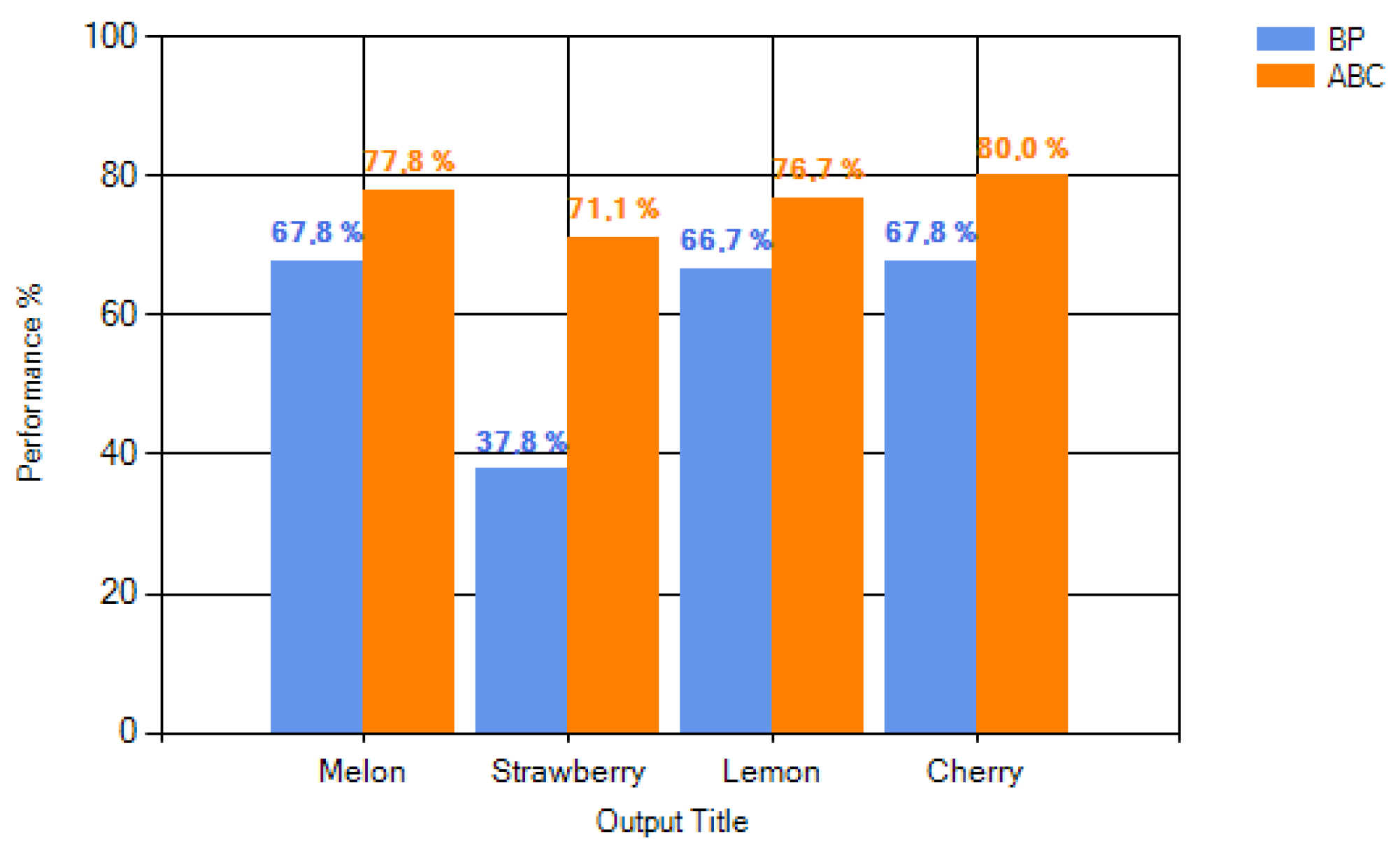

3. Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Seconds | Freq. Diff. 1 | Freq. Diff. 2 | Freq. Diff. 3 | Freq. Diff. 4 | Freq. Diff. 5 | Freq. Diff. 6 | Freq. Diff. 7 | Freq. Diff. 8 | Norm |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.0000 |
| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 0.0091 |
| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 89 | 0.0581 |
| 4 | 0 | 0 | 0 | 0 | 0 | 14 | 89 | 251 | 0.1639 |
| 5 | 0 | 0 | 0 | 0 | 14 | 89 | 251 | 472 | 0.3083 |
| 6 | 0 | 0 | 0 | 14 | 89 | 251 | 472 | 718 | 0.4690 |
| 7 | 0 | 0 | 14 | 89 | 251 | 472 | 718 | 965 | 0.6303 |
| 8 | 0 | 14 | 89 | 251 | 472 | 718 | 965 | 1194 | 0.7799 |
| 9 | 14 | 89 | 251 | 472 | 718 | 965 | 1194 | 1385 | 0.9046 |
| 10 | 89 | 251 | 472 | 718 | 965 | 1194 | 1385 | 1531 | 1.0000 |
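
The data-preparation table reads as a sliding window. Each second, the newest frequency difference enters on the right and the oldest one drops off. The Norm value appears to equal the newest reading divided by the largest reading in the series (1531). The minimal sketch below reproduces both columns under that reading. The zero padding before the first reading and the function names `sliding_windows` and `normalize_by_max` are assumptions for illustration and do not come from the authors' code.

```python
def sliding_windows(series, width=8):
    """One row per time step: the last `width` readings, zero-padded on the left."""
    padded = [0] * (width - 1) + list(series)
    return [padded[t:t + width] for t in range(len(series))]


def normalize_by_max(values):
    """Scale readings to [0, 1] by dividing by the peak reading (the minimum here is 0)."""
    peak = max(values)
    return [v / peak for v in values]


# Newest frequency difference at each second (last data column of the table).
readings = [0, 14, 89, 251, 472, 718, 965, 1194, 1385, 1531]
windows = sliding_windows(readings)
norms = normalize_by_max(readings)

for second, (row, norm) in enumerate(zip(windows, norms), start=1):
    print(second, row, f"{norm:.4f}")
```

Because the minimum reading is 0, dividing by the maximum gives the same result as min-max scaling in this case. The window width of 8 matches the 8 input neurons in the parameter-count table.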

| Parameter | Value |
|---|---|
| Weight range | [−1, 1] |
| Threshold range | [−1, 1] |
| Activation function | Sigmoid |
| Learning coefficient | 0.2 |
| Momentum | 0.8 |
| Stopping rule (epoch) | 10000 |
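
The ANN table lists a learning coefficient and a momentum term, which are the hyperparameters of gradient-descent backpropagation with momentum. The minimal sketch below assumes the classical update Δw(t) = −η ∂E/∂w + α Δw(t−1), with η = 0.2, α = 0.8 and a squared-error loss. The single-neuron demo, `momentum_update` and `train_single_neuron` are hypothetical. They only illustrate the update rule and the [−1, 1] initialisation, not the authors' full network.

```python
import math
import random

LEARNING_RATE = 0.2  # "Learning coefficient" in the ANN table
MOMENTUM = 0.8       # "Momentum" in the ANN table


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def momentum_update(weight, grad, prev_delta, lr=LEARNING_RATE, mu=MOMENTUM):
    """Classical momentum step: delta = -lr * grad + mu * prev_delta."""
    delta = -lr * grad + mu * prev_delta
    return weight + delta, delta


def train_single_neuron(x, target, epochs=2000, seed=0):
    """Fit one sigmoid neuron to one sample; return the final error 0.5 * (y - target)**2.

    Weights and the threshold start uniformly in [-1, 1], as in the table.
    The threshold is treated as a bias on a constant input of 1.0 (assumed convention).
    """
    rng = random.Random(seed)
    inputs = list(x) + [1.0]
    params = [rng.uniform(-1.0, 1.0) for _ in inputs]
    deltas = [0.0] * len(params)
    for _ in range(epochs):
        y = sigmoid(sum(w * xi for w, xi in zip(params, inputs)))
        dE_dz = (y - target) * y * (1.0 - y)  # chain rule through the sigmoid
        for i, xi in enumerate(inputs):
            params[i], deltas[i] = momentum_update(params[i], dE_dz * xi, deltas[i])
    y = sigmoid(sum(w * xi for w, xi in zip(params, inputs)))
    return 0.5 * (y - target) ** 2


final_error = train_single_neuron([1.0, 0.5], target=0.9)
print(f"final squared error: {final_error:.2e}")
```

With α = 0.8, each step carries 80% of the previous step forward. This smooths the descent on the flat parts of the sigmoid.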

| Parameter | Value |
|---|---|
| Parameter’s lower bound | −10 |
| Parameter’s upper bound | 10 |
| Colony size | 200 |
| Food source limit | 1000 |
| Max cycle | 10,000 |
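
The ABC table maps onto the standard Artificial Bee Colony algorithm (Karaboga, 2005):

- The colony is split into employed and onlooker bees.
- Each food source is one candidate solution.
- The limit counts failed improvements before a scout replaces a source.
- The bounds clip every coordinate.

The minimal sketch below follows that standard formulation. The neighbour rule, the fitness transform 1/(1 + cost) and fitness-proportional onlooker selection come from the usual ABC description. They are assumed, not confirmed, to match the authors' implementation, and `abc_minimize` is a hypothetical name.

```python
import random


def abc_minimize(objective, dim, lower=-10.0, upper=10.0, colony_size=200,
                 limit=1000, max_cycles=10_000, seed=0):
    """Minimal Artificial Bee Colony minimiser; returns (best_solution, best_cost).

    Defaults mirror the ABC parameter table. Half of the colony are employed
    bees (one per food source) and half are onlookers.
    """
    rng = random.Random(seed)
    n_sources = colony_size // 2

    def random_source():
        return [rng.uniform(lower, upper) for _ in range(dim)]

    def fitness(cost):
        # Usual ABC transform: lower (non-negative) cost gives higher fitness.
        return 1.0 / (1.0 + cost) if cost >= 0 else 1.0 + abs(cost)

    sources = [random_source() for _ in range(n_sources)]
    costs = [objective(s) for s in sources]
    trials = [0] * n_sources
    best_i = min(range(n_sources), key=costs.__getitem__)
    best, best_cost = sources[best_i][:], costs[best_i]

    def try_neighbour(i):
        """Perturb one coordinate of source i relative to a random partner k."""
        nonlocal best, best_cost
        k = rng.randrange(n_sources - 1)
        if k >= i:
            k += 1  # the partner must differ from i
        j = rng.randrange(dim)
        candidate = sources[i][:]
        candidate[j] += rng.uniform(-1.0, 1.0) * (sources[i][j] - sources[k][j])
        candidate[j] = min(max(candidate[j], lower), upper)  # stay within bounds
        cost = objective(candidate)
        if cost < costs[i]:  # greedy selection
            sources[i], costs[i], trials[i] = candidate, cost, 0
            if cost < best_cost:
                best, best_cost = candidate[:], cost
        else:
            trials[i] += 1

    for _ in range(max_cycles):
        for i in range(n_sources):  # employed bee phase
            try_neighbour(i)
        weights = [fitness(c) for c in costs]
        for _ in range(n_sources):  # onlooker phase: fitness-proportional choice
            try_neighbour(rng.choices(range(n_sources), weights=weights)[0])
        stale = max(range(n_sources), key=trials.__getitem__)
        if trials[stale] > limit:  # scout phase: abandon an exhausted source
            sources[stale] = random_source()
            costs[stale] = objective(sources[stale])
            trials[stale] = 0
            if costs[stale] < best_cost:
                best, best_cost = sources[stale][:], costs[stale]
    return best, best_cost


def sphere(v):
    return sum(x * x for x in v)


# Small settings keep the demo fast; the table's values are the defaults.
best, cost = abc_minimize(sphere, dim=5, colony_size=20, limit=50, max_cycles=500)
print(cost)
```

For the e-nose network, `dim` would be the number of network weights and thresholds, and `objective` would be the training error. The sphere function here only stands in for that cost.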

| Parameter Group | Number of Parameters |
|---|---|
| Between the input layer and the first hidden layer | 8 × 41 = 328 |
| Between the first and the second hidden layer | 41 × 36 = 1476 |
| Between the second hidden layer and the output layer | 36 × 4 = 144 |
| Threshold values in the hidden layers and the output layer | 41 + 36 + 4 = 81 |
| Total number of parameters | 328 + 1476 + 144 + 81 = 2029 |
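
Training the ANN with ABC means treating all 2029 weights and thresholds of the 8-41-36-4 network as one food-source vector and scoring that vector by the network's error. The sketch below decodes such a vector into layers and computes the mean squared error. Several details are assumptions made for illustration:

- the ordering of parameters inside the vector (each layer's weights, then its thresholds);
- thresholds entering as added biases;
- sigmoid outputs;
- one-hot targets for the four fruit classes.

```python
import math

LAYER_SIZES = [8, 41, 36, 4]  # inputs, hidden 1, hidden 2, outputs (from the table)


def count_parameters(sizes):
    """Weights between consecutive layers plus one threshold per non-input neuron."""
    return sum(a * b for a, b in zip(sizes, sizes[1:])) + sum(sizes[1:])


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def decode(vector, sizes=LAYER_SIZES):
    """Split a flat vector into per-layer (weight rows, thresholds); ordering is assumed."""
    if len(vector) != count_parameters(sizes):
        raise ValueError("vector length does not match the network size")
    layers, pos = [], 0
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [vector[pos + r * n_in: pos + (r + 1) * n_in] for r in range(n_out)]
        pos += n_in * n_out
        thresholds = vector[pos: pos + n_out]
        pos += n_out
        layers.append((weights, thresholds))
    return layers


def forward(layers, x):
    for weights, thresholds in layers:
        x = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + t)
             for row, t in zip(weights, thresholds)]
    return x


def mse(vector, samples, sizes=LAYER_SIZES):
    """Mean squared error over (inputs, one-hot target) pairs: the cost ABC would minimise."""
    layers = decode(vector, sizes)
    total, count = 0.0, 0
    for x, target in samples:
        for y, t in zip(forward(layers, x), target):
            total += (y - t) ** 2
            count += 1
    return total / count


zero_vector = [0.0] * count_parameters(LAYER_SIZES)
sample = ([0.0] * 8, [1.0, 0.0, 0.0, 0.0])
print(count_parameters(LAYER_SIZES), mse(zero_vector, [sample]))
```

Passing `lambda v: mse(v, training_samples)` and `dim=2029` to an ABC optimiser would connect the two parts. Here `training_samples` is a hypothetical list of windowed e-nose readings with class labels.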

| Number of Hidden Layers | Number of Neurons | Min MSE Value |
|---|---|---|
| 1 | 41 | 0.00023 |
| 1 | 45 | 0.00101 |
| 2 | 25 + 10 | 0.0011 |
| 2 | 41 + 36 | 0.000032 |
| 3 | 41 + 36 + 15 | 0.0034 |
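
The architecture results can be compared by network size. The sketch below applies the parameter table's counting rule (weights between consecutive layers plus one threshold per hidden or output neuron) to each configuration. It assumes that every configuration keeps 8 inputs and 4 outputs. The MSE values are copied from the table, and `count_parameters` is a hypothetical helper.

```python
def count_parameters(sizes):
    """Weights between consecutive layers plus one threshold per non-input neuron."""
    return sum(a * b for a, b in zip(sizes, sizes[1:])) + sum(sizes[1:])


# (hidden layer sizes, minimum MSE) as reported; 8 inputs and 4 outputs are assumed.
results = [
    ((41,), 0.00023),
    ((45,), 0.00101),
    ((25, 10), 0.0011),
    ((41, 36), 0.000032),
    ((41, 36, 15), 0.0034),
]

for hidden, min_mse in sorted(results, key=lambda r: r[1]):
    n_params = count_parameters([8, *hidden, 4])
    print(f"{'+'.join(map(str, hidden)):>10}  params={n_params:5d}  mse={min_mse}")
```

Under these assumptions, the lowest error does not simply track size. The 2029-parameter 41 + 36 network beats the larger 41 + 36 + 15 network, which has 2500 parameters.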

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Adak, M.F.; Yumusak, N. Classification of E-Nose Aroma Data of Four Fruit Types by ABC-Based Neural Network. Sensors 2016, 16, 304. https://doi.org/10.3390/s16030304
