Removing the Interdependency between Horizontal and Vertical Eye-Movement Components in Electrooculograms

Abstract

1. Introduction

2. Experimental Environment

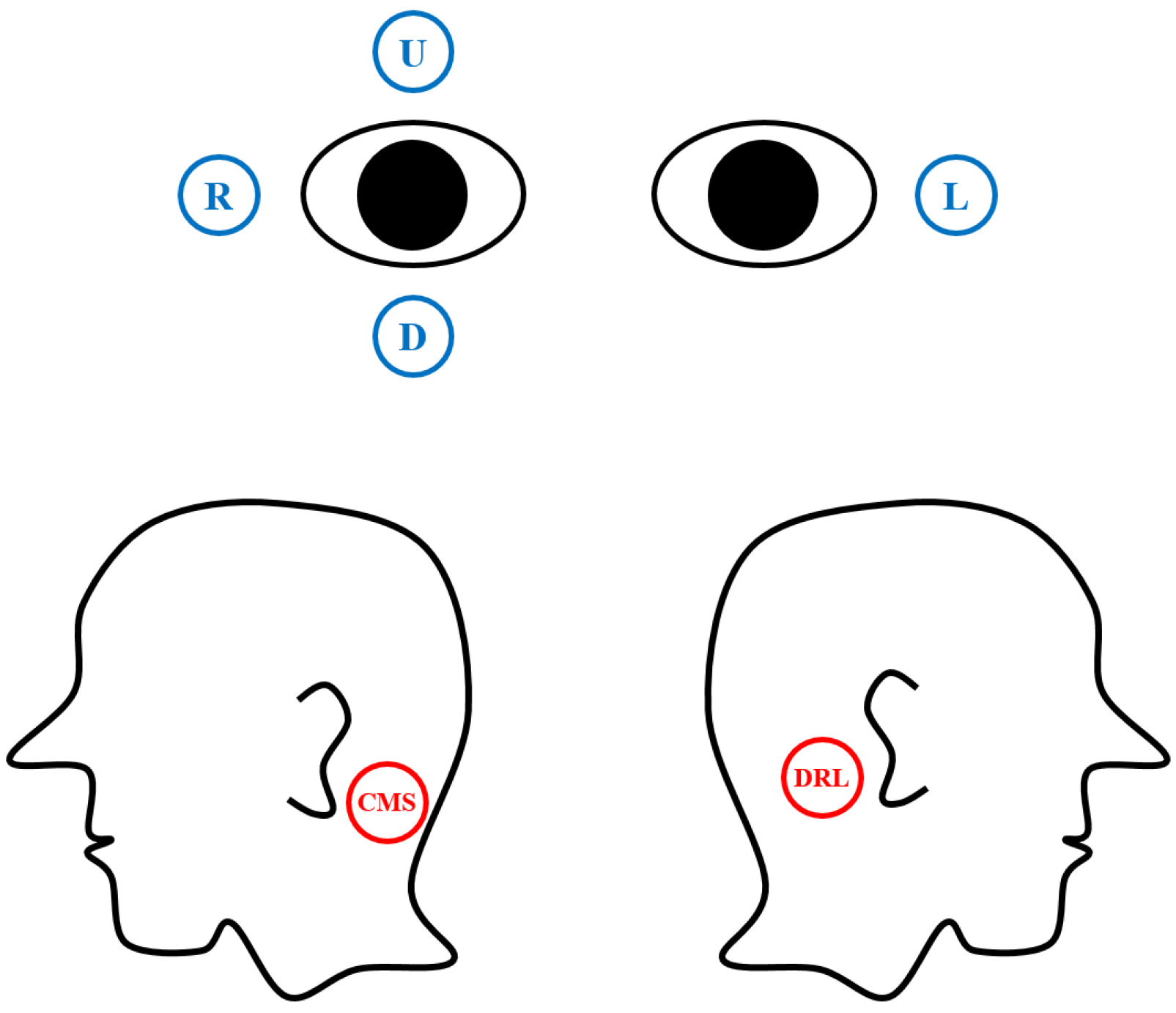

2.1. Recording of EOG Signals

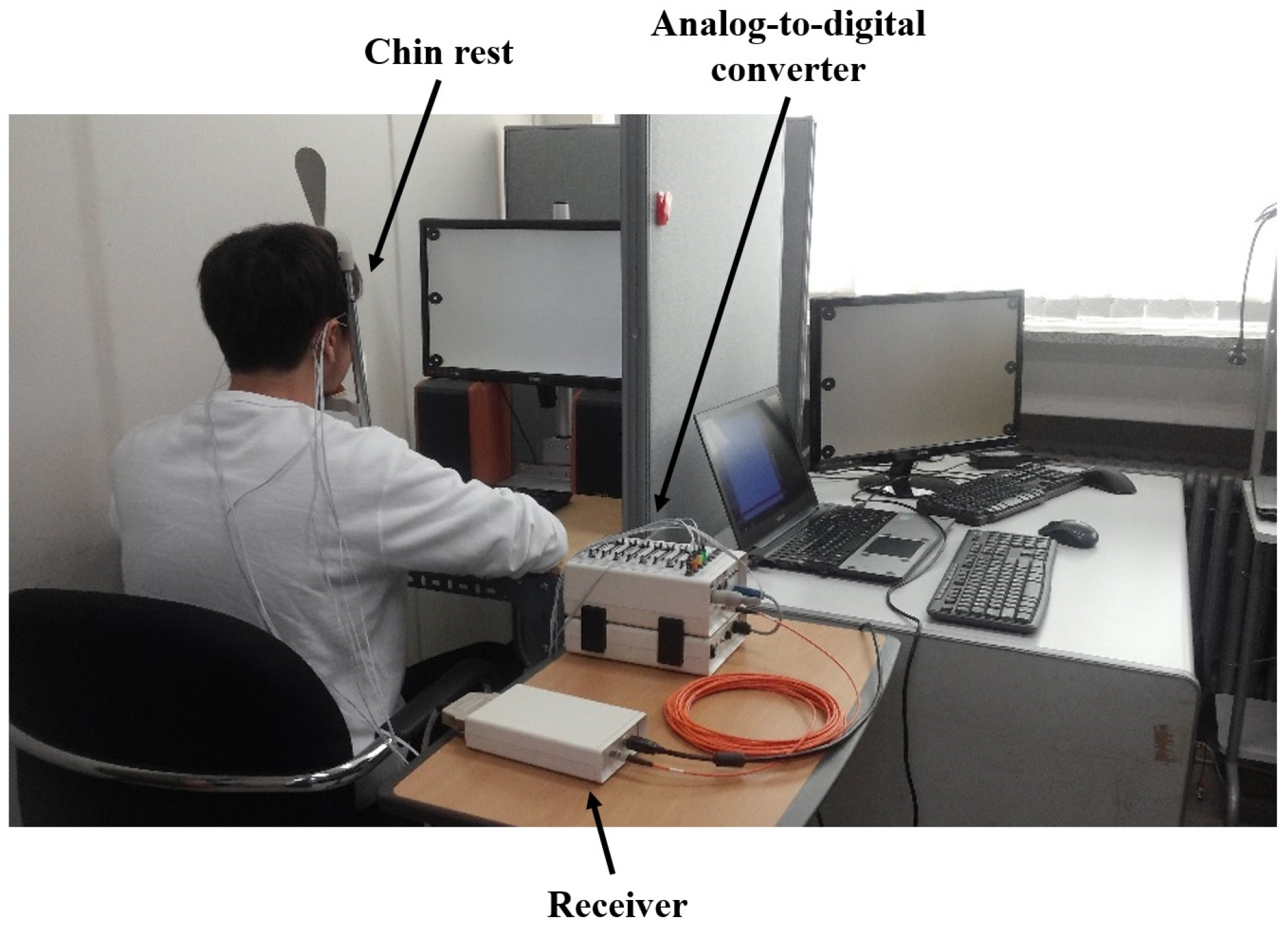

2.2. Experimental Setups

3. Methods

3.1. Preprocessing

3.2. Study on EOG Dynamics

3.3. Removal of Interdependency between Horizontal and Vertical EOG Components

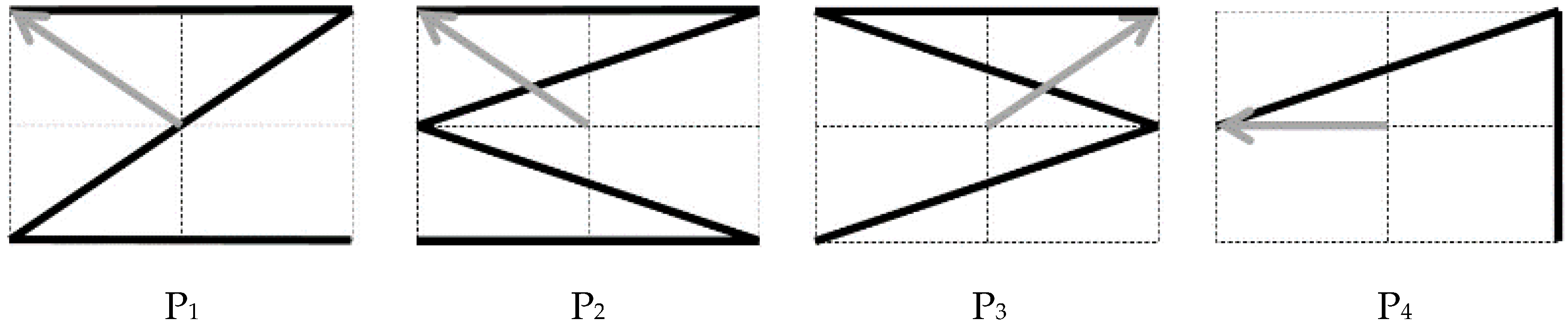

3.4. Experimental Validation of the Proposed Compensation Method

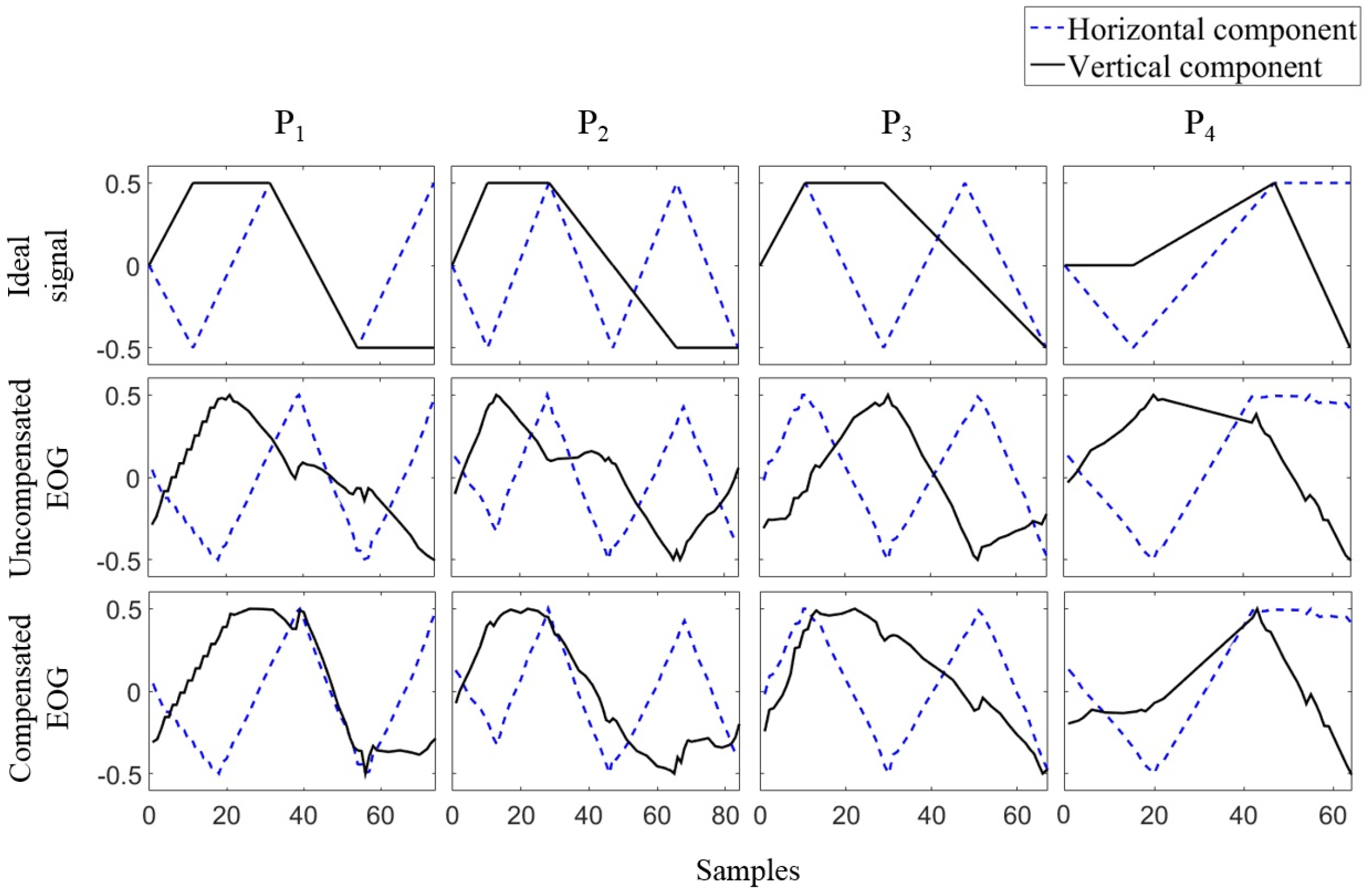

4. Results

| Patterns | P1 | P2 | P3 | P4 | Average |
|---|---|---|---|---|---|
| Compensated EOG | 0.97 ± 0.05 | 0.96 ± 0.08 | 0.98 ± 0.02 | 0.91 ± 0.09 | 0.95 ± 0.07 |
| Uncompensated EOG | 0.92 ± 0.08 | 0.93 ± 0.08 | 0.92 ± 0.06 | 0.79 ± 0.20 | 0.90 ± 0.13 |

| Subject ID | α | Compensated EOG | Uncompensated EOG | p-Value |
|---|---|---|---|---|
| S01 | −0.12 | 0.93 ± 0.05 | 0.90 ± 0.04 | 0.001 |
| S02 | −0.25 | 0.94 ± 0.07 | 0.81 ± 0.16 | 0.007 |
| S03 | −0.35 | 0.91 ± 0.13 | 0.80 ± 0.18 | 0.021 |
| S04 | −0.5 | 0.92 ± 0.12 | 0.79 ± 0.14 | 0.006 |
| S05 | 0 | 0.98 ± 0.02 | 0.98 ± 0.02 | - |
| S06 | −0.02 | 0.97 ± 0.02 | 0.97 ± 0.02 | 0.022 |
| S07 | −0.04 | 0.97 ± 0.03 | 0.98 ± 0.01 | 0.181 |
| S08 | −0.22 | 0.96 ± 0.06 | 0.93 ± 0.03 | 0.066 |
| S09 | −0.13 | 0.98 ± 0.02 | 0.97 ± 0.02 | 0.099 |
| S10 | −0.48 | 0.97 ± 0.02 | 0.80 ± 0.18 | 0.005 |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Chang, W.-D.; Cha, H.-S.; Im, C.-H. Removing the Interdependency between Horizontal and Vertical Eye-Movement Components in Electrooculograms. Sensors 2016, 16, 227. https://doi.org/10.3390/s16020227
