Local Tiled Deep Networks for Recognition of Vehicle Make and Model

Abstract

1. Introduction

2. Related Work

2.1. RBM and Auto-Encoder

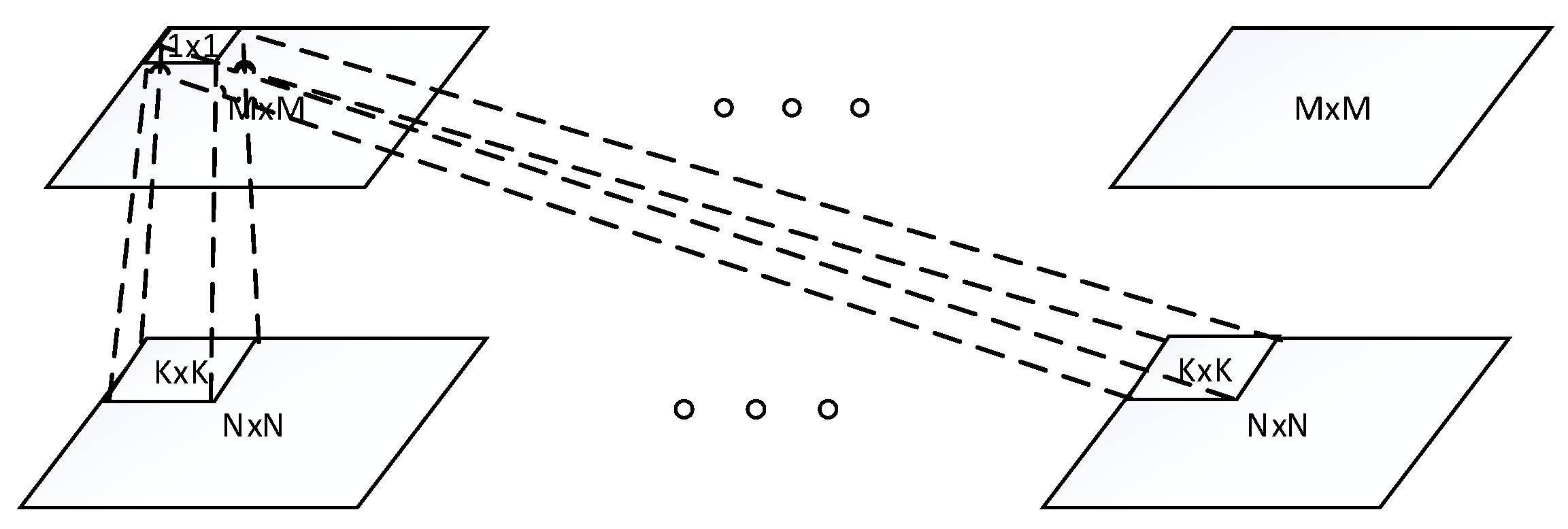

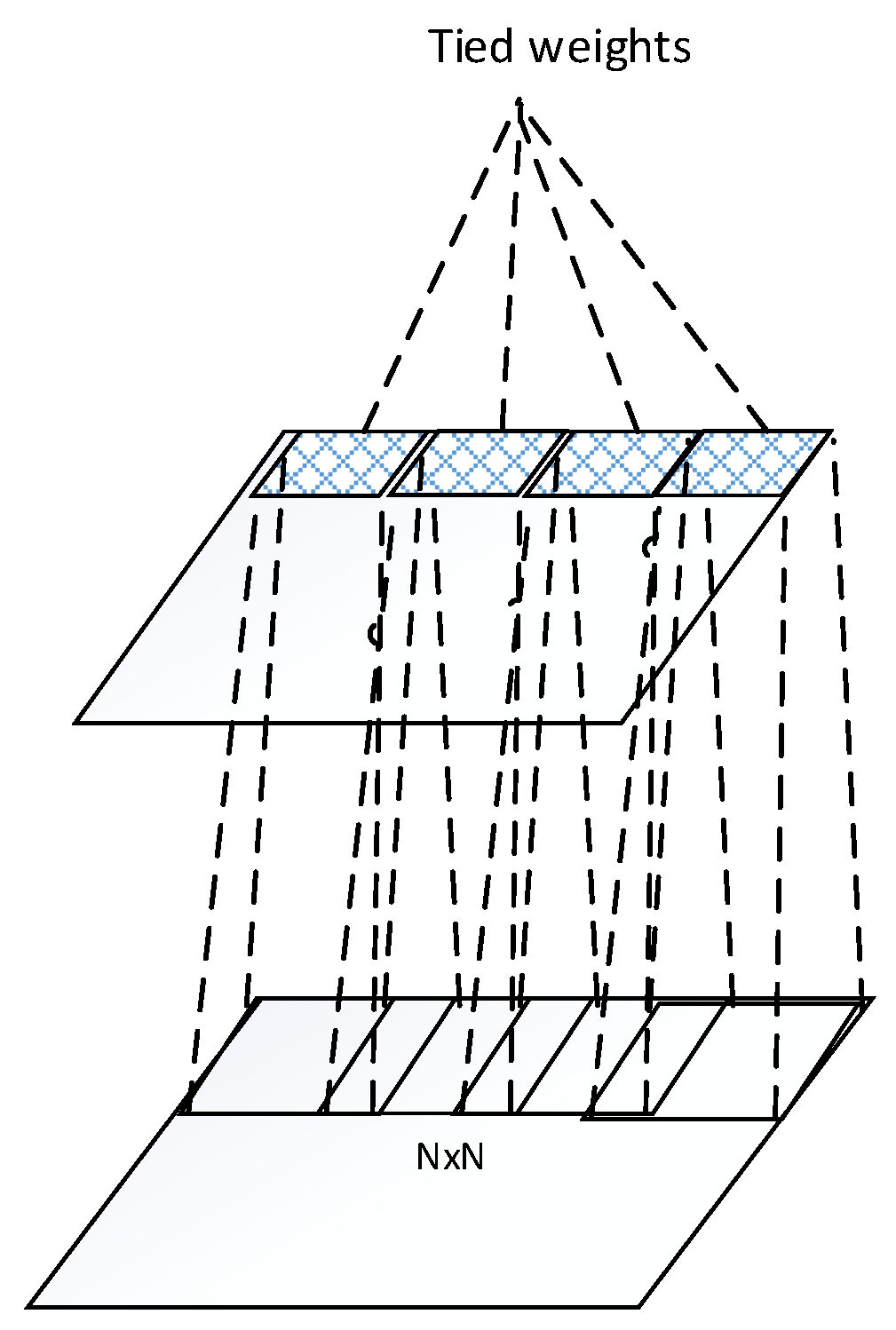

2.2. Convolutional Models

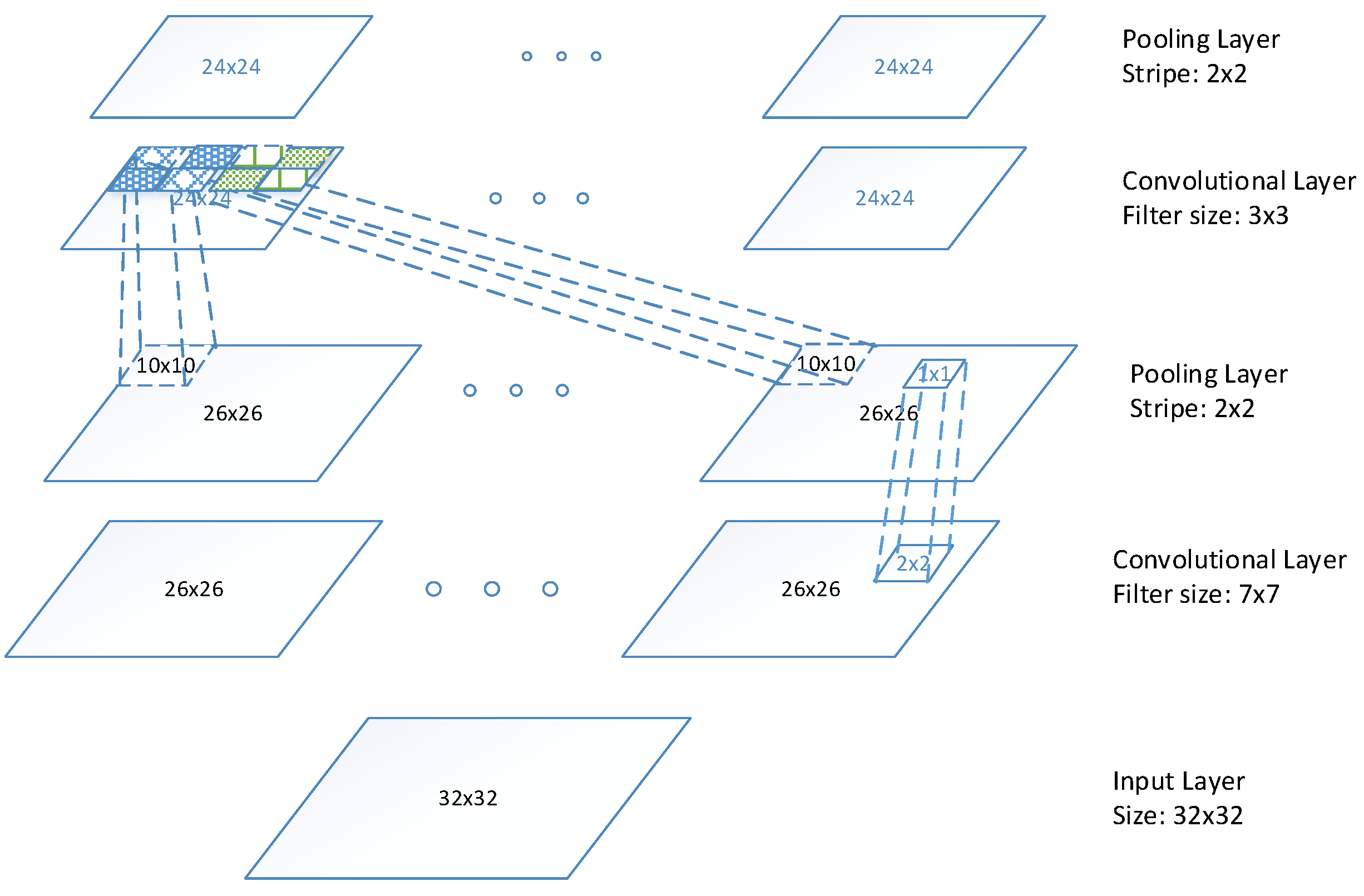

3. Framework of Proposed MMR System

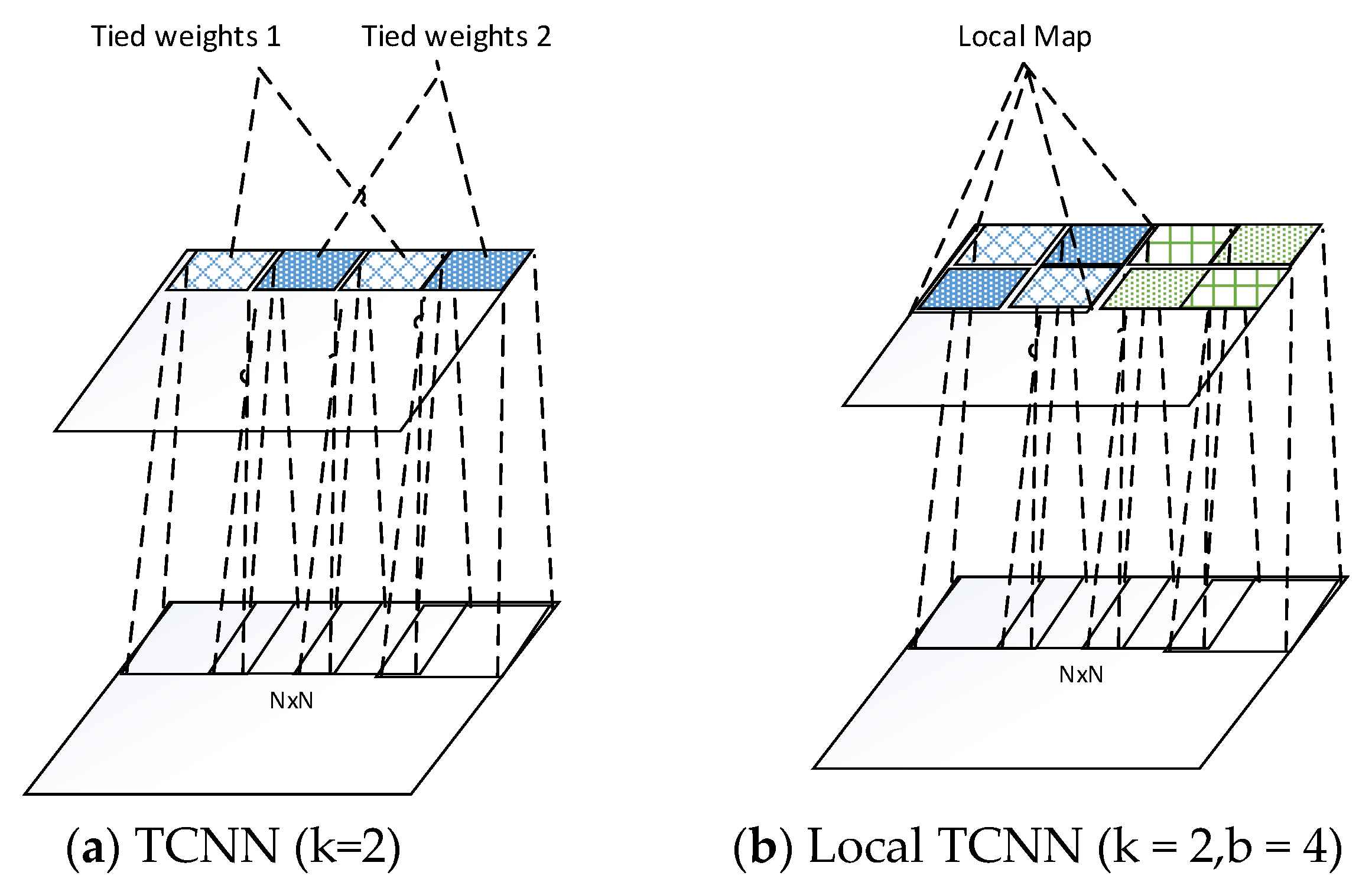

4. Local Tiled Convolutional Neural Networks

5. Histogram of Oriented Gradient

- (1) Gamma/Color Normalization: Normalization is necessary to reduce the effects of illumination and shadow; therefore, gamma/color normalization is performed prior to HOG extraction.

- (2) Gradient Computation: Gradient and orientation are calculated for further processing, as they provide information regarding contour and texture that can reduce the effect of illumination. Gaussian smoothing followed by a discrete derivative mask is effective for calculating the gradient. For a color image, gradients are calculated separately for each color channel, and the one with the largest norm is taken as the gradient vector.

- (3) HOG of cells: Images are divided into local spatial patches called “cells”. An edge orientation histogram is calculated for each cell based on the weighted votes of the gradient and orientation. The votes are usually weighted by the magnitude of gradient (MOG), the square of MOG, the square root of MOG, or a clipped form of MOG; in our experiment, a function of the MOG was used as the weight. Moreover, the votes were linearly interpolated between neighbouring bins in both orientation and position.

- (4) Contrast normalization of blocks: Illumination varies from cell to cell, resulting in uneven gradient strengths, so local contrast normalization is essential. By grouping cells into larger, overlapping spatial blocks, we were able to perform contrast normalization at the block level.
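The four steps above can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the function name `hog_descriptor` and all parameter values (8-pixel cells, 2 x 2-cell blocks, 9 unsigned orientation bins, square-root gamma, L2 block normalization) are hypothetical defaults chosen for the example. It also simplifies the description in three ways: the input is a single grayscale channel, the gradient uses a plain centered mask without Gaussian smoothing, and votes are interpolated in orientation only, not in position.

```python
import math

def hog_descriptor(img, cell=8, block=2, bins=9, gamma=0.5, eps=1e-6):
    """Sketch of HOG for a 2-D list of grayscale intensities in [0, 1]."""
    h, w = len(img), len(img[0])

    # (1) Gamma normalization (power-law compression of intensities).
    g = [[px ** gamma for px in row] for row in img]

    n_cy, n_cx = h // cell, w // cell
    hist = [[[0.0] * bins for _ in range(n_cx)] for _ in range(n_cy)]
    bin_width = 180.0 / bins

    for y in range(n_cy * cell):
        for x in range(n_cx * cell):
            # (2) Centered [-1, 0, 1] derivative mask; borders are clamped.
            gx = g[y][min(x + 1, w - 1)] - g[y][max(x - 1, 0)]
            gy = g[min(y + 1, h - 1)][x] - g[max(y - 1, 0)][x]
            mag = math.hypot(gx, gy)
            ang = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation

            # (3) Magnitude-weighted vote, linearly split between the two
            #     nearest orientation bins (bin centers at (k + 0.5) * bin_width).
            pos = ang / bin_width - 0.5
            lo = math.floor(pos)
            frac = pos - lo
            cell_hist = hist[y // cell][x // cell]
            cell_hist[lo % bins] += mag * (1.0 - frac)
            cell_hist[(lo + 1) % bins] += mag * frac

    # (4) L2 normalization over overlapping blocks (stride of one cell).
    feat = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = [val
                 for dy in range(block)
                 for dx in range(block)
                 for val in hist[by + dy][bx + dx]]
            norm = math.sqrt(sum(val * val for val in v) + eps ** 2)
            feat.extend(val / norm for val in v)
    return feat

# Usage: a 32 x 32 horizontal intensity ramp.
ramp = [[x / 31.0 for x in range(32)] for _ in range(32)]
features = hog_descriptor(ramp)
print(len(features))  # 3 x 3 blocks x 4 cells x 9 bins = 324
```

Because each block is normalized independently and blocks overlap, every interior cell contributes to several blocks, each time scaled by a different local contrast estimate.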

6. Results

6.1. Frontal-View Extraction

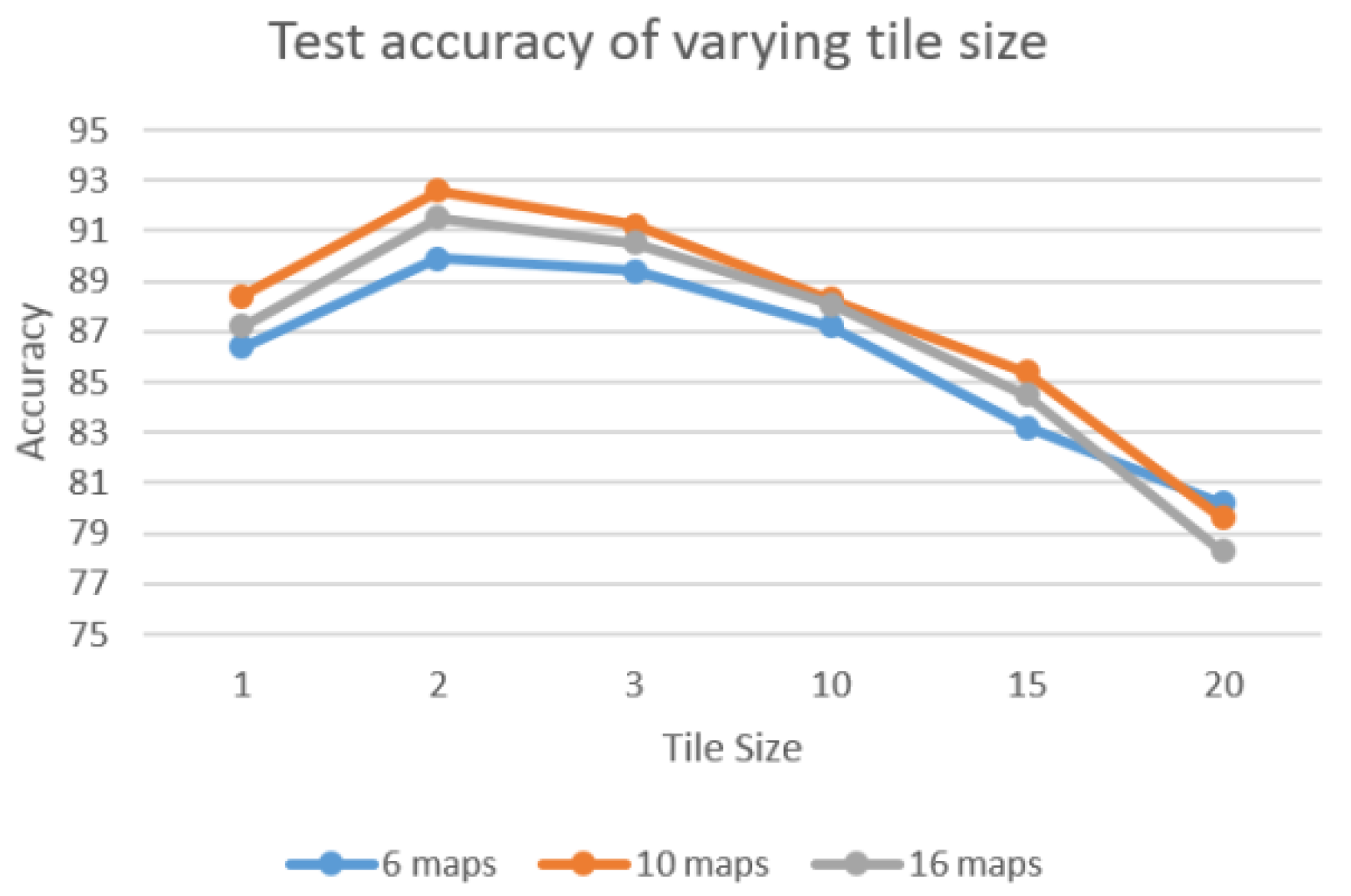

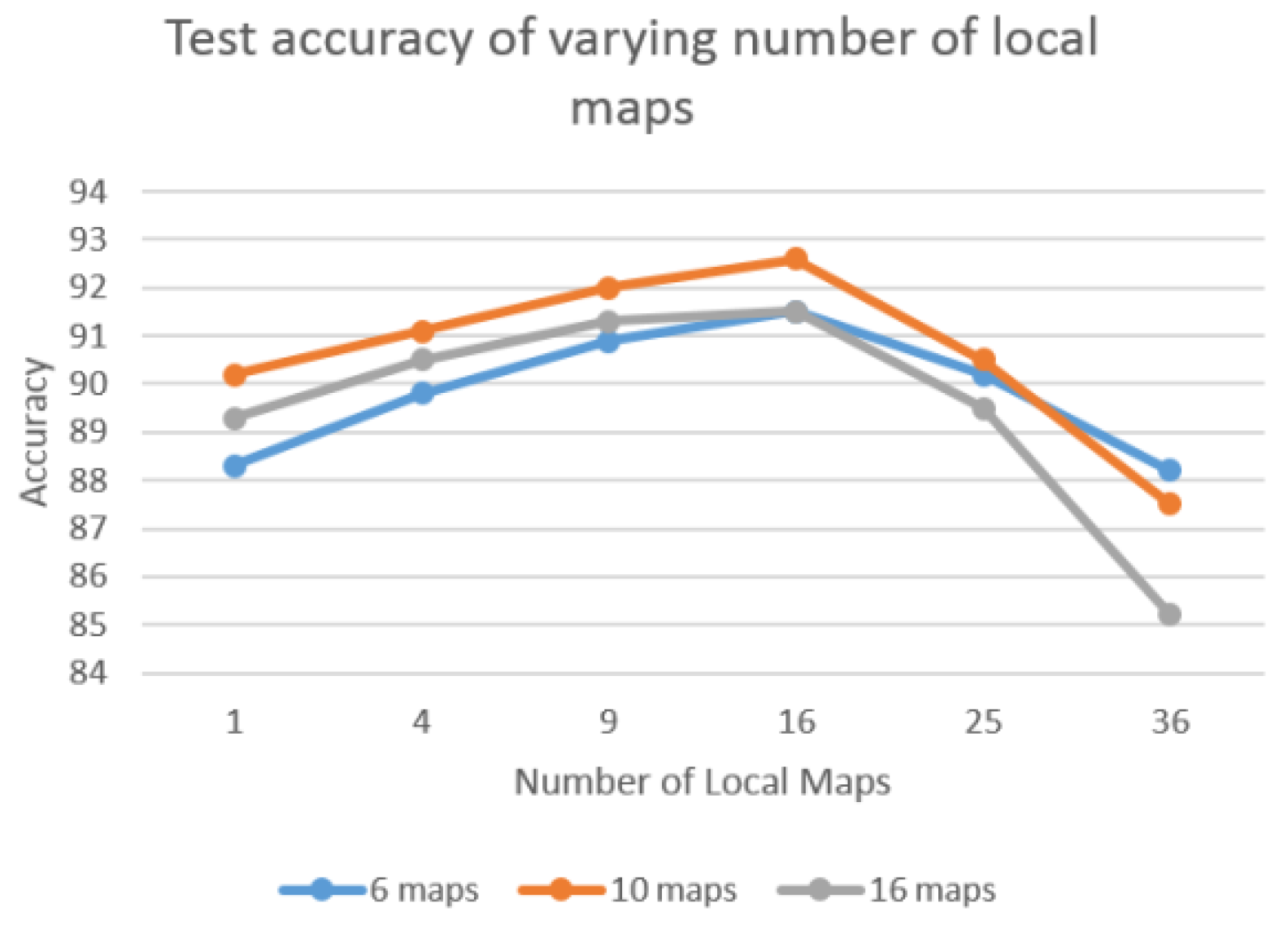

6.2. Local Tiled CNN

6.3. Enhancement with HOG Feature

6.4. Comparison with Other Algorithms

- (1) LBP: The LBP operator, one of the most popular texture descriptors across various applications, is invariant to monotonic gray-level changes and is computationally efficient. In our experiment, frontal-view images were characterized by a histogram vector of 59 bins, and the weighted Chi-square distance was used to measure the difference between two LBP histograms.

- (2) LGBP: LGBP is an extension of LBP that incorporates Gabor filtering: the vehicle images are first filtered with Gabor filters, and the results are Gabor Magnitude Pictures (GMPs) in the frequency domain. In our experiment, five scales and eight orientations were used for the Gabor filter; as a result, 40 GMPs were generated for each vehicle image. The weighted Chi-square distance was again used to measure the differences between LGBP features.

- (3) SIFT: SIFT is an effective local descriptor with scale- and rotation-invariant properties. Training is not necessary for the SIFT algorithm. To compare SIFT with our LTCNN, which requires training in advance, we compared each test image against the same number of training images, and the total number of matched keypoints was used to measure the similarity between two images.
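To make the LBP baseline concrete, the following sketch computes a 59-bin uniform LBP histogram and a weighted Chi-square distance using only the Python standard library. It rests on assumptions not stated above: an 8-neighbour, radius-1 operator, with the 58 uniform patterns mapped to their own bins and all remaining patterns sharing the last bin; and a distance of the form sum over regions j of w_j * sum over bins i of (a_ij - b_ij)^2 / (a_ij + b_ij). The names `lbp_histogram` and `weighted_chi_square`, the region split, and the weights are hypothetical.

```python
def _transitions(code):
    """Number of 0/1 transitions in the circular 8-bit pattern."""
    rotated = ((code << 1) & 0xFF) | (code >> 7)
    return bin(code ^ rotated).count("1")

# Uniform patterns have at most two transitions; there are 58 of them.
UNIFORM = [c for c in range(256) if _transitions(c) <= 2]
BIN_OF = {c: i for i, c in enumerate(UNIFORM)}

# Clockwise 8-neighbourhood offsets (dy, dx) at radius 1.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_histogram(img):
    """Normalized 59-bin uniform LBP histogram of a 2-D list of intensities."""
    h, w = len(img), len(img[0])
    hist = [0.0] * 59
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            center = img[y][x]
            code = 0
            for k, (dy, dx) in enumerate(NEIGHBOURS):
                if img[y + dy][x + dx] >= center:
                    code |= 1 << k
            # Non-uniform patterns all fall into the last bin (index 58).
            hist[BIN_OF.get(code, 58)] += 1.0
    total = sum(hist) or 1.0
    return [v / total for v in hist]

def weighted_chi_square(regions_a, regions_b, weights):
    """Weighted Chi-square distance between two lists of region histograms."""
    dist = 0.0
    for ha, hb, wt in zip(regions_a, regions_b, weights):
        dist += wt * sum((a - b) ** 2 / (a + b)
                         for a, b in zip(ha, hb) if a + b > 0)
    return dist

# Usage: a monotonic intensity change leaves the histogram unchanged.
image = [[(7 * x + 13 * y) % 17 for x in range(12)] for y in range(12)]
brighter = [[3 * p + 5 for p in row] for row in image]
h1, h2 = lbp_histogram(image), lbp_histogram(brighter)
print(weighted_chi_square([h1], [h2], [1.0]))  # 0.0
```

The usage lines illustrate the invariance mentioned for LBP: the codes depend only on the ordering of each neighbour relative to the center pixel, so any strictly increasing intensity mapping yields the same histogram.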

| Algorithm | Accuracy (%) | Time (ms) |
|---|---|---|
| LBP | 46.0 | 2385 |
| LGBP | 68.8 | 3210 |
| SIFT | 78.3 | 3842 |
| Linear SVM | 88.0 | 1875 |
| RBM | 88.2 | 539 |
| CNN | 88.4 | 1274 |
| TCNN | 90.2 | 843 |
| LTCNN | 93.5 | 921 |
| LTCNN (with HOG) | 98.5 | 1022 |

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Gao, Y.; Lee, H.J. Local Tiled Deep Networks for Recognition of Vehicle Make and Model. Sensors 2016, 16, 226. https://doi.org/10.3390/s16020226