Vision-Based Corrosion Detection Assisted by a Micro-Aerial Vehicle in a Vessel Inspection Application

Abstract

1. Introduction

2. Background and Related Work

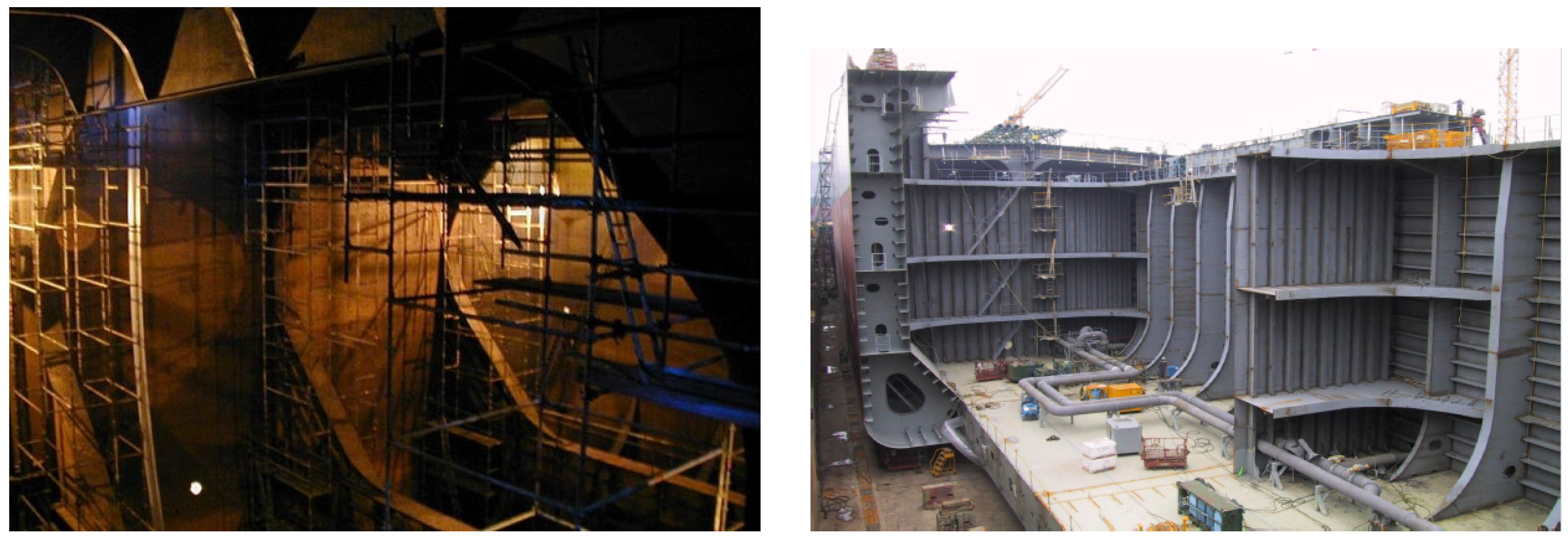

2.1. Inspection Problem and Platform Requirements

2.2. Aerial Robots for Visual Inspection

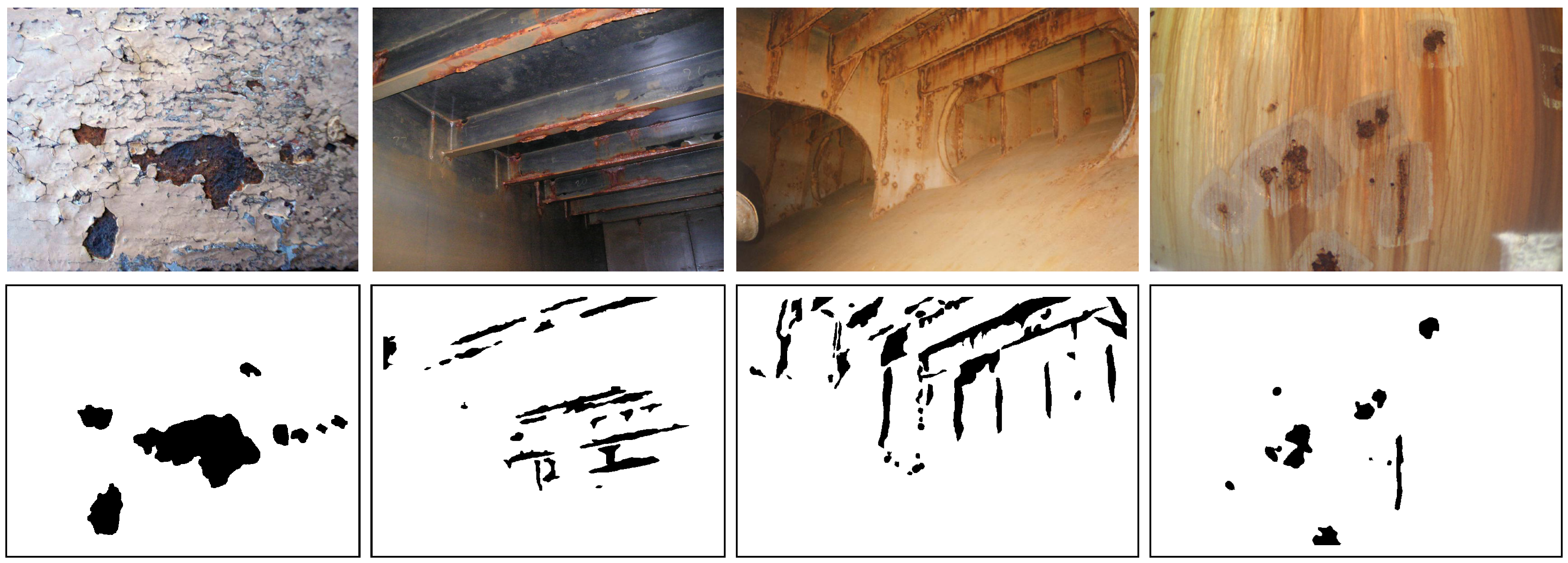

2.3. Defect Detection

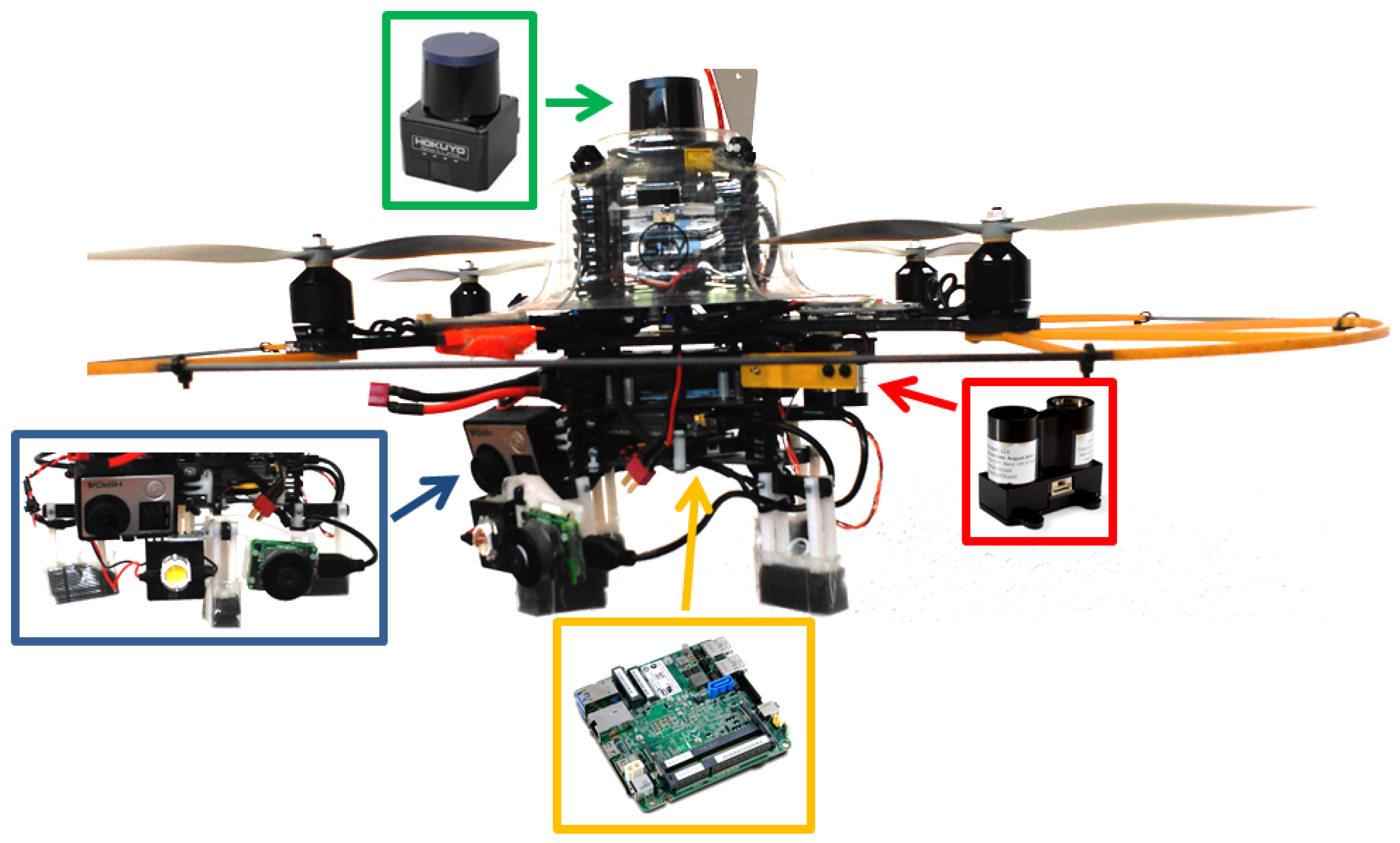

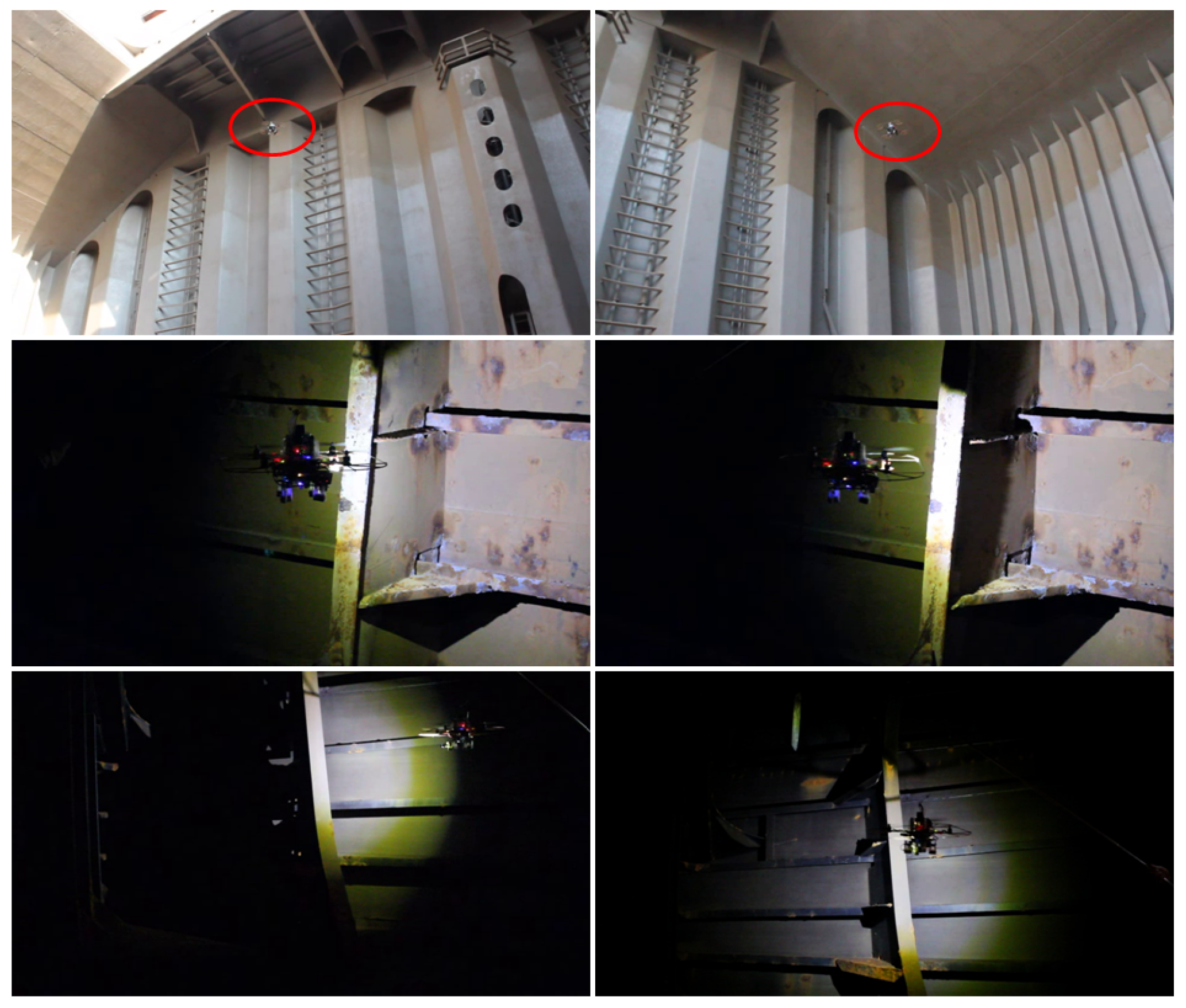

3. The Aerial Platform

3.1. General Overview

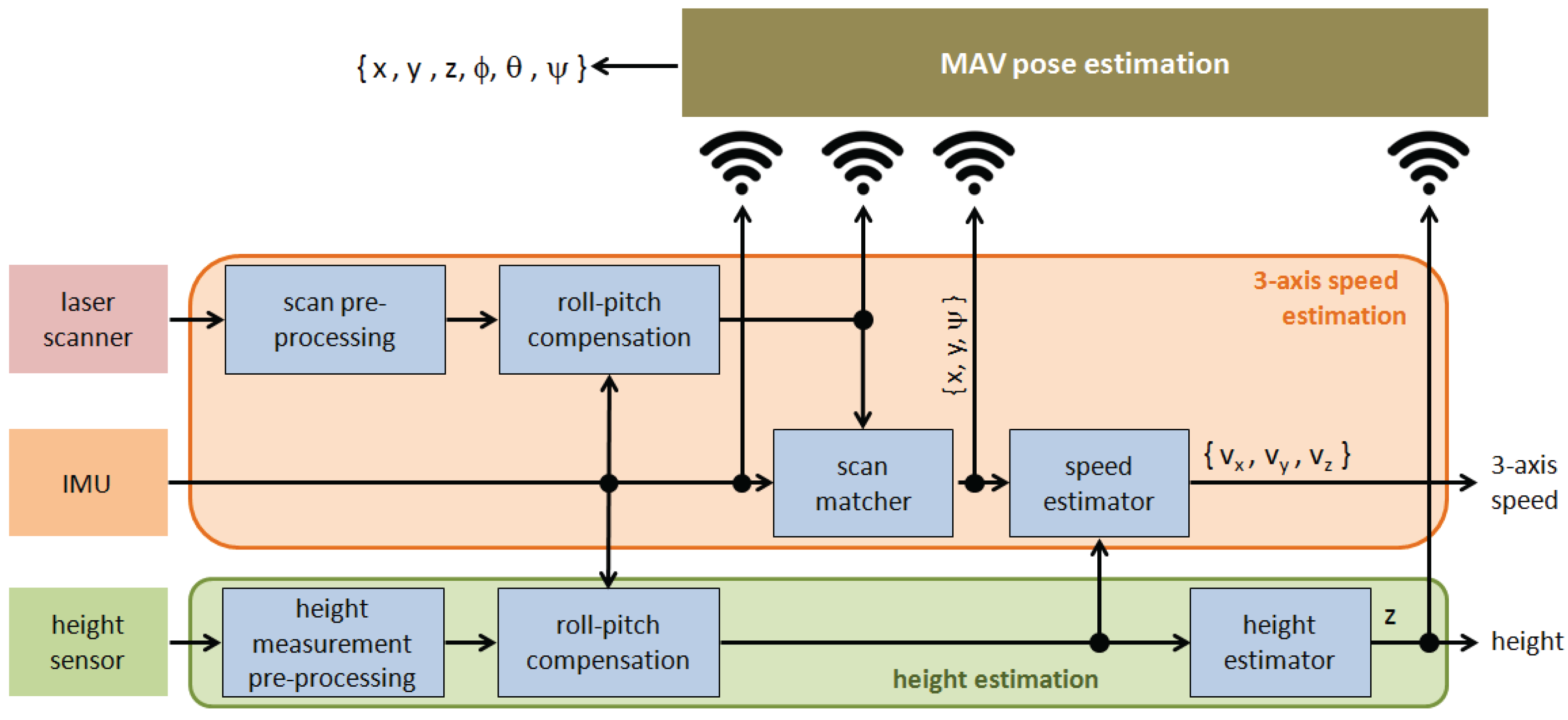

- The lightweight laser scanner Hokuyo UST-20LX, which provides 20 m coverage for a 270° angular sector. This sensor is used to estimate 2D speed as well as distances to the surrounding obstacles.

- A downward-looking LIDAR-Lite laser range finder used to supply height data for a maximum range of 40 m. Vertical speed is estimated by proper differentiation of the height measurements.

- Two cameras to collect data from the vessel structures under inspection: a Chameleon 3 camera, by Pointgrey (Richmond, VA, USA), which takes images on demand and is fitted with a Sony IMX265, CMOS, 1/1.8″, 2048 × 1536-pixel imaging sensor, by Sony (Tokyo, Japan), and a fixed-focal-length lightweight M12 8 mm lens; and a GoPro 4 camera, by GoPro (San Mateo, CA, USA), which supplies stabilized HD video footage.

- A 10 W pure white LED (5500–6500 K) delivering 130 lumens/watt for a 90° beam angle.

- An Intel NUC D54250WYB embedded PC featuring an Intel Core i5-4250U 1.3 GHz processor and 4 GB RAM.
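The sensor list above states that vertical speed is obtained by differentiating the height measurements of the downward-looking range finder. The text does not detail the differentiation scheme, so the following is only a minimal sketch under stated assumptions: a finite difference between consecutive height samples, smoothed with a first-order exponential filter. The class name, the `alpha` parameter and its default value are hypothetical and not taken from the paper.

```python
class VerticalSpeedEstimator:
    """Illustrative vertical-speed estimate from timestamped height samples.

    Assumption: finite difference plus exponential smoothing; the actual
    scheme used on the platform is not specified in the text.
    """

    def __init__(self, alpha=0.3):
        # alpha in (0, 1]: 1.0 means no smoothing (hypothetical default).
        self.alpha = alpha
        self.speed = 0.0
        self._prev_height = None
        self._prev_time = None

    def update(self, height_m, time_s):
        """Feed one height sample (metres, seconds); return speed in m/s."""
        if self._prev_height is not None and time_s > self._prev_time:
            # Raw finite-difference derivative of the height signal.
            raw = (height_m - self._prev_height) / (time_s - self._prev_time)
            # Low-pass filter to attenuate range-finder noise.
            self.speed = self.alpha * raw + (1.0 - self.alpha) * self.speed
        self._prev_height, self._prev_time = height_m, time_s
        return self.speed
```

A caller would create one estimator and invoke `update` each time a new height reading arrives; the first call returns 0.0 because no previous sample exists yet.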

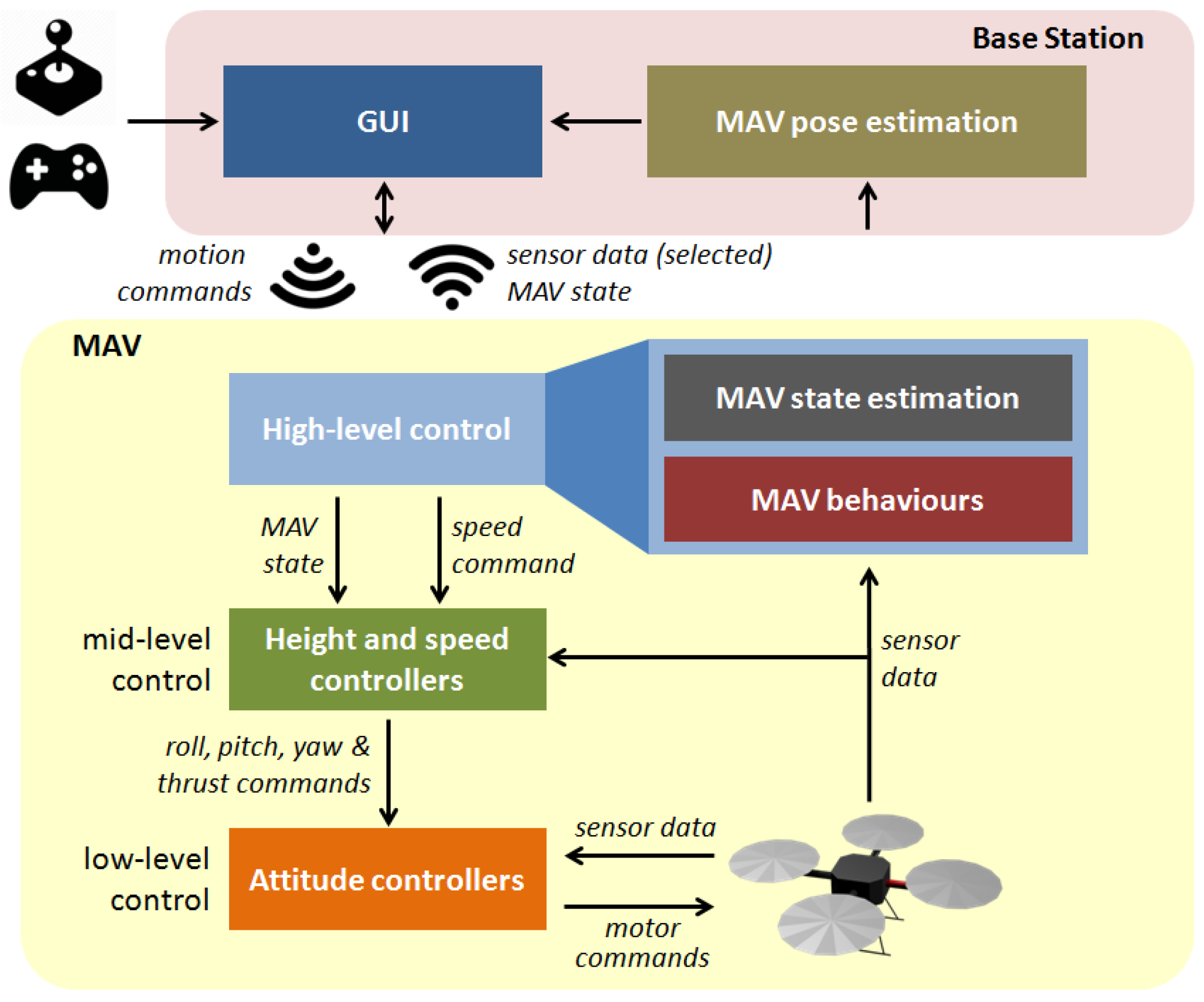

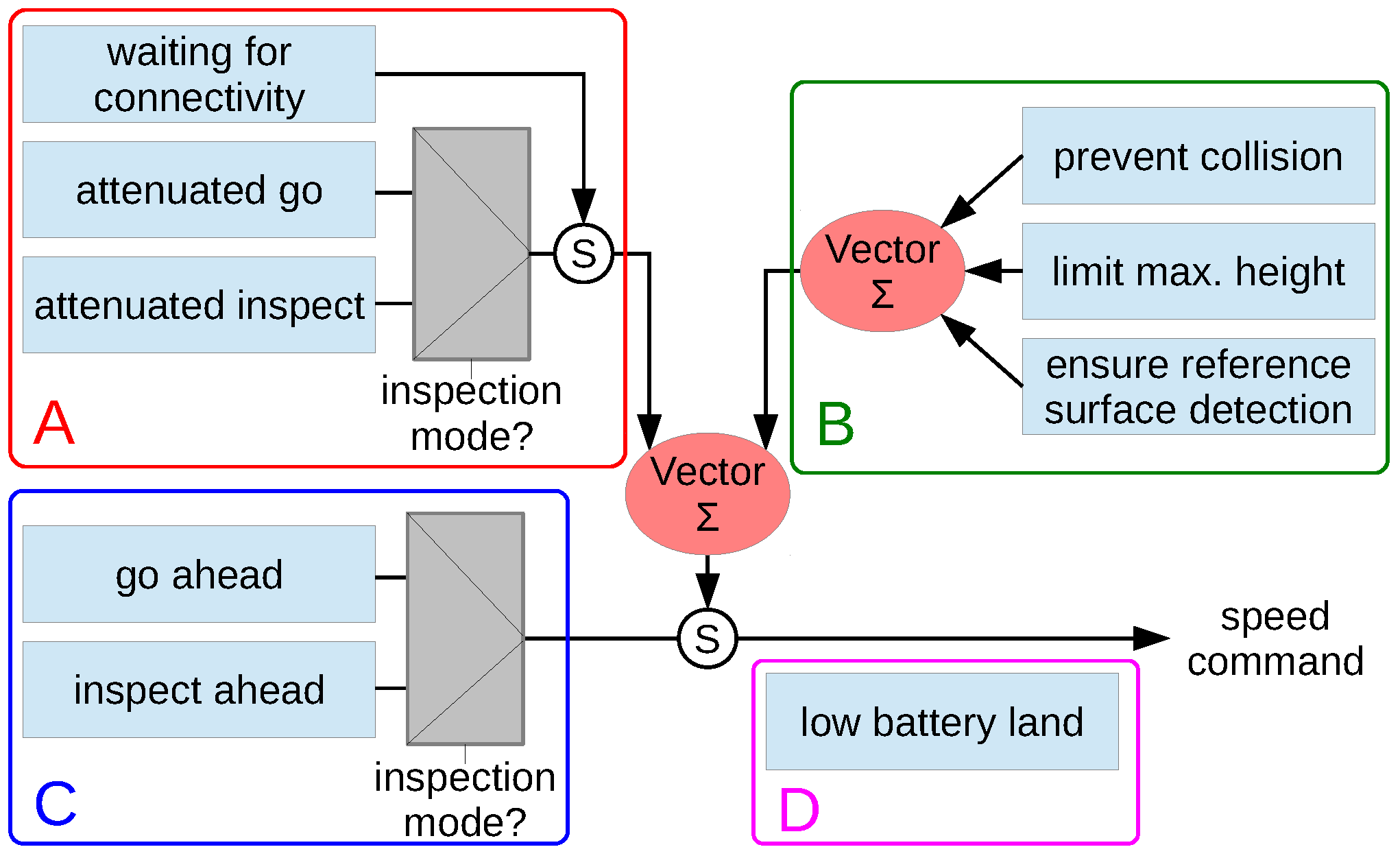

3.2. Control Software

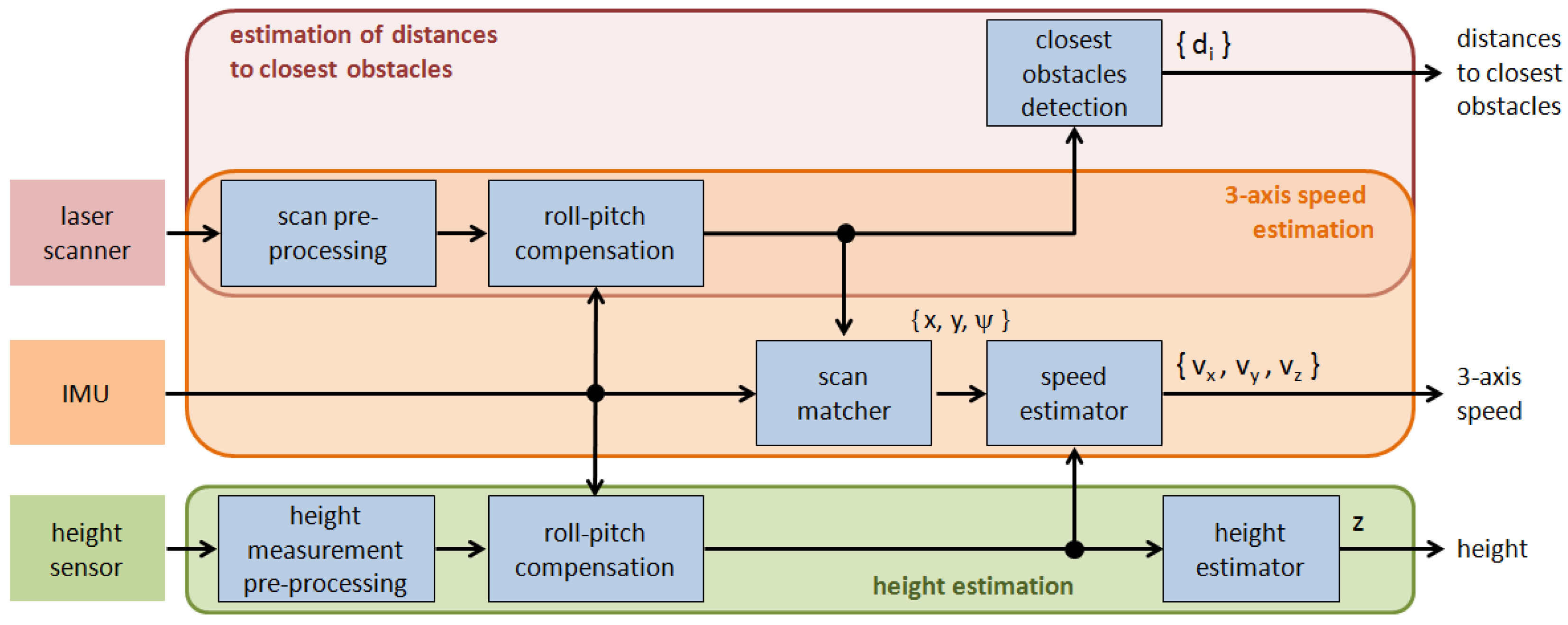

3.2.1. Estimation of MAV State and Distance to Obstacles

3.2.2. Generation of MAV Speed Commands

3.2.3. Base Station

4. Detection of Defects

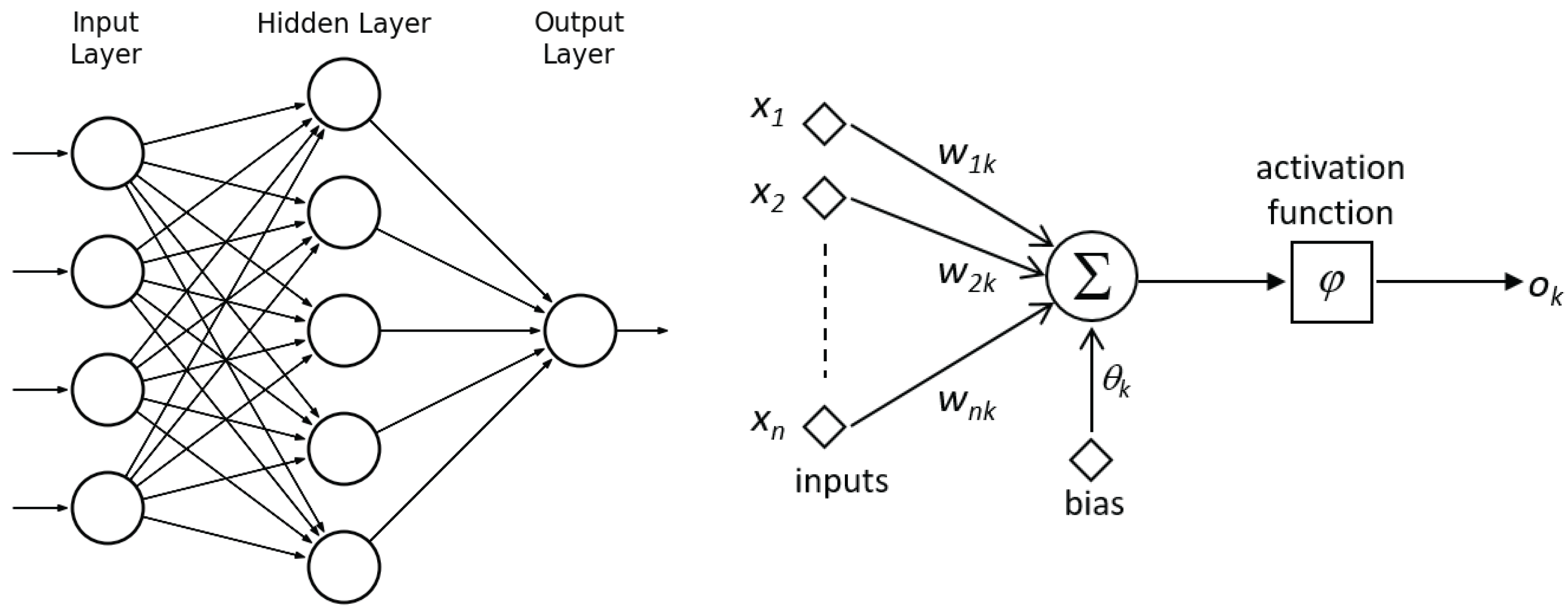

4.1. Background

4.2. Network Features

- For describing colour, we find the dominant colours inside a square patch of size $(2w+1) \times (2w+1)$ pixels, centered at the pixel under consideration. The colour descriptor comprises as many components as the number of dominant colours multiplied by the number of colour channels.

- Regarding texture, center-surround changes are accounted for in the form of signed differences between a central pixel and its neighbourhood at a given radius for every colour channel. The texture descriptor consists of a number of statistical measures about the differences occurring inside $(2w+1) \times (2w+1)$-pixel patches.

- As anticipated above, we perform a number of tests varying the different parameters involved in the computation of the patch descriptors, such as the patch size w, the number of dominant colours m, or the size of the neighbourhood for signed differences computation $(r, p)$.

- Finally, the number of hidden neurons is varied as a fraction f > 0 of the number of components n of the input patterns: $\lceil f \cdot n \rceil$.
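The descriptor outlined in the list above can be sketched as follows. This is an illustrative reading, not the authors' implementation, and it rests on several assumptions: dominant colours are found with a plain k-means (the text does not say which quantization method is used), the signed differences are taken as centre minus neighbour, the statistical measures are the per-channel mean and standard deviation (chosen because they give 12 to 18 components for 3 channels and 2 to 4 dominant colours, matching the reported range of n), and the hidden-layer size is rounded up (inferred from the reported range of 8 to 36 neurons). All function names are hypothetical.

```python
import math
import random
from statistics import fmean, pstdev


def dominant_colours(pixels, m, iters=10, seed=0):
    """Plain k-means over a list of colour tuples; returns m centres."""
    rng = random.Random(seed)
    centres = [list(map(float, c)) for c in rng.sample(pixels, m)]
    for _ in range(iters):
        clusters = [[] for _ in range(m)]
        for px in pixels:
            k = min(range(m), key=lambda j: math.dist(px, centres[j]))
            clusters[k].append(px)
        for k, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centre
                centres[k] = [fmean(ch) for ch in zip(*members)]
    return centres


def signed_differences(image, cy, cx, w, r, p):
    """Per-channel lists of (centre - neighbour) over the whole patch.

    Neighbours are p points on a circle of radius r, rounded to the pixel
    grid. The caller must keep a margin of w + r pixels from the border.
    """
    offsets = [(round(-r * math.sin(2 * math.pi * k / p)),
                round(r * math.cos(2 * math.pi * k / p))) for k in range(p)]
    channels = len(image[0][0])
    diffs = [[] for _ in range(channels)]
    for y in range(cy - w, cy + w + 1):
        for x in range(cx - w, cx + w + 1):
            for dy, dx in offsets:
                for c in range(channels):
                    diffs[c].append(image[y][x][c] - image[y + dy][x + dx][c])
    return diffs


def patch_descriptor(image, cy, cx, w, m, r, p):
    """Colour part (m * channels values) followed by texture part."""
    pixels = [tuple(image[y][x]) for y in range(cy - w, cy + w + 1)
              for x in range(cx - w, cx + w + 1)]
    colour = [v for centre in dominant_colours(pixels, m) for v in centre]
    diffs = signed_differences(image, cy, cx, w, r, p)
    # Assumed statistics: mean and standard deviation per channel.
    texture = [fmean(d) for d in diffs] + [pstdev(d) for d in diffs]
    return colour + texture


def hidden_neurons(f, n):
    """Hidden-layer size as a fraction f of n, rounded up (assumption)."""
    return math.ceil(f * n)
```

Here `image` is a nested list indexed as `image[y][x][channel]`; an array library would be the natural choice in practice, and plain lists are used only to keep the sketch dependency-free.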

4.2.1. Dominant Colours

4.2.2. Signed Surrounding Differences

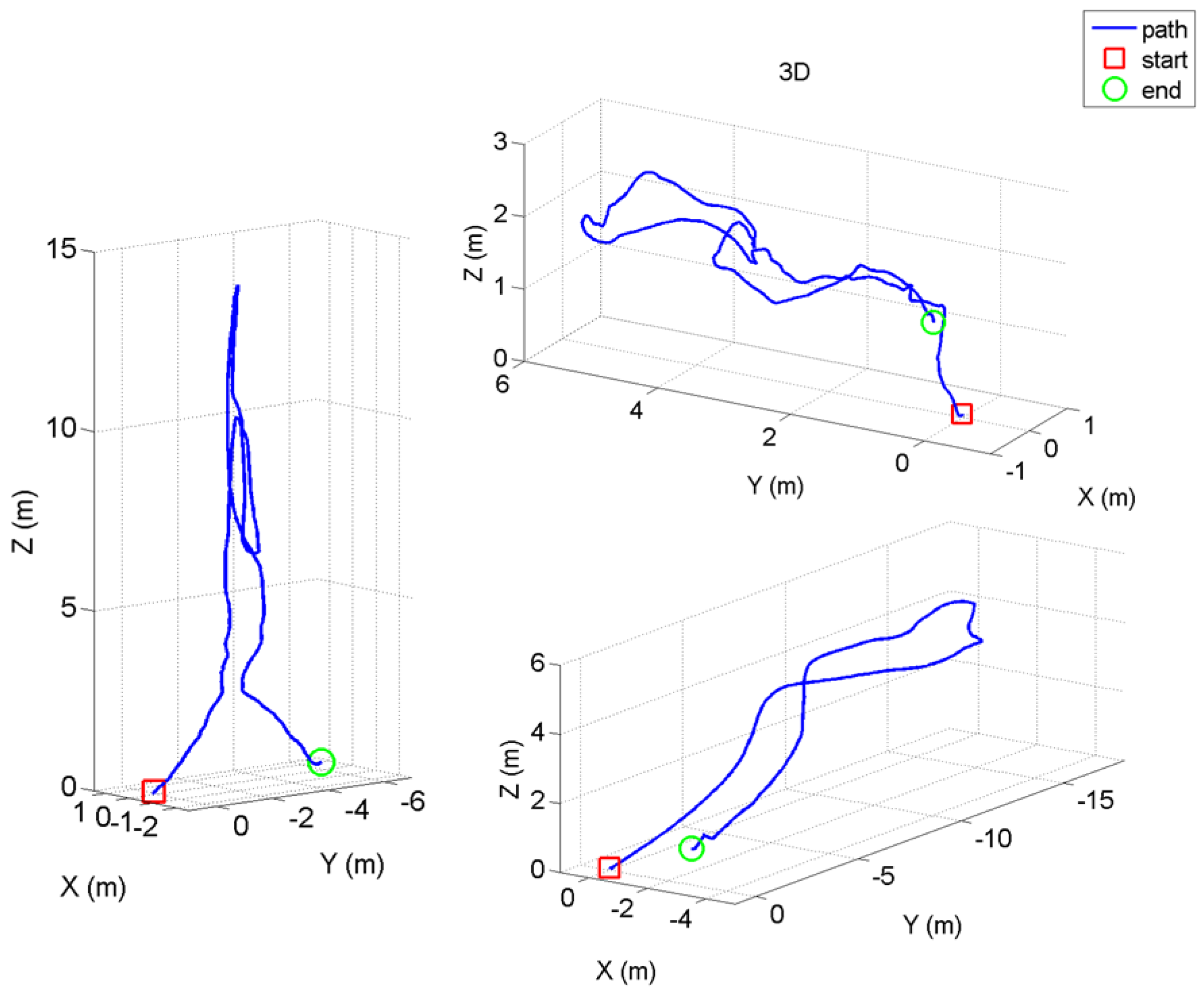

5. Experimental Results

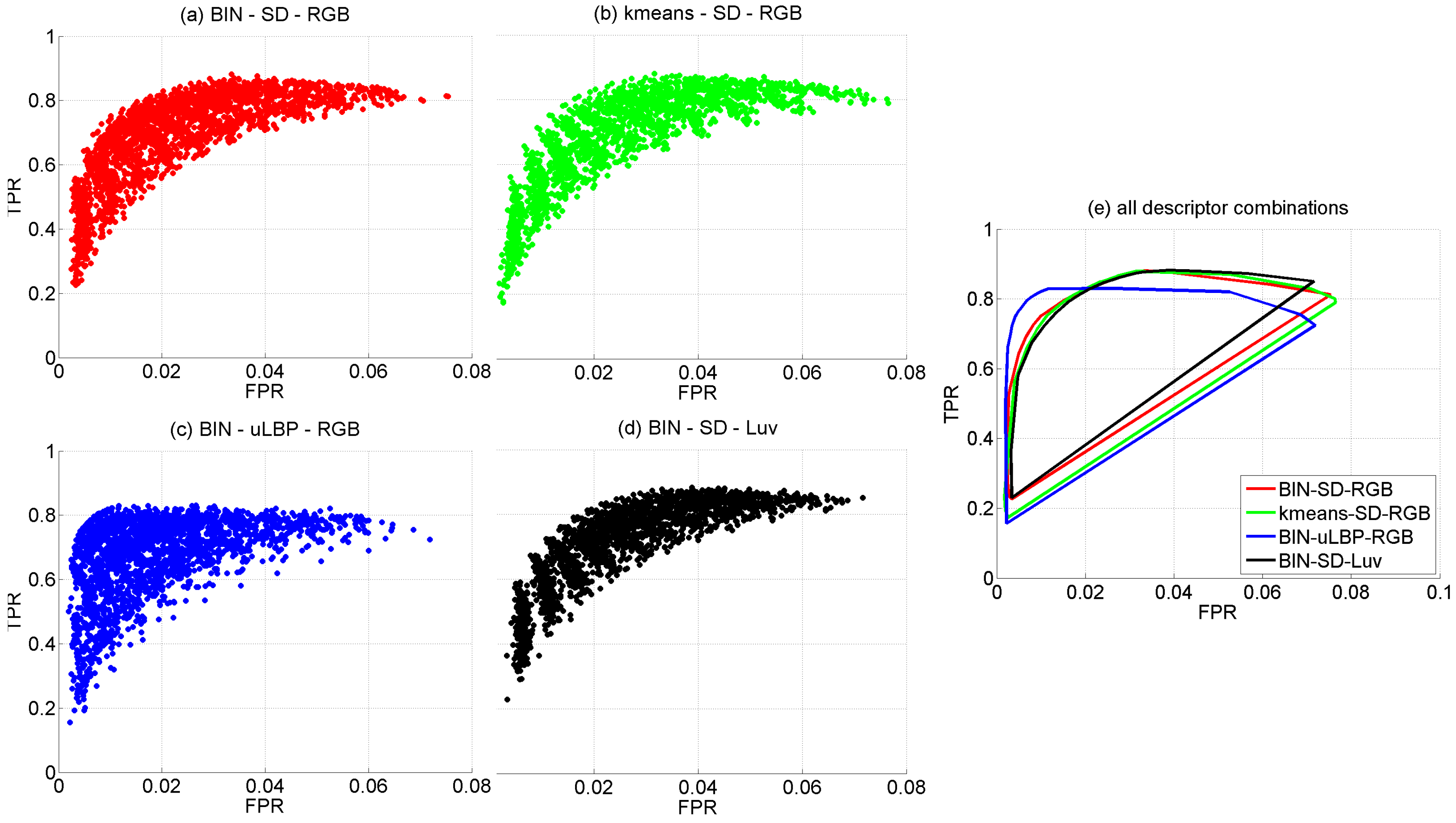

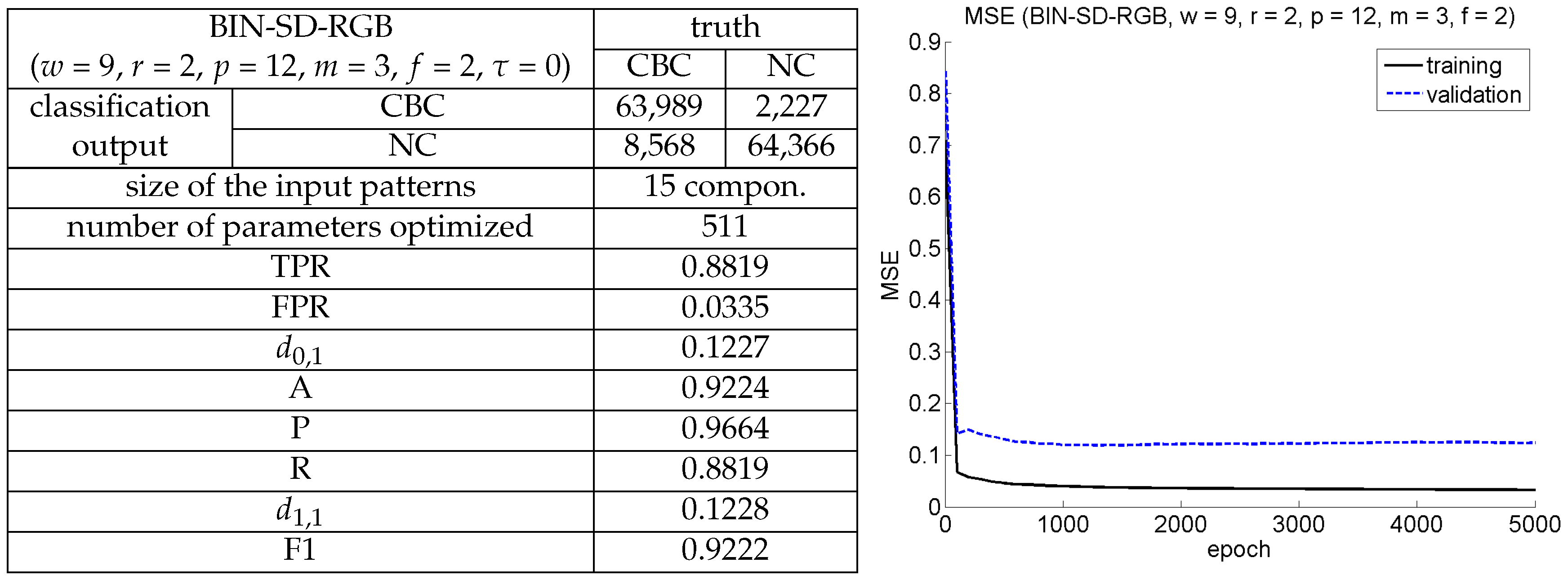

5.1. Configuration of the CBC Detector

- Half-patch size: w = 3, 5, 7, 9 and 11, giving rise to neighbourhood sizes ranging from $7 \times 7$ to $23 \times 23$ pixels.

- Number of DC: m = 2, 3 and 4.

- Number of neighbours p and radius r to compute the SD: $(r, p) = (1, 8)$ and $(r, p) = (2, 12)$.

- Number of neurons in the hidden layer: $\lceil f \cdot n \rceil$, with f = 0.6, 0.8, 1, 1.2, 1.4, 1.6, 1.8 and 2. Taking into account the previous configurations, the number of components in the input patterns n varies from 12 ($m = 2$) to 18 ($m = 4$), and hence the number of hidden neurons goes from 8 ($f = 0.6$, $m = 2$) to 36 ($f = 2$, $m = 4$).

- We select a number of patches from the images belonging to the generic corrosion dataset. The set of patches is split into the training patch set and the test patch set (additional patches are used to define a validation patch set, which will be introduced later).

- A patch is considered positive (CBC class) if the central pixel appears labelled as CBC in the ground truth. The patch is considered negative (NC class) if none of its pixels belong to the CBC class.

- Positive samples are thus selected using ground truth CBC pixels as patch centers, shifting each center by a certain number of pixels to pick the next patch so that consecutive patches overlap (by 57% to 87%, taking all the patch sizes into account), which yields a sufficiently rich dataset.

- Negative patches, far more abundant in the input images, are selected randomly so that the numbers of positive and negative patterns are approximately equal, which prevents training from being biased towards one of the classes.

- Initially, 80% of the set of patches are placed in the training patch dataset, and the remaining patches are left for testing.

- Training, as far as the CBC class is concerned, is constrained to patches with at least 75% of pixels labelled as CBC. As a consequence, approximately 25% of the initial training patches have had to be moved to the test patch set. Notice that this penalizes the resulting detector to some extent during testing (consider, e.g., the extreme case of a patch in which only the central pixel belongs to the CBC class). In any case, it is considered useful for checking the generality of the detector.

- Regarding the patch test set, TPR = R = 0.8819 and FPR = 0.0335 respectively indicate that less than 12% of positive patches and around 3% of negative patches of the set are not identified as such, while A = 0.9224 means that the erroneous identifications represent less than 8% of the total set of patches.

- At the pixel level, A = 0.9414, i.e., accuracy turns out to be higher than for patches, leading to an average incidence of errors (1 − A = FFP + FFN) of about 6% (5.86%), slightly higher for false positives: 3.08% against 2.78%.

- In accordance with the above, the CBC detector can be said to perform well under general conditions, with fewer erroneous identifications at the pixel level (about 6%) than on the test patch set (about 8%).
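The figures quoted in the list above are tied together by simple identities, which the following sketch checks numerically. It assumes the standard definitions of TPR, FPR, accuracy and the fractions of false positives and negatives over the whole set; the function names are hypothetical, and the small confusion counts in the usage note are made up purely for illustration. The patch counts (72,557 positive and 66,593 negative test patches) are those listed in the parameter table of this article.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Standard rates from confusion counts (assumed definitions)."""
    total = tp + fp + tn + fn
    return {
        "TPR": tp / (tp + fn),    # recall R
        "FPR": fp / (fp + tn),
        "A": (tp + tn) / total,   # accuracy
        "FFP": fp / total,        # fraction of false positives
        "FFN": fn / total,        # fraction of false negatives
    }


def accuracy_from_rates(tpr, fpr, n_pos, n_neg):
    """Accuracy implied by TPR and FPR for a given class balance."""
    true_pos = tpr * n_pos
    true_neg = (1.0 - fpr) * n_neg
    return (true_pos + true_neg) / (n_pos + n_neg)


# Patch level: reported TPR and FPR combined with the test-set class sizes.
patch_accuracy = accuracy_from_rates(0.8819, 0.0335, 72557, 66593)

# Pixel level: the reported FFP and FFN should add up to 1 - A.
pixel_error = 0.0308 + 0.0278
```

With these inputs, `round(patch_accuracy, 4)` gives 0.9224, which agrees with the reported patch-level accuracy, and `pixel_error` equals 1 − 0.9414 = 0.0586 up to floating-point rounding. For any counts, e.g. `confusion_metrics(tp=8, fp=1, tn=9, fn=2)`, the identity 1 − A = FFP + FFN holds by construction.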

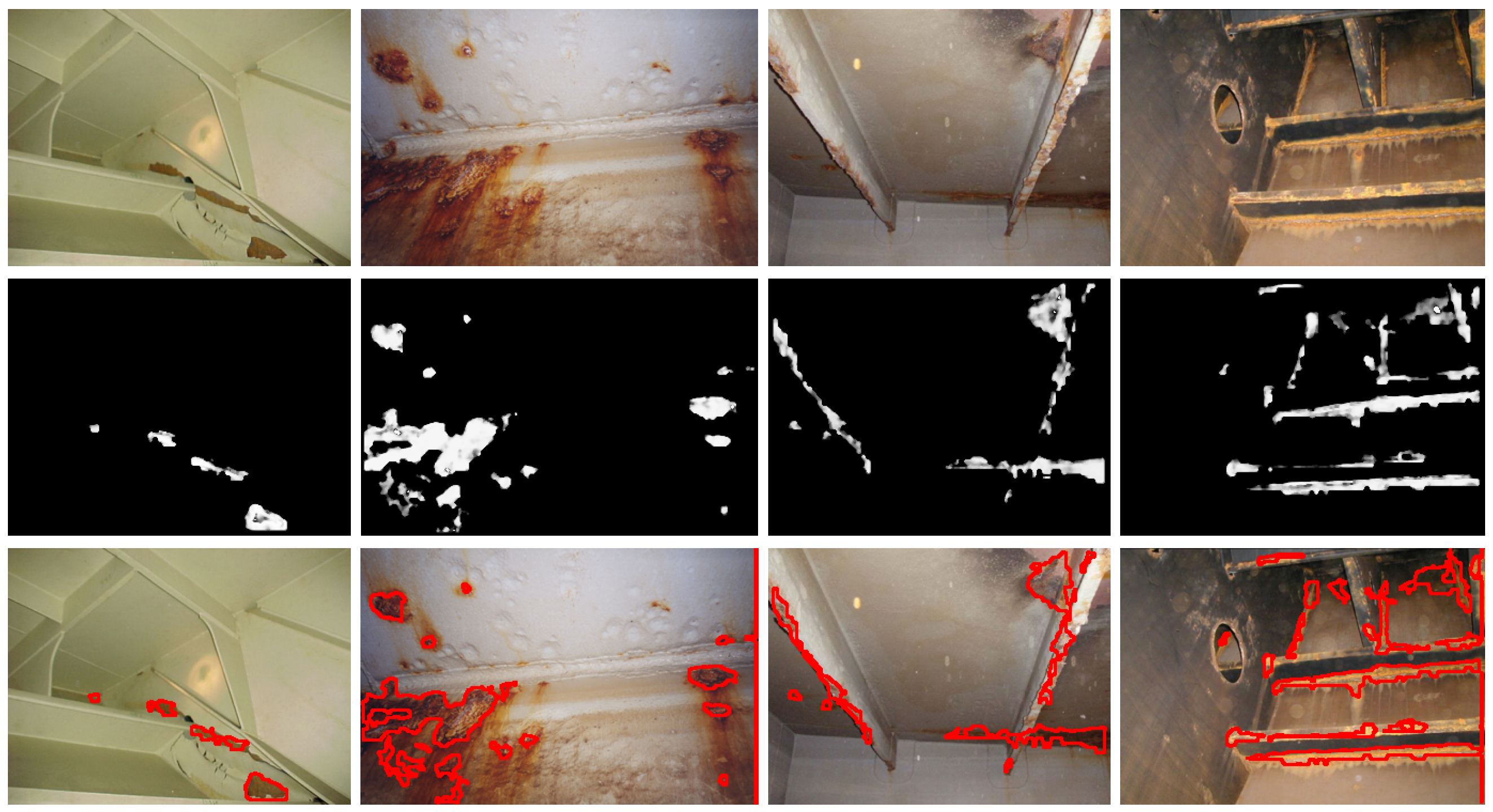

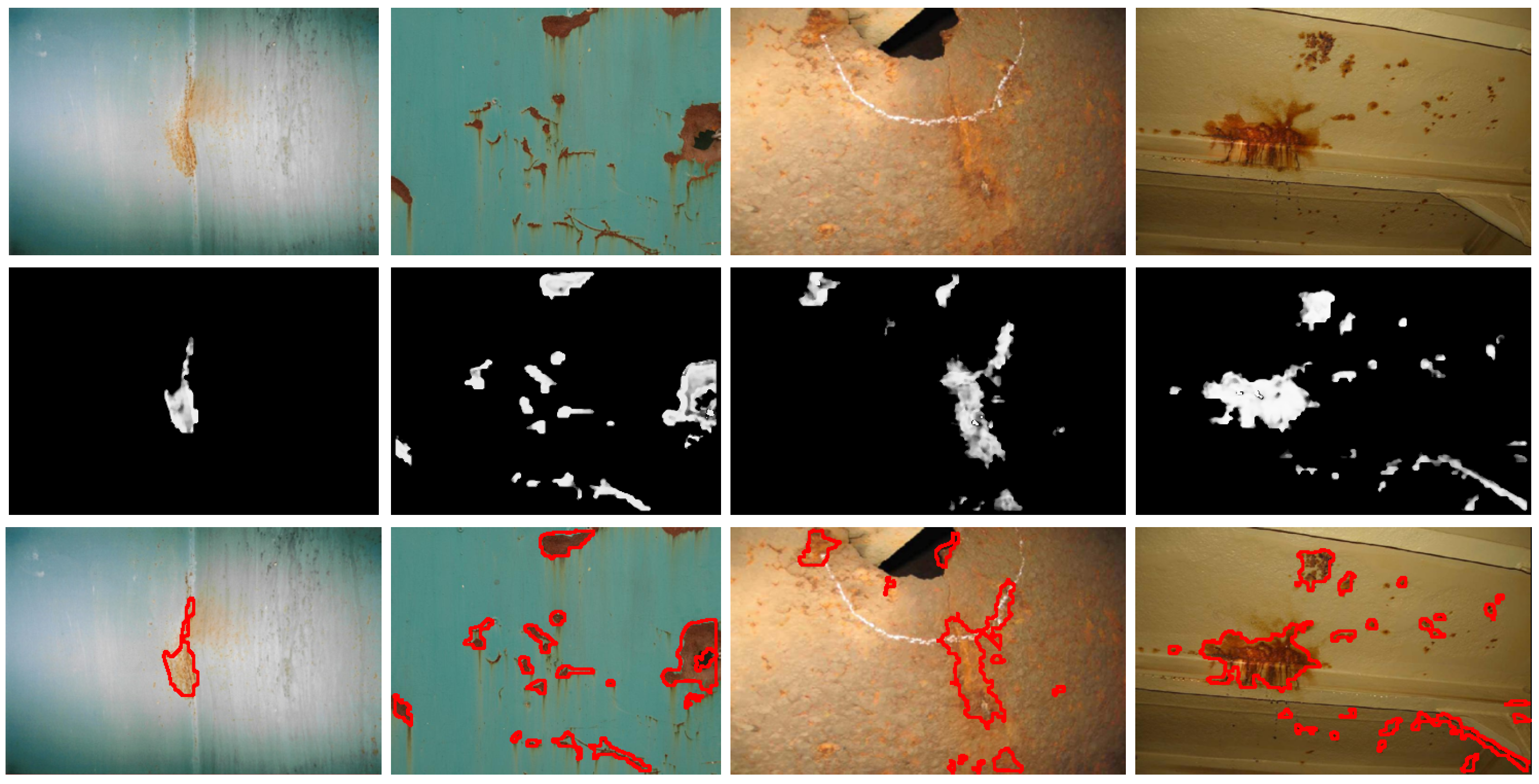

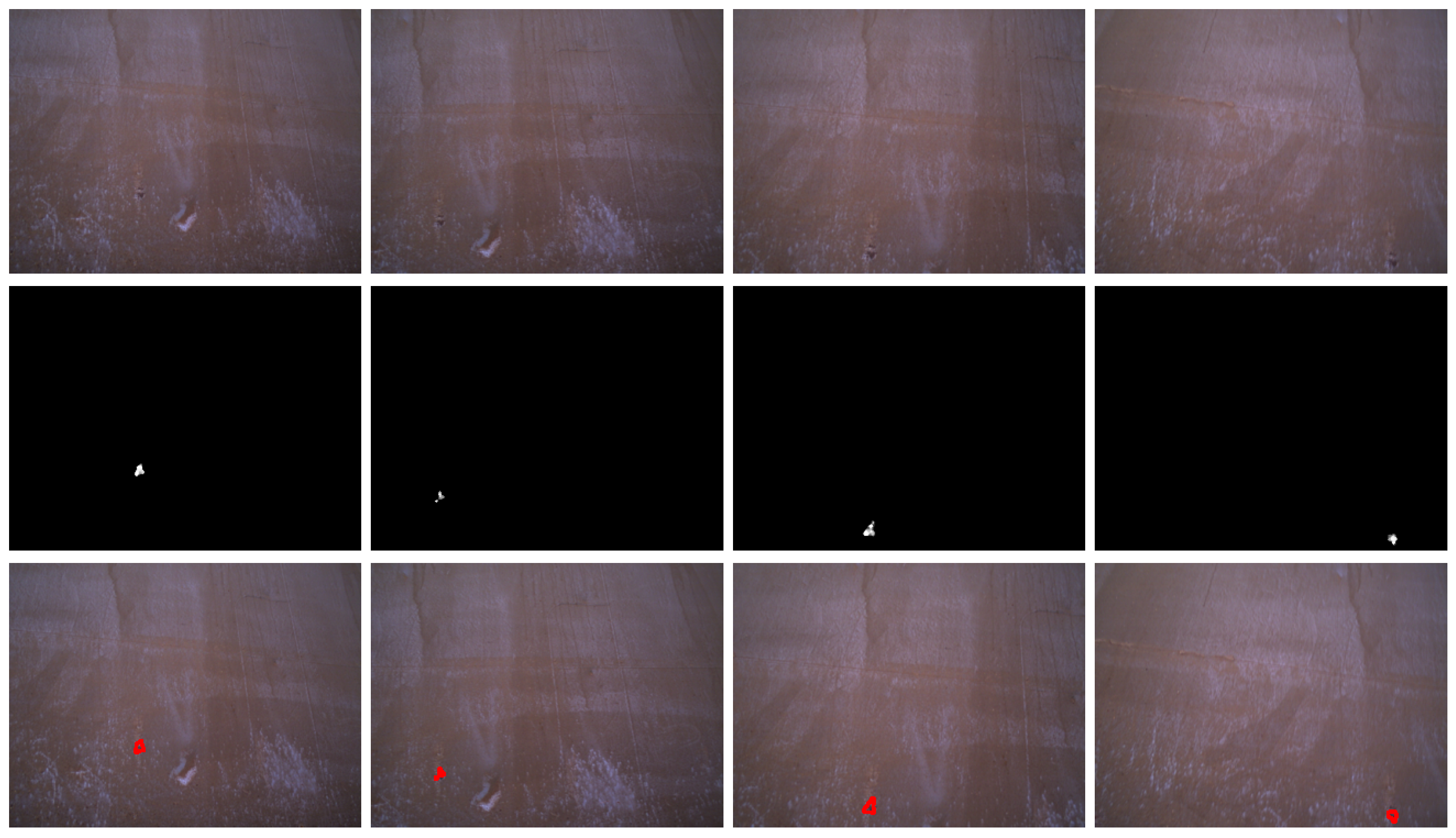

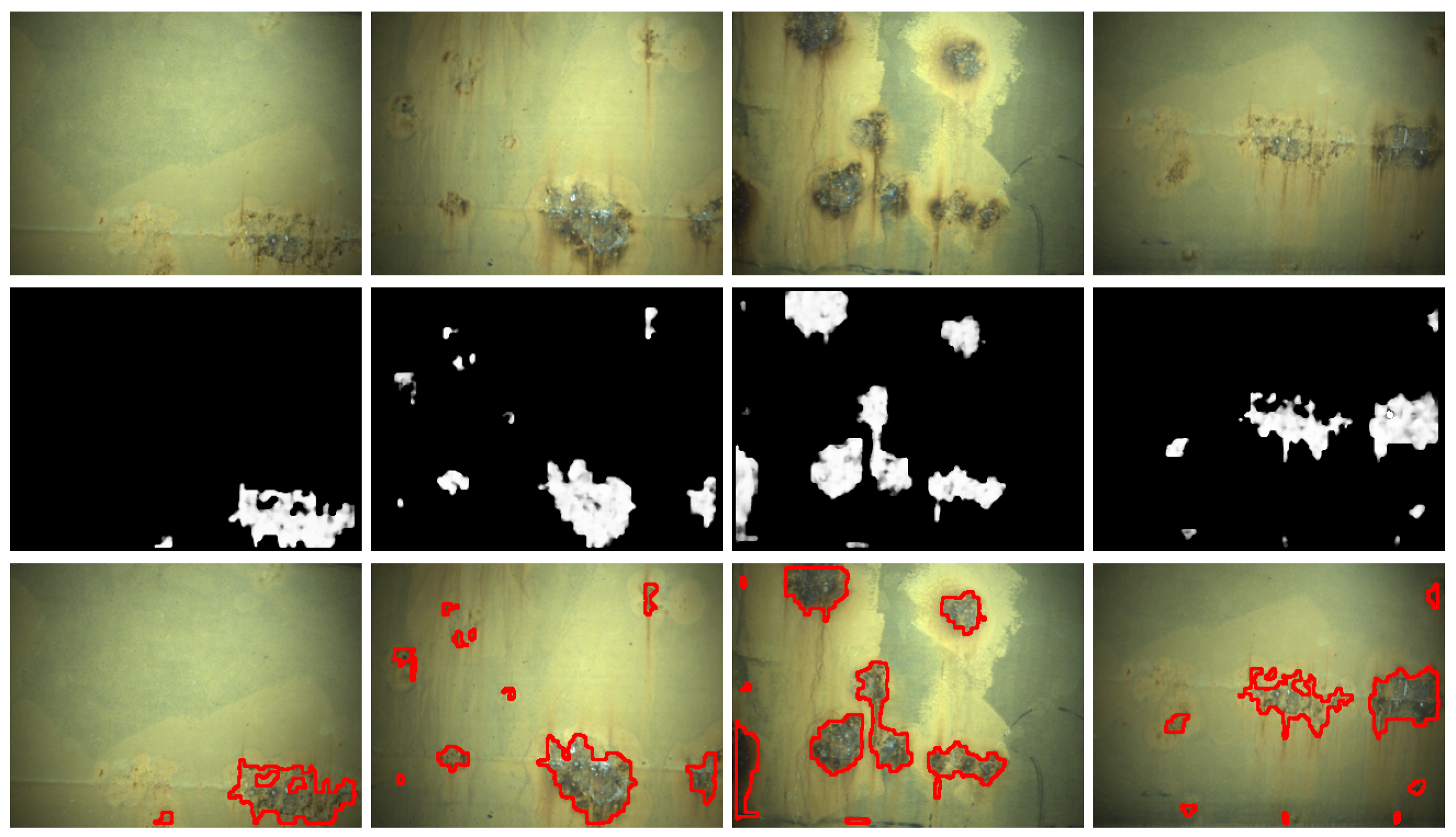

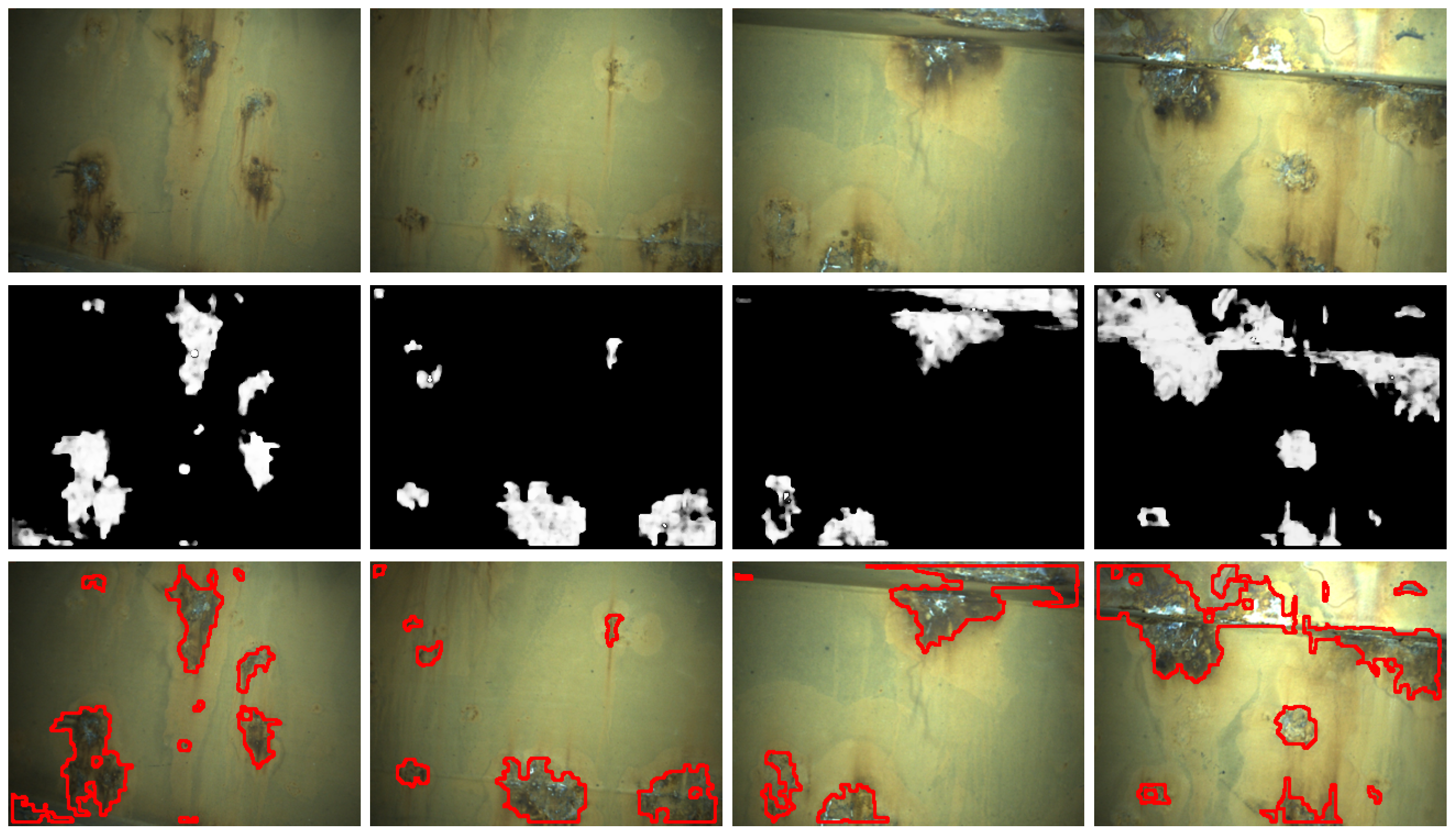

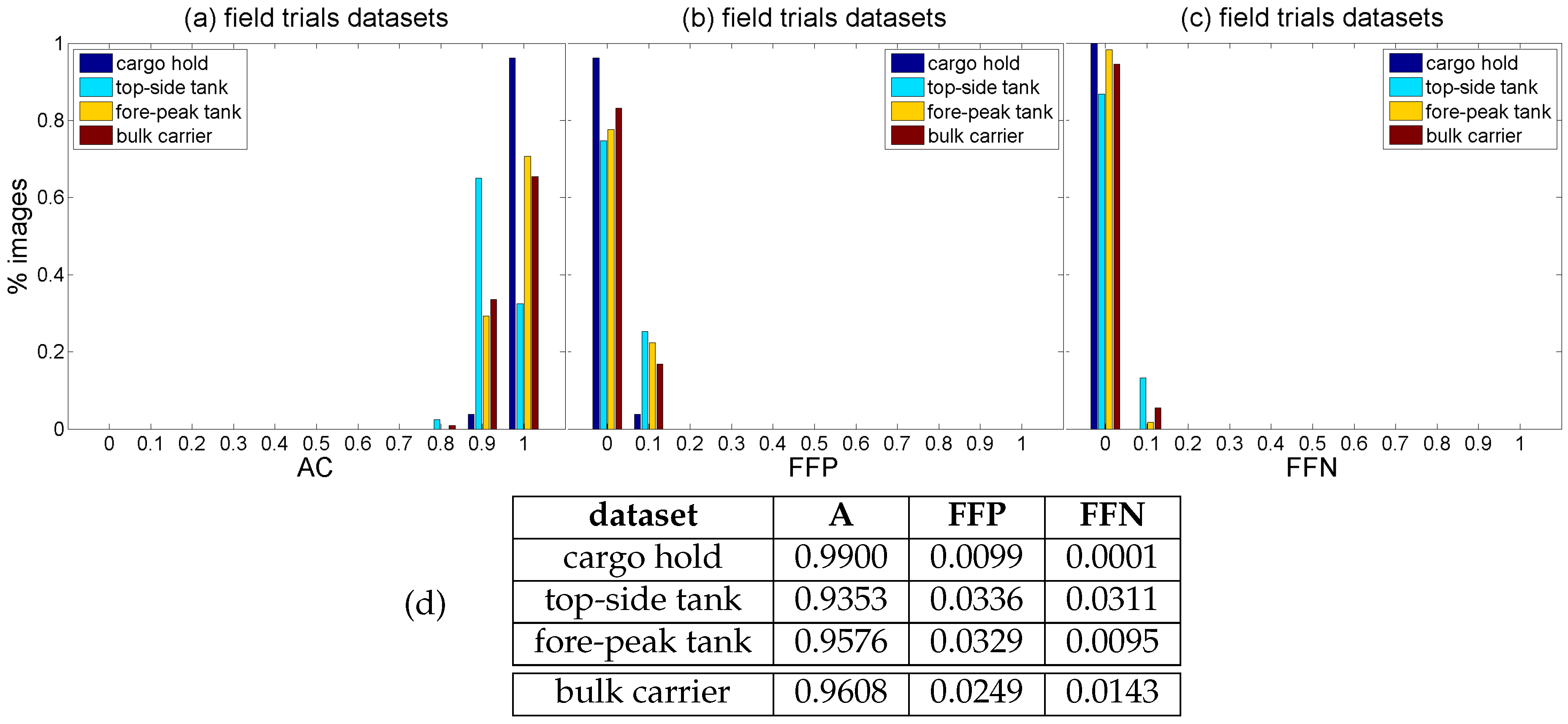

5.2. Results for Field Test Images

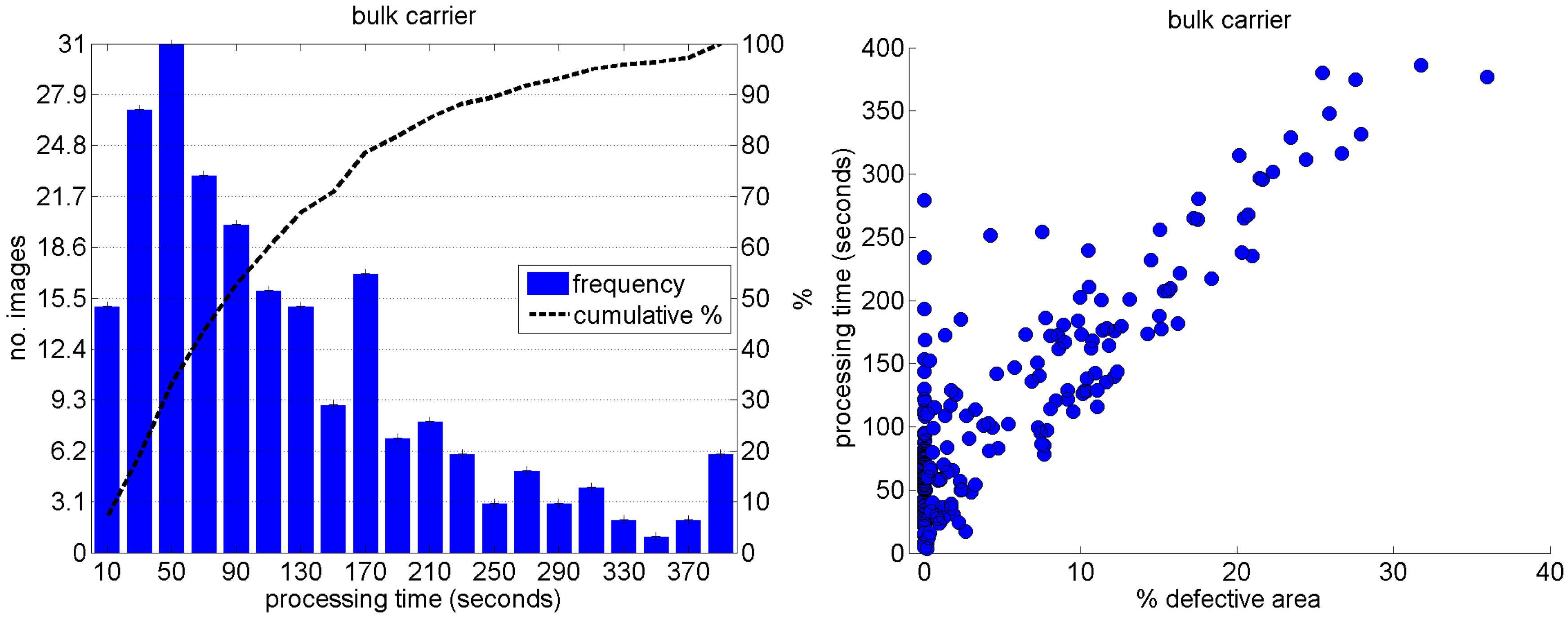

5.3. Some Comments on the Time Complexity of the Defect Detector

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| A | Accuracy |
| CBC | Coating-Breakdown/Corrosion |
| DC | Dominant Colours |
| FFN | Fraction of False Negatives |
| FFP | Fraction of False Positives |
| FN | False Negatives |
| FP | False Positives |
| FPR | False Positive Rate |
| P | Precision |
| R | Recall |
| SD | Signed (surrounding) Differences |
| TN | True Negatives |
| TP | True Positives |
| TPR | True Positive Rate |


| Parameter | Symbol | Value |
|---|---|---|
| activation function free parameter | a | 1 |
| iRprop weight change increase factor | | 1.2 |
| iRprop weight change decrease factor | | 0.5 |
| iRprop minimum weight change | | 0 |
| iRprop maximum weight change | | 50 |
| iRprop initial weight change | | 0.5 |
| (final) number of training patches | | 232,094 |
| of which positive patches | | 120,499 |
| of which negative patches | | 111,595 |
| (final) number of test patches | | 139,150 |
| of which positive patches | | 72,557 |
| of which negative patches | | 66,593 |

(a)

| (r, p) | m | w = 3 | w = 5 | w = 7 | w = 9 | w = 11 |
|---|---|---|---|---|---|---|
| (1, 8) | 2 | 0.1747 (1.0,0.0) | 0.1688 (1.8,0.0) | 0.1512 (1.6,0.0) | 0.1484 (1.2,0.0) | 0.1629 (1.8,0.0) |
| (1, 8) | 3 | 0.1712 (2.0,0.0) | 0.1600 (1.4,0.0) | 0.1496 (1.4,0.0) | 0.1382 (1.6,0.0) | 0.1692 (1.8,0.0) |
| (1, 8) | 4 | 0.1696 (2.0,0.0) | 0.1741 (2.0,0.0) | 0.1657 (1.6,0.0) | 0.1501 (2.0,0.0) | 0.1795 (1.8,0.0) |
| (2, 12) | 2 | 0.1849 (1.6,0.0) | 0.1627 (1.6,0.0) | 0.1377 (2.0,0.0) | 0.1370 (2.0,0.0) | 0.1574 (1.2,0.0) |
| (2, 12) | 3 | 0.1652 (2.0,0.0) | 0.1519 (1.8,0.0) | 0.1450 (1.4,0.0) | 0.1227 (2.0,0.0) | 0.1468 (2.0,0.0) |
| (2, 12) | 4 | 0.1641 (1.8,0.0) | 0.1555 (1.2,0.0) | 0.1473 (0.8,0.0) | 0.1394 (1.2,0.0) | 0.1735 (0.6,0.0) |

(b)

| (r, p) | m | w = 3 | w = 5 | w = 7 | w = 9 | w = 11 |
|---|---|---|---|---|---|---|
| (1, 8) | 2 | 0.8851 (1.0,0.0) | 0.8908 (1.8,0.0) | 0.9027 (1.6,0.0) | 0.9050 (1.2,0.0) | 0.9004 (1.8,0.0) |
| (1, 8) | 3 | 0.8873 (2.0,0.0) | 0.8956 (1.4,0.0) | 0.9034 (1.4,0.0) | 0.9124 (1.6,0.0) | 0.8964 (1.8,0.0) |
| (1, 8) | 4 | 0.8881 (2.0,0.0) | 0.8886 (2.0,0.0) | 0.8965 (1.6,0.0) | 0.9075 (2.0,0.0) | 0.8930 (1.8,0.0) |
| (2, 12) | 2 | 0.8800 (1.6,0.0) | 0.8941 (2.0,0.0) | 0.9116 (2.0,0.0) | 0.9142 (2.0,0.0) | 0.9024 (1.2,0.0) |
| (2, 12) | 3 | 0.8915 (2.0,0.0) | 0.9010 (1.4,0.0) | 0.9070 (1.8,0.0) | 0.9224 (2.0,0.0) | 0.9107 (2.0,0.0) |
| (2, 12) | 4 | 0.8928 (1.8,0.0) | 0.9003 (1.2,0.0) | 0.9055 (1.4,0.0) | 0.9135 (1.6,0.0) | 0.8980 (2.0,0.0) |

(c)

| (r, p) | m | w = 3 | w = 5 | w = 7 | w = 9 | w = 11 |
|---|---|---|---|---|---|---|
| (1, 8) | 2 | 0.1753 (1.0,0.0) | 0.1694 (1.8,0.0) | 0.1515 (1.6,0.0) | 0.1487 (1.2,0.0) | 0.1631 (1.8,0.0) |
| (1, 8) | 3 | 0.1717 (2.0,0.0) | 0.1603 (1.4,0.0) | 0.1499 (1.4,0.0) | 0.1383 (1.6,0.0) | 0.1694 (1.8,0.0) |
| (1, 8) | 4 | 0.1701 (2.0,0.0) | 0.1748 (2.0,0.0) | 0.1662 (1.6,0.0) | 0.1504 (2.0,0.0) | 0.1797 (1.8,0.0) |
| (2, 12) | 2 | 0.1858 (1.6,0.0) | 0.1631 (1.6,0.0) | 0.1379 (2.0,0.0) | 0.1372 (2.0,0.0) | 0.1575 (1.2,0.0) |
| (2, 12) | 3 | 0.1656 (2.0,0.0) | 0.1522 (1.8,0.0) | 0.1452 (1.4,0.0) | 0.1228 (2.0,0.0) | 0.1468 (2.0,0.0) |
| (2, 12) | 4 | 0.1645 (1.8,0.0) | 0.1558 (1.2,0.0) | 0.1475 (0.8,0.0) | 0.1396 (1.2,0.0) | 0.1737 (0.6,0.0) |

(d)

| (r, p) | m | w = 3 | w = 5 | w = 7 | w = 9 | w = 11 |
|---|---|---|---|---|---|---|
| (1, 8) | 2 | 0.8837 (1.0,0.0) | 0.8891 (1.8,0.0) | 0.9017 (1.6,0.0) | 0.9041 (1.2,0.0) | 0.8995 (1.8,0.0) |
| (1, 8) | 3 | 0.8860 (2.0,0.0) | 0.8946 (1.4,0.0) | 0.9025 (1.4,0.0) | 0.9117 (1.6,0.0) | 0.8953 (1.8,0.0) |
| (1, 8) | 4 | 0.8870 (2.0,0.0) | 0.8864 (2.0,0.0) | 0.8942 (1.6,0.0) | 0.9059 (2.0,0.0) | 0.8908 (1.8,0.0) |
| (2, 12) | 2 | 0.8776 (1.6,0.0) | 0.8923 (1.6,0.0) | 0.9111 (2.0,0.0) | 0.9134 (2.0,0.0) | 0.9020 (1.2,0.0) |
| (2, 12) | 3 | 0.8904 (2.0,0.0) | 0.9003 (1.4,0.0) | 0.9059 (1.8,0.0) | 0.9222 (2.0,0.0) | 0.9105 (2.0,0.0) |
| (2, 12) | 4 | 0.8916 (1.8,0.0) | 0.8991 (1.2,0.0) | 0.9044 (0.8,0.0) | 0.9124 (1.6,0.0) | 0.8959 (2.0,0.0) |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Ortiz, A.; Bonnin-Pascual, F.; Garcia-Fidalgo, E.; Company-Corcoles, J.P. Vision-Based Corrosion Detection Assisted by a Micro-Aerial Vehicle in a Vessel Inspection Application. Sensors 2016, 16, 2118. https://doi.org/10.3390/s16122118
