Radius and Orientation Measurement for Cylindrical Objects by a Light Section Sensor

Abstract

1. Introduction

- A new method for the non-contact measurement of the radius and orientation of cylindrical objects is presented. The method can measure the radius and orientation even in the presence of interference.

- This method can obtain the radii and orientations of several cylindrical objects in one measurement.

2. Principle

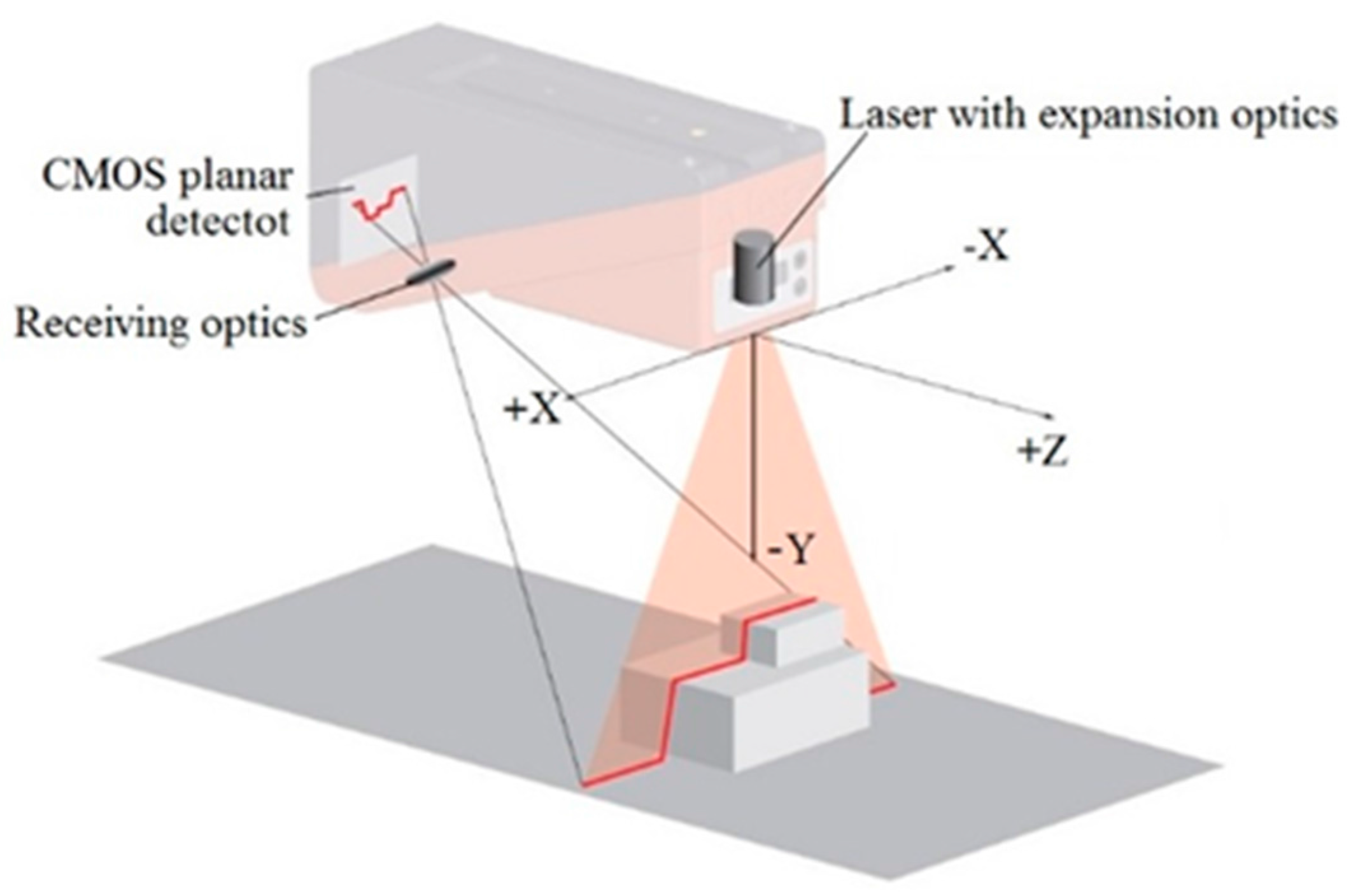

2.1. Light Section Sensor

2.2. Measurement by the Light Section Sensor

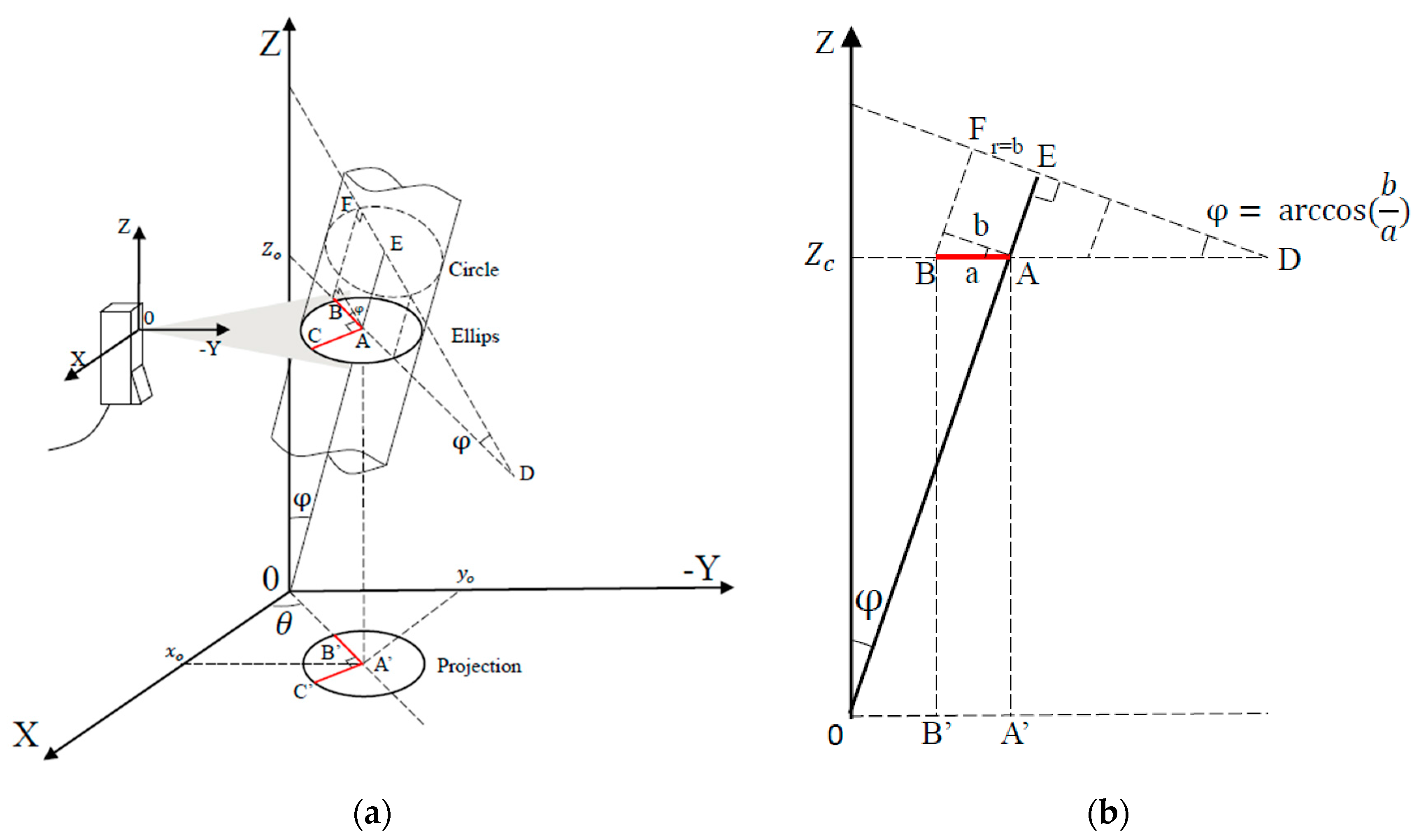

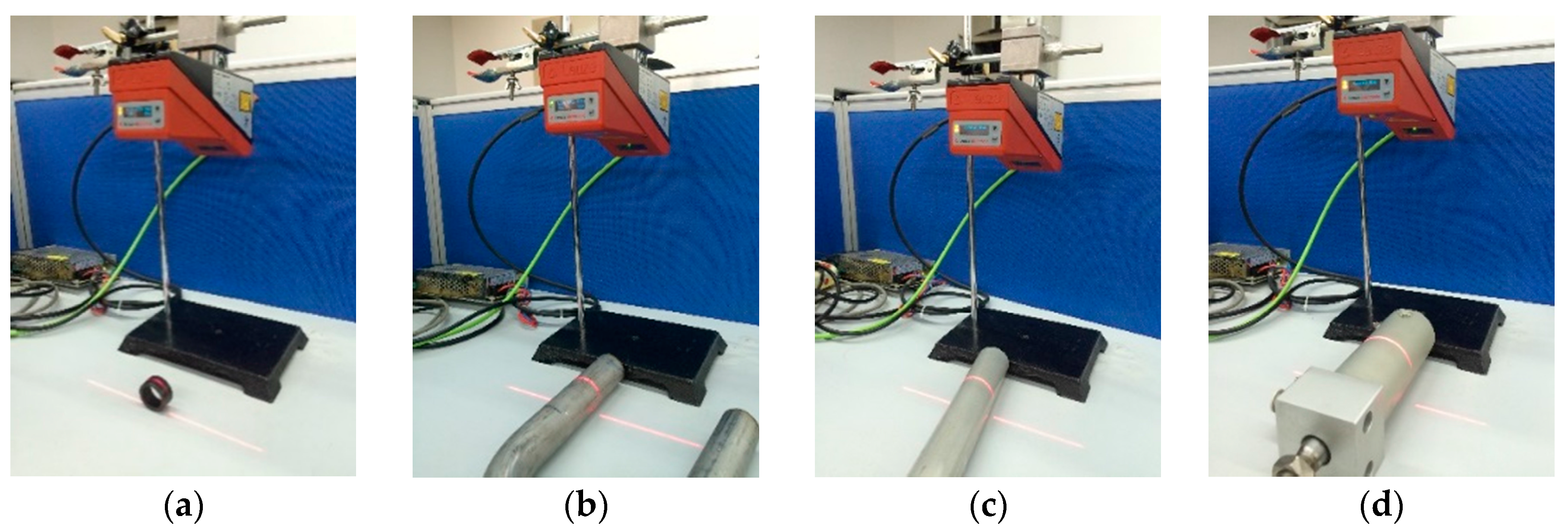

2.2.1. Measurement Scheme

2.2.2. Radius Calculation
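
The details of the radius calculation are not included in this excerpt. As a hedged illustration of the standard geometry (an assumption, not necessarily the authors' formulation): a plane cutting an ideal right circular cylinder of radius r produces an ellipse whose semi-minor axis equals r and whose semi-major axis equals r / cos α, where α is the angle between the cylinder axis and the normal of the light plane. The hypothetical helper `radius_and_tilt` below inverts that relation.

```python
import math


def radius_and_tilt(semi_major, semi_minor):
    """Radius r and tilt alpha (radians) of a cylinder from its planar section.

    Assumes an ideal right circular cylinder cut by a flat light plane:
    semi_minor = r and semi_major = r / cos(alpha), where alpha is the angle
    between the cylinder axis and the light-plane normal.
    """
    if semi_minor <= 0 or semi_major < semi_minor:
        raise ValueError("expected 0 < semi_minor <= semi_major")
    r = semi_minor                              # the minor axis is never foreshortened
    alpha = math.acos(semi_minor / semi_major)  # stretch of the major axis encodes the tilt
    return r, alpha
```

For example, a fitted ellipse with semi-axes of 20 mm and 10 mm corresponds to r = 10 mm and α = 60°.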

2.2.3. Orientation Calculation
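
Under the same assumptions (ideal cylinder, flat light plane), the major axis of the section ellipse lies along the projection of the cylinder axis onto the light plane. The sketch below uses a hypothetical sensor frame in which the light plane is z = 0. The paper's own angles θ and φ, reported in the result tables, are defined in text that is missing from this excerpt and are not reproduced here. One section alone leaves a mirror ambiguity: rotating the plane normal by +α or by −α about the minor axis gives the same ellipse, so the helper returns both candidates.

```python
import math


def axis_candidates(alpha, psi):
    """Return the two unit cylinder-axis directions consistent with one ellipse.

    Hypothetical sensor frame: the light plane is z = 0 (normal +z), psi is the
    angle of the ellipse's major axis measured from +x inside that plane, and
    alpha is the angle between the cylinder axis and +z.
    """
    s, c = math.sin(alpha), math.cos(alpha)
    # axis = cos(alpha) * normal +/- sin(alpha) * (unit major-axis direction)
    d1 = (s * math.cos(psi), s * math.sin(psi), c)
    d2 = (-s * math.cos(psi), -s * math.sin(psi), c)
    return d1, d2
```

A second, differently oriented section (or prior knowledge of the scene) would be needed to pick one of the two candidates.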

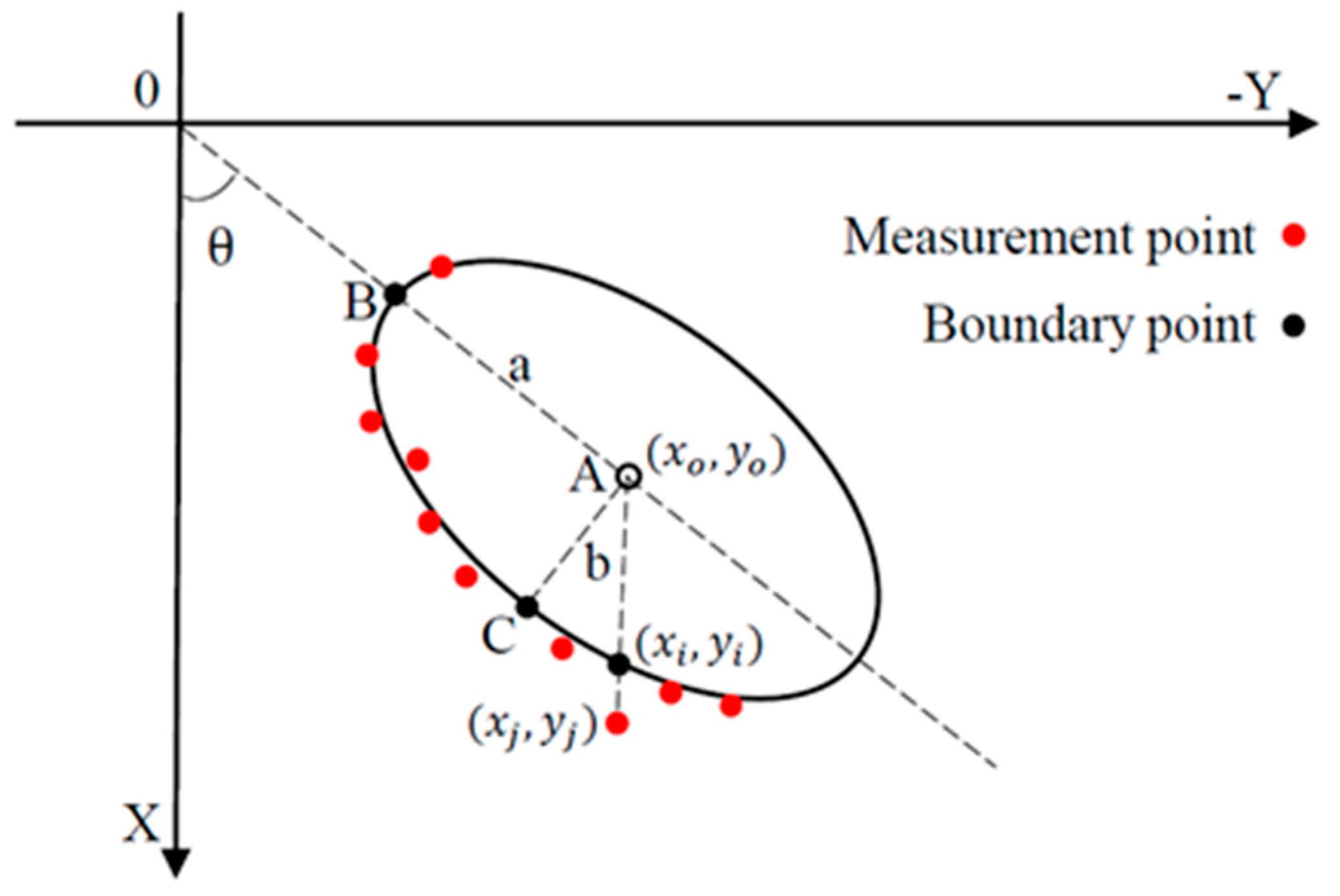

2.3. Fitting Method
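
The excerpt does not state which ellipse-fitting method is used. As a stand-in (a swapped-in technique, not claimed to be the authors'), the sketch below is a plain algebraic least-squares conic fit: it solves for A u² + B uv + C v² + D u + E v = 1 in coordinates centred on the point centroid and scaled to unit RMS radius. Centring matters because a light section sensor sees only the side of the cylinder facing it, so the profile is a partial arc; the centroid of such an arc lies strictly inside the ellipse, which keeps the fixed constant term valid. The names `fit_ellipse` and `_solve` are hypothetical.

```python
import math


def _solve(m, rhs):
    """Solve the square system m @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [list(row) + [rhs[i]] for i, row in enumerate(m)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_ellipse(points):
    """Algebraic least-squares ellipse fit to a list of (x, y) points.

    Returns (xc, yc, semi_major, semi_minor, psi), psi = major-axis angle in [0, pi).
    """
    n = len(points)
    if n < 5:
        raise ValueError("need at least 5 points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    s = math.sqrt(sum((x - mx) ** 2 + (y - my) ** 2 for x, y in points) / n)
    # Normal equations for A u^2 + B uv + C v^2 + D u + E v = 1 (centred, scaled coords).
    m = [[0.0] * 5 for _ in range(5)]
    rhs = [0.0] * 5
    for x, y in points:
        u, v = (x - mx) / s, (y - my) / s
        row = (u * u, u * v, v * v, u, v)
        for i in range(5):
            rhs[i] += row[i]
            for j in range(5):
                m[i][j] += row[i] * row[j]
    A, B, C, D, E = _solve(m, rhs)
    det = 4 * A * C - B * B
    if det <= 0:
        raise ValueError("fitted conic is not an ellipse")
    # Centre: the point where the gradient of the conic vanishes.
    u0 = (B * E - 2 * C * D) / det
    v0 = (B * D - 2 * A * E) / det
    f0 = -1 + (D * u0 + E * v0) / 2  # conic value at the centre (constant term is -1)
    if A + C < 0:  # flip signs so the quadratic form is positive definite
        A, B, C, f0 = -A, -B, -C, -f0
    if f0 >= 0:
        raise ValueError("fitted conic has no real points")
    # Eigenvalues of [[A, B/2], [B/2, C]]; the smaller one belongs to the major axis.
    mean, half = (A + C) / 2, math.hypot((A - C) / 2, B / 2)
    semi_major = s * math.sqrt(-f0 / (mean - half))
    semi_minor = s * math.sqrt(-f0 / (mean + half))
    # 0.5*atan2(B, A - C) is the minor-axis angle; add 90 degrees for the major axis.
    psi = (0.5 * math.atan2(B, A - C) + math.pi / 2) % math.pi
    return mx + s * u0, my + s * v0, semi_major, semi_minor, psi
```

With noise-free samples from half of an ellipse, this fit recovers the generating centre, semi-axes and major-axis angle to within floating-point error. On noisy data an algebraic fit can be biased, which is one reason a geometric (non-linear least-squares) refinement is often applied afterwards.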

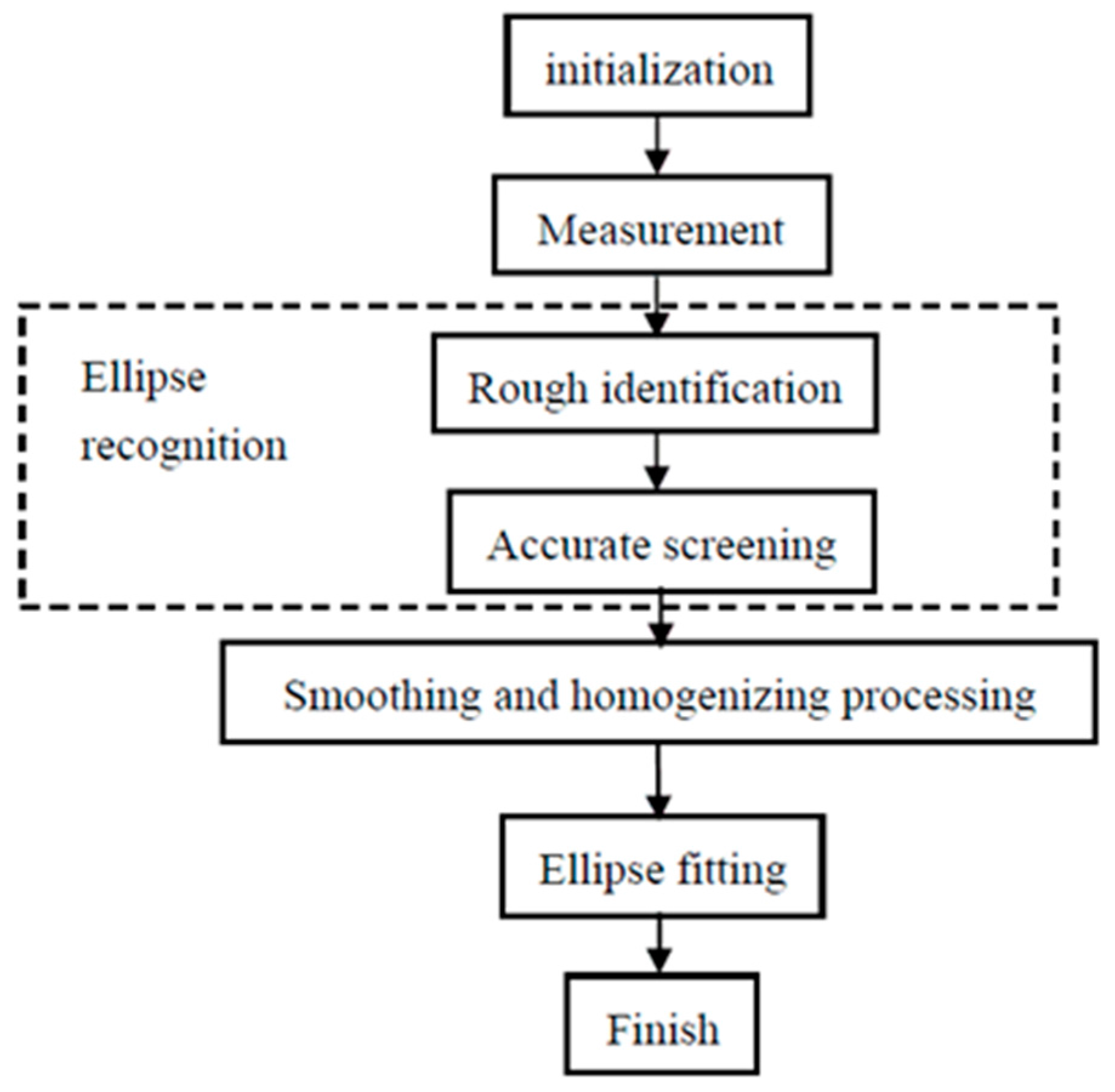

3. Measurement Procedure

3.1. Measurement Process

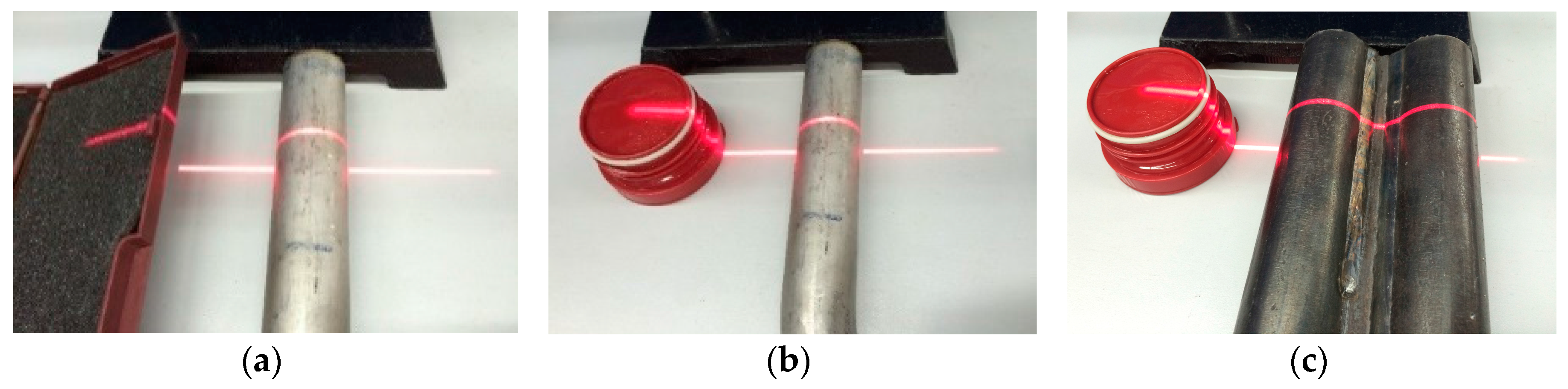

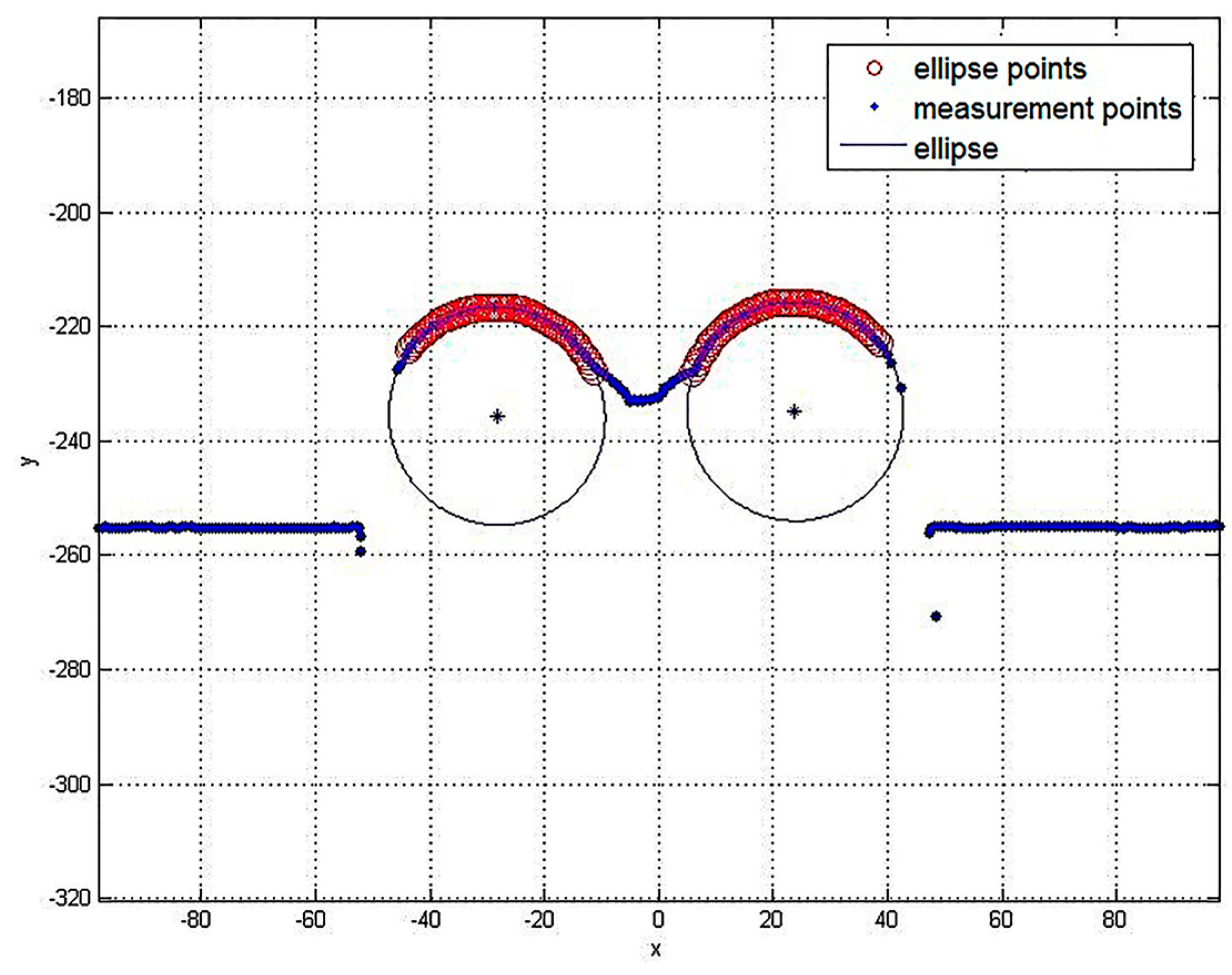

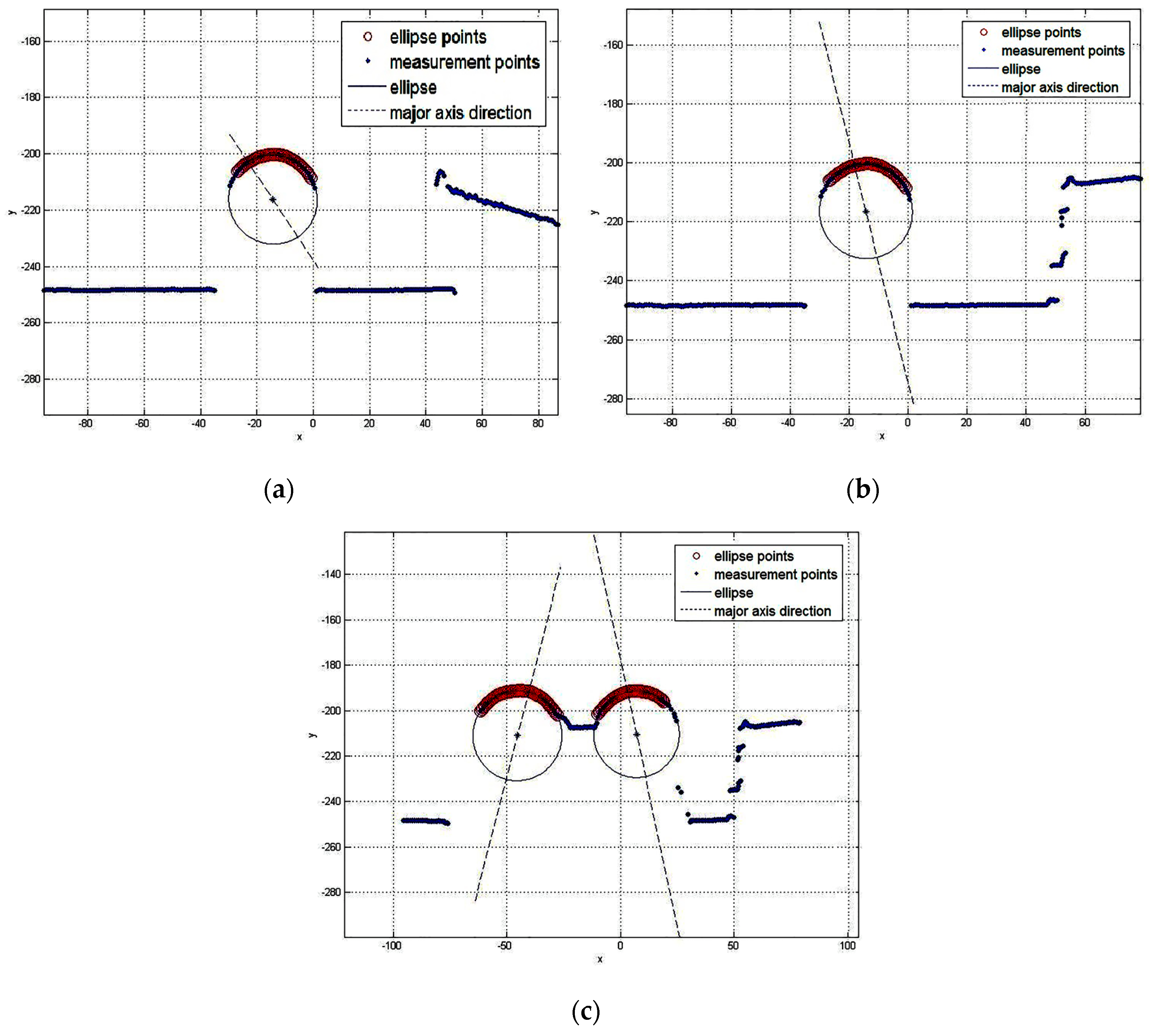

3.2. Ellipse Recognition

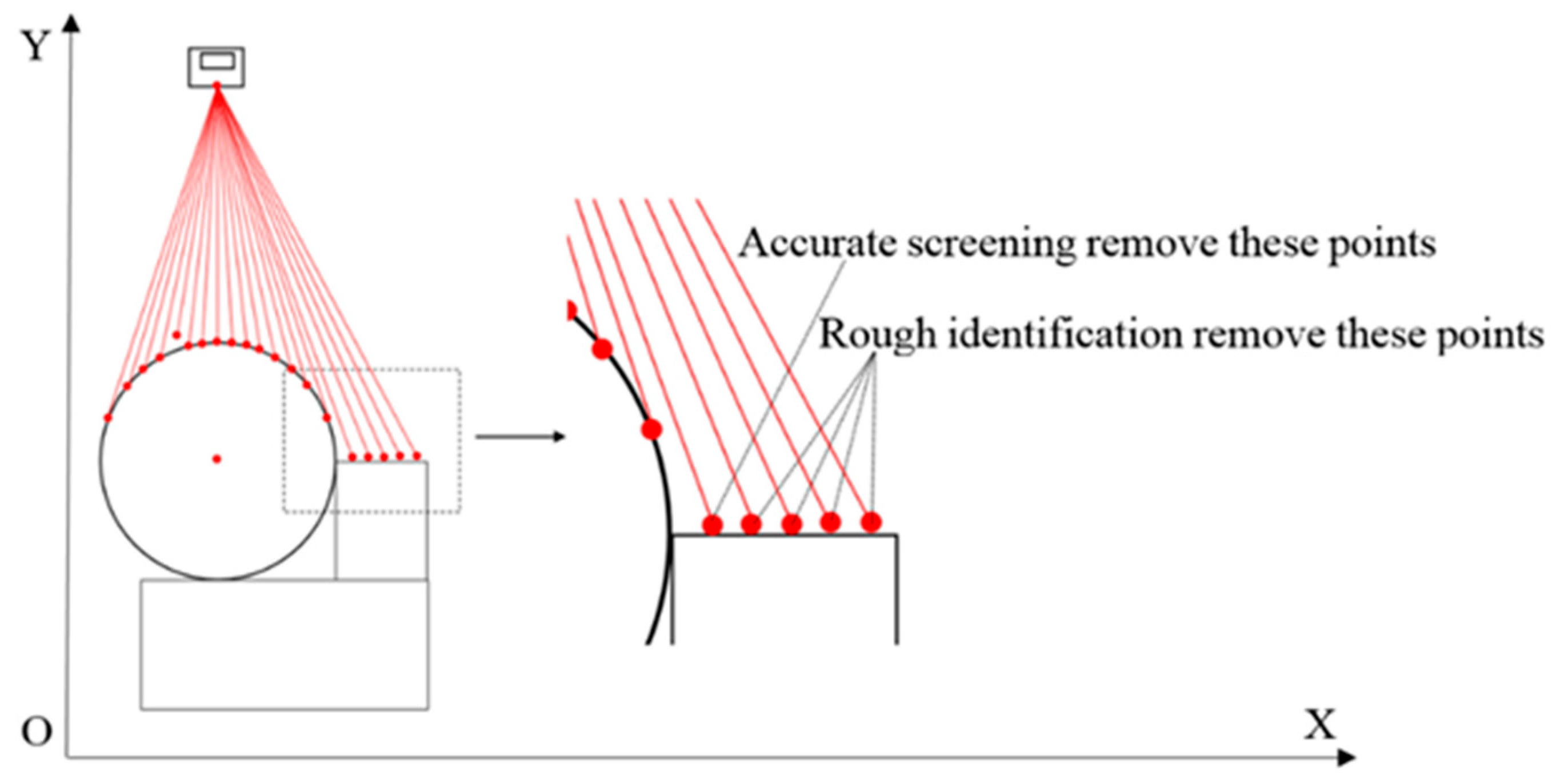

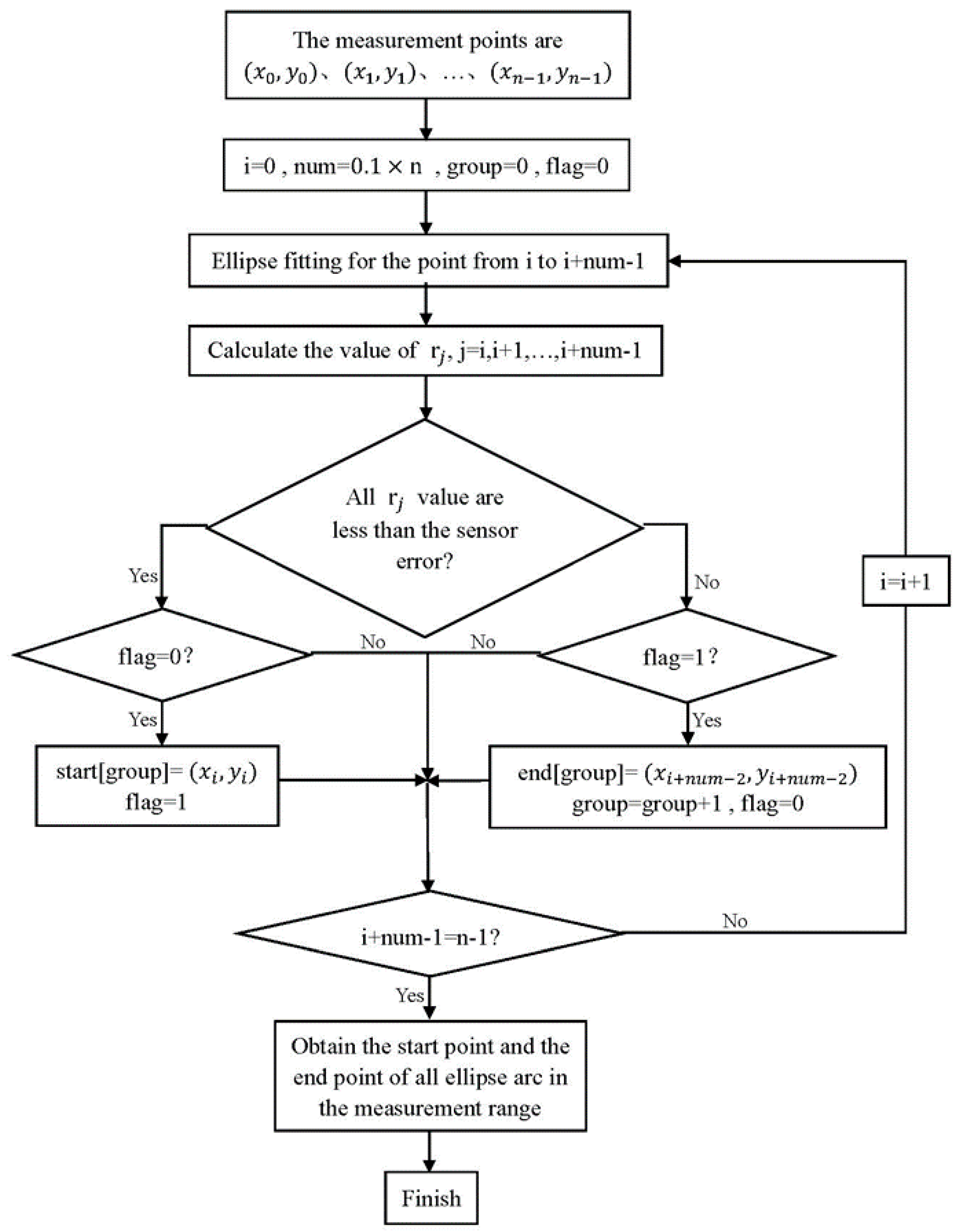

3.2.1. Rough Identification

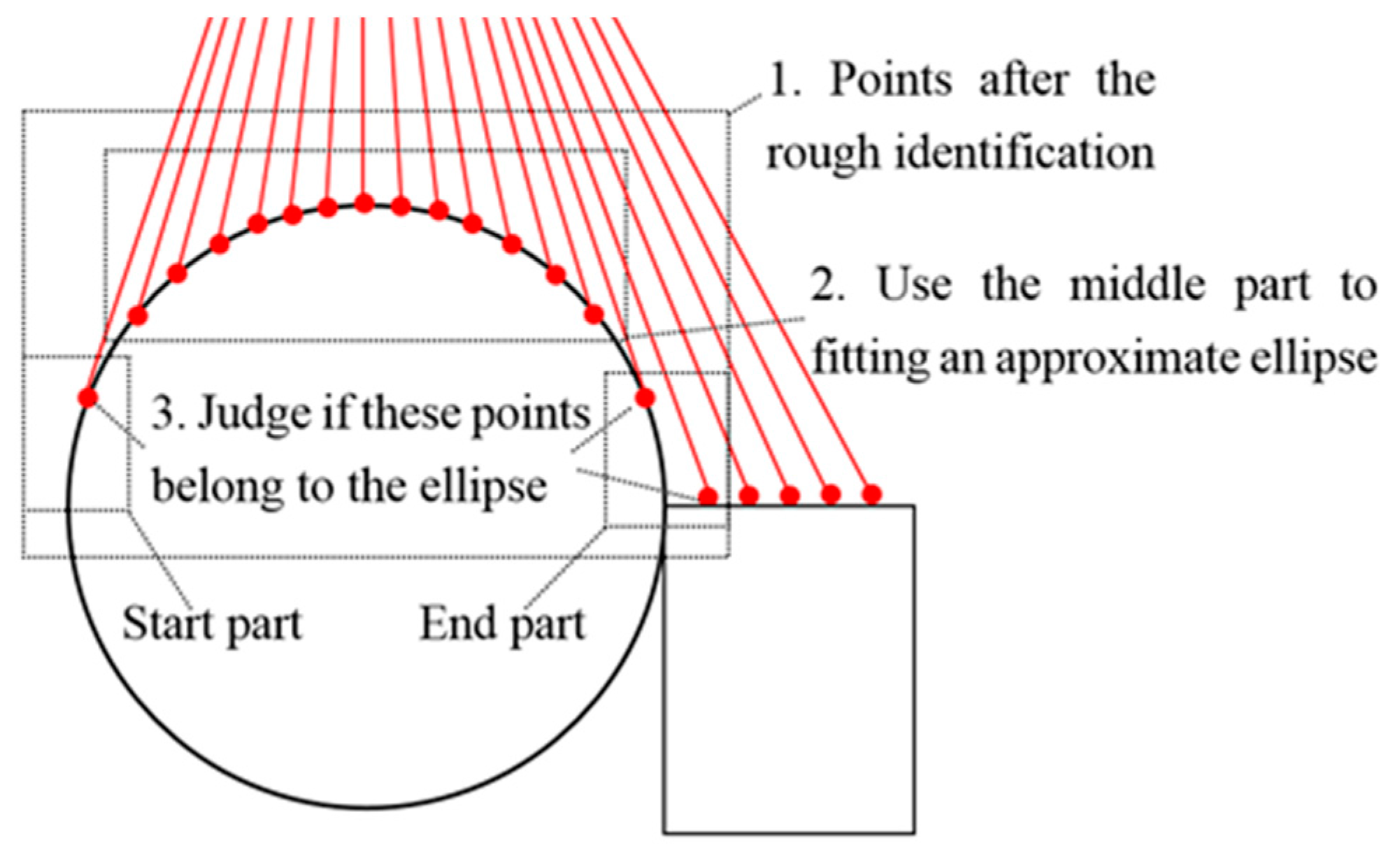

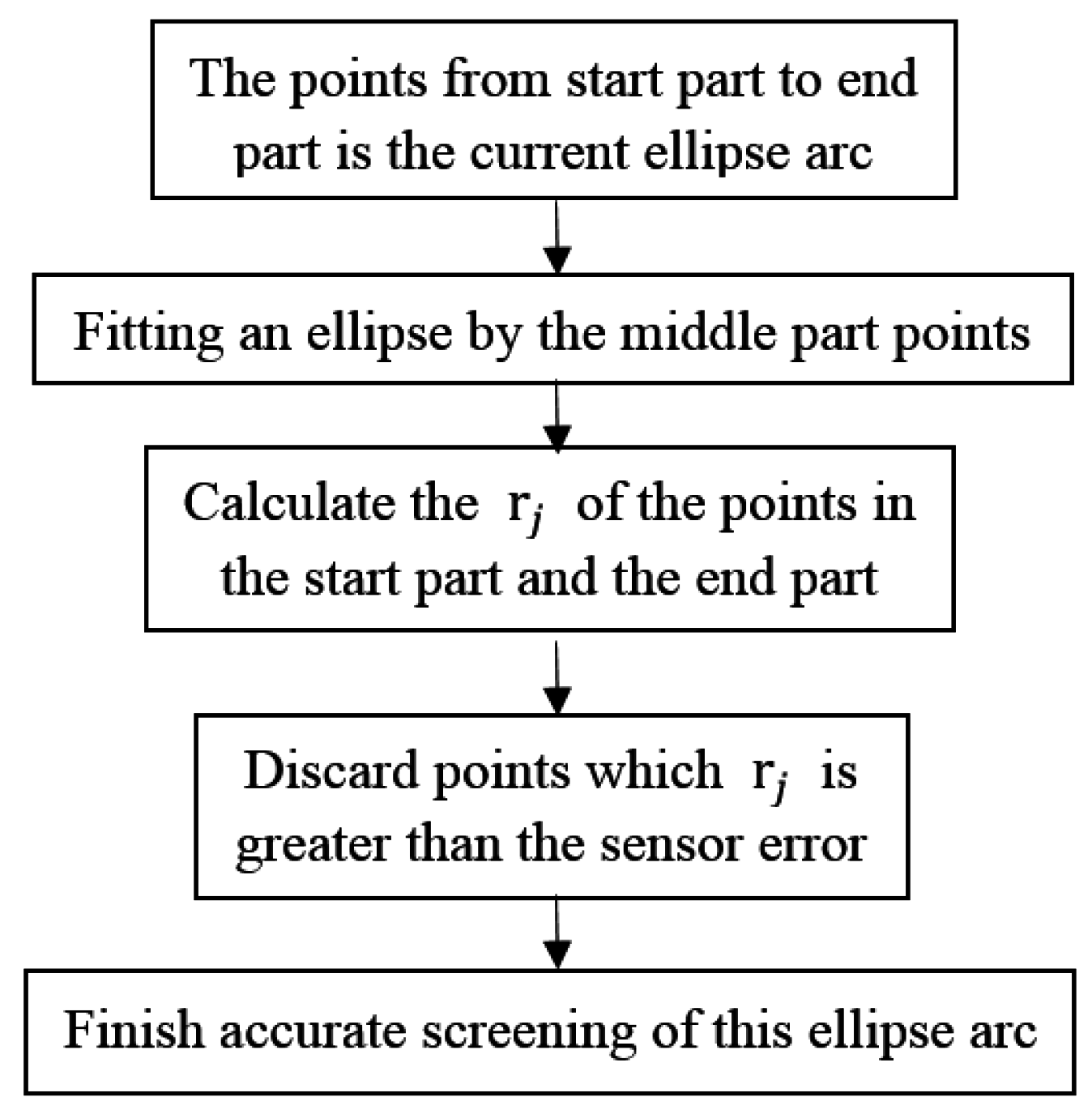

3.2.2. Accurate Screening

3.3. Smoothing and Homogenizing

3.3.1. Smoothing
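
The smoothing procedure is not described in this excerpt. Purely as an illustration, and assuming the profile is available as heights sampled along one coordinate, a Gaussian-kernel local linear regression smoother can be written as below; the function name and the `bandwidth` parameter are hypothetical. Local linear smoothing reproduces straight lines exactly and has less bias near the ends of a profile than a plain weighted average.

```python
import math


def local_linear_smooth(xs, ys, bandwidth):
    """Gaussian-kernel local linear regression, evaluated at every sample xs[i].

    At each x0 a line y = a + b*(x - x0) is fitted by weighted least squares with
    weights exp(-0.5*((x - x0)/bandwidth)**2); the smoothed value is the intercept a.
    """
    if bandwidth <= 0 or len(xs) != len(ys):
        raise ValueError("need bandwidth > 0 and equal-length inputs")
    smoothed = []
    for x0 in xs:
        s0 = s1 = s2 = t0 = t1 = 0.0
        for x, y in zip(xs, ys):
            dx = x - x0
            w = math.exp(-0.5 * (dx / bandwidth) ** 2)
            s0 += w
            s1 += w * dx
            s2 += w * dx * dx
            t0 += w * y
            t1 += w * dx * y
        den = s0 * s2 - s1 * s1
        # Fall back to the weighted mean when the local line is ill-determined.
        smoothed.append((s2 * t0 - s1 * t1) / den if den > 1e-12 * s0 * s2 else t0 / s0)
    return smoothed
```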

3.3.2. Homogenizing
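
The excerpt does not define homogenizing. If it refers to making the point density uniform along the profile (an assumption), one simple approach is to resample the measured polyline at equal arc-length steps with linear interpolation, as in the hypothetical helper below.

```python
import math


def resample_uniform(points, n):
    """Resample a polyline of (x, y) points to n points equally spaced in arc length."""
    if n < 2 or len(points) < 2:
        raise ValueError("need at least two input and two output points")
    cum = [0.0]  # cumulative arc length at each input vertex
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    out = []
    seg = 0
    for k in range(n):
        target = total * k / (n - 1)
        # Advance to the segment that contains the target arc length.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        span = cum[seg + 1] - cum[seg]
        t = (target - cum[seg]) / span if span > 0 else 0.0
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out
```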

4. Experiments and Results

4.1. Single Cylindrical Object

4.2. Multiple Cylindrical Objects

4.3. Interference

4.4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| No. | Radius/mm | Radius Error/mm | θ/° | θ Error/° | φ/° | φ Error/° |
|---|---|---|---|---|---|---|
| 1 | 14.7543 | −0.2457 | 9.8507 | −0.1493 | 30.0530 | 0.0530 |
| 2 | 15.8807 | −0.1193 | 10.1130 | 0.1130 | 30.1040 | 0.1040 |
| 3 | 18.3890 | −0.1110 | 10.1706 | 0.1706 | 29.9349 | −0.0651 |
| 4 | 28.6034 | −0.3966 | 9.8863 | −0.1137 | 29.8747 | −0.1253 |

| No. | Radius/mm | Radius Error/mm | θ/° | θ Error/° | φ/° | φ Error/° |
|---|---|---|---|---|---|---|
| 1 | 14.7369 | −0.2631 | 20.1233 | 0.1233 | 20.1270 | 0.1270 |
| 2 | 15.6270 | −0.3730 | 19.8507 | −0.1493 | 19.8762 | −0.1238 |
| 3 | 18.3996 | −0.1004 | 20.1077 | 0.1077 | 19.8374 | −0.1626 |
| 4 | 28.9285 | −0.0715 | 19.8386 | −0.1614 | 20.1383 | 0.1383 |

| No. | Radius/mm | Radius Error/mm | θ/° | θ Error/° | φ/° | φ Error/° |
|---|---|---|---|---|---|---|
| 1 | 14.7740 | −0.2260 | 30.1347 | 0.1347 | 10.1887 | 0.1887 |
| 2 | 15.6107 | −0.3893 | 30.0804 | 0.0804 | 10.1678 | 0.1678 |
| 3 | 18.4960 | −0.0040 | 29.8521 | −0.1479 | 9.7860 | −0.2140 |
| 4 | 28.8330 | −0.1670 | 29.8281 | −0.1719 | 10.1884 | 0.1884 |

| No. | Radius/mm | Radius Error/mm | θ/° | θ Error/° | φ/° | φ Error/° |
|---|---|---|---|---|---|---|
| 1 | 18.9072 | −0.0928 | 10.2417 | 0.2417 | 30.1787 | 0.1787 |
| 2 | 18.9203 | −0.0797 | 9.8943 | −0.1057 | 29.8621 | −0.1379 |
| 1 | 18.8611 | −0.1389 | 20.1903 | 0.1903 | 19.5594 | −0.4406 |
| 2 | 18.9103 | −0.0897 | 19.8831 | −0.1169 | 20.1129 | 0.1129 |
| 1 | 18.9223 | −0.0777 | 30.2043 | 0.2043 | 9.8105 | −0.1895 |
| 2 | 18.9360 | −0.0640 | 29.8952 | −0.1048 | 9.7175 | −0.2825 |

| Conditions | Time/s | Time per Cylindrical Object/s |
|---|---|---|
| Single | 1.201 | 1.201 |
| Welded together | 2.526 | 1.263 |
| Single with planar interference | 1.343 | 1.343 |
| Single with complex interference | 1.471 | 1.471 |
| Welded together with complex interferences | 2.981 | 1.491 |
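
The last column of the timing table appears to be the total time divided by the number of cylinders in the scene: one for the single objects and two for the welded pairs, since 2.526 / 2 = 1.263 and 2.981 / 2 ≈ 1.491. The cylinder counts below are inferred from that arithmetic rather than stated in the table, and `time_per_object` is a hypothetical helper.

```python
# Timing rows from the table: (condition, total time in s, number of cylinders).
# The cylinder counts are inferred from the table's arithmetic, not stated in it.
ROWS = [
    ("Single", 1.201, 1),
    ("Welded together", 2.526, 2),
    ("Single with planar interference", 1.343, 1),
    ("Single with complex interference", 1.471, 1),
    ("Welded together with complex interferences", 2.981, 2),
]


def time_per_object(total_s, n_objects):
    """Average processing time per cylindrical object, in seconds."""
    return total_s / n_objects


for name, total, count in ROWS:
    print(f"{name}: {time_per_object(total, count):.4f} s per cylinder")
```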

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Liu, C. Radius and Orientation Measurement for Cylindrical Objects by a Light Section Sensor. Sensors 2016, 16, 1981. https://doi.org/10.3390/s16111981


Chicago/Turabian style: Chen, Youdong, and Chongxu Liu. 2016. "Radius and Orientation Measurement for Cylindrical Objects by a Light Section Sensor." Sensors 16, no. 11: 1981. https://doi.org/10.3390/s16111981