Efficient Time-Domain Imaging Processing for One-Stationary Bistatic Forward-Looking SAR Including Motion Errors

Abstract

1. Introduction

2. DTDA for OS-BFSAR Imaging Processing

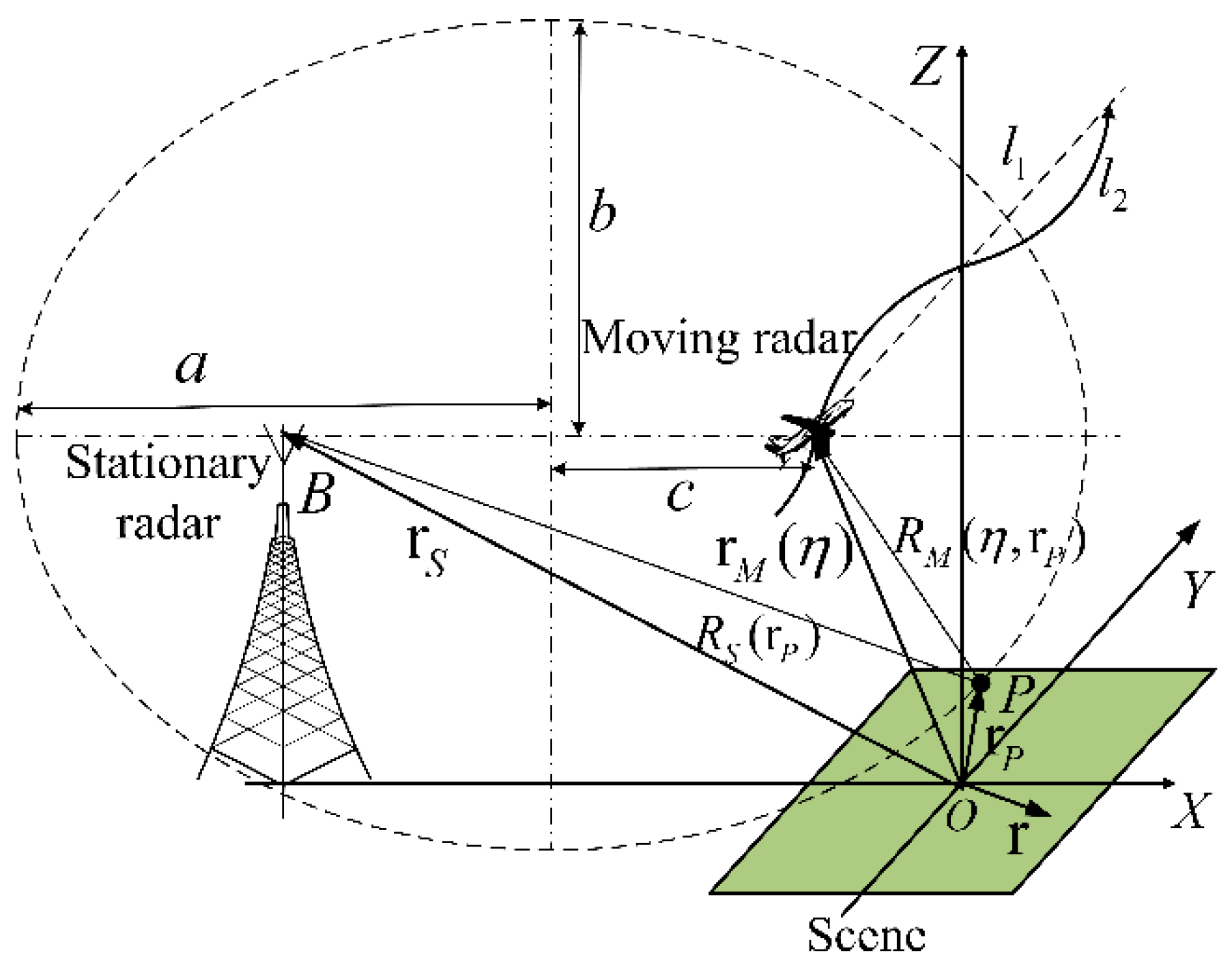

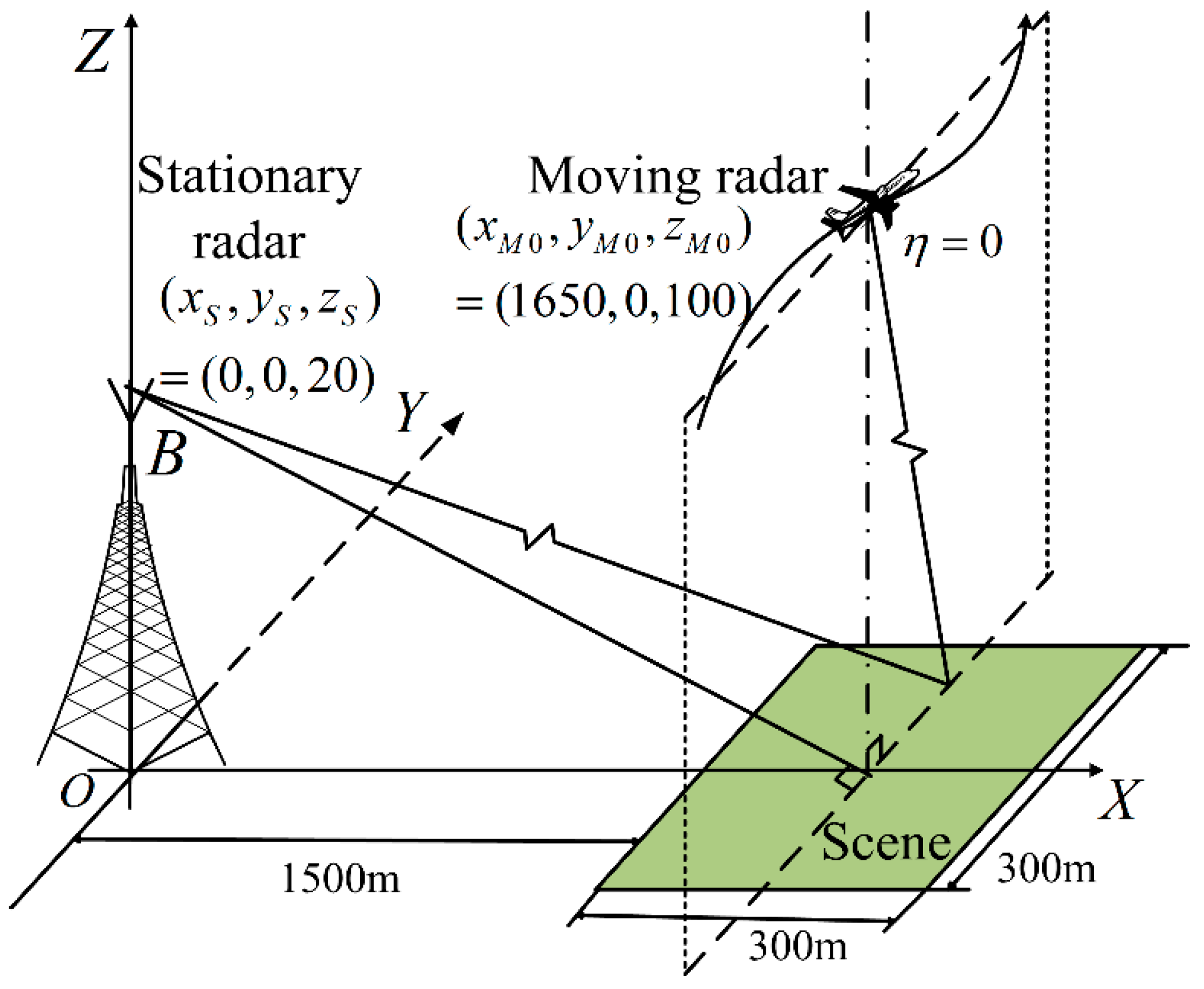

2.1. Imaging Geometry
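In a one-stationary bistatic geometry, each scatterer is characterised by two quantities: the sum of its ranges to the moving and to the stationary antenna, and the bistatic angle between the two lines of sight. The following is a minimal Python sketch of both. It assumes straight-line propagation and Cartesian positions in metres, and the function name `bistatic_geometry` is hypothetical.

```python
import numpy as np

def bistatic_geometry(p_moving, p_stationary, target):
    """Return (bistatic range sum in m, bistatic angle in rad) for one scatterer.

    All positions are Cartesian (x, y, z) in metres; straight-line propagation is assumed.
    """
    target = np.asarray(target, dtype=float)
    u_m = np.asarray(p_moving, dtype=float) - target      # line of sight to the moving antenna
    u_s = np.asarray(p_stationary, dtype=float) - target  # line of sight to the stationary antenna
    r_m, r_s = np.linalg.norm(u_m), np.linalg.norm(u_s)
    cos_beta = np.dot(u_m, u_s) / (r_m * r_s)
    beta = np.arccos(np.clip(cos_beta, -1.0, 1.0))        # clip guards against rounding
    return r_m + r_s, beta
```

For example, `bistatic_geometry((0, -500, 100), (0, 0, 20), (0, 300, 0))` uses the stationary radar position (0, 0, 20) m from the simulation parameters. The moving-radar position and the target point in that call are hypothetical.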

2.2. DTDA for OS-BFSAR Imaging
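Direct time-domain imaging is, in essence, bistatic backprojection. For every pulse and every image pixel, the range-compressed echo is sampled at the pixel's bistatic delay, phase-compensated, and then summed coherently. The sketch below makes several assumptions. The data are range-compressed baseband echoes whose phase is exp(−j2πf_c τ). Interpolation is linear. The image lies on a flat plane at constant height. The function and argument names are illustrative and are not taken from the paper.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def bistatic_backprojection(data, t_fast, moving_pos, stationary_pos, fc,
                            grid_x, grid_y, grid_z=0.0):
    """Direct (pixel-by-pixel) time-domain imaging for a one-stationary bistatic SAR.

    data           : (n_pulses, n_samples) complex range-compressed baseband echoes
    t_fast         : (n_samples,) increasing fast-time axis (s), i.e. bistatic delay
    moving_pos     : (n_pulses, 3) moving-antenna phase-centre positions (m)
    stationary_pos : (3,) stationary-antenna position (m)
    fc             : carrier frequency (Hz)
    """
    X, Y = np.meshgrid(grid_x, grid_y)                  # image grid, shape (ny, nx)
    Z = np.full(X.shape, float(grid_z))                 # flat image plane
    pix = np.stack([X, Y, Z], axis=-1).astype(float)    # pixel coordinates, (ny, nx, 3)
    # The stationary-antenna range does not change from pulse to pulse: compute it once.
    r_stat = np.linalg.norm(pix - np.asarray(stationary_pos, dtype=float), axis=-1)
    image = np.zeros(X.shape, dtype=complex)
    for n in range(data.shape[0]):
        r_mov = np.linalg.norm(pix - moving_pos[n], axis=-1)
        tau = (r_mov + r_stat) / C                      # bistatic delay to every pixel
        # Linear interpolation of the complex echo at the pixel delays (zero outside window)
        echo = (np.interp(tau, t_fast, data[n].real, left=0.0, right=0.0)
                + 1j * np.interp(tau, t_fast, data[n].imag, left=0.0, right=0.0))
        image += echo * np.exp(2j * np.pi * fc * tau)   # compensate carrier phase, accumulate
    return image
```

The loop performs one interpolation and one complex multiplication per pulse per pixel. That cost is what makes the direct approach expensive for long apertures and large images.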

3. ETDA for OS-BFSAR Imaging Processing

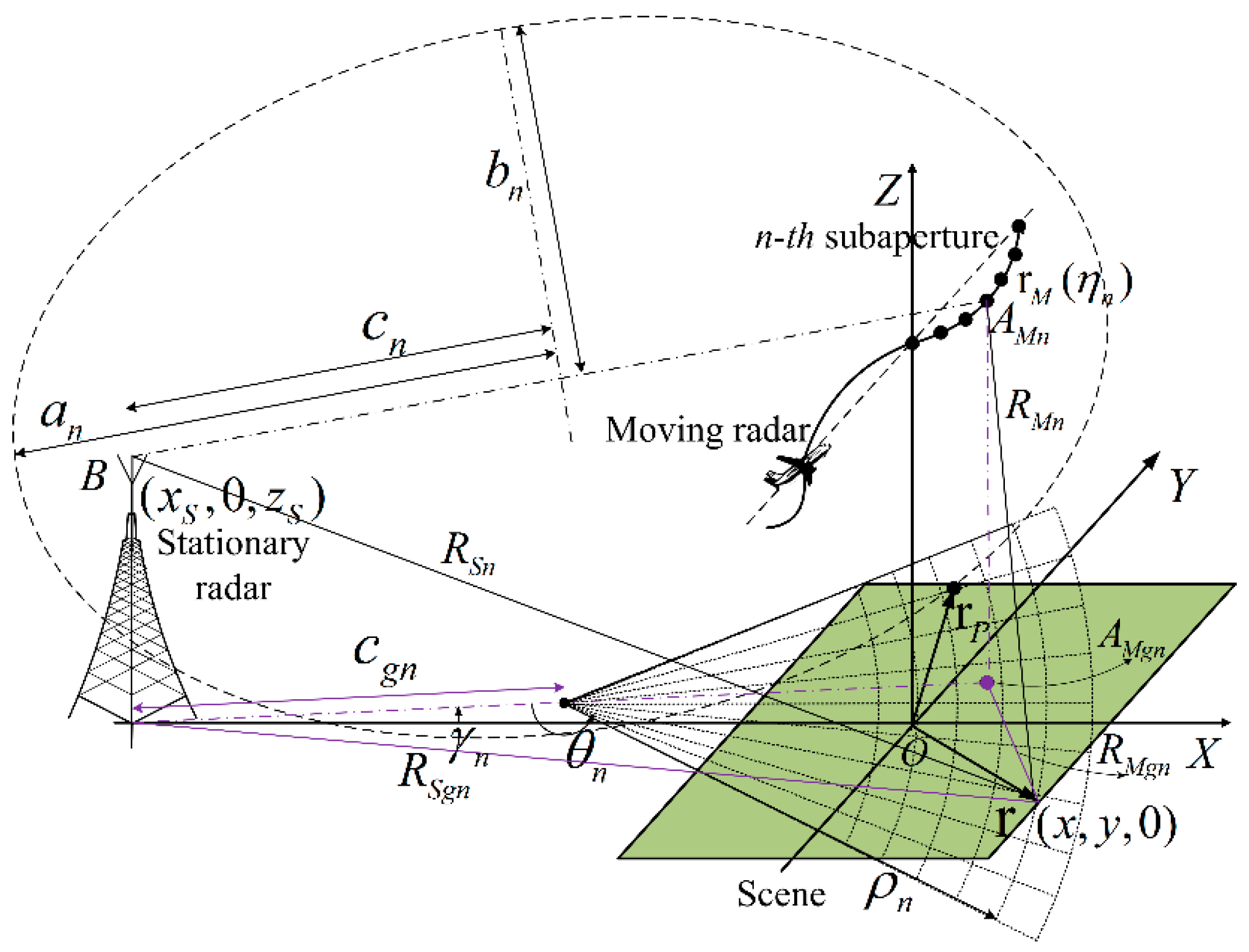

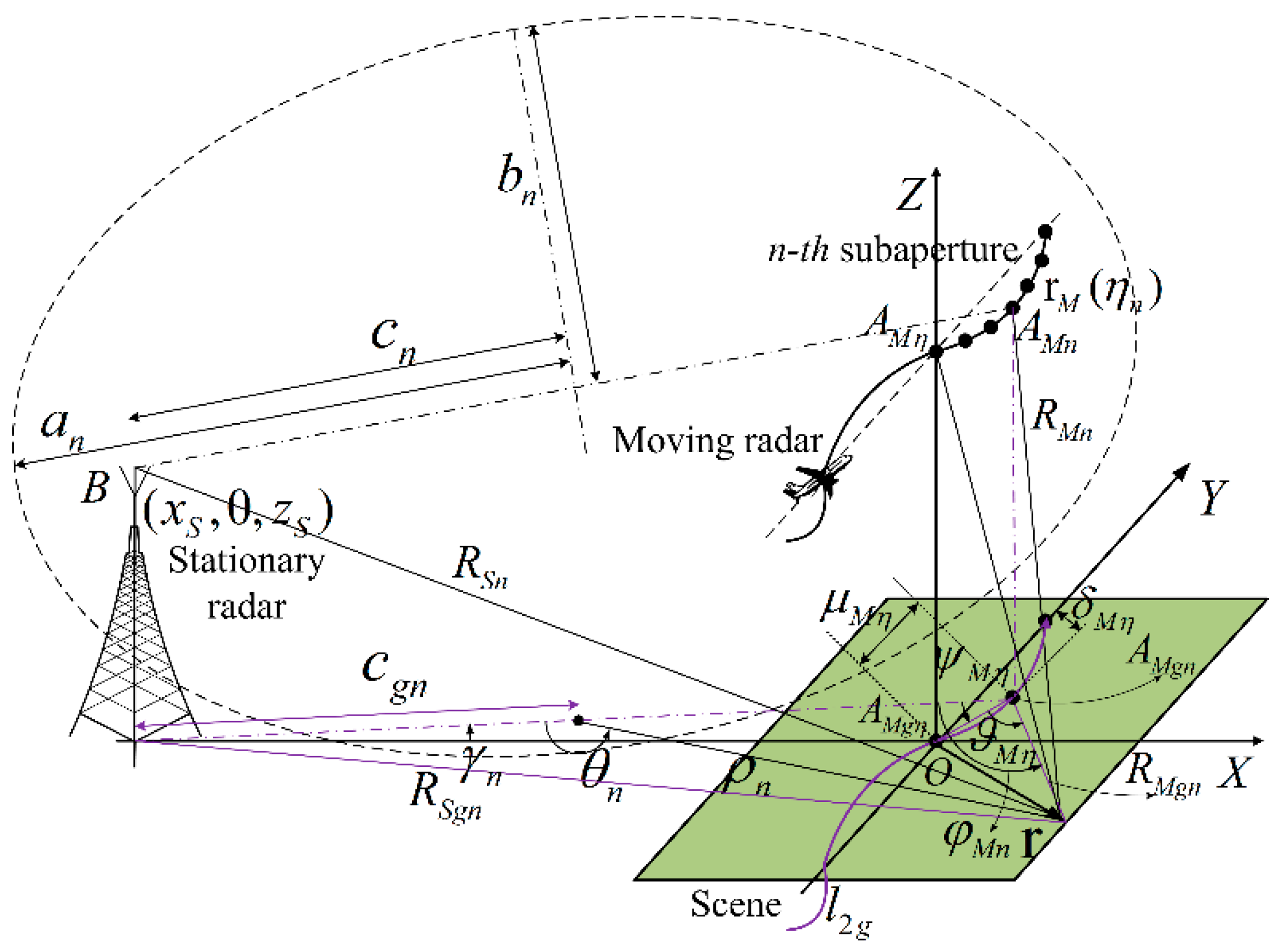

3.1. DTDA with Subaperture and Polar Grid Processing

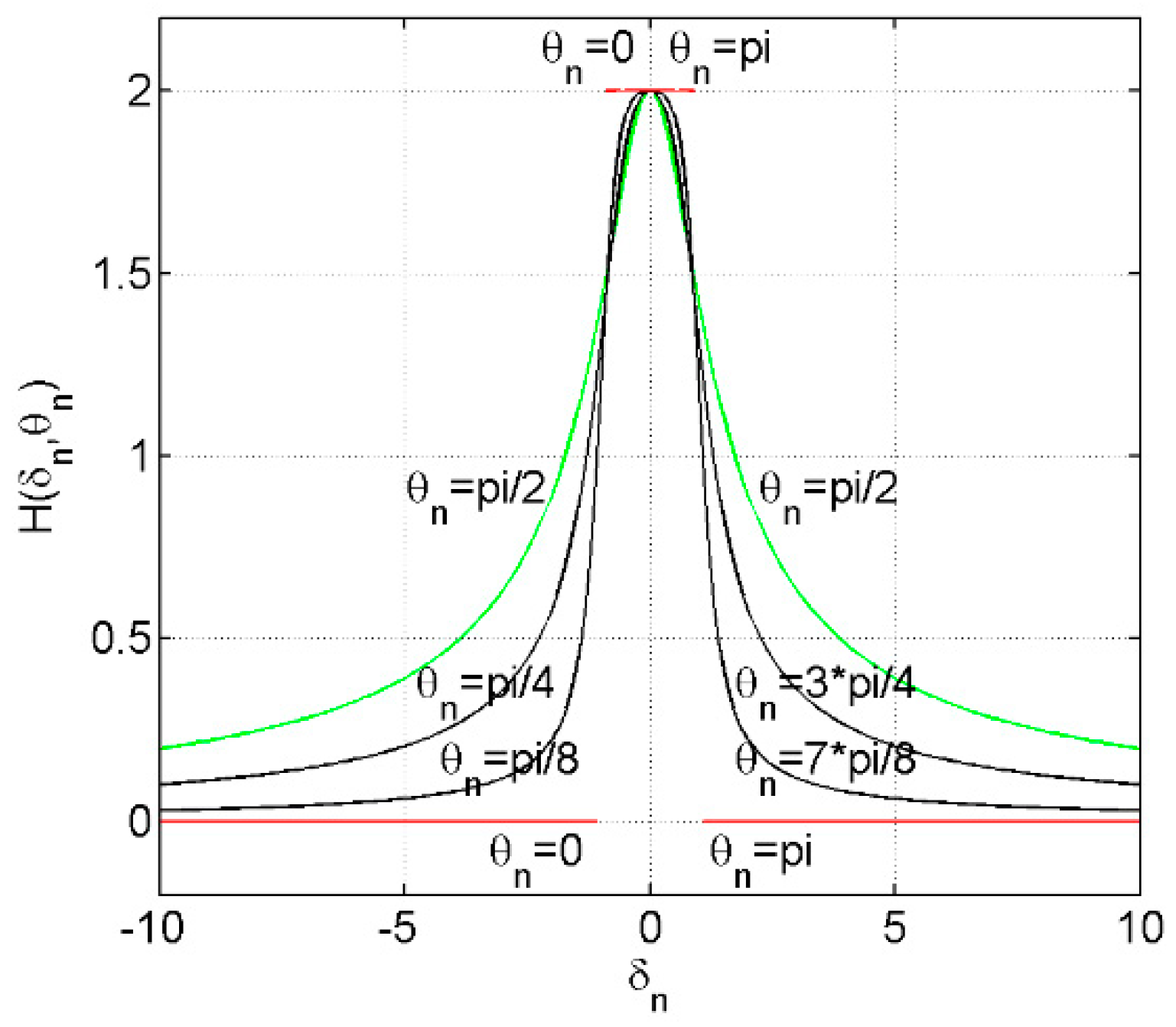
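Subaperture processing replaces one large backprojection with a sequence of merges. Pulses are first grouped into short subapertures, each imaged on a coarse local polar grid. The groups are then combined k at a time until the full aperture is reached. The bookkeeping of such a merge tree can be sketched as follows. The sketch assumes a constant merge factor `k` and local grids centred on the mean moving-antenna position of each subaperture. Both are illustrative assumptions, and the helpers `merge_schedule` and `subaperture_centres` are hypothetical.

```python
import numpy as np

def merge_schedule(n_pulses, k):
    """Pulse index ranges [start, stop) of the subapertures at each processing stage."""
    if k < 2:
        raise ValueError("merge factor k must be at least 2")
    stages = []
    size = 1
    while size < n_pulses:
        # Consecutive blocks of `size` pulses; the last block may be shorter.
        stages.append([(s, min(s + size, n_pulses)) for s in range(0, n_pulses, size)])
        size *= k
    stages.append([(0, n_pulses)])  # final stage: the full aperture
    return stages

def subaperture_centres(moving_pos, ranges):
    """Mean moving-antenna position of each subaperture (assumed local polar-grid origin)."""
    return np.array([moving_pos[a:b].mean(axis=0) for a, b in ranges])
```

For instance, `merge_schedule(16, 4)` yields three stages: 16 single-pulse subapertures, then four subapertures of four pulses, then the full aperture.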

3.2. Sampling Requirements for Polar Grids Considering Motion Errors

3.3. Algorithm Implementation

3.4. Computational Load
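A common back-of-envelope comparison assumes an N × N image formed from N pulses. The direct algorithm then performs about N³ pixel-pulse operations. A factorized scheme with merge factor k needs about log_k N stages of roughly k·N² operations each. The constants below are simplifying assumptions for illustration, not the paper's operation count.

```python
import math

def dtda_ops(n):
    """Direct backprojection: one interpolation and phase multiply per pulse per pixel."""
    return n ** 3

def factorized_ops(n, k):
    """Rough factorized-backprojection cost: log_k(n) stages of about k * n^2 operations."""
    return k * n ** 2 * math.log(n, k)

def speedup(n, k):
    """Ratio of the two rough operation counts, n / (k * log_k(n))."""
    return dtda_ops(n) / factorized_ops(n, k)
```

For N = 1024 and k = 2, this model gives a speed-up of 1024/(2·10) ≈ 51. Under these assumptions, the gain grows roughly linearly with N.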

4. Experimental Results

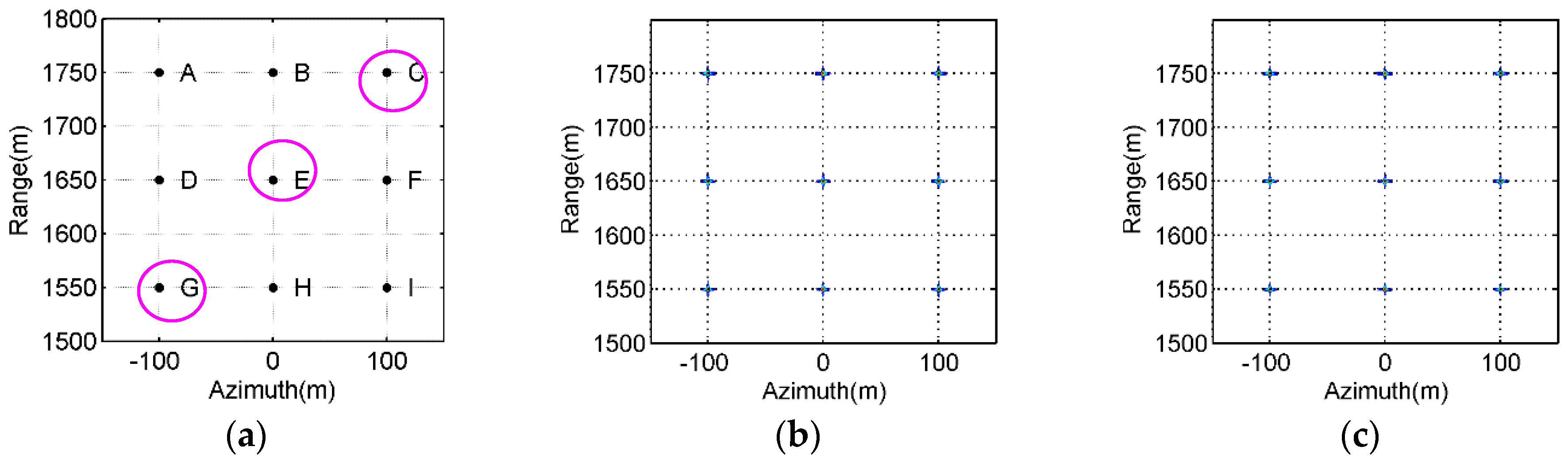

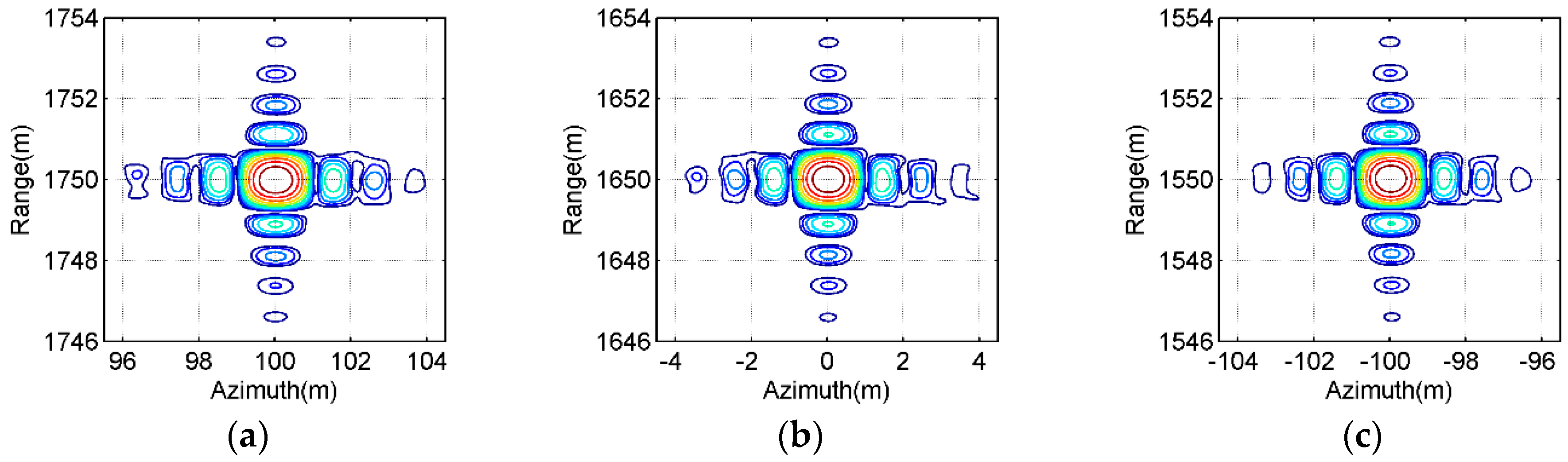

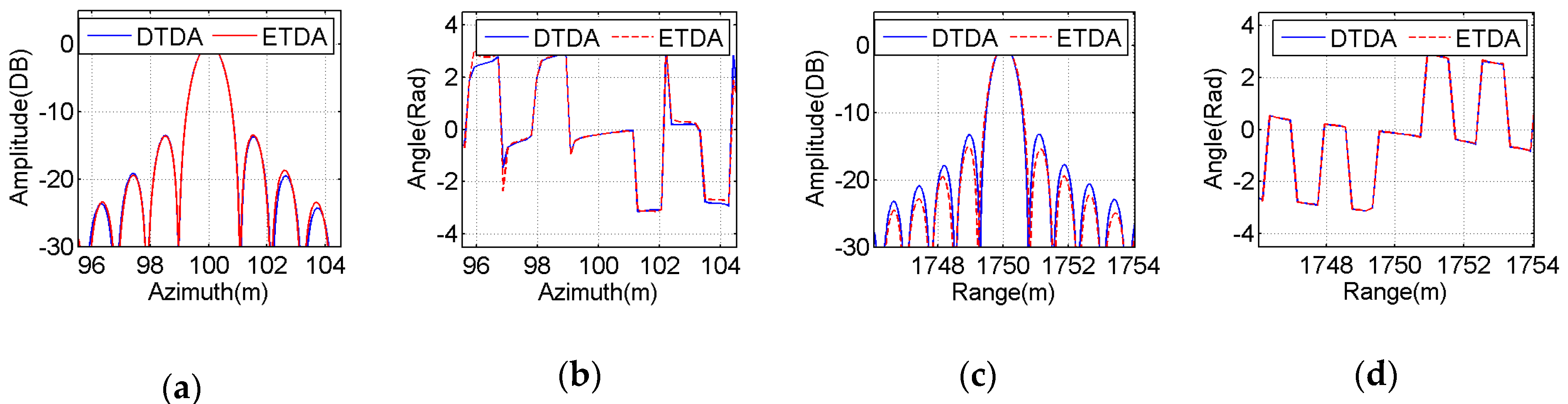

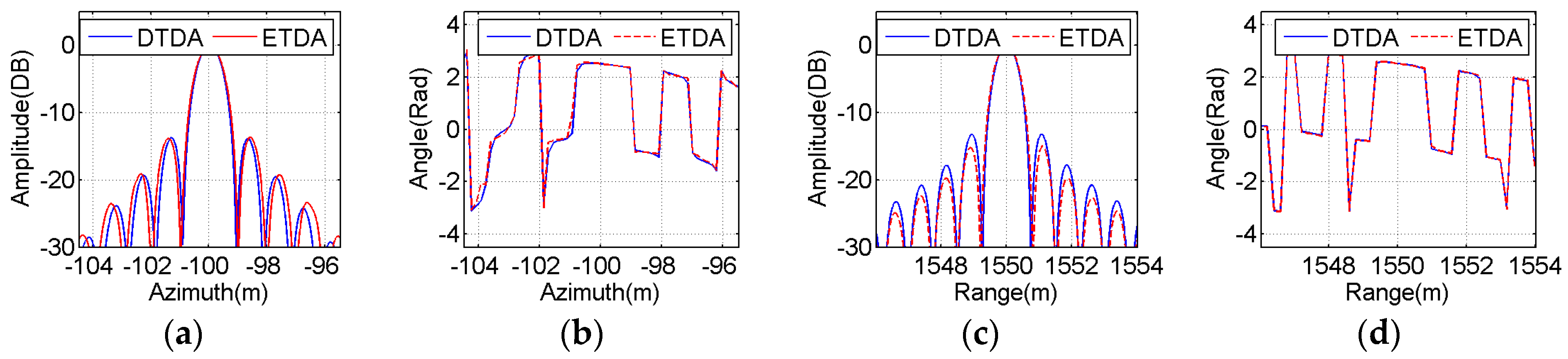

4.1. Simulated Data Results

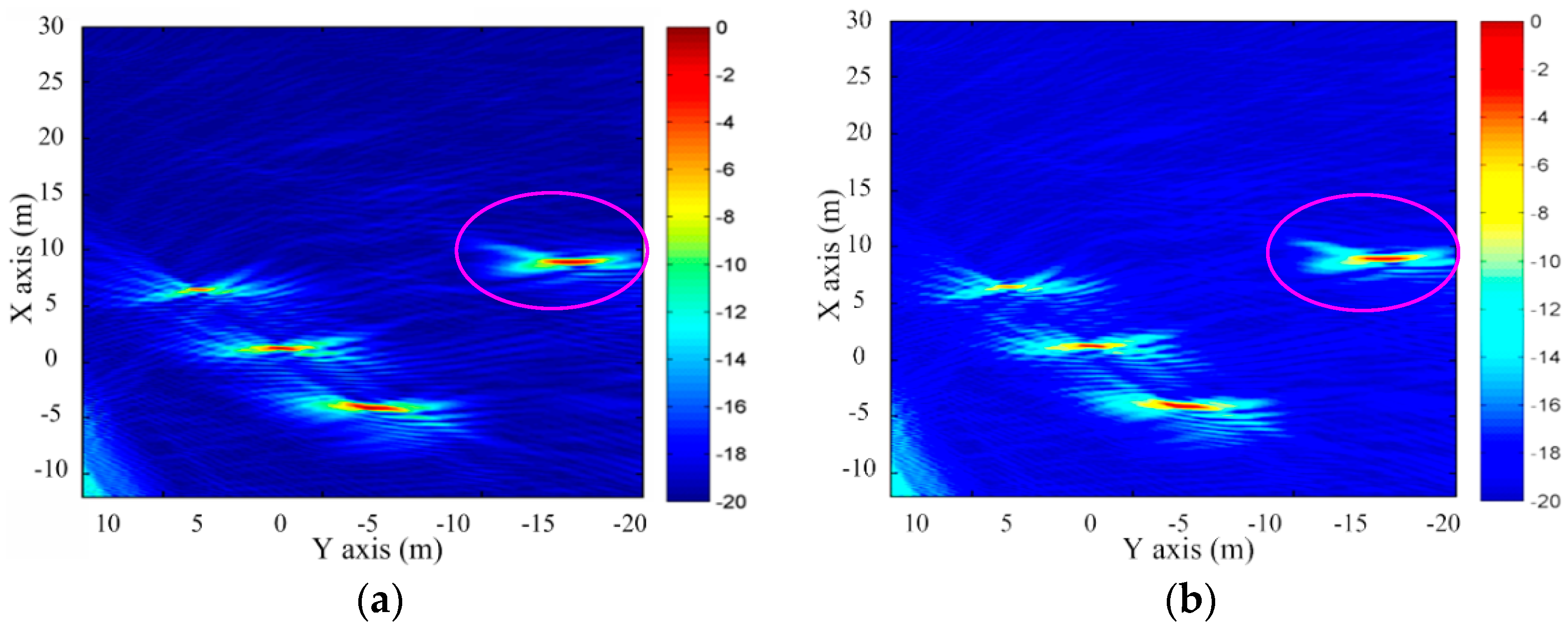

4.2. Measured Data Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 1PPS | One pulse per second |
| BP | Backprojection |
| SAR | Synthetic aperture radar |
| BSAR | Bistatic SAR |
| OS-BSAR | One-stationary BSAR |
| BFSAR | Bistatic forward-looking SAR |
| OS-BFSAR | One-stationary BFSAR |
| BSSAR | Bistatic side-looking SAR |
| OS-BSSAR | One-stationary BSSAR |
| PAMIR | Phased array multifunctional imaging radar |
| UWB | Ultrawideband |
| PSLR | Peak sidelobe ratio |
| ISLR | Integrated sidelobe ratio |
| RFI | Radio frequency interference |
| FDA | Frequency-domain algorithm |
| TDA | Time-domain algorithm |
| DTDA | Direct TDA |
| ETDA | Efficient TDA |
| BPA | Backprojection algorithm |
| FBPA | Fast BPA |
| FFBPA | Fast factorized BPA |
| RDA | Range Doppler algorithm |
| OKA | Omega-k algorithm |
| CSA | Chirp scaling algorithm |
| NLCSA | Nonlinear CSA |
| P2P | Polar subimages into polar subimages |
| P2C | Polar subimages into the Cartesian image |
| FFT | Fast Fourier transform |
| IFFT | Inverse FFT |
| GPS | Global positioning system |
| VCO | Voltage-controlled oscillator |
| DPLL | Digital phase-locked loop |
| PC | Personal computer |
| CPU | Central processing unit |
| GPU | Graphics processing unit |
| RAM | Random access memory |


| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Carrier frequency | 700 MHz | Signal bandwidth | 200 MHz |
| Sampling frequency | 220 MHz | Pulse duration | 1 μs |
| Pulse repetition frequency | 120 Hz | Stationary radar position | (0, 0, 20) m |
| Moving radar ideal speed | 45 m/s | Moving radar ideal altitude | 100 m |

| Algorithm | Measured parameter | Direction | Scatterer C | Scatterer E | Scatterer G |
|---|---|---|---|---|---|
| Bistatic DTDA | Resolution (m) | Azimuth | 0.9470 | 0.8863 | 0.8240 |
| Bistatic DTDA | Resolution (m) | Range | 0.6702 | 0.6703 | 0.6705 |
| Bistatic DTDA | PSLR (dB) | Azimuth | −13.53 | −13.60 | −13.76 |
| Bistatic DTDA | PSLR (dB) | Range | −13.22 | −12.26 | −13.26 |
| Bistatic DTDA | ISLR (dB) | Azimuth | −10.51 | −10.41 | −9.68 |
| Bistatic DTDA | ISLR (dB) | Range | −10.21 | −10.43 | −9.73 |
| Proposed ETDA | Resolution (m) | Azimuth | 0.9486 | 0.8873 | 0.8288 |
| Proposed ETDA | Resolution (m) | Range | 0.6711 | 0.6713 | 0.6716 |
| Proposed ETDA | PSLR (dB) | Azimuth | −13.47 | −13.36 | −13.87 |
| Proposed ETDA | PSLR (dB) | Range | −15.34 | −15.19 | −15.01 |
| Proposed ETDA | ISLR (dB) | Azimuth | −10.62 | −10.46 | −9.71 |
| Proposed ETDA | ISLR (dB) | Range | −10.32 | −10.49 | −9.84 |
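The range figures above can be compared with the ideal response of an unweighted rectangular spectrum. That response is a sinc, with a half-power width of about 0.886 in normalized units, a PSLR of about −13.26 dB and an ISLR of about −9.68 dB. The sketch below computes these constants numerically. It then converts the width into a range resolution using the standard bistatic expression ρ_r = 0.886·c/(2B·cos(β/2)), where β is the bistatic angle. The bandwidth B = 200 MHz comes from the simulation parameters, and β is treated as an input. The function names are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def sinc_response_metrics(n=1_000_001):
    """Half-power width, PSLR (dB) and ISLR (dB) of the unweighted sinc response."""
    x = np.linspace(0.0, 1.0, n)          # half of the main lobe; first null at x = 1
    s2 = np.sinc(x) ** 2                  # np.sinc(x) = sin(pi x) / (pi x)
    dx = x[1] - x[0]
    # First sample where power drops below one half gives the half-power half-width.
    width = 2.0 * x[np.argmax(s2 < 0.5)]
    # Main-lobe energy by the trapezoidal rule; the total energy of sinc^2 over the real line is 1.
    e_main = 2.0 * dx * (s2.sum() - 0.5 * (s2[0] + s2[-1]))
    islr_db = 10.0 * np.log10((1.0 - e_main) / e_main)
    # The highest sidelobe lies between the first and second nulls (1 < x < 2).
    xs = np.linspace(1.0, 2.0, n)
    pslr_db = 10.0 * np.log10(np.max(np.sinc(xs) ** 2))
    return width, pslr_db, islr_db

def bistatic_range_resolution(bandwidth, beta=0.0):
    """Half-power range resolution (m) for bandwidth (Hz) and bistatic angle beta (rad)."""
    width, _, _ = sinc_response_metrics()
    return width * C / (2.0 * bandwidth * np.cos(beta / 2.0))
```

With β = 0, `bistatic_range_resolution(200e6)` gives about 0.664 m. That is slightly below the 0.670 to 0.672 m measured for both algorithms, as expected, because the bistatic factor 1/cos(β/2) ≥ 1 can only widen the response.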

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Signal frequency | P-band | Sampling frequency | 220 MHz |
| Pulse repetition frequency | 500 Hz | Pulse duration | 100 ns |
| Stationary radar position | (−54, 0, 6) m | Moving radar initial position | (−40, 26, 4) m |
| Moving radar ideal speed | 12.8 km/h | Moving radar ideal altitude | 4 m |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Xie, H.; Shi, S.; Xiao, H.; Xie, C.; Wang, F.; Fang, Q. Efficient Time-Domain Imaging Processing for One-Stationary Bistatic Forward-Looking SAR Including Motion Errors. Sensors 2016, 16, 1907. https://doi.org/10.3390/s16111907
