Internet of Things Platform for Smart Farming: Experiences and Lessons Learnt

Abstract

1. Introduction

- Allows a bring-your-own IoT sensor principle, i.e., permits effortless integration and use of virtually any IoT device, including commercially available sensors, cameras, weather stations, etc. This reduces sensor installation and maintenance costs, while making it easy to upgrade to newer and more advanced sensors.

- Supports scalable data analytics that can continuously process large crop performance data.

- Offers do-it-yourself tools that allow plant biologists and farmers/growers to analyze and visualize plant performance data.

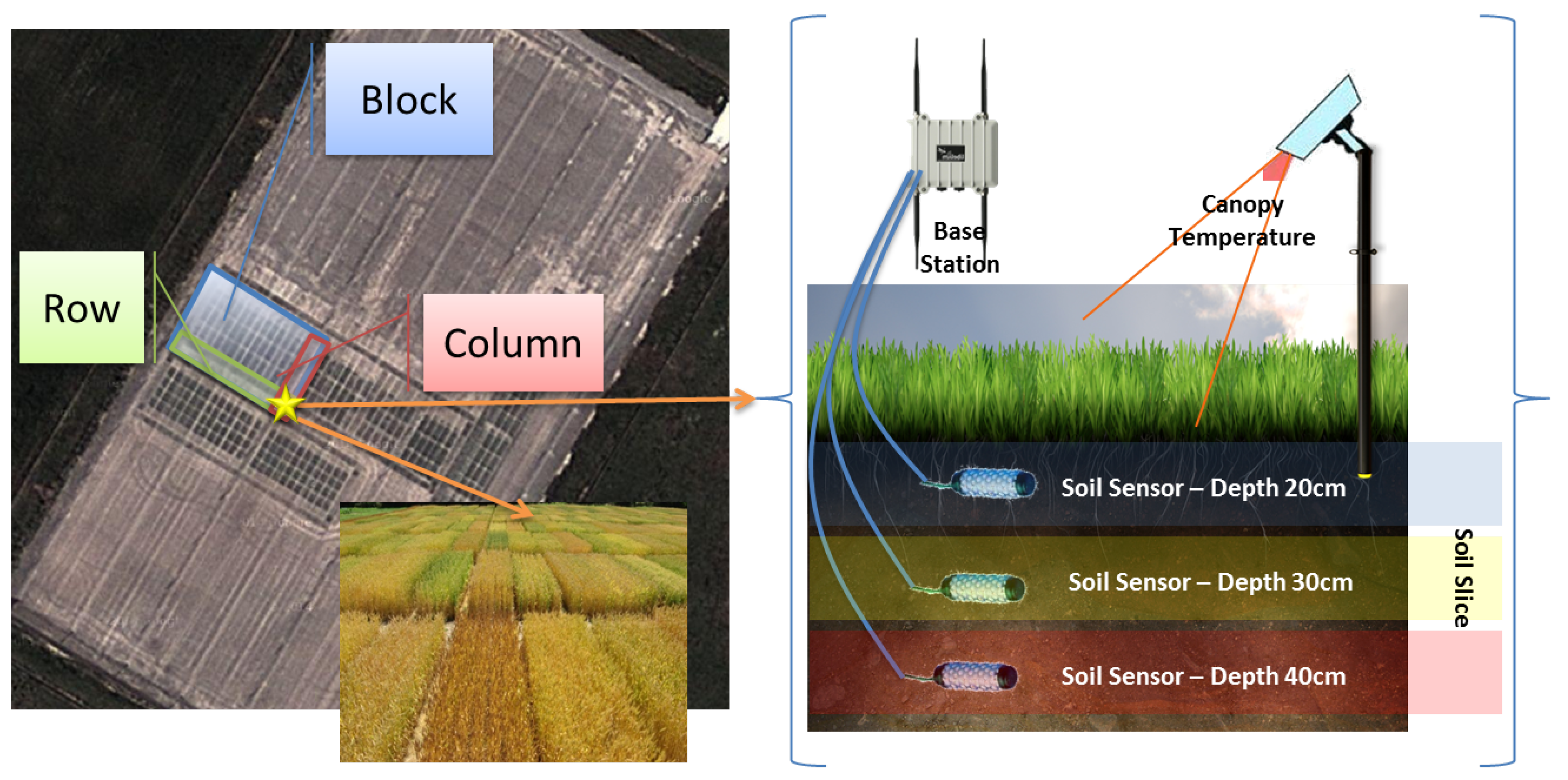

2. A Use Case and Related Challenges for Smart Farming

- Capturing the large volume of heterogeneous data produced by a variety of IoT sensors (and possibly manual measurements), and doing this for a large number and variety of activities involving different studies as well as crops.

- Supporting the integration and use of almost any IoT device, including all commercially available sensors, cameras, weather stations, etc. This achieves a bring-your-own sensor model of operation that allows farmers, growers, and scientists to take advantage of cheaper or more capable IoT sensors, and to accommodate individual preferences and budgets.

- Integrating heterogeneous data from such a great variety of IoT devices with historical crop performance data produced by past studies (such data and results are typically available only in CSV files, which makes them harder to use, analyse, explore, and share).

- Providing crop performance data analysis software and related tools for do-it-yourself search, analysis and visualisation of collected data across multiple studies.

- Sharing sensors, data, analysis tools, results, and data visualizations.

3. Related Work and Challenges in Building IoT-Based Platforms for Smart Farming

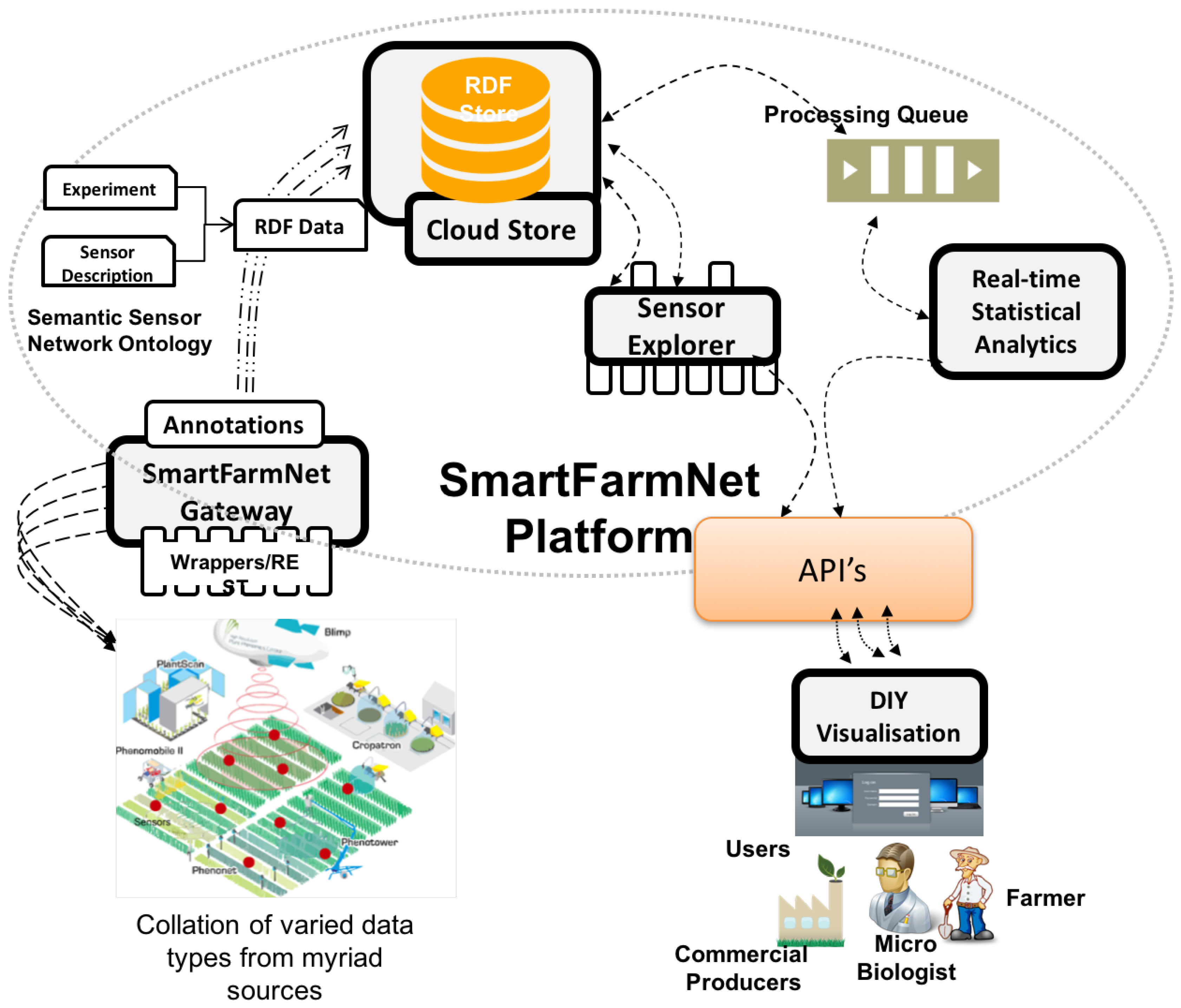

4. The SmartFarmNet IoT Platform for Smart Farming

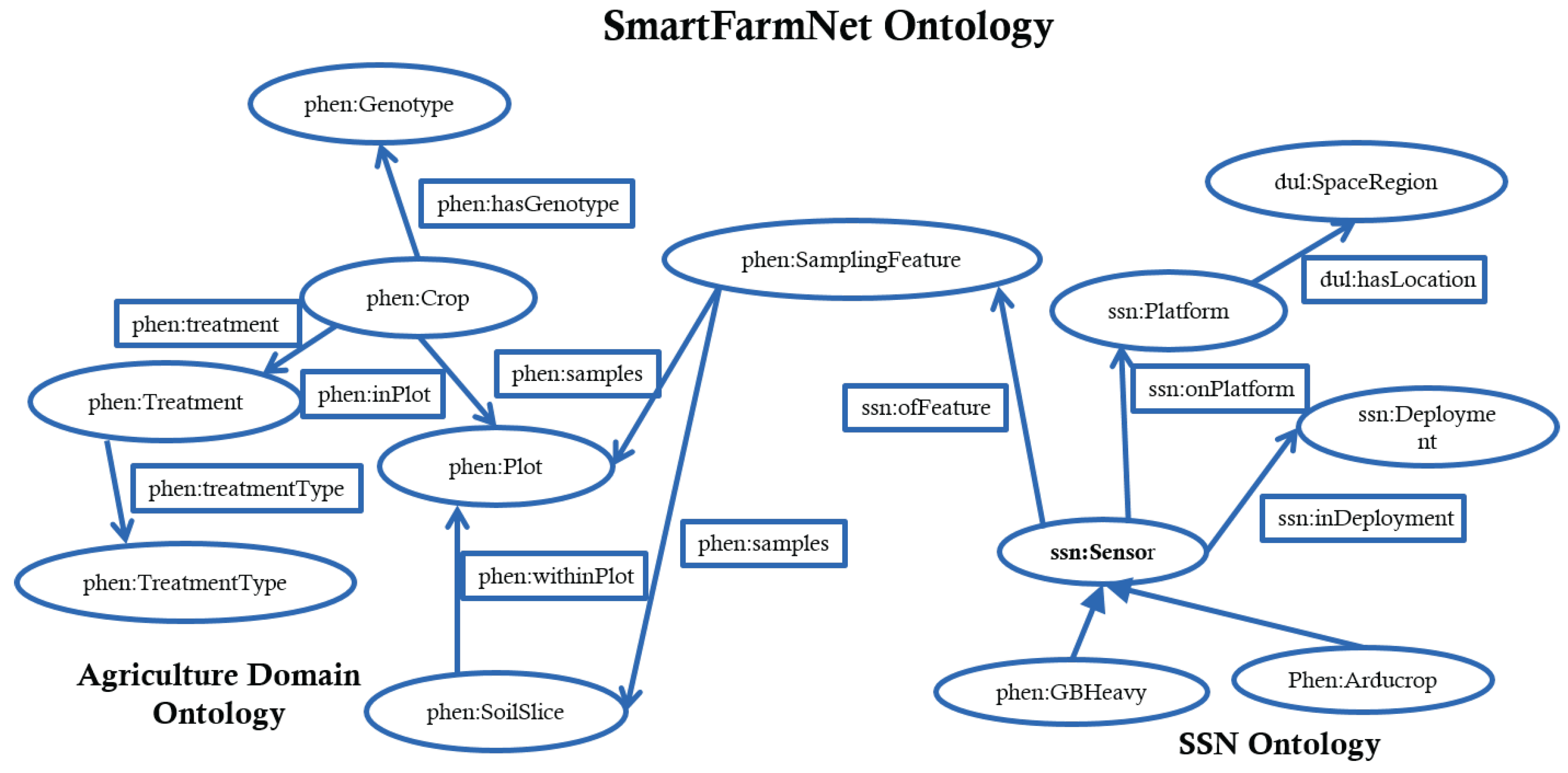

4.1. SmartFarmNet’s Data Model

- Any user can have zero or more studies.

- Any study can have zero or more nodes.

- Any node can have zero or more streams. Each node can also have latitude, longitude, and altitude values.

- Any stream is a set of (timestamp, value) pairs. Each stream has one unit of measurement.
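The hierarchy above can be sketched as a handful of nested record types. This is a minimal illustration only, not the platform's actual schema: the class and field names, the example identifiers, and the choice of a list to hold the (timestamp, value) pairs are all assumptions made for the sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Stream:
    """A series of (timestamp, value) pairs sharing one unit of measurement."""
    name: str
    unit: str
    readings: List[Tuple[datetime, float]] = field(default_factory=list)

    def add(self, timestamp: datetime, value: float) -> None:
        self.readings.append((timestamp, value))


@dataclass
class Node:
    """A node with zero or more streams; the location fields are optional."""
    name: str
    latitude: Optional[float] = None
    longitude: Optional[float] = None
    altitude: Optional[float] = None
    streams: List[Stream] = field(default_factory=list)


@dataclass
class Study:
    """A study with zero or more nodes."""
    name: str
    nodes: List[Node] = field(default_factory=list)


@dataclass
class User:
    """A user with zero or more studies."""
    name: str
    studies: List[Study] = field(default_factory=list)


# Hypothetical example data; none of these names or values come from the paper.
stream = Stream("canopy_temperature", unit="degC")
stream.add(datetime(2016, 7, 4, 9, 0), 18.4)
node = Node("example-node", streams=[stream])
study = Study("example-study", nodes=[node])
user = User("example-user", studies=[study])
```

Every level defaults to an empty collection, which mirrors the "zero or more" wording of the model: a user without studies or a node without streams is still a valid record.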

4.2. Bring-Your-Own Sensors Support via the Semantic Sensor Network Ontology

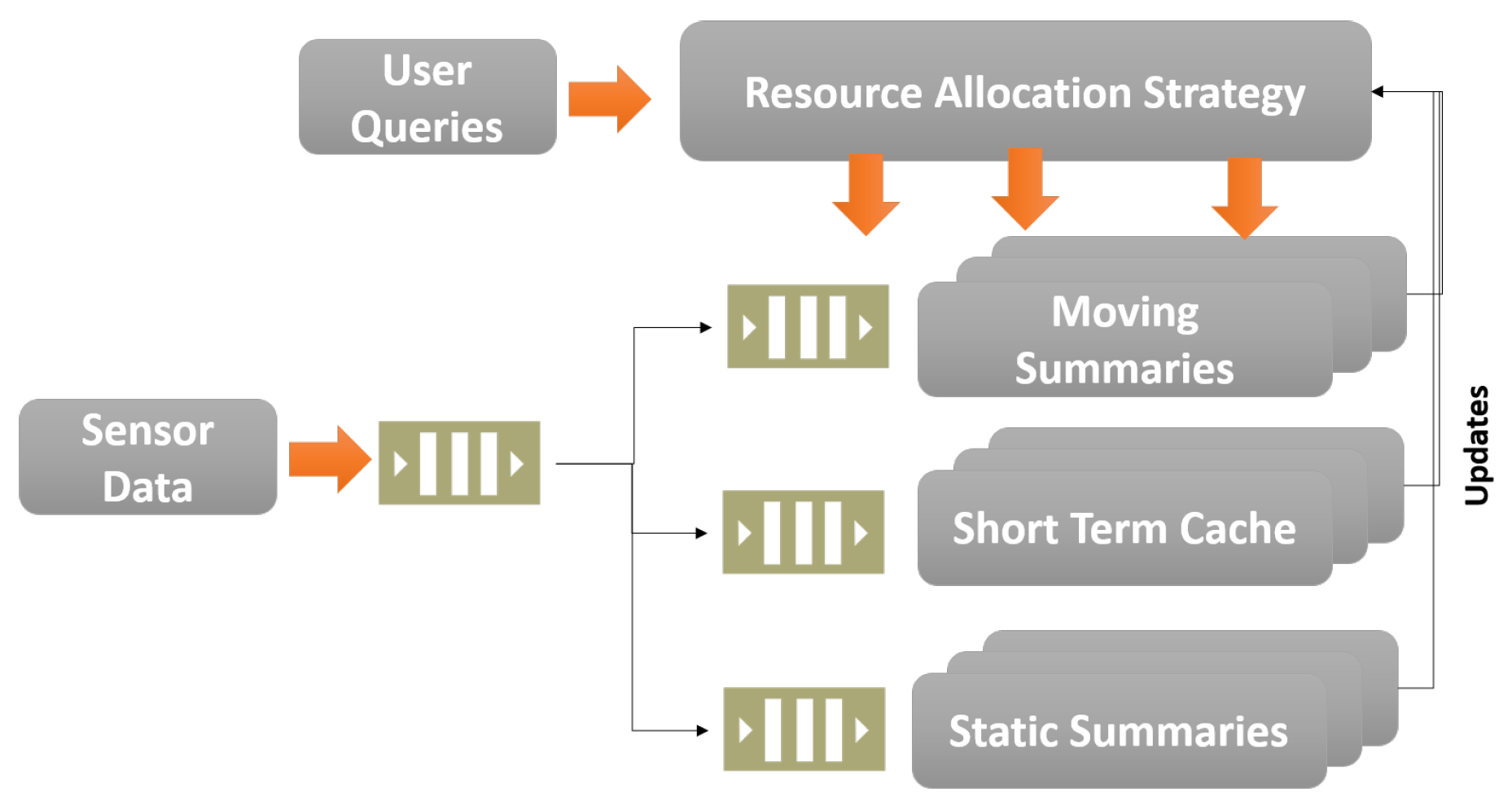

4.3. Scalable Data Analysis of Sensor Data in SmartFarmNet

4.4. SmartFarmNet Architecture

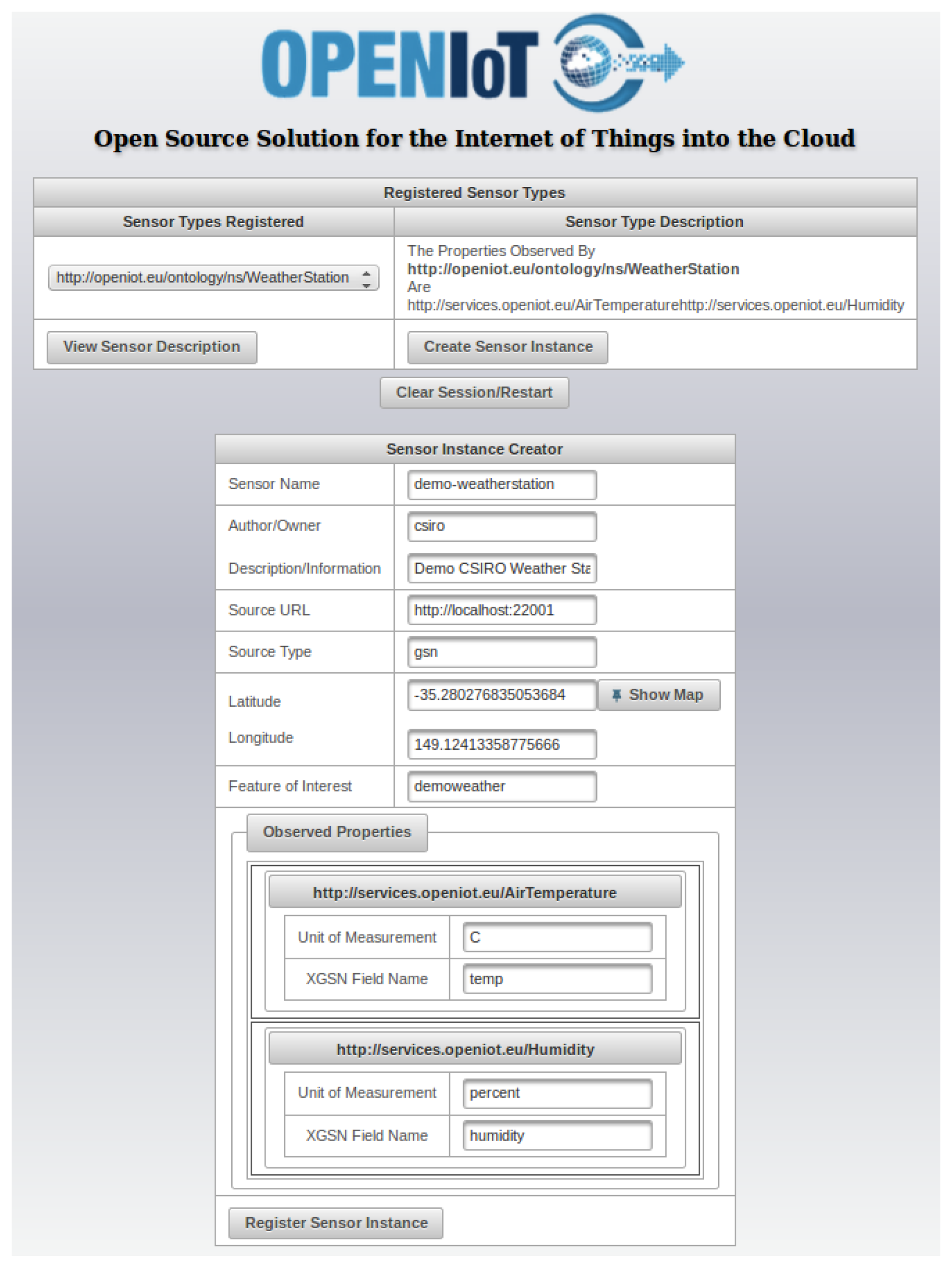

- SmartFarmNet gateway: This collects, filters, and collates data streams from virtually any IoT device. The SmartFarmNet gateway uses the OpenIoT X-GSN component [31] for data ingestion. The component communicates with sensors using wrappers. A wrapper is an interface that allows the gateway to pull/push data from/to the underlying IoT device. The SmartFarmNet platform currently has inbuilt support for data ingestion from more than thirty IoT device platforms, including Arduino, Netatmo, Libelium WaspMotes, Remote, IP-Cameras (to name a few). It also provides support for virtual sensors such as CSV files. The data from the IoT devices are annotated on-the-fly with metadata describing the IoT device and the data is encoded using the SmartFarmNet ontology described in Section 4.2. In Section 5, we will introduce the sensor schema editor that is used to describe all employed sensors via a graphical user interface. This graphical interface hides the complexities of dealing with ontologies from the user, and also provides support for the uploading of historical data. The gateway can be deployed across multiple sensor sites/locations, and data could potentially be aggregated at an intermediate location (via SmartFarmNet gateways) or in the cloud. The annotated data from the IoT devices are represented using the resource description framework (RDF) and stored in the cloud store. A sample RDF stream computed from the description of a sensor is presented in the listing below. This listing involves a Canopy Temperature sensor deployed in plot 4001, which is part of the study kirkegaard-and-danish.

    <rdf:Description rdf:about="http://sensordb.csiro.au/phenonet/sensor/arducrop/20140611-1962-0012">
      <ssn:onPlatform rdf:resource="http://sensordb.csiro.au/phenonet/experiment/kirkegaard-and-danish/plot/4001/platform/phen077"/>
      <ssn:inDeployment rdf:resource="http://sensordb.csiro.au/phenonet/deployment/site/ges-creek-range/20140611-1962-0000"/>
      <ssn:ofFeature rdf:resource="http://sensordb.csiro.au/phenonet/experiment/kirkegaard-and-danish/plot/4001/sf"/>
      <rdfs:label rdf:datatype="http://www.w3.org/2001/XMLSchema#string">Canopy Temp</rdfs:label>
      <rdf:type rdf:resource="http://sensordb.csiro.au/ontology/phenonet#ArduCrop"/>
    </rdf:Description>

- Cloud Store: This enables the storage and management of data streams generated by the SmartFarmNet gateway. The cloud store uses the linked sensor middleware-light (LSM-Light) component of OpenIoT [31]. The cloud infrastructure stores all of the relevant sensor annotations (descriptions and metadata), the ontology, functional data related to user accounts, and permissions to enforce privacy and security. SmartFarmNet uses a semantic data store (a NoSQL graph database) to store the sensor data in RDF format. It internally implements publish/subscribe queues to handle large streams of sensor data stemming from virtual and physical sensors. The component provides APIs to perform the basic create, read, update and delete (CRUD) operations over the sensor data, and is responsible for transforming the data generated by the IoT devices into RDF triples using the SmartFarmNet ontologies.

- Sensor Explorer: This is responsible for the sensor discovery function, a novel feature of the SmartFarmNet platform. The discovery feature uses ontology matching, allowing collected data to be re-used or re-purposed (e.g., data gathered for one study can be re-purposed for another study). For example, using the SmartFarmNet ontology, a semantic reasoner can discover data sources (IoT devices) that measure canopy temperature and are deployed in plots that have had a specific nitrogen fertilizer treatment applied (a study property). By using semantic matching and reasoning methods, the discovery process offers many more features than a simple filtering operation. The sensor explorer uses the Scheduler and the Service Delivery and Utility Manager of OpenIoT [31] for sensor and data discovery.
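The flow described by the three components above (annotate sensor descriptions at the gateway, keep them as triples, then discover sensors by property and treatment) can be illustrated with a small in-memory sketch. It is deliberately simplified: the predicate names `observes`, `deployedOnPlot` and `hasTreatment`, the sensor and plot identifiers, and the plain set-based join are all hypothetical stand-ins, and the sketch does not perform the ontology matching or reasoning that the Sensor Explorer relies on.

```python
from typing import Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical predicate names; the real platform uses its own ontology terms.
OBSERVES = "observes"
ON_PLOT = "deployedOnPlot"
TREATMENT = "hasTreatment"


def annotate(sensor_id: str, observed_property: str, plot: str) -> List[Triple]:
    """Gateway side: describe a sensor as subject-predicate-object triples."""
    return [
        (sensor_id, OBSERVES, observed_property),
        (sensor_id, ON_PLOT, plot),
    ]


def discover(triples: Iterable[Triple], observed_property: str, treatment: str) -> Set[str]:
    """Explorer side: sensors observing a property on plots with a given treatment."""
    store = set(triples)
    treated_plots = {s for (s, p, o) in store if p == TREATMENT and o == treatment}
    observing = {s for (s, p, o) in store if p == OBSERVES and o == observed_property}
    return {
        s for (s, p, o) in store
        if p == ON_PLOT and o in treated_plots and s in observing
    }


# Made-up deployment: three sensors on two plots with different treatments.
store: List[Triple] = []
store += annotate("sensor-a", "canopy_temperature", "plot-1")
store += annotate("sensor-b", "canopy_temperature", "plot-2")
store += annotate("sensor-c", "soil_moisture", "plot-1")
store.append(("plot-1", TREATMENT, "high_nitrogen"))
store.append(("plot-2", TREATMENT, "low_nitrogen"))

matches = discover(store, "canopy_temperature", "high_nitrogen")
print(sorted(matches))  # ['sensor-a']
```

Because the treatment is attached to the plot rather than to the sensor, the same sensor descriptions can be reused when a later study asks a different question about the same plots, which is the re-purposing idea behind the discovery feature.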

5. SmartFarmNet Platform Implementation

5.1. Do-It-Yourself User Interfaces

5.1.1. Sensor Schema Editor

5.1.2. Sensor Data Visualisation

- Resource Discovery: The user interface allows a user to search for sensors based on domain-based criteria, including genotype, crop treatment, and the barcodes used to identify studies, as well as sensor-based criteria, such as a specific platform or location dynamically obtained from the ontology (discovery based on location and genotype, i.e., Revenue, is presented on the left side of Figure 7).

- Query Composition: The provided search interface presents a list of sensors matching the discovery criteria supplied by the user (depicted in the bottom part of Figure 7). The user can then compose a query targeted at the selected sensors. Internally, this makes a request to the Sensor Explorer and the real-time statistical analysis components.

- Service Visualisation: This visualizes the fetched data using one of the provided visualisation outputs, such as the time-series graph illustrated in the top part of Figure 7.

6. SmartFarmNet Evaluation

    select ?sensor ?values from <graphname> where {
      ?sensor ssn:type ?type .
      ?type ssn:observes <http://purl.oclc.org/NET/ssnx/cf/cf-property#soil_suction_at_saturation> .
      ?sensor ssn:ofFeature ?samples .
      ?sensor ssn:Observation ?observation .
      ?observation ssn:ObservationValue ?values .
      ?samples phenonet:samples ?plot .
      ?crop phenonet:treated/phenonet:treatmentType ?treatment .
      FILTER (bound(?treatment) && (?treatment = <http://sensordb.csiro.au/id/treatment_type/grazed_high_n>))
    }
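A query of this shape could be composed from user-selected criteria rather than written by hand. The helper below is a hedged sketch of that idea, not the platform's implementation: the function names, the example graph and property IRIs, and the decision to parameterize only the graph, the observed property and the treatment are assumptions. It also assumes the `ssn:` and `phenonet:` prefixes are declared elsewhere, as in the query above.

```python
# Characters that SPARQL does not allow inside an <...> IRI reference.
_FORBIDDEN = set('<>"{}|\\^`')


def _iri(value: str) -> str:
    """Wrap a value as an IRI reference, rejecting characters that could break the query."""
    if not value or any(ch in _FORBIDDEN or ord(ch) <= 0x20 for ch in value):
        raise ValueError(f"not a usable IRI: {value!r}")
    return f"<{value}>"


def compose_query(graph: str, observed_property: str, treatment: str) -> str:
    """Build the evaluation query for one observed property and one treatment type."""
    lines = [
        f"select ?sensor ?values from {_iri(graph)} where {{",
        "  ?sensor ssn:type ?type .",
        f"  ?type ssn:observes {_iri(observed_property)} .",
        "  ?sensor ssn:ofFeature ?samples .",
        "  ?sensor ssn:Observation ?observation .",
        "  ?observation ssn:ObservationValue ?values .",
        "  ?samples phenonet:samples ?plot .",
        "  ?crop phenonet:treated/phenonet:treatmentType ?treatment .",
        f"  FILTER (bound(?treatment) && (?treatment = {_iri(treatment)}))",
        "}",
    ]
    return "\n".join(lines)


# The graph and property IRIs are placeholders; the treatment IRI is the one used above.
query = compose_query(
    graph="http://example.org/graph",
    observed_property="http://example.org/property#canopy_temperature",
    treatment="http://sensordb.csiro.au/id/treatment_type/grazed_high_n",
)
print(query)
```

Validating each IRI before interpolation keeps a malformed or malicious search criterion from altering the structure of the query.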

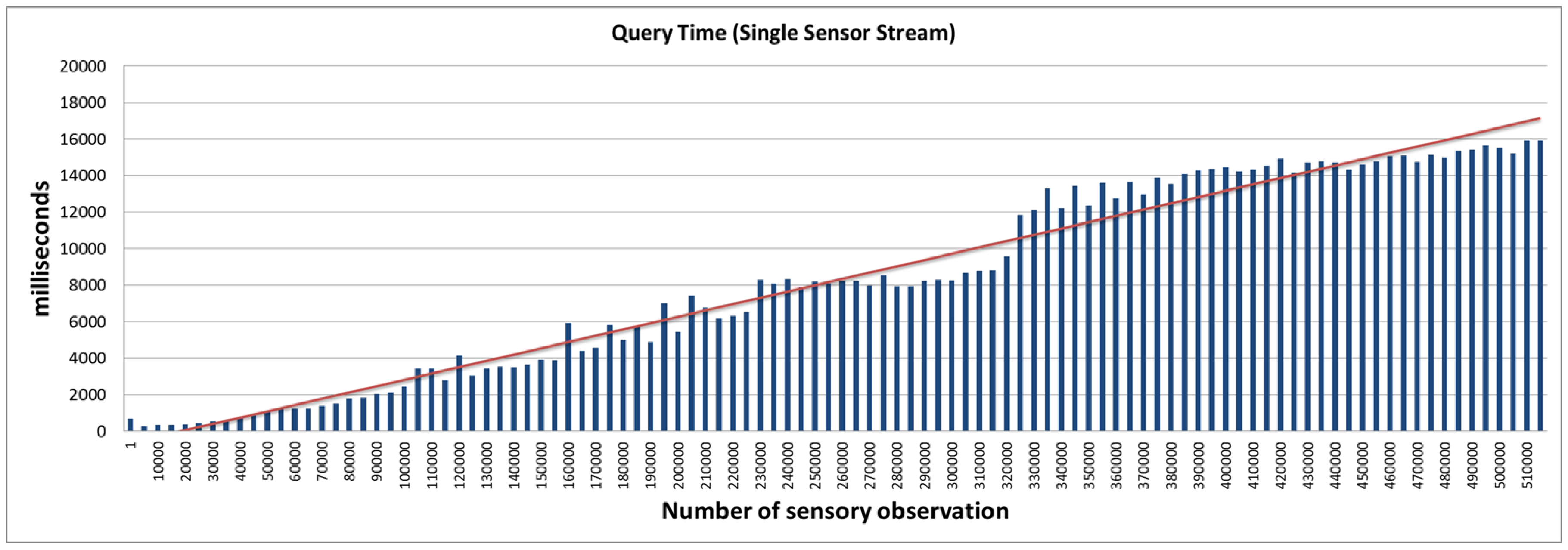

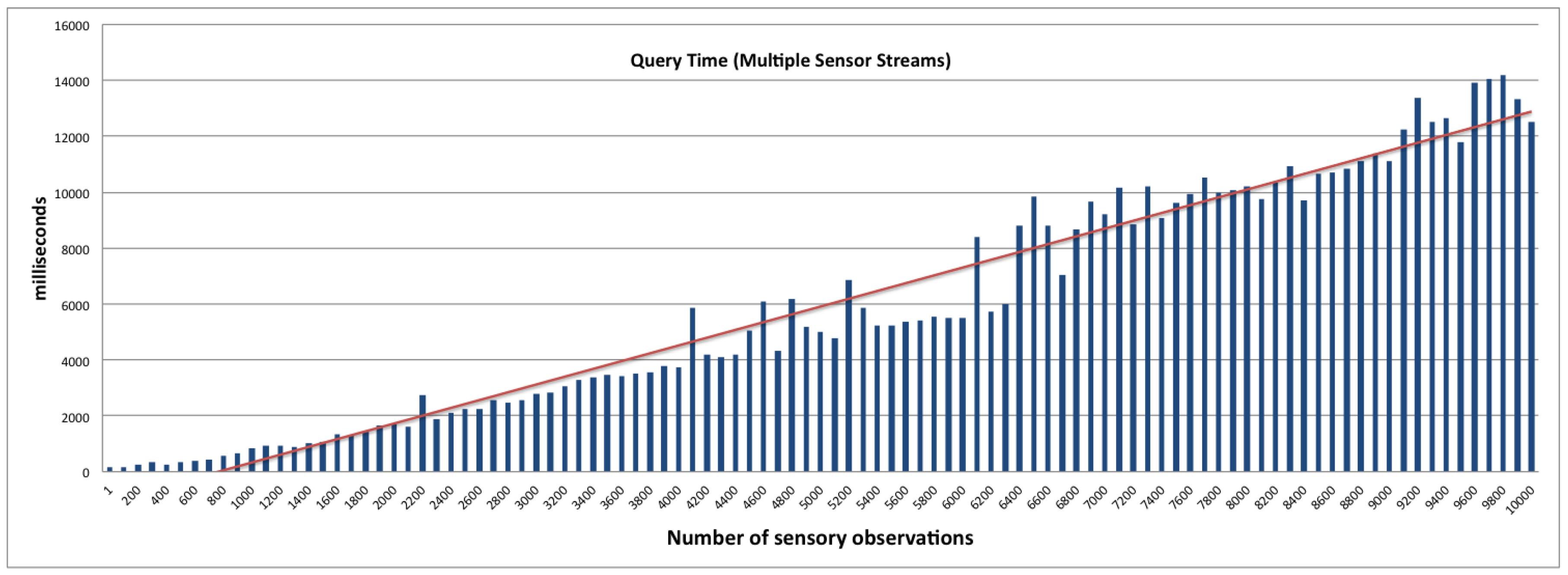

6.1. Query Access Performance Latency

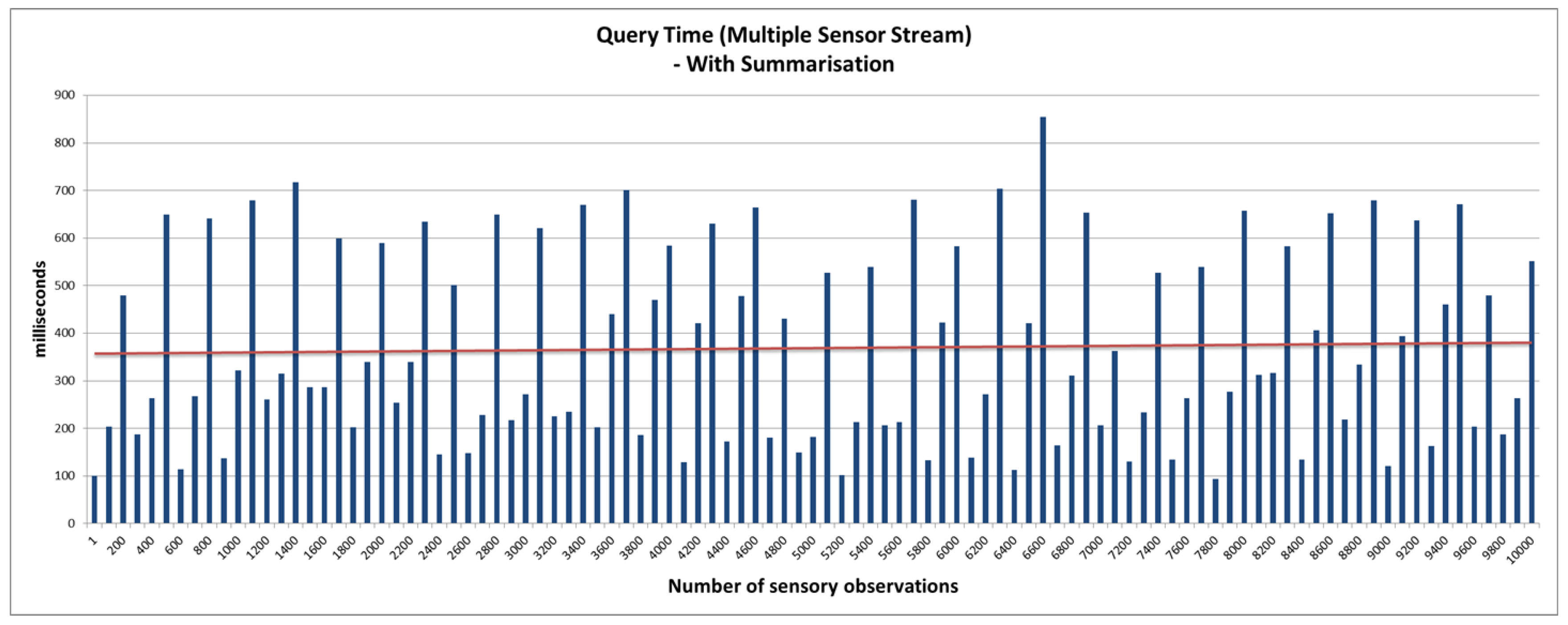

6.2. Evaluation of Micro Summary Computation

7. Lessons Learnt

- Support for virtually any IoT device: The key challenge that we faced in developing the SmartFarmNet platform was managing the plethora of Internet of Things devices, ranging from wireless sensor networks to mobile smart phones to cameras, etc. Our solution was to focus on developing common interfaces (API) and consistent representation of sensors and their data using semantic web technologies and thus moving away from the traditional packaged hardware/software solutions. By using a consistent way of representing data and providing different means to ingest data into the system (from API to wrappers built in Java, Python, R, etc.), we were able to interoperate between IoT hardware silos.

- Provide rapid analysis of data in real-time: One fundamental challenge that underpins most IoT platforms is their ability to perform fast analysis of data over a large number of sensor data streams. By employing real-time statistical data analysis, the SmartFarmNet platform was able to achieve this objective. The platform incorporates a scalable methodology that delivers near real-time query response times compared with traditional SQL-based systems [4].

- Integration with Semantic Web: By using semantic web technologies such as ontologies and linked open data, we were able to (1) use currently available semantic web standards, allowing the system to exchange data with other IoT services on the internet; and (2) enforce standards in IoT application development, a practice that is currently not prevalent in most existing solutions. Using semantic web technologies also enabled the platform to be easily extended to new domains, such as aquaculture, cotton growing, etc. In certain cases, SmartFarmNet was also used as a diagnostic tool to understand the performance of the underlying sensor network and detect failures using a pre-computed error estimate for each sensor.

- Do-it-yourself approach for visualisation and analysis of data: The design objective of SmartFarmNet was to empower its users by providing standard tools combined with a flexible and powerful API. By employing a do-it-yourself approach, SmartFarmNet has reached a wider set of users and enabled them to collect crop performance data with any sensor(s). The ability for a farmer to explore and analyse crop growth data using simple selection based on familiar concepts such as crop phenotype, treatment, etc., was very useful in breaking many barriers to the SmartFarmNet uptake.

8. Conclusions

Author Contributions

Conflicts of Interest

References

- Department of Agriculture and Water Resources, Australian Government. Maintaining Food Security in Australia. June 2016. Available online: http://www.agriculture.gov.au/ag-farm-food/food/publications/national_food_plan/white-paper/5-1-maintaining-food-security-in-australia (accessed on 6 September 2016).

- FAO. 2016. Available online: http://www.fao.org/home/en/ (accessed on 8 July 2016).

- Phenomics. 2016. Available online: https://en.wikipedia.org/wiki/Phenomics (accessed on 11 July 2016).

- Salehi, A.; Jimenez-Berni, J.; Deery, D.M.; Palmer, D.; Holland, E.; Rozas-Larraondo, P.; Chapman, S.C.; Georgakopoulos, D.; Furbank, R.T. SensorDB: A virtual laboratory for the integration, visualization and analysis of varied biological sensor data. Plant Methods 2015, 11, 53.

- Jayaraman, P.P.; Palmer, D.; Zaslavsky, A.; Georgakopoulos, D. Do-it-Yourself Digital Agriculture applications with semantically enhanced IoT platform. In Proceedings of the 2015 IEEE Tenth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Singapore, 7–9 April 2015; pp. 1–6.

- Georgakopoulos, D.; Jayaraman, P.P. Internet of things: From internet scale sensing to smart services. Computing 2016, 98, 10.

- MySQL. 2016. Available online: https://www.mysql.com (accessed on 10 July 2016).

- Big Data-Startup. 2016. Available online: https://datafloq.com/read/j (accessed on 6 July 2016).

- Analytics in Agriculture. 2016. Available online: http://www-01.ibm.com/ (accessed on 6 July 2016).

- SensorCloud. 2016. Available online: http://www.sensorcloud.com (accessed on 10 July 2016).

- UBIDOTS. 2016. Available online: http://www.ubidots.com (accessed on 10 July 2016).

- Xively. 2016. Available online: http://xively.com (accessed on 10 July 2016).

- Think Speak. 2016. Available online: http://www.thinkspeak.com (accessed on 10 July 2016).

- opensen. 2016. Available online: http://open.sen.se (accessed on 10 July 2016).

- Amazon IoT Platform. 2016. Available online: https://aws.amazon.com/iot/how-it-works/ (accessed on 3 July 2016).

- IBM Watson Internet of Things. 2016. Available online: http://www.ibm.com/internet-of-things/ (accessed on 18 July 2016).

- Open Source IoT platform. 2016. Available online: https://site.google.com/site/opensourceiotcloud/ (accessed on 12 July 2016).

- Data Turbine. 2016. Available online: http://www.dataturbine.org/ (accessed on 12 July 2016).

- Apache Storm. 2016. Available online: https://github.com/nathanmarz/storm/wiki (accessed on 14 July 2016).

- Libelium. 2016. Available online: http://www.libelium.com/ (accessed on 14 July 2016).

- Arduino. 2016. Available online: http://www.libelium.com/ (accessed on 14 July 2016).

- tMotes. 2016. Available online: http://wirelesssensornetworks.weebly.com/1/post/2013/08/tmote-sky.html (accessed on 14 July 2016).

- Zaslavsky, A.; Jayaraman, P.P. Discovery in the Internet of Things: The Internet of Things (Ubiquity Symposium). Ubiquity 2015, 2015, 1–10.

- Dawes, N.; Kumar, K.A.; Michel, S.; Aberer, K.; Lehning, M. Sensor Metadata Management and Its Application in Collaborative Environmental Research. In Proceedings of the IEEE Fourth International Conference on eScience, eScience ’08, Bloomington, IN, USA, 7 December 2008; pp. 143–150.

- Jeung, H.; Sarni, S.; Paparrizos, I.; Sathe, S.; Aberer, K.; Dawes, N.; Papaioannou, T.G.; Lehning, M. Effective Metadata Management in Federated Sensor Networks. In Proceedings of the 2010 IEEE International Conference on Sensor Networks, Ubiquitous, and Trustworthy Computing (SUTC), Newport Beach, CA, USA, 7–9 June 2010; pp. 107–114.

- Michel, S.; Salehi, A.; Luo, L.; Dawes, N.; Aberer, K.; Barrenetxea, G.; Bavay, M.; Kansal, A.; Kumar, K.A.; Nath, S.; et al. Environmental Monitoring 2.0 (Demonstration). In Proceedings of the 25th International Conference on Data Engineering (ICDE), Shanghai, China, 29 March–2 April 2009.

- SSN. 2016. Available online: https://www.w3.org/2005/Incubator/ssn/ssnx/ssn (accessed on 10 July 2016).

- Berners-Lee, T. Linked Data. 2006. Available online: http://www.w3.org/DesignIssues/LinkedData.html (accessed on 8 July 2016).

- RDF. 2016. Available online: http://www.w3c.org/RDF/ (accessed on 14 July 2016).

- DOLCE. 2016. Available online: http://www.loa.istc.cnr.it/old/DOLCE.html (accessed on 14 July 2016).

- Serrano, M.; Quoc, H.N.M.; Phuoc, D.L.; Hauswirth, M.; Soldatos, J.; Kefalakis, N.; Jayaraman, P.P.; Zaslavsky, A. Defining the Stack for Service Delivery Models and Interoperability in the Internet of Things: A Practical Case With OpenIoT-VDK. IEEE J. Sel. Areas Commun. 2015, 33, 676–689.

- JBOSS. 2016. Available online: http://www.jboss.org (accessed on 16 July 2016).

- Redis. 2016. Available online: http://redis.io/ (accessed on 14 July 2016).

- AWS. 2016. Available online: https://aws.amazon.com/ec2/ (accessed on 14 July 2016).

| Platform | Sensor Discovery | Bring-Your-Own IoT Device | Scalable Data Analysis | Sharing Sensor, Data, and Analysis Results (Virtual Lab) |
|---|---|---|---|---|
| UBIDOTS | Not supported | Yes, but requires considerable effort to develop new interfaces | No | No. Only provides API for raw data access |
| Xively | Partial support with no specific approach for metadata description/management | Yes, but requires considerable effort to develop new interfaces | No | No. Only provides API for raw data access |
| SensorCloud | Not supported | Supports only vendor-specific sensors (some support for CSV file data) | Partial | Partial |
| IBM Bluemix | Not supported | Yes, but requires considerable effort to develop new interfaces | Partial, with additional development required | Partial, by using existing Bluemix infrastructure as a service platform |
| Amazon IoT | Not supported | Yes, but requires considerable effort to develop new interfaces | Partial, with additional development required | Partial, by using existing EC2 infrastructure as a service platform |
| IoTCloud | Not supported | Yes, but requires considerable effort to develop new interfaces | No | No |
| Apache Storm | Not supported | Yes, but requires considerable effort to develop new interfaces | No | No |
| SmartFarmNet | Supported via semantic web technologies | Yes, with in-built support for 30+ commercial and experimental sensors | Yes, real-time data analytics functions are built in | Yes, easy-to-use e-commerce-like user interaction model |

| Component | Implementation Details |
|---|---|
| SmartFarmNet gateway (X-GSN) | Java-based semantic sensor stream processor. Arduino and ArduCrop sensor wrappers to interface with IoT devices |
| Cloud Data Store (LSM-Light) | LSM-Light developed using Java and the Open Virtuoso triple store |
| Sensor Explorer | Java applications deployed in JBOSS |
| Reasoner Service | Apache Jena supported by the SmartFarmNet OWL ontology |
| User Interfaces | Do-it-yourself tools developed in Java Server Faces (JSF) |
| Data Analytics | Redis [33] |

| Summarisation | Total Processing Time (ms) |
|---|---|
| Hourly | 6620 |
| Daily | 9971 |
| Weekly | 10,926 |
| Monthly | 24,543 |
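To make the four summarisation levels in the table concrete, the sketch below groups a stream of (timestamp, value) pairs into hourly, daily, weekly, or monthly windows and computes simple statistics per window. It rests on stated assumptions rather than on the platform's actual method: that a micro summary is a per-window aggregate of count, minimum, maximum and mean, that weeks start on Monday, and that the function names and sample readings are invented for illustration. It says nothing about how the reported processing times were obtained.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[datetime, float]


def window_start(ts: datetime, window: str) -> datetime:
    """Return the start of the hourly/daily/weekly/monthly window containing ts."""
    if window == "hourly":
        return ts.replace(minute=0, second=0, microsecond=0)
    midnight = ts.replace(hour=0, minute=0, second=0, microsecond=0)
    if window == "daily":
        return midnight
    if window == "weekly":
        return midnight - timedelta(days=ts.weekday())  # assumption: weeks start on Monday
    if window == "monthly":
        return midnight.replace(day=1)
    raise ValueError(f"unknown window: {window}")


def summarise(readings: Iterable[Reading], window: str) -> Dict[datetime, Dict[str, float]]:
    """Aggregate readings into per-window count, min, max and mean."""
    groups: Dict[datetime, List[float]] = defaultdict(list)
    for ts, value in readings:
        groups[window_start(ts, window)].append(value)
    return {
        start: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": sum(values) / len(values),
        }
        for start, values in groups.items()
    }


# Made-up readings spanning two hours and two days.
readings = [
    (datetime(2016, 7, 4, 9, 5), 10.0),
    (datetime(2016, 7, 4, 9, 50), 14.0),
    (datetime(2016, 7, 4, 10, 10), 20.0),
    (datetime(2016, 7, 5, 8, 0), 30.0),
]

hourly = summarise(readings, "hourly")
print(hourly[datetime(2016, 7, 4, 9)]["mean"])  # 12.0
```

Precomputing aggregates like these per window means a query over a long period reads a few summary records instead of every raw reading, which is one plausible reason for keeping such summaries in a fast key-value store.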

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Jayaraman, P.P.; Yavari, A.; Georgakopoulos, D.; Morshed, A.; Zaslavsky, A. Internet of Things Platform for Smart Farming: Experiences and Lessons Learnt. Sensors 2016, 16, 1884. https://doi.org/10.3390/s16111884
