Epipolar Rectification with Minimum Perspective Distortion for Oblique Images

Abstract

1. Introduction

2. Methodology

2.1. Algorithm Principle

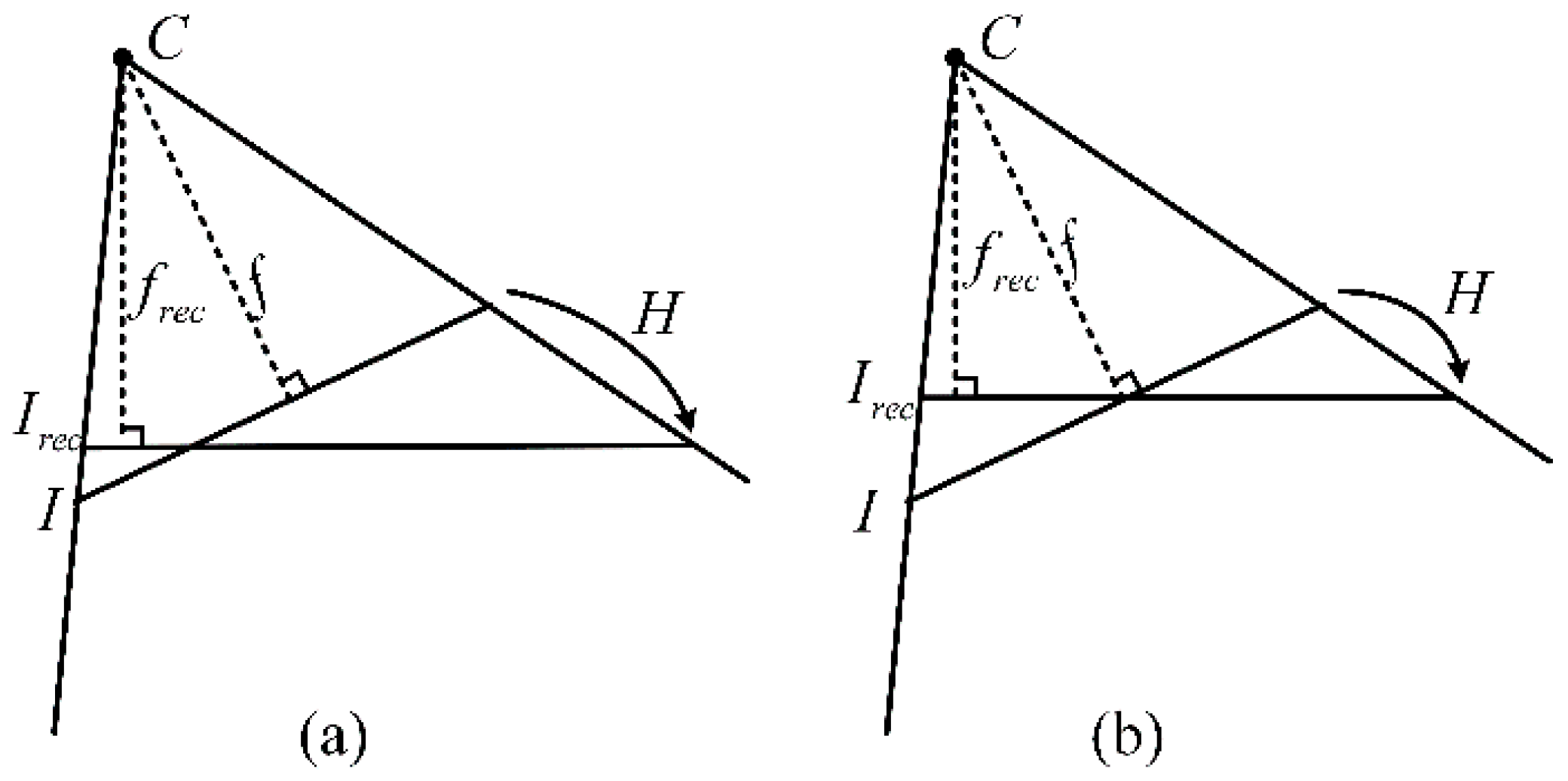

2.1.1. Homographic Transformation

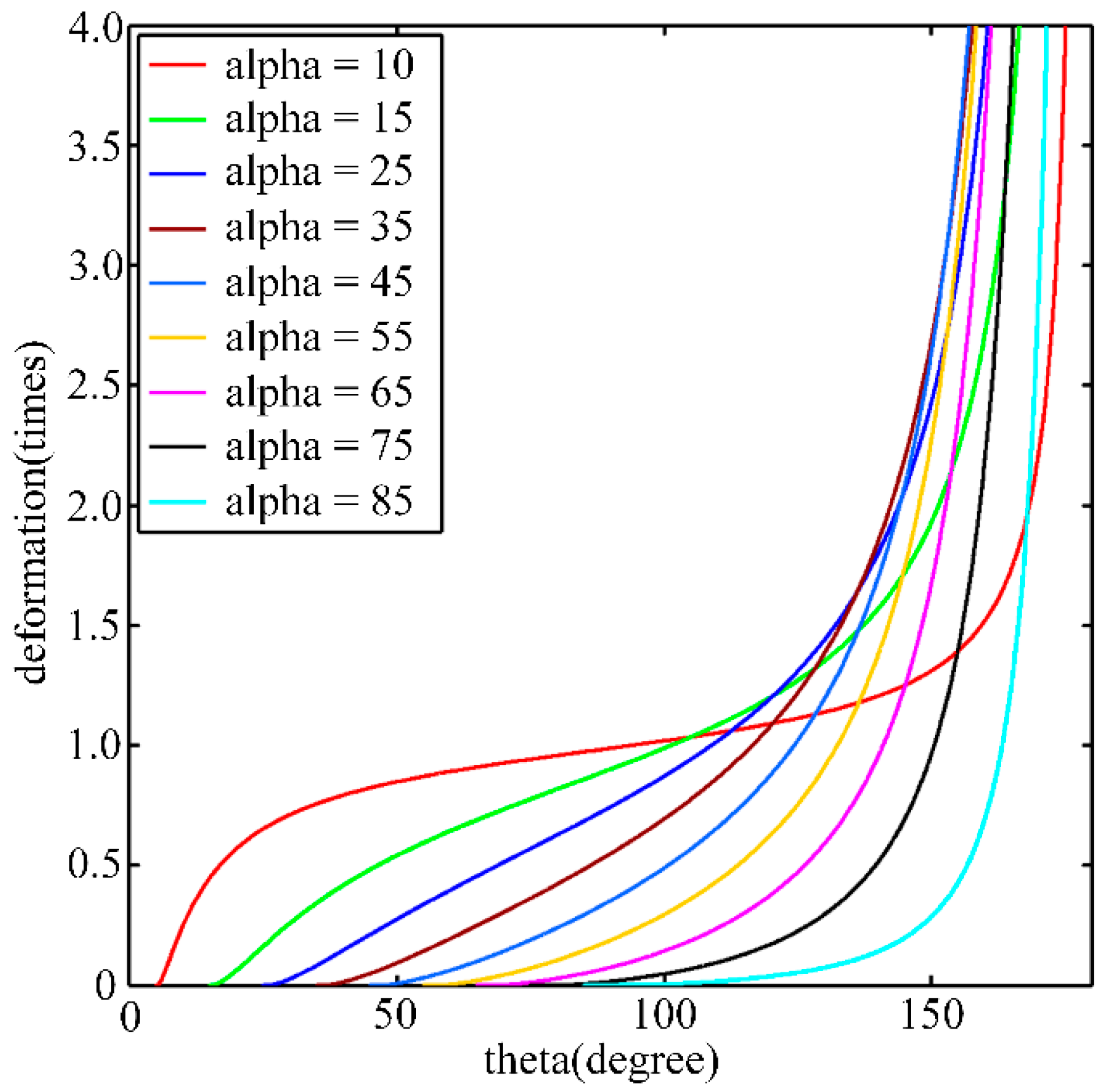
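For calibrated images, rectification is commonly written as a homographic transformation. Each camera is rotated about its own centre, so a pixel of the original image maps to the rectified image through `H = K_new R_new R_old^T K_old^-1`. The sketch below illustrates that textbook relation and is not the authors' implementation. It assumes zero-skew intrinsic matrices and rotation matrices that map world axes to camera axes. The helper names (`intrinsics`, `rectifying_homography`, `warp`, `rot_y`) are hypothetical.

```python
import math

Mat = list[list[float]]
Vec = list[float]


def matmul(a: Mat, b: Mat) -> Mat:
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a: Mat, v: Vec) -> Vec:
    """3x3 matrix times 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(a: Mat) -> Mat:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def intrinsics(fx: float, fy: float, cx: float, cy: float) -> Mat:
    """Zero-skew pinhole calibration matrix K (an assumption of this sketch)."""
    return [[fx, 0.0, cx], [0.0, fy, cy], [0.0, 0.0, 1.0]]


def intrinsics_inv(k: Mat) -> Mat:
    """Closed-form inverse of a zero-skew K."""
    fx, fy, cx, cy = k[0][0], k[1][1], k[0][2], k[1][2]
    return [[1.0 / fx, 0.0, -cx / fx], [0.0, 1.0 / fy, -cy / fy], [0.0, 0.0, 1.0]]


def rectifying_homography(k_old: Mat, r_old: Mat, k_new: Mat, r_new: Mat) -> Mat:
    """H = K_new R_new R_old^T K_old^-1, where each R maps world axes to camera axes."""
    return matmul(matmul(k_new, matmul(r_new, transpose(r_old))), intrinsics_inv(k_old))


def warp(h: Mat, u: float, v: float) -> tuple[float, float]:
    """Map pixel (u, v) through H and dehomogenise."""
    x, y, w = matvec(h, [u, v, 1.0])
    return x / w, y / w


def rot_y(angle: float) -> Mat:
    """Rotation about the camera y-axis (hypothetical test orientation)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]


if __name__ == "__main__":
    k = intrinsics(1000.0, 1000.0, 500.0, 400.0)
    r_old, r_new = rot_y(0.2), rot_y(0.0)
    point = [1.0, 2.0, 10.0]  # world point; camera centre at the origin
    x_old = matvec(k, matvec(r_old, point))
    x_new = matvec(k, matvec(r_new, point))
    h = rectifying_homography(k, r_old, k, r_new)
    print(warp(h, x_old[0] / x_old[2], x_old[1] / x_old[2]))  # warped original pixel
    print(x_new[0] / x_new[2], x_new[1] / x_new[2])           # direct projection, same values
```

The camera centre does not change, so H depends only on the two orientations and calibrations and not on scene depth. The demo shows this by projecting one world point with both orientations.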

2.1.2. Minimizing Perspective Distortion
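The following is an illustrative proxy only. It is an assumption and not the criterion defined in this subsection. A pure-rotation homography has the form `K R_rel K^-1` with `R_rel = R_new R_old^T`, so a rectified orientation that stays closer to the original one generally reshapes the image less. The sketch measures that closeness as the angle of `R_rel`, using `trace(R_rel) = 1 + 2 cos(theta)`, and picks the least-rotated candidate. The names `relative_rotation_angle_deg`, `least_rotation` and `rot_y` are hypothetical.

```python
import math

Mat = list[list[float]]


def relative_rotation_angle_deg(r_old: Mat, r_new: Mat) -> float:
    """Angle of R_rel = R_new R_old^T, from trace(R_rel) = 1 + 2 cos(theta)."""
    # trace(R_new R_old^T) equals the element-wise (Frobenius) inner product of the two matrices
    trace = sum(r_new[i][j] * r_old[i][j] for i in range(3) for j in range(3))
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding noise
    return math.degrees(math.acos(cos_theta))


def least_rotation(r_old: Mat, candidates: list[Mat]) -> Mat:
    """Pick the candidate orientation closest to the original one (illustrative criterion)."""
    return min(candidates, key=lambda r: relative_rotation_angle_deg(r_old, r))


def rot_y(angle: float) -> Mat:
    """Rotation about the y-axis, used only to build test orientations."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]


if __name__ == "__main__":
    identity = rot_y(0.0)
    print(relative_rotation_angle_deg(identity, rot_y(0.2)))  # about 11.46 degrees
    best = least_rotation(identity, [rot_y(0.5), rot_y(0.1), rot_y(-0.3)])
    print(relative_rotation_angle_deg(identity, best))  # about 5.73 degrees
```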

2.2. Rectification Algorithm

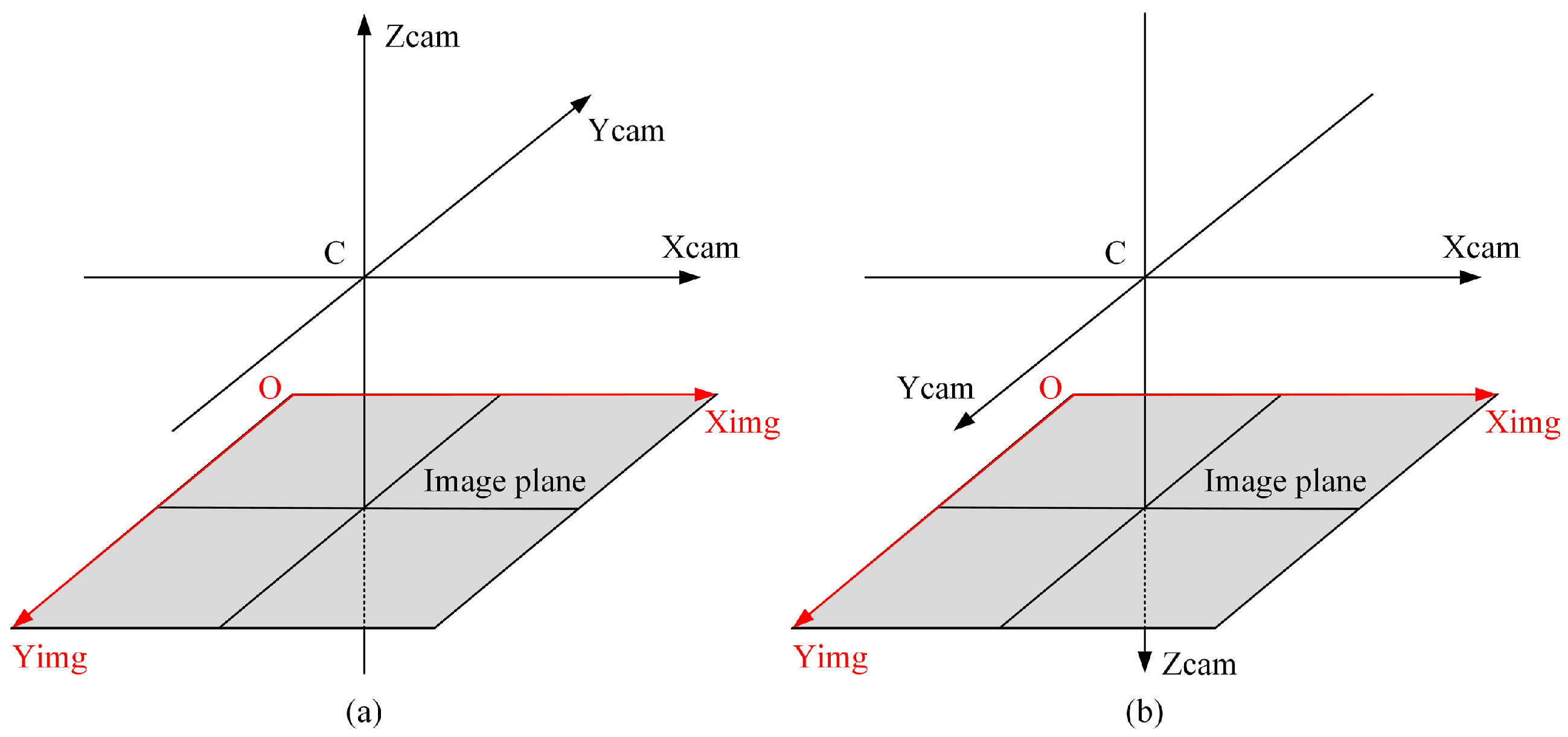

2.2.1. R Matrix of Rectified Image

Basic Rectification

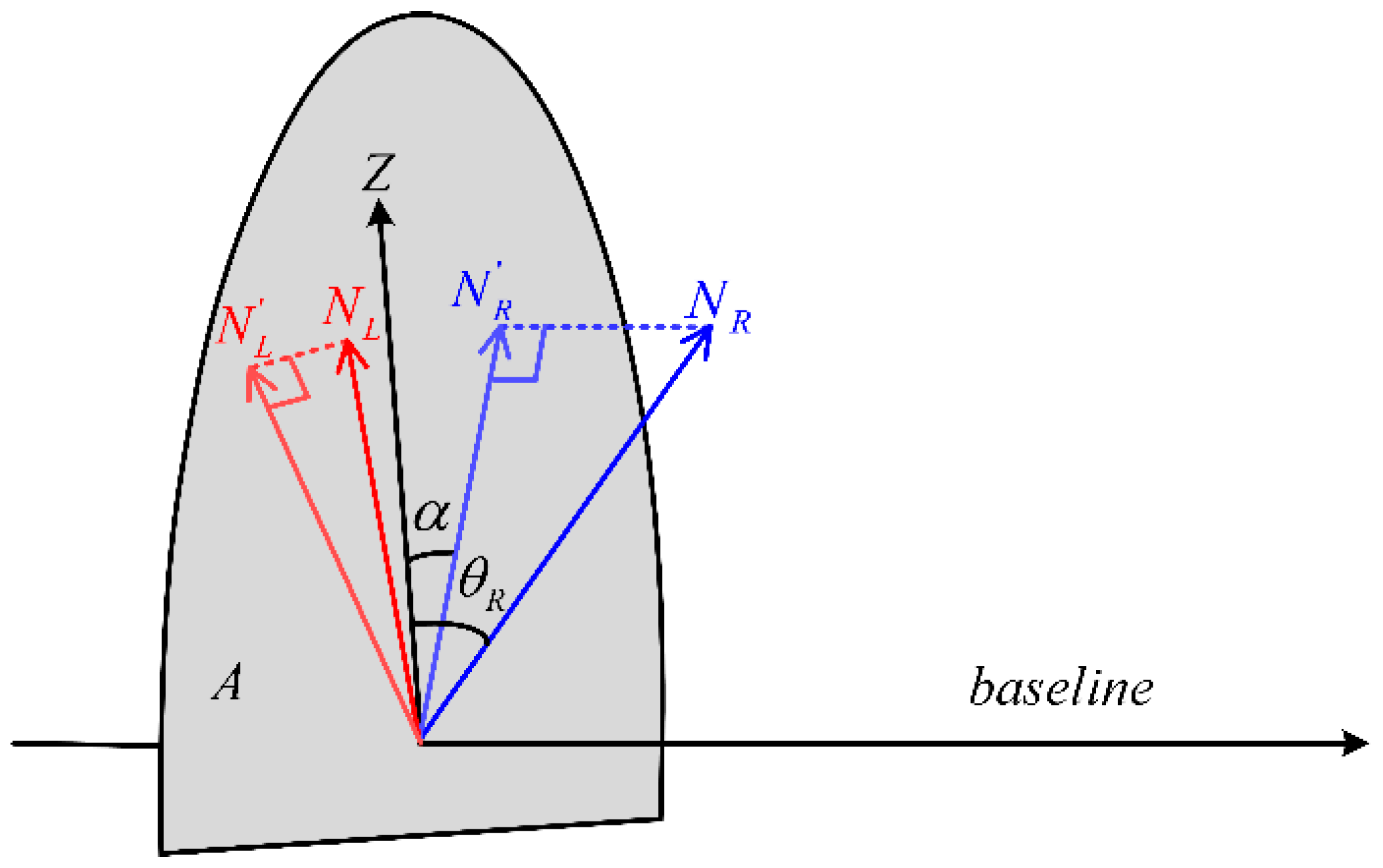
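A basic rectification gives both cameras one common rotation whose x-axis follows the baseline. Together with a shared calibration matrix, this makes the epipolar lines horizontal. The sketch below shows the classic construction and assumes known camera centres `c1` and `c2`. It also assumes that an original optical axis `z_old` (for example, the first camera's axis) fixes the remaining rotational freedom. In this construction, `r1` is the normalised baseline, `r2` is orthogonal to both the baseline and `z_old`, and `r3 = r1 x r2`. The function name and the choice of `c2 - c1` as the positive x direction are assumptions.

```python
import math

Vec = list[float]


def sub(a: Vec, b: Vec) -> Vec:
    return [a[i] - b[i] for i in range(3)]


def dot(a: Vec, b: Vec) -> float:
    return sum(a[i] * b[i] for i in range(3))


def cross(a: Vec, b: Vec) -> Vec:
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    if n < 1e-12:
        raise ValueError("degenerate direction (optical axis parallel to the baseline?)")
    return [x / n for x in a]


def basic_rectified_rotation(c1: Vec, c2: Vec, z_old: Vec) -> list[Vec]:
    """Rows r1, r2, r3 of a rotation shared by both rectified cameras (world -> camera)."""
    r1 = normalize(sub(c2, c1))       # new x-axis: along the baseline, towards camera 2
    r2 = normalize(cross(z_old, r1))  # new y-axis: orthogonal to the baseline and to z_old
    r3 = cross(r1, r2)                # new optical axis: direction of z_old with its baseline component removed
    return [r1, r2, r3]


if __name__ == "__main__":
    rows = basic_rectified_rotation([0.0, 0.0, 0.0], [1.0, 0.1, 0.05], [0.0, 0.3, 0.95])
    for row in rows:
        print([round(x, 4) for x in row])
```

Because `r3` is the original axis with only its baseline component removed, this choice keeps the rectified view as close to the original viewing direction as the baseline constraint allows.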

Horizontal or Vertical Rectification

- ;

- must be orthogonal to the baseline;

- should be consistent with the two direction vectors of the original images’ optical axes, i.e., .
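The conditions above can be read as constraints on the rows `r1`, `r2` and `r3` of the rectified rotation. This reading is an interpretation assumed here, because the conditions do not name the axes. Under it, the x-axis `r1` follows the baseline and the optical axis `r3` is orthogonal to the baseline. In addition, `r3` points to the same side as the two original optical axes `z1` and `z2`. Within those constraints, the sketch chooses `r3` as the direction closest to a target. For horizontal rectification the target is the nadir, which keeps the image plane as horizontal as possible (suited to roofs). For vertical rectification the target is the horizontal part of the mean optical axis, which keeps the image plane as vertical as possible (suited to façades). The sketch assumes a Z-up world frame, and `constrained_rotation`, `horizontal_rectification` and `vertical_rectification` are hypothetical names.

```python
import math

Vec = list[float]

# Assumed world frame: Z points up, so the nadir (straight down) is -Z.
NADIR: Vec = [0.0, 0.0, -1.0]


def sub(a: Vec, b: Vec) -> Vec:
    return [a[i] - b[i] for i in range(3)]


def dot(a: Vec, b: Vec) -> float:
    return sum(a[i] * b[i] for i in range(3))


def cross(a: Vec, b: Vec) -> Vec:
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    if n < 1e-12:
        raise ValueError("degenerate direction (target parallel to the baseline?)")
    return [x / n for x in a]


def reject(v: Vec, unit_axis: Vec) -> Vec:
    """Component of v orthogonal to the unit vector unit_axis."""
    d = dot(v, unit_axis)
    return [v[i] - d * unit_axis[i] for i in range(3)]


def constrained_rotation(c1: Vec, c2: Vec, z1: Vec, z2: Vec, target: Vec) -> list[Vec]:
    """Rows r1, r2, r3 of a rectified rotation whose optical axis is closest to target."""
    r1 = normalize(sub(c2, c1))          # x-axis along the baseline
    r3 = normalize(reject(target, r1))   # optical axis orthogonal to the baseline
    if dot(r3, z1) + dot(r3, z2) < 0.0:  # keep r3 on the side of the original optical axes
        r3 = [-x for x in r3]
    r2 = cross(r3, r1)                   # right-handed frame: r1 x r2 == r3
    return [r1, r2, r3]


def horizontal_rectification(c1: Vec, c2: Vec, z1: Vec, z2: Vec) -> list[Vec]:
    """Rectified image plane as close to horizontal as the baseline allows."""
    return constrained_rotation(c1, c2, z1, z2, NADIR)


def vertical_rectification(c1: Vec, c2: Vec, z1: Vec, z2: Vec) -> list[Vec]:
    """Rectified image plane as close to vertical as the baseline allows."""
    mean_axis = [z1[i] + z2[i] for i in range(3)]
    return constrained_rotation(c1, c2, z1, z2, [mean_axis[0], mean_axis[1], 0.0])


if __name__ == "__main__":
    c1, c2 = [0.0, 0.0, 0.0], [1.0, 0.0, 0.0]
    z1, z2 = [0.0, 0.5, -0.866], [0.1, 0.5, -0.86]   # two oblique, downward-looking axes
    print(horizontal_rectification(c1, c2, z1, z2))  # optical axis points straight down
    print(vertical_rectification(c1, c2, z1, z2))    # optical axis horizontal, y-axis points down
```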

General Rectification

2.2.2. Camera Matrix of Rectified Image

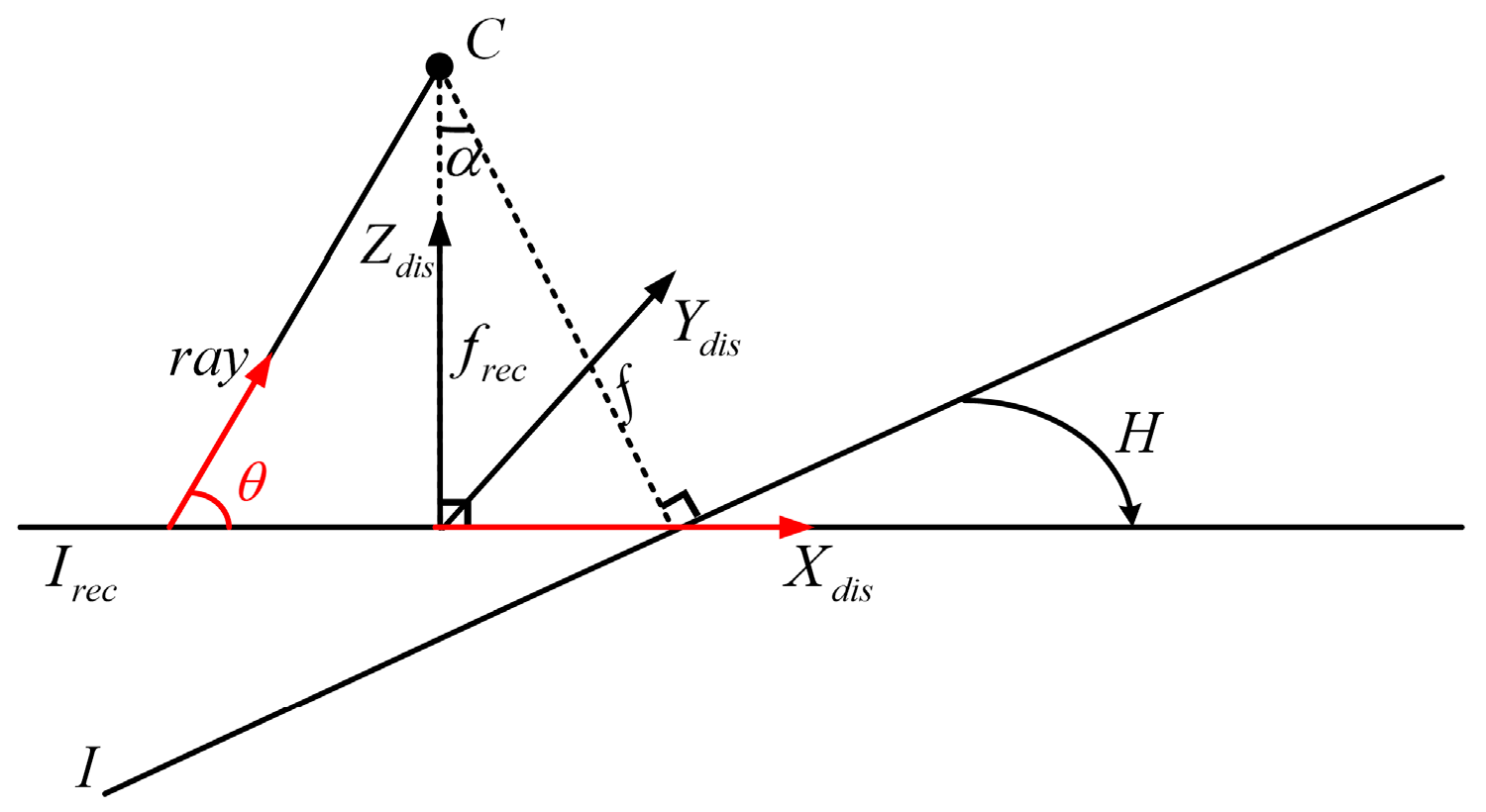
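Once the common rotation `R` is fixed, each rectified camera can be written in the usual form `P = K [R | -R C]`, using its original centre `C` and a shared calibration `K`. The sketch below works under those assumptions, with hypothetical helper names and a zero-skew `K` in the demo. When the second centre is displaced along `r1`, any world point projects to the same image row in both rectified views. The column difference then equals `f * b / Z`, where `b` is the baseline length and `Z` the depth in the rectified frame.

```python
import math

Mat = list[list[float]]
Vec = list[float]


def camera_matrix(k: Mat, r: Mat, c: Vec) -> Mat:
    """3x4 projection matrix P = K [R | -R C] for a camera centred at C."""
    t = [-sum(r[i][j] * c[j] for j in range(3)) for i in range(3)]  # t = -R C
    rt = [r[i] + [t[i]] for i in range(3)]                          # [R | t], 3x4
    return [[sum(k[i][m] * rt[m][j] for m in range(3)) for j in range(4)] for i in range(3)]


def project(p: Mat, x: Vec) -> tuple[float, float]:
    """Project world point X with P and dehomogenise to pixel (u, v)."""
    xh = x + [1.0]
    u, v, w = (sum(p[i][j] * xh[j] for j in range(4)) for i in range(3))
    return u / w, v / w


if __name__ == "__main__":
    k = [[1000.0, 0.0, 500.0], [0.0, 1000.0, 400.0], [0.0, 0.0, 1.0]]
    s = 1.0 / math.sqrt(2.0)
    r = [[s, s, 0.0], [-s, s, 0.0], [0.0, 0.0, 1.0]]   # shared rectified rotation; r1 follows the baseline
    c1, c2 = [0.0, 0.0, 0.0], [2.0 * s, 2.0 * s, 0.0]  # centres 2 units apart along r1
    p1, p2 = camera_matrix(k, r, c1), camera_matrix(k, r, c2)
    (u1, v1), (u2, v2) = project(p1, [3.0, -1.0, 20.0]), project(p2, [3.0, -1.0, 20.0])
    print(v1 - v2)  # same row in both views (0 up to rounding)
    print(u1 - u2)  # disparity f * b / Z = 1000 * 2 / 20, i.e. about 100
```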

2.3. Distortion Constraints

2.3.1. Distortion Coordinate Frame

2.3.2. Characteristics of Distortion

2.3.3. Constraint Method
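As an illustrative way to quantify the distortion a rectifying homography introduces (not necessarily the measure or constraint defined in this section), two simple checks can be applied. The first is the angle between the warped lines that join the midpoints of opposite image edges, which is ideally 90°. The second is the change in their length ratio relative to the original width/height, which is ideally 1. The sketch assumes a 3×3 homography `H` and an image size in pixels, and `distortion_checks` is a hypothetical name.

```python
import math

Mat = list[list[float]]


def warp(h: Mat, u: float, v: float) -> tuple[float, float]:
    """Map pixel (u, v) through H and dehomogenise."""
    x, y, w = (h[i][0] * u + h[i][1] * v + h[i][2] for i in range(3))
    return x / w, y / w


def distortion_checks(h: Mat, width: float, height: float) -> tuple[float, float]:
    """Return (orthogonality in degrees, aspect-ratio change) of an image warped by H.

    The bisectors joining the midpoints of opposite image edges are warped by H.
    An undistorted result keeps them at 90 degrees and keeps their length ratio
    equal to width / height, i.e. an aspect-ratio change of 1.
    """
    top = warp(h, width / 2, 0.0)
    bottom = warp(h, width / 2, height)
    left = warp(h, 0.0, height / 2)
    right = warp(h, width, height / 2)
    hx, hy = right[0] - left[0], right[1] - left[1]   # warped horizontal bisector
    vx, vy = bottom[0] - top[0], bottom[1] - top[1]   # warped vertical bisector
    len_h, len_v = math.hypot(hx, hy), math.hypot(vx, vy)
    angle = math.degrees(math.acos((hx * vx + hy * vy) / (len_h * len_v)))
    aspect_change = (len_h / len_v) / (width / height)
    return angle, aspect_change


if __name__ == "__main__":
    shear = [[1.0, 0.2, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(distortion_checks(shear, 1000.0, 800.0))  # angle below 90 degrees, aspect change below 1
```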

3. Experimental Evaluations

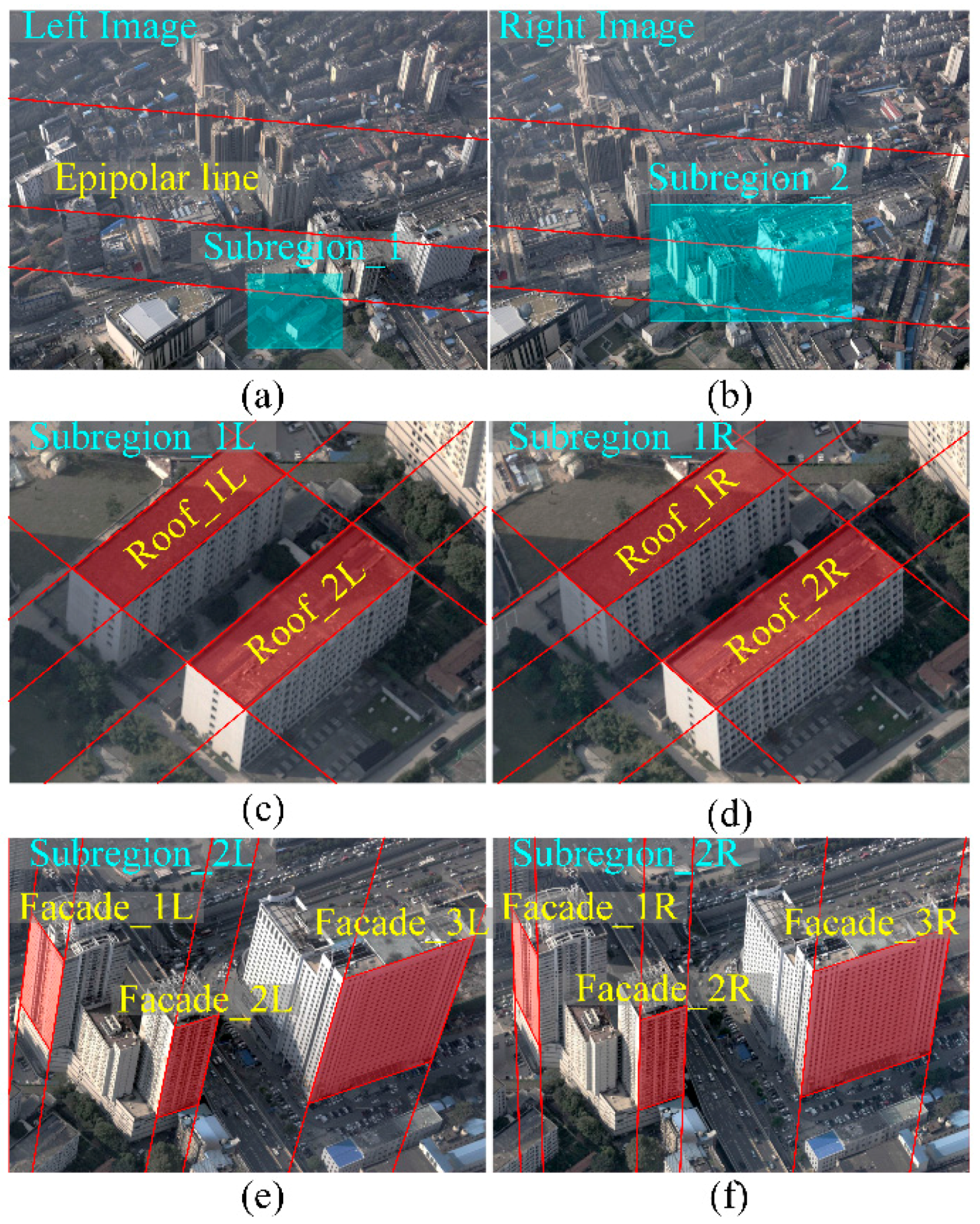

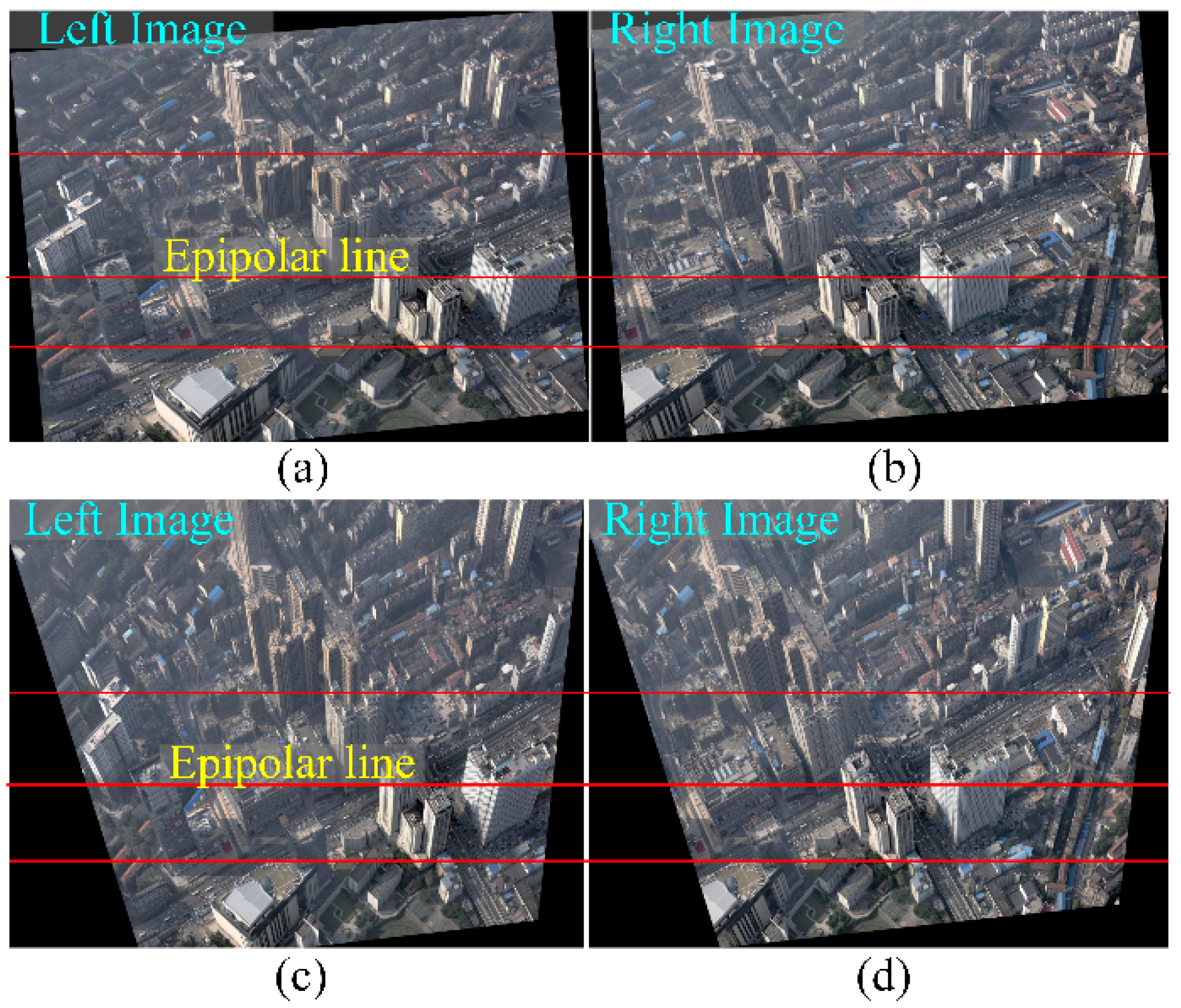

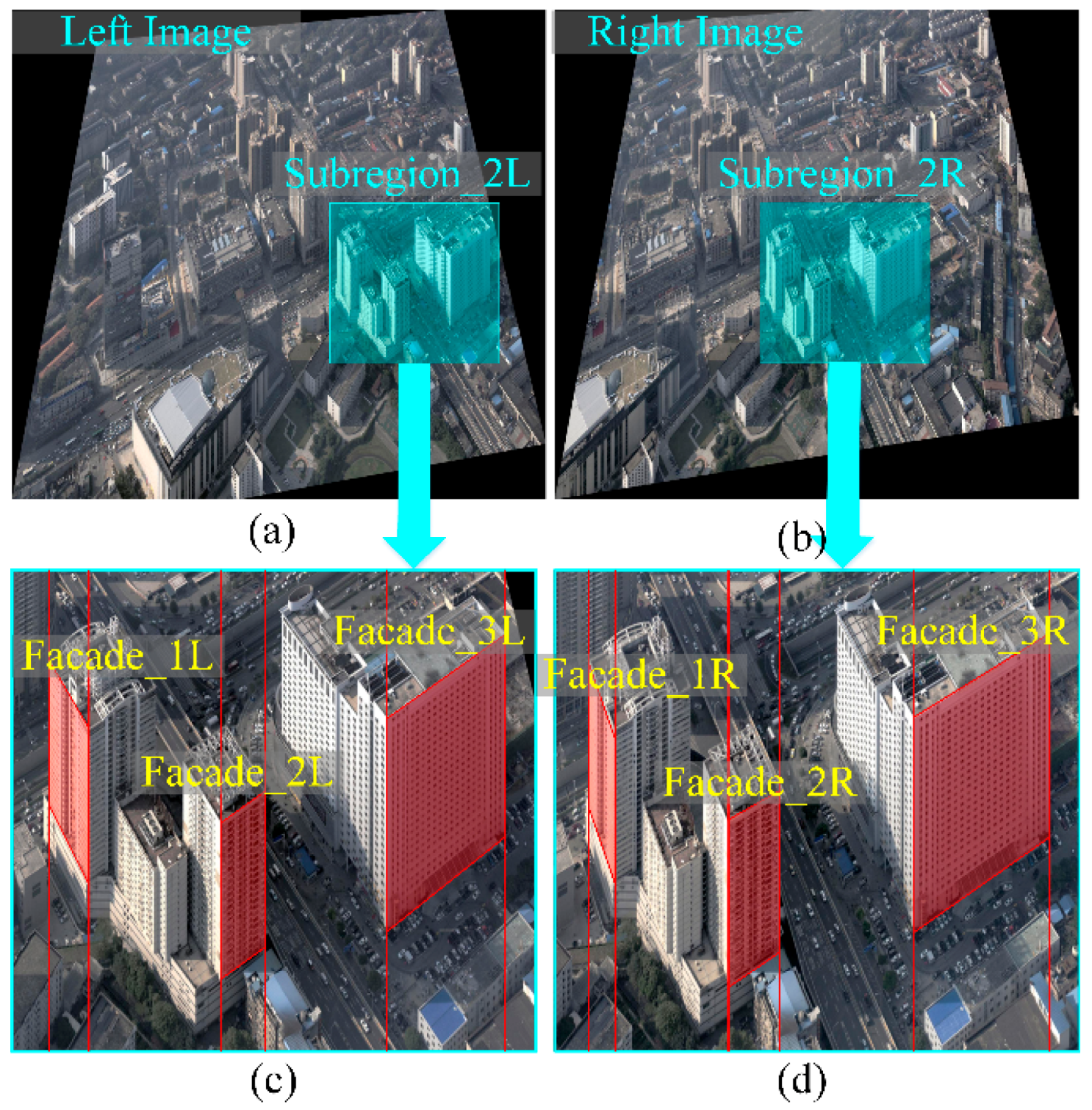

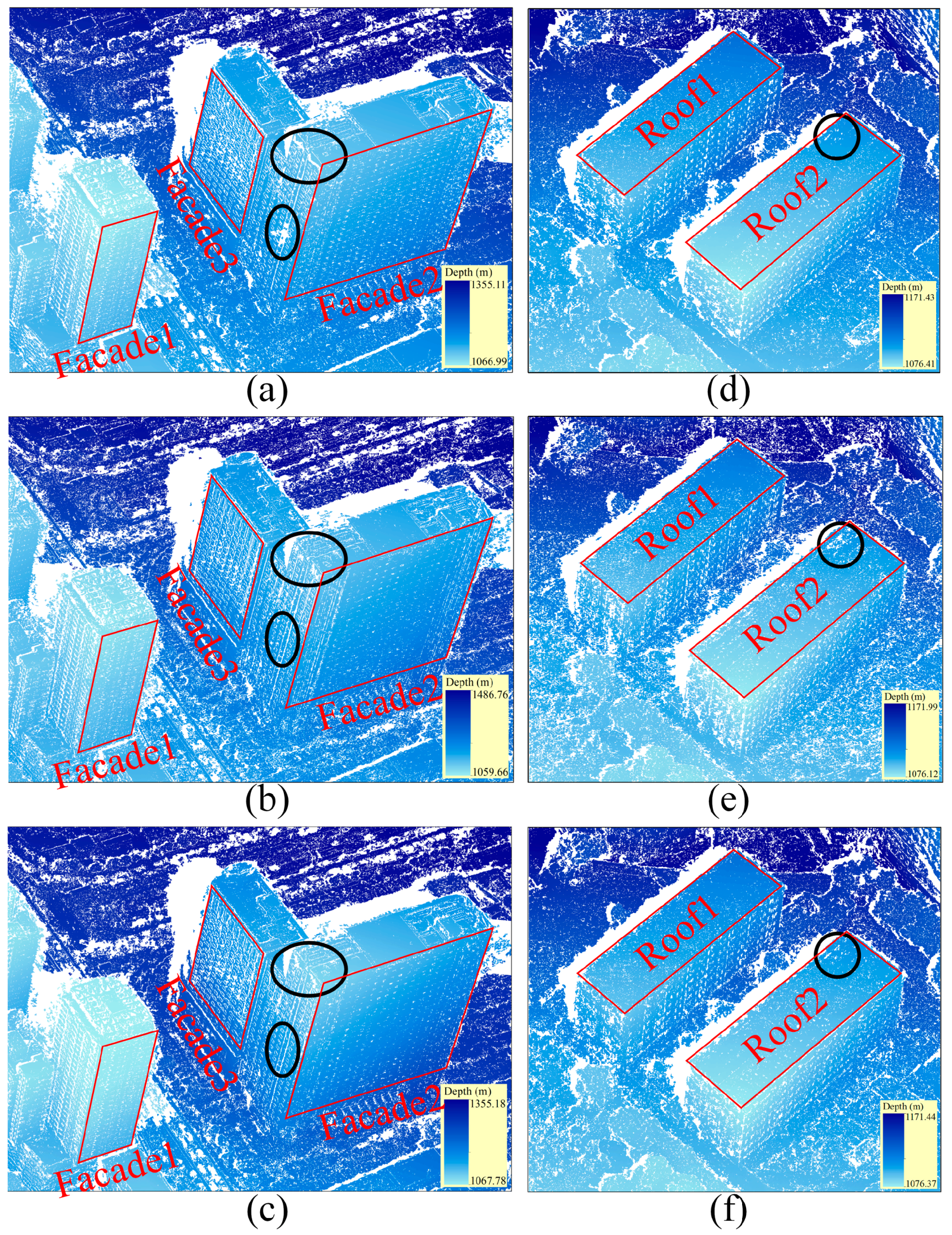

3.1. Performance of Rectification

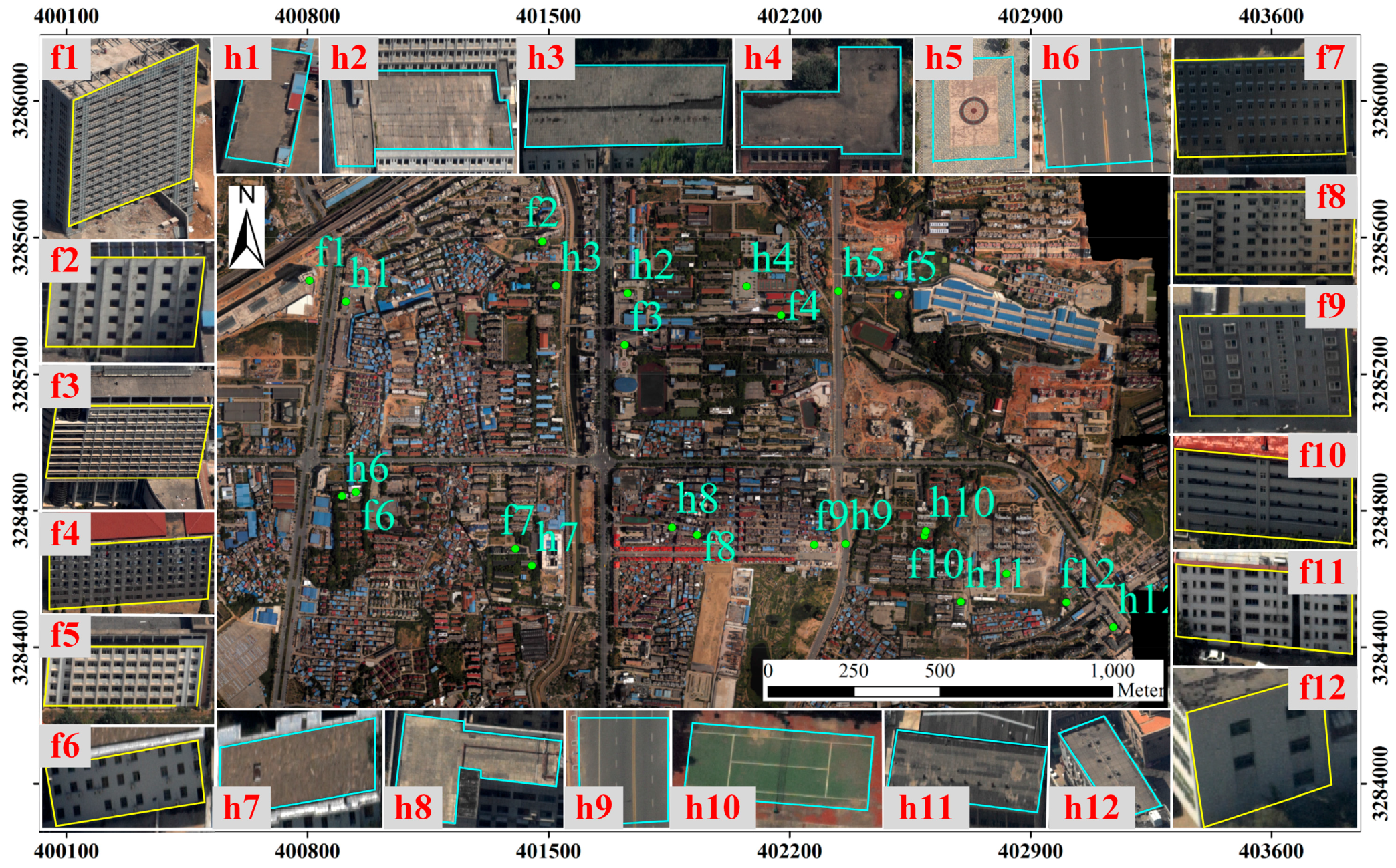

3.2. Quantitative Evaluation of the Matching Results

3.3. Robustness Evaluation

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Category | Object | Horizontal Rectification Integrity (%) | Horizontal Rectification Precision, RMSE (cm) | Commonly Used Methods Integrity (%) | Commonly Used Methods Precision, RMSE (cm) |
|---|---|---|---|---|---|
| Horizontal objects | Roof 1 | 99.15 | 10.1 | 98.89 | 15.2 |
| Horizontal objects | Roof 2 | 98.86 | 18.1 | 98.55 | 21.5 |

| Category | Object | Commonly Used Methods Integrity (%) | Commonly Used Methods Precision, RMSE (cm) | Vertical Rectification Integrity (%) | Vertical Rectification Precision, RMSE (cm) |
|---|---|---|---|---|---|
| Vertical façades | Façade 1 | 92.12 | 53.3 | 92.47 | 52.5 |
| Vertical façades | Façade 2 | 97.45 | 24.7 | 98.52 | 24.5 |
| Vertical façades | Façade 3 | 79.28 | 27.1 | 82.40 | 25.6 |

| Category | Object | Horizontal Rectification Integrity (%) | Horizontal Rectification Precision, RMSE (cm) | Vertical Rectification Integrity (%) | Vertical Rectification Precision, RMSE (cm) |
|---|---|---|---|---|---|
| Horizontal objects | Roof 1 | 99.15 | 10.1 | 97.94 | 22.4 |
| Horizontal objects | Roof 2 | 98.86 | 18.1 | 97.31 | 27.2 |
| Vertical façades | Façade 1 | 91.44 | 56.6 | 92.47 | 52.5 |
| Vertical façades | Façade 2 | 97.18 | 30.4 | 98.52 | 24.5 |
| Vertical façades | Façade 3 | 78.63 | 27.6 | 82.40 | 25.6 |

| Object | Commonly Used Methods Precision, RMSE (cm) | Horizontal Rectification Precision, RMSE (cm) | Improvement (%) |
|---|---|---|---|
| h1 | 20.02 | 15.30 | 23.56 |
| h2 | 15.81 | 9.57 | 39.46 |
| h3 | 14.98 | 8.14 | 45.69 |
| h4 | 10.08 | 6.55 | 35.00 |
| h5 | 39.75 | 25.59 | 35.62 |
| h6 | 8.92 | 6.34 | 28.91 |
| h7 | 20.50 | 13.78 | 32.80 |
| h8 | 13.82 | 7.64 | 44.75 |
| h9 | 37.63 | 27.41 | 27.16 |
| h10 | 33.30 | 20.93 | 37.15 |
| h11 | 17.99 | 11.26 | 37.41 |
| h12 | 28.20 | 24.95 | 11.54 |

| Object | Commonly Used Methods Precision, RMSE (cm) | Vertical Rectification Precision, RMSE (cm) | Improvement (%) |
|---|---|---|---|
| f1 | 17.18 | 15.05 | 12.42 |
| f2 | 22.18 | 18.25 | 17.72 |
| f3 | 32.88 | 25.12 | 23.58 |
| f4 | 44.15 | 36.67 | 16.94 |
| f5 | 17.02 | 8.95 | 47.39 |
| f6 | 30.72 | 22.92 | 25.38 |
| f7 | 23.71 | 14.46 | 39.02 |
| f8 | 39.87 | 35.09 | 12.00 |
| f9 | 27.83 | 21.18 | 23.90 |
| f10 | 41.20 | 32.79 | 20.40 |
| f11 | 44.21 | 32.42 | 26.68 |
| f12 | 50.84 | 47.12 | 7.31 |
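The Improvement (%) columns for the roof objects h1 to h12 and the façade objects f1 to f12 appear to be the relative RMSE reduction, `(RMSE_common - RMSE_rectified) / RMSE_common * 100`. This is inferred from the numbers rather than stated as a definition. Recomputing from the two-decimal RMSE values reproduces every tabulated entry to within a few hundredths of a percentage point, and the small residuals are presumably due to rounding of the underlying values. The dictionary names and `improvement_percent` are hypothetical.

```python
# (commonly used RMSE cm, rectified RMSE cm, tabulated improvement %) copied from the tables above
ROOF_ROWS = {
    "h1": (20.02, 15.30, 23.56),
    "h2": (15.81, 9.57, 39.46),
    "h3": (14.98, 8.14, 45.69),
    "h4": (10.08, 6.55, 35.00),
    "h5": (39.75, 25.59, 35.62),
    "h6": (8.92, 6.34, 28.91),
    "h7": (20.50, 13.78, 32.80),
    "h8": (13.82, 7.64, 44.75),
    "h9": (37.63, 27.41, 27.16),
    "h10": (33.30, 20.93, 37.15),
    "h11": (17.99, 11.26, 37.41),
    "h12": (28.20, 24.95, 11.54),
}
FACADE_ROWS = {
    "f1": (17.18, 15.05, 12.42),
    "f2": (22.18, 18.25, 17.72),
    "f3": (32.88, 25.12, 23.58),
    "f4": (44.15, 36.67, 16.94),
    "f5": (17.02, 8.95, 47.39),
    "f6": (30.72, 22.92, 25.38),
    "f7": (23.71, 14.46, 39.02),
    "f8": (39.87, 35.09, 12.00),
    "f9": (27.83, 21.18, 23.90),
    "f10": (41.20, 32.79, 20.40),
    "f11": (44.21, 32.42, 26.68),
    "f12": (50.84, 47.12, 7.31),
}


def improvement_percent(rmse_common_cm: float, rmse_rectified_cm: float) -> float:
    """Relative RMSE reduction (in %) of the rectified result over the commonly used method."""
    return (rmse_common_cm - rmse_rectified_cm) / rmse_common_cm * 100.0


if __name__ == "__main__":
    for name, (common, rectified, tabulated) in {**ROOF_ROWS, **FACADE_ROWS}.items():
        print(f"{name}: recomputed {improvement_percent(common, rectified):.2f}%, tabulated {tabulated:.2f}%")
```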

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, Jianchen; Guo, Bingxuan; Jiang, Wanshou; Gong, Weishu; Xiao, Xiongwu. Epipolar Rectification with Minimum Perspective Distortion for Oblique Images. Sensors 2016, 16(11), 1870. https://doi.org/10.3390/s16111870