An Integrated Wireless Wearable Sensor System for Posture Recognition and Indoor Localization

Abstract

1. Introduction

2. System Design

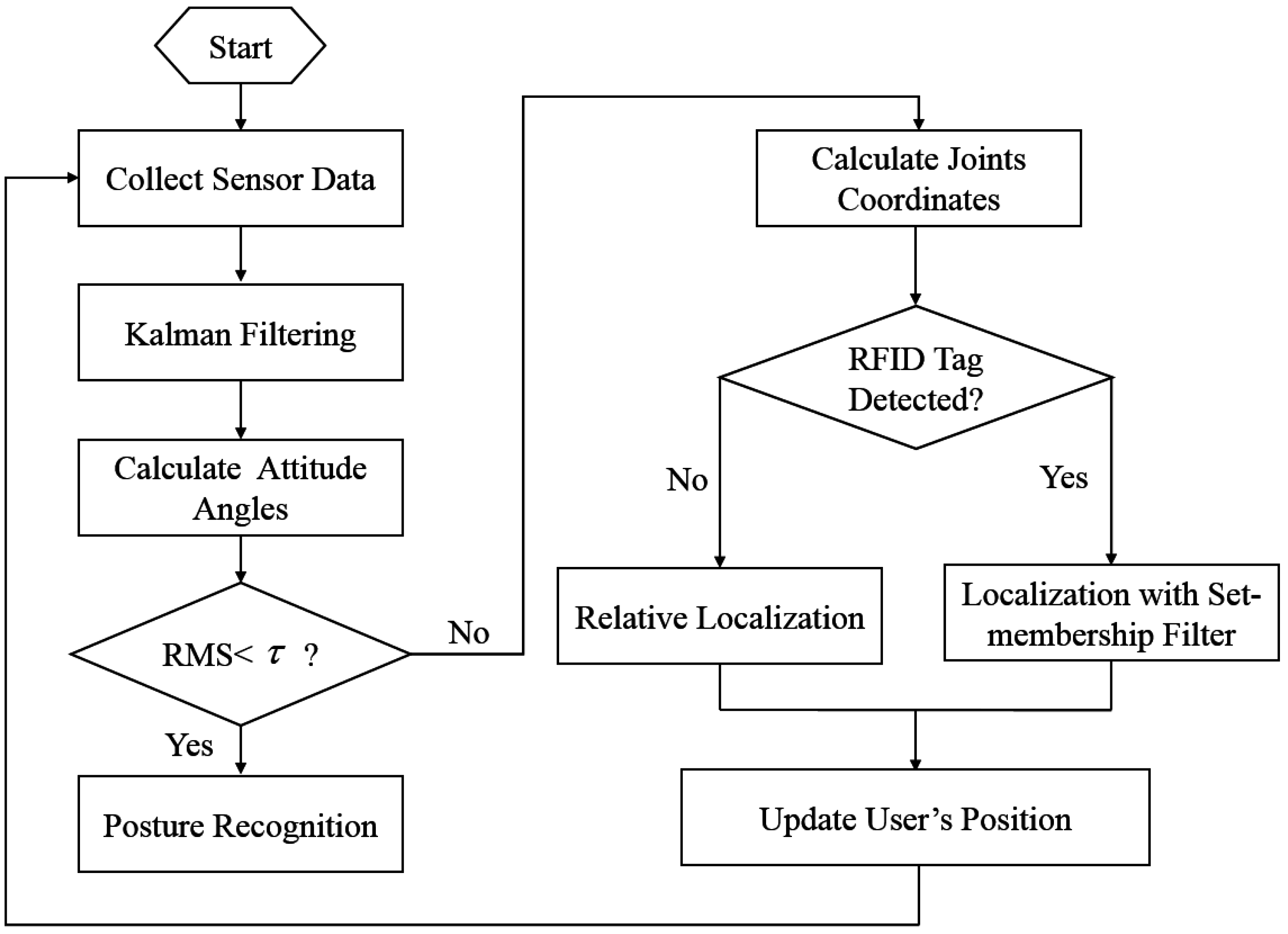

2.1. System Structure and Working Principle

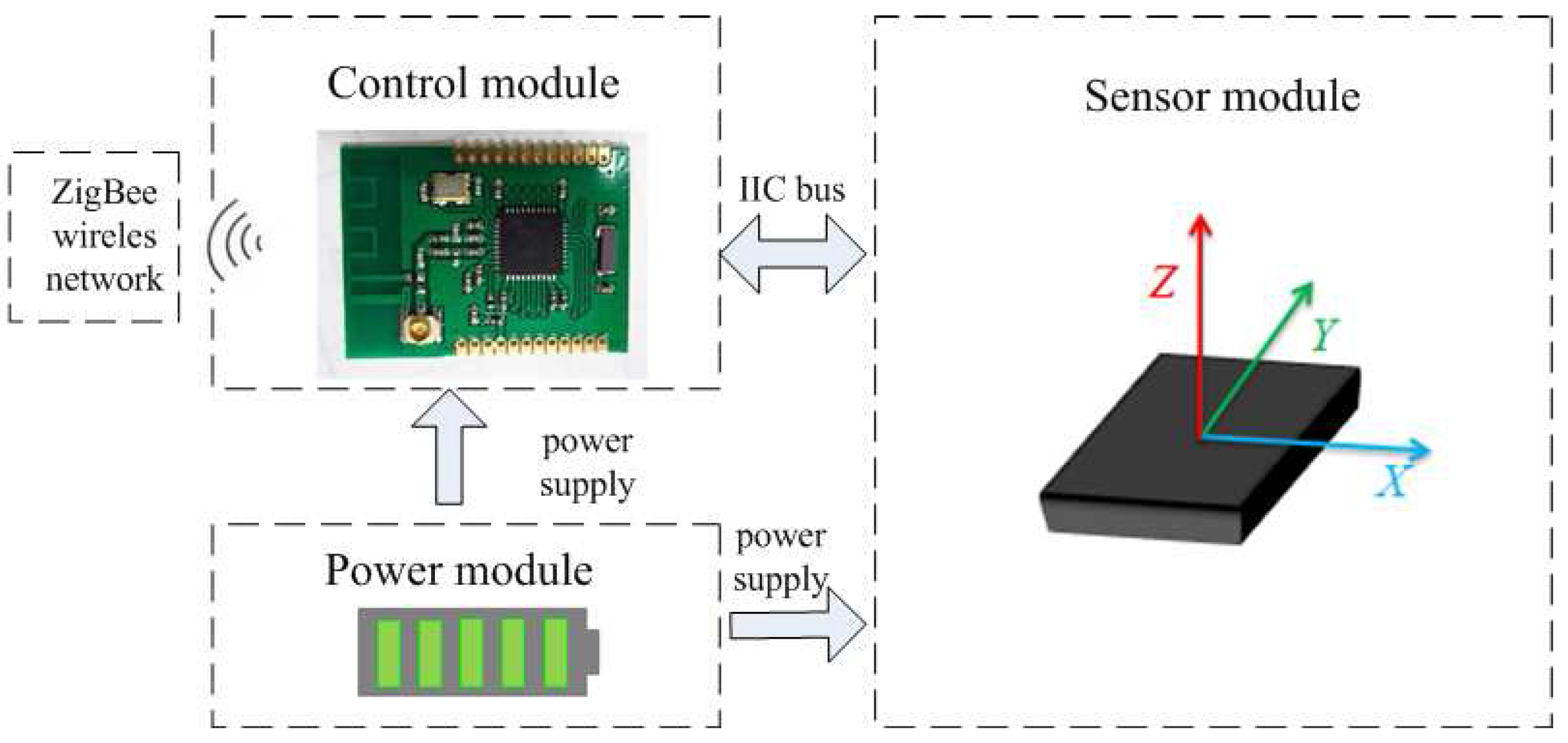

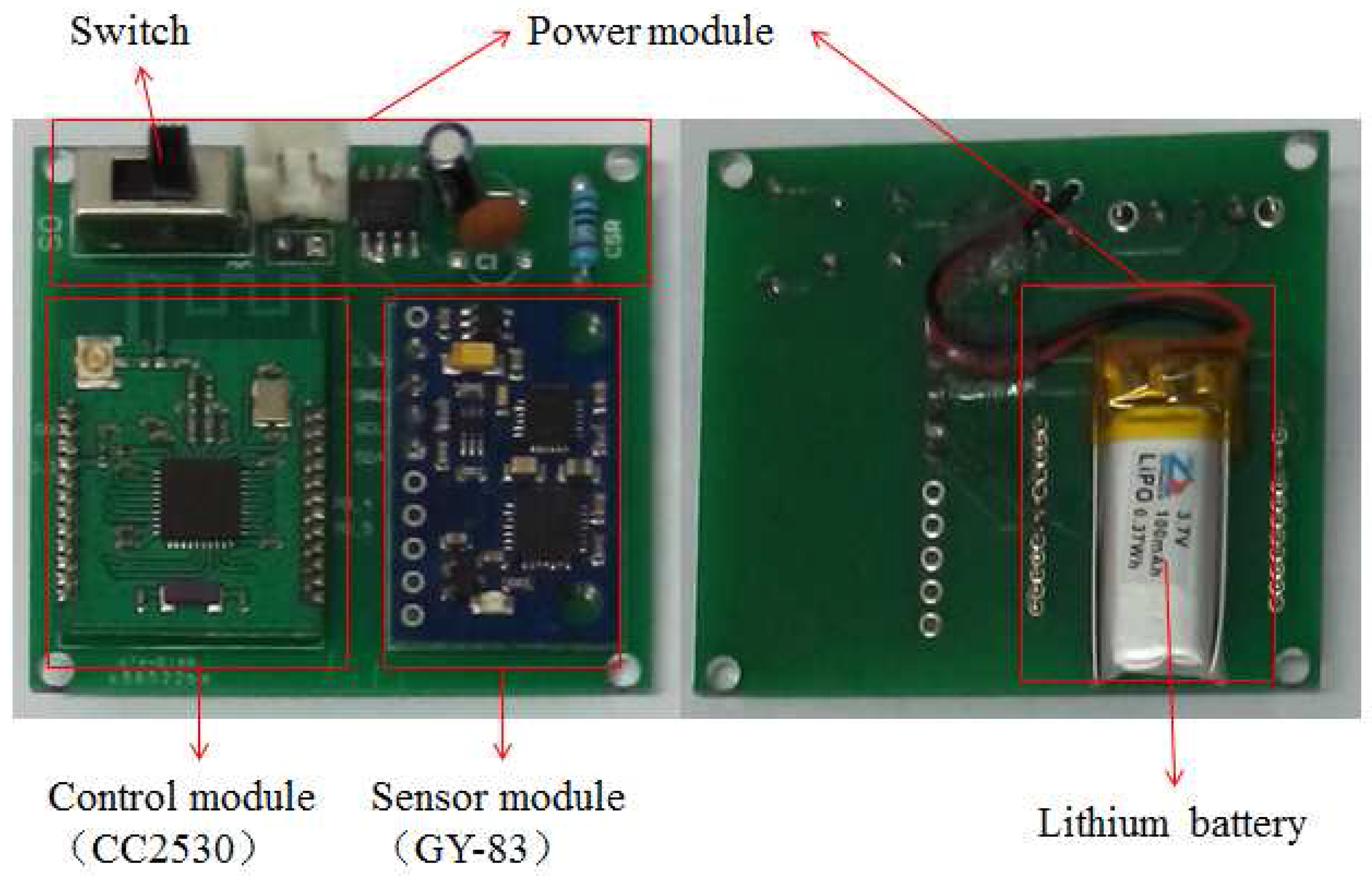

2.2. Sensor Node Design

3. Description of the Related Algorithms

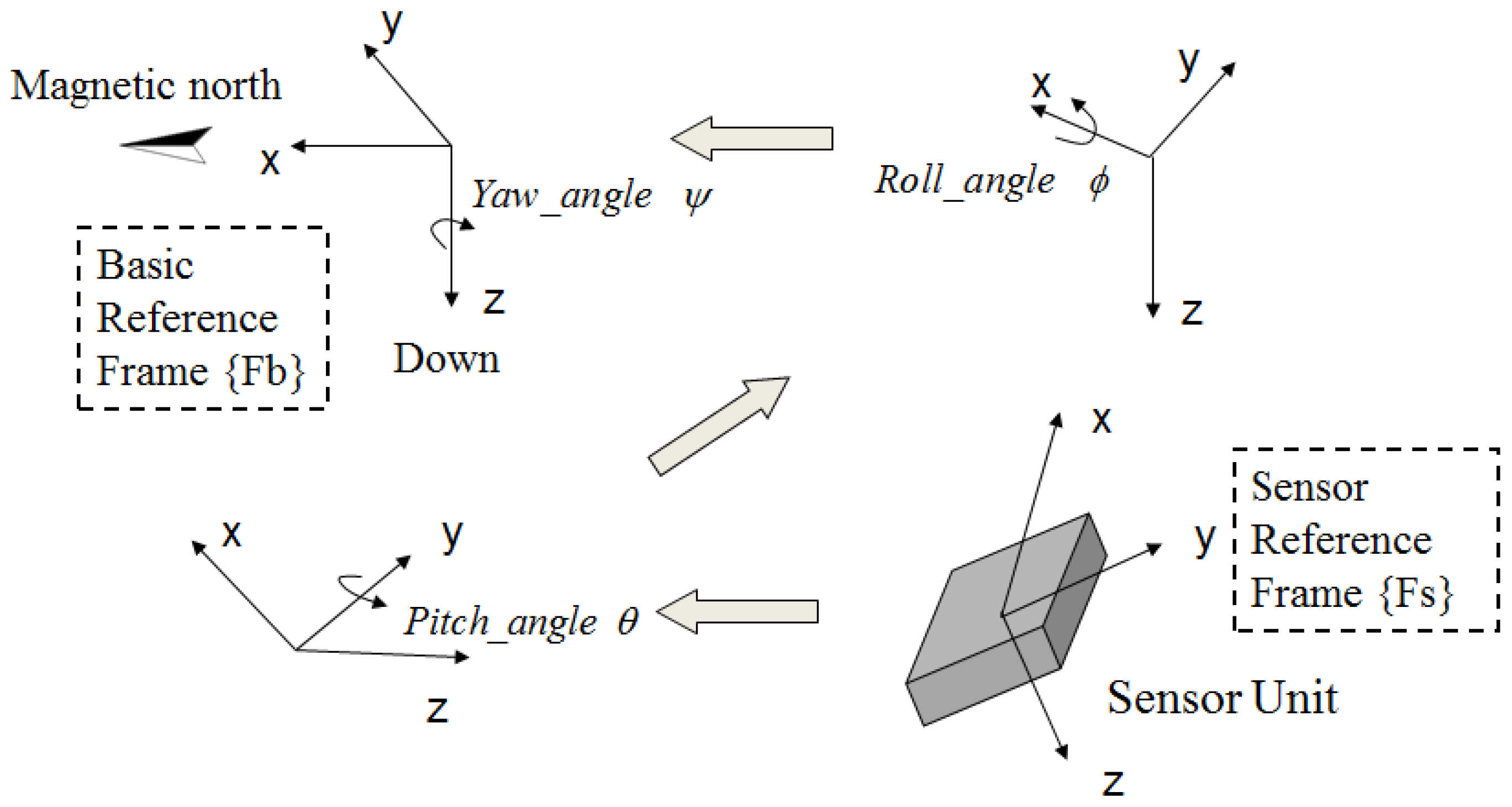

3.1. Calculation of the Attitude Angle for a Single Sensor Node
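The attitude equations for a single node are not reproduced in this text. The sketch below is a hedged illustration of one common textbook approach, not necessarily the authors' method. It takes roll and pitch from the direction of gravity seen by a static accelerometer and computes a tilt-compensated yaw from the magnetometer. The axis convention (x forward, y right, z down, with the accelerometer reading +g along z when the node lies flat), the assumption of a calibrated magnetometer, and the function name `attitude_from_acc_mag` are all assumptions.

```python
import math

def attitude_from_acc_mag(acc, mag):
    """Return (roll, pitch, yaw) in radians from one accelerometer sample and
    one magnetometer sample, both expressed in the sensor frame.

    Assumed convention (not from the paper): x forward, y right, z down,
    accelerometer reads +g along z when flat, magnetometer already calibrated.
    """
    ax, ay, az = acc
    mx, my, mz = mag
    # Roll and pitch from the gravity direction; only valid when the node is
    # quasi-static, so the accelerometer measures gravity alone.
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    # Tilt compensation: project the magnetic vector onto the horizontal plane.
    bx = (mx * math.cos(pitch)
          + my * math.sin(pitch) * math.sin(roll)
          + mz * math.sin(pitch) * math.cos(roll))
    by = mz * math.sin(roll) - my * math.cos(roll)
    # Heading measured from magnetic north, positive clockwise seen from above.
    yaw = math.atan2(by, bx)
    return roll, pitch, yaw
```

In practice, accelerometer-only tilt is corrupted whenever the limb accelerates, which is why such estimates are usually fused with gyroscope data.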

3.2. Posture Recognition

3.3. Localization Algorithm Based on Inertial Navigation

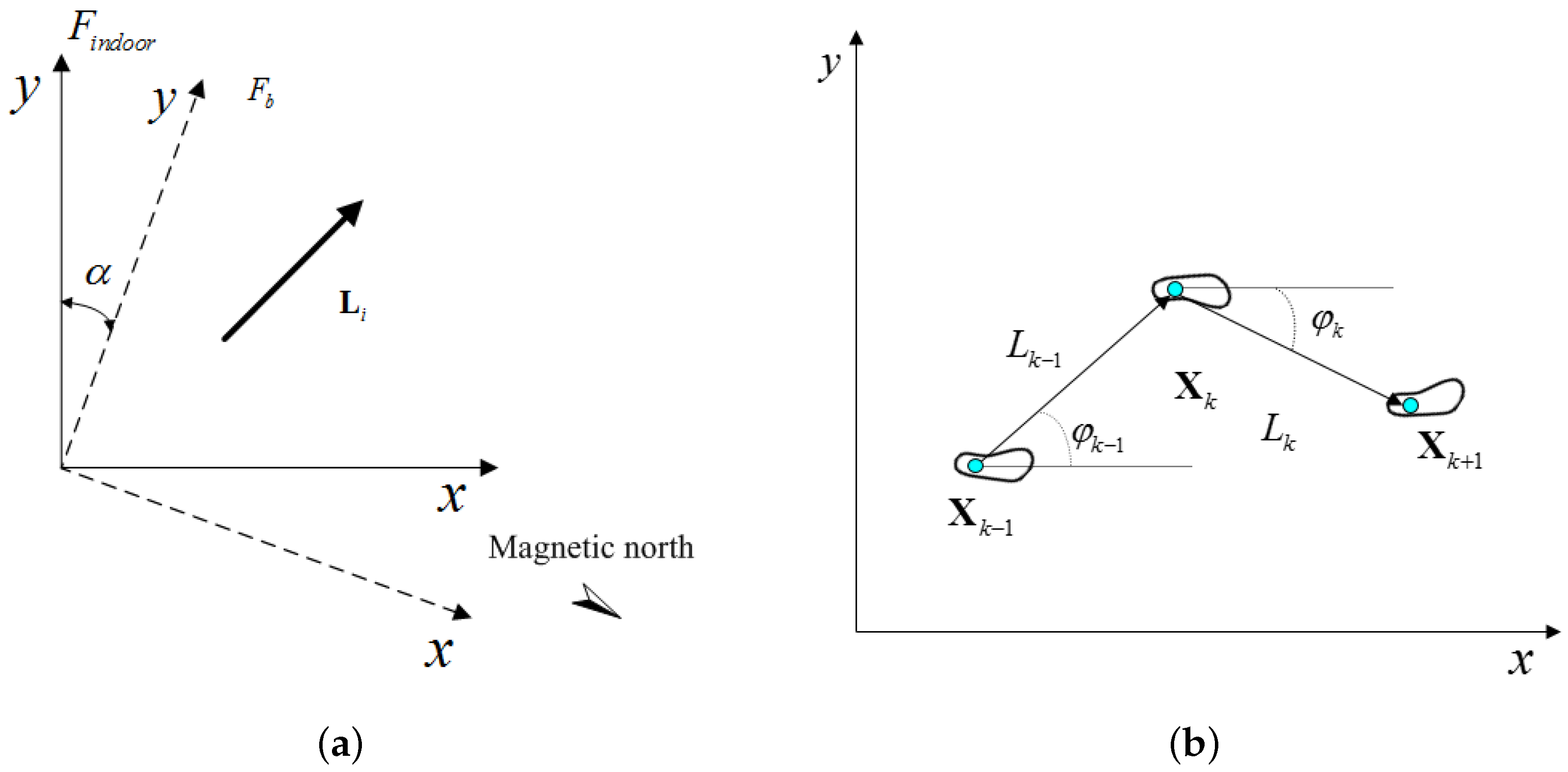

3.3.1. Calculation of Joint Coordinates

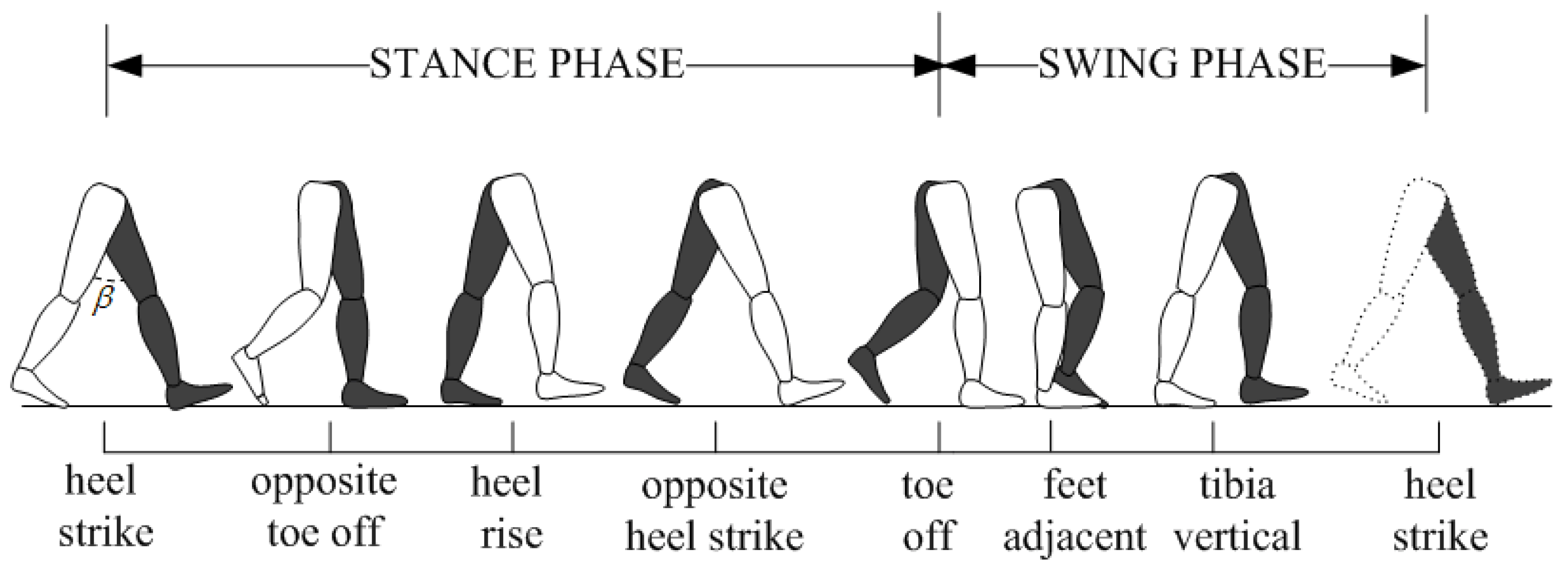

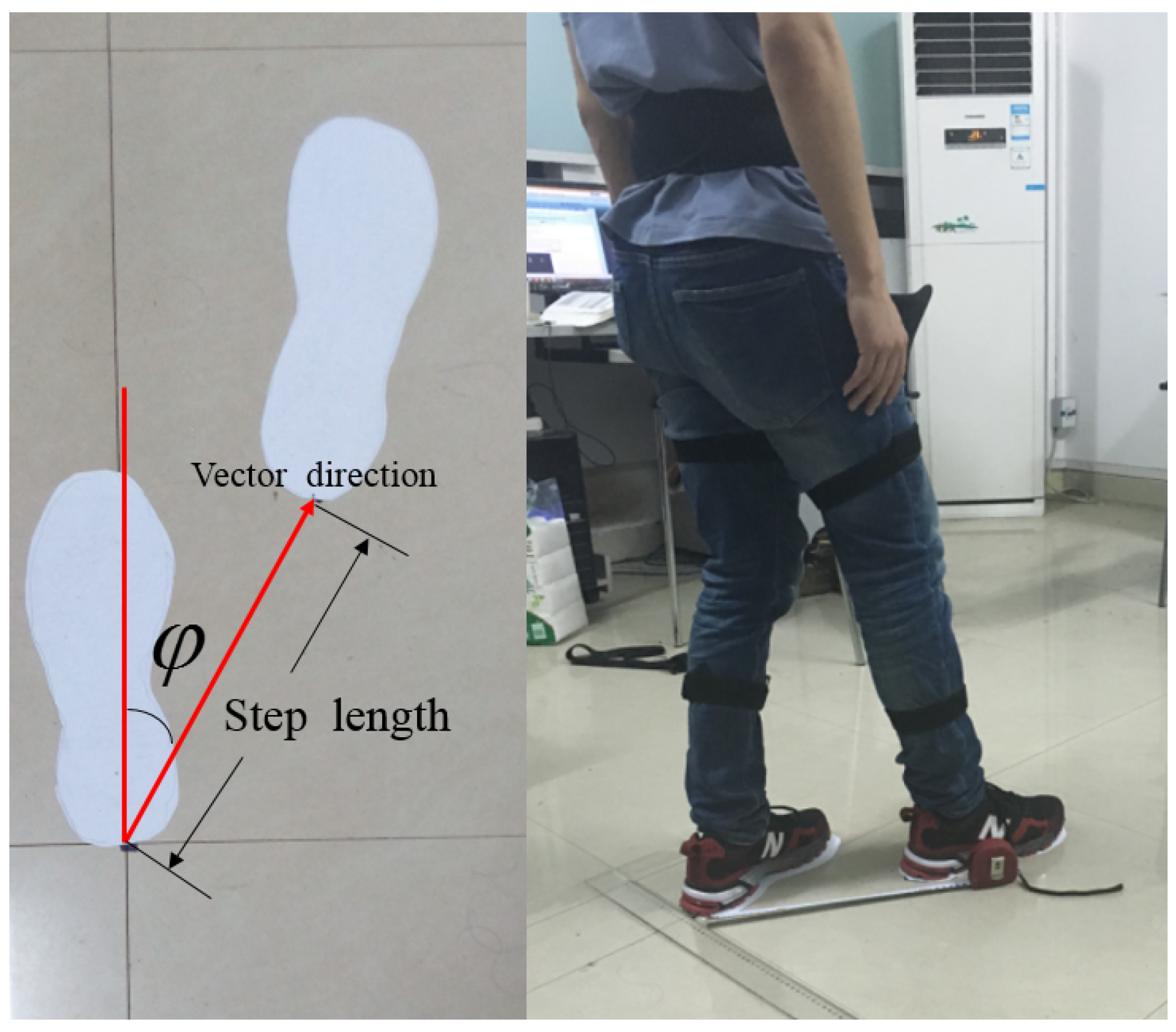

3.3.2. Relative Localization Algorithm Based on Step Length

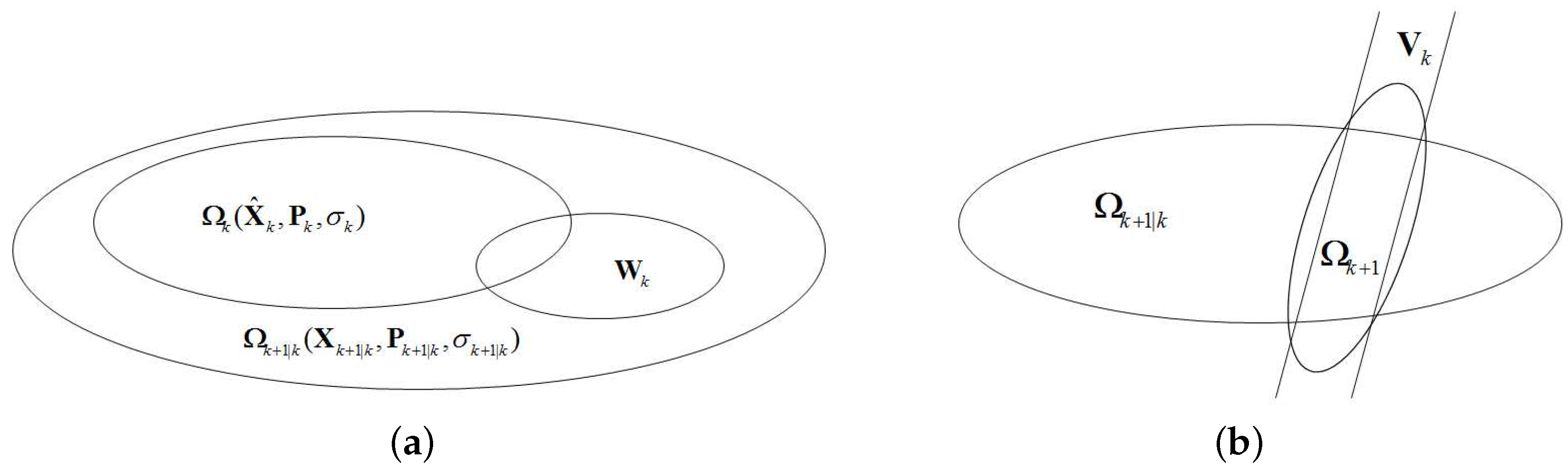
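Relative localization from step length amounts to dead reckoning: each step contributes a displacement that is rotated into a fixed world frame by the current heading and added to the previous position. A minimal sketch, assuming every detected step yields a step vector (forward and leftward components in the walker's frame) plus a heading angle from the attitude estimate; the function name and the tuple layout are hypothetical.

```python
import math

def accumulate_steps(start, steps):
    """Relative (dead-reckoning) localization from per-step vectors.

    start: (x, y) world position of the first foot placement.
    steps: iterable of (forward, left, heading), where forward/left are the
    step components in the walker's frame and heading is the walking
    direction in radians, counter-clockwise from the world x axis.
    Returns the list of positions, starting with `start`.
    """
    x, y = start
    track = [(x, y)]
    for forward, left, heading in steps:
        c, s = math.cos(heading), math.sin(heading)
        # Rotate the body-frame step vector into the world frame and add it.
        x += forward * c - left * s
        y += forward * s + left * c
        track.append((x, y))
    return track
```

Because every position is a running sum, any heading or step-length error accumulates over the walk.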

3.3.3. Set-Membership Filter Algorithm with Incomplete Observation

| Algorithm 1: Set-membership filter with incomplete observation |
|---|
| Require: |
| 1: Calculate from Equation (29) |
| 2: Select the parameter from Equation (33) |
| 3: Calculate from Equation (31), calculate from Equation (30) |
| 4: if then |
| 5: Select the parameter from Equation (40) |
| 6: Calculate from Equation (35), calculate from Equation (34), calculate from Equation (36) |
| 7: else |
| 8: Calculate from Equation (42), calculate from Equation (41), calculate from Equation (43) |
| 9: end if |
| 10: return |
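Algorithm 1 performs a prediction step and then one of two branches depending on a condition lost in extraction. The sketch below is a hedged illustration of a generic ellipsoidal set-membership filter with that structure, not a reproduction of Equations (29) to (43). It assumes a linear model x(k+1) = A x(k) + w(k), y(k) = C x(k) + v(k), with unknown-but-bounded noises w in E(0, Q) and v in E(0, R), where E(c, P) = {x : (x − c)ᵀ P⁻¹ (x − c) ≤ 1}. It also assumes a missing observation simply leaves the predicted set unchanged. The trace-minimising prediction weight, the grid search over the fusion weight λ, and all function names are our assumptions; the paper selects its parameters via Equations (33) and (40).

```python
import numpy as np

def smf_predict(x, P, A, Q):
    """Outer ellipsoid of the Minkowski sum A*E(x, P) (+) E(0, Q)."""
    S = A @ P @ A.T
    p = np.sqrt(np.trace(S) / np.trace(Q))  # minimises the trace of the result
    return A @ x, (1.0 + 1.0 / p) * S + (1.0 + p) * Q

def smf_update(x, P, y, C, R, n_grid=99):
    """Outer ellipsoid of E(x, P) intersected with {z : (y-Cz)' R^-1 (y-Cz) <= 1}."""
    e = y - C @ x
    best = None
    for lam in np.linspace(0.01, 0.99, n_grid):
        Pt = P / (1.0 - lam)             # scaled prior shape
        Rt = R / lam                     # scaled measurement shape
        S = C @ Pt @ C.T + Rt
        K = Pt @ C.T @ np.linalg.inv(S)
        delta = float(e @ np.linalg.solve(S, e))
        if delta > 1.0:
            # The bounding set is empty, so the intersection is empty too.
            raise ValueError("measurement inconsistent with the predicted set")
        x_new = x + K @ e
        P_new = (1.0 - delta) * (np.eye(len(x)) - K @ C) @ Pt
        P_new = 0.5 * (P_new + P_new.T)  # remove round-off asymmetry
        if best is None or np.trace(P_new) < np.trace(best[1]):
            best = (x_new, P_new)
    return best

def smf_step(x, P, A, Q, y, C, R, observed):
    """One filter cycle; with no observation only the prediction is kept."""
    x_pred, P_pred = smf_predict(x, P, A, Q)
    if not observed:
        return x_pred, P_pred
    return smf_update(x_pred, P_pred, y, C, R)
```

Each λ in (0, 1) yields a valid outer bound of the intersection, so the grid search only affects tightness, not correctness of the guaranteed set.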

4. Experimental Results and Discussion

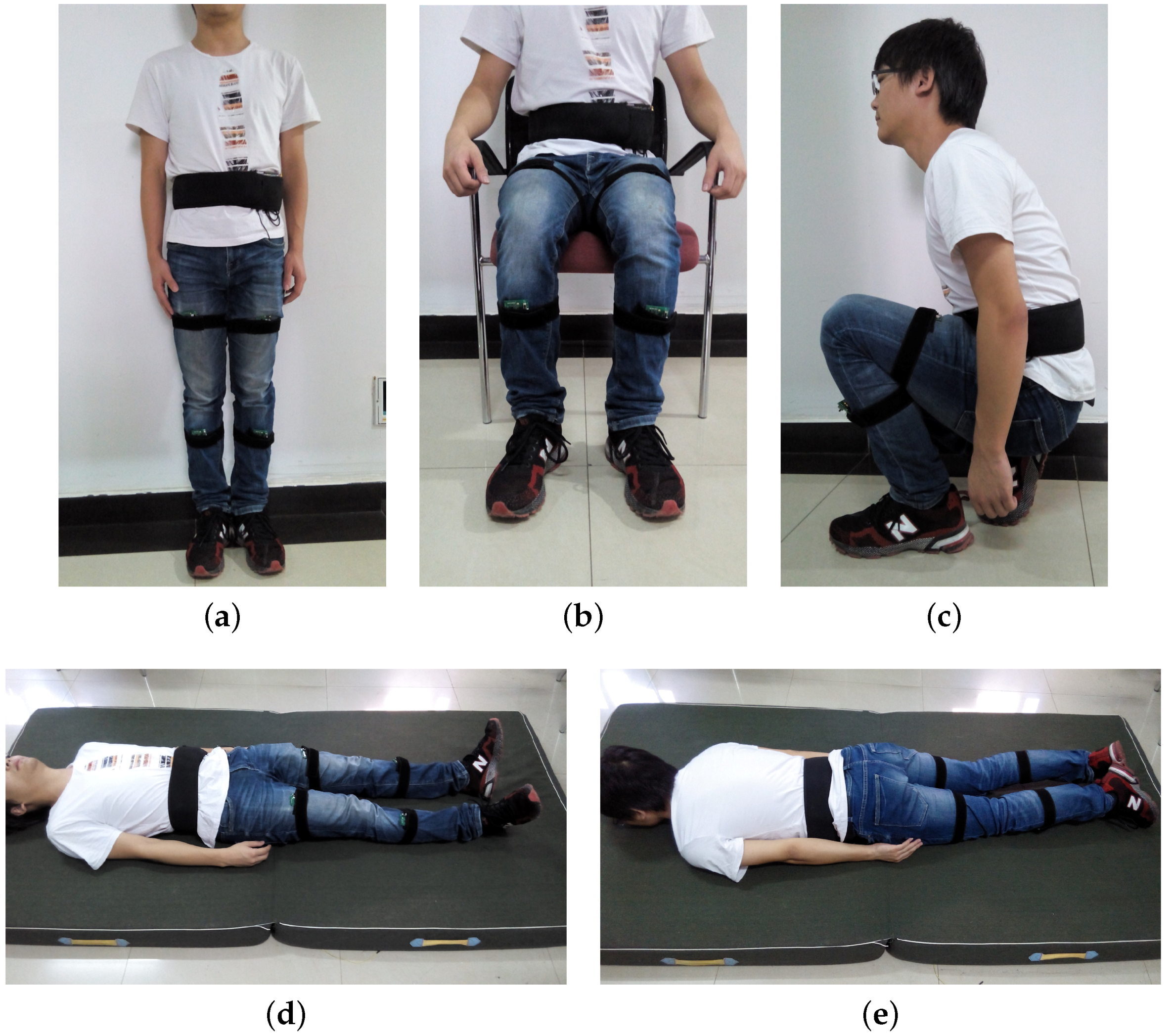

4.1. Posture Recognition Using the Wireless Wearable Sensor System

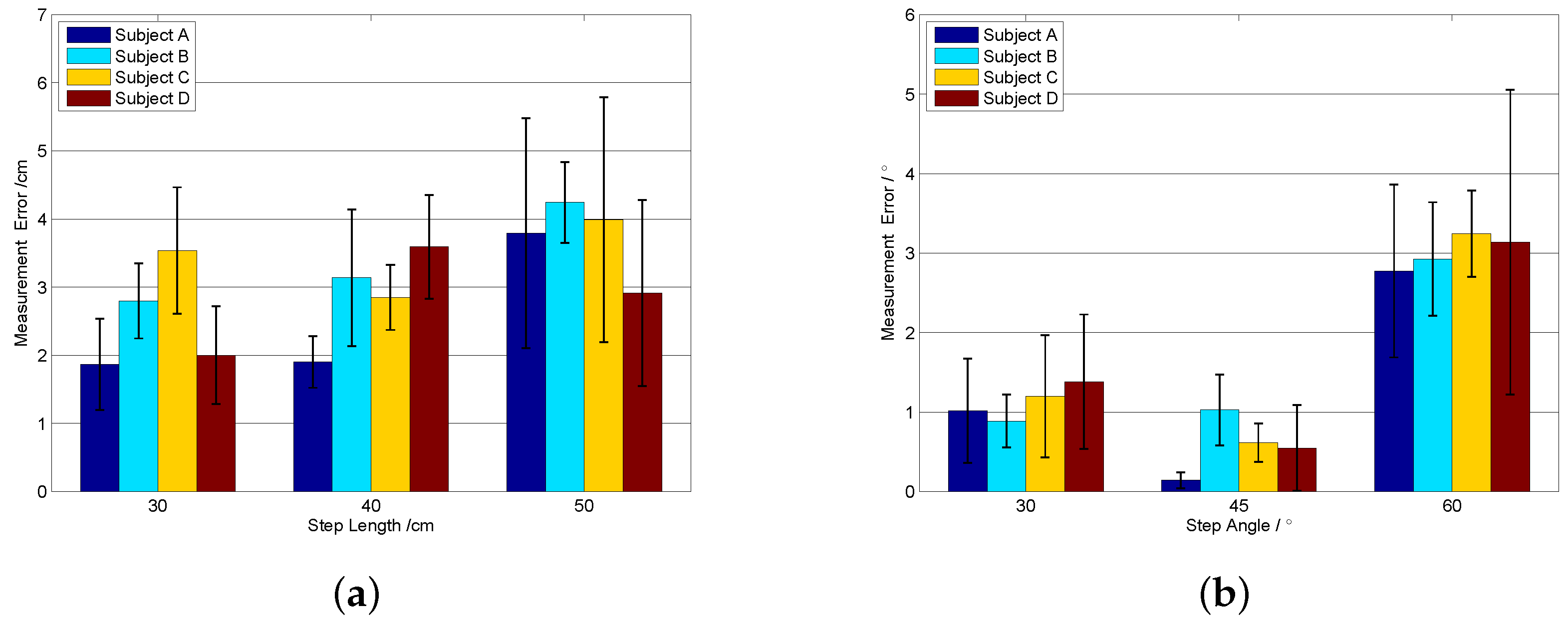

4.2. One-Step Vector Measurement Experiments

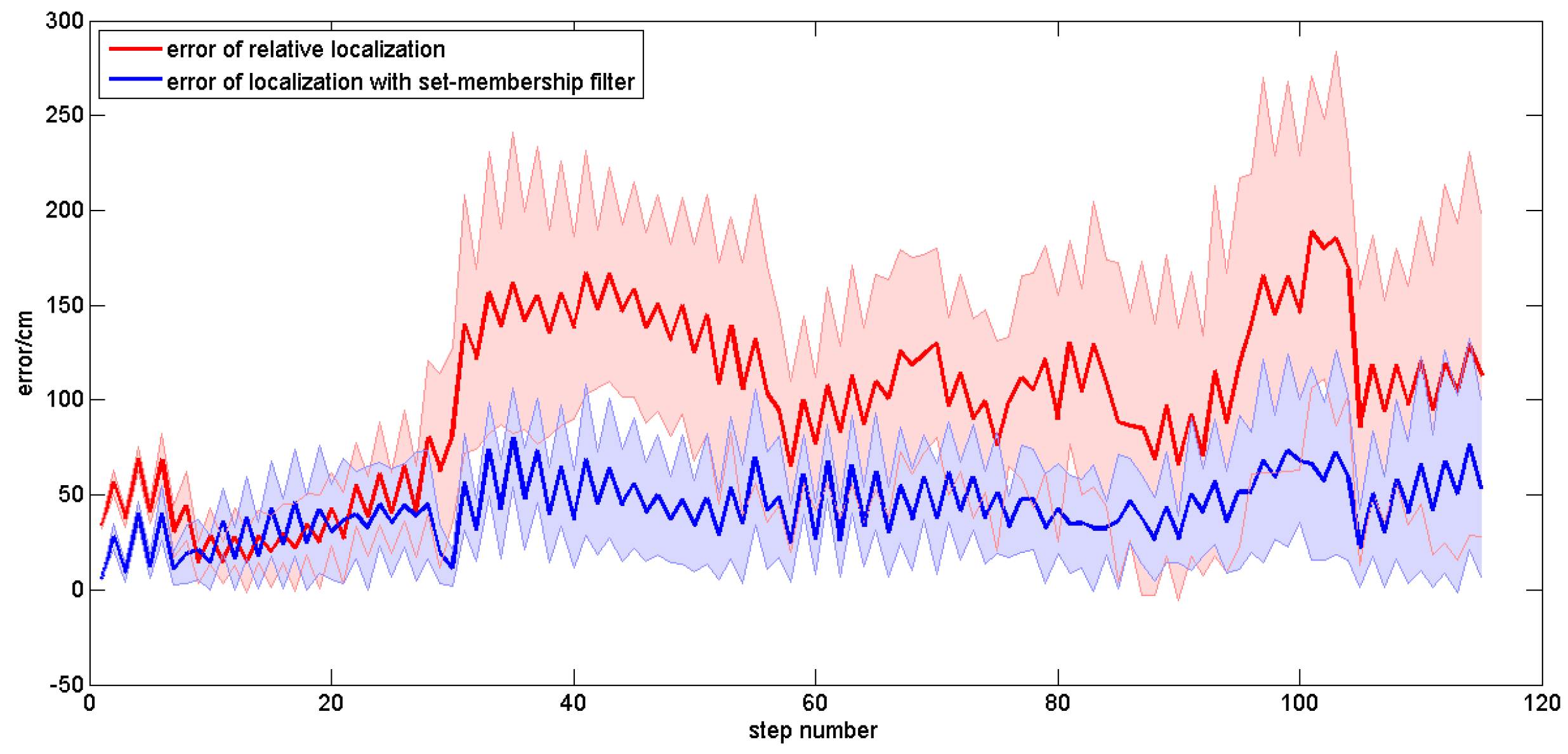

4.3. Indoor Localization Experiments

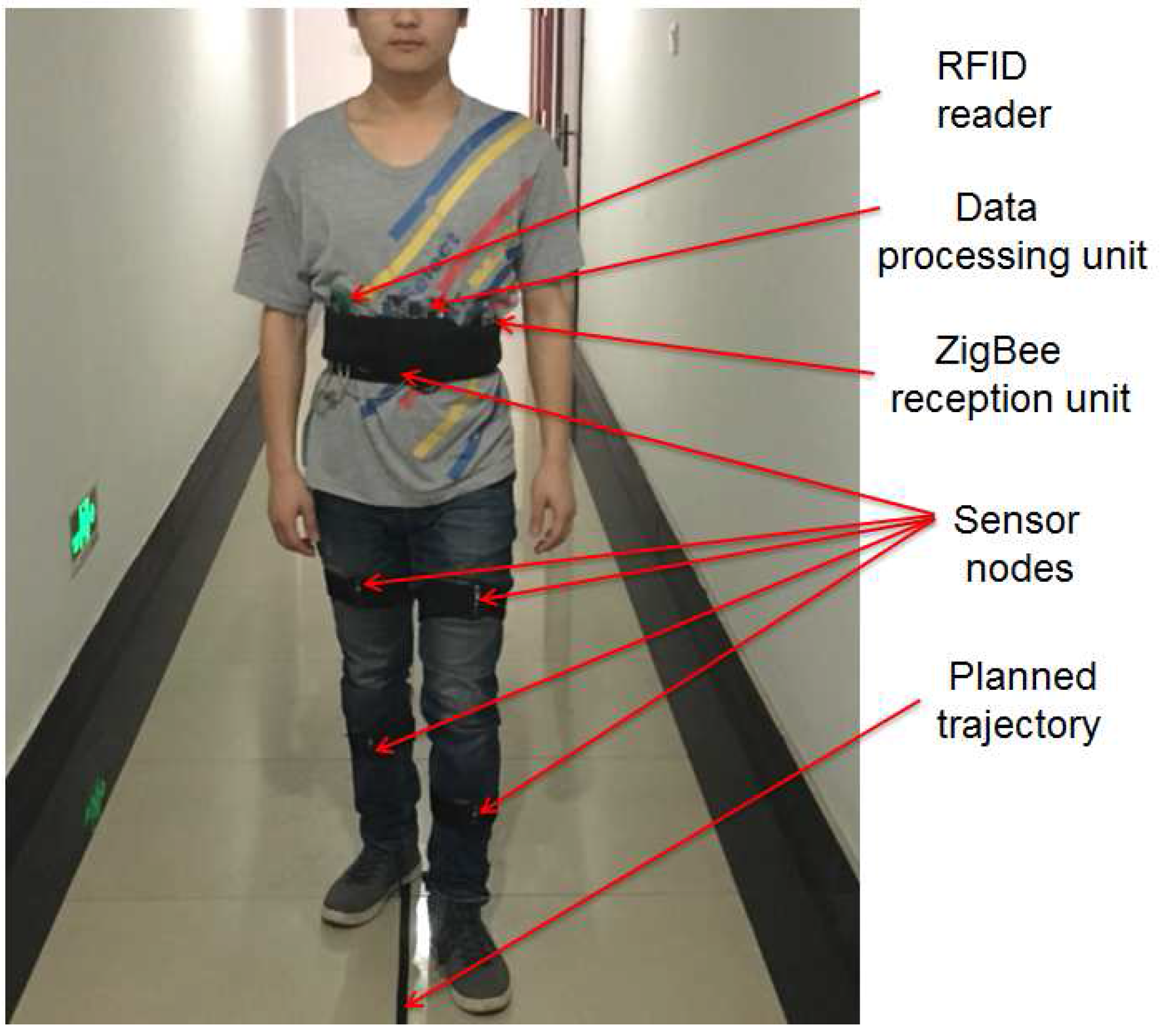

4.3.1. Description of Experiments

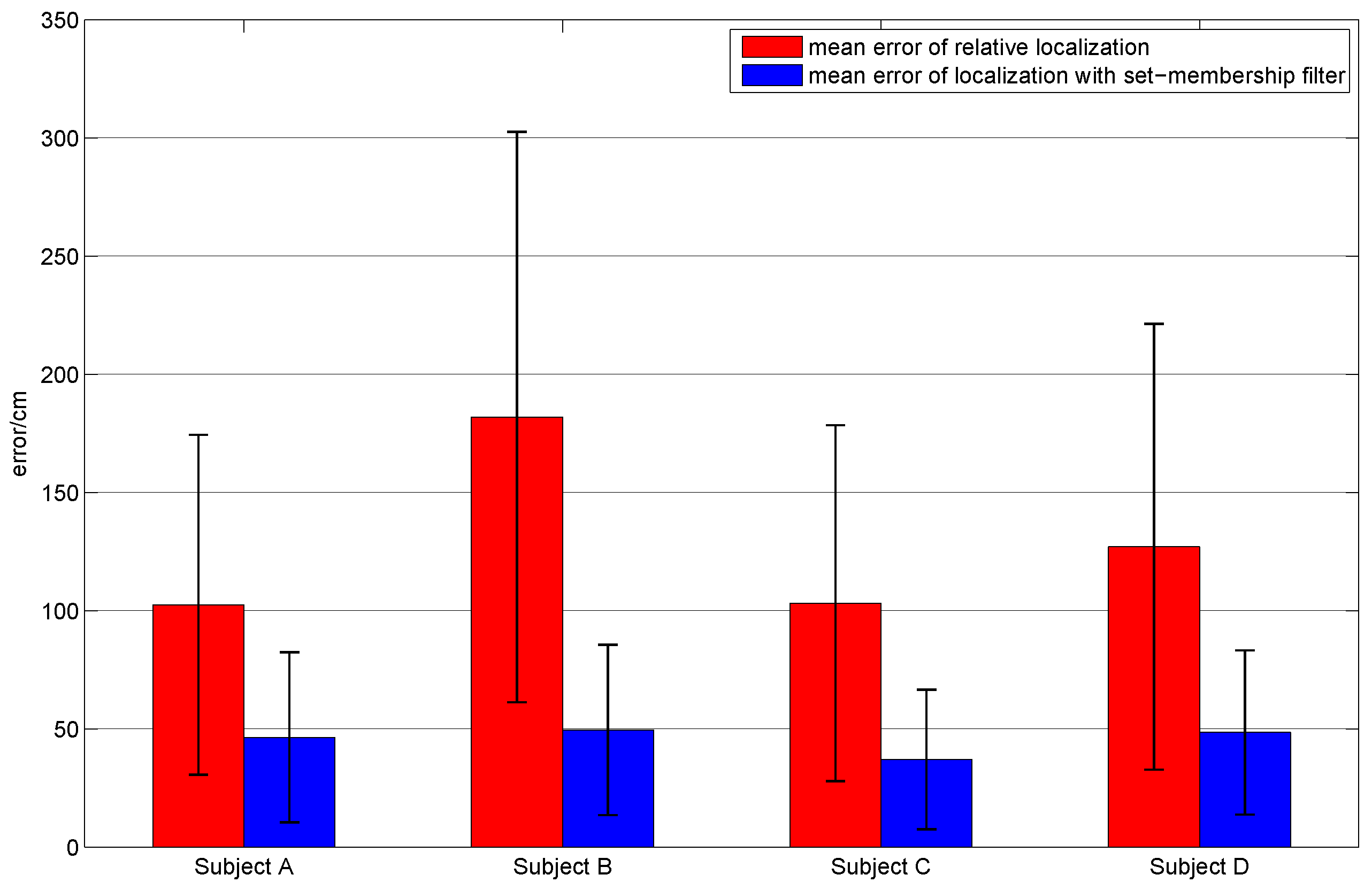

4.3.2. Experiments on Different Subjects

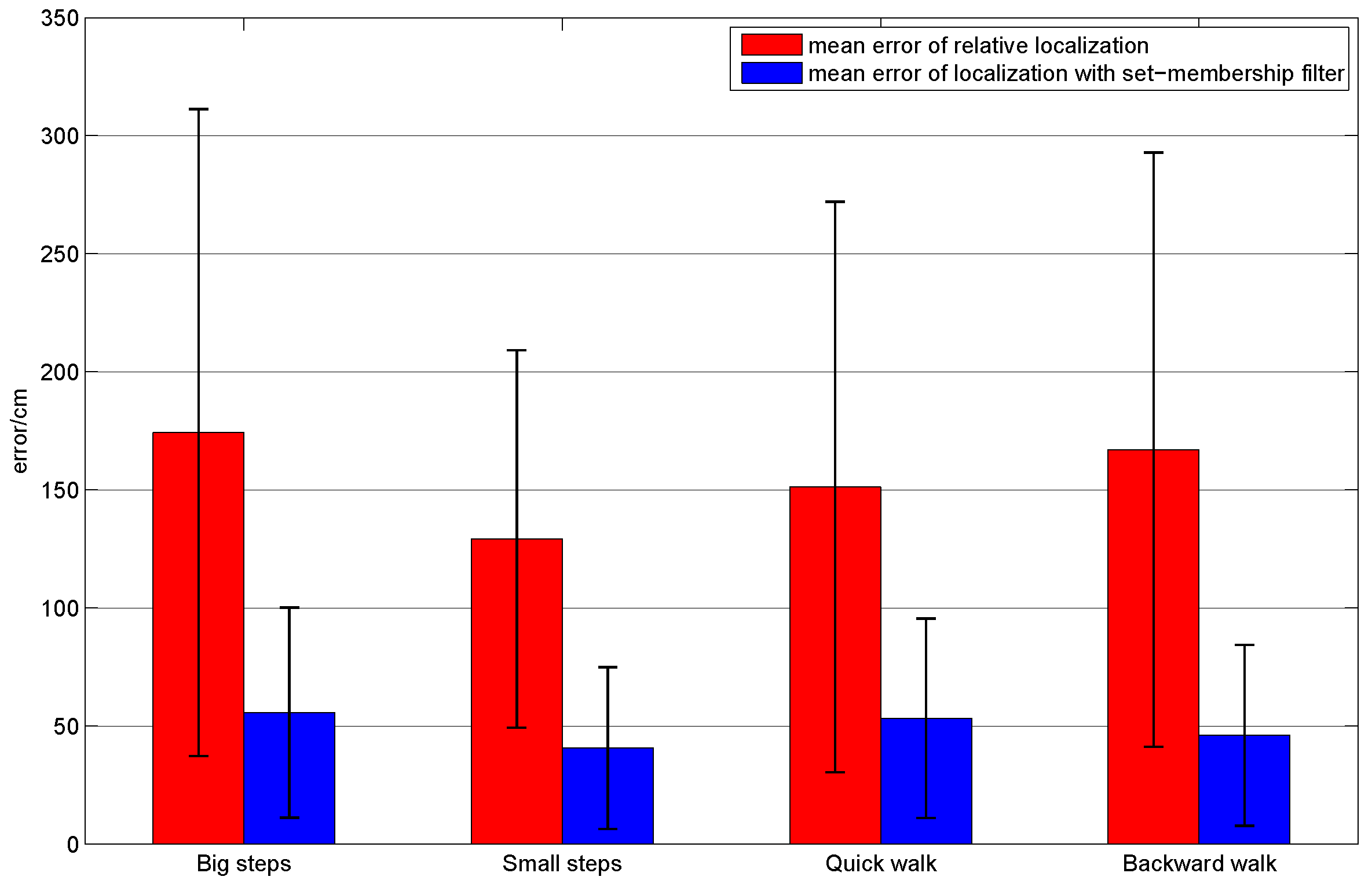

4.3.3. Experiments on Different Ways of Walking

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Subject A | Subject B | Subject C | Subject D |
|---|---|---|---|---|
| Length of the HAT (head-arm-trunk) segment | 73 cm | 76 cm | 75 cm | 70 cm |
| Distance between the two hip joints | 28 cm | 30 cm | 30 cm | 32 cm |
| Thigh length | 48 cm | 52 cm | 45 cm | 50 cm |
| Shank length | 46 cm | 50 cm | 48 cm | 48 cm |

| Posture | Standing | Sitting | Squatting | Supine | Prone |
|---|---|---|---|---|---|
| Subject A | 100% | 100% | 100% | 100% | 100% |
| Subject B | 100% | 100% | 100% | 100% | 100% |
| Subject C | 100% | 100% | 100% | 100% | 100% |
| Subject D | 100% | 100% | 100% | 100% | 100% |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, J.; Yu, X.; Wang, Y.; Xiao, X. An Integrated Wireless Wearable Sensor System for Posture Recognition and Indoor Localization. Sensors 2016, 16, 1825. https://doi.org/10.3390/s16111825
