Spectral Skyline Separation: Extended Landmark Databases and Panoramic Imaging

Abstract

1. Introduction

1.1. Motivation

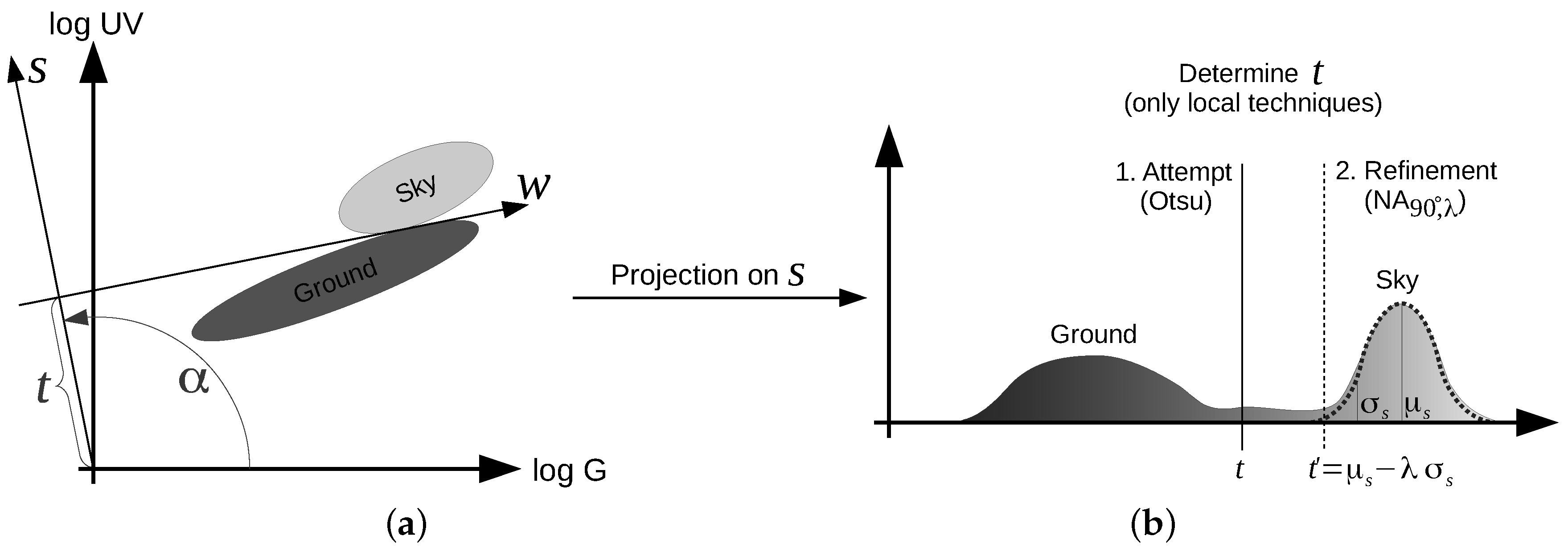

1.2. Global and Local Classification Methods

- Local: Unsupervised methods in which the threshold is learned individually for each HDR image pair (UV and green).

- Global: Supervised methods in which the threshold is learned offline from a given hand-labeled dataset.
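The two classes of methods above differ mainly in where the threshold comes from. The following is a minimal sketch of one possible instance of each, under stated assumptions. For the local case it applies Otsu's method to a single per-pixel feature of one image pair; which feature is used (for instance a UV-green contrast value) is an assumption. For the global case it trains Fisher's linear discriminant on hand-labeled (UV, green) pixel pairs and places the threshold at the midpoint of the projected class means, which is also an assumption. The function names `otsu_threshold`, `fisher_lda` and `classify_sky` are hypothetical, the sample values are invented toy data, and the code is not the authors' implementation.

```python
from statistics import mean


def otsu_threshold(values, bins=256):
    """Local, unsupervised: Otsu's threshold for the 1-D feature values of ONE image.

    Pixels whose value lies above the returned threshold form one class (e.g. sky),
    the remaining pixels the other class (e.g. ground).
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # constant image: nothing to separate
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))

    w0, sum0 = 0, 0.0
    best_var, first, last = -1.0, 0, 0
    for i in range(bins - 1):  # candidate split on the upper edge of bin i
        w0 += hist[i]
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2  # between-class variance (unnormalized)
        if between > best_var:
            best_var, first, last = between, i, i
        elif between == best_var:
            last = i  # extend a plateau of equally good splits
    # Put the threshold in the middle of the best plateau of bin edges.
    return lo + ((first + last) / 2 + 1) * width


def fisher_lda(sky, ground):
    """Global, supervised: Fisher's linear discriminant on labeled (uv, green) pairs.

    Returns a weight vector w and a threshold t; a pixel p is classified as sky
    when w . p > t. Placing t at the midpoint of the projected means is an assumption.
    """
    def stats(samples):
        mu_u = mean(s[0] for s in samples)
        mu_g = mean(s[1] for s in samples)
        suu = sum((s[0] - mu_u) ** 2 for s in samples)
        sgg = sum((s[1] - mu_g) ** 2 for s in samples)
        sug = sum((s[0] - mu_u) * (s[1] - mu_g) for s in samples)
        return (mu_u, mu_g), suu, sgg, sug

    m_sky, a1, b1, c1 = stats(sky)
    m_gnd, a2, b2, c2 = stats(ground)
    # Pooled within-class scatter matrix S_w = [[a, c], [c, b]].
    a, b, c = a1 + a2, b1 + b2, c1 + c2
    det = a * b - c * c
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    du, dg = m_sky[0] - m_gnd[0], m_sky[1] - m_gnd[1]
    # w = S_w^{-1} (m_sky - m_gnd), using the closed-form inverse of a 2x2 matrix.
    w = ((b * du - c * dg) / det, (a * dg - c * du) / det)

    def project(p):
        return w[0] * p[0] + w[1] * p[1]

    t = (project(m_sky) + project(m_gnd)) / 2
    return w, t


def classify_sky(pixel, w, t):
    """Apply the learned global rule to a single (uv, green) pixel."""
    return w[0] * pixel[0] + w[1] * pixel[1] > t


if __name__ == "__main__":
    # Invented toy values, not measurements: sky bright in UV, ground relatively brighter in green.
    sky = [(0.80, 0.30), (0.90, 0.35), (0.85, 0.40), (0.95, 0.30)]
    ground = [(0.20, 0.60), (0.10, 0.50), (0.15, 0.70), (0.25, 0.55)]
    w, t = fisher_lda(sky, ground)
    print("global rule:", w, t)
    print("local threshold:", otsu_threshold([0.1, 0.12, 0.15, 0.2, 0.8, 0.85, 0.9, 0.95]))
```

In this sketch the local threshold is recomputed for every image and needs no labels, whereas the global rule (`w`, `t`) is fitted once on labeled data and then applied unchanged to new images.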

1.3. Related Work

1.4. Contributions

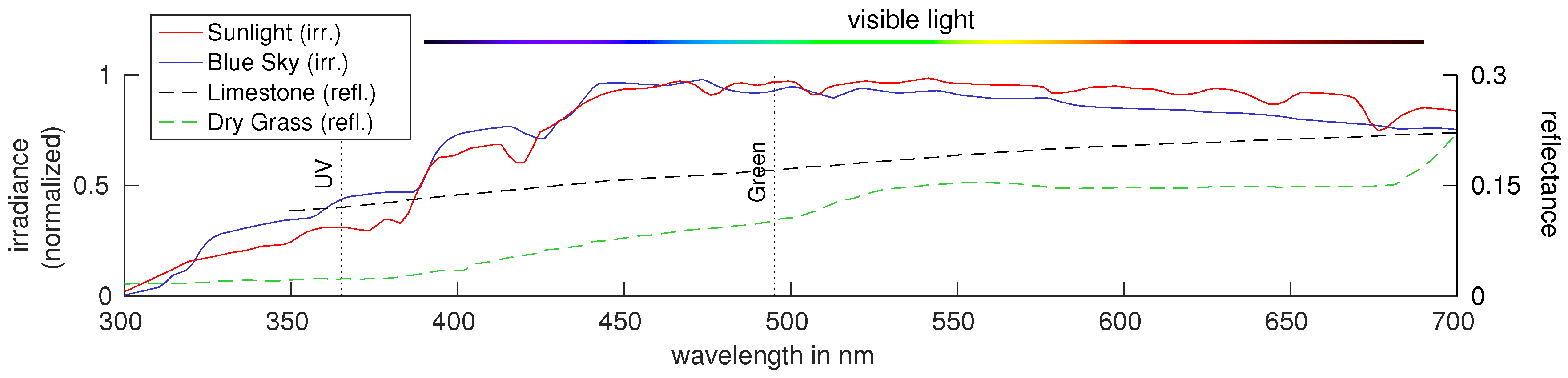

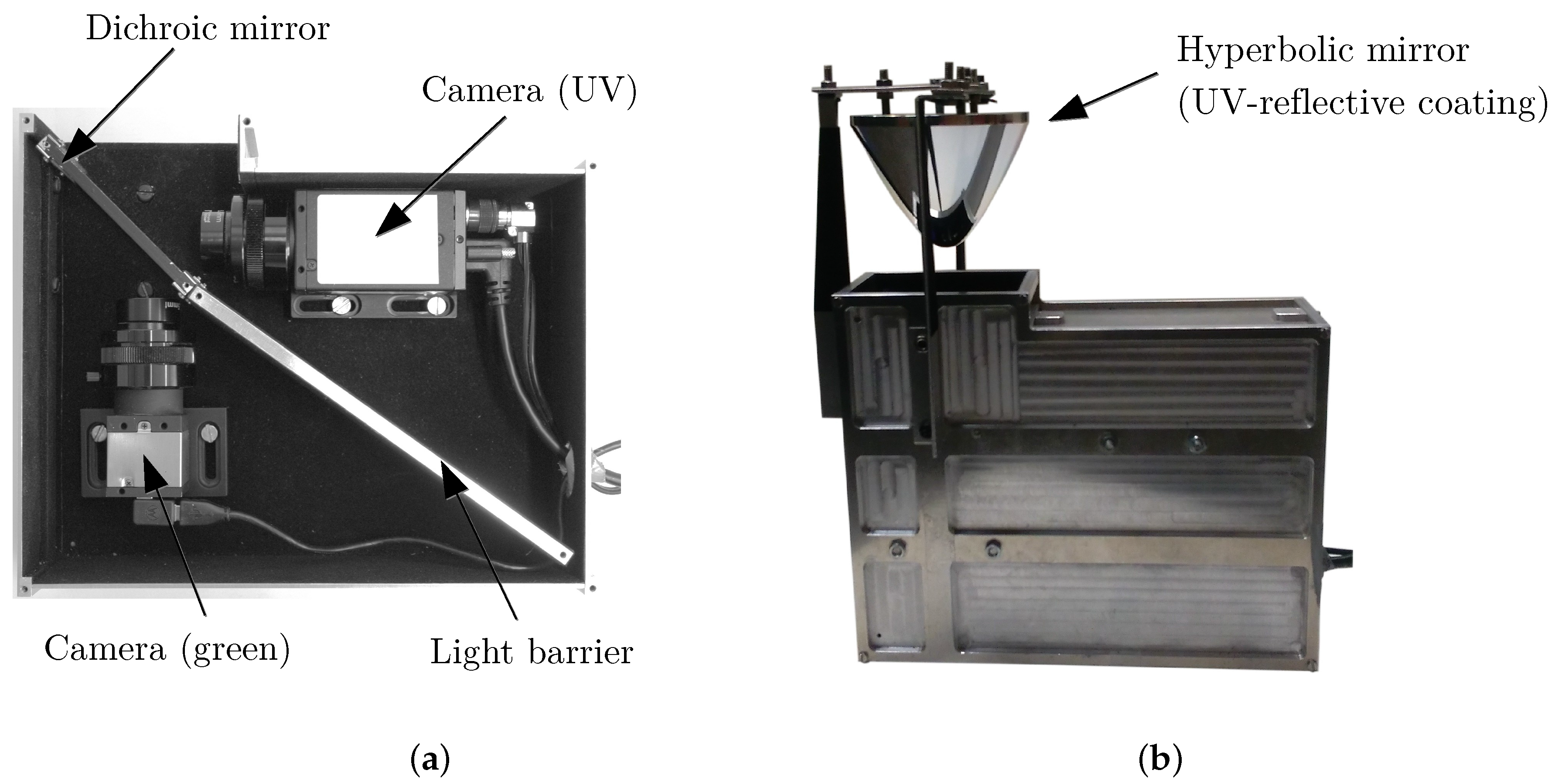

2. Materials and Methods

2.1. Image Acquisition

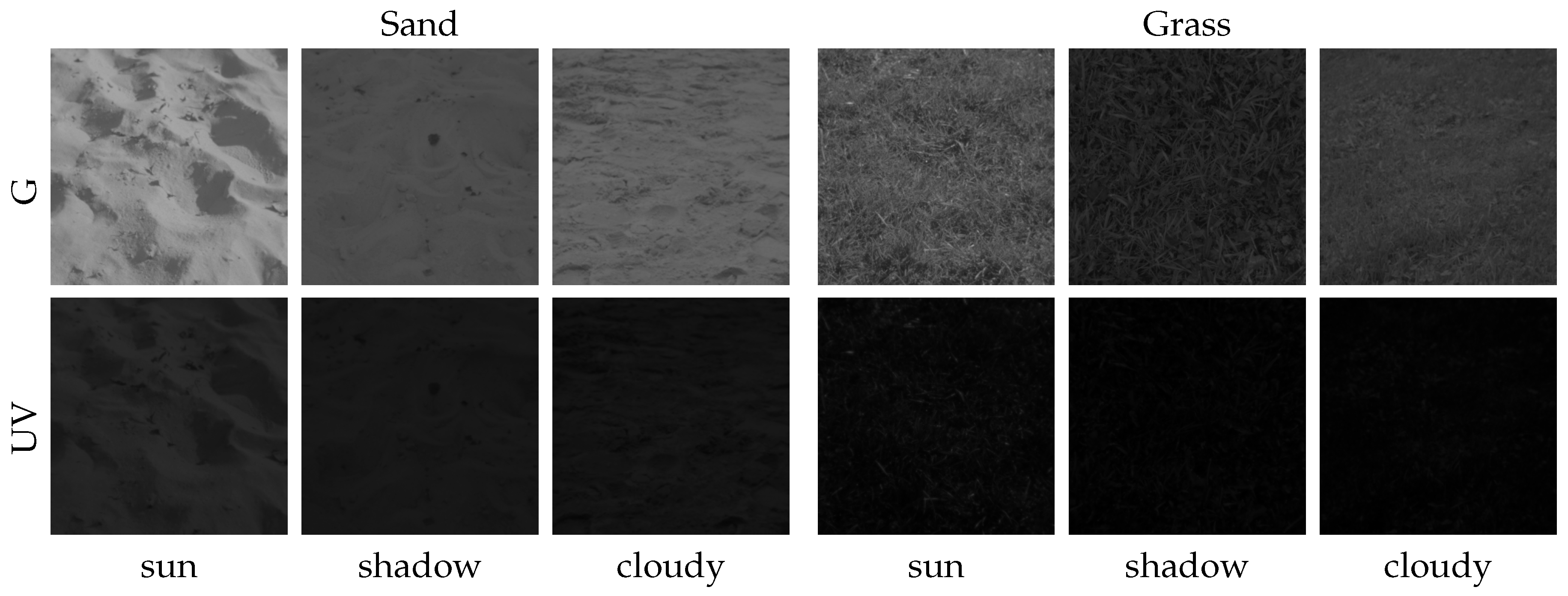

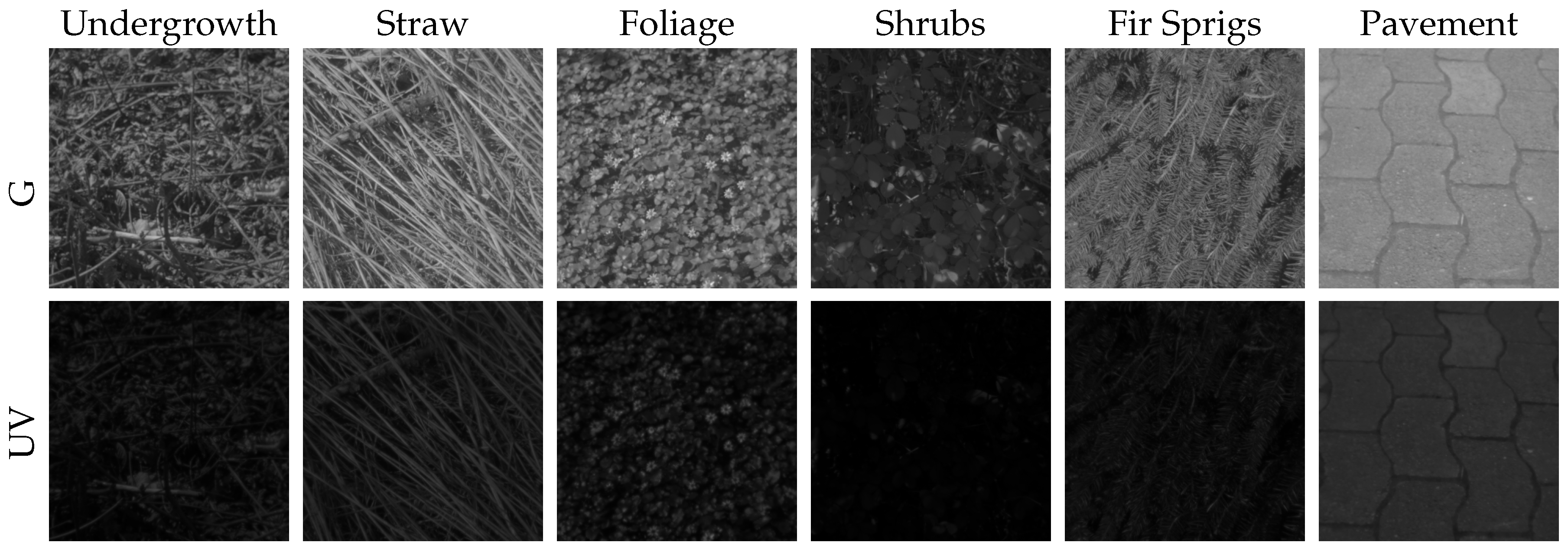

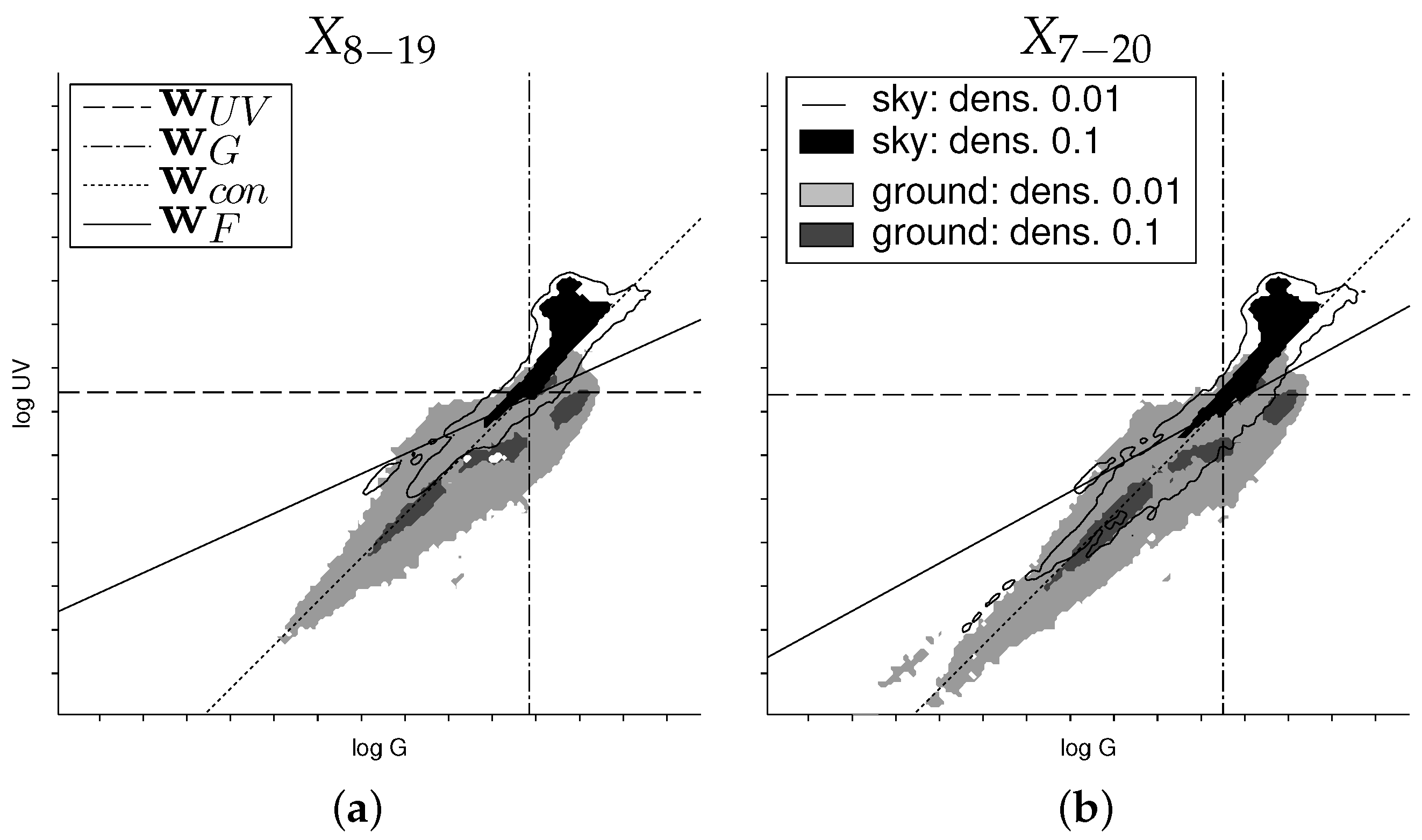

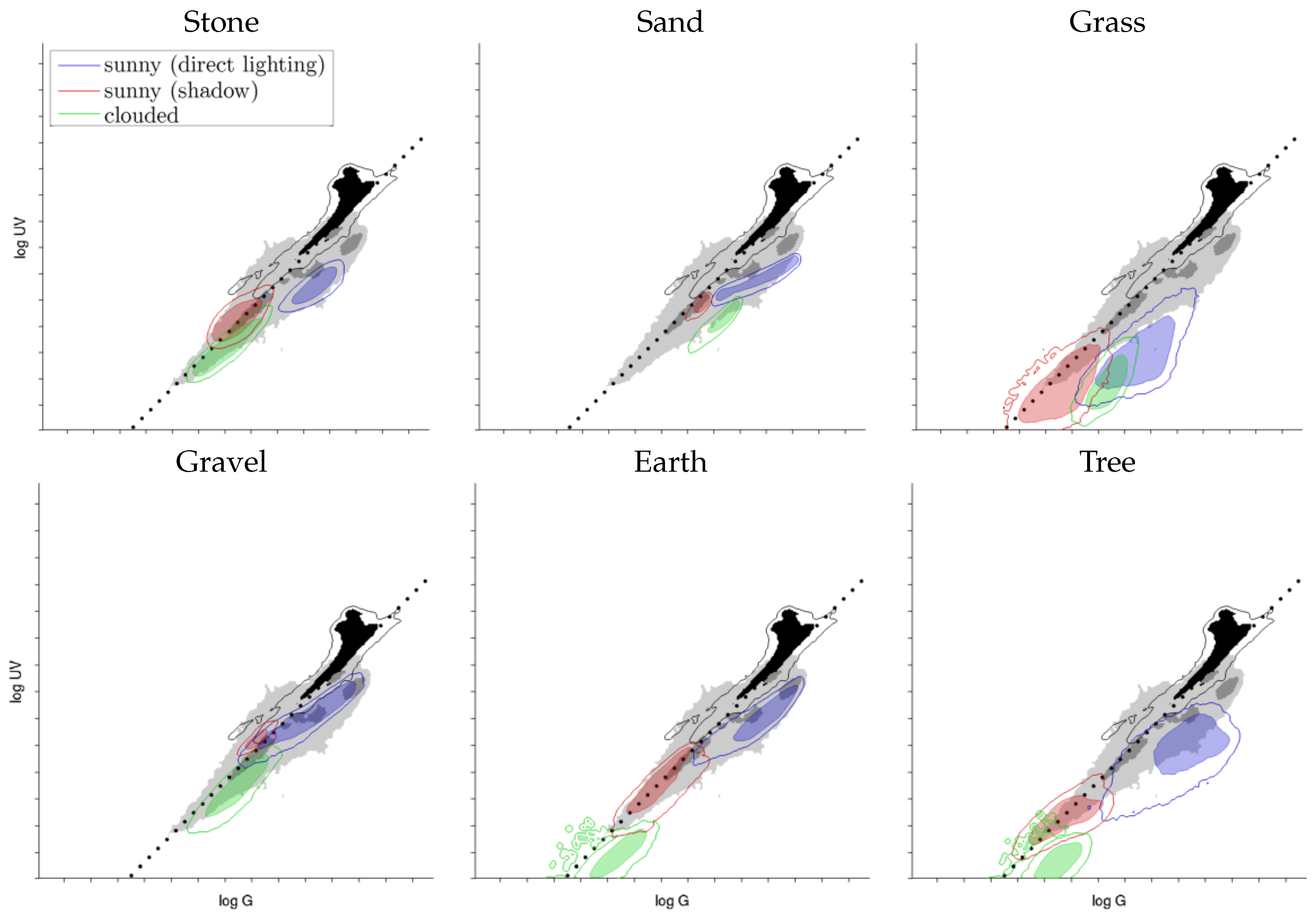

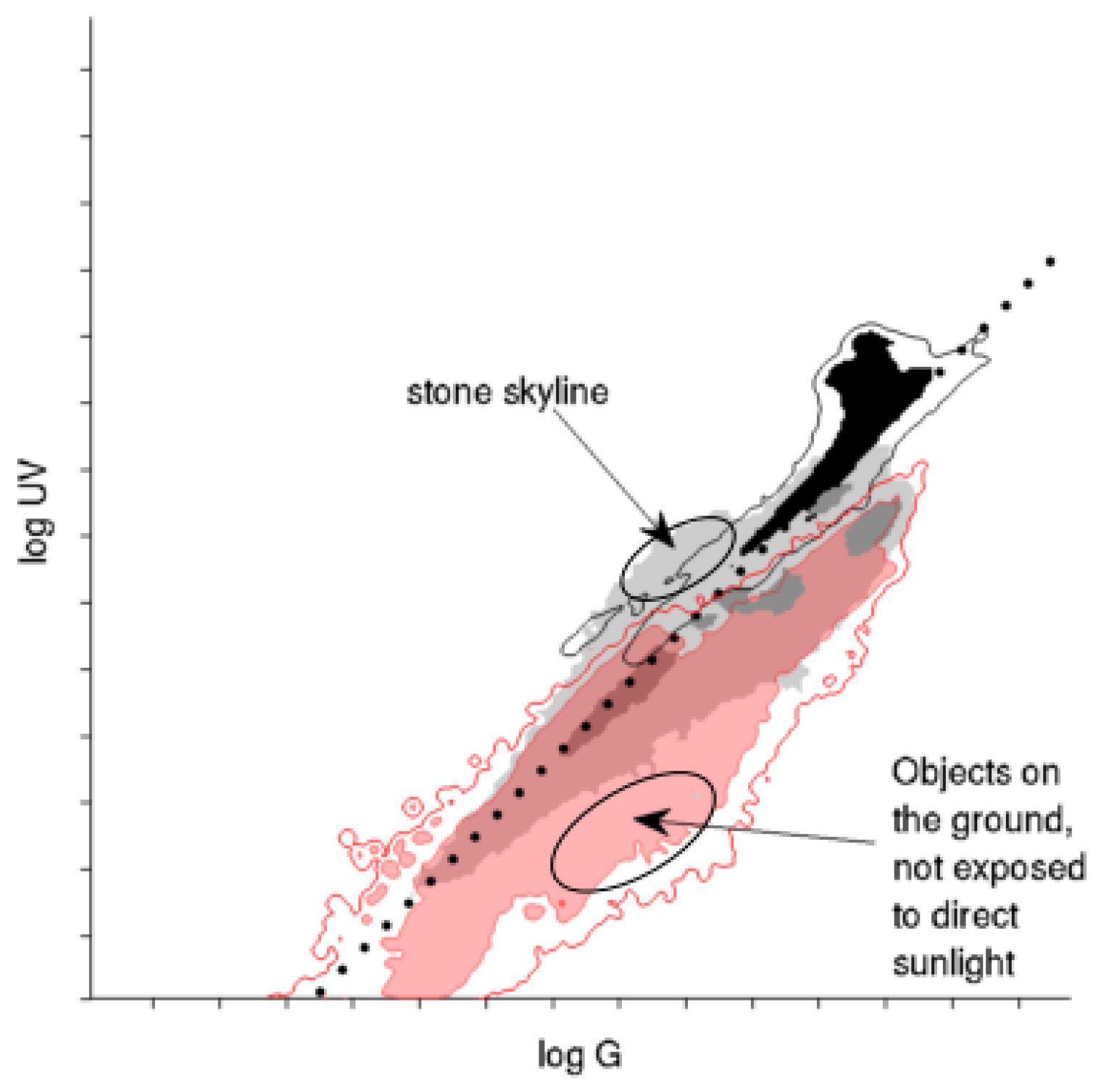

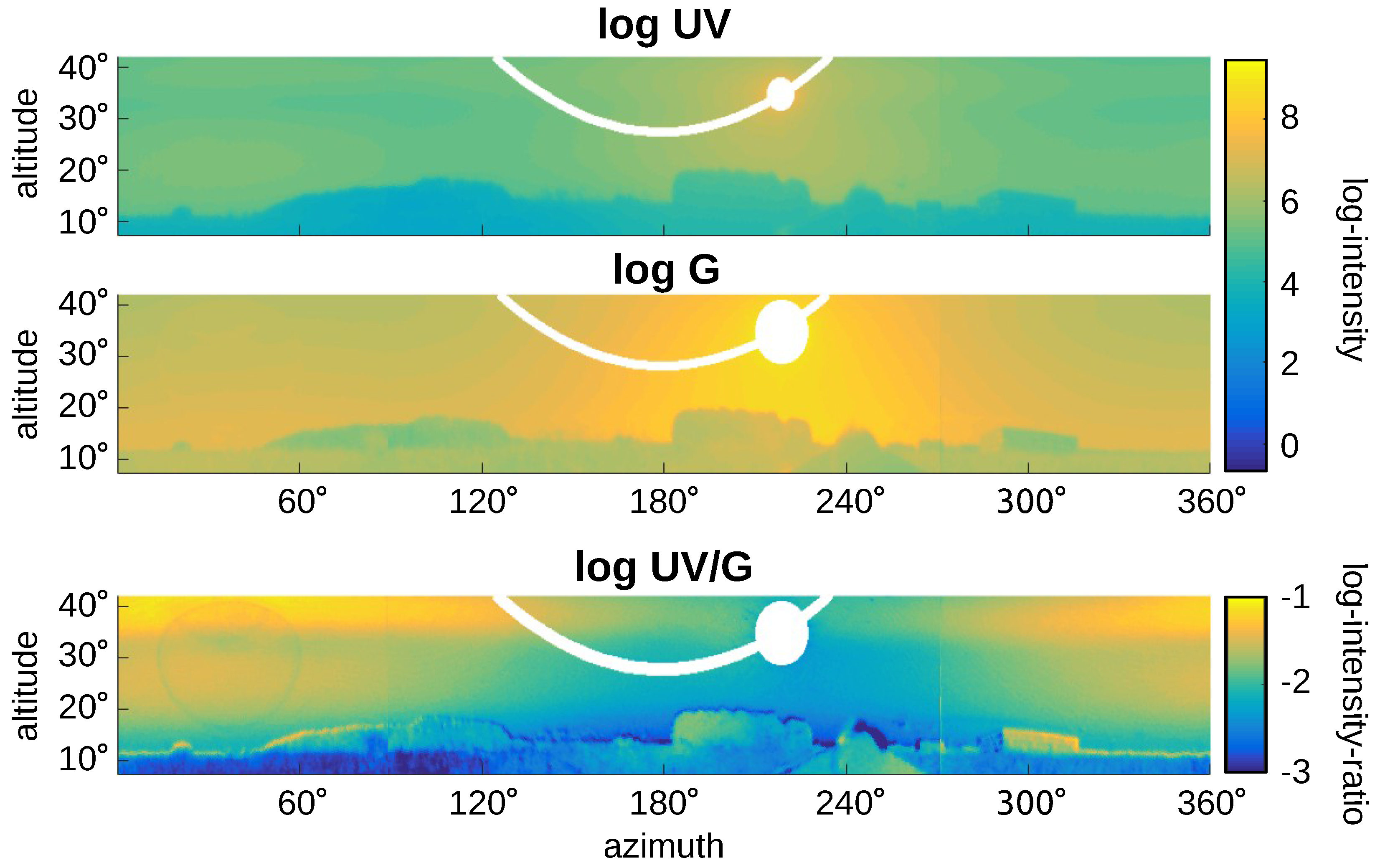

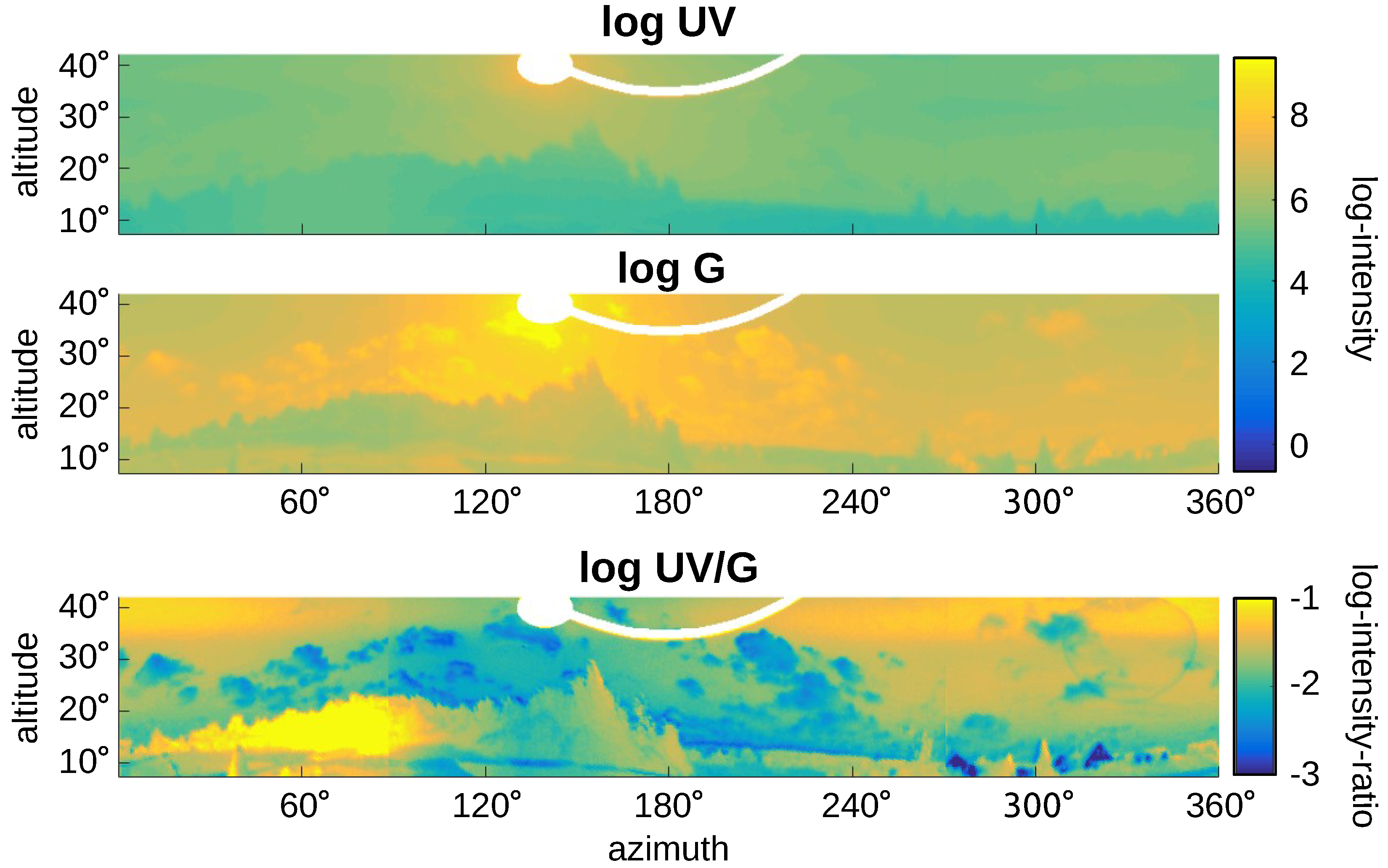

2.2. Data Visualization

2.3. Data Classification

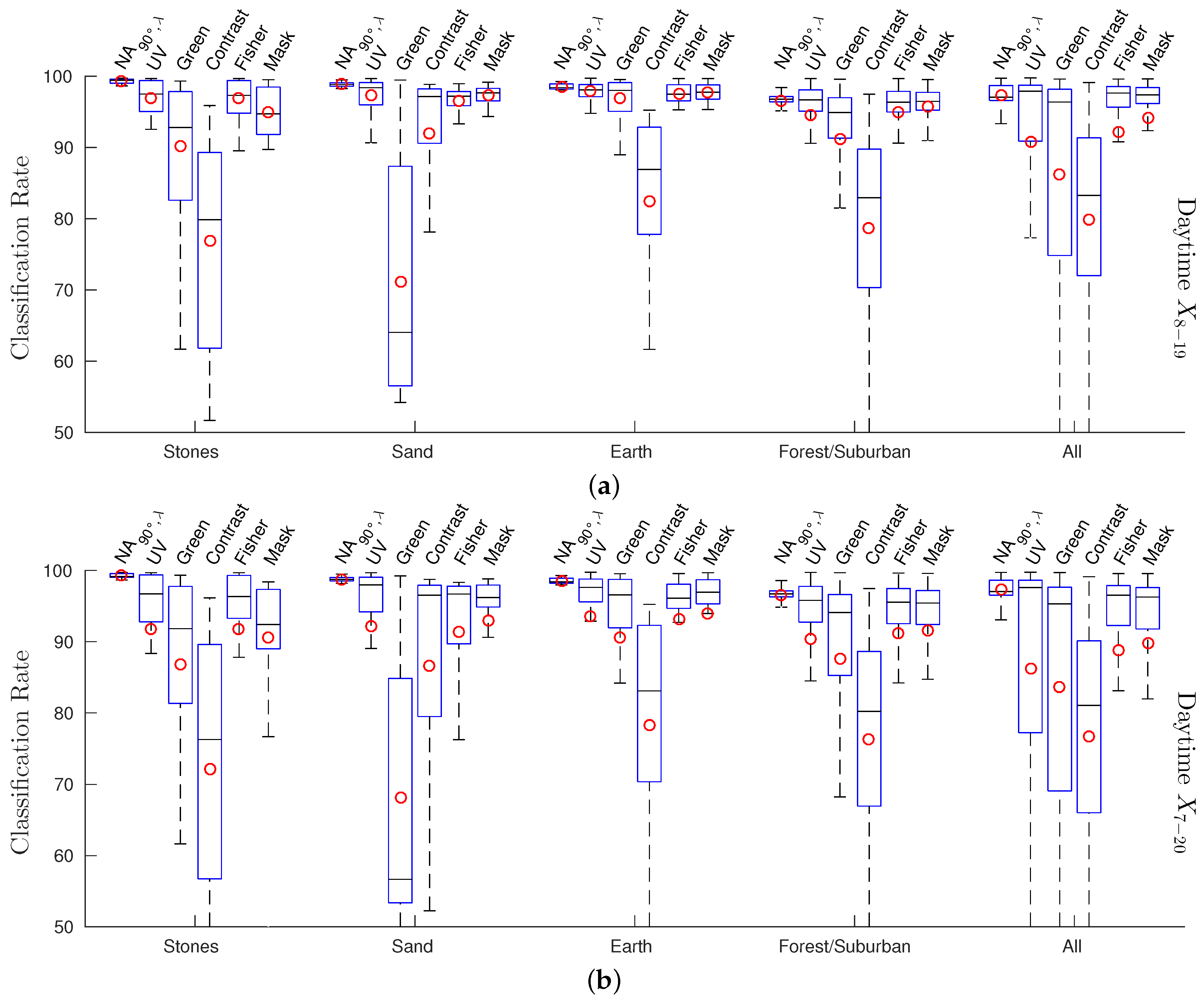

3. Results

3.1. Records of Skylines

3.1.1. Global Separation Techniques

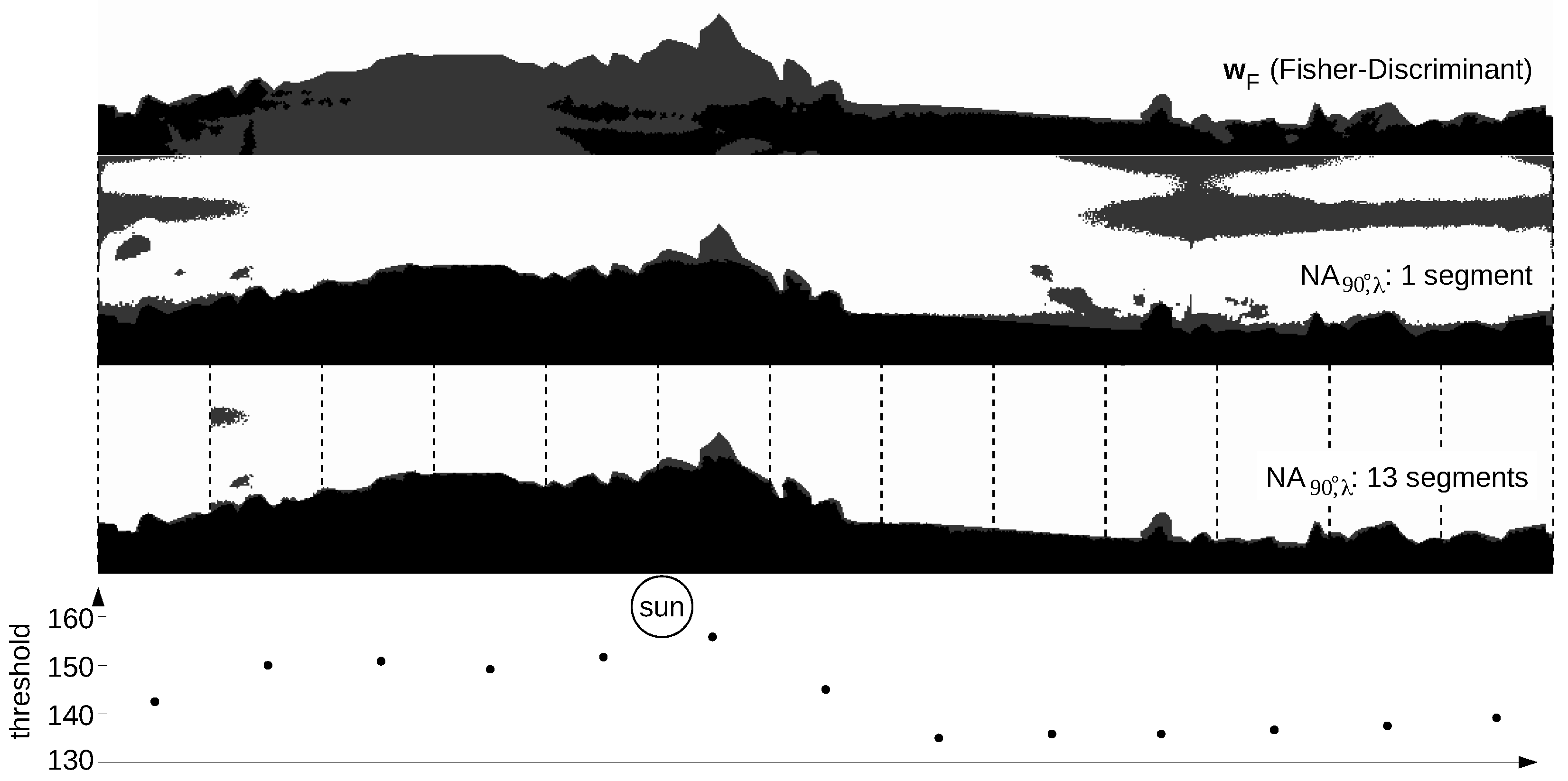

3.1.2. Local Separation Techniques

3.2. Records of Ground Objects

3.3. Panoramic Images

4. Discussion

4.1. Skyline Separation Using Global Separation Techniques

4.2. Skyline Separation Using Local Separation Techniques

4.3. Omnidirectional Skyline Extraction

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Global | UV | Green | Contrast | Fisher | Mask |
|---|---|---|---|---|---|
| Stones | | | | | |
| Sand | | | | | |
| Earth | | | | | |
| Forest/Suburban | | | | | |
| All | | | | | |

| Stones | UV | Green | Contrast | Fisher | Mask |
|---|---|---|---|---|---|
| | *** | *** | *** | *** | *** |
| UV | | *** | *** | - | - |
| Green | | | *** | *** | *** |
| Contrast | | | | *** | *** |
| Fisher | | | | | - |
| Mask | | | | | |

| Sand | UV | Green | Contrast | Fisher | Mask |
|---|---|---|---|---|---|
| | *** | *** | *** | *** | *** |
| UV | | *** | *** | - | *** |
| Green | | | *** | *** | *** |
| Contrast | | | | *** | *** |
| Fisher | | | | | - |
| Mask | | | | | |

| Earth | UV | Green | Contrast | Fisher | Mask |
|---|---|---|---|---|---|
| | *** | *** | *** | *** | *** |
| UV | | *** | *** | - | ** |
| Green | | | *** | *** | *** |
| Contrast | | | | *** | *** |
| Fisher | | | | | - |
| Mask | | | | | |

| Separation technique | Sunlight: Covered (C) | | | | | | Sunlight: Direct (D) | | | | | | Average (C) | Average (D) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 99% | 97% | 98% | 97% | 95% | 67% | 97% | 82% | 81% | 79% | 78% | 82% | 92% | 83% |
| , 1 Seg. | 99% | 91% | 98% | 98% | 94% | 82% | 99% | 87% | 72% | 91% | 85% | 87% | 94% | 87% |
| , 13 Seg. | 99% | 97% | 97% | 97% | 98% | 92% | 98% | 98% | 99% | 96% | 97% | 97% | 97% | 97% |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Differt, D.; Möller, R. Spectral Skyline Separation: Extended Landmark Databases and Panoramic Imaging. Sensors 2016, 16, 1614. https://doi.org/10.3390/s16101614
