Drivers’ Visual Behavior-Guided RRT Motion Planner for Autonomous On-Road Driving

Abstract

1. Introduction

- RRT-based planners tend to generate jerky, unnatural trajectories that contain unnecessary turns or unreachable positions [8]. Because a vehicle's turning capability is limited, such trajectories hinder its operation. For example, even for a simple lane-keeping maneuver on a road, it is difficult for an RRT-based planner to produce a straight trajectory. The problem becomes more serious on curved roads, where it can even cause the vehicle to leave the roadway.

- Real-time performance is a key requirement for autonomous-vehicle motion planning, yet existing RRT-based algorithms spend considerable time drawing useless samples, which reduces overall efficiency. To address this, the approach in [2] applies different biased-sampling strategies to different traffic scenes. However, this approach cannot cover every traffic scene, and its sampling parameters do not generalize across scenes.

- Trajectories planned by RRT rarely account for curvature continuity. In practice, curvature discontinuities can cause control problems for autonomous vehicles, such as instability, mechanical failure, and ride discomfort. They also degrade trajectory tracking by the low-level controllers, increasing both the tracking error and the control effort.
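The curvature issue can be made concrete. An RRT path is a chain of straight edges, so its curvature is zero along each edge and concentrated entirely at the joints. The sketch below is an illustration, not the authors' method: it estimates the curvature at each interior waypoint with the three-point (Menger) formula, i.e. the reciprocal radius of the circle through three consecutive points. The function names and example paths are hypothetical.

```python
import math


def menger_curvature(p, q, r):
    """Curvature (1/m) of the circle through points p, q, r; 0.0 if collinear."""
    a = math.dist(p, q)
    b = math.dist(q, r)
    c = math.dist(p, r)
    if a * b * c == 0.0:
        return 0.0  # coincident points: curvature undefined, report 0
    # |cross| is twice the triangle area, and kappa = 4 * area / (a * b * c).
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return 2.0 * abs(cross) / (a * b * c)


def vertex_curvatures(path):
    """Curvature estimate at every interior waypoint of a polyline."""
    return [menger_curvature(path[i - 1], path[i], path[i + 1])
            for i in range(1, len(path) - 1)]


straight = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
zigzag = [(0.0, 0.0), (10.0, 1.0), (20.0, -1.0), (30.0, 0.0)]
print(vertex_curvatures(straight))  # [0.0, 0.0]
print(vertex_curvatures(zigzag))
```

A straight polyline yields zero everywhere, while even a mild ±1 m zigzag over 10 m edges gives about 0.03 m⁻¹ at every joint and zero in between, which is exactly the kind of curvature discontinuity a tracking controller struggles with.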

2. Preliminary Work and Problem Formulation

2.1. Motion Planning Problem Formulation

2.2. Vehicle Kinematic Model

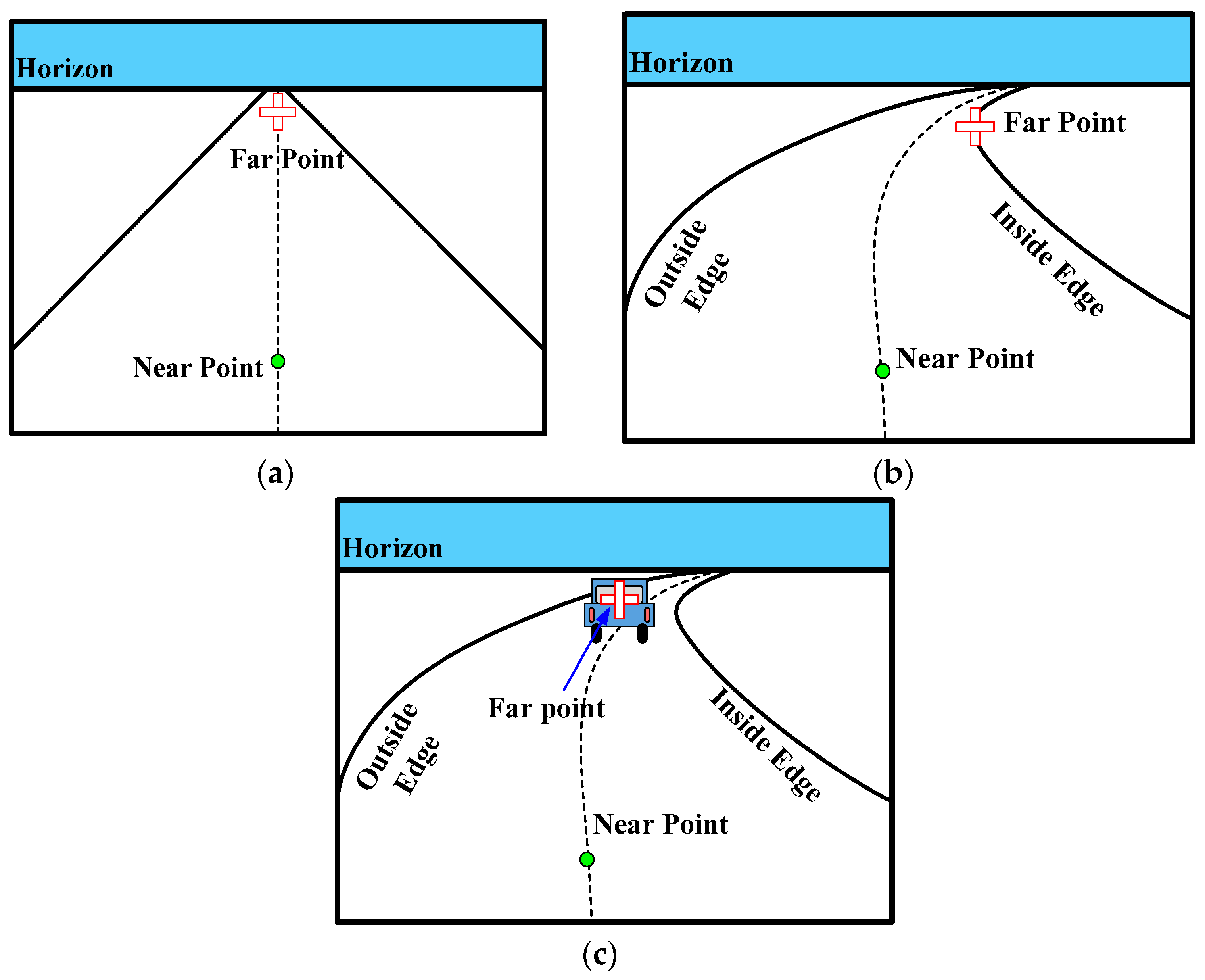
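A model often used for on-road planning is the rear-axle kinematic bicycle model, ẋ = v cos θ, ẏ = v sin θ, θ̇ = v tan δ / L, where δ is the front steering angle and L the wheelbase. The sketch below assumes that model; the paper's exact formulation is not reproduced here, and the wheelbase, speed, and steering values are hypothetical. It shows why turning capability is limited: path curvature is κ = tan δ / L, so the steering limit bounds it.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float      # rear-axle x position (m)
    y: float      # rear-axle y position (m)
    theta: float  # heading (rad)


def step(s: State, v: float, delta: float, wheelbase: float, dt: float) -> State:
    """Advance the rear-axle kinematic bicycle model by one forward-Euler step.

    v: speed (m/s), delta: front steering angle (rad), wheelbase: L (m).
    """
    return State(
        x=s.x + v * math.cos(s.theta) * dt,
        y=s.y + v * math.sin(s.theta) * dt,
        theta=s.theta + v * math.tan(delta) / wheelbase * dt,
    )


def max_curvature(max_steer: float, wheelbase: float) -> float:
    """Largest path curvature (1/m) the vehicle can follow: tan(delta_max) / L."""
    return math.tan(max_steer) / wheelbase


# Hypothetical vehicle: 2.8 m wheelbase, 0.5 rad steering limit.
print(max_curvature(0.5, 2.8))

s = State(0.0, 0.0, 0.0)
for _ in range(20):  # 2 s at 5 m/s with a constant 0.1 rad steering angle
    s = step(s, v=5.0, delta=0.1, wheelbase=2.8, dt=0.1)
print(s)
```

With these hypothetical values the curvature bound is about 0.195 m⁻¹; any planned trajectory with sharper turns than that is not drivable by this vehicle.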

2.3. Drivers’ Visual Search Behavior

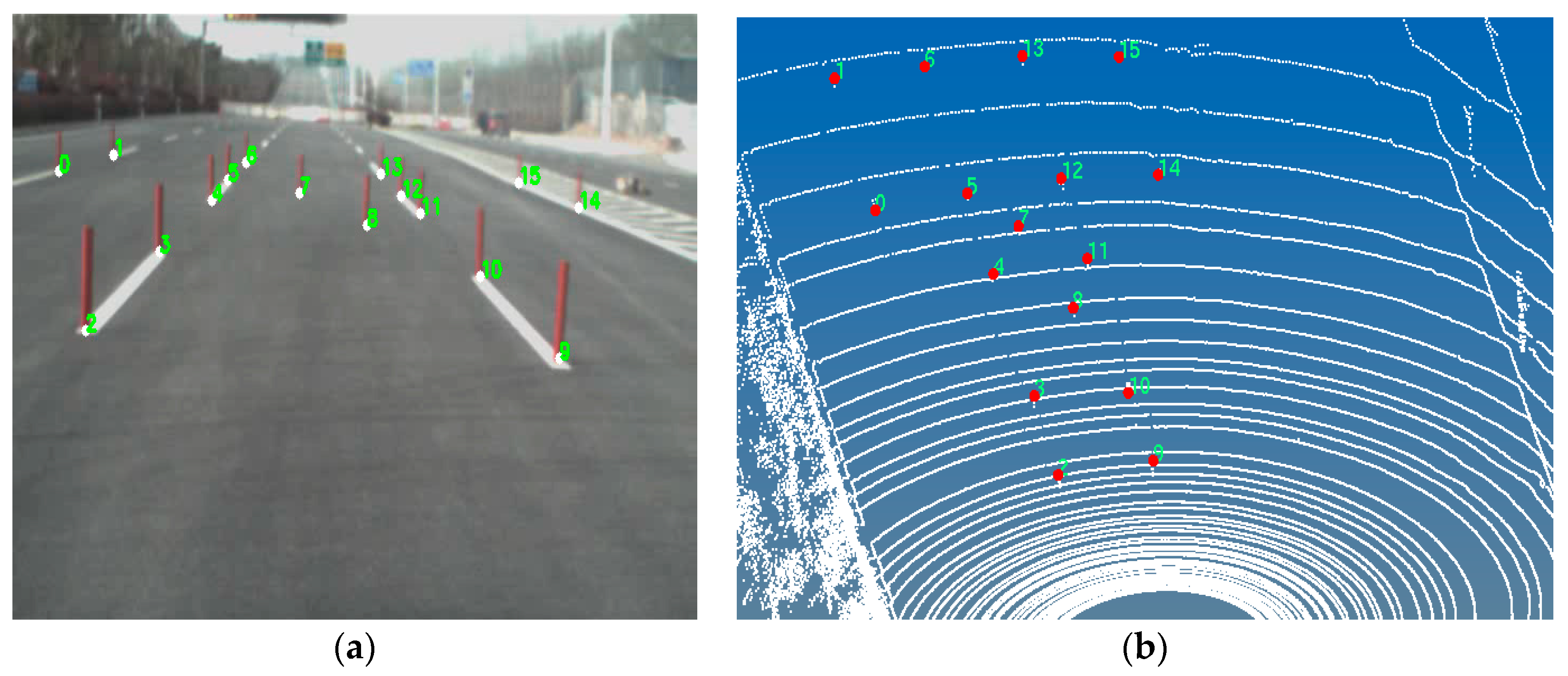

2.4. Calibration between Camera and Laser Radar

3. DV-RRT Algorithm

3.1. Basic RRT Operation

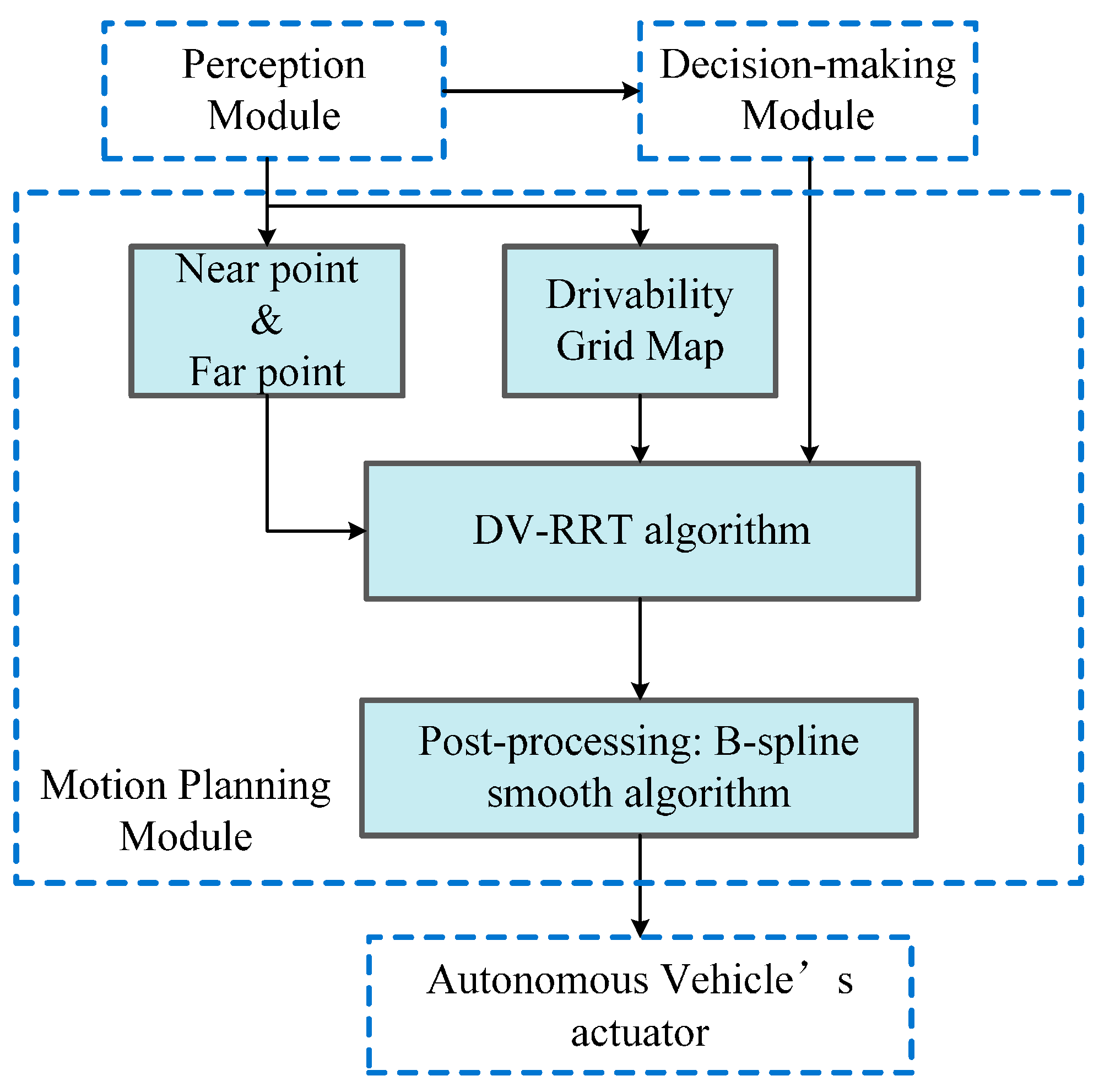
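For reference, the basic RRT loop (sample a point, find the nearest tree node, steer a bounded step toward the sample, check for collisions, add the node) can be sketched in a 2-D workspace as follows. This is a generic point-robot version with a small goal bias, not DV-RRT; the disc obstacles, step size, tolerance, and seed parameters are hypothetical.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=1.0, goal_tol=1.0,
        goal_bias=0.05, max_iters=5000, seed=0):
    """Basic 2-D RRT for a point robot.

    obstacles: list of (cx, cy, radius) discs; bounds: (xmin, xmax, ymin, ymax).
    Returns the list of points from start to a node within goal_tol of goal,
    or None if no such node is found within max_iters iterations.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = [None]  # parent[i] is the index of node i's parent

    def free(p):
        return all(math.dist(p, (cx, cy)) > r for cx, cy, r in obstacles)

    def edge_free(a, b, n=10):
        # Check n + 1 evenly spaced points on segment a-b (adequate for short steps).
        return all(free((a[0] + (b[0] - a[0]) * k / n, a[1] + (b[1] - a[1]) * k / n))
                   for k in range(n + 1))

    for _ in range(max_iters):
        # 1. Sample: the goal with probability goal_bias, else a uniform point.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(bounds[0], bounds[1]), rng.uniform(bounds[2], bounds[3]))
        # 2. Nearest node (linear scan; a k-d tree scales better).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # 3. Steer: move at most `step` from the nearest node toward the sample.
        t = min(1.0, step / d)
        new = (near[0] + t * (sample[0] - near[0]), near[1] + t * (sample[1] - near[1]))
        # 4. Collision check, then extend the tree.
        if not edge_free(near, new):
            continue
        nodes.append(new)
        parent.append(i_near)
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:  # walk parent links back to the root
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt((0.0, 0.0), (10.0, 0.0), obstacles=[(5.0, 0.0, 1.5)],
           bounds=(-2.0, 12.0, -6.0, 6.0), seed=1)
print(len(path) if path else "no path found")
```

Uniform sampling over the whole region is what makes the basic planner spend many samples in irrelevant areas, and the returned path is a chain of straight edges with abrupt heading changes at each node.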

3.2. Overview of the DV-RRT Algorithm

Algorithm 1. DV-RRT Algorithm

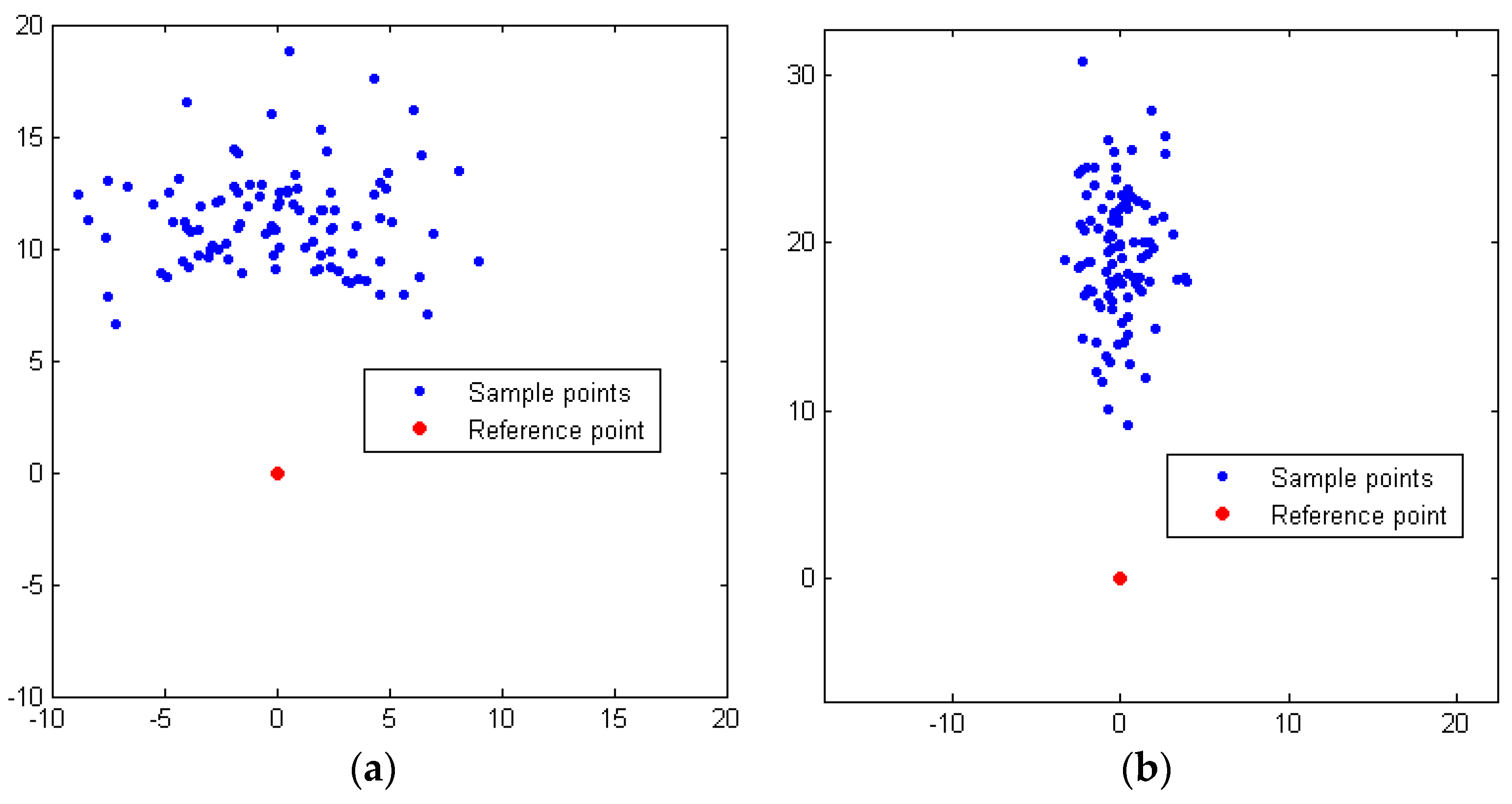

3.3. Effective Hybrid Sampling Strategies

3.4. Reasonable Metric Function

3.5. B-Spline-Based Post-Processing Method

Algorithm 2. Post-Processing Method
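The details of Algorithm 2 are not reproduced here. As a sketch of B-spline post-processing in general, under the assumption that the RRT waypoints serve as control points, the code below samples a uniform cubic B-spline. A cubic B-spline is C² continuous, so its curvature varies continuously wherever the parametric speed is nonzero, which targets the curvature-continuity issue raised in the Introduction. The clamping-by-repetition trick, the function name, and the example waypoints are our own choices.

```python
def cubic_bspline(waypoints, samples_per_seg=10):
    """Sample a uniform cubic B-spline whose control points are the waypoints.

    The first and last waypoints are repeated three times in total so the
    curve starts and ends exactly on them (a common clamping trick).
    """
    pts = [waypoints[0]] * 2 + list(waypoints) + [waypoints[-1]] * 2
    out = []
    for i in range(len(pts) - 3):
        p0, p1, p2, p3 = pts[i:i + 4]
        for k in range(samples_per_seg):
            t = k / samples_per_seg
            # Uniform cubic B-spline basis functions; nonnegative, sum to 1.
            b0 = (1 - t) ** 3 / 6
            b1 = (3 * t**3 - 6 * t**2 + 4) / 6
            b2 = (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6
            b3 = t**3 / 6
            out.append(tuple(b0 * u0 + b1 * u1 + b2 * u2 + b3 * u3
                             for u0, u1, u2, u3 in zip(p0, p1, p2, p3)))
    out.append(tuple(float(c) for c in pts[-1]))  # t = 1 on the last segment
    return out


# Hypothetical RRT output with two sharp 90-degree corners.
raw = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (20.0, 10.0)]
smooth = cubic_bspline(raw)
print(len(smooth), smooth[0], smooth[-1])  # 51 (0.0, 0.0) (20.0, 10.0)
```

Because the spline approximates rather than interpolates the interior waypoints, the smoothed path cuts corners; a practical post-processor would re-check it against the drivability map before use.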

4. Experimental Results and Discussion

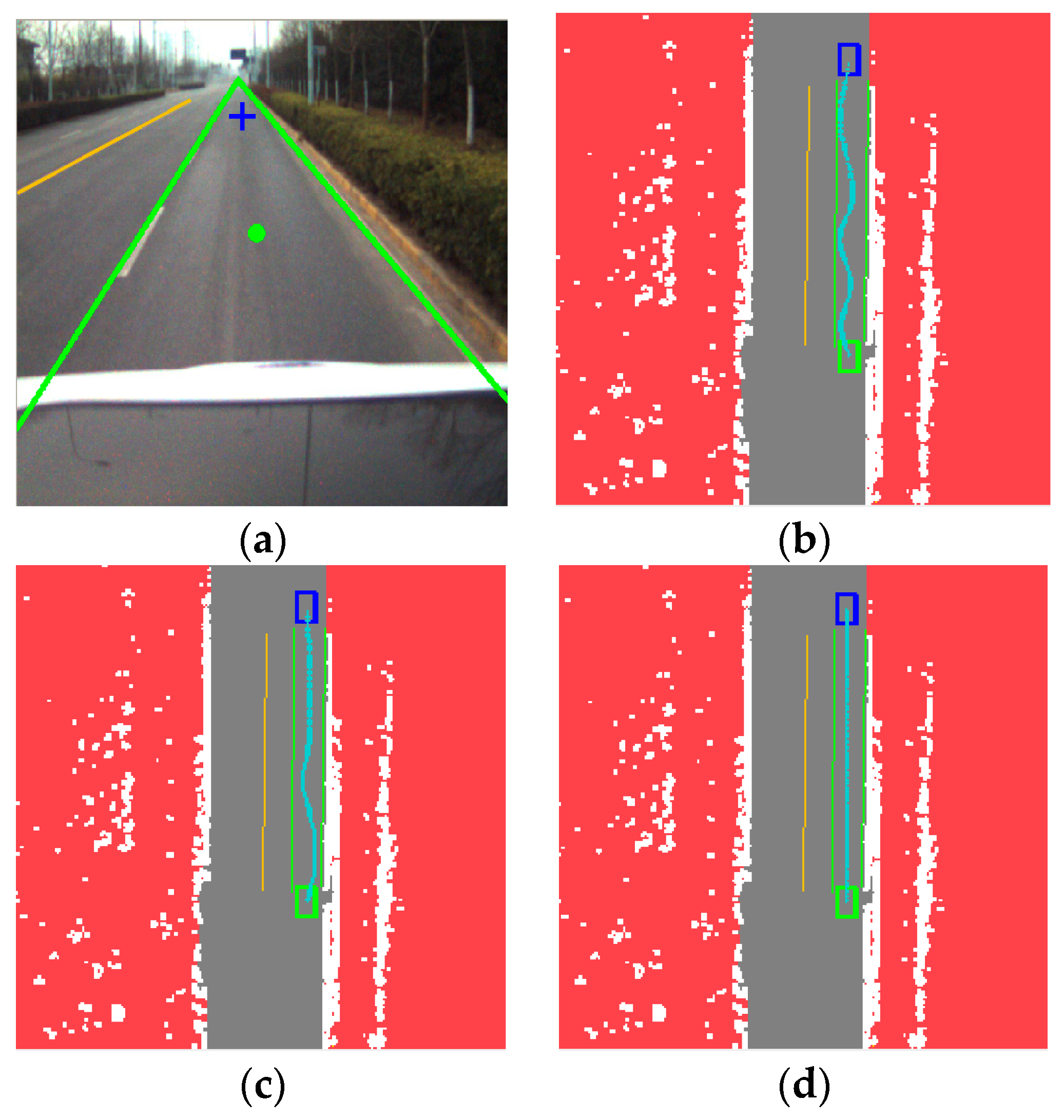

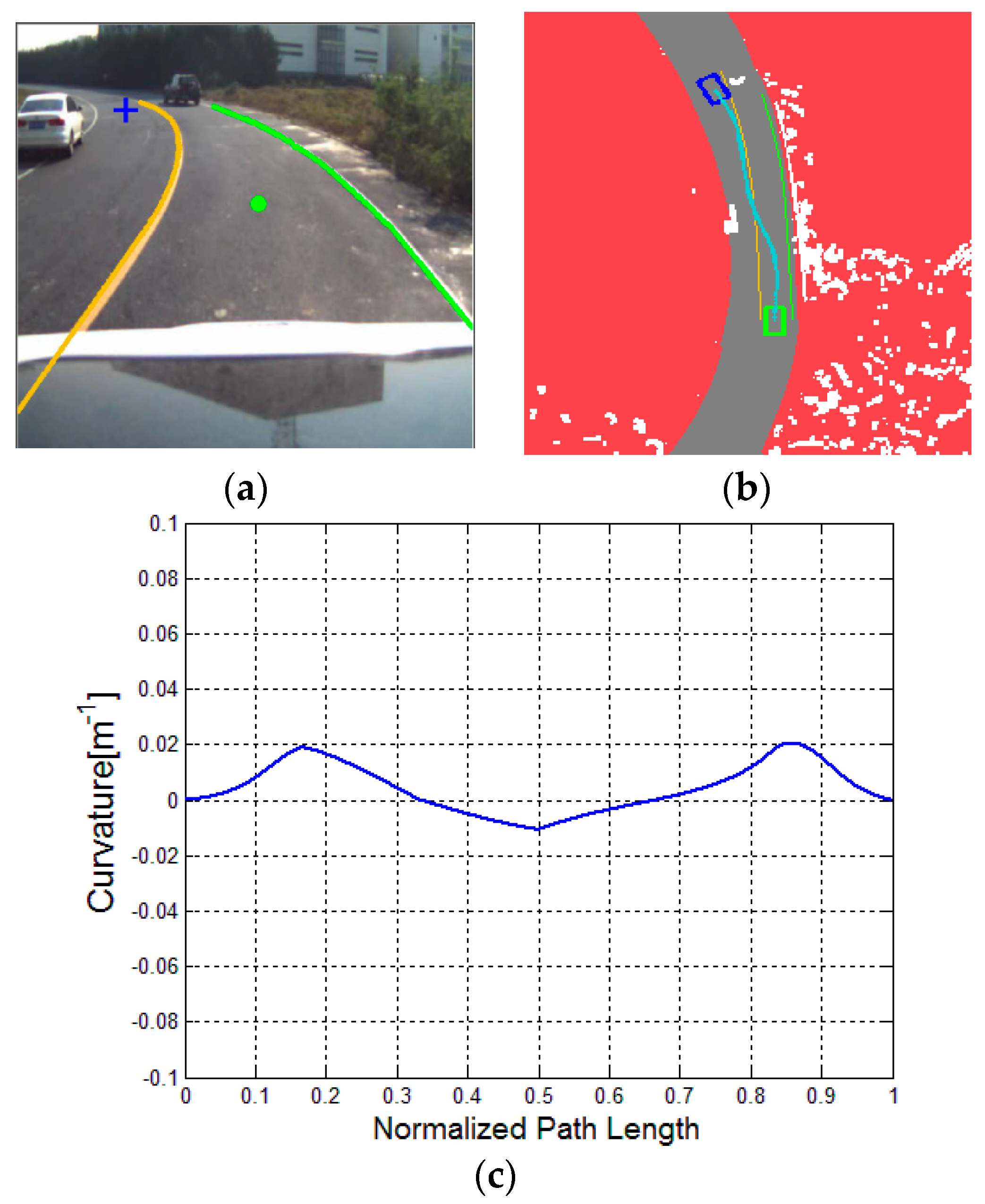

4.1. Drivability Grid Map

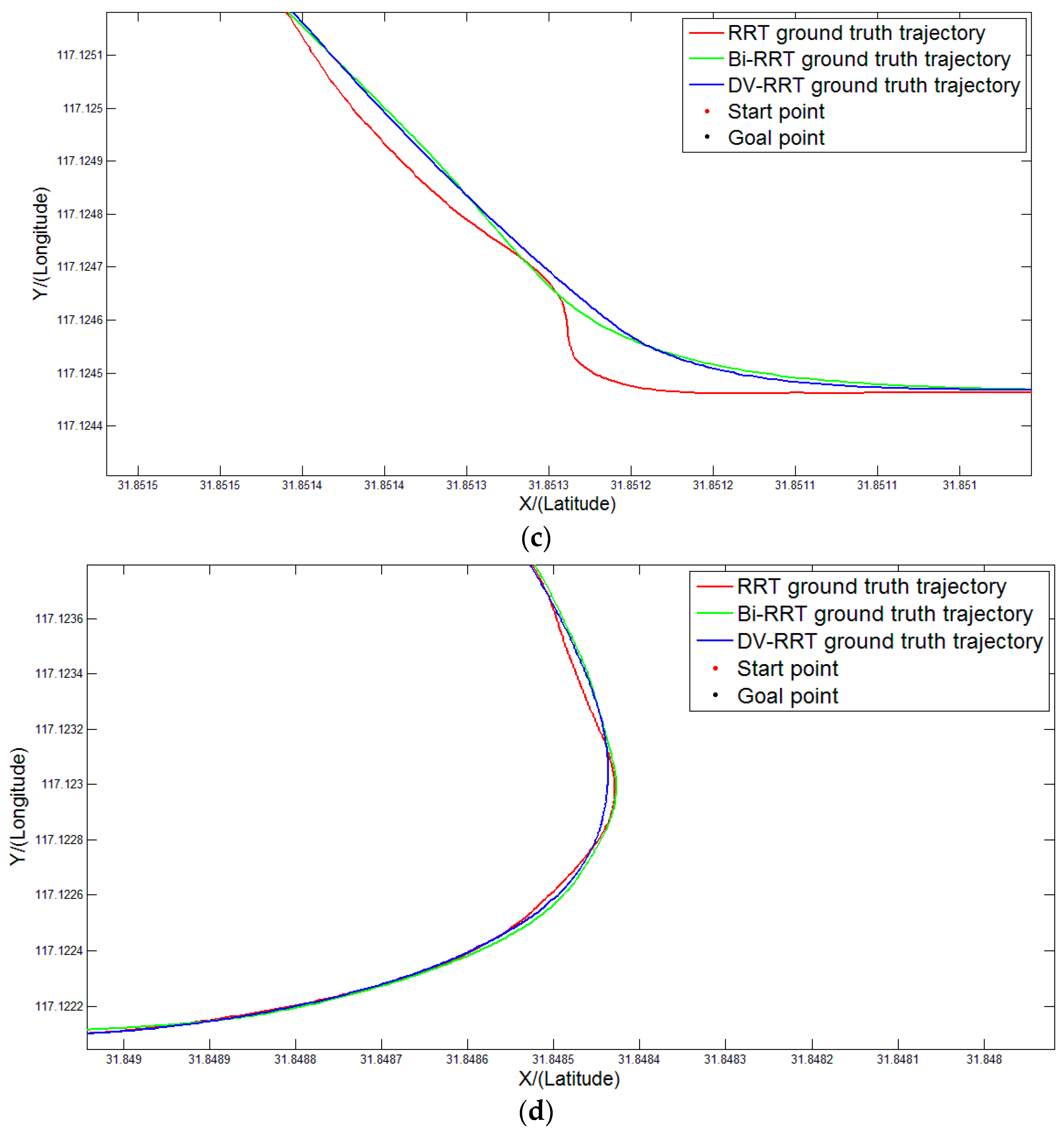

4.2. Comparative Test and Analysis

| Scenarios | Indicators | Basic RRT | Bi-RRT | DV-RRT |
|---|---|---|---|---|
| Scenario 1: Straight road | Average number of samples | 351.4 | 180.5 | 89.2 |
| | Average number of nodes | 163.6 | 89.7 | 39.7 |
| | Average running time (ms) | 210.5 | 100.4 | 43.3 |
| | Maximum curvature (m⁻¹) | 0.086 | 0.044 | 0 |
| | Average path length (m) | 43.76 | 41.81 | 40.10 |
| Scenario 2: Curved road | Average number of samples | 481.7 | 250.4 | 110.6 |
| | Average number of nodes | 249.5 | 136.2 | 50.7 |
| | Average running time (ms) | 264.2 | 160.4 | 53.1 |
| | Maximum curvature (m⁻¹) | 0.105 | 0.066 | 0.009 |
| | Average path length (m) | 33.45 | 32.61 | 31.53 |
| Scenario 3: With a parked car on the straight road | Average number of samples | 410.3 | 203.4 | 130.2 |
| | Average number of nodes | 191.6 | 124.7 | 64.4 |
| | Average running time (ms) | 211.2 | 143.5 | 66.7 |
| | Maximum curvature (m⁻¹) | 0.092 | 0.078 | 0.015 |
| | Average path length (m) | 43.57 | 41.63 | 39.22 |
| Scenario 4: With two parked cars on the curved road | Average number of samples | 551.8 | 326.1 | 198.5 |
| | Average number of nodes | 302.4 | 172.8 | 83.6 |
| | Average running time (ms) | 330.7 | 190.2 | 86.2 |
| | Maximum curvature (m⁻¹) | 0.112 | 0.093 | 0.02 |
| | Average path length (m) | 39.01 | 37.82 | 36.15 |
| Scenario 5: With a lead (dynamic) car on the curved road | Average number of samples | 504.1 | 297.3 | 162.4 |
| | Average number of nodes | 276.5 | 158.6 | 71.9 |
| | Average running time (ms) | 290.3 | 173.7 | 73.4 |
| | Maximum curvature (m⁻¹) | 0.097 | 0.084 | 0.008 |
| | Average path length (m) | 36.58 | 35.13 | 33.71 |
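The running-time gains in the comparison table can be expressed as relative reductions. The short script below only recomputes the table's own numbers; it reports by what percentage DV-RRT's average running time is lower than each baseline in every scenario. These percentages are derived arithmetic, not figures reported by the authors.

```python
# Average running time (ms) per scenario, copied from the comparison table.
RUNNING_TIME_MS = {
    "Scenario 1": {"Basic RRT": 210.5, "Bi-RRT": 100.4, "DV-RRT": 43.3},
    "Scenario 2": {"Basic RRT": 264.2, "Bi-RRT": 160.4, "DV-RRT": 53.1},
    "Scenario 3": {"Basic RRT": 211.2, "Bi-RRT": 143.5, "DV-RRT": 66.7},
    "Scenario 4": {"Basic RRT": 330.7, "Bi-RRT": 190.2, "DV-RRT": 86.2},
    "Scenario 5": {"Basic RRT": 290.3, "Bi-RRT": 173.7, "DV-RRT": 73.4},
}


def reduction_pct(baseline: float, candidate: float) -> float:
    """Percentage by which `candidate` is lower than `baseline`."""
    return 100.0 * (baseline - candidate) / baseline


results = {}
for scenario, t in RUNNING_TIME_MS.items():
    results[scenario] = {
        "vs Basic RRT": reduction_pct(t["Basic RRT"], t["DV-RRT"]),
        "vs Bi-RRT": reduction_pct(t["Bi-RRT"], t["DV-RRT"]),
    }
    print(f"{scenario}: {results[scenario]['vs Basic RRT']:.1f}% lower than Basic RRT, "
          f"{results[scenario]['vs Bi-RRT']:.1f}% lower than Bi-RRT")
```

By this arithmetic, DV-RRT's average running time is about 68% to 80% lower than that of Basic RRT and about 53% to 67% lower than that of Bi-RRT across the five scenarios.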

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Ferguson, D.; Howard, T.M.; Likhachev, M. Motion planning in urban environments. J. Field Robot. 2008, 25, 939–960.

- Kuwata, Y.; Teo, J.; Fiore, G.; Karaman, S.; Frazzoli, E.; How, J. Real-time motion planning with applications to autonomous urban driving. IEEE Trans. Control Syst. Technol. 2009, 17, 1105–1118.

- Kuwata, Y.; Teo, J.; Fiore, G.; Karaman, S.; Frazzoli, E.; How, J. Motion planning in complex environments using closed-loop prediction. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Honolulu, HI, USA, 18–21 August 2008; pp. 1–22.

- Chu, K.; Lee, M.; Sunwoo, M. Local path planning for off-road autonomous driving with avoidance of static obstacles. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1599–1616.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer-Verlag: New York, NY, USA, 2010.

- Özgüner, Ü.; Stiller, C.; Redmill, K. Systems for safety and autonomous behavior in cars: The DARPA grand challenge experience. IEEE Proc. 2007, 95, 397–412.

- Chen, J.; Zhao, P.; Liang, H.; Mei, T. Motion Planning for Autonomous Vehicle Based on Radial Basis Function Neural Network in Unstructured Environment. Sensors 2014, 14, 17548–17566.

- Du, M.; Chen, J.; Zhao, P.; Liang, H.; Xin, Y.; Mei, T. An Improved RRT-based Motion Planner for Autonomous Vehicle in Cluttered Environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 4674–4679.

- LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006.

- Dijkstra, E.W. A note on two problems in connection with graphs. Numer. Math. 1959, 1, 269–271.

- Koren, Y.; Borenstein, J. Potential field methods and their inherent limitations for mobile robot navigation. In Proceedings of the IEEE Conference on Robotics and Automation, Sacramento, CA, USA, 9–11 April 1991; pp. 1398–1404.

- Likhachev, M.; Ferguson, D.; Gordon, G.; Thrun, S.; Stentz, A. Anytime dynamic A*: An anytime, replanning algorithm. In Proceedings of the International Conference on Automated Planning and Scheduling, Monterey, CA, USA, 5–10 June 2005; pp. 262–271.

- Katrakazas, C.; Quddus, M.; Chen, W.H.; Deka, L. Real-time motion planning methods for autonomous on-road driving: State-of-the-art and future research directions. Transp. Res. C Emerg. Technol. 2015, 60, 416–442.

- Gu, T.Y.; Dolan, J.M. On-road motion planning for autonomous vehicles. In Intelligent Robotics and Applications; Springer: Berlin, Germany, 2012; pp. 588–597.

- Liang, M.; Yang, J.; Zhang, M. A two-level path planning method for on-road autonomous driving. In Proceedings of the IEEE 2012 2nd International Conference on Intelligent System Design and Engineering Application (ISDEA), Sanya, China, 6–7 January 2012; pp. 661–664.

- Pivtoraiko, M.; Kelly, A. Fast and feasible deliberative motion planner for dynamic environments. In Proceedings of the International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 1–7.

- Fernández, C.; Domínguez, R.; Fernández-Llorca, D.; Alonso, J.; Sotelo, M. Autonomous Navigation and Obstacle Avoidance of a Micro-bus. Int. J. Adv. Robot. Syst. 2013.

- Ferguson, D.; Stentz, A. The Field D* Algorithm for Improved Path Planning and Replanning in Uniform and Nonuniform Cost Environments; Technical Report; Robotics Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2005.

- Kuffner, J.; LaValle, S. RRT-connect: An efficient approach to single-query path planning. In Proceedings of the IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000; pp. 995–1001.

- Jaillet, L.; Hoffman, J.; van den Berg, J.; Abbeel, P.; Porta, J.; Goldberg, K. EG-RRT: Environment-guided random trees for kinodynamic motion planning with uncertainty and obstacles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 2646–2652.

- Melchior, N.A.; Simmons, R. Particle RRT for path planning with uncertainty. In Proceedings of the IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 1617–1624.

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894.

- Jeon, J.H.; Karaman, S.; Frazzoli, E. Anytime computation of time-optimal off-road vehicle maneuvers using the RRT*. In Proceedings of the IEEE 50th Conference on Decision and Control and European Control Conference, Orlando, FL, USA, 12–15 December 2011; pp. 3276–3282.

- Ma, L.; Xue, J.; Kawabata, K.; Zhu, J.; Ma, C.; Zheng, N. Efficient sampling-based motion planning for on-road autonomous driving. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1–16.

- Land, M.F.; Lee, D.N. Where we look when we steer. Nature 1994, 369, 742–744.

- Salvucci, D.D.; Gray, R. A two-point visual control model of steering. Perception 2004, 33, 1233–1248.

- Mars, F. Driving around bends with manipulated eye-steering coordination. J. Vis. 2008, 8, 1–11.

- Lappi, O. Future path and tangent point models in the visual control of locomotion in curve driving. J. Vis. 2014, 14.

- Sentouh, C.; Chevrel, P.; Mars, F.; et al. A sensorimotor driver model for steering control. In Proceedings of the 2009 IEEE International Conference on Systems, Man, and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; pp. 2462–2467.

- Cao, S.; Qin, Y.; Shen, M.W. Modeling the effect of driving experience on lane keeping performance using ACT-R cognitive architecture. Chin. Sci. Bull. 2013, 58, 2078–2086.

- Kandil, F.I.; Rotter, A.; Lappe, M. Driving is smoother and more stable when using the tangent point. J. Vis. 2009, 9, 1–11.

- Lehtonen, E.; Lappi, O.; Koirikivi, I.; Summala, H. Effect of driving experience on anticipatory look-ahead fixations in real curve driving. Accid. Anal. Prev. 2014, 70, 195–208.

- Minh, V.T.; Pumwa, J. Feasible Path Planning for Autonomous Vehicles. Math. Probl. Eng. 2014, 2014.

- Rushton, S.K.; Wen, J.; Allison, R.S. Egocentric direction and the visual guidance of robot locomotion: Background, theory and implementation. In Biologically Motivated Computer Vision; Springer: Berlin, Germany, 2002; pp. 576–591.

- Liu, J.; Liang, H.; Wang, Z.; Chen, X. A Framework for Applying Point Clouds Grabbed by Multi-Beam LIDAR in Perceiving the Driving Environment. Sensors 2015, 15, 21931–21956.

- Du, M.; Chen, J.; Mei, T.; Zhao, P.; Liang, H. RRT-based Motion Planning Algorithm for Intelligent Vehicle in Complex Environments. Robot 2015, 37, 443–450.

- Shkel, A.M.; Lumelsky, V. Classification of the Dubins set. Robot. Auton. Syst. 2001, 34, 179–202.

- Dubins, L.E. On curves of minimal length with a constraint on average curvature, and with prescribed initial and terminal positions and tangents. Am. J. Math. 1957, 79, 497–516.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Du, M.; Mei, T.; Liang, H.; Chen, J.; Huang, R.; Zhao, P. Drivers’ Visual Behavior-Guided RRT Motion Planner for Autonomous On-Road Driving. Sensors 2016, 16, 102. https://doi.org/10.3390/s16010102
