INS/GPS/LiDAR Integrated Navigation System for Urban and Indoor Environments Using Hybrid Scan Matching Algorithm

Abstract

1. Introduction

2. Literature Review

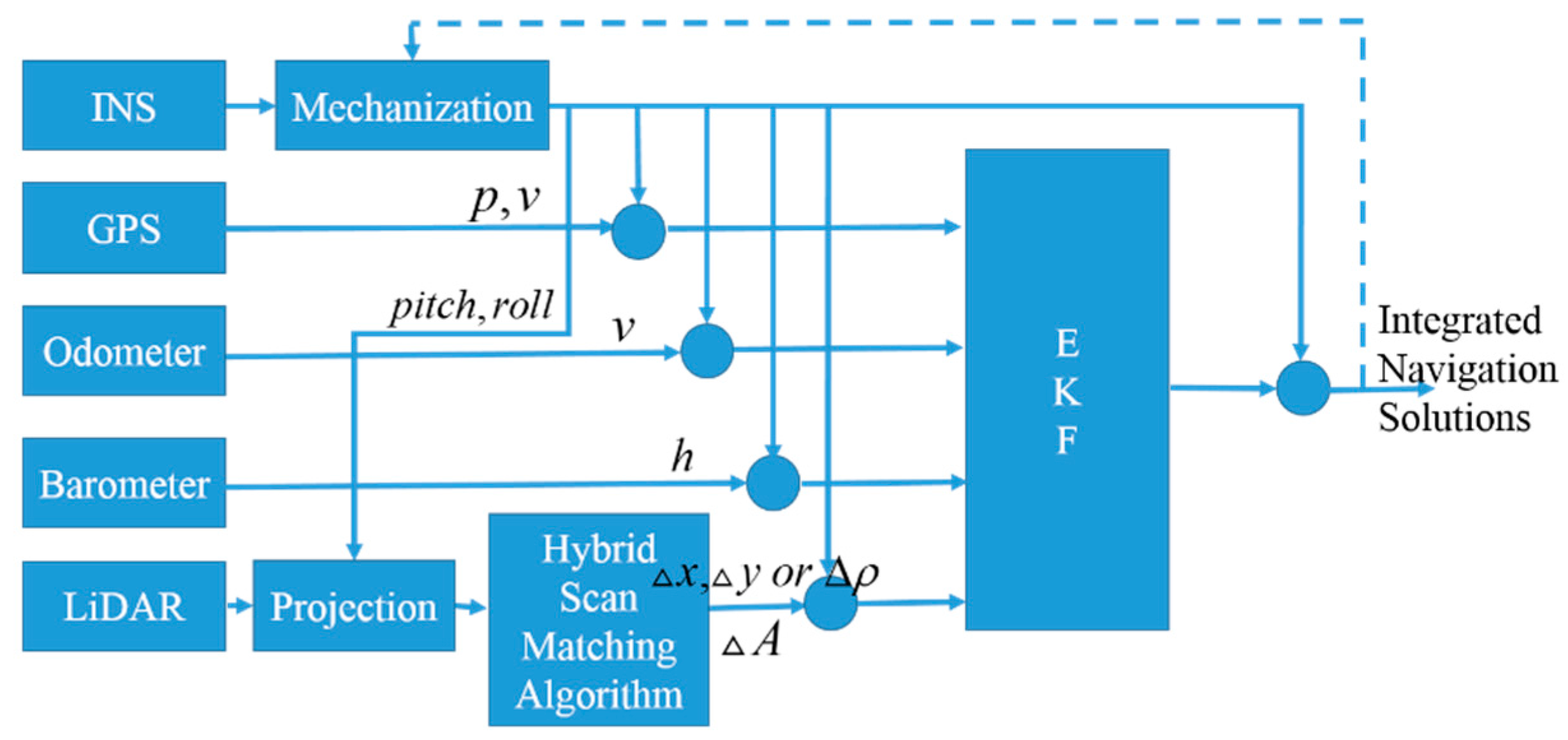

- GPS and LiDAR are used as aiding systems that alternately provide periodic corrections to the INS in different environments. A quaternion-based error model is used to fuse the multi-sensor information.

- An innovative hybrid scan matching algorithm is proposed that combines a feature-based scan matching method with an ICP-based scan matching method to exploit their complementary characteristics.

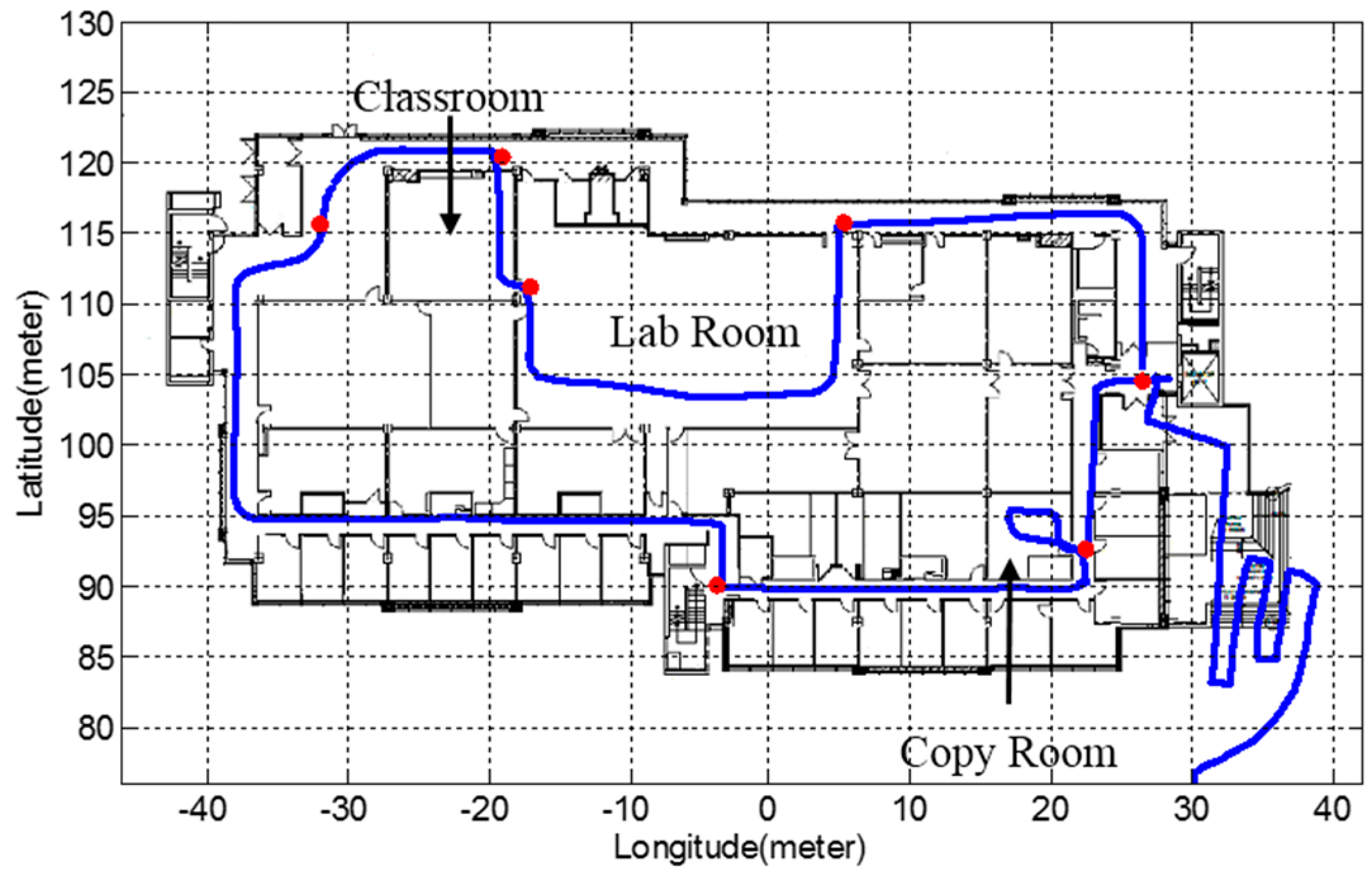

- Based on the proposed hybrid scan matching algorithm, both loosely coupled and tightly coupled INS/LiDAR integration schemes are implemented and compared using real experimental data.

3. Quaternion-Based INS Mechanization

| Frames | Definition |
|---|---|
| Body frame | Origin: Vehicle center of mass. Y: Longitudinal (forward) direction. X: Transversal (lateral) direction. Z: Up vertical direction. |
| Navigation frame | Origin: Vehicle center of mass. Y: True north direction. X: East direction. Z: Up direction. |
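As a minimal sketch of how the two frames in the table relate, the snippet below rotates a body-frame vector (X lateral, Y forward, Z up) into the navigation frame (X east, Y north, Z up) with a unit quaternion. The scalar-first ordering `[w, x, y, z]`, the right-handed rotation sense, and the function names `quat_from_axis_angle` and `rotate_body_to_nav` are assumptions made for illustration; they are not taken from the paper's mechanization equations.

```python
import numpy as np

def quat_from_axis_angle(axis, angle):
    """Unit quaternion [w, x, y, z] for a right-handed rotation of `angle` rad about `axis`."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    return np.concatenate([[np.cos(angle / 2.0)], np.sin(angle / 2.0) * axis])

def rotate_body_to_nav(q, v_body):
    """Rotate a body-frame vector into the navigation frame (computes q * v * q^-1)."""
    q = np.asarray(q, dtype=float)
    q = q / np.linalg.norm(q)          # guard against a slightly denormalized input
    w, u = q[0], q[1:]
    v = np.asarray(v_body, dtype=float)
    uv = np.cross(u, v)
    # Expanded form of the quaternion sandwich product for a unit quaternion
    return v + 2.0 * w * uv + 2.0 * np.cross(u, uv)
```

With the identity quaternion the forward axis (body Y) coincides with north (navigation Y). Under the assumed right-handed convention, a +90° rotation about the Up axis turns the forward axis toward west.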

3.1. Position Mechanization Equations

3.2. Velocity Mechanization Equations

3.3. Attitude Mechanization Equations

4. Hybrid Scan Matching Algorithm

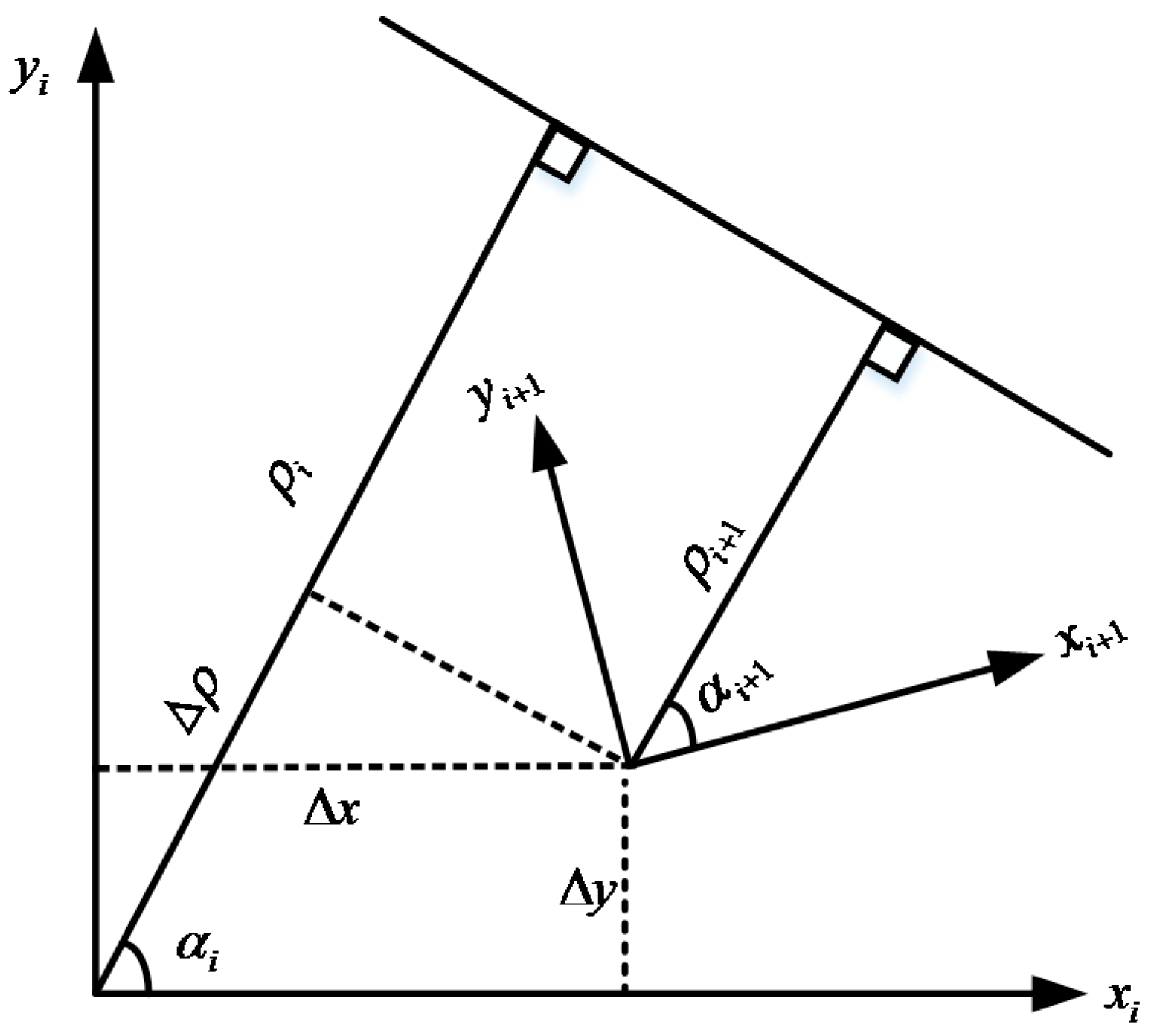

4.1. Feature-Based Scan Matching Method

4.2. Iterative Closest Point (ICP) Scan Matching Method

- (1) Inertial sensors provide the initial rotation and translation.

- (2) Transform the current scan using the current rotation and translation.

- (3) For each point in the transformed current scan, find its two closest corresponding points in the reference scan.

- (4) Minimize the sum of the squared distances from each point in the transformed current scan to the line segment containing its two closest corresponding points.

- (5) Check whether convergence is reached. If so, the algorithm proceeds to the next new scan. Otherwise, it returns to step (2), searches for new correspondences, and repeats the procedure.
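The five steps above can be sketched for 2D scans as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the names `rot2d` and `icp_point_to_line`, the brute-force neighbor search, and the small-angle linearized least-squares update used for step (4) are choices made here. The initial guess `theta0`, `t0` stands in for the rotation and translation supplied by the inertial sensors.

```python
import numpy as np

def rot2d(theta):
    """2D rotation matrix for angle theta (rad)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def icp_point_to_line(current, reference, theta0=0.0, t0=(0.0, 0.0),
                      max_iter=50, tol=1e-8):
    """Align `current` (N x 2) to `reference` (M x 2) with a point-to-line metric.

    Assumes the reference points are distinct. Returns (theta, t) such that
    current @ rot2d(theta).T + t lies close to the reference scan.
    """
    # Step 1: initial rotation and translation (e.g. from inertial sensors)
    theta, t = float(theta0), np.asarray(t0, dtype=float)
    for _ in range(max_iter):
        # Step 2: transform the current scan with the current estimate
        p = current @ rot2d(theta).T + t
        # Step 3: two closest reference points per transformed point (brute force)
        d2 = ((p[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
        idx = np.argsort(d2, axis=1)[:, :2]
        q1, q2 = reference[idx[:, 0]], reference[idx[:, 1]]
        # Unit normal of the line through each pair of closest points
        seg = q2 - q1
        n = np.stack([-seg[:, 1], seg[:, 0]], axis=1)
        n /= np.linalg.norm(n, axis=1, keepdims=True)
        # Step 4: minimize the sum of squared point-to-line distances,
        # linearized for a small incremental rotation dtheta and translation dt
        r = np.einsum("ij,ij->i", n, p - q1)
        p_perp = np.stack([-p[:, 1], p[:, 0]], axis=1)
        J = np.column_stack([np.einsum("ij,ij->i", n, p_perp), n])
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)
        dtheta, dt = delta[0], delta[1:]
        # Compose the increment with the current estimate
        theta = theta + dtheta
        t = rot2d(dtheta) @ t + dt
        # Step 5: stop when the update is negligible, else return to step 2
        if np.linalg.norm(delta) < tol:
            break
    return theta, t
```

A quick way to exercise the sketch is to build a reference scan from two perpendicular walls, apply the inverse of a known small transform to obtain the current scan, and check that `icp_point_to_line(current, reference)` recovers that transform. A single straight wall would leave the translation along the wall unobservable.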

4.3. Hybrid Scan Matching Algorithm

5. Filter Design

5.1. System Model

5.2. Measurement Model

5.2.1. GPS Measurements

5.2.2. LiDAR Measurements

5.2.3. Odometer and Barometer Measurements

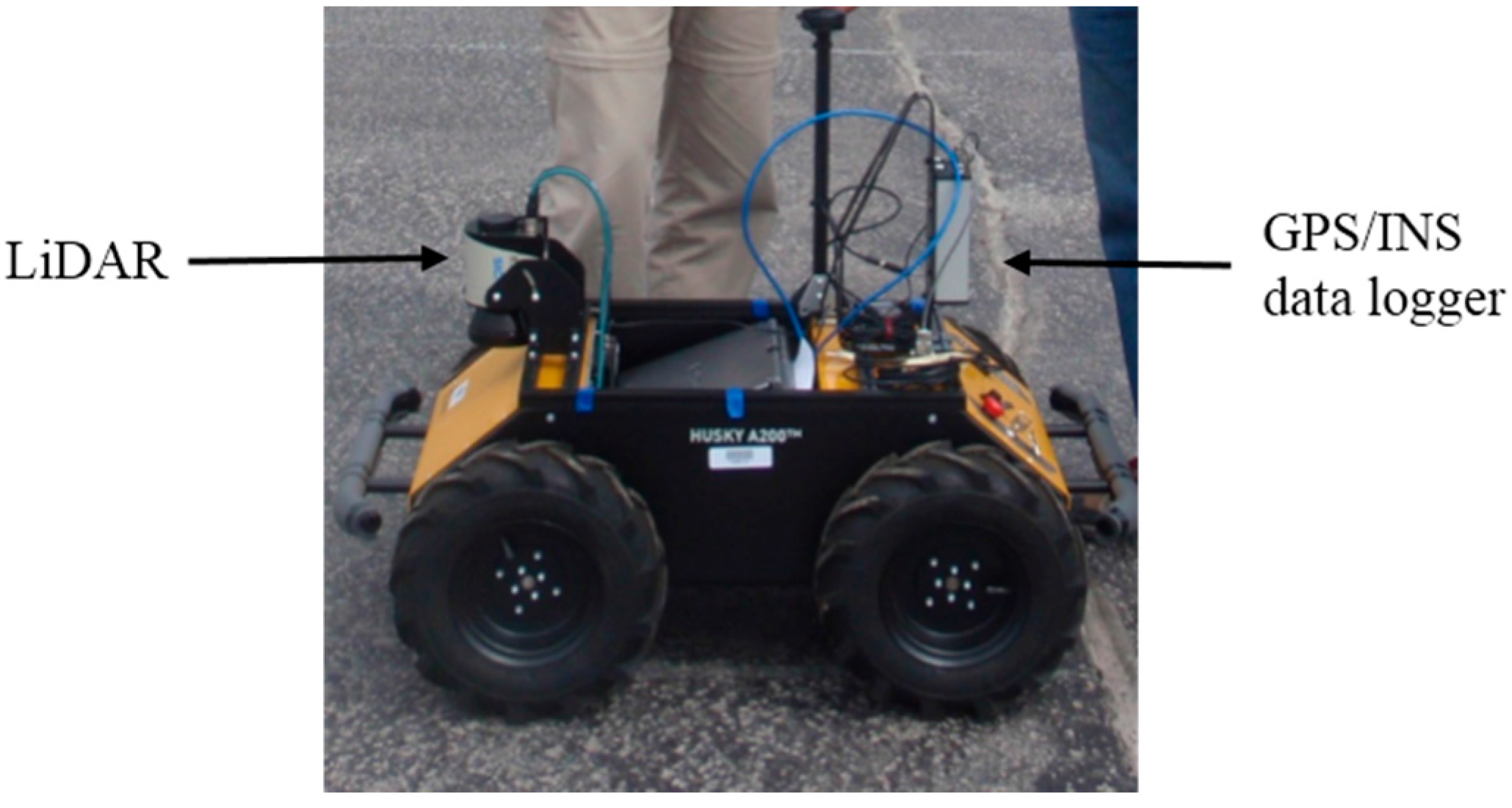

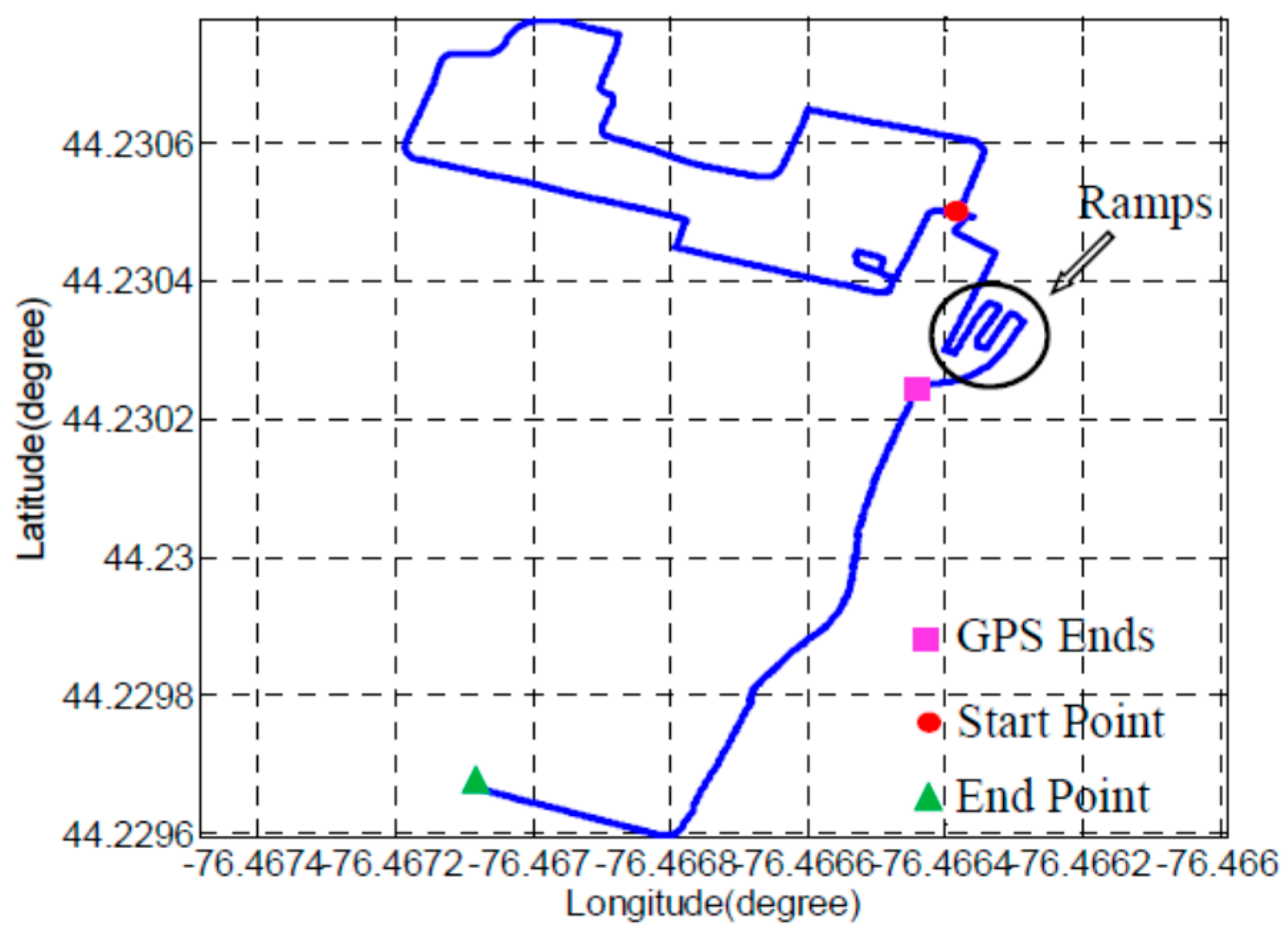

6. Experimental Results and Analysis

| Integration Schemes | Feature-Based Scan Matching Activated Times (Percentage) | ICP-Based Scan Matching Activated Times (Percentage): Outdoor | ICP-Based Scan Matching Activated Times (Percentage): Indoor |
|---|---|---|---|
| Loosely coupled system | 3392 (96.17%) | 105 (2.98%) | 30 (0.85%) |
| Tightly coupled system | 3521 (99.83%) | 6 (0.17%) | 0 |
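The percentages in the table are consistent with each count being divided by the row total, which comes to 3527 activations for both integration schemes. The short check below assumes the three count columns are, in order, feature-based, ICP outdoor, and ICP indoor; the dictionary keys and the helper name `activation_shares` are illustrative only.

```python
# Counts copied from the table; the column assignment is an assumption.
counts = {
    "Loosely coupled system": {"feature": 3392, "icp_outdoor": 105, "icp_indoor": 30},
    "Tightly coupled system": {"feature": 3521, "icp_outdoor": 6, "icp_indoor": 0},
}

def activation_shares(row):
    """Return each method's share of the row total, in percent, rounded to 2 decimals."""
    total = sum(row.values())
    return {name: round(100.0 * n / total, 2) for name, n in row.items()}

shares = {scheme: activation_shares(row) for scheme, row in counts.items()}
```

Read this way, the tightly coupled system falls back to ICP-based matching in 6 of 3527 activations, compared with 135 of 3527 for the loosely coupled system.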

| Localization Errors (m) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Average |
|---|---|---|---|---|---|---|---|---|
| INS | 1.43 | 4.66 | 10.96 | 8.73 | 6.22 | 6.48 | 13.53 | 7.43 |
| Loosely coupled system | 0.18 | 0.69 | 0.62 | 0.60 | 0.46 | 0.73 | 0.60 | 0.55 |
| Tightly coupled system | 0.12 | 0.45 | 0.27 | 0.63 | 0.32 | 0.51 | 0.80 | 0.44 |

7. Conclusions

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Gao, Y.; Liu, S.; Atia, M.M.; Noureldin, A. INS/GPS/LiDAR Integrated Navigation System for Urban and Indoor Environments Using Hybrid Scan Matching Algorithm. Sensors 2015, 15, 23286-23302. https://doi.org/10.3390/s150923286
