Fusion of Visible and Thermal Descriptors Using Genetic Algorithms for Face Recognition Systems

Abstract

1. Introduction

2. Visible-Thermal Methods

2.1. LDP Histograms

2.2. LBP Histograms

2.3. Histograms of Oriented Gradients

2.4. Weber Local Descriptor

2.5. Gabor Jet Descriptor

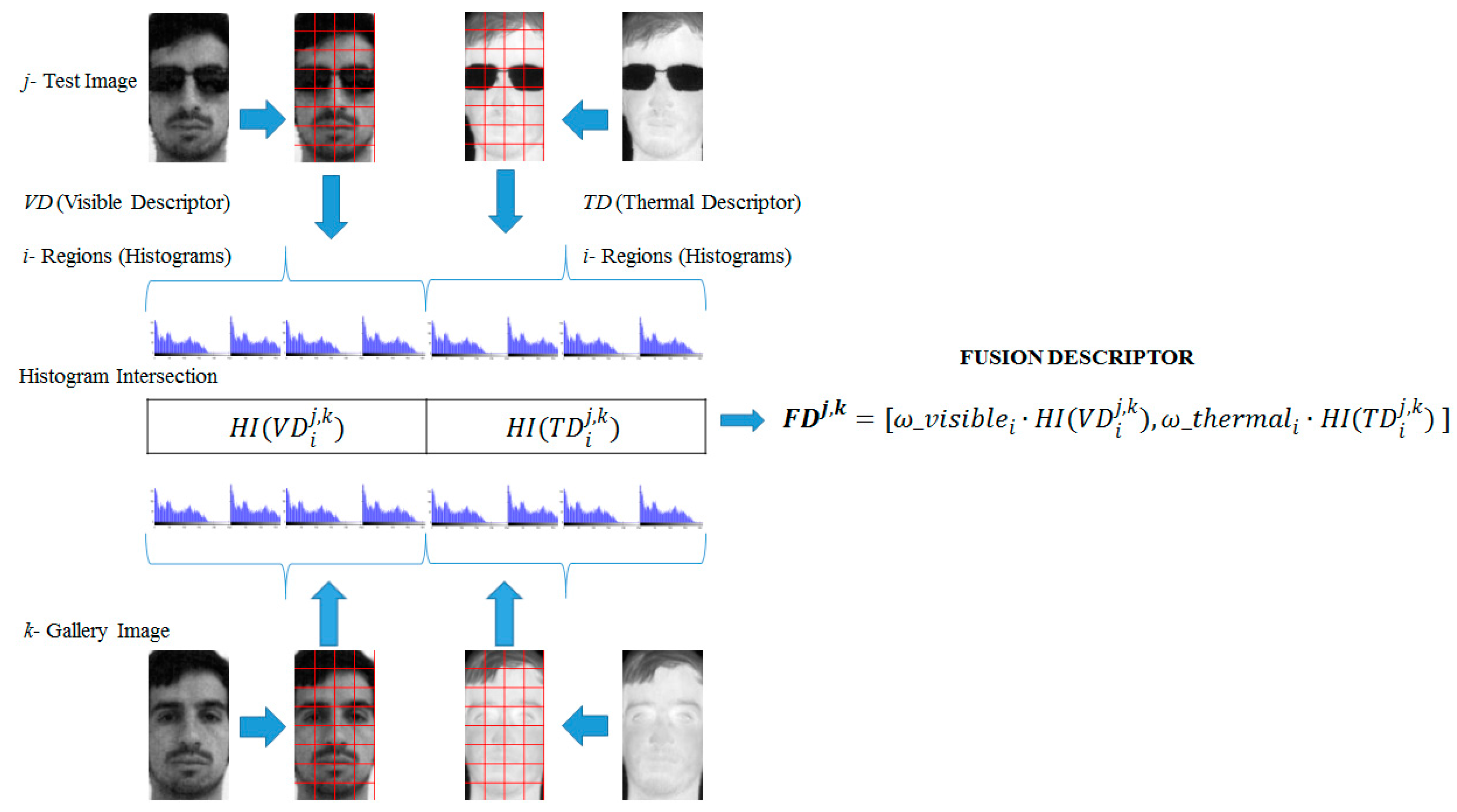

3. Proposed Method: Visible-Thermal Face Descriptor Fusion by Genetic Algorithms

3.1. Proposed Genetic Algorithm for Fused Descriptors

1. An initial population of 100 genetic codes was generated at random, each with 64 weights in the interval [0, 1]. In the initial population, the weights for the visible and thermal regions were complementary.

2. Each genetic code was applied to the descriptor database of gallery and test images using Equation 1, and the similarity value (SV) was then calculated.

3. Face recognition was performed by assigning each test image j the gallery match with the maximum SV, and the recognition rate was calculated from the correctly recognized faces. The fitness value of each genetic code is its recognition rate, which is the quantity being optimized.

4. Parents were selected from the population for crossover.

5. The parents were crossed with a probability of 25% at each point, producing two offspring (whose visible and thermal weights were no longer complementary).

6. Each offspring was mutated at three random points with a probability of 25%.

7. The fitness values of both offspring were calculated as for the initial population (Steps 2–3).

8. If the fitness value of an offspring was greater than the lowest fitness value in the population, it replaced a randomly chosen genetic code; otherwise, it was discarded.

9. If the iteration number was under 100,000, the procedure was repeated from Step 4.

10. End.
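The steps listed in this section can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. The split of the 64 weights into 32 visible and 32 thermal weights, the weighted-sum fusion rule standing in for Equation 1, the uniform random parent selection, and the synthetic similarity data are all assumptions made for illustration. The names `fitness`, `init_population`, `crossover`, `mutate` and `evolve` are hypothetical.

```python
import numpy as np

N_WEIGHTS = 64         # from the text: 64 weights per genetic code
HALF = N_WEIGHTS // 2  # ASSUMPTION: first half visible, second half thermal


def fitness(code, sim_vis, sim_th, true_ids):
    """Recognition rate for one genetic code (stand-in for Steps 2-3)."""
    w_vis, w_th = code[:HALF], code[HALF:]
    # ASSUMPTION: fusion is a weighted sum of per-region similarities.
    sv = sim_vis @ w_vis + sim_th @ w_th   # shape (n_test, n_gallery)
    predicted = sv.argmax(axis=1)          # gallery entry with maximum SV
    return float(np.mean(predicted == true_ids))


def init_population(rng, size=100):
    """Random codes whose visible and thermal weights are complementary."""
    vis = rng.random((size, HALF))
    return np.hstack([vis, 1.0 - vis])


def crossover(rng, a, b, p=0.25):
    """Swap each gene between the parents with probability p."""
    swap = rng.random(a.size) < p
    c1, c2 = a.copy(), b.copy()
    c1[swap], c2[swap] = b[swap], a[swap]
    return c1, c2


def mutate(rng, child, p=0.25, n_points=3):
    """With probability p, redraw n_points randomly chosen weights."""
    child = child.copy()
    if rng.random() < p:
        idx = rng.choice(child.size, size=n_points, replace=False)
        child[idx] = rng.random(n_points)
    return child


def evolve(rng, sim_vis, sim_th, true_ids, pop_size=100, iterations=300):
    pop = init_population(rng, pop_size)
    fit = np.array([fitness(c, sim_vis, sim_th, true_ids) for c in pop])
    for _ in range(iterations):
        # ASSUMPTION: parents are drawn uniformly at random.
        i, j = rng.choice(pop_size, size=2, replace=False)
        for child in crossover(rng, pop[i], pop[j]):
            child = mutate(rng, child)
            f = fitness(child, sim_vis, sim_th, true_ids)
            if f > fit.min():
                k = rng.integers(pop_size)  # a random code is replaced
                pop[k], fit[k] = child, f
            # otherwise the offspring is discarded
    best = int(fit.argmax())
    return pop[best], float(fit[best])


# Synthetic per-region similarities, for illustration only.
rng = np.random.default_rng(0)
n_test, n_gallery = 40, 10
true_ids = rng.integers(n_gallery, size=n_test)
sim_vis = rng.random((n_test, n_gallery, HALF))
sim_th = rng.random((n_test, n_gallery, HALF))
sim_th[np.arange(n_test), true_ids, :] += 0.5  # make true matches stand out

best_code, best_fit = evolve(rng, sim_vis, sim_th, true_ids)
print(f"best recognition rate on toy data: {best_fit:.3f}")
```

The sketch runs far fewer iterations than the 100,000 reported in the text so that it finishes quickly. Because a randomly chosen code is replaced, as the text describes, the best individual is not guaranteed to survive between iterations.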

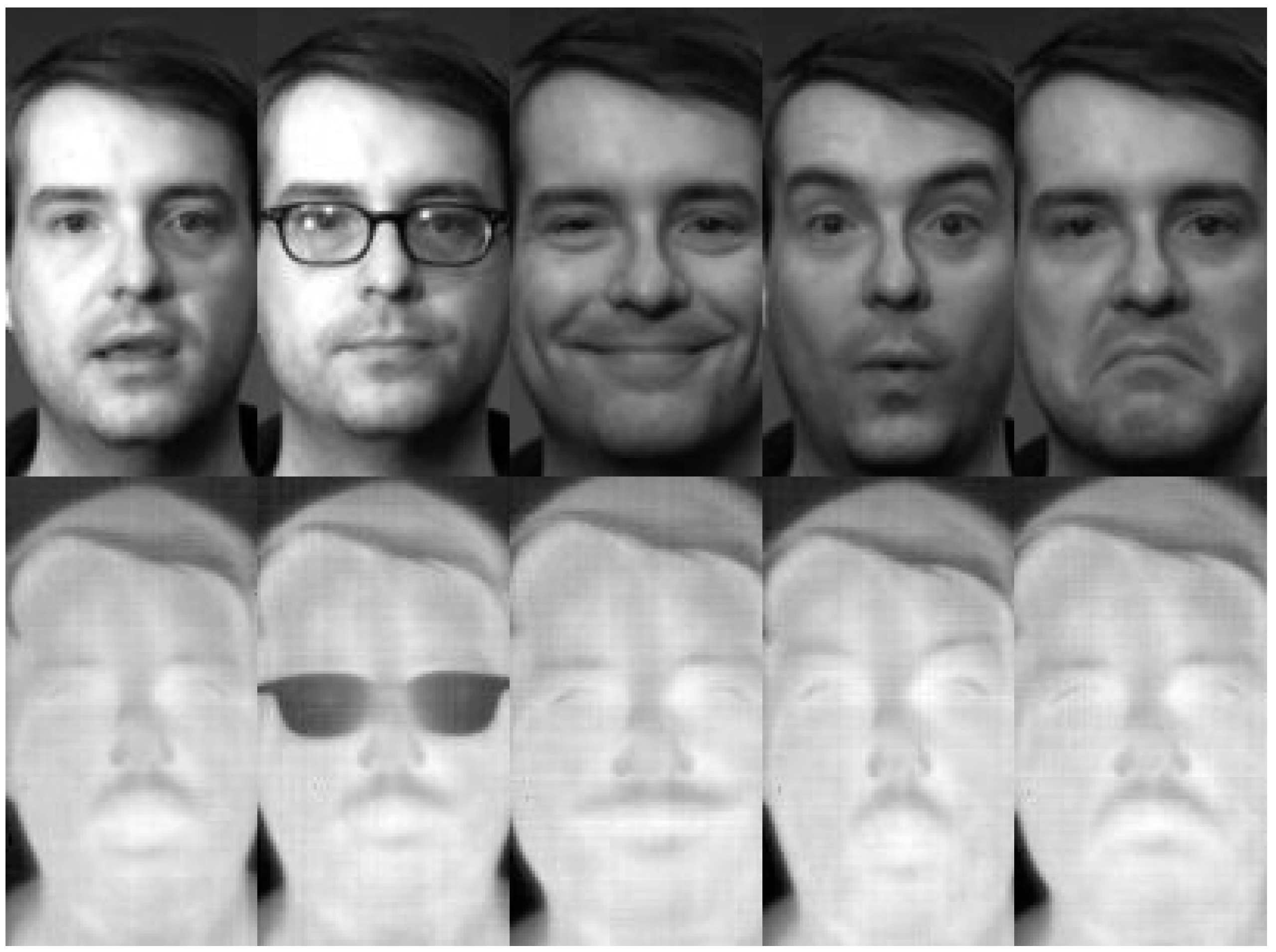

4. Visible and Thermal Databases

4.1. Equinox Database Description

| Set | Description | Subjects | Illumination | Number of Images |
|---|---|---|---|---|
| VA | Vowel frames | All subjects | All illuminations | 729 images |
| EA | Expression frames | All subjects | All illuminations | 729 images |
| VF | Vowel frames | All subjects | Frontal illumination | 243 images |
| EF | Expression frames | All subjects | Frontal illumination | 243 images |
| VL | Vowel frames | All subjects | Lateral illumination | 486 images |
| EL | Expression frames | All subjects | Lateral illumination | 486 images |
| VG | Vowel frames | Subjects using glasses | All illuminations | 324 images |
| EG | Expression frames | Subjects using glasses | All illuminations | 324 images |
| RR | Random 500 frames | Chosen at random | All illuminations | 500 images |

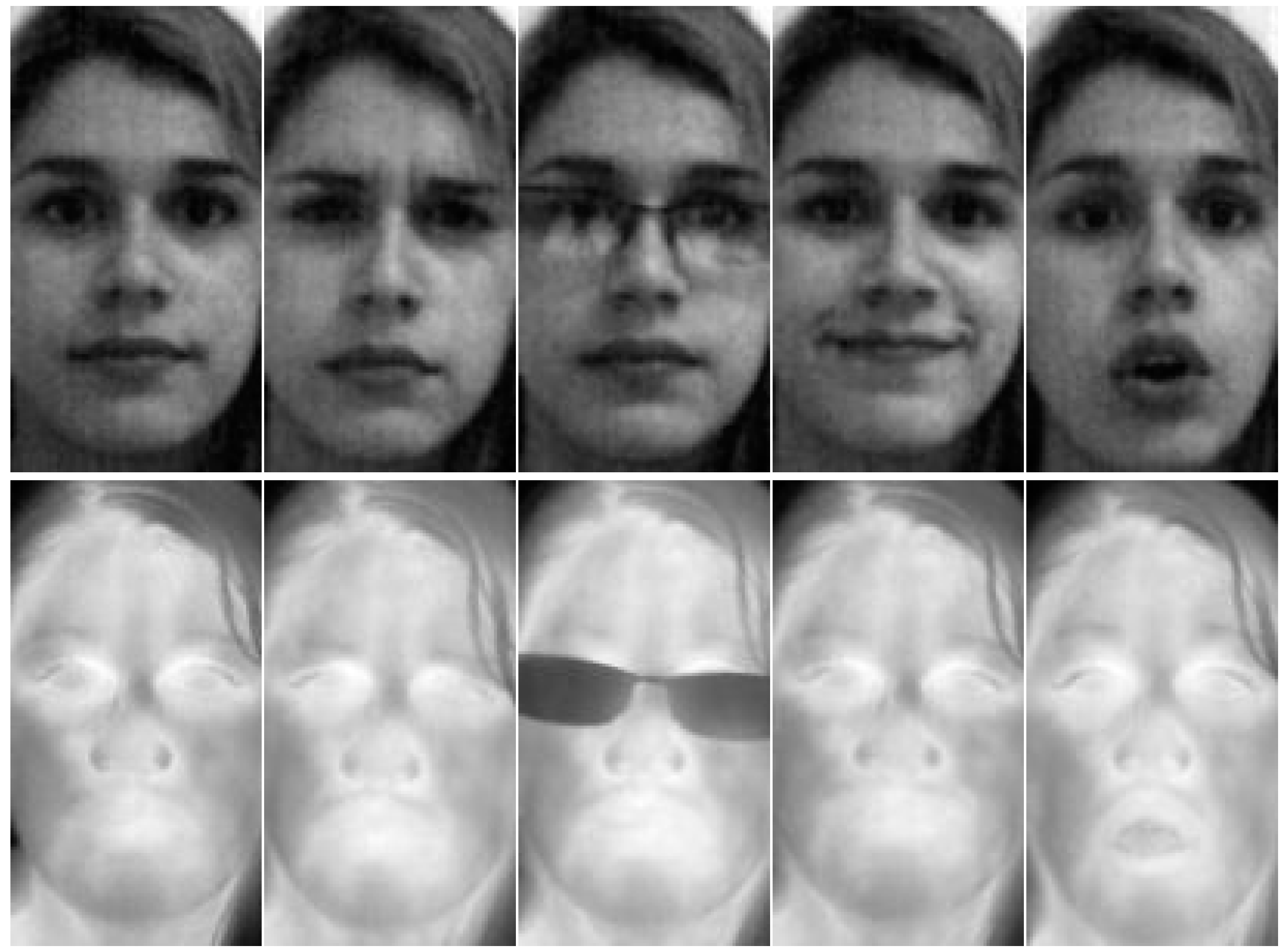

4.2. PUCV-Visible Thermal-Face Database Description

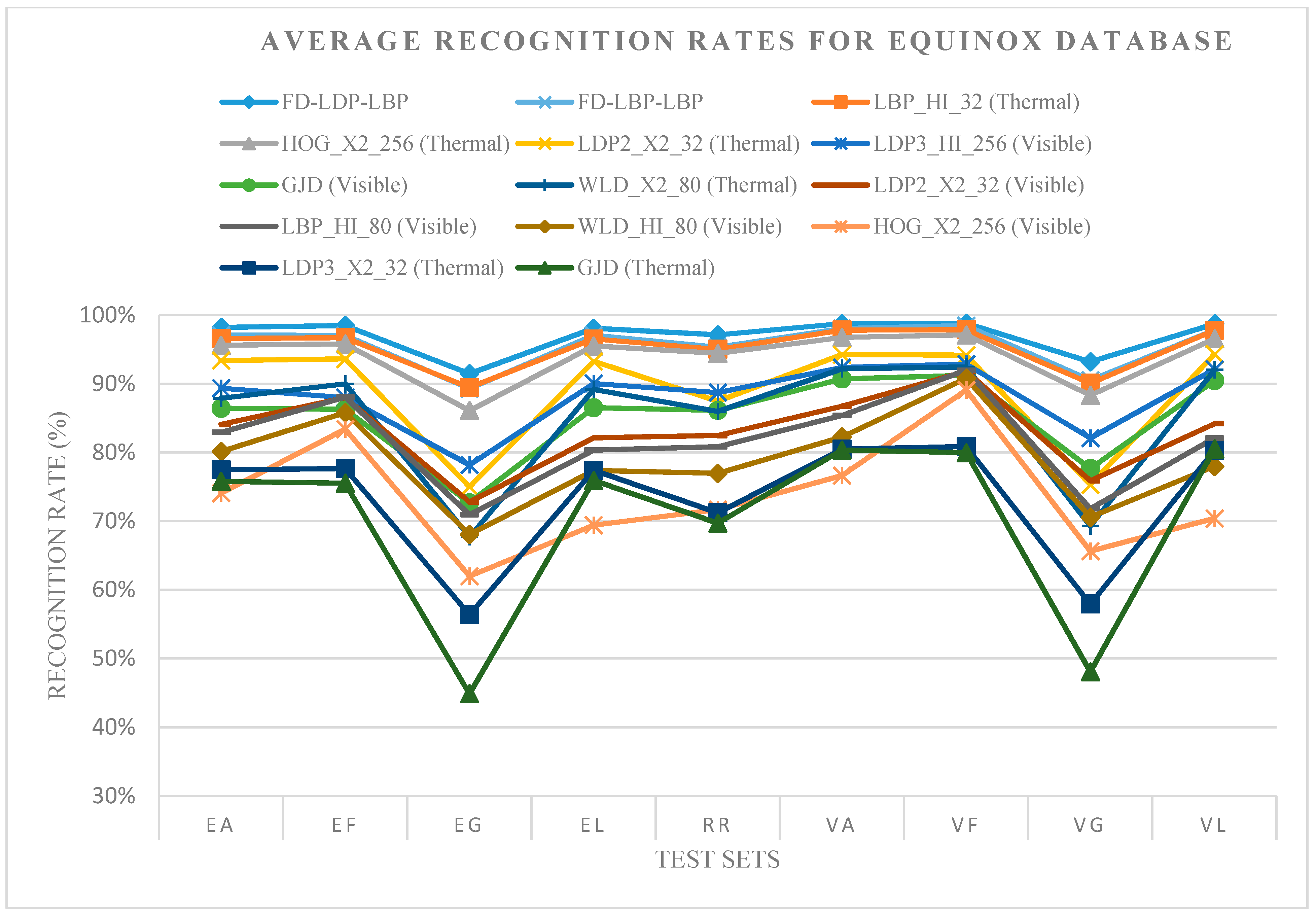

5. Experiments

5.1. Experiment 1: Optimal Population in the Equinox Database

| Method | Visible (%) | Thermal (%) | Fused (%) |
|---|---|---|---|
| LBP_HI_80 | 81.60 | 93.89 | |
| LBP_HI_32 | 80.11 | 95.32 | |
| LDP2_X2_32 | 83.12 | 88.98 | |
| LDP3_HI_256 | 88.20 | 61.10 | |
| LDP3_X2_32 | 85.22 | 73.30 | |
| HOG_X2_256 | 73.59 | 94.03 | |
| WLD_HI_80 | 78.89 | 84.86 | |
| WLD_X2_80 | 38.05 | 85.19 | |
| GJD | 85.35 | 70.07 | |
| FD-LDP-LBP | | | 96.99 |
| FD-LBP-LBP | | | 95.64 |
| Wavelets [18] | | | 93.50 |
| PCA [18] | | | 92.90 |
| KPCA [19] | | | 82.70 |
| KFLD [19] | | | 96.30 |
| Wavelets [20] | | | 96.10 |

5.2. Experiment 2: Fusion Method Validation Using the PUCV-VTF Database

| Variant | Visible (%) | Thermal (%) | Fused (%) |
|---|---|---|---|
| LBP_HI_80 | 91.45 | 98.68 | |
| LBP_X2_32 | 96.71 | 98.03 | |
| LDP2_HI_32 | 94.08 | 91.78 | |
| LDP2_HI_256 | 91.12 | 93.09 | |
| LDP3_X2_32 | 78.95 | 83.22 | |
| LDP3_EU_256 | 74.01 | 86.51 | |
| HOG_EU_256 | 93.09 | 97.04 | |
| HOG_X2_256 | 92.76 | 97.70 | |
| WLD_HI_80 | 90.46 | 97.70 | |
| GJD | 80.26 | 92.76 | |
| FD-LDP-LBP | | | 98.68 |
| FD-LBP-LBP | | | 99.01 |

| Variant | Frown (%) | Glasses (%) | Smile (%) | Vowels (%) | Average (%) |
|---|---|---|---|---|---|
| LBP_X2_32 (Visible) | 96.05 | 96.05 | 98.68 | 96.05 | 96.71 |
| LBP_HI_80 (Thermal) | 96.05 | 98.68 | 100.00 | 100.00 | 98.68 |
| LDP2_HI_32 (Visible) | 94.74 | 93.42 | 94.74 | 93.42 | 94.08 |
| LDP2_HI_256 (Thermal) | 89.47 | 92.11 | 97.37 | 93.42 | 93.09 |
| LDP3_X2_32 (Visible) | 65.79 | 77.63 | 86.84 | 85.53 | 78.95 |
| LDP3_EU_256 (Thermal) | 82.89 | 78.95 | 98.68 | 85.53 | 86.51 |
| HOG_EU_256 (Visible) | 88.16 | 92.11 | 96.05 | 96.05 | 93.09 |
| HOG_X2_256 (Thermal) | 96.05 | 97.37 | 98.68 | 98.68 | 97.70 |
| WLD_HI_80 (Visible) | 85.53 | 85.53 | 97.37 | 93.42 | 90.46 |
| WLD_HI_80 (Thermal) | 96.05 | 97.37 | 100.00 | 97.37 | 97.70 |
| GJD (Visible) | 60.53 | 69.74 | 97.37 | 93.42 | 80.26 |
| GJD (Thermal) | 92.11 | 82.89 | 100.00 | 96.05 | 92.76 |
| FD-LDP-LBP | 98.68 | 97.37 | 100.00 | 98.68 | 98.68 |
| FD-LBP-LBP | 98.68 | 98.68 | 100.00 | 98.68 | 99.01 |

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Akhloufi, M.; Bendada, A.; Batsale, J.C. State of the art in infrared face recognition. Quant. InfraRed Thermogr. J. 2008, 5, 3–26.

2. FLIR. The Ultimate Infrared Handbook for R&D Professionals. Available online: http://www.flirmedia.com/MMC/THG/Brochures/T559243/T559243_EN.pdf (accessed on 22 July 2015).

3. Chen, X.; Flynn, P.J.; Bowyer, K.W. PCA-based face recognition in infrared imagery: Baseline and comparative studies. In Proceedings of the IEEE International Workshop on Analysis and Modeling of Faces and Gestures (AMFG 2003), Nice, France, 17 October 2003; pp. 127–134.

4. Socolinsky, D.; Selinger, A. Thermal Face Recognition over Time. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR’04), 23–26 August 2004; Volume 4, pp. 187–190.

5. Holland, J.H. Adaptation in Natural and Artificial Systems; MIT Press: Cambridge, MA, USA, 1992.

6. Sastry, K.; Goldberg, D.E.; Kendall, G. Search Methodologies: Introductory Tutorials in Optimization, Search and Decision Support Techniques; Burke, E., Kendall, G., Eds.; Springer: Berlin, Germany, 2005.

7. Hermosilla, G.; Ruiz-del-Solar, J.; Verschae, R.; Correa, M. A Comparative Study of Thermal Face Recognition Methods in Unconstrained Environments. Pattern Recognit. 2012, 45, 2445–2459.

8. Ruiz-del-Solar, J.; Verschae, R.; Correa, M. Recognition of Faces in Unconstrained Environments: A Comparative Study. EURASIP J. Adv. Signal Process. 2009, 2009, 1–19.

9. Selinger, A.; Socolinsky, D. Appearance-Based Facial Recognition Using Visible and Thermal Imagery: A Comparative Study. Technical Report; Equinox Corporation: New York, NY, USA, 2001.

10. Socolinsky, D.; Selinger, A. A comparative analysis of face recognition performance with visible and thermal infrared imagery. In Proceedings of the 16th International Conference on Pattern Recognition (ICPR), Quebec, QC, Canada, 11–15 August 2002; pp. 217–222.

11. Zou, J.; Ji, Q.; Nagy, G. A Comparative Study of Local Matching Approach for Face Recognition. IEEE Trans. Image Process. 2007, 16, 2617–2628.

12. Hermosilla, G.; Farias, G.; Vargas, H.; Gallardo, F.; San-Martin, C. Thermal Face Recognition Using Local Patterns. In Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications; Lecture Notes in Computer Science; Springer: Puerto Vallarta, Jalisco, Mexico, 2014; Volume 8827, pp. 486–497.

13. Ahonen, T.; Hadid, A.; Pietikainen, M. Face Description with Local Binary Patterns: Application to Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

14. Chen, J.; Shan, S.; He, C.; Zhao, G.; Pietikäinen, M.; Chen, C.X.; Gao, W. WLD: A Robust Local Image Descriptor. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1705–1720.

15. Zhang, B.; Gao, Y.; Zhao, S.; Liu, J. Local Derivative Pattern Versus Local Binary Pattern: Face Recognition with High-Order Local Pattern Descriptor. IEEE Trans. Image Process. 2010, 19, 533–544.

16. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 25 June 2005; Volume 1, pp. 886–893.

17. Deniz, O.; Bueno, G.; Salido, J.; de la Torre, F. Face recognition using histograms of oriented gradients. Pattern Recognit. Lett. 2011, 32, 1598–1603.

18. Bebis, G.; Gyaourova, A.; Singh, S.; Pavlidis, I. Face recognition by fusing thermal infrared and visible imagery. Image Vis. Comput. 2006, 24, 727–742.

19. Desa, S.; Hati, S. IR and Visible Face Recognition using Fusion of Kernel Based Features. In Proceedings of the 19th International Conference on Pattern Recognition (ICPR 2008), Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

20. Kwon, O.K.; Kong, S.G. Multiscale fusion of visual and thermal images for robust face recognition. In Proceedings of the IEEE International Conference Computational Intelligence for Homeland Security and Personal Safety, Orlando, FL, USA, 31 March–1 April 2005; Volume IV, pp. 112–116.

21. Abidi, B.; Huq, S.; Abidi, M. Fusion of visual, thermal, and range as a solution to illumination and pose restrictions in face recognition. In Proceedings of the IEEE International Carnahan Conference on Security Technology, Albuquerque, NM, USA, 11–14 October 2004; pp. 325–330.

22. Pop, F.M.; Gordan, M.; Florea, C.; Vlaicu, A. Fusion based approach for thermal and visible face recognition under pose and expresivity variation. In Proceedings of the Roedunet International Conference (RoEduNet), Sibiu, Romania, 24–26 June 2010; pp. 61–66.

23. Erkanli, S.; Li, J.; Oguslu, E. Fusion of Visual and Thermal Images Using Genetic Algorithms. In Bio-Inspired Computational Algorithms and Their Applications; Gao, S., Ed.; InTech: Rijeka, Croatia, 2012.

24. Equinox Corporation. Available online: http://www.equinoxsensors.com/products/HID.html (accessed on 1 March 2011).

25. Socolinsky, D.; Wolff, L.; Neuheisel, J.; Eveland, C. Illumination invariant face recognition using thermal infrared imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; Volume 1, pp. I-527–I-534.

26. PUCV-VTF database. Available online: http://goo.gl/5bWnYp (accessed on 22 July 2015).

27. PS3 eye camera. Available online: http://us.playstation.com/ps3/accessories/playstation-eye-camera-ps3.html (accessed on 5 March 2015).

28. IR Camera FLIR TAU 2. Available online: http://www.flir.com/cvs/cores/uncooled/products/tau (accessed on 22 July 2015).

29. Jothi, J.A.A.; Rajam, V.M.A. Segmentation of Nuclei from Breast Histopathology Images Using PSO-based Otsu’s Multilevel Thresholding. In Artificial Intelligence and Evolutionary Algorithms in Engineering Systems; Advances in Intelligent Systems and Computing; Springer: Kumaracoil, India, 2015; Volume 325, pp. 835–843.

30. Liu, H.; Hsu, S.; Huang, C. Single-sample-per-person-based face recognition using fast Discriminative Multi-manifold Analysis. In Proceedings of the Asia-Pacific Signal and Information Processing Association, 2014 Annual Summit and Conference (APSIPA), Siem Reap, Cambodia, 9–12 December 2014; pp. 1–9.

31. Hu, S.; Choi, J.; Chan, A.; Schwartz, W. Thermal-to-visible face recognition using partial least squares. J. Opt. Soc. Am. 2015, 32, 431–442.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Hermosilla, G.; Gallardo, F.; Farias, G.; Martin, C.S. Fusion of Visible and Thermal Descriptors Using Genetic Algorithms for Face Recognition Systems. Sensors 2015, 15, 17944-17962. https://doi.org/10.3390/s150817944
