Multisensor Super Resolution Using Directionally-Adaptive Regularization for UAV Images

Abstract

In various unmanned aerial vehicle (UAV) imaging applications, multisensor super-resolution (SR) has become a long-standing problem and has attracted increasing attention. Multisensor SR algorithms use multispectral low-resolution (LR) images to produce a higher-resolution (HR) image and thereby improve the performance of the UAV imaging system. The primary objective of this paper is to develop a multisensor SR method based on the existing multispectral imaging framework, without additional sensors. To restore image details without noise amplification or unnatural post-processing artifacts, this paper presents an improved regularized SR algorithm that combines directionally-adaptive constraints with a multiscale non-local means (NLM) filter. As a result, the proposed method can overcome the physical limitation of multispectral sensors by estimating the color HR image from a set of multispectral LR images using intensity-hue-saturation (IHS) image fusion. Experimental results show that the proposed method provides better SR results than existing state-of-the-art SR methods in terms of objective measures.

1. Introduction

Multispectral images contain complete spectrum information at every pixel in the image plane and are currently used in various unmanned aerial vehicle (UAV) imaging applications, such as environmental monitoring, weather forecasting, military intelligence and target tracking. However, it is not easy to acquire a high-resolution (HR) image with a multispectral sensor because of the physical limitations of the sensor. A simple way to enhance the spatial resolution of a multispectral image is to increase the number of photo-detectors, but the resulting reduction in pixel size lowers the sensitivity and the signal-to-noise ratio. To overcome such physical limitations of a multispectral imaging sensor, an image fusion-based resolution enhancement method is needed [1,2].

Various image enlargement and super-resolution (SR) methods have been developed in many application areas over the past few decades. The goal of these methods is to estimate an HR image from one or more low-resolution (LR) images. They can be classified into two groups: (i) single image-based; and (ii) multiple image-based. The latter reconstructs an HR image from a set of LR images, which requires a warping process to align the LR images with sub-pixel precision. If the LR images are degraded by motion blur and additive noise, this registration becomes more difficult. To avoid this problem, single image-based SR methods have become popular, including interpolation-based SR [3–6], patch-based SR [7–12], image fusion-based SR [13–17] and others [18].

To overcome the limitations of simple interpolation methods, such as linear and cubic-spline interpolation [3], a number of improved or modified image interpolation methods have been proposed in the literature. Li et al. exploited the geometric duality between the LR and HR images using the local covariance of the LR image [4]. Zhang et al. proposed an edge-guided non-linear interpolation algorithm using directionally-adaptive filters and data fusion [5]. Giachetti et al. proposed a curvature-based iterative interpolation using a two-step grid filling and an iterative correction of the estimated pixels [6]. Although these modified interpolation methods can improve edge sharpness and visual quality to a certain degree, fundamental interpolation artifacts, such as blurring and jagging, cannot be completely removed because of the nature of the interpolation framework.

Patch-based SR methods estimate an HR image from the LR image, which is considered a noisy, blurred and down-sampled version of the HR image. Freeman et al. proposed an example-based SR algorithm using a Markov network that estimates the optimal HR patch corresponding to the input LR patch from an external training dataset [7]. Glasner et al. proposed a unified SR framework using the similarity of patches within the same scale and across scales [8]. Yang et al. used patch similarity from a training dataset of HR and LR patch pairs in a sparse representation model [9]. Kim et al. proposed a sparse kernel regression-based SR method that uses kernel matching pursuit and gradient descent optimization to learn the mapping between pairs of LR and HR training example patches [10]. Freedman et al. used non-dyadic filter banks to preserve the properties of the input LR image and searched for similar patches using local self-similarity in a locally limited region [11]. He et al. proposed a Gaussian process regression-based SR method using soft clustering based on the local structure of pixels [12]. Existing patch-based SR methods reduce blurring and jagging artifacts better than interpolation-based SR methods. However, non-optimal patches make the restored image look unnatural because the high-frequency components are estimated inaccurately.

On the other hand, image fusion-based SR methods have been proposed in the remote sensing field. The goal of these methods is to improve the spatial resolution of LR multispectral images using the details of the corresponding HR panchromatic image. Principal component analysis (PCA)-based methods project the image into a different transform space [13]. Intensity-hue-saturation (IHS) [14,15] and Brovey [17] methods assume that the HR panchromatic image is a linear combination of the LR multispectral images. Ballester et al. proposed an improved variational method [16]. Because they rely on an HR panchromatic image, conventional image fusion-based SR methods require an additional HR panchromatic imaging sensor.

In order to improve the performance of the fusion-based SR methods, this paper presents a directionally-adaptive regularized SR algorithm. Assuming that the HR monochromatic image is a linear combination of multispectral images, the proposed method consists of three steps: (i) acquisition of monochromatic LR images from the set of multispectral images; (ii) restoration of the monochromatic HR image using the proposed directionally-adaptive regularization; and (iii) reconstruction of the color HR image using image fusion. The proposed SR algorithm is an extended version of the regularized restoration algorithms proposed in [19,20] for optimal adaptation to directional edges and uses interpolation algorithms in [21–23] for resizing the interim images at each iteration.

The major contribution of this work is two-fold: (i) the proposed method estimates the monochromatic HR image using directionally-adaptive regularization, which adapts optimally to directional edges in the image; and (ii) it uses an improved version of the image fusion method proposed in [14,15] to reconstruct the color HR image. Therefore, the proposed method can generate a color HR image without additional high-cost imaging sensors by combining image fusion with the proposed regularization-based SR method. In the experiments, the proposed SR method is compared with nine existing image enlargement methods, including interpolation-based, example-based and patch similarity-based SR methods, in terms of objective assessments.

The rest of this paper is organized as follows. Section 2 summarizes the theoretical background of regularized image restoration and image fusion. Section 3 presents the proposed directionally-adaptive regularized SR algorithm and image fusion. Section 4 summarizes experimental results on multi- and hyper-spectral images, and Section 5 concludes the paper.

2. Theoretical Background

The proposed multispectral SR framework is based on regularized image restoration and multispectral image fusion. This section presents the theoretical background of multispectral image representation, image fusion and regularized image restoration in the following subsections.

2.1. Multispectral Image Representation

A multispectral imaging sensor measures the radiance in multiple spectral bands, obtained by dividing the spectral range into a series of contiguous and narrow bands. In contrast, a monochromatic or single-band imaging sensor measures the radiance over the entire wavelength range. The relationship between multispectral and monochromatic images is modeled by treating the monochromatic image as the gray-level image integrated over the wavelengths between ω1 and ω2 [24]:

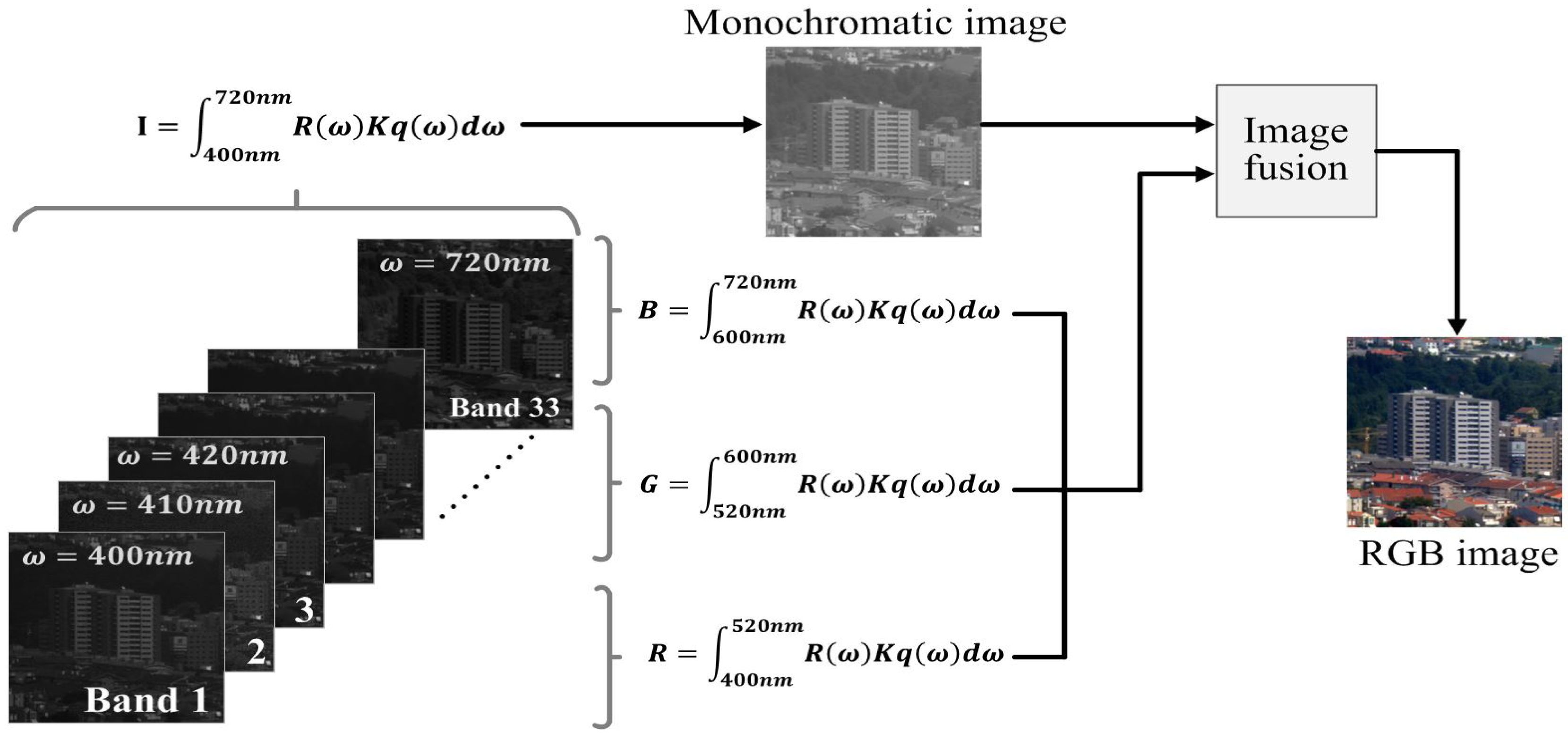

Since the spectral radiance R(ω) does not depend on the sensor, the initial monochromatic HR image is generated by Equation (1) and is then used, together with the multispectral LR images, to reconstruct the HR color image by image fusion [14,15]. Figure 1 shows the multispectral imaging process, where a panchromatic image is acquired by integrating over the entire spectral band and the corresponding RGB image is acquired using, for example, 33 bands. The high-resolution (HR) panchromatic and low-resolution (LR) RGB images are fused to generate an HR color image.
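Section 4.1 uses a discrete approximation of Equation (1) to form the monochromatic image from 33 spectral bands. As a minimal sketch, assuming Equation (1) integrates the spectral radiance over [ω1, ω2] with unit sensor sensitivity, the integral can be approximated with the trapezoidal rule over the sampled bands. The function name `synthesize_monochromatic` and the normalization by the bandwidth are hypothetical choices, not the authors' implementation.

```python
import numpy as np


def synthesize_monochromatic(cube, wavelengths_nm):
    """Trapezoidal approximation of the spectral integral over [w_1, w_2].

    cube: array of shape (bands, rows, cols), one gray-level image per band.
    wavelengths_nm: band-centre wavelengths, shape (bands,), increasing.
    The result is divided by the bandwidth (w_last - w_first) so that it stays
    in the intensity range of the individual bands (a hypothetical choice).
    """
    cube = np.asarray(cube, dtype=np.float64)
    w = np.asarray(wavelengths_nm, dtype=np.float64)
    if cube.shape[0] != w.size or w.size < 2:
        raise ValueError("need one wavelength per band and at least two bands")
    # Trapezoid weights: each sample gets half of the interval on either side.
    dw = np.diff(w)
    weights = np.zeros_like(w)
    weights[:-1] += dw / 2.0
    weights[1:] += dw / 2.0
    # Weighted sum over the spectral axis -> shape (rows, cols).
    integral = np.tensordot(weights, cube, axes=(0, 0))
    return integral / (w[-1] - w[0])


# Example: 33 bands from 400 nm to 720 nm, as in Section 4.1.
wavelengths = np.linspace(400.0, 720.0, 33)
cube = np.random.default_rng(0).random((33, 64, 64))
mono = synthesize_monochromatic(cube, wavelengths)
print(mono.shape)  # (64, 64)
```

If the spectral sensitivity of the sensor were known, it could be multiplied into `weights` so that the sum approximates a sensitivity-weighted integral instead of a flat one.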

2.2. Multispectral Image Fusion

In order to improve the spatial resolution of multispectral images, the intensity-hue-saturation (IHS) image fusion method is widely used in remote sensing fields [14,15]. More specifically, this method converts a color image into the IHS color space, where only the intensity band is replaced by the monochromatic HR image. The resulting HR image is obtained by converting the replaced intensity and the original hue and saturation back to the RGB color space.
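When the linear IHS transform uses I = (R + G + B)/3, substituting the HR monochromatic image for I and transforming back to RGB reduces to adding the same intensity difference to every channel; this is the fast IHS formulation of Tu et al. [14]. The sketch below implements that additive form, assuming the LR color image has already been enlarged to the HR grid. It does not include the tradeoff parameter of [15] or the improvements used in this paper, and the function name `ihs_fuse` is hypothetical.

```python
import numpy as np


def ihs_fuse(rgb_up, mono_hr):
    """Fast additive IHS fusion with the linear intensity I = (R + G + B) / 3.

    rgb_up:  LR color image already enlarged to the HR grid, shape (rows, cols, 3).
    mono_hr: HR monochromatic image on the same grid, shape (rows, cols).
    Replacing I by mono_hr and inverting the linear IHS transform is equivalent
    to adding (mono_hr - I) to each of the R, G and B channels.
    """
    rgb_up = np.asarray(rgb_up, dtype=np.float64)
    mono_hr = np.asarray(mono_hr, dtype=np.float64)
    if rgb_up.ndim != 3 or rgb_up.shape[2] != 3 or rgb_up.shape[:2] != mono_hr.shape:
        raise ValueError("expected (rows, cols, 3) and (rows, cols) on the same grid")
    intensity = rgb_up.mean(axis=2)
    detail = mono_hr - intensity          # spatial detail missing from the enlarged color image
    return rgb_up + detail[..., np.newaxis]
```

Hue and saturation are untouched because the same offset is added to all three channels. In practice the monochromatic image is often histogram-matched to I before substitution to limit color distortion; that step is omitted here.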

2.3. Regularized Image Restoration

Regularization-based image restoration or enlargement algorithms regard noisy LR images as the output of a general image degradation process and incorporate a priori constraints into the restoration process to make the inverse problem better posed [19–23].

The image degradation model for a single LR image can be expressed as:

The restoration problem is to estimate the HR image f from the observed LR image g. To this end, the regularization approach minimizes the following cost function:

The derivative of Equation (3) with respect to f is computed as:

Thus, Equation (5) can be solved using the well-known regularized iteration process as:
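For reference, the standard regularized least-squares formulation of [19,20], which is consistent with the cost ‖g − Hf‖² + λ‖Cf‖² used in Section 3.3, can be written as follows. This is the textbook form, not necessarily identical to Equations (2)–(6); the additive noise term η and the step size β are assumed notation.

```latex
\begin{aligned}
g &= Hf + \eta, \\
J(f) &= \|g - Hf\|^2 + \lambda \|Cf\|^2, \\
\nabla_f J(f) &= -2H^{T}(g - Hf) + 2\lambda C^{T} C f, \\
\left(H^{T}H + \lambda C^{T}C\right) f &= H^{T} g, \\
f^{k+1} &= f^{k} + \beta \left[ H^{T} g - \left(H^{T}H + \lambda C^{T}C\right) f^{k} \right].
\end{aligned}
```

Setting the gradient to zero gives the normal equations on the fourth line, and the last line is a fixed-step gradient iteration on J. When HᵀH + λCᵀC is positive definite, the iteration converges for 0 < β < 2/λmax(HᵀH + λCᵀC).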

3. Directionally-Adaptive Regularization-Based Super-Resolution with Multiscale Non-Local Means Filter

Since estimating the original HR image from the degradation process given in Equation (2) is almost always an ill-posed problem, the solution is not unique, and a simple inversion process, such as inverse filtering, significantly amplifies noise and numerical errors [7–12]. To solve this problem, regularized image restoration incorporates a priori constraints on the original image to make the inverse problem better posed.
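The following sketch illustrates the point on a synthetic 1D signal: a naive inverse filter divides by near-zero high-frequency responses of the blur and amplifies the noise, whereas the regularized iteration of Section 2.3 stays stable. The Gaussian blur used as H, the second-difference operator used as C, circular boundary conditions, λ = 0.01 and the step size are illustrative assumptions, and the helper names are hypothetical.

```python
import numpy as np


def circ_apply(kernel_hat, x):
    """Circular convolution of x with a kernel given by its DFT."""
    return np.real(np.fft.ifft(kernel_hat * np.fft.fft(x)))


def make_operators(n, sigma=2.0):
    """Hypothetical 1D operators: Gaussian blur H and second difference C."""
    idx = np.arange(n)
    dist = np.minimum(idx, n - idx)           # circular distance to sample 0
    h = np.exp(-0.5 * (dist / sigma) ** 2)
    h /= h.sum()                              # unit DC gain
    c = np.zeros(n)
    c[0], c[1], c[-1] = -2.0, 1.0, 1.0        # x[i-1] - 2 x[i] + x[i+1]
    return np.fft.fft(h), np.fft.fft(c)


def inverse_filter(g, h_hat):
    """Naive inverse filter: divides by tiny high-frequency responses of H."""
    return np.real(np.fft.ifft(np.fft.fft(g) / h_hat))


def regularized_restore(g, h_hat, c_hat, lam=0.01, n_iter=500):
    """f_{k+1} = f_k + beta * (H^T g - (H^T H + lam C^T C) f_k)."""
    ht_g = circ_apply(np.conj(h_hat), g)      # H^T g (adjoint = conjugate spectrum)
    system_hat = np.abs(h_hat) ** 2 + lam * np.abs(c_hat) ** 2
    beta = 1.0 / system_hat.max()             # below 2 / lambda_max, so the iteration is stable
    f = np.zeros_like(g)
    for _ in range(n_iter):
        f = f + beta * (ht_g - circ_apply(system_hat, f))
    return f


rng = np.random.default_rng(1)
n = 128
f_true = np.zeros(n)
f_true[40:80] = 1.0                           # piecewise-constant test signal
h_hat, c_hat = make_operators(n)
g = circ_apply(h_hat, f_true) + 0.01 * rng.standard_normal(n)

err_inv = np.linalg.norm(inverse_filter(g, h_hat) - f_true)
err_reg = np.linalg.norm(regularized_restore(g, h_hat, c_hat) - f_true)
print(f"inverse filter error: {err_inv:.3g}, regularized error: {err_reg:.3g}")
```

Even with a noise level of 1% of the signal amplitude, the inverse-filter error is dominated by amplified noise, while the regularized estimate only loses some sharpness at the two edges; this edge loss is what the directionally-adaptive constraints below are meant to reduce.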

In this section, we describe a modified regularized SR algorithm that uses a non-local means (NLM) filter [25] and a directionally-adaptive constraint as the regularization term to preserve edge sharpness and suppress noise amplification. The reconstructed monochromatic HR image is combined with the given LR multispectral images to generate a color HR image using IHS image fusion [14,15]. The block diagram of the proposed method is shown in Figure 2.

3.1. Multispectral Low-Resolution Image Degradation Model

Assuming that the original monochromatic image is a linear combination of multispectral images [24], the observed LR multispectral images are generated by low-pass filtering and down-sampling differently translated versions of the original HR monochromatic image. More specifically, the observed LR image in the i-th multispectral band is defined as:
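A minimal simulation of this degradation model, for illustration only: each band observation is produced by translating the HR monochromatic image, applying a low-pass filter and decimating. The text specifies only translation, low-pass filtering and down-sampling; the Gaussian kernel, the restriction to integer circular shifts (`np.roll`), the optional additive noise and all function names are assumptions.

```python
import numpy as np


def gaussian_kernel1d(sigma):
    """Normalized 1D Gaussian with radius ceil(3 sigma)."""
    radius = int(np.ceil(3.0 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(img, sigma):
    """Separable Gaussian low-pass filter with symmetric boundary padding."""
    k = gaussian_kernel1d(sigma)
    r = k.size // 2
    padded = np.pad(np.asarray(img, dtype=np.float64), r, mode="symmetric")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def simulate_band(f_hr, shift, scale=4, sigma=1.0, noise_std=0.0, rng=None):
    """Hypothetical LR observation of one band: translate, low-pass, decimate, add noise."""
    shifted = np.roll(f_hr, shift, axis=(0, 1))        # integer, circular translation
    lr = blur(shifted, sigma)[::scale, ::scale]         # low-pass filtering + down-sampling
    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        lr = lr + noise_std * rng.standard_normal(lr.shape)
    return lr


# Example: four bands observed with different integer shifts, down-sampled by four.
f_hr = np.random.default_rng(0).random((64, 64))
shifts = [(0, 0), (1, 0), (0, 1), (1, 1)]
bands = [simulate_band(f_hr, s, scale=4) for s in shifts]
print(bands[0].shape)  # (16, 16)
```

Sub-pixel misalignment between real spectral bands would require an interpolating shift instead of `np.roll`; integer shifts keep the sketch exact and easy to invert.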

3.2. Multiscale Non-Local Means Filter

If noise is present in the image degradation model given in Equation (2), the estimated HR image using the simple inverse filter yields:

If Δf is unbounded, the corresponding SR restoration problem is ill-posed. To solve this ill-posed problem, we present an improved multiscale non-local means (NLM) filter that suppresses the noise before the main restoration step. The estimated noise-removed monochromatic image can be obtained by least-squares optimization as:

Since the cubic-spline down-scaling kernel performs low-pass filtering, it decreases the noise variance and makes it easier to find sufficiently similar patches [8]. The similarity weight is computed in the down-scaled image as:

The solution of the least-squares estimation in Equation (9) is given as:
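The sketch below shows only the underlying idea and is not the authors' multiscale filter: a standard pixel-wise NLM filter [25] in which patch distances are measured on a down-scaled and re-enlarged copy of the noisy image, so that the similarity weights are computed from lower-noise data, while the averaging is applied to the noisy image itself. The 2×2 block-average down-scaling (used here in place of the cubic-spline kernel), the pixel-replication re-enlargement, the patch radius, the search radius, the parameter `h` and the function names are all assumptions.

```python
import numpy as np


def make_guide(noisy):
    """Down-scale by 2 with a 2x2 block average, then re-enlarge by replication.

    The block average is a stand-in for the cubic-spline down-scaling kernel;
    even image dimensions are assumed.
    """
    noisy = np.asarray(noisy, dtype=np.float64)
    r, c = noisy.shape
    if r % 2 or c % 2:
        raise ValueError("this sketch assumes even image dimensions")
    small = noisy.reshape(r // 2, 2, c // 2, 2).mean(axis=(1, 3))
    return np.kron(small, np.ones((2, 2)))


def guided_nlm(noisy, guide, patch_radius=1, search_radius=5, h=0.3):
    """Non-local means: patch weights from `guide`, weighted average of `noisy`."""
    noisy = np.asarray(noisy, dtype=np.float64)
    pr, sr = patch_radius, search_radius
    pad = pr + sr
    n = np.pad(noisy, pad, mode="reflect")
    g = np.pad(np.asarray(guide, dtype=np.float64), pad, mode="reflect")
    rows, cols = noisy.shape
    out = np.zeros((rows, cols))
    weight_sum = np.zeros((rows, cols))
    for dy in range(-sr, sr + 1):
        for dx in range(-sr, sr + 1):
            # diff2[p] = (guide[p] - guide[p + d])^2 on the padded grid.
            diff2 = (g - np.roll(g, (-dy, -dx), axis=(0, 1))) ** 2
            # Patch distance: sum of diff2 over the (2*pr + 1)^2 window around p.
            dist = np.zeros((rows, cols))
            for py in range(-pr, pr + 1):
                for px in range(-pr, pr + 1):
                    dist += diff2[pad + py:pad + py + rows, pad + px:pad + px + cols]
            w = np.exp(-dist / (h * h))
            out += w * n[pad + dy:pad + dy + rows, pad + dx:pad + dx + cols]
            weight_sum += w
    return out / weight_sum


# Example: a noisy vertical step edge.
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
denoised = guided_nlm(noisy, make_guide(noisy))
print(np.mean((noisy - clean) ** 2), np.mean((denoised - clean) ** 2))
```

Bounding `search_radius` fixes the number of candidate patches per pixel, which is the restriction of the search range mentioned in Section 4.1 as the way to keep the processing time finite.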

3.3. Directionally-Adaptive Constraints

In minimizing the cost function in Equation (3), minimizing ‖g − Hf‖² alone amplifies noise, while minimizing ‖Cf‖² alone smooths out edges. For this reason, conventional regularized image restoration and SR algorithms [21–23] estimate the original image by minimizing a linear combination of the two energies, ‖g − Hf‖² + λ‖Cf‖². In this paper, we incorporate directionally-adaptive smoothness constraints into the regularization process to preserve the sharpness of directional edges and to suppress noise amplification as:

By applying the directionally-adaptive constraints, an HR image in which edges in four directions are well preserved can be restored from the input LR image. To suppress noise amplification in the non-edge (NE) regions, the following constraint is used.
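As a hypothetical illustration of the idea, and not the authors' operators, the sketch below builds a pixel-wise constraint from second differences in four directions (0°, 45°, 90° and 135°). At edge pixels it keeps the second difference along the direction with the smallest response, which approximately follows the edge, so that penalizing it smooths along the edge rather than across it; at non-edge (NE) pixels it uses an isotropic Laplacian to penalize noise. The direction set, the gradient threshold used to detect edges and the function names are assumptions.

```python
import numpy as np

# Hypothetical direction set: angle (degrees) -> (row, col) step along the line.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (1, 0), 135: (1, 1)}


def second_difference(f, offset):
    """f(p + o) - 2 f(p) + f(p - o), with edge replication at the borders."""
    dy, dx = offset
    p = np.pad(f, 1, mode="edge")
    rows, cols = f.shape
    fwd = p[1 + dy:1 + dy + rows, 1 + dx:1 + dx + cols]
    bwd = p[1 - dy:1 - dy + rows, 1 - dx:1 - dx + cols]
    return fwd - 2.0 * f + bwd


def directional_constraint(f, edge_threshold=0.1):
    """Return (Cf, direction map) for a hypothetical directionally-adaptive C.

    Edge pixels: second difference along the direction with the smallest
    response, i.e. roughly along the edge, so smoothing does not cross it.
    Non-edge (NE) pixels: isotropic Laplacian, which penalizes noise.
    """
    f = np.asarray(f, dtype=np.float64)
    angles = np.array(list(OFFSETS))
    responses = np.stack([second_difference(f, o) for o in OFFSETS.values()])
    best = np.argmin(np.abs(responses), axis=0)
    along_edge = np.take_along_axis(responses, best[np.newaxis], axis=0)[0]
    laplacian = responses[0] + responses[2]          # 0 deg + 90 deg second differences
    gy, gx = np.gradient(f)
    is_edge = np.hypot(gx, gy) > edge_threshold
    cf = np.where(is_edge, along_edge, laplacian)
    direction = np.where(is_edge, angles[best], -1)  # -1 marks NE pixels
    return cf, direction


# Example: a clean vertical step edge is not penalized at all (||Cf||^2 = 0).
step = np.zeros((8, 8))
step[:, 4:] = 1.0
cf, direction = directional_constraint(step)
print(np.sum(cf ** 2), direction[0])
```

An isotropic Laplacian alone would assign a nonzero penalty to the same step edge, which is why a non-adaptive ‖Cf‖² term tends to blur edges.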

3.4. Combined Directionally-Adaptive Regularization and Modified Non-Local Means Filter

Given the multispectral LR images gi, for i = 1,…, L, the estimated monochromatic HR image f̂ is given by the following optimization:

The derivative of Equation (19) with respect to f is computed as:

Finally, Equation (21) can be solved using the well-known iterative optimization with the proposed multiscale NLM filter as:

For the implementation of Equation (22), the term implies that the i-th multispectral LR image is first enlarged by simple interpolation as:

To represent the geometric misalignment among different spectral bands, the pixel shift by (−xi, −yi) is expressed as Si = S̃xi ⨂ S̃yi, where S̃p is the 1D translation matrix that shifts a 1D vector by p samples and ⨂ denotes the Kronecker product. The term implies that the k-th iterative solution is shifted by (xi, yi), down-sampled by H, enlarged by the interpolation Hᵀ and then shifted by (−xi, −yi).
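The data-fidelity part of this update can be sketched as follows, assuming block averaging for H, pixel replication for the enlargement Hᵀ (which equals the adjoint of block averaging up to a constant factor), integer circular shifts for Si and an average over the L bands. The regularization term and the multiscale NLM filter of Equation (22) are deliberately left out, and all names are hypothetical.

```python
import numpy as np


def down(f, s):
    """H: s-by-s block average followed by decimation (sizes divisible by s)."""
    r, c = f.shape
    return f.reshape(r // s, s, c // s, s).mean(axis=(1, 3))


def up(g, s):
    """Enlargement by pixel replication; equals s*s times the adjoint of `down`."""
    return np.kron(g, np.ones((s, s)))


def data_misfit(f, lr_bands, shifts, s):
    """Sum over bands of ||g_i - H S_i f||^2."""
    return sum(float(np.sum((g_i - down(np.roll(f, sh, axis=(0, 1)), s)) ** 2))
               for g_i, sh in zip(lr_bands, shifts))


def data_term_update(f_k, lr_bands, shifts, s, beta=1.0):
    """Shift, down-sample, compare with g_i, enlarge the residual, shift back,
    and average the corrections over the bands."""
    correction = np.zeros_like(f_k, dtype=np.float64)
    for g_i, (dy, dx) in zip(lr_bands, shifts):
        shifted = np.roll(f_k, (dy, dx), axis=(0, 1))            # S_i f_k
        residual = g_i - down(shifted, s)                        # g_i - H S_i f_k
        correction += np.roll(up(residual, s), (-dy, -dx), axis=(0, 1))
    return f_k + beta * correction / len(lr_bands)


# Example: four shifted, 2x down-sampled observations of a random HR image.
rng = np.random.default_rng(0)
f_true = rng.random((16, 16))
shifts = [(0, 0), (0, 1), (1, 0), (1, 1)]
bands = [down(np.roll(f_true, sh, axis=(0, 1)), 2) for sh in shifts]
f = np.zeros((16, 16))
for _ in range(30):
    f = data_term_update(f, bands, shifts, 2)
print(data_misfit(f, bands, shifts, 2) < data_misfit(np.zeros((16, 16)), bands, shifts, 2))
```

With these choices and `beta=1.0`, each call is a gradient step on the summed misfit with a step size small enough to decrease it monotonically; the directional constraint and the NLM filter would enter as additional terms in the same loop.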

3.5. Image Fusion-Based HR Color Image Reconstruction

In this paper, we adopt the IHS fusion method described in Section 2.2 to estimate the HR color image from the multispectral LR images as [14,15]:

In this paper, we used the cubic-spline interpolation method to enlarge the hue (H) and saturation (S) channels by the given magnification factor. Figure 3 shows the image fusion-based HR color image reconstruction process.

4. Experimental Results

In this section, the proposed method is tested on various simulated multispectral, real UAV and remote sensing images to evaluate its SR performance. In the following experiments, the parameters were selected to produce the visually best results. For comparison, various existing interpolation and state-of-the-art SR methods were tested, including cubic-spline interpolation [3], advanced interpolation-based SR [4–6], example-based SR [7] and patch-based SR [9–12].

To compare the performance of the SR methods, we used a set of full-reference image quality metrics, including peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM) [26], multiscale SSIM (MS-SSIM) [27] and feature similarity index (FSIM) [28]. To evaluate the quality of magnified images without a reference HR image, we adopted completely blind image quality assessment methods, including the blind/referenceless image spatial quality evaluator (BRISQUE) [29] and the natural image quality evaluator (NIQE) [30]. Higher image quality corresponds to lower BRISQUE and NIQE values, but to higher PSNR, SSIM, MS-SSIM and FSIM values.
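Of the full-reference metrics above, PSNR reduces to a one-line formula, 10 log10(peak² / MSE). The sketch below assumes 8-bit data with a peak value of 255; the paper does not state the peak value or whether PSNR is computed on the luminance or on the RGB channels, and the function name `psnr` is hypothetical.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    if ref.shape != est.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - est) ** 2)
    if mse == 0.0:
        return float("inf")                   # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


print(round(psnr(np.zeros((4, 4)), np.ones((4, 4))), 2))   # 48.13
```

SSIM, MS-SSIM and FSIM depend on local statistics and multiscale or phase-congruency features and are better taken from reference implementations, for example `structural_similarity` in scikit-image for SSIM.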

BRISQUE quantifies naturalness using locally normalized luminance values, based on a priori knowledge of both natural and artificially distorted images. NIQE measures deviations from the statistical regularities observed in undistorted natural images, using statistical features of natural scenes. Since BRISQUE and NIQE are no-reference metrics, they may not rank the methods in the same order as full-reference metrics such as PSNR and SSIM.

4.1. Experiment Using Simulated LR Images

To evaluate the performance of the SR algorithms, we used five multispectral test images, each of which consists of 33 spectral bands in the wavelength range from 400 to 720 nanometers (nm), as shown in Figure 4. To compute objective image quality measures, such as PSNR, SSIM, MS-SSIM, FSIM, BRISQUE and NIQE, the original multispectral HR image is first down-sampled by a factor of four to simulate the input LR images. Next, the input LR images are magnified four times using the nine existing methods and the proposed multispectral SR method.

In simulating the LR images, the discrete approximation of Equation (1) is used, and the RGB color image is degraded by Equation (2). Given the simulated LR images, existing SR algorithms enlarge the RGB channels, whereas the proposed method generates the monochromatic HR image, which includes all spectral wavelengths, using the directionally-adaptive SR algorithm; IHS image fusion then generates the color HR image from the monochromatic HR and RGB LR images [14,15].

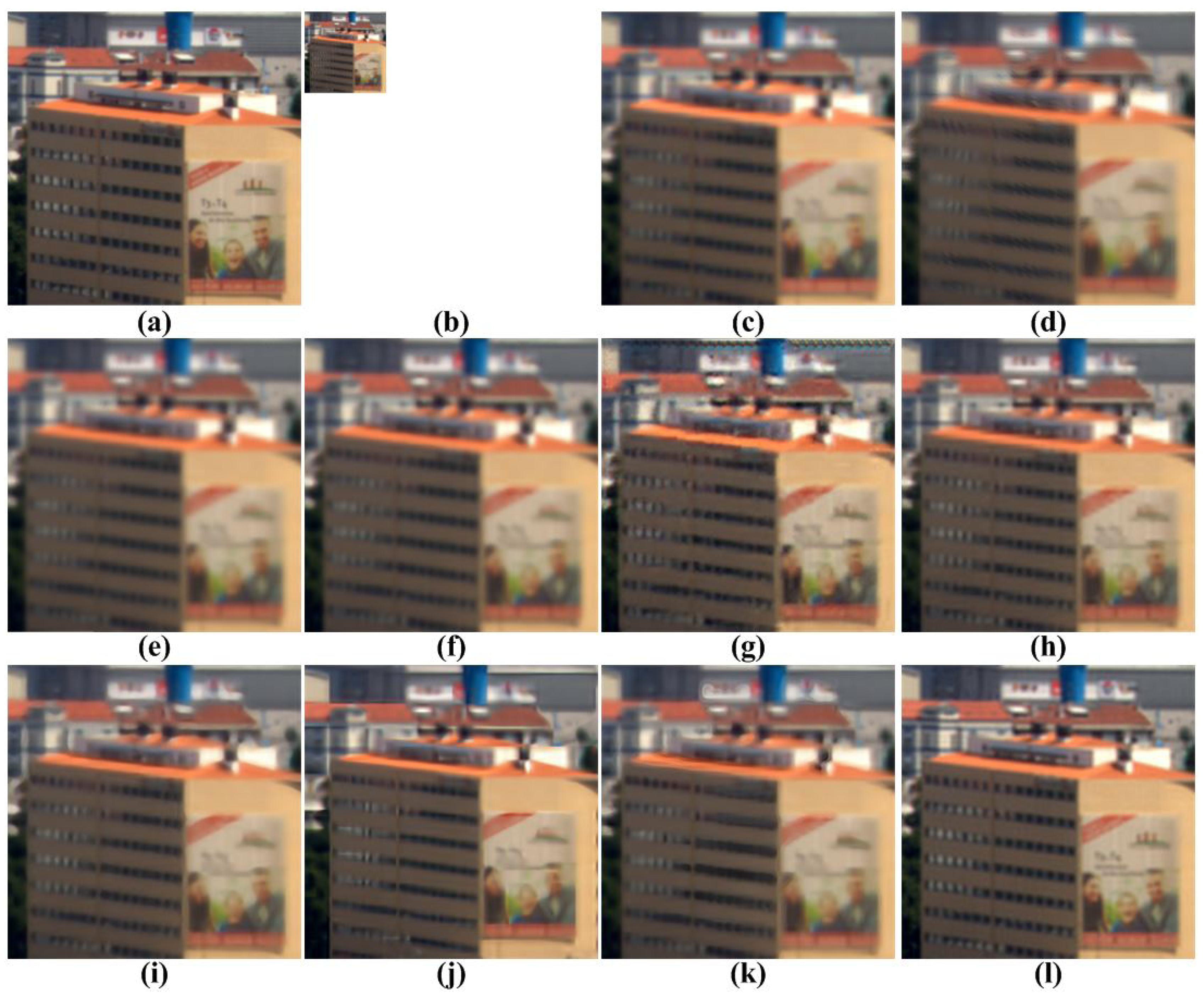

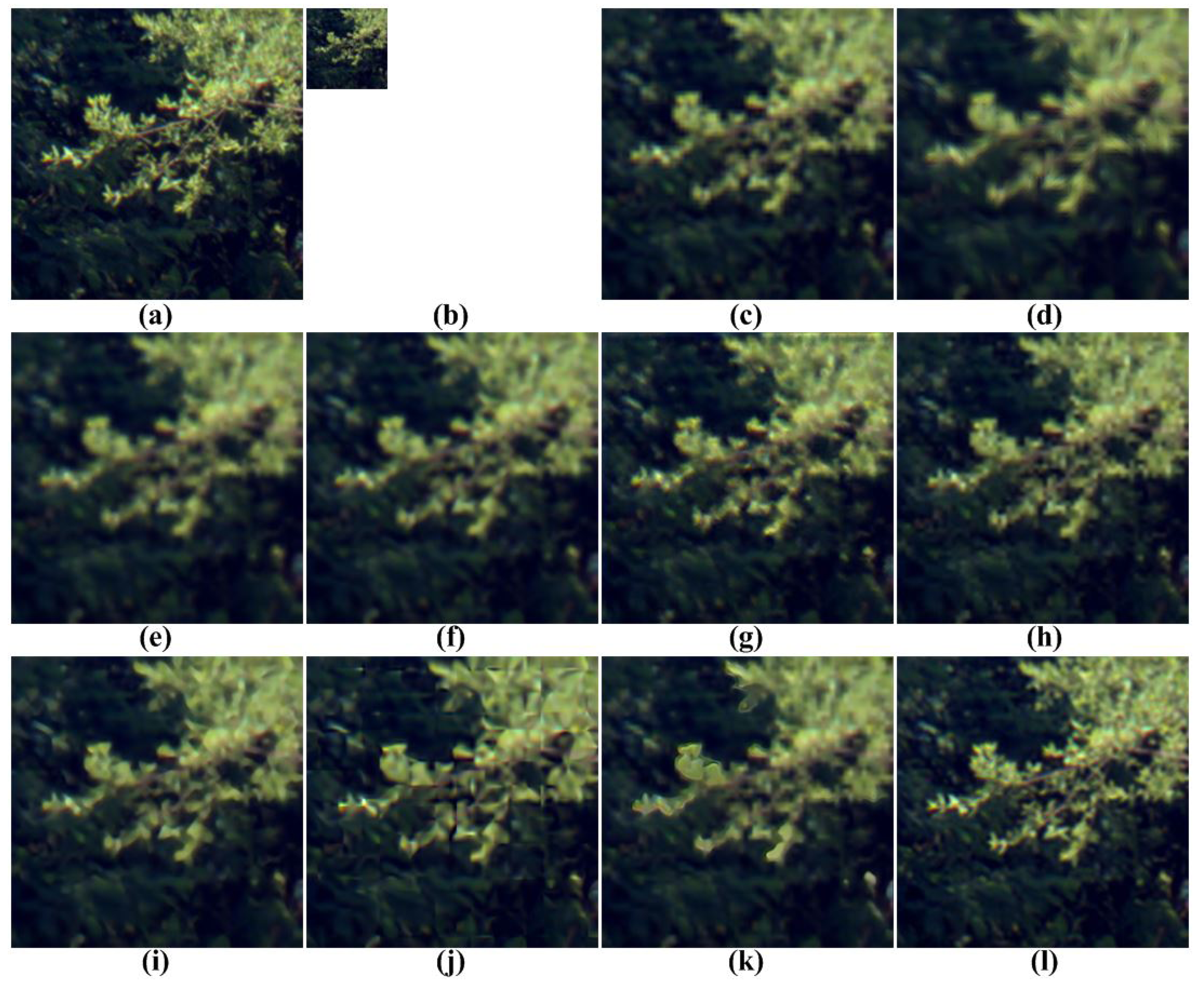

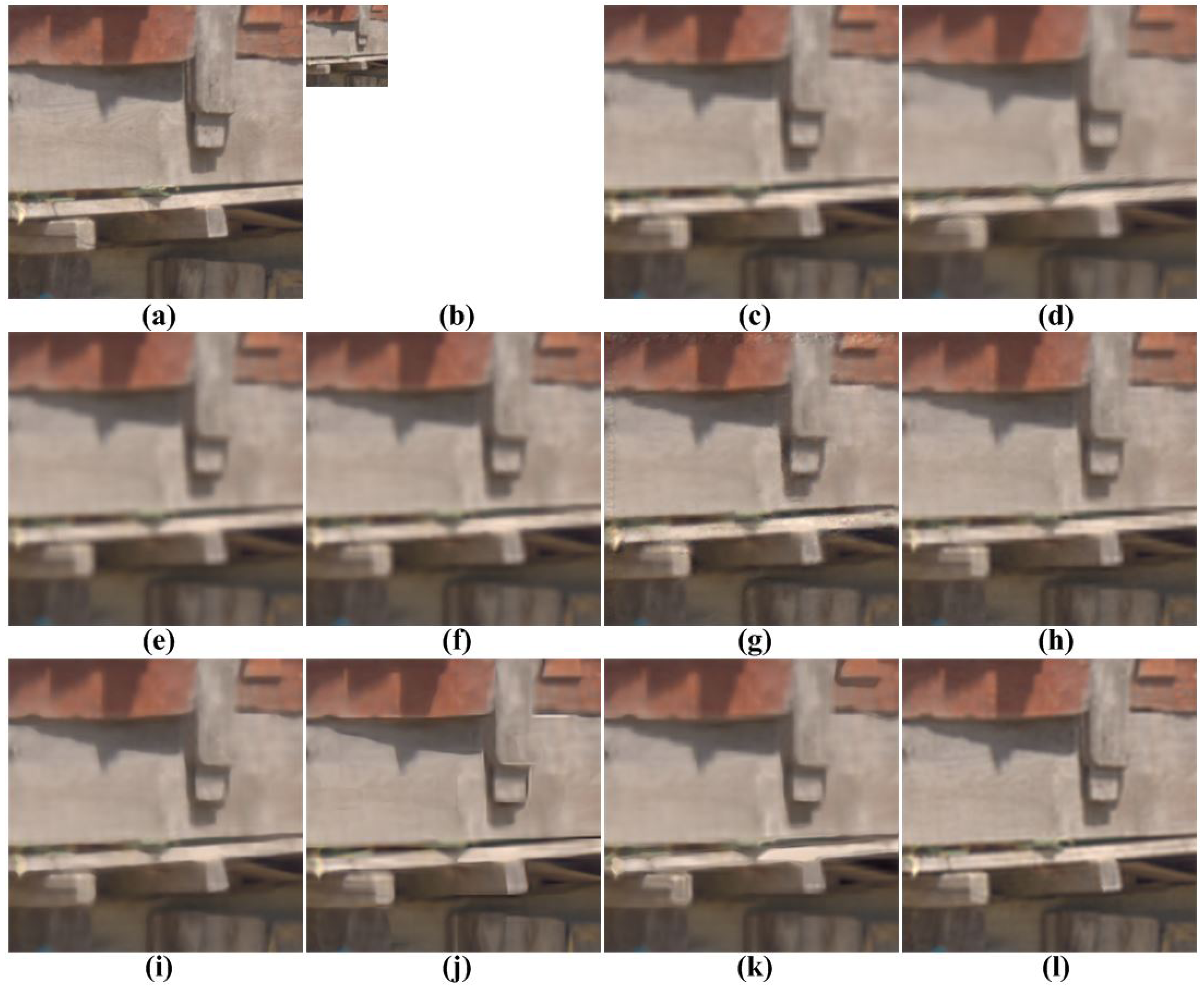

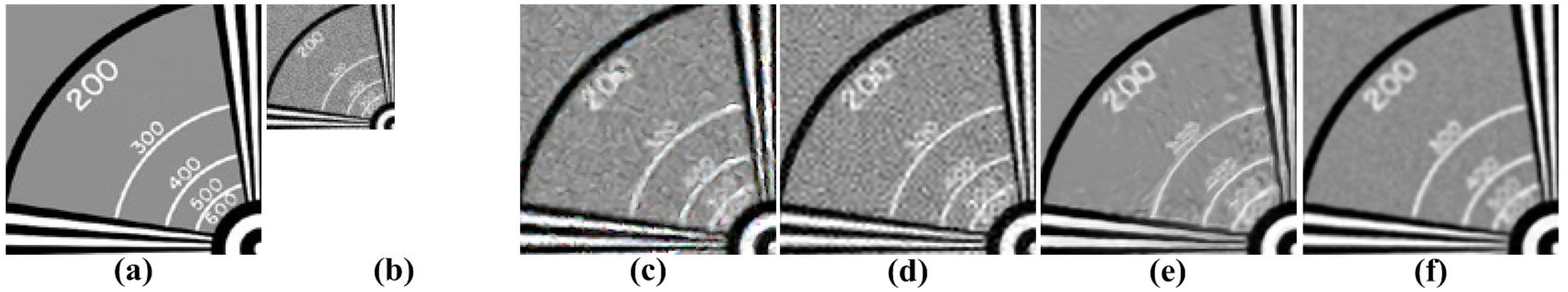

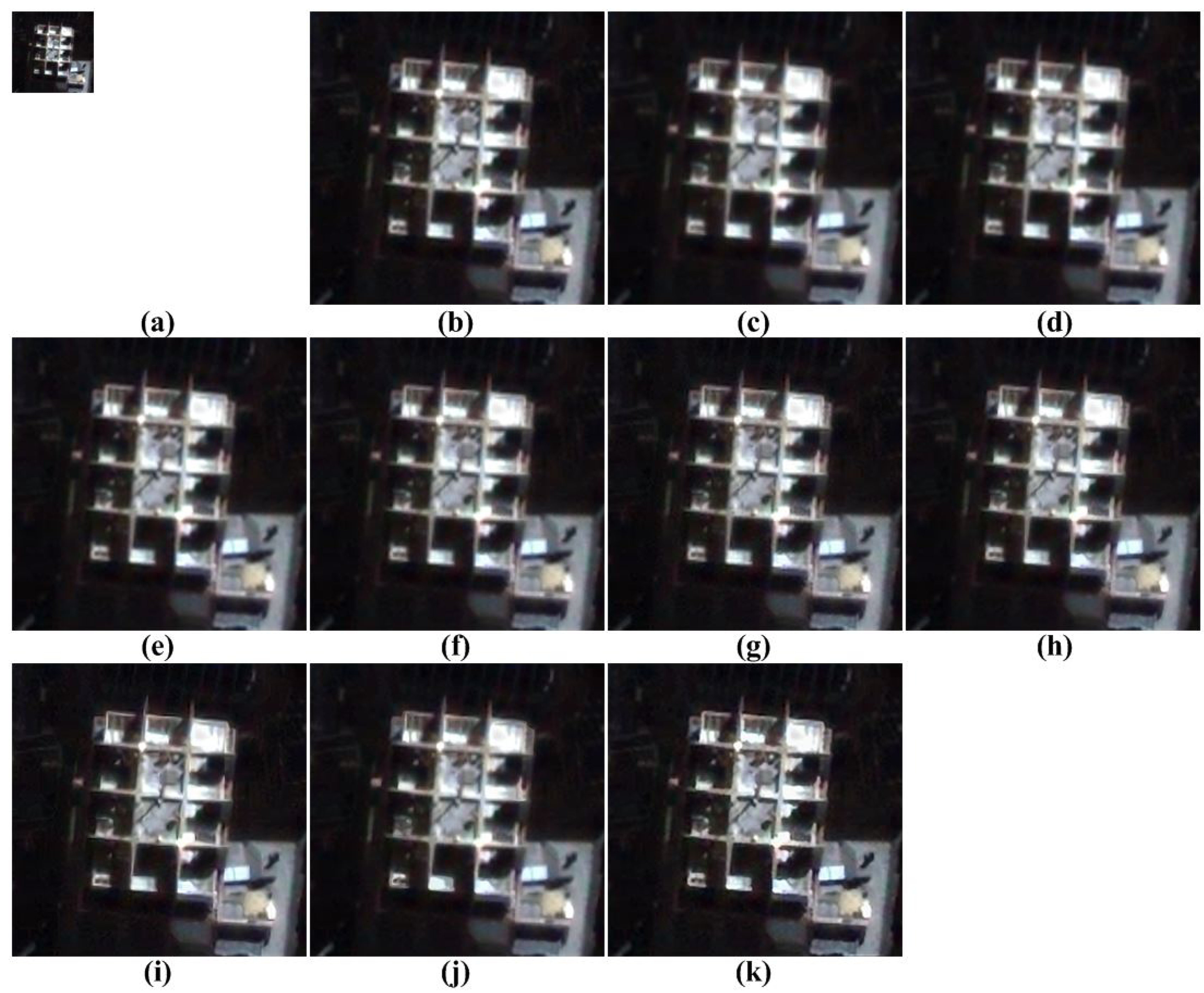

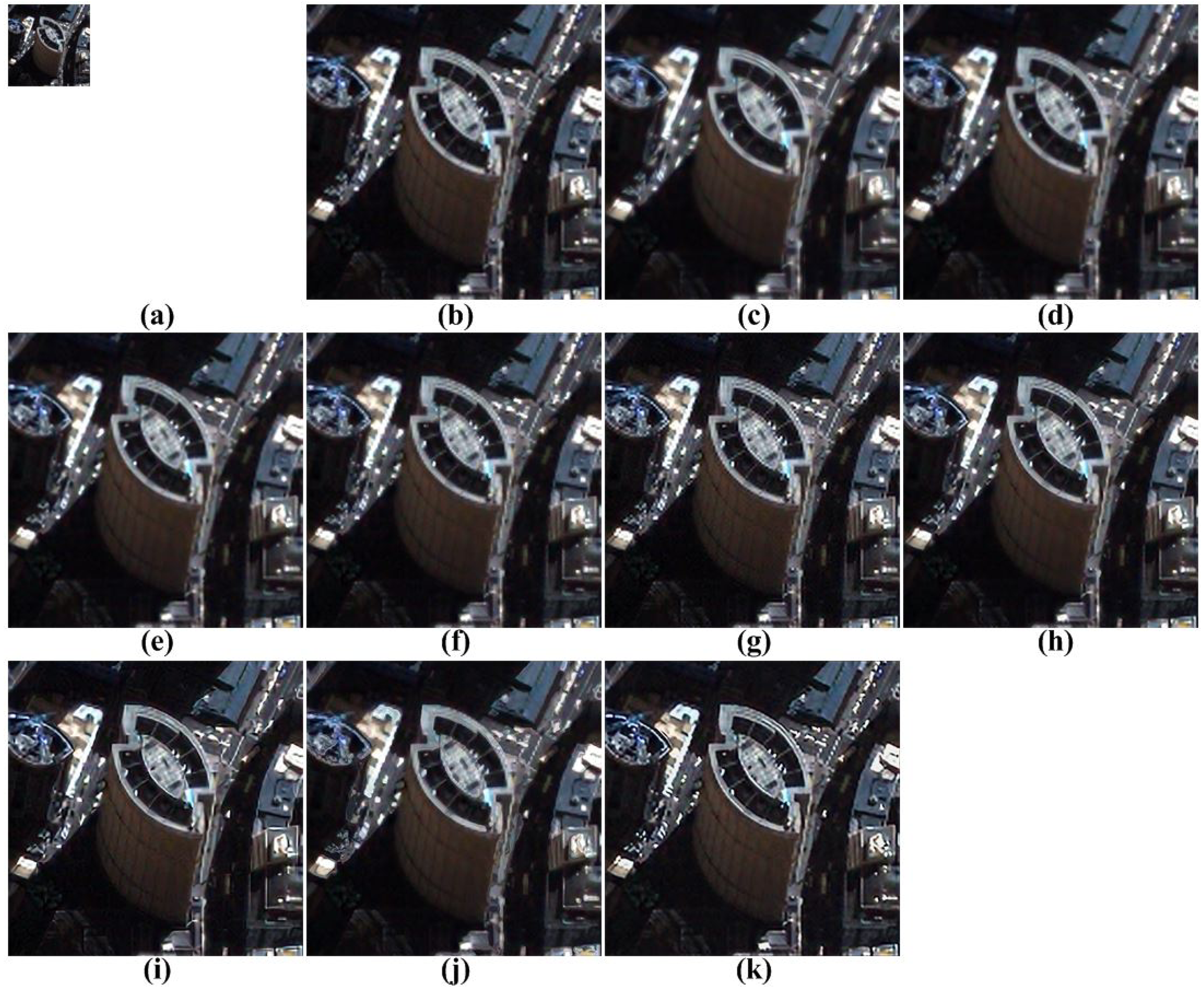

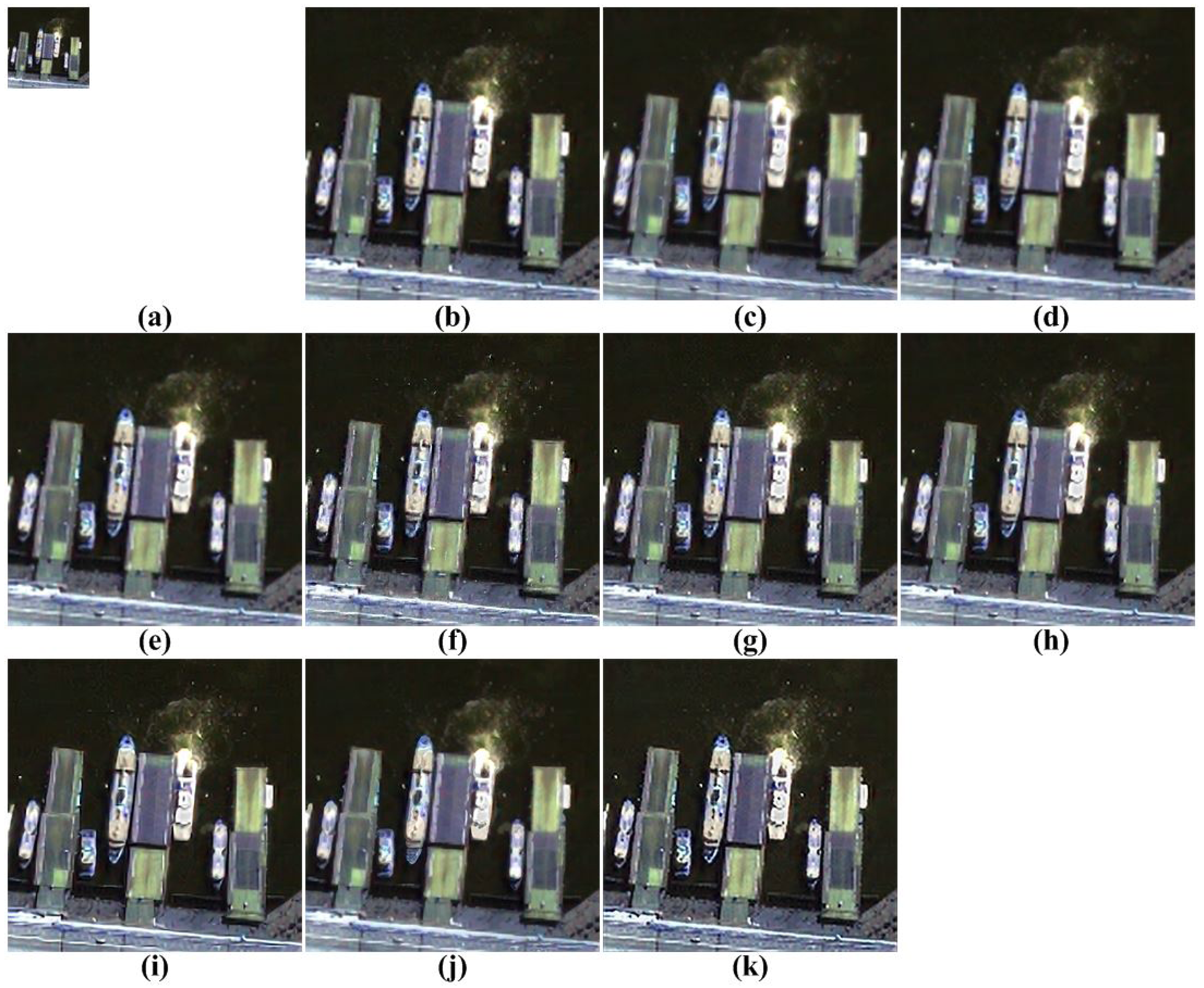

Figures 5–9 show the results of enhancing the resolution of multispectral images using the nine existing SR methods and the proposed multispectral SR method. The interpolation-based SR methods [3–6] commonly produce blurring and jagging artifacts and cannot successfully recover edge and texture details. The example-based method [7] and the patch-based SR methods [9–12] reconstruct clearer HR images than the interpolation-based methods, but they cannot avoid unnatural artifacts in the neighborhood of edges.

On the other hand, the proposed method significantly improves the SR result by reconstructing the original high-frequency details and sharpening edges without unnatural artifacts. The PSNR, SSIM, MS-SSIM, FSIM, BRISQUE and NIQE values of the simulated multispectral test images shown in Figure 4 are computed for the nine existing methods and the proposed method, as summarized in Table 1.

Based on Table 1, the proposed method gives better results than the existing SR methods in terms of PSNR, SSIM, MS-SSIM and FSIM. Although the proposed method did not always provide the best results in terms of NIQE and BRISQUE, the averaged performance using the extended set of test images shows that the proposed SR method performs the best.

In an additional experiment, an original monochromatic HR image is down-sampled and corrupted by additive zero-mean white Gaussian noise with standard deviation σ = 10 to obtain a simulated noisy LR image. The simulated LR image is enlarged by three existing SR methods [7,9,12] and the proposed method, as shown in Figure 10. As shown in Figure 10, the existing SR methods can neither remove the noise nor recover the details in the image, whereas the proposed method successfully reduces the noise and reconstructs the original details. Table 2 shows the PSNR and SSIM values of the three existing SR methods and the proposed method for the same test image.

The original example-based SR method was not designed for real-time processing, since it requires a patch dictionary before starting the SR process [7], and the performance and processing time of its patch search also depend on the size of the dictionary. The sparse representation-based SR method needs iterative optimization for the ℓ1 minimization [9], so its processing time is not bounded in advance. Although the proposed SR method also needs iterative optimization for the directionally-adaptive regularization, the regularized optimization can be replaced by an approximate finite-support spatial filter at the cost of some image quality [31]. Non-local means filtering is another time-consuming step in the proposed method; however, a finite processing time can be guaranteed by restricting the patch search range.

4.2. Experiment Using Real UAV Images

The proposed method is tested on real UAV images, as shown in Figure 11. More specifically, the remote sensing image is acquired by the QuickBird satellite, which is equipped with a push broom-type image sensor that provides a 0.65-m ground sample distance (GSD) panchromatic image.

Figures 12–15 show the results enhanced by the nine existing SR methods and the proposed method. To obtain no-reference measures, such as NIQE and BRISQUE, the images in Figure 11a–d are magnified by a factor of four. In addition, the original UAV images are down-sampled by a factor of four to generate simulated LR images, which are evaluated with the full-reference image quality measures, as summarized in Table 3.

As shown in Figures 12–15, the interpolation-based SR methods cannot successfully recover the details in the image. Since their results are not sufficiently close to the unknown HR image, their NIQE and BRISQUE values are high, whereas the example-based SR method generates unnatural artifacts near edges because of an inappropriate training dataset. The patch-based and proposed SR methods provide better SR results.

The PSNR, SSIM, MS-SSIM, FSIM, NIQE and BRISQUE values computed for the nine existing SR methods and the proposed method are summarized in Table 3. Based on Table 3, the proposed method gives better results than the existing SR methods in terms of PSNR, SSIM, MS-SSIM and FSIM. Although the proposed method did not always provide the best results in terms of NIQE and BRISQUE, the averaged performance using the extended set of test images shows that the proposed SR method performs the best.

5. Conclusions

In this paper, we presented a multisensor super-resolution (SR) method using directionally-adaptive regularization and multispectral image fusion. The proposed method can overcome the physical limitation of a multispectral image sensor by estimating the color HR image from a set of multispectral LR images. More specifically, the proposed method combines directionally-adaptive regularized image reconstruction with a modified multiscale non-local means (NLM) filter. As a result, the proposed SR method can restore the details near edge regions without noise amplification or unnatural SR artifacts. Experimental results show that the proposed method provides better SR results than existing state-of-the-art methods in terms of objective measures. The proposed method can be applied to various types of images, including gray-scale (single-band), RGB color and multispectral images.

Acknowledgements

This work was supported by Institute for Information & communications Technology Promotion (IITP) grant funded by the Korea government (MSIP) (B0101-15-0525, Development of global multi-target tracking and event prediction techniques based on real-time large-scale video analysis), and by the Technology Innovation Program (Development of Smart Video/Audio Surveillance SoC & Core Component for Onsite Decision Security System) under Grant 10047788.

Author Contributions

Wonseok Kang initiated the research and designed the experiments. Soohwan Yu performed experiments. Seungyong Ko analyzed the data. Joonki Paik wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, X.; Hu, Y.; Gao, X.; Tao, D.; Ning, B. A multi-frame image super-resolution method. Signal Process. 2010, 90, 405–414.

- Zhang, Y. Understanding image fusion. Photogramm. Eng. Remote Sens. 2004, 70, 657–661.

- Wick, D.; Martinez, T. Adaptive optical zoom. Opt. Eng. 2004, 43, 8–9.

- Li, X.; Orchard, M. New edge-directed interpolation. IEEE Trans. Image Process. 2001, 10, 1521–1527.

- Zhang, L.; Wu, X. An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 2006, 15, 2226–2238.

- Giachetti, A.; Asuni, N. Real-time artifact-free image upscaling. IEEE Trans. Image Process. 2011, 20, 2760–2768.

- Freeman, W.; Jones, T.; Pasztor, E.C. Example-based super-resolution. IEEE Comput. Graph. Appl. Mag. 2002, 22, 56–65.

- Glasner, D.; Bagon, S.; Irani, M. Super-resolution from a single image. Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September 2009; pp. 349–356.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Kim, K.; Kwon, Y. Single-image super-resolution using sparse regression and natural image prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1127–1133.

- Freedman, G.; Fattal, R. Image and video upscaling from local self-examples. ACM Trans. Graph. 2011, 30, 1–10.

- He, H.; Siu, W. Single image super-resolution using Gaussian process regression. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 449–456.

- Shettigara, V.K. A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set. Photogramm. Eng. Remote Sens. 1992, 58, 561–567.

- Tu, T.-M.; Huang, P.S.; Hung, C.-L.; Chang, C.-P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Choi, M. A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1672–1682.

- Ballester, C.; Caselles, V.; Igual, L.; Verdera, J. A variational model for P+XS image fusion. Int. J. Comput. Vis. 2006, 69, 43–58.

- Du, Q.; Younan, N.; King, R.; Shah, V. On the performance evaluation of pan-sharpening techniques. IEEE Geosci. Remote Sens. Lett. 2007, 4, 518–522.

- Nasrollahi, K.; Moeslund, T.B. Super-resolution: A comprehensive survey. Mach. Vis. Appl. 2014, 25, 1423–1468.

- Katsaggelos, A.K. Iterative image restoration algorithms. Opt. Eng. 1989, 28, 735–748.

- Katsaggelos, A.K.; Biemond, J.; Schafer, R.W.; Mersereau, R.M. A regularized iterative image restoration algorithm. IEEE Trans. Signal Process. 1991, 39, 914–929.

- Shin, J.; Jung, J.; Paik, J. Regularized iterative image interpolation and its application to spatially scalable coding. IEEE Trans. Consum. Electron. 1998, 44, 1042–1047.

- Shin, J.; Choung, Y.; Paik, J. Regularized iterative image sequence interpolation with spatially adaptive constraints. Proceedings of the IEEE International Conference on Image Processing, Chicago, IL, USA, 4–7 October 1998; pp. 470–473.

- Shin, J.; Paik, J.; Price, J.; Abidi, M. Adaptive regularized image interpolation using data fusion and steerable constraints. SPIE Vis. Commun. Image Process. 2001, 4310, 798–808.

- Zhao, Y.; Yang, J.; Zhang, Q.; Song, L.; Cheng, Y.; Pan, Q. Hyperspectral imagery super-resolution by sparse representation and spectral regularization. EURASIP J. Adv. Signal Process. 2011, 2011, 1–10.

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 60–65.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multi-scale structural similarity for image quality assessment. Proceedings of the IEEE Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 9–12 November 2003; pp. 1398–1402.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a completely blind image quality analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

- Kim, S.; Jun, S.; Lee, E.; Shin, J.; Paik, J. Real-time Bayer-domain image restoration for an extended depth of field (EDoF) camera. IEEE Trans. Consum. Electron. 2009, 55, 1756–1764.

| Images | Methods | [3] | [4] | [5] | [6] | [7] | [9] | [10] | [11] | [12] | Proposed |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Figure 4a | PSNR | 28.13 | 24.82 | 25.03 | 28.50 | 25.84 | 30.14 | 30.21 | - | 27.49 | 36.64 |

| SSIM [26] | 0.844 | 0.751 | 0.763 | 0.844 | 0.784 | 0.878 | 0.877 | - | 0.827 | 0.964 | |

| MS-SSIM [27] | 0.945 | 0.885 | 0.891 | 0.955 | 0.920 | 0.974 | 0.971 | - | 0.930 | 0.993 | |

| | FSIM [28] | 0.875 | 0.825 | 0.830 | 0.877 | 0.854 | 0.903 | 0.905 | - | 0.863 | 0.969 |
| | BRISQUE [29] | 65.30 | 64.29 | 72.66 | 63.22 | 51.98 | 55.51 | 53.84 | 57.78 | 58.68 | 48.30 |
| | NIQE [30] | 9.65 | 11.18 | 12.45 | 8.47 | 7.50 | 9.07 | 11.35 | 9.29 | 8.46 | 7.10 |
| Figure 4b | PSNR | 27.76 | 24.94 | 25.09 | 26.12 | 25.21 | 28.97 | 29.28 | - | 27.27 | 34.37 |
| | SSIM [26] | 0.794 | 0.695 | 0.707 | 0.743 | 0.738 | 0.816 | 0.818 | - | 0.777 | 0.931 |
| | MS-SSIM [27] | 0.939 | 0.870 | 0.877 | 0.909 | 0.919 | 0.961 | 0.962 | - | 0.935 | 0.990 |
| | FSIM [28] | 0.855 | 0.795 | 0.804 | 0.823 | 0.843 | 0.869 | 0.871 | - | 0.848 | 0.951 |
| | BRISQUE [29] | 65.83 | 70.40 | 69.35 | 58.66 | 48.66 | 62.82 | 53.32 | 53.97 | 56.96 | 48.33 |
| | NIQE [30] | 9.65 | 16.13 | 11.37 | 7.97 | 7.15 | 9.91 | 8.47 | 8.12 | 8.41 | 7.05 |
| Figure 4c | PSNR | 25.04 | 24.69 | 24.91 | 25.03 | 26.54 | 28.68 | 25.51 | - | 26.29 | 33.21 |
| | SSIM [26] | 0.712 | 0.680 | 0.694 | 0.708 | 0.766 | 0.824 | 0.819 | - | 0.772 | 0.932 |
| | MS-SSIM [27] | 0.882 | 0.853 | 0.866 | 0.876 | 0.926 | 0.965 | 0.962 | - | 0.914 | 0.987 |
| | FSIM [28] | 0.817 | 0.782 | 0.793 | 0.810 | 0.850 | 0.870 | 0.858 | - | 0.834 | 0.948 |
| | BRISQUE [29] | 58.51 | 66.21 | 70.53 | 61.71 | 51.29 | 51.78 | 49.35 | 51.19 | 57.65 | 49.06 |
| | NIQE [30] | 8.59 | 13.35 | 13.00 | 8.12 | 7.07 | 6.61 | 7.15 | 7.17 | 7.91 | 6.79 |
| Figure 4d | PSNR | 23.22 | 23.08 | 23.22 | 23.24 | 24.77 | 29.73 | 29.86 | - | 27.34 | 32.38 |
| | SSIM [26] | 0.679 | 0.649 | 0.665 | 0.677 | 0.736 | 0.843 | 0.837 | - | 0.796 | 0.939 |
| | MS-SSIM [27] | 0.874 | 0.858 | 0.865 | 0.871 | 0.917 | 0.974 | 0.971 | - | 0.950 | 0.988 |
| | FSIM [28] | 0.824 | 0.793 | 0.804 | 0.818 | 0.853 | 0.895 | 0.886 | - | 0.867 | 0.959 |
| | BRISQUE [29] | 64.41 | 68.59 | 74.00 | 67.63 | 61.84 | 56.83 | 57.22 | 60.81 | 64.12 | 60.83 |
| | NIQE [30] | 8.17 | 13.85 | 11.49 | 8.22 | 7.12 | 7.14 | 7.43 | 7.07 | 8.32 | 7.85 |
| Figure 4e | PSNR | 26.43 | 26.45 | 26.37 | 26.49 | 26.80 | 32.45 | 33.25 | - | 29.26 | 37.50 |
| | SSIM [26] | 0.852 | 0.846 | 0.850 | 0.853 | 0.861 | 0.925 | 0.930 | - | 0.896 | 0.969 |
| | MS-SSIM [27] | 0.918 | 0.915 | 0.915 | 0.918 | 0.935 | 0.985 | 0.985 | - | 0.967 | 0.996 |
| | FSIM [28] | 0.872 | 0.856 | 0.869 | 0.873 | 0.883 | 0.927 | 0.930 | - | 0.895 | 0.967 |
| | BRISQUE [29] | 68.90 | 74.64 | 74.39 | 66.52 | 51.99 | 56.45 | 57.68 | 61.63 | 61.92 | 56.55 |
| | NIQE [30] | 9.93 | 13.78 | 10.64 | 9.91 | 9.86 | 7.72 | 9.07 | 8.97 | 8.94 | 8.37 |
| Average | PSNR | 26.12 | 24.79 | 24.93 | 25.88 | 25.83 | 29.99 | 29.62 | - | 27.53 | 34.82 |
| | SSIM [26] | 0.776 | 0.724 | 0.736 | 0.765 | 0.777 | 0.857 | 0.856 | - | 0.814 | 0.947 |
| | MS-SSIM [27] | 0.912 | 0.876 | 0.883 | 0.906 | 0.923 | 0.972 | 0.970 | - | 0.939 | 0.991 |
| | FSIM [28] | 0.849 | 0.810 | 0.820 | 0.840 | 0.857 | 0.893 | 0.890 | - | 0.861 | 0.959 |
| | BRISQUE [29] | 64.59 | 68.83 | 72.19 | 63.55 | 53.15 | 56.68 | 54.28 | 57.08 | 59.87 | 52.61 |
| | NIQE [30] | 9.20 | 13.66 | 11.79 | 8.54 | 7.74 | 8.09 | 8.69 | 8.12 | 8.41 | 7.43 |
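The PSNR rows in these tables are reported in dB. Below is a minimal sketch of the standard definition, PSNR = 10 log10(peak² / MSE), operating on flat pixel sequences. It is a reference for what the numbers measure. It is not the evaluation code behind the tables. The function name `psnr` is hypothetical. The default peak of 255 assumes 8-bit images, because this section states neither the peak value nor the color space the authors used.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equal-length pixel sequences.

    Assumption: 8-bit intensities, so the default peak is 255.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)
```

PSNR is logarithmic in the MSE, so differences between columns translate into multiplicative error ratios. The average PSNR of the proposed method (34.82 dB) exceeds that of [9] (29.99 dB) by 4.83 dB. For a single image, a gap of that size corresponds to roughly a threefold lower MSE, since 10^(4.83/10) ≈ 3.0.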

| Methods | [7] | [9] | [12] | Proposed |
|---|---|---|---|---|
| PSNR | 20.15 | 21.83 | 18.95 | 24.45 |
| SSIM | 0.566 | 0.523 | 0.802 | 0.869 |

| Images | Methods | [3] | [4] | [5] | [6] | [7] | [9] | [10] | [11] | [12] | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Figure 11a | PSNR | 17.85 | 17.56 | 17.75 | 17.82 | 18.61 | 21.94 | 21.97 | - | 19.12 | 26.86 |
| | SSIM [26] | 0.710 | 0.681 | 0.698 | 0.709 | 0.723 | 0.824 | 0.830 | - | 0.766 | 0.930 |
| | MS-SSIM [27] | 0.837 | 0.844 | 0.843 | 0.840 | 0.882 | 0.948 | 0.955 | - | 0.907 | 0.990 |
| | FSIM [28] | 0.795 | 0.784 | 0.791 | 0.795 | 0.812 | 0.855 | 0.589 | - | 0.823 | 0.929 |
| | BRISQUE [29] | 63.35 | 38.87 | 71.87 | 63.29 | 63.91 | 52.78 | 55.73 | 58.04 | 64.69 | 50.90 |
| | NIQE [30] | 8.31 | 10.24 | 10.10 | 8.50 | 8.92 | 7.30 | 7.83 | 7.24 | 8.51 | 5.97 |
| Figure 11b | PSNR | 18.14 | 18.82 | 18.86 | 18.21 | 18.89 | 19.60 | 19.82 | - | 18.19 | 22.53 |
| | SSIM [26] | 0.641 | 0.654 | 0.662 | 0.666 | 0.618 | 0.670 | 0.680 | - | 0.590 | 0.824 |
| | MS-SSIM [27] | 0.821 | 0.840 | 0.825 | 0.825 | 0.886 | 0.915 | 0.922 | - | 0.853 | 0.971 |
| | FSIM [28] | 0.772 | 0.778 | 0.780 | 0.790 | 0.740 | 0.769 | 0.768 | - | 0.721 | 0.865 |
| | BRISQUE [29] | 52.93 | 46.00 | 67.31 | 50.33 | 46.24 | 51.71 | 39.59 | 41.50 | 39.03 | 37.12 |
| | NIQE [30] | 6.81 | 8.08 | 9.80 | 6.21 | 6.63 | 6.53 | 6.77 | 4.76 | 4.71 | 5.36 |
| Figure 11c | PSNR | 19.89 | 19.81 | 20.29 | 19.98 | 20.62 | 21.64 | 21.74 | - | 20.14 | 24.94 |
| | SSIM [26] | 0.634 | 0.609 | 0.651 | 0.643 | 0.615 | 0.660 | 0.661 | - | 0.600 | 0.856 |
| | MS-SSIM [27] | 0.805 | 0.802 | 0.822 | 0.807 | 0.876 | 0.903 | 0.897 | - | 0.847 | 0.973 |
| | FSIM [28] | 0.815 | 0.796 | 0.813 | 0.826 | 0.780 | 0.812 | 0.811 | - | 0.776 | 0.901 |
| | BRISQUE [29] | 61.30 | 63.46 | 71.18 | 63.32 | 64.93 | 53.94 | 53.28 | 54.58 | 62.75 | 55.88 |
| | NIQE [30] | 7.75 | 9.83 | 10.10 | 7.94 | 8.66 | 6.58 | 7.18 | 6.63 | 7.63 | 5.85 |
| Figure 11d | PSNR | 18.68 | 19.00 | 19.07 | 18.52 | 19.59 | 20.36 | 20.47 | - | 18.99 | 23.98 |
| | SSIM [26] | 0.633 | 0.646 | 0.656 | 0.646 | 0.635 | 0.679 | 0.686 | - | 0.635 | 0.841 |
| | MS-SSIM [27] | 0.818 | 0.828 | 0.835 | 0.816 | 0.882 | 0.912 | 0.910 | - | 0.872 | 0.973 |
| | FSIM [28] | 0.776 | 0.776 | 0.780 | 0.778 | 0.746 | 0.778 | 0.776 | - | 0.753 | 0.873 |
| | BRISQUE [29] | 55.20 | 52.13 | 68.70 | 54.06 | 51.35 | 53.70 | 44.93 | 50.01 | 48.33 | 47.51 |
| | NIQE [30] | 7.11 | 8.69 | 9.48 | 6.66 | 5.06 | 5.65 | 5.96 | 5.11 | 5.33 | 5.56 |
| Average | PSNR | 18.64 | 18.80 | 18.99 | 18.63 | 19.43 | 20.88 | 21.00 | - | 19.11 | 24.58 |
| | SSIM [26] | 0.654 | 0.648 | 0.667 | 0.666 | 0.648 | 0.708 | 0.714 | - | 0.648 | 0.863 |
| | MS-SSIM [27] | 0.820 | 0.828 | 0.831 | 0.822 | 0.881 | 0.919 | 0.921 | - | 0.870 | 0.977 |
| | FSIM [28] | 0.789 | 0.783 | 0.791 | 0.797 | 0.770 | 0.803 | 0.736 | - | 0.768 | 0.892 |
| | BRISQUE [29] | 58.19 | 50.12 | 69.77 | 57.75 | 56.61 | 53.03 | 48.38 | 51.03 | 53.70 | 47.85 |
| | NIQE [30] | 7.49 | 9.21 | 9.87 | 7.33 | 7.32 | 6.52 | 6.94 | 5.93 | 6.55 | 5.68 |
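The Average rows appear to be plain arithmetic means over the four test images. The direction of improvement differs by metric:

- The full-reference scores (PSNR, SSIM, MS-SSIM, FSIM) improve upward.
- The no-reference scores BRISQUE and NIQE improve downward.

The sketch below does two things. It recomputes the average PSNR of the Figure 11 table from the per-image rows, and it picks the best method for a metric with that metric's direction taken into account. The helper names (`column_means`, `best_method`) and the data layout are hypothetical. Only the numbers are copied from the table. Treating the Average row as an unweighted mean is an assumption.

```python
from statistics import mean

# Direction of improvement per metric: full-reference scores go up,
# no-reference scores (BRISQUE, NIQE) go down.
HIGHER_IS_BETTER = {"PSNR": True, "SSIM": True, "MS-SSIM": True, "FSIM": True,
                    "BRISQUE": False, "NIQE": False}

# Columns that report PSNR ([11] has no full-reference scores in the table).
FR_METHODS = ["[3]", "[4]", "[5]", "[6]", "[7]", "[9]", "[10]", "[12]", "Proposed"]
# Columns that report BRISQUE/NIQE, in table order (includes [11]).
NR_METHODS = FR_METHODS[:7] + ["[11]"] + FR_METHODS[7:]

# Per-image PSNR (dB) for Figure 11a-d, in FR_METHODS order.
PSNR_FIG11 = [
    [17.85, 17.56, 17.75, 17.82, 18.61, 21.94, 21.97, 19.12, 26.86],  # 11a
    [18.14, 18.82, 18.86, 18.21, 18.89, 19.60, 19.82, 18.19, 22.53],  # 11b
    [19.89, 19.81, 20.29, 19.98, 20.62, 21.64, 21.74, 20.14, 24.94],  # 11c
    [18.68, 19.00, 19.07, 18.52, 19.59, 20.36, 20.47, 18.99, 23.98],  # 11d
]

# NIQE for Figure 11b, in NR_METHODS order.
NIQE_FIG11B = [6.81, 8.08, 9.80, 6.21, 6.63, 6.53, 6.77, 4.76, 4.71, 5.36]


def column_means(rows):
    """Arithmetic mean of each column (assumed to reproduce the 'Average' row)."""
    return [mean(col) for col in zip(*rows)]


def best_method(methods, scores, metric):
    """Name of the best-scoring method, honoring the metric's direction."""
    pick = max if HIGHER_IS_BETTER[metric] else min
    return pick(zip(methods, scores), key=lambda pair: pair[1])[0]


if __name__ == "__main__":
    averages = column_means(PSNR_FIG11)
    for name, value in zip(FR_METHODS, averages):
        print(f"{name:>9}: {value:.4f} dB")
    print("best average PSNR:", best_method(FR_METHODS, averages, "PSNR"))
    print("best NIQE, Figure 11b:", best_method(NR_METHODS, NIQE_FIG11B, "NIQE"))
```

The recomputed means agree with the reported Average row to within 0.005 dB. The largest gap, for [9], is consistent with the per-image entries having been rounded. The script reports the proposed method as best on average PSNR. It reports [12] as best on the Figure 11b NIQE score (4.71 versus 5.36 for the proposed method), so the no-reference rankings are not uniform across images.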

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Kang, Wonseok; Yu, Soohwan; Ko, Seungyong; Paik, Joonki. Multisensor Super Resolution Using Directionally-Adaptive Regularization for UAV Images. Sensors 2015, 15(5), 12053-12079. https://doi.org/10.3390/s150512053