1. Introduction

The use of three-dimensional (3D) data acquisition systems (point clouds or images) for building models of partly-submerged infrastructures is developing rapidly. In the literature, many systems, including industrial solutions, combine underwater and terrestrial sensors to investigate structures such as dams, harbors or pipelines [1,2,3,4]. However, only a small number of published works assess the accuracy of the produced 3D models by comparing them with reference models [5,6,7].

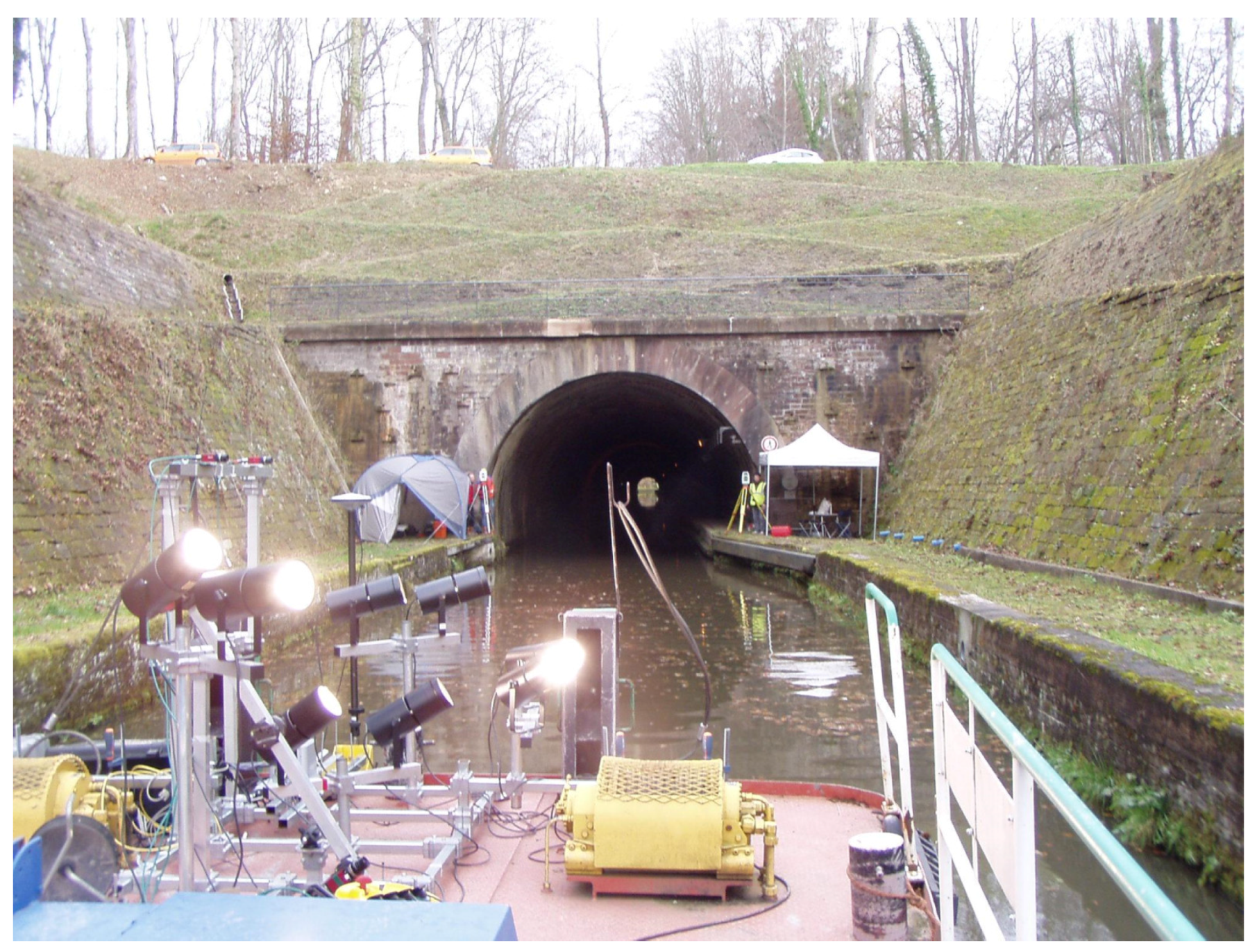

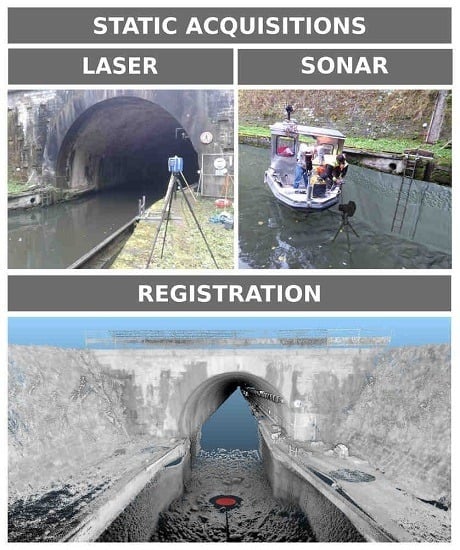

In this paper, we focus on the construction of an accurate 3D model of the entrances of a canal tunnel from static acquisitions of point clouds. This model will be used as a reference for future accuracy assessments in the context of the development of an embedded acquisition system devoted to the full 3D modeling of canal tunnels (i.e., including both their underwater and above-water parts). Conventional mobile mapping systems cannot be used for positioning a barge, because global navigation satellite systems (GNSS) work neither in tunnels nor at their entrances, which are most of the time bordered by narrow embankments (see Figure 1 and Figure 2-left), so innovative solutions must be proposed. In France, the potential application concerns 31 tunnels currently in use, representing 42 km of underground waterways: the maintenance of these structures is a necessity, not only for preserving the historical heritage they represent, but also for protecting goods and persons. In the context of a partnership between Voies Navigables de France (VNF, the French operator of waterways), the Centre d’Études des tunnels (CETU) and the Cerema, in collaboration with the Photogrammetry and Geomatics Group at INSA-Strasbourg (institut national des sciences appliquées), an image acquisition prototype, embedded on a barge, has been devised for imaging the tunnel vaults and side walls (see Figure 1). During this project, solutions to geo-reference data precisely in the tunnel have been proposed and evaluated [8]. This system is going to be equipped with a multibeam echosounder to provide 3D views of the underwater parts of canal tunnels.

Figure 1. Modular on-board mobile image recording system at the experimental site.

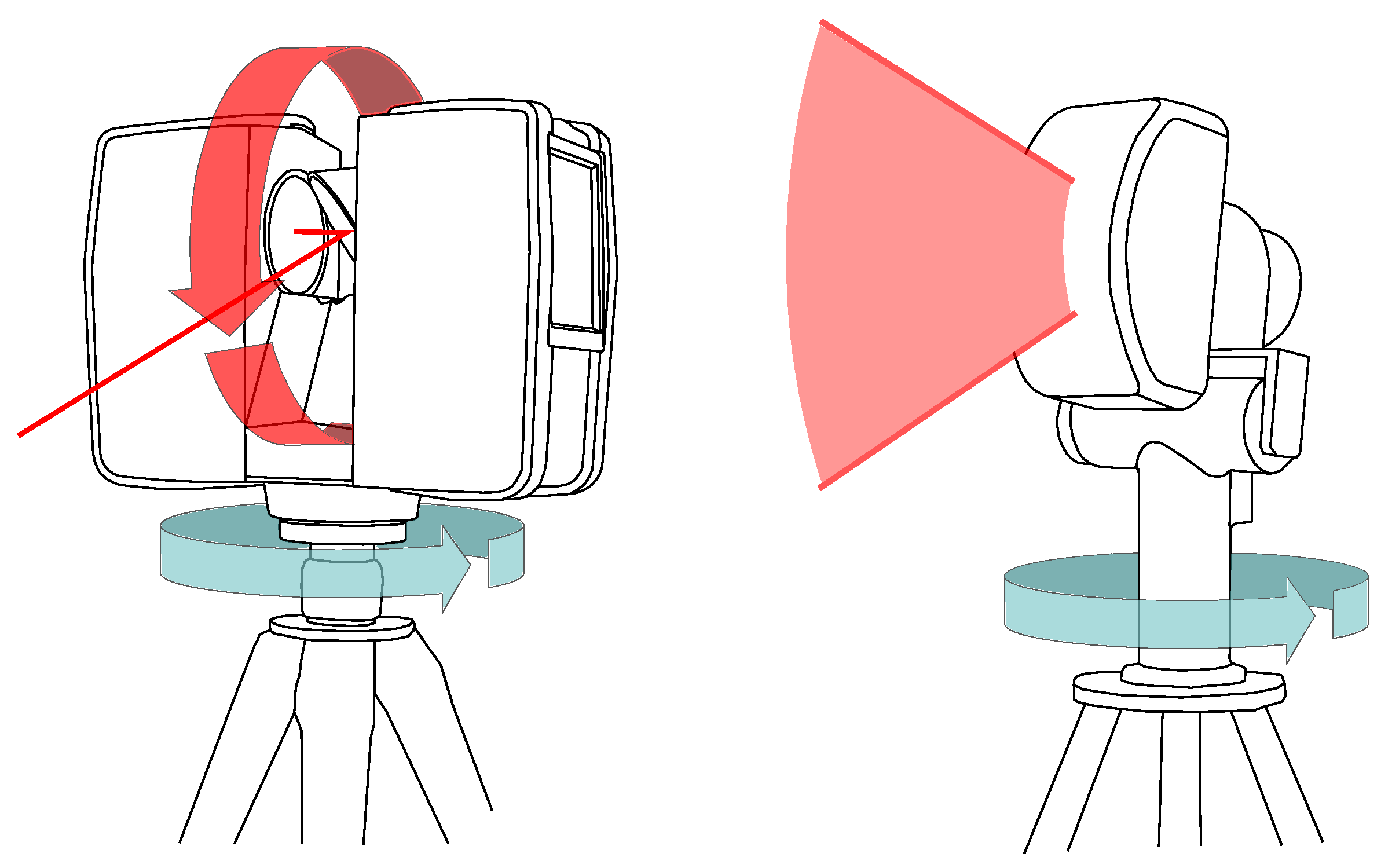

Figure 2. Constraints that apply to recording systems in canal tunnels. (Left) Global navigation data are not available in tunnels, nor at their entrances, because satellites are masked, hindering conventional mobile mapping; (Center) The turbidity of water prevents using optical imaging devices; (Right) The canal is shallow and narrow, so robust sonar processing algorithms are needed.

A 3D reference model will be necessary to assess the accuracy of the model of the whole tunnel provided by the mobile recording system under development. We have chosen to build this model from separate, static acquisitions of the underwater and above-water parts of the tunnel entrances. For the above-water parts, point clouds have been collected using a 3D terrestrial laser scanning (TLS) system. A previous evaluation of the resulting 3D terrestrial model has shown that its accuracy is 1.7 cm [8]. Since the water turbidity (see Figure 2-center) excludes the use of optical sensors, the acquisitions of the underwater parts of the canal have been performed using a 3D mechanical scanning sonar (MSS) from static positions, in a similar way to a TLS [9,10]. This emerging technology may provide more accurate models than mobile systems. However, unlike the processing of TLS data, the registration and geo-referencing of MSS point clouds are complex, and several challenges need to be solved. MSS data are intrinsically noisy, and the narrowness and shallowness of the canal (Figure 2-right) induce artifacts due to water surface and sidewall reflections. Therefore, robust methods must be sought to alleviate these difficulties and reconstruct an accurate 3D model of the underwater part.

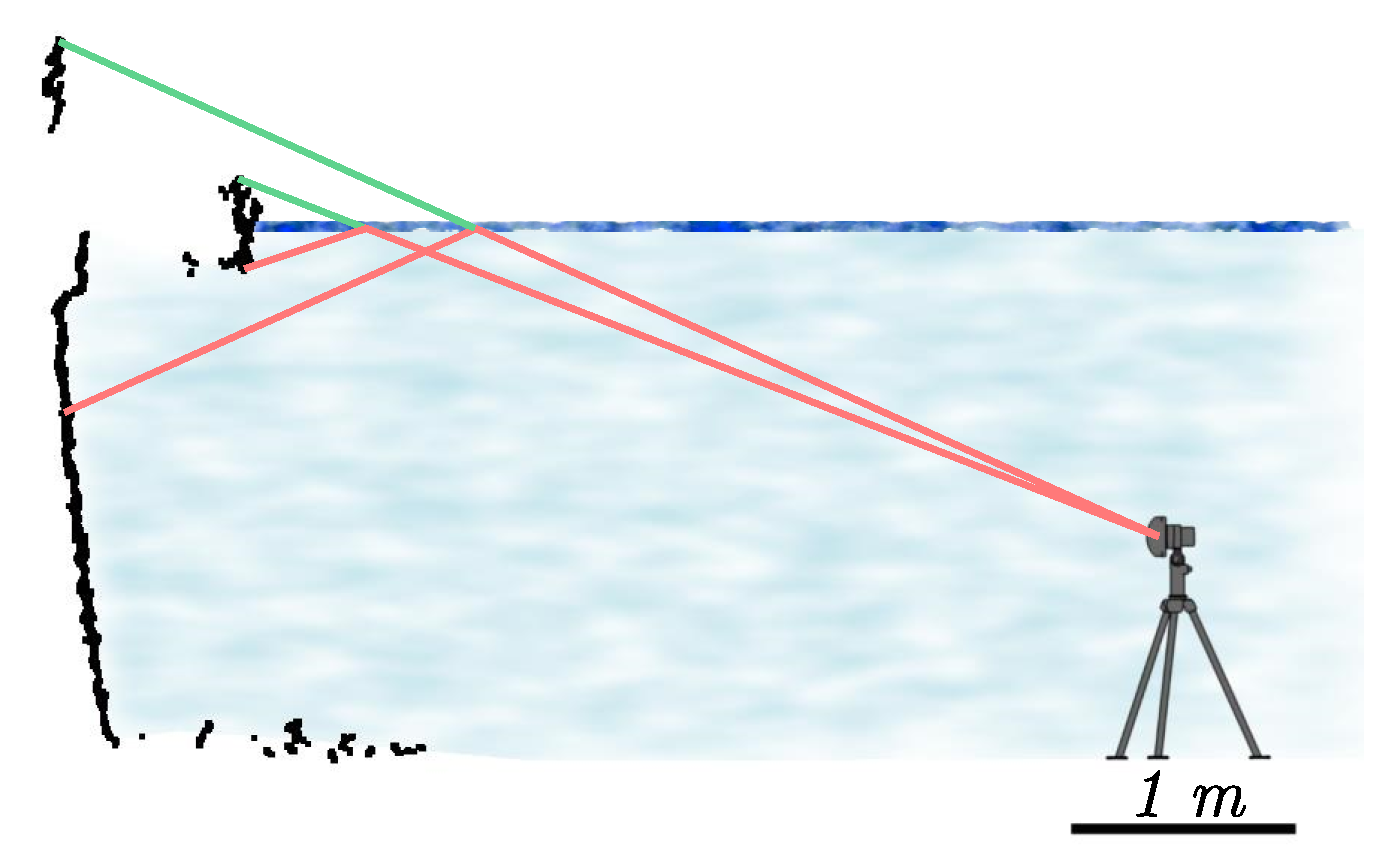

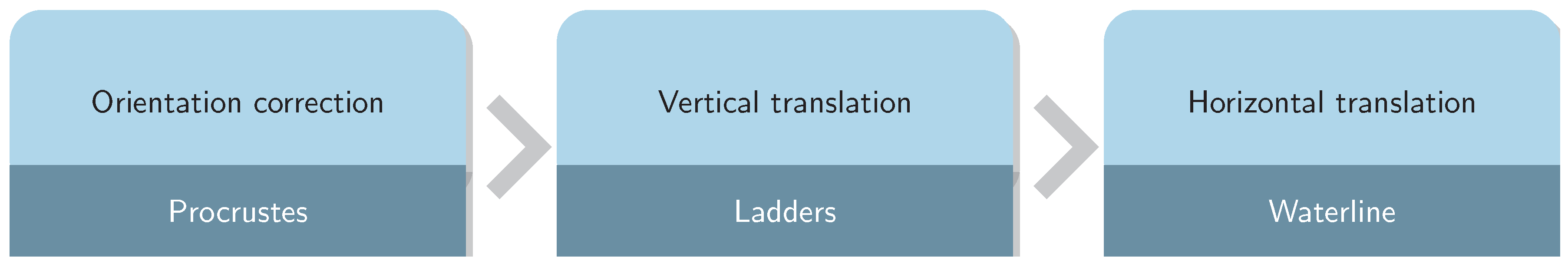

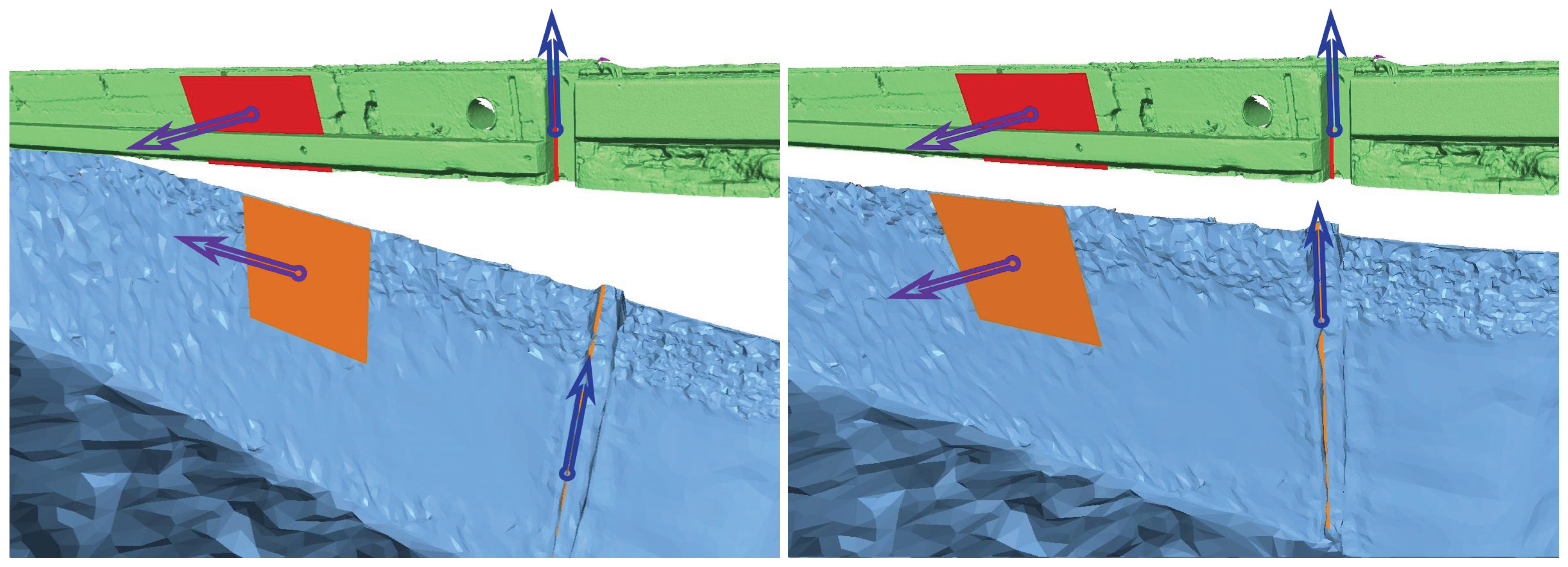

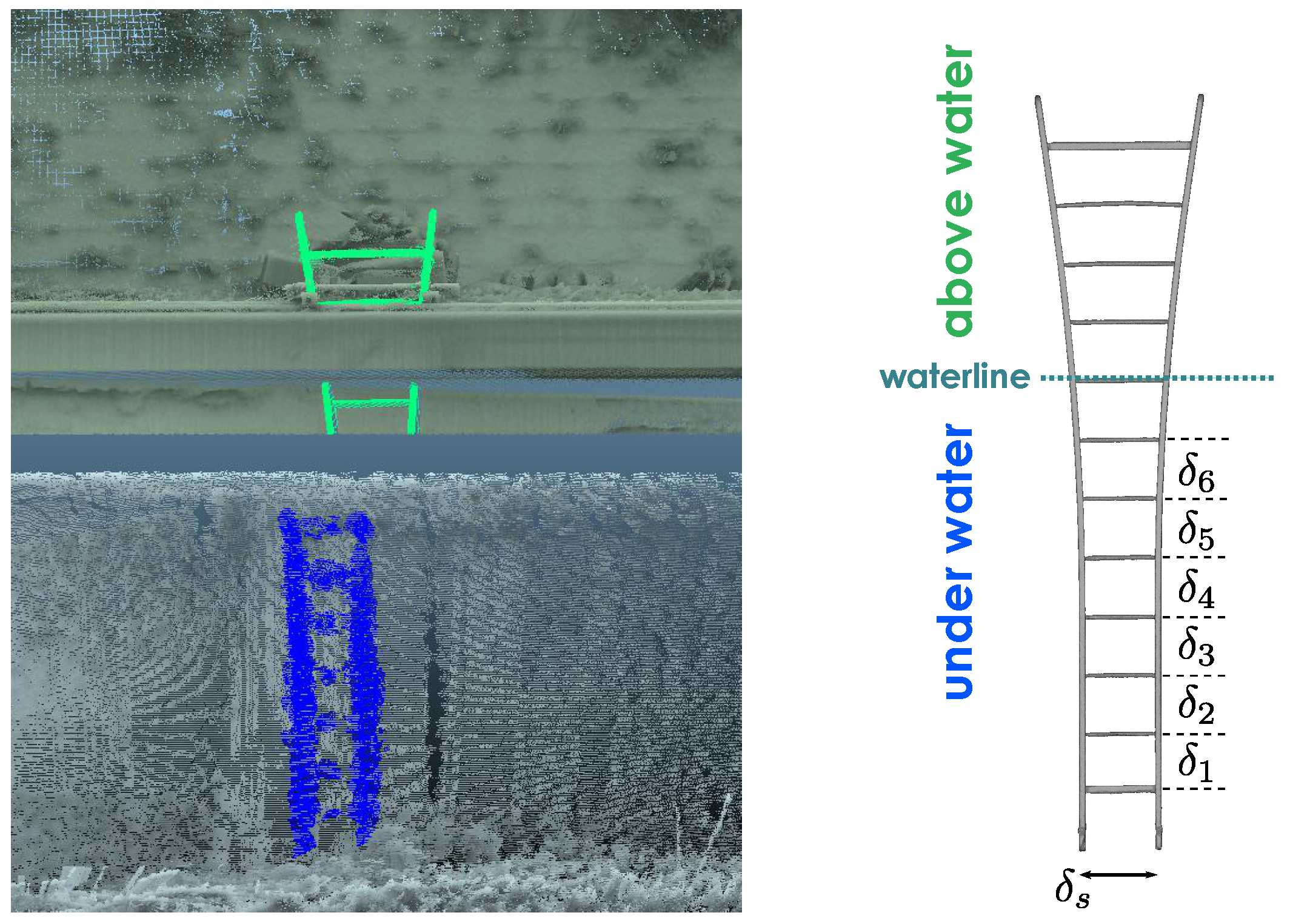

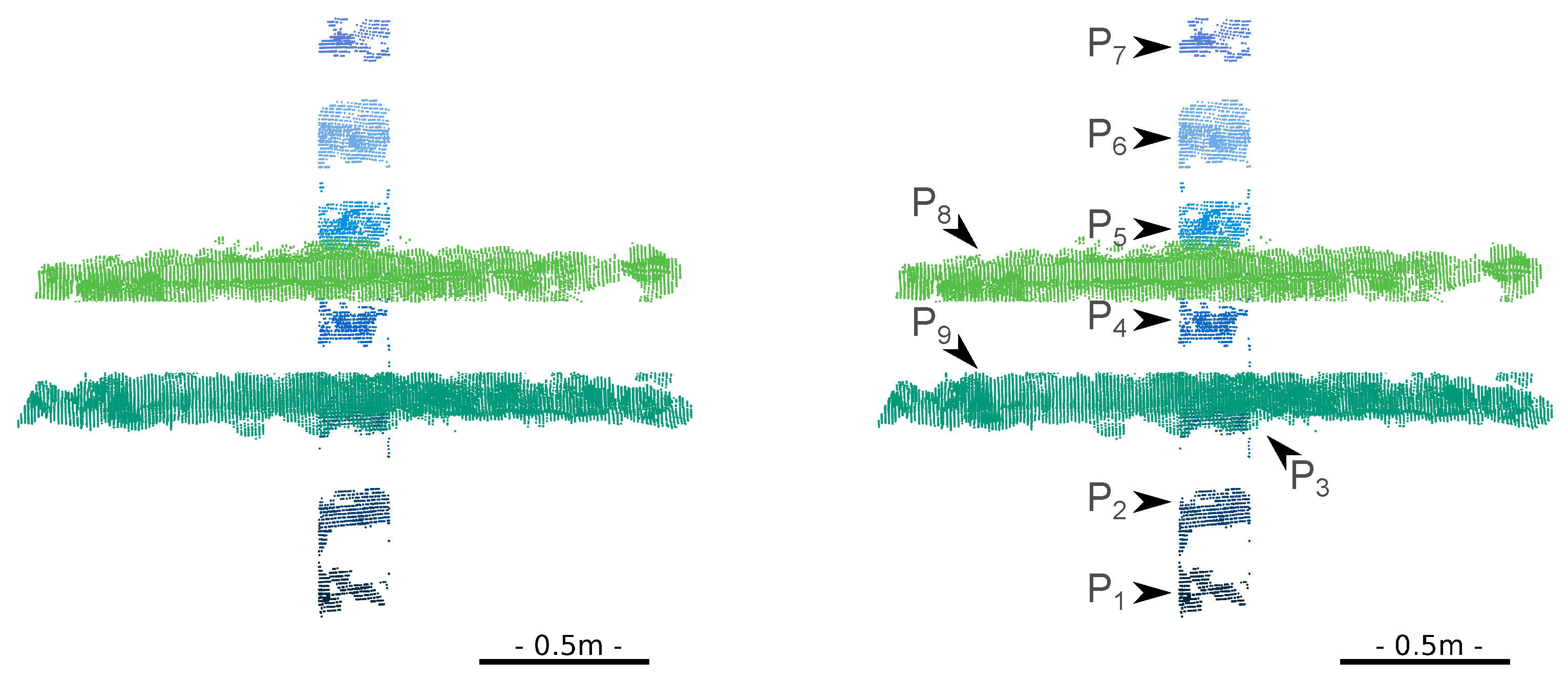

Aligning the 3D underwater model with the TLS one to form the global reference model is the second challenging problem, because, at every scanning position, the location and the orientation of the sonar device are not directly available. In this contribution, we propose to directly generate the full 3D model of the canal tunnel by co-registering the above-water and underwater point clouds. Such an approach ideally requires an overlap between the TLS and MSS point clouds. In our case, there is no overlap, but we can exploit some geometric primitives (planes, lines, silhouette of the waterline) that are common to the above-water and underwater parts of the structure. Moreover, we partially immersed wooden ladders on each canal bank. The robust fitting of the rungs and stiles of the ladders in both point clouds, using maximum likelihood-type estimators (M-estimators), provides additional constraints for modeling the canal tunnel.
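To make the idea of robust fitting with an M-estimator more concrete, the following minimal sketch fits a single 3D line to noisy points by iteratively reweighted least squares. It is only an illustration under stated assumptions: the Huber weight function, the tuning constant 1.345, the median-based scale and the function name `fit_line_huber` are choices made here, not details taken from the method described in this paper, which fits the stiles and rungs simultaneously.

```python
import numpy as np

def fit_line_huber(points, n_iter=30, tuning=1.345):
    """Robustly fit a 3D line; returns (point_on_line, unit_direction).

    Hypothetical helper: Huber weights and the tuning constant are
    assumptions of this sketch, not the paper's exact estimator.
    """
    pts = np.asarray(points, dtype=float)
    weights = np.ones(len(pts))
    for _ in range(n_iter):
        # Weighted centroid and weighted scatter matrix.
        centroid = np.average(pts, axis=0, weights=weights)
        centered = pts - centroid
        scatter = (centered * weights[:, None]).T @ centered
        # The line direction is the eigenvector of the largest eigenvalue
        # (eigh returns eigenvalues in ascending order).
        direction = np.linalg.eigh(scatter)[1][:, -1]
        # Orthogonal distance of every point to the current line.
        along = centered @ direction
        resid = np.linalg.norm(centered - np.outer(along, direction), axis=1)
        # Robust scale estimated from the median residual (assumed choice).
        scale = 1.4826 * np.median(resid) + 1e-12
        k = tuning * scale
        # Huber weights: 1 below the threshold, k / r above it.
        weights = np.where(resid <= k, 1.0, k / np.maximum(resid, 1e-12))
    return centroid, direction
```

Points far from the current line receive small weights, so isolated sonar artifacts influence the fitted direction much less than in an ordinary least-squares fit.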

The paper is organized as follows. We first review related works in Section 2. Then, in Section 3, we introduce TLS and MSS data recording systems. Sonar data processing is described in Section 4. Section 5 is dedicated to the construction of the full 3D model by co-registering the above- and under-water models. In Section 6, we comment on the experimental results. Section 7 concludes the paper and proposes future directions for this work.

2. Related Work

For the purpose of assessing the accuracy of 3D acquisitions of civil engineering structures, the 3D reference model has to be more accurate than the model under inspection. Under certain circumstances, construction plans are available, and an as-built model may be used. For example, in [11], the evaluation of 3D reconstructions by an underwater SLAM (simultaneous localization and mapping) method was performed with a computer-aided design (CAD) model of a ship hull. Most canal tunnels, however, were bored during the 19th and 20th centuries (e.g., our test structure, Niderviller’s tunnel, located near Strasbourg (France), was bored between 1839 and 1845), and their construction plans, even limited to the headwalls, are not available or not accurate enough, so other solutions must be sought.

The alternative for building ground-truth models is to survey the object with a very accurate measurement device. For the above-water parts, geo-referenced points or point clouds may be used. In [6], the accuracy of data collected by a boat-based mobile laser scanning system is evaluated by using a set of reference spheres, positioned by GNSS. In [12], a set of geo-localized TLS point clouds provided reference data with centimetric precision for a mobile mapping system verification. A similar technique was reported in [8].

In the case of partly-immersed structures, there are two possibilities. The first one is to immerse an artificial reference object that can be surveyed beforehand, using a static TLS, for example. This technique was recently used in [7] to evaluate CIDCO (Centre Interdisciplinaire de Développement en Cartographie des Océans) sonar prototypes. In this work, a test bench made of concrete panels with protrusions and extrusions was scanned by a TLS at a millimeter resolution, with a 2-cm resampling, before being immersed and surveyed. The second one is to exploit existing structures that can be emptied, such as dry docks, or filled, such as dam reservoirs. For example, in [5], the Blueview company performed acquisitions in dry docks to evaluate multi-beam sonar equipment. A TLS survey of the empty dry dock was first performed, and sonar acquisitions were made after filling the dock. In [7], a 3D LiDAR model of a dam was acquired before the reservoir was filled, and then, a survey was made using a multi-beam echosounder (MBES), showing only slight, local differences (less than 5 cm) due to the shift of materials during the filling operation. In a similar manner, it is possible to benefit from natural effects, such as tide. For example, in [1], a surface was surveyed at high tide using a sonar system, and a total station was used to assess the acquisition process at low tide.

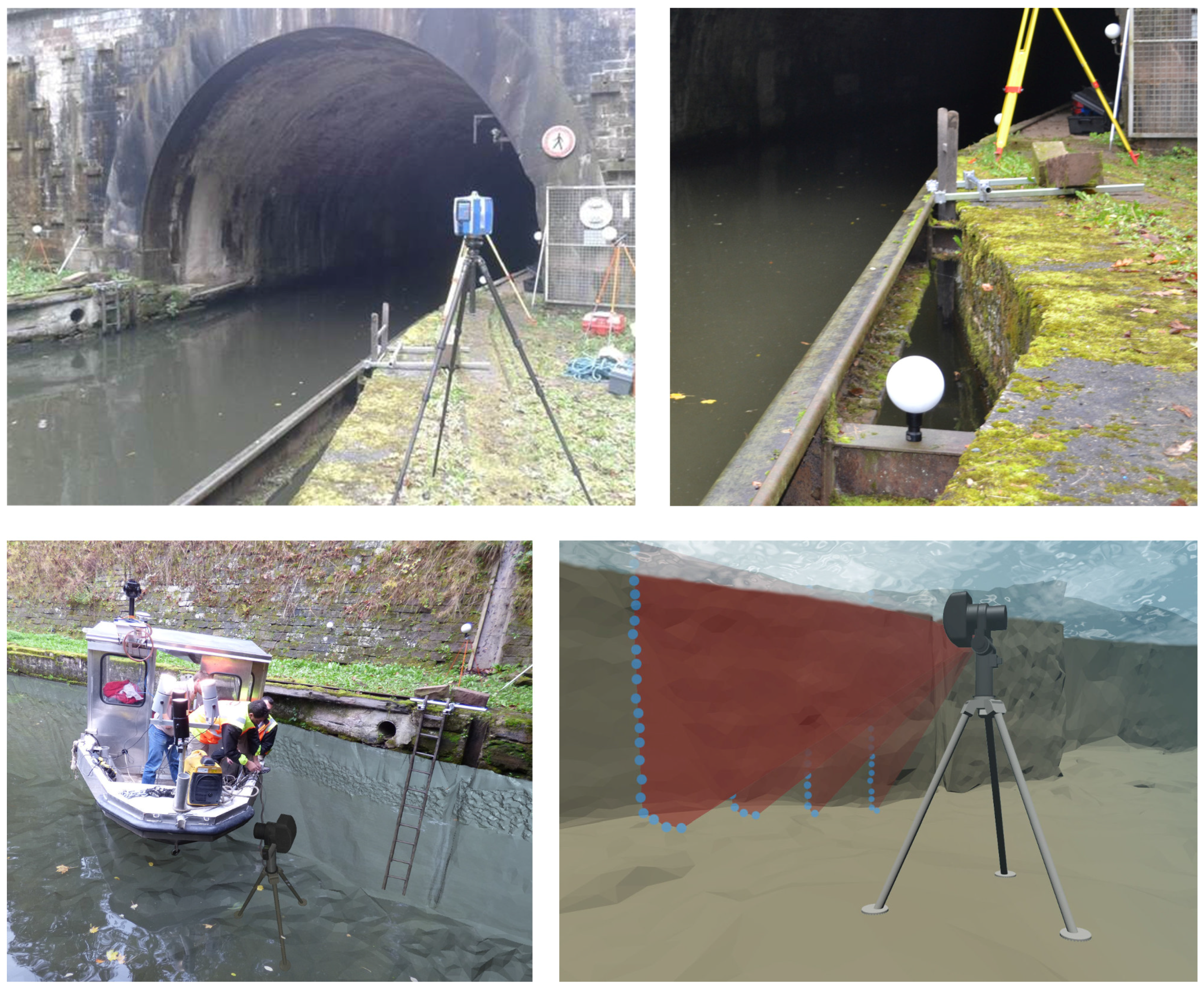

In our case, it would have been interesting to complement the existing TLS survey of the above-water parts of our test structure, Niderviller’s tunnel, after emptying the canal. Unfortunately, such an operation is costly and may even be hazardous due to the age of the tunnel: the canal walls, in poor condition, might crumble. Therefore, canal managers are often reluctant to empty canals, and such operations are very rare. For example, Niderviller’s tunnel and a nearby one, Arzviller’s tunnel, were emptied in 2009 (before the beginning of our study); see Figure 3. This was the first time since 1968. Since it is not possible to empty the canal, we have to resort to 3D underwater imaging techniques. Mobile or static systems might be envisioned.

Figure 3. Entrance of Arzviller’s tunnel during its emptying in 2009.

In recent years, mobile surveying systems have been developed to inspect partially- or totally-immersed open structures, like harbors or dams. Most of these operational systems provide 3D models thanks to an MBES for the underwater parts and a terrestrial laser scanner (TLS) for the above-water parts. The acquisition is performed in a dynamic way in order to sweep the surveyed structure. The localization of the system is hence of central importance. Most of the time, the associated positioning system combines GNSS and inertial navigation systems (INS); see, e.g., [1,3]. However, these methods are unsuitable for canal tunnels due to the lack of a GNSS signal. Alternatives to GNSS/INS systems were proposed in the robotics and computer vision literature. For example, [13] introduces a SLAM approach to obtain at the same time the 3D model and the localization of the mobile platform, thanks to the registration of TLS point clouds acquired at high frequency. In [8], we proposed a simplified visual odometry technique to estimate the position of our mobile mapping prototype along the tunnel. However, all of these methods suffer from drift, which is especially noticeable in elongated structures, such as tunnels, and which can only be corrected using reference points, which are more difficult to set up in the absence of a GNSS signal. Other potentially interesting systems were developed in the context of large-diameter pipe inspection. Recently, two devices were proposed: the ABIS (above and below inspection system) of the ASI Marine Technology company (see [4]) and the HD Profiler System of the Hydromax USA company (see [2]). Both systems acquire laser and sonar data and HD images. The documentation of these commercial solutions is limited. Furthermore, a towed device cannot be used in certain tunnels, which are curved.

In order to avoid the difficulties related to the localization of mobile mapping systems and to obtain an accurate reference model, we choose to perform static acquisitions to build the underwater part of our reference model. Such an approach is classical for TLS data. Thanks to the recent availability of a 3D mechanical scanning sonar (MSS), it is now possible to obtain a 3D model from static sonar acquisitions as well. The MSS device was used in several underwater surveys, such as the ones introduced in [9,10]. In a TLS-like manner, scans were carried out from several positions to get a full model. The co-registration of point clouds was done, in a local coordinate system, with the iterative closest point (ICP) algorithm.

The positioning of underwater point clouds in the same coordinate system as the TLS data can be performed in direct or indirect ways (see [14]). Both methods require the knowledge of reference points. In the literature, one may find several solutions to define these points underwater. The first method is acoustic positioning based on triangulation. The operating principle is close to that of GNSS, except that acoustic waves are used in the water. For example, in [15], buoys equipped with ultra-short baseline (USBL) transceivers, tied up with GNSS receivers, yield the localization of a remotely-operated vehicle (ROV). When the survey is carried out in shallow water, underwater points can be surveyed with terrestrial methods thanks to a long pole equipped with a prism for a total station or a GPS antenna (see [16]). Lastly, in [17], poles with underwater and above-water targets are partially immersed. Thus, underwater and above-water acquisitions can be co-registered, because the poles create a link between both models. In the present work, we have chosen a similar solution, in which wooden ladders, as well as existing geometric primitives of the structure, are used to link the underwater and above-water models.

4. Sonar Data Processing

A first inspection of the raw sonar data reveals measurement errors as well as the noisy nature of MSS point clouds. Both must be handled. Furthermore, to obtain a full 3D model, the underwater and above-water point clouds have to be aligned by registration.

4.1. Sonar Measurement Errors

The raw sonar output is an acoustic image constructed from the received echoes in each beam. More specifically, from each beam, the echo with the highest intensity is used to estimate the 3D point. However, the acoustic image brings out some measurement errors. In most cases, these errors have a lower intensity than the recorded object, so they do not appear in the output point cloud, but some artifacts may remain.
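The selection of the strongest echo in each beam can be sketched as follows. This is a simplified illustration, not the sonar manufacturer's processing: the array layout (one row of intensity samples per beam), the uniform range bin size `range_step`, the pan/tilt angle convention and the function name are all assumptions made for the example.

```python
import numpy as np

def strongest_echo_points(intensity, pan, tilt, range_step):
    """Convert per-beam echo intensities into one 3D point per beam.

    intensity : (n_beams, n_samples) array of echo intensities
    pan, tilt : per-beam angles in radians (assumed convention:
                pan about the vertical axis, tilt above the horizontal)
    range_step: assumed uniform distance between range samples, in meters
    """
    intensity = np.asarray(intensity, dtype=float)
    pan = np.asarray(pan, dtype=float)
    tilt = np.asarray(tilt, dtype=float)
    # Index of the highest-intensity echo in each beam.
    peak = np.argmax(intensity, axis=1)
    # Range of that echo, assuming sample 0 lies at the sensor.
    rng = peak * range_step
    # Spherical to Cartesian conversion in the sensor frame.
    x = rng * np.cos(tilt) * np.cos(pan)
    y = rng * np.cos(tilt) * np.sin(pan)
    z = rng * np.sin(tilt)
    return np.column_stack([x, y, z])
```

Because only the maximum of each beam is kept, a weaker spurious echo is discarded automatically, whereas a reflection that is stronger than the true return ends up in the point cloud as an artifact.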

Some measurement errors in sonar data may come from the scanner device itself. A list of error sources is reported in [5], along with their consequences on data recording and possible adjustments to alleviate them. Typical problems that may occur are: platform motion, tilt offset errors, insufficient coverage or incorrect sound speed. Some corrections can be applied in post-processing, but in some cases, new scans need to be taken. These gross errors are checked immediately after scanning. However, slight errors may go unnoticed in the raw point cloud.

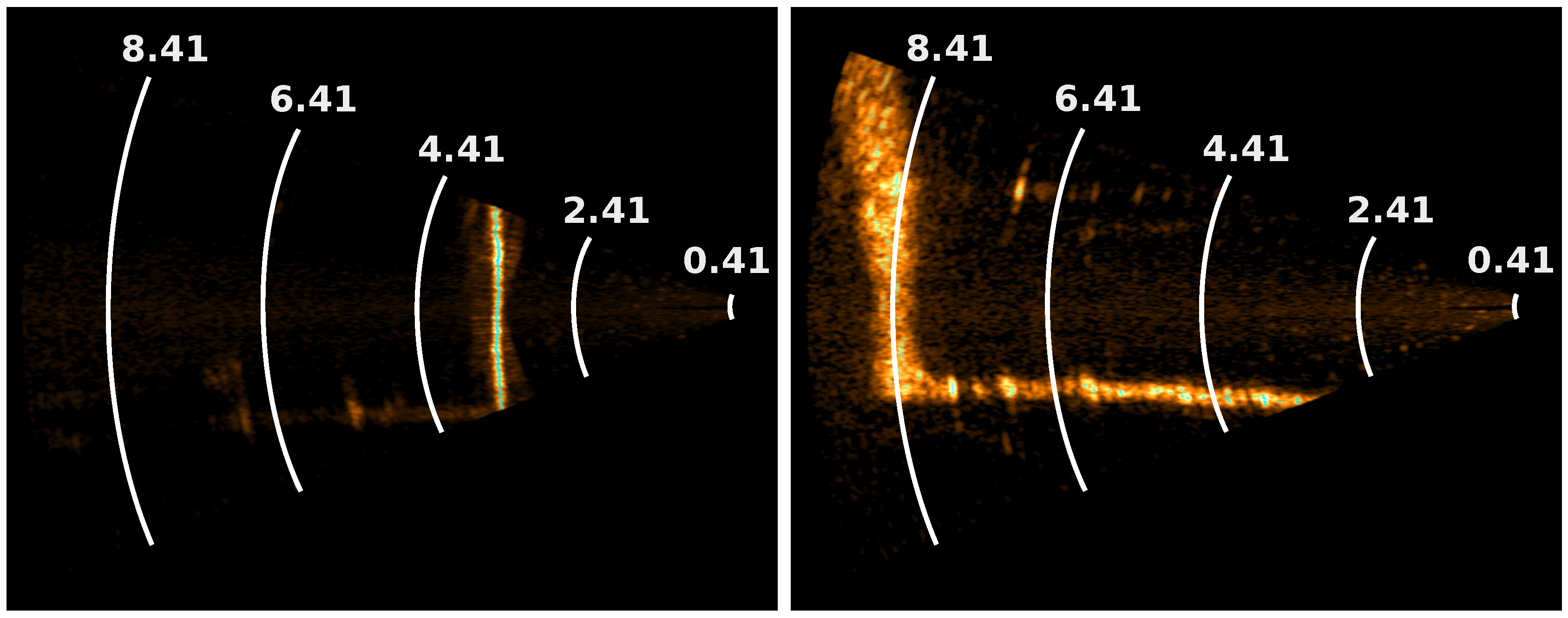

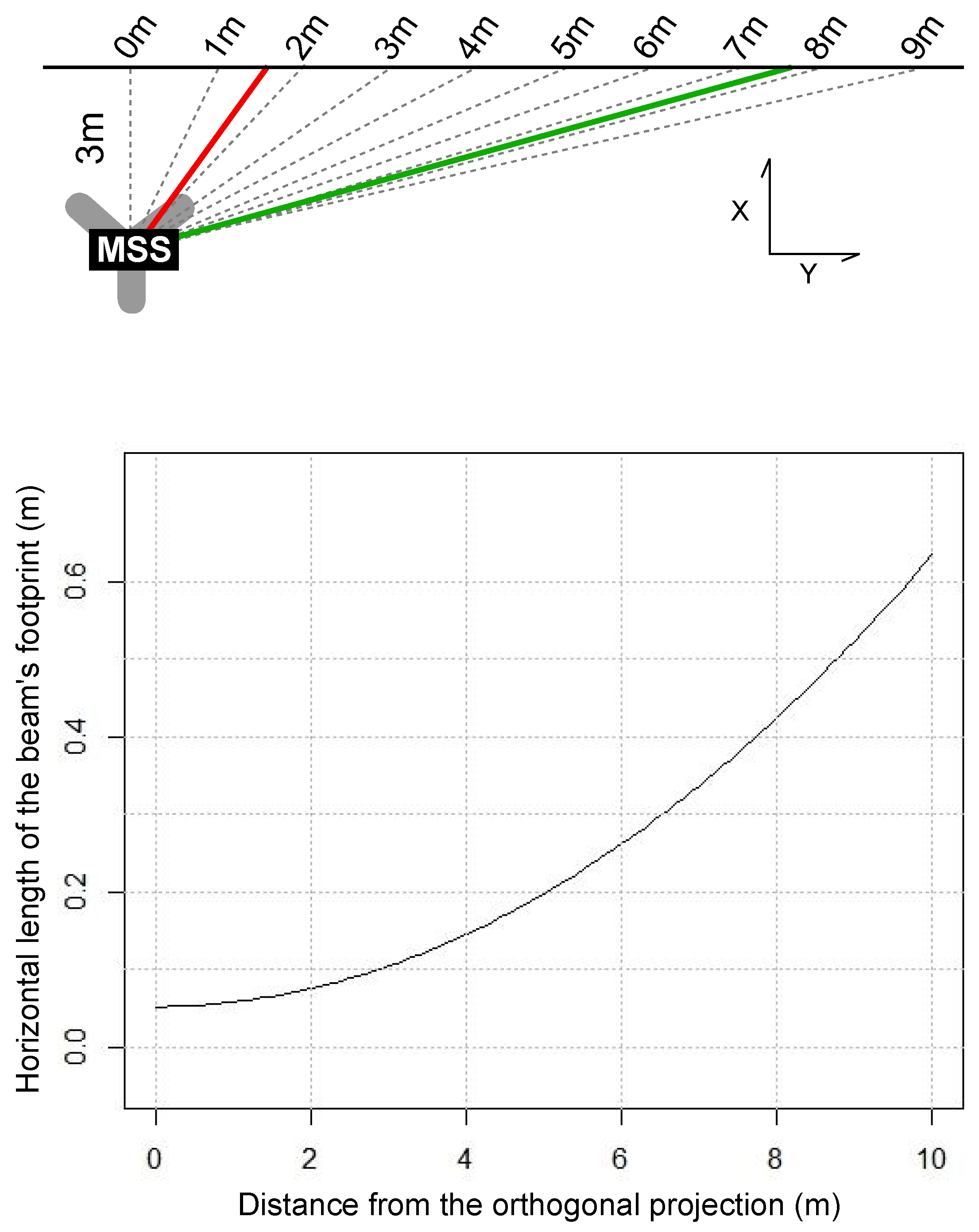

Other errors are due to the configuration in which the MSS scans are operated: shallow water and confined environment. The most visible errors are due to signal reflections on the water surface. In some cases, “phantom” objects may be observed above the water surface (see Figure 9). However, such reflections can be detected by visual inspection of the profiles, and the artifacts are then easily deleted from the point cloud. It is more difficult to detect surface reflections when the sidewalls of the canal are planar. They make the vertical position of the waterline more difficult to estimate. This is another justification for using ladders to help with geo-referencing the underwater point cloud. Once the MSS vertical position is known, surface artifacts can be deleted. Reflections may also occur on the sidewalls or on the raft. In the latter case, phantom objects are observed below the canal bottom, so they can be discarded.

Figure 9. Signal reflection on the water surface may cause artifacts.

Furthermore, some errors unavoidably arise due to the presence of objects in the canal, such as the boat hull, cables, fish or suspended particles. All of these errors are deleted manually, but most of them could be removed automatically, because the canal shape is roughly known.
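As an indication of how such an automatic removal might look, the sketch below keeps only the points that fall inside a rough envelope of the canal. It is a hypothetical illustration: the rectangular cross-section, the local frame (x along the canal axis, y across, z upwards), the parameter names and the default margin are assumptions, and the experiments reported here relied on manual deletion.

```python
import numpy as np

def keep_inside_canal(points, z_bed, z_water, half_width, margin=0.2):
    """Discard points outside a rough rectangular canal envelope.

    Assumed local frame: x along the canal axis, y across, z upwards.
    All parameters are in meters and purely illustrative.
    """
    pts = np.asarray(points, dtype=float)
    below_surface = pts[:, 2] <= z_water           # removes phantoms above water
    above_bed = pts[:, 2] >= z_bed - margin        # removes phantoms below the bottom
    between_walls = np.abs(pts[:, 1]) <= half_width + margin
    return pts[below_surface & above_bed & between_walls]
```

Such a filter only handles artifacts that lie clearly outside the structure; objects inside the water column, such as the boat hull or cables, would still require another criterion or an operator.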

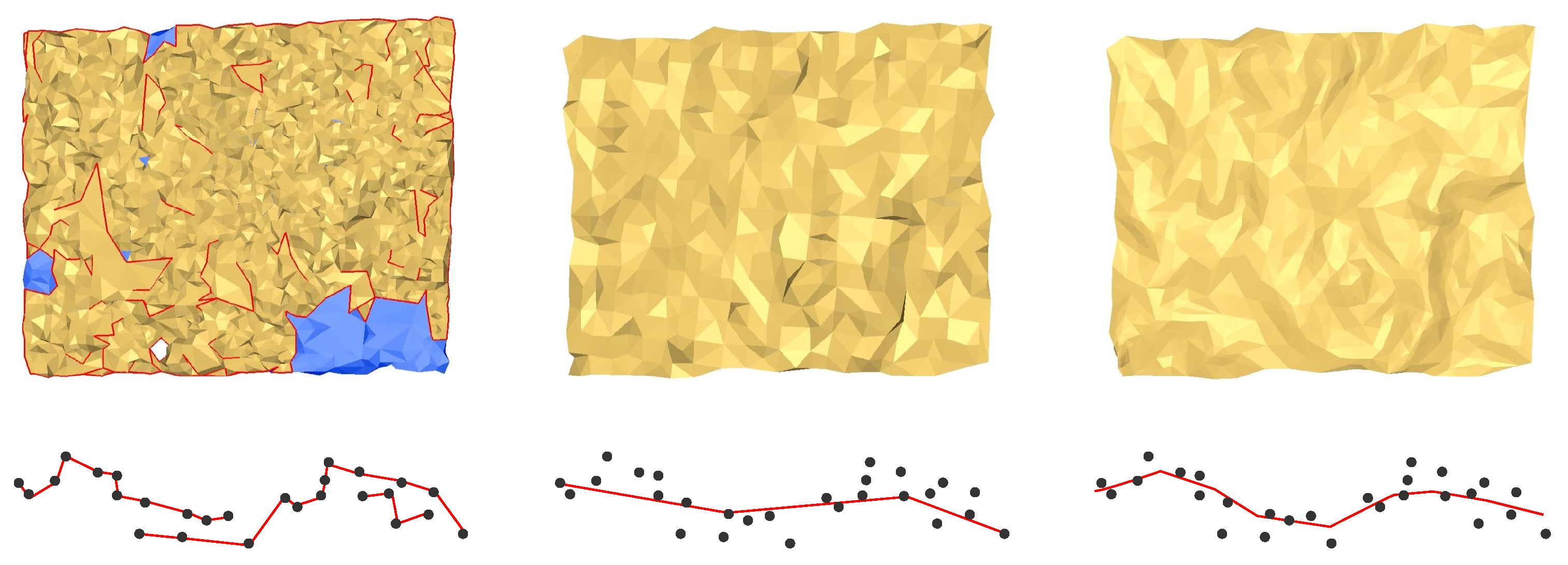

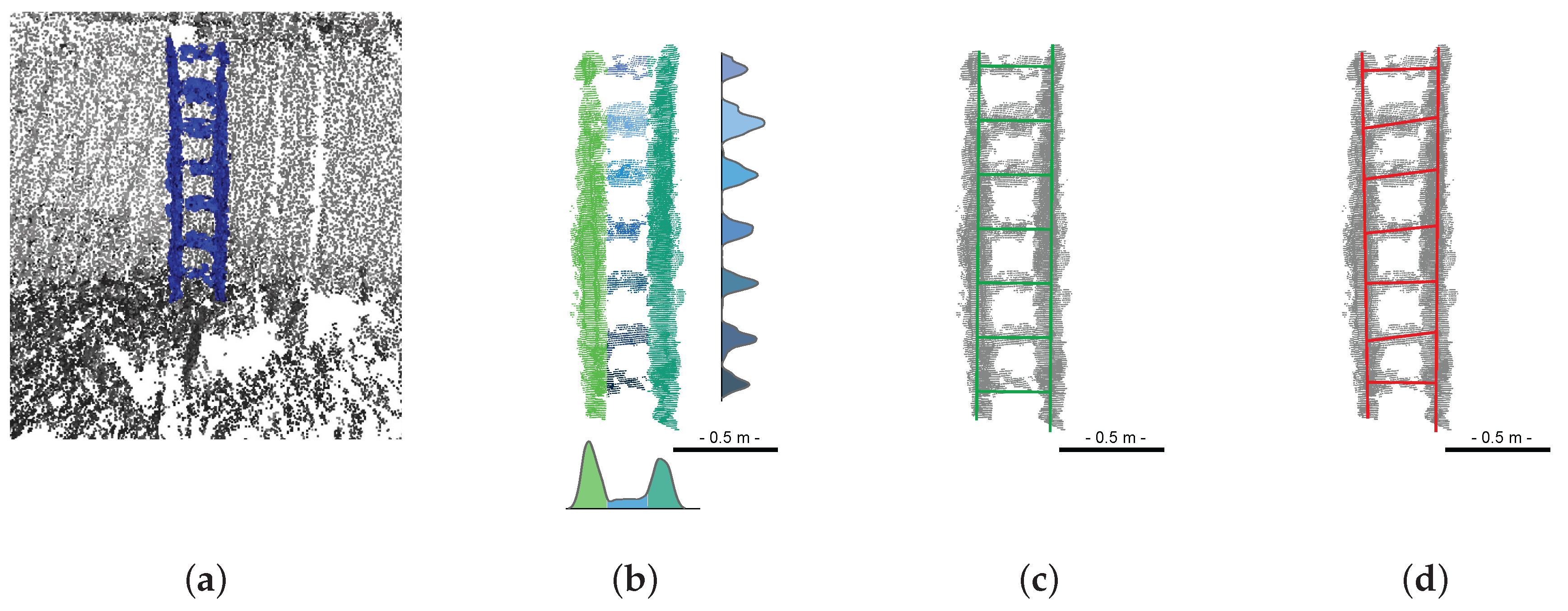

4.2. Denoising and Meshing

The first processing step consists of removing the significant noise exhibited by sonar acquisitions. The proposed solution exploits the meshing phase of the reconstruction process. Indeed, most of the time in surveying applications, surface reconstruction is performed to obtain a simplified digital model of the recorded structure, and this operation is generally the last step in the modeling chain. Here, we use it as a pre-processing step, since it provides a visual control on the result, which highlights errors and guides denoising.

We note that TLS point clouds have a negligible noise level, so their processing only involves outlier removal, and all points may be employed as triangle vertices to reconstruct the surface. This is not the case for MSS clouds, for which artifacts may occur when all points are used for meshing; see Figure 10 (left). Removing those artifacts reduces to denoising and can be done using two methods:

One is to select evenly-spaced points as mesh vertices for triangulation (Figure 10 (center)), based on a minimum distance criterion. The price to pay is that details may be lost.

Another way is to compute the nearest surface to points using robust estimators (Figure 10 (right)). For this purpose, new points are interpolated. However, the risk is to obtain an over-smoothed model.
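The first of these two options, selecting points according to a minimum distance criterion, can be illustrated with a simple greedy thinning. This is a generic sketch and not the algorithm implemented in any particular meshing software; the function name and the grid-based neighbor search are choices made for the example.

```python
import itertools
import numpy as np

def select_min_distance(points, d_min):
    """Greedily keep points that are at least d_min away from all kept points."""
    pts = np.asarray(points, dtype=float)
    kept = []    # indices of the selected points
    grid = {}    # maps a grid cell of size d_min to the kept indices it contains
    offsets = list(itertools.product((-1, 0, 1), repeat=3))
    for i, p in enumerate(pts):
        cell = tuple(int(c) for c in np.floor(p / d_min))
        # Any kept point closer than d_min must lie in one of the 27 nearby cells.
        too_close = any(
            np.linalg.norm(pts[j] - p) < d_min
            for dx, dy, dz in offsets
            for j in grid.get((cell[0] + dx, cell[1] + dy, cell[2] + dz), [])
        )
        if not too_close:
            kept.append(i)
            grid.setdefault(cell, []).append(i)
    return pts[kept]
```

A larger `d_min` yields a coarser and smoother set of vertices, which reflects the loss of detail mentioned above; the result also depends on the order in which the points are visited.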

Figure 10. Methods for meshing point clouds: Using all points (Left); Using selected points (Center); Meshing using interpolated points (Right).

Meshing of point clouds may be carried out using specialized software; see, e.g., [18] for a review. We use 3DReshaper (Genay, France: www.3dreshaper.com), which has the ability to mesh with both previously-mentioned techniques and also to combine them to perform successive refinements of the model using the point cloud. Thus, the underwater model reconstruction is performed in a coarse-to-fine manner. The process starts with a large-scale mesh made by selecting points according to a minimum distance criterion. Then, points are picked again in the cloud or computed by interpolation to progressively increase the mesh resolution. Point selection involves either a distance-to-mesh or a maximum surface deviation criterion. The parameters are empirically tuned by an operator, and the process requires a trade-off between details and noise.

We note that this step of the process requires many manual operations, like outlier removal or correction of mesh reconstruction mistakes. While such interventions may be supported by photographs or other physical measurements for TLS data, this is not the case for underwater data, except in particular situations. Hence, the construction of the underwater model from MSS data involves a significant amount of interpretation.

However, the example in Figure 11 shows that visually-correct results may be achieved using this method. We note that the filtered MSS surface shown on the right part of the figure was obtained without any knowledge of the underwater structure: the photograph was found in VNF archives after the experiment.

Figure 11. (Left) Photograph of the northern tunnel entrance, during its emptying for maintenance in 2009 (source: Voies Navigables de France (VNF) archives); (Right) Visualization of the MSS denoised model (in grey) superimposed on the image. The orientation is done manually, thanks to the masonry block and the cofferdam grooves, which are visible in the model.

4.3. Underwater Registration

The co-registration of MSS data aims at gathering all records in a single point cloud corresponding to the underwater part of the tunnel. For this purpose, the relative position between scans must be estimated. Since, in our setup, the position and orientation of the underwater scanner cannot be directly measured, we have to resort to the indirect method. Cloud-to-cloud registration seems to be the easiest technique to implement, but it also raises several issues. Some are due to the nature of the technology itself: MSS data are very noisy, and the resolution is rather coarse, so finding correspondences is difficult. Other difficulties are related to the elongated shape of the canal and to our experimental setup: the farther the recorded point, the smaller the grazing angle. Therefore, points in overlapping zones have poor accuracy, which also influences the quality of registration. In these conditions, it is very difficult to solve the longitudinal ambiguity, i.e., to accurately estimate the translation along the tunnel axis. Immersing geometric reference objects (e.g., ladders) or decreasing the distance between scanning positions to increase the overlap quality are possible solutions to this problem.
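The effect of the grazing angle on the size of the insonified area can be illustrated with elementary geometry. The sketch below assumes a flat canal bottom, a small beam width and a sensor at a known height above the bottom; the function name and all numerical values are hypothetical and do not describe the actual MSS device.

```python
import math

def beam_footprint(sensor_height, horizontal_distance, beam_width_deg):
    """Return (grazing angle in degrees, footprint length on a flat bottom in m).

    Small-angle approximation: footprint = slant range * beam width / sin(grazing).
    sensor_height must be strictly positive.
    """
    slant_range = math.hypot(sensor_height, horizontal_distance)
    grazing = math.atan2(sensor_height, horizontal_distance)
    beam_width = math.radians(beam_width_deg)
    footprint = slant_range * beam_width / math.sin(grazing)
    return math.degrees(grazing), footprint

# Hypothetical values: sensor 1 m above the bottom, 1 degree beam width.
for distance in (0.0, 2.0, 5.0, 10.0):
    angle, length = beam_footprint(1.0, distance, 1.0)
    print(f"{distance:5.1f} m: grazing {angle:5.1f} deg, footprint {length:.3f} m")
```

Under these assumptions, the footprint grows as (h² + d²)/h for a sensor height h and a horizontal distance d, so at 10 m it is about 101 times longer than directly below a sensor placed 1 m above the bottom. This is consistent with the poor accuracy observed in the distant, overlapping zones.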

6. Experimental Results

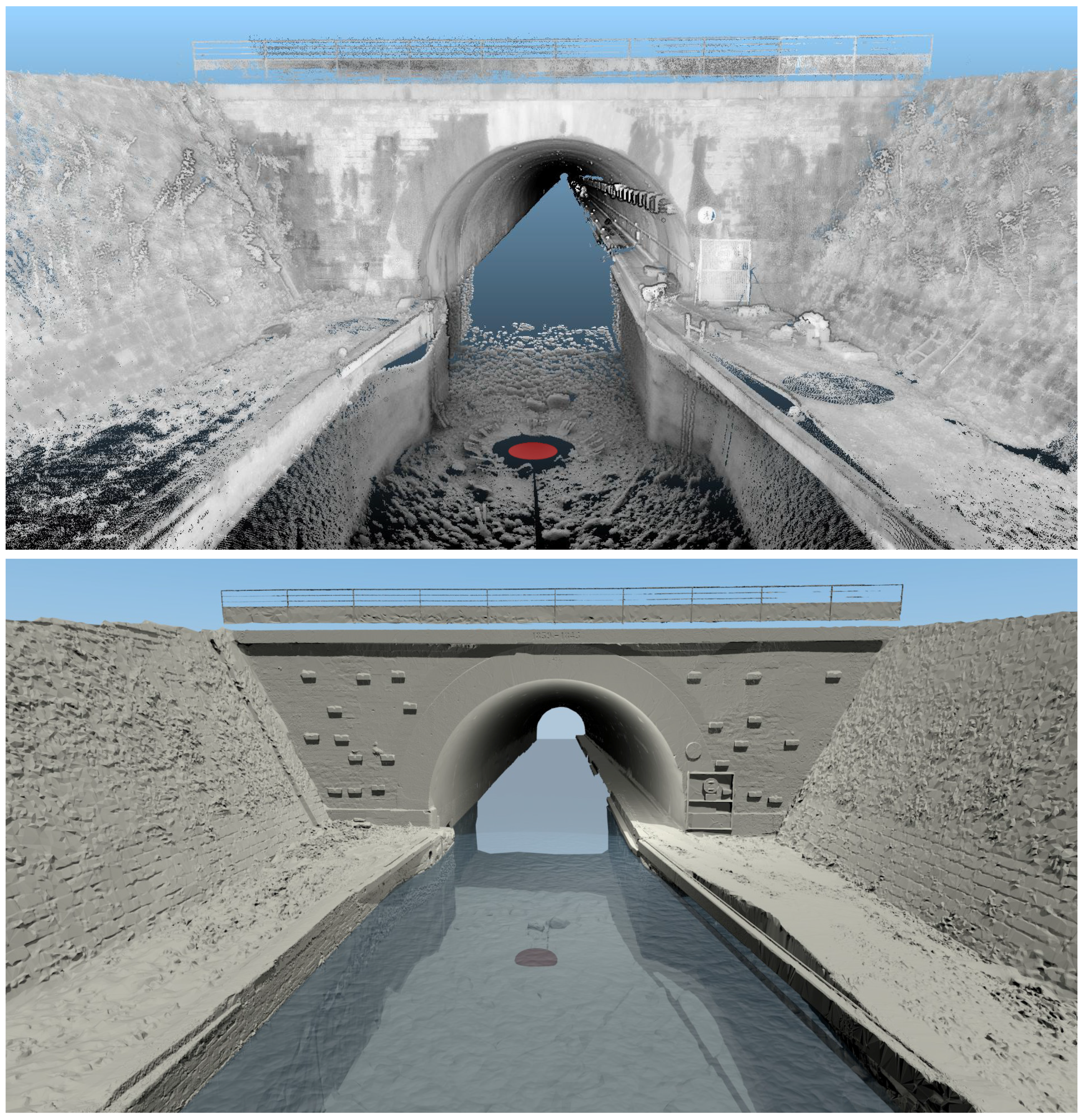

The final, full 3D reference model we obtain with the proposed methodology is shown in Figure 20. We propose two complementary renderings: mesh and point cloud visualization. The latter provides visual information (such as measurement shadows) that may disappear in the mesh visualization, which is smoother. Moreover, some elements (ladders, equipment, packaging) were discarded from the mesh. In the point cloud visualization (Figure 20 (top)), it may be noticed that the above-water and underwater models are visually very satisfying. However, the TLS point cloud seems more homogeneous and denser than the underwater one, which has a lower resolution. Moreover, the MSS model appears more and more grainy as the points get farther from the scanning position. For example, many imperfections, both within the MSS model and at the intersection of the models, are visible on canal banks at the bottom of the image. The defects are probably due to the wide footprint of the beam at such a distance from the source at a grazing incidence angle, as already seen in Figure 8. In future experiments, the distances between the MSS positions should be reduced to alleviate these issues.

Figure 20. Resulting full 3D geo-referenced model of Niderviller’s canal tunnel entrance. (Top) Point cloud visualization; (Bottom) Mesh visualization. The red disk indicates the location of the MSS position.

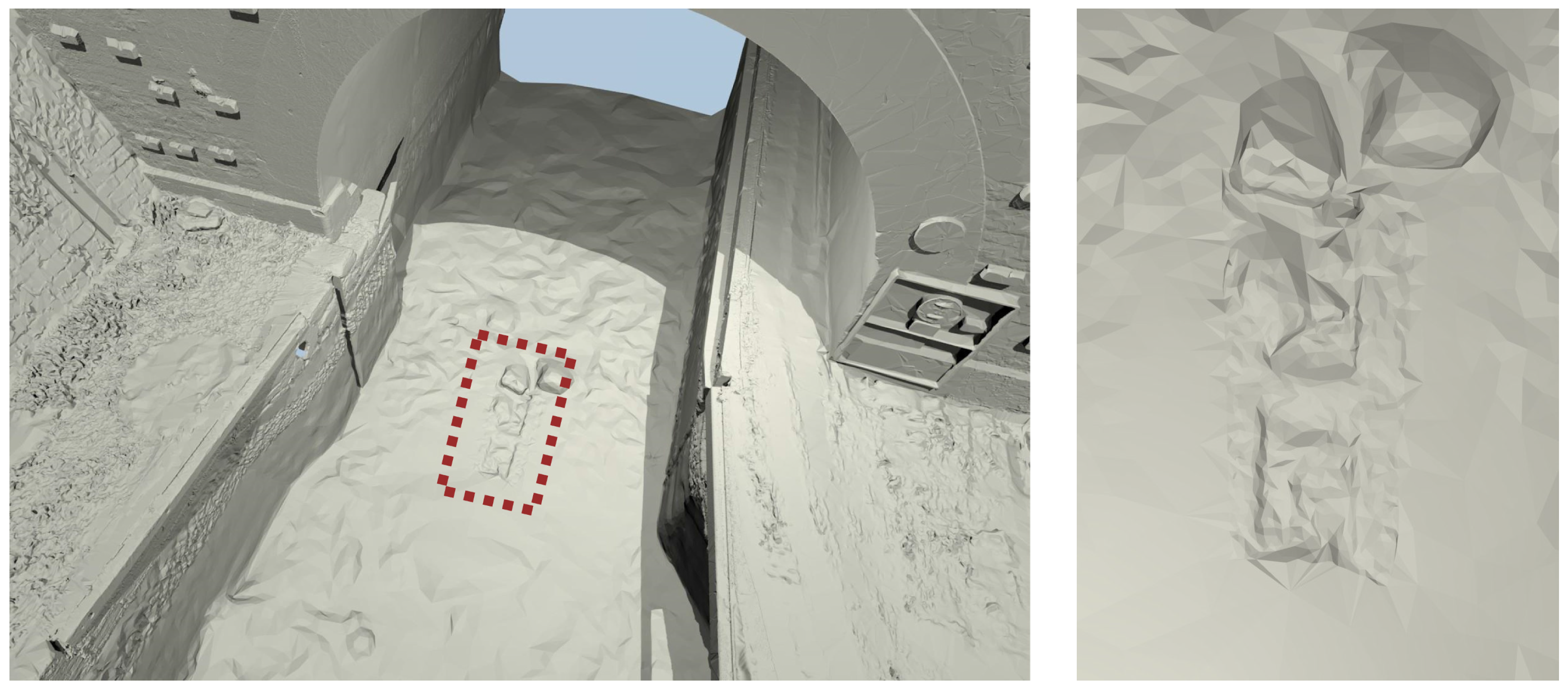

The construction of the MSS model required many operator interventions for interpreting the elements either as noise or as detail. This task is very difficult without any visual references about the underwater part of the canal. However, just at the entrance of Niderviller’s tunnel, in the alignment of the cofferdam grooves, a detail that suggests a kind of step may be seen (Figure 21) and has been kept as an element of interest. This detail reminds us of the image of Arzviller’s tunnel (see Figure 3), where a step is clearly visible between the cofferdam grooves. Unfortunately, we did not find a similar picture of the entrance of Niderviller’s tunnel, which could confirm the relevance of this detail in the MSS model.

Figure 21. Close-up of the reference model showing two blocks and some structure in the alignment of the cofferdam grooves, which seems similar to the one observed in 2009 at Arzviller (Figure 3).

7. Conclusions and Future Work

In this paper, we have introduced a robust method to build a full 3D model of a canal tunnel. Data have been collected by TLS and MSS devices. The first issue we identified is due to the differences in spatial resolution and beam width between both devices. MSS data are intrinsically noisy and have a much lower resolution than TLS data. In addition, the angular loss of resolution can be rather strong: the oblong shape of the tunnel induces many grazing incidence angles, and the sonar data are rather coarse at large distances from the scanner device.

The first processing step consists of denoising the MSS data by meshing. More specifically, a coarse-to-fine method, which gradually increases the resolution of the mesh, is applied. Of course, differentiating noise from details is a difficult task in the absence of visual or physical reference, and confusion is unavoidable. The interpretation by an operator is required to determine the appropriate trade-off between noise and details. We believe that acoustic and image processing techniques should be explored to devise more automatic and data-driven denoising methods. In particular, moving least squares, bilateral filtering [28], non-local means filtering [29,30,31] or structure+texture decompositions [32] seem appealing for this task.

A second challenge concerns the co-registration of the point clouds provided by both scanners. The methods for processing TLS data to generate the geo-referenced above-water 3D model are well-known and can be used without any difficulties in our context. On the other hand, handling the MSS point clouds to build the underwater model is much more complicated. In particular, the low resolution and noisy aspect of MSS data, along with the lack of salient elements along the canal, make classical registration methods, such as ICP, less efficient. To alleviate this difficulty, the experimental setup must be improved by reducing the distance between MSS positions. An interval of about 5 m would be recommended, instead of 10 m, as in the current experiment. Moreover, additional targets, such as the ladders that are used in our experiment, could be immersed in the canal. They could make the registration easier, by adding references to both point clouds. Our experiments show that the targets must be carefully chosen and placed on-site: for example, the ladders should be wooden and separated from the canal walls; otherwise, their automatic segmentation becomes problematic.

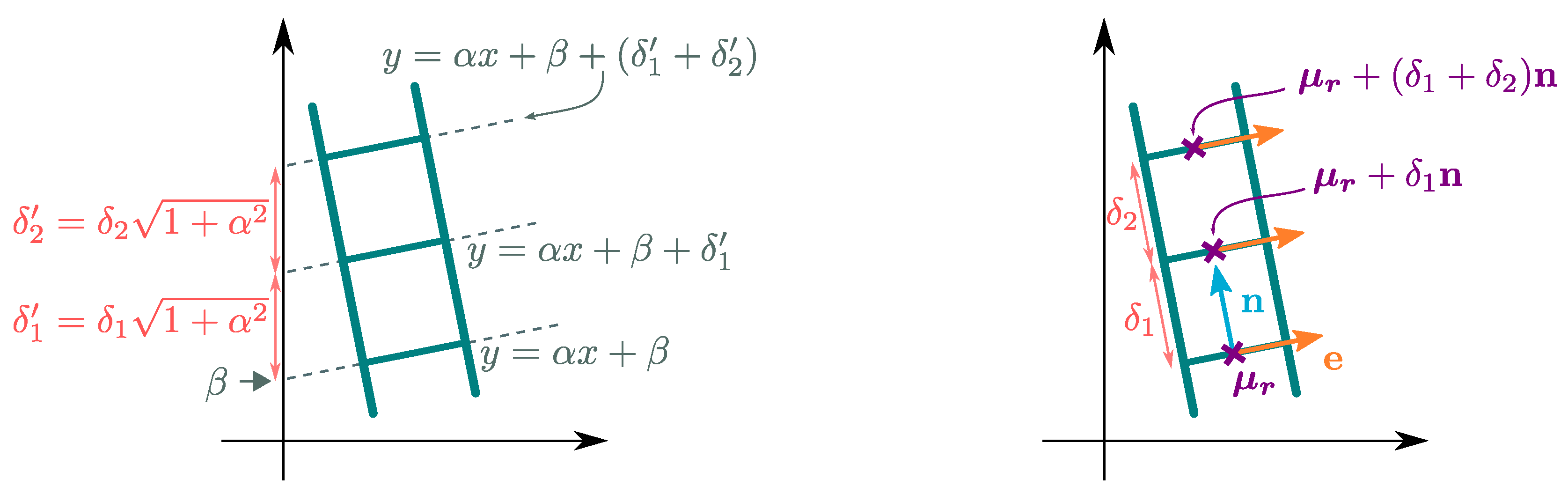

To obtain a full 3D model of the canal tunnel, registering MSS and TLS data is a crucial point. The lack of overlap between point clouds raises difficulties in our application. To solve this challenge, we proposed a three-step procedure in which, first, geometrical entities are exploited to determine the orientation parameters. The second step uses the ladders to estimate the vertical correction: we introduced a robust method based on M-estimation to simultaneously fit lines on the stiles and rungs of the ladder. We assessed the method by comparing the results of the robust fit with real distance measurements. Distance priors are used to increase the precision of the fit thanks to a reference scan of the ladders. The proposed methodology could be adapted to other manufactured targets. Finally, the silhouette of the waterline is extracted in both models, and a 2D ICP algorithm is applied to estimate the remaining horizontal translation vector. Since the TLS point cloud is bound to a geodetic system, the geo-referencing of the model comes as a by-product of our method, without additional complexity.
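The last step, a 2D ICP restricted to a translation, can be sketched as follows. This is a minimal point-to-point variant written for illustration, assuming that the orientation has already been fixed and that both waterline silhouettes are given as arrays of horizontal coordinates; the function name, the brute-force nearest-neighbor search and the stopping rule are assumptions, not the exact implementation used in our experiments.

```python
import numpy as np

def icp_translation_2d(source, target, n_iter=50, tol=1e-9):
    """Estimate the 2D translation t such that source + t best matches target."""
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    t = np.zeros(2)
    for _ in range(n_iter):
        moved = src + t
        # Brute-force nearest neighbor of every moved source point in the target.
        d2 = ((moved[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=2)
        nearest = tgt[np.argmin(d2, axis=1)]
        # For a pure translation, the least-squares update is the mean offset.
        delta = (nearest - moved).mean(axis=0)
        t += delta
        if np.linalg.norm(delta) < tol:
            break
    return t
```

Like any ICP variant, this iteration only converges locally: the initial misalignment should be small compared with the size of the salient features of the silhouette, and a nearly straight waterline leaves the translation along its direction poorly constrained.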

This experiment provides an initial overview of underwater acquisition in canal tunnels and yields promising results. Improvements of the model quality may be expected from a better experimental setup (closer scanning positions, more numerous targets). More automatic and data-driven filtering techniques should help with enhancing the data quality and reducing manual interventions. Furthermore, an experiment in a controlled environment, like a dry dock, as proposed in [5], would allow a fine assessment of the model accuracy. One may foresee that the progress of technology will improve the performance of the acquisition devices. Subsequently, we may expect more accurate models using the proposed methodology. The obtained models should be used as a reference for future acquisitions, either with dynamic underwater acquisition systems (for assessing mobile mapping solutions) or with static ones (to evaluate the tunnel deformation).