Development of a High Irradiance LED Configuration for Small Field of View Motion Estimation of Fertilizer Particles

Abstract

1. Introduction

2. Theoretical Background

3. Material and Methods

3.1. General Requirements

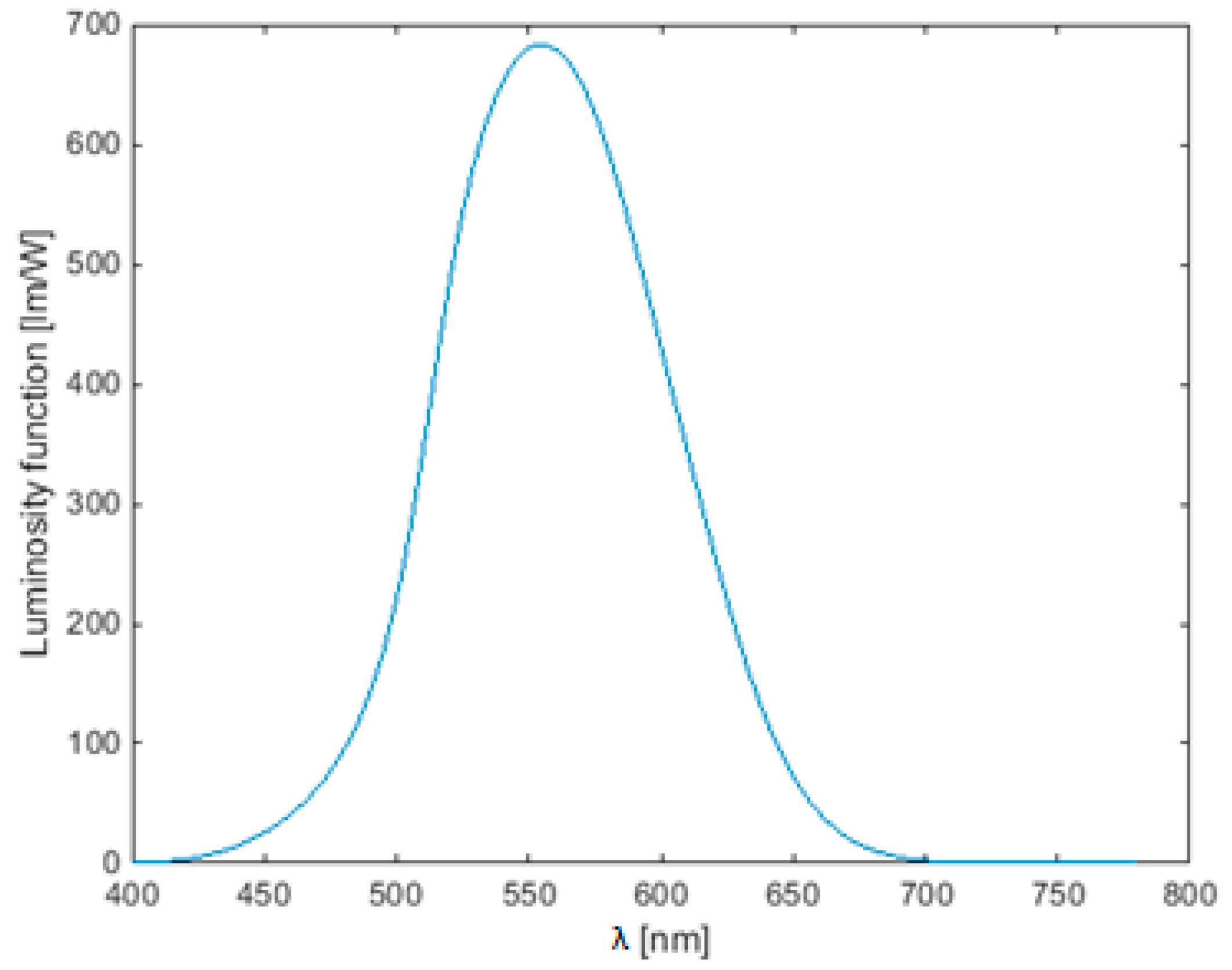

3.2. Lighting Calculations

| Quantity | Photometric | Radiometric |
|---|---|---|
| Energy per unit time | Luminous flux (lm) | Radiant flux (W) |
| Power per unit area | Illuminance (lx) | Irradiance (W/m²) |
| Power per unit solid angle | Luminous intensity (cd) | Radiant intensity (W/sr) |
| Power per unit area per unit solid angle | Luminance (cd/m²) | Radiance (W/m²/sr) |

3.3. Multiple Objective Genetic Algorithm

3.4. Simulations

3.4.1. LED Selection

| LED Number | Luminous flux (lm) | Wavelength (nm) | Name | Part Number |
|---|---|---|---|---|
| 1 | 313 | 567.5 | Lime | LXML-PX02-0000 |
| 2 | 122 | 505.0 | Cyan | LXML-PE01-0070 |
| 3 | 161 | 530.0 | Green | LXML-PM01-0100 |
| 4 | 320 | - | Cool white | LXML-PWC2 |
| 5 | 310 | - | Neutral white | LXML-PWN2 |
| 6 | 21 | 447.5 | Royal blue | LXML-PR01 |
| 7 | 106 | 627.0 | Red | LXM2-PD01-0050 |
| 8 | 140 | 590.0 | Amber | LXML-PL01 |

3.4.2. LED Configuration

| Lens | cd/lm Value (cd/lm) | FWHM (°) | Diameter (mm) | Part Number |
|---|---|---|---|---|
| Narrow | 4.60 | 23.0 | 23 | 10611 |
| Medium | 2.59 | 28.64 | 23 | 10612 |
| Wide | 1.29 | 44.4 | 23 | 10613 |
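
As a rough illustration of how the cd/lm value of a lens can be used, the sketch below multiplies it by the luminous flux of an LED to estimate the on-axis luminous intensity. This assumes the usual datasheet meaning of the cd/lm rating (peak candela per lumen emitted by the LED); the function name `on_axis_intensity` and the choice of the 313 lm lime LED as input are illustrative only and are not taken from the paper's method.

```python
# cd/lm ratings of the three lenses, copied from the lens table above.
LENS_CD_PER_LM = {"Narrow": 4.60, "Medium": 2.59, "Wide": 1.29}


def on_axis_intensity(luminous_flux_lm: float, lens: str) -> float:
    """Estimate peak luminous intensity (cd) as cd/lm rating times LED flux (lm).

    Assumption: the cd/lm value is the on-axis intensity per lumen of LED output.
    """
    return LENS_CD_PER_LM[lens] * luminous_flux_lm


if __name__ == "__main__":
    # Example input: 313 lm, the luminous flux listed for LED 1 (lime).
    for lens in LENS_CD_PER_LM:
        print(f"{lens}: {on_axis_intensity(313.0, lens):.1f} cd")
```

A narrower lens concentrates the same flux into a smaller solid angle, so the estimated on-axis intensity scales directly with the cd/lm rating.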

4. Results and Discussion

4.1. LED Selection

| LED Number | Current (mA) | Luminous efficacy (lm/W) | Radiant flux φ (W) | (%) | (W) |
|---|---|---|---|---|---|
| 1 | 700 | 461.4 | 0.678 | 80.5 | 54.6 |
| 2 | 700 | 213.4 | 0.572 | 92.0 | 52.6 |
| 3 | 700 | 551.9 | 0.292 | 95.6 | 27.9 |
| 4 | 1000 | 329.6 | 0.971 | 78.1 | 75.8 |
| 5 | 1000 | 348.4 | 0.890 | 74.6 | 68.5 |
| 6 | 700 | 20.0 | 0.910 | 76.5 | 69.6 |
| 7 | 700 | 97.0 | 1.093 | 56.1 | 61.3 |
| 8 | 700 | 472.3 | 0.296 | 70.2 | 20.8 |
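
The link between the photometric and radiometric values can be checked with a short calculation. The sketch below assumes that the (lm/W) column is the luminous efficacy of radiation and that φ (W) is the radiant flux, so that φ equals luminous flux divided by efficacy; the luminous flux values are those listed per LED in Section 3.4.1. The function name `radiant_flux_w` is illustrative. LED 6 (royal blue) is left out because its tabulated values do not follow this ratio (21 lm / 20.0 lm/W ≈ 1.05 W versus the tabulated 0.910 W).

```python
# Luminous flux (lm) per LED number, from the LED table in Section 3.4.1.
LUMINOUS_FLUX_LM = {1: 313, 2: 122, 3: 161, 4: 320, 5: 310, 7: 106, 8: 140}

# (lm/W) column of the table above, read here as luminous efficacy (assumption).
EFFICACY_LM_PER_W = {1: 461.4, 2: 213.4, 3: 551.9, 4: 329.6,
                     5: 348.4, 7: 97.0, 8: 472.3}

# Radiant flux (W) as tabulated above, used only for comparison.
TABULATED_FLUX_W = {1: 0.678, 2: 0.572, 3: 0.292, 4: 0.971,
                    5: 0.890, 7: 1.093, 8: 0.296}


def radiant_flux_w(luminous_flux_lm: float, efficacy_lm_per_w: float) -> float:
    """Radiant flux (W) = luminous flux (lm) / luminous efficacy (lm/W)."""
    return luminous_flux_lm / efficacy_lm_per_w


if __name__ == "__main__":
    for led, flux_lm in LUMINOUS_FLUX_LM.items():
        computed = radiant_flux_w(flux_lm, EFFICACY_LM_PER_W[led])
        print(f"LED {led}: computed {computed:.3f} W, "
              f"tabulated {TABULATED_FLUX_W[led]:.3f} W")
```

For the seven LEDs included, the computed values agree with the tabulated radiant flux to three decimals.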

4.2. LED Configuration

4.2.1. Square and Circular Array

4.2.2. Multiple Objective Genetic Algorithm

| Number of LEDs | Average Irradiance (W/m²) | CV (%) |
|---|---|---|
| 36 | 452 | 1.98 |
| 40 | 504 | 1.97 |
| 44 | 558 | 1.99 |
| 48 | 616 | 2.05 |
| 52 | 648 | 1.95 |
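
The two figures of merit in this table can be illustrated with a minimal sketch. It assumes that CV denotes the coefficient of variation, that is, the standard deviation of the irradiance over the field of view divided by its mean, expressed in percent. Whether the population or the sample standard deviation was used is not stated here, so the population form is an assumption. The sample values in `samples` are hypothetical and serve only to show the call; the per-LED contribution is computed directly from the table.

```python
from statistics import mean, pstdev


def cv_percent(irradiance_samples):
    """Coefficient of variation in percent: 100 * population std / mean."""
    avg = mean(irradiance_samples)
    return 100.0 * pstdev(irradiance_samples) / avg


# Average irradiance (W/m^2) per number of LEDs, copied from the table above.
AVERAGE_IRRADIANCE = {36: 452, 40: 504, 44: 558, 48: 616, 52: 648}

# Average irradiance contributed per LED for each configuration.
PER_LED = {n: e / n for n, e in AVERAGE_IRRADIANCE.items()}

if __name__ == "__main__":
    # Hypothetical irradiance samples (W/m^2) on a small grid; not measured data.
    samples = [640.0, 655.0, 648.0, 652.0, 645.0]
    print(f"mean = {mean(samples):.1f} W/m^2, CV = {cv_percent(samples):.2f} %")
    for n, value in PER_LED.items():
        print(f"{n} LEDs: {value:.2f} W/m^2 per LED")
```

A lower CV indicates a more uniform irradiance distribution, while the per-LED value shows how much each added LED contributes to the average irradiance.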

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Cool, S.; Pieters, J.G.; Mertens, K.C.; Mora, S.; Cointault, F.; Dubois, J.; Van de Gucht, T.; Vangeyte, J. Development of a High Irradiance LED Configuration for Small Field of View Motion Estimation of Fertilizer Particles. Sensors 2015, 15, 28627-28645. https://doi.org/10.3390/s151128627
