Hand-Writing Motion Tracking with Vision-Inertial Sensor Fusion: Calibration and Error Correction

Abstract

: The purpose of this study was to improve the accuracy of real-time ego-motion tracking through inertial sensor and vision sensor fusion. Due to low sampling rates supported by web-based vision sensor and accumulation of errors in inertial sensors, ego-motion tracking with vision sensors is commonly afflicted by slow updating rates, while motion tracking with inertial sensor suffers from rapid deterioration in accuracy with time. This paper starts with a discussion of developed algorithms for calibrating two relative rotations of the system using only one reference image. Next, stochastic noises associated with the inertial sensor are identified using Allan Variance analysis, and modeled according to their characteristics. Finally, the proposed models are incorporated into an extended Kalman filter for inertial sensor and vision sensor fusion. Compared with results from conventional sensor fusion models, we have shown that ego-motion tracking can be greatly enhanced using the proposed error correction model.1. Introduction

Motion tracking technologies which aim at translating human motion into computer-understandable instructions have drawn much attention in the past decade. Potential applications in human-to-machine interface technologies include controlling mobile and flying robots, and navigation and control in augmented reality environments. In general, current motion tracking technologies derive pose estimates from electrical signals received from mechanical, magnetic, acoustic, inertial, optical, radio or microwave sensors [1–3]. When applied to gesture recognition, most of these technologies can be used alone with good results, but when it comes to ego-motion tracking (3D motion tracking within an environment using a camera), none of these technologies is good enough on its own owing to limitations in volume, mobility, accuracy, latency, or tracking range.

For example, microelectromechanical systems (MEMS)-based inertial sensors are lightweight, and good for fast motion tracking, but they lack long term stability due to the problem of severe zero drift [4]. Vision sensors may be more accurate in tracking the motion with little accumulation of errors [5], but due to motion blur their ability to resolve fast movements is poor. They also suffer from line-of-sight limitations, and have problems in distinguishing between rotational and translational motion [6]. To overcome the inherent shortcomings of a single sensor, we present results from our custom-built system integrating a MEMS-based inertial sensor (consisting of accelerometers and gyroscopes, and is sometimes refer to as a “μIMU”) and a web-based camera (i.e., a vision sensor) for motion tracking. For convenience of reference, we will call this μIMU + Camera system the “μIC system” from here on. The MEMS inertial sensor which consists of a 3-axis accelerometer and three single axis gyroscopes is able to measure the acceleration and angular rate, while the vision sensor is able to estimate its pose (position and orientation) relative to a checkerboard (used as a visual landmark). By fusing these two sensors, the drift problem of inertial sensor can be corrected when visual measurements are available. In the interval between two visual measurements, or when the images are blurred or lost due to fast movements, the poses are estimated by inertial measurement. Based on this sensor fusion concept, we have demonstrated a more accurate and reliable motion tracking system, which is described in detail in this paper.

Although significant work has been done in the past few years on inertial sensors as well as vision sensors, the majority of the reported work has focused on one or more of the following aspects: (1) relative pose calibration between inertial sensor and vision sensor [7,8]; (2) deterministic errors calibration of inertial sensor [9,10]; (3) stochastic errors identification [11–15], and modeling of inertial sensor (the majority of these work seek to model the stochastic errors in gyroscopes [12,16]); and (4) algorithms of inertial sensor and vision sensor fusion [17–20]. There are relatively few papers addressing the systematic calibration of a μIC system, which incorporates the orientation calibration of checkerboard and gravitational force as well as the relative calibration of inertial sensor and vision sensor. There are also only a few papers discussing how to combine stochastic error identification and modeling with inertial sensor and vision sensor fusion. This paper covers the following aspects: (1) systematic calibration for a μIC system; (2) deterministic and stochastic error identification and error modeling; (3) real-time pose estimation and correction; and (4) temporal information retrieval by using dynamic time warping (DTW).

The remainder of the paper is organized as follows: Section 2 presents the system setup, sensors and system calibrations, stochastic errors analysis by using Allan variance, and error modeling of the stochastic errors. The experimental results are discussed in Section 3. Finally, the conclusions are presented in Section 4.

2. Methodologies of Motion Tracking with μIC System

2.1. System Setup

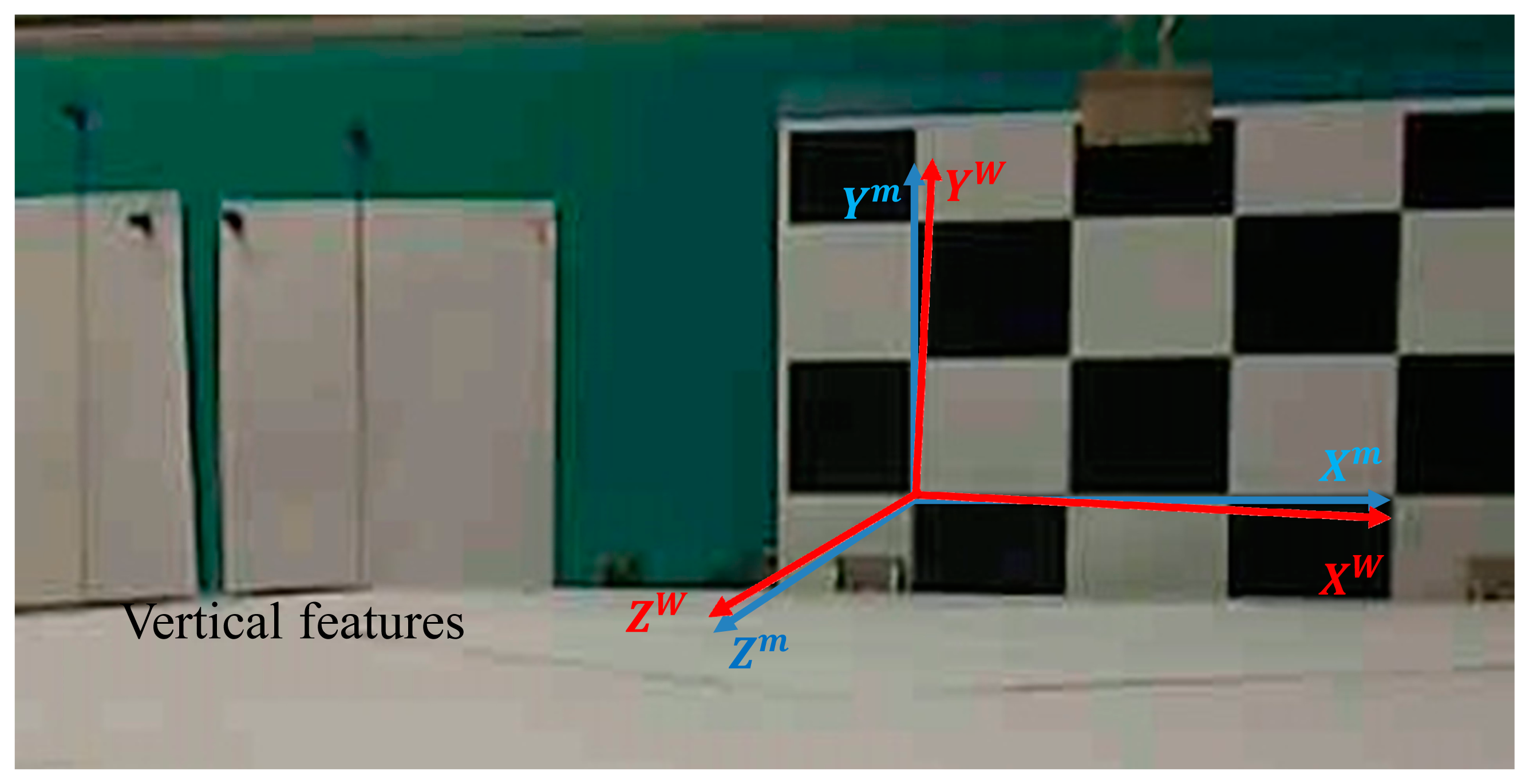

As shown in Figure 1, the experimental setup consists of a 3 × 4 checkerboard pattern, and a custom-built μIC system. The μIC system contains three single axis MEMS gyroscopes (LISY300AL gyroscope, STMicroelectronics, Geneva, Switzerland), a tri-axial MEMS accelerometer (MMA7260 accelerometer, Freescale, Austin, TX, USA), and a web-based vision sensor (Logitech QuickCam Pro 9000, Logitech, Inc., Fremont, CA, USA). With the accelerometers and gyroscope, the accelerations and angular rates of the devices can be measured, which can then be integrated into position and orientation. The checkerboard here is used by vision sensor for positioning. Knowing world coordinates of the corners on the checkerboard and their corresponding image coordinates, it is able to estimate the relative position of camera w.r.t. the world coordinate system. Consequently, the measurements from these two sensors can be fused together.

The maximum sampling rate of the vision sensor is 30 fps, but only about 5 fps has been utilized in this work. The μIMU and vision sensor are fixed in a box, so their relative position will not be changed during the experiments. A pen is attached to one side of the μIC system (Figure 1b), so the trajectory of the movement can be recorded on a normal white paper.

2.2. Inertial Sensor Calibration

Different from motion recognition, the accuracy of motion tracking is highly dependent on the accuracy of the exact angular rates and accelerations. Given the initial orientation and position of the device, the relative positions of the inertial sensor can be obtained in two steps. First, the orientation is updated using the angular rates measured by gyroscope. Second, the accelerations are rotated to the world coordinate frame by using the updated orientation. After the gravitational acceleration, g, is compensated for, the positions can be determined by double integrating the resultant accelerations. However, since the MEMS sensors inherently suffer from high random noise and time varying bias, the performance of the tracking system may degrade if the variants in accelerometer and gyroscope noises are not modeled and compensated properly [12]. This means that the inertial sensor needs to be calibrated carefully. There are two types of error sources in an inertial sensor [21]: deterministic errors, and stochastic errors. Deterministic errors include constant biases, scale factors and non-orthogonalities. These can be directly removed from measurements. Stochastic errors include angle/velocity walk, bias instability, rate random walk, rate ramp, and so on [11], which cannot be directly removed from the measurements, and should be modeled as stochastic process.

2.2.1. Deterministic Error Determination

The mathematical model describing the accelerometer measurements and calibrated accelerations can be expressed as follows [10]:

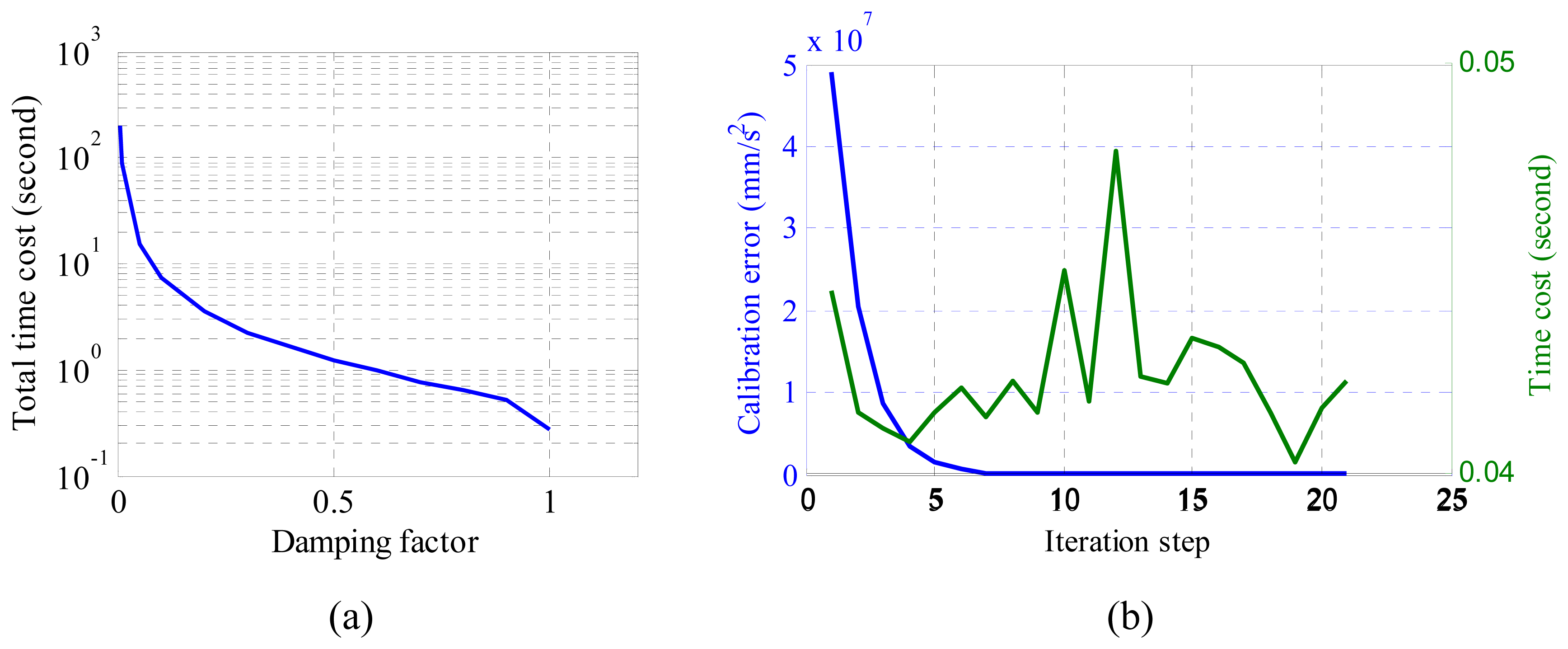

There are many algorithms that could be used to find the solution to the nonlinear least square problem. For example, Newton's method, secant method, Gauss-Newton's method and so on. Among them, Newton's method requires the calculation of second order derivatives. Considering there are 9 unknowns in r(x), 45 s order derivatives should be calculated, which is a huge task. Besides, Newton's method may converge to maximum or saddle point as well as minimum. Though secant method does not require derivative calculation, its convergence rate is slower than Newton's method. If there is a point where the first derivative equals zero on the interval between initial point and optimal point, the algorithm may not converge at all. Compared with them, Gauss-Newton's method only requires first order derivative calculation, and it is much easy to implement. So Gauss-Newton's method [10] is applied to iteratively optimize the estimation in the direction:

Next, the solution to the least square problem is updated as:

Gauss-Newton's method is also very sensitive to initial approximation. If the initial approximate solution is near to the correct one, the algorithm will converge to the correct solution very fast. However, if the current solution is far from the correct one or the linear system is ill-conditioned, the algorithm will converge very slowly or even fail to converge [22]. Hence, the initial guess needs to be set carefully. If the accelerometer had been fabricated perfectly, the scale factors should be one and the non-orthogonalities should be zero, so the initial guess of m matrix is assumed to be an identity matrix. For initial bias estimation, due to the existence of gravitational acceleration, it is not trivial to get an estimation that is close to being true from a single measurement. We adopted the six-position method [23]. The method requires the inertial sensor to be placed on a level surface with the sensitive axis pointing alternatively up and down, so the estimated bias of the sensitive axis is:

2.2.2. Stochastic Error Determination

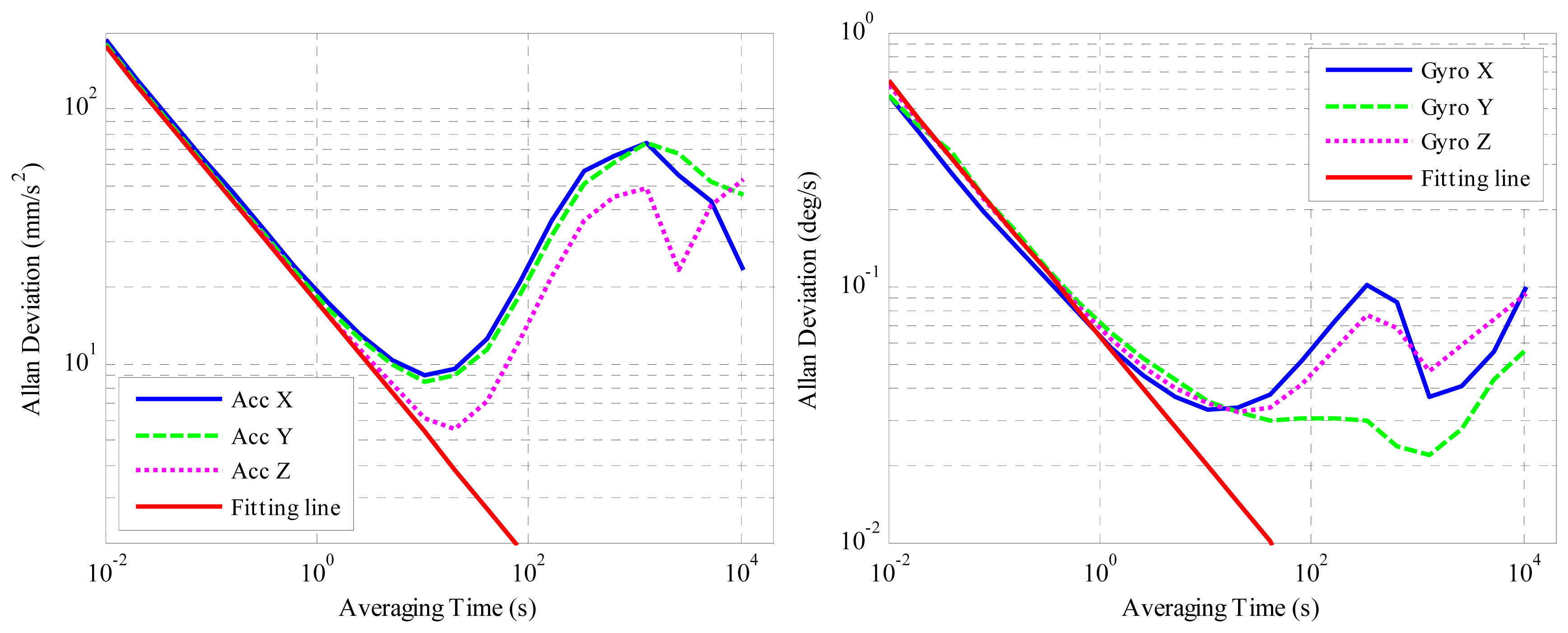

Several approaches are available for stochastic error identification of an inertial sensor, e.g.: (1) Autocorrelation function approach [13], which is not practical for real data analysis because a very long test time is required [12]; (2) ARMA model approach [24] that is model sensitive; (3) PSD approach [25], which can help to identify error parameters but the accuracy of the spectral density estimation has to be considered [26]; and (4) Allan variance approach [27]. In this paper, Allan variance (AVAR) is preferred since it is a time domain analysis technique designed for characterizing noise and stability in clock systems, which can be used to extract the intrinsic noise in the system as a function of the averaging time by analyzing a time sequence [28]. The algorithm and explanation of Allan deviation can be found in [11]. In this paper, twelve hours of static data were collected from the μIMU at room temperature with a sampling frequency of 100 Hz. The outputs were transformed to acceleration in mm/s2 and angular rates in °/s. The Allan variance plot of the accelerometer and gyroscopes are shown in Figure 3.

According to the theory of Allan variance, different error sources appear on the log-log plot of Allan deviation with different slopes [15]. As for the five stochastic errors in inertial sensor: quantization error, angle/velocity random walk (ARW/VRW), bias instability, rate random walk and rate ramp noise, they appear on the log-log plot of Allan deviation with slopes of −1, −0.5, 0, +0.5 and +1 respectively [29]. By fitting straight lines with the corresponding slopes, the noise sources can be identified, and their magnitudes determined. The red solid straight lines with slope −0.5 in Figure 3a,b are used to estimate the velocity/angle random walk. By examining these two figures it can be concluded that the velocity/angle random walk is dominant at smaller averaging times. When the averaging time increases, the bias instability becomes dominant, which is the minimum point on the curve. When the averaging time increases further, the slope of the curve is not the typical +0.5 or +1, so the rate random walk and rate ramp cannot be extracted directly from the curve. Besides, their influences on pose estimation are not as big as ARW/VRW and bias instability, so we focus on modeling the latter two in this paper. The estimated ARW/VRW walk and bias instability magnitudes are listed in Tables 1 and 2.

As for bias instability, it is modeled as a first-order Gauss-Markov process [11]. Because Gauss-Markov models fits a large number of physical processes with reasonable accuracy, and it has a relatively simple mathematical description. As for velocity/angle random walk, it can be modeled as white noise. Knowing the characteristics of these errors, we need to build a model that is able to predict how these errors vary with time, and apply the model to correct tracking accuracy. Error modeling of these errors will be introduced in Section 2.4.

2.3. Rotation Calibration between the Model Coordinate Frame and the World Coordinate Frame

The vision sensor is only capable of estimating its position and orientation (pose) relative to the model coordinate frame. If the model coordinate frame is not aligned accurately with the world coordinate frame, the accelerations could get projected onto the world axes incorrectly. This will induce two problems. Firstly is that the accelerations will be integrated in wrong directions. Secondly acceleration due to gravity cannot be removed correctly. Hence, the relative rotation between model coordinate frame and world coordinate frame should also be calibrated. There are three preconditions for this calibration: (1) the inertial sensor should have been calibrated precisely; (2) the vision sensor should have been calibrated precisely; and (3) the relative rotation ( ) between the inertial sensor and the vision sensor should also have been calibrated. The matter of inertial sensor calibration has been discussed in Section 2.2. As for camera calibration, we utilize the Camera Calibration Toolbox for Matlab [30] by taking 15 images with a 9 × 6 checkerboard. And as for the relative rotation ( ) calibration between inertial sensor frame and camera frame, the algorithm described in [7] is adopted, which seeks to solve the problem by finding the quaternion that maximizes:

The solution to the problem lies in the vector corresponding to the largest eigenvalue of N [31]. The acquisition of vertical vanishing points is illustrated in Figure 4. The two vertical lines appear parallel in the figure but, actually, they are intersecting at some point outside of the figure. This is the vanishing point we are looking for. Meanwhile, the orientation of vision sensor relative to model frame ( ) can also be obtained using the same configuration.

Suppose is the gravitational acceleration measured by the accelerometer expressed in quaternion from. Knowing the relative rotation between inertial sensor body frame and camera frame, we may derive its corresponding value in the camera coordinate frame of reference by using the following equation

The gravitational acceleration can be further rotated to model coordinate frame by using the orientation quaternion :

The true gravitational acceleration in world coordinate frame can be expressed in quaternion form as:

Let be a unit quaternion that rotates measurements from the model coordinate frame to the world coordinate frame. The gravitational acceleration can be rotated to model coordinate frame by using the following equation:

Since the accelerometer has been calibrated accurately, the measured accelerations should be equal to the real gravitational acceleration, that is, . From Equations (12) and (14), the following equation can be obtained:

Substituting the values into Equation (15), four nonlinear equations can be obtained:

Solving the nonlinear equations in Equation (16), the four elements in can be determined.

2.4. Error Modeling of a μIC System

2.4.1. Measurement Model

Following the Allan variance analysis, it was found that the inertial measurements in our experiments were corrupted with ARW/VRW and bias instability. Therefore the inertial measurements were modeled as:

2.4.2. State Space Formulation

From Equations (17) and (18), measurement errors in the angular rates and accelerations can be expressed as follows:

The error equations for velocity in world coordinate frame are obtained by perturbing the velocity equation [16]:

Next the position derivative error ar obtained from the well-known equation:

The augmented angular rate ω̅c = (0, ωx, ωy, ωz)T and the quaternion derivative q̇ is related by:

Substituting Equation (21) into Equation (26), the estimated quaternion derivative becomes

The error in the quaternion derivative turns out to be:

Therefore, these continuous equations can be expressed as follows:

Discretizing the continuous state space model, and combining the characteristics of the Gauss-Markov process, the discrete state space model can be expressed as follows

2.4.3. Measurement Update

In updating the error state with a set of measurements, it is necessary to know how the measurements vary with time. There are two measurements in our system: the pose of the system as measured by the vision sensor, and the accelerations and angular rates measured by the inertial sensor. Each measurement is defined as the measured pose p̃ minus the estimated pose p̂ where p̂ is pose vector obtained by integrating accelerations and angular rates.

Therefore, the measurement residual can be expressed as:

The state is then updated using the following equations:

3. Results and Discussion

3.1. Trajectory Reconstruction with Proposed Algorithms

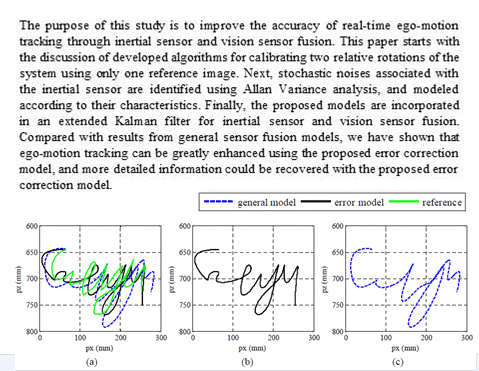

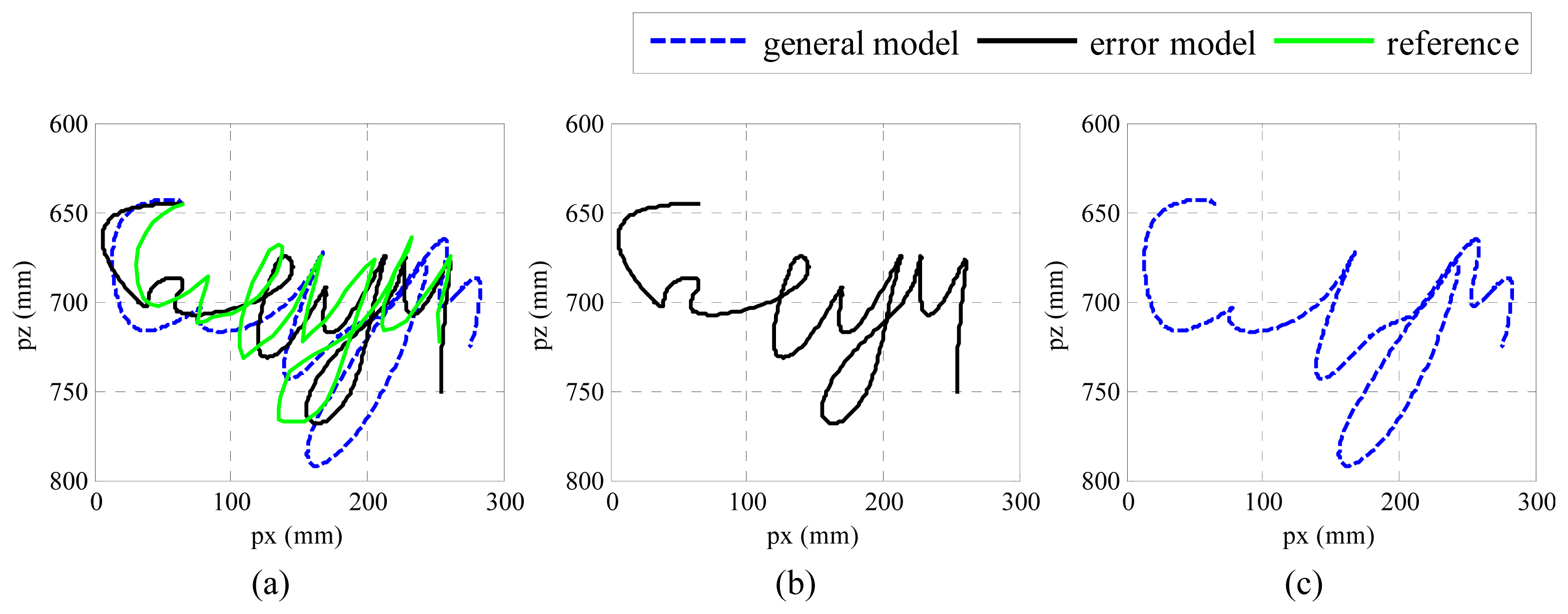

We performed the experiments by writing five English characters “cityu” in one stroke with the μIC system on the platform shown in Figure 1. A pen was attached to one side of the device, so the trajectory of the movement could be recorded on normal white paper. We analyzed the results by comparing the reconstructed trajectory with the one in [33], which we call it general model. In the general model, it assumes that the only noise in inertial sensor is white noise, so the other noises are not modeled. In addition, the deterministic errors in inertial sensor are calibrated by using six-position method, which is not as elegant as Gauss-Newton's method. The comparison is plotted in Figure 5.

From Figure 5c, it can be found the shape of the trajectories can be recovered, although some details may have been lost. Moreover, the estimation position along the x direction is stretched a little bit. In Figure 5b, more detailed information has been recovered, and the result is closer to the reference trajectory in both the x and y directions, which proves that the proposed algorithm may help to recover more detail information.

3.2. Experimental Results Evaluation

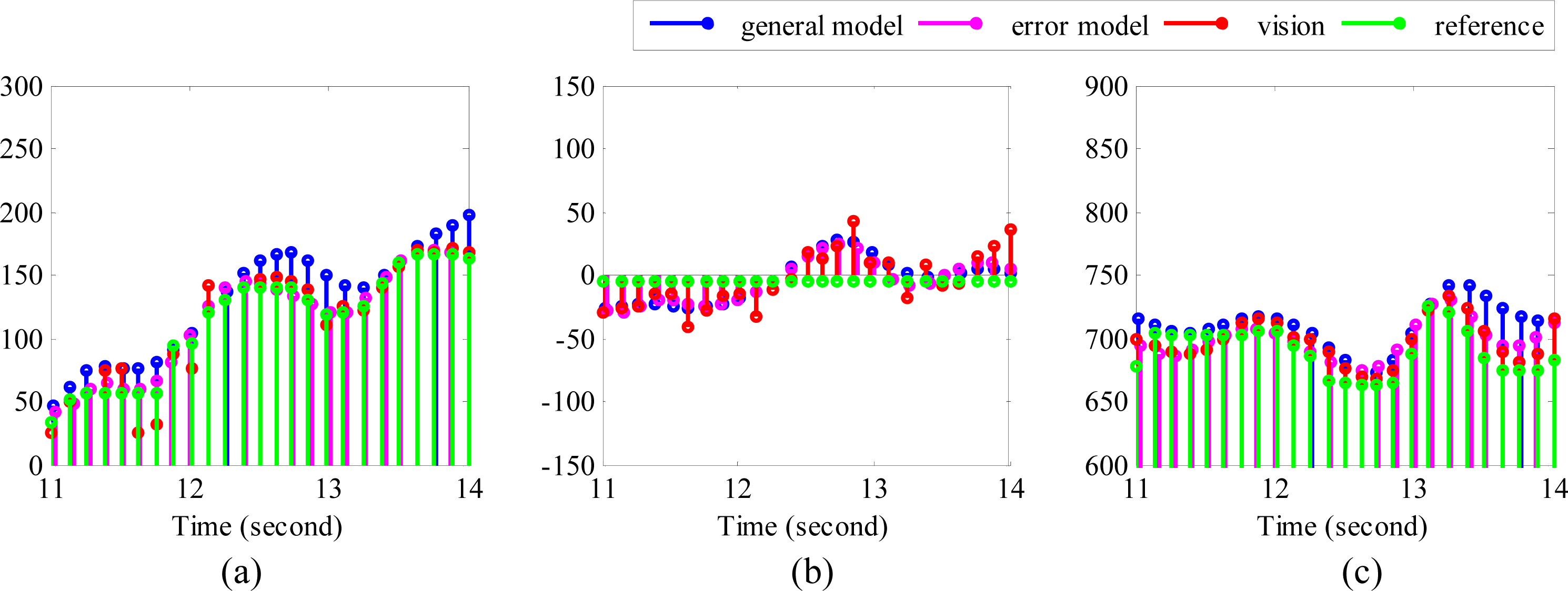

With a view to evaluating the performance of the algorithms quantitatively, the RMSE (root mean square error), and the absolute errors associated with each reconstructed trajectory were calculated. The RMSE may give us an overall error level of the reconstructed trajectory. It indicates the overall deviation of the reconstructed trajectory form the real trajectory, while the absolute error may show us the error level of each data point. The calculations however required the temporal information of the reference to be known. Since temporal information cannot be obtained directly from the recorded trajectory, DTW (dynamic time warping) was applied first to align the reference positions with the positions detected by the vision sensor. Next the corresponding temporal information of the reference was estimated. Before performing DTW, the sampling rate of vision sensor was increased from about 5 fps to about 15 fps through interpolation, so that there will be more data points included while calculating RMSE values. The resulting data points used for calculating RMSE are plotted in Figure 6.

For absolute error calculation, the following formula was applied:

The RMSE values along each axis were calculated by:

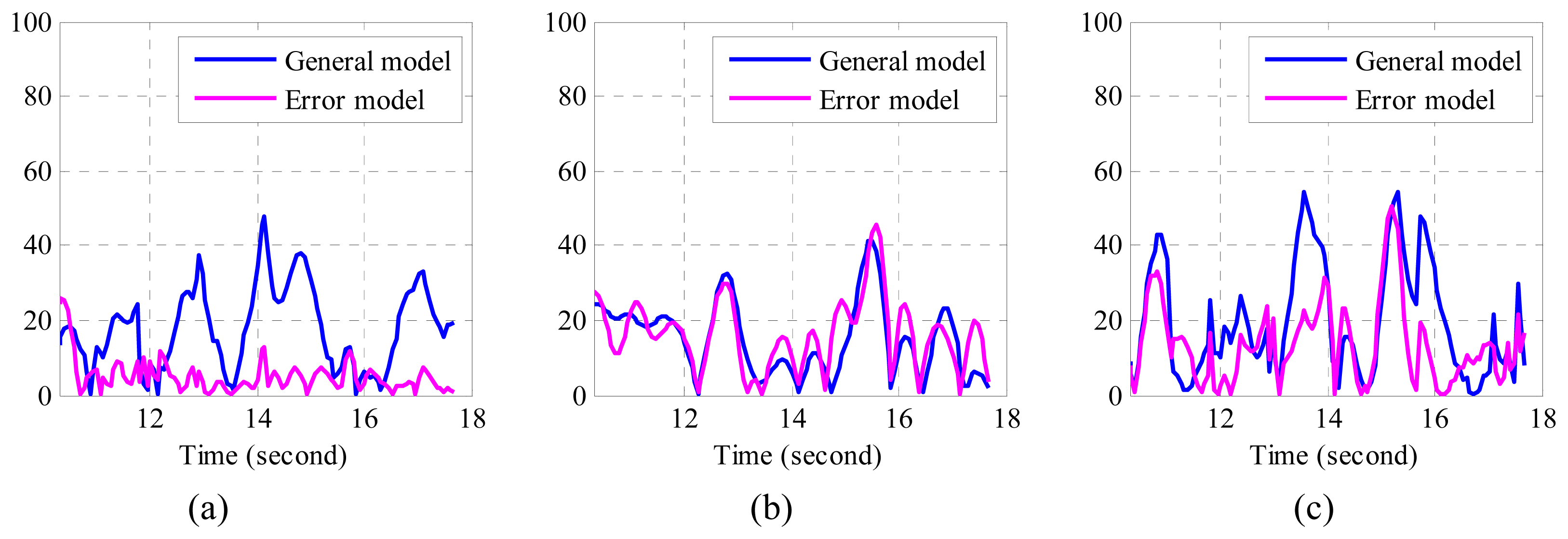

From Figure 7, it can be observed that the absolute errors determined by the error model are much smaller than those determined by the general model, especially in x direction. From Table 3, we find that the maximum RMSE by employing the error model was 18.38 mm, whereas the maximum RMSE by using the general model was 25.84 mm. That is, the mean RMSE has been reduced by 6.75 mm, while the total RMSE in three directions has been reduced by 20.17 mm when the proposed error model is applied. It is easy to understand if we combine these numbers with the trajectories in Figure 5. Smaller error means the algorithm is able to recover more detailed information of real movement, while the bigger one indicates its distance from real movement. Form both number analysis and trajectory comparison, it can be concluded that the proposed algorithms may help to recover more detailed information, which is usually very important in motion tracking. As a matter of fact, the proposed algorithm is not only confined to application regarding inertial sensor and vision sensor fusion, but also can be applied in the other applications that involve inertial sensors.

4. Conclusions

In this paper, algorithms for the μIC system calibration, noise determination and modeling have been presented. For relative rotation calibrations between the two coordinate frames, (inertial sensor body coordinate frame and camera coordinate frame, and model coordinate frame and world coordinate frame), we have shown that only one reference image is required. For deterministic error calibration of the inertial sensor, Gauss-Newton's method has been adopted to iteratively refine the calibration results. We demonstrated that as long as the parameters are chosen properly, this algorithm is able to yield results with given precision in seconds. We then modelled the stochastic errors identified in the inertial sensor, and applied the proposed noise model to the inertial sensor and vision sensor fusion. By comparing the results with and without error correction, we found that the RMSE of reconstructed hand-writing trajectories with error correction have been reduced by 6.75 mm over a 30 cm by 15 cm writing area. Moreover, we have also successfully applied DTW for temporal information retrieval.

Although good results have been achieved, there is still much work to be done in realizing a mobile motion tracking and recognition system based on the fusion of MEMS motion sensors and vision sensors. For example, the radial distortion of the web-based camera should be resolved. As can be seen from Figure 5a, the trajectory in x direction, which is the radial direction of the camera, has been “stretched”. This type d of distortion will deteriorate the fusion results, and hence, a more reliable distortion correction model should be constructed. Another example is the “image blurring” during fast movement of an input motion. Although the pose could be estimated by using inertial senor during the absence of vision sensor, the pose estimation results by using only inertial sensor will deteriorate quickly when the images are lost too often.

Acknowledgments

This work was supported in part by the City University of Hong Kong under the I2RF scheme (project No. 9666011), and in part by the Science Technology and Innovation Committee of Shenzhen Municipality under Project JCYJ20120618140504947.

Author Contributions

Shengli Zhou conducted the experiment, analyzed the data, designed the algorithm, and drafted the paper. Fei Fei and Guanglie Zhang developed the hardware. Wen J. Li conceived the idea of the experiment, pointing out the directions of the experiment, and edited the paper. Wen J. Li and Yunhui Liu gave many helpful suggestions on this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shin, D.H.; Jang, W.S. Utilization of Ubiquitous Computing for Construction AR Technology. Autom. Constr. 2009, 18, 1063–1069. [Google Scholar]

- Welch, G.; Foxlin, E. Motion Tracking: No Silver Bullet, but a Respectable Arsenal. IEEE Comput. Graph. Appl. 2002, 22, 24–38. [Google Scholar]

- Zhou, S.; Shan, Q.; Fei, F.; Li, W.J.; Kwong, C.P.; Wu, P.C.K.; Meng, B.; Chan, C.K.H.; Liou, J.Y.J. Gesture Recognition for Interactive Controllers Using MEMS Motion Sensors. Proceedings of the 4th IEEE International Conference on Nano/Micro Engineered and Molecular Systems, Shenzhen, China, 5–8 January 2009; pp. 935–940.

- Aron, M.; Simon, G.; Berger, M.O. Use of Inertial Sensors to Support Video Tracking. Comput. Animat. Virtual Worlds 2007, 18, 57–68. [Google Scholar]

- Saeedi, P.; Lawrence, P.D.; Lowe, D.G. Vision-Based 3D Trajectory Tracking for Unknown Environments. IEEE Trans. Robot. 2006, 22, 119–136. [Google Scholar]

- Dias, J.; Vinzce, M.; Corke, P.; Lobo, J. Editorial Special Issue: 2nd Workshop on Integration of Vision and Inertial Sensors. Int. J. Robot. Res. 2007, 26, 515–517. [Google Scholar]

- Lobo, J.; Dias, J. Relative Pose Calibration between Visual and Inertial Sensors. Int. J. Robot. Res. 2007, 26, 561–575. [Google Scholar]

- Bishop, G.; Welch, G. An Introduction to the Kalman Filter. Proceedings of SIGGRAPH, Course 8, Los Angeles, CA, USA, 12–17 August 2001.

- Syed, Z.; Aggarwal, P.; Goodall, C.; Niu, X.; El-Sheimy, N. A New Multi-Position Calibration Method for MEMS Inertial Navigation Systems. Meas. Sci. Technol. 2007, 18, 1897–1907. [Google Scholar]

- Frosio, I.; Pedersini, F.; Borghese, N.A. Autocalibration of MEMS Accelerometers. IEEE Trans. Instrum. Meas. 2009, 58, 2034–2041. [Google Scholar]

- Petkov, P.; Slavov, T. Stochastic Modeling of MEMS Inertial Sensors. Cybern. Inf. Technol. 2010, 10, 31–40. [Google Scholar]

- Saini, V.; Rana, S.; Kuber, M. Online Estimation of State Space Error Model for MEMS IMU. J. Model. Simul. Syst. 2010, 1, 219–225. [Google Scholar]

- Brown, R.G.; Hwang, P.Y. Introduction to Random Signals and Applied Kalman Filtering; John Wiley & Sons: New York, NY, USA, 1992; pp. 80–111. [Google Scholar]

- Lee, J.; Hong, S.; Moon, N.; Oh, S.-J. Acoustic Sensor-Based Multiple Object Tracking with Visual Information Association. EURASIP J. Adv. Signal. Process. 2010, 2010, 591340. [Google Scholar]

- El-Sheimy, N.; Hou, H.; Niu, X. Analysis and Modeling of Inertial Sensors Using Allan Variance. IEEE Trans. Instrum. Meas. 2008, 57, 140–149. [Google Scholar]

- Friedland, B. Analysis Strapdown Navigation Using Quaternions. IEEE Trans. Aerosp. Electron. Syst. 1978, 764–768. [Google Scholar]

- Chroust, S.; Vincze, M. Fusion of Vision and Inertial Data for Motion and Structure Estimation. J. Robot. Syst. 2004, 21, 73–83. [Google Scholar]

- Armesto, L.; Tornero, J.; Vincze, M. Fast Ego-Motion Estimation with Multi-Rate Fusion of Inertial and Vision. Int. J. Robot. Res. 2007, 26, 577–589. [Google Scholar]

- Armesto, L.; Tornero, J.; Vincze, M. On Multi-Rate Fusion for Non-Linear Sampled-Data Systems: Application to a 6D Tracking System. Robot. Auton. Syst. 2008, 56, 706–715. [Google Scholar]

- Hol, J.D.; Schön, T.B.; Luinge, H.; Slycke, P.J.; Gustafsson, F. Robust Real-Time Tracking by Fusing Measurements from Inertial and Vision Sensors. J. Real-Time Image Process. 2007, 2, 149–160. [Google Scholar]

- Yi, Y. On Improving the Accuracy and Reliability of GPS/INS-Based Direct Sensor Georeferencing; Ohio State University: Columbus, OH, USA, 2007. [Google Scholar]

- Lu, C.-P.; Hager, G.D.; Mjolsness, E. Fast and Globally Convergent Pose Estimation from Video Images. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 610–622. [Google Scholar]

- Titterton, D.; Weston, J. Strapdown Inertial Navigation Technology, 2nd ed.; Zarchan, P., Ed.; American Institute of Aeronautics & Astronautics: Reston, VA, USA, 2004. [Google Scholar]

- Seong, S.M.; Lee, J.G.; Park, C.G. Equivalent Arma Model Representation for RLG Random Errors. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 286–290. [Google Scholar]

- IEEE Standard Specification Format Guide and Test Procedure for Linear, Single-Axis, Non-Gyroscopic Accelerometers. In IEEE Std 1293–1998; The Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 1999; pp. 166–176.

- Quinchia, A.G.; Ferrer, C.; Falco, G.; Falletti, E.; Dovis, F. Analysis and Modelling of MEMS Inertial Measurement Unit. Proceedings of International Conference on Localization and GNSS, Starnberg, Germany, 25–27 June 2012; pp. 1–7.

- IEEE Standard Specification Format Guide and Test Procedure for Single-Axis Laser Gyros. In IEEE Std 647–1995; The Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 1996; pp. 68–79.

- Niu, X.; Chen, Q.; Zhang, Q.; Zhang, H.; Niu, J.; Chen, K.; Shi, C.; Liu, J. Using Allan Variance to Analyze the Error Characteristics of GNSS Positioning. GPS Solut. 2013, 1–12. [Google Scholar]

- Tehrani, M. Ring Laser Gyro Data Analysis with Cluster Sampling Technique. Proceedings of 1983 Technical Symposium East, Washington, DC, USA, 4–8 April 1983; pp. 207–220.

- Bouguet, J.-Y. Camera Calibration Toolbox for Matlab. Available online: http://www.vision.caltech.edu/bouguetj/calib_doc/ (accessed on 12 November 2011).

- Horn, B.K. Closed-Form Solution of Absolute Orientation Using Unit Quaternions. J. Opt. Soc. Am. 1987, 4, 629–642. [Google Scholar]

- Woodman, O.J. An Introduction to Inertial Navigation; University of Cambridge: Cambridge, UK, 2007. [Google Scholar]

- Zhou, S.; Fei, F.; Zhang, G.; Mai, J.D.; Liu, Y.; Liou, J.Y.J.; Li, W.J. 2D Human Gesture Tracking and Recognition by the Fusion of MEMS Inertial and Vision Sensors. IEEE Sens. J. 2014, 14, 1160–1170. [Google Scholar]

| Bias Instability | Velocity Random Walk | |

|---|---|---|

| X axis | 9.086 ± 0.201 mm/s2 (at 10.24 s) | 18.946 ± 0.419 mm/s2/ |

| Y axis | 8.540 ± 0.189 mm/s2 (at 10.24 s) | 18.154 ± 0.401 mm/s2/ |

| Z axis | 5.560 ± 0.174 mm/s2 (at 20.48 s) | 17.520 ± 0.548 mm/s2/ |

| Bias Instability | Angle Random Walk | |

|---|---|---|

| X axis | 0.03287 ± 0.000073 °/s (at 10.24 s) | 0.058479 ± 0.001293 °/ |

| Y axis | 0.021762 ± 0.005816 °/s (at 1310.72 s) | 0.066069 ± 0.017658 °/ |

| Z axis | 0.032553 ± 0.001018 °/s (at 20.48 s) | 0.064275 ± 0.002011 °/ |

| Algorithm | X (mm) | Y (mm) | Z (mm) | Mean (mm) | Total (mm) |

|---|---|---|---|---|---|

| General model | 20.47 | 17.6 | 25.84 | 21.33 | 63.91 |

| Error model | 7.47 | 17.89 | 18.38 | 14.58 | 43.74 |

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Zhou, S.; Fei, F.; Zhang, G.; Liu, Y.; Li, W.J. Hand-Writing Motion Tracking with Vision-Inertial Sensor Fusion: Calibration and Error Correction. Sensors 2014, 14, 15641-15657. https://doi.org/10.3390/s140915641

Zhou S, Fei F, Zhang G, Liu Y, Li WJ. Hand-Writing Motion Tracking with Vision-Inertial Sensor Fusion: Calibration and Error Correction. Sensors. 2014; 14(9):15641-15657. https://doi.org/10.3390/s140915641

Chicago/Turabian StyleZhou, Shengli, Fei Fei, Guanglie Zhang, Yunhui Liu, and Wen J. Li. 2014. "Hand-Writing Motion Tracking with Vision-Inertial Sensor Fusion: Calibration and Error Correction" Sensors 14, no. 9: 15641-15657. https://doi.org/10.3390/s140915641