Vehicle Tracking for an Evasive Manoeuvres Assistant Using Low-Cost Ultrasonic Sensors

Abstract

Many driver assistance systems require knowledge of the vehicle environment. As these systems increase in complexity and performance, this knowledge of the environment needs to be more complete and reliable, so sensor fusion combining long-, medium- and short-range sensors is now being used. This paper analyzes the feasibility of using ultrasonic sensors for low-cost positioning and tracking of vehicles in the lane adjacent to the host vehicle, in order to identify free areas around the vehicle and provide information to an automatic collision avoidance system that can perform autonomous braking and lane change manoeuvres. A laser scanner is used for the early detection of obstacles in the direction of travel, while two ultrasonic sensors monitor the blind spot of the host vehicle. The results of tests on a test track demonstrate the ability of these sensors to accurately determine the kinematic variables of the obstacles encountered, despite a clear limitation in range.

1. Introduction

Many driver assistance systems require some knowledge of the vehicle's surroundings. As these systems increase in complexity and performance, this knowledge of the environment needs to be more complete and reliable, which means establishing an area around the vehicle that must be continuously monitored [1,2]. There are several technical solutions for supervising this area. A first classification distinguishes between long- and short-range sensors. The first group includes laser scanners, radar and computer vision; the second group notably includes ultrasonic and capacitive sensors. These sensors offer a wide variety of performance characteristics and their cost can vary significantly, but long-range sensors are usually more expensive and complex than short-range ones. For this reason, when multiple sensors are included in a specific assistance system, the characteristics of each one should be assessed in order to choose those that provide a satisfactory solution without increasing the price of the system unnecessarily.

A collision avoidance system equipped with a laser scanner on the front of the vehicle is described in [3]. This system can detect obstacles and automatically take action to stop or avoid a collision, assessing whether there are other vehicles travelling in the opposite direction in the adjacent lane. High performance is required from this sensor because reliable and accurate data are essential for performing safe autonomous manoeuvres. However, this system has the limitation of not taking into account obstacles in the adjacent lane that are travelling in the same direction as the host vehicle. In particular, the most critical situation in terms of safety arises when the system executes a lane change manoeuvre to avoid an obstacle while another vehicle is simultaneously overtaking the host vehicle in the adjacent lane. To avoid this situation, the system needs to monitor the adjacent lane in the direction in which the host vehicle is moving. Using high-performance sensors, such as the laser scanner used for detection at the front, is clearly a feasible and reliable solution. However, the cost of the system would increase greatly, while the capabilities of the sensor would exceed the needs of the application. Therefore, this paper analyzes the feasibility of using low-cost ultrasonic sensors to detect vehicles in the blind spot and to provide information on their kinematics to a collision avoidance system that can perform evasive manoeuvres. This monitoring goes beyond current simple detection systems because the relative speed of the obstacles must now be known. Given the limited maximum range of these sensors, the scope of application must also be analyzed.

The paper is organized as follows: firstly, Section 2 presents some related work on the use of different sensors for supporting driver assistance systems. Then, Section 3 describes the system layout of the collision avoidance system based on the one presented in [3]. Section 4 describes the method for estimating relative speed using ultrasonic sensors and Section 5 includes the selection of the sensors and the performance tests. Finally, Section 6 discusses the limitations of using this sensor system taking into account the decision conditions managed by the avoidance system, while Section 7 presents the main conclusions.

2. Related Work

Several sensors can be used to monitor the surroundings of a vehicle. The most commonly used are laser scanners [4–6], radar [7–9] and computer vision [10–13]. Several studies compare the advantages and limitations of these technologies [14]. They clearly show the great potential of computer vision for reconstructing a model of the environment, although problems arise in adverse weather conditions and in environments with changing lighting conditions. Furthermore, its high computational cost is a relevant drawback. On the other hand, 77 GHz microwave radar and some laser scanners offer similar measuring ranges, exceeding 200 m. This is not the case with 24 GHz radar, which generally operates at shorter distances. However, the laser scanner is highly versatile, working over both long and short distances with the same device. The main drawback of radar regarding its measurement field is its limited lateral vision, which restricts the applications these sensors can be used for. Under conditions of fog, rain or heavy snow, radar usually has no detection problems, but the laser scanner is affected by weather conditions, since particles can reflect and disperse the beam, which can attenuate the signal or produce false echoes [15]. These problems have been addressed by increasing the number of horizontal scan planes and/or by including filtering algorithms [16]. Finally, considering the distances and types of the objects detected, these analyses show computer vision and the laser scanner to be the more versatile tools [17].

Numerous studies indicate that if a complete representation of the environment or a higher confidence level in detection is required, sensor fusion from multiple information sources is essential [18–21]. Such fusion supports safety applications such as Adaptive Cruise Control based on any of the three technologies mentioned [22,23], pre-crash collision avoidance systems integrating computer vision and laser scanners [24], and autonomous driving systems merging several technologies [22,25,26].

On the other hand, simpler applications such as parking assistants [27–29], finding free parking spaces [30,31] or blind spot monitoring, particularly when driving in urban areas [32–35], rely on lower-performance and less complex sensors, such as ultrasonic sensors, whose cost is usually significantly lower than that of the sensors mentioned previously. However, in these cases their use is usually limited to detecting obstacles, and they are not used for tracking and analysing the kinematic characteristics of these obstacles. For example, the sensors used for parking assistance simply warn the driver of the remaining distance to an obstacle. In the case of blind spot monitoring, the system is again limited to alerting the driver to the presence or absence of other vehicles in a specific area around the host vehicle. More demanding applications, such as adaptive cruise control for urban areas, have also been based on ultrasonic sensors [36]. Work based on installing ultrasonic sensors on a roadside pole to detect vehicles on the road is reported in [37]. Other research using ultrasonic sensors in the automotive field can be found in [38,39]. It is also common to find research that combines ultrasonic sensors for near-range detection with a laser scanner [40] or radar [41] for long-range detection.

3. System Layout

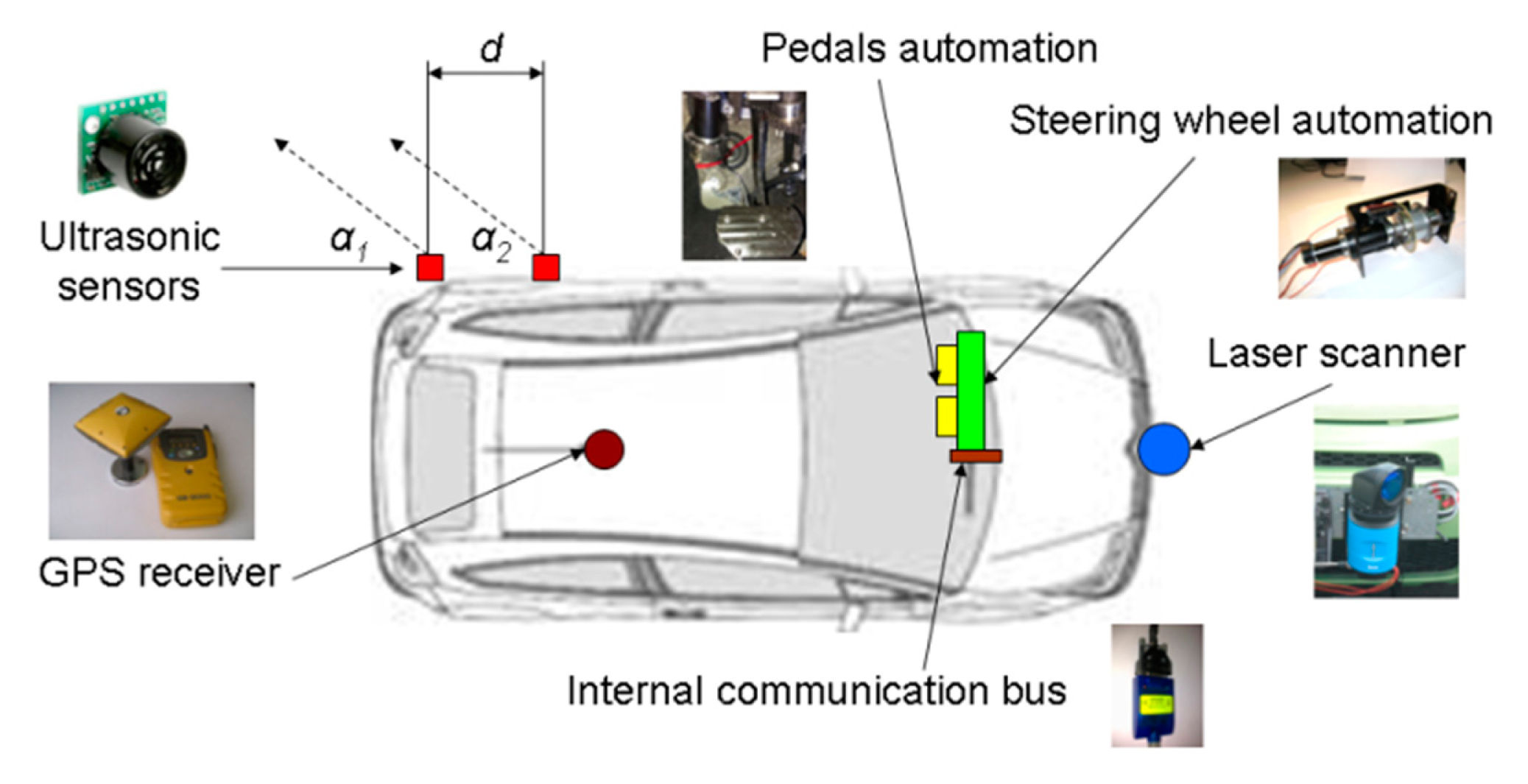

The detection system presented in [3] is complemented by ultrasonic sensors placed at the rear of the vehicle's side (Figure 1) in order to complete the supervision of the vehicle surroundings and detect any vehicle travelling in the blind spot in the adjacent lane.

The primary environment detection sensor is therefore the laser scanner on the front of the vehicle. Specifically, a Sick LRS 1000 sensor is used, whose performance with revised obstacle identification algorithms has been satisfactorily demonstrated in [24,42].

In addition, the adjacent lane is monitored by two low-cost ultrasonic sensors. Their layout is defined by three parameters: the distance d between them and the angles α1 and α2 formed by the beam centre line of each sensor and the side line of the vehicle. It should be noted that sensor 1 must be located as close as possible to the rear of the vehicle, so that a vehicle travelling in the adjacent lane is detected as soon as possible. Likewise, small values of d and of the angles should be selected, subject to the restriction α1 ≤ α2.

Finally, as in the case described in [3], the system requires the vehicle speed, acquired from the internal communication bus, and the vehicle position on a digital map, provided by a Topcon DGPS RTK GB-300 receiver [43]. The automation of the vehicle steering and speed (accelerator and brake pedals) is described in detail in [44].

4. Method for Estimating Relative Speed Using Ultrasonic Sensors

4.1. Method

Given the configuration of the ultrasonic sensors shown in Figure 1, the relative speed between the host vehicle and a vehicle moving in the adjacent lane is determined from the time elapsed between the detections of the two sensors. In the simplest situation, the distance between the two detections can be approximated by d, although this estimate is incorrect when α1 ≠ α2 or when the paths of the two vehicles are not perfectly parallel. For more accurate results, the point of the sensor beam at which the obstacle is located must be identified. Thus, under the hypothesis that the first point of the vehicle detected by both sensors of the host vehicle is the same, the relative speed is calculated in the following steps:

- (a)

Characterization of the sensor beam.

- (b)

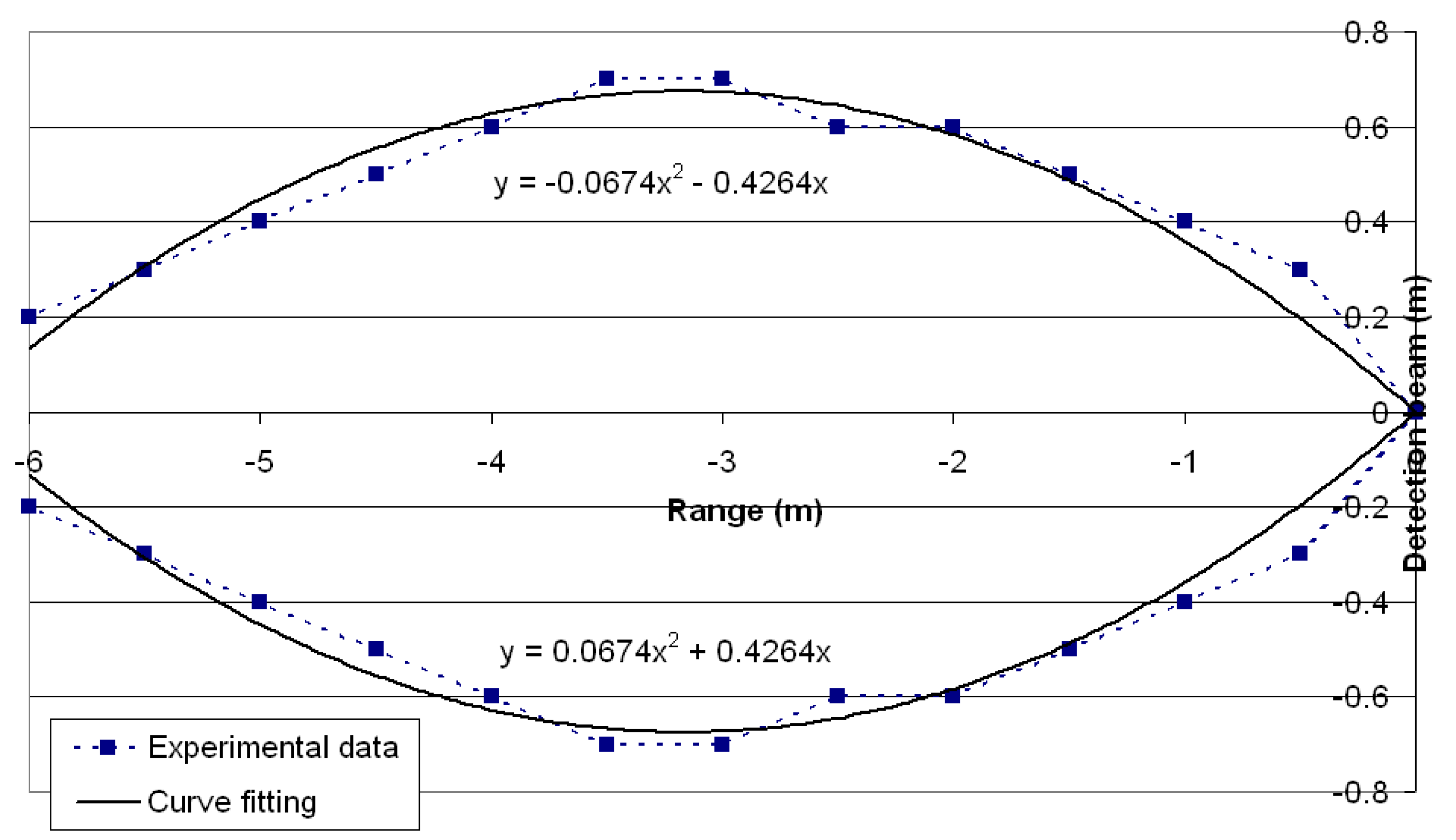

Curve-fitting of the sensor beam. In general, this curve-fitting is possible using second-degree polynomials following Equation (1) (Figure 2a):

- (c)

The beam is rotated the orientation angle αi around the coordinates origin (Figure 2b). The relationship between the old and new coordinates is given by the well-known Equation (2):

Thus, after some calculations, the new form of the parabola in provided by Equation (3):

where:

- (d)

Identification of the detection points using the distances r1 and r2 of the first detected point of the vehicle moving along the lane adjacent to both sensors (Figure 2c). This intersection is located at the intersection of the beam sensor and the circumference whose centre is located at the sensor position and the radius is the distance detected by it.

- (e)

Relative speed (vx, vy) calculation considering the coordinates of the detection points of sensors 1 (x1, y1) and 2 (x2, y2) and the elapsed time between the detection times of the two sensors T using Equation (7):
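Steps (b) to (e) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: one beam edge is modelled in a sensor frame (u along the beam axis, w across it) by a hypothetical fitted polynomial w = c2·u² + c1·u + c0, whose sign choice selects which edge faces the incoming vehicle; the rotation uses the standard counter-clockwise convention, which may differ in sign from Equation (2); and the detection point is found numerically by bisection rather than by intersecting Equation (3) with the circle analytically. All function names and the example layout are hypothetical.

```python
import math


def beam_edge(u, coeffs):
    """Lateral offset w of one beam edge at axial distance u (sensor frame),
    from a fitted second-degree polynomial w = c2*u**2 + c1*u + c0."""
    c2, c1, c0 = coeffs
    return c2 * u ** 2 + c1 * u + c0


def detection_point(r, coeffs, theta, sensor_xy, u_max=10.0, tol=1e-9):
    """Point of the beam edge at measured range r, in the vehicle frame.

    Solves hypot(u, w(u)) = r by bisection on [0, u_max] (assumes the range
    grows monotonically along the edge), then applies a standard 2-D rotation
    by theta (rad) and shifts the result to the sensor position.
    """
    def excess(u):
        return math.hypot(u, beam_edge(u, coeffs)) - r

    lo, hi = 0.0, u_max
    if excess(lo) > 0 or excess(hi) < 0:
        raise ValueError("measured range lies outside the modelled beam")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if excess(mid) < 0:
            lo = mid
        else:
            hi = mid
    u = 0.5 * (lo + hi)
    w = beam_edge(u, coeffs)
    # Rotate the sensor-frame point and translate it to the sensor position.
    x = u * math.cos(theta) - w * math.sin(theta) + sensor_xy[0]
    y = u * math.sin(theta) + w * math.cos(theta) + sensor_xy[1]
    return x, y


def relative_speed(p1, p2, T):
    """Displacement of the detected point between sensors 1 and 2 over T."""
    return (p2[0] - p1[0]) / T, (p2[1] - p1[1]) / T


if __name__ == "__main__":
    # Hypothetical layout: sensors 0.3 m apart on the side line, beams rotated
    # by 20 degrees, straight-edge beam model, equal measured ranges.
    theta = math.radians(20.0)
    p1 = detection_point(4.0, (0.0, 0.0, 0.0), theta, (0.0, 0.0))
    p2 = detection_point(4.0, (0.0, 0.0, 0.0), theta, (0.3, 0.0))
    print(relative_speed(p1, p2, 0.03))
```

Numerical root-finding keeps the sketch short and avoids solving the rotated conic in closed form; on an embedded target, the analytic intersection would probably be preferred.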

4.2. Acquisition Frequency Restrictions

Since the relative speed estimate is based on the detection of a single point by each sensor, the data acquisition frequency should be high enough to ensure that a preset maximum relative speed vmax can be detected and that the uncertainty in the time at which the first point of the obstacle is detected is sufficiently low. Specifically, the first requirement dictates that the sampling frequency f and the time between two samples Δt satisfy Equation (8):

Moreover, the resolution of the relative speed estimate depends on the number of sampling cycles between the detections of the first and second sensors, and is given by:
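Equations (8) and (9) are not reproduced here. As a rough illustration only, the sketch below assumes the simplest case of Section 4.1 (detection points a distance d apart) and that the elapsed time T is known only as a whole number n of sample periods 1/f. The "at least one full cycle" criterion and both function names are hypothetical, not the authors' definitions.

```python
import math


def min_sampling_frequency(d, v_max, cycles=1):
    """Lowest sampling frequency (Hz) leaving at least `cycles` sample periods
    between the two detections at the maximum relative speed v_max (m/s)."""
    return cycles * v_max / d


def speed_quantization(d, f, v):
    """Bracket the true relative speed v when T is counted in whole samples.

    With detection points a distance d (m) apart, T = d / v spans n whole
    sample periods 1/f, so the estimate can only take the values d*f/n, and
    the step between adjacent values is d*f/(n*(n+1)).
    Returns (n, upper_estimate, lower_estimate, step).
    """
    n = math.floor(d * f / v)  # whole sampling cycles between detections
    if n < 1:
        raise ValueError("sampling too slow: no full cycle between detections")
    upper = d * f / n
    lower = d * f / (n + 1)
    return n, upper, lower, upper - lower


if __name__ == "__main__":
    # Hypothetical values: d = 0.3 m, f = 250 Hz, relative speed 4 m/s.
    print(speed_quantization(0.3, 250.0, 4.0))
```

Under these assumptions the step is roughly v²/(d·f), so a short baseline d has to be compensated by a high acquisition frequency f.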

5. Performance Tests of the Ultrasonic Sensors

5.1. Ultrasonic Sensors Selection

Table 1 contains the main features of the ultrasonic sensors considered. From these data, the LV-EZ3 sensor offers the greatest benefits: its range, although limited, is the longest of the sensors considered and, additionally, its sampling frequency exceeds the 250 Hz specified in Section 4.2.

5.2. Sensors Configuration and Layout

Prior to their final assembly, the sensors were tested to characterize their operation under conditions similar to those of the subsequent application. First, Figure 3 shows the experimentally measured sensor beam and its curve-fitting with a second-degree polynomial.

In addition, several tests were conducted on different configurations of sensors varying d and αi with the purpose of identifying the most convenient configuration. The following conclusions have been reached:

- (1) There is a minimum value of the angle αi below which detection of an obstacle with the characteristics of a vehicle travelling in the adjacent lane is not reliable, with a high probability of false negatives. Tests were performed with angles between 10° and 50° in 5° increments. The limit value providing reliable obstacle identification was set at 20°, because no misdetections were found for angles down to this value, whereas smaller angles produced more than 25% misdetections, so the detection results lose their reliability. Moreover, this angle is sufficient, given the maximum range of the sensor, to detect a vehicle travelling in the adjacent lane, assuming standard vehicle dimensions (1.6 m wide or more) and lane widths (3.5 m or narrower).

- (2) When the distance d is small and both sensors operate simultaneously, their measurements interfere. Crosstalk is a common problem when multiple ultrasonic sensors are used: a sensor receives the signal generated by another sensor and spurious readings are recorded, which can lead to false alarms that must be filtered. Several solutions can be found in the technical literature. For example, [45] describes a microcontroller-based method that reduces crosstalk by firing each transducer with a pseudorandom number of pulses, so that the echo of each transducer can be uniquely identified. A simpler, but reliable and robust, solution is used in the proposed system: the two sensors operate alternately, so that once sensor 1 detects an obstacle it is deactivated and sensor 2 is activated; when sensor 2 detects the obstacle, it is in turn deactivated and sensor 1 is reactivated. Activating each sensor by switching its power supply only allows frequencies of up to 1.3 Hz, which, as mentioned above, are insufficient. However, using the enable signal allows switching and acquisition frequencies of 250 Hz. Even so, at these high frequencies the switching method does not prevent interference if the distance d is less than 0.3 m.
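The alternate activation scheme described in item (2) can be simulated as a small state machine. This is a sketch under assumptions: the enable signal is modelled as a flag that selects which sensor is read in each cycle, an echo closer than a hypothetical threshold `max_range` counts as a detection, and the readings are synthetic. Function names are hypothetical.

```python
def alternate_detections(readings_1, readings_2, max_range):
    """Simulate the alternate activation of the two sensors.

    Only one sensor is enabled per cycle. Sensor 1 stays enabled until it
    reports an echo closer than max_range; it is then disabled and sensor 2
    is enabled until it detects too, after which sensor 1 is re-enabled.
    Readings are ranges in metres, or None when there is no echo.
    Returns a list of (cycle_index, sensor_id) detection events.
    """
    active = 1
    events = []
    for k, (r1, r2) in enumerate(zip(readings_1, readings_2)):
        r = r1 if active == 1 else r2  # the disabled sensor is never read
        if r is not None and r < max_range:
            events.append((k, active))
            active = 2 if active == 1 else 1
    return events


def elapsed_time(events, f):
    """Time T (s) between the first sensor-1 event and the following
    sensor-2 event; events strictly alternate, starting with sensor 1."""
    if len(events) < 2:
        return None
    (k1, _), (k2, _) = events[0], events[1]
    return (k2 - k1) / f


if __name__ == "__main__":
    # Synthetic readings sampled at 250 Hz (None = no echo).
    r1 = [None, None, 1.5, 1.5, 1.5, 1.5, 1.5]
    r2 = [None, None, None, None, 1.5, 1.5, 1.5]
    events = alternate_detections(r1, r2, max_range=3.0)
    print(events, elapsed_time(events, 250.0))
```

Because only the enabled sensor is read, crosstalk between simultaneous emissions cannot arise in this model; the interference observed on the real hardware for d < 0.3 m is not captured by a simulation of this kind.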

As a result, the final configuration of the sensors in the system is d = 0.3 m and α1 = α2 = 20°. With these values, the system detects the vehicle in the adjacent lane, and estimates its speed, as far from the host vehicle as possible.

5.3. Vehicle Positioning and Tracking Tests

Finally, the pair of ultrasonic sensors was tested to estimate the overtaking speed of a vehicle under conditions simulating the actual use of the system. For this purpose, the sensors were located as indicated in Section 5.2. In addition, a Sick LRS 1000 laser scanner was installed in order to compare the speed estimates provided by the two types of sensor, given the proven reliability of the latter [42]. The refresh rate of the laser scanner was 10 Hz, with an angular resolution of 0.25°. The acquisition frequency of the ultrasonic sensors was set to 250 Hz, because high frequencies are needed to achieve an appropriate resolution and the sensors had been experimentally verified to work correctly at that frequency.

The tests consisted of a passenger car overtaking along a path approximately parallel to the line of sensors, at a lateral distance Y of approximately 1.5 m from them, at speeds v between 10 km/h and 40 km/h in 5 km/h increments, maintained by the vehicle cruise control (Figure 4), in order to simulate the usual operating conditions of the sensors in the driving assistance system. Because the cruise control could not maintain exactly the same speed in every test even when the target value was the same, 5 repetitions were performed at each speed level.

Table 2 shows the results obtained. Because the actual speed reached by the vehicle varied slightly between repetitions with the same target speed, average values are shown; however, the dispersion is quite narrow, so the calculated errors can be considered representative of each speed level. The laser scanner provides results very similar to the actual speed measured on the vehicle communication bus, as already shown in tests with more complex configurations [3]. The measurement procedure with the ultrasonic sensors has also provided good results, with very small errors even at the highest speeds (the maximum error, 2.1%, was obtained with a target speed of 40 km/h). It should be noted that the system measures relative speeds and, since relative speeds higher than those tested are unusual in this application, the usual operating range of the sensors in the detection system has been adequately covered. Thus, the ability of the pair of ultrasonic sensors to position and track other vehicles during overtaking manoeuvres in the adjacent lane has been verified with satisfactory results.

6. Discussion of Feasibility of the Proposed Solution for an Evasive Manoeuvres Assistant System

6.1. Driving Scenarios Considered by the Evasive Manoeuvres Assistant System

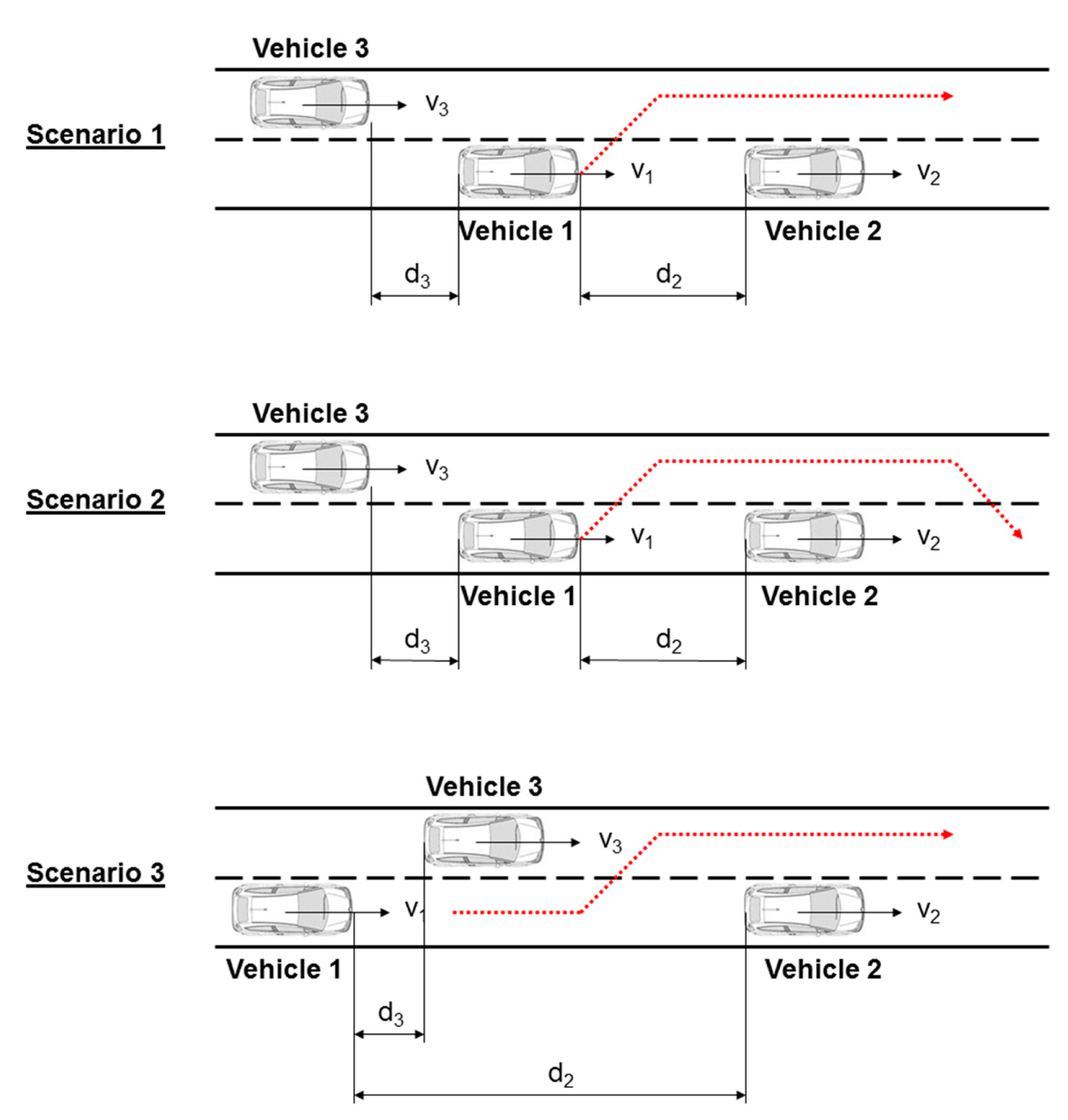

As stated above, this article aims to overcome the shortcomings of the environment detection system described in [3] using low-cost solutions, thereby covering a wider range of situations in the decision module of the collision avoidance system. Thus, three further cases have been added to those already covered in [3] (Figure 5).

In these cases, vehicle 1 is travelling at a speed v1 greater than the speed v2 of vehicle 2, which precedes it in the same lane, so vehicle 1 can either brake to adapt its speed or perform an overtaking manoeuvre. In the scenarios considered in [3], vehicle 3, which may prevent the overtaking manoeuvre, is moving in the opposite direction in the adjacent lane and can therefore be detected by the same sensor responsible for detecting vehicle 2. In the new scenarios, vehicle 3 is moving in the same direction and, depending on the relative speed, is either overtaking vehicle 1 (v3 > v1) or being passed by it (v3 < v1).

The decision module, which chooses between braking and evasive manoeuvres, must evaluate under which conditions vehicle 1 can perform these manoeuvres safely to avoid a collision with vehicle 2.

6.1.1. Condition for Safe Braking

The distance between vehicles 1 and 2 should be larger than the value provided by Equation (10) so the speed can be adapted from v1 to v2:
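Equation (10) is not reproduced above. As a generic kinematic illustration, not necessarily the authors' expression, the sketch below computes the gap that vehicle 1 needs in order to slow from v1 to v2 when vehicle 2 keeps a constant speed and vehicle 1 brakes at constant deceleration after an optional delay. The function name and the `t_react` parameter are hypothetical.

```python
def min_braking_gap(v1, v2, decel, t_react=0.0):
    """Gap (m) consumed while vehicle 1 slows from v1 to v2 (speeds in m/s).

    Assumes vehicle 2 keeps constant speed v2 and vehicle 1 starts braking
    at constant deceleration `decel` (m/s^2) after a delay t_react (s).
    """
    dv = v1 - v2
    if dv <= 0:
        return 0.0  # vehicle 1 is not closing in on vehicle 2
    # Closing distance during the delay plus relative braking distance.
    return dv * t_react + dv ** 2 / (2.0 * decel)


if __name__ == "__main__":
    # Hypothetical case: 72 km/h behind 36 km/h, braking at 5 m/s^2.
    print(min_braking_gap(20.0, 10.0, 5.0))
```

Only the relative speed v1 − v2 matters in this model, because the gap is measured between the two moving vehicles rather than to a fixed point.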

6.1.2. Evasive Manoeuvre (Scenario 1)

In order for the evasive manoeuvre to be possible, the distance between vehicles 1 and 2 should comply with Equation (11):

Furthermore, in this scenario, vehicle 3 must be able to adapt its speed to that of vehicle 1 before a collision occurs. For this reason, Equation (12) should be verified:

6.1.3. Evasive Manoeuvre (Scenario 2)

In this case, Equation (11) should be verified. In addition, depending on the value of d3, different situations can occur:

- (a) Vehicle 3 should decelerate and reach v1.
- (b) Vehicle 3 should decelerate and reach a speed v3′ higher than v1.
- (c) Vehicle 3 should not modify its speed.

Scenario 2a is the most critical one because it implies a minimum distance d3 below which a collision between vehicles 1 and 3 cannot be avoided. This limit is provided by Equation (12).

Scenario 2c implies that vehicle 1 can perform the overtaking manoeuvre before vehicle 3 collides with it when the latter does not modify its speed. This condition can be expressed mathematically by Equation (13):

In any intermediate situation between the limits given by Equations (12) and (13), vehicle 3 must modify its speed, but its final speed v3′ can be higher than v1. To minimize the speed reduction of vehicle 3, this final speed should be reached at the end of vehicle 1's manoeuvre, so Equation (14) provides the final speed of vehicle 3:

It should be noted that setting v3′ = v1 in Equation (14) yields Equation (12). Furthermore, if Equation (13) is verified, Equation (14) does not apply, because vehicle 3 does not need to modify its speed and can maintain v3. Finally, Equations (13) and (14) are not applicable when v1 = v2, because overtaking manoeuvres are not possible in this case.

6.1.4. Evasive Manoeuvre (Scenario 3)

In this case, vehicle 1 should wait to start its manoeuvre until the front part of vehicle 3 reaches the rear part of vehicle 1. Then, the evasive manoeuvre can start and the distance between vehicles 1 and 2 at that moment needs to be large enough to perform the lane change. This condition is provided by Equation (15):

It should be noted that the overtaking manoeuvre is not possible when v1 = v3, so Equation (15) is not applicable in this situation.

6.2. Discussion of the Range of Application

The major limitation of the detection system lies in the range of the sensors [46]. The most critical situation corresponds to the condition established by Equation (12) in scenarios 1 and 2a, because this equation sets the limit for avoiding a collision between vehicles 1 and 3 while the former avoids vehicle 2 with an evasive manoeuvre. In this case, the relative speed between vehicles 1 and 3 cannot exceed a certain value. The largest distance d3 that the ultrasonic sensors can detect can be approximated by Equation (16):

For example, if a deceleration of 4 m/s² [47–49] and a reaction time of 1.5 s [50–52] are chosen for vehicle 3 as typical driver values in this type of manoeuvre, the avoidance system implemented in vehicle 1 can only rely on the speed estimated by the ultrasonic sensors if v3 − v1 ≤ 10.3 km/h. If vehicle 3 is also equipped with an automatic avoidance system that can take a quicker decision (tr = 0.5 s) and brake harder (8 m/s², considering that it is an emergency manoeuvre), relative speeds of up to 21.9 km/h are admissible. This means that the decision module of the collision avoidance system described in [3] can only consider the evasive manoeuvre as a safe option when the detected relative speed is below these limits. Although this is a clear limitation, the second case still provides a reasonably wide field of application for the ultrasonic sensor-based solution.
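The two speed limits in this example can be cross-checked with a short calculation. Since Equation (16) is not reproduced here, the sketch assumes, as our own hypothesis, that the limit takes the usual reaction-plus-braking form Δv·tr + Δv²/(2a) ≤ d3, with Δv = v3 − v1; the admissible closing speed is then the positive root of that quadratic. Back-solving shows that a detectable distance of about d3 = 5.34 m reproduces both the 10.3 km/h and the 21.9 km/h figures; that distance is inferred, not a value stated in the paper. Function names are hypothetical.

```python
import math


def max_relative_speed(d3, decel, t_react):
    """Largest closing speed dv (m/s) with dv*t_react + dv**2/(2*decel) <= d3.

    Positive root of dv**2/(2*decel) + t_react*dv - d3 = 0.
    """
    return decel * (math.sqrt(t_react ** 2 + 2.0 * d3 / decel) - t_react)


def kmh(v):
    """Convert m/s to km/h."""
    return 3.6 * v


if __name__ == "__main__":
    d3 = 5.34  # inferred detectable distance (m), an assumption
    print(round(kmh(max_relative_speed(d3, 4.0, 1.5)), 1))  # human driver
    print(round(kmh(max_relative_speed(d3, 8.0, 0.5)), 1))  # automated system
```

The reaction term dominates for the human driver, which is why halving the deceleration and tripling the reaction time roughly halves the admissible relative speed.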

Furthermore, the limited range implies that the lateral distance between the host vehicle and the vehicle in the adjacent lane must not be too large. More specifically, when the lateral separation is greater than 2.2 m, vehicle 3 cannot be detected by the selected sensors. Despite this limitation, the speed calculation algorithm based on two ultrasonic sensors is robust, even when vehicles 1 and 3 do not follow exactly parallel paths, because the exact point at which vehicle 3 enters each sensor beam can be obtained and used to calculate vx and vy according to Equation (7).
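The 2.2 m lateral limit is consistent with simple beam geometry. The sketch below assumes a nominal maximum range of 6.45 m (254 inches, the figure MaxBotix gives for its LV-MaxSonar-EZ series; Table 1 is not reproduced here) and treats the beam as its centre line, ignoring beam width and target reflectivity. Function names are hypothetical.

```python
import math


def lateral_reach(max_range, alpha_deg):
    """Lateral distance (m) covered by the beam centre line at the sensor's
    maximum range, for a beam inclined alpha_deg to the vehicle side line."""
    return max_range * math.sin(math.radians(alpha_deg))


def is_detectable(lateral_gap, max_range, alpha_deg):
    """True if a target side at lateral_gap (m) is within the lateral reach."""
    return lateral_gap <= lateral_reach(max_range, alpha_deg)


if __name__ == "__main__":
    # Assumed 6.45 m range and the selected 20 degree orientation.
    print(lateral_reach(6.45, 20.0))
    print(is_detectable(1.5, 6.45, 20.0), is_detectable(2.5, 6.45, 20.0))
```

With α = 20° the reach is about 2.21 m, in line with the limit stated above. Two 1.6 m wide vehicles centred in adjacent 3.5 m lanes are about 1.9 m apart laterally, which falls within this reach.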

7. Conclusions

This paper has analyzed the feasibility of supplementing the collision avoidance system presented in [3] with a pair of ultrasonic sensors that monitor the blind spot in the adjacent lane and provide more options for automatic actuation in the decision module of the collision avoidance system. The initial system was based only on a frontal laser scanner, but its limitations in detecting the vehicle surroundings were clear and additional sensors were required. The use of ultrasonic sensors in this system goes beyond their conventional application, which is limited to presence detection and does not extend to estimating the kinematic characteristics of obstacles. Therefore, the main contribution of this paper lies in the use of ultrasonic sensors to obtain information on the kinematics of obstacles moving around the vehicle.

Satisfactory results have been obtained in speed estimation using two ultrasonic sensors, with a level of accuracy similar to that of the laser scanner, which is fully adequate for the ultimate purpose of the collision avoidance system. However, it should be noted that, whereas the laser scanner can work at an acquisition frequency of 10 Hz without loss of accuracy because it locates the obstacle precisely and with high resolution, the ultrasonic sensors require acquisition frequencies of about 250 Hz, since it is critical to identify the specific instant at which each sensor detects the first point of the obstacle. The other limitation of the detection system is the limited range of the ultrasonic sensors. More specifically, there are restrictions on the relative speed between vehicles 1 and 3 but, as shown above, they are not as limiting as might first appear, and the avoidance system can still work properly in a wide range of scenarios.

Finally, it should be noted that the current detection system is not yet complete enough to assess the surroundings of the host vehicle with full reliability. For this reason, a medium-range sensor needs to be included in the rear part of the vehicle. The information provided by this new sensor would then be fused with the detections of the ultrasonic sensors, which work correctly at short range as shown in this paper. One of the main objectives is to obtain a low-cost detection system in which only the front sensor (responsible for detecting the primary obstacle) is a high-performance sensor, while the others complement its information without significantly increasing the total cost of the system.

Acknowledgments

The work reported in this paper has been partly funded by the Spanish Ministry of Science and Innovation (SAMPLER project TRA2010-20225-C03-03, and IVANET project TRA 2010-15645). The authors would like to thank Maria Benito and Ma Goucheng for their help during some preliminary tests.

Author Contributions

Felipe Jimenez proposed the collision avoidance system and developed the decision module. José Eugenio Naranjo and Oscar Gómez collaborated in the development of the vehicle automation. José Eugenio Naranjo developed computer applications for data acquisition and analysis. Finally, José J. Anaya helped in the preliminary and final tests.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fuerstenberg, K.; Baraud, P.; Caporaletti, G.; Citelli, S.; Eitan, Z.; Lages, U.; Lavergne, C. Development of a Pre-Crash sensorial system—The CHAMELEON Project. Proceedings of Joint VDI/VW Congress Vehicle Concepts for the 2nd Century of Automotive Technology, Wolfsburg, Germany, November 2001.

- European Enhanced Vehicle-Safety Committee (EEVC). WG 19, Primary and Secondary Safety Interaction; Final Report for European Enhanced Vehicle-Safety Committee: Madrid, Spain, 2004.

- Jiménez, F.; Naranjo, J.E.; Gómez, O. Autonomous Manoeuvrings for Collision Avoidance on Single Carriageway Roads. Sensors 2012, 12, 16498–16521.

- Fürstenberg, K.Ch.; Dietmayer, K.C.J.; Eisenlauer, S. Multilayer laserscanner for robust object tracking and classification in urban traffic scenes. Proceedings of 9th World Congress on Intelligent Transport Systems, Chicago, IL, USA, 14–17 October 2002.

- García, F.; Jiménez, F.; Naranjo, J.E.; Zato, J.G.; Aparicio, F.; Armingol, J.M.; de la Escalera, A. Environment perception based on lidar sensors for real road applications. Robotica 2012, 30, 185–193.

- García, F.; Jiménez, F.; Anaya, J.J.; Armingol, J.M.; Naranjo, J.E.; de la Escalera, A. Distributed pedestrian detection alerts based on data fusion with accurate localization. Sensors 2013, 13, 11687–11708.

- Tokoro, S.; Kuroda, K.; Nagao, T.; Kawasaki, T.; Yamamoto, T. Pre-crash sensor for pre-crash safety. Proceedings of the 18th International Conference on Enhanced Safety of Vehicles, Nagoya, Japan, 19–22 May 2003.

- Fölster, F.; Rohling, H. Data association and tracking for automotive radar networks. IEEE Trans. Intell. Transp. Syst. 2005, 6, 370–377.

- Polychronopoulos, A.; Tsogas, M.; Amditis, A.J.; Andreone, L. Sensor Fusion for Predicting Vehicles' Path for Collision Avoidance Systems. IEEE Trans. Intell. Transp. Syst. 2007, 8, 549–562.

- Broggi, A.; Caraffi, C.; Fedriga, R.I.; Grisleri, P. Obstacle detection with stereo vision for off-road vehicle navigation. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005.

- Caraffi, C.; Cattani, S.; Grisleri, P. Off-Road Path and Obstacle Detection Using Decision Networks and Stereo Vision. IEEE Trans. Intell. Transp. Syst. 2007, 8, 607–618.

- De la Escalera, A.; Armingol, J.M.; Pastor, J.M.; Rodriguez, F.J. Visual sign information extraction and identification by deformable models for intelligent vehicles. IEEE Trans. Intell. Transp. Syst. 2004, 5, 57–68.

- Llorca, D.F.; Sotelo, M.A.; Parra, I.; Ocana, M.; Bergasa, L.M. Error Analysis in a Stereo Vision-Based Pedestrian Detection Sensor for Collision Avoidance Applications. Sensors 2010, 10, 3741–3758.

- Widmann, G.; Daniels, M.; Hamilton, L.; Humm, L.; Riley, B.; Schiffmann, J.K.; Schnelkery, D.E.; Wishon, W.H. Comparison of lidar-based and radar-based adaptive cruise control systems. SAE Tech. Pap. 2000.

- Spies, M.; Spies, H. Automobile Lidar Sensorik: Stand, Trends und zukünftige Herausforderungen. Adv. Radio Sci. 2006, 4, 99–104.

- Fürstenberg, K.Ch.; Lages, U. Pedestrian detection and classification by laserscanners. Proceedings of 9th EAEC International Congress, Paris, France, 16–18 June 2003.

- Campbell, J.L.; Richard, C.M.; Brown, J.L.; McCallum, M. Crash Warning System Interfaces: Human Factors Insights and Lessons Learned; Technical Report DOT HS 810 697; NHTSA: Washington, DC, USA, 2007.

- Hall, D.L.; Llinas, J. Handbook of Multisensor Data Fusion; CRC Press, Taylor & Francis Group, Inc: London, England, 2001.

- Floudas, N.; Polychronopoulos, A.; Aycard, O.; Burlet, J.; Ahrholdt, M. High Level Sensor Data Fusion Approaches for Object Recognition in Road Environment. Proceedings of the 2007 IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007.

- Fuerstenberg, K.Ch.; Roessler, B. Results of the EC Project INTERSAFE. Proceedings of the 12th International Conference on Advanced Microsystems for Automotive Applications (AMAA 2008), Berlin, Germany, 11–12 March 2008.

- García, F. Data Fusion Architecture for Intelligent Vehicles. Ph.D. Thesis, Carlos III University, Madrid, Spain, 2012.

- Naranjo, J.E.; González, C.; García, R.; de Pedro, T. ACC+Stop&Go Maneuvers with Throttle and Brake Fuzzy Control. IEEE Trans. Intell. Transp. Syst. 2006, 7, 213–225.

- Eidehall, A.; Pohl, J.; Gustafsson, F.; Ekmark, J. Toward Autonomous Collision Avoidance by Steering. IEEE Trans. Intell. Transp. Syst. 2007, 8, 84–94.

- Jiménez, F.; Naranjo, J.E.; Gómez, O. Autonomous collision avoidance system based on an accurate knowledge of the vehicle surroundings. IET Intell. Transp. Syst. In Press.

- Broggi, A.; Cattani, S.; Porta, P.P.; Zani, P. A laser scanner-vision fusion system implemented on the TerraMax autonomous vehicle. Proceedings of the IEEE International Conference IROS, Beijing, China, 10–13 October 2006.

- Jurgen, R.K. Autonomous Vehicles for Safer Driving; SAE International: Warrendale, PA, USA, 2013.

- Endo, T.; Iwazaki, K.; Tanaka, Y. Development of reverse parking assist with automatic steering. Proceedings of 10th World Congress and Exhibition on Intelligent Transport Systems and Services, Madrid, Spain, 16–20 November 2003.

- Jung, H.G.; Cho, Y.H.; Yoon, P.J.; Kim, J. Scanning Laser Radar-Based Target Position Designation for Parking Aid System. IEEE Trans. Intell. Transp. Syst. 2008, 9, 406–424.

- Abdel-Hafez, M.F.; Nabulsi, A.A.; Jafari, A.H.; Zaabi, F.A.; Sleiman, M.; AbuHatab, A. A sequential approach for fault detection and identification of vehicles' ultrasonic parking sensors. Proceedings of 4th International Conference on Modeling, Simulation and Applied Optimization (ICMSAO), Kuala Lumpur, Malaysia, 19–21 April 2011.

- Park, W.-J.; Kim, B.-S.; Seo, D.-E.; Kim, D.-S.; Lee, K.-H. Parking space detection using ultrasonic sensor in parking assistance system. Proceedings of 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008.

- Mathur, S.; Jin, T.; Kasturirangan, N.; Chandrasekaran, J.; Xue, W.; Gruteser, M.; Trappe, W. ParkNet: Drive-by sensing of road-side parking statistics. Proceedings of the 8th International Conference on Mobile Systems, Applications and Services (MobiSys 2010), San Francisco, CA, USA, 15–18 June 2010.

- Köhler, P.; Connette, C.; Verl, A. Vehicle tracking using ultrasonic sensors & joined particle weighting. Proceedings of 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013.

- Connette, C.; Fischer, J.; Maidel, B.; Mirus, F.; Nilsson, S.; Pfeiffer, K.; Verl, A.; Durbec, A.; Ewert, B.; Haar, T.; et al. Rapid detection of fast objects in highly dynamic outdoor environments using cost-efficient sensors. Proceedings of 7th German Conference on Robotics, ROBOTIK 2012, Munich, Germany, 21–22 May 2012.

- Song, K.-T.; Chen, C.-H.; Huang, C.-H.C. Design and experimental study of an ultrasonic sensor system for lateral collision avoidance at low speeds. Proceedings of IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004.

- Qidwai, U. Fuzzy Blind-Spot scanner for automobiles. Proceedings of IEEE Symposium on Industrial Electronics & Applications, ISIEA 2009, Kuala Lumpur, Malaysia, 4–6 October 2009.

- Alonso, L.; Milanés, V.; Torre-Ferrero, C.; Godoy, J.; Oris, J.P.; de Pedro, T. Ultrasonic sensors in urban traffic driving-aid systems. Sensors 2011, 11, 661–673.

- Kim, H.; Lee, J.-H.; Kim, S.-W.; Ko, J.-I.; Cho, D. Ultrasonic vehicle detector for side-fire implementation and extensive results including harsh conditions. IEEE Trans. Intell. Transp. Syst. 2001, 2, 127–134.

- Carullo, A.; Parvis, M. An ultrasonic sensor for distance measurement in automotive applications. IEEE Sens. J. 2001, 1, 143–147.

- Carullo, A.; Ferraris, F.; Parvis, M. A low cost contactless distance meter for automotive applications. Proceedings of IEEE Instrumentation and Measurement Technology Conference, IMTC-96, Brussels, Belgium, 4–6 June 1996.

- Llorens, J.; Gil, E.; Llop, J.; Escolà, A. Ultrasonic and LIDAR Sensors for Electronic Canopy Characterization in Vineyards: Advances to Improve Pesticide Application Methods. Sensors 2011, 11, 2177–2194.

- Fleming, W.J. New Automotive Sensors—A Review. IEEE Sens. J. 2008, 8, 1900–1921.

- Jiménez, F.; Naranjo, J.E. Improving the obstacle detection and identification algorithms of a laserscanner-based collision avoidance system. Transp. Res. Part C Emerg. Technol. 2011, 19, 658–672.

- Jiménez, F.; Naranjo, J.E.; García, F.; Zato, J.G.; Armingol, J.M.; de la Escalera, A.; Aparicio, F. Limitations of positioning systems for developing digital maps and locating vehicles according to the specifications of future driver assistance systems. IET Intell. Transp. Syst. 2011, 5, 60–69.

- Naranjo, J.E.; Jiménez, F.; Gómez, O.; Zato, J.G. Low level control layer definition for autonomous vehicles based on fuzzy logic. Intell. Autom. Soft Comput. 2012, 18, 333–348.

- Agarwal, V.; Murali, N.V.; Chandramouli, C. A Cost-Effective Ultrasonic Sensor-Based Driver-Assistance System for Congested Traffic Conditions. IEEE Trans. Intell. Transp. Syst. 2009, 10, 486–498.

- Massa, D.P. Choosing an Ultrasonic Sensor for Proximity or Distance Measurement Part 1: Acoustic Considerations. Available online: http://www.sensorsmag.com/sensors/acoustic-ultrasound/choosing-ultrasonic-sensor-proximity-or-distance-measurement-825 (accessed on 28 November 2014).

- Soma, H.; Hiramatsu, K. Driving simulator experiment on drivers' behavior and effectiveness of danger warning against emergency braking of leading vehicle. Proceedings of 16th International Technical Conference on the Enhanced Safety of Vehicles, Windsor, ON, Canada, 31 May–4 June 1998.

- Roenitz, E.; Happer, A.; Johal, R.; Overgaard, R. Characteristic vehicular deceleration for known hazards. SAE Tech. Pap. 1999.

- Wilson, B.H. How soon to brake and how hard to brake: Unified analysis of the envelope of opportunity for rear-end collision warnings. Proceedings of 17th International Technical Conference on the Enhanced Safety of Vehicles, Amsterdam, The Netherlands, 4–7 June 2001.

- Sens, M.J.; Cheny, P.H.; Wiechel, J.F.; Guenther, D.A. Perception/reaction time values for accident reconstruction. SAE Tech. Pap. 1989.

- Olson, P.L. Driver perception response time. SAE Tech. Pap. 1989.

- Pomerleau, D.; Jochem, T.; Thorpe, C.; Batavia, P.; Pape, D.; Hadden, J.; McMilan, N.; Brown, N.; Everson, J. Run-off-Road Collision Avoidance Using IVHS Countermeasures; Final Report; National Highway Traffic Safety Administration (NHTSA): Washington, DC, USA, 1999.

| Model | Transducers | Frequency (kHz) | Range | Output |
|---|---|---|---|---|
| LV-EZ3 | Single | 42 | 6.45 m | Analog |
| SRF02 | Single | 40 | 15 cm–6 m | I2C |
| SRF08 | Dual | 40 | 6 m | I2C |
| SRF10 | Dual | 40 | 6 m | I2C |
| SRF235 | Single | 235 | 10 cm–1.2 m | I2C |
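The frequency and range columns above can be related through two standard acoustic relations. The sketch below assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C; the true value varies with temperature) and uses illustrative function names that are not from the paper. The round-trip echo time t = 2R/c gives a physical upper bound on how often a single sensor can be re-fired if it must wait for an echo from its maximum range, and λ = c/f gives the acoustic wavelength. Air absorption of ultrasound rises steeply with frequency, which is consistent with the much shorter range listed for the 235 kHz SRF235.

```python
"""Time-of-flight and wavelength figures for the ultrasonic sensors listed above.

Assumption: speed of sound c = 343 m/s (dry air, about 20 degrees C). The real
value changes with air temperature, so these figures are approximations.
"""

SPEED_OF_SOUND_M_S = 343.0  # assumed value, dry air at about 20 degrees C

# (model, carrier frequency in Hz, maximum range in m), taken from the table
SENSORS = [
    ("LV-EZ3", 42e3, 6.45),
    ("SRF02", 40e3, 6.0),
    ("SRF08", 40e3, 6.0),
    ("SRF10", 40e3, 6.0),
    ("SRF235", 235e3, 1.2),
]


def round_trip_time_s(range_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Time for a pulse to reach a target at range_m and return: t = 2R / c."""
    return 2.0 * range_m / c


def range_from_echo_m(echo_time_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Inverse relation used by the sensor itself: R = c * t / 2."""
    return c * echo_time_s / 2.0


def wavelength_m(frequency_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Acoustic wavelength: lambda = c / f."""
    return c / frequency_hz


if __name__ == "__main__":
    for model, freq, max_range in SENSORS:
        t_ms = 1e3 * round_trip_time_s(max_range)
        lam_mm = 1e3 * wavelength_m(freq)
        # Physical upper bound on the firing rate of one sensor that waits for
        # the echo from its maximum range before emitting the next pulse.
        max_rate_hz = 1.0 / round_trip_time_s(max_range)
        print(f"{model:7s} echo at max range {t_ms:5.1f} ms, "
              f"<= {max_rate_hz:4.0f} Hz, wavelength {lam_mm:4.1f} mm")
```

Under this assumption, an echo from 6 m returns after about 35 ms, so a single 6 m sensor cannot be re-fired much faster than roughly 29 Hz without risking confusion between successive pulses; the actual ranging cycle of each model is set by its own electronics and may be slower.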

| Target Speed (km/h) | Real Speed, Internal Communication Bus (km/h) | Calculated Speed, Laser Scanner (km/h) | Calculated Speed, Ultrasonic Sensors (km/h) | Error, Ultrasonic Sensors vs. Laser Scanner (%) |
|---|---|---|---|---|
| 10 | 8.4 ± 0.1 | 8.4 | 8.3 | −1.2 |
| 15 | 14.9 ± 0.2 | 14.7 | 14.8 | 0.7 |
| 20 | 20.0 ± 0.2 | 19.8 | 20.1 | 1.5 |
| 25 | 25.4 ± 0.3 | 25.0 | 25.2 | 0.8 |
| 30 | 31.5 ± 0.2 | 30.9 | 31.3 | 1.3 |
| 35 | 35.9 ± 0.3 | 35.4 | 35.8 | 1.1 |
| 40 | 44.3 ± 0.2 | 43.9 | 44.8 | 2.1 |
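The last column of the speed table can be checked with a few lines of arithmetic. The sketch below assumes the error is the signed relative error 100 · (v_us − v_ref) / v_ref, rounded to one decimal; the function names are illustrative and not taken from the paper. It compares the ultrasonic estimate against each candidate reference column. With this definition, the reported values are reproduced in every row when the laser-scanner estimate is the reference, but only in the 10 km/h row when the bus speed is the reference.

```python
"""Reproduce the percentage-error column of the speed table above.

Assumed definition: error = 100 * (v_us - v_ref) / v_ref, rounded to one
decimal. Two candidate references are tested: the "real" speed read from the
internal communication bus and the laser-scanner estimate.
"""

# (target, bus speed, laser scanner, ultrasonic sensors, reported error %)
ROWS = [
    (10, 8.4, 8.4, 8.3, -1.2),
    (15, 14.9, 14.7, 14.8, 0.7),
    (20, 20.0, 19.8, 20.1, 1.5),
    (25, 25.4, 25.0, 25.2, 0.8),
    (30, 31.5, 30.9, 31.3, 1.3),
    (35, 35.9, 35.4, 35.8, 1.1),
    (40, 44.3, 43.9, 44.8, 2.1),
]

BUS_COLUMN = 1
LASER_COLUMN = 2
ULTRASONIC_COLUMN = 3
REPORTED_COLUMN = 4


def relative_error_pct(measured: float, reference: float) -> float:
    """Signed relative error in percent, rounded to one decimal place."""
    return round(100.0 * (measured - reference) / reference, 1)


def matches_reported(reference_column: int) -> list[bool]:
    """For each row, check whether the error of the ultrasonic estimate against
    the chosen reference column equals the value reported in the table."""
    return [
        relative_error_pct(row[ULTRASONIC_COLUMN], row[reference_column])
        == row[REPORTED_COLUMN]
        for row in ROWS
    ]


if __name__ == "__main__":
    print("vs. bus speed:     ", matches_reported(BUS_COLUMN))
    print("vs. laser scanner: ", matches_reported(LASER_COLUMN))
```

Rounding to one decimal matches the precision used in the table, so an exact comparison of the rounded values is sufficient here.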

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Jiménez, F.; Naranjo, J.E.; Gómez, O.; Anaya, J.J. Vehicle Tracking for an Evasive Manoeuvres Assistant Using Low-Cost Ultrasonic Sensors. Sensors 2014, 14, 22689-22705. https://doi.org/10.3390/s141222689
