Statistical Process Control of a Kalman Filter Model

Abstract

: For the evaluation of measurement data, different functional and stochastic models can be used. In the case of time series, a Kalman filtering (KF) algorithm can be implemented. In this case, a very well-known stochastic model, which includes statistical tests in the domain of measurements and in the system state domain, is used. Because the output results depend strongly on input model parameters and the normal distribution of residuals is not always fulfilled, it is very important to perform all possible tests on output results. In this contribution, we give a detailed description of the evaluation of the Kalman filter model. We describe indicators of inner confidence, such as controllability and observability, the determinant of state transition matrix and observing the properties of the a posteriori system state covariance matrix and the properties of the Kalman gain matrix. The statistical tests include the convergence of standard deviations of the system state components and normal distribution beside standard tests. Especially, computing controllability and observability matrices and controlling the normal distribution of residuals are not the standard procedures in the implementation of KF. Practical implementation is done on geodetic kinematic observations.1. Introduction

A key part of the process of modeling any processes is the evaluation of whether the mathematical model is accurately chosen. This is often quite difficult to assess. Models are influenced by various sources of uncertainty—such as model noise, imprecision, incompleteness, ambiguity, vagueness and, maybe most important, the lack of complete knowledge and the influence of mathematical over-simplification. Therefore, deterministic mathematical modeling of dynamic systems is usually imperfect, and a statistical approach is necessary to estimate unknown parameters and to evaluate their accuracies. In the modeling of kinematic processes, we must deal with time series of observations and standard techniques of the noise reduction of time series, including filtering and smoothing. By applying least squares estimation in the sequential mode, the removal of noise from a time series has been optimized using the well-known algorithm of Kalman filtering (KF). The algorithm is described in several books [1-6], and implemented in many non-engineering and, especially, engineering tasks [7,8], as well as many others. KF overcomes the problem of uniformly-defined unknown parameters, ensures computational integrity when dealing with redundant observations, minimizes the influence of measurement errors on system state estimation and allows one to compute the accuracy of their estimate.

After formalizing a functional model as a mathematical relationship between the measurements and unknown parameters, a reliable stochastic model is required for the propagation of the observation of random errors into the estimated parameters. In addition, systematic effects or inconsistencies in the data, the removal or appropriate down-weighting of outliers also need to be considered. In the case of KF modeling, very well-known hypothesis testing and identification of significant inconsistencies is performed in the domain of measurements and in the system state domain.

In our contribution, we describe additional, theoretically known, procedures and parameters, which can be used for the evaluation of the functional model and are not used as standards in the evaluation of KF models. We describe:

- (1)

indicators of inner confidence:

- ‐

controllability and observability;

- ‐

determinant of state transition matrix;

- ‐

properties of the a posteriori system state covariance matrix;

- ‐

properties of the Kalman gain matrix;

- (2)

statistical tests:

- ‐

standard deviations of the system state components;

- ‐

test statistics in the domain of the measurements;

- ‐

test statistics for the system state domain.

As a practical example, we use a reference frame as an additional estimator parameter of the theoretical background.

The paper is organized as follows. In Section 2, we describe the theoretical background for a statistical analysis of kinematic and dynamic processes. Indicators of inner confidence and the parameters for robust statistical tests are given in detail. In Section 3, we illustrate an example of the practical implementation of this theory. In Section 4, the results of the practical example are evaluated. Results, the contribution of the work and suggestions for further work are discussed in Section 5, which concludes the paper.

Some of the material covered in this contribution are closely related to the content in [9,10], where more detailed explanations of the practical example presented here, especially the development of the model and measurement system, can be found. The main intent of this contribution is to describe in detail the parameters that enable evaluation of a Kalman filter model in real-time and when no reference frame is available and no additional measurements are performed.

2. Statistical Process Control of Kinematic and Dynamic Processes

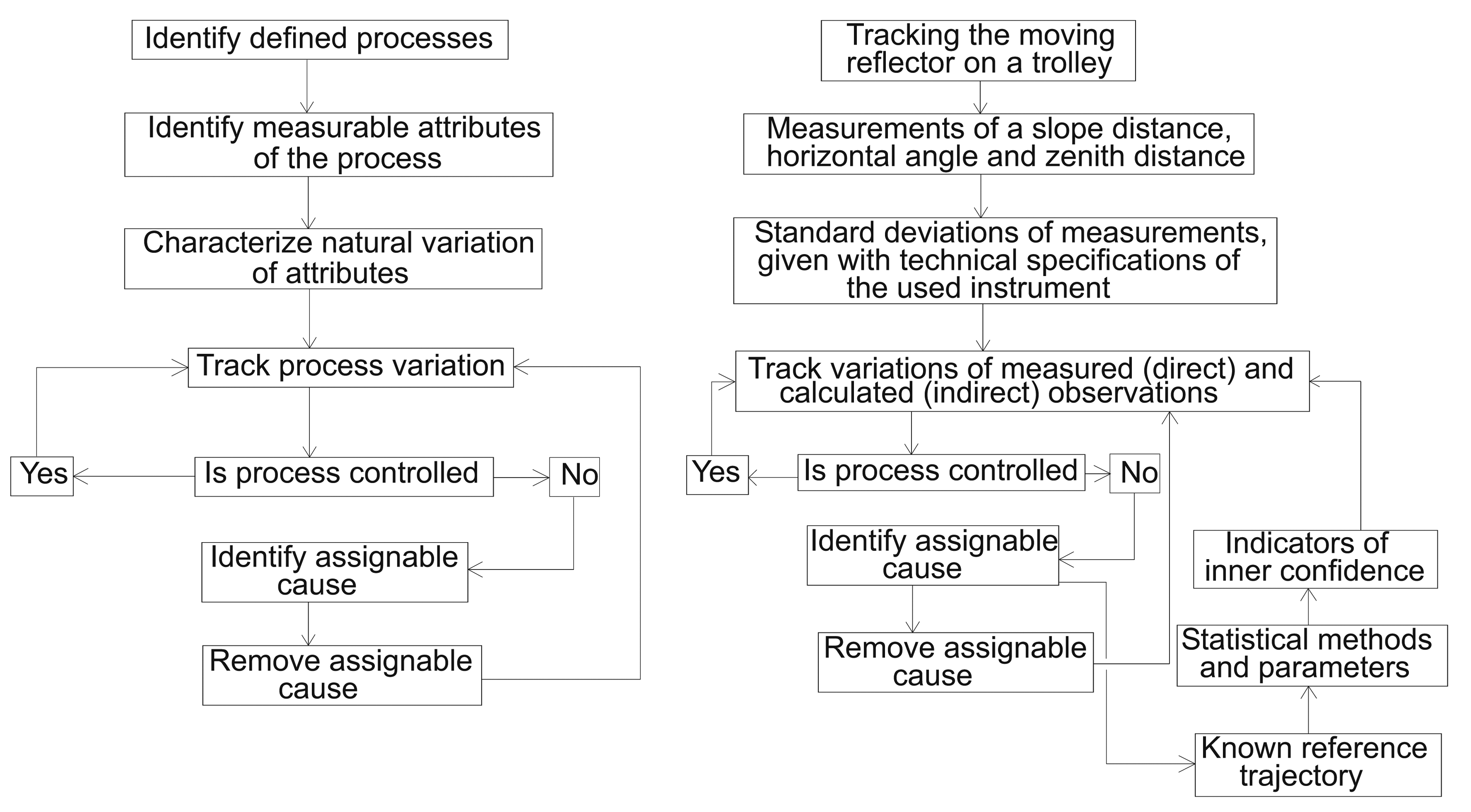

Evaluation models are always approximations that require robust evaluation of derived results to assess accuracy. To minimize model uncertainties and measurement errors, redundant measurements are usually made to check the system. On the other hand, statistical process control, which is based on the outputs of the process and their measurable attributes, is included into the mathematical model and helps the engineer to track and measure the variability of the process to be controlled [11].

In the case of KF, we estimate the state of a dynamic system, which evolves through time and can be modeled using a stochastic equation. KF modeling provides indicators that enable the evaluation of estimated outputs. Because these values are computed through the KF process, they can be only used as indicators of inner confidence. Consistency checks of the model must then be performed using additional statistical methods and parameters and must be addressed as more global tests.

2.1. Indicators of Inner Confidence

2.1.1. Controllability and Observability

“The concepts of controllability and observability are fundamental to modern control theory. These concepts define how well we can control a system, i.e., derive the state to a desired value, and how well we can observe a system, i.e., determine the initial conditions after measuring the estimate outputs” [6] (p. 38).

2.1.2. Controllability

“A discrete-time (deterministic) system is completely controllable if, given an arbitrary destination point in the state space, there is an input sequence that will bring the system from any initial state to this point by a finite number of steps” [1] (p. 30). For the n-state discrete linear time-invariant (for a time-invariant system, using fixed time intervals, the matrices F, G and H are independent of the discrete-time index [4]) system, the controllability condition is that the pair F, G is controllable; that is, the controllability matrix [1]:

2.1.3. Observability

A deterministic system is completely observable if its initial state can be fully and uniquely recovered from a finite number of observations of its output estimates with the given knowledge of associated inputs. For the n-state discrete linear time-invariant system, the observability condition is that the pair F, H is observable; that is, the observability matrix [1]:

“Computation of the observability matrix is subject to model truncation errors and round-off errors, which could make the difference between a singular and non-singular result. Even if the computed observability matrix is close to being singular, this is cause for concern. One should consider a system as poorly observable if its observability matrix is close to being singular. For that purpose, one can use the singular-value decomposition or the condition number of the observability matrix to define a more quantitative measure of unobservability” [4] (p. 44). The condition number of a matrix O is denned as the ratio of the largest singular value smax to the smallest smin of matrix O:

“The reciprocal of its condition number measures how close the system is to being unobservable” [4] (p. 44).

2.1.4. Determinant of State Transition Matrix

In general, the determinant provides important information about the design matrix of a linear system. The system has a unique solution only when the determinant is non-zero; when the determinant is zero, there are either no solutions or many solutions. In the case of KF, we asses and monitor the determinant value of the state transition matrix Fk, which defines the transition from the previous state to the current system state.

2.1.5. Properties of the A Posteriori System State Covariance Matrix

Properties of the a posteriori system state covariance matrix are generally used to obtain preliminary characteristics of the reliability of a KF model. The importance of the convergence of the trace of system state covariance matrix lies in its definition: is the sum of the diagonal elements of the matrix , which represent variances (squares of standard deviations) of the system-state components. If the sum of diagonal elements converges towards some value, then we reason that single elements also converge towards some solution [10].

Eigenvalues of the A Posteriori System State Covariance Matrix

The solution of covariance matrix Pk should theoretically always be a symmetric-positive semi-definite (non-negative definite) matrix. An important caveat to keep in mind is that the two diagnostic properties of a covariance matrix (symmetry and positive definiteness) can be lost due to round-off errors in the course of calculating its propagation equations [1].

Condition Number of the A Posteriori System State Covariance Matrix

Mathematically, matrix is non-singular. However, numerical problems sometimes make matrix indefinite or non-symmetric. Numerical problem can, for example, arise in cases in which some elements of the state-vector x are estimated to a much greater precision than other elements of x. This could arise, e.g., because of discrepancies in the units of the state vector [6]. The discrepancy can be identified using different eigenvalues of the matrix , specially those reflecting the condition number of matrix , defined in [1] as the common logarithm of the ratio of its largest to smallest eigenvalue:

The value of the condition number depends strongly on the value of the process noise intensity scalar σω. The condition number can therefore reflect the ratio between process and measurement noise.

2.1.6. Properties of the Kalman Gain Matrix

The inclusion of measurements into system state estimates are determined by Kalman gain K, which is influenced by the uncertainty in a priori state estimates and uncertainty in measurement estimates. Intuitively, measurements with high precision should result in precise state estimates. Input measurements define the system state, directly or indirectly, and thus, if they have high precision, the KF can rely on them as good indicators of the system state. The Kalman gain matrix should reflect this.

Intuitive Interpretation of the Gain

Taking a simplistic scalar view of the optimal Kalman gain matrix, , it is [1]:

- (1)

proportional to the state prediction variance, ,

- (2)

inversely proportional to the innovation variance, .

According to Bar-Shalom et al., “the gain is:

- (1)

large if the state prediction is inaccurate (has a large variance) and the measurement is accurate (has a relatively small variance),

- (2)

small if the state prediction is accurate (has small variance) and the measurement is inaccurate (has a relatively large variance)” [1] (p. 207).

A large gain indicates a rapid response of the input measurement in updating the state, while a small gain yields a slower response to that measurement [1].

2.2. Statistical Tests

Equations for the estimation of measurement prediction covariance, innovation covariance, Kalman gain, innovation or measurement residual, updated state estimate and its updated covariance all yield filter-calculated covariances, which are exact if all of the modeling assumptions used in the filter derivation are all perfect. In practice, this is not the case, and the validity of the accuracy of these filter estimates has to be tested [1].

2.2.1. Standard Deviations of the System State Components

Output estimates are dependent on KF tuning; tuning “ration” computational attention between processes, measurement noise and a priori information. Through filter tuning, the performance can be evaluated by assessing its associated a posteriori covariance matrix . This is demonstrated by the fact that any estimated system state tends to be bounded between one standard or two standard deviations (66% or 95% confidence, respectively). These standard deviation bounds are defined by the square roots of the corresponding diagonal elements in the computed matrix (standard deviation ). If an estimation error is exceeded by the σ- or 2σ-bound, then the performance of the KF under the parameter setting may have been set to unacceptable values.

2.2.2. Test Statistics in the Domain of the Measurements

A global test of KF estimation could in theory be done by comparing predicted and true values of measurements. The measurement residual, called innovation, dk, can be estimated and computed as a difference between the actual measurement, zk, and the best available prediction based on the system model and previous measurement, [12]:

The null-hypothesis, which supposes that sample observations result purely from chance, is defined as:

The test statistic follows χ2-distribution with the probability relationship:

2.2.3. Test Statistics in the System State Domain

In the system state domain, we test to see whether the KF-filtered system state is comparable to any previous knowledge we may have about the system. To address this comparison, the difference between the filtered and predicted value of the system state should be analyzed. The system state correction or residual vx,k can be written as:

Matrix Kk is a Kalman gain matrix that weights the measurement residuals. The system state residual represents the difference between the system state estimate before and after a measurement update. If this residual has a large value, it indicates that we are not predicting the future system state very well, because when new measurements are applied, there is a large jump in the state estimate. The corresponding covariance matrix, PVx,k, is given by [12]:

The system state corrections have a normal distribution vx,k ∼ N(0, PVx,k), and the appropriate test statistics for the system state corrections is [12]:

3. Implementation and Numerical Analysis

The theoretical backgrounds given in Section 2 are demonstrated using a practical example.

3.1. Simulation of a Kinematic Process

The kinematic observations were performed on a known reference trajectory at the Geodetic Laboratory of the Chair of Geodesy at the Technical University of Munich, Germany; Figure 1. The construction accuracy of the trajectory is 1 mm in both horizontal and vertical position. The “kinematic process” was performed as a tracking problem, with the electronic tacheometer, TCRA1201 Leica Geosystems, used to perform automatic measurements of a moving Leica Geosystems 360°-reflector, installed on a moving trolley. A more detailed description of experimental set-up can be found in [9,10].

3.1.1. Kalman Filter Model for the Simulated Process

Because of the high frequency of measurements we obtained (tsample = Δt = 0.125 s), the movement of the reflector can be described as a movement with approximately constant acceleration a during each sampling period of a length Δt, which is equal to the time-stamp between any two measurement epochs. A discrete third-order kinematic model (discrete Wiener process acceleration model, DWPAM) [1], which is a derivation of KF, was used to process all of the captured measurement triples. The model is three-dimensional per coordinate. In our case, the observed and recorded measurements were horizontal angles hz, zenith distances zr and slope distances d, and the variables of interest are the position, velocity and acceleration components.

We rewrite main elements of a functional model. More details are given in [10]. The KF process equation, where no deterministic component is present, has the following form [10]:

The process noise covariance matrix for all three dimensions Qk is:

In the KF measurement equation, the measurement vector zk is composed of the reflector position components for each time step tk, x, y and z, which were computed out of the KF loop, from direct measurements of horizontal direction hzk, zenith distance zrk and slope distance dk, according to a defined right-handed coordinate system for each time step tk and given coordinates of the instrument stand point. The observation matrix Hk is [10]:

The law of propagation of variances and covariances should be used to calculate the covariance matrix of KF observations zk, where the covariance matrix of direct measurements hz, d and zr is defined with standard deviations, σhz = 1″, σd = 5 mm, σzr = 1″, given with the instrument technical specifications, and is constant through the process.

A more detailed mathematical description of the KF model for numerical experiment is given in [10].

3.2. Evaluation of the Developed Mathematical Model

In this subsection, we present the practical implementation of the theory behind controlling the kinematic process and evaluating output estimate values of the KF model. The performance of our model was evaluated using elements of inner confidence, statistical tests and an independent reference frame (Figure 2). We next define the errors present and determine control limits for the system state components of interest.

3.3. Testing KF DWPAM with Indicators of Inner Confidence

3.3.1. Controllability of KF DWPAM

The controllability matrix C ∈

3.3.2. Observability of KF DWPAM

The observability matrix O ∈

The condition number was computed according to Equation (3). This value measures the sensitivity of a solution of a linear system of equations to errors in observational data. Values for the conditional number near one indicate a well-conditioned matrix. For our KF model, the condition number of the observability matrix is constant through the process and its value is:

The reciprocal of above condition number is:

From the above two numerical values, we conclude that our model is observable.

3.3.3. Determinant of the State Transition Matrix Fk

The determinant of the state transition matrix of KF DWPAM is det(Fk) = 1, which means that the system of linear equations has a unique solution.

3.3.4. Properties of the A Posteriori System State Covariance Matrix

Trace of Matrix

The condition of the convergence of the a posteriori system state covariance matrix is satisfied for our KF DWPAM (see [10], Figure 2).

Eigenvalues of Matrix

The symmetry and positive definiteness of the covariance matrix Pk for our KF DWPAM were controlled using MATLAB operations.

Condition Number of Matrix

If we look at the standard deviations of the system state components, computed through the process (see [10], Figures 3, 4 and 5), we notice different ranks for the precision of position, velocity and acceleration. This is due to the different units on each parameter and because position components are better observed than velocity and acceleration.

The condition number of matrix for σω = 0.010 is shown in Figure 3a. A large condition number indicates near-singularity. For our KF DWPAM, we determine that the condition number is acceptable. Because the value of the condition number depends strongly on the value of the process noise intensity scalar σω, the value was performed for different values of σω, σω = 0.001 : 0.040 : 0.51; Figure 3b. The lower graph represents the condition number for the lowest σω, i.e., σω = 0.001.

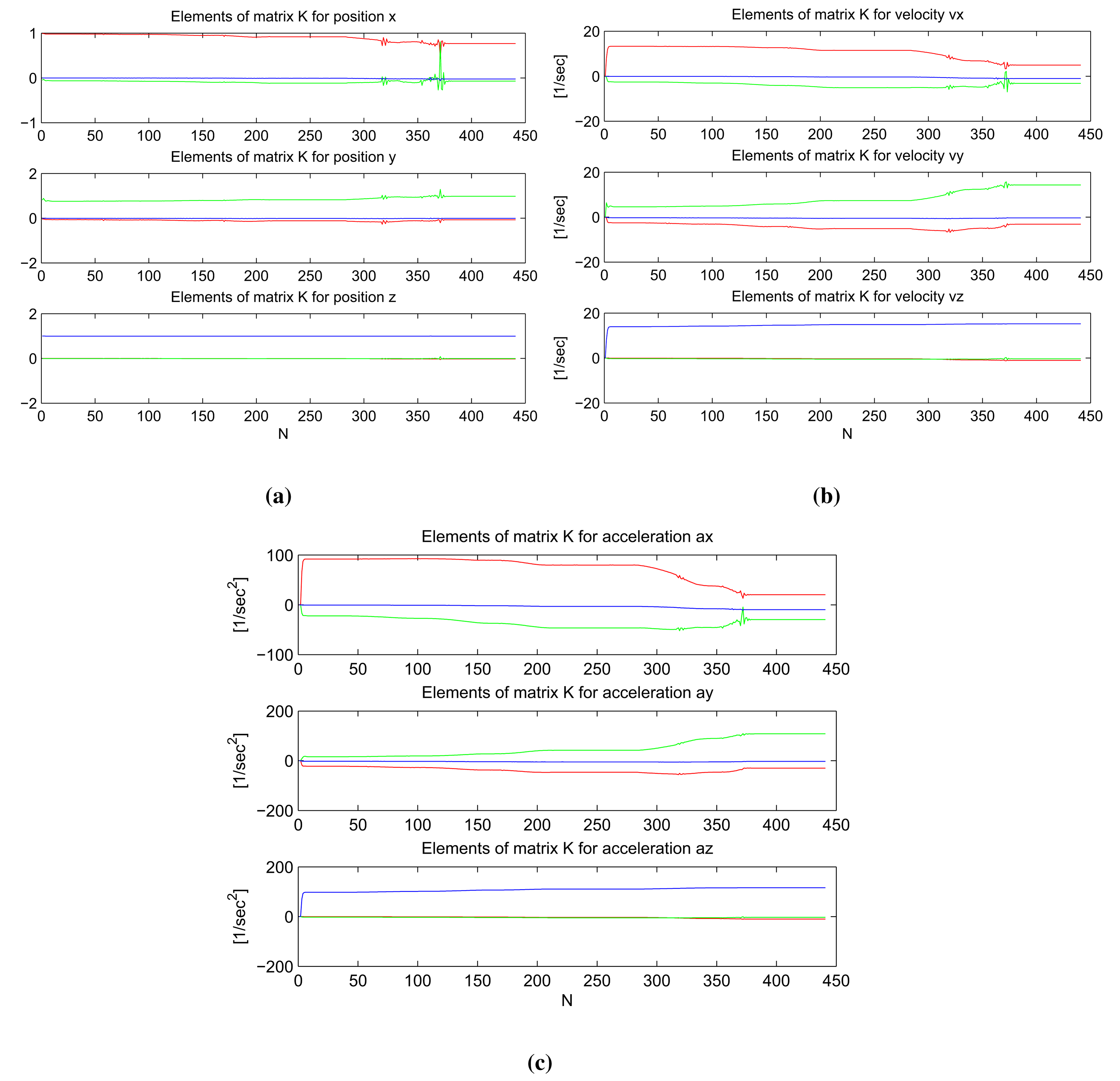

3.3.5. Properties of the Kalman Gain Matrix K

In Figure 4, graphs of the elements of the weighting matrix K are plotted for the position, velocity and acceleration components in all three dimensions. In each graph, the red, green and blue lines represent the element of matrix K for the x, y and z-component of measurement correction. From these graphs, we conclude:

- (1)

The corresponding weight of KF measurement corrections, , is always the biggest for each component of the system state in the sense of coordinate direction. For example, the correction for position in x direction is:

where the element K11, which incorporates x component of KF measurement correction, is the biggest; Figure 4a; elements of K for position x, red line.- (2)

If we look at the graphs for elements of matrix K, which involves incorporating position corrections (Figure 4a), we see that for each position component, the weight of corresponding KF measurement correction in the same coordinate direction is about one, and for the other two KF measurement corrections in perpendicular directions, they are about zero. The same trends exist for velocity (Figure 4b) and acceleration (Figure 4c).

- (3)

When we compare weights for position—x, y, z—velocity—υx, υy, υz—and acceleration—ax, ay, az—we see that weights for position are of rank 100, for velocity of rank 101 and for acceleration of rank 102. The reason for this is probably related to the different units of each of these system state components.

In our model, the values of the elements in matrix K are dependent on the covariance matrix of KF measurements, Rk (see [10], Equation (13)) and cannot be computed off-line. The elements of weighting matrix K depend very heavily on the value of process noise intensity scalar σω, specifically the elements that define velocity and acceleration corrections. We performed several tests with different values of σω to confirm numerically the mathematical validity of our KF model algorithm; i.e., when the process noise is small, there is a high confidence in model and predicted values; therefore, the measurement corrections are weighted less heavily than in the case of big process noise.

3.4. Statistical Tests of KFDWPAM

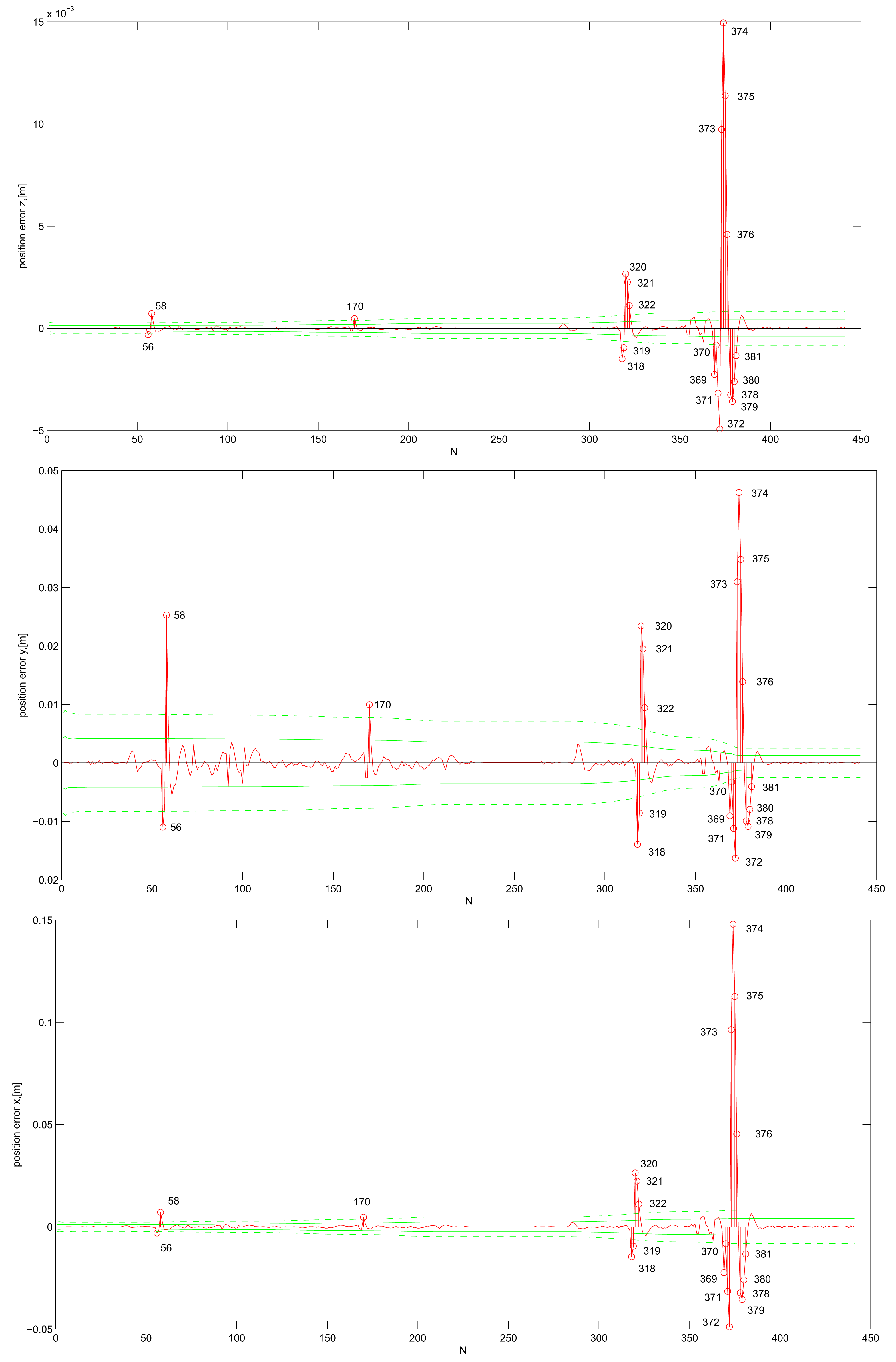

Next, statistical tests in the domain of the measurements and system state were performed to explore possible reasons for the discrepancies we observed between estimation error and σ-bounds.

3.4.1. Standard Deviations of the System State Components in Vector

The main intent of our simulation and KF model was to estimate the position of the moving reflector in three dimensions. For all three estimated position components, we observed a bigger dispersion of predicted and better fitting of filtered values when both are compared to the true/reference positions. The numerical results are given in Table 1.

Ideally, variances of the system state estimates should always include the true state within their errors. We visually present this test by plotting the standard deviations of the system state estimates, that is the square roots of the variances, with the estimation error plots. The standard deviations, computed with the KF, indicate how certain it is that the true state lies within a certain distance from the estimated state. The KF is about 66% (95%) certain that the true state element lies within the 1σ (2σ) confidence interval; i.e., within one (two) deviation from the estimated element. If we plot the deviations into the error plot, ideally the error stays under the deviation lines. For KF DWPAM and σω = 0.1, the 1σ- and 2σ-bounds are drawn for each position component separately; Figure 5. Numerical values are given in Table 2. The number of steps, where the position error exceeds the 2σ-bound, is also plotted. In all three figures, the position error is represented with a red solid line, σ-bound with a green solid line and 2 · σ-bound with a dashed solid line. All plots represent cases where no gross errors (large outliers) were present and for σω = 0.1.

Table 2 shows the reduction of the condition number (at the same time, there is better convergence of trace ) with bigger confidence into the model (smaller σω value). However, at the same time, there is a big reduction of position error, where less true states lie inside the 1σ- or 2σ-bound of estimated positions, which means a high discrepancy between the estimated and true position system state components. For σω = 0.1, we deduce that position state estimates are consistent with their computed standard deviations and σ-bounds. Position errors for all three position components fall about 91% and 95.5% within 1σ and 2σ-uncertainty regions. For σω = 0.01, position state estimates are inconsistent with their computed standard deviations. Only approximately 46% and 61% of estimated positions lie within the 1σ- and 2σ-bound, respectively. We also observe that there is an increasing trend in the deviation in the different position state estimates variables, but still bounded.

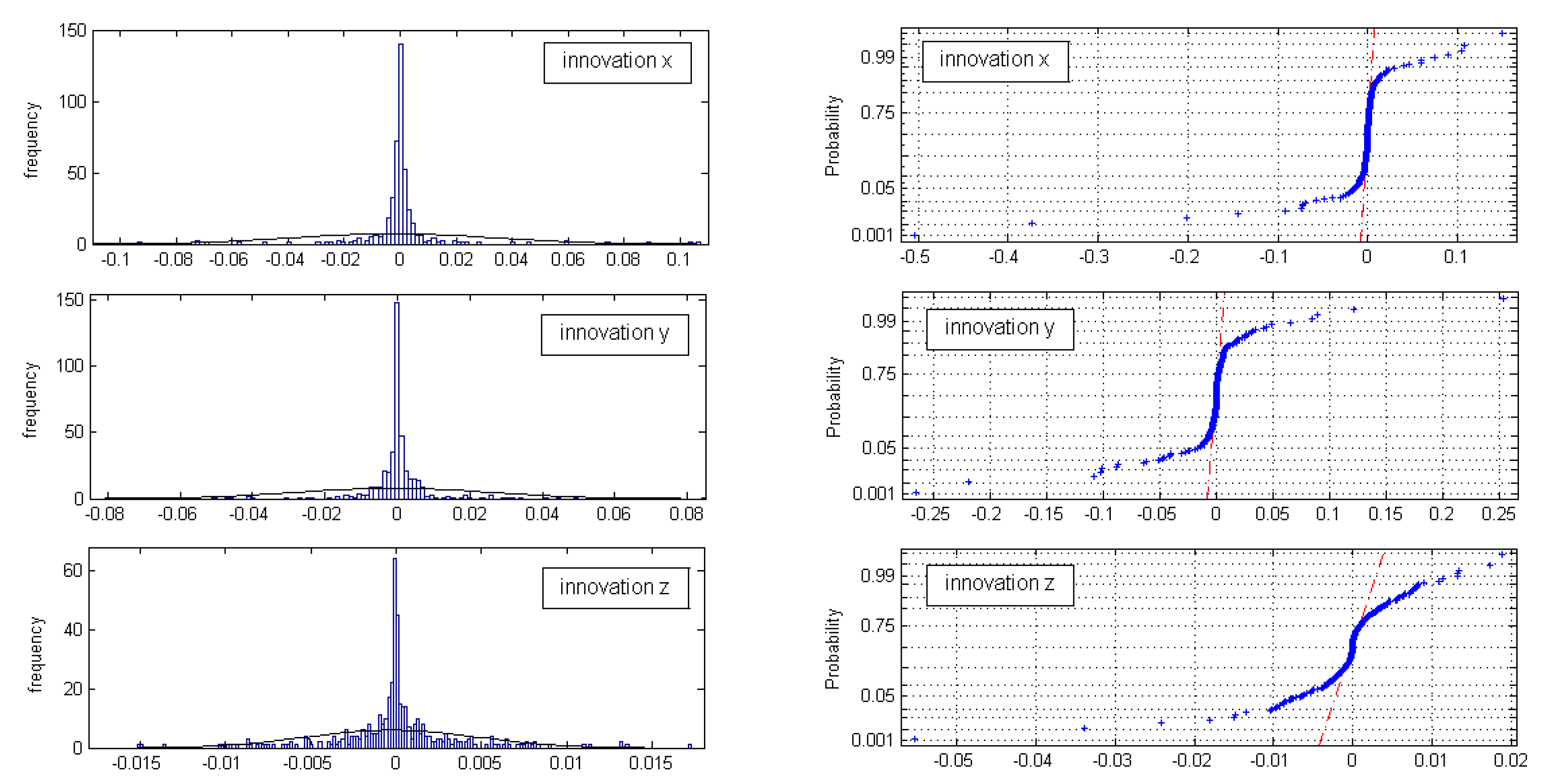

3.4.2. Consistency of the Model in the Domain of the Measurements

We next test the KF measurement innovations together with their covariances using the normalized innovation squared (NIS), Equation (7), and the probability relationship given in Equation (10). For KF DWPAM, the value of degrees of freedom is equal r = 3, and a significance level value of α = 0.05 was used; i.e., . At the 95% confidence level, for a measurement vector that includes three variables, the confidence region of the upper one-sided test is . The normalized innovation squared for KF DWPAM is plotted in Figure 6, left.

The innovations represent measurement residuals, and for this analysis it is assumed that they are normal (Gaussian) distributed variables with zero mean and covariance, given in Dk:

The normal probability plots for all three innovation sets (x,y,z innovation) do not show linear patterns on the whole data; Figure 7. In general, the data are plotted against a theoretical normal distribution in such a way that the points should form an approximate straight line. Departures from this straight line indicate departures from normality. Our data show a reasonably linear pattern in the center of the data, but the tails show departures from the fitted line. In our case, we must deal with short tail normal probability distributed data [13]. In the middle of these plots, the data show a weak S-like pattern. The first few and the last few data on each plot show marked departures from the reference fitted line. In the case of short tails, the first few points overestimate the fitted line above the line, and the last few points underestimate the fitted line. These tail-end outliers may indicate significant deviations from the assumed normal distribution of the measurement innovations.

In Table 3, the estimates of mean μ and the estimate of the standard deviation σ of the normal distribution for each innovation set are given at the 95% confidence interval.

3.4.3. Test Statistics of the Model in the System State Domain

We next run a second formal test for the consistency of the model by examining the normalized system state errors. We ran single-run tests with different process noise scalars σω to check the KF DWPAM consistency in the domain of the system state. Using a process noise variance σω = 0.1, the normalized system state error squared is shown in Figure 6, right. The confidence region for a nine-degree-of-freedom system state vector (n = 9) is 16.919 at a 95% confidence level. KF estimation resulted in 26 points out of N = 441 to be outside the 2σ confidence region; this represents 6% of the total. The statistical test for testing model consistency in the system domain was computed using Equation (13), with the a priori variance computed using a preliminary adjustment and its value set accordingly to .

4. Using the Reference Trajectory as an Additional Estimator Parameter

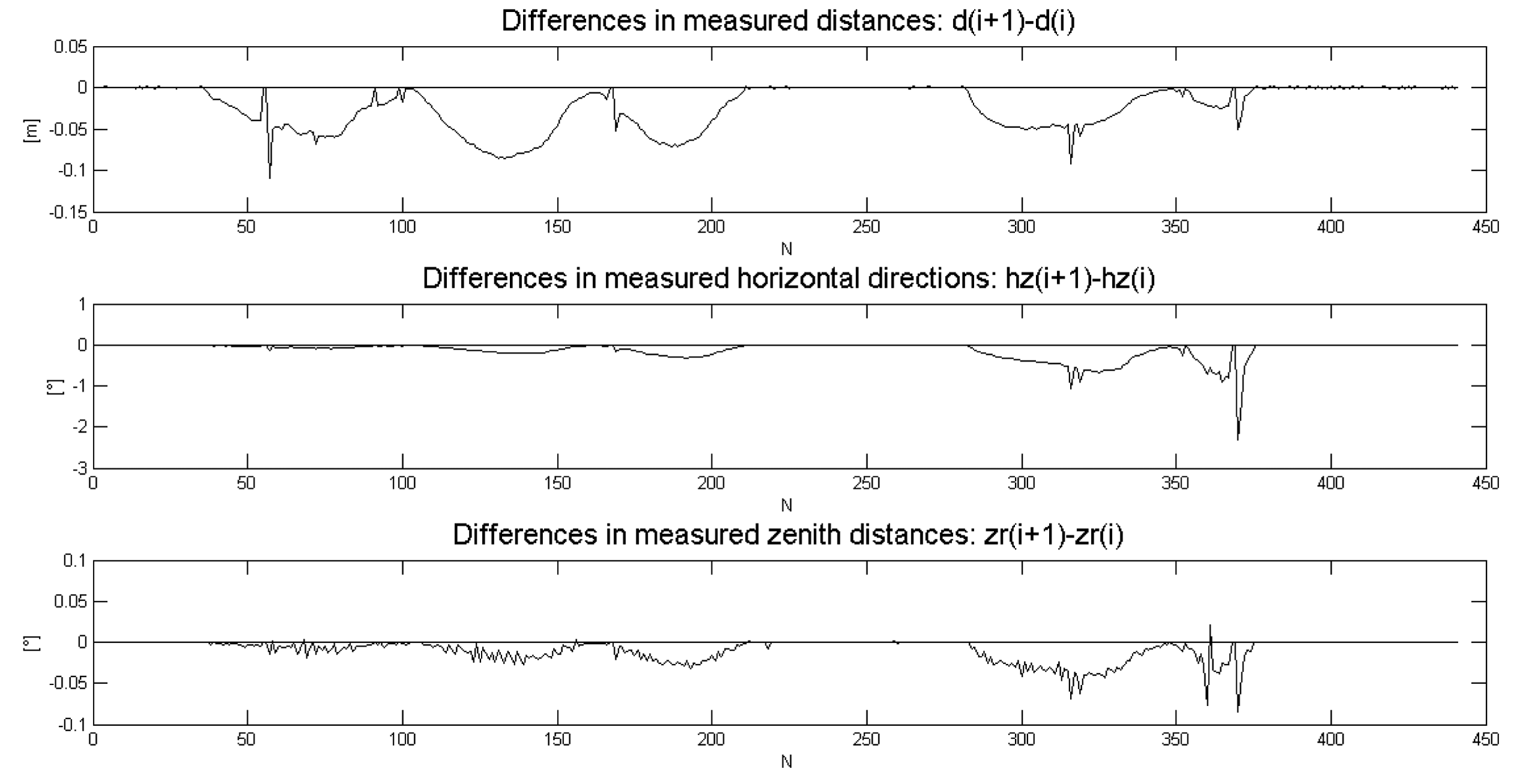

The most direct way to evaluate KF DWPAM accuracy and to find reasons for discrepancies is to use data of direct measurements of distance, horizontal angle and zenith distance and indirect measurements of trolley position on a known reference trajectory In Figure 8, the differences between successive measurement steps k(i + 1) − k(i) of the direct measurements are plotted. The rapid changes in measurements coincide with the observed discrepancies in statistical tests of the model that were discussed above. The reason for these deviations could thus lie in model, in direct observations or in the true motion of the trolley. True trolley motion might have included jerky changes in the inclination of the reflector. This is particularly possible in the x-direction, perpendicular to the trajectory. From our observed changes in direct (KF) measurements (Figure 8) and indirect measurements (Figure 9), we conclude that the reason for the KF DWPAM inconsistency lies in the actual discontinuous motion of the trolley. The KF model cannot follow rapid changes in motion very quickly; consequently, when fast changes occur, bigger values of differences between predicted and true measurement also occur, as in the case of continuous motion.

In our practical example, we assumed and then tested the Gaussian distribution of innovations. We also reason that through the computation of the indirect (KF) measurements from direct measurements, a non-Gaussian distribution can occur. Our tests (default null hypothesis) also confirm that only sample innovations where no big changes in measurements occur have a Gaussian distribution.

5. Conclusions

In this contribution, we presented the theoretical background and a practical example of using and testing a Kalman filter model to control simple trajectory motion. In previous works [9,10], the well-known statistical tests in the system state domain and in the domain of the measurements were presented.

The main contribution of this work is to perform additional parameters for the consistency check of the KF model. The mathematical background and numerical computation for the simulated example are given.

- (1)

First, the determinant of the state transition matrix is checked. It has a non-zero value in a practical example, which confirms that the system has a unique solution.

- (2)

The observability and controllability are checked with the values of their matrix rank and with more quantitative measure, given as the condition number of observability matrix. The rank of the observability matrix is equal to the number of unknown components, i.e., the dimension of the system state vector. Furthermore, the value of the observability matrix condition number is acceptable for our model. The rank of the controllability matrix is equal to three, which is the number of system state components in each direction. The rank is not equal to the total number of the system states. The reason can lie in the pre-assumption that the unknowns in each direction are uncorrelated from each other, which influences the structure of the state transition matrix.

- (3)

Beside a well-known condition of the convergence of the trace, additional properties of the a posteriori system state covariance matrix are observed. The condition number of the a posteriori system state covariance matrix was computed for different values of the process noise intensity scalar, where the values show convergence to an acceptable maximum.

- (4)

In the contribution, the elements of Kalman gain matrix, which defines the weights of KF measurements, were observed. For each position is the weight of corresponding KF measurement correction in the same direction, about one, and for the other two KF measurement corrections in perpendicular directions, about zero. The reason can lie in the pre-assumption that the unknowns in each direction are uncorrelated from each other.

- (5)

The performance of the normal distribution of measurement innovations enables the identification of the presence of the outliers. Errors in the measurement domain are generally not normally distributed. In real-time kinematic time series analysis, we may not have the ability to analyze the detailed nature of measurement errors with classical geodetic least squares adjustment, because for each time step, we will have only at least required a number of measurements. In the case of KF model, the distribution test is done on KF measurement innovations. KF measurement innovations, which represent measurement corrections, require a generally Gaussian distribution. With non-Gaussian errors, Kalman filtering does not produce an optimal general solution of a system state, but rather only the optimal solution for the linear system chosen. Therefore, special attention is required when reporting and using KF-derived confidence bounds. KF-derived variances should be accepted only stringently as representing measurement accuracy. It is then the task of the engineer to scale up or down uncertainty bounds for selected value using the process noise intensity scalar. In other words, the KF model can result in under- or over-estimated values and associated errors.

The highly accurate reference trajectory and the used precise measurement instrument offer the possibility to additionally test Kalman filter modeling and especially to find the reasons for discrepancies and offsets. By investigating direct measurements and differences between sequential measurement steps, conclusions about the reasons for big deviations between predicted and estimated system state components and big offsets in measurement innovations were made. The main reason lies in the jerky changes in the inclination of the reflector on the moving trolley.

Our practical example showed that statistical tests in the measurement domain and in the system state domain and other parameters of the statistical process control can provide appropriate tests for detecting discrepancies in the kinematic processes when no independent external control is available.

Extensions and improvements of the work presented could be gained by using particle or unscented filters [14] and better algorithms based on centroid weighted Kalman filters [15], which should better optimize prediction performance and correction iterations for the systems with non-Gaussian noise. To overcome the problem of incorporating large measurement innovations, adaptive KF with predefined sliding windows should be tested as a possible improved way to deal with the online elimination of outliers.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions to improve the quality of the paper. We thank our colleagues for improving the use of English in the manuscript.

Author Contributions

The first author accepts the main responsibility for the manuscript. Its substantial contributions are forming the concept of idea and problem, acquisition of data in former works, analysis and interpretation of data. The second author contributes to his discussions on dynamical systems, Kalman filtering, problem of input parameters and their influence on the results. The last author contributes with final critical approval of the paper.

Conflict of Interest

The authors declare no conflict of interest.

References

- Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation; John Wiley & Sons, Inc: New York, NY, USA, 2001. [Google Scholar]

- Catlin, D.E. Estimation, Control and the Discrete Kalman Filter; Springer: New York, NY, USA, 1989. [Google Scholar]

- Gibbs, B.P. Advanced Kalman Filtering, Least-Squares and Modeling; John Wiley & Sons, Inc: New York, NY, USA, 2011. [Google Scholar]

- Grewal, M.; Andrews, A. P. Kalman Filtering: Theory and Practice Using MATLAB, 2nd ed.; John Wiley & Sons: New York, NY, USA, 2001. [Google Scholar]

- Jwo, D.; Cho, T. Critical remarks on the linearised and extended Kalman filters with geodetic navigation examples. Measurement 2010, 43, 1077–1089. [Google Scholar]

- Simon, D. Optimal State Estimation: Kalman, H∞ and Nonlinear Appproches; John Wiley & Sons, Inc: New York, NY, USA, 2006. [Google Scholar]

- Eom, K.H.; Lee, S.E.; Kyung, Y.S.; Lee, C.W.; Kim, M.C.; Jung, K.K. Improved Kalman Filter Method for Measurement Noise Reduction in Multi Sensor RFID Systems. Sensors 2011, 11, 10266–10282. [Google Scholar]

- Ligorio, G.; Sabatini, A.M. Extended Kalman Filter-Based Methods for Pose Estimation Using Visual, Inertial and Magnetic Sensors: Comparative Analysis and Performance Evaluation. Sensors 2013, 13, 1919–1941. [Google Scholar]

- Bogatin, S.; Foppe, K.; Wasmeier, P.; Wunderlich, T.A.; Schäfer, T.; Kogoj, D. Evaluation of linear Kalman filter processing geodetic kinematic measurements. Measurement 2008, 41, 561–578. [Google Scholar]

- Gamse, S.; Wunderlich, T.A.; Wasmeier, P.; Kogoj, D. The use of Kalman filtering in combination with an electronic tacheometer. Proceedings of the 2010 International Conference on Indoor Positioning and Navigation, Zurich, Switzerland, 15–17 September 2010.

- The Data and Anlysis Center for Software. Available online: https://sw.csiac.org/techs/abstract/347157 (accessed on 27 August 2014).

- Lippitsch, A.; Lasseur, C. An alternative approach in metrological network deformation analysis employing kinematic and adaptive methods. Proceedings of the 12th FIG Symposium, Baden, Germany, 22–24 May 2006.

- Normal Probability Plot: Data Have Short Tails. Available online: http://www.itl.nist.gov/div898/handbook/eda/section3/normprp2.htm (accessed on 27 August 2014).

- Ristic, B.; Arulampalam, S.; Gordon, N. Beyond Kalman Filter: Particle Filters for Tracking Applications; Artech House: Boston, MA, USA, 2004. [Google Scholar]

- Fu, Z.; Hana, Y. Centroid weighted Kalman filter for visual object tracking. Measurement 2012, 45, 650–655. [Google Scholar]

| ∑ |Predicted-Measured| | ∑ |Filtered-Measured| | |

|---|---|---|

| [m] | [m] | |

| x | 3.785 | 0.935 |

| y | 3.590 | 0.597 |

| z | 1.031 | 0.095 |

| 1σ-Bound (Approximately 66% Inside) | 2σ-Bound (Approximately 95% Inside) | |||||

|---|---|---|---|---|---|---|

| x % inside | y % inside | z % inside | x % inside | y % inside | z % inside | |

| σω = 0.10 | , no gross errors | |||||

| 91.4 | 91.8 | 92.1 | 95.5 | 95.5 | 95.5 | |

| σω = 0.06 | , no gross errors | |||||

| 87.3 | 87.3 | 87.8 | 92.1 | 92.1 | 92.1 | |

| σω = 0.01 | , no gross errors | |||||

| 45.1 | 46.5 | 46.3 | 61.0 | 60.3 | 60.8 | |

| μ[m] | Confidence Interval [μ][m] | σ[m] | Confidence Interval [σ][m] | |

|---|---|---|---|---|

| innovation x | −0.0026 | [−0.0059, 0.0008] | 0.0360 | [0.0338, 0.0385] |

| innovation y | −0.0011 | [−0.0036, 0.0013] | 0.0265 | [0.0248, 0.0283] |

| innovation z | −0.0003 | [−0.0007, 0.0002] | 0.0049 | [0.0046, 0.0053] |

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gamse, S.; Nobakht-Ersi, F.; Sharifi, M.A. Statistical Process Control of a Kalman Filter Model. Sensors 2014, 14, 18053-18074. https://doi.org/10.3390/s141018053

Gamse S, Nobakht-Ersi F, Sharifi MA. Statistical Process Control of a Kalman Filter Model. Sensors. 2014; 14(10):18053-18074. https://doi.org/10.3390/s141018053

Chicago/Turabian StyleGamse, Sonja, Fereydoun Nobakht-Ersi, and Mohammad A. Sharifi. 2014. "Statistical Process Control of a Kalman Filter Model" Sensors 14, no. 10: 18053-18074. https://doi.org/10.3390/s141018053