A New Full Pose Measurement Method for Robot Calibration

Abstract

: Identification of robot kinematic errors during the calibration process often requires accurate full pose measurements (position and orientation) of robot end-effectors in Cartesian space. This paper proposes a new method of full pose measurement of robot end-effectors for calibration. This method is based on an analysis of the features of a set of target points (placed on a rotating end-effector) on a circular trajectory. The accurate measurement is validated by computational simulation results from the Puma robot. Moreover, experimental calibration and validation results for the Hyundai HA-06 robot prove the effectiveness, correctness, and reliability of the proposed method. This method can be applied to robots that have entirely revolute joints or to robots for which only the last joint is revolute.1. Introduction

Advanced applications such as off-line programming, robot-based measurement, and special robot-assisted surgery use highly accurate robotic manipulators. In order to satisfy the accuracy requirements of these applications, robots should undergo a calibration process, requiring practical full pose (position and orientation) measurements of robot end-effectors. The measurements are then used to identify robot kinematic parameters (unknown or approximately known). To acquire a full pose measurement of a robot end-effector (particularly the orientation one), previous researchers have used appropriate measurement devices that are expensive, relatively slow, and difficult to set up. Therefore, the aim of this work was to build a new method of full pose measurement that is accurate, easy to apply, and less time consuming.

Several authors have presented a non-contact full-pose measurement of robot end-effectors using visual systems [1-9] which utilize two cameras to capture 3D images [2,3,7], but the accuracy is low. In order to enhance identification speed and reliability, other researchers put markers, grids [4], or light tripe [5,6] on targets. Other systems involved cameras fixed on end-effectors to view targets more closely instead of zooming, which reduces a camera's field of view [8,9]. These visual systems still have limitations for accurate measurement of position and orientation of robot end-effectors.

Omodei et al. designed an optical device for full pose measurement with greater accuracy, and applied it in the calibration of a SCARA robot [10]; this device is specific and cannot be utilized for general robot types. Everett and Driels attached a special apparatus to the last robot link that has an arrangement of intermediate points, and end-effector full pose measurements are obtained based on these points [11,12]. Everett [11] utilized a single point sensor technique and an orientation fixture to collect the full pose (position and orientation) of robot end-effectors, while Driels used a coordinate measuring machine (CMM) to measure the center positions of five balls arranged on specially designed end-effectors [12]. Both the methods of Everett and those of Driels are reliable for robot calibration, but they have disadvantages due to the manufacturing costs of the special tools attached on the last robot link; the tools need to be pre-calibrated before use, making the measurement process slow, laborious, and time-consuming. Most recently, Nubiola et al. [13] proposed a full pose measurement method of robot end-effector using a single telescoping ball-bar and two planar fixtures, each fixture bears three magnetic cups which are located at the vertices of an equal triangle. This device has a hexapod geometry. This method [13] has some advantages such as highly accurate measurement, low cost and practical applications. However, it would be more perfect if one could enlarge the measurement range, reduce laborious involvement, eliminate the need for prior calibration of fixtures, and increase the number of measurable orientations.

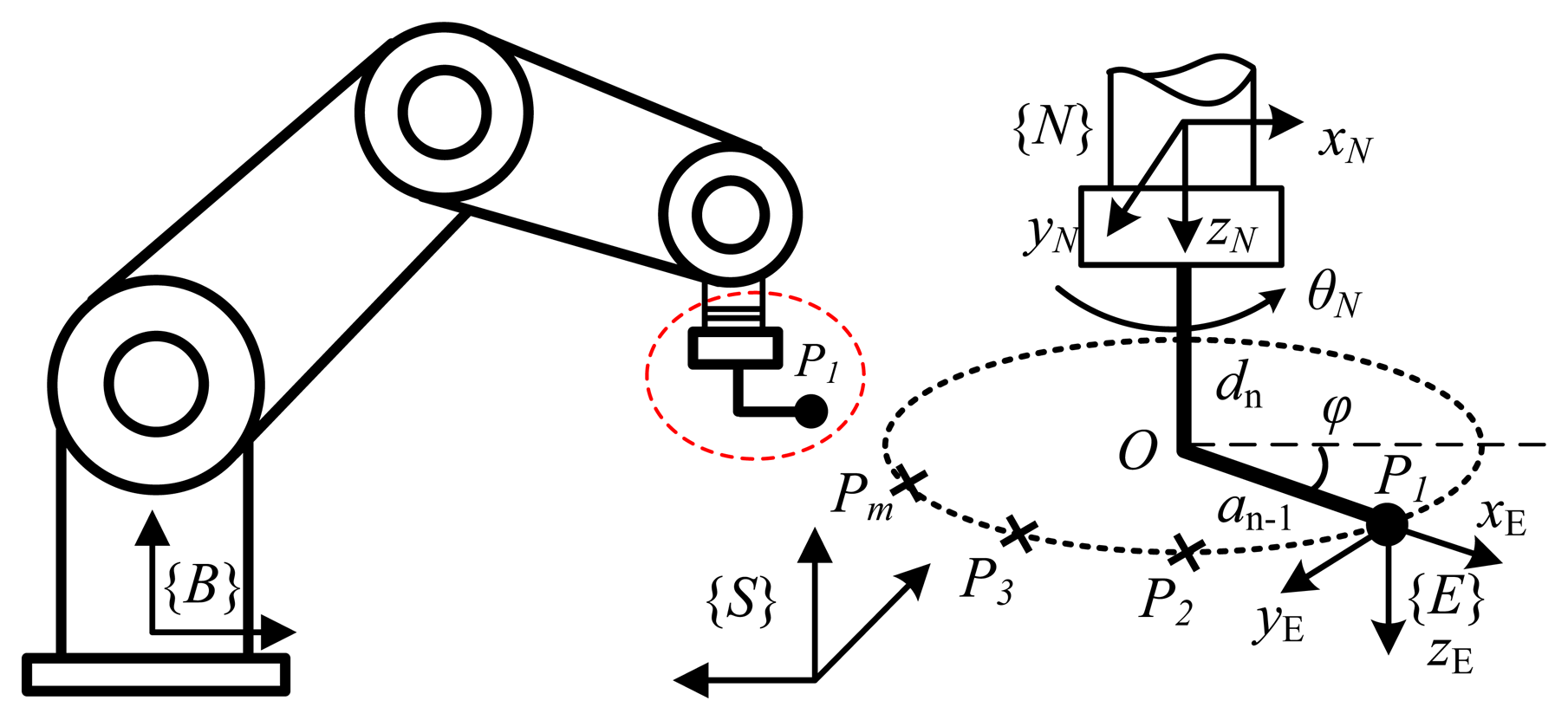

This research proposes a new method for full pose measurement of end-effectors for robot calibration. This method provides a robot's full pose based on a set of discrete points on a circular trajectory, measured by a non-contact 3D coordinate measuring device (e.g., a laser tracker). Devices that utilize laser interferometry are widely used due to their high accuracy, fast measurement, large measuring range, and ease of use [14-17]. For robot configuration, the trajectory of a target fixed on a robot end-effector (tool) is measured when the end-effector is rotated uniquely; in this way one could obtain an arc of a circle and its center point O located on a rotation axis. An orthogonal vector of the rotation plane (which contains the arc of the circle) determines the z axis of the coordinate frame assigned to end-effector {E}. A vector connecting an initial point O with a terminal point P1 (Figure 1) determines the x axis of frame {E}, and axis y completes this orthogonal coordinate system. The proposed method benefits from the high accuracy of available 3D point measuring devices, and can be automated; therefore the application of the method is simple, relatively fast, and easy to set up. It also does not use any special tools that have additional manufacturing costs or need pre-calibration. The measurement accuracy of the method is evaluated by comparing deviation between two frames {E} and {E'} that are fixed on the robot end-effector, where frame {E} is computed by the proposed method, while frame {E'} is obtained by robot forward kinematics. The accuracy of the proposed method is evaluated via simulation on a Puma robot, and is demonstrated via experimental calibration on a Hyundai HA-06 robot.

In Section 2, the principles of the measurement method are presented and the plane and center points of rotation are identified. Section 3 presents an evaluation of the measurement accuracy of the method via a simulation on Puma robot, while Section 4 presents experimental calibration results for an HA-06 robot with full-pose measurements obtained by the proposed method. Section 5 presents our conclusions.

2. Principle of the Measurement Method

The proposed measurement method needs to determine two features: the rotation axis of the last robot joint and rotation center for the acquired position, and an orientation of the robot end-effector (i.e., coordinate frame {E}) at every robot configuration. The basic principle of the measurement method is specified in three steps (Figure 1). In the first step, the measured trajectory of a target on a robot tool is a set of discrete points that lie on an arc of a circle when the last joint of the robot moves. In the second step, the rotation plane that contains the arc is identified. Finally, the center of the rotation situated on the last robot joint is also determined. The two features, which include the orthogonal vector of the rotation plane and the center of the rotation, determine the origin position and orientation of the coordinate frame {E} with respect to the sensor frame {S}. The two computation steps of the proposed method are identification of the plane and center point of rotation.

2.1. Identification of Rotation Plane

In order to establish a rotation plane, the last joint of an N degree of freedom (dof) robot is rotated while the other joints are locked. A target that is fixed on the robot end-effector generates an arc of a circle in Cartesian space; this arc contains the set of m positions of the target, which corresponds to the m angle positions of the last joint. A rotation plane that fits to this set of m points could be identified by applying a least squares algorithm.

A form of a rotation plane equation in Cartesian space can be presented as follows [18]:

A plane, which fits a set of measured points (xi, yi, zi), i = 1,…, m, is obtained by solving the minimization problem for which the objective function is:

The solution for Equation (2) in terms of the coefficients E, F, G can be found as follows:

The covariance values in Equation (3) can be found as follows:

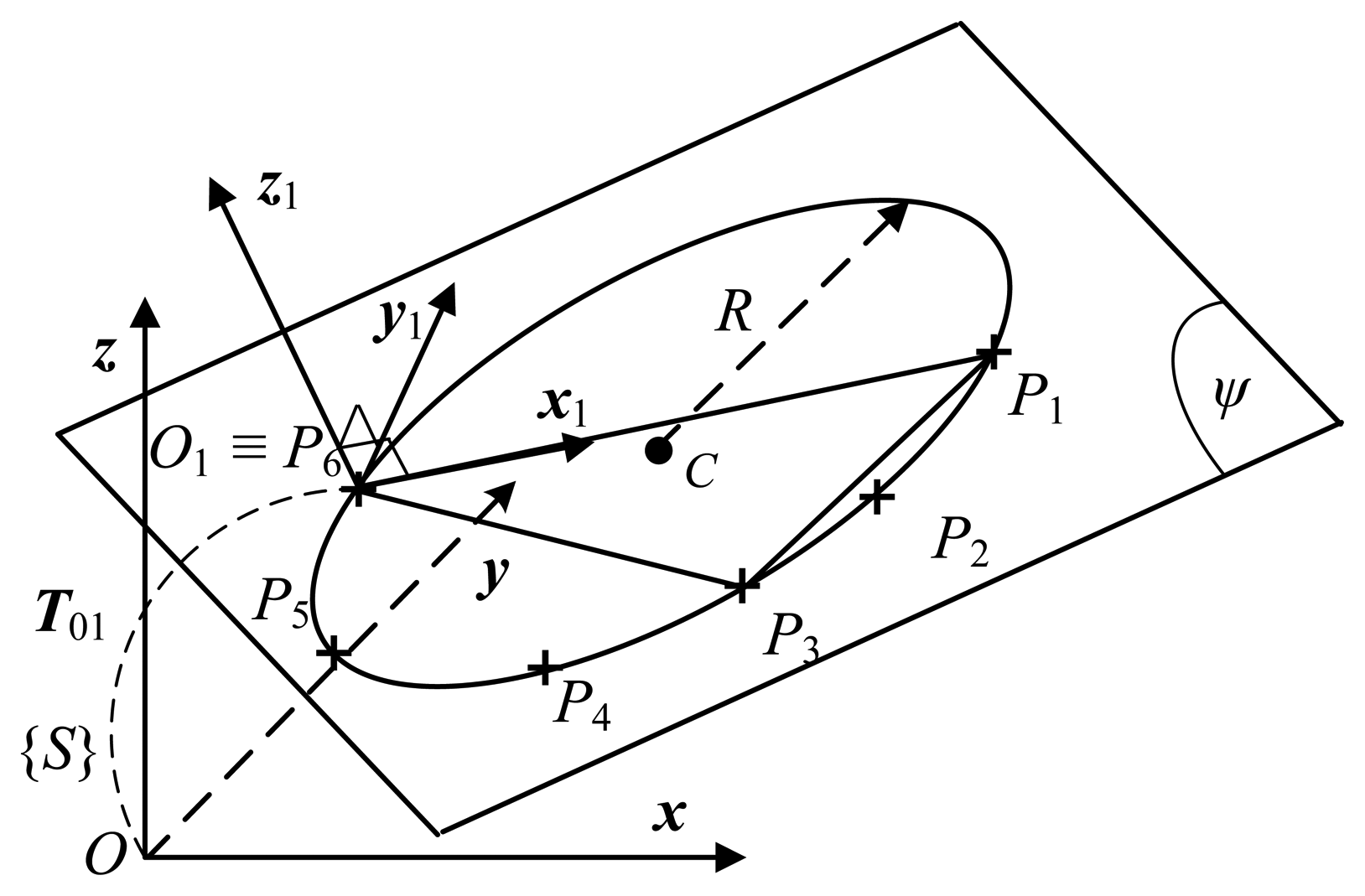

The fitting plane is obtained by minimizing the objective function in Equation (2) in terms of the z coordinate of a sensor reference frame {S}. Therefore, in order to increase the accuracy of the fitting (or eliminate the systematic errors in the z axis), the measured points (point cloud) have to be transferred to another coordinate frame O1x1y1z1 such that the cloud of m points locates very closed to the plane O1x1y1 (the plane ψ in Figure 2) [19]. For simplicity, the frame O1x1y1z1 is going to be constructed for a case m = 6 including measured points P1, P2, P3, P4, P5 and P6 as in Figure 2. First, the farthest three points (P1, P3 and P6) among the six points are selected. A plane ψ, which contains the three points, is obtained. A normal vector of the plane determines the axis z1. The axis x1 is defined by unit vector , then the axis y1 completes the orthogonal coordinate frame O1x1y1z1 by the cross product of the known vectors: y1 = z1 × x1.

Note that the frame O1x1y1z1 is arbitrarily located with respect to the reference frame Oxyz (sensor frame {S}). The points P1 – P6 are transferred to the frame O1x1y1z1. A plane fitting these points is obtained. Then, a circle that is on the identified plane and contains the points is also determined (see Subsection 2.2). The equations of the plane and the circle, which are currently expressed with respect to the frame O1x1y1z1, are going to be transferred back to the reference frame Oxyz for further using in the next step (see Subsection 2.3). As a result, an approximation of 3D point cloud using a plane and a circle will eliminate systematic errors in terms of the z axis of the reference frame.

2.2. Identification of Rotation Center

Theoretically, the trajectory of a point on a rotating rigid body about a fixed axis is a circle. This circle is on an orthogonal plane of the fixed axis. However, in practice, due to some factors (for examples assembly tolerance between spindle and bearing, vibration, measurement noise and so on) the trajectory can be a general curve (for example, an ellipse, circle and so on). If we assume that the deviation between the curve (ellipse) trajectory and the theoretical circle trajectory is sufficiently small, the deviation is then considered as noise. Therefore, a circle trajectory model will be used to fit these points.

After the above rotation plane is obtained, a least squares algorithm is applied to identify a circle that is on the identified rotation plane and contains the set of m measured points [18].

A standard form of a circle equation is as follows:

The circle Equation (7) can be rewritten in the following form:

The circle, which contains the set of measured points (xi, yi, zi), i = 1,…, m, can be obtained by solving the minimization problem for which the objective function is:

A solution of Equation (9) in terms of the coefficients A, B, C can be computed as follows:

In this step the rotation plane is identified. The center point of rotation can also then be identified after some basic manipulation from Equation (8) to Equation (7). The two features of the measured point set are then available for the next derivation step of full-pose measurements.

2.3. Derivation of Full Pose Measurements

A system consists of a robotic manipulator with N dof and a 3D point sensing device as shown in Figure 1. The measurement process is executed in the following steps: first, at a specific robot configuration, a set of m Cartesian positions of target on end-effector is captured in terms of the sensor frame {S}. Next, a rotation plane and the center of an arc of a circle that fits these points are identified (see Subsections 2.1 and 2.2). Finally, a full pose measurement of the end-effector is derived by the following procedure:

- ‐

The robot configures itself such that a target on the end-effector comes to specific position P1.

- ‐

All robot joints are kept fixed except the last one, N. Next, the last joint is rotated in a positive direction such that the target comes to points P2,…, Pm (m ≥ 3), and a set of these points are recorded.

- ‐

The set of points P1,…, Pm are fitted with a plane in Cartesian space (see Section 2.1).

- ‐

On the determined plane, the arc of the circle that fits the set of points is identified (see Section 2.2), and its center O is also determined.

- ‐

An orthogonal vector of the rotation plane n, which has unit length (║n║=1;, defines the axis zE of coordinate frame {E}. A vector that connects center point O and point P1, defines the axis xE of the frame {E}, Finally, the axis yE completes the orthogonal coordinate frame {E} by the cross product of the known vectors: yE= zE × xE.

- ‐

The full pose of the end-effector (i.e., the pose of frame {E}) is described with respect to the sensor frame {S} as in the following transformation matrix:

where the relative position of frame {E} with respect to frame {S} is determined by a column vector of coordinates of point P1: p1 = [xP1yP1zP1]T

Transformation from the last link frame {N} to the tool frame {E} (Figure 1) requires the following basic transformations [20]: rotation about axis z with an angle φ; translation along axis x with distance aN; and translation along axis z with distance dN+ 1 (refer to [20] for more details).

3. Evaluation for Measurement Accuracy

The measurements acquired by the proposed method for the robot calibration process should have an accuracy level. Therefore, it is necessary to evaluate the accuracy of measurements before applying them in the calibration process. This evaluation is performed by comparing deviation (error) between two coordinate frames {E} and {E'}, where frame {E} is computed by the proposed method, and frame {E'} is computed by the direct forward kinematics of the robotic manipulator. Criteria for comparison are: average position errors computed by Equation (14); standard deviations of these position errors computed by Equation (15); average Euler angle errors (order x, y, z) computed by Equation (16); and standard deviations of these Euler angle errors computed by Equation (17):

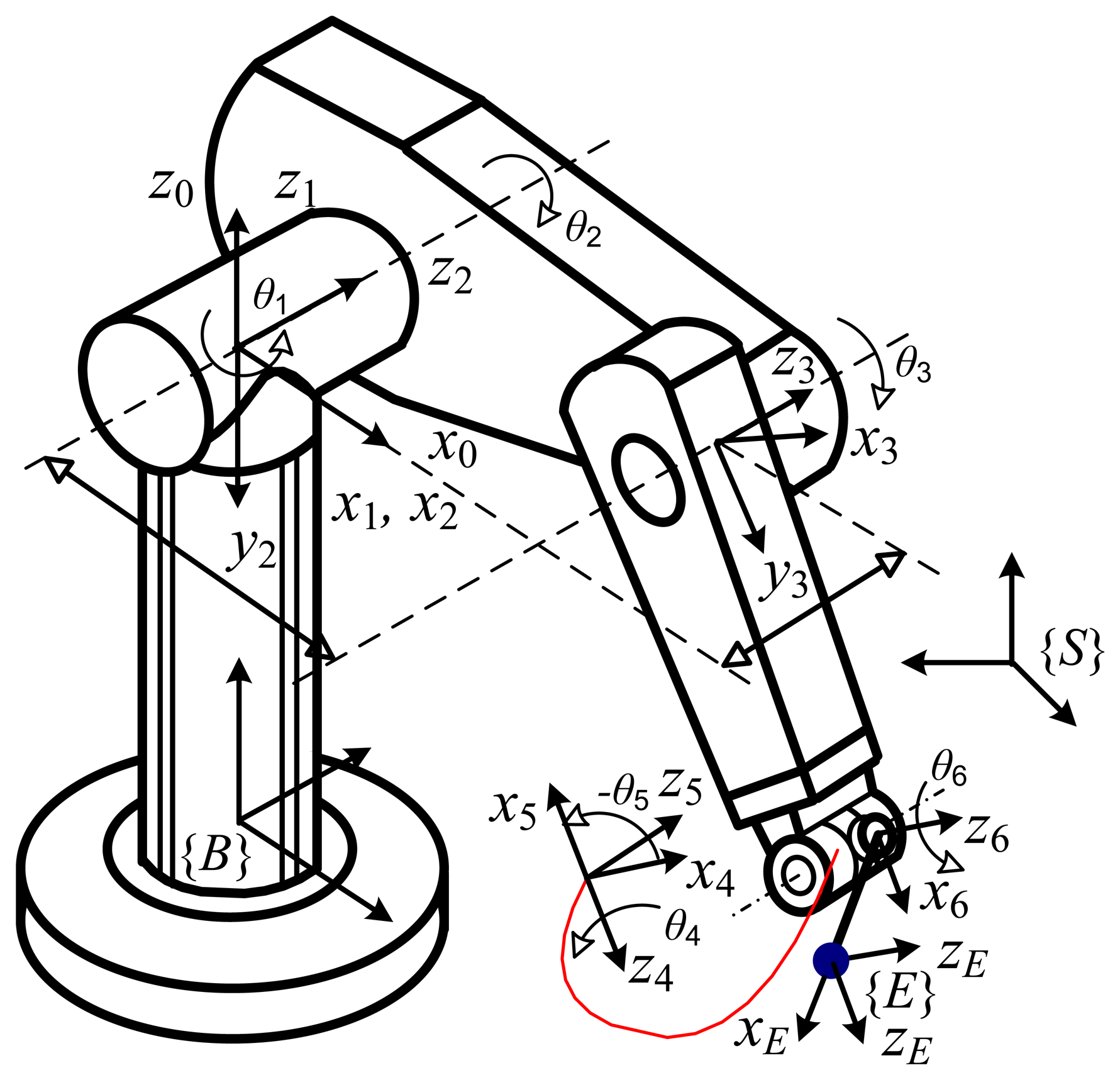

The accuracy of measurements acquired by this method can be evaluated via simulation for a specific robot. The typical Puma manipulator (Figure 3) was utilized in this evaluation process. The nominal model and corresponding Danevit Hartenberg (D-H) parameters of the Puma robot are presented in detail by Craig [20]. Applying the proposed method above, full pose measurements of robot Puma are obtained at a number of g = 30 robot configurations. The forward kinematic transformation determines the full pose of frame {E'} with respect to frame {S}. To compute the full pose of frame {E}, we rotate the last joint through m = 6 angle positions in positive angle increments of 20 degrees; a number of 6 target positions in Cartesian space can then be obtained, and full pose measurements of frame {E} with respect to frame {S} are then determined by the proposed method. Because measurement noise always exists in a practical measurement process and affects the accuracy of measurements, in this simulation Cartesian target positions should be corrupted by adding a (3 × 1) vector of random measurement noise values (Gauss distribution N[0,σ] with standard deviation σ = 0.01 [mm]).

Tables 1 and 2 present the computed comparison results. These results show that position and angle Euler errors are sufficiently small. Therefore, full pose measurements of the robot end-effector obtained by the proposed method are accurate enough for application in robot calibration.

4. Application of the Measurement Method in Practical Calibration

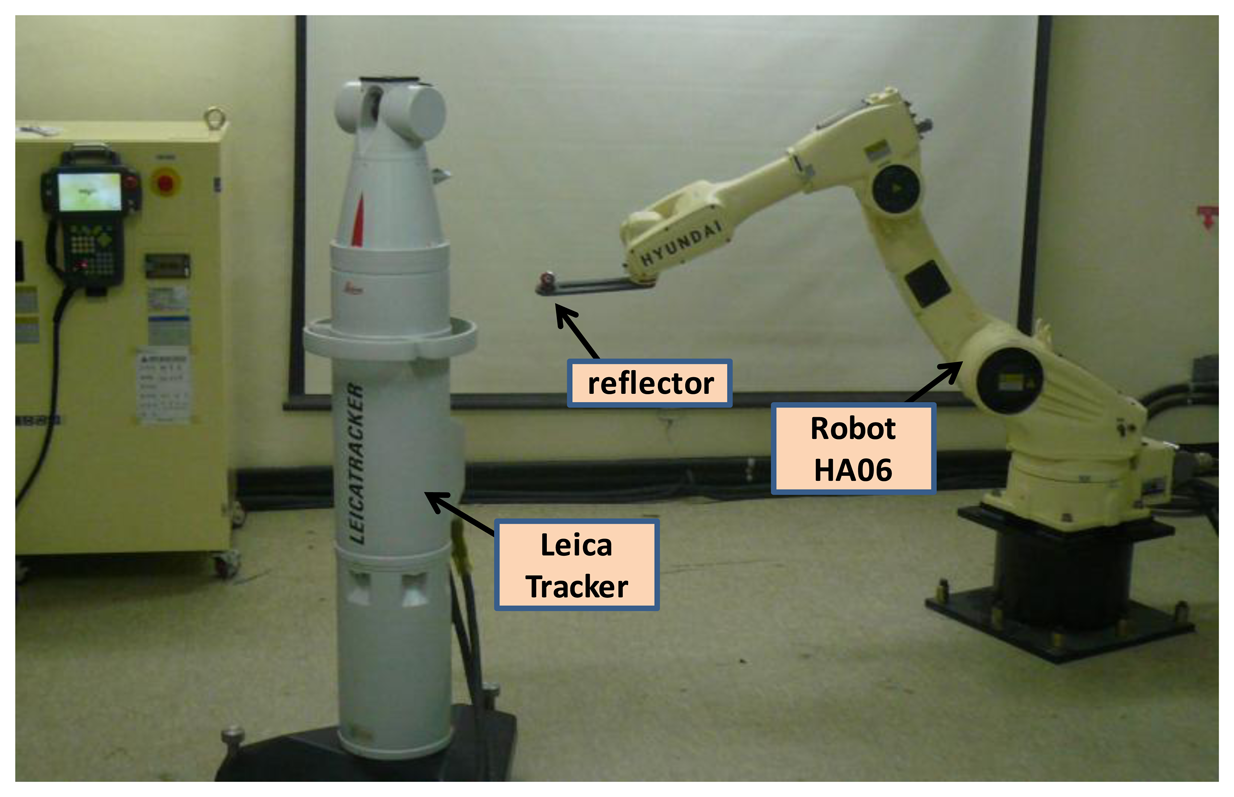

In order to identify robot kinematic errors we must first measure the position and orientation of the robot end-effector. This study applied the proposed measurement method in an experimental calibration for a Hyundai HA-06 robot. The system consisted of a Hyundai HA-06 robot (six dof and repeatability ±0.05 mm), a 3D measuring device (Laser Tracker LTD800 with accuracy of ±5 micron/m), and a laser reflector attached to the robot end-effector (Figure 4).

4.1. Kinematic Model of the HA-06 Robot

The nominal model of the HA-06 robot was established by using the D-H convention [21]. The frames are assigned from the robot base to the end-effector as in Figure 5. The according nominal D-H parameters are given in Table 3. A transformation from the base frame to the end-effector frame is computed as follows:

The base transformation can be computed from six basic transforms as follows:

The parameters y0 and β0 do not exist in the nominal robot model, however, y0 and β0 must be included in the calibration robot model. The tool transformation (sees Figures 1 and 5) needs basic transforms: rotation about axis z with an angle φ, translation along axis x with distance a6, and translation along axis z with distance d7 as follows:

Because the robot model Equation (18) is used for calibration, this nominal model should be slightly modified for a case in which the link has two consecutively parallel axes [22]. Then, the individual transform in Equation (18) is modified to satisfy the properties of the model: complete, proportionality and continuous [23]. Specifically, the transformation is modified as follows:

Robot kinematic error sources can be classified into two types: link geometric errors and non-geometric errors (such as link, joint deformation, joint backlash and so on). Because the HA-06 robot is a light weight manipulator, we assume that the robot has high link and joint stiffness, so the robot pose errors are only caused by link geometric errors. The number of identifiable kinematic parameters of the HA-06 robot is n = 27 (d2 and d3 are dependent, θ6 and φ are dependent).

4.2. Mathematic Formulation for Identification

Robot calibration aims to identify robot model parameters to describe the most correctly its kinematics, consequently the robot pose accuracy is enhanced. This section presents a formulation for the identification of the aforementioned kinematic parameters.

A mathematical formula for error identification is obtained by differentiating homogenous transformation of Equation (18). This transformation describes a relationship between the robot's kinematic errors and its end-effector pose errors as follows [24]:

4.3. Experimental Results for HA-06 Robot Calibration

Measurement for calibration is performed for 56 different robot configurations. For each configuration all robot joints are fixed except for the last joint, which rotates through m = 6 angle positions with positive angle increments of +20 [deg], and six positions of a target on an arc of a circle (m = 6) are recorded. Finally, the full pose (position and orientation) of the end-effector is derived by the proposed procedure (presented above in Section 2.3). As a result, a set Q1 of 56 full end-effector poses is obtained. By using all 56 full poses we can formulate an over-determined system of 6 × 56 = 336 differential equations based on Equation (23). The solution of this system of equations in the sense of least squares is the robot kinematic errors [26] as follows:

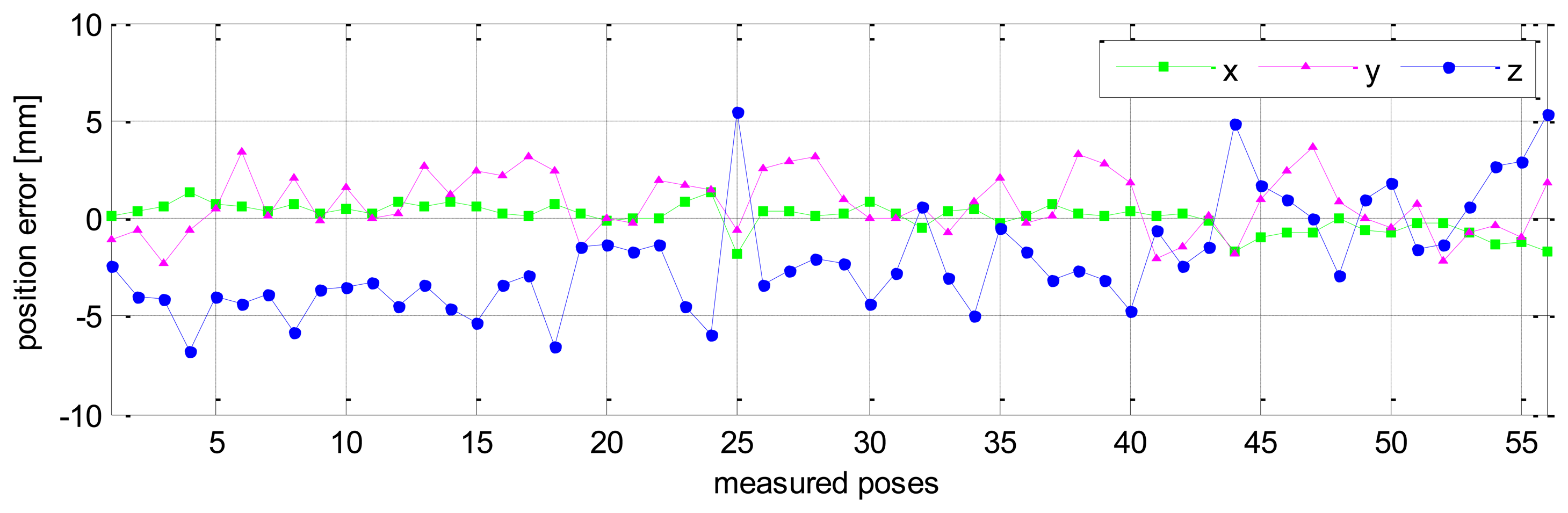

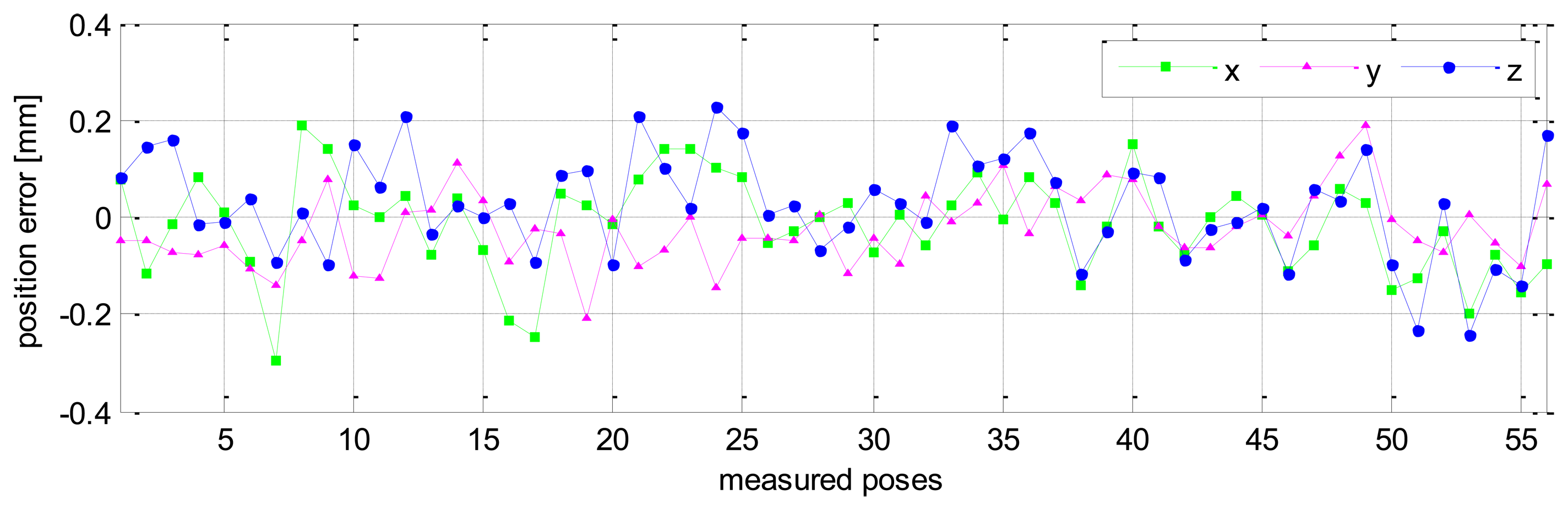

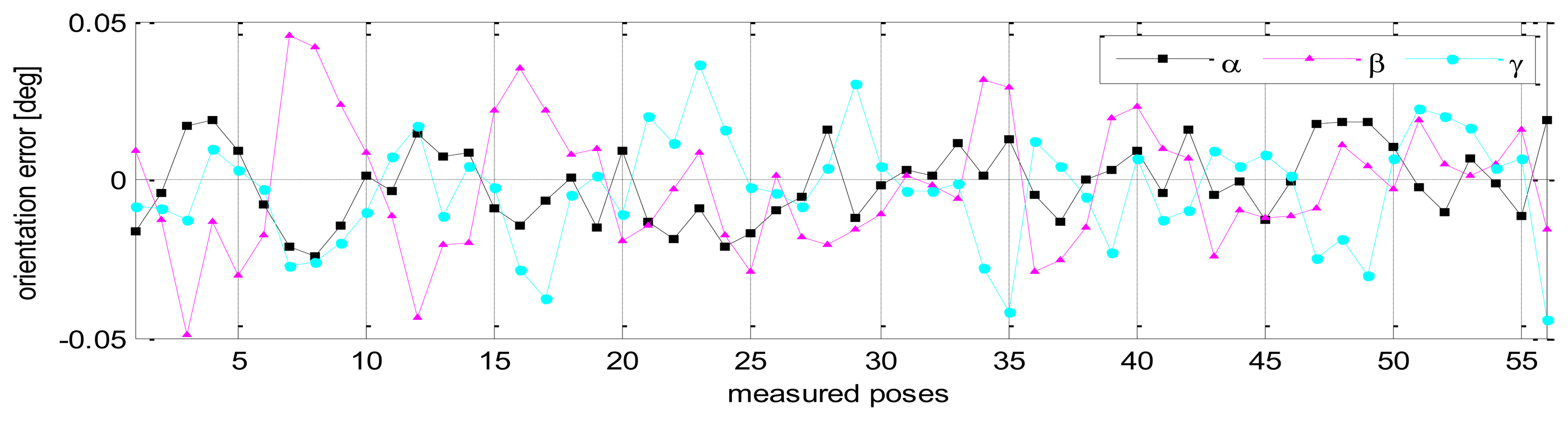

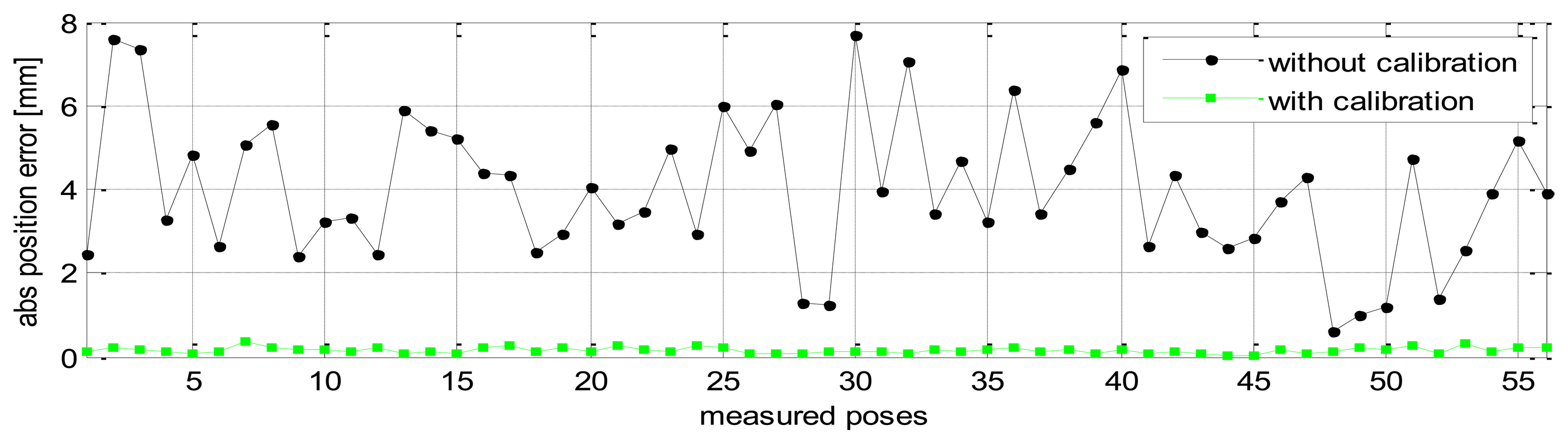

Without calibration, the position accuracy of the HA-06 robot (which is computed over 56 measured configurations) is 3.6573 [mm], and its orientation accuracy about axes x, y, z (which is represented by Euler angle errors) is 0.33022, 0.67187, 1.4004 [deg], respectively (more details are shown in Table 4). After calibration, the position and orientation accuracy of the robot is significantly improved; particularly, the position accuracy is now 0.12933 [mm]; orientation accuracy is 0.00864 [deg] about axis x, 0.01658 [deg] about axis y, and 0.01286 [deg] about axis z (more details are also shown in Table 4). Figures 6 and 7 show the position accuracy of the robot for each configuration along directions x, y, z before and after calibration. The position accuracy along directions x, y, z is always in a range of [-0.3, +0.3] (mm). Figure 8 shows the absolute position accuracy at each measured pose before and after calibration. Figures 9 and 10 show the orientation accuracy of the robot for each configuration about axes x, y, z before and after calibration, respectively. The orientation accuracy about axes x, y, z is always in a range of [-0.05, +0.05] (deg).

The experimental results for the HA-06 robot show that robot pose accuracy is enhanced significantly after calibration. The proposed measurement method supplied the accurate full-pose measurements for this calibration and had some properties of effectiveness, correctness and practical applicability.

In many industrial applications, the robots have to operate over their full wide workspace. Therefore, a robot after calibration should have the same level of accuracy across its workspace. The pose accuracy of the HA-06 robot after calibration was validated with a set of 56 end-effector poses named Q2. The pose set Q2 was selected arbitrarily in whole robot workspace such that they were different from the set Q1. Note that the procedures for measuring both of the pose sets Q1 and Q2 are similar.

The validation results of robot pose accuracy after calibration are illustrated in Figures 11, 12 and 13. Figure 11 shows the robot position accuracy along axes x, y, z. These values are in the range of [-0.3, +0.3] (mm). Figure 12 shows orientation accuracy about axes x, y, z. These values are in the range of [-0.05, +0.05] (deg). Figure 13 presents the robot's absolute position accuracy at the validation poses Q2 (without calibration: mean value is 3.989 [mm], maximum value is 7.7028 [mm]; with calibration: mean value is 0.1544 [mm], maximum value is 0.3403 [mm]). The absolute position accuracy with the pose set Q1 and the pose set Q2 are display on the same graph as in Figure 14. These figures show that the absolute position accuracy is less than 0.4 [mm]. The results in Figures 11, 12, 13 and 14 prove that the robot has the same level of pose accuracy over the workspace. After calibration, the absolute position and orientation accuracies robot HA-06 are better than 0.4 [mm] and 0.05 [deg], respectively.

5. Conclusions

This paper proposes a new method for the full pose measurement of an end-effector for robot kinematic calibration. Full pose measurements could be obtained by analyzing the features of a set of target points (designated on a robot end-effector) on an arc of a circle, such as an orthogonal vector of a rotation plane and a rotation center. These points are measured by using external point sensing devices. This method benefits from the accuracy of available point measurement devices, such as Laser Tracker. The measurement procedure is simple, fast, easy to set up, and can be automated. It also does not use any special apparatus with an arrangement of intermediate measured points; therefore, no additional manufacturing costs or pre-calibration steps are required.

The measurement accuracy of this method was gauged as sufficient based upon computational simulation results from the Puma robot. Experimental calibration for an HA-06 robot was performed to prove the practical effectiveness and accuracy of this method. The experimental results show that after calibration the HA-06 robot had enhanced position accuracy of 0.12933 [mm] (before calibration: 3.6573 [mm]), and increased orientation accuracy about axes x, y, and z of 0.00864 [deg], 0.01658 [deg], and 0.01286 [deg], respectively (before calibration: 0.33022 [deg], 0.67187 [deg] and 1.4004 [deg], respectively). These results provide evidence that the proposed method for full-pose measurement is accurate and reliable for practical robot calibration. The validation results also demonstrated that the HA-06 robot has the same level of pose accuracy over its full workspace. The new measurement method can be applied to robotic manipulators that have all joints or for which only the last joint is revolute. In the future we will evaluate the accuracy of this full pose-based calibration method on robots containing revolute and prismatic joints.

Acknowledgments

This work was supported by the 2011 research fund of University of Ulsan.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hutchinson, S.; Hager, G.D.; Corke, P.I. A tutorial on visual servo control. IEEE Trans. Robot. Auto. 1996, 12, 651–670. [Google Scholar]

- Zhang, Z.; Luong, Q.-T.; Faugeras, O. Motion of an un-calibrated stereo rig: Self-calibration and metric reconstruction. IEEE Trans. Robot. Auto. 1996, 12, 103–113. [Google Scholar]

- Grosso, E.; Metta, G.; Oddera, A.; Sandini, G. Robust visual servoing in 3-D reaching tasks. IEEE Trans. Robot. Auto. 1996, 12, 732–742. [Google Scholar]

- Dudek, G.; Jenkin, M.; Milios, E.; Wilkes, D. Using multiple markers in graph exploration. Proc. SPIE 1989, 1195, 77–87. [Google Scholar]

- Kemmotsu, K.; Kanade, T. Sensor Placement Design for Object Pose Determination with Three Light-Stripe Range Finders. Proceedings of 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994; Volume 2, pp. 1357–1364.

- Khadraoui, D.; Motyl, G.; Martinet, P.; Gallice, J.; Chaumette, F. Visual servoing in robotics scheme using a camera/laser-stripe sensor. IEEE Trans. Robot. Auto. 1996, 12, 743–750. [Google Scholar]

- Hager, G.D.; Chang, W.-C.; Morse, A.S. Robot hand-eye coordination based on stereo vision. IEEE Cont. Syst. 1995, 15, 30–39. [Google Scholar]

- Hashimoto, K.; Ebine, T.; Kimura, H. Visual servoing with hand-eye manipulator-optimal control approach. IEEE Trans. Robot. Auto. 1996, 12, 766–774. [Google Scholar]

- Faibish, S.; Bacakoglu, H.; Goldenberg, A.A. An Hand Eye System for Automated Paper Recycling. Proceedings of 1997 IEEE International Conference on Robotics and Automation, Albuquerque, NM, USA, 20–25 April 1997; pp. 9–14.

- Omodei, A.; Legnani, G.; Adamini, R. Three methodologies for the calibration of industrial manipulators: Experimental results on a SCARA robot. J. Robot. Syst. 2000, 17, 291–307. [Google Scholar]

- Everett, L.J. Completing the forward kinematic calibration of open loop manipulators when single point position sensors are used. J. Robot. Syst. 1989, 6, 19–33. [Google Scholar]

- Morris, R.D. Full-pose calibration of a robot manipulator using a coordinate measuring machine. Int. J. Adv. Manuf. Technol. 1993, 8, 34–41. [Google Scholar]

- Nubiola, A.; Slamani, M.; Bonev, I.A. A new method for measuring a large set of poses with a single telescoping ballbar. Precis. Eng. 2013, 37, 451–460. [Google Scholar]

- Lau, K.; Hocken, R.; Haynes, L. Robot performance measurements using automatic laser tracking techniques. Robot. Comp. Int. Manuf. 1985, 2, 227–236. [Google Scholar]

- Bai, Y.; Zhuang, H.; Roth, Z.S. Experiment Study of PUMA Robot Calibration Using a Laser Tracking System. Proceedings of the 2003 IEEE International Workshop on Soft Computing in Industrial Applications (SMCia/03), Binghamton, NY, USA, 23–25 June 2003; pp. 139–144.

- Umetsu, K.; Furutnani, R.; Osawa, S.; Takatsuji, T.; Kurosawa, T. Geometric calibration of a coordinate measuring machine using a laser tracking system. Meas. Sci. Technol. 2005, 16. [Google Scholar] [CrossRef]

- Nubiola, A.; Bonev, I.A. Absolute calibration of an ABB IRB 1600 robot using a laser tracker. Robot. Comput. Integr. Manuf. 2013, 29, 236–245. [Google Scholar]

- Stone, H.W.; Sanderson, A.C. A Prototype Arm Signature Identification on System. Proceedings of 1987 IEEE International Conference on Robotics and Automation, Raleigh, NC, USA, March 1987; pp. 175–182.

- Abderrahim, M.; Whittaker, A.R. Kinematic model identification of industrial manipulators. Robot. Comp. Int. Manuf. 2000, 16, 1–8. [Google Scholar]

- Craig, J.J. Introduction to Robotics: Mechanics and Control, 2nd ed.; Addison Wiley: Boston, MA, USA, 1989. [Google Scholar]

- Hartenberg, R.S.; Denavit, J. A kinematic notation for lower pair mechanisms based on matrices. Trans. ASME/ J. App. Mech. 1955, 77, 215–221. [Google Scholar]

- Hayati, S.; Tso, K.; Roston, G. Robot Geometry Calibration. Proceedings of 1988 IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 24–29 April 1988; Volume 2, pp. 947–951.

- Schröer, K.; Albright, S.L.; Grethlein, M. Complete, minimal and model-continuous kinematic models for robot calibration. Robot. Comp. Int. Manuf. 1997, 13, 73–85. [Google Scholar]

- Veitschegger, W.; Wu, C.-H. Robot accuracy analysis based on kinematics. IEEE J. Robot. Auto. 1986, 2, 171–179. [Google Scholar]

- Bennett, D.J.; Hollerbach, J.M. Autonomous calibration of single-loop closed kinematic chains formed by manipulators with passive endpoint constraints. IEEE Trans. Robot. Auto. 1991, 7, 597–606. [Google Scholar]

- Kang, H.-J.; Jeong, J.-W.; Shin, S.-W.; Suh, Y.-S.; Ro, Y.-S. Autonomous kinematic calibration of the robot manipulator with a linear laser-vision sensor. Lect. Notes Comput. Sci. 2007, 4682, 1102–1109. [Google Scholar]

| Position Error | Δx [mm] | Δy [mm] | Δz [mm] |

|---|---|---|---|

| Average value | Δx̄ = 0.0024074 | Δȳ = 0.0012843 | Δz̄ = 0.0036070 |

| Standard deviation | σx = 0.0088443 | σy = 0.0117850 | σz = 0.0086101 |

| Angle Error | Δα [deg] | Δβ [deg] | Δγ [deg] |

|---|---|---|---|

| Average value | Δᾱ = 0.0074211 | Δβ̄= -3.315 × 10-5 | Δγ̄ = 0.0063015 |

| Standard deviation | σα = 0.0319170 | σβ = 0.005819 | σγ = 0.0345080 |

| i | αi-1 | ai-1 | βi-1 | bi-1 | di | θi |

|---|---|---|---|---|---|---|

| 1 | 0 | 0 | 0 | 0 | 0.36 | θ1 |

| 2 | 90 | 0.200 | - | - | 0 | θ2 |

| 3 | 0 | 0.560 | 0 | - | 0 (×) | θ3 |

| 4 | 90 | 0.130 | - | - | 0.620 | θ4 |

| 5 | −90 | 0.0 | - | - | 0.0 | θ5 |

| 6 | 90 | 0.0 | - | - | 0.1 | θ6 (×) |

| 7 | - | 0.2 | - | - | 0.1 | φ |

| Mean Value | Std. | Max. Value | ||

|---|---|---|---|---|

| Absolute position accuracy [mm] | Before cal. | 3.6573 | 1.55090 | 7.0433 |

| After cal. | 0.12933 | 0.06618 | 0.32229 | |

| Absolute orientation accuracy about x axis: α Euler angle [deg] | Before cal. | 0.33022 | 0.18441 | 0.86927 |

| After cal. | 0.00864 | 0.00593 | 0.02649 | |

| Absolute orientation accuracy about y axis: β Euler angle [deg] | Before cal. | 0.67187 | 0.36829 | 1.4892 |

| After cal. | 0.01658 | 0.01164 | 0.0470 | |

| Absolute orientation accuracy about z axis: γ Euler angle [deg] | Before cal. | 1.4004 | 0.22133 | 1.7003 |

| After cal. | 0.01286 | 0.00962 | 0.0452 | |

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Nguyen, H.-N.; Zhou, J.; Kang, H.-J. A New Full Pose Measurement Method for Robot Calibration. Sensors 2013, 13, 9132-9147. https://doi.org/10.3390/s130709132

Nguyen H-N, Zhou J, Kang H-J. A New Full Pose Measurement Method for Robot Calibration. Sensors. 2013; 13(7):9132-9147. https://doi.org/10.3390/s130709132

Chicago/Turabian StyleNguyen, Hoai-Nhan, Jian Zhou, and Hee-Jun Kang. 2013. "A New Full Pose Measurement Method for Robot Calibration" Sensors 13, no. 7: 9132-9147. https://doi.org/10.3390/s130709132