Entropy in Cell Biology: Information Thermodynamics of a Binary Code and Szilard Engine Chain Model of Signal Transduction

Abstract

1. Introduction

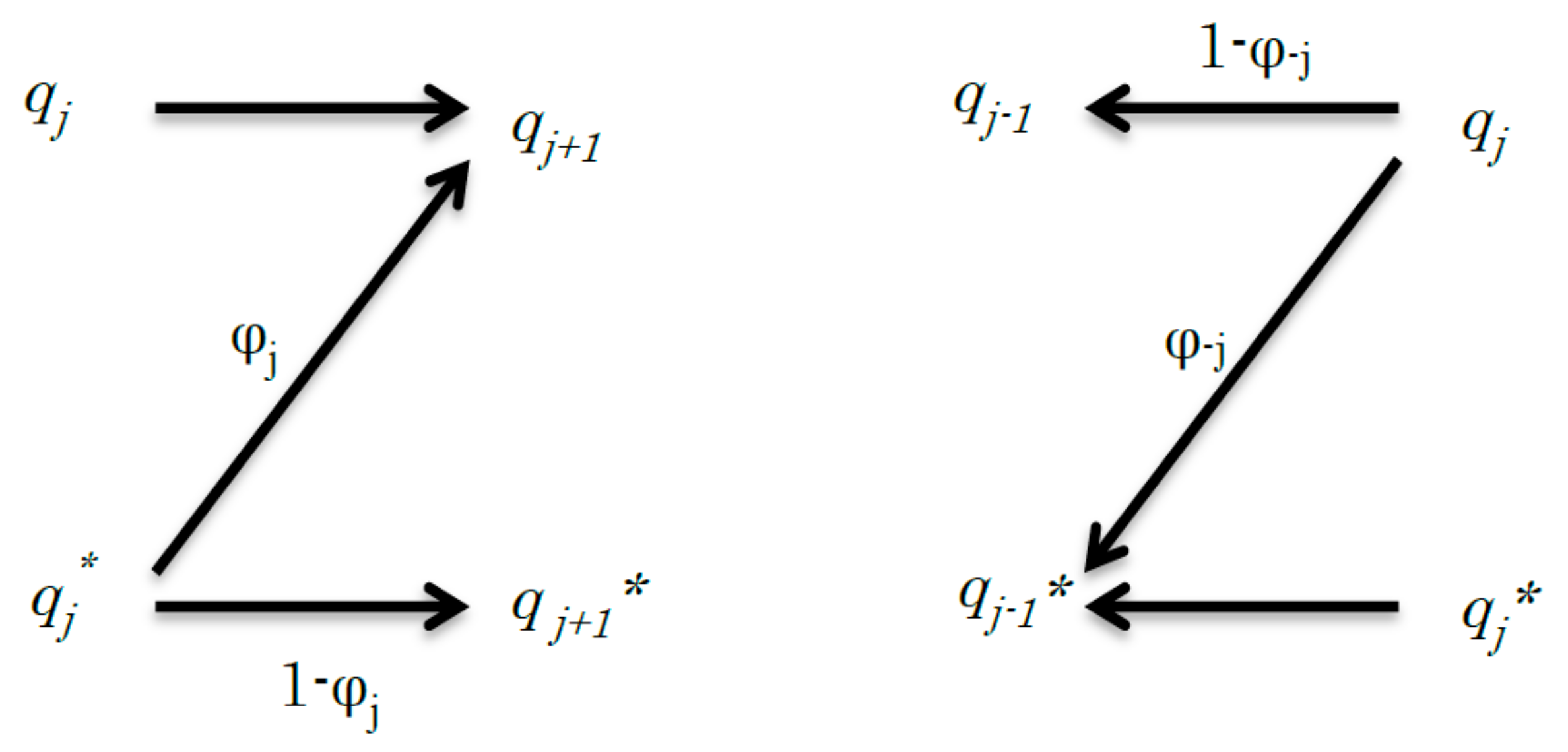

2. A Common BRC Model

3. Binary Code Model of BRCs

4. Mutual Entropy in BRCs

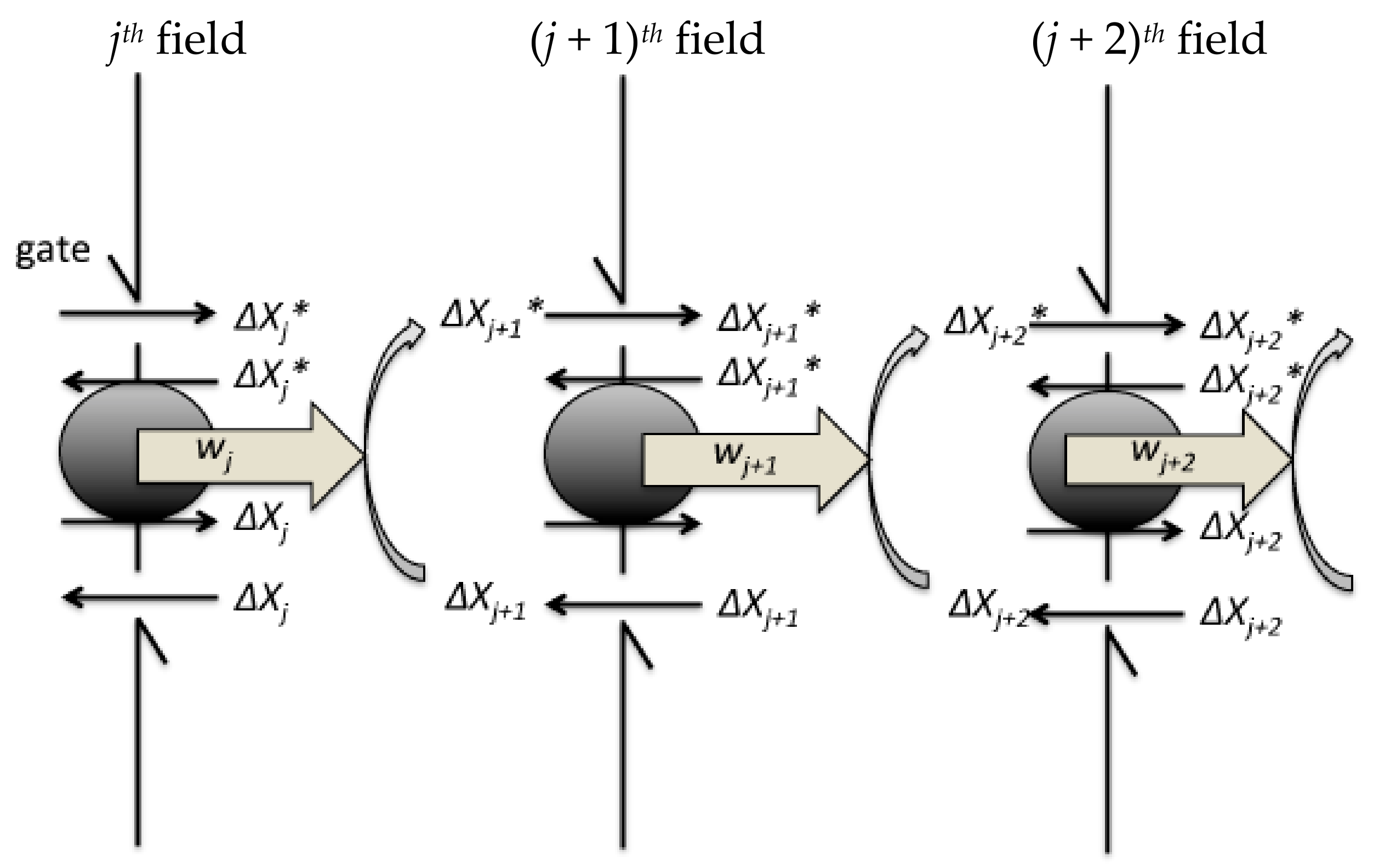

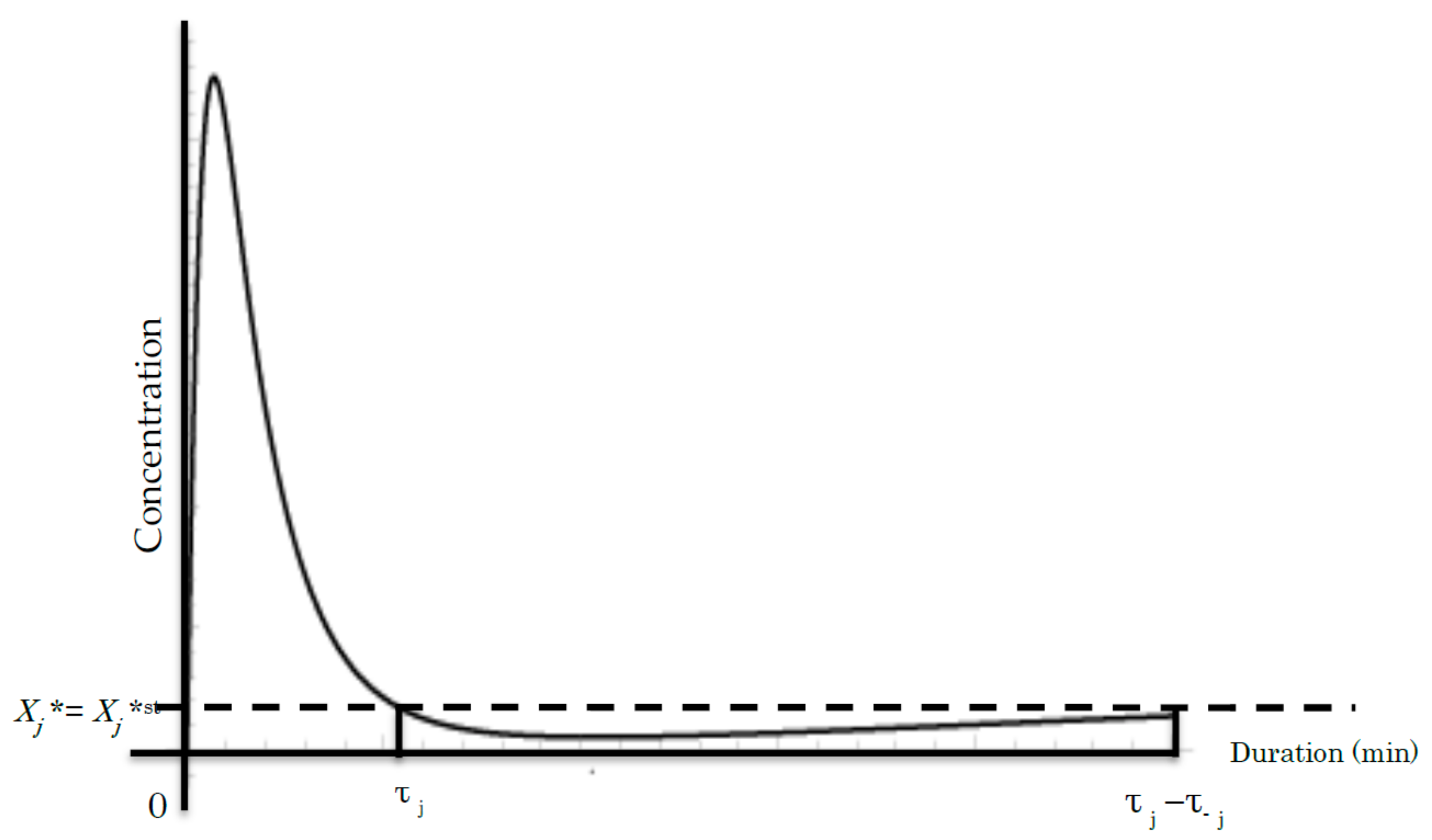

5. Szilard Engine Chain as a BRC Model

- (i) When signal transduction is initiated, the controller measures the changes in the concentrations of the active molecule $X_{j+1}^{*}$ and the inactive molecule $X_{j+1}$ in the $j$th field.

- (ii) At the $j$th step in the signalling cascade, the feedback controller introduces $\Delta X_{j+1}^{*}$ of $X_{j+1}^{*}$ to the $(j+1)$th field from the $j$th field by opening the forward gate on the boundary from the $j$th field to the $(j+1)$th field. Simultaneously, the controller introduces $\Delta X_{j+1}$ of $X_{j+1}$ to the $(j+1)$th field from the $j$th field by opening the back gate on the boundary.

- (iii) Subsequently, $X_{j+1}^{*}$ can flow back with the forward transfer of $X_{j+1}$ from the $(j+1)$th field to the $j$th field because of the entropy difference (see Equation (13)). $X_{j+1}$ can also flow back with the backward transfer of $X_{j+1}$ from the $(j+1)$th field to the $j$th field because of the concentration gradient.

- (iv) In (iii), $\Delta X_{j+1}^{*}$ and $\Delta X_{j+1}$ can quasi-statically rotate the exchange machinery on the hypothetical partition between the $j$th and $(j+1)$th fields, which can extract chemical work equivalent to $w_{j+1} = k_{B} T h_{j+1}$.

- (v) In the next step, $w_{j+1}$ is linked to the modification of $X_{j+2}$ into $X_{j+2}^{*}$, which in turn produces the concentration difference of $X_{j+2}^{*}$ that the feedback controller introduces from the $(j+1)$th field to the $(j+2)$th field. The cascade then proceeds as described in (ii) and (iii).
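The work relation in step (iv), w_{j+1} = k_B T h_{j+1}, can be illustrated with a minimal numerical sketch. It assumes that each h is a dimensionless information quantity expressed in nats and that the caller supplies one value per step; the function names `step_work` and `chain_work`, the example h values, and the temperature of 310 K are all hypothetical choices made for illustration and are not taken from the article.

```python
import math

# Boltzmann constant in J/K (exact value in the 2019 SI definition).
K_B = 1.380649e-23


def step_work(h, temperature):
    """Chemical work w = k_B * T * h for a single step of the chain.

    Assumption: h is dimensionless and measured in nats.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    if h < 0:
        raise ValueError("h must be non-negative")
    return K_B * temperature * h


def chain_work(h_values, temperature=310.0):
    """Work extracted at each step of a chain, one entry per h value.

    Assumption: the default of 310 K is only an illustrative temperature.
    """
    return [step_work(h, temperature) for h in h_values]


# Hypothetical h values for a three-step cascade. The first entry, ln 2,
# corresponds to one bit, the classic single-cycle Szilard engine case.
example_h = [math.log(2), 0.5, 0.25]
for step, w in enumerate(chain_work(example_h), start=1):
    print(f"step {step}: w = {w:.3e} J")
```

For h = ln 2 the sketch reduces to k_B T ln 2, the familiar maximum work of a one-bit Szilard engine, which makes it a convenient sanity check on the units.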

6. Conservation of the Average Entropy Production

7. Conclusions

- (i) The BRC can be expressed as a binary code system consisting of two types of signalling molecules: activated and inactivated.

- (ii) Each individual reaction step of the BRC can be regarded as one cycle of a Szilard engine chain, in which the activation and inactivation of signalling molecules repeat.

- (iii) The average entropy production rate is conserved throughout the BRC.

- (iv) The amount of signal transduction can be calculated through the BRC.

Funding

Acknowledgments

Conflicts of Interest


© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tsuruyama, T. Entropy in Cell Biology: Information Thermodynamics of a Binary Code and Szilard Engine Chain Model of Signal Transduction. Entropy 2018, 20, 617. https://doi.org/10.3390/e20080617
