In this section, we compare the newly proposed

,

with SFCM [25], SSCM [25], NLSFCM [16] and NLSSCM [16] on several synthetic and real images. For our clustering ensemble approaches, we directly use SFCM, SSCM, NLSFCM and NLSSCM to generate the basic partitions. Since r is the number of these heterogeneous center-based clustering algorithms, here we have r = 4. More specifically, the

and

incorporate the membership matrices produced by SFCM, SSCM, NLSFCM and NLSSCM to obtain their soft partitions. The fuzzification coefficient

m is set to 2 for all the algorithms in our experiments. The size of square window

of

is set to

. More settings and their justifications are provided in the following discussion section.
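All four basic partitions come from center-based fuzzy algorithms run with m = 2. As a reference point, the sketch below shows only the standard FCM membership and center updates for scalar intensities with a general m. The local or nonlocal spatial terms that distinguish SFCM, SSCM, NLSFCM and NLSSCM, and the way the proposed ensembles combine the r = 4 membership matrices, are not reproduced; the function names are hypothetical.

```python
def fcm_memberships(pixels, centers, m=2.0, eps=1e-12):
    """Standard FCM membership update for scalar intensities.

    u[k][j] = 1 / sum_l (d_kj / d_lj) ** (2 / (m - 1)),  with d_kj = |x_j - v_k|.
    """
    c = len(centers)
    u = [[0.0] * len(pixels) for _ in range(c)]
    for j, x in enumerate(pixels):
        # eps keeps the ratio finite when a pixel coincides with a center
        d = [abs(x - v) + eps for v in centers]
        for k in range(c):
            u[k][j] = 1.0 / sum((d[k] / d[l]) ** (2.0 / (m - 1.0)) for l in range(c))
    return u


def fcm_centers(pixels, u, m=2.0):
    """Center update: v_k = sum_j u_kj^m x_j / sum_j u_kj^m."""
    centers = []
    for row in u:
        w = [ukj ** m for ukj in row]
        centers.append(sum(wj * x for wj, x in zip(w, pixels)) / sum(w))
    return centers


def fcm(pixels, centers, m=2.0, iters=50):
    """Alternate the two updates for a fixed number of iterations."""
    for _ in range(iters):
        u = fcm_memberships(pixels, centers, m)
        centers = fcm_centers(pixels, u, m)
    # final memberships are recomputed from the final centers
    return fcm_memberships(pixels, centers, m), centers


if __name__ == "__main__":
    u, v = fcm([0.0, 0.1, 0.9, 1.0], centers=[0.2, 0.8])
    print([round(c, 3) for c in v])
```

With m = 2 the exponent 2/(m − 1) equals 2, so each membership is an inverse-squared-distance ratio; larger m would make the partition fuzzier.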

The segmentation accuracy (SA) of algorithm

i on class

j is measured as

where

denotes the set of pixels belonging to class

j that are found by algorithm

i, and

denotes the set of pixels in class

j in the reference segmented image. Note that a soft partition produced by a fuzzy approach requires a defuzzification step to assign each pixel to a segment. We use maximum-membership defuzzification: once the membership matrix is obtained, each pixel (data point) is assigned to the cluster in which it has the largest membership value.
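The maximum-membership rule and a per-class SA can be sketched as follows. Because the SA equation itself is not reproduced in this text, the sketch assumes the Jaccard-style form SA = |A ∩ R| / |A ∪ R|, where A is the set of pixels an algorithm assigns to a class and R is the corresponding reference set. It also assumes cluster indices have already been matched to reference class labels (for example, by sorting cluster centers by intensity). The function names are hypothetical.

```python
def defuzzify(u):
    """Maximum-membership rule: u[k][j] is the membership of pixel j in cluster k."""
    c, n = len(u), len(u[0])
    # ties go to the lowest cluster index, because max() keeps the first maximum
    return [max(range(c), key=lambda k: u[k][j]) for j in range(n)]


def segmentation_accuracy(labels, reference, cls):
    """Per-class SA, ASSUMING SA = |A ∩ R| / |A ∪ R|.

    A: pixels the algorithm assigns to class `cls`.
    R: pixels of class `cls` in the reference segmentation.
    """
    found = {j for j, lab in enumerate(labels) if lab == cls}
    truth = {j for j, lab in enumerate(reference) if lab == cls}
    union = found | truth
    # assumed convention: a class absent from both counts as perfectly segmented
    return len(found & truth) / len(union) if union else 1.0


if __name__ == "__main__":
    u = [[0.9, 0.2, 0.6], [0.1, 0.8, 0.4]]
    labels = defuzzify(u)
    print(labels, segmentation_accuracy(labels, [0, 1, 1], cls=1))  # [0, 1, 0] 0.5
```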

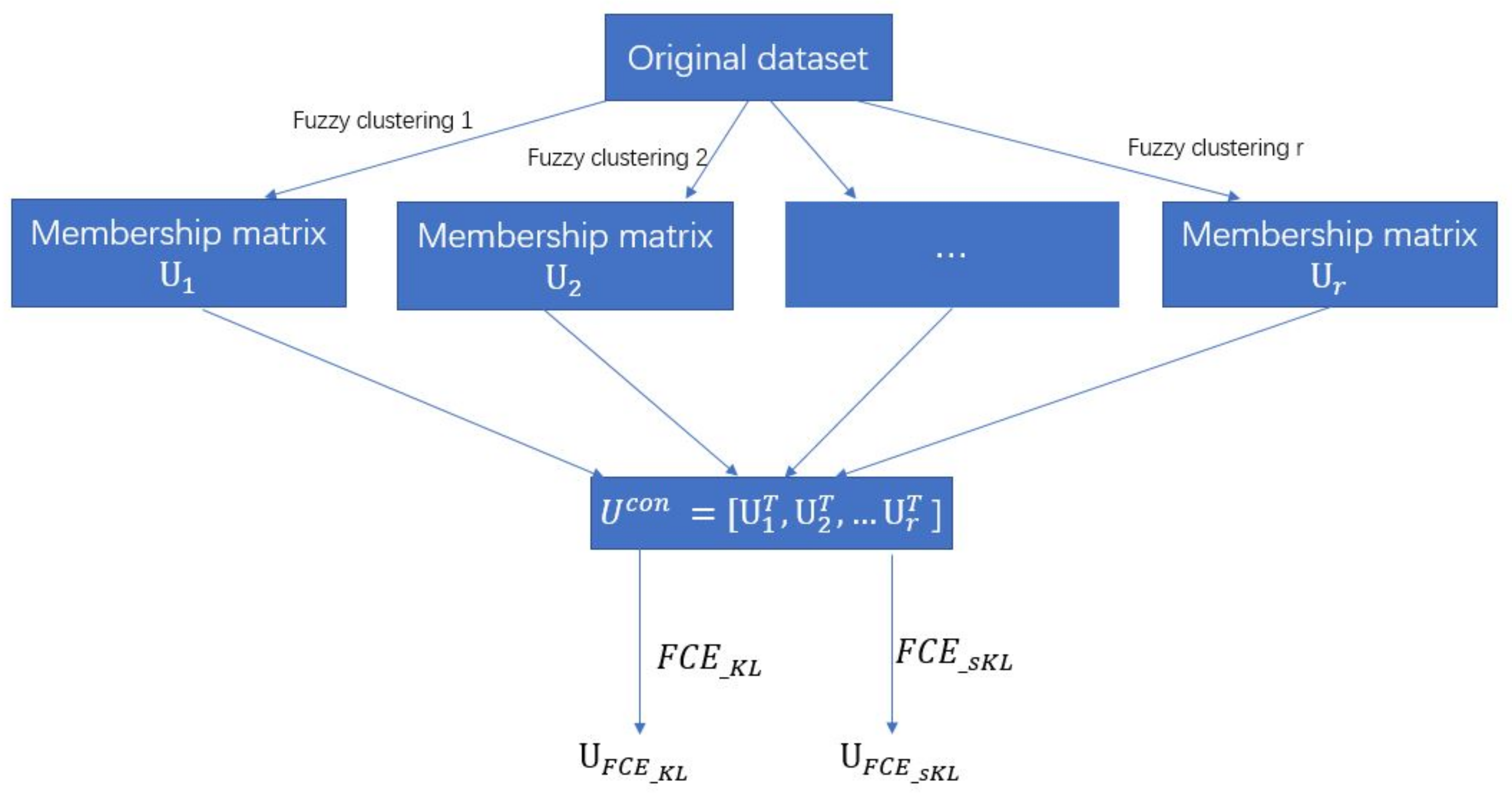

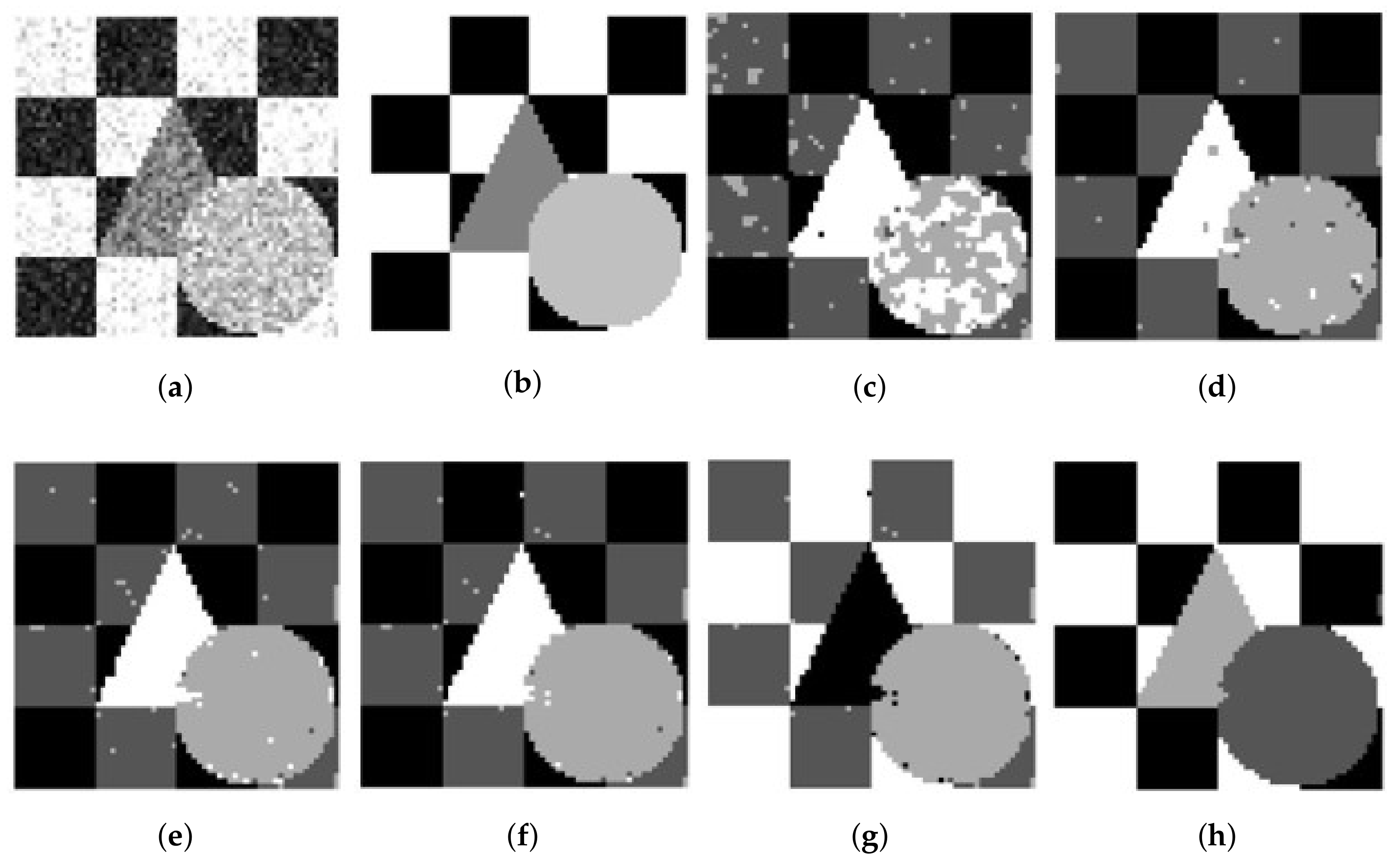

4.1. Synthetic Images

We first test on a synthetic two-value image, the synthetic image ‘Trin’ and synthetic magnetic resonance (MR) images. The synthetic two-cluster image shown in Figure 2b, with values 1 and 0, is similar to the image used in [14]. Its size is set to

pixels. The synthetic image of ‘Trin’ contains four regions and its size is set to

pixels. Both synthetic images are corrupted separately by Gaussian noise and by Rician noise.
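The two noise models can be sketched as below. Gaussian noise is additive, while Rician noise is the magnitude of a signal whose real and imaginary channels each receive independent Gaussian noise. How a percentage such as "50% Gaussian noise" maps to a standard deviation is not stated here; the sketch assumes σ = level × (maximum image intensity), and the function names are hypothetical.

```python
import math
import random


def _sigma(img, level):
    # ASSUMED convention: noise std is `level` times the brightest intensity
    return level * max(max(row) for row in img)


def add_gaussian_noise(img, level, rng):
    """Additive zero-mean Gaussian noise on a 2-D list of intensities."""
    s = _sigma(img, level)
    return [[p + rng.gauss(0.0, s) for p in row] for row in img]


def add_rician_noise(img, level, rng):
    """Rician noise: |(p + n1) + i*n2| with n1, n2 ~ N(0, s^2) independent."""
    s = _sigma(img, level)
    return [[math.hypot(p + rng.gauss(0.0, s), rng.gauss(0.0, s)) for p in row]
            for row in img]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so every method sees the same noisy image
    clean = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    noisy = add_rician_noise(clean, 0.5, rng)
    print(min(min(r) for r in noisy) >= 0.0)  # True: Rician magnitudes are non-negative
```

Fixing the random seed is one simple way to guarantee that all six methods are evaluated on an identical noisy image.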

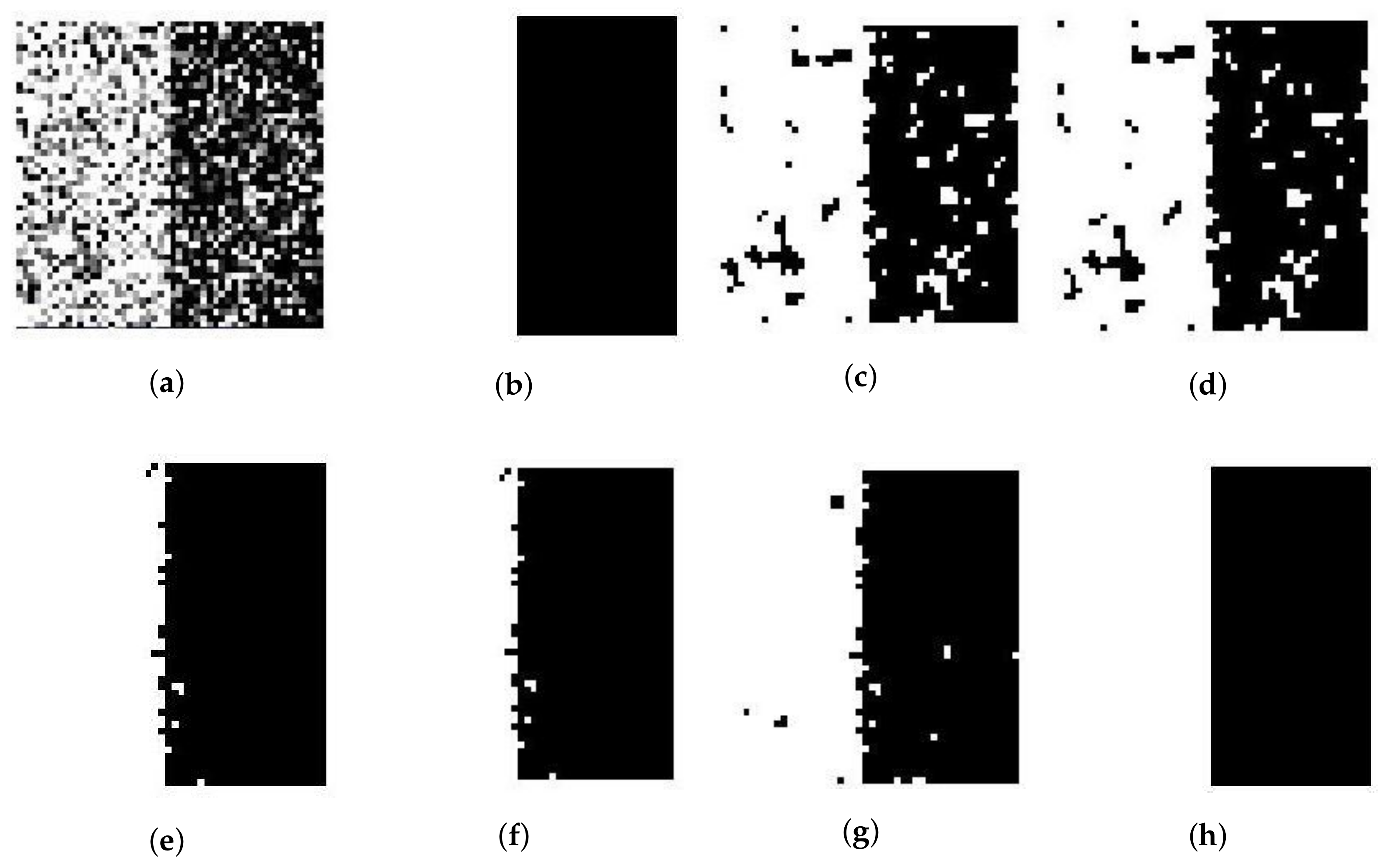

Figure 2 and Figure 3a present the synthetic two-value image with 50% Gaussian noise and 50% Rician noise, respectively.

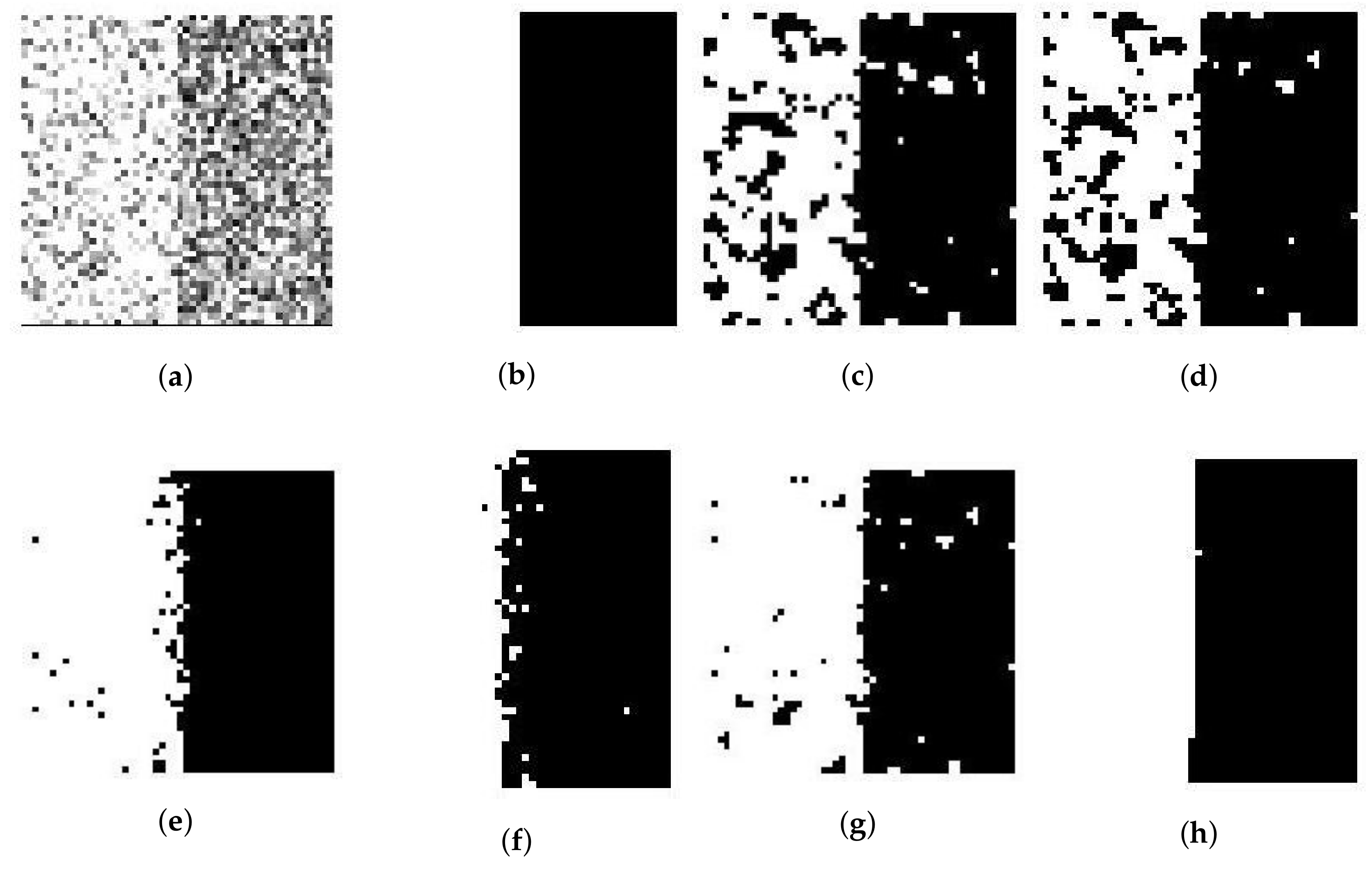

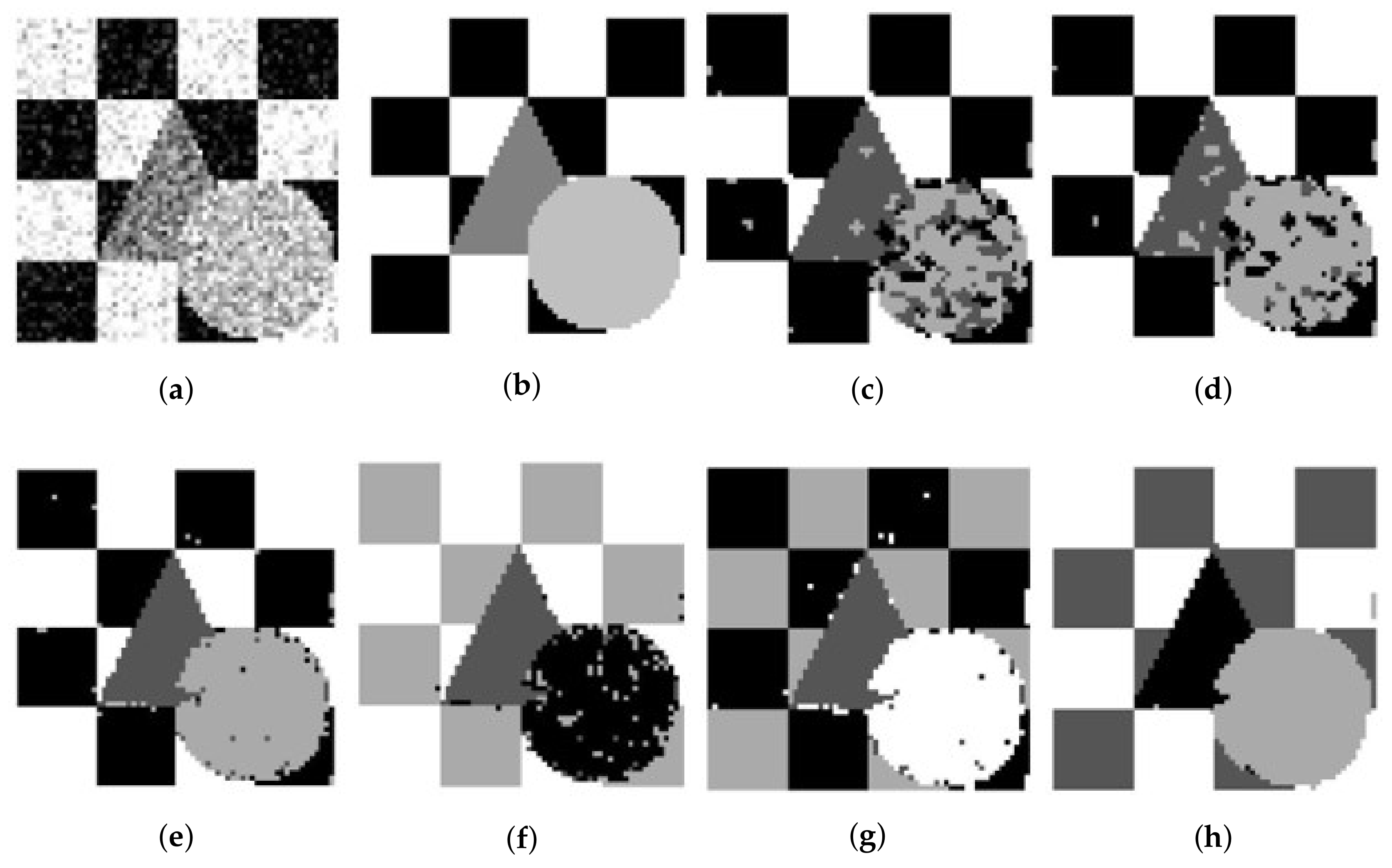

Figure 4 and Figure 5a show ‘Trin’ with 15% Gaussian noise and 12% Rician noise, respectively. For these two types of noisy images, Table 2 and Table 3 show the segmentation accuracies (SAs) of the six methods. For a fair comparison, all methods are run on exactly the same images (the same size and the same noise).

For the synthetic two-value image, Table 2 shows that the performance of

and

is as good as that of SFCM, SSCM, NLSFCM and NLSSCM at low noise levels, which means that all of them can easily remove low-level noise from this image. When the Gaussian noise reaches 30% and 50%,

can segment the image better than SFCM and SSCM but worse than NLSFCM and NLSSCM.

eliminates noise more effectively than the other five methods on heavily noised images, which demonstrates its robustness.

The fuzzy clustering methods SFCM and SSCM adjust the memberships using local image information, whereas NLSFCM and NLSSCM update the membership values using nonlocal information. Consequently, all four methods are sensitive to the neighborhood size and the weight values used when exploiting local or nonlocal information. In addition, NLSSCM and NLSFCM are much more sensitive to the initial values of the iteration than SFCM, SSCM and the proposed methods. The simple settings of SFCM and SSCM, together with their robustness to initialization, may explain why some of our SFCM and SSCM results coincide with those reported in [25].
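To illustrate why the neighborhood size matters, the sketch below reweights memberships with a local spatial function in the style of the spatial FCM of Chuang et al.: each membership is multiplied by the sum of that cluster's memberships over a square window and then renormalized. Whether SFCM in [25] uses exactly this form is an assumption; the function name and the exponents p and q are illustrative.

```python
def spatial_memberships(u, window=3, p=1, q=1):
    """Reweight memberships with a local spatial function (Chuang-style, ASSUMED).

    u[k][y][x] is the membership of pixel (y, x) in cluster k. For each pixel,
    h_k sums u[k] over a `window` x `window` neighborhood (clipped at the border),
    and the new membership is u_k^p * h_k^q normalised over clusters.
    """
    c, H, W = len(u), len(u[0]), len(u[0][0])
    r = window // 2
    out = [[[0.0] * W for _ in range(H)] for _ in range(c)]
    for y in range(H):
        for x in range(W):
            rows = range(max(0, y - r), min(H, y + r + 1))
            cols = range(max(0, x - r), min(W, x + r + 1))
            scores = []
            for k in range(c):
                h = sum(u[k][yy][xx] for yy in rows for xx in cols)
                scores.append(u[k][y][x] ** p * h ** q)
            total = sum(scores)
            for k in range(c):
                out[k][y][x] = scores[k] / total
    return out


if __name__ == "__main__":
    # 3x3 block of cluster 0 with one noisy centre pixel that leans to cluster 1
    u0 = [[0.9, 0.9, 0.9], [0.9, 0.4, 0.9], [0.9, 0.9, 0.9]]
    u1 = [[1.0 - v for v in row] for row in u0]
    smoothed = spatial_memberships([u0, u1])
    print(round(smoothed[0][1][1], 3))  # 0.784: the centre now leans to cluster 0
```

A larger window pulls isolated pixels more strongly toward their surroundings but also blurs thin structures, which is one reason results depend on the chosen neighborhood size.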

On the other hand, our results for NLSFCM and NLSSCM differ from those in [16], possibly because we chose a different nonlocal window size, a different initialization, or a different computation of the nonlocal weights, which depend strongly on the similarity measure between patches in the nonlocal window. Some of our NLSSCM and NLSFCM results are better than those in [16] and some are worse; however, the differences are small. Therefore, the superiority of the proposed approach demonstrated in Table 2 still holds.
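The dependence on the patch similarity measure can be seen in a sketch of nonlocal-means-style weights: each pixel in a search window is weighted by exp(−d²/h²), where d² is the mean squared difference between its patch and the reference pixel's patch. This classic nonlocal means form is used here only as an assumption; the exact weights in [16], and the hypothetical parameters `search`, `r` and `h`, may differ. A nonlocal membership update would then average memberships with these weights.

```python
import math


def patch(img, y, x, r):
    """Square patch of radius r around (y, x), with edge replication."""
    H, W = len(img), len(img[0])
    return [img[min(max(yy, 0), H - 1)][min(max(xx, 0), W - 1)]
            for yy in range(y - r, y + r + 1)
            for xx in range(x - r, x + r + 1)]


def nonlocal_weights(img, y, x, search=2, r=1, h=0.5):
    """Normalised NL-means-style weights over the search window of (y, x).

    The centre pixel is included with raw weight exp(0) = 1; some variants
    treat it differently.
    """
    H, W = len(img), len(img[0])
    ref = patch(img, y, x, r)
    raw = {}
    for yy in range(max(0, y - search), min(H, y + search + 1)):
        for xx in range(max(0, x - search), min(W, x + search + 1)):
            other = patch(img, yy, xx, r)
            dist2 = sum((a - b) ** 2 for a, b in zip(ref, other)) / len(ref)
            raw[(yy, xx)] = math.exp(-dist2 / h ** 2)
    z = sum(raw.values())
    return {pos: w / z for pos, w in raw.items()}


if __name__ == "__main__":
    img = [[0.0, 0.0, 0.0, 1.0, 1.0] for _ in range(5)]
    w = nonlocal_weights(img, 2, 0)
    # a neighbour with an identical patch outweighs one whose patch touches the edge
    print(w[(2, 1)] > w[(2, 2)])  # True
```

Changing `r`, `h` or the distance itself reshapes these weights, which is consistent with the observation that NLSFCM and NLSSCM results vary across implementations.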

For the synthetic image ‘Trin’, Table 3 demonstrates that the individual clustering methods (SFCM, SSCM, NLSFCM and NLSSCM) deteriorate dramatically as the noise rate increases. However,

and

can stop this trend and improve the performance.

outperforms the individual clustering methods on this image with Gaussian noise, except at the 15% and 30% levels.

removes Rician noise more effectively than SFCM, SSCM, NLSFCM and NLSSCM at every noise level. It is evident that

can handle this image with heavy Gaussian and Rician noise more easily than the other five methods. For visual comparison, the segmentation results of all six methods on the noisy two-value image and on ‘Trin’ are shown in Figure 2, Figure 3, Figure 4 and Figure 5. All of these figures illustrate that

behaves better than the other five methods.

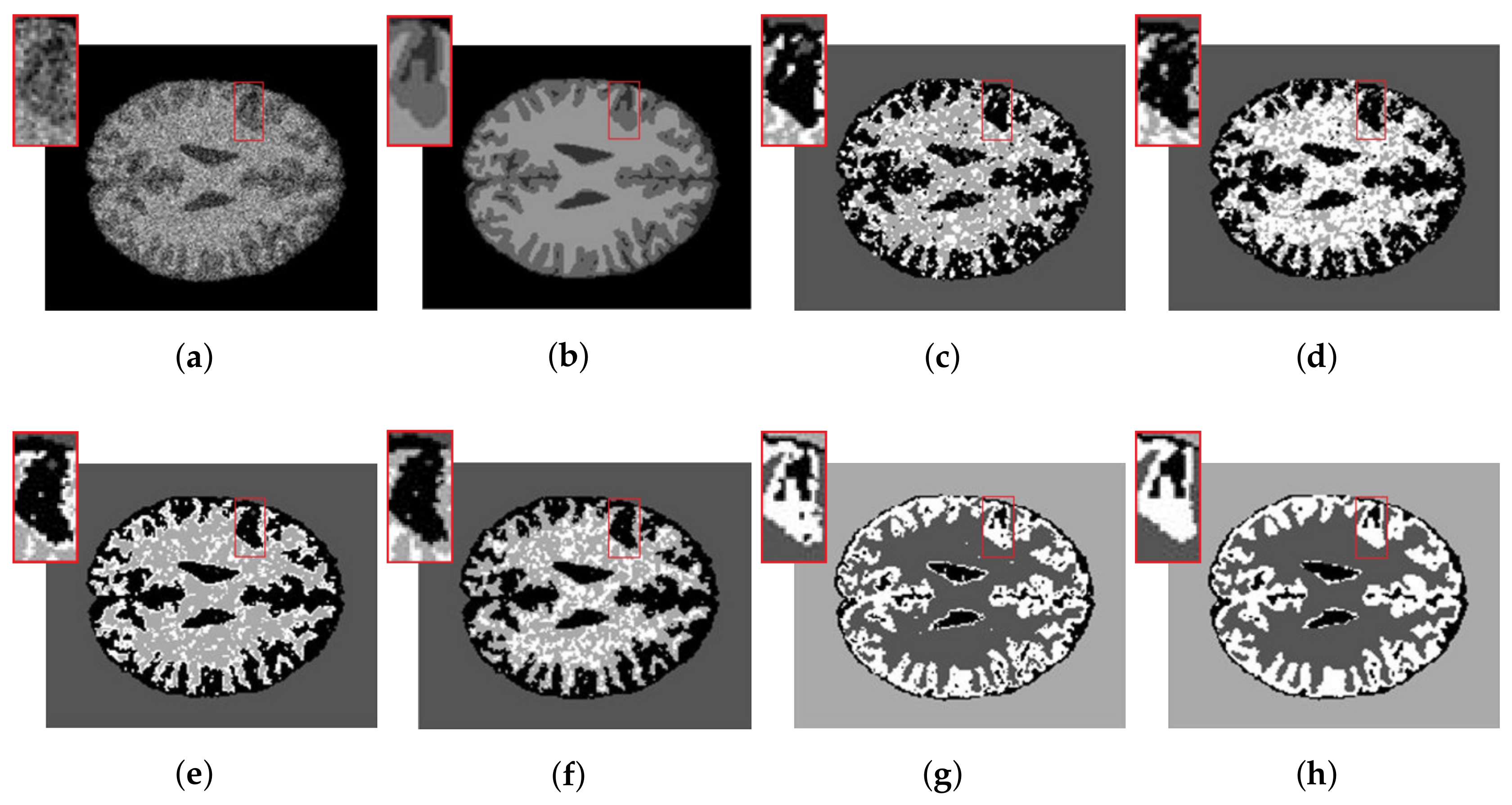

The synthetic MR images of the human brain and their reference segmentations are provided by [30]. They are T1-weighted MR phantoms with a slice thickness of 1 mm and no intensity inhomogeneity, corrupted by Rician noise at rates ranging from 25% to 50%. Since the ground truth of the synthetic MR images is available, we report the SAs of the six methods in Table 4. Both

and

segment these images better than SFCM, SSCM, NLSFCM and NLSSCM. Furthermore, according to the SAs, the superiority of

can be verified easily.

Figure 6a presents the synthetic MR image with 32% Rician noise, together with a zoomed view of the region highlighted by the red rectangle. The enlarged red-rectangle regions of the segmentation results reveal that

and

can remove noise better and obtain smoother regions than the other four methods.

behaves better than

because the latter still produces several misclassified pixels.

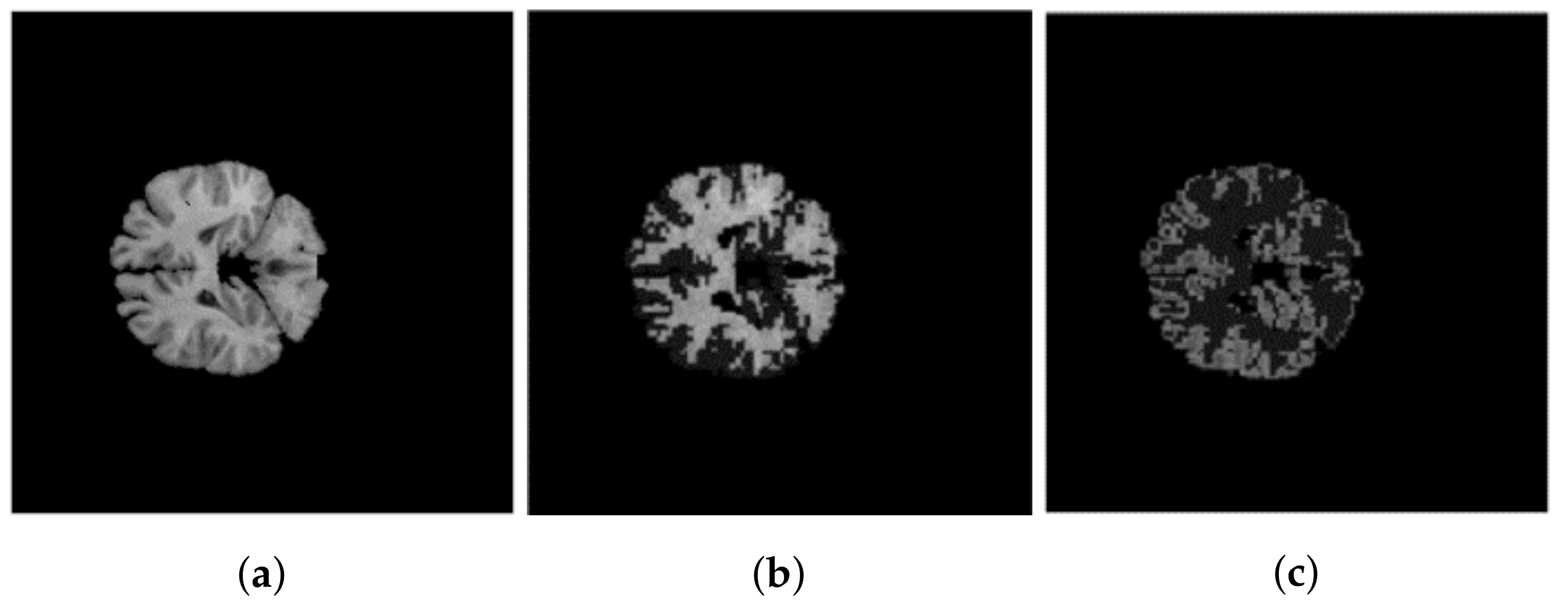

4.2. Real Images

The first real image is an MR brain image from the Internet Brain Segmentation Repository (IBSR) database [31]. This image should be partitioned into three regions corresponding to cerebrospinal fluid (CSF), white matter (WM) and gray matter (GM). As mentioned in [32], one common approach to MR brain image segmentation involves two parts: classification, and identification of all voxels belonging to a specific structure. Since the CSF in the center of the brain is a continuous volume, it can be segmented well by a contour-mapping algorithm. Therefore, this paper focuses on segmenting WM and GM.

Figure 7 illustrates one noise-free sample. Using the ground truth of the IBSR image, the SAs of the different methods on images with 12%, 15% and 18% Rician noise are shown in Table 5, Table 6 and Table 7, in which

denotes the SA for the WM cluster, and

denotes the SA for the GM cluster. Table 5, Table 6 and Table 7 demonstrate the excellent performance of

across all noise levels.

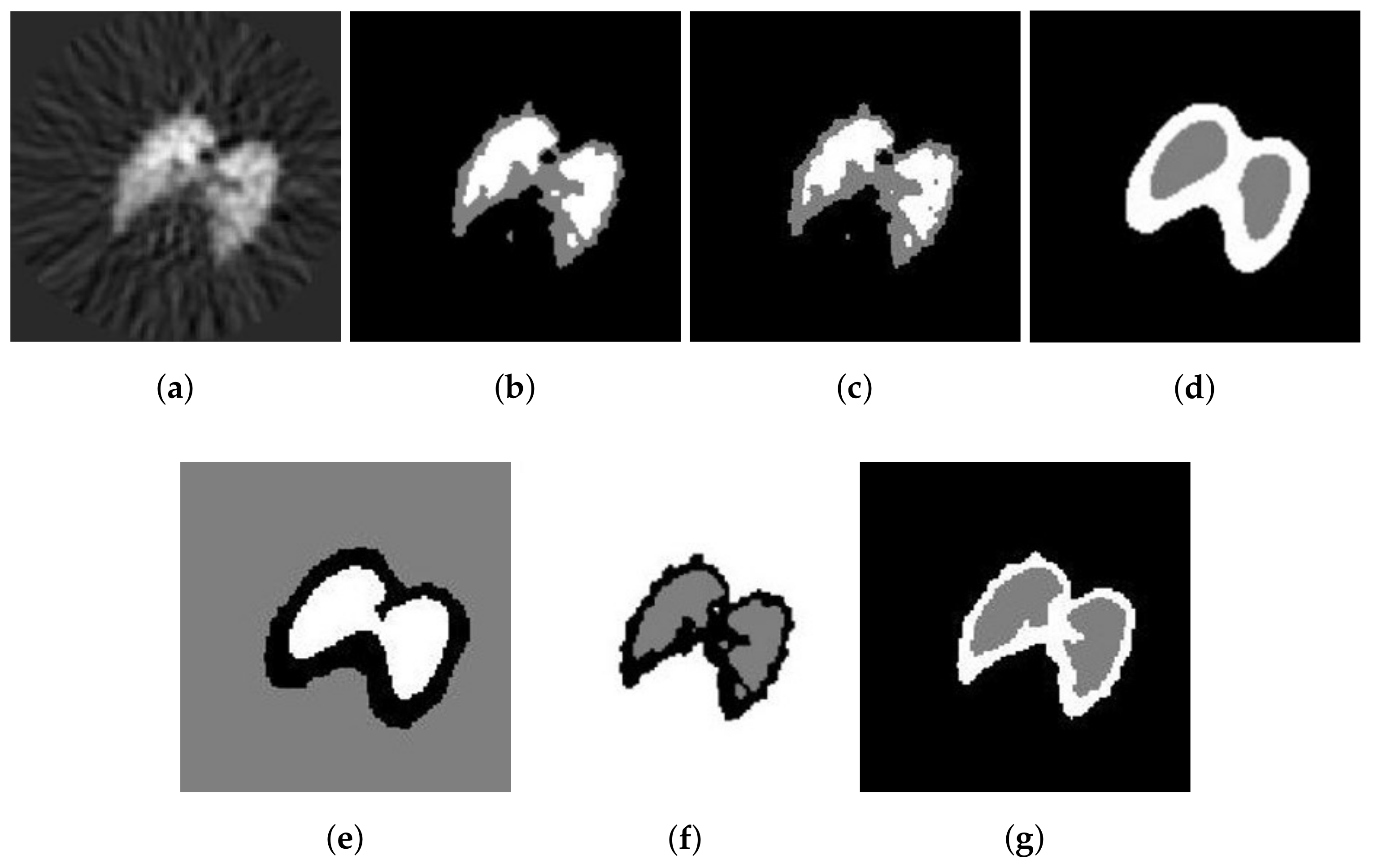

The second real image is a positron emission tomography (PET) lung image of a dog, shown in Figure 8a, with

pixels. No reference segmentation is available for this image, so the segmentation results of the six methods, shown in Figure 8b–g, are compared visually. The results obtained by SFCM and SSCM contain many flakes, whereas NLSFCM and NLSSCM are more robust to noise but ignore some details of the lung. Figure 8f,g reveal that

and

outperform the other four methods.

behaves better than

, because

still produces several misclassified pixels.

Another medical image, shown in Figure 9b, is a

image of a healthy bone, to which we add 10% Gaussian noise. Figure 9 shows clearly that the performance of

is better than the other five methods.

can remove noise in the image and obtain more homogeneous regions.

The last real image, shown in Figure 10a, is a

image of horses. As Figure 10 shows, the results of SFCM and NLSFCM contain many misclassified pixels. In contrast, the segmentation results obtained by

and

have fewer flakes in the lower-left corner than the other four methods.

and

can segment the horses well from the background.