1. Introduction

Axiomatic approaches to physics are useful for exploring the conceptual basis and logical structure of a theory. One classic example was presented by Robin Giles over fifty years ago in his monograph Mathematical Foundations of Thermodynamics [1]. His theory is constructed upon three phenomenological concepts: thermodynamic states, an operation (+) that combines states into composites, and a relation (→) describing possible state transformations. From a small number of basic axioms, Giles derives a remarkable amount of thermodynamic machinery, including conserved quantities (“components of content”), the existence of an entropy function that characterizes irreversibility for possible processes, and so on.

Alternative axiomatic developments of thermodynamics have been constructed by others along different lines. One notable example is the framework of Lieb and Yngvason [2,3] (which has recently been used by Thess as the basis for a textbook [4]). Giles’s abstract system, meanwhile, has found application beyond the realm of classical thermodynamics, e.g., in the theory of quantum entanglement [5].

Other work on the foundations of thermodynamics has focused on the concept of information. Much of this has been inspired by Maxwell’s famous thought-experiment of the demon [6]. The demon is an entity that can acquire and use information about the microscopic state of a thermodynamic system, producing apparent violations of the Second Law of Thermodynamics. These violations are only “apparent” because the demon is itself a physical system, and its information processes are also governed by the underlying dynamical laws.

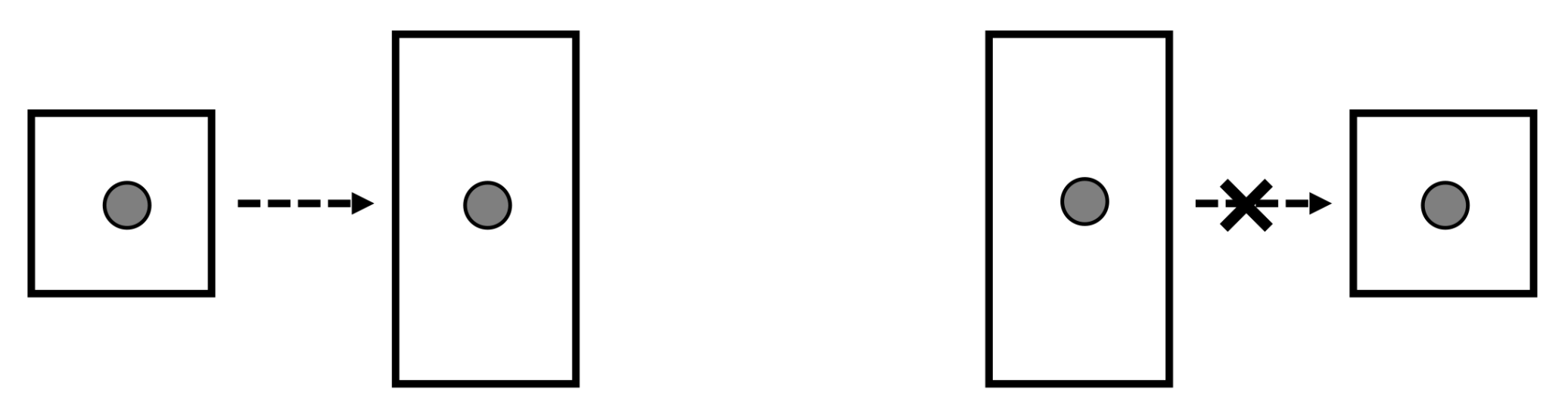

Let us examine a highly simplified example of the demon at work. Our thermodynamic system is a one-particle gas enclosed in a container (a simple system also used in [7]). The gas may be allowed to freely expand into a larger volume, but this process is irreversible. A “free compression” process that took the gas from a larger to a smaller volume with no other net change would decrease the entropy of the system and contradict the Second Law. See Figure 1.

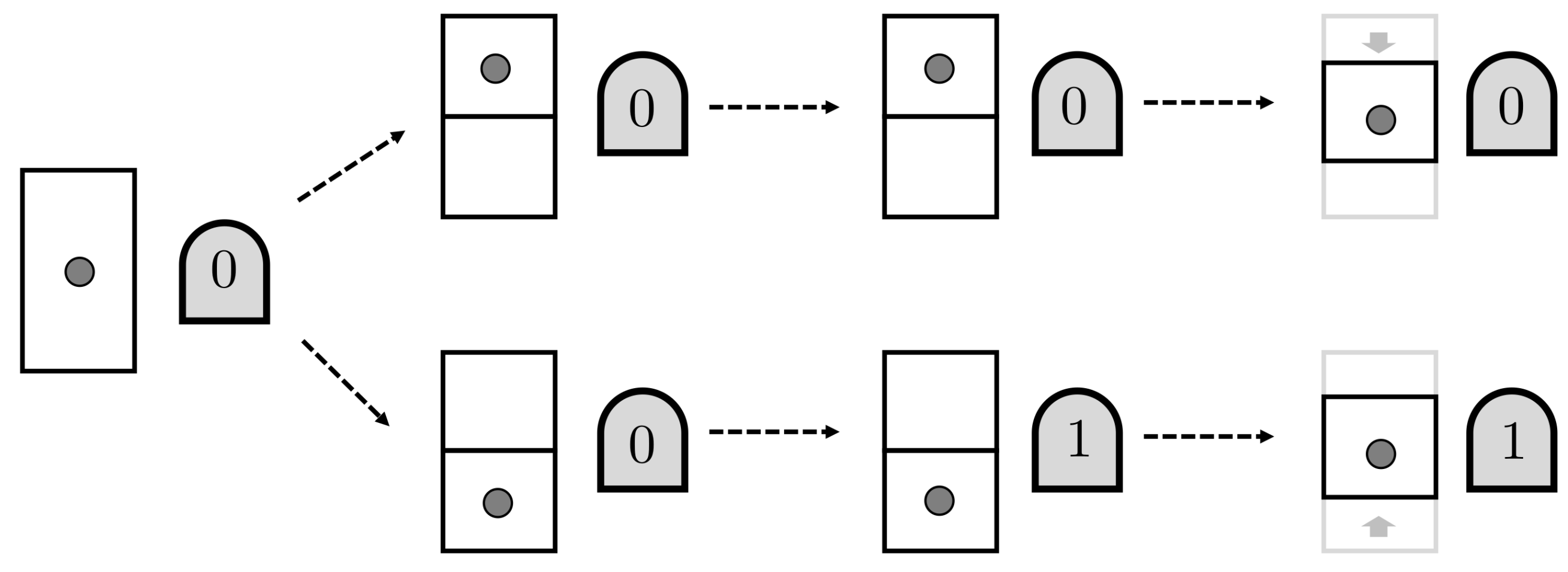

Now, we introduce the demon, which is a machine that can interact with the gas particle and acquire information about its location. The demon contains a one-bit memory register, initially in the state 0. First, a partition is introduced in the container, so that the gas particle is confined to the upper or lower half (U or L). The demon measures the gas particle and records its location in memory, with 0 standing for U and 1 for L. On the basis of this memory bit value, the demon moves the volume containing the particle. In the end, the gas particle is confined to one particular smaller volume, apparently reducing the entropy of the system. This is illustrated in Figure 2.

As Bennett pointed out [8], every demon operation we have described can be carried out reversibly, with no increase in global entropy. Even the “measurement” process can be described by a reversible interaction between the particle location and the demon’s memory bit b, changing the states according to

$$ (U, b) \to (U, b), \qquad (L, b) \to (L, \bar{b}), $$

where $\bar{b}$ is the binary negation of b. However, the demon process as described leaves the demon in a new situation, since an initially blank memory register now stores a bit of information. To operate in a cycle (and thus unambiguously violate the Second Law), the demon must erase this bit and recover the blank memory. But, as Landauer showed [9], the erasure of a bit of information is always accompanied by an entropy increase of at least $k_B \ln 2$ in the surroundings, just enough to ensure that there is no overall entropy decrease in the demon’s operation. The Second Law remains valid.

Information erasure is a physical process. The demon we have described can also erase a stored bit simply by reversing the steps outlined. Depending on the value of the bit register, the demon moves the gas particle to one of two corresponding regions separated by a partition. The same interaction that accomplished the “measurement” process (which takes $(U, 0) \to (U, 0)$ and $(L, 0) \to (L, 1)$) can now be used to reset the bit value to 0 (taking $(U, 0) \to (U, 0)$ and $(L, 1) \to (L, 0)$). In other words, the information stored redundantly in both the gas and the register is “uncopied”, so that it remains only in the gas. Finally, the partition is removed and the gas expands to the larger volume. The net effect is to erase the memory register while increasing the entropy of the gas by an amount $k_B \ln 2$, in conventional units.
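The reversible “measurement” interaction described above acts like a controlled-NOT gate on the pair (particle position, memory bit). The Python sketch below uses an illustrative encoding and function names that are assumptions, not part of the source. It checks that the map permutes the four joint states and is its own inverse, which is why the same interaction can both copy the bit and “uncopy” it, and it evaluates $k_B \ln 2$ using the exact SI value of $k_B$.

```python
import math

# Illustrative encoding (an assumption): particle position "U" or "L",
# memory bit 0 or 1, with 0 standing for U and 1 for L as in the text.
STATES = [(pos, bit) for pos in ("U", "L") for bit in (0, 1)]


def interact(pos: str, bit: int) -> tuple:
    """Controlled-NOT interaction: (U, b) -> (U, b), (L, b) -> (L, not b)."""
    if pos == "L":
        return (pos, 1 - bit)
    return (pos, bit)


def is_bijection(f) -> bool:
    """True if f permutes the four joint states (no state is lost or merged)."""
    return sorted(f(*s) for s in STATES) == sorted(STATES)


def is_self_inverse(f) -> bool:
    """True if applying f twice returns every joint state to itself."""
    return all(f(*f(*s)) == s for s in STATES)


K_B = 1.380649e-23                     # Boltzmann constant, J/K (exact in the 2019 SI)
LANDAUER_ENTROPY = K_B * math.log(2)   # minimum entropy increase per erased bit, J/K

if __name__ == "__main__":
    # "Measurement" from a blank register: the bit comes to record the position.
    print(interact("U", 0), interact("L", 0))                 # ('U', 0) ('L', 1)
    # "Uncopying": the same interaction resets the register to 0.
    print(interact("U", 0), interact("L", 1))                 # ('U', 0) ('L', 0)
    print(is_bijection(interact), is_self_inverse(interact))  # True True
    print(f"k_B ln 2 = {LANDAUER_ENTROPY:.3e} J/K")
```

Reversibility here is just the statement that the map is a permutation of joint states; the thermodynamic cost appears only when the final partition is removed.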

Another link between thermodynamics and information comes from statistical mechanics. As Jaynes has shown [10,11], the concepts and measures of information devised by Shannon for communication theory [12] can be used in the statistical derivation of macroscopic thermodynamic properties. In a macroscopic system, we typically possess only a small amount of information about a few large-scale parameters (total energy, volume, etc.). According to Jaynes, we should therefore choose a probability distribution over microstates that maximizes the Shannon entropy, consistent with our data. That is to say, the rational probability assignment includes no information (in the Shannon sense) except that found in the macroscopic state of the system. This prescription yields the usual distributions used in statistical mechanics, from which thermodynamic properties may be derived.
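As a concrete instance of Jaynes’s prescription: when the only datum is a fixed mean energy, the standard result is that the maximum-entropy distribution has the Gibbs form $p_i \propto e^{-\beta E_i}$. The sketch below assumes a finite list of hypothetical microstate energies and finds the multiplier $\beta$ by bisection, relying on the fact that the Gibbs mean energy decreases monotonically as $\beta$ increases. It is an illustration only and plays no role in the axiomatic development that follows.

```python
import math


def gibbs(energies, beta):
    """Gibbs distribution p_i ∝ exp(-beta * E_i), computed stably by shifting exponents."""
    exponents = [-beta * e for e in energies]
    top = max(exponents)
    weights = [math.exp(x - top) for x in exponents]
    z = sum(weights)
    return [w / z for w in weights]


def mean_energy(energies, beta):
    return sum(p * e for p, e in zip(gibbs(energies, beta), energies))


def maxent_beta(energies, target, lo=-50.0, hi=50.0, tol=1e-12):
    """Find beta such that the Gibbs mean energy equals `target`.

    Assumes min(energies) < target < max(energies) and that the solution lies in [lo, hi].
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_energy(energies, mid) > target:
            lo = mid  # mean energy too high: a larger beta is needed
        else:
            hi = mid
    return 0.5 * (lo + hi)


def shannon_bits(p):
    """Shannon entropy in bits, skipping zero-probability states."""
    return -sum(x * math.log2(x) for x in p if x > 0)


if __name__ == "__main__":
    levels = [0.0, 1.0]               # hypothetical two-level system
    beta = maxent_beta(levels, 0.25)  # analytically, beta = ln 3
    print(beta, gibbs(levels, beta), shannon_bits(gibbs(levels, beta)))
```

Bisection is used only because it is simple and robust for a one-parameter monotone equation; any root finder would do.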

Axiomatic theories and information analyses each provide important insights into the meaning of thermodynamics. The purpose of this paper is to synthesize these two approaches. We will present an axiomatic basis for thermodynamics that uses information ideas from the very outset. In such a theory, Maxwell’s demon, which accomplishes state changes by acquiring information, is neither a paradox nor a sideshow curiosity. Instead, it is a central conceptual tool for understanding the transformations between thermodynamic states. In our view, thermodynamics is essentially a theory of the descriptions of systems possessed by agents that are themselves (like the demon) physical systems. These descriptions may change as the external systems undergo various processes; however, they also may change when the agent acquires, uses or discards information.

Our work is thus similar in spirit to that of Weilenmann et al. [13], though our approach is very different. They essentially take the Lieb-Yngvason axiomatic framework and apply it to various quantum resource theories. In particular, for the resource theory of non-uniformity [14], the Lieb-Yngvason entropy function coincides with the von Neumann entropy of the quantum density operator, which is a measure of quantum information. We, on the other hand, seek to modify axiomatic thermodynamics itself to describe processes involving “information engines” such as Maxwell’s demon. We do not rely on any particular microphysics and have both classical and quantum models for our axioms. The connections we find between information and thermodynamic entropy are thus as general as the axioms themselves. Furthermore, to make our concept of information as clear as possible, we will seek to base our development on the most elementary ideas of state and process.

We therefore take as our prototype the axiomatic theory of Giles [1]. In fact, Giles’s monograph contains two different axiomatic developments. (The first system is presented in Chapters 1–6 of Giles’s book, and the second is introduced beginning in Chapter 7.) The first, which we might designate Giles I, is based on straightforward postulates about the properties of states and processes. The second, Giles II, is more sophisticated and powerful. The axioms are less transparent in meaning (e.g., the assumed existence of “anti-equilibrium” and “internal” thermodynamic states), but they support stronger theorems. This difference can be illustrated by the status of entropy functions in the two developments. In Giles I, it is shown that an entropy function exists; in fact, there may be many such functions. In Giles II, it is shown that an absolute entropy function, one that is zero for every anti-equilibrium state, exists and is unique. Giles himself regarded the second system as “The Formal Theory”, which he summarizes in an Appendix of that title.

The information-based system we present here, on the other hand, is closer to the more elementary framework of Giles I. We have taken some care to use notation and concepts that are as analogous as possible to that theory. We derive many of Giles’s propositions, including some that he uses as axioms. Despite the similarities, however, even the most elementary ideas (such as the combination of states represented by the + operation) will require some modifications. These changes highlight the new ideas embodied in axiomatic information thermodynamics, and so we will take some care to discuss them as they arise.

Section 2 introduces the fundamental idea of an eidostate, including how two or more eidostates may be combined. In Section 3, we introduce the → relation between eidostates: $A \to B$ means that eidostate A can be transformed into eidostate B, with no other net change in the apparatus that accomplishes the transformation. The collection of processes has an algebraic structure, which we present in Section 4. We introduce our concept of information in Section 5 and show that the structure of information processes imposes a unique entropy measure on pure information states. Section 6 introduces axioms inspired by Maxwell’s demon and shows their implications for processes involving thermodynamic states. Sections 7 and 8 outline how our information axioms also yield Giles’s central results about thermodynamic processes, including the existence of an entropy function, conserved components of content, and mechanical states. In Section 10, we see that an entropy function can be extended in a unique way to a wider class of “uniform” eidostates. This, rather remarkably, gives rise to a unique probability measure within uniform eidostates, as we describe in Section 11. Sections 12 and 13 present two detailed models of our axioms, each one highlighting a different aspect of the theory. We conclude in Section 14 with some remarks on the connection between information and thermodynamic entropy, a connection that emerges necessarily from the structure of processes in our theory.

2. Eidostates

Giles [1] bases his development on a set $\mathcal{S}$ of states. The term “state” is undefined in the formal theory, but heuristically it represents an equilibrium macrostate of some thermodynamic system. (Giles, in fact, identifies a state $a \in \mathcal{S}$ with some method of preparation; the concept of a “system” does not appear in his theory, or in ours.) States can be combined using the + operation, so that if $a, b \in \mathcal{S}$ then $a + b \in \mathcal{S}$ also. The new state $a + b$ is understood as the state of affairs arising from simultaneous and independent preparations of states a and b. For Giles, this operation is commutative and associative; for example, $a + b$ is exactly the same state as $b + a$.

We also have a set $\mathcal{S}$ of states. However, our theory differs in two major respects. First, the combination of states is not assumed to be commutative and associative. On the contrary, we regard $a + b$ and $b + a$ as entirely distinct states for any $a \neq b$. The motivation for this is that the way that a composite state is assembled encodes information about its preparation, and we want to be able to keep track of this information. On the states in $\mathcal{S}$, the operation + is simply that of forming a Cartesian pair of states: $a + b = (a, b)$, and nothing more.

A second and more far-reaching difference is that we cannot confine our theory to individual elements of $\mathcal{S}$. A theory that keeps track of information in a physical system must admit non-deterministic processes. For instance, if a measurement is made, the single state a before the measurement may result in any one of a collection of possible states corresponding to the various results.

Therefore, our basic notion is the eidostate, which is a finite nonempty set of states. The term “eidostate” derives from the Greek word eidos, “that which is seen” or “form” (from a root meaning “to see”). The eidostate is a collection of states that may be regarded as possible from the point of view of some agent. The set of eidostates is designated by $\mathcal{E}$. When we combine two eidostates into the composite $A + B$, we mean the set of all combinations of elements of these sets. That is, $A + B$ is exactly the Cartesian product (commonly denoted $A \times B$) of the two sets. Thus, our state combination operation + is very different from that envisioned by Giles. His operation is an additional structure on $\mathcal{S}$ that requires an axiom to fix its properties, whereas we simply use the Cartesian product provided by standard set theory, which we of course assume.
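A minimal sketch of the combination rule, assuming states are modeled as hashable Python values and eidostates as `frozenset`s (an illustrative choice; the restriction in Axiom 1 to particular subsets is not modeled). Because $A + B$ is the Cartesian product, a composite remembers how it was assembled, so + is neither commutative nor associative.

```python
from itertools import product


def combine(A: frozenset, B: frozenset) -> frozenset:
    """A + B: the Cartesian product of two eidostates, as a set of ordered pairs."""
    return frozenset(product(A, B))


# Hypothetical eidostates built from made-up state labels.
A = frozenset({"a1", "a2"})
B = frozenset({"b"})
C = frozenset({"c"})

AB, BA = combine(A, B), combine(B, A)
print(sorted(AB))                                   # [('a1', 'b'), ('a2', 'b')]
print(AB == BA)                                     # False: + is not commutative
print(combine(AB, C) == combine(A, combine(B, C)))  # False: + is not associative
print(len(AB) == len(A) * len(B))                   # True: |A + B| = |A| |B|
```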

Some eidostates in $\mathcal{E}$ are Cartesian products of other sets (which are also eidostates); some eidostates are not, and are thus “prime”. We assume that each eidostate has a “prime factorization” into a finite number of components. Here is our first axiom:

Axiom 1. Eidostates: $\mathcal{E}$ is a collection of sets called eidostates such that:

- (a) Every $A \in \mathcal{E}$ is a finite nonempty set with a finite prime Cartesian factorization.

- (b) $A + B \in \mathcal{E}$ if and only if $A, B \in \mathcal{E}$.

- (c) Every nonempty subset of an eidostate is also an eidostate.

Part (c) of this axiom ensures, among other things, that every element a of an eidostate can be associated with a singleton eidostate $\{a\}$. Without too much confusion, we can simply denote any singleton eidostate by the element it contains, writing a instead. We can therefore regard the set $\mathcal{S}$ of states in two ways. Either we may think of $\mathcal{S}$ as the collection of singleton eidostates in $\mathcal{E}$, or we may say that $\mathcal{S}$ is the collection of all elements of all eidostates: $\mathcal{S} = \bigcup_{A \in \mathcal{E}} A$. Either way, the set $\mathcal{E}$ characterized by Axiom 1 is the more fundamental object.

Our “eidostates” are very similar to the “specifications” introduced by del Rio et al. [15] in their general framework for resource theories of knowledge. The two ideas, however, are not quite identical. To begin with, a specification V may be any subset of a state space, whereas the eidostates in $\mathcal{E}$ are required to be finite nonempty subsets of $\mathcal{S}$; indeed, not every such subset need be an eidostate. For example, the union $A \cup B$ of two eidostates is not necessarily an eidostate. The set $\mathcal{E}$ therefore does not form a Boolean lattice. Specifications are a general concept applicable to state spaces of many different kinds, and are used in [15] to analyze different resource theories and to express general notions of approximation and locality. We, however, will be restricting our attention to an $\mathcal{E}$ (and $\mathcal{S}$) with very particular properties, expressed by Axiom 1 and our later axioms, that are designed to model thermodynamics in the presence of information engines such as Maxwell’s demon.

Composite eidostates are formed by combining eidostates with the + operation (Cartesian product). The same pieces may be combined in different ways. We say that $A, B \in \mathcal{E}$ are similar (written $A \sim B$) if they are made up of the same components. That is, $A \sim B$ provided there are eidostates $E_1, \ldots, E_n$ such that $A = f(E_1, \ldots, E_n)$ and $B = g(E_1, \ldots, E_n)$ for two Cartesian product formulas $f$ and $g$. Thus, $A + B \sim B + A$, $(A + B) + C \sim A + (B + C)$, and so on. The similarity relation ∼ is an equivalence relation on $\mathcal{E}$.

We sometimes wish to combine an eidostate with itself several times. For an integer $n \geq 1$, we denote by $nA$ the eidostate $A + (A + (\cdots + A))$, where A appears n times in the nested Cartesian product. This is one particular way to combine the n instances of A, though of course all such ways are similar. Thus, we may assert equality in $(n + 1)A = A + nA$, but only similarity in $(n + 1)A \sim nA + A$.
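Continuing the illustrative `frozenset` model (an assumption, not the paper’s formalism), the right-nested multiple $nA$ can be built by repeated combination. The sketch checks that $(n+1)A = A + nA$ holds as an equality of sets, while $nA + A$ is a different set whose elements differ only in their bracketing. The hypothetical helper `flatten` strips that bracketing; it detects this kind of regrouping but not reorderings of distinct components, so it is only a partial probe of similarity.

```python
from itertools import product


def combine(A, B):
    """A + B as the Cartesian product of two eidostates."""
    return frozenset(product(A, B))


def multiple(n: int, A: frozenset) -> frozenset:
    """nA = A + (A + (... + A)) with n copies of A, nested to the right."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    result = A
    for _ in range(n - 1):
        result = combine(A, result)
    return result


def flatten(x) -> tuple:
    """Remove the nesting of pairs, leaving the sequence of component states."""
    if isinstance(x, tuple):
        return tuple(leaf for part in x for leaf in flatten(part))
    return (x,)


A = frozenset({0, 1})
n = 2
print(len(multiple(3, A)))                               # 8 = |A|**3
print(multiple(n + 1, A) == combine(A, multiple(n, A)))  # True: equality
print(multiple(n + 1, A) == combine(multiple(n, A), A))  # False: only similar
print({flatten(e) for e in multiple(n + 1, A)}
      == {flatten(e) for e in combine(multiple(n, A), A)})  # True: same components
```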

Finally, we note that we have introduced as yet no probabilistic ideas. An eidostate is a simple enumeration of possible states, without any indication that some are more or less likely than others. However, as we will see in Section 11, in certain contexts, a natural probability measure for states does emerge from our axioms.

3. Processes

In the axiomatic thermodynamics of Giles, the → relation describes state transformations. The relation $a \to b$ means that there exists another state z and a definite time interval $\tau$ so that

$$ a + z \xrightarrow{\ \tau\ } b + z, $$

where $\xrightarrow{\tau}$ indicates time evolution over the period $\tau$. The pair $(z, \tau)$ is the “apparatus” that accomplishes the transformation from a to b. This dynamical evolution is a deterministic process; that is, in the presence of the apparatus $(z, \tau)$ the initial state a is guaranteed to evolve to the final state b. This rule of interpretation for → motivates the properties assumed for the relation in the axioms.

Our version of the arrow relation → is slightly different, in that it encompasses non-deterministic processes. Again, we envision an apparatus $(z, \tau)$, and we write $a + z \xrightarrow{\tau} x + z$ to mean that the initial state $a + z$ may possibly evolve to $x + z$ over the stated interval. Then, for eidostates A and B, the relation $A \to B$ means that there exists an apparatus $(z, \tau)$ such that, for every $a \in A$ and every state $x \in \mathcal{S}$, $a + z \xrightarrow{\tau} x + z$ only if $x \in B$. Each possible initial state a in A might evolve to one or more final states, but all of the possible final states are contained in B. For singleton eidostates a and b, the relation $a \to b$ represents a deterministic process, as in Giles’s theory.
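To make the non-deterministic reading of $A \to B$ concrete, here is a toy sketch in which one apparatus is represented only by its dynamics: a hypothetical table listing, for each initial state, the final states it may evolve to. The apparatus state z is suppressed, and the labels `v`, `v0`, `v1` (gas in the whole volume, or confined to one half) are illustrative assumptions. The check tests a single given apparatus; the relation $A \to B$ itself asserts that some such apparatus exists.

```python
from typing import Dict, Hashable, Iterable, Set

# Hypothetical dynamics of one apparatus: initial state -> possible final states.
Dynamics = Dict[Hashable, Set[Hashable]]


def accomplishes(dynamics: Dynamics, A: Iterable[Hashable], B: Set[Hashable]) -> bool:
    """True if, under this apparatus, every a in A can only end up inside B."""
    return all(dynamics[a] <= set(B) for a in A)


def is_deterministic(dynamics: Dynamics, A: Iterable[Hashable]) -> bool:
    """True if each initial state in A has exactly one possible final state."""
    return all(len(dynamics[a]) == 1 for a in A)


# Inserting a partition: the particle may end up in either half.
insert_partition: Dynamics = {"v": {"v0", "v1"}}

print(accomplishes(insert_partition, {"v"}, {"v0", "v1"}))  # True
print(accomplishes(insert_partition, {"v"}, {"v0"}))        # False: v1 is also possible
print(is_deterministic(insert_partition, {"v"}))            # False
```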

Again, we use our heuristic interpretation of → to motivate the essential properties specified in an axiom:

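Axiom 2. Processes: Let eidostates $A, B, C \in \mathcal{E}$ and $s \in \mathcal{S}$.

- (a) If $A \sim B$, then $A \to B$.

- (b) If $A \to B$ and $B \to C$, then $A \to C$.

- (c) If $A \to B$, then $A + C \to B + C$.

- (d) If $A + s \to B + s$, then $A \to B$.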

Part (a) of the axiom asserts that it is always possible to “rearrange the pieces” of a composite state. Thus, $A + B \to B + A$, and so on. Of course, since ∼ is a symmetric relation, $A \sim B$ implies both $A \to B$ and $B \to A$, which we might write as $A \leftrightarrow B$.

Part (b) says that a process from A to C may proceed via an intermediate eidostate B. Parts (c) and (d) establish the relationship between → and +. We can always append a “bystander” state C to any process $A \to B$, and a bystander singleton state s in $A + s \to B + s$ can be viewed as part of the apparatus that accomplishes the transformation $A \to B$.

We use the → relation to characterize various conceivable processes. A formal process is simply a pair of eidostates $\langle A, B\rangle$. Following Giles, we may say that $\langle A, B\rangle$ is:

natural if $A \to B$;

antinatural if $B \to A$;

possible if $A \to B$ or $B \to A$ (which may be written $A \rightleftarrows B$);

impossible if it is not possible;

reversible if $A \to B$ and $B \to A$ (that is, $A \leftrightarrow B$); and

irreversible if it is possible but not reversible.

Thus, any formal process must be one of four types: reversible, natural irreversible, antinatural irreversible, or impossible.
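The four-way classification depends only on two truth values: whether $A \to B$ and whether $B \to A$. A minimal Python sketch, with illustrative names that do not come from the source:

```python
from enum import Enum


class ProcessType(Enum):
    """The four mutually exclusive types of a formal process <A, B>."""
    REVERSIBLE = "reversible"
    NATURAL_IRREVERSIBLE = "natural irreversible"
    ANTINATURAL_IRREVERSIBLE = "antinatural irreversible"
    IMPOSSIBLE = "impossible"


def classify(a_to_b: bool, b_to_a: bool) -> ProcessType:
    """Type of <A, B>, given whether A -> B holds and whether B -> A holds."""
    if a_to_b and b_to_a:
        return ProcessType.REVERSIBLE
    if a_to_b:
        return ProcessType.NATURAL_IRREVERSIBLE
    if b_to_a:
        return ProcessType.ANTINATURAL_IRREVERSIBLE
    return ProcessType.IMPOSSIBLE


print(classify(True, False).value)  # natural irreversible
```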

Any nonempty subset of an eidostate is an eidostate. If the eidostate A is an enumeration of possible states, the proper subset eidostate may be regarded as an enumeration with some additional condition present that eliminates one or more of the possibilities. What can we say about processes involving these “conditional” eidostates? To answer this question, we introduce two further axioms. The first is this:

Axiom 3. If $A, B \in \mathcal{E}$ and B is a proper subset of A, then $A \not\to B$.

This expresses the idea that no natural process can simply eliminate a state from a list of possibilities. This is a deeper principle than it first appears. Indeed, as we will find, in our theory, it is the essential ingredient in the Second Law of Thermodynamics.

To express the second new axiom, we must introduce the notion of a uniform eidostate. The eidostate A is said to be uniform provided, for every $a, b \in A$, we have $a \to b$ or $b \to a$. That is, every pair of states in A is connected by a possible process. This means that all of the A states are “comparable” in some way involving the → relation.
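Uniformity is a pairwise condition, so it can be checked directly given any oracle for the → relation. In the sketch below the oracle is a hypothetical toy (states labeled by a made-up pair (energy, entropy), with $a \to b$ exactly when the energies agree and the entropy does not decrease). It is not a model from the text, only a stand-in for some relation →.

```python
from itertools import combinations


def is_uniform(A, arrow) -> bool:
    """A is uniform if every pair a, b in A satisfies a -> b or b -> a."""
    return all(arrow(a, b) or arrow(b, a) for a, b in combinations(A, 2))


def toy_arrow(a, b) -> bool:
    """Hypothetical relation: same energy, entropy non-decreasing."""
    (energy_a, entropy_a), (energy_b, entropy_b) = a, b
    return energy_a == energy_b and entropy_a <= entropy_b


print(is_uniform({(1, 0)}, toy_arrow))          # True: singletons are uniform
print(is_uniform({(1, 0), (1, 2)}, toy_arrow))  # True
print(is_uniform({(1, 0), (2, 0)}, toy_arrow))  # False: no possible process links them
```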

All singleton eidostates are uniform. Are there any non-uniform eidostates? The axioms in fact do not tell us. We will find models of the axioms that contain non-uniform eidostates and others that contain none. Even if there are states $a, b \in \mathcal{S}$ for which $a \not\to b$ and $b \not\to a$, nothing in our axioms guarantees the existence of an eidostate that contains both a and b as elements. We denote the set of uniform eidostates by $\mathcal{U}$.

Now, we may state the second axiom about conditional processes.

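Axiom 4. Conditional processes:

- (a) Suppose $A, A' \in \mathcal{E}$ and $b \in \mathcal{S}$. If $A' \subseteq A$ and $A \to b$, then $A' \to b$.

- (b) Suppose A and B are uniform eidostates that are each disjoint unions of eidostates: $A = A_1 \cup A_2$ and $B = B_1 \cup B_2$. If $A_1 \to B_1$ and $A_2 \to B_2$, then $A \to B$.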

The first part makes sense given our interpretation of the → relation in terms of an apparatus $(z, \tau)$. If every $a \in A$ satisfies $a + z \xrightarrow{\tau} x + z$ only for $x = b$, then the same will be true for every $a \in A'$. Part (b) of the axiom posits that, if we can find an apparatus whose dynamics transforms $A_1$ states into $B_1$ states, and another whose dynamics transforms $A_2$ states into $B_2$ states, then we can devise an apparatus with “conditional dynamics” that does both tasks, taking A to B. This is a rather strong proposition, and we limit its scope by restricting it to the special class of uniform eidostates.

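We can, as a corollary, extend Part (b) of Axiom 4 to more than two subsets. That is, suppose A and B are uniform eidostates, each partitioned into n mutually disjoint, nonempty subsets: $A = A_1 \cup \cdots \cup A_n$ and $B = B_1 \cup \cdots \cup B_n$. In addition, suppose $A_k \to B_k$ for all $k = 1, \ldots, n$. Then, $A \to B$. (This follows by induction on n: every nonempty subset of a uniform eidostate is itself a uniform eidostate, so Axiom 4(b) can be applied to the pieces $A_1 \cup \cdots \cup A_{n-1}$ and $A_n$.)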

4. Process Algebra and Irreversibility

Following Giles, we now explore the algebraic structure of formal processes and describe how the type of a possible process may be characterized by a single real-valued function. Although the broad outlines of our development will follow that of Giles [1], there are significant differences. (For example, in our theory, the unrestricted set of eidostate processes does not form a group.)

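There is an equivalence relation among formal processes, based on the similarity relation ∼ among eidostates. We say that $\langle A, B\rangle \doteq \langle C, D\rangle$ if there exist singletons $x, y \in \mathcal{S}$ such that $A + x \sim C + y$ and $B + x \sim D + y$. That is, if we augment the eidostates in $\langle A, B\rangle$ by x and those in $\langle C, D\rangle$ by y, the corresponding eidostates in the two processes are mere rearrangements of each other. It is not hard to establish that this is an equivalence relation. Furthermore, equivalent processes (under ≐) are always of the same type. To see this, suppose that $\langle A, B\rangle \doteq \langle C, D\rangle$ and $A \to B$. Then, $A + x \leftrightarrow C + y$ and $B + x \leftrightarrow D + y$ by Axiom 2(a), and

$$ C + y \to A + x \to B + x \to D + y. $$

Hence, by cancellation of the singleton y, $C \to D$. It is a straightforward corollary that $A \to B$ if and only if $C \to D$.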

Processes (or more strictly, equivalence classes of processes under ≐) have an algebraic structure. We define the sum of two processes as

$$ \langle A, B\rangle + \langle C, D\rangle = \langle A + C, B + D\rangle $$

and the negation of a process as $-\langle A, B\rangle = \langle B, A\rangle$. We call the − operation “negation” even though in general $-\Gamma$ is not the additive inverse of a process $\Gamma$. Such an inverse may not exist. As we will see, however, the negation does yield an additive inverse process in some important special contexts.

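The sum and negation operations on processes are both compatible with the equivalence ≐. That is, first, if $\Gamma \doteq \Gamma'$ then $-\Gamma \doteq -\Gamma'$. Furthermore, if $\Gamma \doteq \Gamma'$ and $\Delta \doteq \Delta'$, then

$$ \Gamma + \Delta \doteq \Gamma' + \Delta'. $$

This means that the sum and negation operations are well-defined on equivalence classes of processes. If $[\Gamma]$ and $[\Delta]$ denote two equivalence classes represented by $\Gamma$ and $\Delta$, then

$$ [\Gamma] + [\Delta] = [\Gamma + \Delta], $$

which is the same regardless of the particular representatives chosen to indicate the two classes being added.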

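We let $\mathcal{P}$ denote the collection of all equivalence classes of formal processes. Then, the sum operation is both associative and commutative on $\mathcal{P}$. Furthermore, $\mathcal{P}$ contains a zero element $0 = [\langle s, s\rangle]$ for some singleton s. That is, if $\Gamma \in \mathcal{P}$,

$$ \Gamma + 0 = \Gamma. $$

The set $\mathcal{P}$ is thus a monoid (a semigroup with identity) under the operation +. Moreover, the subset $\mathcal{P}_{\mathcal{S}}$ of singleton processes (equivalence classes of formal processes with singleton initial and final states) is actually an Abelian group, since

$$ [\langle a, b\rangle] + [\langle b, a\rangle] = [\langle a + b, b + a\rangle] = 0. $$

In $\mathcal{P}_{\mathcal{S}}$, the negation operation does yield the additive inverse of an element.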

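The → relation on states induces a corresponding relation on processes. If $\Gamma = \langle A, B\rangle$ and $\Delta = \langle C, D\rangle$, we say that $\Gamma \to \Delta$ provided the process $\Gamma + (-\Delta) = \langle A + D, B + C\rangle$ is natural (a condition we might formally write as $A + D \to B + C$). Intuitively, this means that process Γ can “drive process Δ backward”, so that Γ and the opposite of Δ together form a natural process.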
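Representing a formal process as a pair (initial, final) of eidostates, the sum and negation can be sketched directly, again using the illustrative `frozenset` model with + as the Cartesian product (an assumption about representation, not the paper’s code). The sketch shows that $\Gamma + (-\Gamma) = \langle A + B, B + A\rangle$, whose two eidostates are similar but not equal, and that $\Gamma + (-\Delta)$ is the pair $\langle A + D, B + C\rangle$.

```python
from itertools import product


def combine(A, B):
    """A + B as the Cartesian product of two eidostates."""
    return frozenset(product(A, B))


def process_sum(p, q):
    """Sum of formal processes: <A, B> + <C, D> = <A + C, B + D>."""
    (A, B), (C, D) = p, q
    return (combine(A, C), combine(B, D))


def negate(p):
    """Negation of a formal process: -<A, B> = <B, A>."""
    A, B = p
    return (B, A)


# Hypothetical eidostates used only for illustration.
A, B = frozenset({"a"}), frozenset({"b1", "b2"})
C, D = frozenset({"c"}), frozenset({"d"})
gamma, delta = (A, B), (C, D)

g_minus_g = process_sum(gamma, negate(gamma))
print(g_minus_g == (combine(A, B), combine(B, A)))  # True: <A + B, B + A>
print(g_minus_g[0] == g_minus_g[1])                 # False: similar, not equal
print(process_sum(gamma, negate(delta)) == (combine(A, D), combine(B, C)))  # True
```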

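Now, suppose that $\mathcal{K}$ is a collection of equivalence classes of possible processes that is closed under addition and negation. An irreversibility function is a real-valued function $\Phi$ on $\mathcal{K}$ such that

If $\Gamma, \Delta \in \mathcal{K}$, then $\Phi(\Gamma + \Delta) = \Phi(\Gamma) + \Phi(\Delta)$.

The value of $\Phi(\Gamma)$ determines the type of the processes in $\mathcal{K}$:

$\Phi(\Gamma) > 0$ whenever $\Gamma$ is natural irreversible.

$\Phi(\Gamma) = 0$ whenever $\Gamma$ is reversible.

$\Phi(\Gamma) < 0$ whenever $\Gamma$ is antinatural irreversible.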

An irreversibility function, if it exists, has a number of elementary properties. For instance, since the process $\Gamma + (-\Gamma)$ is always reversible for any $\Gamma \in \mathcal{K}$, it follows that $\Phi(-\Gamma) = -\Phi(\Gamma)$. In fact, we can show that all irreversibility functions on $\mathcal{K}$ are essentially the same:

Theorem 1. An irreversibility function on $\mathcal{K}$ is unique up to an overall positive factor.

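Proof. Suppose $\Phi_1$ and $\Phi_2$ are irreversibility functions on the set $\mathcal{K}$. If $\Delta$ is reversible, then $\Phi_1(\Delta) = \Phi_2(\Delta) = 0$. If there are no irreversible processes in $\mathcal{K}$, then $\Phi_1 = \Phi_2 = 0$.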

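Now, suppose that $\mathcal{K}$ contains at least one irreversible process $\Gamma$, which we may suppose is natural irreversible (replacing $\Gamma$ by $-\Gamma$ if necessary). Thus, both $\Phi_1(\Gamma) > 0$ and $\Phi_2(\Gamma) > 0$. Consider some other process $\Delta \in \mathcal{K}$. We must show that

$$ \frac{\Phi_1(\Delta)}{\Phi_1(\Gamma)} = \frac{\Phi_2(\Delta)}{\Phi_2(\Gamma)}. $$

We proceed by contradiction, imagining that the two ratios are not equal and (without loss of generality) that the second one is larger. Then, there exists a rational number $p/q$ (with $q > 0$) such that

$$ \frac{\Phi_1(\Delta)}{\Phi_1(\Gamma)} < \frac{p}{q} < \frac{\Phi_2(\Delta)}{\Phi_2(\Gamma)}. $$

The first inequality yields $p\,\Phi_1(\Gamma) - q\,\Phi_1(\Delta) > 0$, so that the process $p\Gamma - q\Delta$ (that is, $p\Gamma + q(-\Delta)$, where $p\Gamma$ means $|p|(-\Gamma)$ if $p < 0$) must be natural irreversible. The second inequality yields $p\,\Phi_2(\Gamma) - q\,\Phi_2(\Delta) < 0$, so that the process $p\Gamma - q\Delta$ must be antinatural irreversible. These cannot both be true, so the original ratios must be equal. ☐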

The additive irreversibility function is only defined on a set of possible processes. However, we can under some circumstances extend an additive function to a wider domain. It is convenient to state here the general mathematical result we will use later for this purpose:

Theorem 2 (“Hahn–Banach theorem” for Abelian groups). Let $G$ be an Abelian group. Let $\phi$ be a real-valued function defined and additive on a subgroup $H$ of $G$. Then, there exists an additive function $\psi$ defined on $G$ such that $\psi(x) = \phi(x)$ for all x in $H$.

Note that the extension $\psi$ is not necessarily unique; that is, there may be many different extensions of a single additive function $\phi$.

5. Information and Entropy

In our theory, information resides in the distinction among possible states. Thus, the eidostate $A = \{a_1, \ldots, a_n\}$ represents information in the distinction among its elements. However, thermodynamic states such as the $a_k$ may also have other properties such as energy, particle content, and so on. To disentangle the concept of information from the other properties of these states, we introduce a notion of a “pure” information state.

The intuitive idea is this. We imagine that the world contains freely available memory devices. Different configurations of these memories—different memory records—are distinct states that are degenerate in energy and every other conserved quantity. Any particular memory record can thus be freely created from or reset to some null value.

We therefore define a record state to be an element $r \in \mathcal{S}$ such that there exists $a \in \mathcal{S}$ so that $a \leftrightarrow a + r$. The state a can be thought of as part of the apparatus that reversibly exchanges the particular record r with a null value. In fact, if A is any eidostate at all, we find that

$$ A + a \leftrightarrow A + (a + r) \leftrightarrow (A + r) + a, $$

and so by cancellation of the singleton state a, $A \leftrightarrow A + r$. We denote the set of record states by $\mathcal{R}$. If $r, s \in \mathcal{R}$, then $r + s \in \mathcal{R}$, and furthermore $r \leftrightarrow s$. Any particular record state can be reversibly transformed into any other, a fact that expresses the arbitrariness of the “code” used to represent information in a memory device.

An information state is an eidostate whose elements are all record states, and the set of such eidostates is denoted $\mathcal{I}$. All information states are uniform eidostates. An information process is one that is equivalent to a process $\langle I, J\rangle$, where $I, J \in \mathcal{I}$. (Of course, information processes also include processes of the form $\langle a + I, a + J\rangle$ for a non-record state $a$, as well as more complex combinations of record and non-record states.) Roughly speaking, an information process is a kind of computation performed on information states.

It is convenient at this point to define a bit state (denoted $\mathbf{b}$) as an information state containing exactly two record states. That is, $\mathbf{b} = \{r_0, r_1\}$ for distinct $r_0, r_1 \in \mathcal{R}$. A bit process is an information process of the form $\langle r, \mathbf{b}\rangle$ for some $r \in \mathcal{R}$, that is, a process by which a bit state is created from a single record state.

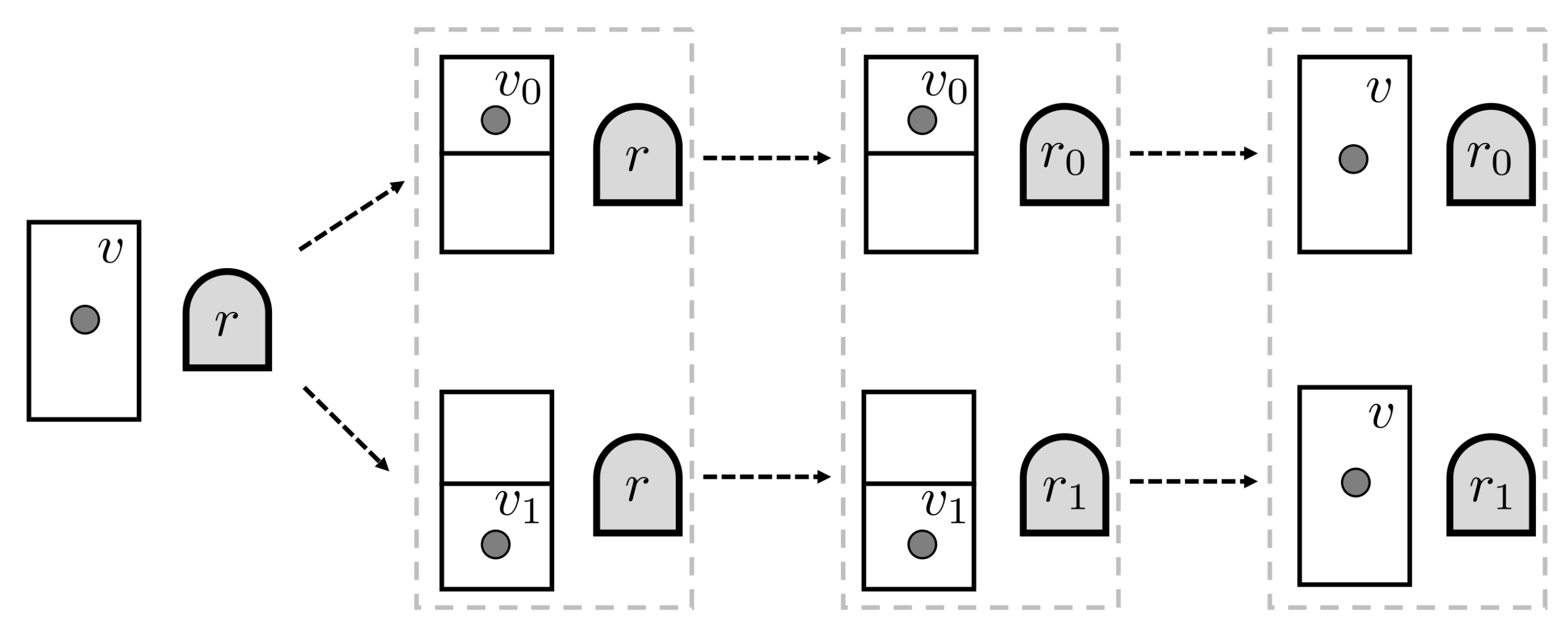

We can illustrate a bit process in a thermodynamics context by considering a thought-experiment involving Maxwell’s demon. We imagine that the demon operates on a one-particle gas confined to a volume, a situation described by gas state v (see Figure 3). The demon, whose memory initially has a record state r, inserts a partition into the container, dividing the volume into two halves labeled 0 and 1. The gas molecule is certainly in one sub-volume or the other. The demon then records in memory which half the particle occupies. Finally, the partition is removed and the particle wanders freely around the whole volume of the container. In thermodynamic terms, the gas state relaxes to the original state v. Writing $v_0$ and $v_1$ for the gas confined to half 0 or half 1, and $r_0$ and $r_1$ for the corresponding memory records, the overall process establishes the following relations:

$$ v + r \to \{v_0 + r_0,\ v_1 + r_1\} \to \{v + r_0,\ v + r_1\} = v + \mathbf{b}, $$

where $\mathbf{b} = \{r_0, r_1\}$, and so (by cancellation of the singleton v) $r \to \mathbf{b}$. In our example, the bit process $\langle r, \mathbf{b}\rangle$ is a natural one.

However, we do not yet know that there actually are information states and information processes in our theory. We address this by a new axiom.

Axiom 5. Information: There exist a bit state and a possible bit process.

Axiom 5 has a wealth of consequences. Since an information state exists, record states necessarily also exist. There are infinitely many record states, since for any $r \in \mathcal{R}$ we also have the distinct states $r + r$, $r + (r + r)$, and so on, all in $\mathcal{R}$. We may have information states in $\mathcal{I}$ that contain arbitrarily many record states, because $n\mathbf{b}$ contains $2^n$ elements. Furthermore, since every nonempty subset of an information state is also an information state, for any integer $n \geq 1$ there exists $I \in \mathcal{I}$ so that $|I| = n$.

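Any two bit states can be reversibly transformed into one another. Consider $\mathbf{b} = \{r_0, r_1\}$ and $\mathbf{b}' = \{s_0, s_1\}$. Since $r_0 \to s_0$ and $r_1 \to s_1$, it follows by Axiom 4 that $\mathbf{b} \to \mathbf{b}'$ (and, by the same argument with the roles reversed, $\mathbf{b} \leftrightarrow \mathbf{b}'$).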

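If any bit process is possible, then every bit process is possible, since all record states, and all bit states, can be reversibly transformed into one another. Furthermore, every such process is of the natural irreversible type. To see why, consider the bit state $\mathbf{b} = \{r_0, r_1\}$ and suppose $\mathbf{b} \to r$ for a record state r. Since $r \to r_0$, this implies that $\mathbf{b} \to r_0$, that is, $\{r_0, r_1\} \to \{r_0\}$, which is a violation of Axiom 3. Therefore, it must be that $r \to \mathbf{b}$ but $\mathbf{b} \not\to r$; and this is true for any choice of r and $\mathbf{b}$.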

Now, we can prove that every information process is possible, and that the → relation is determined solely by the relative sizes of the initial and final information states.

Theorem 3. Suppose $I, J \in \mathcal{I}$. Then, $I \to J$ if and only if $|I| \leq |J|$.

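Proof. To begin with, we can see that $|I| = |J|$ implies $I \to J$. This is because we can write $I = \{r_1, \ldots, r_n\}$ and $J = \{s_1, \ldots, s_n\}$. Since $r_k \to s_k$ for every $k$, the finite extension of Axiom 4 tells us that $I \to J$.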

Now, imagine that $|I| > |J|$. There exists a proper subset $I'$ of I so that $|I'| = |J|$, and thus $J \to I'$. If it happened that $I \to J$, it would follow that $I \to I'$, a contradiction of Axiom 3. Hence, $I \to J$ implies $|I| \leq |J|$.

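It remains to show that if $|I| \leq |J|$, it must be that $I \to J$. As a first case, suppose that $|I| = n$ and $|J| = n + 1$. Letting $I = \{r_1, \ldots, r_n\}$ and $J = \{s_1, \ldots, s_{n+1}\}$, we note that $r_k \to s_k$ for $k < n$ and $r_n \to \{s_n, s_{n+1}\}$ (the last being a bit process). It follows from the finite extension of Axiom 4 that $I \to J$.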

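We now proceed inductively. Given $|I| < |J|$, we can imagine a sequence of information states $I = K_0, K_1, \ldots, K_m = J$ whose numbers of elements increase by one at each step from $|I|$ to $|J|$, so that $|K_{j+1}| = |K_j| + 1$. From what we have already proved,

$$ I = K_0 \to K_1 \to \cdots \to K_m = J, $$

and by transitivity of the → relation we may conclude that $I \to J$. Therefore, $I \to J$ if and only if $|I| \leq |J|$. ☐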
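Theorem 3 reduces the → relation on information states to a comparison of sizes, and its proof builds a chain of information states that grows by one element at a time. A Python sketch of both, assuming record states are modeled as sortable labels (hypothetical) and information states as `frozenset`s; taking the intermediate states as prefixes of J is one convenient choice among many.

```python
def info_arrow(I: frozenset, J: frozenset) -> bool:
    """Theorem 3: for information states I, J we have I -> J iff |I| <= |J|."""
    return len(I) <= len(J)


def chain(I: frozenset, J: frozenset) -> list:
    """Information states from I to J whose sizes increase by one at each step.

    Intermediate states are prefixes of sorted(J); any subsets of J of the
    right sizes would serve equally well.
    """
    if len(I) > len(J):
        raise ValueError("|I| > |J|: by Theorem 3, I -> J is impossible")
    ordered = sorted(J)
    steps = [I] + [frozenset(ordered[:k]) for k in range(len(I) + 1, len(J) + 1)]
    if steps[-1] != J:
        steps.append(J)  # only when |I| == |J| and I != J: a reversible relabeling
    return steps


I = frozenset({"r1"})
J = frozenset({"s1", "s2", "s3"})
path = chain(I, J)
print([len(K) for K in path])                                 # [1, 2, 3]
print(all(info_arrow(K, K2) for K, K2 in zip(path, path[1:])))  # True
print(info_arrow(J, I))                                       # False
```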

Intuitively, the greater the number of distinct record states in an information state, the more information it represents. Thus, under our axioms, a natural information process may maintain or increase the amount of information, but never decrease it.

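We can sharpen this intuition considerably. Let $\mathcal{P}_{\mathcal{I}}$ be the set of information processes, which is closed under addition and negation, and in which every process is possible. We can define a function $\Phi_{\mathcal{I}}$ on $\mathcal{P}_{\mathcal{I}}$ as follows: For $\langle I, J\rangle \in \mathcal{P}_{\mathcal{I}}$,

$$ \Phi_{\mathcal{I}}(\langle I, J\rangle) = \log \frac{|J|}{|I|}. $$

The logarithm function guarantees the additivity of $\Phi_{\mathcal{I}}$ when two processes are combined (because $|I + I'| = |I|\,|I'|$), and the sign of $\Phi_{\mathcal{I}}(\langle I, J\rangle)$ exactly determines whether $I \to J$. Thus, $\Phi_{\mathcal{I}}$ is an irreversibility function on $\mathcal{P}_{\mathcal{I}}$, as the notation suggests. By Theorem 1, this function is unique up to a positive constant factor, i.e., the choice of logarithm base. If we choose to use base-2 logarithms, so that a bit process has $\Phi_{\mathcal{I}} = 1$, then $\Phi_{\mathcal{I}}$ is uniquely determined.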
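A short numerical check of the two properties claimed for $\Phi_{\mathcal{I}}$ (base-2 logarithms): a bit process has irreversibility 1, and the value of a combined process is the sum of the parts, because the size of a Cartesian product is the product of the sizes. The record-state labels and function names are illustrative assumptions.

```python
import math
from itertools import product


def combine(I, J):
    """I + J as the Cartesian product of two information states."""
    return frozenset(product(I, J))


def phi_info(I, J) -> float:
    """Irreversibility of the information process <I, J>: log2(|J| / |I|), in bits."""
    return math.log2(len(J) / len(I))


# Hypothetical record-state labels.
r = frozenset({"r"})
bit = frozenset({"r0", "r1"})
three = frozenset({"x", "y", "z"})
one = frozenset({"w"})

print(phi_info(r, bit))   # 1.0: a bit process
print(phi_info(bit, r))   # -1.0: its negation
# Additivity: Phi(<r, bit> + <three, one>) = Phi(<r, bit>) + Phi(<three, one>)
lhs = phi_info(combine(r, three), combine(bit, one))
rhs = phi_info(r, bit) + phi_info(three, one)
print(math.isclose(lhs, rhs))  # True
```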

The irreversibility function on information processes can be expressed in terms of a function on information states. Suppose we have a collection of eidostates $\mathcal{C}$ that is closed under the + operation. With Giles, we define a quasi-entropy on $\mathcal{C}$ to be a real-valued function $S$ such that, for $A, B \in \mathcal{C}$:

$S(A + B) = S(A) + S(B)$.

If $\langle A, B\rangle$ is natural irreversible, then $S(A) < S(B)$.

If $\langle A, B\rangle$ is reversible, then $S(A) = S(B)$.

(A full “entropy” function satisfies one additional requirement, which we will address in Section 8 below.) Given a quasi-entropy $S$, we can derive an irreversibility function $\Phi$ on possible $\mathcal{C}$-processes by

$$ \Phi(\langle A, B\rangle) = S(B) - S(A). $$

Obviously, the function that assigns to an information state I the logarithm of its number #(I) of record states, $S(I) = \log \#(I)$, is a quasi-entropy function on information states that yields the irreversibility function on information processes. We recognize it as the Hartley–Shannon entropy of an information source with #(I) possible outputs [16]. In fact, this is the only possible quasi-entropy on information states. Since every information process is possible, two different quasi-entropy functions $S_1$ and $S_2$ can only differ by an additive constant. However, since for and , we know that for any quasi-entropy. Therefore, $\log \#(I)$ is the unique quasi-entropy function for information states. The quasi-entropy of a bit state is $\log_2 2 = 1$.
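A small numerical check makes the uniqueness claim plausible. The sketch below uses hypothetical helper names and assumes, as for the Cartesian-pair combination, that I + J contains #(I)·#(J) record states. It confirms that log2 of the record count gives the bit state the value 1 and is additive, while a version shifted by a nonzero constant c is not, because the shift enters once on the left of S(I + J) = S(I) + S(J) and twice on the right. This is one way to see why the additive constant must vanish; it is not meant to reproduce the argument in the text.

```python
import math


def info_entropy(n_records: int) -> float:
    """Hartley-Shannon entropy, in bits, of an information state with n_records records."""
    return math.log2(n_records)


def combined_records(n_i: int, n_j: int) -> int:
    """Record count of I + J, assuming + forms Cartesian pairs of record states."""
    return n_i * n_j


def is_additive(entropy, n_i: int, n_j: int) -> bool:
    """Check S(I + J) == S(I) + S(J) for one pair of information states."""
    return math.isclose(entropy(combined_records(n_i, n_j)), entropy(n_i) + entropy(n_j))


# The bit state (two record states) has quasi-entropy 1.
print(info_entropy(2))

# log2 of the count is additive; shifting it by a constant c != 0 is not.
for c in (0.0, 0.5):
    shifted = lambda n, c=c: info_entropy(n) + c
    print(c, is_additive(shifted, 2, 4))
```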

6. Demons

Maxwell’s demon accomplishes changes in thermodynamic states by acquiring and using information. For example, a demon that operates a trapdoor between two containers of gas can arrange for all of the gas molecules to end up in one of the containers, “compressing” the gas without work. As we have seen, it is also possible to imagine a reversible demon, which acquires and manipulates information in a completely reversible way. If such a demon produces a transformation from state x to state y by acquiring k bits of information in its memory, it can accomplish the reverse transformation (from y to x) while erasing k bits from its memory.

Maxwell’s demon is a key concept in axiomatic information thermodynamics, and we introduce a new axiom to describe “demonic” processes.

Axiom 6. Demons: Suppose and such that .

- (a)

There exists such that .

- (b)

For any , either or .

In Part (a), we assert that what one demon can do (transforming a to b by acquiring information in J), another demon can undo (transforming b to a by acquiring information in I). Part (b) envisions a reversible demon. Any amount of information in I is either large enough that we can turn a to b by acquiring I, or small enough that we can erase the information by turning b to a.

A process is said to be demonically possible if one of two equivalent conditions holds:

There exists an information state such that either or .

There exists an information process such that is possible; that is, either or .

It is not hard to see that these are equivalent. Suppose we have such that . Then, for any , . Conversely, we note that for any . Thus, if then as well.

If a process is possible, then it is also demonically possible, since if it is also true that . For singleton processes in , moreover, the converse is also true. Suppose and is demonically possible. Then, there exists such that either or . Either way, either trivially or by an application of Axiom 6, there must be so that . A single record state is a singleton information state in . Thus, by Axiom 6 it must be that either or . Since , we find that , and so is possible.

A singleton process is demonically possible if and only if it is possible. This means that we can use processes involving demons to understand processes that do not. In fact, we can use Axiom 6 to prove a highly significant fact about the → relation on singleton eidostates.

Theorem 4. Suppose . If and are possible, then is possible.

Proof. First, we note the general fact that, if is a possible singleton process, then there exist so that and .

Given our hypothesis, therefore, there must be such that and . Then

That is, is demonically possible, and hence possible. ☐

This fact is so fundamental that Giles made it an axiom in his theory. For us, it is a straightforward consequence of the axiom about processes involving demons. It tells us that the set of singleton states is partitioned into equivalence classes, within each of which all states are related by ⇌.

This statement is more primitive than, but closely related to, a well-known principle called the Comparison Hypothesis. The Comparison Hypothesis deals with a state relation called adiabatic accessibility (denoted ≺), which is definable in Giles’s theory (and ours) but is taken as an undefined relation in some other axiomatic developments. According to the Comparison Hypothesis, if X and Y are states in a given thermodynamic space, either $X \prec Y$ or $Y \prec X$. Lieb and Yngvason, for instance, show that the Comparison Hypothesis can emerge as a consequence of certain axioms for thermodynamic states, spaces, and equilibrium [2,3].

Theorem 4 also sheds light on our axiom about conditional processes, Axiom 4. In Part (a) of this axiom, we suppose that for some and . The axiom itself allows us to infer that for every . However, Theorem 4 now implies that, for every , either or . In other words, Part (a) of Axiom 4, similar to Part (b) of the same axiom, only applies to uniform eidostates.

Finally, we introduce one further “demonic” axiom.

Axiom 7. Stability: Suppose and . If for arbitrarily large values of n, then .

According to the Stability Axiom, if a demon can transform arbitrarily many copies of eidostate A into arbitrarily many copies of B while acquiring a bounded amount of information, then we may say that $A \to B$. This can be viewed as a kind of “asymptotic regularization” of the → relation. The form of the Stability Axiom that we have chosen is a particularly simple one, and it suffices for our purposes in this paper. However, more sophisticated axiomatic developments might require a refinement of the axiom. Compare, for instance, Axiom 2.1.3 in Giles to its refinement in Axiom 7.2.1 [1].

To illustrate the use of the Stability Axiom, suppose that and such that . Our axioms do not provide a “cancellation law” for information states, so we cannot immediately conclude that . However, we can show that for all positive integers n. The case $n = 1$ holds since . Now, we proceed inductively, assuming that for some n. Then,

Thus, for arbitrarily large (and indeed all) values of n. By the Stability Axiom, we see that . Thus, there is after all a general cancellation law for information states that appear on both sides of the → relation.

7. Irreversibility for Singleton Processes

From our two “demonic” axioms (Axioms 6 and 7), we can use the properties of information states to derive an irreversibility function on singleton processes. Let denote the set of possible singleton processes. This is a subgroup of the Abelian group . Thus, if we can find an irreversibility function on , we will be able to extend it to all of .

We begin by proving a useful fact about possible singleton processes:

Theorem 5. Suppose so that is possible. Then, for any integers , either or .

Proof. If , the result is easy. Suppose that , so that for positive integer k. By Axiom 6, either or . We can then append the information state to both sides and rearrange the components. The argument for is exactly similar. ☐

This fact has a corollary that we may state using the → relation on processes. Suppose is a possible singleton process, and let be integers with . Then, either or (so that is either natural or antinatural) for the bit process .

Given , therefore, we can define two sets of rational numbers:

where . Both sets are nonempty and every rational number is in at least one of these sets. Furthermore, if and , we have that and , and so

Hence, . Since is itself a natural irreversible process, it follows that , and so

Every element of is an upper bound for . It follows that and form a Dedekind cut of the rationals, which leads us to the following important result.

Theorem 6. For , define . Then, is an irreversibility function on .

Proof. First, we must show that is additive. Suppose . If and , then , and so . It follows that . The corresponding argument involving and proves that . Thus, must be additive.

Next, we must show that the value of tells us the type of the process . If , then but , and so but . That is, is natural irreversible. Likewise, if , then but , from which we find that must be antinatural irreversible. Finally, if , we find that and for arbitrarily large values of q. From Axiom 7, we may conclude that , and so is reversible. ☐
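The Dedekind-cut construction also suggests how the irreversibility of a singleton process could be estimated from yes/no questions about combined processes. The sketch below is illustrative only. It assumes a hypothetical oracle `is_natural(p, q)` that reports whether q copies of the process, combined with the erasure of p bits, form a natural process; under an additive reading of Theorem 7 that holds exactly when p/q lies in the lower set of the cut. For fixed q, a binary search over p returns the largest p/q in the lower set, which lies within 1/q below the cut.

```python
import math
from fractions import Fraction
from typing import Callable


def lower_cut_estimate(is_natural: Callable[[int, int], bool], q: int, p_bound: int) -> Fraction:
    """Largest p/q in the lower set of the cut, for -p_bound <= p < p_bound.

    is_natural(p, q) is a hypothetical oracle: True when q copies of the
    singleton process, combined with erasing p bits, form a natural process.
    Requires is_natural(-p_bound, q) to be True and is_natural(p_bound, q) False,
    and relies on the lower set being closed downward in p.
    """
    lo, hi = -p_bound, p_bound
    while hi - lo > 1:            # binary search on the integer numerator p
        mid = (lo + hi) // 2
        if is_natural(mid, q):
            lo = mid              # mid/q lies in the lower set
        else:
            hi = mid              # mid/q lies in the upper set only
    return Fraction(lo, q)


# Simulated oracle for a process whose irreversibility (unknown to the search)
# is sqrt(2) bits: the combination is natural iff q*sqrt(2) - p >= 0.
hidden = math.sqrt(2)


def oracle(p: int, q: int) -> bool:
    return q * hidden - p >= 0


for q in (10, 1000, 100000):
    estimate = lower_cut_estimate(oracle, q, p_bound=10 * q)
    print(q, estimate, hidden - float(estimate))   # gap is in [0, 1/q)
```

Raising q tightens the estimate, mirroring the way the cut pins down a unique real number even though each individual question involves only whole processes and whole bits.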

Notice that we have arrived at an irreversibility function for possible singleton processes—those most analogous to the ordinary processes in Giles or any text on classical thermodynamics—from axioms about information and processes involving demons (Axioms 5–7). In our view, such ideas are not “extras” to be appended onto a thermodynamic theory, but are instead central concepts throughout. In ordinary thermodynamics, the possibility of a reversible heat engine can have implications for processes that do not involve any heat engines at all. In the information thermodynamics whose axiomatic foundations we are exploring, the possibility of a Maxwell’s demon has implications even for situations in which no demon acts.

We now have irreversibility functions for both information processes and singleton processes. These are closely related. In fact, it is possible to prove the following general result:

Theorem 7. If and , then the combined process is natural if and only if .

Since the set of possible singleton processes is a subgroup of the Abelian group of all singleton processes, we can extend the additive irreversibility function to all of . Though is unique on the possible set , its extension to is generally not unique.

8. Components of Content and Entropy

Our axiomatic theory of information thermodynamics is fundamentally about the set of eidostates . However, the part of that theory dealing with the set of singleton eidostates includes many of the concepts and results of ordinary axiomatic thermodynamics [1]. We have a group of singleton processes containing a subgroup of possible processes, and we have constructed an irreversibility function on that may be extended to all of . From these we can establish several facts.

We can construct components of content, which are the abstract versions of conserved quantities. A component of content Q is an additive function on such that, if the singleton process is possible, then . (In conventional thermodynamics, components of content include energy, particle number, etc.)

We can find a sufficient set of components of content. The singleton process is possible if and only if for all Q in the sufficient set.

We can use to define a quasi-entropy on as follows: . This is an additive function on states in such that .

Because the extension of the irreversibility function from to all of is not unique, the quasi-entropy is not unique either. How could various quasi-entropies differ? Suppose and are two different extensions of the same original , leading to two quasi-entropy functions and on . Then, the difference is a component of content. That is, if is possible,

since and agree on , which contains .

Another idea that we can inherit without alteration is the concept of a mechanical state. A mechanical state is a singleton state that reversibly stores one or more components of content, in much the same way that we can store energy reversibly as the work done to lift or lower a weight. The mechanical state in this example is the height of the weight. In Giles’s theory [1], mechanical states are the subject of an axiom, which we also adopt:

Axiom 8. Mechanical states: There exists a subset of mechanical states such that:

- (a)

If , then .

- (b)

For , if then .

Nothing in this axiom asserts the actual existence of any mechanical state. It might be that . Furthermore, the choice of the designated set is not determined solely by the → relations among the states. For instance, the set of record states might be included in , or not. This explains why the introduction of mechanical states must be phrased as an axiom, rather than a definition: a complete specification of the system must include the choice of which set is to be designated as . Whatever choice is made for , the set of mechanical processes (i.e., those equivalent to for ) will form a subgroup of .

A mechanical state may “reversibly store” a component of content Q, but it need not be true that every Q can be stored like this. We say that a component of content Q is non-mechanical if for all . For example, we might store energy by lifting or lowering a weight, but we cannot store particle number in this way.

Once we have mechanical states and processes, we can give a new classification of processes. A process is said to be adiabatically natural (possible, reversible, antinatural) if there exists a mechanical process such that is natural (possible, reversible, antinatural). We can also define the “adiabatic accessibility” relation for states, as mentioned in Section 6: $a \prec b$ whenever the process from $a$ to $b$ is adiabatically natural.

The set of mechanical states allows us to refine the idea of a quasi-entropy into an entropy, which is a quasi-entropy that takes the value $0$ for any mechanical state m. Such a function is guaranteed to exist. We end up with a characterization theorem, identical to a result of Giles [1], that summarizes the general thermodynamics of singleton eidostates in our axiomatic theory.

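Theorem 8. There exist an entropy function S and a set of components of content Q on singleton states with the following properties:

- (a)

For any singleton states a and b, $S(a + b) = S(a) + S(b)$.

- (b)

For any singleton states a and b and component of content Q, $Q(a + b) = Q(a) + Q(b)$.

- (c)

For any singleton states a and b, $a \to b$ if and only if $S(a) \leq S(b)$ and $Q(a) = Q(b)$ for every component of content Q.

- (d)

$S(m) = 0$ for all mechanical states m.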

An entropy function is not unique. Two entropy functions may differ by a non-mechanical component of content.

The entropy function on is related to the information entropy function we found for information states in . Suppose we have and . Then, Theorem 7 tells us that only if

Let represent the set of eidostates that are similar to a singleton state combined with an information state. Then, is an entropy function on . In the next section, we will extend the domain of the entropy function even further, to the set of all uniform eidostates.
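To see how singleton and information irreversibilities trade off, consider the demon of Section 6, which turns x into y while acquiring k bits and can undo the change while erasing k bits. The sketch below assumes that the criterion in Theorem 7 has the additive form S(b) − S(a) + log2(#J/#I) ≥ 0 for a possible singleton process from a to b combined with an information process from I to J, with entropies in bits. The function name and the numerical entropies are hypothetical.

```python
import math


def combined_is_natural(s_a: float, s_b: float, n_i: int, n_j: int, tol: float = 1e-12) -> bool:
    """Whether a + I -> b + J, assuming the additive criterion of Theorem 7.

    s_a, s_b: entropies (bits) of singleton states a, b, where the process
    from a to b is taken to be possible; n_i, n_j: record-state counts of I, J.
    """
    singleton_part = s_b - s_a                              # entropy change of a -> b
    information_part = math.log2(n_j) - math.log2(n_i)      # log2(#J / #I)
    return singleton_part + information_part >= -tol


k = 3
s_x, s_y = 10.0, 7.0   # hypothetical: y has k fewer bits of entropy than x

print(combined_is_natural(s_x, s_y, 1, 2 ** k))   # demon acquires k bits: allowed
print(combined_is_natural(s_y, s_x, 2 ** k, 1))   # reverse while erasing k bits: allowed
print(combined_is_natural(s_x, s_y, 1, 1))        # no information used: forbidden
```

Both the forward and reverse combined processes sit exactly at zero total irreversibility, which is the numerical signature of a reversible demon.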

9. State Equivalence

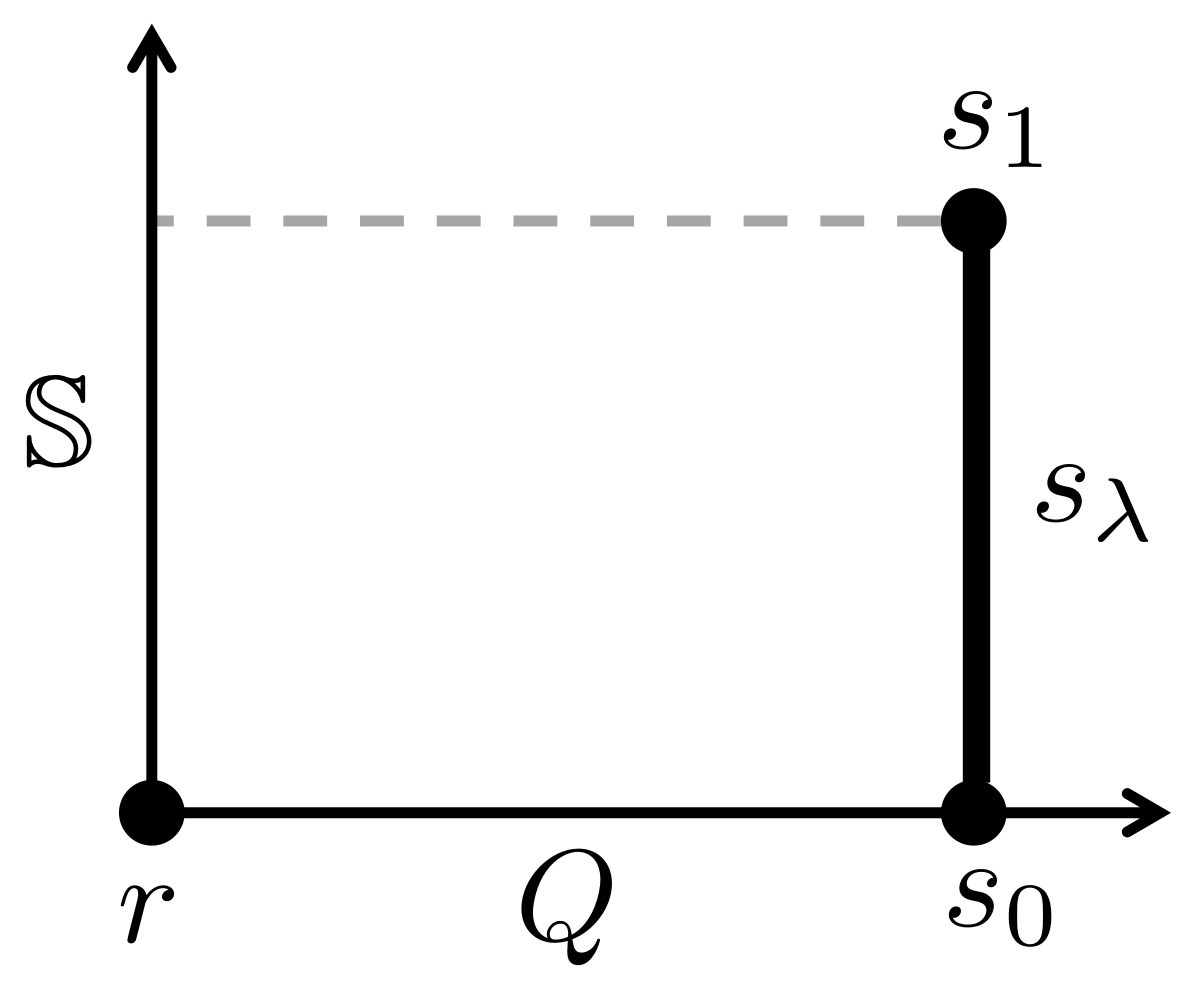

Consider a thought-experiment (illustrated in Figure 4) in which a one-particle gas starts out in a volume and a second thermodynamic system starts out in one of three states , or . We assume that all conserved quantities are the same for these three states, but they may differ in entropy. We can formally describe the overall situation by the eidostate , where is uniform.

We can reversibly transform each of the states to the same state e, compensating for the various changes in entropy by expanding or contracting the volume occupied by the gas. That is, we can have for adjacent but non-overlapping volumes . Axiom 4 indicates that we can write , where .

Now, we note that V can itself be reversibly transformed into a singleton eidostate v. A gas molecule in one of the sub-volumes (eidostate V) can be turned into a molecule in the whole volume (eidostate v) by removing internal partitions between the sub-volumes; and when we re-insert these partitions the particle is once again in just one of the sub-volumes. Thus, $V \rightleftharpoons v$. To summarize, we have

The uniform eidostate E, taken together with the gas state , can be reversibly transformed into the singleton state . We call this a state equivalence for the uniform eidostate E. By choosing the state e properly (say, by letting for some k), we can also guarantee that the volume v is larger than , so that by free expansion.

This discussion motivates our final axiom, which states that this type of reversible transformation is always possible for a uniform eidostate.

Axiom 9. State equivalence: If E is a uniform eidostate then there exist states such that and .

Axiom 9 closes a number of gaps in our theory. For example, our previous axioms (Axioms 1–8) do not by themselves guarantee that any state has a nonzero entropy. With the new axiom, however, we can prove that such states exist. The bit state , which is uniform, has some state equivalence given by . Since the eidostates on each side of this relation are in , we can determine the entropies on each side. We find that

It follows that at least one of the states must have .

State equivalence also allows us to define the entropy of any uniform eidostate . If then we let

We must first establish that this expression is well-defined. If we have two state equivalences for the same E, so that and , then

from which it follows that

Thus, our definition for does not depend on our choice of state equivalence for E.

Is an entropy function on ? It is straightforward to show that is additive on and that for any mechanical state m. It remains to show that, for any with possible, if and only if . We will use the state equivalences and .

Suppose first that . Then, and so

From this, it follows that and hence .

We can actually extract one more fact from this argument. If we assume that is possible, it must also be true that is a possible singleton process. If we now suppose that , we know that and thus

Therefore, , as desired.

We have extended the entropy to uniform eidostates. It is even easier to extend any component of content function Q to these states. If , then any must have , since . Thus, we can define for any . This is additive because the elements of are combinations of states in E and F. Furthermore, suppose we have a state equivalence . Since we assume in a state equivalence, . By the conditional process axiom (Axiom 4) we know that for any . It follows that .

Now, let and be uniform eidostates with state equivalences . Suppose further that for every component of content Q. We know that , and for every component of content. Thus

It follows that , i.e., that is a possible eidostate process.

We have therefore extended Theorem 8 to all uniform eidostates. We state the new result here.

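Theorem 9 (Uniform eidostate thermodynamics). There exist an entropy function S and a set of components of content Q on uniform eidostates with the following properties:

- (a)

For any uniform eidostates E and F, $S(E + F) = S(E) + S(F)$.

- (b)

For any uniform eidostates E and F and component of content Q, $Q(E + F) = Q(E) + Q(F)$.

- (c)

For any uniform eidostates E and F, $E \to F$ if and only if $S(E) \leq S(F)$ and $Q(E) = Q(F)$ for every component of content Q.

- (d)

$S(m) = 0$ for all mechanical states m.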

The set of uniform eidostates includes the singleton states in , the information states in , all combinations of these, and perhaps many other states as well. (Non-uniform eidostates in might exist, as we will see in the model we discuss in Section 12, but their existence cannot be proved from our axioms.) The type of every process involving uniform eidostates can be determined by a single entropy function (which must not decrease) and a set of components of content (which must be conserved).

10. Entropy for Uniform Eidostates

We have extended the entropy function from singleton states and information states to all uniform eidostates. It turns out that this extension is unique. The following theorem and its corollaries actually allow us to compute the entropy of any uniform eidostate E from the entropies of the states contained in E.

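Theorem 10. Suppose the uniform eidostate E is a disjoint union of uniform eidostates $E_1$ and $E_2$. Then

$$S(E) = \log\left(2^{S(E_1)} + 2^{S(E_2)}\right).$$

Proof. $E$ and $E_k$ ($k = 1, 2$) all have equal components of content. Define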

Note that, if we replace by for , then these new eidostates are still disjoint and

These eidostates have the same components of content as the original E. Furthermore,

We can find a uniform eidostate with the same components of content such that is less than or equal to , and . (It suffices to pick to be the state of smallest entropy among , and E.) Then, there exist integers such that

There are disjoint information states containing record states.

There are disjoint information states containing record states.

and have and record states, respectively.

We have

That is, we choose so that is between and . To put it more simply, .

Once we have , we can write that

Adding these inequalities for and taking the logarithm yields

How far apart are the two ends of this chain of inequalities? Here is a useful fact about base-2 logarithms: If , then . This implies

The two ends of the inequality differ by less than .

We can get another chain of inequalities by applying Axiom 4 about conditional processes. Since all of our uniform eidostates have the same components of content, we know that

From the axiom, we can therefore say

which implies that

We have two quantities that lie in the same interval. Their separation is therefore bounded by the interval width, i.e., less than . Therefore,

How big are the numbers ? We can make such numbers as large as we like by considering instead the eidostates , where I is an information state. As we have seen, . Given any , we can choose I so that

Then, , and so

Since this is true for any , we must have , and so

as desired. ☐

Theorem 10 has a corollary, which we obtain by applying the theorem inductively:

Theorem 11. If E is a uniform eidostate, then

$$S(E) = \log \sum_{e \in E} 2^{S(e)}.$$

The entropy of any uniform eidostate is a straightforward function of the entropies of the states contained therein. If E contains more than one state, we notice that $S(e) < S(E)$ for any $e \in E$. It follows that the process that takes e to E is a natural irreversible process.

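To take a simple example of Theorem 11, consider the entropy of an information state. Every record state r has $S(r) = 0$. Thus, for an information state $I = \{r_1, \ldots, r_n\}$,

$$S(I) = \log \sum_{k=1}^{n} 2^{0} = \log n,$$

as we have already seen.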
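Theorem 11, as read here, expresses the entropy of a uniform eidostate as the base-2 logarithm of the sum of 2^{S(e)} over its states. The short Python sketch below evaluates that expression, using the standard max-shift (log-sum-exp) trick to avoid overflow, and checks it against the information-state example and the two-part splitting of Theorem 10. Entropies are assumed to be in bits, and the function name is hypothetical.

```python
import math


def uniform_entropy(state_entropies):
    """Entropy in bits of a uniform eidostate from its states' entropies.

    Computes log2(sum of 2**S(e)) with the usual max-shift (log-sum-exp) so
    that large entropies do not overflow.
    """
    values = list(state_entropies)
    if not values:
        raise ValueError("an eidostate is nonempty")
    m = max(values)
    return m + math.log2(sum(2.0 ** (s - m) for s in values))


# Information state with 8 record states, each of entropy 0: log2(8) = 3.
print(uniform_entropy([0.0] * 8))

# Theorem 10 consistency: split E into disjoint parts E1 and E2.
E1, E2 = [1.0, 2.5], [0.3]
lhs = uniform_entropy(E1 + E2)
rhs = math.log2(2 ** uniform_entropy(E1) + 2 ** uniform_entropy(E2))
print(math.isclose(lhs, rhs))
```

Whenever E has more than one state, the result exceeds the largest state entropy, matching the remark that the process from any e in E to E is natural irreversible.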

11. Probability

An eidostate represents a state of knowledge of a thermodynamic agent. It is, as we have said, a simple list of possible states, without any assignment of probabilities to them. If the agent is to use probabilistic reasoning, then it needs to assign conditional probabilities of the form $P(a|E)$ where $a \in E$.

The entropy formula in Theorem 11 allows us to make such an assignment based on the entropy itself, provided the eidostate conditioned upon is uniform. Suppose E is a uniform eidostate and let $a \in E$. Then, the entropic probability of a conditioned on E is

$$P(a|E) = \frac{2^{S(a)}}{2^{S(E)}}.$$

Clearly, $0 < P(a|E) \leq 1$ and $\sum_{a \in E} P(a|E) = 1$. It is worth noting that, although E is “uniform” (in the sense that all $a \in E$ have exactly the same conserved components of content), the probability distribution $P(a|E)$ is not uniform, but assigns a higher probability to states of higher entropy.
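The entropic probabilities follow directly from the state entropies. The sketch below uses hypothetical function names, entropies in bits, and S(E) computed from the Theorem 11 formula; it shows that the probabilities sum to one and favor higher-entropy states even though every state of E has the same components of content.

```python
import math


def uniform_entropy(state_entropies):
    """Theorem 11 entropy in bits: log2 of the sum of 2**S(e), computed stably."""
    m = max(state_entropies)
    return m + math.log2(sum(2.0 ** (s - m) for s in state_entropies))


def entropic_probabilities(state_entropies):
    """P(a|E) = 2**S(a) / 2**S(E) for each state a of a uniform eidostate E."""
    s_e = uniform_entropy(state_entropies)
    return [2.0 ** (s - s_e) for s in state_entropies]


probs = entropic_probabilities([0.0, 1.0, 2.0])
print(probs)        # higher-entropy states receive higher probability
print(sum(probs))
```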

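We can generalize entropic probabilities a bit further. Let A be any set of states (be it an eidostate or not) and let E be a uniform eidostate. Then, $A \cap E$ is either a uniform eidostate or the empty set ∅. If we formally assign $S(\varnothing) = -\infty$, then both E and $A \cap E$ have well-defined entropies. Then, we define

$$P(A|E) = \frac{2^{S(A \cap E)}}{2^{S(E)}}.$$

Obviously, $0 \leq P(A|E) \leq 1$. Now, consider two disjoint sets A and B along with E. The set $(A \cup B) \cap E$ is a disjoint union of uniform eidostates (or empty sets) $A \cap E$ and $B \cap E$. Thus, by Theorem 10,

$$P(A \cup B|E) = P(A|E) + P(B|E),$$

in accordance with the rules of probability. We also have the usual rule for conditional probabilities. Suppose E and F are uniform eidostates such that $F \subseteq E$. Then

$$P(A|F) = \frac{P(A \cap F|E)}{P(F|E)}.$$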
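The additivity and conditioning rules can be checked on a toy example. In this sketch a uniform eidostate is represented, hypothetically, as a dictionary from state labels to entropies in bits; an arbitrary set of labels plays the role of A, and the convention S(∅) = −∞ makes states outside E contribute zero probability. Any nonempty subset of E is treated as a uniform eidostate, as the text assumes for A ∩ E.

```python
import math


def set_entropy(entropies):
    """Entropy (bits) of a set of states via Theorem 11, with S(empty) = -inf."""
    if not entropies:
        return -math.inf
    m = max(entropies)
    return m + math.log2(sum(2.0 ** (s - m) for s in entropies))


def prob(event, eidostate):
    """P(A|E) = 2**S(A intersect E) / 2**S(E).

    event: any set of state labels; eidostate: dict mapping the labels of a
    uniform eidostate's states to their entropies (hypothetical representation).
    """
    inside = [s for label, s in eidostate.items() if label in event]
    return 2.0 ** (set_entropy(inside) - set_entropy(list(eidostate.values())))


E = {"a": 0.0, "b": 1.0, "c": 2.0, "d": 0.5}
A, B = {"a", "b", "z"}, {"c"}           # "z" is not in E, so it contributes nothing
F = {k: E[k] for k in ("a", "b", "c")}  # a sub-eidostate of E (assumed uniform)

print(prob(A | B, E), prob(A, E) + prob(B, E))              # additivity for disjoint A, B
print(prob(A, F), prob(A & set(F), E) / prob(set(F), E))    # conditional rule
```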

The entropy formula in Theorem 11 and the probability assignment in Equation (50) call to mind familiar ideas from statistical mechanics. According to Boltzmann’s formula, the entropy is $S = \log W$, where W is (depending on the context) the number (or phase space volume or Hilbert space dimension) of the microstates consistent with macroscopic data about a system. In the microcanonical ensemble, a uniform probability distribution is assigned to these microstates. In an eidostate E comprising non-overlapping macrostates $e_1, \ldots, e_n$, we would therefore expect $W(E) = \sum_k W(e_k)$, and the probability of state $e_k$ should be proportional to $W(e_k) = 2^{S(e_k)}$. However, in our axiomatic system Theorem 11 and Equation (50) do not arise from any assumptions about microstates, but solely from the “phenomenological” → relation among eidostates.

Even though the entropy function S is not unique, the entropic probability assignment is unique. Suppose S and S′ are two entropy functions for the same states and processes. Then, as we have seen, the difference $Q = S' - S$ is a component of content. All of the states within a uniform eidostate E (as well as E itself) have the same values for all components of content. That is, $Q(a) = Q(E)$ for any $a \in E$. Thus,

$$P'(a|E) = \frac{2^{S'(a)}}{2^{S'(E)}} = \frac{2^{S(a) + Q(E)}}{2^{S(E) + Q(E)}} = P(a|E).$$

(Both probabilities are zero for $a \notin E$, of course.)
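The uniqueness argument amounts to the fact that adding the same constant to every state entropy leaves the normalized distribution unchanged. A minimal sketch, in which the value 7.25 is an arbitrary, hypothetical stand-in for the common value of the component of content S′ − S on E:

```python
import math


def entropic_probabilities(state_entropies):
    """P(a|E) = 2**(S(a) - S(E)), with S(E) from the Theorem 11 formula."""
    m = max(state_entropies)
    s_e = m + math.log2(sum(2.0 ** (s - m) for s in state_entropies))
    return [2.0 ** (s - s_e) for s in state_entropies]


entropies = [0.0, 1.5, 2.0]   # S(a) for the states of a uniform eidostate E
q_of_E = 7.25                 # hypothetical common value Q(a) = Q(E) of S' - S
p = entropic_probabilities(entropies)
p_prime = entropic_probabilities([s + q_of_E for s in entropies])
print(all(math.isclose(x, y) for x, y in zip(p, p_prime)))
```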

The entropic probability assignment is not the only possible probability assignment, but it does have a number of remarkable properties. For instance, suppose E and F are two uniform eidostates. If we prepare them independently, we have the combined eidostate $E + F$, and the probability of some particular state $a + b \in E + F$ is

$$P(a + b|E + F) = P(a|E)\, P(b|F),$$

as we would expect for independent events.
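Independence can be checked the same way: by additivity, the states of E + F have entropies S(a) + S(b), and the entropic distribution over them factorizes. A minimal sketch with arbitrary illustrative entropies in bits (the function name is hypothetical):

```python
import math


def entropic_probabilities(state_entropies):
    """P(a|E) = 2**(S(a) - S(E)), with S(E) from the Theorem 11 formula."""
    m = max(state_entropies)
    s_e = m + math.log2(sum(2.0 ** (s - m) for s in state_entropies))
    return [2.0 ** (s - s_e) for s in state_entropies]


E = [0.0, 1.0]          # entropies of the states of E
F = [2.0, 2.0, 3.0]     # entropies of the states of F
# States of E + F are pairs a + b, with S(a + b) = S(a) + S(b) by additivity.
EF = [sa + sb for sa in E for sb in F]

p_E, p_F, p_EF = (entropic_probabilities(x) for x in (E, F, EF))
for i in range(len(E)):
    for j in range(len(F)):
        assert math.isclose(p_EF[i * len(F) + j], p_E[i] * p_F[j])
print("joint probabilities factorize")
```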

The entropic probability also yields an elegant expression for the entropy of a uniform eidostate E.

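Theorem 12. Suppose E is a uniform eidostate, and $P(a|E)$ is the entropic probability for state $a \in E$. Then

$$S(E) = \langle S \rangle + H(P),$$

where $\langle S \rangle = \sum_{a \in E} P(a|E)\, S(a)$ is the average state entropy in E and $H(P) = -\sum_{a \in E} P(a|E) \log P(a|E)$ is the Shannon entropy of the distribution.

Proof. We first note that, for any $a \in E$, $P(a|E) = 2^{S(a) - S(E)}$. We rewrite this as

$$S(E) = S(a) - \log P(a|E)$$

and take the mean value with respect to the $P(a|E)$ probabilities:

$$S(E) = \sum_{a \in E} P(a|E)\, S(a) - \sum_{a \in E} P(a|E) \log P(a|E).$$

Therefore, $S(E) = \langle S \rangle + H(P)$, as desired. ☐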
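The decomposition in Theorem 12 is easy to verify numerically. The sketch below (hypothetical helper name, entropies in bits) returns S(E), the average state entropy, and the Shannon entropy of the entropic distribution. For a pure information state the average state entropy is 0 and the Shannon term equals log2 n; for a singleton state the Shannon term vanishes.

```python
import math


def decomposition(state_entropies):
    """Return (S(E), <S>, H(P)) for a uniform eidostate, all in bits."""
    m = max(state_entropies)
    s_e = m + math.log2(sum(2.0 ** (s - m) for s in state_entropies))  # Theorem 11
    probs = [2.0 ** (s - s_e) for s in state_entropies]                 # entropic P
    mean_s = sum(p * s for p, s in zip(probs, state_entropies))         # <S>
    shannon = -sum(p * math.log2(p) for p in probs)                     # H(P)
    return s_e, mean_s, shannon


s_e, mean_s, shannon = decomposition([0.0, 1.0, 2.0, 2.0])
print(s_e, mean_s + shannon)
```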

We previously said that the list of possible states in an eidostate represents a kind of information. Theorem 12 puts this intuition on a quantitative footing. The entropy of a uniform eidostate E can be decomposed into two parts: the average entropy of the states, and an additional term representing the information contained in the distinction among the possible states. For a singleton state, the entropy is all of the first sort. For a pure information state I, it is all of the second.

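In fact, the decomposition itself uniquely picks out the entropic probability assignment. Suppose $P(a|E)$ is the entropic probability of a given E, and $q(a)$ is some other probability distribution over states in E. By Gibbs’s inequality [17],

$$-\sum_{a \in E} q(a) \log q(a) \leq -\sum_{a \in E} q(a) \log P(a|E),$$

with equality if and only if $q(a) = P(a|E)$ for all $a \in E$. We find that

$$-\sum_{a \in E} q(a) \log P(a|E) = -\sum_{a \in E} q(a) \bigl(S(a) - S(E)\bigr) = S(E) - \langle S \rangle_q,$$

where $\langle S \rangle_q = \sum_{a \in E} q(a)\, S(a)$, and so

$$\langle S \rangle_q + H(q) \leq S(E),$$

with equality if and only if the q distribution is the entropic one.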
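Gibbs’s inequality can likewise be illustrated numerically: for any distribution q over the states of E, the sum of the q-average state entropy and H(q) never exceeds S(E), and reaches it only for the entropic distribution. A sketch with arbitrary illustrative entropies in bits and two alternative distributions (the helper name is hypothetical):

```python
import math


def entropy_budget(state_entropies, q):
    """<S>_q + H(q) in bits for a distribution q over the states of E."""
    mean_s = sum(qa * s for qa, s in zip(q, state_entropies))
    shannon = -sum(qa * math.log2(qa) for qa in q if qa > 0)   # 0 log 0 taken as 0
    return mean_s + shannon


entropies = [0.0, 1.0, 2.0]
m = max(entropies)
s_e = m + math.log2(sum(2.0 ** (s - m) for s in entropies))   # Theorem 11
entropic = [2.0 ** (s - s_e) for s in entropies]

for name, q in [("entropic", entropic), ("flat", [1 / 3] * 3), ("skewed", [0.0, 0.1, 0.9])]:
    print(name, entropy_budget(entropies, q) <= s_e + 1e-12)
```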

An agent that employs entropic probabilities will regard the entropy of a uniform eidostate E as the sum of two parts, one the average entropy of the possible states and the other the Shannon entropy of the distribution. For an agent that employs some other probability distribution, things will not be so simple. Besides the average state entropy and the Shannon entropy of the distribution, $S(E)$ will include an extra, otherwise unexplained term. Thus, for a given collection of eidostates connected by the → relation, the entropic probability provides a uniquely simple account of the entropy of any uniform eidostate.

It is in this sense that we say that the entropic probability “emerges” from the entropy function S, just as that function itself emerges from the → relation among eidostates.

Some further remarks about probability are in order. Every formal basis for probability emphasizes a distinct idea about it. In Kolmogorov’s axioms [18], probability is simply a measure on a sample space. High-measure subsets are more probable. In the Bayesian approach of Cox [19], probability is a rational measure of confidence in a proposition. Propositions in which a rational agent is more confident are also more probable. Laplace’s early discussion [20] is based on symmetry. Symmetrically equivalent events (two different orderings of a shuffled deck of cards, for instance) are equally probable. (Zurek [21] has used a similar principle of “envariance” to discuss the origin of quantum probabilities.) In algorithmic information theory [16], the algorithmic probability of a bit string is related to its complexity. Simpler bit strings are more probable.

In a similar way, entropic probabilities express facts about state transformations. In a uniform eidostate E, any two states are related by a possible process. If $a \to b$ for states $a, b \in E$, then the output state is at least as probable as the input state: $P(b|E) \geq P(a|E)$.

12. A Model for the Axioms

A model for an axiomatic system may serve several purposes. The existence of a model establishes that the axioms are self-consistent. A model may also demonstrate that the system can describe an actual realistic physical situation. If the axioms have a variety of interesting models, then the axiomatic theory is widely applicable. We may almost say that the entire significance of an axiomatic system lies in the range of models for that system.

Terms that are undefined in an abstract axiomatic system are defined within a model as particular mathematical structures. The axioms of the system are provable properties of those structures. Therefore, a model for axiomatic information thermodynamics must include several elements:

A set of states and a collection of finite nonempty subsets of to be designated as eidostates.

A rule for interpreting the combination of states (+) in .

A relation → on .

A designated set of mechanical states (which might be empty).

Proofs of Axioms 1–9 within the model, including the general properties of →, the existence of record states and information states, etc.

The model will therefore involve specific meanings for , , +, → and so forth. It will also yield interpretations of derived concepts and results, such as entropy functions and conserved components of content. In the abstract theory, the combination is simply the Cartesian pair . This definition may suffice for the model, or the model may have a different interpretation of +. In any case, it must be true in the model that implies and .

Our first model for axiomatic thermodynamics is based on a set of “atomic” states, from which all states in and all eidostates in are constructed. We assign entropies and components of content to these states, which extend to composite states by additivity. Let us consider a simple but non-trivial example that has just one component of content Q. A suitable set of atomic states is shown in Figure 5. It includes a special state r (with and ) and a continuous set of states with and . The parameter ranges over the closed interval .

The set of states includes everything that can be constructed from by finite application of the pairing operation. In this way, we can build up with any non-negative integer value of the component of content and any entropy value . Indeed, there will typically be many different ways to create given values. To obtain and , for example, we might have .

Anticipating somewhat, we call an eidostate uniform if all of its elements have the same Q-value. We calculate the entropy of a uniform eidostate by applying Theorem 11 to it.

Our model allows any finite nonempty set of states to play the role of an eidostate. Hence, we have both uniform and non-uniform eidostates in . Each eidostate A has a finite Cartesian factorization

If A is uniform, then all of its factors are also uniform. If none of its factors are uniform, we say that A is completely non-uniform. More generally, we can write down an NU-decomposition for any eidostate A:

where is a completely non-uniform eidostate and is a uniform eidostate. Of course, if A itself is either completely non-uniform or uniform, one or the other of these eidostates may be absent from the decomposition. The NU-decomposition is unique up to similarity: If for completely non-uniform Ns and uniform Us, then and .

We can now define the → relation on in our model. If , we first write down NU-decompositions and . We say that provided three conditions hold:

Either and both do not exist, or .

Either and both do not exist, or only one exists and its Q-value is 0, or both exist and .

Either and both do not exist, or only exists and , or only exists and , or both exist and .

We may call these the N-criterion, Q-criterion, and S-criterion, and summarize their meaning as follows: $A \to B$ provided we can transform A to B by: (a) rearranging the non-uniform factors; and (b) transforming the uniform factors in a way that conserves Q and does not decrease S.
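For the special case in which both eidostates are uniform, the N-criterion is vacuous and the model’s → relation reduces to conserving Q and not decreasing entropy. The sketch below implements only that case. States are represented, hypothetically, as (Q, S) pairs, with distinct states allowed to share values; the entropy of a uniform eidostate is computed from the Theorem 11 formula; and record states are assumed to have Q = 0 and S = 0. Non-uniform eidostates and the one-sided clauses of the criteria are not handled.

```python
import math


def entropy(eidostate):
    """Theorem 11 entropy of an eidostate given as a list of (Q, S) pairs."""
    m = max(s for _, s in eidostate)
    return m + math.log2(sum(2.0 ** (s - m) for _, s in eidostate))


def q_value(eidostate):
    """Common Q-value of a uniform eidostate; rejects non-uniform input."""
    values = {q for q, _ in eidostate}
    if len(values) != 1:
        raise ValueError("eidostate is not uniform")
    return values.pop()


def arrow_uniform(a, b, tol=1e-12):
    """A -> B for uniform A and B: Q is conserved and entropy does not decrease."""
    return q_value(a) == q_value(b) and entropy(a) <= entropy(b) + tol


record = [(0, 0.0)]            # a single record state r (assumed Q = 0, S = 0)
bit = [(0, 0.0), (0, 0.0)]     # two distinct record states, listed separately
print(arrow_uniform(record, bit), arrow_uniform(bit, record))
print(arrow_uniform([(1, 0.2)], [(1, 0.5)]), arrow_uniform([(1, 0.5)], [(2, 0.5)]))
```

Acquiring a bit is allowed and erasing it is not, while a change of Q is ruled out regardless of entropy.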

Now, let us examine each of the axioms in turn.

- Axiom 1

The basic properties of eidostates follow by construction.

- Axiom 2

Part (a) holds because implies that A and B can have the same NU-decomposition. Part (b) holds because similarity, equality (for Q) and inequality (for ) are all transitive. Parts (c) and (d) make use of the general facts that and .

- Axiom 3

If , then . If , then it must be true that , and so . The S-criterion fails, so in this case as well.

- Axiom 4

For Part (a), we note that A must be uniform, and so is also uniform. The statement follows from the S-criterion. Part (b) also follows from the S-criterion.

- Axiom 5

The atomic state r with and is a record state, as is , etc. We can take our bit state to be . Since every information state is uniform with , every information process (including ) is possible.

- Axiom 6

Since all of the states of the form are uniform, the statements in this axiom follow from the $\mathbb{S}$-criterion.

- Axiom 7

Suppose . Since J is uniform, it must be that , from which it follows that . The $\mathbb{S}$-criterion for and follows from a typical stability argument, that is, if for arbitrarily large values of n, then it must be true that .

- Axiom 8

The set of mechanical states may be defined to include all states that can be constructed from the zero-entropy atomic state (, , etc.). The required properties of follow.

- Axiom 9

The uniform eidostate E has and . Choose an integer . Now, let (or if ), , and where . We find that , , and . It follows that and .

This model based on a simple set of atomic states has several sophisticated characteristics, including a non-trivial component of content Q and possible processes involving non-uniform eidostates. It is not difficult to create models of this type that are even richer and more complex. However, it may be objected that this type of model obscures one of the key features of axiomatic information thermodynamics. Here, the entropy function does not emerge from the → relation among eidostates, but instead is imposed by hand to define → within the model. We address this deficiency in our next model.

13. A Simple Quantum Model

Now, we present a model for the axioms in which the entropy function does emerge from the underlying structure. The model is a simple one without mechanical states or non-trivial components of content. Every eidostate is uniform and every process is possible. On the other hand, the model is based on quantum mechanics, and so is not devoid of features of interest.

Consider an agent A that can act upon an external qubit system Q having Hilbert space $\mathcal{H}_Q$. Based on the information the agent possesses, it may assign the states $|\psi_0\rangle$ or $|\psi_1\rangle$ to the qubit. These state vectors need not be orthogonal. That is, it may be that no measurement of Q can perfectly distinguish which of the two states is actually present. The states, however, correspond to states of knowledge of agent A, and the agent is able to perform different operations on Q depending on whether it judges the qubit to be in one state or the other. Our notion of information possessed by the agent is thus similar to Zurek’s concept of actionable information [22]. Roughly speaking, information is actionable if it can be used as a control variable for conditional unitary dynamics. This means that the two states of the agent’s memory ($|0\rangle_A$ and $|1\rangle_A$ in a Hilbert space $\mathcal{H}_A$) must be perfectly distinguishable, that is, orthogonal. Hence, if we include the agent in our description of the entire system, the states $|0\rangle_A \otimes |\psi_0\rangle$ and $|1\rangle_A \otimes |\psi_1\rangle$ are orthogonal, even if $|\psi_0\rangle$ and $|\psi_1\rangle$ are not.
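The orthogonality argument can be checked numerically. The NumPy sketch below is minimal. The particular angle and the two-dimensional slice of the agent space are arbitrary illustrative assumptions, not part of the model. The key fact is that the inner product of tensor-product states factorizes.

```python
import numpy as np

# Hypothetical pair of non-orthogonal qubit states the agent might assign to Q.
theta = np.pi / 8
psi0 = np.array([1.0, 0.0], dtype=complex)
psi1 = np.array([np.cos(theta), np.sin(theta)], dtype=complex)

# Orthogonal agent memory states |0>_A and |1>_A; a two-dimensional slice of H_A
# is enough for this illustration.
mem0 = np.array([1.0, 0.0], dtype=complex)
mem1 = np.array([0.0, 1.0], dtype=complex)

# Overlap of the qubit states alone: cos(theta), which is not zero.
qubit_overlap = np.vdot(psi0, psi1)

# Joint agent-plus-qubit states |0>_A (x) |psi0> and |1>_A (x) |psi1>.
joint0 = np.kron(mem0, psi0)
joint1 = np.kron(mem1, psi1)

# The inner product factorizes as <0|1>_A * <psi0|psi1>, so it vanishes.
joint_overlap = np.vdot(joint0, joint1)

print(abs(qubit_overlap), abs(joint_overlap))
```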

Our model for axiomatic information thermodynamics envisions a world consisting of an agent A and an unbounded number of external qubits. Nothing essential in our model would be altered if the external systems had $\dim \mathcal{H}_Q = d > 2$ (“qudits” instead of qubits). The thermodynamic states of the qubit systems are actually states of knowledge of the agent, and so we must include the corresponding state of the agent’s memory in our physical description. The quantum state space for our model is of the form:

$$\mathcal{H} = \mathcal{H}_A \otimes \mathcal{H}_{Q_1} \otimes \mathcal{H}_{Q_2} \otimes \mathcal{H}_{Q_3} \otimes \cdots$$

To be a bit more rigorous, we restrict $\mathcal{H}$ to vectors of the form $|\Psi\rangle \otimes |0\rangle \otimes |0\rangle \otimes \cdots$, where $|\Psi\rangle \in \mathcal{H}_A \otimes \mathcal{H}_{Q_1} \otimes \cdots \otimes \mathcal{H}_{Q_n}$ for some finite n, and $|0\rangle$ is a designated “zero” ket in $\mathcal{H}_Q$. Physical states in $\mathcal{H}$ have $\langle \Psi | \Psi \rangle = 1$. (The space $\mathcal{H}$ is not quite a Hilbert space, since it is not topologically complete, but this mathematical nicety will not affect our discussion.)

Since the Qs are qubits, $\dim \mathcal{H}_Q = 2$. The agent space $\mathcal{H}_A$, however, must be infinite-dimensional, so that it contains a countably infinite set of orthogonal quantum states. These are to be identified as distinct records of the agent’s memory.

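In our thermodynamic model, the elements of $\mathscr{S}$ (the thermodynamic “states”) are projection operators on $\mathcal{H}$. For any $a \in \mathscr{S}$, we have a projection on $\mathcal{H}$ of the form

$$\Pi_a = |a\rangle\langle a| \otimes P_a \otimes |0\rangle\langle 0| \otimes |0\rangle\langle 0| \otimes \cdots$$

where $|a\rangle$ is an agent state in $\mathcal{H}_A$ and $P_a$ is a non-null projection in $\mathcal{H}_{Q_1} \otimes \cdots \otimes \mathcal{H}_{Q_n}$ for some finite $n$. The value of n is determined by a specified integer function $n(a)$, which we call the length of the state a. Heuristically, the thermodynamic state a means that the state of the world lies in the subspace $\mathcal{V}_a$ onto which $\Pi_a$ projects. The agent’s memory is in the state $|a\rangle$ and the quantum state of the first $n(a)$ external qubits lies somewhere in the subspace onto which $P_a$ projects. (All of the subsequent qubits are in the state $|0\rangle$.)

Two distinct thermodynamic states correspond to orthogonal states of the agent’s memory. If $a, b \in \mathscr{S}$ with $a \neq b$, then $\langle a | b \rangle = 0$. The projections $\Pi_a$ and $\Pi_b$ are orthogonal to each other (so that $\Pi_a \Pi_b = 0$). However, it need not be the case that $P_a$ and $P_b$ are orthogonal.

Given a, the projection $P_a$ projects onto a subspace of $\mathcal{H}_{Q_1} \otimes \cdots \otimes \mathcal{H}_{Q_{n(a)}}$, and $\Pi_a$ projects onto the corresponding subspace $\mathcal{V}_a$ of $\mathcal{H}$. The dimension of this subspace is $\dim \mathcal{V}_a = \operatorname{Tr} \Pi_a = \operatorname{Tr} P_a$. Note that $1 \le \dim \mathcal{V}_a \le 2^{n(a)}$. We will assume that there are subspaces of every finite dimension: For any integer $k \ge 1$, there exists $a \in \mathscr{S}$ with $\dim \mathcal{V}_a = k$.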
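The structure of $\Pi_a$ can be checked on a finite truncation. In the NumPy sketch below, which is illustrative only, the agent space is cut down to four memory records and only two external qubits are kept. The model itself has an infinite-dimensional agent space and unboundedly many qubits. The helper `state_projection` and the chosen projections are hypothetical. The sketch shows that $\Pi_a$ and $\Pi_b$ are orthogonal even though $P_a$ and $P_b$ overlap, and that $\operatorname{Tr} \Pi_a$ gives the subspace dimension.

```python
import numpy as np
from functools import reduce

# Hypothetical finite truncation: 4 agent memory records and 2 external qubits.
AGENT_DIM = 4
N_QUBITS = 2
ZERO = np.array([[1, 0], [0, 0]], dtype=complex)   # |0><0| on one qubit


def state_projection(record, p_a):
    """Pi_a = |a><a| (x) P_a (x) |0><0| (x) ... on the truncated space.

    record: index of the agent memory state |a> in the truncated H_A.
    p_a: projection on the first n(a) qubits (a 2**n(a) x 2**n(a) matrix).
    """
    n_a = p_a.shape[0].bit_length() - 1             # length n(a), since dim = 2**n(a)
    agent = np.zeros((AGENT_DIM, AGENT_DIM), dtype=complex)
    agent[record, record] = 1.0
    return reduce(np.kron, [agent, p_a] + [ZERO] * (N_QUBITS - n_a))


plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
p_a = np.outer(plus, plus.conj())   # projection onto |+>, subspace dimension 1
p_b = np.eye(2, dtype=complex)      # whole qubit space, subspace dimension 2

pi_a = state_projection(0, p_a)
pi_b = state_projection(1, p_b)

dim_a = np.trace(pi_a).real         # Tr Pi_a = Tr P_a
dim_b = np.trace(pi_b).real
```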

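Suppose $a, b \in \mathscr{S}$ correspond to $\Pi_a = |a\rangle\langle a| \otimes P_a \otimes |0\rangle\langle 0| \otimes \cdots$ and $\Pi_b = |b\rangle\langle b| \otimes P_b \otimes |0\rangle\langle 0| \otimes \cdots$. Then, we will specify that the combined state $a + b$ corresponds to

$$\Pi_{a+b} = |a+b\rangle\langle a+b| \otimes P_a \otimes P_b \otimes |0\rangle\langle 0| \otimes \cdots$$

Since the state $a + b$ entails a distinct state of the agent’s knowledge, the agent state vector $|a+b\rangle$ is orthogonal to both $|a\rangle$ and $|b\rangle$. We also note that $n(a+b) = n(a) + n(b)$.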

Here is a clarifying example. Suppose $a, b, c \in \mathscr{S}$. Then, $(a + b) + c$ and $a + (b + c)$ are distinct thermodynamic states and hence orthogonal projections in $\mathcal{H}$, even though they correspond to exactly the same qubit states, described by $P_a \otimes P_b \otimes P_c$. The difference between $(a + b) + c$ and $a + (b + c)$ lies entirely in the distinct representations of the states in the agent’s memory.
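A similar truncated sketch illustrates the combination rule. The qubit part of a composite is a tensor product, so lengths add and subspace dimensions multiply. Two composites built from the same states in different groupings have identical qubit parts and differ only in their memory records. The truncation sizes, the helper `state_projection`, and the record indices assigned to composites are all hypothetical labels chosen for illustration.

```python
import numpy as np
from functools import reduce

# Hypothetical truncation: six agent memory records and three external qubits.
AGENT_DIM = 6
N_QUBITS = 3
ZERO = np.array([[1, 0], [0, 0]], dtype=complex)   # |0><0| on one qubit


def state_projection(record, p_qubits):
    """|record><record| (x) P (x) |0><0| (x) ... on the truncated space."""
    n = p_qubits.shape[0].bit_length() - 1          # length, since dim = 2**n
    agent = np.zeros((AGENT_DIM, AGENT_DIM), dtype=complex)
    agent[record, record] = 1.0
    return reduce(np.kron, [agent, p_qubits] + [ZERO] * (N_QUBITS - n))


# Single-qubit projections for hypothetical states a, b, c (each of length 1).
p_a = np.diag([1.0, 0.0]).astype(complex)           # subspace dimension 1
p_b = np.eye(2, dtype=complex)                      # subspace dimension 2
p_c = np.diag([0.0, 1.0]).astype(complex)           # subspace dimension 1

# a + b: a fresh memory record (index 3, an arbitrary label), qubit part P_a (x) P_b.
pi_a_plus_b = state_projection(3, np.kron(p_a, p_b))

# (a + b) + c and a + (b + c): identical qubit parts, different memory records.
qubits_left = np.kron(np.kron(p_a, p_b), p_c)
qubits_right = np.kron(p_a, np.kron(p_b, p_c))
pi_left = state_projection(4, qubits_left)
pi_right = state_projection(5, qubits_right)
```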

The eidostates in our model are the finite nonempty collections of states in $\mathscr{S}$. We can associate each eidostate with a projection operator as well. Let $E = \{e_1, \ldots, e_k\}$ be an eidostate. We define

$$\Pi_E = \sum_{i=1}^{k} \Pi_{e_i}.$$

This is a projection operator because the $\Pi_{e_i}$ projections are orthogonal to one another. $\Pi_E$ projects onto a subspace $\mathcal{V}_E$, which is the linear span of the collection of subspaces $\mathcal{V}_{e_1}, \ldots, \mathcal{V}_{e_k}$.
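The distinct memory records are what make $\Pi_E$ a projection, and this can be seen numerically. This hypothetical example uses three states of length 1 whose qubit projections overlap. Once each state is tagged with its own agent record, the sum is idempotent and its trace is the sum of the member dimensions. By contrast, the bare sum of two overlapping qubit projections is not a projection. The truncation size and helper name are assumptions.

```python
import numpy as np

AGENT_DIM = 3   # hypothetical truncation of H_A; one external qubit suffices here


def state_projection(record, p_qubit):
    """Pi_e = |e><e| (x) P_e for a state e of length 1."""
    agent = np.zeros((AGENT_DIM, AGENT_DIM), dtype=complex)
    agent[record, record] = 1.0
    return np.kron(agent, p_qubit)


plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
qubit_projections = {
    "e1": np.diag([1.0, 0.0]).astype(complex),   # onto |0>, dimension 1
    "e2": np.outer(plus, plus.conj()),           # onto |+>, dimension 1, overlaps |0>
    "e3": np.eye(2, dtype=complex),              # whole qubit space, dimension 2
}
pis = [state_projection(i, p) for i, p in enumerate(qubit_projections.values())]

# Pi_E for E = {e1, e2, e3}: a sum of mutually orthogonal projections.
pi_E = sum(pis)
dim_E = np.trace(pi_E).real

# Without distinct memory records, the sum of overlapping qubit projections
# is not idempotent, so it is not a projection.
bare_sum = qubit_projections["e1"] + qubit_projections["e2"]
```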

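Interestingly, this subspace $\mathcal{V}_E$ might contain quantum states in which the agent A is entangled with one or more external qubits. Suppose $a, b \in \mathscr{S}$ are associated with single-qubit projections onto distinct states $|\psi_a\rangle$ and $|\psi_b\rangle$. The eidostate $\{a, b\}$ is associated with the projection

$$\Pi_{\{a,b\}} = |a\rangle\langle a| \otimes |\psi_a\rangle\langle \psi_a| \otimes |0\rangle\langle 0| \otimes \cdots + |b\rangle\langle b| \otimes |\psi_b\rangle\langle \psi_b| \otimes |0\rangle\langle 0| \otimes \cdots,$$

which projects onto a subspace $\mathcal{V}_{\{a,b\}}$ that contains the quantum state

$$|\Psi\rangle = \frac{1}{\sqrt{2}} \left( |a\rangle \otimes |\psi_a\rangle + |b\rangle \otimes |\psi_b\rangle \right) \otimes |0\rangle \otimes \cdots.$$

In this state, the agent does not have a definite memory record state. However, if a measurement is performed on the agent (perhaps by asking it a question), then the resulting memory record a or b would certainly be found to be consistent with the state of the qubit system, $|\psi_a\rangle$ or $|\psi_b\rangle$.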
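The entangled state can be examined directly. In the NumPy sketch below, the choice of $|0\rangle$ and $|+\rangle$ for the two distinct qubit states is an illustrative assumption, and the trailing zero qubits are omitted. The sketch checks three things:

- the state lies in the eidostate subspace;
- the agent’s reduced state assigns probability 1/2 to each record;
- conditioning on record a leaves the qubit in its recorded state.

```python
import numpy as np


def proj(v):
    """Projection |v><v| onto a normalized vector."""
    return np.outer(v, v.conj())


# Hypothetical choices: two orthogonal agent records and two distinct qubit states.
rec_a = np.array([1, 0], dtype=complex)
rec_b = np.array([0, 1], dtype=complex)
psi_a = np.array([1, 0], dtype=complex)                  # |0>
psi_b = np.array([1, 1], dtype=complex) / np.sqrt(2)     # |+>, distinct but not orthogonal

# Projection for the eidostate {a, b} (trailing |0> qubits omitted).
pi_ab = np.kron(proj(rec_a), proj(psi_a)) + np.kron(proj(rec_b), proj(psi_b))

# Entangled state; it is normalized because <a|b> = 0.
Psi = (np.kron(rec_a, psi_a) + np.kron(rec_b, psi_b)) / np.sqrt(2)

# Reduced density operator of the agent: trace out the qubit.
rho = np.outer(Psi, Psi.conj()).reshape(2, 2, 2, 2)     # indices (agent, qubit, agent', qubit')
rho_agent = np.einsum("ijkj->ik", rho)

# "Ask the agent": project onto record a and renormalize.
branch = np.kron(proj(rec_a), np.eye(2)) @ Psi
post = branch / np.linalg.norm(branch)
```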

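Suppose we combine two eidostates A and B. Then, the quantum state lies in a subspace of dimension

$$\dim \mathcal{V}_{A+B} = \sum_{a \in A} \sum_{b \in B} \dim \mathcal{V}_a \, \dim \mathcal{V}_b = \dim \mathcal{V}_A \, \dim \mathcal{V}_B .$$

When eidostates combine, subspace dimension is multiplicative.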
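The multiplicativity follows from a short count. In this plain-Python sketch, each eidostate is represented, hypothetically, only by the list of subspace dimensions of its member states.

```python
from itertools import product

# Hypothetical eidostates, each given only by the subspace dimensions of its members.
A = [1, 2, 3]
B = [2, 5]

# Members of A + B are the composites a + b, with dim V_{a+b} = dim V_a * dim V_b;
# their subspaces are mutually orthogonal, so the dimensions simply add.
member_dims = [da * db for da, db in product(A, B)]
dim_A_plus_B = sum(member_dims)

print(dim_A_plus_B, sum(A) * sum(B))
```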

It remains to define the → relation in our quantum model. We say that $A \to B$ if there exists a unitary time evolution operator $U$ on $\mathcal{H}$ such that $|\Psi\rangle \in \mathcal{V}_A$ implies that $U|\Psi\rangle \in \mathcal{V}_B$. That is, every quantum state consistent with A evolves to one consistent with B under the time evolution $U$. (Note that the evolution includes a suitable updating of the agent’s own memory state.) This requirement is easily expressed as a subspace dimension criterion: $A \to B$ if and only if $\dim \mathcal{V}_A \le \dim \mathcal{V}_B$.
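The "if" direction of the subspace dimension criterion can be made constructive. When $\dim \mathcal{V}_A \le \dim \mathcal{V}_B$, one can send an orthonormal basis of $\mathcal{V}_A$ to orthonormal vectors in $\mathcal{V}_B$ and extend this map to a unitary on the whole space. The NumPy sketch below builds one such unitary on a small finite-dimensional toy space. The helper names are hypothetical, and the resulting U is just one of many valid choices, not a claim about the physical dynamics. When $\dim \mathcal{V}_A > \dim \mathcal{V}_B$, no unitary can map $\mathcal{V}_A$ injectively into $\mathcal{V}_B$, so the function returns None.

```python
import numpy as np


def range_basis(proj):
    """Orthonormal columns spanning the range of a Hermitian projection."""
    vals, vecs = np.linalg.eigh(proj)
    return vecs[:, vals > 0.5]          # eigenvalues of a projection are 0 or 1


def extend_to_unitary(cols, rng):
    """Unitary whose first columns span the same space as the orthonormal `cols`."""
    dim = cols.shape[0]
    filler = rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))
    q, _ = np.linalg.qr(np.hstack([cols, filler]))
    return q                            # dim x dim; QR keeps the span of leading columns


def find_unitary(pi_A, pi_B, seed=0):
    """Return a unitary U with U V_A inside V_B, or None if dim V_A > dim V_B."""
    basis_A = range_basis(pi_A)
    basis_B = range_basis(pi_B)
    k = basis_A.shape[1]
    if k > basis_B.shape[1]:
        return None                     # a unitary cannot shrink dimension
    rng = np.random.default_rng(seed)
    full_A = extend_to_unitary(basis_A, rng)
    full_B = extend_to_unitary(basis_B[:, :k], rng)
    # U maps column j of full_A to column j of full_B, so the first k columns
    # (spanning V_A) land in the span of k orthonormal vectors inside V_B.
    return full_B @ full_A.conj().T


# Usage on an 8-dimensional toy space: dim V_A = 2, dim V_B = 3.
pi_A = np.diag([1, 1, 0, 0, 0, 0, 0, 0]).astype(complex)
pi_B = np.diag([0, 0, 0, 0, 0, 1, 1, 1]).astype(complex)
U = find_unitary(pi_A, pi_B)
```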

We are now ready to verify our axioms.

- Axiom 1

This follows from our construction of the eidostates .

- Axiom 2

All of these basic properties of the → relation follow from the subspace dimension criterion.

- Axiom 3

If , then , and so .

- Axiom 4

Again, both parts of this axiom follow from the subspace dimension criterion. If eidostate A is a disjoint union of eidostates $A_1$ and $A_2$, then $\dim \mathcal{V}_A = \dim \mathcal{V}_{A_1} + \dim \mathcal{V}_{A_2}$.

- Axiom 5

Any state r with functions as a record state. We have assumed that such a state exists. We can take . The bit process is natural () by the subspace dimension criterion. Notice that, for any information state I, .

- Axiom 6

For any and , we have . For Part (a), we can always find a large enough information state so that . For Part (b), either or .

- Axiom 7

If for arbitrarily large values of n, then .

- Axiom 8

It is consistent to take the set of mechanical states to be empty.

- Axiom 9

All of our eidostates are uniform. For any eidostate E, we can choose e so that . (Recall that we have assumed states with every positive subspace dimension.) If we chose to be any state, then .

Our quantum mechanical model is therefore a model of the axioms of information thermodynamics. It is a relatively simple model, of course, having no non-trivial conserved components of content and no mechanical states.

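In the quantum model, the entropy of any eidostate is simply the logarithm of the dimension of the corresponding subspace: $\mathbb{S}(E) = \log \dim \mathcal{V}_E$. This is the von Neumann entropy of a uniform density operator $\rho_E = \Pi_E / \dim \mathcal{V}_E$. We can, in fact, recast our entire discussion in terms of these mixed states, and this approach does yield some insights. For example, we find that the density operator $\rho_E$ for eidostate E is a mixture of the density operators for its constituent states:

$$\rho_E = \sum_{e \in E} P(e|E) \, \rho_e ,$$

where $P(e|E)$ is the entropic probability

$$P(e|E) = \frac{\dim \mathcal{V}_e}{\dim \mathcal{V}_E} = 2^{\mathbb{S}(e) - \mathbb{S}(E)},$$

with logarithms taken base 2.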
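The mixture formula can be verified numerically. This sketch uses a hypothetical finite truncation with three agent records and two qubits. It builds $\rho_E$ for an eidostate whose members have subspace dimensions 1, 2 and 3, and checks that $\rho_E$ equals the probability-weighted mixture of the member density operators. It then compares the von Neumann entropy of $\rho_E$ with $\log_2 \dim \mathcal{V}_E$. The helper name and truncation sizes are assumptions.

```python
import numpy as np

AGENT_DIM = 3              # hypothetical truncation of H_A: three memory records
N_QUBITS = 2               # hypothetical truncation: all states here have length 2
QDIM = 2 ** N_QUBITS


def state_projection(record, p_qubits):
    """Pi_e = |e><e| (x) P_e on the truncated space (no padding needed here)."""
    agent = np.zeros((AGENT_DIM, AGENT_DIM), dtype=complex)
    agent[record, record] = 1.0
    return np.kron(agent, p_qubits)


# Members e1, e2, e3 with qubit projections of rank 1, 2 and 3.
ranks = [1, 2, 3]
pis = [state_projection(i, np.diag([1.0] * r + [0.0] * (QDIM - r)).astype(complex))
       for i, r in enumerate(ranks)]

pi_E = sum(pis)
dim_E = np.trace(pi_E).real                      # dim V_E = 1 + 2 + 3
rho_E = pi_E / dim_E                             # uniform density operator

dims = [np.trace(p).real for p in pis]
probs = [d / dim_E for d in dims]                # entropic probabilities P(e|E)
rhos = [p / d for p, d in zip(pis, dims)]        # uniform density operators rho_e
mixture = sum(pr * r for pr, r in zip(probs, rhos))

# Von Neumann entropy of rho_E in bits, ignoring zero eigenvalues.
eigs = np.linalg.eigvalsh(rho_E)
eigs = eigs[eigs > 1e-12]
S_vn = float(-np.sum(eigs * np.log2(eigs)))
S_E = float(np.log2(dim_E))
```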

There are, of course, many quantum states of the agent and its qubit world that do not lie within any eidostate subspace $\mathcal{V}_E$. For example, consider a state $a \in \mathscr{S}$ associated with a pure state projection $P_a = |\phi\rangle\langle\phi|$. Let $|\phi^\perp\rangle$ be a state orthogonal to $|\phi\rangle$. Then, $|a\rangle \otimes |\phi^\perp\rangle \otimes |0\rangle \otimes \cdots$ is a perfectly legitimate quantum state that is orthogonal to $\mathcal{V}_a$ and every other eidostate subspace. This state represents a situation in which the agent’s memory record indicates that the first $n(a)$ external qubits are in state $|\phi\rangle$, but the agent is wrong.
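A short check confirms that such a "wrong record" state is orthogonal to the eidostate subspaces. The sketch makes illustrative choices throughout:

- $|\phi\rangle = |+\rangle$ and $|\phi^\perp\rangle = |-\rangle$;
- a second, hypothetical state b whose qubit projection is the identity;
- a single external qubit.

```python
import numpy as np


def proj(v):
    """Projection |v><v| onto a normalized vector."""
    return np.outer(v, v.conj())


# Hypothetical two-record slice of H_A and a single external qubit.
rec_a, rec_b = np.eye(2, dtype=complex)
phi = np.array([1, 1], dtype=complex) / np.sqrt(2)         # |+>, so P_a = |phi><phi|
phi_perp = np.array([1, -1], dtype=complex) / np.sqrt(2)   # |->, orthogonal to |phi>

pi_a = np.kron(proj(rec_a), proj(phi))
pi_b = np.kron(proj(rec_b), np.eye(2, dtype=complex))      # P_b = identity on the qubit
pi_ab = pi_a + pi_b                                       # eidostate {a, b}

# Record says "a" (qubit in |phi>), but the qubit is actually in |phi_perp>.
wrong = np.kron(rec_a, phi_perp)
```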

The exclusion of such physically possible but incongruous quantum states tells us something significant about our theory of axiomatic information thermodynamics. The set of eidostates does not necessarily include all possible physical situations; the arrow relations → between eidostates do not necessarily represent all possible time evolutions. Our axiomatic system is simply a theory of what transformations are possible among a collection of allowable states. In this, it is similar to ordinary classical thermodynamics, which is designed to consider processes that begin and end with states in internal thermodynamic equilibrium.

14. Remarks

The emergence of the entropy $\mathbb{S}$, a state function that determines the irreversibility of processes, is a key benchmark for any axiomatic system of thermodynamics. Our axiomatic system does not yield a unique entropy on eidostates, since it is based on the extension of an irreversibility function to impossible processes. However, many of our results and formulas for entropy are uniquely determined by our axioms. The entropy measure for information states, the Hartley–Shannon entropy $\mathbb{S}(I) = \log \#(I)$ (where $\#(I)$ is the number of states in I), is unique up to the choice of logarithm base. This in turn uniquely determines the irreversibility function on possible singleton processes, since this is defined in terms of the creation and erasure of bit states. There is a unique relationship between the entropy of a uniform eidostate and the entropy of the possible states it contains. Finally, the entropic probability distribution on a uniform eidostate, which might appear at first to depend on the singleton state entropy, is nonetheless unique.

It remains to be seen how the axiomatic system developed here for state transformations is related to the axiomatic system, similar in some respects, given by Knuth and Skilling for considering problems of inference [23]. There, symmetry axioms in a lattice of states give rise to probability and entropy measures. Similarly, the “entropy first, probability after” idea presented here is reminiscent of Caticha’s “entropic inference” [24], in which the probabilistic Bayes rule is derived (along with the maximum entropy method) from a single relative entropy functional.