Utility, Revealed Preferences Theory, and Strategic Ambiguity in Iterated Games

Abstract

1. Introduction

2. Non-Cooperative Game Theory

3. Information Theory

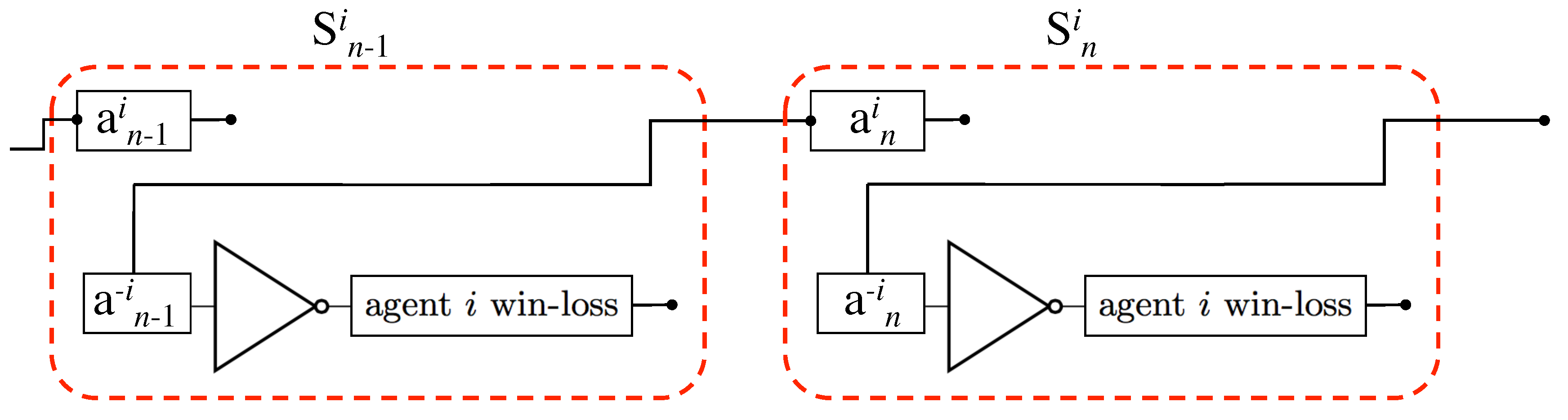

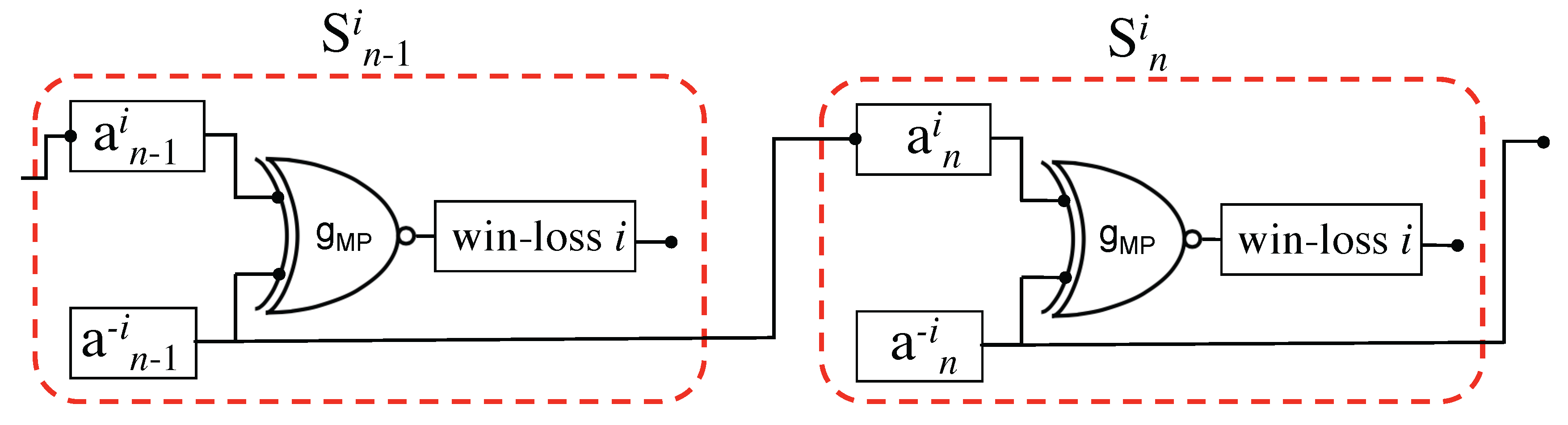

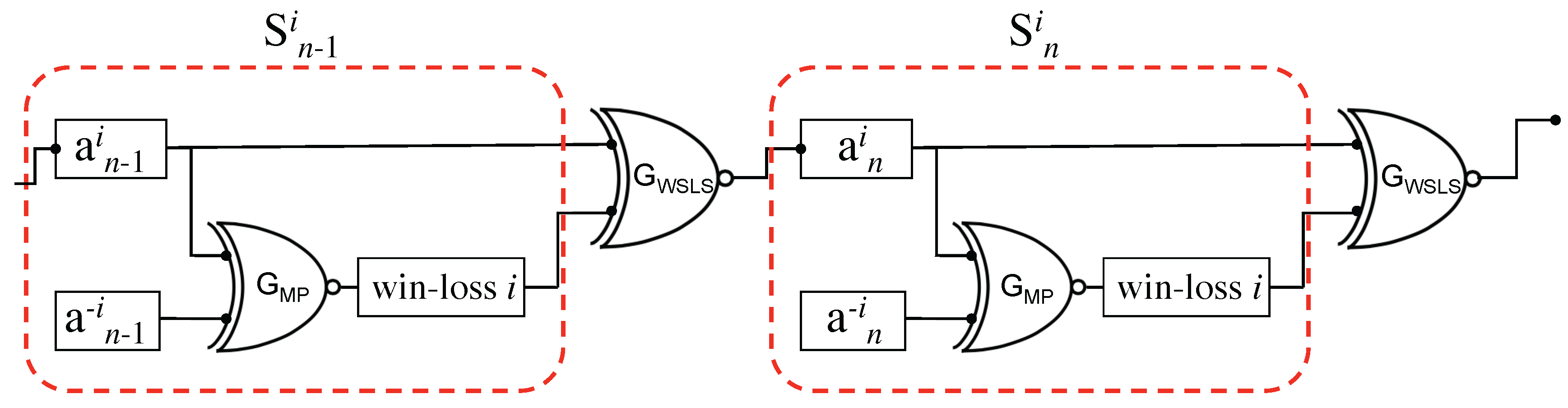

Information Chain Rule and Iterated Games
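The heading refers to the chain rule of information theory, H(X, Y) = H(X) + H(Y | X), which for a sequence of moves extends to H(X_1, ..., X_n) = Σ_t H(X_t | X_1, ..., X_{t−1}). As a minimal sketch (not the article's own derivation), the code below checks the two-variable identity on plug-in (empirical) entropy estimates computed from a hypothetical record of two agents' binary moves. The data and the function names `entropy` and `conditional_entropy` are illustrative assumptions.

```python
from collections import Counter
from math import isclose, log2


def entropy(samples):
    """Plug-in Shannon entropy, in bits, of the empirical distribution of samples."""
    counts = Counter(samples)
    n = len(samples)
    return -sum(c / n * log2(c / n) for c in counts.values())


def conditional_entropy(ys, xs):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x), estimated from paired samples."""
    n = len(xs)
    total = 0.0
    for x_val, count in Counter(xs).items():
        ys_given_x = [y for x, y in zip(xs, ys) if x == x_val]
        total += count / n * entropy(ys_given_x)
    return total


# Hypothetical record of two agents' binary moves over ten rounds (illustrative only).
x = [0, 0, 1, 1, 0, 1, 0, 0, 1, 1]
y = [0, 1, 1, 1, 0, 0, 0, 1, 1, 0]

lhs = entropy(list(zip(x, y)))                 # joint entropy H(X, Y)
rhs = entropy(x) + conditional_entropy(y, x)   # H(X) + H(Y | X)
print(lhs, rhs)
```

Because the identity holds exactly for any distribution, including an empirical one, the two printed values agree up to floating-point rounding.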

4. Example Games

4.1. The Traveler’s Dilemma

“Lucy and Pete, returning from a remote Pacific island, find that the airline has damaged the identical antiques that each had purchased. An airline manager says that he is happy to compensate them but is handicapped by being clueless about the value of these strange objects. Simply asking the travelers for the price is hopeless, he figures, for they will inflate it. Instead, he devises a more complicated scheme. He asks each of them to write down the price of the antique as any dollar integer between $2 and $100 without conferring together. If both write the same number, he will take that to be the true price, and he will pay each of them that amount. However, if they write different numbers, he will assume that the lower one is the actual price and that the person writing the higher number is cheating. In that case, he will pay both of them the lower number along with a bonus and a penalty–the person who wrote the lower number will get $2 more as a reward for honesty and the one who wrote the higher number will get $2 less as a punishment. For instance, if Lucy writes $46 and Pete writes $100, Lucy will get $48 and Pete will get $44.”
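The manager's rule can be written as a payoff function. The sketch below (the names `td_payoffs`, `best_response` and `is_nash` are hypothetical, not taken from the article) reproduces the $46/$100 example and illustrates the familiar unravelling argument: against any claim k > 2 the best reply is k − 1, which earns k + 1, so iterated best responses fall step by step from $100 to $2, and ($2, $2) is the only pure-strategy Nash equilibrium.

```python
from __future__ import annotations


def td_payoffs(lucy: int, pete: int, bonus: int = 2) -> tuple[int, int]:
    """Return (Lucy's payout, Pete's payout) under the manager's rule."""
    if lucy == pete:
        return lucy, pete                 # equal claims are taken as the true price
    low = min(lucy, pete)
    if lucy < pete:
        return low + bonus, low - bonus   # Lucy rewarded, Pete penalized
    return low - bonus, low + bonus       # Pete rewarded, Lucy penalized


def best_response(other: int, lo: int = 2, hi: int = 100) -> int:
    """Claim in [lo, hi] that maximizes a traveler's payout against a fixed claim."""
    return max(range(lo, hi + 1), key=lambda c: td_payoffs(c, other)[0])


def is_nash(a: int, b: int) -> bool:
    """Pure-strategy Nash equilibrium: each claim is a best response to the other.

    The game is symmetric, so one best_response function serves both travelers.
    """
    return best_response(b) == a and best_response(a) == b


# Iterated best responses starting from the maximum claim of $100.
claim = 100
path = [claim]
while (nxt := best_response(claim)) != claim:
    claim = nxt
    path.append(claim)

print(td_payoffs(46, 100))   # (48, 44), the example in the quoted passage
print(path[:3], path[-1])    # [100, 99, 98] 2
```

Searching all 99 × 99 claim pairs with `is_nash` returns only ($2, $2), even though both travelers would receive far more by jointly writing $100.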

4.2. Prisoner’s Dilemma

4.3. Matching Pennies

5. Discussion

Acknowledgments

Conflicts of Interest


| | | Agent | |
|---|---|---|---|
| | | Co-op | Defect |
| agent i | Co-op | (1 year, 1 year) | (5 years, 0 years) |
| | Defect | (0 years, 5 years) | (3 years, 3 years) |
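Because the Prisoner’s Dilemma matrix lists prison terms, written as (agent i's years, other agent's years), each agent prefers fewer years. The minimal sketch below, with hypothetical names `YEARS`, `best_reply_i` and `best_reply_other`, encodes that matrix and searches for pure-strategy Nash equilibria by checking mutual best replies.

```python
# Prison terms from the matrix: YEARS[(i_move, other_move)] = (i's years, other's years).
YEARS = {
    ("C", "C"): (1, 1),
    ("C", "D"): (5, 0),
    ("D", "C"): (0, 5),
    ("D", "D"): (3, 3),
}
MOVES = ("C", "D")   # C = co-operate, D = defect


def best_reply_i(other_move: str) -> str:
    """Agent i's best reply: the move giving i the fewest years."""
    return min(MOVES, key=lambda m: YEARS[(m, other_move)][0])


def best_reply_other(i_move: str) -> str:
    """The other agent's best reply: the move giving it the fewest years."""
    return min(MOVES, key=lambda m: YEARS[(i_move, m)][1])


# A pure-strategy Nash equilibrium is a pair of mutual best replies.
nash = [(a, b) for a in MOVES for b in MOVES
        if best_reply_i(b) == a and best_reply_other(a) == b]
print(nash)   # [('D', 'D')]
```

Defect is the best reply to either move, yet mutual defection (3 + 3 years) is worse for both agents than mutual co-operation (1 + 1 years), which is what makes the game a dilemma.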

| i’s move | Opponent’s move | i’s Win-Loss | WSLS | TfT |
|---|---|---|---|---|
| 0 (C) | 0 (c) | 1 (win) | 0 | 0 |
| 0 (C) | 1 (d) | 0 (loss) | 1 | 1 |
| 1 (D) | 0 (c) | 1 (win) | 1 | 0 |
| 1 (D) | 1 (d) | 0 (loss) | 0 | 1 |
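The win-stay, lose-shift (WSLS) and tit-for-tat (TfT) columns for the Prisoner’s Dilemma follow from two simple update rules. The sketch below assumes the encoding shown in the rows (0 = co-operate, 1 = defect) and reads the win-loss column as "win if the opponent co-operated", which is what the four rows show. The helper names `pd_win`, `wsls` and `tft` are hypothetical.

```python
# Moves are encoded as in the table: 0 = co-operate (C/c), 1 = defect (D/d).

def pd_win(opp: int) -> int:
    """i's win-loss as tabulated: 1 (win) if the opponent co-operated, else 0 (loss)."""
    return 1 - opp


def wsls(own: int, win: int) -> int:
    """Win-stay, lose-shift: repeat the last move after a win, switch after a loss."""
    return own if win else 1 - own


def tft(opp: int) -> int:
    """Tit-for-tat: copy the opponent's last move."""
    return opp


# Rebuild the rows (own, opp, win, WSLS move, TfT move) in table order:
# (C, c), (C, d), (D, c), (D, d).
rows = []
for own in (0, 1):
    for opp in (0, 1):
        win = pd_win(opp)
        rows.append((own, opp, win, wsls(own, win), tft(opp)))

for row in rows:
    print(row)
```

In this encoding the WSLS move equals `own ^ opp` (exclusive or), so it depends on both agents' last moves, whereas TfT depends only on the opponent's.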

| | | Agent | |
|---|---|---|---|
| | | Heads | Tails |
| agent i | Heads | | |
| | Tails | | |

| i’s move | Opponent’s move | i’s Win-Loss | WSLS | TfT |
|---|---|---|---|---|
| 1 (H) | 1 (h) | 1 (win) | 1 | 1 |
| 1 (H) | 0 (t) | 0 (loss) | 0 | 0 |
| 0 (T) | 1 (h) | 0 (loss) | 1 | 1 |
| 0 (T) | 0 (t) | 1 (win) | 0 | 0 |

| i’s move | Opponent’s move | i’s Win-Loss | WSLS | TfT |
|---|---|---|---|---|
| 1 (H) | 1 (h) | 0 (loss) | 0 | 1 |
| 1 (H) | 0 (t) | 1 (win) | 1 | 0 |
| 0 (T) | 1 (h) | 1 (win) | 0 | 1 |
| 0 (T) | 0 (t) | 0 (loss) | 1 | 0 |
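The two Matching Pennies strategy tables differ only in when agent i scores a win: the first counts a match (H, h or T, t) as a win, the second a mismatch. The minimal sketch below, using H = 1 and T = 0 as in the tables and hypothetical helper names, regenerates both tables from the same WSLS and TfT rules by swapping the win rule.

```python
def wsls(own: int, win: int) -> int:
    """Win-stay, lose-shift: keep the last move after a win, switch after a loss."""
    return own if win else 1 - own


def tft(opp: int) -> int:
    """Tit-for-tat: copy the opponent's last move."""
    return opp


def strategy_table(win_rule):
    """Rows (own, opp, win, WSLS move, TfT move) in the order (H,h), (H,t), (T,h), (T,t)."""
    rows = []
    for own in (1, 0):
        for opp in (1, 0):
            win = win_rule(own, opp)
            rows.append((own, opp, win, wsls(own, win), tft(opp)))
    return rows


matcher = strategy_table(lambda own, opp: int(own == opp))      # win when coins match
mismatcher = strategy_table(lambda own, opp: int(own != opp))   # win when coins differ

for row in matcher + mismatcher:
    print(row)
```

When a match counts as a win, WSLS and TfT prescribe identical moves in every row; when a mismatch counts as a win, they always prescribe opposite moves.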

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Harré, M. Utility, Revealed Preferences Theory, and Strategic Ambiguity in Iterated Games. Entropy 2017, 19, 201. https://doi.org/10.3390/e19050201
