1. Introduction

Originally, the driving force behind this study was to extend the clear and convincing operational interpretations associated with classical information theory as developed by Shannon [

1] and followers, to the theory promoted by Tsallis for statistical physics and thermodynamics, cf., [

2,

3]. That there are difficulties is witnessed by the fact that, despite its apparent success, some well known physicists still find grounds for criticism. Evidence of this attitude may be found in Gross [

4].

A possible solution to the problem is presented towards the end of our study, in Theorem 18. It is based on the idea that, possibly, what the physicist perceives as the essence in a particular situation could be a result of both the true state of the situation and the physicists preconceptions as expressed by his beliefs. In case there is no deformation from truth and belief to perception, i.e., if “what you see is what is true”, you regain the classical notions of Boltzmann, Gibbs and Shannon.

The approach indicated in Theorem 18 rests on philosophical considerations and associated interpretations. As it turns out, this approach is applicable in a far more abstract setting than needed for the discussion of the particular problem. As a result, a general abstract, quantitative theory is developed. This theory, presented in

Section 2, with its many subsections is the main contribution of our research. A number of possible applications, including Theorem 18, are listed in

Section 3, which has a number of sub-sections covering applications from different areas. They serve as justification for the work which has gone into the development of the general abstract theory. The conclusions are collected in

Section 4.

The theory may be seen as an extension of classical Shannon theory. One does not achieve the same degree of clarity as in the classical theory, where coding provides a solid reference. However, the results developed in

Section 2 and

Section 3 demonstrate that the extension to a more abstract framework is meaningful and opens up for new areas of research. In addition, previous results are consolidated and unified.

The theory of

Section 2 is an abstract theory of

information without probability. Inspiration from Shannon Theory and from the theory of inference within statistics and statistical physics is apparent. However, the ideas are presented here as an independent theory.

Previous endeavours in the direction taken include research by Ingarden and Urbanik [

5] who wrote

“... information seems intuitively a much simpler and more elementary notion than that of probability ... [it] represents a more primary step of knowledge than that of cognition of probability ...”. We also point to Kolmogorov, cf., [

6,

7] who in the latter reference (but going back to 1970, it seems) stated

“Information theory must precede probability theory and not be based on it”. The ideas by Ingarden and Urbanik were taken up by Kampé de Fériet, see the survey in [

8]. The work of Kampé de Fériet is rooted in logic. Logic is also a key ingredient in comprehensive studies over some 40 years by Jaynes, collected posthumously in [

9]. Although many philosophically-oriented discussions are contained in the work of Jaynes, the situations he deals with are limited to probabilistic models and intended mainly for a study of statistical physics.

The work by Amari and Nagaoka in information geometry, cf., [

10], may also be viewed as a broad attempt to free oneself from a tie to probability. There are many followers of the theory developed by Amari and Nagaoka. Here we only mention the recent thesis by Anthonis [

11] which has a base in physics.

In

complexity theory as developed by Solomonoff, Kolmogorov and others, cf., the recent survey [

12] by Rathmanner and Hutter, we have a highly theoretical discipline which aims at inference not necessarily tied to probabilistic modeling. The

Minimum Description Length Principle may be considered an important spin-off of this theory. It is mainly directed at problems of statistical inference and was developed, primarily, by Rissanen and by Barron and Yu, cf., [

13]. We also point to the treatise [

14] by Grünwald. In this work you find discussions of many of the issues dealt with here, including a discussion of the work of Jaynes.

Still other areas of research have a bearing on “information without probability”, e.g., semiotics, philosophy of information, pragmatism, symbolic linguistics, placebo research, social information and learning theory. Many areas within psychology are also of relevance. Some specific works of interest include Jumarie [

15], Shafer and Vovk [

16], Gernert [

17], Bundesen and Habekost [

18], Benedetti [

19] and Brier [

20]. The handbook [

21] edited by Adriaans and Bentham and the encyclopaedia article [

22] by Adriaans collect views on the very concept of

“information”. Over the years, an overwhelming amount of thought has been devoted to that concept in one form or another. Most of this bulk of material is entirely philosophical and not open to quantitative analysis. Part of it is impractical and presently mainly of theoretical interest. Moreover, some is far from Shannon’s theory which we hold as a cornerstone of quantitative information theory. In fact, we consider it a requirement of any quantitative theory of information to be downward compatible with basic parts of Shannon theory. This requirement is largely respected in the present work, but not entirely. For example, we do not know if one can meaningfully lift the concept of coding as known from Shannon theory to a more abstract level.

In many respects, our endeavours go “beyond Shannon”. So does, e.g., Brier in his development of cybersemiotics, cf., [

20,

23]. Brier goes deeper into some of the philosophical aspects than we do and also attempts a broad coverage by incorporating not only the exact natural sciences but also life science, the humanities and the social sciences. Though not foreign to such a wider scope, our study aims at more concrete results by basing the study more directly on quantitative elements. Both studies emphasize the role of the individual in the cognitive process.

A special feature of our development is the appeal to game theoretical considerations, cf., especially

Section 2.10,

Section 2.12 and

Section 2.13. To illuminate the importance we attach to this aspect we quote from Jaynes’ preface to [

9] where he comments on the maximum entropy principle, the central principle of inference promoted by Jaynes:

“... it [maximum entropy] predicts only observable facts (functions of future or past observations) rather than values of parameters which may exist only in our imagination ... it protects us against drawing conclusions not warranted by the data. But when the information is extremely vague, it may be difficult to define any appropriate sample space, and one may wonder whether still more primitive principles than maximum entropy can be found. There is room for much new creative thought here.”

This is one central place where game theory comes in. It represents a main addition, so we claim, to Jaynes’ work In passing, it is noted that at the conference “Maximum Entropy and Bayesian Methods”, Paris 1992, the author had much hoped to discuss the impact of game theoretical reasoning with professor Jaynes. Unfortunately, Jaynes, who died in 1998, was too ill at the time to participate. He never incorporated arguments such as those in [

24] which can be conceived as supportive of his own theory.

The merits of game theory in relation to information theoretical inference were first indicated in the probabilistic, Shannon-like setting, independently of each other, by Pfaffelhuber [

25] and by the author [

26]. More recent references include Harremoës and Topsøe [

27], Grünwald and Dawid [

28], Friedman et al. [

29] (a utility-based work) and Dayi [

30]. As sources of background material [

31,

32,

33] may be helpful.

The quantitative elements we work with are brought into play via a focus on description effort—or just effort. From this concept, general notions of entropy and redundancy (and the close to equivalent notion of divergence) are derived. The information triples we shall take as the key object of study are expressions of the concepts effort/entropy/redundancy (or effort/entropy/divergence). By a “change of sign”, the triples may, just as well, concern utility/max-utility/divergence.

Apart from introducing game theory into the picture, a main feature of the present work lies in its abstract nature with a focus on interpretations rather than on axiomatics which was the emphasis of many previous authors, including Jaynes.

The set of interpretations we shall emphasize in

Section 2 is not be the only one possible. Different sets of interpretations are briefly indicated in

Appendix B and

Appendix C. Though some of this played a role in the development, in statistics, of the notion of properness, we have relegated the material to the appendices, not to disturb the flow of presentation and also as we consider this material to be of lesser significance when comparing it with the main line of thought.

Section 3 may be viewed as a justification of the partly speculative deliberations of

Section 2.1,

Section 2.2,

Section 2.3,

Section 2.4,

Section 2.5,

Section 2.6,

Section 2.7,

Section 2.8,

Section 2.9,

Section 2.10,

Section 2.11,

Section 2.12,

Section 2.13,

Section 2.14,

Section 2.15 and

Section 2.16. Also, in view of the rather elaborate theory of

Section 2 with many new concepts and unusual notation, it may well be that occasional reference to the material in

Section 3 will ease the absorption of the theoretical material.

In

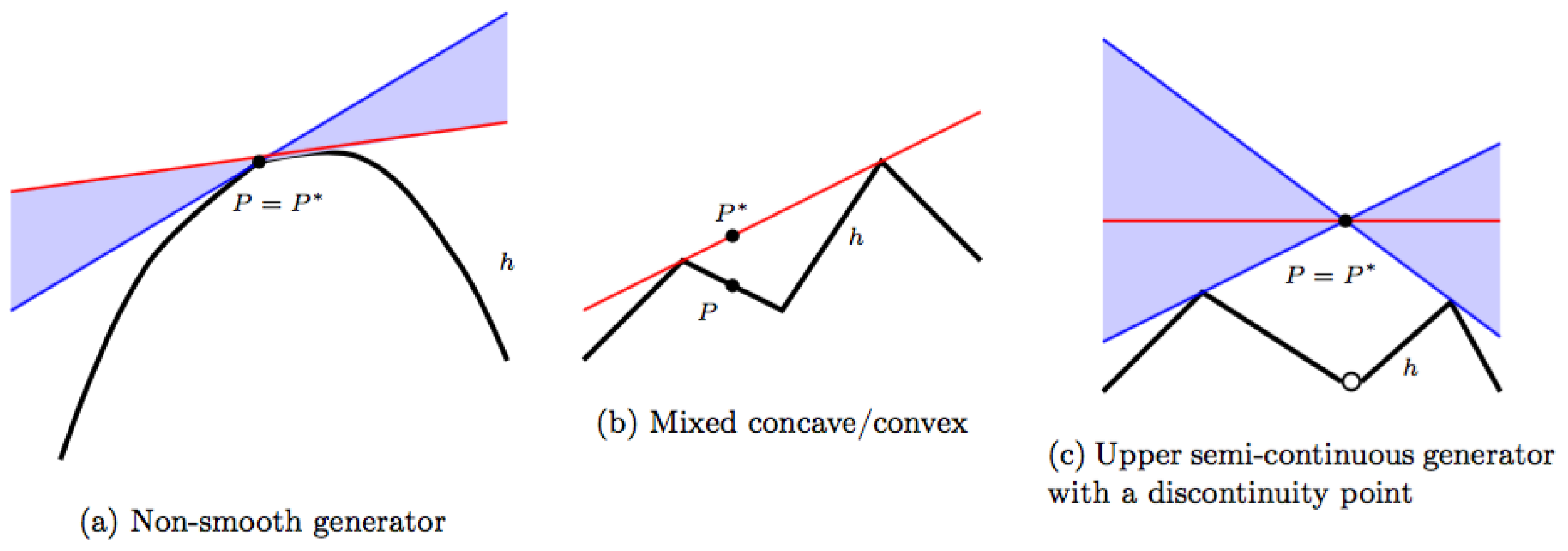

Section 3.1, the natural building stones behind the information triples is presented. This is closely related to the well-known construction associated with Bregman’s name. The construction may be expanded by allowing non-smooth functions as “generators”. Pursuing this leads to situations where the standard notion of

properness breaks down and needs a replacement by weaker notions. Such notions are introduced at the end of

Section 2.10 but may only be appreciated after acquaintance with the less abstract material in

Appendix A.

The applications presented—or indications of potential applications—come from combinatorial geometry, probabilistic information theory, statistics and statistical physics. For most of them, we focus on providing the key notions needed for the theory to work, thus largely leaving concrete applications aside. The aim is to provide enough details in order to demonstrate that our modeling can be applied in quite different contexts. For the case of discrete probabilistic models we do, however, embark on a more thorough analysis. The reason for this is, firstly, that this is what triggered the research reported on and, secondly, with a thorough discussion of modeling in this context, virtually all elements introduced in the many sub-sections of

Section 2 have a clear and natural interpretation. In fact, full appreciation of the abstract theory may only be achieved after reading the material in

Section 3.6 and

Section 3.7.

Our treatment is formally developed independently of previous research. However, unconsciously or not, it depends on earlier studies as referred to above and on the tradition developed over time. More specifically, we mention that our focus on

description effort, especially the notion of

properness, cf.,

Section 2.6, is closely related to ideas first developed for areas touching on meteorology, statistics and information theory.

Previous relevant writings of the author include [

34,

35,

36,

37,

38]. The present study is here published as a substantial expansion of the latter. For instance, elements related to

control—modeling an observer’s active response to belief—and a detailed discussion of Jensen-Shannon divergence as well as more cumbersome technical details were left out in [

38]. Thus, [

38] may best serve as an easy-to-read appetizer to the present more heavy and comprehensive theory.

2. Information without Probability

2.1. The World and You

By we denote the actual world, perhaps one among several possible worlds. Two fictitious persons play a major role in our modeling, “Nature” and “Observer”. These “persons” behave quite differently and, though stereotypical, the reader may associate opposing sexes to them, say female for Nature, male for Observer. The interplay between the two takes place in relation to studies of situations from the world. Observer’s aim is to gain insight about situations studied. It may be helpful to think of Observer as “you”, say a physicist, psychologist, statistician, information theoretician or what the case may be. Nature is seen as an expression of the world itself and reflects the rules of the world. Mostly, such rules may be identified with laws of nature. However, we shall consider models where the rules express an interplay between Nature and Observer and as such may not be absolutes, independent of observer’s interference.

The insight or knowledge sought by Observer will be focused on inference concerning particular situations under study. A different form of inference not focused on any particular situation may also be of relevance if Observer does not know which world he is placed in. Of course, the actual world is a possible world or it could not exist. So Observer may, based on experience gained from situations encountered, attempt to ascertain which one out of a multitude of possible worlds is actualized.

The notions introduced are left as loose indications. They will take more shape as the modeling progresses. The terminology chosen here and later on is intended to provoke associations to common day experiences of the cognitive process. In addition, the terminology is largely consistent with usage in philosophy.

2.2. Truth and Belief

Nature is the holder of truth. Observer seeks the truth but is relegated to belief. However, Observer possesses a conscious and creative mind which can be exploited in order to obtain knowledge as effortlessly as possible. In contrast, Nature does not have a mind—and still, the reader may find it helpful to think of Nature as a kind of “person”!

We introduce a set X, the state space, and a set Y, the belief reservoir. Elements of X, generically denoted by x, are truth instances or states of truth or just states, whereas elements of Y, generically denoted by y, are belief instances. We assume that . Therefore, in any situation, it is conceivable that Observer actually believes what is true. Mostly, will hold. Then, whatever Observer believes, could be true.

Typically, in any situation, we imagine that Nature chooses a state and that Observer chooses a belief instance. This leads to the introduction of certain games which will be studied systematically later on, starting with

Section 2.10.

Though there may be no such thing as absolute truth, it is tempting to imagine that there is and to think of Natures choice as an expression of just that. This then helps to maintain a distinction between Nature and Observer. However, a closer analysis reveals that what goes on at Natures side is most correctly thought of as another manifestation of Observer. Thus the two sides cannot be separated. Rather, a key to our modeling is the interplay between the two.

For some models it may be appropriate to introduce a set of realistic states. States not in are considered unrealistic, out of reach for Observer, typically because they would involve availability of unlimited resources. Moreover, some models involve a set of certain beliefs. Beliefs from are chosen by Observer if he is quite determined on what is going on—but of course, he could be wrong. If nothing is said to the contrary, you can take and .

In a specific situation, Nature’s choice may not be free within all of X. Rather, it may be restricted to a non-empty subset of X, the preparation. The idea is that Observer, perhaps a physicist, can “prepare” a situation, thereby forcing Nature to restrict the choice of state accordingly. For instance, by placing a gas in a heat bath, Nature is restricted to states which have a mean energy consistent with the prescribed temperature.

A situation is normally characterized by specifying a preparation . A state x is consistent—viz., consistent with the preparation of the situation—if . Later on, we shall consider preparation families which are sets, generically denoted by , whose members are preparations.

Faced with a specific situation with preparation

, Observer speculates about the state of truth chosen by Nature. Observer may express his opinion by assigning a belief instance to the situation. If this is always chosen from the preparation

, Observer will only believe what

could be true. Sometimes, Observer may prefer to assign a belief instance in

Y\

P to the situation. Then this instance cannot possibly be one chosen by Nature. Nevertheless, it may be an adequate choice if an instance inside

would contradict Observer’s subjective beliefs. Therefore, the chosen instance may be the “closest” to the actual truth instance in a subjective sense. Anyhow, Observer’s choice of belief instance is considered a subjective choice which takes available information into account such as general insight and any

prior information. Qualitatively, these thoughts agree with Bayesian thinking, and as such enjoy the merits, but are also subject to the standard criticism, which applies to this line of thought, cf., [

12,

39].

2.3. A Tendency to Act, a Wish to Control

Two considerations will lead us to new and important structural elements.

First, we point to the mantra that

belief is a tendency to act. This is a rewording taken from Good [

40] who suggested this point of view as a possible interpretation of the notion of belief. In daily life, action appears more often than not to be a spontaneous reaction in situations man is faced with, rather than a result of rational considerations. Or reaction depends on psychological factors or brain activity largely outside conscious control. In contrast, we shall rely on rational thinking based on quantitative considerations. As a preparation we introduce a set

, the

action space, and a map from

Y into

, referred to as

response. Elements of

are called

actions. We use the notation

to indicate the action which is Observer’s response in situations where Observer’s belief is represented by the belief instance

y. Note that as we have assumed that

,

is well defined for every state

x.

Response need not be injective, thus it is in general not possible to infer Observer’s belief from Observer’s action. Response need not either be surjective, though for most applications it will be so. Actions not in the range are idle for the actual model under discussion but may become relevant if the setting is later expanded.

Belief instances, say and , with the same response are response-equivalent, notationally written .

If the model contains certain beliefs, i.e., if , we assume that contains a special element, the empty action, and that this action is chosen by Observer in response to any certain belief instance. In such cases, Observer sees no reason to take any action. If Observer finds several actions equally attractive, one could allow response to be a set-valued map. However, for the present study we insist that response is an ordinary map defined on all of Y. This will actually be quite important.

For a preparation , denotes the set of with .

Let us turn to another tendency of man, the wish to control. This makes us introduce a set W, the control space. The elements of W are referred to as controls. For the present modeling, this will not, formally, lead to further complications as we shall take W and to be identical: . This simplification may be defended by taking the point of view that in order to exercise control, you have to act, typically by setting up appropriate experiments. Moreover, you may consider it the purpose of Observer’s action to exercise control. Thus, in an idealized and simplified model as here presented, we simply identify the two aspects, action and control. Later elaborations of the modeling may lead to a clear distinction between action and the more passive concept of control. As and W are identified, we shall often use w as a generic element of and we shall denote the empty action—the same as the empty control—by .

The simplest models are obtained when response is an injection or even a bijection. Moreover, simplest among these models are the cases when and response is the identity map. This corresponds to a further identification of belief with action or control. Even then it makes a difference if you think about an element as an expression of belief, as an expression of action or as an expression of control.

Although many models do not need the introduction of (or W), the further development will to a large extent refer first and foremost to -related concepts. Technically, this results in greater generality, as response need not be injective. Belief-type concepts, often indicated by referring to the “Y-domain”, will then be derived from action- or control-based concepts, often indicated by pointing to the “-domain”. The qualifying indication may be omitted if it is clear from the context whether we work in the one domain or the other.

2.4. Atomic Situations, Controllability and Visibility

Two relations will be introduced. Controllability is the primary one from which the other one, visibility, will be derived. These relations constitute refinements which may be disregarded at a first reading. This can be done by taking the relations to be the diffuse relations , in notation below, and . The reader may recall that in general mathematical jargon, a diffuse relation is one without restrictions, i.e., one for which any element is in relation to any other element.

Pairs of states and belief instances or pairs of states and controls are key ingredients in situations from the world. However, not all such pairs will be allowed. Instead, we imagine that offhand, Observer has some limited insight into Natures behaviour and therefore, Observer takes care not to choose “completely stupid” belief instances or controls, as the case may be.

We express these ideas in the -domain by introducing a relation from X to , called controllability and denoted . Thus is a subset of the product set . Elements of are atomic situations (in the -domain). If a preparation is given, it may suffice to consider the restriction which consists of all atomic situations with .

For an atomic situation , we write and say that w controls x or that x can be controlled by w. An atomic situation is an adapted pair if w is adapted to x in the sense that .

For a preparation we write , and call w a control of , if w controls every state in (). We also express this by saying that w controls . By we denote the set of all controls of . We write if is the singleton set . In case is the diffuse relation, for any preparation .

For , denotes the control region of , the set of for which for some . We write if is the singleton set . Clearly, the statements , and are equivalent.

We assume that the following conditions hold:

and normally also that

The first condition is essential and the second one is rather innocent. The third condition is introduced when we want to ensure that

X (or

Y) is not “too large”. Models where (

3) does not hold are considered unrealistic, beyond what man (Observer) can grasp. If response is surjective, it amounts to the condition

. It is illuminating to have models of classical Shannon theory in mind, cf.,

Section 3.7.

For a preparation

, we define the

centre of

(

-domain) as the set of controls in

which control

:

From controllability we derive the relation of

visibility for the

Y-domain, denoted

, and given by

Restrictions are at times of relevance.

If , we say that is an atomic situation (in the Y-domain) and write . Such a situation is an adapted pair if is so in the -domain, i.e., if and is a perfect match if . The two notions coincide if response is injective. An atomic situation is certain if .

Note that we use the same sign, ≻, for visibility and for controllability. The context will have to show if we work in the Y- or in the -domain. We see that if and only if . If this is so, we also say that y covers x or that x is visible from y.

By (

1) and by the defining relation (

5),

for all

, thus

contains the diagonal

. The

outlook (or

view) from

is the set

. Clearly,

. By (2) and (

5), this set is non-empty and, when (

3) holds, for at least one belief instance, the outlook is all of

X.

For a preparation

we write

, and call

y a

viewpoint of

, if

for every

. The set of all viewpoints of

is denoted

. We write

if

is the singleton

. By

, the

centre of (

Y-domain), we denote the set of viewpoints in the preparation:

Note that .

In any situation, Observer should ensure that from his chosen belief instance, every state which could conceivably be chosen by Nature is visible. Therefore, in a situation where the preparation is known to Observer, Observer should only consider belief instances in .

In the sequel we shall often consider bivariate functions, generically denoted by either (-domain) or by f (Y-domain). The -type functions are defined either on or on some subset of the form for some preparation . The range of may be any abstract set but will often be a subset of the extended real line. Given , it is understood that f without the hat denotes the derived function defined by for pairs for which is in the domain of definition of . The domain of definition of the derived function is either or the set if is defined on .

Every derived function depends only on response in the sense that if only . If response is a surjection, there is a natural one-to-one relation between -type functions and Y-type functions which depend only on response.

Consider an f-type function defined on all of . For , denotes the marginal function given y, defined on by . The marginal function given is the function defined by for . We write on to express, firstly, that so that is well defined on all of and, secondly, that this marginal function is finite on . We write if on X.

2.5. Knowledge, Perception and Deformation

Observer strives for knowledge, conceived as the synthesis of extensive experience. Referring to probabilistic thinking, we could point to situations where accidental experimental data are smoothed out over time as you enter the regime of the law of large numbers. However, Observer’s endeavours may result in less definitive insight, a more immediate reaction which we refer to as perception. It reflects how Observer perceives situations from the world or, with a different focus, how situations from the world are presented to Observer.

In the same way as we have introduced truth- and belief instances, we consider knowledge instances, also referred to as perceptions. Typically, they are denoted by z and taken from a set denoted Z, the knowledge base or perception base.

A simplifying assumption for our modeling is that the rules of the world

contain a special function,

, which maps

into

Z, generically,

The derived function, , then maps into Z. Both functions are referred to as the deformation. The context will show which one we have in mind, or .

Thus knowledge can be derived deterministically from truth and belief alone, and as far as belief is concerned, we only have to know the associated response. In terms of perception, Observer’s perception z of an atomic situation is given by .

In our modeling, the world is characterized by the deformation. We may thus talk about the world with deformation , . The rules of the world may contain other structural elements, but such elements are not specified in the present study. Possibilities which could be considered in future developments include context, noise from the environment, and dynamics. To some extent, such features can be expressed in the present modeling by defining and Z appropriately and by introducing suitable interpretations.

In case response is a bijection and

Z contains

X as well as

Y we may consider the deformations

and

defined by

, respectively

. The associated worlds are

and

. In

,

“what you see is what is true”, whereas in

,

“you only see what you believe”—or, in some interpretations, you only see what you want to see. The world

is the

classical world where, optimistically,

truth can be learned, whereas, in

, you cannot learn anything about truth. We refer to

as a

black hole. It is a narcissistic world, a world of extreme scepticism, only reflecting Observer’s beliefs and bearing no trace of Nature. If

Z is provided with a linear structure, we can consider further deformations

depending on a parameter

q by putting

. Worlds associated with deformations of this type are denoted

. These are the worlds we find of relevance for the discussion of Tsallis entropy, cf.,

Section 3.6.

The simplest world to grasp is the classical world, but also the worlds

and even a black hole contain elements which are familiar to us from daily experience, especially in relation to certain psychological phenomena. In this connection we point to

placebo effects, cf., Benedetti [

19], and to

visual attention, cf., Bundesen and Habekost [

18]. Presently, the relevance of our modeling in relation to these phenomena is purely qualitative.

Considering examples as indicated above, it is natural to expect that knowledge is of a nature closely related to the nature of truth and of belief. A key case to look into is that

. However, we shall not make any general assumption in this direction. What we shall do is to follow the advice of Shannon, as far as possible avoiding assumptions which depend on concrete semantic interpretations. As a consequence we shall only in

Section 3.6 introduce more specific assumptions about the representation of knowledge.

2.6. Effort and Description

We turn to the introduction of the key quantitative tool we shall work with. In so doing, we will be guided by the view that perception requires effort. Expressed differently, knowledge is obtained at a cost. Since, according to the previous section, knowledge can be derived from truth and belief alone, or from truth and action, no explicit reference to knowledge is necessary. Instead, we model effort (in the -domain) by a certain bivariate function, the effort function, defined on .

The rules of the world may not point directly to an effort function which Observer can favorably work with. Or there may be several sensible functions to choose from. The actual selection is considered a task for Observer.

Effort, description, experiment and measurement are related concepts. We put emphasis on the notion of description, which is intended to aid Observer in his encounters with situations from the world. Logically, description comes before effort. Effort arises when specific ideas about description are developed into a method of description, which you may here identify with an experiment. The implementation of such a method or the performance of the associated experiment involves a cost and this is what we conceive as specified quantitatively by the effort function.

Description depends on semantic interpretations and is often thought of in loose qualitative terms. However, in order to develop precise concepts which can be communicated among humans, quantitative elements will inevitably appear, typically through a finite set of certain real-valued functions,

descriptors. The descriptors of

Section 3.6 give an indication of what could be involved.

Imagine now that somehow Observer has chosen all elements needed—response, actions, experiments—and settled for an effort function, defined on . Let us agree on what a “good” effort function should mean. Generally speaking, Observer should aim at experiments with low associated effort. Consider a fixed truth instance x and the various possible actions, in principle free to be any action which controls x. It appears desirable that the action adapted to x should be the one preferred by Observer. Thus effort should be minimal in this case, i.e., should hold. Further, if the inequality is sharp except for the adapted action, this will have a training effect which, over time, will encourage Observer to choose the optimal action, .

Formally, we define an

effort function (in the

-domain) as a function

on

with values in

such that, for all

and all

,

Thus, for all

,

. The minimal value of

is the

entropy of

x for which we use the notation

:

This quantity will be discussed more thoroughly in the sequel. If , it is to be expected that when .

The effort function is proper, if, for any with , the minimum of is only achieved for the control adapted to x. As opposed to this notion we have the notion of a degenerate effort function which is one which only depends on the first argument x, i.e., for all , is a constant function.

Note that effort may be negative (but not ). This flexibility will later be convenient as it will allow us to pass freely from notions of effort to notions of utility by a simple change of sign. However, for more standard applications, effort functions will be non-negative.

The set of effort functions and the set of proper effort functions over are ordered positive cones in a natural way. You may note that if, in a sum of effort functions, one of the summands is proper, so is the sum. Two effort functions and , which only differ from each other by a positive finite factor are scalarly equivalent. If an effort function is proper, so is every scalarly equivalent one. There may be many non-scalarly equivalent effort functions. The choice among scalarly equivalent ones amounts to a choice of unit.

Proper effort functions could have been taken as the key primitive concept on which other concepts, especially response, can be based. To illustrate this, assume that and consider a function such that, for every state x for which is not identically , is a singleton. The minimal value of is again the entropy and we may define the set of realistic states by and, more importantly, response by the requirement that . This defines response uniquely on and for , the definition of is really immaterial and any element in which controls x will do.

Turning to the

Y-domain, we define an

effort function (

Y-domain), as a function

such that

Entropy is given by

. If there are certain atomic situation, it is natural to expect that effort vanishes for such situations. The effort function is

proper if equality in (

10) only holds if either

or else

. We also express this by saying that

satisfies the

perfect match principle. An effort function is

degenerate if, for every

,

.

The notions just introduced were defined directly with reference to the Y-domain. However, it lies nearby also to consider functions which can be derived from -effort functions . They are derived effort functions and, in case is proper, proper derived effort functions. The two strategies for definitions, intrinsic and via derivation, give slightly different concepts. In case response is injective, the resulting notions are equivalent. In general, derived effort functions depend only on response, i.e., if and and if then . In the other direction, for a proper derived effort function, you can only conclude response-equivalence, , if and .

Formally, the definitions related to Y-effort functions may be conceived as a special case of the definitions pertaining to the -domain (put and take the identity map as response).

We shall talk about effort functions without a qualifying prefix, or Y, if it is clear from the context what we have in mind. We shall always point out if we have derived functions in mind.

The effort functions introduced determine

net effort. However, the implementation of the method of description—which we imagine lies behind—may, in addition to a specific cost, entail a certain

overhead and, occasionally, it is appropriate to include this overhead in the effort. We refer to

Section 3.6 for instances of this.

We imagine that the choice of effort function involves considerations related to knowledge and to the rules of the world. However, once

, hence also

are fixed, these other elements are only present indirectly. The ideas of

Section 2.5 have thus mainly served as motivation for the further abstract development. The ideas will be taken up again when in

Section 3.6 we turn to a study of probabilistic models.

The author was led to consider proper effort functions in order to illuminate certain aspects of statistical physics, cf., [

34,

37]. However, the ideas have been around for quite some time, especially among statisticians. For them it has been more natural to work with functions taken with the reverse sign by looking at “score” rather than effort. Our notion of proper effort functions, when specialized to a probabilistic setting, matches the notion of

proper scoring rules as you find it in the statistical literature. As to the literature, Csiszár [

41] comments on the early sources, including Brier [

42], a forerunner of research which followed, cf., Good [

40], Savage [

43] (see e.g., Section 9.4) and Fischer [

44]. See also the reference work [

45] by Gneiting and Raftery. For research of Dawid and collaborators—partly in line with what you find here—see [

28,

46,

47,

48].

2.7. Information Triples

As advocated in the last section, effort is a notion of central importance. However, this notion should not stand alone but be discussed together with other fundamental concepts of information. This point of view will be emphasized by the introduction of a notion of information triples, the main notion of the present study. We start by philosophizing over the very concept of information.

Information in any particular situation concerns truth. If is a preparation, “” signifies that the true state is to be found among the states in . If is a singleton, we talk about full information and use the notation “x” rather than “”; otherwise, we talk about partial information.

We shall not be concerned with how information can be obtained—if at all. Perhaps, Observer only speculates about the potential possibility of acquiring information, either through his own activity or otherwise, e.g., via the involvement of an aid or a third party, an informer.

Information will be related to quantitatively defined concepts. As our basis we take a proper effort function . Following Shannon we disregard semantic content. Instead, we focus on the possibility for Observer to benefit from information by a saving of effort. Accordingly, we view as the information content of “x” in an atomic situation with x as truth instance and w as action or control—indeed, if you are told that x is the true state, you need not allocate the effort to the situation which you were otherwise prepared to do. The somewhat intangible and elusive concept of “information” is, therefore, measured by the more concrete and physical notion of effort, hence the unit of information is the same as the unit used for effort.

There is a huge literature elucidating what information really “

is”. Suffice it here to refer to [

21] and, as an example of a discussion more closely targeted on our main themes, we refer to Caticha [

49] who maintains that “

Just as a force is defined as that which induces a change in motion, so information is that which induces a change in beliefs”. One may just as well—or even better—focus on action. Then we can claim that

“information” is that which induces a change of action.

The central concept of the theory developed by Shannon is that of

entropy. This concept was already introduced in the preceding section. Here, we elaborate on possible interpretations. One view is that entropy is

guaranteed saving of effort. With effort given by

we are led to define the entropy

associated with the information “

x” as the minimum over

w of

. Thus, by (

8), (

9) holds.

The considerations above make most sense if, one way or another, Observer eventually obtains full information about the true state. However, if, instead, you view entropy as necessary allocation of effort, understood as the effort you have to allocate in order to have a chance to obtain full information, it does not appear important actually to obtain that information. In passing, one may think that a more neutral terminology such as “necessity” could have been chosen in place of “entropy”. That could be less awkward when you turn to other applications of the abstract theory than classical Shannon theory or statistical physics.

As yet a third route to entropy we suggest to view it as a quantitative expression of the complexity of the various states, maintaining that to evaluate complexity, Observer may use minimal accepted effort, the effort he is willing to allocate to the various states in order to obtain the information in question.

Entropy may also be obtained with reference only to the Y-domain. Indeed, with the derived effort function, for each state x, .

Whichever route to entropy you take—including the game theoretical route of

Section 2.10—it appears that subjective elements are involved, typically through Observer’s choice of description and associated experiments. If, modulo scalar equivalence, the actual world only allows one proper effort function, then entropy and notions related to entropy are of a more objective nature. We shall later see examples of such worlds but also for such worlds subjective elements may enter if Observer is considering which world is the actual one.

Apart from effort itself, and the derived notion of entropy, we turn to the introduction of two other basic concepts which make sense in our abstract setting, viz., redundancy for the -domain and its counterpart, divergence, for the Y-domain.

To define redundancy, consider an atomic situation

. Then

redundancy between

x and

w is measured by the difference between actual and minimal effort, i.e., ideally, as

Assume, for a moment, that entropy is finite-valued. Then redundancy in (

11) is well defined. Furthermore, redundancy is non-negative and only vanishes if

is an adapted pair.

However, we find it important to be able to deal with models for which entropy may be infinite. We do that by simply assuming that appropriate versions of redundancy and divergence exist with desirable properties. The simple device we shall apply in order to reach a sensible definition is to rewrite the defining relation (

11), isolating effort on the left hand side.

With the above preparations, we are ready to introduce the key concepts of our study. We start with concepts for the -domain and follow up after that by parallel concepts for the Y-domain.

We consider certain triples

of functions taking values in

with

and

defined on

and H defined on

X. If need be we may talk about triples over

or we may point to the

-domain. Such triples must satisfy special conditions in order to be of interest. The most important properties to consider are the following four:

The properties (

12), (13) and (15) are considered for all

and (14) for all

. The linking identity (

12) may be written shortly as

or, formally correct with

the projection of

onto

X, as

.

An

information triple is a triple

which satisfies the three first conditions (L, F and S). For such triples the function

is the associated

effort function, H the associated

entropy and

the associated

redundancy. This does not conflict with previous terminology. In particular, the associated effort function is indeed an effort function in the sense of

Section 2.6.

Information triples with the same redundancy are said to be equivalent. Equivalent triples may have quite different properties and one may search for representatives with good properties.

A

proper information triple in the

Y-domain is an information triple for which redundancy is proper, i.e., (15) holds. Clearly, the effort function of a proper information triple is proper in the sense of

Section 2.6. Moreover, if a triple is proper, so is any equivalent one.

An information triple is degenerate if redundancy vanishes: for all . The effort function of a degenerate information triple is degenerate.

Among the four defining properties, the last three (FSP) only involve redundancy. Accordingly, a function

defined on

is a

general redundancy function if it satisfies the fundamental inequality as well as the requirements of soundness and properness. Note that for such a redundancy function,

is a proper information triple and that any equivalent information triple may be obtained from

by a natural process of addition related to any function on

X with values in

, taking this function as the entropy function. To be precise, what is involved structurally is that you add information triples, one of which is proper and the other degenerate, viz., you add

and

. For further details on this theme, see

Section 3.1.

Normally, given a proper effort function

, there is a natural way to extend the redundancy function as defined by (

11) when

, so that a proper information triple emerges. For this reason, we may talk about the information triple

generated by . Then, the problem of indeterminacy of redundancy disappears. The slightly strengthened assumption that redundancy can be defined “appropriately” on all of

will, as it turns out, present no limitation in concrete cases of interest.

We turn briefly to

Y-type triples. They are triples

with

and D defined on

and H defined on

X. Key properties to consider are quite parallel to what we have discussed for the

-domain:

An information triple in the Y-domain is a triple which satisfies the conditions L, F and S. For such triples, is the associated effort, H the associated entropy and D the associated divergence.

A

proper information triple is one for which divergence is proper. Such triples are intrinsically defined in the sense that they do not depend on any action space or response function. If divergence vanishes, the triple is

degenerate. The effort function of a proper information triple is proper in the sense of

Section 2.6 and the effort function of a degenerate triple is degenerate.

A triple is a derived information triple, respectively a derived proper information triple, if there exists a triple satisfying the corresponding properties for the -domain such that is derived from and D from . Note that a derived proper information triple need not be a proper information triple according to the intrinsic definition. Indeed, from you can only conclude that x and y are response equivalent. Of course, if response is injective, the two types of proper information triples for the Y-domain—intrinsically defined or defined via derivation—are equivalent concepts.

A general divergence function D on is a function on which satisfies the F, S and P-requirements. Note that we include the property of properness in the definition. A general derived divergence function is one which can be derived from a general redundancy function.

For the Y-domain, notions of equivalence (same divergence!) and of addition of information triples are defined in the obvious manner.

Instead of taking triples as introduced above as the basis, it is quite often more natural to focus on triples of the “opposite nature”. This refers to situations where it is appropriate to focus on a positively oriented quantity such as utility or pay-off rather than on effort. Typically, this is the case for studies of economy, meteorology and statistics where one also meets the notion of “score” as previously indicated. In order to distinguish the two types of triples from each other, we may refer to them as being effort-based, respectively utility-based.

For the -domain, is a utility-based information triple if is so as an effort-based triple and, for the Y-domain, is a utility-based information triple if is so as an effort-based triple. Properness and other concepts introduced for effort-based triples carry over in the obvious way to utility-based triples.

For utility-based triples, and U are called utility, M is called max-utility. As for effort-based triples, is redundancy and D divergence. The linking identity takes the form () which can never result in the indeterminate form since, by definition, and U, hence also M, can never assume the value .

In view of the main examples we have in mind, we have found it most illuminating to take effort rather than utility as the basic concept to work with, and hence to develop the main results for effort-based quantities. Anyhow, even if you are primarily interested in considerations based on effort, you are easily led to consider also utility-based quantities as we shall see right away in the next section.

The concept of proper information triples is, except for minor technical details, equivalent to the concept of proper effort functions. Apart from a slight technical advantage, the triples constitute a preferable base for information theoretical investigations as the three truly basic notions of information are all emphasized together with their basic interrelationship—the linking identity. Historically, the notions arose for classical probabilistic information theoretical models, cf.,

Section 3.7. Effort functions go back to Kerridge [

50] who coined the term

inaccuracy, entropy goes back to Shannon [

1] and divergence to Kullback [

51]. The term “redundancy” which we have used for another side of divergence, corresponds to one usage in information theory, though there the term is used in several other ways which are not expressed in our abstract setting.

As an aside, it is tempting for the author to point to the pioneering work of Edgar Rubin going back to the twenties. Unfortunately, this was only published posthumously in 1956, cf., [

52,

53,

54]. Rubin made experiments over human speech and focused on what he called

the reserve of understanding. This is a quantitative measure of the amount you can cut out of a persons speech without seriously disrupting a listeners ability to understand what has been said. It can be conceived as a forerunner of the notion of redundancy.

Our way to information triples was through effort and one may ask why we did not go directly to the triples. For one thing, triples lead to a smooth axiomatic theory, as will be demonstrated in the present research, compare also with our previous contribution [

55]. However, though axiomatization can be technically attractive, we find that a focus on interpretation as in our more philosophical and speculative approach, is of primary importance and contributes best to an understanding of central concepts of information. Axiomatics only comes in after basic interpretations are in place.

A comment on the choice of terminology in relation to the concept of properness is in place. This concept is at times considered to be unnecessarily strong and we shall later, at the end of

Section 2.10 and in

Appendix A, develop weaker notions. When only a redundancy function or a divergence function is given and not a full information triple, we have chosen to incorporate the requirement of properness in its usual form in the definition of what we understand by a general redundancy function or a general divergence function.

2.8. Relativization, Updating

In this section we shall work entirely in the

Y-domain. We start by considering a proper effort-based information triple

over

. Often, it is natural to measure effort relative to some standard performance rather than by

itself. An especially important instance of this kind of

relativization concerns situations where Observer originally fixed a

prior, say

, but now wants to update his belief by replacing

with a

posterior y. Perhaps Observer—through his own actions or via an informer—has obtained the information “

” for some preparation

. If

, Observer may want to replace

by a posterior

. In a first attempt of a reasonable definition, the associated

updating gain is given by the quantity

obtained by comparing performance under the posterior with performance under the prior:

A difficulty with (

20) concerns the possible indeterminate form

. If we ignore the difficulty and apply the linking identity (

16) to both terms in (

20), entropy

cancels out and we find the expression

This is less likely to be indeterminate. When not of the indeterminate form

, we therefore agree to use (

21) as the formal definition of updating gain, more precisely of

relative updating gain with as prior. For the present study, we shall only work with updating gain when the marginal function

(defined in accordance with concepts and notation introduced in

Section 2.4) is finite on some preparation

under consideration. Assuming that this is the case, we realize that

is a proper utility-based information triple over

. For such triples we put

, i.e., we take

as the only certain belief instance. Max-utility is identified as the marginal function

on

and divergence is the original divergence function restricted to

.

It is important to note that the triples which occur in this way by varying

and

do not require the full effort function

in order to make sense. It suffices to start out with a general divergence function on

. When the construction is based on a general divergence function D, we refer to (

22) as the

updating triple generated by D and with

as prior.

Though rather trivial, the observations regarding updating gain are important as they show that results in that setting may be obtained from results based on effort. To emphasize this, we introduce—based only on a general divergence function D—the effort-based information triple

associated with (

22) as the triple

with

given by

This is a perfectly feasible effort-based triple over whenever is finite on . Clearly, it is proper.

In

Section 2.13 and

Section 2.15 we shall derive results about minimum divergence (information projections) from results about maximum entropy by exploiting the simple facts here uncovered.

As we have seen, natural information triples may be derived from a general divergence function by a simple process of

relativization. While we are at it, we note that in case

, also

reverse divergence defines a genuine divergence function on

(in contrast, reverse description effort need not define a genuine effort function). Therefore, if

and we put

,

defines a genuine proper information triple (when restricting the variables

x and

y appropriately). However, these triples are not found to be that significant.

2.9. Feasible Preparations, Core and Robustness

We claim that description is a key to obtainable information, to what can be known. Not every possible information “” for any odd preparation can be expected to reflect a realistic situation. The question we ask is “what can Observer know?” or “what kind of information can Observer hope to obtain?”. We thus want to investigate “limits to knowledge” and “limits to information”. In order to provide an answer, we shall identify classes of preparations which represent feasible information. These classes will be defined with reference to an effort function . For this section, need not be proper.

Given

and a

level , we define the

level set and the

sub level set by

i.e., as the set of states which are controlled by

w, either at the

level h or at the

maximum level h. These sets are genuine preparations whenever they are non-empty. When

w is the response of a state

,

is non-empty whenever

. As level- and sub level sets for other functions will appear later on, cf.,

Section 2.14, we may for clarity refer to

and to

as, respectively,

-level sets and

-sub level sets.

The preparations in (

26) we call

primitive strict, respectively

primitive slack preparations. A

general strict, respectively a

general slack preparation is a finite non-empty intersection of primitive strict, respectively primitive slack preparations. The

genus of these preparations is the smallest number of primitive preparations (either strict or slack as the case may be) which can enter into the definition just given. Thus primitive preparations are of genus 1.

If

are elements of

and

are real numbers, the sets

define strict, respectively slack preparations of genus at most

n whenever they are non-empty. The set

is the

corona of

whenever it is non-empty.

The preparations introduced above via the representation (

27) are those we consider to be feasible and we formally refer to them as the

feasible preparations. They provide the answer to the question about what can be known. They are the key ingredients in situations which Observer can be faced with. In any such situation a main problem concerns

inference, an issue we shall take up in the next section.

Often, families of feasible preparations are of interest. Given , we denote by , respectively , the families which consist of all preparations , respectively , which can be obtained by varying .

Clearly, the feasible preparations can also be expressed by reference to the derived effort function rather than . We use the notation and for, respectively, the -level set and the -sub level set . If , and (note that for an expression such as , the nature of q determines if this is a - or a -level set). For finite sequences of elements of Y and of real numbers, the sets and are defined in the obvious manner as are the families of preparations , respectively .

The level sets may be used to define certain special belief instances or controls which will later, theoretically as well as for applications, play a significant role. Given is a certain preparation

. Then, the

core of

consists of all belief instances

y for which the effort

is finite and independent of

x as long as

x is consistent. This notion, appropriately adjusted, also makes sense for the

-domain. Notation and defining requirements are given as follows:

If , respectively , we also say that y, respectively w, is robust.

We shall refine the notions above in two ways. Firstly, for a family

of preparations—such as a family of the form

defined above—the

core is defined as the intersection of the individual cores:

The second refinement we have in mind depends on on an

auxiliary preparation

, assumed to be a subset of the given preparation

. For the

-domain, a control

is a

-robust strategy for Observer if there exists a finite constant

h, such that the following two conditions hold:

When

we recover the original notion of robustness. The similar notion for belief instances is defined in the obvious way. Notation and defining relations for the corresponding adjustments of the notion of core are as follows:

From a formal point of view, it does not matter if we use -type sets or -type sets as the basis for the definition of feasible preparations. However, entering into more speculative interpretations, the -type sets which emphasize control seem preferable. Individual controls or a collection of such controls point to experiments which Observer may perform. An experimental setup identifies a certain preparation, and thus determines what is known to Observer. Determining all preparations which can arise in this way, we are led to the class of feasible preparations as defined above.

As to the nature of the various controls, we imagine that they are derived from description. To control a situation, you must be able to describe it, and with a description you have the key to control. We may imagine that, corresponding to a control w, Observer can realize a certain experimental setup consisting of various parts – measuring instruments and the like. In particular, there is a special handle which is used to fix the level of effort. If the level, perhaps best thought of as a kind of temperature, is fixed to be h, the states available to Nature are those in the appropriate feasible preparation. Several experiments can be carried out with the same equipment by adjusting the setting of the handle. If Observer wants to constrain the states by other means, he can add equipment corresponding to another control and choose a level for the experimental setup constructed based on . The result is a restriction of the available states to the intersection of the two preparations involved. If the preparation is and the actual state is not inside this preparation, you may imagine that the result is overheating and breakdown of the experimental setup! Thus you must keep the state inside the preparation and this may well be what requires an effort as specified by .

2.10. Inference via Games, Some Basic Concepts

For this section, is an effort-based information triple over and the derived triple over . Further, a preparation is given, conceived as the partial information “”. In practice, will be a feasible preparation, but we need not assume so for this section.

The process of inference concerns the identification of “sensible” states in —ideally only one such state, the inferred state. In many cases, this can be achieved by game theoretical methods involving a two-person zero-sum game. As it turns out, this will result in double inference where also either control instances or belief instances will be identified—ideally, only one such instance, the inferred control or the inferred belief instance as the case may be.

An inferred state, say , brings Observer as close as possible to the truth in a way specified by the method applied. The same may be said about an inferred belief instance—or you may find it more appropriate to view an inferred belief instance as a final representation of Observers subjective views and conviction. Turning to controls, an inferred control is conceived as an invitation to Observer to act, say regarding the setup of experiments and performance of subsequent observations. In this way, actions by Observer as dictated by an inferred control is conceived as that which is needed for Observer in order to justify the inference about truth. In short, double inference gives Observer information both about what can be inferred about truth and how.

Given

, we shall study two closely related two-person zero-sum games, the

control game , and the

belief game , also referred to as the

derived game. If need be, we may write

and

. The games have Nature and Observer as players and

, respectively

as

objective function. Nature is understood to be a

maximizer, Observer a

minimizer. For both games,

strategies for Nature involve the choice of a consistent state. Observer strategies for

are controls from which every state in

can be controlled. For

, Observer strategies are belief instances from which every state in

is visible, in other words, they are viewpoints of

. Thus pairs of permissible strategies for the two games are either pairs

with

and

(with the understanding that

) or pairs

with

and

(with the understanding that

). In consistency with the discussion in

Section 2.4, an observer strategy may be thought of as a strategy which is not “completely stupid” whatever the strategy of Nature, as long as that strategy is consistent. The choice of strategy for Observer may be a real choice, whereas, for Nature, it is often more appropriate to have a fictive choice in mind which reflects Observer’s speculations over what the truth could be.

A remark is in order regarding models where it is unnatural to work with controls and only belief is involved. Then the basis will be an effort-based information triple over and only one type of game, will be involved. Formally, this may be considered a derived game by artificially introducing , , by taking response to be the identity map and by taking to be identical with Thus the approach we shall take with a primary focus on the control games, based on objects for the -domain is, formally, the more general one.

Following standard philosophy of game theory, Observer should always be prepared for a choice by Nature which is least favourable to him. One can argue that in our setting anything else would mean that Observer would not have used all available information. The line of thought goes well with Jaynes thinking as collected in [

9], though there you find no reference to game theory.

In order for our exposition to be self-contained and also because our games are slightly at variance with what is normally considered, we shall here give full details regarding definitions and proofs. As references to game theory and applications to the physical sciences, ref. [

32,

56,

57] may be useful.

Let us introduce basic notions for the control game and then comment more briefly on the derived game. The two

values of

are, for Nature,

and, for Observer,

Note the slight deviation from usual practice in that

w in the infimum in (

36) varies over

and not just over

or some other set independent of

x. Philosophically, one may argue that Nature does not know of the restriction to

—this is something Observer has arranged—and hence cannot know of any restriction besides the natural one

. As the infimum in (

36) is nothing but the entropy

, the value for Nature is the

maximum entropy value, also referred to as the MaxEnt

-value:

Problems on the determination of

and associated strategies are classical problems known from information theory or statistical physics. If

and

,

is an

optimal strategy for Nature, also referred to as a MaxEnt-

state or MaxEnt-

strategy. The archetypal concrete problems of this nature are discussed in

Section 3.7.

As to the value for Observer, we identify the supremum in (

37) with the

risk associated with the strategy

w and denote it by

:

The value for Observer then is the

minimal risk of the game, also referred to as the MinRisk-

value:

An

optimal strategy for Observer is a control

with

, also referred to as a MinRisk-

control or a MinRisk-

strategy. Note the general validity of the

minimax inequality:

Indeed, for arbitrary

and arbitrary

,

and taking supremum over

x and infimum over

w, (

41) follows. If (

41) holds with equality and defines a finite quantity, the game is said to be in

game theoretical equilibrium, or just in

equilibrium, and the common value of

and

is the

value of the game.

A further notion of equilibrium is attached to Nash’s name. It should, however, be said that for the relatively simple case here considered (two players, zero sum), the ideas we need originated with von Neumann, see [

58,

59] and, for a historical study, Kjeldsen [

60]. A pair of permissible strategies

is a

Nash equilibrium pair for if, with these strategies, none of the players have an incentive to change strategy—provided the opponent does not do so either. This means, for Nature, that

and, for Observer, that

The inequalities (

42) and (

43) constitute a special case of the celebrated

saddle-value inequalities of game theory. Note that, in our case, one of these inequalities (

43), is automatic if

is an adapted pair. This implies that

and that

as follows from the following trivial observation:

Proposition 1. If and are permissible strategies for the two players in and if is adapted to , then and .

Proof. By hypothesis, , and , hence , equivalent to the statement . ☐

Key notions and definitions for the belief game

are quite parallel to what we have discussed for the control game. Briefly, the values of

are

(for Nature) and

(for Observer) and notions of strategies and optimal strategies are defined in an obvious manner. We notice that the value for Nature in

is

, the same as the value for Nature in

and that the notion of optimal strategies for Nature in the two games are equivalent notions. We use Ri as notation for

risk in

, i.e., for

Therefore, if

and one of these belief instances is a viewpoint of

, then so is the other and the associated risks are the same. The value for Observer in

is

The game

is in

equilibrium if the two values of the game coincide and are finite. A pair

of permissible strategies is a

Nash equilibrium pair for

if the two

saddle-value inequalities hold:

Basic relationships between the values for the players in the belief game and the control game may be summarized as follows.

Proposition 2. The values for Nature in and in coincide and are equal to the MaxEnt value . The corresponding values for Observer in the two games are , respectively . In general, If response is surjective, equality holds in (

49).

Equality also holds if is in equilibrium. In that case also is in equilibrium and the values for the two games coincide: . Proof. The first statement regarding the values for Nature is trivial and also noted above. The inequality (

49) follows by (

45), which also implies that equality holds in case response is surjective. If

is in equilibrium, apply the minimax inequality to

, exploit equilibrium of

as well as the inequality (

49) and you find that

It follows that also is in equilibrium. Clearly, the values for the two games coincide. ☐

As it will turn out, in a great many cases of relevance for the applications, it is possible rather directly to identify optimal strategies for the players and to show that the games considered are in equilibrium. Furthermore, in many cases there is a natural relationship between the - and the -type games with the effect that, typically, there is a unique optimal strategy for Observer in and this strategy, a certain control, is adapted to any optimal strategy for Nature in the games and . Even more so, there is a tendency for the unique optimal control to be robust.

Results to support these claims will be taken up in

Section 2.12. The results require that somehow you have good candidates for the hoped-for optimal strategies. For this, the indicated tendency towards robustness is a clue to how such candidates can actually be found in concrete cases of interest. In fact, a search for optimal objects via robustness is very efficient and more natural than the usual approach via the differential calculus as we shall also comment on in

Section 2.12.

2.11. Refined Notions of Properness

The discussion to follow may appear unnecessary since normally, the standard notion of properness will apply. However, there are interesting cases where this is not so. Therefore, there is a need to look for suitable weaker notions which are still strong enough to have desirable consequences especially regarding properties of optimal strategies. As justification of the good sense in considering also the weaker notions of properness presented below we point to the general results of

Section 2.12 and to the extended applicability of a a well-known construction due to Bregman, cf.,

Section 3.1 and

Appendix A.

With assumptions as in

Section 2.10, let us assume that

is in equilibrium and, for simplicity, that there is a unique MaxEnt-state

. Let us think of the system which Observer is studying as a physical system subject to the laws of statistical physics. Then Observer will expect that after some lead-in time, the system will stabilize and

will represent the true state of the system. Observer aims at choosing a control which is optimal and at the same time adapted to Natures choice,

. Unfortunately, Observer does not know which state this is among the consistent states. So Observer cannot just choose the control

adapted to

, but has to somehow choose some control of

, say

w.

At this point we introduce a built-in learning mechanism operating over time which may lead Observer in the right direction. The idea is illuminated by introducing an all-knowing being, Guru. Guru will not reveal the truth to Observer directly but may respond to specific questions. With this option, Observer may eventually end up by a choice of just the right control.

The three questions we shall consider all concern the entropy

which Observer expects to be the MaxEnt-value. The questions are all related to the inequality

The questions put higher and higher demands on the chosen control w and are as follows:

- :

Does (

50)

hold for ? - :

Does (

50)

hold for all consistent x? - :

Does (

50)

even hold with equality for all consistent x?

With Question , Observer wants to know if the effort he applies is minimal. Clearly, in view of the linking identity and the fundamental inequality—and as by the assumed equilibrium of —the question is equivalent to asking if . If the reply is negative, Observer knows that his choice cannot be optimal and he will then choose another control. But even with an affirmative answer, i.e., when , Observer may not be satisfied and may, therefore, continue the questioning. If the information triple is proper, an affirmative answer to will tell Observer that and he may be satisfied—even though it could still happen, as examples will show, that w is not optimal. Further questioning may thus only be needed if the information triple is not proper—or not known to be proper.

For the second question, , Observer is worried about his risk in case the state should somehow change. The question is equivalent to asking if . With a negative reply, Observer will dismiss the choice of w, if for no other reason, because w cannot be optimal then. If the reply is positive, w is optimal and one may wonder if Observer will still find any further checking necessary. The suggested third question reflects the ambition of Observer that he wants the control to be robust at the level .

Motivated by our considerations, we shall say that the information triple is , or -proper over if, with , we can conclude that w is adapted to from affirmative answers to, respectively, question , or . If we just talk about, say -properness, it is understood that the conditions hold with . If the entropy function is finite-valued, -properness is equivalent to (standard) properness.

Concerning questions being asked to Guru, one may wonder why Observer does not simply ask directly either if the chosen control is optimal or if it is adapted to the truth. In this connection, we remark that questions which can be asked to Guru must depend on the possibilities for Observer’s communication with the system. For a further discussion of this, one should replace Guru with some mathematically defined rules for this communication. Such rules may reflect the kind of experiments and associated measurements which Observer can perform on the system.

2.12. Inference via Games, Some Basic Results

We shall investigate the possibility to identify optimal strategies based on a suggestion of possible candidates. Moreover, when optimal strategies exist, we shall look at the ensuing consequences. This approach will involve problems which are easy to handle technically and yet, it may be argued that from an applied point of view the results obtained are of greater significance than theoretically more sophisticated results, such as those developed in

Section 2.16. Several examples illustrating this point of view are listed in

Section 3.

As in the previous section, an effort-based information triple over , the underlying triple, is given together with a preparation .

When we speak about an optimal state without any further specification it is understood that we have an optimal strategy for Nature in one of the games or in mind. As we observed in the previous section it does not matter which game we think of. Moreover, when we speak of an optimal belief instance, respectively an optimal control it is also clear what we have in mind, viz., an optimal strategy for Observer in , respectively in .

In our first result we investigate situations where, in addition to a requirement of equilibrium, there exist optimal strategies for both players.